Abstract

The precise segmentation of white blood cells (WBCs) within blood smear images is a significant challenge with implications for both medical research and image processing. Of particular importance is the often neglected task of accurately segmenting WBC nuclei, an aspect that currently lacks dedicated methodologies. This paper introduces a straightforward and efficient method designed to fill this critical gap, providing an effective solution for the efficient segmentation of WBC nuclei. In blood smear imagery, the distinctive coloration of WBCs contrasts with the hues of other blood components. The inherent obscurity of WBCs prompts their segmentation by isolating pixels with minimal intensities. To streamline this process, our proposed method employs the Laplacian pyramid technique to decorrelate pixels in blood smear images, thereby amplifying the contrast. Subsequently, the intensities of pixels constituting blood cells, encompassing WBCs and the background, are modeled using three Gaussian random variables. Capitalizing on this feature, we implement the Gaussian mixture model (GMM) clustering method to determine the optimal threshold value, facilitating a highly precise segmentation of WBC nuclei. The proposed method demonstrates the capability to process images containing a single WBC as well as effectively functioning with images containing multiple cells of this type. Evaluation of the method on the ALL-IDB, ALL-IDB2, CellaVision, and JTSC datasets yielded accuracy values of 0.9802, 0.9725, 0.9772, and 0.9730, respectively. Comparative analysis with state-of-the-art methods revealed a notably comparable performance, underscoring the effectiveness of the proposed approach. The method presented in this article is highly competitive for segmenting the nuclei of WBCs compared to state-of-the-art methods. The three main advantages of our method are its ability to process images containing one or more WBCs, the automatic calculation of threshold values for each processed image, eliminating the need for manual parameter adjustments. Lastly, the method is efficient, as its algorithmic complexity is approximately \(\varvec{\mathcal {O}(nm)}\).

Graphical abstract

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

White blood cells (WBCs), also known as leukocytes, are part of the human immune system that defend, among other cells of the immune system, the body against infections by increasing the number of WBCs when a person suffers one, so the number of WBCs in blood is one of the features that the physicians employ to diagnose illnesses and to assess the adequate treatment [1, 2]. Analyzing the nuclei of WBCs individually holds significance as it offers insights into the maturity of the WBCs. This, in turn, aids in determining the extent of infection expansion. Furthermore, scrutinizing the WBC nuclei provides valuable data for the detection of specific illnesses, such as leukemia [3], among others.

The analysis of WBCs is frequently carried out manually by experts; as a result, the outcomes may be affected not only by the fatigue of the hematologist but also by their level of expertise. Given that WBC detection relies on visual examination by humans, the introduction of an automated vision system could prove invaluable in addressing the aforementioned issues.

In an automated vision system designed for both WBC counting and nucleus analysis, the quality of WBC segmentation constitutes a critical stage. However, achieving accurate segmentation of WBCs proves challenging due to various factors such as image features and acquisition conditions, including but not limited to image scale, hue staining, and overall image size [4].

Therefore, previous studies demonstrate a variety of methodologies for WBC segmentation, encompassing convolutional neural networks [5,6,7,8,9,10,11], methods relying on the extraction of color features [12,13,14,15], and other techniques [16,17,18,19,20,21,22,23,24,25,26,27]. It is noteworthy that several of these approaches exclusively target cropped WBC images containing a solitary cell per image [7,8,9, 12, 13, 15, 17,18,19,20], while others present algorithms designed for segmenting WBCs solely in images featuring multiple WBCs [5, 6, 14, 16]. Notably, none of the existing methods is specifically designed to segment WBCs in both single-cell and multi-cell images. More significantly, as far as we know, there are currently no studies in which the nucleus of WBCs has been segmented.

Motivated by the relevance of WBCs segmentation in the medical and computational fields, and in the absence of methods addressing the challenges mentioned above, this article introduces a method with the capability to segment the nuclei of WBCs in smear images containing one or more of these cells. The formulation of the proposed method arises from the following observations.

-

Blood smear images used for WBC detection encompass three primary elements: red blood cells, WBCs, and the background. Upon transforming these blood smear images into gray scale, the following features become apparent:

-

The intensity histogram of the image is tri-modal, since each peak represents the pixels of the WBCs, red blood cells, and the background.

-

The intensity of the pixels that shape the WBCs is low.

The trivial approach of segmenting WBCs based on selecting pixels whose intensities are within a low-intensity range achieves a low performance. This is because neighboring pixels are highly correlated [28, 29]; hence, selecting low-intensity pixels does not guarantee an accurate WBC segmentation.

Hence, our method decorrelates pixels, facilitating a more precise characterization of the pixel distribution that shapes the WBCs. To achieve this, we employ a variant of the image processing technique called the Laplacian pyramid, commonly used to decorrelate pixels for coding and compressing images [28, 29]. The application of this technique to WBC segmentation enhances image contrast, thereby providing a more accurate definition of the range of pixel intensities that make up the WBCs.

Moreover, building upon the aforementioned observations, we advocate for modeling the pixel intensities of WBCs, blood cells, and the background individually through three Gaussian distributions.

The main contributions of this work are the following:

-

We introduce an approach to segment the nuclei of WBC in smear images. Most of current methods segment the whole cell.

-

Our method is capable to segment WBCs in images both containing several WBCs or in cropped images containing only one white WBC. The vast majority of prior studies introduce algorithms specifically tailored for segmenting WBCs either in images featuring multiple WBCs or in cropped images containing a single cell, but not in both types of images using a unified algorithm.

-

The proposed method automatically computes the optimal threshold for WBCs nuclei segmentation, eliminating the need for user-provided values for image binarization. In contrast, current state-of-the-art methods require users to set parameters that impact performance.

The rest of the paper is organized as follows: in Section 2, we present the previous works addressing the segmentation of WBCs in blood smear images; besides, a summary of related methods is included. We comprehensively present and elucidate our segmentation approach in Section 3. In Section 4, we delineate the widely recognized datasets utilized for conducting experiments and expound on the metrics employed to assess the performance of our method. The results of the experiments are presented in Section 5, and the results are discussed and compared regarding related works in Section 6. Finally, the paper closes with conclusions in Section 7.

2 Related works

The segmentation of WBCs in blood images is difficult because the features of the images acquired may vary, for instance, the image size, image scale, illumination conditions, and the hue of the WBCs staining. There are several previous works that present WBC segmentation proposals under different approaches. Next, we review different related works addressing this issue.

The authors of reference [5] propose a framework where the original images along with ground truth masks of each blood cell class are pre-processed. In the preprocessing stage, the RGB input image is converted to a gray scale of masked image and pixel fusing and unity mask generation. A pretrained VGG16 is employed for feature extraction at pixel level, and then the training process is initiated.

In reference [6], the authors present a proposal that transforms the blood smear images from RGB to HSV color space to detect and extract the leukocytes. A U-Net analyzes the cells’ border to set the cell class between red blood cells, platelets, and WBCs; the features of the cells are enhanced with a weighted cross-entropy loss function based on class weight and distance transformation weight so that the U-Net learns the border features of the cells.

The proposal of Kumar and Vasuki [12] consists on to map the RGB input image to the HSV space. The H and S components are grouped in four clusters using the k-means technique; the nucleus of the WBCs is segmented by selecting the pixels grouped in the cluster with the lowest red value. The authors of reference [13] present a segmentation approach where the contrast between the nucleus of the WBCs and the background increases. The method normalizes and enhances the contrast of the G component, from the RGB input image, and then, it replaces the R and G components of the input image.

In reference [14], the authors present a proposal where, firstly, they perform a color correction process using the CIELAB color space. Then, the WBCs, red blood cells, and background areas are segmented by using the Otsu method and combining an area analysis of the image mapped in the HSV, CMYK, and RGB color spaces. The pixels are labeled by applying the connected component approach, after using a morphological filter is employed to remove noise. Finally, the overlapped cells are separated by using the circle Hough transform.

Sudha and Geetha [16] present a method based on edge strength Grabcut. The user provides, as a rectangular box around the object, initial data addressing the background and foreground of the object. The pixels inside the box are labeled as unknown while the pixels outside the box are labeled as background. Then, a method is used to determine if the unknown pixels are the object’s foreground or background.

The authors of reference [17] propose an approach based on Atanassov’s intuitionistic fuzzy sets and fuzzy mathematical morphology. The proposed approach is applied to the H component, of the HSV color space, so as to model the color of the input image. The pixels identified as part of the WBC are compared regarding the whole image with a similarity metric.

In reference [18], the authors present a method that locates the WBCs using multiple windows obtained by scoring multi-scale cues. The method is initialized with the sub-images pre-processed from the location windows; then, the dilation-based GrabCut algorithm is iteratively applied. Li et al. [7] combine an attention mechanism and dilated convolutions of a U-Net model. The dilated convolution replaces the traditional convolution, and the receptive field is expanded to obtain multi-scale features. In the up-sampling layers, after each convolution layer, the adaptive features are refined to improve the segmentation performance of the model.

The authors of reference [19] segment the nucleus of the WBCs using the k-means clustering technique with an adaptive value of K. Along with a method that performs arithmetical operations following a control parameter, mean shift technique to smooth and preserve boundaries and the texture is enhanced with the Fourier transform. In reference [30], after the image is mapped to the L*a*b* and CMYK color spaces, the contrast of the M and b* components is adjusted. Then, the noise is removed from the images by applying a median filter. The components M and b* are subtracted between them; the k-means technique is employed to divide the image into three clusters. Finally, the nucleus of the WBCs is segmented by selecting the pixels grouped with the brighter intensities.

Banik et al. [15] present a method where the RGB input image is mapped to the L*a*b* and HSI color spaces. Then, the mean of each component is computed, and the minimum average value obtained between the components of L*a*b* and HSI is selected to divide the values of the components. The WBCs are segmented by dividing the image into two groups by using the k-means technique.

The authors of reference [31] employ morphological properties of the WBCs to segment them. Ellipse curve fitting, thresholding, and morphological operations are combined to segment the WBCs. The WBCs are classified by selecting the most discriminative features extracted from the nucleus and the cytoplasm.

In reference [8], the authors propose a deep learning network based on UNet++ and ResNet that designs a feature encoder to extract multi-scale features, introducing skip pathways on convolutional blocks to fuse features at different scales. The network refines the segmentation of the WBCs by using convolution and deconvolution as a decoder. Reena et al. [9] detect the WBCs by using a semantic segmentation approach that combines DeepLabv3+ architecture, and as a feature extractor, the ResNet-50 is employed.

In reference [32], the authors present a method to fuse the components of the colors, based on the principal component analysis, derived from the CMYK and HSV color spaces. The approach extracts the nucleus of the WBCs by the enhanced contrast by the principal component analysis-based fusion and independence to color and brightness variation.

The authors of reference [20] propose a segmentation method based on a multi-scale information fusion network. The network employs mechanisms to fuse internal and external data. The initial layers of the network include boundary information by processing the spatial characteristics. The network divides and propagates the boundary data on multi-scale to fuse the external information.

Zhong et al. [33] present an algorithm to segment the nucleus of WBCs that uses sparsity and geometry constraints. The RGB and HSL components of the input image are combined to build a new image. Useful data of the features of the WBC nucleus are preserved by applying a sparsity constraint. The robustness of the proposed segmentation algorithm against outliers is improved using a model fitting strategy.

Table 1 displays a summary of the related works. It is noteworthy to observe that the majority of the methods listed in Table 1 are not specifically tailored to handle images containing both single-cell and multi-cell instances.

3 Segmentation proposal

As we mentioned before, usually the images of red blood smear samples contain three elements: blood cells, WBCs, and the background. In order to make the WBCs visible to the human eye, the blood smear samples are stained. The color the WBCs acquire contrasts with the colors of the other elements of the blood smear; thus, it is easy for the humans to locate the WBCs within the images. But also, it is possible to locate the WBCs by intensity features, since, after the blood smear sample is stained, the WBCs tend to be obscure. Hence, the WBCs can be segmented by selecting the pixels with the lowest intensities.

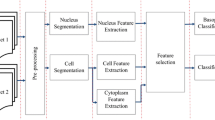

Thus, we propose to represent the intensities of the pixels as a distribution of three random variables, where each random variable represents the pixels of the WBCs, blood cells, and the background. Therefore, the WBCs can be segmented by selecting the pixels whose intensity is within the distribution of the random variable that represents the WBCs. But each pixel is highly correlated with its neighbor pixels; therefore, there may be pixels with low intensity that do not shape the WBCs. So, before the WBCs are segmented, we process the image with a variant of the Laplacian pyramid so as to decorrelate the pixels. Our proposal consists on the following steps (see Fig. 1):

-

1.

The color input image is converted to gray scale.

-

2.

The gray scale image is processed with the variant of the Laplacian pyramid that we propose.

-

3.

The resulting image is binarized by selecting the pixels whose intensity is within the distribution modeled by the random variable that represents the intensity of the WBCs’ pixels.

Next, we present in detail the mathematical operations of our methodology.

3.1 Laplacian pyramid

The Laplacian pyramid is an image processing technique developed to compact images by coding the pixels in the spatial frequency as well as in space. Coding is obtained by, firstly, removing the correlations between pixels by subtracting a low-pass filtered copy of the image from the image itself; secondly, compression is achieved by quantizing the difference image [28, 29]. For our purpose, we are interested on to decorrelate the pixels. Hence, the image processing is as follows.

An image can be represented in multiple scales (different resolutions of the image) by using a Gaussian pyramid. In order to create the latter one, firstly, the high-frequency components of the image are removed; then, the size of the image is reduced by halving the image. One of the simplest ways to get rid of the high-frequency components in an image is to use a low-pass filtering or “smoothing” filter. This is implemented by applying the convolution operation with a Gaussian kernel whose values are generated as indicated by the Eq. 1:

When applying this smoothing filter to an image, the result is a blurred version of the original one. That is, let I be the gray scale input image that we denote as \(g_0\); the next level \(g_1\) is obtained by convolving the Gaussian kernel H with \(g_0\), and then the resolution of the image is reduced by halving the image; similarly, \(g_2\) is obtained by convolving H with \(g_1\), and the resolution is reduced, and so on. Hence, we denote this operation as follows:

where the operator \(*\) denotes convolution and the function REDUCE halves the image. Let N be the number of the pyramid’s maximum level, and the function EXPAND that doubles the size of the image, the upside direction is performed with the following:

Figure 2 shows schematically the mathematical operations required to build the Laplacian pyramid. The resulting image \(g'_0\) is binarized in order to segment the WBCs. Figures 3 and 4 show two examples of how the images are processed through each level of the Laplacian pyramid, \(g_0\) is the input image and \(g'_0\) is the output or resulting image.

It is important to mention that the pixels’ values of the image obtained by using the Laplacian pyramid are inverted regarding the original image. That is, the high-intensity pixels in the input image are the low-intensity pixels in the resulting image, and vice versa.

For instance, Fig. 5 a and b show the intensity histograms of the output and input images of Fig. 3, respectively. In the same way, Figs. 6 a and b show the intensity histograms of the output and input images of Fig. 4, respectively.

a and b The intensity histograms of the output and input images shown in Fig. 3, respectively

a and b The intensity histograms of the output and input images shown in Fig. 4, respectively

Notice that the contrast of the output images is improved, because the intensity histograms show that the input images do not employ all the intensity levels, while the intensity histograms of the output images show that almost all the intensity levels are employed.

3.2 Binarization through clustering to detect the nuclei of WBCs

In this work, the binarization to segment the WBC nucleus through the use of blood smear images is based on the following three main assumptions.

-

a)

The blood smear image to be analyzed contains one or more WBCs. Applying the proposed method on an image that does not contain WBC leads to incorrect results.

-

b)

The size of the image to be analyzed is at least \(100 \times 100\) pixels. Then, the total number of pixels is at least \(10^{4}\). In consequence, the distribution of values of pixels in the image can be approximated by normal distributions, according to the central limit theorem [34]. Additionally, data sets publicly available on the internet usually contain blood smear images much bigger than \(100 \times 100\) pixels.

-

c)

Each pixel of the image is obtained from one of a set of independent and identically distributed random variables. Consequently, each pixel can be treated as a value randomly generated by a process that does not depend on the other the values.

We address the problem of segmenting the nucleus of one (or more) WBC within a blood smear image as a clustering problem, based on the assumptions above, and on the observation that the Laplacian of a blood smear image has three main pixel values. Figure 7 shows the Laplacian of a WBC image processed by a Gaussian pyramid. In this figure, it can be observed the predominance of three distributions of intensities; these are the following: background (pixels tending to black), WBCs (pixels tending to white), and red blood cells (pixels tending to gray). However, the threshold values are different from one image to another. Also, the pixel values of the nucleus of WBC are quite similar to other pixels in the image.

The clustering problem is simplified, since the number of existing clusters is known (three). Furthermore, it is assumed that the pixel values follow one (of a total of three) Gaussian distributions. The goal is to assign each value of the pixels in one of these three distributions, automatically.

a and b The intensity histogram and the binarization of Fig. 7, respectively

One of the most effective methods to cluster values that are generated by a statistical process is based on mixture models. In this approach, it is assumed that each sample is obtained from a probability distribution (like the normal or Gaussian distribution) and that the parameters of the latter can be estimated from the data. It is also assumed that there may be one or more distributions that generate these data.

For the case of WBC images, the three distributions (background, white blood cells, and red blood cells) are assumed to be Gaussian, and the probability density function for this univariate scenario is shown below:

where \(\Theta = \{ (\mu _{1}, \sigma _{1}), (\mu _{2}, \sigma _{2}), (\mu _{3}, \sigma _{3})\}\) are the medians and standard deviations of each distribution. The expectation-maximization algorithm is used to obtain the values of these parameters.

On the other hand, it is well known that most of clustering algorithms do not scale well for large data sets. Gaussian mixture model clustering has a computational complexity \(\mathcal {O}(pkd^{3})\), with p the number of samples, k the total of clusters, and d the number of dimensions. This is a problem with large-size blood smear images because the number of samples (pixels) to process can be huge. To face this problem, the image to be analyzed is transformed into a one-dimensional data set, and the number of pixels is reduced by several orders of magnitude. This is accomplished as explained below.

-

1.

The value of each pixel is used as the unique feature of the image. Their locations are not taken into account. This reduces the number of dimensions from three (pixel value and location (x, y)) to one (pixel value). This is done by reshaping the image to make it a one-dimensional vector. No other feature extraction method or transformations to the image are necessary.

-

2.

The pixel values are grouped into N \(\le 256\) bins by a histogram method. Building a histogram has a computational complexity \(\mathcal {O}(nm)\), for an image of \(n\times m\) pixels.

The Gaussian mixture model clustering is applied to the N centers of the bins of the histogram. Therefore, the complexity of clustering the pixels is reduced to \(\mathcal {O}(3N)\). The total time of the binarization (histogram and clustering) is \(\mathcal {O}(3N) + \mathcal {O}(nm) \approx \mathcal {O}(nm)\). The pixel values that are closest to the largest mean correspond to the nucleus of WBCs. Algorithm 1 shows this method.

Figure 8a shows the intensity histogram for the image shown in Fig. 7. It can be observed that two overlapping normal distributions (corresponding to background and red blood cells) have an approximate mean of 30 and 80, respectively. The third normal distribution (corresponding to WBCs) is far from the first two, with a mean of about 220. The last of these three means is used to identify the nuclei of WBCs. Figure 8b shows the identified nuclei.

4 Experimental setup

In this section, we describe the benchmarks utilized to assess our approach. Subsequently, in the following section, we will compare the performance of our method with that of previous works. It should be noted that our proposal is applicable to images containing multiple WBCs as well as cropped images containing only a single WBC. Therefore, we conducted experiments using two types of datasets: one consisting of images containing several WBCs and three others containing cropped images, each featuring only one WBC per image.

The performance of our approach is assessed quantitatively by conducting a pixel-level segmentation evaluation, using different metrics that the related works employ. Next, we detail the features of the datasets and the metrics employed.

4.1 Datasets

We utilized the renowned Acute Lymphoblastic Leukemia Image DatabaseFootnote 1 (ALL-IDB) as our benchmark database for evaluating algorithms pertaining to WBC counting, cancer detection, and classification. The ALL-IDB comprises 108 color images of blood smear samples and is categorized into two distinct sets: a collection of 33 images sized \(1368\times 1712\) pixels and another set featuring 75 images with dimensions of \(1944\times 2592\) pixels. Notably, these two image sets within the ALL-IDB exhibit variations in scale, brightness, and staining conditions, and a majority of them contain multiple WBCs. For instance, the input images depicted in Figs. 3 and 4 are extracted from the aforementioned first and second sets, respectively. The corresponding ground truth data for the images within ALL-IDB can be downloaded from this repository.Footnote 2

For the experiments involving cropped images, each featuring a single WBC, the following datasets were utilized: ALL-IDB2 comprises 260 color images, each with dimensions of \(257\times 257\) pixels; CellaVision includes 100 color images, each sized \(300\times 300\) pixels; and JTSC encompasses 300 color images, each of size \(120\times 120\) pixels. It is noteworthy that the images within these three databases exhibit significant diversity in terms of brightness and staining conditions. These databases, along with their corresponding ground truth data, can be accessed from this repository.Footnote 3

4.2 Evaluation metrics

We perform a pixel-level segmentation evaluation to assess the quality of segmentation by comparing the binary versions of the segmented images obtained through our approach with the corresponding ground truth, which consists of hand-segmented images created by human experts. Table 2 provides a summary of the pixel classification, including true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN).

The performance of the proposed method was evaluated using the metrics accuracy (Ac), specificity (Sp), precision (Pr), sensitivity (Se), and F-score (F).

Studies focusing on WBC segmentation typically validate algorithms by assessing the pixel-level classification of segmented images. However, this approach precludes the possibility of conducting object-based segmentation evaluations. Consequently, in the current state of the art in image segmentation, algorithms that assess their performance against ground truth often utilize metrics such as probabilistic Rand index (PRI), variation of information (VOI), and global consistency error (GCE). These metrics, including PRI, VOI, and GCE, enable object-based evaluation of segmented images [35].

Let I and S represent the ground truth and the segmentation generated by the algorithm, respectively. The probabilistic Rand index (PRI) quantifies the similarity between these two data clusters as follows:

where n represents the number of pixels, and \(c_{i,j}\) is a Boolean function defined as follows: \(c_{i,j}=1\) if \(L_i^I=L_j^S\), and \(c_{i,j}=0\) otherwise. Here, \(L_i^I\) denotes the label of pixel \(x_i\) in the ground truth, \(L_j^S\) signifies the label of pixel \(x_j\) in the segmented image, and \(p_{i,j}\) denotes the expected value of the Bernoulli distribution for the pixel pair.

The variation of information (VOI) index quantifies the cumulative loss and gain of information between the two clusters within the lattice of potential partitions, as depicted below:

where \(H=-\sum _{i=1}^c (n_i/n)\log (n_i/n)\), with \(n_i\) representing the number of points in the ith cluster and c denoting the total number of clusters. The term F corresponds to the mutual information between the two clusters and is defined as follows:

where \(n_{i,j}\) signifies the number of points in the intersection of cluster i from S and cluster j from I, while \(c_S\) and \(c_I\) denote the numbers of clusters in S and I, respectively.

The global consistency error (GCE) quantifies the degree to which a segmented image is considered a refinement of the other. An expression for the error at each pixel \(x_i\) can be formulated as follows:

where \(C(S,I,x_i)=|R(S,x_i)\setminus R(I,x_i) |\div |R(S,x_i)|\), with \(|\cdot |\) denoting cardinality, \(\setminus \) representing the set difference, and \(R(S,x_i)\) indicating the set of pixels belonging to the region in segmentation S that includes pixel \(x_i\).

The PRI, VOI, and GCE metrics have ranges of [0, 1], \([0,\infty )\), and [0, 1], respectively. A higher PRI value indicates better segmentation, while lower values of VOI and GCE indicate better segmentation alignment with the ground truth.

5 Results

In this section, we present the quantitative results obtained by applying our image segmentation method to images from the selected datasets.

Table 3 presents the average performance achieved by our method concerning the metrics outlined in Section 4.2. This table corresponds to the evaluation of processed images from the ALL-IDB dataset.

Table 4 shows several examples of the ALL-IDB images processed with our approach.

Table 5 shows a summary of the scores achieved by our method on the datasets ALL-IDB2, CellaVision and JTSC. The scores for sensitivity, specificity, precision, accuracy, f-score and PRI are around 0.9. The values obtained for the metrics VOI and GCE are close to 0.0.

Tables 6, 7, and 8 showcase a selection of results achieved through the application of our method on the datasets ALL-IDB2, CellaVision, and JTSC. The nuclei of the WBCs are segmented, without including the cytoplasm in the ground truth of these datasets.

It is crucial to conduct a thorough comparison of our results with those documented in prior studies. In Section 6.1, we analyze and discuss our findings in relation to the outcomes reported in the existing literature.

6 Discussion

Within this section, we scrutinize the results outlined in the preceding Section 5, drawing comparisons with related works that utilize identical metrics and image datasets to assess their algorithms. Additionally, we delve into the robustness of our approach.

6.1 Comparison of our approach against methods of the state of the art

Table 9 shows the results obtained using our proposal and the reported ones in previous works by using the ALL-IDB dataset. Our proposal obtains the highest score for specificity. The scores for precision and accuracy are very close to the maximum possible. The values of sensitivity and F-score are low, regarding the results reported in the works cited. The performance of our method is lower for these metrics due to the following:

-

Our proposal segments the nuclei of the WBCs, while in the ground truth, the nuclei and the cytoplasm are segmented jointly;

-

The imbalance between the positive-class and negative-class pixels in several images damages the scores of sensitivity and F-score metrics. In these ones, the value of the true positives plays an important role.

The accuracy reached with our approach is the second best among all the previous works cited in Table 9. Hence, in general, we can claim that our proposal is quite competitive for WBC segmentation in images containing various WBCs.

Some important observations about the works presented in Table 9 are described below.

-

The method presented in [14] segments WBCs using information of color. However, the color is not processed as a feature because the color components are decoupled, and arithmetic operations are performed between them, as if they were intensity channels. It is also important to mention that the results presented are obtained using only the first 30 images of the ALL-IDB. To make a fairer comparison, we used the same 30 images from ALL-IDB and obtained the following results: 0.7103 for sensibility, 0.9997 for specificity, 0.9784 for precision, 0.9795 for accuracy, 0.8132 for F-score, 0.9604 for PRI, 0.1905 for VOI, and 0.0267 for GCE.

-

The Grabcut method presented in [16] is not entirely automated. Several parameters of the model need to be defined by the user beforehand. Additionally, the images need to be resized to \(256\times 256\) pixels for the method to be applicable. In contrast, our method eliminates the necessity for pre-established parameters as it automatically determines threshold values. Furthermore, it operates without necessitating any modifications to the image dimensions.

-

In [5], the VGG16 architecture is utilized, while in [6], the U-Net architecture is employed. It is evident that the performance achieved using these convolutional neural network architectures is not the highest, as indicated in Table 9. It is widely recognized that implementing a convolutional neural network incurs a substantial computational burden due to the extensive number of parameters that need adjustment during training and image processing. In contrast, our proposed approach exhibits a notably lower computational load, yet attains comparable or superior performance compared to the results reported in references [5] and [6].

Regarding the results of our method using cropped images, Tables 10, 11, and 12 show the performance comparison between the results of different methods with respect to our proposal on the datasets ALL-IDB2, CellaVision, and JTSC, respectively.

The related works present different approaches or methods to segment the nuclei of the WBCs, though it is important to remark to the following.

-

In Table 10, Hedge et al. [13] fulfill the highest values for accuracy and f-score. Nevertheless, the results reported are for 160 images only. The highest values for specificity and precision are obtained by Makem et al. [19]. Kumar and Vasuki [12] obtained the highest value for sensitivity. The references [19, 30] and [15] are based on the k-means algorithm; hence, the performance of these proposals depends on the initial values of the centers. This means that in each execution of the method, different results can be obtained. Quite differently, our method always returns the same results on every run.

-

In Table 11, the highest values for specificity and accuracy are obtained by Lu et al. [8]. They make use of two architectures of convolutional neural networks. For precision, Sudah and Geetha [16] reach the highest value; the best value for sensitivity is obtained by Nadeem et al. [20]. Bouchet et al. obtained the best value for the F-score. [8, 9] and [7] presented different architectures of convolutional neural networks; as we mentioned previously, the computational burden of implementing this type of method is high. Notice that the results obtained with our proposal are close to the results reported in the related works that use convolutional neural networks. The advantage of our method is that its computational load is low.

-

In Table 12, the highest values for specificity, precision, and accuracy is achieved by the method of Lu et al. [8]; Reenaa and Ameer [9] reached the best value for f-score. The highest value for sensitivity corresponds to Zhong et al. [33], but the results they reported are for 138 images of the JTSC dataset.

Color data is often employed to segment the WBCs; however, color is not used as a feature; references [12, 13, 15, 30] and [32] process color information by performing, as mentioned before, arithmetic operations between the color components, as if they were intensity channels. The arithmetic operations to perform and the color components are selected intuitively. Relying on the ability of a human expert to choose features of a problem always leaves uncertainty as to whether there are better ways to solve it.

One very important difference between our approach and those found in related works is that their methods focus on to segment WBCs in images containing several WBCs or cropped images with only one WBC per image. Their methods are designed to work only with one of these two types of images. We demonstrated that our model can process both kinds of images. The reference [16] is the only work, besides us, that presents segmentation results by processing these two kinds of images. However, as we mentioned above, the proposal of Sudha and Geetha [16] is not totally automated because it requires that the user defines previous parameters to process the images. For instance, within the steps of their methodology, the images are binarized, and the threshold value needs to be set manually. In constrast, in our proposal, the threshold value is computed automatically.

The Otsu and the K-means are usually used for image segmentation. Some of the works addressing WBC segmentation make use of these methods [30]. However, they are successful when applied to cropped images. When these algorithms are used on images with a few WBCs, like in ALL-IDB, the segmentation is poor.

The Otsu method works well on balanced problems, i.e., when the number of positive-class and negative-class pixels are similar. These assumptions are not true for images with one or a few WBCs. On the other hand, the K-means technique tends to build the groups with the same size. But in class imbalance problems, the sizes of the groups are very different. The above Otsu and K-means-based methods do not always segment correctly the images containing a few WBCs within the images.

To alleviate the above problem, the related works cited in Table 9 add color information so as to ease the detection of the WBCs.

Figure 9 shows two examples of images and their respective resulting images using the Otsu method, K-means, and our approach. The input images are (a) and (e), and the images (b) and (f) are obtained by using the Otsu method. The images (c) and (g) are obtained by applying the K-means method. The images (d) and (h) are obtained with our approach. It is easy to appreciate that in the images (a) and (e), the imbalance between the pixels that shape the WBCs and the pixels of the other elements of the image is huge; thus, techniques such as the Otsu method or K-means do not segment successfully images like these ones. Our method outperforms them.

The pixel class imbalance does not affect significantly the performance of our method. This is because the image is modeled as a set of three random variables that represent the pixels that shape the nuclei of the WBCs, the red blood cells, and the background. The pixels are classified by computing the probability of being part of one of these three pixel classes. Due to the amount of data, and according to the large number theorem, the probability distribution of the three random variables approximates a Gaussian distribution. Our method takes advantage of this observation, making it superior to other approaches that do not consider this.

6.2 Robustness of the proposed method

As mentioned in the introduction section, the main problems of segmenting the nuclei of WBCs are hue staining, brightness, and image scale.

The hue staining of the WBCs in the ALL-IDB2, CellaVision, and JTSC datasets is different between them; in the ALL-IDB dataset, the hue staining of the first 33 images is different of the remaining 78 images. However, our proposal is robust to hue staining, as the results obtained support this claim, because the images of the dataset are processed in gray scale, thereby, the chromatic characteristics of the images are not employed.

Regarding the image scale, our method is robust to image scale because the WBCs are segmented by selecting the pixels that belong to the highest intensity distribution; thus, it does not depend on the image scale. Hence, our approach successfully segmented the WBCs both in the ALL-IDB images and the cropped images datasets, where the WBCs were captured under different magnifications. That is, the WBCs captured in the ALL-IDB, proportional to the images’ size, are smaller than the WBCs captured in the cropped images datasets; nevertheless, our proposal successfully segments the nuclei, as the results shown in Tables 3 and 5 support our method.

In gray scale images, the brightness affects the features of the image. It is important to remark that the brightness between the ALL-IDB, ALL-IDB2, CellaVision, and JTSC is different. Similarly, to the image scale robustness, our model is robust to brightness because there are selected the pixels that belong to the highest intensity distribution. Here is where the image processing described in Section 3.1 is an important stage because, as mentioned above, the contrast of the image is improved without affecting the intensity distribution, as other techniques for contrast improvement do. For instance, the well-known histogram equalization technique improves the contrast of the images by adjusting the occurrences of each intensity level, such that all the occurrence levels have almost the same value, so the intensity distributions are modified. With our processing proposal, the contrast is improved by employing all the intensity levels but without modifying the shape of the intensity distributions, as shown in Figs. 5 and 6.

It is important to remark that our method is tested with the four datasets mentioned in the experimental setup section, while the works employed to compare our results do not use all the four datasets to validate their algorithms, as we did; hence, it is not clear how robust their algorithms are regarding the image scale, hue staining, and brightness.

For instance, Andrade et al. [30], Banik et al. [15], and Makem et al. [19] present their results using the ALL-IDB2, CellaVision, and JTSC datasets, but they do not report using the ALL-IDB dataset; hence, it is unknown how robust their algorithms are for image scale. Sudha and Geetha [16] use ALL-IDB and CellaVision datasets. As mentioned above, their model requires that the user defines a priori certain parameters to segment the WBCs, while in our proposal, the threshold value to binarize (segment) the WBCs is obtained with Algorithm 1, where the complexity of the algorithm is \(\mathcal {O}(nm)\), being n and m the number of rows and columns of the image, respectively.

As most of the image processing algorithms, our model depends on the image acquisition quality. That is, if the image is blurred, too noisy, or the WBCs are not clearly visible within the image, among other characteristics that degrade the quality of the image, the efficiency of our proposal would be affected. Nevertheless, because we obtain competitive results by performing experiments with the four most commonly employed datasets to validate algorithms for WBC nuclei segmentation, we claim that our model is robust to image scale, hue staining, and brightness.

7 Conclusions

Automatic segmentation of WBC nuclei in images is a problem that has been the subject of numerous studies in recent years. Most of the works found in the literature are designed for images that contain only one WBC, excluding cases in which two or more cells of this type are present. In addition, state-of-the-art methods focus on identifying and segmenting the entire WBC, without discriminating between the body and the nucleus. Also, several of these methods require the images to be cropped, or the background to have been pre-processed.

In this paper, we address these problems, for which we propose a method to segment the nuclei of WBCs, in images that contain one or more WBCs. Our method discriminates the body of the WBC and its nucleus, treating them as different elements. The segmentation achieved with our method is competitive with other state-of-the-art methods, but at a remarkably low computational cost.

In our method, firstly, we apply a variant of the Laplacian pyramid technique so as to decorrelate the pixels; hence, the contrast of the image is improved, and the distribution models of the random variables are more precise. From the images obtained, the intensity histograms are extracted. Considering the number of pixels, and based on the large number theorem, it is considered that the values of the pixels can be treated as generated by a random variable with Gaussian distribution. Taking advantage of this last observation, the Gaussian mixture model clustering method is applied to automatically calculate the threshold for binarizing the images.

To evaluate our method, it was applied to images of publicly available data sets. A quantitative and qualitative evaluation was carried out, comparing it with the results found in other papers. The results obtained demonstrate the effectiveness of the proposed method, which achieves a performance similar to or in some cases superior to that of other methods found in the literature. The metrics in which our proposal reaches the highest value are specificity, precision, accuracy, and PRI. On the other hand, as in the images of the data sets, the WBCs were segmented manually, and the nucleus and body of the WBC were considered a single object; our method obtains low values in the VOI and GCE metrics.

The qualitative results justify these results, due to the discrepancy when considering the entire WBC as a single object, while our method separates the nucleus from the rest of the cell. The results achieved are similar for images with a single WBC, or several of them. This proves its consistency and robustness to process images with one or several WBCs.

Future research paths include the treatment of overlapping WBC images. This is an open issue that affects the automatic counting of the WBCs and a modification of the method proposed in this paper to segment the cytoplasm of WBCs. This is because the size of the cytoplasm may provide data addressing possible cancerous cells.

References

Liu H, Cao H, Song E (2019) Bone marrow cells detection: a technique for the microscopic image analysis. J Med Syst 43:82. https://doi.org/10.1007/s10916-019-1185-9

Mishra S, Majhi B, Sa PK (2019) Texture feature based classification on microscopic blood smear for acute lymphoblastic leukemia detection. Biomed Signal Process Control 47:303–311. https://doi.org/10.1016/j.bspc.2018.08.012

Parente J (2019) Diagnosis for white blood cell abnormalities: leukocytes and leukopenia. Physician Assist Clin 4(3):625–635. https://doi.org/10.1016.j.cpha.2019.02.010

Al-Dulaimi K, Banks J, Nugyen K, Al-Sabaawi A, Tomeo-Reyes I, Chandran V (2021) Segmentation of white blood cell, nucleus and cytoplasm in digital haematology microscope images: a review-challenges, current and future potential techniques. IEEE Rev Biomed Eng 14:290–306. https://doi.org/10.1109/RBME.2020.3004639

Shahzad M, Umar AI, Khan MA, Shirazi SH, Khan Z, Yousaf W (2020) Robust method for semantic segmentation of whole-slide blood cell microscopic images. Comput Math Methods Med 2020:4015323

Li H, Zhao X, Su A, Zhang H, Liu J, Gu G (2020) Color space transformation and multi-class weighted loss for adhesive white blood cell segmentation. IEEE Access 8:24808–24818. https://doi.org/10.1109/ACCESS.2020.2970485

Li D, Yin S, Lei Y, Qian J, Zhao C, Zhang L (2023) Segmentation of white blood cells based on CBAM-DC-UNet. IEEE Access 11:1074–1082. https://doi.org/10.1109/ACCESS.2022.3233078

Lu Y, Qin X, Fan H, Lai T, Li Z (2021) WBC-Net: a white blood cell segmentation network based on UNet++ and ResNet. Appl Soft Comput 101:107006. https://doi.org/10.1016/j.asoc.2020.107006

Reena MR, Ameer PM (2021) Segmentation of leukocyte by semantic segmentation model: a deep learning approach. Biomed Signal Process Control 65:102385

Devi TG, Patil N, Rai S, Sarah CP (2023) Segmentation and classification of white blood cancer cells from bone marrow microscopic images using duplet-convolutional neural network design. Multimed Tools Appl. https://doi.org/10.1007/s11042-023-14899-9

Huynh HT, Dat VVT, Anh HB (2021) White blood cell segmentation and classification using deep learning coupled with image processing technique. In: Dang TK, Küng J, Chung TM, Takizawa M (eds) Future data and security engineering. Big data, security and privacy, smart city and industry 4.0 applications. Springer, Singapore, pp 399–410

Kumar PS, Vasuki S (2017) Automated diagnosis of acute lymphocytic leukemia and acute myeloid leukemia using multi-SV. J Biomed Imaging Bioeng 1(1):20–24

Hegde RB, Prasad K, Hebbar H, Singh BMK (2019) Development of a robust algorithm for detection of nuclei of white blood cells in peripheral blood smear images. Multimed Tools Appl 78:17879–17898

Mohd Safuan SN, Md Tomari MR, Wan Zakaria WN (2018) White blood cell (WBC) counting analysis in blood smear images using various color segmentation methods. Measurement 116:543–555. https://doi.org/10.1016/j.measurement.2017.11.002

Banik PP, Saha R, Kim K-D (2020) An automatic nucleus segmentation and CNN model based classification method of white blood cell. Expert Syst Appl 149:113211. https://doi.org/10.1016/j.eswa.2020.113211

Sudha K, Geetha P (2020) A novel approach for segmentation and counting of overlapped leukocytes in microscopic blood images. Biocybern Biomed Eng 40(2):639–648. https://doi.org/10.1016/j.bbe.2020.02.005

Bouchet A, Montes S, Ballarin V, Díaz I (2020) Intuitionistic fuzzy set and fuzzy mathematical morphology applied to color leukocytes segmentation. SIViP 14:557–564

Liu Y, Cao F, Zhao J, Chu J (2017) Segmentation of white blood cells image using adaptive location and iteration. IEEE J Biomed Health Inform 21(6):1644–1655. https://doi.org/10.1109/JBHI.2016.2623421

Makem M, Tiedeu A, Kom G, Kamdeu Nkandeu YP (2022) A robust algorithm for white blood cell nuclei segmentation. Multimed Tools Appl 81:17849–17874

Akram N, Adnan S, Asif M, Imran SMA, Yasir MN, Naqvi RA, Hussain D (2022) Exploiting the multiscale information fusion capabilities for aiding the leukemia diagnosis through white blood cells segmentation. IEEE Access 10:48747–48760. https://doi.org/10.1109/ACCESS.2022.3171916

Shahin AI, Guo Y, Amin KM, Sharawi AA (2018) A novel white blood cells segmentation algorithm based on adaptive neutrosophic similarity score. Health Inf Sci Syst 6(1):1–12. https://doi.org/10.1007/s13755-017-0038-5

Cao F, Liu Y, Huang Z, Chu J, Zhao J (2019) Effective segmentations in white blood cell images using \(\epsilon \)-SVR-based detection method. Neural Comput Appl 31:6767–6780. https://doi.org/10.1007/s00521-018-3480-7

Settouti N, Saidi M, Bechar MEA, Daho MEH, Chikh MA (2020) An instance and variable selection approach in pixel-based classification for automatic white blood cells segmentation. Pattern Anal Applic 23:1709–1726. https://doi.org/10.1007/s10044-020-00873-w

Abdurrazzaq A, Junoh AK, Yahya Z, Mohd I (2021) New white blood cell detection technique by using singular value decomposition concept. Multimed Tools Appl 80:4627–4638. https://doi.org/10.1007/s11042-020-09946-8

Sayed GI, Solyman M (2019) A novel chaotic optimal foraging algorithm for unconstrained and constrained problems and its application in white blood cell segmentation. Neural Comput & Applic 31:7633–7664. https://doi.org/10.1007/s00521-018-3597-8

Mahanta LB, Bora K, Kalita SJ, Yogi P (2019) Automated counting of platelets and white blood cells from blood smear images. In: Deka B, Maji P, Mitra S, Bhattacharyya DK, Bora PK, Pal SK (eds) Pattern recognition and machine intelligence. Springer, Cham, pp 13–20

Devi TG, Patil N, Rai S, Philipose CS (2022) Survey of leukemia cancer cell detection using image processing. In: Raman B, Murala S, Chowdhury A, Dhall A, Goyal P (eds) Computer vision and image processing. Springer, Cham, pp 468–488

Burt P, Adelson E (1983) The Laplacian pyramid as a compact image code. IEEE Trans Commun COM–31(4):532–540

Gonzalez-Ruiz V, Garcia-Ortiz JP, Fernandez-Fernandez MR, Fernandez JJ (2022) Optical flow driven interpolation for isotropic FIB-SEM reconstructions. Comput Methods Prog Biomed 221:106856. https://doi.org/10.1016/j.cmpb.2022.106856

Andrade AR, Vogado LHS, de MS Veras R, Silva RRV, Araujo FHD, Medeiros FNS (2019) Recent computational methods for white blood cell nuclei segmentation: a comparative study. Comput Methods Prog Biomed 173:1–14

Prinyakupt J, Pluempitiwiriyawej C (2015) Segmentation of white blood cells and comparison of cell morphology by linear and Naïve Bayes classifiers. Biomed Eng Online 14:63

Makem M, Tiedeu A (2020) An efficient algorithm for detection of white blood cell nuclei using adaptive three stage PCA-based fusion. Inform Med Unlocked 20:100416

Zhong Z, Wang T, Zeng K, Zhou X, Li Z (2019) White blood cell segmentation via sparsity and geometry constraints. IEEE Access 7:167593–167604. https://doi.org/10.1109/ACCESS.2019.2954457

Stark BA (2017) Studying moments of the central limit theorem. Math Enthus 14(1):53–76. https://doi.org/10.54870/1551-3440.1388

Garcia-Lamont F, Cervantes J, Lopez A, Rodriguez L (2018) Segmentation of images by color features: a survey. Neurocomputing 292:1–27. https://doi.org/10.1016/j.neucom.2018.01.091

Author information

Authors and Affiliations

Corresponding authors

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Garcia-Lamont, F., Lopez-Chau, A., Cervantes, J. et al. Nucleus segmentation of white blood cells in blood smear images by modeling the pixels’ intensities as a set of three Gaussian distributions. Med Biol Eng Comput 62, 2371–2388 (2024). https://doi.org/10.1007/s11517-024-03065-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11517-024-03065-4