Abstract

This paper explores technology integration and the role of teacher beliefs in this integration to assess a ‘smart-class’ initiative that was introduced in 3173 Grade 7–8 classrooms of 1609 public schools in India in 2017. It first reports on the impact of the initiative at the end of its first year, using a sample of 2574 children drawn from 155 project schools and 155 non-project schools. A two-level multivariate analysis did not indicate any significant effect of the project on student subject knowledge, attitude towards subject and subject self-efficacy beliefs. A follow-up interpretive study that used the open-ended responses of 170 project teachers and four in-depth case studies revealed that the e-content supplied supported some traditional beliefs of teachers while challenging others; the latter, however, led to resistance that hindered learning processes. Thus, both support and challenge seem to have led to a reproduction of the traditional classroom, resulting in no significant differences in outcomes between project and non-project classrooms. The paper calls for greater awareness among content developers of how their beliefs can subvert technology integration, and for supportive professional development of teachers that will help them incorporate technology in their pedagogical practice.

Introduction

Amidst growing concern about a ‘learning crisis’ in public schools in the developing world (World Bank 2018), countries such as India are turning to Information and Communication Technology (ICT)-led interventions in schools in the belief that technology by itself can improve learning levels (Negroponte et al. 2006). Informed by this “technocentric thinking” (Papert 1993), policy and practice have tended to focus on introducing ICT in schools (Trucano 2012, 2015) and assessing its impact on learning outcomes. But since any technology is part of a complex web of interactions among pedagogical, cultural and institutional practices, it is often difficult to identify and control the factors that influence the efficacy of ICT. Hence, it is no surprise that assessments of the impact of ICT on outcomes have shown “mixed evidence with a pattern of null results” (Bulman and Fairlie 2016)—positive results in some cases (Muralidharan et al. 2019; Naik et al. 2016) and no or negative effect in others (Fuchs and Woessmann 2004; Peña-López 2015). The realization that technology is enmeshed in human processes has led to the development of ‘Technology Integration’ as a framework to examine how technology might be used to improve learning (Liu et al. 2017). The pedagogical beliefs of teachers, who are ultimately responsible for using the technology to improve learning in their classrooms, are key to this process of integration (Tondeur et al. 2017). In this paper, we draw on ‘Technology Integration’ and the role of teacher beliefs in this process to assess a smart-class initiative that was introduced in 1609 public schools in one province in India in September 2017. 
We further illustrate how a program designed to improve learning outcomes can fail to meet its objectives in the absence of a clear commitment to Technology Integration through changing teacher beliefs and a clear understanding of how the assumptions made by those in charge of designing an ICT intervention interact with these beliefs.

Technology integration and teacher beliefs

The realization that technology can impact learning positively if it is part of cultural change in the system in which it is embedded is not new (Papert 1980, 1993; Selwyn 2011). The role of the key human actor, the teacher, in making technology part of a new teaching–learning culture has therefore attracted scholarly attention for quite some time (Lowther et al. 2008). Much of this attention, drawing particularly on Ertmer (1999, 2005), has focused on identifying and overcoming the first- and second-order barriers that teachers are likely to face as they weave technology into cultural change. In their review, Hew and Brush (2007) identified four first-order barriers that are external to the teacher (resources, institutions, subject culture, and assessment) and two second-order barriers (teacher attitudes and beliefs, and knowledge and skills). The first-order barriers, though formidable in certain contexts (O’Mahony 2003; Pelgrum 2001), were seen as less significant than the second-order barriers (Dexter et al. 2002; Ertmer 1999; Newhouse 2001; Zhao et al. 2002; Judson 2006).

The focus on the second-order barriers has led to the exploration of a number of dimensions associated with teacher attitudes, beliefs, knowledge and skills. Teacher-student relationships, the self-efficacy beliefs of teachers and students, and teachers’ technological-pedagogical-content knowledge and beliefs have been shown to mediate the technology-learning link (Ponticell 2003; Haney et al. 2002; Wozney et al. 2006; Buabeng-Andoh 2012; Taimalu and Luik 2019). The positive role of teachers’ constructivist beliefs (Judson 2006) and of teacher-directed use of ICT by students (Miranda and Russell 2012) has also been studied. Tondeur et al. (2017) summarize the key role that teacher beliefs play in effective technology use: “Ultimately, teachers’ personal pedagogical beliefs play a key role in their pedagogical decisions regarding whether and how to integrate technology within their classroom practices” (p. 556). They show that the relationship is bi-directional. On the one hand, technology-rich learning experiences can shift teachers towards student-centered beliefs. On the other, teachers with such beliefs are more likely to use technology for student-centered learning. In both cases, however, the relationship is affected by perceived barriers or beliefs, and building it requires sustained professional development.

The technology integration literature also indicates that teacher attitudes, beliefs, knowledge and skills have to be seen in relation to the contexts in which teachers work. Thus, the need to take teachers’ perspectives into account while implementing technology in classrooms (Muller et al. 2008), involve teachers in decision making about technology in the classroom (de Koster et al. 2017), and address institutional complexities that affect teachers (Miglani and Burch 2019) has also been noted. Liu et al. (2017) sort these teacher-related and context-related factors into three clusters: teacher characteristics, school characteristics, and contextual characteristics; taken together, these influence technology integration, which Liu and colleagues operationalize as the use of technology to support a “variety of instructional methods” (p. 798) in the classroom, as measured by a self-report on the frequency of the use of technology to support instruction. The teacher characteristics they consider include teaching experience with technology, level of education, teaching experience and gender. But the influence of the three clusters on technology integration is mediated by two other teacher-related factors: teacher confidence and comfort in using technology, and teacher use of technology outside the classroom. Thus, teacher-related factors play an important role in the effective use of technology for learning.

However, in spite of this recognition of the role of teacher beliefs and other teacher-related factors in technology integration, successfully implementing a technology-enabled classroom remains complex. Teachers’ stated beliefs have not always predicted their use of technology in practice. Han et al. (2018) found that South Korean teachers, who held constructivist pedagogical beliefs similar to those of teachers in the United States, were unable to convert their beliefs into technology-enabled learning practices. In a case study of an immersive virtual classroom environment, Mills et al. (2019) found that although training changed teacher beliefs, it did not change classroom practice. Scherer and Teo (2019) found a significant positive relationship between perceived ease of use and behavioral intention to use technology, but others report that teachers who value technology in their personal lives and use it productively there may nonetheless be unable to integrate it effectively into their classroom practice (Marwan and Sweeney 2019; Nath 2019). Ursavaş et al. (2019) called for a renewed focus on subjective norms (“an individual’s perceptions regarding the approval or disapproval of important others of a target behaviour”, p. 2503) to better understand intention to use technology and the conversion of beliefs into practice, especially among pre-service teachers. Hosek and Handsfield (2019) have shown how school-level imperatives, in this case policies related to critical digital literacy, led to a “disconnect between their [teachers’] theoretical and pedagogical beliefs and their actual classroom decisions regarding student participation in their digital classrooms” (p. 10).
These studies show that though teacher beliefs may be central to the process of facilitating or hindering technology integration, their interaction with a number of contextual factors still needs careful study through an examination of the classroom practices of teachers and students (Matos et al. 2019) or of teacher decision-making processes (Kopcha et al. 2020). An interaction that seems to be missing in the literature discussed here is that between the beliefs of teachers and the assumptions of content developers that are inferred by the teachers as they use the material that is supplied to them. It is on this interaction, in a public system that had mandated a smart-class intervention, that we focus in this paper.

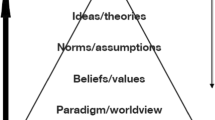

The “technocentric thinking” that informs educational public policy rests on an uncritical faith in the ability of technology to improve learning outcomes. However, given the mixed evidence about the impact of technological interventions, it is reasonable to argue that any ICT-led intervention has to be carefully assessed for its impact on learning before the processes that influence the relationship between the intervention and its outcomes are explored. Drawing on this reasoning, on the importance attached to teacher-related factors by Liu et al. (2017), and, more specifically, on the focus on teacher beliefs about teaching and learning highlighted by Tondeur et al. (2017), we derive the following framework to study technology integration and the role of teacher beliefs in the Smart-Class Initiative (hereafter SCI) under study (Fig. 1).

Research questions

SCI focused mainly on Mathematics and Science (it also included content in the local language), and hence we use learning in these two subjects to assess academic outcomes. ICT is also expected to influence non-cognitive competencies in children; specifically, attitudes to the subjects being taught and self-efficacy beliefs have been found to be strong predictors of academic success (Nicolaidou and Philippou 2003; Li 2012; Uitto 2014). Students’ prior achievement and teacher technology self-efficacy (Laver et al. 2012) can also influence student outcomes. In addition, academic performance can be influenced by student factors such as caste, gender, and parental education and occupation (Kingdon 2002; Pritchett 2013), by the availability of educational reading material at home (Marjoribanks 1996), and by attendance at paid private tuition (Dongre and Tewary 2015). Therefore, we formulate our first question:

1. How do children who have been exposed to SCI classrooms for one year compare with children in non-SCI classrooms in terms of academic performance in Math and Science, and certain non-cognitive competencies such as attitude to Math and Science, and Math and Science self-efficacy beliefs, after controlling for prior academic achievement, student factors, availability of reading material at home, and private tuitions?

Answering this question, which derives from the stated purpose of the project (namely, improving academic outcomes through digital classrooms), should generate an objective assessment of SCI outcomes. A study of how teacher beliefs and practices interact with the introduction and implementation of the technology should then help explain the outcomes so assessed. This leads to our second question:

2. How does the process of technology integration interact with teacher beliefs about teaching and learning, and how can this interaction explain the objective knowledge generated by the assessment of SCI outcomes?

Method

SCI was launched in September 2017 in 3173 classrooms of Grades 7 and 8 (age group 13–14) in 1609 schools. Each classroom was supplied with one projector, one laptop, one infrared camera, one speaker, one stylus pen, one laser pointer and one whiteboard (which acted as a smartboard), and each school received a single wireless router shared by both classrooms. The e-content for the program (Fig. 2) was prepared by a private company, using the textbook as a base, in collaboration with government officials. The content was certified for use by the government agency in charge of curriculum development. Teachers were trained over two days in the use of the package, and a Technical Support Person from the hardware vendor was deployed in each school for the first three months.

Phase-1: comparative assessment of cognitive and non-cognitive outcomes

The assessment of cognitive and non-cognitive outcomes was carried out with two groups of children: one group studying in SCI-enabled schools and the other in non-SCI schools. The sample size was determined using Optimal Design Plus Empirical Evidence Software (Raudenbush et al. 2011). Assuming that school-level factors explained 25% of the variance (i.e. ICC = .25) (Spybrook et al. 2011) and that five students per school would be tested, we estimated that 306 schools were required for a minimum detectable effect size of .2 (significance level = .05, power = .8). The schools for the study were randomly selected from the list of 5112 schools that had applied for SCI when it was announced in mid-2017. Thus, the treatment schools were schools that had been granted SCI, whereas the control schools were those that had intended to adopt SCI but were not provided the program. A total of 310 schools (155 in each group) were selected. Students from Grade-8 were evaluated as part of the study. The evaluation instruments, consisting of a subject test and a survey of attitudes and self-beliefs, were administered exactly one year after SCI was installed in the treatment schools, in September 2018. Test and survey administration was supervised by a test supervisor, and all data were collected online. If a school had fewer than five students in Grade-8, it was dropped; if it had 5 to 10 students, all of them took the test; and if it had more than 10, the test supervisor selected 10 students at random.
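The power calculation and the within-school sampling rule described above can be sketched in Python. The `mdes` function is a normal-approximation version of the standard minimum-detectable-effect-size formula for a two-level design in which treatment is assigned at the school level; Optimal Design itself works with t distributions, so its output can differ slightly. The equal split of schools between the two groups (`p_treated = 0.5`) is our assumption, and both function names are hypothetical helpers for illustration, not the tools the study used.

```python
import random
from math import sqrt
from statistics import NormalDist


def mdes(n_schools, n_per_school, icc, alpha=0.05, power=0.80, p_treated=0.5):
    """Approximate minimum detectable effect size (in SD units) when
    treatment is assigned at the school level (two-level design).

    Normal approximation: the multiplier z_{1-alpha/2} + z_{power} stands in
    for the t-based multiplier used by dedicated software.
    """
    z = NormalDist()
    multiplier = z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)
    # Between-school share (icc) plus within-school share spread over the
    # students tested per school, divided by the effective number of schools.
    variance = (icc + (1 - icc) / n_per_school) / (
        p_treated * (1 - p_treated) * n_schools
    )
    return multiplier * sqrt(variance)


def select_students(roster, rng=random):
    """Within-school sampling rule (hypothetical helper):
    fewer than 5 Grade-8 students -> drop the school (return None);
    5 to 10 students -> test all of them;
    more than 10 students -> test 10 chosen at random.
    """
    if len(roster) < 5:
        return None
    if len(roster) <= 10:
        return list(roster)
    return rng.sample(list(roster), 10)


# Parameters reported in the text: 306 schools, five students per school,
# ICC = .25, alpha = .05, power = .8.
print(round(mdes(306, 5, 0.25), 3))
```

With the parameters reported above, the approximation gives an MDES of about 0.20, in line with the stated target of .2.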

Subject tests

The student test was developed in collaboration with the Education Department of the province. It had 15 questions in mathematics, 15 in science and five on the basics of computers (a module taught to all children within mathematics); 30% of the questions assessed application of knowledge. A question bank of 50 questions for mathematics, 50 for science and 20 for computers was prepared, and the test questions were selected randomly from this bank. In the schools, the test was supervised by the test supervisor; the teacher was not present in the testing room.
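The random draw of test items from the question bank can be sketched as follows. The text does not say whether a single fixed form was drawn for all students or a fresh draw made per student, and it does not say how the 30% share of application questions was enforced; this sketch assumes one fixed form and ignores the application quota. The item identifiers (e.g. `mathematics-07`) and the `draw_test_form` name are hypothetical.

```python
import random

# Bank sizes and items per subject, as described in the text.
BANK_SIZES = {"mathematics": 50, "science": 50, "computers": 20}
ITEMS_ON_TEST = {"mathematics": 15, "science": 15, "computers": 5}


def draw_test_form(seed=None):
    """Draw one test form by sampling items without replacement from each
    subject's bank. Item IDs such as 'mathematics-07' are placeholders."""
    rng = random.Random(seed)
    form = {}
    for subject, bank_size in BANK_SIZES.items():
        bank = [f"{subject}-{i:02d}" for i in range(1, bank_size + 1)]
        form[subject] = rng.sample(bank, ITEMS_ON_TEST[subject])
    return form


form = draw_test_form(seed=2018)
print({subject: len(items) for subject, items in form.items()})
```

Seeding the generator makes the draw reproducible, which is useful if the same form has to be regenerated for auditing.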

Attitude and self-efficacy surveys

The questionnaires measuring students’ attitude and self-efficacy beliefs towards the subject were adapted from standard scales. The 15-item attitude to mathematics scale by Mattila (2005) (Metsämuuronen 2009; as cited in Metsämuuronen 2012) was adapted for both mathematics and science. It measured students’ liking of the subject (5 items), self-concept in the subject (5 items) and perceived utility of the subject (5 items). Self-efficacy beliefs towards the subject were measured using the 8-item scale from the Motivated Strategies for Learning Questionnaire by Pintrich et al. (1991). To measure students’ non-cognitive competencies towards technology, a 9-item survey was administered. The instrument consisted of a 5-item scale adapted from Liou and Kuo (2014), which measures students’ motivation and self-regulation towards learning technology, and a 4-item scale measuring students’ self-efficacy beliefs about using technology, drawn from Venkatesh et al. (2003) and Gu et al. (2013). All survey items were translated into the regional language, and translation validity was confirmed by back-translating the questionnaire into English. Responses on students’ self-efficacy beliefs towards technology were recorded on a 6-point Likert scale, whereas all others were recorded on a 5-point scale.

Scores in two State Academic Tests conducted by the government in January 2017 and April 2018 (SAT1 and SAT2, with reading, writing and numerical ability scores and subject scores in science and math) were also collected as measures of prior academic achievement. SAT1 was taken when both groups of children were in Grade-6, before SCI was introduced; SAT2 was taken when they were in Grade-7, by which time the SCI children had had around seven months of exposure to SCI. The test administered under the present study in September 2018, when the children were in Grade-8, provided a third measure.

Phase-2: interpretive understanding of teacher beliefs and technology practice

One hundred and seventy teachers teaching in SCI classrooms filled out a semi-structured survey form that asked about their pre-SCI practices and their current practices; the form was pretested in eight schools before finalization. In addition, pilot case studies, spread over six days, were conducted in two SCI schools to develop a case study protocol covering teacher beliefs and use of SCI, student practices, and home-school interactions. After the Phase-1 data had been analyzed and the schools ranked by performance, two schools were selected at random from the top ten and another two from the bottom ten. These four schools were the sites for in-depth observations and teacher interviews. After studying the individual cases, we looked for contrasts between the two sets of schools, examining in particular the pedagogical practices associated with SCI, methods of assessment and the pacing of lessons. However, the practices turned out to be very similar across the four schools. Hence, in the following discussion we treat all four case studies as a set; the four schools are denoted [S1], [S2], [S3] and [S4]. Data were collected mainly through classroom observations and individual and group interviews of teachers. In addition, all four administrators in charge of SCI and the three-member SCI content development core team were interviewed. The analytic approach drew on the coding and thematic analysis procedures recommended for observational and interview data (Elo and Kyngas 2007; Ryan and Bernard 2003; Bazeley 2013). We used the broad categories identified by Tondeur et al. (2017), specifically ‘Technology enabling beliefs, and beliefs enabling technology integration’, ‘Beliefs as perceived barriers, and perceived barriers related to beliefs and technology use’, and ‘Alignment between beliefs and practices’, to guide the development of the themes discussed below.
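The selection of the four case-study schools (two drawn at random from the ten best-performing schools and two from the ten worst-performing) can be sketched as below. The text does not name the score used for ranking; the sketch assumes a single numeric score per SCI school (for instance, a mean Phase-1 test score), and `pick_case_schools` and the demo scores are hypothetical.

```python
import random


def pick_case_schools(school_scores, k=2, pool=10, seed=None):
    """Rank schools by score and draw k at random from the top `pool`
    and k at random from the bottom `pool`.

    `school_scores` maps a school ID to a performance score; which score
    the study used is an assumption here.
    """
    rng = random.Random(seed)
    ranked = sorted(school_scores, key=school_scores.get, reverse=True)
    top, bottom = ranked[:pool], ranked[-pool:]
    return rng.sample(top, k), rng.sample(bottom, k)


# Hypothetical scores for 155 SCI schools, for illustration only.
demo_rng = random.Random(0)
scores = {f"school-{i:03d}": demo_rng.uniform(0, 35) for i in range(155)}
high, low = pick_case_schools(scores, seed=42)
print(high, low)
```

Drawing at random within each pool, rather than taking the single best and worst schools, avoids selecting outliers while still contrasting high and low performers.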

Analysis and findings

Phase-1: comparative assessment of cognitive and non-cognitive outcomes in SCI and non-SCI classrooms

Overall, 2574 students from 310 schools responded to the survey and test: 1314 students from 155 SCI schools and 1260 students from 155 non-SCI schools. Two students from two different SCI schools did not complete the survey but took the tests. Table 1 summarizes the respondents’ demographic information. Most of the children’s parents (70%) had not studied beyond Grade-8, and the fathers of most students worked in agriculture. Very few students reported attending paid tuition. Fewer than a third of the respondents indicated that their parents purchased additional reading materials for them. SAT1 and SAT2 scores were available for 2364 of the 2574 students (1206 in SCI schools and 1158 in non-SCI schools).

We conducted a two-level multivariate analysis to determine the effect of SCI on student knowledge, attitude and self-efficacy beliefs while controlling for individual student background and school-level influences. This approach accounts for the effects of individual student-level characteristics (gender, caste, parental background, etc.) and school-level factors (teacher, school facilities, school management, etc.) on student outcomes. Data analysis was performed in Mplus 8.3 (Muthén and Muthén 2017) using the maximum likelihood estimator with robust standard errors (MLR). Confirmatory factor analysis (CFA) was performed for the measures of ‘attitude to subject’ and ‘subject self-efficacy beliefs’. CFA indicated that responses to two subscales of attitude to subject, liking of the subject and self-concept in the subject, were highly correlated with each other and with self-efficacy beliefs towards the subject (r > .9, p < .05 for both Science and Math). Because of this high correlation, the liking and self-concept subscales were dropped from further analysis. We computed the reliabilities of the remaining scales using both Cronbach’s alpha (Geldhof et al. 2014) and the Spearman-Brown formula (Muthén 1991). Model fit was assessed using the following criteria: root mean square error of approximation (RMSEA) less than .05, comparative fit index (CFI) greater than .95, Tucker-Lewis index (TLI) greater than .95, and standardized root-mean-square residual (SRMR) less than .08 (Hu and Bentler 1999). Table 2 summarizes student responses to the attitude and self-efficacy surveys.
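For readers less familiar with the two reliability indices, they can be computed as below. Cronbach’s alpha for k items is α = k/(k − 1) · (1 − Σσᵢ²/σ²_total); the Spearman-Brown formula ρ* = mρ/(1 + (m − 1)ρ) projects the reliability of a measure lengthened m-fold, and in multilevel work it is often applied with ρ = ICC and m = students per school to gauge the reliability of a school mean. The text does not describe exactly how these were applied in Mplus, so this is an illustrative sketch; the function names and toy responses are hypothetical.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of totals).
    Sample variances are used throughout; the ratio is the same if
    population variances are used consistently instead.
    """
    k = len(responses[0])
    items = list(zip(*responses))  # regroup scores by item
    item_var_sum = sum(variance(item) for item in items)
    total_var = variance([sum(r) for r in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)


def spearman_brown(reliability, m):
    """Projected reliability when a measure is lengthened m-fold."""
    return m * reliability / (1 + (m - 1) * reliability)


# Toy 5-point Likert responses: four hypothetical students, three items.
toy = [[4, 5, 4], [2, 2, 3], [5, 4, 5], [3, 3, 2]]
print(round(cronbach_alpha(toy), 2))
print(round(spearman_brown(0.25, 5), 3))
```

The second call shows, for example, how an ICC of .25 with five students per school would translate into the reliability of a school mean under the Spearman-Brown formula.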

The intraclass correlation coefficient (ICC), a measure of the variation in student responses attributable to school-level factors, is high enough for the survey items to require multilevel analysis (Muthén 1991). Table 3 summarizes the scores obtained by the students in the test and in the annual state academic tests.
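The ICCs reported here come from the multilevel models in Mplus. As a rough, model-free illustration of what the ICC measures, the one-way ANOVA estimator ICC(1) = (MSB − MSW)/(MSB + (n₀ − 1) · MSW) can be computed directly from students grouped by school, where n₀ is the adjusted average group size for schools of unequal size. The design effect 1 + (n̄ − 1) · ICC then shows how much clustering inflates sampling variance relative to simple random sampling. The function names and toy data are hypothetical.

```python
from statistics import mean


def icc1(groups):
    """One-way ANOVA estimate of the intraclass correlation.

    `groups` is a list of lists: the scores of the students in each school.
    """
    scores = [x for g in groups for x in g]
    n_total, n_groups = len(scores), len(groups)
    grand = mean(scores)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    ms_between = ss_between / (n_groups - 1)
    ms_within = ss_within / (n_total - n_groups)
    # Adjusted average group size, which handles schools of unequal size.
    n0 = (n_total - sum(len(g) ** 2 for g in groups) / n_total) / (n_groups - 1)
    return (ms_between - ms_within) / (ms_between + (n0 - 1) * ms_within)


def design_effect(icc, avg_cluster_size):
    """Variance inflation from sampling students in clusters of a given size."""
    return 1 + (avg_cluster_size - 1) * icc


# Toy data: three hypothetical schools with four students each.
schools = [[3, 4, 3, 4], [6, 7, 6, 7], [9, 10, 9, 10]]
print(round(icc1(schools), 3))
print(design_effect(0.25, 10))
```

In the toy data almost all variation lies between schools, so the estimate is close to 1; with an ICC of .25 and ten students per school, the design effect is 3.25, which is why ignoring clustering would understate standard errors.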

A dummy variable indicating presence of SCI was modelled at the school level. Thus, a significant coefficient of the SCI dummy variable would indicate the effect of SCI on the students’ performance on subject tests or subject-specific attitude and self-efficacy belief surveys. The analysis was performed separately for each of the topics Science, Mathematics and Technology. All three models (Figs. 3, 4, 5) were a good fit as per criteria provided by Hu and Bentler (1999).
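The model-fit decision rule stated earlier can be written as a simple check. The cut-offs below are exactly those given in the text; the fit indices for the three models are not reported in this section, so the values in the usage example are hypothetical, and `check_fit` is an illustrative helper rather than Mplus output handling.

```python
# Cut-offs exactly as stated in the text:
# RMSEA < .05, CFI > .95, TLI > .95, SRMR < .08.
CUTOFFS = {
    "rmsea": ("<", 0.05),
    "cfi": (">", 0.95),
    "tli": (">", 0.95),
    "srmr": ("<", 0.08),
}


def check_fit(indices):
    """Return {index: passed} for each fit index plus an overall verdict."""
    results = {}
    for name, (direction, cutoff) in CUTOFFS.items():
        value = indices[name]
        results[name] = value < cutoff if direction == "<" else value > cutoff
    results["good_fit"] = all(results.values())
    return results


# Hypothetical fit indices, for illustration only.
print(check_fit({"rmsea": 0.03, "cfi": 0.97, "tli": 0.96, "srmr": 0.04}))
```

Requiring all four indices to pass, rather than any one, mirrors the joint use of the criteria in the text.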

The results of the analysis indicated no significant effect of SCI on student subject knowledge (Science: β = −.005, p = .968 & Math: β = −.087, p = .462), attitude towards subject (Science: β = −.162, p = .211 & Math: β = −.057, p = .653) and subject self-efficacy beliefs (Science: β = −.167, p = .197 & Math: β = −.053, p = .684). Analysis also indicated no significant effect of SCI on knowledge of ICT (β = −.135, p = .263) and self-efficacy beliefs towards either learning (β = −.018, p = .882) or using (β = −.092, p = .458) technology. Thus, there was no significant difference in student cognitive and non-cognitive outcomes when comparing schools with SCI with those without SCI. But we did find some student-level factors that had significant positive influence on learning outcomes. Noteworthy among these were parental education (father educated beyond Grade-8) and availability of additional reading materials at home.

Phase-2: interpretive understanding of teacher beliefs and technology practice

We now turn to an interpretive understanding of the interaction between SCI and teacher beliefs and practices, to arrive at a few tentative explanations for the failure of SCI to leverage technology for quality improvement. The content that made up the ‘SCI package’ was developed by a private agency that used the official textbooks as a base. It was certified for use by the government agency in charge of curriculum development. The overall theme that emerges from the discussion below is that the beliefs of and the assumptions made by the designers seem to have played a crucial role in either supporting traditional beliefs of teachers or challenging them—the former leading to a reinforcement of the features and effects of a traditional classroom, but the latter leading to resistance that hinders learning processes. In the absence of supportive interventions to ensure technology integration, both responses have led to a reproduction of the traditional classroom, thus resulting in no significant differences in outcomes between SCI and non-SCI classrooms.

SCI reinforcing traditional beliefs about importance of ‘knowing content’

Public schooling in India has traditionally considered ‘knowing content’ more important than the process of learning (Kumar 1993); the textbook has been the key support for this belief among teachers and students (Kumar 1988). The structuring of the e-content in SCI seems to have reinforced this common belief, with the ‘textbook culture’ being replicated by the teachers through a construction of the traditional textbook as “lacking in resources to explain topics,” “time consuming,” “deficient in multiple representations of knowledge,” and of SCI content as a superior alternative. Two factors have facilitated such a construction. First, the e-content, by failing to exploit the dynamic potential of technology and relying on converting text and printed visuals into electronic form (for example, through the extensive use of pdf documents), conveys the impression that it is, in the words of one teacher, a “more engaging text.” As one of the key content developers commented, “Teachers are used to textbooks; so we have followed the content in use, but we want to make things easy for them, and so give as much detail as possible.” The latter belief spills over into assumptions about the role and autonomy of the teacher, which we take up later.

Second, a key feature of the “more engaging text” noted by most teachers is the gamification of assessment that the developers built in. Paradoxically, this has only reinforced the primacy of knowing content over the process of learning. A good example is the set of SCI quizzes that follow the so-called “KBC format” (see Footnote 1). A typical observation from [S1], a class of 24 students, is described below. A student is asked to come up and navigate to KBC with the stylus on the smartboard. After KBC is opened, the teacher initiates a ‘participation round’, in which many children try their hand at answering one question each. One student gets up, answers a question and sits down without waiting for confirmation that the answer is correct. The process continues with students coming up one by one. The process is quite fast. Both the students and the teacher are eager to get to the right answer quickly. When the answer is wrong, the teacher says, “Find out the right answer.” Then the ‘regular round’ starts. On average, a student gets about five questions right. One girl gets 10 questions correct, wins the quiz and the class claps. Around six students mark the wrong answer on their very first attempt; they go back to their seats without saying anything. This pattern is repeated in the other three case study schools, with minor variations. This method of assessment might work well if the learning process were fairly robust and if students received feedback on why some of their answers were wrong. In the absence of these conditions, the gamification built into SCI reinforces the traditional neglect of the process of learning, focusing instead on the “what” of learning, even, as we observed, to the extent of students memorizing the right answers that appeared on the screen.
In other words, the technological features and a traditional belief in the primacy of ‘knowing content’ reinforced each other to reproduce the features of a traditional classroom.

SCI reinforcing beliefs about inequalities in learning

Given the inequalities in levels of learning among children within the same classroom (GCERT 2015), one of the stated objectives of SCI was to ensure that all children learned. In the SCI classroom, the teachers’ continued focus on getting the answers right, which the software reinforces, tends to respond better to the needs of the “cleverer children” [teachers in S1 and S3 and teacher survey]. “The weaker children do not take much interest. Giving individual attention to such children requires a lot more time than is available.” This is echoed by a teacher in [S4] who in addition blames the socio-economic background of some children for their poor learning and inability to capitalize on the possible benefits of SCI. These beliefs are no different from what one would expect in a typical government school—the consequence is that the disadvantages of a traditional classroom get reproduced in spite of a technology that ostensibly was meant to improve learning levels of all children, regardless of their socio-economic condition. The categorization of children as described here has another consequence, often found in traditional classrooms. The teachers continue to rely on what they call “overall assessment” of the class; the more active participation of the academically better children in operating the software or in the assessment exercises is equated with “class performance.” This may work against the interests of those who are academically weaker and unable to keep up with the pace of instruction.

The academic inequalities that characterize the classrooms would have demanded a degree of personalization of instruction, but in its absence, SCI reinforces the teachers’ belief that an overall assessment is sufficient. The key problem, which the teachers do acknowledge but seem unable to address, is that many students seem to be poorly equipped to deal with the demands made by the syllabus of the grades in which they are studying. Paradoxically, when the students are thus underprepared, the teachers tend to focus on the right answers and leave it to the students to figure out the process. Thus, inequalities in prior preparation carried into the classroom and a discourse that focuses on “knowing content” work against many children in both SCI and non-SCI classrooms.

SCI challenging ideas of teacher autonomy: teacher responses as accommodation

The interaction of SCI with teacher beliefs is nuanced; it is not that it always reinforces traditional teacher beliefs. In some cases, it challenges them. This effect, unintended as the interviews with officials revealed, provokes accommodative responses, as in the case of challenge to teacher authority and autonomy, or resistances that take the shape of conversational performances substituting for dialogue. We discuss the first response in this section. As noted earlier, SCI content has been interpreted as a “more engaging text.” The theory section is a repetition of the textbook, but the “engaging” part comes from the videos and animations that accompany the text, and the gamified assessments. In an effort to make things easy for the teacher, the software, through its animations, substitutes for what a teacher would do. Given the limitations of space, we describe just one vignette from a language class, a lesson on a poem. After the ‘theory’ portion, which the students read on the screen as the teacher moved around the class, the sections that followed were the poem set to music; “Explanation of the Poem”, during which the teacher often paused the video to ask for the meanings of a few words; and the poem but with a few blank spaces which the students were required to complete orally. As this was happening, the teacher joked with the class, “Are you reading properly? This is what you might be asked in the exam.” Finally, the teacher announced, “We will play the quiz.” This proceeded rapidly, with no discussion of the wrong answers.

A number of experiences similar to this have shaped a new understanding of teacher autonomy and pedagogical practice. As many teachers note in the survey, “Everything is there; we just have to play it.” One teacher explained this view: “If the teacher has to give her own explanation, the audio explanation in SCI would become redundant and we might be seen as not using the resource; if we do not give the explanation, then we just have to implement the program.” Many teachers have reacted with an accommodative response that ascribes a dominant, almost messianic, role to the ‘SCI package’. The implementer role that teachers adopt as a consequence is justified by the visible increase in student engagement. Learning is then expected to follow from this higher engagement, an assumption based more on hope than on classroom realities. This behavior is consistent with the beliefs of the SCI administrators interviewed: “we want systems that teachers can implement with ease” (emphasis added). Such beliefs, together with the content developers’ assumption that technology had to “make everything simple for the teachers” and the lack of training in the pedagogical use of the technology, serve to construct the teacher as merely the implementer of a package. This has implications, as yet poorly understood, for the teacher as someone who has pedagogical autonomy and is expected to integrate technology into ongoing pedagogical practice.

SCI challenging teacher-centric beliefs: conversational performance substituting for learning dialogue

Through its design, SCI challenged the teacher-centered belief that lecturing is the dominant method. But when teachers resisted this challenge, learning suffered. In a mathematics class [S3], the teacher manipulated different geometric shapes and tools on the smartboard while keeping up a stream of one-way talk, punctuated by questions. In a geometry class [S2], the teacher began with a series of content-related questions that required yes or no answers. As he used the geometry toolbox of the smartboard, his questioning followed a pattern: a student who was asked a question had to stand up and answer; if the answer turned out to be wrong, another student got a chance to answer, but the first student remained standing. This pattern continued until the right answer was given. These two episodes illustrate a practice common across the schools: an attempt, in response to the challenge posed by the demonstration and interactive possibilities of the technology, to reinstate the belief that teacher-centered ‘talk’ is superior to other modes of delivery. The consequence was that the pattern of interaction was no different from what one would observe in a traditional classroom; only the smartboard had replaced the blackboard. The conversational performance, however, created the image of an interactive classroom: students did respond with answers, though not necessarily the right ones. The focus remained on getting the content right. Once again, in spite of interactive possibilities that could have led to better technology integration, beliefs about the ‘right’ pedagogy and its goals tended to reinforce the classroom as a place where genuine democratic conversation focused on understanding is difficult.

Discussion and conclusion

This paper has examined a large smart-class initiative (SCI) in a public schooling system with a view to understanding the relationship among technology integration, teacher beliefs and teachers’ interpretation of the content made available to them. Consistent with studies that do not report a significant impact of technology-led initiatives on student academic or non-cognitive outcomes, we did not find a significant difference between SCI classrooms and non-SCI classrooms. We then explored the reasons for this finding by studying how teachers’ pedagogical beliefs interacted with a new technology to reproduce traditional classroom processes and effects in an environment that was ostensibly technology-rich. The pedagogical beliefs that teachers hold are complex (Ertmer and Ottenbreit-Leftwich 2010) and are shaped by the formative processes that teachers have gone through and the contexts in which they work. Such beliefs may broadly be seen as teacher-centered or student-centered (Tondeur et al. 2008). In the strongly hierarchical pedagogical system that obtains in many Indian public schools, practices tend to be teacher-centric and teachers tend to be “strict” (Tiwari 2015). Classrooms are characterized by a dominant role for the teachers, and negative teacher behaviors are often present (Tiwari 2015, 2018; Anand 2014), prompting India’s National Curriculum Framework to call for teacher beliefs to move towards student-centeredness (NCERT 2005, p. 82). Generally speaking, at least in the public system, teachers are less likely to hold constructivist beliefs and to be highly active users of technology (Ertmer et al. 2015). This context demands that those in charge of introducing technology in the public system be aware of how the assumptions made by content developers and trainers interact with the traditional beliefs of teachers. Tondeur et al. (2017) note that, over time, teachers’ use of technology in their practice would lead them to adopt more student-centered beliefs, which in turn would influence technology integration. However, the beliefs of those in charge of designing and implementing ICT-led interventions in the public system, which form part of the contextual factors (Tondeur et al. 2017), may play a significant role in supporting or hindering such technology integration. Even an idea such as gamification, for which there is positive evidence (Deterding et al. 2011; Dicheva et al. 2015), can easily be subverted, as shown in this paper, if the developers are not aware of the need for a functional integration of pedagogy and play (Tulloch 2014).

Governments in countries such as India have come to rely on vendors and other agencies not just for hardware but also for educational material (Gurumurthy 2015), mainly because they lack in-house content development capability. First, ensuring that the beliefs of content developers do not militate against technology integration requires a more broad-based consultative process involving a range of educational actors, such as knowledgeable teachers, academics and nongovernmental agencies dealing with ICT in education. Second, support for technology integration in the form of ongoing training is necessary. Such training should ensure that, regardless of the teacher-centered or student-centered assumptions that teachers may hold, technology can be worked into their pedagogical plans. It should also help guard against the ICT intervention reinforcing traditional beliefs, for instance, the dominance attributed to ‘knowing content’ and the textbook, or provoking resistance behaviors. A third important implication is the need for governments to be aware of the requirements of a personalized learning system when considering technology integration in the future. Lee (2014) and Lee et al. (2018) discuss five features of such a system: (1) a personalized learning plan, which was missing in the SCI case; (2) competency-based rather than time-based student progress and (3) criterion-referenced rather than norm-referenced assessment, neither of which was built into SCI; (4) project- or problem-based learning, for which SCI offered no opportunity; and (5) multi-year mentoring of students. This last point is important, since current thinking, as in SCI, limits technology integration to selected grades. The SCI experience opens up the possibility of improving the design of technology-based learning interventions by incorporating a personalized learning focus.

In sum, to ensure that learning processes are not hindered, greater awareness is needed of the bi-directional relationships between technology integration and teacher beliefs, and of the processes by which pedagogical beliefs hinder technology integration or give rise to perceived belief-related barriers, as in the resistance behaviors (Tondeur et al. 2017). This attention at the design stage must be complemented by rigorous attention to long-term professional development of teachers that is situated in the context of teachers’ work with technology (Sang et al. 2012; Tondeur et al. 2016; Kopcha 2010). Ultimately, how teachers respond to externally generated content and how teacher beliefs and practices influence technology integration in the classroom will determine the extent to which the cognitive and non-cognitive outcomes expected from ICT-led initiatives are realized.

Notes

KBC refers to ‘Kaun Banega Crorepati’, an Indian television quiz show modeled on the British ‘Who Wants to Be a Millionaire’. Participants answer a series of questions, and win a cash prize if they answer all the questions correctly.

References

Anand, M. (2014). Corporal punishment in schools: Reflections from Delhi, India. Practice, 26, 225–238.

Bazeley, P. (2013). Qualitative data analysis: Practical strategies. Thousand Oaks: Sage.

Buabeng-Andoh, C. (2012). Factors influencing teachers’ adoption and integration of information and communication technology into teaching: A review of the literature. International Journal of Education and Development using Information and Communication Technology, 8(1), 136–155.

Bulman, G., & Fairlie, R. W. (2016). Technology and education: Computers, software, and the internet. In E. A. Hanushek, S. J. Machin, & L. Woessmann (Eds.), Handbook of the economics of education (Vol. 5, pp. 239–280). Amsterdam: Elsevier.

de Koster, S., Volman, M., & Kuiper, E. (2017). Concept-guided development of technology in ‘traditional’ and ‘innovative’ schools: Quantitative and qualitative differences in technology integration. Educational Technology Research and Development, 65(5), 1325–1344.

Deterding, S., Dixon, D., Khaled, R., & Nacke, L. (2011). From game design elements to gamefulness: Defining gamification. In Proceedings of the 15th International Academic MindTrek Conference: Envisioning future media environments (pp. 9–15). ACM.

Dexter, S. L., Anderson, R. E., & Ronnkvist, A. M. (2002). Quality technology support: What is it? Who has it? And what difference does it make? Journal of Educational Computing Research, 26(3), 265–285.

Dicheva, D., Dichev, C., Agre, G., & Angelova, G. (2015). Gamification in education: A systematic mapping study. Educational Technology & Society, 18(3), 1–14.

Dongre, A. A., & Tewary, V. (2015). Impact of private tutoring on learning levels: Evidence from India. Available at SSRN 2401475.

Elo, S., & Kyngäs, H. (2007). The qualitative content analysis process. Journal of Advanced Nursing, 62(1), 107–115.

Ertmer, P. A. (2005). Teacher pedagogical beliefs: The final frontier in our quest for technology integration? Educational Technology Research and Development, 53(4), 25–39.

Ertmer, P. A. (1999). Addressing first- and second-order barriers to change: Strategies for technology integration. Educational Technology Research and Development, 47(4), 47–61.

Ertmer, P. A., & Ottenbreit-Leftwich, A. T. (2010). Teacher technology change: How knowledge, confidence, beliefs, and culture intersect. Journal of Research on Technology in Education, 42, 255–284.

Ertmer, P. A., Ottenbreit-Leftwich, A., & Tondeur, J. (2015). Teacher beliefs and uses of technology to support 21st century teaching and learning. In H. R. Fives & M. Gill (Eds.), International handbook of research on teacher beliefs (pp. 403–418). New York: Routledge.

Fuchs, T., & Woessmann, L. (2004). Computers and student learning: Bivariate and multivariate evidence on the availability and use of computers at home and at school. CESifo Working Paper Series No. 1321. Munich: CESifo Group.

GCERT. (2015). Report on State Achievement Survey. Gandhinagar: Gujarat Council of Educational Research and Training.

Geldhof, G. J., Preacher, K. J., & Zyphur, M. J. (2014). Reliability estimation in a multilevel confirmatory factor analysis framework. Psychological Methods, 19(1), 72–91.

Gu, X., Zhu, Y., & Guo, X. (2013). Meeting the “Digital Natives”: Understanding the acceptance of technology in classrooms. Educational Technology & Society, 16(1), 392–402.

Gurumurthy, K. (2015). Domination and emancipation: A framework for assessing ICT and education programs. Paper presented at 6th Annual International Conference of the Comparative Education Society of India (CESI), December 14–16, Bengaluru.

Han, I., Byun, S. Y., & Shin, W. S. (2018). A comparative study of factors associated with technology-enabled learning between the United States and South Korea. Educational Technology Research and Development, 66(5), 1303–1320.

Haney, J. J., Lumpe, A. T., Czerniak, C. M., & Egan, V. (2002). From beliefs to actions: The beliefs and actions of teachers implementing change. Journal of Science Teacher Education, 13, 171–187.

Hew, K. F., & Brush, T. (2007). Integrating technology into K-12 teaching and learning: Current knowledge gaps and recommendations for future research. Educational Technology Research and Development, 55(3), 223–252.

Hosek, V. A., & Handsfield, L. J. (2019). Monological practices, authoritative discourses and the missing “C” in digital classroom communities. English Teaching: Practice & Critique. https://doi.org/10.1108/ETPC-05-2019-0067.

Hu, L., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling, 6, 1–55.

Judson, E. (2006). How teachers integrate technology and their beliefs about learning: Is there a connection? Journal of Technology and Teacher Education, 14, 581–597.

Kingdon, G. G. (2002). The gender gap in educational attainment in India: How much can be explained? Journal of Development Studies, 39(2), 25–53.

Kopcha, T. (2010). A systems-based approach to technology integration using mentoring and communities of practice. Educational Technology Research and Development, 58(2), 175–190.

Kopcha, T. J., Neumann, K. L., Ottenbreit-Leftwich, A., & Pitman, E. (2020). Process over product: The next evolution of our quest for technology integration. Educational Technology Research and Development. https://doi.org/10.1007/s11423-020-09735-y.

Kumar, K. (1993). Literacy and primary education in India. In Knowledge, culture and power: International perspectives on literacy as policy and practice (pp. 102–113). London: Routledge.

Kumar, K. (1988). Origins of India's "textbook culture". Comparative Education Review, 32(4), 452–464.

Laver, K., George, S., Ratcliffe, J., & Crotty, M. (2012). Measuring technology self-efficacy: Reliability and construct validity of a modified computer self-efficacy scale in a clinical rehabilitation setting. Disability and Rehabilitation, 34(3), 220–227.

Lee, D. (2014). How to personalize learning in K-12 schools: Five essential design features. Educational Technology, 54(2), 12–17.

Lee, D., Huh, Y., Lin, C.-Y., & Reigeluth, C. M. (2018). Technology functions for personalized learning in learner-centered schools. Educational Technology Research and Development, 66(5), 1269–1309.

Li, L. K. (2012). A study of the attitude, self-efficacy, effort and academic achievement of city U students towards research methods and statistics. Discovery–SS Student E-Journal, 1(54), 154–183.

Liou, P. Y., & Kuo, P. J. (2014). Validation of an instrument to measure students’ motivation and self-regulation towards technology learning. Research in Science & Technology Education, 32(2), 79–96.

Liu, F., Ritzhaupt, A. D., Dawson, K., & Barron, A. E. (2017). Explaining technology integration in K-12 classrooms: A multilevel path analysis model. Educational Technology Research and Development, 65, 795–813. https://doi.org/10.1007/s11423-016-9487-9.

Lowther, D. L., Inan, F. A., Strahl, J. D., & Ross, S. M. (2008). Does technology integration “work” when key barriers are removed? Educational Media International, 45(3), 195–213.

Marjoribanks, K. (1996). Family learning environments and students’ outcomes: A review. Journal of Comparative Family Studies, 27(2), 373–394.

Marwan, A., & Sweeney, T. (2019). Using activity theory to analyse contradictions in English teachers’ technology integration. The Asia-Pacific Education Researcher, 28(2), 115–125.

Matos, J., Pedro, A., & Piedade, J. (2019). Integrating digital technology in the school curriculum. International Journal of Emerging Technologies in Learning, 14(21), 4–15.

Mattila, L. (2005). National achievement results in mathematics in compulsory education in 9th grade 2004. Oppimistulosten arviointi 2/2005. Opetushallitus. Helsinki: Yliopistopaino. [In Finnish.]

Metsämuuronen, J. (2012). Challenges of the Fennema-Sherman test in the international comparisons. International Journal of Psychological Studies, 4(3), 1.

Metsämuuronen, J. (2009). Methods assisting assessment; methodological solutions for the national assessments and follow-ups in the Finnish National Board of Education. Oppimistulosten arviointi 1/2009. Opetushallitus. Helsinki: Yliopistopaino.

Miglani, N., & Burch, P. (2019). Educational technology in India: The field and teacher’s sensemaking. Contemporary Education Dialogue, 16(1), 26–53.

Mills, K., Ketelhut, D. J., & Gong, X. (2019). Change of teacher beliefs, but not practices, following integration of immersive virtual environment in the classroom. Journal of Educational Computing Research, 57(7), 1786–1811.

Miranda, H., & Russell, M. (2012). Understanding factors associated with teacher-directed student use of technology in elementary classrooms: A structural equation modeling approach. British Journal of Educational Technology, 43, 652–666.

Mueller, J., Wood, E., Willoughby, T., Ross, C., & Specht, J. (2008). Identifying discriminating variables between teachers who fully integrate computers and teachers with limited integration. Computers & Education, 51(4), 1523–1537.

Muralidharan, K., Singh, A., & Ganimian, A. J. (2019). Disrupting education? Experimental evidence on technology-aided instruction in India. American Economic Review, 109(4), 1426–1460.

Muthén, B. O. (1991). Multilevel factor analysis of class and student achievement components. Journal of Educational Measurement, 28, 338–354.

Muthén, L. K., & Muthén, B. O. (2017). Mplus user’s guide (8th ed.). Los Angeles, CA: Muthén & Muthén.

Naik, G., Chitre, C., Bhalla, M., & Rajan, J. (2016). Can technology overcome social disadvantage of school children's learning outcomes? Evidence from a large-scale experiment in India. IIM Bangalore Research Paper No. 512.

Nath, S. (2019). ICT integration in Fiji schools: A case of in-service teachers. Education and Information Technologies, 24(2), 963–972.

NCERT. (2005). National Curriculum Framework. New Delhi: National Council of Educational Research and Training. Retrieved August 10, 2018 from http://www.ncert.nic.in/rightside/links/nc_framework.html.

Negroponte, N., Bender, W., Battro, A., & Cavallo, D. (2006). One laptop per child. Keynote Address at National Educational Computing Conference, San Diego, CA.

Newhouse, C. P. (2001). Applying the concerns-based adoption model to research on computers in classrooms. Journal of Research on Computing in Education, 33(5).

Nicolaidou, M., & Philippou, G. (2003). Attitudes towards mathematics, self-efficacy and achievement in problem solving. European Research in Mathematics Education III (pp. 1–11). Pisa: University of Pisa.

O'Mahony, C. (2003). Getting the information and communications technology formula right: Access + ability = confident use. Technology, Pedagogy and Education, 12(2), 295–311.

Papert, S. (1993). The children's machine: Rethinking school in the age of the computer. New York: Basic Books.

Papert, S. (1980). Mindstorms: Children, computers, and powerful ideas. New York: Basic Books.

Pelgrum, W. J. (2001). Obstacles to the integration of ICT in education: Results from a worldwide educational assessment. Computers & Education, 37(2), 163–178.

Peña-López, I. (2015). Students, computers and learning: Making the connection. OECD Report.

Pintrich, P. R., Smith, D. A. F., Garcia, T., & McKeachie, W. J. (1991). A manual for the use of the Motivated Strategies for Learning Questionnaire (MSLQ). Ann Arbor, MI: National Center for Research to Improve Postsecondary Teaching and Learning.

Ponticell, J. A. (2003). Enhancers and inhibitors of teacher risk taking: A case study. Peabody Journal of Education, 78(3), 5–24.

Pritchett, L. (2013). The rebirth of education: Schooling ain't learning. Cambridge: CGD Books.

Raudenbush, S. W., Spybrook, J., Congdon, R., Liu, X., Martinez, A., Bloom, H., & Hill, C. (2011). Optimal design plus empirical evidence. Retrieved April 26, 2018, from https://hlmsoft.net/od/od301.zip.

Ryan, G. W., & Bernard, H. R. (2003). Techniques to identify themes. Field Methods, 15(1), 85–109.

Sang, G., Valcke, M., van Braak, J., Tondeur, J., Zhu, C., & Yu, K. (2012). Challenging science teachers’ beliefs and practices through a video-case-based intervention in China’s primary schools. Asia-Pacific Journal of Teacher Education, 40(4), 363–378.

Scherer, R., & Teo, T. (2019). Unpacking teachers’ intentions to integrate technology: A meta-analysis. Educational Research Review, 27, 90–109. https://doi.org/10.1016/j.edurev.2019.03.001.

Selwyn, N. (2011). Editorial: In praise of pessimism—The need for negativity in educational technology. British Journal of Educational Technology, 42(5), 713–718.

Spybrook, J., Bloom, H., Congdon, R., Hill, C., Martinez, A., Raudenbush, S., & To, A. (2011). Optimal design plus empirical evidence: Documentation for the “Optimal Design” software. William T. Grant Foundation. Retrieved April 26, 2018, from https://hlmsoft.net/od/od-manual-20111016-v300.pdf.

Taimalu, M., & Luik, P. (2019). The impact of beliefs and knowledge on the integration of technology among teacher educators: A path analysis. Teaching and Teacher Education, 79, 101–110.

Tiwari, A. (2018). The corporal punishment ban in schools: Teachers’ attitudes and classroom practices. Educational Studies, 45(3), 271–284.

Tiwari, A. (2015). Corporal punishment in India: Caste system context. Paper presented at AERA 2015, Chicago, IL.

Tondeur, J., Valcke, M., & Van Braak, J. (2008). A multidimensional approach to determinants of computer use in primary education: Teacher and school characteristics. Journal of Computer Assisted Learning, 24(6), 494–506.

Tondeur, J., van Braak, J., Ertmer, P. A., & Ottenbreit-Leftwich, A. T. (2017). Understanding the relationship between teachers’ pedagogical beliefs and technology use in education: A systematic review of qualitative evidence. Educational Technology Research and Development, 65, 555–575. https://doi.org/10.1007/s11423-016-9481-2.

Tondeur, J., van Braak, J., Siddiq, F., & Scherer, R. (2016). Time for a new approach to prepare future teachers for educational technology use: Its meaning and measurement. Computers & Education, 94, 134–150.

Trucano, M. (2015). Key themes in national educational technology policies [Web log post]. Retrieved May 15, 2015, from https://blogs.worldbank.org/edutech/key-themes-national-educational-technology-policies.

Trucano, M. (2012). Analyzing ICT and education policies in developing countries. Retrieved November 9, 2012, from https://blogs.worldbank.org/edutech/ict-education-policies.

Tulloch, R. (2014). Reconceptualising gamification: Play and pedagogy. Digital Culture & Education, 6(4), 317–333.

Uitto, A. (2014). Interest, attitudes and self-efficacy beliefs explaining upper-secondary school students’ orientation towards biology-related careers. International Journal of Science and Mathematics Education, 12(6), 1425–1444.

Ursavaş, Ö. F., Yalçın, Y., & Bakır, E. (2019). The effect of subjective norms on preservice and in-service teachers’ behavioural intentions to use technology: A multigroup multimodel study. British Journal of Educational Technology, 50(5), 2501–2519.

Venkatesh, V., Morris, M. G., Davis, G. B., & Davis, F. D. (2003). User acceptance of information technology: Toward a unified view. MIS Quarterly, 27, 425–478.

World Bank. (2018). World Development Report 2018: Learning to realize education’s promise. Washington, DC: World Bank.

Wozney, L., Venkatesh, V., & Abrami, P. C. (2006). Implementing computer technologies: Teachers’ perceptions and practices. Journal of Technology and Teacher Education, 14, 120–173.

Zhao, Y., Pugh, K., Sheldon, S., & Byers, J. L. (2002). Conditions for classroom technology innovations. Teachers College Record, 104(3), 482–515.

Acknowledgements

We thank Avinash Bhandari, Megha Gajjar, Lalji Nakhrani, Sanket Savaliya, Nishanshi Shukla and Niroopa Khokar for their field work assistance.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

Permission for the study was granted by the provincial government (SSA/TT/2018, dated March 22, 2018), which arranged for all school-level permissions, and communicated the purpose and procedures of the study to the heads of the selected schools, the selected teachers and administrators. The students who responded to the surveys were briefed by their teachers and the administrators and then invited to participate in the study. The participation of the schools, teachers and children in the study was optional, but no school, teacher or child refused to participate. The data used for the analysis is available at https://www.inshodh.org/sci-data-set.

Cite this article

Chand, V.S., Deshmukh, K.S. & Shukla, A. Why does technology integration fail? Teacher beliefs and content developer assumptions in an Indian initiative. Education Tech Research Dev 68, 2753–2774 (2020). https://doi.org/10.1007/s11423-020-09760-x

DOI: https://doi.org/10.1007/s11423-020-09760-x