Abstract

In a game-based learning (GBL) environment for seventh-grade students, this study investigated the effects of competition, engagement in games, and the interaction between the two on students’ in-game performance and flow experience, as well as how these in turn related to their science learning outcomes. Structural equation modeling was employed to test a hypothesized path model. The findings showed that students’ engagement in games not only predicted their in-game performance but also influenced science learning outcomes through the mediation of in-game performance. While competition alone did not have a direct effect on either in-game performance or flow experience, its relationship with in-game performance was moderated by students’ engagement in games. The study concludes with implications for future GBL interventions and studies.

Introduction

Game-based learning (GBL) has become prevalent in many subject areas. For younger generations, games are fun, enjoyable, and motivating. Several studies have claimed that GBL affords observation, challenge, situated learning, cognitive apprenticeship, and problem-solving, all of which lead to improvements in cognition, learning performance, and motivation (Connolly et al. 2012; Sánchez and Olivares 2011). In science education, gaming environments have also shown promising results in contextualizing science processes, which can be difficult to achieve in the traditional classroom. For example, Quest Atlantis (Barab et al. 2007), River City (Ketelhut et al. 2007), and Crystal Island: Outbreak (Nietfeld et al. 2014) have successfully used rich narrative settings to contextualize inquiry-based learning.

While GBL can stimulate motivation for learning, the specific elements in GBL that trigger self-initiated and optimal learning require further empirical investigation (Huizenga et al. 2009; Vandercruysse et al. 2013). Considered by some to be an indispensable game element, competition has attracted the attention of many researchers (Cagiltay et al. 2015; Williams and Clippinger 2002). Yet, owing to competition’s multifaceted nature, research to date has not produced consistent results on the role of competition in GBL.

While GBL has been broadly implemented as a context for learning, the existing literature focuses more on GBL’s impact on learning outcomes than on the processes of GBL. Players’ in-game performance, for example, can serve as a window into the gameplay process (e.g., Nietfeld et al. 2014). Empirical examinations of learners’ in-game performance may therefore inform the design of effective GBL. Motivated by this prospect, this paper first reviews and synthesizes the literature on GBL and its relevant constructs, including competition, engagement, and game flow experience. We then propose research questions that examine the relationships between these constructs and learners’ performance both in and after games. After describing the research methods, we report key findings and conclude with a discussion of implications for theory and practice.

Theoretical background

GBL for learning and motivation

In GBL, learners acquire knowledge and achieve a variety of learning objectives from playing games. A game is an organized play environment that includes elements such as rules, interaction, goals and objectives, outcomes and feedback, story, and competition (Ke 2016; Wouters et al. 2013). Due to games’ motivational appeal (Malone 1981), GBL is often believed to have the potential to facilitate both the cognitive and affective/motivational processes of learning (Ke 2016; Wouters et al. 2013).

Several meta-analyses appear to agree that GBL leads to improved learning outcomes (e.g., Sitzmann 2011; Vogel et al. 2006; Wouters et al. 2013). On the other hand, some studies on specific subject areas drew different conclusions. For example, after reviewing more than three hundred studies, Young et al. (2012) concluded that the literature lacked support for the academic value of games in math and science learning. This finding calls for more rigorous studies of the effects of GBL in science education, the area on which this study focuses. In examining the effects of GBL, most studies have focused on learning outcomes at the completion of gameplay, while learners’ in-game performance has been largely ignored (e.g., Huang 2011; Hwang et al. 2012), with only a few exceptions (Admiraal et al. 2011; Nietfeld et al. 2014). With advances in technology, in-game performance can often be tracked and analyzed to provide valuable insights into the GBL process that leads to the ultimate learning outcome (Cheng et al. 2015). Accordingly, this study incorporated into its investigation learners’ performance while playing a science-based problem-solving game.

Games may motivate learners to spend more time exploring and studying than they would otherwise (Alessi and Trollip 2000), but empirical evidence for the motivational benefits of GBL has been much less conclusive than that for its cognitive benefits. While some contend that GBL facilitates motivation across different learners and learning situations (e.g., Ke 2009; Papastergiou 2009; Tüzün et al. 2009; Vogel et al. 2006), others did not find strong evidence. For example, a recent meta-analysis by Wouters et al. (2013) concluded that GBL was effective for learning, but not for motivation.

A few authors’ discussions offer some insight into why GBL does not always lead to high levels of motivation. Chen and Law (2016) reasoned that the effects of GBL might vary with the nature of learning activities: when the activities are not engaging or are too difficult, learners may lose the motivation to continue. This discussion points to an important construct that gauges learners’ motivation in gameplay: flow (Csikszentmihalyi 1990), a state in which an individual feels intrinsically motivated to do something. Analyzing GBL’s lack of motivational benefits from another angle, Wouters et al. (2013) suggested that GBL differs from leisure game playing: while leisure game players can choose when and what games to play, students in GBL often do not have a similar sense of control, which may lead to a lack of motivation. Considering these realistic constraints of GBL, we believe that indicators of learners’ engagement in gameplay may provide an additional lens that complements motivational constructs such as flow. Together, flow and engagement indicators may provide a more comprehensive view of the motivational aspect of GBL.

Echoing other researchers (Vandercruysse et al. 2013), we argue that a fruitful direction for GBL research is to investigate the mechanisms through which GBL has its impact by teasing out the effects of specific game elements instead of treating GBL as a homogeneous whole. In Ke’s (2009) qualitative meta-analysis, most of the reviewed studies examined GBL as a package; only a few investigated the effects of individual GBL elements or features. Competition, for instance, is an element often introduced in games to engage learners, but it is unclear how the presence of competition affects GBL processes and outcomes (e.g., Admiraal et al. 2011; Vandercruysse et al. 2013). Therefore, one purpose of the current study was to examine the impact of competition as an element of GBL.

The remainder of this section provides a detailed review of the constructs examined in this study: Competition as a GBL element, learners’ engagement as a productive lens to examine GBL, and flow as a motivational gauge in GBL.

Competition in game-based learning (GBL)

Although not all theorists agree that competition is a defining characteristic of GBL (Wouters et al. 2013), it is frequently integrated into GBL (e.g., Cagiltay et al. 2015). From the lens of social interdependence theory, competition has historically been viewed as a negative force in learning environments (Johnson and Johnson 1989). Recently, however, social interdependence theory has been expanded to explore how competition can play a constructive role in learning (Johnson and Johnson 2009). Specifically, Johnson and Johnson (2009) suggested that clear, specific, and fair rules and procedures could help make competition more constructive. Since rules and procedures are inherent in games, competition in the context of GBL can potentially benefit learning.

Competition in GBL can take many forms. Learners can compete with themselves, the game system, other players, other teams, or a combination of these (Alessi and Trollip 2000; Fisher 1976; Yu 2003). Because of the social reward or punishment associated with winning or losing (Foo et al. 2017), competition can make GBL activities more game-like (e.g., Ke and Grabowski 2007). With competition, learners are likely to be motivated and to demonstrate attention, effort, and excitement (Cheng et al. 2009; Malone and Lepper 1987). Empirical research, however, has yielded mixed findings regarding the effects of competition in GBL. For example, in Cagiltay et al.’s (2015) study, competition in the form of seeing other players’ scores enhanced students’ learning and motivation in GBL. Similarly, Admiraal et al. (2011) found that the extent to which student teams engaged in competing with other teams positively influenced learning outcomes. On the other hand, other studies reported that competition had no effect on learning or motivation, whether it took the form of seeing other players’ scores (e.g., ter Vrugte et al. 2015) or competing against a virtual player (e.g., Vandercruysse et al. 2013). Some researchers argue that competition can cause learners to focus more on winning than on the educational content of GBL, thus weakening their intrinsic motivation to learn (ter Vrugte et al. 2015; Van Eck and Dempsey 2002). As a result, competition may negatively impact students’ self-efficacy beliefs, motivation, and performance (Bandura and Locke 2003).

Several factors might explain the conflicting findings regarding the effects of competition. First, as described earlier, competition takes various forms. Although few studies to date have examined the role of competition in the GBL context, those that did implemented various forms of competition. For example, in some studies, competition took the form of seeing fellow players’ scores (e.g., Cagiltay et al. 2015; ter Vrugte et al. 2015); in other studies, students competed with a virtual player in the gaming system (e.g., Vandercruysse et al. 2013; Van Eck and Dempsey 2002); in still others, players competed in teams to beat and take over other teams (e.g., Admiraal et al. 2011). These various forms of competition were likely to trigger different cognitive processes and gaming behaviors, which could affect learners’ motivation and learning differently. Indeed, in explaining why their study found a positive effect of competition whereas Vandercruysse et al. (2013) did not find any effect, Cagiltay et al. (2015) attributed the discrepancy to the different forms of competition in the two studies (competing with fellow players vs. a virtual player). To clarify the impact of competition, the current study focused on one type of competition similar to that implemented in Cagiltay et al. (2015), in which students competed individually with each other by comparing their own game performance with that of other players.

Even the same form of competition may not have a universal effect, because competition may interact with other factors to influence performance and motivation in GBL. For example, Van Eck and Dempsey (2002) found that the effects of competition in GBL varied as a function of students’ access to contextual help within games. Given these potential interactions, it is helpful to examine competition through additional lenses. In this study, we chose learners’ engagement in gameplay as an additional lens to examine competition in GBL. The next section reviews engagement in GBL and discusses its potential interaction with competition.

Engagement in GBL and potential interaction with competition

Engagement in learning has received increasing attention in the past two decades (Fredricks et al. 2016). In their seminal work, Fredricks et al. (2004) distinguished three dimensions of engagement: behavioral, cognitive, and emotional engagement. Behavioral engagement is related to learner behaviors such as participation, attention, effort, and persistence; cognitive engagement is shown in levels of investment or self-regulation in learning; emotional engagement has to do with negative or positive reactions associated with learning. Enhanced engagement has a positive impact on learning (e.g., Corno and Mandinach 1983; Huizenga et al. 2009; Miller et al. 1996). The same positive impact on learning has also been found in the context of GBL (e.g., Eseryel et al. 2014).

While engagement is an important factor in successful GBL, its measurement has relied mainly on self-report psychometric questionnaires (e.g., Hamari et al. 2016). For example, Fredricks et al. (2005) developed a widely used scale to measure behavioral, cognitive, and emotional engagement in schools. Other researchers have measured student engagement through Keller’s (1993) motivation lens, which consists of four dimensions: attention, relevance, confidence, and satisfaction (Lee and Spector 2012). However, self-report engagement instruments have inherent issues, such as the time lag between recall and the actual events (e.g., Greene 2015). Other measurements of engagement in GBL include observational protocols (e.g., Annetta et al. 2009; Huizenga et al. 2009) and behavioral data (e.g., Eseryel et al. 2014). We argue that behavioral data can provide an objective lens to examine engagement in GBL. In this study, we used students’ number of attempts at playing a game as a proxy for engagement. A high number of attempts suggests a willingness to try and a high level of engagement. When students make repeated attempts, that effort may be connected to their flow experience, in-game performance, and learning outcomes. It is worth noting that the number of attempts in gameplay represents a facet of behavioral engagement, which is different from cognitive engagement. While cognitive engagement may show in students’ use of strategies in attempting a game, behavioral engagement focuses on effort. Although repeated attempts may not be strategic, they show engagement in the form of investing effort and not giving up easily. This conceptualization is in line with recent research on student engagement in math and science, where behavioral engagement was measured with items such as “I put effort into learning” or “If I don’t understand, I give up right away” (Fredricks et al. 2016; Wang et al. 2016).

Various features have been applied to increase engagement in GBL, such as feedback, challenges, and interactions (Gee 2005; Rieber 1996). Competition is also frequently used to promote learners’ engagement in GBL (e.g., Malone and Lepper 1987). Plausibly, given its goal-oriented nature toward winning (Hong et al. 2009), competition is likely to increase engagement. However, this is not always the case. For example, learners were found to disengage or stop putting in effort when they saw their competitors winning consistently (Cheng et al. 2009). One may therefore ask whether the presence of competition leads to engagement behaviors that differ from those without competition. Research provides some support for this possibility. Competition has been found to induce performance goals (Ames and Ames 1984; Lam et al. 2004). In the GBL context, Chen et al. (2018) found that students in non-competition groups tended to adopt learning goals, whereas those in competition groups were likely to adopt performance goals. The literature on goal theory has clearly established that performance-goal-oriented and learning-goal-oriented learners demonstrate different learning strategies, cognitive processing patterns, and persistence levels (e.g., Ames and Archer 1988; Greene and Miller 1996; Vansteenkiste et al. 2004). As such, it is worthwhile to examine whether there is a relationship between competition and students’ engagement in GBL settings.

While attempting to examine the effects of competition and engagement on learners’ performance, we also considered how the two constructs impact motivation, which was operationalized in this study as learners’ flow experience in the process of playing a game. The next section provides a review of flow.

Flow experience in GBL and its relationship with competition and engagement

GBL has been considered an approach to encouraging positive affect, engagement, and motivation (e.g., Ke 2009; Sitzmann 2011; Vogel et al. 2006). Various empirical studies have suggested that games can lead to flow experience (e.g., Jayakanthan 2002; Kiili 2006; Sweetser and Wyeth 2005). Flow can be regarded as the culmination of deep engagement in an activity. In the current study, students’ flow experience was treated as a process variable that played a motivational role in the learning process (Seligman and Csikszentmihalyi 2000).

Gameplay allows the player to experience a feeling of pleasure and satisfaction (Csikszentmihalyi 1975). Many researchers have examined the factors that influence flow experience, such as motivation (Wan and Chiou 2006) and self-regulation (Chen and Sun 2016). While some of the existing literature argues that games increase flow experience in the learning process (Sitzmann 2011; Tennyson and Jorczak 2008), others have suggested additional lenses for examining the impact of games on flow experience (Wouters et al. 2013). Engagement is considered one of those lenses (e.g., Chen and Law 2016). In addition to engagement, competition is another factor that may trigger flow experience in GBL (Jayakanthan 2002). Admiraal et al. (2011) found that when students were distracted from a game, they were less likely to engage in group competition. In the current study, we hypothesized that competition and engagement in games would enhance students’ flow experience.

Meanwhile, much research has explored the impact of flow experience on learning outcomes in GBL (e.g., Inal and Cagiltay 2007; Sun et al. 2017). Pearce et al. (2005) found that flow experience enabled learners to achieve higher levels of performance. Flow was also found to significantly predict perceived learning and enjoyment (Barzilai and Blau 2014). On the other hand, Admiraal et al. (2011) found that flow had no effect on learning outcomes. In this study, we hypothesized that learners who have achieved a flow state in GBL would focus on game activities and proactively face challenges, leading to better learning outcomes.

Purpose of the study and research questions

The purpose of the study was to explore the roles of engagement and competition in students’ in-game performance and flow experience in GBL, which in turn would influence learning outcomes. Figure 1 depicts the proposed theoretical model of the study.

Specifically, we asked the following research questions:

1. Do competition and learners’ engagement affect their in-game performance?
2. Do competition and learners’ engagement affect their flow experience?
3. Does learners’ engagement moderate the relationship between competition and learners’ in-game performance?
4. Does learners’ engagement moderate the relationship between competition and learners’ flow experience?
5. Does learners’ engagement influence science learning outcomes via the mediation of in-game performance and flow experience?
6. Does competition influence science learning outcomes via the mediation of in-game performance and flow experience?

Methodology

Participants

A total of 114 seventh-grade students (55 females and 59 males) in four intact classes at a middle school in central Taiwan participated in this study. The intact classes were randomly assigned to either a competition or a non-competition group. Prior to the study, participants were informed that their participation was voluntary, that they were free to stop participating at any time, and that participation or non-participation would not affect their grades. Both groups showed equivalent prior knowledge of the target science content in this study.

Features of SumMagic

A central component of this study was a problem-solving game named SumMagic, a science learning game grounded in the constructivist learning approach. The science concepts covered in the game included time, distance, position, and velocity. The specific learning objectives were for students to (1) observe the timeline of an object’s movement, (2) identify an object’s moving distance, (3) define speed as a scalar quantity that has magnitude only, and (4) calculate an object’s speed from its moving distance and time data. The game scenario was set in a village under attack by a hoarding dragon. Players were charged with ensuring the village’s food supply, and in the process they were presented with various challenges or problems. The problems had two levels: players had to solve basic-level problems to unlock advanced-level problems. An example basic-level problem required players to find the speed at which relief food must travel to arrive in 20 minutes at a destination 500 meters away. An example advanced problem asked students to determine the time needed for a particular type of food to appear in a sequence. Players had a variety of tools to facilitate problem solving, including a timer, a ruler, a notebook, and a calculator. In addition, players could request procedural guidance if they were unsure of what to do. As seen in Fig. 2, players could select the non-player character (NPC; on the left) to access the procedural guidance, which served to scaffold players’ problem solving and direct their efforts toward completing a specific cognitive task. After reading the guidance, players could enter their answers by clicking on the NPC (on the right).
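The example basic-level problem is a direct application of learning objective (4). A worked solution is shown below; the conversion to meters per second is added only for illustration, since the text does not state the answer format the game expected.

```latex
v = \frac{d}{t} = \frac{500\ \text{m}}{20\ \text{min}} = 25\ \text{m/min} = \frac{500\ \text{m}}{1200\ \text{s}} \approx 0.42\ \text{m/s}
```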

As students worked on solving problems, they could collect stars, which earned them points. As shown in Fig. 3, when they successfully solved a basic problem, they received one star. If they then solved an advanced problem, they received a second star. They could earn a third star if they solved both the basic and advanced problems with no more than one failed attempt. Upon completion of the game, a final scoreboard appeared. The only difference between the competition and the non-competition groups was in this final scoreboard: while the non-competition group saw only their own final scores (Fig. 3), the competition group could additionally see the top five peer players in final score and completion time, along with their own rankings for comparison (Fig. 4).
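The star rule can be written as a small decision function. This is a minimal sketch under stated assumptions: the function `stars_earned` and its arguments are hypothetical (they are not part of SumMagic), and we assume that "failed attempts" counts failures across the basic and advanced problems together.

```python
def stars_earned(solved_basic: bool, solved_advanced: bool, failed_attempts: int) -> int:
    """Return 0-3 stars for one basic/advanced problem pair (hypothetical helper).

    Encodes the rule described in the text:
    - solving the basic problem earns the first star;
    - solving the advanced problem (unlocked by the basic one) earns the second;
    - solving both with at most one failed attempt earns the third.
    Assumption: failed_attempts counts failures on both problems combined.
    """
    if not solved_basic:
        return 0  # advanced problems stay locked until the basic problem is solved
    stars = 1
    if solved_advanced:
        stars += 1
        if failed_attempts <= 1:
            stars += 1
    return stars


print(stars_earned(True, True, 1))  # 3
print(stars_earned(True, True, 2))  # 2
```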

Operationalization and measurement of the variables in the study

Engagement

In this study, we used students’ number of attempts at playing the game as a proxy for their engagement. Specifically, the number of attempts made by each student throughout the game was tracked and recorded. Higher numbers of attempts were taken to indicate higher levels of engagement.
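If gameplay events were logged per student, the engagement proxy could be computed with a simple count. The log format below (tuples of a student ID and an event name such as `"attempt"`) is a hypothetical stand-in; the text does not describe how SumMagic stores its records. Note that students with no logged attempts would not appear in the result and would need to be added with a count of 0.

```python
from collections import Counter


def count_attempts(log):
    """Map each student_id to the number of "attempt" events in the log.

    Hypothetical log format: an iterable of (student_id, event_name) tuples.
    Events other than "attempt" (e.g., timer clicks) are ignored.
    """
    return dict(Counter(student for student, event in log if event == "attempt"))


log = [("s01", "attempt"), ("s02", "attempt"), ("s01", "timer_click"), ("s01", "attempt")]
print(count_attempts(log))  # {'s01': 2, 's02': 1}
```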

Competition

Competition was operationalized through the experimental design. The non-competition group, in which students saw only their own performance on the scoreboard, was coded as 0 for data analysis. The competition group, in which students saw both their own scores and the rankings of top players, was coded as 1. According to Kline (2011), it is appropriate to model dichotomous variables as predictors in path analysis. Thus, competition, a dichotomous variable in this study, was incorporated as a predictor in the proposed path model.

Engagement × Competition interaction

Research questions 3 and 4 sought to find out whether engagement moderated the relationship between competition and learning (in-game performance and flow). To address the questions, we followed the suggested practice of multiplying the independent variable and the moderator to create an interaction term in the proposed model (Cohen et al. 2003; Gefen et al. 2011; Kline 2011). As suggested by Kline (2011), we first centered the competition variable by subtracting the mean value from it, and then multiplied the centered competition with the engagement variable to create the interaction term.
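The centering-and-product procedure can be sketched as follows, using made-up data for four students. As described in this paragraph, the 0/1 competition code is centered by subtracting its mean and then multiplied by engagement; the function name `interaction_term` is hypothetical.

```python
from statistics import fmean


def interaction_term(competition, engagement):
    """Center the 0/1 competition codes, then multiply them by engagement."""
    mean_c = fmean(competition)                  # proportion of students in the competition group
    centered = [c - mean_c for c in competition]
    return [c * e for c, e in zip(centered, engagement)]


# Hypothetical data: 0 = non-competition, 1 = competition; engagement = number of attempts
competition = [0, 0, 1, 1]
engagement = [3, 5, 4, 6]
print(interaction_term(competition, engagement))  # [-1.5, -2.5, 2.0, 3.0]
```

Centering a dummy code this way makes the engagement coefficient describe the effect at the sample-average group mix rather than in the non-competition group alone.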

In-game performance

In-game performance was calculated based on the number of stars students collected and the number of times they used the timer during gameplay. As described earlier, when students solved a basic problem, they obtained the first star; when they solved an advanced problem, they obtained the second star; and if they solved both the basic and the advanced problems with at most one failed attempt, they earned the third star. One, two, or three stars earned students 200, 300, or 500 points, respectively. In addition to the points earned by collecting stars, in-game performance included up to 50 bonus points, calculated as 50 − (2 × number of timer clicks). For example, a student who used the timer 16 times received 50 − 32 = 18 bonus points. If students played multiple times, the game recorded their highest score.
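A sketch of the scoring rule, assuming each play yields a single star count (0 to 3) and a single timer-click count. Two details are our assumptions rather than stated rules: a play with zero stars earns 0 star points, and the bonus is floored at 0 for more than 25 timer clicks. The names `STAR_POINTS`, `play_score`, and `in_game_performance` are hypothetical.

```python
# Star points as stated in the text; the 0-star entry is an assumption.
STAR_POINTS = {0: 0, 1: 200, 2: 300, 3: 500}


def play_score(stars: int, timer_clicks: int) -> int:
    """Score for one play: star points plus a timer bonus of 50 - 2 * clicks.

    Assumption: the bonus is floored at 0, because the text only says it is
    "up to 50" points and does not cover more than 25 timer clicks.
    """
    bonus = max(0, 50 - 2 * timer_clicks)
    return STAR_POINTS[stars] + bonus


def in_game_performance(plays):
    """Highest score across plays, given (stars, timer_clicks) pairs."""
    return max(play_score(s, t) for s, t in plays)


print(play_score(3, 16))                       # 518 (500 star points + 18 bonus)
print(in_game_performance([(2, 5), (3, 16)]))  # 518
```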

Flow experience

The flow experience measure in the current study was adopted from Kiili (2006). The instrument measures four key flow dimensions: concentration, loss of self-consciousness, transformation of time, and autotelic experience (Csikszentmihalyi 1990). A Chinese version of Kiili’s instrument was previously used by Hou and Li (2014), who found acceptable reliability for the items. The instrument contains 12 five-point Likert-type items. Example items include “My attention was focused entirely on playing the game” and “I really enjoyed the playing experience.” The reliability of the instrument in the current study was .94.
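The section reports a reliability of .94 without naming the coefficient. Cronbach’s alpha is the coefficient most commonly reported for Likert-type scales, so the sketch below assumes it; the function name and the rows-as-respondents data layout are our own choices, and the example data are made up.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for item scores laid out as rows = respondents, columns = items.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    Requires at least two items and two respondents.
    """
    k = len(responses[0])
    items = list(zip(*responses))                      # transpose: one tuple per item
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical responses from three students on two five-point items
print(round(cronbach_alpha([[1, 2], [2, 1], [3, 3]]), 3))  # 0.667
```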

Learning outcomes

Learning outcomes were measured with a six-item content knowledge test that included both conceptual and problem-solving questions. Students earned one point for each correctly answered question, for a maximum of six points. Examples of both types of questions are provided in Appendix A. To ensure alignment between the test and the target content of this study, the test items were based on a middle school science textbook used in Taiwan and were developed and validated by two experienced science teachers (with 5–10 years of teaching experience) and the instructional designers of the study. The test was previously used to measure learning outcomes in Chen et al. (2018).

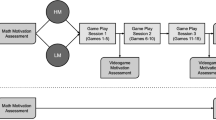

Study procedure

At the beginning of the study, all participants took a 15-min pretest on their content knowledge. Following the pretest, students were assigned to either the competition or the non-competition group based on their intact classes. In both groups, students were first given a 5-min introduction to the study. The students then played the game over a period of 2 weeks, for 40 min per week. During each 40-min session, students were required to work only on the game and could not use other programs on their computers. Both groups played in the same game environment. The only difference was that the competition group was informed that they were in the competition group and that they would be able to see top players’ performance on the scoreboard alongside their own. In contrast, students in the non-competition group played the game seeing only their own scores. A week after the experiment, the students took the post-test on their content knowledge and completed the flow experience questionnaire. Figure 5 shows the procedure of the experiment.

Results

Descriptive statistics and correlation analysis

Table 1 presents the descriptive statistics and correlations of the variables. Significant correlations were found between engagement and competition, and between in-game performance and both engagement and competition. Flow experience was significantly correlated with engagement and in-game performance. Learning outcomes had significant correlations with engagement, in-game performance, and flow experience. Table 2 presents the means and standard deviations of engagement, flow, in-game performance, and learning outcomes for the two groups (competition vs. non-competition).

Structural equation modeling

Structural equation modeling was employed to test the proposed theoretical model. As previously described, an interaction term was created to model the interaction effect between engagement and competition. Before conducting the analysis, we centered all the independent variables. Then, the univariate normality of the variables was checked. The highest skewness was 2.22 and the highest kurtosis was 4.78, both below the thresholds for normality violation (Kline 2011). In addition to univariate normality, we tested multivariate assumptions concerning outliers and multicollinearity by calculating Cook’s distance and variance inflation factors (VIFs). Cook’s distances were < 1 and VIFs were < 10 for all independent variables (Tabachnick and Fidell 2007); thus, the multivariate assumptions of the model were satisfied. The fit indices of the hypothesized model (χ²/df = .960; CFI = 1.00; GFI = .989; NFI = .985; RMSEA = .000) suggested that the data fit the model well (Hu and Bentler 1999). Figure 6 depicts the final model with significant path coefficients.

Effects of engagement and competition on in-game performance and flow

The results showed that engagement had a significant positive effect on in-game performance (β = 10.721; t = 3.293; p < .01). However, engagement did not have a significant effect on flow (t = .172; p > .05). No significant effect of competition was found on either in-game performance (t = 1.680; p > .05) or flow (t = .716; p > .05). In other words, neither the presence of competition nor engagement in the game had a significant impact on students’ flow experience.

Interaction effects of engagement and competition

The results suggested that engagement moderated the relationship between competition and in-game performance (β = −13.237; t = −2.001; p < .05). To interpret an interaction effect visually, Cohen et al. (2003) suggested plotting regression lines at different levels of the moderator variable. Following this suggestion, we plotted two regression lines that depict the effects of engagement at two levels (low and high). As Fig. 7 shows, the effect of engagement on performance was relatively weak in the competition group compared with the same effect in the non-competition group. In other words, engagement’s effect on performance was more pronounced in the non-competition group. This finding suggests that highly engaged students in the non-competition group tended to perform significantly better than those who were less engaged.
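Because competition was centered before forming the product term, the slope of engagement within each group follows directly from the model coefficients. The sketch below assumes a linear model of the form performance = b0 + b1·E + b2·Cc + b3·(Cc·E), where Cc is the centered competition code; the coefficients in the usage lines are placeholders, not the study’s estimates, and the helper name is hypothetical.

```python
def simple_slope_of_engagement(b1, b3, group, competition_mean):
    """Slope of engagement on performance within one group.

    Assumes performance = b0 + b1*E + b2*Cc + b3*(Cc*E), with Cc = C - mean(C),
    so the engagement slope for group code C (0 or 1) is b1 + b3 * (C - mean(C)).
    """
    return b1 + b3 * (group - competition_mean)


# Placeholder coefficients (not the study's estimates), equal group sizes assumed
b1, b3, c_mean = 10.0, -8.0, 0.5
print(simple_slope_of_engagement(b1, b3, 0, c_mean))  # 14.0 (non-competition)
print(simple_slope_of_engagement(b1, b3, 1, c_mean))  # 6.0 (competition)
```

A negative b3, as in this illustration, yields a flatter engagement slope in the competition group, which is the pattern the two plotted regression lines are meant to show.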

Mediation effects of in-game performance

As shown in Fig. 6, the results indicated that the relationship between engagement and learning outcomes was mediated by in-game performance (p < .05) but not by flow (p > .05).
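The text does not state how the indirect effect was tested. One classic approach is the product-of-coefficients estimate a × b with a Sobel z-test, sketched below; bootstrapped confidence intervals are now often preferred because the sampling distribution of a × b is rarely normal. The path values in the usage line are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def sobel_test(a, se_a, b, se_b):
    """Indirect effect a*b with a Sobel z-test (one common mediation test).

    a: path from predictor (e.g., engagement) to mediator (e.g., in-game performance)
    b: path from mediator to outcome (e.g., learning outcomes), controlling for the predictor
    Returns (indirect_effect, z, two_sided_p).
    """
    ab = a * b
    se_ab = sqrt(b ** 2 * se_a ** 2 + a ** 2 * se_b ** 2)
    z = ab / se_ab
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return ab, z, p


print(sobel_test(3.0, 1.0, 4.0, 1.0))  # indirect effect 12.0, z = 2.4, p ≈ 0.016
```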

Discussion

Mayer (2011) called for more empirical research on GBL that explores how specific game features affect learning and motivation. In response to the call, the current study focused on examining competition as a game feature. By comparing a competition group with a non-competition group, and by incorporating players’ engagement as an additional lens, we examined the performance and motivation across the two groups in a game-based science learning environment. In addition to learning outcomes after gameplay, the current study also examined students’ in-game performance in the hope of gaining more insights into the constructs under investigation.

Although the effects of competition have long been studied in educational research (e.g., Ames and Ames 1984; Johnson and Johnson 1989, 2009; Lam et al. 2004), this study sought to examine competition in the context of GBL. The results showed that competition did not have a significant main effect on either students’ in-game performance or their flow experience. While few studies have examined the impact of competition on in-game performance, this finding aligns with Cagiltay et al. (2015), who compared in-game performance between a competition group and a non-competition group and found no difference. Our finding suggests that competition alone was not sufficient to make a difference in students’ motivation and performance in playing the game. Students in the competition group might have focused more on winning and been distracted by changes in their game scores (ter Vrugte et al. 2015; Van Eck and Dempsey 2002). Comparatively, those in the non-competition group were more likely to play without such distractions and to focus more on the game context. As such, competition might have prevented students from focusing on the science content of the game and from understanding and internalizing the embedded concepts. Therefore, competition that emphasizes winning and losing may turn a flow activity into a distraction (Cheng et al. 2009; ter Vrugte et al. 2015).

On the other hand, students’ engagement, measured by their number of gameplay attempts, was found to affect their in-game performance. Moreover, engagement was linked to students’ science learning outcomes through the mediation of in-game performance. While these findings align with previous studies that identified positive impacts of GBL on learning outcomes (e.g., Clark et al. 2011; Erhel and Jamet 2013), the current study was able to pinpoint the specific role of engagement in producing positive outcomes, both in the game and after its completion. Compared with competition, learners’ engagement in gameplay appeared to be a more useful construct and a more informative indicator in GBL. The more attempts students made at playing the game, the more likely they were to achieve better game performance, which would subsequently contribute to improved conceptual understanding and problem-solving abilities in the science content area.

Contrary to our expectations, engagement did not affect students’ flow experience despite its impact on performance. In other words, repeated attempts did not guarantee a better flow experience. The lack of effects on flow experience leads us to reflect on the difference between leisure games and GBL (Wouters et al. 2013). While GBL intends to immerse learners in gameplay so that they acquire the embedded knowledge and skills, this goal is not always achievable. Given the significant effects of engagement in the current study, we suggest that a productive approach in GBL research is to examine learners’ motivation in conjunction with their engagement indicators.

More importantly, the findings suggest that engagement did not always improve students’ performance once competition is considered in the model. The significant interaction between engagement and competition indicates that engagement had an effect only when there was no competition in the game. Specifically, in the non-competition group, highly engaged students tended to perform significantly better than those who were less engaged. Within the competition group, however, students’ engagement levels did not make a significant difference in their performance. As illustrated in Fig. 7, the lowest level of performance was shown by students in the non-competition group who demonstrated low engagement. Comparatively, students in the competition group had a better overall performance, although their engagement levels did not significantly influence it. The highest performers were students in the non-competition group who showed a high level of engagement. We attribute these results to the different goals students may have adopted in the two groups, which might have led to different engagement patterns. As found in previous research, competition is likely to induce performance goals among learners (Ames and Ames 1984; Lam et al. 2004). For students in the competition group who showed a performance-goal orientation, their actions, such as high or low engagement, were likely driven by a need to show competence or to avoid showing incompetence. For example, when these students saw themselves ranked high in the game, they might have lost the momentum to keep trying. In the non-competition group, by contrast, students were more likely to focus on the game itself and adopt a mastery-oriented goal. Their high engagement might have been driven by a different motive: to establish genuine mastery and understanding of the game rather than to showcase their competence. Such an effort might have led them to achieve the highest performance level. The findings suggest that although competition has some benefits, it also has limitations: competition may have a negative effect on students who might otherwise have been driven to even better performance.

Conclusions and implications

This study contributes to GBL research in two unique ways. First, it introduced learners’ engagement as a lens and used objective behavioral engagement data to examine motivation and learning in GBL. Second, it examined learners’ in-game performance, an integral but often overlooked part of learning in GBL. The significant effects of engagement found in this study point to the importance of introducing more objective engagement data into GBL research. While this study used learners’ number of attempts as a proxy for behavioral engagement, future research should both explore more indicators of behavioral engagement (e.g., time factors; Eseryel et al. 2014) and go beyond behavioral engagement to examine cognitive and emotional engagement in GBL (Fredricks et al. 2004). Moreover, with advanced tracking and analysis techniques, future studies can examine the quality of learners’ engagement in addition to its quantity (Greene 2015). For example, random trial and error may be distinguished from strategic attempts. With a more comprehensive capture of learners’ engagement in the GBL process, we may establish a better understanding of its impact on in-game performance and learning outcomes.
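Both the attempt count used in this study and the time-based indicators suggested for future work can be derived from gameplay logs. The sketch below assumes a hypothetical log format with one record per attempt, containing a student ID plus start and end timestamps in seconds. Neither the record type nor the function reflects the study’s actual logging system.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Attempt:
    """Hypothetical log record: one row per gameplay attempt."""
    student_id: str
    start_s: float  # attempt start, seconds since session start
    end_s: float    # attempt end, seconds since session start


def engagement_indicators(log):
    """Per-student attempt count (the proxy used in this study) and total
    time on task (one possible time-based indicator)."""
    attempts = defaultdict(int)
    seconds = defaultdict(float)
    for rec in log:
        attempts[rec.student_id] += 1
        # Clamp at zero so a malformed record cannot subtract time
        seconds[rec.student_id] += max(0.0, rec.end_s - rec.start_s)
    return {sid: {"attempts": attempts[sid], "time_on_task_s": seconds[sid]}
            for sid in attempts}


if __name__ == "__main__":
    log = [Attempt("s1", 0, 60), Attempt("s1", 100, 130), Attempt("s2", 0, 45)]
    print(engagement_indicators(log))
```

Keeping one record per attempt lets both indicators be computed in a single pass over the log. Telling strategic attempts apart from random trial and error would require richer per-action data than this record holds.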

In light of the current study, the role of competition in GBL should be carefully examined in future research. Does the presence of competition necessarily promote learning and flow in GBL? Could the effect of competition manifest in other constructs that more faithfully capture the GBL process? These questions need to be answered before competition can be established as a way to gamify learning activities and help meet learning objectives.

The study indicated that, compared with learning performance, motivation (i.e., flow) was harder to improve in GBL, even among students with a high level of engagement. To promote learners’ motivation, game design for GBL should consider providing learners with more control and autonomy.

This study offers several implications for educators who plan to use GBL in science education. For games that do not incorporate competition, teachers should monitor learners’ engagement in gameplay as an important indicator of learning. For games that do provide an opportunity to compete, teachers should keep in mind that competition has its limitations and that high levels of engagement do not necessarily mean good performance. As learners make progress in the GBL process, teachers should pay special attention to helping learners establish learning goals that go beyond merely winning a game.

Finally, it should be noted that the findings of this study are likely to be applicable only to games with similar parameters. As previously discussed, different forms of competition (e.g., competing with peers or with virtual players) can lead to different engagement patterns and learning outcomes, which may confound GBL research. Future research should pay close attention to different game parameters and avoid making generalized claims about GBL.

References

Admiraal, W., Huizenga, J., Akkerman, S., & Dam, G. T. (2011). The concept of flow in collaborative game-based learning. Computers in Human Behavior, 27(3), 1185–1194. https://doi.org/10.1016/j.chb.2010.12.013.

Alessi, S. M., & Trollip, S. R. (2000). Multimedia for learning: Methods and development. Needham Heights: Allyn & Bacon Inc.

Alexander, P. A., Murphy, P. K., Woods, B. S., Duhon, K. E., & Parker, D. (1997). College instruction and concomitant changes in students’ knowledge, interest, and strategy use: A study of domain learning. Contemporary Educational Psychology, 22, 125–146.

Ames, C., & Ames, R. (1984). Goal structures and motivation. Elementary School Journal, 85, 39–50.

Ames, C., & Archer, J. (1988). Achievement goals in the classroom: Students’ learning strategies and motivation processes. Journal of Educational Psychology, 80, 260–267.

Annetta, L., Minogue, J., Holmes, S. Y., & Cheng, M. (2009). Investigating the impact of video games on high school students’ engagement and learning about genetics. Computers & Education, 53(1), 74–85.

Bandura, A. (2000). Exercise of human agency through collective efficacy. Current Directions in Psychological Science, 9(3), 75–78. https://doi.org/10.1111/1467-8721.00064.

Bandura, A., & Locke, E. A. (2003). Negative self-efficacy and goals effects revisited. Journal of Applied Psychology, 88, 87–99.

Barab, S. A., Dodge, T., Thomas, M. K., Jackson, C., & Tuzun, H. (2007). Our designs and the social agendas they carry. The Journal of the Learning Sciences, 16(2), 263–305. https://doi.org/10.1080/10508400701193713.

Barzilai, S., & Blau, I. (2014). Scaffolding game-based learning: Impact on learning achievements, perceived learning, and game experiences. Computers & Education, 70, 65–79. https://doi.org/10.1016/j.compedu.2013.08.003.

Cagiltay, N. E., Ozcelik, E., & Ozcelik, N. S. (2015). The effect of competition on learning in games. Computers & Education, 87, 35–41.

Chen, C.-H., & Law, V. (2016). Scaffolding individual and collaborative game-based learning in learning performance and intrinsic motivation. Computers in Human Behavior, 55, 1201–1212. https://doi.org/10.1016/j.chb.2015.03.010.

Chen, C.-H., Law, V., & Chen, W. Y. (2018a). The effects of peer competition-based science learning game on secondary students’ performance, achievement goals, and perceived ability. Interactive Learning Environments, 26(2), 235–244.

Chen, C.-H., Liu, J.-H., & Shou, W.-C. (2018b). How competition in a game-based science learning environment influences students’ learning achievement, flow experience and learning behavioral patterns. Educational Technology & Society, 21(2), 164–176.

Chen, L.-X., & Sun, C.-T. (2016). Self-regulation influence on game play flow state. Computers in Human Behavior, 54, 341–350.

Cheng, M.-T., Lin, Y.-W., & She, H. C. (2015). Learning through playing Virtual Age: Exploring the interactions among student concept learning, gaming performance, in-game behaviors, and the use of in-game characters. Computers & Education, 86, 18–29.

Cheng, H. N. H., Wu, W. M. C., Liao, C. C. Y., & Chan, T. W. (2009). Equal opportunity tactic: Redesigning and applying competition games in classrooms. Computers & Education, 53(3), 866–876. https://doi.org/10.1016/j.compedu.2009.05.006.

Clark, D., Nelson, B. C., Chang, H.-Y., Martinez, M., Slack, K., & D’Angelo, C. M. (2011). Exploring Newtonian mechanics in a conceptually-integrated digital game: Comparison of learning and affective outcomes for students in Taiwan and the United States. Computers & Education, 57, 2178–2195.

Cohen, J., Cohen, P., West, S. G., & Aiken, L. S. (2003). Applied multiple regression/correlation analysis for the behavioral sciences. London: Routledge.

Connell, J. P., & Wellborn, J. G. (1991). Competence, autonomy, and relatedness: A motivational analysis of self-system processes. In M. R. Gunnar & L. A. Sroufe (Eds.), Self processes in development: Minnesota Symposium on Child Psychology (Vol. 23, pp. 43–77). Hillsdale: Erlbaum.

Connolly, T. M., Boyle, E. A., MacArthur, E., Hainey, T., & Boyle, J. M. (2012). A systematic literature review of empirical evidence on computer games and serious games. Computers & Education, 59, 661–686.

Corno, L., & Mandinach, E. B. (1983). The role of cognitive engagement in classroom learning and motivation. Educational Psychologist, 18(2), 88–108. https://doi.org/10.1080/00461528309529266.

Csikszentmihalyi, M. (1975). Beyond boredom and anxiety. San Francisco: Jossey-Bass.

Csikszentmihalyi, M. (1990). Flow: The psychology of optimal experience. New York: Harper and Row.

Erhel, S., & Jamet, E. (2013). Digital game-based learning: Impact of instructions and feedback on motivation and learning effectiveness. Computers & Education, 67, 156–167.

Eseryel, D., Law, V., Ifenthaler, D., Ge, X., & Miller, R. (2014). An investigation of the interrelationships between motivation, engagement, and complex problem solving in game-based learning. Journal of Educational Technology & Society, 17(1), 42–53.

Eslinger, E., White, B., Frederiksen, J., & Brobst, J. (2008). Supporting inquiry processes with an interactive learning environment: Inquiry Island. Journal of Science Education and Technology, 17, 610–617.

Fisher, J. E. (1976). Competition and gaming: An experimental study. Simulation & Gaming, 7(3), 321–328.

Foo, J. C., Nagase, K., Naramura-Ohno, S., Yoshiuchi, K., Yamamoto, Y., & Morita, K. (2017). Rank among peers during game competition affects the tendency to make risky choices in adolescent males. Frontiers in Psychology, 8, 16. https://doi.org/10.3389/fpsyg.2017.00016.

Fredricks, J. A., Blumenfeld, P. C., Friedel, J., & Paris, A. (2005). School engagement. In K. A. Moore & L. Lippman (Eds.), Conceptualizing and measuring indicators of positive development: What do children need to flourish? (pp. 305–321). New York: Kluwer Academic/Plenum Press.

Fredricks, J. A., Blumenfeld, P. C., & Paris, A. H. (2004). School engagement: Potential of the concept, state of the evidence. Review of Educational Research, 74, 59–109.

Fredricks, J. A., Filsecker, J., & Lawson, M. A. (2016a). Student engagement, context, and adjustment: Addressing definitional, measurement, and methodological issues. Learning and Instruction, 43, 1–4.

Fredricks, J. A., Wang, M. T., Linn, J. S., Hofkens, T. L., Sung, H., Parr, A., et al. (2016b). Using qualitative methods to develop a survey measure of math and science engagement. Learning and Instruction, 43, 5–15.

Gee, J. P. (2005). Why video games are good for your soul: Pleasure and learning. Melbourne: Common Ground.

Gefen, D., Rigdon, E. E., & Straub, D. (2011). An update and extension to SEM guidelines for administrative and social science research. MIS Quarterly. https://doi.org/10.2307/23044042.

Gredler, M. E. (2004). Games and simulations and their relationships to learning. In D. H. Jonassen (Ed.), Handbook of research for educational communications and technology (2nd ed., pp. 571–582). Mahwah: Lawrence Erlbaum Associates.

Greene, B. A. (2015). Measuring cognitive engagement with self-report scales: Reflections from over 20 years of research. Educational Psychologist, 50(1), 14–30. https://doi.org/10.1080/00461520.2014.989230.

Greene, B. A., & Miller, R. B. (1996). Influences on achievement: Goals, perceived ability, and cognitive engagement. Contemporary Educational Psychology, 21(2), 181.

Hamari, J., Shernoff, D. J., Rowe, E. B. C., & Edwards, T. (2016). Challenging games help students learn: An empirical study on engagement, flow and immersion in game-based learning. Computers in Human Behavior, 54, 170–179. https://doi.org/10.1016/j.chb.2015.07.045.

Hong, J. C., Hwang, M. Y., Lu, C. H., Cheng, C. L., Lee, Y. C., & Lin, C. L. (2009). Playfulness-based design in educational games: A perspective on an evolutionary contest game. Interactive Learning Environments, 17(1), 15–35.

Hou, H.-T., & Li, M.-C. (2014). Evaluating multiple aspects of a digital educational problem-solving-based adventure game. Computers in Human Behavior, 30, 29–38.

Hu, L.-T., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling, 6(1), 1–55.

Huang, W.-H. (2011). Evaluating learners’ motivational and cognitive processing in an online game-based learning environment. Computers in Human Behavior, 27(2), 694–704. https://doi.org/10.1016/j.chb.2010.07.021.

Huizenga, J., Admiraal, W., Akkerman, S., & Dam, G. (2009). Mobile game-based learning in secondary education: Engagement, motivation and learning in a mobile city game. Journal of Computer Assisted Learning, 25(4), 332–344. https://doi.org/10.1111/j.1365-2729.2009.00316.x.

Hwang, G. J., Wu, P.-H., & Chen, C.-C. (2012). An online game approach for improving students’ learning performance in web-based problem-solving activities. Computers & Education, 59, 1246–1256.

Inal, Y., & Cagiltay, K. (2007). Flow experiences of children in an interactive social game environment. British Journal of Educational Technology, 38(3), 455–464. https://doi.org/10.1111/j.1467-8535.2007.00709.x.

Jayakanthan, R. (2002). Application of computer games in the field of education. The Electronic Library, 20(2), 98–102. https://doi.org/10.1108/02640470210697471.

Johnson, D. W., & Johnson, R. T. (1989). Cooperation and competition: A meta-analysis of the research. Hillsdale: Lawrence Erlbaum.

Johnson, D. W., & Johnson, R. T. (2009). An educational psychology success story: Social interdependence theory and cooperative learning. Educational Researcher, 38(5), 365–379. https://doi.org/10.3102/0013189x09339057.

Jonassen, D. H. (1999). Designing constructivist learning environments. In C. M. Reigeluth (Ed.), Instructional-design theories and models: A new paradigm of instructional theory (Vol. II). Mahwah: Lawrence Erlbaum Associates.

Ke, F. (2009). A qualitative meta-analysis of computer games as learning tools. In R. E. Ferdig (Ed.), Handbook of research on effective electronic gaming in education (pp. 1–32). Hershey: IGI Global.

Ke, F. (2016). Designing and integrating purposeful learning in game play: A systematic review. Educational Technology Research and Development, 64(2), 219–244. https://doi.org/10.1007/s11423-015-9418-1.

Ke, F., & Grabowski, B. (2007). Gameplaying for math learning: Cooperative or not? British Journal of Educational Technology, 38(2), 249–259. https://doi.org/10.1111/j.1467-8535.2006.00593.x.

Keller, J. M. (1993). Instructional material motivational survey. Unpublished documents: Florida State University.

Ketelhut, D. J., Dede, C., Clarke, J., Nelson, B., & Bowman, C. (2007). Studying situated learning in a multi-user virtual environment. In E. Baker, J. Dickieson, W. Wulfeck, & H. O’Neil (Eds.), Assessment of problem solving using simulations. Mahwah: Lawrence Erlbaum Associates.

Kiili, K. (2006). Evaluations of an experiential gaming model. Human Technology, 2(2), 187–201.

Kline, R. (2011). Principles and practice of structural equation modeling. New York: The Guilford Press.

Lam, S., Yim, P., Law, J. S. F., & Cheung, R. W. Y. (2004). The effects of competition on achievement motivation in Chinese classrooms. British Journal of Educational Psychology, 74, 281–296.

Lee, J., & Spector, J. M. (2012). Effects of model-centered instruction on effectiveness, efficiency, and engagement with ill-structured problem solving. Instructional Science, 40, 537–557.

Li, S. C., Law, N., & Lui, K. F. A. (2006). Cognitive perturbation through dynamic modelling: A pedagogical approach to conceptual change in science. Journal of Computer Assisted Learning, 22(6), 405–422. https://doi.org/10.1111/j.1365-2729.2006.00187.x.

Malone, T. W. (1981). Toward a theory of intrinsically motivating instruction. Cognitive Science, 5(4), 333–369. https://doi.org/10.1207/s15516709cog0504_2.

Malone, T. W., & Lepper, M. R. (1987). Making learning fun: A taxonomy of intrinsic motivations for learning. In R. Snow & M. J. Farr (Eds.), Aptitude, learning, and instruction. Hillsdale: Erlbaum.

Mayer, R. E. (Ed.). (2011). Multimedia learning and games. Charlotte: Information Age.

Miller, R. B., Greene, B. A., Montalvo, G. P., Ravindran, B., & Nichols, J. D. (1996). Engagement in academic work: The role of learning goals, future consequences, pleasing others, and perceived ability. Contemporary Educational Psychology, 21(4), 388–422. https://doi.org/10.1006/ceps.1996.0028.

Nietfeld, J. L., Shores, L. R., & Hoffmann, K. F. (2014). Self-regulation and gender within a game-based learning environment. Journal of Educational Psychology, 106(4), 961–973. https://doi.org/10.1037/a0037116.

Pajares, F. (1996). Self-efficacy beliefs in academic settings. Review of Educational Research, 66(4), 543–578.

Papastergiou, M. (2009). Digital game-based learning in high school computer science education: Impact on educational effectiveness and student motivation. Computers & Education, 52(1), 1–12. https://doi.org/10.1016/j.compedu.2008.06.004.

Pearce, J. M., Ainley, M., & Howard, S. (2005). The ebb and flow of online learning. Computers in Human Behavior, 21(5), 745–771. https://doi.org/10.1016/j.chb.2004.02.019.

Pintrich, P. R. (2000). The role of goal orientation in self-regulated learning. In M. Boekaerts, P. R. Pintrich, & M. Zeidner (Eds.), Handbook of self-regulation (pp. 451–502). San Diego: Academic Press.

Rieber, L. P. (1996). Seriously considering play: Designing interactive learning environments based on the blending of microworlds, simulations, and games. Educational Technology Research and Development, 44(2), 43–48.

Sánchez, J., & Olivares, R. (2011). Problem solving and collaboration using mobile serious games. Computers & Education, 57(3), 1943–1952. https://doi.org/10.1016/j.compedu.2011.04.012.

Sandoval, W. A., & Reiser, B. J. (2004). Explanation-driven inquiry: Integrating conceptual and epistemic scaffolds for scientific inquiry. Science Education, 88(3), 345–372.

Seligman, M. E. P., & Csikszentmihalyi, M. (2000). Positive psychology: An introduction. American Psychologist, 55(1), 5–14. https://doi.org/10.1037/0003-066X.55.1.5.

Sitzmann, T. (2011). A meta-analytic examination of the instructional effectiveness of computer-based simulation games. Personnel Psychology, 64, 489–528. https://doi.org/10.1111/j.1744-6570.2011.01190.x.

Sun, C.-Y., Kuo, C.-Y., Hou, H.-T., & Lin, Y.-Y. (2017). Exploring learners’ sequential behavioral patterns, flow experience, and learning performance in an anti-phishing educational game. Educational Technology & Society, 20(1), 45–60.

Sweetser, P., & Wyeth, P. (2005). Gameflow: A model for evaluating player enjoyment in games. ACM Computers in Entertainment, 3(3), 1–24.

Tabachnick, B. G., & Fidell, L. S. (2007). Using multivariate statistics (5th ed.). Needham Heights: Allyn & Bacon.

Tennyson, R. D., & Jorczak, R. L. (2008). A conceptual framework for the empirical study of games. In H. O’Neil & R. Perez (Eds.), Computer games and team and individual learning (pp. 3–20). Mahwah: Erlbaum.

ter Vrugte, J., de Jong, T., Vandercruysse, S., Wouters, P., van Oostendorp, H., & Elen, J. (2015). How competition and heterogeneous collaboration interact in prevocational game-based mathematics education. Computers & Education, 89, 42–52.

Tüzün, H., Yilmaz-Soylu, M., Karakus, T., Inal, Y., & Kızılkaya, G. (2009). The effects of computer games on primary school students’ achievement and motivation in geography learning. Computers & Education, 52(1), 68–77. https://doi.org/10.1016/j.compedu.2008.06.008.

Van Eck, R., & Dempsey, J. V. (2002). The effect of competition and contextualized advisement on the transfer of mathematics skills in a computer-based instructional simulation game. Educational Technology Research and Development, 50(3), 23–41. https://doi.org/10.1007/BF02505023.

Vandercruysse, S., Vandewaetere, M., Cornillie, F., & Clarebout, G. (2013). Competition and students’ perceptions in a game-based language learning environment. Educational Technology Research and Development, 61(6), 927–950. https://doi.org/10.1007/s11423-013-9314-5.

Vansteenkiste, M., Simons, J., Lens, W., Sheldon, K. M., & Deci, E. L. (2004). Motivating learning, performance, and persistence: The synergistic effects of intrinsic goal contents and autonomy supportive contexts. Journal of Personality and Social Psychology, 87, 246–260.

Vogel, J. F., Vogel, D. S., Cannon-Bowers, J., Bowers, C. A., Muse, K., & Wright, M. (2006). Computer gaming and interactive simulations for learning: A meta-analysis. Journal of Educational Computing Research, 34(3), 229–243.

Wan, C.-S., & Chiou, W.-B. (2006). Why are adolescents addicted to online gaming? An interview study in Taiwan. Cyberpsychology & Behavior, 9(6), 762–766.

Wang, M. T., Fredricks, J. A., Ye, F., Hofkens, T. L., & Linn, J. S. (2016). The Math and Science Engagement Scales: Scale development, validation, and psychometric properties. Learning and Instruction, 43, 16–26.

White, B., & Frederiksen, J. R. (1998). Inquiry, modeling, and metacognition: Making science accessible to all students. Cognition and Instruction, 16(1), 3–118.

Williams, R. B., & Clippinger, C. A. (2002). Aggression, competition and computer games: Computer and human opponents. Computers in Human Behavior, 18(5), 495–506.

Wouters, P., van Nimwegen, C., van Oostendorp, H., & van der Spek, E. D. (2013). A meta-analysis of the cognitive and motivational effects of serious games. Journal of Educational Psychology. https://doi.org/10.1037/a0031311.

Young, M. F., Slota, S., Cutter, A. B., Jalette, G., Mullin, G., Lai, B., et al. (2012). Our princess is in another castle: A review of trends in serious gaming for education. Review of Educational Research, 82(1), 61–89.

Yu, F. Y. (2003). The mediating effects of anonymity and proximity in an online synchronized competitive learning environment. Journal of Educational Computing Research, 29(2), 153–167.

Zimmerman, B. J., & Schunk, D. H. (2001). Self-regulated learning and academic achievement: Theoretical perspectives. Mahwah: Lawrence Erlbaum.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A. Samples of content knowledge test

Cite this article

Chen, CH., Law, V. & Huang, K. The roles of engagement and competition on learner’s performance and motivation in game-based science learning. Education Tech Research Dev 67, 1003–1024 (2019). https://doi.org/10.1007/s11423-019-09670-7

DOI: https://doi.org/10.1007/s11423-019-09670-7