Abstract

A proof-of-concept practice-based implementation network was developed in the US Departments of Veterans Affairs (VA) and Defense to increase the speed of implementation of mental health practices, derive lessons learned before larger-scale implementation, and facilitate organizational learning. One hundred thirty-four clinicians in 18 VA clinics received brief training in the use of the PTSD checklist (PCL) in clinical care. Two implementation strategies, external facilitation and technical assistance, were used to encourage the use of outcomes data to inform treatment decisions and to increase discussion of results with patients. Results were mixed for changes in the frequency of PCL administration, but clinicians consistently reported increased use of PCL data to inform treatment decisions and more frequent discussion of results with patients. Programs and clinicians were successfully recruited to participate in a 2-year initiative, suggesting the feasibility of using this organizational structure to facilitate the implementation of new practices in treatment systems.

Despite the development of evidence-based psychotherapies (EBPs) for many mental health problems and the delineation of best practices in clinical practice guidelines, there remains an enormous gap between the services available in routine care and those supported by research evidence and/or espoused in guidelines.1 Implementation science theory and research have emerged to study and address these discrepancies.2, 3 To facilitate the ongoing implementation of best practices effectively, however, it is also important to create pathways for distributing information about EBPs, that is, a dissemination infrastructure.

Practice-based research networks (PBRNs) are groups of providers and researchers working together to examine health care processes in broad populations of patients and settings in an effort to improve health care outcomes. This model was adapted to develop a practice-based implementation network in the US Department of Veterans Affairs (VA) and the Department of Defense (DoD). A standing practice-based implementation network (PBI network) could be one key element of an organizational dissemination and implementation infrastructure intended to enable more rapid and effective implementation of new practices. The PBI network is a first-of-its-kind collaboration between stakeholders in the VA and DoD (providers, clinics, evaluators, and leadership) brought together to help improve the implementation of mental health practice changes on a national scale.

The rationale for creating standing PBI networks has several elements. First, they can enable the study of factors affecting the uptake and sustainment of practice changes. Second, if established as ongoing operations, they can increase the speed of implementation by offering immediately available implementation laboratories in which to pilot-test implementation before larger-scale or enterprise-wide initiatives. Third, they can conserve resources: start-up costs (e.g., hiring personnel) and the time and cost of developing evaluation instruments, training methodologies, and the like can be reduced for successive projects. Fourth, PBI networks can facilitate organizational learning by providing continuing opportunities to refine and improve implementation methods.

The broad goals of the PBI network described in this manuscript are to improve the uptake of best practices in post-traumatic stress disorder (PTSD) treatment while examining barriers to and facilitators of practice change. Our primary goal in this first phase of development was to explore the feasibility of establishing such a network in a complex healthcare system. The inaugural practice change implemented in our PBI network was routine outcome monitoring, a practice that the Institute of Medicine4 highlighted as essential to, yet underused in, PTSD treatment. Two implementation strategies5 were used: external facilitation (EF)6, 7 paired with evidence-based quality improvement, and technical assistance (TA).8 Both are described below. This paper focuses on the implementation of the PBI network within the VA national health care system. It provides an overview of the structure of the PBI network, describes how the network was created, outlines the implementation strategies used, and presents pre-implementation, post-implementation, and follow-up data on the implementation of routine PTSD outcome monitoring using the PTSD checklist (PCL).9 Only VA data are reported here because the organizational contexts of VA and DoD are very different; the implementation of the PBI network within DoD will be described in a separate publication.

Methods

Design

The PBI network described here was initiated with resources provided by the VA/DoD Joint Incentive Fund. The original project plan was to use external facilitation (EF) in 10 sites, with a simple pre-post evaluation design. However, after the first 10 sites were recruited, another eight sites asked to participate. Given the high demand for participation and limited capacity to deliver EF, we provided a less resource-intensive strategy, technical assistance (TA), to the additional sites and compared the outcomes of both efforts. Because sites self-selected into the two conditions and were not intentionally matched, however, we treated this as two parallel pre-post studies rather than as a quasi-experimental design. The local institutional review board determined this evaluation to be program evaluation rather than research. Participation by clinicians was voluntary, and all survey data were collected anonymously and aggregated at the site level.

Conceptual framework

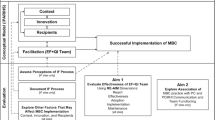

The Promoting Action on Research Implementation in Health Services (PARIHS)10 theoretical framework guided the operationalization of the implementation strategies and development of measures. In the PARIHS framework, successful implementation is represented as a function of the nature and type of evidence, the qualities of the context in which the evidence is being introduced, and the way the process is facilitated. PARIHS domains assessed in the current study included (1) perceived evidence for outcomes monitoring, (2) context factors shown to predict sustained implementation (especially fit with culture, leadership support, and infrastructure for outcomes monitoring), and (3) facilitation factors. The present report focuses on the process of setting up the network and overall results. Analyses of contextual factors and facilitation processes will be addressed in later papers.

Sample

The PBI network comprised 18 VA clinics based in 16 VA medical centers across the US. To be eligible, a site had to be one of the three settings in which PTSD is treated in VA (specialty PTSD clinics, general mental health clinics, or primary care clinics with integrated mental health services). Sites had to commit a minimum of three participating treatment providers, including one internal clinical champion to lead local implementation. They also agreed to obtain appropriate local approvals before participating, to participate in 2 h of training, to complete online surveys, to join regular follow-up calls between the internal champion and external facilitator, and to indicate a willingness to consider ongoing PBI network collaboration. Sites were recruited via VA mental health listservs, direct outreach to mental health managers, and e-mails to VA contacts known to the project team. The recruitment target of ten clinics (four specialty PTSD, four general mental health, and two primary care) was far exceeded: eighteen clinics applied, and all were accommodated, in line with the objective of building a large network of field settings and clinicians that can continue to expand. See Table 1 for a breakdown of clinic types, implementation strategy, and average number of participating clinicians.

Implementation strategies

Two implementation strategies were used: EF and TA. The elements common to EF and TA, and the ways in which the EF strategy differed from TA (see Fig. 1), are described below. Both EF and TA sites nominated an internal champion, usually a clinical provider within the participating clinic. Although internal champions typically had no administrative authority within their clinics, they had to work within the clinical structure of the clinic and be endorsed by local leadership as knowledgeable and enthusiastic about outcomes monitoring and well respected by peers and colleagues. Internal champions helped lead local implementation efforts, including the development of an implementation plan. Both EF and TA sites were also assigned a person to support implementation (an external facilitator or technical assistant, respectively); internal champions were the primary point of contact between the clinic and the external facilitator or technical assistant.

Both EF and TA sites received a 2-h clinician training. External facilitators delivered an in-person interactive workshop during a site visit for clinics assigned to the EF cohort. The eight clinics receiving TA participated in the training via web teleconference prior to implementation. Training content covered: presentation of rationale for outcomes monitoring; VA measurement requirements; ways of incorporating outcome monitoring into PTSD treatment; methods of achieving patient buy-in; patient education about assessment instruments; options for tracking changes; feedback to patients; and role-play practice. Clinicians were given the option of also administering VA-mandated measures for depression (Patient Health Questionnaire-9, PHQ-9)11 and substance abuse (Brief Addiction Monitor).12 Collaboration among the PBI network sites was facilitated during a 6-month active implementation phase for both EF and TA sites, with monthly internal champion calls, access to a PBI network website, and distribution of a newsletter fostering peer support.

External facilitation

In addition to the elements common to both strategies, the EF strategy included a needs assessment, an on-site visit, development of a written implementation plan, and implementation support from the external facilitator after the visit (1–4 scheduled calls per month, plus additional support via e-mail or phone as needed). Before scheduling the on-site visit, the external facilitator conducted a 1-h needs assessment telephone interview with the internal champion. During the site visit, the external facilitator met with the internal champion, site mental health leadership, and participating clinicians to discuss the initiative and collaboratively develop an implementation plan. The overarching goal of the EF process was to establish a partnership to develop local strategies for implementing routine outcomes monitoring. After the site visit, the external facilitator and internal champion held phone calls at least monthly for 6 months. These calls allowed the external facilitator to check in with the internal champion about implementation progress, including any challenges or issues with which the champion needed assistance. For example, external facilitators and internal champions used the calls to troubleshoot unanticipated challenges (e.g., technical issues with tracking uptake, getting team consensus on additional measures to implement) and to enhance local supports (e.g., strategizing ways to involve local leadership). External facilitators provided assistance using three key components of facilitation: education, interactive problem-solving, and support. The EF strategy used a manual to provide clear guidance to facilitators.13 Clinical psychologist staff members were trained as facilitators by Dr. Kirchner and Mr. Smith, national experts in EF. In addition to providing a 2-day training in EF, the trainers reviewed facilitator activities in monthly mentoring calls to ensure adherence to the EF model.
The three external facilitators were VA clinical psychologists with expertise in evidence-based treatments and routine outcome monitoring for PTSD. External facilitators selected for this role had demonstrated skills in building relationships with key stakeholders to facilitate practice change within clinics, programs, or facilities.

Procedures

Study evaluation procedures were approved by the local VA hospital research and development committee and the university-affiliated institutional review board.

Two weeks before implementation of routine outcome monitoring began, a link to an online survey was e-mailed to site participants. After the survey was completed, a 6-month phase of active implementation commenced. In this phase, the ten EF sites received on-site visits from their external facilitator that included in-person training for clinicians, followed by development of the implementation plan. The eight TA sites received video-teleconference training for clinicians, followed by completion of implementation plans with limited support from their technical assistant. During the 6-month active implementation phase, TA site internal champions had one telephone call with the assigned technical assistant, followed by e-mail contact as needed for implementation support. Internal champions at both EF and TA sites had access to optional monthly community-of-practice calls and a website for implementation support and sharing of materials. After the active implementation phase, participants completed a post-implementation survey. During the second (inactive) 6-month phase, both EF and TA sites were offered additional assistance if requested and continued to have access to community-of-practice and website resources, but were not actively engaged. All participants received a third and final follow-up survey after the inactive phase had ended, approximately 1 year after implementation began.

Measures

A survey previously developed to assess a measurement feedback system14 was adapted for the current study. The survey assessed demographics, attitudes toward standardized assessment, organizational readiness for change, work group context, and four key aspects of PTSD outcome monitoring: PCL administration at intake, repeated administration of the PCL, use of the PCL to make treatment decisions, and discussion of PCL results with patients.

Demographics

Demographic information included gender, profession (e.g., nurse, social worker), type of clinic worked in most often (e.g., PTSD specialty clinic, primary care), and number of years of clinical experience since finishing a professional degree. Participants also reported average direct patient care hours per week, caseload size, how many patients with PTSD they were currently treating, and the type of clinical services they provided (e.g., care management, therapy, medications).

Attitudes toward standardized assessment scales

The Attitudes Toward Standardized Assessment Scales (ASA),15 a 22-item measure of clinician attitudes about using standardized assessment, was included as a potential moderator or mediator of increased use of the PCL during the project. The measure was originally worded for child mental health; the wording was adjusted for providers treating adult patients. For example, one item states, “Completing a standardized measure is too much of a burden for children and their families.” For items like this, the word “children” was changed to “clients.” Participants rated items on a 1–5 scale (“strongly disagree” to “strongly agree”). Three subscale scores are calculated: benefit over clinical judgment, psychometric quality, and practicality.
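To make the scoring concrete, the following Python sketch shows one common way to score Likert subscales such as those of the ASA: negatively worded items (like the burden item quoted above) are reverse-keyed so that higher scores indicate more favorable attitudes, and a subscale score is the mean of its items. The item IDs, the keying, and the use of means rather than sums are hypothetical illustrations, not the published ASA scoring key.

```python
from statistics import mean

SCALE_MIN, SCALE_MAX = 1, 5  # 1 = "strongly disagree", 5 = "strongly agree"


def reverse(score: int) -> int:
    """Reverse-key a 1-5 Likert response (1 <-> 5, 2 <-> 4, 3 stays 3)."""
    return SCALE_MIN + SCALE_MAX - score


def subscale_mean(responses: dict[str, int], items: list[str], reversed_items: set[str]) -> float:
    """Mean of a subscale's items after reverse-keying the negatively worded ones."""
    return mean(reverse(responses[i]) if i in reversed_items else responses[i] for i in items)


# HYPOTHETICAL item IDs and keying, for illustration only.
practicality_items = ["p1", "p2", "p3"]
negatively_worded = {"p2"}  # e.g., "... too much of a burden for clients ..."
answers = {"p1": 4, "p2": 2, "p3": 3}

print(subscale_mean(answers, practicality_items, negatively_worded))
```

Here the response of 2 on the negatively worded item counts as a 4, so the subscale mean is (4 + 4 + 3) / 3.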

Assessment of the evidence for the clinical practice guideline and work group context

As potential moderators or mediators of changes in practice, two portions of the Organizational Readiness to Change Assessment (ORCA)16 were used to measure participants' appraisal of the evidence for the clinical practice guideline and their work group context. The practice change was identified as the clinical practice guideline regarding the use of outcome monitoring in PTSD treatment and was stated as, “Patients should be assessed at least every three months after initiating treatment for PTSD, in order to monitor changes in clinical status and revise the intervention plan accordingly. Comprehensive re-assessment and evaluation of treatment progress should include a measure of PTSD symptomatology (e.g., PCL) and strongly consider a measure of depression symptomatology (e.g., PHQ-9).” Participants rated the strength of the evidence for the guideline on a scale from 1 (very weak evidence) to 5 (very strong evidence), both according to their own opinion and according to how they thought respected clinical experts in their clinic felt about it. Participants then rated, from 1 (strongly disagree) to 5 (strongly agree), their agreement with ten statements about the strength of the evidence for the guideline based on research, clinical experience, and patient preferences. Participants also rated statements about work context on the same 1 (strongly disagree) to 5 (strongly agree) scale; these covered culture, leadership, measurement, readiness for change, and resources that could support implementation.

PTSD outcomes monitoring

Two items asked about initial administration of the PCL at intake. The items distinguished patients receiving one of the EBPs for PTSD implemented in VA, cognitive processing therapy (CPT) or prolonged exposure (PE), from patients receiving other services, because the training programs for each EBP emphasize use of the PCL in these treatments. These items were as follows: “Of those new PTSD patients who are getting PE or CPT, what proportion of them had PTSD Checklist (PCL) completed as part of their initial assessment?” and “Of those new PTSD patients who are receiving other services (not PE or CPT), what proportion of them had PTSD Checklist (PCL) completed as part of their initial assessment?” Two additional items asked participants about repeated administration of the PCL. The first was, “Of those repeat or continuing PTSD patients who are getting PE or CPT, what proportion of them had a follow-up PTSD Checklist (PCL) completed to measure their progress since intake?” The second was, “Of those repeat or continuing PTSD patients who are receiving other services (not PE or CPT), what proportion of them completed a follow-up PTSD Checklist (PCL) to measure their progress since intake?” Responses to these four items were measured on a 12-point scale from 1 = “none” to 12 = “91–100%.”

Two further items assessed the incorporation of assessment data into the treatment process itself: “In the last month, thinking only about patients for whom you had follow-up PCL scores, for what proportion of those patients did you use that PCL score to help make treatment decisions?” and “In the last month, thinking only about patients for whom you had follow-up PCL scores, for what proportion of those patients did you discuss the result of the measure with the patient?” These items were asked only of participants who reported repeatedly administering the PCL to 11% or more of their patients, and they were measured on a 7-point scale from 1 = “never or 0%” to 7 = “91% or more of my patients.”
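The 12-point frequency items were recoded to category midpoints before analysis (e.g., “41 to 50 percent” became 45.5, as described under Statistical analyses). The Python sketch below illustrates that recoding together with the skip rule for the two use-of-data items. Only the bands for codes 7 and 12 are stated in the text; the 10-point bands for codes 4 through 12, the value 0 for “none,” and the helper names are assumptions made for illustration.

```python
from typing import Optional

# Documented anchors: code 7 = "41 to 50 percent", code 12 = "91 to 100 percent".
# ASSUMPTION: codes 4-12 follow the same 10-point bands (4 = 11-20%, ..., 12 = 91-100%).
BANDS = {k: (10 * (k - 4) + 11, 10 * (k - 4) + 20) for k in range(4, 13)}


def recode_midpoint(code: int) -> Optional[float]:
    """Return the percentage midpoint for a 12-point response code, or None if unknown."""
    if code == 1:
        return 0.0  # "none" (ASSUMED to mean 0%)
    if code in BANDS:
        lo, hi = BANDS[code]
        return (lo + hi) / 2
    return None  # codes 2-3 cover sub-11% bands that are not stated in the text


def asked_use_items(repeat_code: int) -> bool:
    """HYPOTHETICAL skip rule: ask the use-of-data items if repeat PCL use is >= 11% (code >= 4)."""
    return repeat_code >= 4


print(recode_midpoint(7), recode_midpoint(12))
print(asked_use_items(3), asked_use_items(4))
```

Under these assumptions, the “11% or more” threshold coincides with the lower edge of the code-4 band, so the skip rule reduces to a simple comparison on the raw code.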

Statistical analyses

The overall project design was a mixed-methods program evaluation consisting of quantitative self-report surveys and qualitative semi-structured interviews. This paper focuses on the initial quantitative outcomes. Data were analyzed using PASW Statistics 21. To facilitate interpretation, responses to Likert frequency scales were recoded to the midpoint of the corresponding category. For example, for responses measured on the 12-point scale from 1 = “none” to 12 = “91–100%,” “7 (41 to 50 percent)” was recoded to 45.5 and “12 (91 to 100 percent)” was recoded to 95.5. To account for the clustering of subjects within sites and to accommodate missing data, changes in reported practices were estimated using the mixed linear model procedure. Mixed modeling (also known as hierarchical linear modeling) differs from ordinary linear regression in that it accounts for intraclass correlations (clustering) among repeated observations from the same person and among different participants at the same site. Responses at each time point (baseline, 6-month, and 12-month surveys) were nested within participants, and participants were nested within sites. Another strength of mixed modeling is that it uses all available data, so participants did not need to be excluded if they had missing data at one or more time points. Analyses of changes in practice included site and time (baseline, 6-month, or 12-month survey) as fixed effects. Additional analyses assessed whether pretest ASA total scores, ORCA evidence scale scores, or ORCA context scale scores predicted changes in practice during the project (moderation), and whether changes in ASA or ORCA scores during the project correlated with changes in practice (potential mediation).
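One way to write the model described above, in our own notation and assuming a random intercept for each participant with time, site, and their interaction as fixed effects (time × site interaction terms are reported in the Results), is the following, where t indexes survey wave (baseline, 6 months, 12 months), j indexes site, and i(j) denotes participant i within site j:

$$
Y_{tij} = \mu + \tau_t + \gamma_j + (\tau\gamma)_{tj} + u_{i(j)} + \varepsilon_{tij},
\qquad u_{i(j)} \sim \mathcal{N}(0, \sigma_u^2), \quad \varepsilon_{tij} \sim \mathcal{N}(0, \sigma_\varepsilon^2)
$$

The shared intercept u_{i(j)} induces the within-person correlation across waves, and the site terms absorb between-site differences. Because the likelihood is built from each observed Y_{tij}, a participant who skipped one or two surveys still contributes the remaining responses, which is why incomplete cases need not be dropped (under a missing-at-random assumption).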

Results

Participants

A total of 134 participating providers were identified by internal champions before the beginning of the active phase of implementation. The EF sample comprised staff in the first ten sites, which were offered external facilitation. Of the 66 participants in the EF sample, 58 (88%) provided demographic information (see Table 2). Their most common professions were psychologists (45%, n = 26), social workers (38%, n = 18), or psychiatrists (17%, n = 10). They were employed in general mental health (45%, n = 26), PTSD specialty (36%, n = 21), and primary care (16%, n = 9) settings. Two-thirds (66%, n = 38) were female. They had an average of 3.6 years of experience, spent an average of 24 h per week providing patient care, and had an average panel size of 112 patients.

The TA sample consisted of staff in the additional eight sites added to the project. Of the 68 participants in the TA sample, 64 (94%) provided demographic information (see Table 2). Most were psychologists (58%, n = 37) or social workers (23%, n = 15). Over three-quarters (78%, n = 50) worked in PTSD specialty clinics; most of the rest worked in general mental health clinics (19%, n = 12). Seventy percent (n = 45) were female. They had an average of 3.0 years of professional experience working as a mental health provider, spent an average of 24 h per week providing direct patient care, and had an average panel size of 74 patients.

Subject retention and missing data

Of all 134 participants (TA and EF combined), 113 (84%) provided survey data at baseline, 93 (69%) completed the 6-month survey, and 87 (65%) completed the 12-month survey. Response rates did not differ significantly between the TA and EF samples. Respondents and non-respondents at each time point did not differ by gender, clinic type, years of experience, or panel size. However, there were significant differences by profession in response to the 12-month survey (chi-square, 8 df = 17.8, p < .03). Specifically, non-responders at 12 months included more psychiatrists (23% vs. 5% among responders), substance abuse counselors (3% vs. 0%) and peer support counselors (3% vs. 0%), and fewer nurses (0% vs. 6%). Similar differences in response by profession were observed at 6 months but the differences were not statistically significant (chi-square, 8 df = 15.4, p < .06).
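The response rates and p-values above can be checked with a little arithmetic. For a chi-square statistic with an even number of degrees of freedom, the upper-tail probability has the closed form exp(-x/2) multiplied by the sum over i from 0 to df/2 - 1 of (x/2)^i / i!, so the two profession comparisons (8 df) can be verified in plain Python without a statistics package. This is a reader's sketch for checking the reported values, not the authors' analysis code; the helper name is ours.

```python
import math


def chi2_sf_even_df(x: float, df: int) -> float:
    """Upper-tail p-value P(X > x) for a chi-square variable with even df.

    Uses the closed form exp(-x/2) * sum_{i < df/2} (x/2)**i / i!, valid only for even df.
    """
    if df <= 0 or df % 2:
        raise ValueError("closed form requires a positive even df")
    half = x / 2
    return math.exp(-half) * sum(half ** i / math.factorial(i) for i in range(df // 2))


# Survey response rates among the 134 enrolled providers, as reported in the text.
for label, n in [("baseline", 113), ("6-month", 93), ("12-month", 87)]:
    print(f"{label}: {n / 134:.0%}")

# Profession differences between responders and non-responders (8 df).
print(f"12-month: p = {chi2_sf_even_df(17.8, 8):.3f}")
print(f"6-month:  p = {chi2_sf_even_df(15.4, 8):.3f}")
```

Both computed p-values fall just under the stated bounds (p < .03 and p < .06), consistent with the text.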

Technical assistance sites

Estimated mean scores on outcome monitoring variables for TA sites at all time points are shown in Fig. 2. In TA sites, administration of the PCL at intake was near ceiling at all time points and did not change over time: reported means ranged from 89 to 95% of patients receiving PE or CPT, and reports of intake administration to patients not receiving PE or CPT were similar, with means between 84 and 93% at all time points. Repeated administration of the PCL to patients receiving PE or CPT was also near ceiling at all time points, with reported means of 78 to 86% of patients and no significant change over time. Reports of repeated administration of the PCL to patients not receiving PE or CPT were lower, with means between 60 and 72% of patients, and did not change significantly over time.

There was a statistically significant change between pre- and post-strategy means in using repeated PCL scores to help make treatment decisions (F 2, 54 df = 5.88, p < .01). Among clinicians who administered repeated PCLs, the use of data to inform treatment decisions increased from a mean of 63% of patients at baseline to a reported mean of 75% at 6 months and 76% at 12 months (see Fig. 2). Clinicians who administered repeated PCLs also reported increases in their discussion of PCL results with patients (F 2, 54 df = 3.90, p < .03). These rose from a reported 62% of patients at baseline and 66% at 6 months to 76% of patients at 12 months.
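Likewise, the time effects above are F tests with 2 numerator degrees of freedom, for which the upper-tail probability reduces to (1 + 2F/df2) raised to the power -df2/2. The sketch below is an illustrative check on the reported values rather than the authors' procedure (which used a mixed linear model); the helper name `f_sf_df1_2` is ours.

```python
def f_sf_df1_2(f: float, df2: float) -> float:
    """Upper-tail p-value P(F > f) for an F(2, df2) statistic.

    With numerator df = 2 the F survival function has the closed form
    (1 + 2f/df2) ** (-df2/2); other numerator df need the incomplete beta function.
    """
    return (1 + 2 * f / df2) ** (-df2 / 2)


reported = {
    "TA: use of PCL scores for treatment decisions": (5.88, 54),
    "TA: discussion of PCL results with patients": (3.90, 54),
}
for name, (f, df2) in reported.items():
    print(f"{name}: p = {f_sf_df1_2(f, df2):.3f}")
```

The same function applies to the EF statistics with 2 numerator df, such as F(2, 41) = 8.88; the time × site interaction terms have more numerator df and need the general incomplete beta function (for example, `scipy.stats.f.sf`).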

External facilitation sites

Estimated means for EF sites at all time points are shown in Fig. 3. In EF sites, there was a change over time in the administration of the PCL at intake to patients receiving PE or CPT, from an average of 66% of patients at baseline to 81% at 6 months and 71% at 12 months (F 2, 61 df = 4.74, p < .02). Moreover, there was a significant time × site interaction (F 17, 61 df = 2.52, p < .004). Visual inspection of the data suggests that administration of the PCL at intake to patients receiving CPT or PE tended to increase over time in 3 sites, was stable in 4 sites (3 were at ceiling across all time points), declined at 2 sites, and could not be assessed at the 10th site. There was no significant change over time in the administration of the PCL at intake for patients not receiving CPT or PE: this ranged between 65 and 74% of patients at all time points.

Repeated PCL administration throughout treatment, for patients receiving EBPs (PE or CPT) and for those receiving other services, was lower in the EF sites than in the TA sites. In the EF sites, reported repeated use of the PCL with patients receiving PE or CPT ranged between means of 45 and 55% of patients, with no significant change over time. However, there was a significant increase over time in repeated administration of the PCL to patients not receiving PE or CPT (F 2, 67 df = 6.68, p < .01), from 32% of patients at baseline to 48% of patients at both 6 and 12 months.

There was also a statistically significant improvement in reports of using PCL scores to help make treatment decisions (F 2, 48 df = 3.51, p < .04). Clinicians who administered repeated PCLs reported using them for treatment decisions with a mean of 48% of patients at baseline; this increased to 55% of patients at 6 months and 65% of patients at 12 months. There was a similar improvement over time in participant reports of discussing PCL scores with patients (F 2, 41 df = 8.88, p < .001). The baseline mean was 54% of patients; this rose to 63% at 6 months and 77% at 12 months. The degree of change in discussion of PCL scores varied significantly by site (site × time interaction term; F 18, 41 df = 1.90, p < .05). Visual inspection of the data showed that discussion of PCL scores increased in four sites (from under 41% of patients at baseline to over 68% of patients at 12 months), with little change in the other six sites. Five of the six sites already had high (65% to 81%) baseline rates of discussing results with patients.

We also examined the effects of perceived evidence and organizational context on changes in practice. Relative to EF sites, TA sites had significantly higher pretest scores on the ASA (d = .49, p < .01) and on the evidence scale of the ORCA (d = .70, p < .01), but not on the context scale of the ORCA (d = .38, p < .06). However, when the EF and TA sites were combined in one analysis, neither pretest ASA scores, ORCA evidence scores, nor ORCA context scores significantly predicted changes in practice over time. Mean ASA, ORCA evidence, and ORCA context scores did not change significantly over time in either EF or TA sites, and changes in those scores did not predict changes in practice.
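The effect sizes above are reported as d. Assuming they are Cohen's d computed with a pooled standard deviation (the text does not specify the variant), the calculation looks like the following Python sketch; the scores shown are hypothetical values on a 1–5 scale, not study data.

```python
import math
from statistics import mean, stdev


def cohens_d(a: list[float], b: list[float]) -> float:
    """Standardized mean difference (mean(a) - mean(b)) divided by the pooled SD."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * stdev(a) ** 2 + (nb - 1) * stdev(b) ** 2) / (na + nb - 2)
    return (mean(a) - mean(b)) / math.sqrt(pooled_var)


# HYPOTHETICAL pretest scale scores, for illustration only (NOT study data).
ta_scores = [3.8, 4.1, 3.6, 4.0, 3.9]
ef_scores = [3.5, 3.7, 3.4, 3.9, 3.6]
print(round(cohens_d(ta_scores, ef_scores), 2))
```

A positive d here means the first group (TA) scored higher, matching the direction of the comparisons reported in the text.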

Discussion

This paper reports on a pilot effort to establish and evaluate a practice-based implementation network designed to enable more rapid implementation of changes in mental health practices in the Veterans Health Administration (VHA) treatment system. Implementation of routine outcome monitoring in PTSD treatment was selected as our first practice change. Results of the project indicate a mixed impact on outcome monitoring variables. Administration of the PCL for intake assessment or repeated assessment did not increase in the TA sites. In the EF sites, administration of the PCL increased on only two of our four measures (intake assessment of patients receiving EBPs and repeated assessment of patients not receiving EBPs). Possible reasons for this limited impact include ceiling effects due to high baseline rates of administration at intake and during treatment, and the limited accuracy of clinician self-report. Because site participation was voluntary, many of the recruited sites may already have been making substantial use of measurement-based care. Sites with lower rates of administration before the implementation strategies began tended to show increases in these rates. The lower rates of repeated PCL administration in EF sites than in TA sites may also reflect the significantly larger panel sizes in the EF sites.

Although there were mixed results for changes in how often the PCL was administered, there were consistent increases in the use of the data and incorporation of results into the treatment process. In both TA and EF sites, there were significant pre-post increases in the use of repeated administrations of the PCL to help make treatment decisions, and these changes were maintained or increased at follow-up. Similarly, clinicians in both the TA and EF cohorts reported significant increases by follow-up in their rates of discussing PCL scores with patients.

The aggregate outcomes reported here do not fully reflect the ways in which implementation goals and change processes were tailored to each site. For example, sites that were not yet using the PCL on a regular basis may have focused on getting those data collected, with less attention to how those data would be used. In contrast, sites that were already implementing the PCL regularly may have focused on better integrating PCL data into treatment. More detailed analyses are planned to look at how site outcomes were affected by their initial level of performance prior to implementation, the specific outcomes prioritized as targets for quality improvement in their implementation plan, readiness for change and staff attitudes toward standardized assessment, and degree of participation in implementation activities.

This PBI network was established as a time-limited grant-funded initiative. However, to achieve the cost savings and other efficiencies that may be associated with such a network and to create a sustainable resource for VA and DoD, it must retain participation of field sites and champions, attract new participants and funding, and be integrated as a routine component of implementation activities. In VHA, a permanent PBI network coordinator position has been funded to oversee network operations. In DoD, the network has been established as a permanent infrastructure to enable ongoing implementation.

There are several important limitations of this study. The lack of a control condition means that changes in the use of outcome questionnaires cannot be attributed to the implementation strategies or to the establishment of the PBI network. We were unable to use the electronic health record either to verify the administration of measures or to remove the need for provider report altogether; updates to the VA electronic health record may make this possible in future work. The lack of random assignment to implementation strategies makes it impossible to draw conclusions about the relative effectiveness of the two strategies. Voluntary site participation means that results cannot be generalized to VA PTSD treatment settings more generally, or to other treatment systems. Measures of implementation were developed for the study and have not been validated. Larger claims about the utility of the PBI network, such as its ability to enable more rapid implementation of new practices and to reduce implementation costs, remain to be tested.

PBRNs have been established in VHA (e.g., Pomernacki et al.17) and elsewhere, especially in primary care settings.18 In a recent paper, Heintzman et al.19 suggested that practice-based research networks could be adapted to enable more effective implementation of practice change. Based on similar reasoning, this initiative established a mental health-focused practice-based implementation network that included VA and DoD PTSD treatment settings, and conducted a pilot evaluation of its feasibility and impact. Recruitment and sustained involvement of 18 VA (and 10 DoD) field sites were accomplished, and several aspects of PBI network operation were systematized, including the site recruitment process, the development of implementation planning documents, and the use of a web-based survey evaluation system. This work provides an initial demonstration of the feasibility and potential impact of PBI networks, which extend the PBRN model to focus on evaluating implementation rather than treatment effectiveness. The PBI network was useful both in enabling the study of barriers to and facilitators of innovation and in increasing the implementation of specific practices. More demonstrations of the feasibility of creating and maintaining such networks for a range of mental health and other health problems are required, as are more rigorous studies of network processes and effectiveness. More broadly, the PBI network approach should be seen as one core way of helping address a larger set of issues related to successful implementation,20 and it should be integrated and compared with other ways of accomplishing implementation.

Implications for Behavioral Health

To improve behavioral health services, it is necessary to develop systems that allow rapid and effective implementation of research-based and other emerging best practices. Although the relatively new field of implementation science is beginning to identify methods for implementation, health care systems are relatively unprepared to use these methods. This case study illustrates the development of a practice-based implementation network that can be used to improve the effectiveness of implementation as a routine part of health care system operations. Potentially, this kind of network can be established in existing behavioral health service delivery systems to enable effective process improvement and system change.

References

1. Shafran R, Clark DM, Fairburn CG, et al. Mind the gap: Improving the dissemination of CBT. Behaviour Research and Therapy. 2009;47(11):902–909. doi:10.1016/j.brat.2009.07.003

2. Damschroder LJ, Aron DC, Keith RE, et al. Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science. 2009;4(1):50. doi:10.1186/1748-5908-4-50

3. Brownson RC, Colditz GA, Proctor EK, eds. Dissemination and Implementation Research in Health: Translating Science to Practice. New York: Oxford University Press; 2012.

4. Institute of Medicine (IOM). Treatment for Posttraumatic Stress Disorder in Military and Veteran Populations: Final Assessment. Washington, DC: The National Academies Press; 2014.

5. Powell BJ, Waltz TJ, Chinman MJ, et al. A refined compilation of implementation strategies: Results from the expert recommendations for implementing change (ERIC) project. Implementation Science. 2015;10:21.

6. Kirchner JE, Ritchie MJ, Pitcock JA, et al. Outcomes of a partnered facilitation strategy to implement primary care–mental health. Journal of General Internal Medicine. 2014;29(S4):904–912. doi:10.1007/s11606-014-3027-2

7. Kirchner JE, Ritchie MJ, Dollar KM, et al. Implementation Facilitation Training Manual: Using External and Internal Facilitation to Improve Care in the Veterans Health Administration (Version 1). n.d. http://www.queri.research.va.gov/tools/implementation/Facilitation-Manual.pdf. Accessed January 5, 2017.

8. Chinman MJ, Hannah G, Wandersman A, et al. Developing a community science research agenda for building community capacity for effective preventive interventions. American Journal of Community Psychology. 2005;35:143–157. doi:10.1007/s10464-005-3390-6

9. Blevins CA, Weathers FW, Davis MT, et al. The posttraumatic stress disorder checklist for DSM-5 (PCL-5): Development and initial psychometric evaluation. Journal of Traumatic Stress. 2015;28:489–498. doi:10.1002/jts.22059

10. Kitson AL, Rycroft-Malone J, Harvey G, et al. Evaluating the successful implementation of evidence into practice using the PARiHS framework: Theoretical and practical challenges. Implementation Science. 2008;3(1):1. doi:10.1186/1748-5908-3-1

11. Kroenke K, Spitzer RL, Williams JBW. The PHQ-9. Journal of General Internal Medicine. 2001;16(9):606–613. doi:10.1046/j.1525-1497.2001.016009606.x

12. Cacciola JS, Alterman AI, DePhilippis D, et al. Development and initial evaluation of the brief addiction monitor (BAM). Journal of Substance Abuse Treatment. 2013;44(3):256–263.

13. Kirchner J, Ritchie M, Curran C, et al. Facilitation: Designing, using, and evaluating a facilitation strategy. Presented at: CIPRS-EIS 2011; September 15, 2011.

14. Landes SJ, Carlson EB, Ruzek JI, et al. Provider-driven development of a measurement feedback system to enhance measurement-based care in VA mental health. Cognitive and Behavioral Practice. 2015;22(1):87–100.

15. Jensen-Doss A, Hawley KM. Understanding clinicians' diagnostic practices: Attitudes toward the utility of diagnosis and standardized diagnostic tools. Administration and Policy in Mental Health and Mental Health Services Research. 2011;38(6):476–485. doi:10.1007/s10488-011-0334-3

16. Helfrich CD, Li Y-F, Sharp ND, et al. Organizational readiness to change assessment (ORCA): Development of an instrument based on the promoting action on research in health services (PARIHS) framework. Implementation Science. 2009;4(1):38. doi:10.1186/1748-5908-4-38

17. Pomernacki A, Carney DV, Kimerling R, et al. Lessons from initiating the first Veterans Health Administration (VA) women's health practice-based research network (WH-PBRN) study. Journal of the American Board of Family Medicine. 2015;2:649–657.

18. Green LA, Hickner J. A short history of primary care practice-based research networks: From concept to essential research laboratories. Journal of the American Board of Family Medicine. 2006;19:1–10.

19. Heintzman J, Gold R, Krist A, et al. Practice-based research networks (PBRNs) are promising laboratories for conducting dissemination and implementation research. Journal of the American Board of Family Medicine. 2014;27(6):759–762. doi:10.3122/jabfm.2014.06.140092

20. Ruzek JI, Landes SJ. Implementation of best practices for management of PTSD and other trauma-related problems. In: Handbook of PTSD: Science and Practice (2nd Edition). New York: Guilford Press; 2014:628–654.

Funding

This study was funded by the Department of Defense/Veterans Affairs Joint Incentive Fund, grant number JIF13159.

Ethics declarations

Study evaluation procedures were approved by the local VA hospital research and development committee and the university-affiliated institutional review board.

Conflict of Interest

The authors declare that they have no conflicts of interest.

Disclaimer

The results described are based on data analyzed by the authors and do not represent the views of the Department of Veterans Affairs (VA), the Department of Defense (DoD), or the United States Government.

About this article

Cite this article

Ruzek, J.I., Landes, S.J., McGee-Vincent, P. et al. Creating a Practice-Based Implementation Network: Facilitating Practice Change Across Health Care Systems. J Behav Health Serv Res 47, 449–463 (2020). https://doi.org/10.1007/s11414-020-09696-3

DOI: https://doi.org/10.1007/s11414-020-09696-3