Abstract

Peer feedback is known to have positive effects on knowledge improvement in collaborative learning environments. Owing to technological affordances, class-wide peer feedback can be garnered on a wider scale in networked learning environments. However, more empirical studies are needed to explore the effects of the type and depth of feedback on knowledge improvement. In this mixed-methods study, 38 students participated in a computer-supported collaborative learning (CSCL) lesson in an authentic classroom environment. Both quantitative and qualitative analyses were conducted on the collected data. Pre- and post-test comparisons showed that students’ conceptual knowledge of adaptations improved significantly after the CSCL lesson. Qualitative analysis was conducted to examine how knowledge changed before and after the peer feedback process. The results showed that class-wide intergroup peer feedback helped learners improve the quality of their conceptual knowledge once cognitive capacity at the group level had reached its maximum. Peer comments that sought further clarification or offered suggestions prompted deeper conceptual understanding, leading to knowledge improvement. However, these types of feedback were cognitively more demanding to process. The implications of the type of peer feedback for knowledge improvement and the practical implications of the findings for authentic classroom environments are discussed.

Introduction

Peer feedback is an important component of collaborative learning and is widely known to benefit students’ learning (Zong et al., 2021). Among the many pedagogical approaches for promoting collaborative knowledge improvement, peer feedback is often deemed an important collaborative learning process with the potential to influence learning (Hattie & Timperley, 2007; Kollar & Fischer, 2010). Involvement in peer feedback benefits learners in their work improvement process when they are given the opportunity to observe and compare their work with that of their peers during the commenting process (Chang et al., 2012a, b). Besides learning from observing and commenting on the work of their peers, engaging in peer feedback also benefits learning and the improvement of ideas through acting upon the received comments (Chen et al., 2022).

There are generally two roles in a peer feedback loop: the giver and the receiver. By providing feedback on the work of their peers, students participate in the learning process and thus achieve greater understanding and appreciation of the experiences and perspectives of their peers (Van Popta et al., 2017). Thus, through the peer feedback process, not only do the comments aid the receivers in knowledge improvement, but the givers also advance their own cognitive development (Chen et al., 2021). While peer feedback is frequently practiced in the classroom, class-wide peer feedback at the group level is uncommon (Chen et al., 2021). Research would therefore benefit from further insights into methods for leveraging the cognitive capacity of the entire class to elevate processes of knowledge improvement.

Not all peer feedback is successful in promoting knowledge construction (Van Popta et al., 2017). The type of feedback and the depth of elaboration play important roles in helping the receiver construct knowledge. In primary and secondary school classrooms, more empirical studies are needed to understand how the type and depth of feedback might affect students, and whether peer feedback benefits the provider’s own learning as well as that of their peers (Hovardas et al., 2014). Research on peer feedback has shown that elaborated feedback leads to higher learning outcomes than verification or simple feedback (Tan & Chen, 2022; Van der Kleij et al., 2015). However, Zong et al. (2021) pointed to the need to investigate further the effect of lengthy elaborated feedback on the receiving end. In understanding elaborated feedback, researchers have argued that specific feedback is more effective than generalized feedback (Goodman et al., 2004; Park et al., 2019). Beyond varying in specificity, feedback can be further classified as confirmatory, suggestive, or corrective (Topping, 2009). These differences could have a differential impact on the receiver and differential effects on knowledge improvement. Since providing feedback promotes knowledge improvement (Cheng et al., 2015), it would benefit the Computer-Supported Collaborative Learning (CSCL) community to better understand what type of feedback and what depth of elaboration are required to influence conceptual knowledge improvement, so as to inform future lesson design and research. As for the scope of peer feedback, Chen et al. (2021) posited that class-wide feedback benefits collaborative groups in knowledge improvement more than peer learning at the group level.
Hence, more empirical studies are needed to explore learning within the context of coarser grained units, such as the classes that house the collaborating groups (Kirschner & Erkens, 2013).

In this study, we aimed to contribute to the peer feedback research field in the CSCL environment by examining the mechanism and effect of class-wide peer feedback on conceptual knowledge improvement. Through a deeper analysis of the feedback type and depth of elaboration in a class-wide peer feedback environment, we illustrate what type of peer feedback matters and how it impacts knowledge improvement. The following research questions were crafted:

1. Does class-wide peer feedback benefit conceptual knowledge improvement and lead to improved learning outcomes?
2. What types of feedback and what depth of elaboration support conceptual knowledge improvement?
3. How do types of feedback and different levels of depth of elaboration support conceptual knowledge improvement during the CSCL process?

Literature review

Class-wide peer feedback in the collaborative learning environment

Peer feedback can be implemented at the class-wide level or at the group level. However, hosting a class-wide collaborative learning activity between groups within an authentic classroom is not common (Chen et al., 2021), because such activities are difficult to implement: individuals and groups tend to engage in dysfunctional social processes, which can be stressful for the teacher and distracting to the students (Borge & Mercier, 2019). Common issues, such as free riding and unequal levels of collaborative effort, may not be easily addressed (Hämäläinen & Arvaja, 2009). Therefore, teachers often adopt a divide-and-conquer approach to avoid such situations (Barron, 2003). However, such a strategy does not lead to the co-construction of knowledge, and the essence of collaboration is lost. Thus, ensuring that students deepen their knowledge in a class-wide CSCL environment is a difficult task (Jeong et al., 2019).

Chen et al. (2021) proposed a collaboration script, the Spiral Model of Collaborative Knowledge Improvement (SMCKI), to support the facilitation of collaborative learning processes with the inclusion of a class-wide collaborative learning phase. In the SMCKI script, individual members of each group first commence with their own ideation before coming together to synergize their ideas. This preparatory individual work helps to address the problem of silent participants, as it enables each member to prepare for group collaboration. While most collaborative learning activities end with a group product, the SMCKI script proposes sharing knowledge at the class-wide level, where each member of each group engages in a class-wide intergroup peer critique process. This phase maximizes the opportunity for class-wide knowledge sharing, as multiple perspectives from students outside the group can be garnered for knowledge improvement during the feedback process. In the subsequent refinement phase, each group scrutinizes the feedback received. Discussion takes place within each group with a focus on reviewing the feedback. When refinements are made based on the feedback, group ideas can be elevated to a higher level, as these perspectives propel further knowledge improvement. With a class-wide intergroup peer critique phase placed between two intragroup collaboration phases, class-wide collaboration maximizes learning opportunities among peers within the CSCL process. This setting combines the benefits of class-wide and small-group collaborative learning environments, which could yield results that surpass those of the usual group-level collaboration activities.
Furthermore, within the intergroup peer critique session, individual group members are accountable for critiquing assigned groups before returning to their home group to consolidate learning and review the feedback given by members of other groups. This protocol helps to address some frequently reported issues in the CSCL environment. Chen et al. (2021) found that this class-wide peer critique positively impacts collaborative knowledge improvement. The intergroup peer critique promotes a deeper understanding of the concepts during the feedback process. When peers come together to review the feedback from members of other groups, reviewing alternative solutions and resolving conflicts leads to productive CSCL processes and outcomes. The resulting positive interdependence (Prins et al., 2005) is important for enabling students to work and coordinate with others towards knowledge improvement. Therefore, this study builds on this class-wide intergroup peer feedback process within the CSCL environment, with the goal of further examining the mechanism and effect of different peer feedback types on knowledge improvement.

Feedback types and the depth of elaboration

Research has shown that different types of feedback and their depth of elaboration have different effects on knowledge improvement in a CSCL environment (Tan & Chen, 2022). Lam and Habil (2020) reviewed research studies involving peer feedback in computer-supported environments from 2015 to 2019 and found a wide range of classifications of general feedback types, including providing suggestions, giving praise, providing criticism, questioning intentions, or offering feedback. Other classifications of peer feedback (e.g., Cheng et al., 2015; Huisman et al., 2018; Lu & Law, 2012) identified common feedback types, such as supporting, questioning, direct correction, or providing suggestions. Another way of classifying feedback type is the Ladder of Feedback, developed by Daniel Wilson and Heidi Goodrich Andrade of Harvard Project Zero. The types of feedback recommended in the Ladder of Feedback are clarify, value, concerns, and suggest. As the protocol depicts, the sequence in which feedback is provided is important for the feedback to support learning for both the giver and the receiver. The feedback type “clarify” allows the giver to seek clarification of aspects of the work that they may not understand. If there is nothing to clarify, the giver can praise the work, which is an affirmation of the content. Next, the giver can raise concerns or issues, such as problems detected or challenges related to conceptual understanding. Finally, at the top of the ladder, the giver can offer suggestions for addressing the concerns raised. The Ladder of Feedback thus formalizes a procedure that is structured to help students build their feedback comments effectively (Lara et al., 2016).
Hence, more research studies are recommended to explore the different types of feedback in order to further expand understanding of the ways such formalized procedures might enable subsequent improvement (Fong et al., 2021).

When feedback is given, it should be relevant to the content of the work, and thus feedback comments should be specific in explaining the aspects of the work under review (Lu & Law, 2012). Being specific implies that elaborations are given for correction, confirmation, justification, questioning, or suggesting, rather than a simple acknowledgement (Alqassab et al., 2019). Elaborated feedback is important because it fosters deep learning of the target information (Finn et al., 2018). In fact, elaborated feedback is crucial for supporting knowledge improvement (Tan & Chen, 2022). Therefore, we examine each feedback type together with its depth of elaboration to provide a deeper understanding of the mechanism and effect of feedback on knowledge improvement.

Peer feedback loop

Peer feedback is a formative assessment tool (Tai et al., 2016). The process of assessing the work and giving feedback enables the giver to make judgments about the quality of their own work and that of their peers (McConlogue, 2020). In other words, feedback is not just about how the feedback can bring about improvement at the receiving end, but it benefits the metacognitive development of the giver as well (Tan & Chen, 2022).

In a feedback loop, the process that begins with giving feedback should end with closing the feedback loop. Closing the feedback loop is critical to ensure that the feedback achieves its intended objective (Carless, 2019). The focus of peer feedback is not merely on giving comments but also on the given feedback being actionable. Students ought to appreciate that they are active partners in feedback processes who respond to feedback, not just passive recipients of it (Carless, 2022). However, if every piece of feedback receives only a note of appreciation in response, learning might be shallow owing to the low cognitive impact. For feedback to be powerful in its effect, there must be a specific learning context to which the feedback is addressed (Hattie & Timperley, 2007).

While a number of studies have analyzed different types of feedback from the perspective of students’ perceptions of their peer feedback experience and the impact of peer feedback on learning performance (Gielen et al., 2010; Huisman et al., 2018; Lam & Habil, 2020; Latifi et al., 2021; Wu & Schunn, 2021), relatively little research delves into the way students integrate the given peer feedback into their revised work. Essentially, if the received peer feedback is of good quality, it should enhance the quality of the revised work (Gielen & De Wever, 2015). That is, information becomes effective feedback only when students are able to act on it to improve their work or learning strategies (Carless & Boud, 2018).

Responding to feedback is important, as the response reflects the effectiveness of the given feedback (Carless, 2022). In general, the success of feedback largely depends on how specifically it addresses the context (Carless et al., 2011; Hattie & Timperley, 2007; Shute, 2008). For feedback to be effective, it should contain specifics that identify and localize the problem(s), provide solutions to the identified problem(s), or provide suggestions for further improvement (Lu & Law, 2012; Patchan et al., 2016; Wu & Schunn, 2021). If the received feedback lacks such features, the receiver tends to neglect rather than implement it (Patchan et al., 2016; Wu & Schunn, 2020b). By examining how feedback is integrated into subsequent work, we are able to offer valuable insights into the efficacy of peer feedback (Lam & Habil, 2020). Hence, this study examines the underlying mechanism and effect of feedback type and depth of elaboration, with a specific focus on knowledge improvement in the CSCL environment.

Conceptual knowledge improvement during a CSCL peer feedback environment

Understanding the process of knowledge change is one essential goal in education (Rittle-Johnson et al., 2001). The process of collaborative learning potentially leads to gains in conceptual knowledge (Tolmie et al., 2010). In fact, several past studies have claimed that collective wisdom garnered in a collaborative learning environment leads to improved learning outcomes (Chen et al., 2021; Papadopoulos et al., 2013). Conceptual knowledge improvement can be examined at the individual or at the group level. In this study, we attempt to provide a deep analysis of the process of conceptual knowledge improvement in a class-wide environment, thus illustrating the mechanism and its effects both at the individual and group level.

Collaborative learning environments provide learning experiences that could go further than the simple acquisition of facts through the mere transfer of knowledge (Renkl et al., 1996). In an authentic classroom with many groups of students working together, collaborative learning becomes more complex and challenging (Chen et al., 2021). It is not easy to have students achieve a deepening of knowledge in class-wide collaboration practices (Jeong et al., 2019). Hence, different pedagogical models and strategies have been developed to enhance the process of collaborative learning and knowledge improvement within collaborative learning settings (Chen et al., 2021). In this study, we leverage the SMCKI collaboration script to examine how the process of class-wide peer feedback enables collaborative knowledge improvement and impacts conceptual knowledge improvement at the individual and group level.

The concept of collaborative knowledge improvement originates from the notion of Rapid Collaborative Knowledge Improvement (RCKI) proposed by Looi et al. (2010). RCKI specifies a CSCL environment where knowledge improvement is situated within the short period of a class session. It “seeks to harness the collective intelligence of the group to learn faster, envision new possibilities, and to reveal latent knowledge in a dynamic live setting, characterized by rapid cycles of knowledge building activities in a face-to-face setting” (Looi et al., 2010, p. 26). Knowledge improvement differs from knowledge building. When knowledge is constructed under a limited time constraint, the community’s efforts in social processes are aimed at improvement (Scardamalia & Bereiter, 1994), as opposed to collaborative knowledge building, which may not be achieved within the short duration of a class session (Wen et al., 2011).

One promising avenue to promote collaborative knowledge improvement in a class-wide CSCL environment is to leverage peer feedback (Tan & Chen, 2022). When peer feedback can be garnered at the class-wide level, the collective intelligence harnessed promotes higher gains than synergizing perspectives at the group level alone. Zong et al. (2021) observed that the depth, rather than the quantity, of peer feedback is strongly associated with higher gains in task performance. In a class-wide peer feedback environment, any ambiguity in a piece of feedback can be built upon by another student in the class. When peers build upon each other’s feedback, the quality of the feedback given deepens. Moreover, if students receive similar feedback on the work being examined, the repetition further affirms the content of the feedback.

As mentioned earlier, it is important for the given feedback to be specific and constructive in order for it to bring about knowledge improvement (Misiejuk et al., 2021). In addition, collaborative knowledge improvement must promote learning for collaborative learning to achieve its intended objectives. If the feedback receiver were merely to copy the suggestions, this process may neither bring about knowledge improvement nor promote learning. In contrast, when the receiving peer is able to act upon the given feedback by revising and improving their work, the learning process promotes knowledge improvement (Chen et al., 2022). That is, when students review the feedback by discovering gaps, attempting corrections, or adopting recommended suggestions for improvement based on the given criteria, they improve their knowledge related to the learning goals (Farrokhnia et al., 2019; Latifi et al., 2021; Lizzio & Wilson, 2008).

Since acting upon feedback to refine work is an important process for conceptual knowledge improvement, there is a need to explore the different types of feedback and examine how these comments help with work improvement (Misiejuk et al., 2021). Therefore, this study was designed to examine the mechanism and effect of how different feedback types and levels of elaboration depth impact knowledge improvement in the CSCL environment.

Method

An exploratory case study was conducted to explore whether class-wide peer feedback benefited conceptual knowledge improvement in an authentic science class. Specifically, both quantitative and qualitative data analyses were conducted on the types and depth of feedback in order to examine how they benefited students’ knowledge improvement, leading to improved learning outcomes based on the post-test results.

Participants and learning context

Thirty-eight sixth-grade students participated in this study. They were between 11 and 12 years old at the time of the study. Convenience sampling was adopted in the selection of the participants. In particular, these students had prior experience with the CSCL platform, the AppleTree system (see Section "The CSCL environment"); hence, no further training was needed. The science teacher who conducted this lesson had taught these students for more than a year. At the time of the study, she had more than 20 years of experience teaching primary science in the mainstream school environment of Singapore. The intervention took place during curriculum time, and the lesson was part of the curriculum.

Before the collaborative learning lesson, the teacher grouped the students into five groups of three students and six groups of four students according to the class seating plan. Due to the COVID-19 pandemic and mandatory safe-distancing measures, the students could only interact with peers while maintaining a safe distance. The students had known each other for more than a year and had experience working in groups, but not in this group setting. It was also their first time working under these COVID-19 safe-distancing measures in the computer lab.

The task given to all the students in this study was to design an organism (animal or plant) that would be able to adapt to a certain habitat under a list of prescribed conditions, with the additional constraint that there were three adaptations the organism should not have. The design of this task took into consideration the scientific conceptual knowledge the students were expected to possess at the time of the study and their expected level of critical thinking skills, the development of which is a requirement of the Singapore primary school curriculum. The cognitive ability to balance reasoning is an important aspect of the twenty-first century cognitive skills that students ought to develop.

The research procedure

The entire CSCL session conducted in the computer lab was facilitated by the science teacher. To help the students recall their experience with the collaborative pedagogical procedure and AppleTree system, the teacher demonstrated the features of the system to the students before lesson commencement.

The pre-test was conducted prior to the intervention, and the post-test immediately after it. The pre- and post-test items were taken from the topical worksheet that is given to students in the cohort to test their conceptual understanding after learning each topic; the worksheet was developed by teachers from the science department. IBM SPSS Statistics 26 was used to analyze the validity and reliability of the test items. Descriptive statistics, the Kaiser–Meyer–Olkin (KMO) measure, Bartlett’s test, and Cronbach’s alpha were computed. Only data with valid values for all variables of the scale were analyzed. The Cronbach’s alpha coefficient of the pre-test was 0.733, indicating good internal consistency. The KMO value of the pre-test was 0.783, and Bartlett’s test of sphericity was significant (p < 0.001), indicating that the test is of high validity.
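For readers less familiar with the reliability statistic reported above, the following is a minimal sketch of Cronbach’s alpha computed from a students-by-items score matrix, using the standard formula alpha = k/(k − 1) × (1 − Σ item variances / variance of total scores). It is an illustration only: the study itself used SPSS, and the matrices in the usage note are toy data, not the worksheet scores. It assumes listwise deletion has already been applied, mirroring the rule that only complete cases were analyzed.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a students x items score matrix.

    Uses sample variances (n - 1 denominator) for both the item and total scores.
    Assumes every row is complete (listwise deletion done beforehand).
    """
    k = len(scores[0])  # number of items
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Variance of each item (column) across students.
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    # Variance of each student's total score across all items.
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)
```

For example, two identical item columns such as `[[1, 1], [2, 2], [3, 3]]` give an alpha of 1.0, while `[[1, 2], [2, 1], [3, 3]]` gives 2/3, showing how weaker agreement between items lowers the coefficient.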

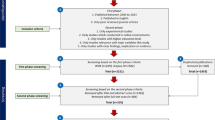

Dillenbourg et al. (2010) acknowledged that there could be many constraints when implementing CSCL in an authentic setting. Time is one of them, particularly when a lesson is conducted in the school computer lab: the students must be walked from the classroom to the lab, and the teacher has to allocate at least 5–10 min for them to settle into their seats and log in to the computers using their student IDs. To meet the objective of the research and align with the authentic classroom context, the following procedure was conducted over two separate lessons of 60 min each. Figure 1 shows the procedure of the study, with approximately 50 min allocated for each lesson. As shown in Fig. 1, the procedure of lesson one is depicted by the green boxes, and that of lesson two by the blue boxes. The CSCL lesson procedures took place on the AppleTree system; how this system supports online collaborative work on the imaginary organism design is elaborated in the next section.

The CSCL environment

The AppleTree system (Chen et al., 2023) is a CSCL platform developed by a research team from the National Institute of Education, Nanyang Technological University, Singapore. Supported by the collaboration script, the Spiral Model of Collaborative Knowledge Improvement (SMCKI) (Chen et al., 2021), the AppleTree system features four phase switches that align with the phases of the SMCKI script: Ideation and Synergy, Peer Critique, Refinement, and Achievement. These switches ease the facilitation of the CSCL processes. When the teacher switches from one phase to the next, the students are directed to perform the task within that phase; e.g., during the Peer Critique phase, students cannot work on their own group workspace and can only access the workspaces of other groups to provide peer feedback. In addition, the graph-based workspace of the AppleTree system allows the students to present their ideas as a concept map. Figure 2 shows the graph-based workspace of the AppleTree system using G01 (group one) as an example.
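The phase switches can be read as a class-wide state that determines what each student may do with each group workspace. The sketch below models that idea under stated assumptions: the `Phase` enum, the `allowed_actions` function, and the action names are hypothetical and do not describe AppleTree’s actual implementation. Only the Peer Critique rule (own workspace hidden, other groups open for comments) and the return to the group page in Refinement come from the text; the Ideation and Synergy rule is an assumption, and the Achievement phase is left unmodelled because it was not used in this study.

```python
from enum import Enum


class Phase(Enum):
    IDEATION_AND_SYNERGY = "Ideation and Synergy"
    PEER_CRITIQUE = "Peer Critique"
    REFINEMENT = "Refinement"
    ACHIEVEMENT = "Achievement"


def allowed_actions(phase: Phase, is_own_group: bool) -> set[str]:
    """Hypothetical access rules a phase switch could enforce on a group workspace."""
    if phase is Phase.PEER_CRITIQUE:
        # Own workspace is hidden; other groups' workspaces are open for comments only.
        return set() if is_own_group else {"view", "comment"}
    if phase in (Phase.IDEATION_AND_SYNERGY, Phase.REFINEMENT):
        # Students build (and later refine) only their own group's concept map.
        return {"view", "edit"} if is_own_group else set()
    # Achievement (individual) phase: omitted in this study, so not modelled here.
    return set()
```

With this design, the teacher’s switch only changes one shared `Phase` value, and every client derives its permissions from that value, which is one simple way to guarantee that a group cannot quietly keep editing its own product during peer critique.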

As the focus of this study is on the group performance arising from peer feedback, only the first three collaborative learning phases were implemented. The final individual Achievement phase of the AppleTree system was omitted; instead, individual performance at the end of the CSCL lesson was measured through the post-test worksheet.

In this study, the students commenced with the Ideation and Synergy phase. During this phase, the members of each group first created their own ideas regarding the imaginary organism, with the supporting adaptations and the adaptations that the organism should not have. As shown in Fig. 2, each organism design takes the form of a concept map with the name of the imaginary organism as the root node. The evidence bubbles that branch out are the supporting adaptations (green arrows) and opposing adaptations (red arrows). The four concept maps shown in the bird’s-eye view (bottom-left square of Fig. 2) are the outcomes of the individual ideation phase. This ideation phase allows each group member to share their ideas before the group discussion takes place. Once the group members completed their ideation, they commenced their intragroup discussion, bringing their organism designs together into one idea that represented the group’s best idea. The expanded view of the shared workspace shows the integrated concept map. As shown in Fig. 2, the AppleTree system automatically tagged each bubble with the student’s identity code. (Note: All names shown in the figures are pseudonyms.)
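The concept map described above can be represented as a root node (the organism’s name) with evidence bubbles, each marked as supporting or opposing and tagged with its author’s identity code. The sketch below is a hypothetical, simplified data structure (one level of bubbles under the root) for illustration; the class names, field names, and stance labels are assumptions, not AppleTree’s data model. Because every bubble carries an author code, per-student contributions to the integrated group map can be tallied directly.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Bubble:
    text: str
    stance: str  # "supporting" (green arrow) or "opposing" (red arrow)
    author: str  # student identity code tagged by the system


@dataclass
class ConceptMap:
    organism: str  # root node: the imaginary organism's name
    bubbles: list[Bubble] = field(default_factory=list)

    def add(self, text: str, stance: str, author: str) -> None:
        if stance not in ("supporting", "opposing"):
            raise ValueError(f"unknown stance: {stance}")
        self.bubbles.append(Bubble(text, stance, author))

    def stance_counts(self) -> Counter:
        # How many supporting vs. opposing adaptations the design contains.
        return Counter(b.stance for b in self.bubbles)

    def contributions(self) -> Counter:
        # Per-student authorship, readable because every bubble carries an author code.
        return Counter(b.author for b in self.bubbles)
```

A usage example with invented content: `m = ConceptMap("Sand Glider")`, then `m.add("Wide feet spread weight on sand", "supporting", "S02")` and `m.add("Thick fur", "opposing", "S02")`; `m.stance_counts()` then reports one bubble of each stance.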

The Peer Critique phase follows the Ideation and Synergy phase. During this phase, the students are not able to view or edit their own group’s workspace. A drop-down box (see the Switch Group box in Fig. 2) appears, allowing the students to select from the list a group to which they will provide peer feedback. This feature prevents groups from using the peer feedback phase to work on their own group product.

Figure 3 shows the Peer Critique window. The students can click on any of the content bubbles, whereupon a pop-up window appears in which they can enter their comments.

Students can click on one of three opinion buttons represented by emoticons: “Smiling face,” which represents agreement; “Neutral face,” which represents a neutral opinion; and “Sad face,” which represents disagreement. After an opinion is selected, a mandatory “Explain why” text box prompts the students to elaborate on the reason for their opinion. This comment box, headed “Explain why,” helps elicit constructive and elaborated feedback. There is no limit to the number of comments each bubble can receive.

It is important to ensure that students provide constructive comments. In this study, the students were given a peer critique rubric (Fig. 4) to refer to before the Peer Critique phase took place. This rubric aimed to help students provide targeted and constructive feedback to the receiving groups. Upon completion of the Peer Critique phase, the teacher switches to the Refinement phase, in which the students are routed back to their group page to view and respond to the critiques given by the other groups.

One feature of the AppleTree system is the ability to provide anonymous feedback: the students do not see the names of the peers who provided the comments, which are visible only to the teacher. This feature is intended to reduce the tension associated with providing honest feedback; without it, students might avoid giving critical yet constructive feedback in order to maintain positive relations, which is a critical consideration in Asian cultures. In this study, the teacher reminded the students of this feature before the peer critique process began.
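The comment mechanics described in this section (three opinion emoticons, a mandatory “Explain why” entry, no cap on comments per bubble, and critic names visible only to the teacher) can be summarized in a small data model. The sketch below is hypothetical: `Opinion`, `PeerComment`, `student_view`, and `comments_by_bubble` are illustrative names, not part of the AppleTree system. It rejects an emoticon without an explanation and produces an anonymous rendering for the receiving group.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Opinion(Enum):
    AGREE = "Smiling face"
    NEUTRAL = "Neutral face"
    DISAGREE = "Sad face"


@dataclass(frozen=True)
class PeerComment:
    bubble_id: str
    critic_id: str  # known to the teacher, hidden from students
    opinion: Opinion
    explain_why: str

    def __post_init__(self):
        # Mirrors the mandatory "Explain why" entry: an emoticon alone is rejected.
        if not self.explain_why.strip():
            raise ValueError('"Explain why" must not be empty')

    def student_view(self) -> dict:
        # Anonymous rendering shown to the receiving group: no critic identity.
        return {"bubble": self.bubble_id, "opinion": self.opinion.value,
                "explain_why": self.explain_why}


# No cap on comments per bubble: each bubble maps to an unbounded list.
comments_by_bubble: dict[str, list[PeerComment]] = defaultdict(list)
```

Keeping the critic’s code on the record while excluding it from `student_view` reflects the design choice in the text: the teacher can still trace who wrote what, while receivers judge the comment on its content alone.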

Data collection

The artifacts produced by the students were collected and examined as part of the analysis used to answer the three research questions. These artifacts include the pre-test and post-test, which were the hard copy worksheets that all 38 students completed during curriculum time. The artifacts from the AppleTree system included the concept maps of the 11 groups before and after the peer feedback and all the peer comments during the peer critique phase.

The organism design concept map data were collected by downloading AppleTree system Report A. This report captures all the process logs of the posts generated during the collaborative learning phases. A total of 1600 process logs were generated over the individual ideation, group synergy, and group refinement phases. Each process log was tagged with the student’s identity code and a timestamp, both automatically captured by the AppleTree system. The peer feedback data were collected by downloading Report B through the AppleTree system. A total of 416 peer comments were generated during the intergroup peer feedback phase. As in Report A, each peer comment log was tagged with the student’s identity code and a timestamp. Each row in this report refers to one peer comment contributed by one student.
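Because each row of Report B is one peer comment by one student, simple tallies (comments per contributor or per receiving group) follow directly from the export. The sketch below assumes a CSV export with hypothetical column names (`student_id`, `timestamp`, `target_group`, `comment`); the real report header may differ, and the sample rows in the test are invented. Summing either tally should reproduce the total row count (416 in this study), which is a useful sanity check on a download.

```python
import csv
import io
from collections import Counter


def tally(report_csv: str, column: str) -> Counter:
    """Count Report B rows (one peer comment each) by the value in `column`.

    Column names are hypothetical; adjust them to the actual export header.
    """
    reader = csv.DictReader(io.StringIO(report_csv))
    return Counter(row[column] for row in reader)
```

For example, `tally(text, "student_id")` gives comments contributed per student, and `tally(text, "target_group")` gives comments received per group, if the export carries such a column.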

Data analysis

This study adopted both quantitative and qualitative data analysis techniques to answer the research questions. To answer the first research question, a paired samples t-test was conducted on the pre- and post-test scores to examine the impact of the class-wide peer feedback at the individual level. The students' pre- and post-test worksheets were collected and scored using the mark scheme provided by the teacher. This analysis aimed to examine whether the conceptual knowledge improvement during the CSCL process translated into improved learning outcomes.

Subsequently, content analysis was conducted on the concept map of the organism design for each group before and after the peer feedback based on the organism design coding scheme (Table 1). This analysis aimed to examine the conceptual knowledge improvement of each group after the peer feedback process.

To address the second research question, on how the type of peer feedback and its depth of elaboration support conceptual knowledge improvement, each peer comment was coded by feedback type and by depth of elaboration (see Tables 2, 3). In addition, the response rate within each feedback type and each depth of elaboration was analyzed to examine whether the type and level of elaboration of a peer comment affected whether it elicited a response.
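The results below report two related proportions: the response rate within a category (for example, the percentage of Value comments that were acted upon) and the share of all acted-upon comments that fall in a category (for example, the percentage of responded comments at each elaboration level). The following minimal sketch, assuming each coded comment is stored as a hypothetical record with `type`, `level`, and `responded` fields (the field names and sample records are illustrative, not the study's data), shows how the two differ.

```python
from collections import Counter


def response_rates(comments, key):
    """Proportion of comments in each category (e.g. feedback type) that were acted upon."""
    totals = Counter(c[key] for c in comments)
    acted = Counter(c[key] for c in comments if c["responded"])
    return {k: acted[k] / totals[k] for k in totals}


def share_of_responded(comments, key):
    """Distribution of categories among only the comments that were acted upon."""
    acted = Counter(c[key] for c in comments if c["responded"])
    n = sum(acted.values())
    return {k: v / n for k, v in acted.items()} if n else {}


# Hypothetical coded comments, for illustration only (not the study's data).
comments = [
    {"type": "Clarity", "level": "E1", "responded": True},
    {"type": "Clarity", "level": "V", "responded": False},
    {"type": "Value", "level": "V", "responded": False},
    {"type": "Suggestion", "level": "E1", "responded": True},
]
print(response_rates(comments, "type"))
print(share_of_responded(comments, "level"))
```

Shares of responded comments always sum to one across categories, whereas response rates do not, so the two should not be read interchangeably.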

Finally, to answer the third research question on how the different feedback types and elaboration depth supported different groups in their conceptual knowledge improvement, a statistical analysis was conducted at the group level to identify the groups with the highest and lowest improvement gains for deeper analysis.

Further content analysis was conducted on the two selected groups to examine how the types of peer feedback and their depth of elaboration contributed to the differentiated improvements. The content analysis scrutinized the types of feedback received and which types led to a feedback response, as defined by the peer feedback response coding scheme (Table 4). An epistemic network analysis (ENA) was conducted on the coded data to examine how the different feedback types affected these two groups. ENA was carried out with the free web-based tool https://www.epistemicnetwork.org/, which allows researchers to identify and quantify the connections among elements in coded data and represent them in dynamic network models.

ENA is a quantitative ethnographic technique for modeling the interconnected structure within the features of data. ENA assumes the following: (1) that it is possible to systematically identify a set of meaningful features in the data (codes); (2) that the data have local structure (conversations); and (3) that codes are connected to one another within conversations (Shaffer & Ruis, 2017; Shaffer et al., 2016). ENA models the connections between codes by quantifying the co-occurrence of codes within conversations, producing a weighted network of co-occurrences, along with associated visualizations for each unit of analysis in the data. Compared with the traditional coding-and-counting approach, ENA provides deeper insights into the socio-cognitive learning activities of students (Csanadi et al., 2018). Critically, ENA goes beyond statistical results, as it can show the relationships between feedback types and the resulting feedback actions. The actions taken in response to the feedback, either adopting the suggestions to refine the work or revising the existing content, can be visualized and analyzed statistically via the network models.
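To make the co-occurrence idea concrete, the following is a simplified sketch, not the implementation behind epistemicnetwork.org. It assumes a hypothetical code list and treats each whole conversation as the co-occurrence window, whereas ENA typically uses a moving stanza window and then projects the normalized networks into a low-dimensional space (a step omitted here). Each pair of codes present in the same conversation adds one to that connection, the accumulated network is normalized to unit length, and two units can be compared by subtracting their networks, which is the idea behind a comparison model.

```python
import math
from itertools import combinations

# Hypothetical code list: three feedback types and two response codes.
CODES = ["C", "Va", "S", "KA", "KR"]


def unit_network(conversations):
    """Accumulate binary code co-occurrences per conversation, then normalize.

    conversations: list of conversations; each conversation is a list of
    lines, and each line is the set of codes applied to it.
    """
    counts = {pair: 0 for pair in combinations(CODES, 2)}
    for conversation in conversations:
        present = set().union(*conversation)  # codes appearing anywhere in it
        for a, b in combinations(CODES, 2):
            if a in present and b in present:
                counts[(a, b)] += 1
    length = math.sqrt(sum(v * v for v in counts.values()))
    return {pair: (v / length if length else 0.0) for pair, v in counts.items()}


def difference(net_a, net_b):
    """Comparison model: positive weights are stronger in unit A, negative in unit B."""
    return {pair: net_a[pair] - net_b[pair] for pair in net_a}


# Hypothetical unit: two conversations link C with KR, one links S with KA.
net = unit_network([[{"C"}, {"KR"}], [{"C", "KR"}], [{"S"}, {"KA"}]])
print(net[("C", "KR")], net[("S", "KA")])
```

Normalizing each unit's network lets units with different amounts of talk be compared by the relative strength of their connections rather than by raw counts.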

To substantiate the results of ENA, a qualitative uptake analysis was conducted to show how the feedback was acted upon. The purpose of this analysis is to conceptualize, represent, and analyze distributed technology-mediated interactions in order to uncover or characterize the organization of interaction in the records of events (Suthers et al., 2010). The concept of uptake is based on traces of how students take up or build on prior contributions to form new or revised input (Suthers, 2006). Uptake can also be understood as a contingency, which is an observed relationship between events evidencing how one event may have enabled or been influenced by other events (Suthers et al., 2010). By applying this qualitative analysis, we could therefore illustrate the traces that reveal how students took up the peer comments to improve their concept maps, leading to higher quality work. This analysis goes beyond the quantitative results, as it aims to reveal deeper meanings of the interactions by identifying contingencies between events.

To conduct the uptake analysis, the historical log files of the AppleTree system were collected. The log records capture the timestamp of each edit made by each student, which allowed the interactions to be abstracted and the influences among the students' uptake activities to be examined. By tracing these influences, the events within the refinement process could be examined to understand how knowledge improved.

The unit of analysis for the uptake analysis in this paper is one bubble or one peer comment from the AppleTree system.
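Because each log record carries a timestamp and the bubble it concerns, candidate contingencies can be pre-selected mechanically before the qualitative judgment is made. The sketch below assumes a hypothetical log format (the `LogEvent` fields and event kinds are illustrative, not the AppleTree report schema) and links each edit to every earlier comment on the same bubble. Whether a linked comment actually influenced the edit still has to be decided by the analyst, as in the uptake graph.

```python
from dataclasses import dataclass


@dataclass
class LogEvent:
    time: int    # seconds from session start (hypothetical field)
    user: str    # student identity code
    kind: str    # "comment" or "edit" (hypothetical event types)
    bubble: str  # id of the bubble the event targets


def candidate_uptakes(events):
    """Link each edit to every earlier comment on the same bubble."""
    comments = [e for e in events if e.kind == "comment"]
    links = []
    for edit in (e for e in events if e.kind == "edit"):
        for comment in comments:
            if comment.bubble == edit.bubble and comment.time < edit.time:
                links.append((comment, edit))
    return links


# Hypothetical log: two comments precede an edit on b1; the b2 comment comes after its edit.
events = [
    LogEvent(10, "u7", "comment", "b1"),
    LogEvent(20, "u8", "comment", "b1"),
    LogEvent(30, "u9", "edit", "b1"),
    LogEvent(40, "u9", "edit", "b2"),
    LogEvent(50, "u7", "comment", "b2"),
]
for comment, edit in candidate_uptakes(events):
    print(comment.user, "->", edit.user, "on", edit.bubble)
```

Temporal precedence is only a necessary condition for uptake, so this filter narrows the search rather than establishing contingency.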

Coding of organism design based on scientific concepts

The unit of analysis of the organism design was a complete graphical concept map of the organism. The coding scheme to examine the quality of the organism design was adapted from Huang et al. (2018), which captures the students' use of scientific knowledge and practices as well as group dynamics factors during discussions. Only the scientific knowledge category was adopted, and its definition was further elaborated for this study. The scientific practice category was redefined to capture the extensiveness and depth of the adaptations used to describe the designed organism. The quality of the organism design in each category was measured on a scale from zero to four, with higher scores indicating higher quality. For the scientific knowledge category, each adaptation associated with the organism was coded according to the scale of the coding scheme, and the mean score was computed. For the scientific practice category, the entire concept map was assessed against the task requirements. Scientific knowledge thus concerns the content of the concept map, whereas scientific practice concerns its structure. The coder first scrutinized each concept map and rated its scientific practice, then coded the content within the map for the depth of scientific knowledge. To ensure the reliability of the ratings, an interrater reliability analysis was conducted: two independent coders, the first author and another trained coder, coded all the organism designs using the coding scheme (Table 1). Cronbach's alpha was 0.82 for scientific knowledge and 0.724 for scientific practice across the 11 groups. These results indicated substantial agreement between the coders.
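When Cronbach's alpha is used for interrater reliability, each coder's ratings are treated as one "item" and each group as one case: alpha = k/(k - 1) × (1 - Σ item variances / variance of the summed ratings), with k coders. The following minimal sketch uses hypothetical ratings (not the study's scores) for two coders rating four groups.

```python
import statistics


def cronbach_alpha(*ratings):
    """Cronbach's alpha treating each coder's ratings as one item.

    ratings: one sequence per coder, each with one score per group.
    """
    k = len(ratings)
    if k < 2 or len({len(r) for r in ratings}) != 1:
        raise ValueError("need at least two coders rating the same groups")
    item_var = sum(statistics.variance(r) for r in ratings)
    totals = [sum(scores) for scores in zip(*ratings)]  # summed rating per group
    return k / (k - 1) * (1 - item_var / statistics.variance(totals))


# Hypothetical ratings on the 0-4 scale for four groups.
coder_a = [1, 2, 3, 4]
coder_b = [2, 1, 4, 3]
print(cronbach_alpha(coder_a, coder_b))
```

Alpha reflects how consistently the coders rank the groups; unlike an exact-agreement index, it does not penalize one coder being uniformly stricter than the other.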

Coding of formative peer feedback

The coding scheme for the feedback was adapted from the ladder of feedback by Perkins (2003). This feedback protocol structures the feedback comments and promotes effective peer feedback between students (Salmon, 2010). The four types of feedback based on Perkins (2003) are Clarity, Value, Suggestion, and Questions and Concern. By coding the feedback comments using these feedback types, we were able to examine in detail how each feedback type promotes knowledge construction.

As the definitions of the feedback types Clarity and Questions and Concern are relatively similar, the latter was subsumed into the feedback type Clarity for ease of classification during the coding process. The unit of analysis is one peer feedback comment. During the coding process, it was found that one comment may contain different peer feedback types. For example, the peer comment "Describe why it needs moist skin. I have a question: Is moist skin linked to permeable skin?" has two parts: the first part, "Describe why it needs moist skin," is an affirmation of the posted content "it should have moist skin"; the second part, "I have a question: Is moist skin linked to permeable skin?", seeks clarity about the posted content. Instead of segmenting the peer comment into sentences of different feedback types, three additional secondary feedback types, which concatenate the primary feedback types (e.g., Value and Clarity, Value and Suggestion), were created to code the feedback comments with finer precision. In the given example, the feedback comment is coded as Value and Clarity. After all the peer comments were scrutinized and coded according to the concatenation of the primary feedback types, the final concatenated feedback types were formulated as presented in Table 2. This concatenation is important because the receiver of the peer feedback views each peer comment as one unit, and the uptake of the peer feedback is based on the entire unit. This precision is crucial to furthering our understanding of the types of feedback that could lead to knowledge improvement.
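The concatenation rule can be stated as a small function: after mapping Questions and Concern onto Clarity, the primary types found in one comment are joined into a single code. The ordering constant below is an assumption chosen so that the output reproduces the label names used in this paper (e.g., "Value and Clarity", "Clarity and Suggestion"); the function itself is an illustrative sketch, not the coders' tool.

```python
# Order used when joining primary types; chosen to reproduce the label names in the text.
PRIMARY_ORDER = ("Value", "Clarity", "Suggestion")
# "Questions and Concern" was subsumed into Clarity during coding.
SUBSUMED = {"Questions and Concern": "Clarity"}


def feedback_label(types_in_comment):
    """Combine the primary feedback types found in one comment into a single code."""
    found = {SUBSUMED.get(t, t) for t in types_in_comment}
    if not found:
        raise ValueError("comment has no feedback type")
    unknown = found - set(PRIMARY_ORDER)
    if unknown:
        raise ValueError(f"unexpected feedback types: {sorted(unknown)}")
    return " and ".join(t for t in PRIMARY_ORDER if t in found)


# The moist-skin example: an affirmation (Value) plus a clarifying question.
print(feedback_label({"Clarity", "Value"}))
```

Only three two-type combinations are reported in this study; the function would also produce a three-type label if such a comment occurred, which the coding scheme does not define.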

Besides coding each peer comment based on the type of feedback, each peer comment was also coded based on its level of elaboration (specificity). According to De Sixte et al. (2020), a verification comment refers to a simple judgment to indicate if the content is correct. In contrast, elaborated feedback provides scaffolding and good examples to assist the receiving party in better understanding the issue (Meyer et al., 2010). The depth of elaboration coding scheme defined for this study has three levels of elaboration. The simplest level is a verification for support (e.g., That’s COOL!), acknowledgement (e.g., good), or just a question (e.g., why?) without any keywords that are related to any scientific knowledge. The second level is an opinion with at least one reason related to scientific knowledge with elaboration (e.g., How does this help it to escape? You may want to explain whether it confuses or blinds its prey or predator), and the third level is an opinion with more than one reason related to scientific knowledge with elaboration (e.g., what does it help them do? Tear flesh? Catch prey? Open seeds? And are they carnivores, omnivores, or herbivores?). Table 3 illustrates the coding scheme for each level of elaboration and its definitions.
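Once a coder has judged how many reasons related to scientific knowledge a comment contains, the depth-of-elaboration code follows mechanically. The short sketch below makes the boundary explicit: "at least one reason" in level two means exactly one, because more than one reason moves the comment to level three. The codes V, E1, and E2 are those used in the results; the function is illustrative only, and the count itself remains a human judgment.

```python
def elaboration_level(n_science_reasons: int) -> str:
    """Map the coder's count of science-related reasons to a depth-of-elaboration code.

    V  = verification, acknowledgement, or bare question (no science-related reason)
    E1 = opinion with one reason related to scientific knowledge
    E2 = opinion with more than one such reason
    """
    if n_science_reasons < 0:
        raise ValueError("count cannot be negative")
    if n_science_reasons == 0:
        return "V"
    return "E1" if n_science_reasons == 1 else "E2"


# "That's COOL!" -> 0 reasons; the escape/blinding comment -> 1; the teeth comment -> several.
print([elaboration_level(n) for n in (0, 1, 4)])
```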

As the codes for peer comments are categorical variables, Cohen's kappa was calculated to examine the intercoder reliability. The first author trained two other coders to code the peer comments of six groups, with each trained coder independently coding three groups. The Cohen's kappa values for the six feedback-type codes were 0.84 for Clarity, 0.97 for Value, 0.56 for Suggestion, 0.56 for Clarity and Suggestion, 0.67 for Value and Clarity, and 1 for Value and Suggestion. The overall kappa was 0.704, suggesting satisfactory agreement among the three coders; De Wever et al. (2006) argued that Cohen's kappa values between 0.4 and 0.75 represent fair to good agreement beyond chance.
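Cohen's kappa corrects the observed proportion of agreement p_o for the agreement p_e expected by chance from each coder's label frequencies: kappa = (p_o - p_e) / (1 - p_e). The sketch below uses hypothetical labels. How the per-code kappas above were computed is not stated; binarizing each code (present or absent) before applying the same formula, as in `per_code_kappa`, is one plausible approach and is an assumption here.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Chance-corrected agreement between two coders' categorical labels."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(coder_a)
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    p_e = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if p_e == 1:  # both coders used one identical category throughout; kappa undefined
        return 1.0
    return (p_o - p_e) / (1 - p_e)


def per_code_kappa(coder_a, coder_b, code):
    """Kappa for one code after binarizing labels to present/absent (assumed procedure)."""
    return cohens_kappa([x == code for x in coder_a], [x == code for x in coder_b])


# Hypothetical labels for four comments.
a = ["Clarity", "Clarity", "Value", "Value"]
b = ["Clarity", "Clarity", "Value", "Clarity"]
print(cohens_kappa(a, b), per_code_kappa(a, b, "Value"))
```

Here the coders agree on three of four comments (p_o = 0.75), but half of that agreement is expected by chance (p_e = 0.5), giving kappa = 0.5.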

To close the feedback loop, each comment was examined to determine whether revisions had been made in relation to it, based on semantic match. The coder scrutinized the refined content against the peer comment to determine whether the revision was a knowledge adoption or a knowledge response. A knowledge adoption was identified when semantic content from the feedback comment was recognizably acted upon within the revised content. A knowledge response was identified when the content and underlying conceptual knowledge were revised but no content from the feedback was visibly present in the revised content. For example, the words "makes it colder" from the peer comment "This adaptation is actually applicable since sweating makes it colder so you can add it in your evidence" were included in the content "do not sweat as much," with the final refinement reading "do not sweat as much as sweating makes it colder." This was coded as knowledge adoption, since there is an exact semantic match of "makes it colder" in the revised content. In contrast, the addition of "so that it can breathe underwater" to the content "it should have moist skin," prompted by the peer comments "Describe why it needs moist skin. I have a question: Is moist skin linked to permeable skin?" and "describe why it needs moist skin? to breathe?", was coded as knowledge response rather than knowledge adoption, as the revised content did not contain an exact match of any phrase from the feedback. Any revision in which no content was changed was classified as a format edit, and No Response refers to no revision being made. Table 4 shows the four types of response and their respective definitions. This coding was used for the ENA. Because the interrater agreement scores for the coded data were acceptable, the researcher's coded results were used for reporting in this paper.
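The four response codes were assigned by human judgment of semantic match. As a rough, hypothetical approximation of "exact visible presence of content from the feedback," the sketch below checks whether any word trigram newly added in the revision also occurs in a received comment. The trigram threshold and tokenization are assumptions, not the authors' procedure, and a heuristic like this would at most pre-sort cases for a coder to verify.

```python
import re


def tokens(text):
    """Lowercase word tokens, ignoring punctuation."""
    return re.findall(r"[a-z']+", text.lower())


def ngrams(words, n=3):
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def classify_response(before, after, comments, n=3):
    """Approximate the four response codes for one bubble (heuristic sketch)."""
    if after is None:
        return "no response"
    old, new = tokens(before), tokens(after)
    if new == old:
        return "format edit"  # revised, but no wording changed
    added = ngrams(new, n) - ngrams(old, n)
    from_feedback = set().union(*(ngrams(tokens(c), n) for c in comments))
    return "knowledge adoption" if added & from_feedback else "knowledge response"


sweat = classify_response(
    "do not sweat as much",
    "do not sweat as much as sweating makes it colder",
    ["This adaptation is actually applicable since sweating makes it colder "
     "so you can add it in your evidence"],
)
skin = classify_response(
    "it should have moist skin",
    "it should have moist skin so that it can breathe underwater",
    ["Describe why it needs moist skin. I have a question: Is moist skin linked to permeable skin?",
     "describe why it needs moist skin? to breathe?"],
)
print(sweat, "|", skin)
```

On the two examples from the text, the heuristic agrees with the manual coding: the sweating revision shares "makes it colder" with its comment, while the moist-skin revision shares no trigram with either comment.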

Results

Pre-test and Post-test results

Figure 5 shows the results of the content analysis conducted on the concept map of the organism design for each group before and after the peer feedback based on the organism design coding scheme (Table 1). As shown in Fig. 5, there is substantial improvement to the total score of scientific knowledge and practice before and after feedback at the class level, shown by the large difference in means for both the scientific knowledge (MD = 1.08) and the scientific practice (MD = 1.37).

The total score difference (TD) for each of the adaptation concepts (movement in water, TD = 5.5; breathing underwater, TD = 7.0; obtaining light, TD = 7.5) is shown in Fig. 6. This result shows a large improvement between the conceptual knowledge score before and after the feedback phase.

Besides the conceptual knowledge improvement measured at the group level, the results of the paired t-test indicated a significant difference between the pre-test (M = 15.2, SD = 4.2) and the post-test (M = 21.8, SD = 3.3), t(2) = 11.1, p < 0.01, for the three adaptation concepts tested. This difference between the pre-test and post-test averages reflects conceptual knowledge improvement at the individual level. The observed effect size d is 6.41, a large effect.
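For a paired design, t = mean(D) / (SD(D) / √n) and the effect size d_z = mean(D) / SD(D), where D are the post-minus-pre differences and df = n - 1. The reported df of 2 therefore implies three paired observations, and the reported values are consistent with d_z: 11.1 / √3 ≈ 6.41. The following minimal sketch uses only the standard library and hypothetical scores (not the study's data); a p-value additionally requires the t distribution, for example via `scipy.stats.ttest_rel` if SciPy is available.

```python
import math
import statistics


def paired_t(pre, post):
    """Return (t, df, d_z) for paired scores, using post - pre differences."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need at least two paired observations")
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)  # sample SD of the differences
    t = mean_d / (sd_d / math.sqrt(n))
    d_z = mean_d / sd_d
    return t, n - 1, d_z


def d_z_from_t(t, n):
    """Recover d_z from a reported paired t statistic and number of pairs."""
    return t / math.sqrt(n)


# Hypothetical pre/post scores for three paired units.
t, df, d = paired_t([10, 12, 14], [15, 18, 19])
print(round(t, 2), df, round(d, 2))
print(round(d_z_from_t(11.1, 3), 2))  # check against the reported t(2) = 11.1
```

Because d_z divides by the SD of the differences rather than of the raw scores, it can be very large when, as here, the gains are consistent across only a few paired units.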

Peer feedback types and its response rate

Based on the content analysis, a total of 319 peer comments were generated by the 38 students within the 20 min duration. The high number of peer comments given (M = 8) and the range of peer feedback given by each group are shown in the sociogram in Fig. 7.

Out of the 319 peer comments generated, 62% were acted upon, with refinements made to the group concept map. Figure 8 shows the types of peer comments that were responded to with refinements to the concept map. These comments contributed to the refinement of the concept maps, which led to improved scores for scientific knowledge and practice. As shown in Fig. 8, the highest uptake rates were for the Value and Suggestion and the Suggestion feedback types (75%), followed by Clarity and Suggestion (74%) and Clarity (69%). The feedback type least acted upon was Value (13%). However, when Value feedback was coupled with Clarity comments, the rate at which it was acted upon rose considerably (Value and Clarity = 43%), and when Value was coupled with Suggestion, it yielded the highest rate (Value and Suggestion = 75%).

From the perspective of giving peer comments, the most frequently given comments were of the Clarity feedback type (N = 208), followed by Value (N = 78) and Suggestion (N = 59). The Clarity feedback type, which comprises peer comments that seek clarification and ask questions, also had the highest number of responded comments (N = 158). Besides the primary feedback types, the students gave a substantial number of secondary feedback types. Among these, Clarity and Suggestion was the most frequent (N = 31), compared with Value and Clarity (N = 7) and Value and Suggestion (N = 8). The results show that feedback types with suggestions (Suggestion, Value & Suggestion) yielded the highest response rates compared with the other feedback types.

Figure 9 shows the depth of elaboration of the total peer comments according to feedback type. The number of elaborated comments with at least one reason related to scientific knowledge is the highest (N = 313), followed by opinion and verifications only (N = 52), then by comments that have more than one reason related to scientific knowledge (N = 26).

Figure 10 illustrates the three levels of elaboration within each responded feedback type. Among the responded comments, elaborated comments with more than one reason related to scientific knowledge accounted for the lowest proportion (E2 = 7%), followed by comments with no elaboration, consisting of verification and opinion only (V = 13%). Elaborated comments with at least one reason related to scientific knowledge accounted for the highest proportion (E1 = 80%).

Group level knowledge advancement

To identify groups for illustrating how the different feedback types and depths of elaboration supported conceptual knowledge improvement, the feedback types and elaboration depths of the different groups, together with their conceptual knowledge improvement before and after peer feedback, were analyzed, as shown in Fig. 11. The group that improved the most was G03, and the group with no improvement was G09. Hence, these two groups were selected for in-depth analysis of the conceptual knowledge improvement process.

Figure 12 shows the ENA comparison model of G03 and G09. The red dotted lines show the network model associated with G03, while the blue dotted lines show the network model associated with G09. The conversations are defined as all lines (solid lines) of data associated with a single value of Group.ID, subset by feedback type and feedback response. The feedback type codes, shown as black round dots, are Clarity (C), Value (Va), Suggestion (S), Clarity and Suggestion (C.S), Value and Clarity (Va.C), and Value and Suggestion (Va.S). The feedback response codes, also shown as black round dots, are knowledge adopted, knowledge response, no response, and format edit. The larger the dot, the greater the number of connections between the feedback type and the feedback responses. The conversation data lines refer to the association between a user and the feedback type. The members of each group are represented by red dots (G03) and blue dots (G09), and the square boxes represent the two groups; each group connects all its members and their conversations. We defined the units of analysis as all lines of data associated with a single value of Group.ID, subset by the feedback types (e.g., Va = Value, S = Suggestion, C = Clarity) and the acted-upon code (KR = knowledge response or KA = knowledge adopted). For example, one unit consisted of all the lines associated with feedback type C and its feedback response KR.

As shown in Fig. 12, for G03 a thin red line connects the Suggestion feedback type with knowledge response, and a thicker red line connects the Clarity feedback type with knowledge response. The thicker line illustrates a stronger connection between Clarity feedback and knowledge response than the weaker connection between Suggestion feedback and knowledge response. For G09, two thin blue lines connect knowledge response with the Value and Clarity and the Clarity and Suggestion feedback types. These thin lines illustrate weak connections between the feedback types and the feedback response.

From the ENA, it was noted that the knowledge response and knowledge adopted codes for G03 were initiated by the feedback types Clarity, Suggestion, and Value and Suggestion. Although the line for knowledge adopted is faint, the Suggestion peer comment appeared to contribute the majority of the text in the revised content. For G09, the two lines connecting knowledge response with the Clarity and Suggestion and the Value and Clarity feedback types show that the peer comments given were acted upon, with revisions made to the existing content. Although the ENA showed that G09's members acted upon the peer feedback, their concept map score did not improve. Therefore, an uptake analysis was conducted on G09 to examine in more detail the responses behind the connections between knowledge response and the Value and Clarity and the Clarity and Suggestion feedback types.

The uptake analysis for G09 is illustrated by the uptake graph in Fig. 13, which describes the process of knowledge improvement before and after the peer feedback event. As shown in Fig. 13, the top segment of the uptake graph shows the content of the bubbles in the AppleTree system before the intergroup peer critique phase. The blue rectangle illustrates a claim (organism), and the yellow bubbles illustrate evidence (adaptations). The bottom segment shows the refinement in response to peer feedback, with the revised content shown in red. The label at the bottom of each rectangle indicates the user id and the timestamp. The middle segment shows all the peer feedback received by G09, and the label below each peer comment indicates the user id and the type of feedback. The dotted arrows represent intersubjective contingencies, where interactions involve other elements (e.g., editing other group members' bubbles or commenting on other groups' bubbles).

As shown in the bottom panel, there is only one refined content bubble despite the number of comments given. Based on the uptake analysis, the two comments that led to this refinement came from two different members of two separate groups. The Value and Clarity comment "Describe why it needs moist skin. I have a question: Is moist skin linked to permeable skin?" given by a member of G07 and the Clarity and Suggestion comment "describe why it needs moist skin? To breathe?" given by a member of G08 led to the refinement of "it should have moist skin" with the added reason "so that it can breathe underwater," answering the "why" question. This refinement is consistent with the Knowledge Response connections for G09 in the ENA. However, the refinement was not substantial and thus did not improve the concept map score. The No Response dot connected to the various feedback types in Fig. 12 coincides with the absence of uptake of those peer feedback comments in the uptake graph.

Discussion and limitations

Discussion

This study examined how class-wide peer feedback benefited conceptual knowledge improvement and learning outcomes in a primary school environment. The networked environment maximized the learning opportunities during the peer feedback process for both the feedback givers and the receivers. The findings show that the class-wide peer comments contributed by the givers supported the receiving group in improving their science conceptual understanding, leading to knowledge improvement.

Results from the first research question show that the diverse perspectives contributed during the class-wide peer feedback led to knowledge improvement. The concept map scores before and after the peer feedback showed an improvement in the mean scores for both the content knowledge and the structure of the concept maps. This result suggests that the class-wide peer feedback benefited conceptual knowledge improvement. The improved quality of the concept maps after the peer feedback could be attributed to two sources. Firstly, when the students gave feedback on the concept maps of other groups, they could have gained knowledge while scrutinizing those maps in preparation for commenting. Secondly, when the students viewed multiple perspectives through the comments from members of other groups, the insights gained could bring about new conceptual knowledge. When the students acted upon the peer comments received, the resulting improvements to their concept maps could also enable conceptual knowledge improvement. Besides the improvements measured at the group level, results from the pre- and post-tests indicate that the class-wide peer feedback benefited the students' conceptual understanding of adaptations, improving their learning outcomes at the individual level. The paired t-test indicates that the difference between the pre-test and post-test averages is statistically significant. The observed effect size d of 6.41 indicates that the mean pre-post difference is large relative to the variability of the differences, illustrating its practical significance.

Research studies have advocated that collaborative learning benefits conceptual change in science learning and leads to higher learning outcomes (Khosa & Volet, 2014; Tao & Gunstone, 1999). The findings for research question one cohere with those of Khosa and Volet (2014): the collaborative learning activity provided opportunities for students to grasp complex knowledge that is difficult to grapple with at the individual or group level. Specifically, the results illustrate that class-wide peer feedback between group collaborative learning sessions could support both groups and individuals in improving their conceptual knowledge.

Results from the second research question demonstrate that different peer feedback types and depths of elaboration had differential effects on whether the feedback was acted upon. The Value and Suggestion feedback type elicited the highest response rate, followed by the single feedback type Suggestion, then Clarity and Suggestion, and finally Clarity. The more ready adoption of Suggestion feedback could indicate that this type is easier to act upon than other feedback types. Within a limited timeframe for refining the concept maps, the students might select feedback that is easier to act upon, since doing so could involve simply incorporating the suggestion into the concept map or making minor refinements. In contrast, Clarity feedback, which asks clarification questions or seeks clarity about the work being reviewed, may require the receiver to reflect on the question before deriving a solution. This process may demand more cognitive effort and time, which could explain why Suggestion feedback was more readily responded to. When Clarity and Suggestion are combined, the feedback giver might have offered a possible solution to the question raised, so such feedback was also frequently responded to.

Based on the results, the adopted suggestions helped improve the quality of the concept maps. This could imply that the peer comments given were targeted and helpful for improvement, echoing Ma (2020), who found that critical peer comments, including suggestions, are linked to the quality of the final work. The results for research question two also show that the Clarity feedback type was frequently responded to, although less so than the Suggestion feedback type. Moore and Teather (2013) found that students can reflect more critically on their work when they receive constructive feedback such as requests for clarification and suggestions. However, Wu and Schunn (2020a, b) noted that some students may have difficulty identifying the issues raised. Hence, when clarifications were sought and students were confronted with many different perspectives, they may have needed more time to think through the questions before refining their work. If acting upon comments that seek clarification is cognitively more demanding, this could be another reason why the Suggestion feedback type was more readily adopted than the Clarity feedback type. Since the refinement phase in this study lasted only 20 min, time constraints could also have limited the number of feedback comments responded to.

There was also a high number of elaborated comments with at least one reason related to scientific knowledge among the analyzed feedback comments, and these comments received the most responses. This could suggest that overly elaborated comments may be too cognitively demanding to process, and that an appropriate level of elaboration was sufficient to support students in knowledge improvement. Based on the results, the Clarity feedback type benefited knowledge improvement the most, followed by the Suggestion feedback type. Moreover, Clarity feedback was well acted upon regardless of its level of elaboration. Regarding depth of elaboration, Tan and Chen (2022) found that elaborated comments support knowledge improvement, and giving elaborated feedback remains an area of concern in the feedback research field (Gielen & De Wever, 2015). The feedback rubric with question prompts and sentence starters made available to the students before the intergroup peer feedback could have encouraged elaborated comments and supported the learners in elaborating their opinions with supporting or opposing reasons. Providing elaborated comments is helpful for developing deeper understanding of conceptual knowledge, specifically for the Clarity feedback type, which cultivates critical thinking. This is consistent with the findings of Gielen and De Wever (2015), who concluded that a peer feedback template promotes critical feedback.

Responding to the given feedback enables receivers to take the giver's perspective. Furthermore, analyzing the feedback and exercising sound, discerning judgement before refining the group concept map could lead to deeper understanding of the scientific conceptual knowledge. The deliberate thinking involved in processing the given feedback enables the students to reflect critically, which promotes critical thinking (Ekahitanond, 2013).

Results from the third research question illustrate that the group with higher knowledge improvement acted upon feedback that sought clarity, revising its existing content rather than directly adopting the suggestions given. This might suggest that comments seeking clarification are a good trigger for knowledge improvement. This finding concurs with Wu and Schunn (2020a, b), who concluded that peer comments that identify problems are a significant predictor of subsequent improvement. Essentially, the strong connection between the Clarity feedback type and refinement of the product, together with the resulting conceptual knowledge improvement, suggests that Clarity feedback, which asks questions about areas of concern, supported problem identification that led to rectification of the problem. Although the connection between suggestion comments and adoption of the suggestions in the revised work is weak, this result could still indicate that giving suggestions has a positive effect in promoting knowledge improvement. These findings concur with Tan and Chen (2022) that Clarity and Suggestion feedback types are contributing factors that support knowledge improvement.

As for the group with lower knowledge improvement scores, results from the ENA show a weak connection between the Clarity feedback type and revisions made to the content of the group product. Drawing on Fan and Xu (2020), the low engagement with the given feedback could be due to the receivers not having sufficient knowledge capacity to act upon the peer feedback. Another possible reason is that the students did not have the capacity to manage too many comments within a limited time frame. When faced with an excessive number of different perspectives, the students may have considered only those comments that were within their cognitive ability to manage, and thus had to forgo seriously considering those they deemed impossible to act upon within the limited duration of the refinement phase. Despite the limited refinement and improvement, the uptake analysis demonstrates that intergroup peer feedback did bring about knowledge improvement. Where a group had reached its maximum cognitive capacity during the group synergy phase, intergroup peer feedback was able to promote further knowledge advancement, even if that advancement was not immediately substantial. The uptake analysis also showed that the Clarity feedback type best supports knowledge improvement, followed by the Suggestion feedback type. This pattern was consistent across groups with different cognitive abilities, suggesting that the determining factor in conceptual knowledge improvement could be the prior knowledge and cognitive capacity of group members. Further, when dealing with the Clarity and Suggestion feedback types, students need to leverage their existing knowledge and consider the comments given before the work can be refined. This process is indeed cognitively demanding, particularly for group members who may need more response time.
Regardless, conceptual knowledge improvement attributable to peer feedback is evident, as the refined ideas had not been observed before the peer feedback phase. This echoes the finding by Chen et al. (2021) that intergroup peer feedback is an important phase that supports conceptual knowledge improvement when cognitive capacity has reached its limit at the group level. In all, the results show that multiple perspectives from other groups could bring forth new insights that support knowledge improvement, regardless of differences in cognitive capacity.

The work presented in this study makes theoretical and practical contributions. While most researchers agree that collaborative learning benefits education, this study responds to calls for more empirical studies exploring the collaborative learning processes that lead to knowledge outcomes (Khosa & Volet, 2014; Van Leeuwen & Janssen, 2019). The class-wide peer feedback process promotes critical thinking and supports the development of metacognition as students reconsider their prior knowledge during the refinement process. This metacognitive process is important even if the peers’ suggestions are not accepted, as the comments could give the receivers something to think about and might prompt a different thought process (Topping, 2018). In essence, the detailed analysis in this study could contribute to further understanding of the structure and dynamics of metacognition emerging in CSCL environments (Garrison & Akyol, 2015). Furthermore, the evidence provided about students’ actions and uptake in response to the feedback received contributes to an important line of inquiry into the feedback loop (Carless & Boud, 2018).

The large effect size in this study suggests that the findings have practical significance. In authentic classrooms with large class sizes, implementing class-wide peer feedback is common yet challenging. Understanding how members within a collaborative group interconnect with one another for effective collaboration in a large class setting is crucial (Roschelle, 2013). Therefore, the results showing the effectiveness of this pedagogical approach provide further insights into its implementation in such networked collaborative learning environments. When implementing class-wide peer feedback in a CSCL environment, two practical considerations are group formation and differentiated time allocation. Amara et al. (2016) deemed the formation of effective learning groups to be one of the important factors that determine the efficiency of a CSCL process. In this study, the groups were formed according to the seating arrangement, as post-COVID-19 safety measures were still in place and large movements of students within the space were discouraged. In future classroom scenarios without such safety measures, the following alternative group formations could be considered. Recent research has shown that algorithmic group formation enhances collaborative learning outcomes and could help address the complexity and diversity of educational contexts (Li et al., 2022). While algorithm-based grouping in CSCL environments has shown encouraging results, it may not be easily implemented in an authentic setting. Another possible avenue would be to group members heterogeneously or homogeneously based on their pre-test results. Personal characteristics, learning behaviors, and the teacher’s understanding of each learner’s contextual prior knowledge could also inform group formation (Amara et al., 2016).
As for the time allocated to the refinement phase after peer feedback, the teacher could allow the groups to continue their refinement outside class by instructing all students in each group to respond to every feedback comment received, either by replying to the comment or by refining the group product. In this way, constructive feedback (e.g., the Clarity feedback type) that requires more thinking time to process would not be wasted and could benefit the receiving group’s knowledge improvement, both cognitively and in its outcome product.

Limitations

Despite the positive findings, this study has some limitations, which lead to recommendations for future studies. Firstly, the quantitative analysis of conceptual knowledge improvement is reported at the group level and does not consider its effect on individual knowledge improvement. Further studies could examine how the collaborative learning process influences individual knowledge improvement, especially since Chen et al. (2021) found that the more knowledgeable students within a group have a positive influence on group knowledge improvement.

Secondly, as there was no control group in this study, the effect of peer feedback on conceptual knowledge improvement could not be isolated. In the current CSCL setting, the large number of peer comments received (416 comments) within the short 20 min duration could be attributed to the affordances of technology. It is not clear whether the same amount and diversity of feedback could be gathered in a nontechnological collaborative learning environment. Therefore, further studies could be designed to yield more conclusive evidence. Comparing experimental and control groups with inferential statistics would better represent the findings relative to the population of interest.

Thirdly, the 20 min duration of the refinement phase could have restricted the responses to the peer comments. As mentioned earlier, the Clarity feedback type, which identifies problems or seeks clarification, may demand more cognitive effort than the Suggestion feedback type. Since different feedback types may demand different levels of cognitive effort, the groups may need more time to process feedback that seeks clarification or raises problems without offering suggestions. Hence, when designing a feedback process, careful consideration is needed to ensure that each group has sufficient time to process the feedback comments, so as to support effective group knowledge construction and maximize collaborative knowledge improvement. Hovardas et al. (2014) posited that groups could spend three times the amount of time reviewing expert feedback. Therefore, if a longer duration is accorded to the refinement phase, the response rate to the peer comments could increase as group members deliberate on and discuss the feedback comments and refine the product accordingly. A longer duration could also enable deeper discussion and higher-quality improvement of the final product.

Finally, the context of this study was science education in the primary school setting, which could limit the generalization of the results to other contexts. Therefore, further research studies could be conducted in different contexts to explore the benefit of class-wide peer feedback and the types of feedback that have higher uptake rates that lead to conceptual knowledge improvement.

Conclusions

This study shows that class-wide peer feedback benefited students’ conceptual knowledge improvement, which led to improved learning outcomes. The Clarity feedback type could promote deeper conceptual knowledge improvement, followed by the Suggestion feedback type. Conceptual knowledge improvement can be illustrated via knowledge adoption and knowledge response. The uptake of the Suggestion feedback type is usually associated with knowledge adoption, which is cognitively less demanding. Feedback that seeks clarification of the issues raised is commonly responded to with refinements to prior knowledge, leading to improvement in the group’s conceptual knowledge. In all, for feedback to be effective, the comments given ought to be acted upon. Hence, in a CSCL environment where peer feedback is part of the collaborative learning process, it is important to design the feedback loop carefully to reap the benefits of collaborative learning.

Data availability

Data are not publicly available to preserve individuals’ privacy under the Personal Data Protection Commission (PDPC) Singapore.

References

Alqassab, M., Strijbos, J. W., & Ufer, S. (2019). Preservice mathematics teachers’ beliefs about peer feedback, perceptions of their peer feedback message, and emotions as predictors of peer feedback accuracy and comprehension of the learning task. Assessment & Evaluation in Higher Education, 44(1), 139–154.

Amara, S., Macedo, J., Bendella, F., & Santos, A. (2016). Group formation in mobile computer supported collaborative learning contexts: A systematic literature review. Journal of Educational Technology & Society, 19(2), 258–273.

Barron, B. (2003). When smart groups fail. The Journal of the Learning Sciences, 12(3), 307–359.

Borge, M., & Mercier, E. (2019). Towards a micro-ecological approach to CSCL. International Journal of Computer-Supported Collaborative Learning, 14(2), 219–235.

Carless, D. (2019). Feedback loops and the longer-term: Towards feedback spirals. Assessment & Evaluation in Higher Education, 44(5), 705–714.

Carless, D. (2022). From teacher transmission of information to student feedback literacy: Activating the learner role in feedback processes. Active Learning in Higher Education, 23(2), 143–153.

Carless, D., & Boud, D. (2018). The development of student feedback literacy: Enabling uptake of feedback. Assessment & Evaluation in Higher Education, 43(8), 1315–1325.

Carless, D., Salter, D., Yang, M., & Lam, J. (2011). Developing sustainable feedback practices. Studies in Higher Education, 36(4), 395–407.

Chang, C.-C., Tseng, K.-H., & Lou, S.-J. (2012a). A comparative analysis of the consistency and difference among teacher-assessment, student self-assessment and peer-assessment in a web-based portfolio assessment environment for high school students. Computers & Education, 58(1), 303–320.

Chang, N., Watson, A. B., Bakerson, M. A., Williams, E. E., McGoron, F. X., & Spitzer, B. (2012b). Electronic feedback or handwritten feedback: What do undergraduate students prefer and why? Journal of Teaching and Learning with Technology, 1(1), 1–23.

Chen, W., Tan, J. S., & Pi, Z. (2021). The spiral model of collaborative knowledge improvement: An exploratory study of a networked collaborative classroom. International Journal of Computer-Supported Collaborative Learning, 16(1), 7–35.

Chen, W., Pi, Z., Tan, J. S., & Lyu, Q. (2022). Preparing pre-service teachers for instructional innovation with ICT via co-design practice. Australasian Journal of Educational Technology, 38(5), 133–145.

Chen, W., Tan, J. S., Zhang, S., Pi, Z., & Lyu, Q. (2023). AppleTree system for effective computer-supported collaborative argumentation: An exploratory study. Educational Technology Research and Development, 1–34. https://doi.org/10.1007/s11423-023-10258-5

Cheng, K.-H., Liang, J.-C., & Tsai, C.-C. (2015). Examining the role of feedback messages in undergraduate students’ writing performance during an online peer assessment activity. The Internet and Higher Education, 25, 78–84.

Csanadi, A., Eagan, B., Kollar, I., Shaffer, D. W., & Fischer, F. (2018). When coding-and-counting is not enough: Using epistemic network analysis (ENA) to analyze verbal data in CSCL research. International Journal of Computer-Supported Collaborative Learning, 13(4), 419–438.

De Sixte, R., Mañá, A., Ávila, V., & Sánchez, E. (2020). Warm elaborated feedback. Exploring its benefits on post-feedback behaviour. Educational Psychology, 40(9), 1094–1112.