Abstract

In a society that is calling for more productive modes of collaboration to address increasingly complex scientific and social issues, greater involvement of students in dialogue, and increased emphasis on collaborative discourse and argumentation, become essential modes of engagement and learning. This paper investigates the effects of facilitator-driven versus peer-driven prompts on perceived and objective consensus, perceived efficacy, team orientation, discomfort in group learning, and argumentation style in a computer-supported collaborative learning session using Interactive Management (IM). Eight groups of undergraduate students (N = 101) came together to discuss either critical thinking or collaborative learning. Participants in the facilitator-driven condition received task-related prompts from a facilitator throughout the process. In the peer-driven condition, the facilitator initially modelled the process of peer prompting, then coordinated participants as they engaged in peer prompting, before passing the process of prompting over to the participants themselves. During this final phase, participants provided each other with peer-to-peer prompts. Results indicated that participants in the peer-driven condition scored significantly higher on perceived consensus, perceived efficacy of the IM methodology, and team orientation, and significantly lower on discomfort in group learning. Furthermore, analysis of the dialogue using the Conversational Argument Coding Scheme revealed significant differences between conditions in the style of argumentation used, with those in the peer-driven condition exhibiting a greater range of argumentation codes. Results are discussed in light of theory and research on instructional support and facilitation in computer-supported collaborative learning.

Introduction

Studies of discourse and dialogue in classrooms have consistently reported that teacher talk dominates the conversation during lessons (Dillon, 1985; Edwards & Mercer, 1987; Lemke, 1990). A study by Newton et al. (1999) found that less than 5% of in-class time is allocated to group discussions, while less than 2% of teacher-student interactions involve meaningful discussion of ideas and productive exchange of views and opinions. Similarly, at university level, didacticism remains the common approach (Hogan, 2006). Traditional and dominant approaches to teaching are often slow to change. Classic and commonly used forms of discourse in education involve teacher initiation, student response, and teacher evaluation, known as IRE (Mehan, 1979), or IRF when the third step involves follow-up or feedback (Sinclair & Coulthard, 1975; Chin, 2006). These forms of interaction are widely regarded as teacher-dominated and have been found to be ineffective in fostering students’ collaborative dialogue (Alexander, 2004; Duschl & Osborne, 2002; Mercer & Littleton, 2007). Lemke (1990), for example, found that this form of dialogue results in limited learning outcomes, as teachers typically use classroom dialogue and exchange to cue simple fact recall and assess declarative knowledge. While classroom discourse is seen as an avenue for collaborative knowledge construction and meaning making, studies have shown that this is not always the case (Hardman & Abd-Kadir, 2010; Beauchamp & Kennewell, 2010; Lemke, 1990; Russell, 1983). Recent studies have reported that teacher-dominated discourse continues to be prevalent in classrooms and, more crucially, that this approach often limits opportunities for student involvement, access to different modes of communication, and purposeful practice in the use of language (Strayer, 2012; Alexander, 2004; Cazden, 2001; Nystrand et al., 2003).
Crucially, in a society which, in the face of many complex scientific and social problems, is calling for more productive modes of collaboration, the development of key critical, collaborative, and systems thinking skills becomes a highly significant educational outcome (Hogan, Hall, & Harney, 2017). As such, greater involvement of students in dialogue, and increased emphasis on various forms of collaborative discourse, become essential modes of engagement and learning.

A primary objective of classroom discourse is meaning-making in both teacher-student and student-student interactions (Gan, 2011). This process involves both students and teachers using language and discourse to think and reason about the topic at hand, a process succinctly described by Lemke (1990, p.1) as “talking science”. The term talking science refers broadly to talk characterized by active and engaged reasoning in relation to problems that are the focus of instruction: “Talking science means observing, hypothesizing, describing, comparing, classifying, and analyzing” (Lemke, 1990, p.1). It reflects a pattern of social exchange that involves the application of critical thinking and reflective judgment skills. Critical thinking is a process which, through the use of analysis, evaluation and inference, increases the chances of producing a logical conclusion to an argument (Hogan et al., 2015a). Reflective judgment is a process used in the context of critical thinking to make judgments and decisions in a reflective manner, in light of an awareness of the limits of knowledge and the various ways in which knowledge and understanding can be achieved (Dwyer et al., 2015). Cultivating these metacognitive processes is now seen as a top priority in university education, and this priority has highlighted the need to move beyond didacticism and teacher-driven discourse to more collaborative forms of discourse that include peer-to-peer learning (Hogan, 2006; Havnes, 2008). Furthermore, argumentation has been recognised as an essential skill, and considerable effort in educational research has been devoted to improving methods of supporting and teaching argumentation (Scheuer et al., 2010).
In particular, the field of computer-supported collaborative learning (CSCL) has sought to shed light on the process of argumentation and on how students can benefit from argumentation in collaborative learning contexts (Baker, 2003; Schwarz & Glassner, 2003; Andriessen, 2006; Stegmann, Weinberger, & Fischer, 2007; Muller Mirza, Tartas, Perret-Clermont, & de Pietro, 2007). Importantly, as with talking science, collaborative argumentation is viewed as a key way in which students can acquire critical and reflective thinking skills (Andriessen, 2006).

In order to encourage students to talk science, or to engage in classroom-based argumentation, teachers can use prompts or other forms of feedback to support students’ dialogue and critical, reflective thinking (Davis, 2003; Harney et al., 2015). For example, teachers can use the third step in the IRF to promote further dialogue by scaffolding students’ thoughts and ideas through elaborative feedback (Mortimer & Scott, 2003) or responsive questioning (Chin, 2006). By delivering feedback and prompts, the teacher may encourage students to actively participate in the co-construction of meaning by prompting elaborations, justifications, and challenges (Harney et al., 2015). While both feedback and prompts are routinely delivered by teachers, and although the power and potential of peer learning and assessment are increasingly recognized, there is still little empirical understanding of how feedback and prompts can be used to facilitate peer learning in collaborative learning settings. Therefore, in the current study, we examined the effects of facilitator-driven versus peer-driven prompts in the context of a collaborative problem-solving session.

Peer learning is a unique form of collaborative learning: a complex social process that may involve talking science and other key learning processes. Critical to educational practice in higher education is the development of lifelong learning skills (Prins et al., 2005), including the ability to provide feedback, suggestions, and advice to peers for performance improvement. While collaborative learning can be useful in developing lifelong teamwork skills (Beckman, 1990), it does not necessarily involve an explicit focus on prompting, feedback, and assessment amongst peers. In contrast, peer learning, as an approach to collaborative learning, promotes a participatory culture of learning (Kollar & Fischer, 2010), explicitly requiring peers to interact in a constructive manner, which often implies a more direct focus on peer prompting, feedback, and assessment.

The literature on peer learning includes a variety of terminologies, approaches and methodologies, including peer assessment (van Gennip et al., 2010), peer feedback (Gielen et al., 2010) and peer revision (Cho & MacArthur, 2010). Peer assessment refers to a combination of peer learning behaviours, for example, collaborative development of criteria for student success, peer discussion of learning and task completion strategies, and peer reading and feedback. Peer revision can include a subset of these learning behaviours, with a primary focus on revising previous work with the aim of improving its quality. Peer feedback includes behaviours which peers engage in to offer insight into, or input on, performance on a given task. Kollar and Fischer (2010) argue that while these various terminologies, approaches and methodologies of peer learning may refer to different sub-processes, the central focus of activity remains conceptually similar, reflecting one pedagogical approach that falls under the broader concept of peer learning.

Peer learning as a pedagogical tool

In university education contexts, many studies have found that formative assessment involving feedback on students’ work is critical for learning (Boud, 1990; Dierick & Dochy, 2001; Topping, 2003). Resource constraints in many universities have led to a reduction in the quantity and quality of feedback received by students (Gibbs & Simpson, 2004). Increased modularisation, for example, has generally reduced course delivery time, thereby reducing the number of assignments and available feedback cycles (Higgins et al., 2002). Furthermore, as class sizes increase, many courses have dropped formative assessment entirely, relying solely on exams as a measure of learning, whereas other courses that use continuous assessment rather than end-of-course exams provide feedback late in the term, often after exams have been completed (Gibbs & Simpson, 2004). One solution to the limited scope for instructor feedback is better use of in-class formative peer learning designed to facilitate and accelerate learning for individuals and groups. Formative assessment, when adapted for peer-to-peer interaction, highlights the potential for new forms of peer learning (Falchikov, 1995). Specifically, formative peer assessment involves the provision of qualitative comments in addition to (or instead of) marks or grades. The comments provided in this context are referred to as peer feedback (Gielen et al., 2010). Importantly, peer feedback provides mutual benefits. The learner is provided with a performance check, set against the criteria of the task, as well as feedback on strengths and weaknesses (Falchikov, 1995); at the same time, the peer providing feedback may learn by reviewing the work of their peer, observing different strategies or approaches to the task at hand, and internalising key learning criteria and standards used for assessment (Topping, 1998).

An examination of the empirical literature reveals that peer learning has been operationalised in a number of different ways, with both positive and negative effects on learning observed across studies. In a review of the literature, Boud, Cohen, and Sampson (2001) highlight five potential positive effects of peer assessment strategies: working with others; critical enquiry and reflection; communication and articulation of knowledge, understanding and skills; managing learning and how to learn; and self and peer assessment. However, Boud and colleagues also note that peer learning is typically used in an informal and ad hoc manner and that, until peer learning methodologies are formalised into curricula, as with other pedagogical approaches, results are likely to be mixed. This need for formalisation is echoed by Sluijsmans and van Merriënboer (2000), who, after analysing peer assessment skill in teacher education, conclude that peer learning must be integrated into regular course content and assessment if consistent learning benefits are to be observed.

Numerous studies have investigated the effects of peer feedback versus teacher-driven feedback (e.g., Cho & MacArthur, 2010; Prins et al., 2006; Yang et al., 2006). Each of these studies has found that peer learning provides additional benefits over conventional teacher-driven learning. Cho and MacArthur, for example, conducted a study investigating the effects of receiving feedback from a single expert (e.g., a teacher), a single peer, or multiple peers in the context of a written task. They found that students who received feedback from multiple peers improved the quality of their writing to a significantly greater degree than those who received feedback from an expert (Cohen’s d = 1.23), while there were no significant differences in quality improvement between the single-expert and single-peer conditions. The authors offer a number of possible explanations for this result. For example, while peers may not have the same extensive, elaborated content knowledge as experts, they may provide comments which are more accessible and understandable to other peers. As peers often experience the same difficulties, they may be more effective at detecting these difficulties from the learner’s perspective when reviewing their peers’ work. Furthermore, peers may be more effective in communicating both perceived difficulties and potential solutions, as they tend to use the same language as their peers, with less jargon. This claim is supported by research conducted by Cho et al. (2008), who asked technology users to evaluate written responses to technical questions raised by other users. These responses had been written either by experts or by other novice users. The reviewers, who believed all responses had been written by experts, rated as most useful the responses which were, in fact, written by novice users. As such, in some cases, peers may be better able to produce effective, accessible feedback.

While these research findings highlight the benefits of peer learning, significant gaps in the peer learning literature remain. For example, less research has examined the effects of peer feedback in computer-supported collaborative argumentation or computer-supported problem-solving, with the majority of studies investigating its effects on written tasks (e.g., Patchan et al., 2016; Novakovich, 2016) as opposed to discussion-based tasks. Furthermore, less is known about what kinds of instructional support are necessary to cultivate effective peer feedback, especially in the context of discourse-based tasks. Gan and Hattie (2014) used a graphic organiser to provide students with feedback prompts, which they could then deliver to their peers, in the context of a collaborative, written chemistry task. They found that the use of this graphic organiser resulted in a significant increase in the number of peer comments related to knowledge of errors, suggestions for improvement, and process-level feedback. Importantly, it is not enough to simply transfer results and insights about the effects of peer feedback on written tasks to discourse-based tasks. Just as findings from studies of feedback or prompting at the individual level cannot simply be translated to the group level (Gabelica et al., 2012), written and discourse-based tasks may require different levels of peer interaction, and different supports for that interaction, if successful outcomes are to be achieved. As such, the current study used an adapted version of Hattie and Gan’s graphic organiser in the context of a CSCL problem-solving task, thereby extending the use of this peer feedback tool into a new context. Specifically, the current study sought to examine whether the positive effects of peer feedback observed in the context of collaborative written tasks can be extended to collaborative, discourse-based, problem-solving tasks.

Cultivating peer learning skills

It is imperative that peer learning, assessment, and prompting processes are managed to ensure consistency of positive learning outcomes; that is, through the implementation of clearly defined criteria derived from effective evidence-based practices. It is also important to encourage students to develop the skills to provide effective peer feedback, a process which may initially require guidance and instruction from the teacher or facilitator. In this context, the facilitator is providing metafeedback, which empowers students to engage in peer learning processes (Prins et al., 2005). However, teachers cannot be expected to intuitively understand and design the delivery of guidance and instruction for peer learning in various scenarios, and further research is needed to support the development of teacher practice in this regard. While research in the area of peer learning focused on written tasks is well-advanced, more research is needed to ascertain the benefits of peer learning for other forms of collaboration, including collaborative discourse and argumentation.

One approach to understanding the benefits of peer learning and peer feedback is to investigate the collaborative dialogue (i.e., the interactive talk) between peers in the classroom (e.g., O’Donnell, 2006; O’Donnell & King, 1998; Nussbaum, 2008). For example, Webb et al. (2008) found that the prevalence and development of explanations among students in collaborative groups predicted individual learning in mathematics, with the highest growth observed in students who generated more explanations in exchanges with peers. This is consistent with research conducted by Chinn et al. (2000), who found that more complex explanations given by students working in small groups correlated with individual learning gains. In a recent review of collaborative discourse and argumentation, Nussbaum (2008, p. 345) coined the term “critical, elaborative discourse”, which emphasizes the importance of students’ “considering different viewpoints” and “generating connections among ideas and between ideas and prior knowledge”. Peers thus provide much feedback to each other through such elaborations and purposeful discussions; they are not merely providers of correct or incorrect feedback, but also interpret the usefulness of feedback and deliver feedback in turn based on these interpretations.

It must be noted, however, that not all students provide such elaborations or quality feedback (Lockhart & Ng, 1995; Strijbos et al., 2010). Generally, the more able, more committed, and more vocal students provide greater elaboration and critical feedback and are thus more advantaged in peer interactions. This suggests that it is crucial for teachers to demonstrate, facilitate, and cultivate these skills. In practice, this may involve providing specific interventions, including instructional support designed to ensure that all students can benefit from peer interactions.

There can also, however, be resistance to the implementation of peer feedback in the classroom. Reservations about the use of peer feedback often relate to concerns about the reliability of students’ grading or marking of peers’ work, power relations among peers and with teachers, and social loafing (Gan, 2011). As a result, efforts are often made to train or support students in their delivery of feedback. This can be done in various ways, including ensuring that peer feedback is clearly integrated into a lesson, or providing feedback guides or rubrics to help students provide feedback (Cho & MacArthur, 2010; Lundstrom & Baker, 2009; Min, 2005; Prins et al., 2006; Rollinson, 2005; Zhu, 1995).

Instructional support for peer learning

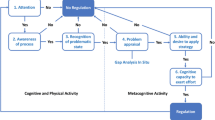

As noted above, one of the primary reasons for resistance to adopting peer feedback approaches in the classroom relates to the quality of feedback provided by peers, which may be perceived as lacking in quality and depth of content (Gan, 2011). This may be exacerbated by ineffective feedback interactions between the feedback provider and the feedback receiver (Prins et al., 2006). A common cause of poor peer feedback quality is a lack of information and skills concerning how to provide, receive, and use peer feedback. Crucially, however, this problem can be overcome through the use of instructional support, which may take the form of facilitation tools such as guiding sheets with prompts, peer review sheets with criteria, and graphic organisers. For example, Hattie and Gan (2011) provided students with a graphic organiser, informed by the framework developed by Hattie and Timperley (2007), to help them provide feedback to each other (see Fig. 1). In one condition, second-level chemistry students were taught to use a graphic organiser with prompts to formulate feedback to their peers, while students in a control condition received instruction about chemistry investigation skills but no training in the delivery of peer feedback. Results revealed that students who were instructed in the use of the graphic organiser formulated higher quality written peer feedback.

Graphic Organiser (Hattie & Gan, 2011)

Similarly, in a study also informed by Hattie and Timperley (2007), Gielen and De Wever (2015) provided students with one of three levels of structural support for peer feedback on a written task. These levels of structure were: 1) a peer feedback template alone, which addressed key components of the written task; 2) the template plus basic structure, which consisted of two additional questions designed to prompt feedback and feed forward (“What was good about your peers’ work?” and “What would you change in your peers’ work?”); or 3) the template plus elaborate structure, in which students received a feedback template divided into sections for feed up, feedback, and feed forward (Hattie & Timperley, 2007), with the criteria again listed in each section. This study took place over three feedback cycles, during which students wrote, and received feedback on, an abstract, which was the focus of their written task. The results showed that, while peer feedback quality improved significantly in all conditions, students in the elaborate condition displayed significantly greater increases in peer feedback quality than those in both the template-only and the template plus basic structure conditions. These results are thus consistent with those of Hattie and Gan (2011) in suggesting that additional instructional and structural support, beyond the provision of a feedback template alone, is necessary for optimal peer feedback delivery by students.

Feedback skills can also be developed through demonstration and simulation. For example, Van Steendam et al. (2010) found that training students through modelling of peer feedback behaviour, followed by emulation of this behaviour, led to more correct and explicit feedback when evaluating a peer’s text. Peer feedback interventions can also take the form of explicit training. For example, Sluijsmans et al. (2002) conducted a study of the effects of peer assessment training on the performance of student teachers (n = 93). This intervention involved defining performance criteria, giving feedback, and writing assessment reports. Results showed that students in the experimental group, who received training in feedback, outperformed students in the control group in terms of the quality of their assessment skills, as well as the end products of the course. Students in the experimental group were more likely than students in the control group to use the performance criteria and to provide constructive comments (i.e., specific, direct, accurate, achievable, practicable, and comprehensible comments) to peers. This study provides further evidence that training in peer feedback skills positively affects students’ ability to provide peer feedback.

The efficacy of scaffolding by means of prompts and higher-order questions is well established (King et al., 1998; Palincsar & Brown, 1984). In studies of peer feedback, Prins et al. (2006) suggested that “feedback instruments such as performance scoring rubrics with criteria, or structured feedback forms that force feedback providers to ask reflective questions and give suggestions for improvement could be valuable instruments for increasing the quality of the peer feedback” (p. 300). Building on this line of research, the current study used a graphic organiser, as well as modelling of peer prompting by the facilitator, to support peer prompting in groups of students in a collaborative discussion session using the Interactive Management methodology. Both the graphic organiser and the prompting modelled by the facilitators comprised prompts adapted from Hattie and Gan (2011).

Interactive Management

Interactive Management (IM) is a computer-facilitated thought and action mapping methodology designed to facilitate group creativity, group problem solving, group design, and collective action in response to complex issues (Warfield & Cardenas, 1994). Established as a formal system of facilitation in 1980 after a developmental phase that started in 1974, IM was designed to assist groups in dealing with complexity (see Ackoff, 1981; Argyris, 1982; Deal & Kennedy, 1982; Rittel & Webber, 1973; Simon, 1960). The theoretical constructs that inform IM draw from both the behavioural and cognitive sciences, with a strong basis in general systems thinking. Emphasis is given to balancing the behavioural and technical demands of group work (Broome & Chen, 1992), while honouring design laws concerning variety, parsimony, and saliency (Ashby, 1958; Boulding, 1966; Miller, 1956).

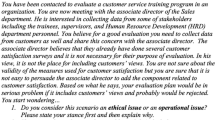

The IM process involves a series of steps (see Fig. 2). First, a group of people (typically between 12 and 20) with an interest in understanding a complex issue or resolving a problematic situation comes together to generate a set of ideas which they feel might have an influence on the problem in question. Through group discussion and voting, the group identifies the factors which they agree have the most critical impact on the problem. Next, using the IM software, Interpretative Structural Modelling (ISM), the critical issues are compared systematically in pairs by asking the question: “Does issue A significantly influence issue B?” Unless there is a clear majority consensus that A impacts on B, the relation does not appear in the final analysis. This process continues until all of the critical issues have been compared in this way. The ISM software then generates a problematique, a graphical representation of the problem structure showing how all the critical problem factors are interrelated. This consensus-based problematique, which maps the logical structure of the issue, becomes the catalyst for discussion, planning of solutions, and collective action in response to the problem (Warfield, 2006).
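The pairwise voting step can be illustrated with a short Python sketch. It assumes, hypothetically, that each participant casts a yes/no vote on every ordered pair of issues and that a "clear majority" means a share of yes votes strictly above a chosen threshold (0.5 here). The IM software's actual voting rule and interface are not described in this section, and the function names and example votes below are illustrative only.

```python
from itertools import permutations

def majority_supports(votes, threshold=0.5):
    """True only if the share of 'yes' votes strictly exceeds `threshold`
    (hypothetical stand-in for a 'clear majority')."""
    if not votes:
        return False  # an unasked or unanswered question yields no relation
    return sum(votes) / len(votes) > threshold

def accepted_relations(issues, votes_by_pair, threshold=0.5):
    """Collect the ordered pairs (a, b) the group accepts as
    'a significantly influences b'. Every ordered pair is considered;
    pairs without a clear majority are left out of the model."""
    return {
        (a, b)
        for a, b in permutations(issues, 2)
        if majority_supports(votes_by_pair.get((a, b), []), threshold)
    }

# Hypothetical issues and votes from three participants (True = "yes").
issues = ["A", "B", "C"]
votes = {
    ("A", "B"): [True, True, False],
    ("B", "C"): [True, False, False],
    ("A", "C"): [True, True, True],
}
print(sorted(accepted_relations(issues, votes)))  # [('A', 'B'), ('A', 'C')]
```

Because the question is directional, (A, B) and (B, A) are voted on separately in this sketch; a relation that fails to reach the threshold is simply absent from the model rather than recorded as a negative link.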

While IM has primarily been used in organisational settings, it offers many affordances which suggest its potential as an educational tool (Harney et al., 2015; Hogan et al., 2017; Hogan et al., 2015b). From a learning perspective, IM allows students and teachers to structure relationships between multiple ideas, while minimising cognitive load. The ISM software supports a focus on one single relational statement at a time, and uses matrix structuring algorithms to generate a systems model based on the decisions made by a collaborative group. By reducing the cognitive demands on the students and teacher, the group is free to focus on the processes of collaboration and deliberation.
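The matrix structuring mentioned above can be sketched in the same spirit. In ISM as it is generally described, accepted relations are treated as transitive (if A influences B and B influences C, A is taken to influence C), a reachability matrix is computed, and the issues are then partitioned into levels that order the resulting structure. The following Python is a hypothetical sketch of those two operations, using Warshall's transitive-closure algorithm; it is not the IM software's own implementation, and the function names are illustrative.

```python
def reachability(n, edges):
    """Transitive closure (Warshall's algorithm) of accepted
    'i influences j' relations over issues numbered 0..n-1."""
    reach = [[i == j for j in range(n)] for i in range(n)]  # each issue reaches itself
    for i, j in edges:
        reach[i][j] = True
    for k in range(n):
        for i in range(n):
            if reach[i][k]:
                for j in range(n):
                    if reach[k][j]:
                        reach[i][j] = True  # i -> k -> j implies i -> j
    return reach

def levels(reach):
    """Partition issues into levels: an issue joins the current level when
    every remaining issue it reaches also reaches it back."""
    remaining = set(range(len(reach)))
    result = []
    while remaining:
        level = sorted(
            i for i in remaining
            if all(reach[j][i] for j in remaining if reach[i][j])
        )
        result.append(level)
        remaining -= set(level)
    return result

r = reachability(3, [(0, 1), (1, 2)])
print(r[0][2])    # True: inferred from 0 -> 1 -> 2
print(levels(r))  # [[2], [1], [0]]
```

In this example the most downstream issue (2) forms the first level and the root driver (0) the last; issues locked in a cycle of mutual influence end up sharing a level, since each reaches the other.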

Social psychological factors in collaborative settings

Interactive Management, and other collaborative learning tools, by design, involve interaction between students who are engaging in a shared learning task. According to Stahl (2010), the power of collaborative learning stems from its potential to unite multiple people in achieving the coherent cognitive effort of a group. Thus, a primary goal of CSCL is to explore how this synergy occurs and seek to design and implement methodologies which can support and enhance this process. With this in mind, a number of social psychological variables were considered in the current study.

One outcome of interest in the current study is consensus. Both perceived and objective consensus are potentially critical variables to consider in efforts to enhance the successful working of groups using CSCL tools, particularly if the goal is to use CSCL tools to enhance group problem solving and decision making. The term consensus refers to the extent to which two or more people agree in their ratings of a target (Kenny et al., 1994). Reaching consensus on a solution to a problem is advantageous for many reasons, especially with regard to implementing an action plan designed to resolve a problematic situation. If there is a high level of consensus amongst group members on key decisions and conclusions, progress toward a solution to a shared problem may be easier to achieve. For example, Mohammed and Ringseis (2001) found that groups who reported higher levels of consensus in relation to a problem had greater expectations about the implementation of decisions reached by the group, and also experienced higher levels of overall satisfaction. The authors also found that the highest levels of consensus were evident in groups in which members questioned each other’s suggestions, accepted legitimate suggestions, and incorporated others’ viewpoints into their own perspective. What is less clear from such results is whether the facilitation and support provided to groups during collaborative discussions influences consensus-building, and whether these effects are similar for perceived and objective consensus. Perceived consensus refers to the extent to which members of a group report feeling that consensus exists within the group. Objective consensus, on the other hand, refers to actual, as opposed to perceived, levels of agreement.
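Objective consensus, as defined above, is a property of the ratings themselves rather than of members' reports. The study's actual index is not specified in this section, so the following Python sketch uses one simple hypothetical measure: the proportion of (member pair, item) comparisons on which two members gave identical ratings. The function name and the example ratings are illustrative only and are not data from the study.

```python
from itertools import combinations

def pairwise_agreement(ratings):
    """Share of (member pair, item) comparisons on which two members gave
    the same rating. `ratings` holds one equal-length list per member.
    This is a hypothetical consensus index, not the study's measure."""
    if len(ratings) < 2:
        raise ValueError("need ratings from at least two members")
    n_items = len(ratings[0])
    if n_items == 0 or any(len(r) != n_items for r in ratings):
        raise ValueError("every member must rate the same, non-empty set of items")
    matches = [
        r1[k] == r2[k]
        for r1, r2 in combinations(ratings, 2)
        for k in range(n_items)
    ]
    return sum(matches) / len(matches)

# Hypothetical ratings from three members on three items:
# 5 of the 9 pairwise comparisons match, giving roughly 0.56.
print(pairwise_agreement([[1, 2, 3], [1, 2, 4], [1, 3, 3]]))
```

Exact-match agreement is only one option; an index based on rating distances would treat near-misses on an ordinal scale more leniently.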

Notably, a core objective of Warfield in developing Interactive Management was to facilitate groups in reaching high levels of shared understanding and consensus when addressing complex problems (Warfield, 1976; Warfield & Cardenas, 1994). Warfield, however, did not investigate the optimal conditions under which the IM methodology supports the development of consensus, and this question remains under-explored in the research literature. While Harney et al. (2015) did investigate the effects of different kinds of facilitator prompts on levels of perceived consensus, finding that process-level prompts were more effective than task-level prompts in generating consensus, they did not investigate whether this effect remains when the prompts are peer-driven as opposed to facilitator-driven. As such, the effects of peer-driven prompts on consensus warrant further investigation.

It is important to note, however, that Warfield’s goal was not to harness consensus for its own sake. Rather, consensus for Warfield was predicated on the participatory design of the methodology. This design sought to ensure that each participant was afforded an equal opportunity to contribute, and that the ideas of each participant were acknowledged by others, such that each individual felt that their views had been listened to and understood (Janes, Ellis, & Hanner, 1993). Warfield sought to cultivate consensus through dialogue and democratic voting, such that participants would not feel compelled to agree (Alexander, 2002). This is important, as dissent and critique are conducive to learning and reflection, both at a societal level (Sunstein, 2005) and in the classroom (Johnson, Johnson, & Smith, 2007).

As noted above, IM has primarily been used as an organisational tool, but its potential as a CSCL tool has recently been explored, with promising results (Harney et al., 2015). When designing and implementing any new educational tool, it is vital to consider both the actual efficacy of the method or tool (for example, in terms of learning gains) and the user’s judgement of its efficacy. As such, another important outcome considered in the current study is the group’s judgement of the efficacy of the CSCL tool they are using. If CSCL tools such as IM are to be adopted by groups for use in educational settings, it is imperative that they are perceived as efficacious by the user group. Again, it is unclear whether specific types of facilitation and prompting influence group members’ perceptions of the tools and methodologies they are using.

While levels of consensus, both perceived and objective, and perceived efficacy, are important outcomes in the context of collaborative learning, it is also necessary to consider variables relating to teamwork or collaboration itself. With this in mind, the current study measured levels of team orientation both before and after the CSCL process. According to a review by Boud et al. (2001), peer learning promotes team skills such as working with others, and communication. Such learning gains provide benefits beyond education, with team-based skills widely sought after by employers (Koc et al., 2015). Therefore, the potential of peer-driven prompts as a means of enhancing team orientation represents a worthwhile investigation, which may have considerable positive effects on the broader learning experience.

Another variable which may impact adoption rates of a CSCL process, or perceptions of the process, relates to individuals’ levels of discomfort in group learning. While high levels of team orientation are desirable in group learning or working contexts, it is not uncommon for students to be averse to group work, with many students reporting the experience to be discomforting (Cantwell & Andrews, 2002). Such negative attitudes towards groupwork can have negative consequences for learning, as research has found that while positive perceptions of groupwork are associated with feelings of achievement in university students, negative perceptions are not (Volet & Mansfield, 2006). As such, it is important to consider the impact that various types of feedback interactions can have on individual students engaged in the process, and whether or not certain kinds of facilitation or prompting can be used to reduce levels of discomfort in the collaborative learning environment.

Finally, an important social psychological variable to consider in the context of CSCL is the level of trust that exists amongst group members. Research suggests that higher levels of shared trust in a group lead to increased levels of knowledge sharing (Roberts & O’Reilly, 1974), with individual group members perceiving knowledge sharing as less costly (Currall & Judge, 1995). Furthermore, higher levels of shared trust in a group may increase the likelihood that knowledge received is adequately understood and absorbed so that the individual can put it to use (Mayer et al., 1995). This research suggests that both trust and the facilitation of dialogue may influence other important outcomes in collaborative learning environments, including perceived and objective consensus and perceived efficacy of the methodologies and tools that support learning. Consistent with this view, Harney et al. (2012) found that collaborative groups working in an environment that encouraged open dialogue and discussion, and groups higher in dispositional trust, reported higher levels of perceived consensus, objective consensus and perceived efficacy of collaborative learning methodologies, when compared with groups where levels of dispositional trust were lower and where open dialogue and discussion was restricted.

The current study investigates the effects of facilitator versus peer prompts on perceived and objective consensus, perceived efficacy, discomfort in group learning, team orientation, argumentation style, and collaborative systems model complexity in the context of an IM session. In light of the evidence reviewed above, it was hypothesised that prompting style during collaborative dialogue and argumentation is a critical factor in shaping key outcomes of collaborative learning. Specifically, it was hypothesised that:

1. Groups receiving peer-driven prompts would report higher levels of perceived and objective consensus than groups receiving facilitator-driven prompts.
2. Groups receiving peer-driven prompts would report higher levels of perceived efficacy of the CSCL process using IM than groups receiving facilitator-driven prompts.
3. Groups receiving peer-driven prompts would report lower levels of discomfort in group learning and higher levels of group trust than groups receiving facilitator-driven prompts.
4. Groups receiving peer-driven prompts would report higher levels of team orientation than groups receiving facilitator-driven prompts.
5. Groups receiving peer-driven prompts would exhibit more complex and varied forms of argumentation than groups receiving facilitator-driven prompts.

Method

Design

A one-way ANCOVA was used to assess the effects of prompting style (facilitator-driven versus peer-driven) on perceived efficacy of IM, while controlling for dispositional trust. A series of three 2 (condition: facilitator-driven versus peer-driven) × 2 (time: pre-intervention versus post-intervention) mixed ANCOVAs were used to assess the effects of facilitator-driven versus peer-driven prompts on perceived consensus, team orientation, and discomfort in group learning, again controlling for dispositional trust. A Statistica™ coefficient comparison test was used to assess the statistical significance of differences in objective consensus (i.e., differences in Kendall’s W) across groups before and after the experimental manipulation. A series of 2 (condition: facilitator-driven versus peer-driven) × 2 (argument code: present versus not present) chi-squared tests were used to examine differences in the frequency of dialogic argumentation events across prompting conditions, as coded with the CACS. Finally, complexity scores were calculated for each of the problematiques across conditions, to analyse the complexity of IM structures.

Participants

Participants were first-year psychology students (N = 101) comprising 45 males and 56 females, aged between 17 and 31 years (M = 20.7, SD = 4.5), from the National University of Ireland, Galway. Participants were offered research participation credits in exchange for their participation.

Measures/Materials

Perceived consensus

To test hypothesis 1, perceived consensus was measured using a method similar to that of Kenworthy and Miller (2001): participants first gave their opinion (by voting on problem relations) and were then asked to rate how representative their opinions were of the opinions of other members of their group. Whereas Kenworthy and Miller asked participants for a percentage estimate, we measured perceived consensus using a 5-item scale rated on a 5-point Likert scale (1 = strongly agree, 5 = strongly disagree; e.g., “Generally speaking, my peers and I approach online social media in a similar manner”). The scale had good internal consistency (α = .75).

Objective consensus

Also to test hypothesis 1, objective consensus was measured using Kendall’s coefficient of concordance (Kendall’s W), computed on Likert-scale judgements of a random set of ten relational statements. These relational statements were generated from a set of propositions compiled by the authors in advance of the IM session, and which participants considered during the IM session. A sample item from this set is: “Increased dissatisfaction with one’s own life significantly aggravates increased unfair judgements of others”. Items were rated by each individual on a 5-point Likert scale (1 = strongly agree, 5 = strongly disagree). Objective consensus, as measured by Kendall’s W, was computed for each group before and after the experimental manipulation (i.e., facilitator-driven versus peer-driven prompts). Higher values of W indicate greater agreement among raters in the group.
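Because W is the study’s index of objective consensus, a short sketch may help clarify what it measures. The Python below implements the standard tie-corrected formula, W = 12S / (m²(n³ − n) − m·ΣT), where m is the number of group members, n the number of relational statements, S the sum of squared deviations of the statements’ rank sums from their mean, and T = Σ(t³ − t) over each rater’s groups of t tied ratings. It assumes that each member’s Likert ratings are converted to within-rater ranks, with tied ratings given their average rank; the function names and the example ratings are hypothetical, and this is not the authors’ analysis code.

```python
from typing import Dict, List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank one rater's values 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend `end` across the run of values tied with order[start].
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        # Sorted positions start..end correspond to ranks start+1..end+1.
        mean_rank = (start + end) / 2 + 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = mean_rank
        start = end + 1
    return ranks


def tie_correction(values: Sequence[float]) -> int:
    """Sum of (t^3 - t) over each group of t tied values for one rater."""
    counts: Dict[float, int] = {}
    for v in values:
        counts[v] = counts.get(v, 0) + 1
    return sum(t ** 3 - t for t in counts.values())


def kendalls_w(ratings: Sequence[Sequence[float]]) -> float:
    """Tie-corrected Kendall's W; ratings[j][i] is group member j's rating of statement i."""
    m = len(ratings)      # number of raters in the group
    n = len(ratings[0])   # number of relational statements
    ranked = [average_ranks(r) for r in ratings]
    rank_sums = [sum(r[i] for r in ranked) for i in range(n)]
    mean_rank_sum = m * (n + 1) / 2
    s = sum((rs - mean_rank_sum) ** 2 for rs in rank_sums)
    denominator = m ** 2 * (n ** 3 - n) - m * sum(tie_correction(r) for r in ratings)
    if denominator == 0:
        raise ValueError("W is undefined when every rater gives all statements the same rating")
    return 12 * s / denominator


# Hypothetical ratings from three group members on four statements (1 = strongly agree).
group = [[1, 2, 2, 4], [1, 3, 2, 5], [2, 2, 3, 4]]
print(round(kendalls_w(group), 3))
```

W ranges from 0 (no shared ordering of the statements) to 1 (identical orderings), which is why it can be computed separately for each group before and after the manipulation.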

Perceived efficacy

To test hypothesis 2, perceived efficacy of the IM process itself was measured using a scale developed for use in a previous study (Harney et al., 2012). The scale included 7 items rated on a 5-point Likert scale (1 = strongly agree, 5 = strongly disagree; e.g., “I believe that Interactive Management can be used to solve problems effectively”). The scale had good internal consistency (α = .70).

Trust

To test hypothesis 3, dispositional trust was measured using a combination of the scales developed by Pearce et al. (1992) and Jarvenpaa et al. (1998). The Pearce et al. scale included 5 items; the Jarvenpaa, Knoll and Leidner scale included 6 items. The 11 items were rated on a 5-point Likert scale (1 = strongly agree, 5 = strongly disagree; e.g., “Most people tell the truth about the limits of their knowledge”, “Most people can be counted on to do what they say they will do”, and “One should be very cautious to openly trust others when working with other people”). The scale had good internal consistency in the current study (α = .75).

Discomfort in group learning

Also to test hypothesis 3, the discomfort in group learning scale was used. The discomfort in group learning scale is one of three components of the Feelings Towards Group Work (FTGW) scale (Cantwell & Andrews, 2002). The other two components of the FTGW scale, preference for individual work and preference for group work, were not included due to their high levels of similarity to items on the Team Orientation scale. Discomfort in group learning, however, was deemed sufficiently distinct to warrant inclusion. The scale had good internal consistency (α = .76).

Team orientation

To test hypothesis 4, team orientation was measured using the 21-item Team Orientation scale (Mohammed & Angell, 2004). Item responses were rated on a 5-point Likert scale (1 = strongly agree, 5 = strongly disagree; e.g., “All else being equal, teams are more productive than the same people would be working alone”; “I generally prefer to work alone than with others” (reverse scored); and “I find that other people often have interesting contributions that I might not have thought of myself”). The scale had good internal consistency (α = .82).
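Each scale above is summarised by Cronbach’s α, and the Team Orientation scale includes reverse-scored items. A minimal sketch of both steps on a 5-point scale follows, using the standard formula α = k/(k − 1) · (1 − Σσᵢ² / σ²ₜₒₜₐₗ) for k items, where σᵢ² is the variance of item i and σ²ₜₒₜₐₗ the variance of respondents’ scale totals. The function names and the responses in the usage example are hypothetical.

```python
from statistics import pvariance
from typing import Sequence


def reverse_score(x: int, scale_min: int = 1, scale_max: int = 5) -> int:
    """Flip a Likert response (1 <-> 5, 2 <-> 4 on a 5-point scale)."""
    return scale_min + scale_max - x


def cronbach_alpha(items: Sequence[Sequence[float]]) -> float:
    """items[i][p] is respondent p's score on item i, already reverse-scored where needed."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's scale total
    sum_item_var = sum(pvariance(item) for item in items)
    # Population variances are used throughout; the n/(n-1) factors would cancel anyway.
    return k / (k - 1) * (1 - sum_item_var / pvariance(totals))


# Hypothetical responses of four students to three items; the third item is negatively worded.
raw = [[1, 2, 4, 5], [2, 2, 4, 4], [5, 3, 2, 1]]
items = raw[:2] + [[reverse_score(x) for x in raw[2]]]
print(round(cronbach_alpha(items), 2))
```

Reverse scoring must happen before α is computed; otherwise a negatively worded item correlates negatively with the rest of the scale and deflates α.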

Style of argument

To test hypothesis 5, style of argument was assessed using the Conversational Argument Coding Scheme (Seibold & Meyers, 2007). The Conversational Argument Coding Scheme (CACS) was developed to investigate the argumentative microprocesses of group interaction (Beck et al., 2012). The CACS includes five argument categories, which contain a total of sixteen argument codes (see Table 1). The five argument categories are: generative mechanisms (assertions and arguables), which are “potentially disagreeable statements” and are considered to reflect simple arguments (Meyers & Brashers, 1998); reasoning activities (elaborations, responses, amplifications, and justifications), which are higher-level argument messages and are most often extensions of generative mechanisms; convergence-seeking activities (agreements and acknowledgements), which include recognition of and/or agreement with other statements; disagreement-relevant intrusions (objections and challenges), which consist of statements denying agreement with arguables or posing further questions; and delimitors (frames, forestall/secure, and forestall/remove), which consist of messages designed to frame or contextualise the conversation. The remaining codes are termed nonarguables (process, unrelated, and incompletes), which consist of statements regarding how the group approaches the task, side issues, and incomplete or unclear ideas and statements. Multiple Episode Protocol Analysis (MEPA; Erkens, 2005) was used to facilitate the CACS analysis. MEPA is computer software designed for interaction analysis, in which transcribed data can be coded or labelled on several dimensions or levels.

Complexity of IM problematiques

Also to test hypothesis 5, the complexity of the IM problematiques was measured. Complexity scores are based on the total activity of the paths of influence in the IM structure, which involves computing the sum of the antecedent and succedent scores for each element. The antecedent score is the number of elements lying to the left of an element that influence it; the succedent score is the number of elements lying to the right of an element in the structure that it influences (Warfield & Cardenas, 1994).
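A minimal sketch of the complexity score follows, under two assumptions that the text does not state: that antecedent and succedent counts include indirect (transitive) influence, read off the structure’s reachability, and that a problematique’s score is the sum of its per-element totals. Warfield and Cardenas (1994) may define these details differently, and the function names are hypothetical.

```python
from typing import Dict, Iterable, List, Set, Tuple


def reachability(elements: List[str], edges: Iterable[Tuple[str, str]]) -> Dict[str, Set[str]]:
    """Warshall's transitive closure: reach[a] = elements that a influences, directly or indirectly."""
    reach: Dict[str, Set[str]] = {e: set() for e in elements}
    for a, b in edges:
        reach[a].add(b)
    for k in elements:
        for i in elements:
            if k in reach[i]:
                reach[i] |= reach[k]
    for e in elements:
        reach[e].discard(e)  # ignore self-influence created by feedback cycles
    return reach


def complexity(
    elements: List[str], edges: Iterable[Tuple[str, str]]
) -> Tuple[Dict[str, int], int]:
    """Per-element antecedent + succedent totals and (assumption) their sum for the structure."""
    reach = reachability(elements, edges)
    succedent = {e: len(reach[e]) for e in elements}                           # elements e influences
    antecedent = {e: sum(e in reach[x] for x in elements) for e in elements}  # elements influencing e
    per_element = {e: antecedent[e] + succedent[e] for e in elements}
    return per_element, sum(per_element.values())


# A three-element chain A -> B -> C: each element takes part in two paths of influence.
print(complexity(["A", "B", "C"], [("A", "B"), ("B", "C")]))
```

Under these assumptions every reachable ordered pair is counted twice (once as a succedent, once as an antecedent), so longer chains of influence raise the score faster than isolated links.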

Interpretive Structural Modelling

Interpretive Structural Modelling (ISM) is a computer-mediated, idea-structuring methodology that is designed to facilitate group problem solving (Warfield & Cardenas, 1994). The ISM programme was run on a PC by facilitators. The relations which groups were asked to consider and vote on were displayed on a large screen via an overhead projector.

Procedure

During recruitment, prospective participants were presented with information in relation to the nature of the study, including details as to its focus on collaborative learning and critical thinking. Participants were invited to register online via SurveyGizmo, and were required to complete a dispositional trust scale as part of the registration process. Participants were randomly allocated to one of eight groups, 4 in the facilitator-driven condition (n = 12, n = 13, n = 13, n = 14) and 4 in the peer-driven condition (n = 12, n = 13, n = 10, n = 14). There were two topics of discussion across the eight groups, with students in 4 groups discussing collaborative learning (n = 51) and 4 groups discussing critical thinking (n = 50).

Facilitators

The IM sessions were facilitated by PhD candidates from the same university as the authors. They were provided with training in the use of the IM methodology in advance of the sessions, and were provided with training materials and detailed instructions for facilitation, within the confines of the study protocol. This protocol is described in more detail in the following section.

Interactive Management sessions

The IM sessions took place over two weeks; each of the eight groups took part in 2 sessions, with no more than 14 students in any one session. Each session lasted approximately 120 min. In week 1, participants in each of the eight groups were directed to a room in which chairs were arranged in a circle, such that all of the group members could see each other. Before the IM session began, each participant was given a document which contained a participant information sheet, a perceived consensus scale, an objective consensus scale, a discomfort in group learning scale, and a team orientation scale. The participants were asked to read the information sheet, which contained an introductory paragraph about either collaborative learning or critical thinking. Participants were then required to complete the aforementioned scales. Once all scales had been completed, a short introductory presentation on examples of dispositions associated with good collaborative learning, or good critical thinking, was delivered by the facilitator to provide additional context for participants. Next, the IM process was explained to participants and then the session began.

The Idea Generation phase of IM took place during week one. In both the collaborative learning and critical thinking groups, participants were asked to silently generate a set of dispositions which they felt had a significant positive impact on the topic at hand (collaborative learning, or critical thinking). To facilitate this stage, the nominal group technique (NGT) was used (Delbecq et al., 1975). The NGT is a method that allows individual ideas to be pooled, and is ideally used when there are high levels of uncertainty during the idea generation phase. NGT involves five steps: (a) presentation of a stimulus question; (b) silent generation of ideas in writing by each participant working alone; (c) presentation of ideas by participants, with recording of these ideas on a flipchart by the facilitator and posting of the flipchart paper on walls surrounding the group; (d) serial discussion of the listed ideas by participants for the sole purpose of clarifying their meaning; and (e) implementation of a closed voting process in which each participant is asked to select and rank five ideas from the list, with the results compiled and displayed for review by the group. This work covered steps 1 and 2 in Fig. 2. The method of facilitation was the same for both conditions during week one, with the only exception being that the peer-driven groups were introduced to the concept of peer prompting, and the graphic organisers for use in week two were distributed (see Fig. 3).
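Step (e) of the NGT compiles participants’ rankings into a group ordering. The text does not say how ranks were combined, so the sketch below assumes a simple points scheme (rank 1 of 5 earns 5 points, rank 5 earns 1 point), which is one common way of tallying NGT votes. The function name and the idea labels are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def tally_ngt_votes(ballots: Sequence[Sequence[str]], n_ranked: int = 5) -> List[Tuple[str, int]]:
    """Each ballot lists one participant's chosen ideas, most important first.
    Assumed scheme: rank 1 earns n_ranked points, rank 2 earns n_ranked - 1, and so on."""
    scores: Dict[str, int] = defaultdict(int)
    for ballot in ballots:
        if len(ballot) != n_ranked or len(set(ballot)) != n_ranked:
            raise ValueError("each ballot must rank exactly n_ranked distinct ideas")
        for position, idea in enumerate(ballot):
            scores[idea] += n_ranked - position
    # Highest total first; ties are broken alphabetically so the displayed order is stable.
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))


# Hypothetical ballots with two ranked ideas each, to keep the example short.
ballots = [
    ["open-mindedness", "patience"],
    ["patience", "curiosity"],
    ["patience", "open-mindedness"],
]
print(tally_ngt_votes(ballots, n_ranked=2))
```

A points scheme rewards ideas that many participants rank moderately highly, not only ideas that a few participants rank first; other schemes (for example, counting first-place votes only) could yield a different ordering.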

In week two, each of the eight groups returned to structure the relationships between the ideas generated in week one (i.e., step 3 in Fig. 2). This is the phase during which the primary computer supported collaboration took place, using the ISM software. Given the goal of structuring relationships between multiple ideas, the ISM software plays a crucial role in reducing cognitive load, supporting focus on one relational statement at a time, and building the components of the systems model. The ISM software presents on screen two elements at a time, asking the question “Does A significantly influence B?”. As each relational statement is presented on screen, the facilitator opens the discussion to the room, and asks if anyone has a “yes” or “no” preference at this stage. This is also the stage during which the prompt manipulation was implemented. As participants indicated their preference, the facilitator would ask why they had this stated preference, and then request other opinions from the group, using a variety of prompts from Fig. 3. After a period of discussion, the facilitator would request a show of hands from the group, and a vote would be taken and recorded by the ISM software.

This process took place during both the facilitator-driven and peer-driven conditions. However, during the peer-driven condition, participants were again introduced to the graphic organiser, and were encouraged to review and consider the prompt questions throughout the process, as they were told that they would be taking over control of the facilitation process over the course of the session. In this peer-driven condition, the role of facilitator was gradually transferred from the facilitator to the participants. This process involved three phases: modelling, coordinating, and handing over. In the modelling phase, the facilitation was conducted in the same manner as in the facilitator-driven condition, as the facilitator modelled the use of questions to prompt participants during the structuring process. This phase lasted approximately 30 min.

Next, during the coordinating phase, the facilitator began to introduce peer prompts into the facilitation process. This was achieved by explicitly directing the attention of participants to the contributions made by others, via the questions on the graphic organiser (e.g. “John, we’ve heard an argument provided by Anne, how could we provide some support for this claim?” or “Susan, what else do we need to know about Michael’s suggestion before we can make a decision?”). During this phase, participants were once again encouraged to review the graphic organiser and consider questions which would be relevant to pose to peers at any given time during the process. This coordinating phase lasted approximately 30 min, giving participants a chance to gain a clearer understanding of how peer prompts work, and to become more familiar with the process.

Finally, when beginning the handing-over phase, the facilitator explained to participants that they were now to facilitate each other, in a manner consistent with the process which was initially guided by the facilitator in the modelling phase, and then further demonstrated in the coordinating phase. To begin this phase, the facilitator selected one participant to read out the next relational statement (e.g., “In the context of good critical thinking, does willingness to persevere significantly enhance willingness to take the ideas of others into account?”). Once the participant had read out the relational statement, he or she invited input from the group of participants, as the facilitator had done earlier in the process. The group was once again reminded to review and consider the graphic organiser when providing prompts to other peers, and the discussion was handed over to the group. Once an adequate level of discussion about the relational statement had been conducted (i.e., when participants had discussed arguments for and against), the facilitator called for a vote, and the process continued with the next participant (sitting to the right of the previous reader of the relational statement), who in turn read out another relational statement before calling for input. This process continued for approximately 60 min.
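In both conditions, the ISM structuring step puts one “Does A significantly influence B?” question to the group at a time and builds the systems model from the votes. The sketch below illustrates that loop under stated assumptions: it treats “significantly influences” as transitive, so a relation already implied by earlier “yes” votes is not put to the group again, and it asks about ordered pairs in a fixed order. The actual ISM software may order questions and draw inferences differently (for example, it may also infer “no” answers); `structure_by_votes` and the `vote` callback are hypothetical names.

```python
from itertools import permutations
from typing import Callable, Dict, List, Set, Tuple


def structure_by_votes(
    elements: List[str], vote: Callable[[str, str], bool]
) -> Tuple[Dict[str, Set[str]], List[Tuple[str, str]]]:
    """Return (influences, questions asked); influences[a] is the set of elements a influences.
    Assumption: the influence relation is transitive, so implied pairs are skipped."""
    influences: Dict[str, Set[str]] = {e: set() for e in elements}
    asked: List[Tuple[str, str]] = []
    for a, b in permutations(elements, 2):
        if b in influences[a]:
            continue  # "a influences b" already follows from earlier yes votes
        asked.append((a, b))
        if vote(a, b):  # the group's vote on "Does a significantly influence b?"
            # Keep the relation transitively closed: everything that reaches a
            # (and a itself) now reaches b and everything b reaches.
            sources = {x for x in elements if a in influences[x]} | {a}
            targets = influences[b] | {b}
            for s in sources:
                influences[s] |= targets - {s}
    return influences, asked


# Hypothetical group whose votes follow the chain A -> B -> C.
truth = {("A", "B"), ("B", "C"), ("A", "C")}
model, asked = structure_by_votes(["B", "C", "A"], lambda a, b: (a, b) in truth)
print(model, len(asked))
```

In this example the pair (A, C) is never put to the vote, because it follows from the earlier votes on (B, C) and (A, B); this is one way software support can reduce the number of judgements a group must make.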

Results

Perceived efficacy of IM

Perceived efficacy of the IM methodology was assessed at post-test only. A one-way ANCOVA was used to assess the effects of prompting style (condition: facilitator-driven versus peer-driven) on perceived efficacy of IM, while controlling for dispositional trust. The ANCOVA revealed a significant main effect of condition, F(1,93) = 7.172, p = .009, ηp² = .072, d = 0.53, with higher perceived efficacy in the peer-driven group (M = 25.26, SD = 2.31) than in the facilitator-driven group (M = 23.84, SD = 3.01). No other effects were observed.
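The effect sizes reported here can be recovered from the summary statistics, which makes them easy to check. The sketch below assumes that ηp² was derived from the F statistic as F·df_effect / (F·df_effect + df_error), and that d was computed from the two unadjusted group means with the root mean square of the two SDs as the pooled SD; under these assumptions it reproduces the reported ηp² = .072 and d = 0.53. A d computed this way ignores the covariate adjustment made by the ANCOVA. The function names are illustrative.

```python
import math


def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return f * df_effect / (f * df_effect + df_error)


def cohens_d(m1: float, sd1: float, m2: float, sd2: float) -> float:
    """Standardised mean difference, pooling SDs as if the two groups were equal in size."""
    pooled = math.sqrt((sd1 ** 2 + sd2 ** 2) / 2)
    return (m1 - m2) / pooled


print(round(partial_eta_squared(7.172, 1, 93), 3))   # 0.072
print(round(cohens_d(25.26, 2.31, 23.84, 3.01), 2))  # 0.53
```

The same F-based conversion also reproduces the partial eta squared values reported for the interaction and covariate effects below, for example 4.70 / (4.70 + 93) ≈ .05 for perceived consensus.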

Perceived consensus

A 2 (condition: facilitator-driven versus peer-driven) × 2 (time: pre-intervention versus post-intervention) mixed ANCOVA was used to assess the effects of facilitator-driven versus peer-driven prompts on perceived consensus, again controlling for dispositional trust. The ANCOVA revealed a significant time × condition interaction, F(1,93) = 4.70, p = .03, ηp² = .05, d = 0.27, with a significant increase in perceived consensus in the peer-driven condition from pre (M = 14.74, SD = 2.19) to post (M = 15.91, SD = 2.03; t = 2.18, p = .03) but not in the facilitator-driven condition from pre (M = 14.88, SD = 1.95) to post (M = 15.20, SD = 2.12; t = .36, p = .72). The results also revealed a significant main effect of the covariate, dispositional trust, on perceived consensus, F(1,93) = 11.63, p = .001, ηp² = .111, with higher trust associated with higher levels of perceived consensus.

Objective consensus

Kendall’s coefficient of concordance (Kendall’s W) was used to measure concordance (i.e., agreement of ratings in relation to specific ISM paths of influence) within groups before and after the experimental manipulation. No significant effects were observed.

Discomfort in group learning

A 2 (condition: facilitator-driven versus peer-driven) × 2 (time: pre-intervention versus post-intervention) mixed ANCOVA was used to assess the effects of facilitator-driven versus peer-driven prompts on discomfort in group learning, again controlling for dispositional trust. The ANCOVA revealed a significant time × condition interaction, F(1,94) = 5.70, p = .02, ηp² = .06, d = 0.64, with a significant decrease in discomfort in group learning in the peer-driven condition from pre (M = 12.52, SD = 2.79) to post (M = 10.78, SD = 2.95; t = 1.97, p = .04) but not in the facilitator-driven condition from pre (M = 12.50, SD = 2.79) to post (M = 11.90, SD = 3.02; t = .40, p = .69). No other effects were observed.

Team Orientation

A 2 (condition: facilitator-driven versus peer-driven) × 2 (time: pre-intervention versus post-intervention) mixed ANCOVA was used to assess the effects of facilitator-driven versus peer-driven prompts on team orientation, again controlling for dispositional trust. The ANCOVA revealed a significant time × condition interaction, F(1,66) = 8.23, p = .006, ηp² = .111, with an increase in team orientation from pre (M = 71.86, SD = 8.50) to post (M = 74.78, SD = 6.80; t = 2.78, p = .009) in the peer-driven condition but not in the facilitator-driven condition from pre (M = 71.88, SD = 9.62) to post (M = 70.91, SD = 8.48; t = 1.2, p = .24). The results also revealed a significant main effect of the covariate, dispositional trust, on team orientation, F(1,66) = 10.07, p = .002, ηp² = .13, with higher trust associated with higher levels of team orientation.

Conversational argument coding scheme

A series of chi-squared tests was used to assess the statistical significance of differences in argumentation codes (as per the CACS) across prompting conditions. Of the 16 possible CACS argument codes which comprise the five argument categories, 15 were observed at least once in the peer-driven condition, 12 were observed at least once in the facilitator-driven condition, and 1 was not observed in either condition. Significant differences were observed across conditions for 4 argument codes, with a higher frequency of occurrence in the peer-driven condition in each case: amplifications (χ²(1) = 5.132, p = .014, V = .05, d = .504), justifications (χ²(1) = 7.089, p = .005, V = .058, d = .582), acknowledgements (χ²(1) = 4.681, p = .021, V = .047, d = .472), and challenges (χ²(1) = 6.793, p = .005, V = .056, d = .582). For each of the remaining codes, with the exception of objections, forestall/secures, and forestall/removes, higher incidence was also observed in the peer-driven condition than in the facilitator-driven condition; however, these differences were not statistically significant. Descriptive data are presented in Fig. 4.
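Each comparison above is a 2 (condition) × 2 (code present versus not present) chi-squared test with Cramér’s V as the effect size. A sketch of one such test follows, assuming that the unit of analysis is the coded utterance and that no continuity correction was applied; neither detail is stated in the text, the function name is hypothetical, and the counts in the usage line are invented for illustration, not the study’s data.

```python
import math
from typing import Sequence, Tuple


def chi2_2x2(table: Sequence[Sequence[int]]) -> Tuple[float, float, float]:
    """Pearson chi-squared for a 2 x 2 table (rows = prompting condition,
    columns = argument code present / not present), returning (chi2, p, V).
    Assumption: no Yates continuity correction is applied."""
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    if 0 in row_totals or 0 in col_totals:
        raise ValueError("chi-squared is undefined when a row or column total is zero")
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # With 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    # Cramer's V = sqrt(chi2 / (n * (min(rows, cols) - 1))); for a 2 x 2 table this is sqrt(chi2 / n).
    v = math.sqrt(chi2 / n)
    return chi2, p, v


# Invented counts: row 0 = peer-driven, row 1 = facilitator-driven;
# columns = utterances with the code present, utterances without it.
print(chi2_2x2([[40, 960], [20, 980]]))
```

Because V scales with 1/√n, a test over a large number of coded utterances can reach significance even when V is small, as with the values of roughly .05 reported above.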

Finally, analysis of the IM-generated problematiques revealed no significant difference in complexity of argument structures across conditions. The average complexity score for the problematiques generated by groups in the peer-driven condition was 36.75. The average complexity score for the problematiques generated by groups in the facilitator-driven condition was 40.

Discussion

The current study examined the effects of facilitator-driven versus peer-driven prompts on perceived and objective consensus, perceived efficacy of the IM method, team orientation and discomfort in group learning, and argumentation style and complexity in the context of an IM session. Results indicated that, compared to those in the facilitator-driven condition, those in the peer-driven condition reported higher levels of perceived efficacy of the IM process. Furthermore, those in the peer-driven condition reported higher levels of perceived consensus in relation to the topical focus of the IM sessions, lower levels of discomfort in group learning, and higher levels of team orientation after the IM sessions. Finally, analysis of the dialogue from the IM sessions revealed that those in the peer-driven condition exhibited higher levels of sophistication in their arguments, as revealed by their CACS scores.

As noted above, although Warfield (1976) designed IM as a consensus-based problem-solving tool, there remains a paucity of research investigating the role of facilitation and prompting in an IM systems thinking environment, and whether or not peers, when engaging in peer learning behaviours, can cultivate a greater level of consensus when compared with a facilitator-driven session. Building upon findings from Harney et al. (2015), who found that process-level prompts were more effective than task-level prompts in generating consensus, the current study extended prompt research into a peer learning scenario. In the current study, while post-IM perceived consensus levels were relatively high in both conditions, a significant pre-post increase was only recorded in the peer-driven prompt condition. This suggests that while the methodology and facilitation process itself may support consensus-building in a collaborative group, the objective of achieving consensus may be particularly enhanced by transferring the role of facilitator and prompt-provider to the group members themselves.

The finding that the peer-driven prompt group reported greater perceived consensus has important implications, as higher levels of perceived consensus are likely to lead to higher levels of endorsement and engagement by the group in any action or response to a shared problem. For example, if group members feel strongly that there is a high level of consensus in relation to the understanding and conception of a problem that they are working on together, they are more likely to be committed to, and satisfied with, any plan which comes from the newly formed collaborative understanding (Mohammed & Ringseis, 2001). Most crucially, this finding suggests that if teachers or facilitators want to promote a high level of consensus in a group, then taking a step back and passing over the role of prompting to the students is a potentially powerful method, particularly if students have received some structured training and understand how to use prompts in context. This finding is consistent with Boud et al.’s (2001) review of peer learning, which highlights benefits such as working with others, communication and articulation of knowledge, and critical enquiry and reflection, all of which are conducive to consensus, as well as with research which suggests that peer learning promotes motivation (Bloxham & West, 2004).

Also consistent with Boud et al. (2001) is the finding that peer-driven prompting has a positive impact on levels of team orientation, which represents another important finding in the context of peer learning, and collaborative learning more broadly. This result also has important implications beyond education because, in recent years, teamwork has become one of the skills which employers most desire in university graduates. For example, in 2015, the National Association of Colleges and Employers collected survey responses from 260 employers, including large multinational companies such as Chevron, IBM, and Seagate Technology, to ascertain which skills were most sought after by employers. The results of the survey showed that “ability to work in a team structure” was the top-ranked skill sought by employers (Koc et al., 2015). Given that research has found that team orientation enhances decision making, cooperation, coordination, and overall team performance (Eby & Dobbins, 1997), it follows that any educational intervention which promotes team orientation may have positive implications for graduates’ approach to teamwork beyond education. Notably, Fransen, Weinberger, and Kirschner (2013) highlight that choice of educational task is an important factor in understanding the effects of team orientation in a learning context, with the best results seen when tasks are authentic, complex, challenging, and collaborative (Blumenfeld et al., 1991; Kirschner et al., 2009a, 2009b, 2011). In the current study, the tasks were authentic, as they focused on two key components of the students’ education: critical thinking and collaborative learning. Furthermore, in the context of the IM methodology, the tasks were inherently complex, challenging and, particularly in the peer-driven condition, highly collaborative. As such, the findings in relation to increased team orientation in the peer-driven condition are consistent with the work of Fransen, Weinberger, and Kirschner (2013).

The current finding in relation to perceived efficacy of IM represents another important finding for IM-based CSCL methodologies. While, broadly speaking, students in both conditions found the process useful and engaging, those in the peer-driven condition reported significantly higher levels of perceived efficacy of the IM process, suggesting that the increased empowerment of the students, who were driving the deliberation and prompting process, contributed to a greater sense of value and perceived success of the process. Importantly, these findings are consistent with the findings of Cho and MacArthur (2010), who found that students who received feedback from multiple peers made greater improvements on written tasks than those who received feedback from a single teacher. Cho and MacArthur suggest that while peers may not have the same level of expertise as teachers, they may provide comments which are more accessible to fellow students, and may be better able to recognise difficulties in conceptualisation or understanding due to their own similar perspective. It seems plausible that, in the same way that peer engagement contributed to greater improvements in written tasks, receiving accessible, relevant prompts from a peer can contribute to a greater sense of consensus, and perceived efficacy of the collaborative process.

As well as considering students’ outcome-related perceptions of the process, including perceived efficacy, perceived consensus, and team orientation, it is also necessary to consider how students respond emotionally to peer-driven prompting, particularly given that peer prompting may be unfamiliar to them. In the current study, although the IM process was new to students, results highlighted a positive response to the peer learning experience. In addition to reporting higher levels of both perceived consensus and perceived efficacy of the process, those in the peer-driven group reported a significantly greater reduction in discomfort in the group learning process. This may be due to the increasingly open nature of the sessions, in which the facilitator gradually models, simulates, and then passes control of the task over to the students. More generally, these findings have implications for the adoption and sustained use of such collaborative methodologies by students or other working groups: high perceived consensus and perceived efficacy, together with reduced discomfort in group learning, suggest a substantial level of endorsement of the methodology.

With regard to the types of argumentation coded during the IM sessions, the results of the CACS analysis in MEPA showed that students in the peer-driven prompt condition displayed more sophisticated argumentation, with a higher incidence of CACS codes across all major categories. More specifically, compared with the facilitator-driven condition, participants in the peer-driven prompt condition demonstrated significantly higher levels of amplifications, justifications, acknowledgements, and challenges. This suggests that those in the peer-driven prompt condition were engaging in a higher level of consideration, analysis, and evaluation of the claims presented during IM work, and made more effective efforts towards achieving understanding and consensus within the group prior to voting. For example, in the category of reasoning activities, elaborations (i.e., statements that support other statements by providing evidence, reasons, or other support, e.g., “Yes because if you’re open minded you hear everyone’s ideas”) were similarly evident in both conditions, whereas amplifications (i.e., statements that explain or expound upon other statements to establish the relevance of an argument through inference, e.g., “I think it’s a yes because if you put yourself in the situation, say, if you were in a group and you were set as the team leader you could be like right guys this is what we’re doing and that’s how it has to be done but if you have the patience you can take the time to listen and decide as a group, if you aren’t patient you wouldn’t do that”) were observed more often in the peer-driven prompt condition. In this way, those in the peer-driven prompt condition were moving beyond the accumulation of evidence and support in their reasoning activity; they were working further to establish how this reasoning relates to the problem at hand.
Similarly, in the category of convergence-seeking activities, while there was no significant difference in the level of agreements (i.e., statements that express agreement with another statement, e.g., “Yeah that could happen too”) between the two conditions, the level of acknowledgements (i.e., statements that indicate recognition and/or comprehension of another statement but not necessarily agreement with another’s point, e.g., “I think you can be motivated like to want to achieve the goal but it doesn’t necessarily mean that you’re going to try and encourage everyone like”) was significantly higher in the peer-driven prompting condition. This suggests that students in the peer-driven prompt condition, while engaging in convergence-seeking activities and moving towards consensus, remained open to the suggestions of others while also remaining critical in their analysis. Importantly, these patterns of argumentation also suggest that students were not moving towards consensus for the sake of consensus; rather, they were engaging in deeper analysis and evaluation of their peers’ arguments before reaching a level of perceived consensus. From a learning perspective, this is an important distinction, as dissent and critique are conducive to learning and reflection, both at a societal level (Sunstein, 2005) and in the classroom (Johnson, Johnson, & Smith, 2007).

When examining the relational complexity of the models or structural hypotheses generated by students, the current study revealed no significant differences between the two experimental conditions. This suggests that, although those in the peer-driven prompt groups engaged in more complex patterns of argumentation, this was not reflected in the structural complexity of the yes/no relationships in the IM matrix structures they generated. This finding contrasts with a previous study in which differences in structural model complexity were coupled with differences in argumentation complexity between groups that received either task-level facilitator prompts or process-level prompts (Harney et al., 2015). In other words, structural models may accurately reflect the consequences of more or less complex and varied patterns of argumentation when process prompting is compared with task-level prompting; however, when process prompts are delivered either by a facilitator or by peers, differences in argumentation complexity may be observed without translating into differences in the structural complexity of the systems models generated by groups.

The IM methodology is well established in the applied systems science literature and has been successfully applied in a wide variety of scenarios to accomplish many different goals, including assisting city councils in making budget cuts (Coke & Moore, 1981), developing instructional units (Sato, 1979), improving the U.S. Department of Defense acquisition process (Alberts, 1992), promoting world peace (Christakis, 1987), improving tribal governance processes in Native American communities (Broome, 1995a, 1995b; Broome & Christakis, 1988; Broome & Cromer, 1991), and training facilitators (Broome & Fulbright, 1995). As noted by Harney et al. (2015), however, the type of prompts, instruction, and guidance provided by the facilitator throughout the IM process is crucial. Building upon such findings, the results of this study suggest that control of the delivery of prompts in a collaborative learning exercise such as IM can be passed over to students, with positive implications for learning. Nevertheless, when considering the implications of these results, it is important to note that the teacher remains crucial to this process. As detailed in the procedure, in the peer-driven prompt condition the teacher first models the use of the graphic organiser to deliver prompts (i.e., uses the prompts to elicit discussion), then coordinates peer-to-peer interaction by using prompts from the graphic organiser to facilitate interaction and engagement (e.g., “John, we’ve heard an argument provided by Anne, how could we provide some support for this claim?”), before finally handing over control of the prompting to the students. As such, these findings are consistent with previous research by Van Steendam et al. (2010), who found that modelling of peer feedback behaviour, followed by emulation of this behaviour, can improve the quality of peer feedback delivered by students. These results are also consistent with research by Sluijsmans et al. (2002), who found that students who received training in peer assessment and feedback outperformed a control group on peer assessment and feedback quality; the modelling and coordinating phases of the peer-driven condition in the current study essentially amount to a form of such training.

In the current study, we have extended this research to focus on the potential for peer groups to take over the role of the facilitator, thus empowering their collaborative experience, while also providing one of the first experimental demonstrations of the effects of peer-driven prompting on outcomes in the application of IM in an educational context. Furthermore, the finding that peer-driven prompting resulted in both positive perceptions of the learning process and key indications of higher-level learning outcomes has important implications, as students often fail to realise how much they have learned in team-based learning (Michaelsen & Sweet, 2008). In the current study, these key collaborative learning outcomes, supported by peer learning, were reflected not only in students’ argumentation and the complexity of their reasoning, but also in their perceptions of and attitudes towards the learning process and group experience. Overall, these findings highlight a range of potential benefits of peer-driven learning in CSCL.

Limitations

There are a number of limitations that must be taken into account in the current study. First, while considerable efforts were made to standardise the learning conditions in each group, interactions between group members can differ in the nature of collaborative learning research, and the interactions between students within the four groups in each prompt condition may therefore have varied in ways beyond the researchers’ control. However, every effort was made to minimise such variability; for example, trained facilitators followed strict protocols at all times during the study.

Second, there was a gender imbalance in the sample, with a ratio of approximately 4:3 females to males. As noted by Skinner and Louw (2009), this is a common sampling issue in university samples, especially among psychology students. While there is limited evidence of gender differences in peer learning, Webb (1984) found that high-level elaboration was more likely to be elicited by asking a question of a female peer than of a male peer. However, other studies have found that gender differences in peer learning are diminished when hints (e.g., prompts) are provided (e.g., Ding & Harskamp, 2009) or when students are given guidance in facilitating interactions (Gillies & Ashman, 1995).

Third, the participants in this study were predominantly students who had received all of their education to date within the Irish education system. As such, their prior experiences of peer learning and group work may differ from those of students in other countries. These results may be more or less pronounced for students with different levels of prior experience with peer learning methods. Future research should therefore seek to replicate these findings within the educational systems of other countries.

Conclusion

The results of this study suggest that the positive effects associated with process-level prompts in collaborative learning contexts (Harney et al., 2015) can be replicated when prompts are driven by peers rather than by expert facilitators or teachers. This is an important finding when one considers (a) that many studies have found formative assessment (including feedback and prompting) to be a vital component of education, and (b) that resource constraints in many universities have led to a reduction in the quantity and quality of feedback received by students (Gibbs & Simpson, 2004). One possible solution to the limited scope for instructor feedback is better use of in-class formative peer learning, designed to facilitate and accelerate learning for individuals and groups. While the results of the current study may have positive implications for teachers, in terms of reducing the burden placed on them by diminished resources (time), critically, the positive effects of peer prompting on students’ learning experiences were clear. In the current study, students responded positively to the peer learning experience, reporting higher levels of both perceived consensus and perceived efficacy, which suggests that they found the process more efficacious and beneficial than a predominantly facilitator-driven learning process. In addition, those in the peer-driven condition reported a significantly greater reduction in discomfort in the group learning process. Students in the peer-driven condition also reported increased levels of team orientation, which may have positive implications for the development of teamwork skills in both educational and organisational contexts.
Finally, students in the peer learning condition demonstrated more complex modes of argumentation, which suggests that supportive and structured peer learning conditions can facilitate the development of key critical, collaborative, and systems thinking skills, which are highly significant educational outcomes in a world that is calling for more productive modes of collaboration across all sectors of society.

References

Ackoff, R. L. (1981). Creating the corporate future: Plan or be planned for. New York: John Wiley and Sons.

Alberts, H. (1992). Acquisition: past, present and future. Paper presented at the meeting of the Institute of Management Sciences and Operations Research Society, Orlando, FL.

Alexander, G. C. (2002). Interactive management: An emancipatory methodology. Systemic Practice and Action Research, 15(2), 111–122.

Alexander, R. (2004). Towards dialogic teaching. York: Dialogos.

Andriessen, J. (2006). Arguing to learn. In R. K. Sawyer (Ed.), The Cambridge handbook of the learning sciences (pp. 443–460). New York: Cambridge University Press.