Abstract

Small sensors that communicate with smart tools and ubiquitous computing devices can now be used to analyze and evaluate sports and physical activities. Cost-effective digital support is a major motivation for this research: smart monitoring reduces the cost players incur for additional labor. The work also targets accurate engineering solutions for sports monitoring over 6G networks. Using the underlying framework and these sensors, we can gather data on a variety of physical activities anywhere and at any time. These devices produce large volumes of data, and evaluating a sportsperson’s physical activity benefits from having as much of that data as possible. Wearable devices with small sensors, edge and cloud computing, and artificial intelligence based on deep learning have the potential to change how sports physical activity is analyzed. To improve the athlete’s profile by forecasting their physique and suggesting specific training, this research integrates three pillars of physical activity analysis built on these technologies. A framework proposed for this purpose allows us to build a dataset in genuine ecological settings. To ground the work, we reviewed an extensive body of literature on sports and physical activity and highlighted the limitations of the sensors, data-gathering methods, and processing methods it reports. We conjecture that collecting data, measuring continuously, and analyzing the various processes with the aid of edge and cloud computing devices, which let the data stream without limitation, will yield a more dependable model. The swimming athletes in this article are trained using personalized programmes and profile-type techniques.
Based on the trial findings, the proposed integrated system offers coaches and players important data that facilitates targeted training, enhanced athlete healthcare, and performance optimization.

1 Introduction

Tiny sensors, low-power computing devices, and communication technologies have revolutionized the way environmental data are collected through ubiquitous computing. This technology has numerous uses in sports and athletics, especially for tracking players’ physical activity and performance. In running-based sports such as rugby, football, and hockey, motion analysis is crucial because it allows researchers to examine players’ movements in authentic settings. Tactical and strategic analysis before and after games has become standard in sports such as football, hockey, and cricket, and wearable technology adds insight into the health and well-being of players. Over the last thirty years, wired and wireless microcomputers have been used increasingly for data exchange and transmission, and this trend has driven the development of smaller, more accessible computer systems [1, 2]. As computing devices become more widely accessible and inexpensive, the future of data and information lies in the networked world. The Internet of Things (IoT) has emerged from this change, with its emphasis on connecting individual objects or devices anywhere and at any time. However, the IoT must overcome a number of obstacles, including security and privacy, data sensing and monitoring, processing and storage capacity, interoperability, and specialized protocols [3, 4].

Edge computing has become a key factor in addressing some of these problems, including data processing, aggregation, and network latency. Edge computing places processing power closer to the source sensor, at the edge of the network, which makes it possible to process information locally. This approach supports data transfer both upstream, from the source to the cloud, and downstream, from the cloud to the source. At the source level, sports scientists and coaches now recommend wearable technology that tracks training load and health. Wristwatches cover most of these factors. These small, light devices, usually worn against the skin, provide useful information such as heart rate, acceleration, movement artifacts, and sleep duration [5, 6]. Integrating these technological pillars is essential to improve data collection, streamline data processing, and reduce delay, and it should ultimately lead to more efficient models for managing athletes and sports. Swimming is a speed-based activity performed in water. Human movement in water is complex, so swimming requires precise movement skills and places special demands on physical ability and technical competency.

Physical swimming training procedures are simple in principle, yet many young swimmers are still taught with traditional methods that may need to be updated. To help swimmers achieve systematic, thorough, and efficient fundamental physical training, it is imperative to close this gap and investigate advanced training ideas and methodologies. The issue matters for the safety and overall development of swimmers, and the swimming community should address it promptly [7, 8]. In light of the above, this article describes the methodology and structure for gathering data from individuals (in this case, athletes or players) and processing it at the edge level to reduce latency and interruptions. The next step is to send the gathered data to cloud computing via networking devices. To create the athlete’s model (profile), the cloud computing devices receive the dataset from the edge and feed it to an artificial neural network. The proposed model uses many indicators and factors to predict athletes’ performance and proposes training, rest, and sleep regimens. Figure 1 gives a more comprehensive view of the overall system structure. Sensing devices are the foundation of measurement, since they perceive their surroundings and gather data from them. As shown in Fig. 1, this pillar is called measurement. The second pillar is processing, that is, cloud computing, and the last pillar is analysis. This article focuses on the processing and analysis pillars. An ANN model automates profiling, while data gathering, dataset compilation, and data cleansing take place at the edge level.

The structure of this document is as follows. Sect. 2 provides a comprehensive overview of swimmer movement tactics, highlighting the shortcomings of conventional coaching approaches and exploring the potential advantages of artificial intelligence and cloud computing. Sect. 3 outlines our research methodology, which involves data collection using wearable technology and sensors and analysis using cloud computing and artificial intelligence techniques. Sect. 4 presents the results and observations from the data analysis, emphasizing the limitations and new insights that were found. Section 5 concludes by summarizing the main findings, including the use of augmented and virtual reality in sports education.

The main goal of this research is to integrate cutting-edge technologies to transform the analysis of physical activity in sports and make it more affordable for players. These technologies are compact sensors, edge and cloud computing, and artificial intelligence with deep learning capabilities. The research uses them to pursue the following goal: tiny wearable sensors gather a variety of data continuously and in real time from different physical activities, anywhere, which reduces the cost and time of in-person monitoring. This approach makes it possible to collect extensive datasets that accurately reflect natural environments, offering a solid basis for study.

1.1 Motivation

The development of tiny sensors and ubiquitous computing devices has transformed the study of sports and physical activity, but several drawbacks still need to be considered.

- Privacy Concerns: Gathering large amounts of information about an individual’s physical activity poses a major privacy risk. The data may include private information about a person’s performance, health, and even whereabouts. Without appropriate rules and protections, this data could be abused or exploited, leading to ethical issues and privacy violations.

- Data Overload and Management: The volume of data produced by these sensors can easily become too large to handle. Processing, storing, and managing such massive datasets requires substantial computing resources and advanced data-management strategies. Without effective techniques for data handling and storage, the insights hidden in the data may go undiscovered or take too long to uncover.

- Dependability and Accuracy: Despite their increased sophistication, tiny sensors can still produce errors and inconsistencies. Factors such as sensor calibration, ambient conditions, and individual differences can affect the quality and dependability of the collected data. In sports and fitness, ensuring data quality and consistency is essential for insightful analysis and decision-making.

- Interpretation and Context: Sensor data offer valuable information about a person’s physical activity, but successful interpretation requires contextual knowledge. Without background information, such as knowledge of the particular sport or activity being performed, the data may be misinterpreted or lead to incorrect conclusions. Sensor data must therefore be combined with domain knowledge to yield valuable insights and avoid mistakes.

2 Research Contribution

The main contributions of this study are as follows:

- The study proposes a novel framework that combines edge and cloud computing, wearable technology, and artificial intelligence with deep learning. The framework improves athlete profiles by forecasting physical characteristics and suggesting tailored training. This contribution is a new strategy that uses contemporary technology to solve problems in sports analytics.

- The study conducts an extensive literature review on sports and physical activity, emphasizing the shortcomings of current sensors, data-gathering methods, and processing approaches. The review summarizes the current state of the art and guides the design of the proposed framework.

- The study uses edge and cloud computing devices to help overcome data-streaming constraints, allowing continuous, unrestricted monitoring and analysis of diverse physical processes. This integration improves the efficiency and scalability of the proposed framework, making it easier to monitor and analyze athlete performance in real time.

- Focusing on swimming athletes, the study applies profile-type techniques and personalized training. This contribution demonstrates the feasibility of the proposed framework for customized athlete development plans and suggests that it can be adapted to other sports disciplines.

- The experimental findings confirm the efficacy of the integrated method and show substantial benefits for coaches and players. Athlete-management practice and sports analytics stand to benefit from targeted training, performance optimization, and better athlete healthcare. These benefits demonstrate the usefulness and impact of the proposed framework.

3 Literature Survey

In the past, athletes were tested in a laboratory setting with predetermined parameters. Intelligent mobile devices and network gateways can evaluate and monitor the player or athlete, for example to detect physical ailments and chronic diseases, and health databases are essential in such systems. The training equipment was designed only for laboratory use and was not meant to be used in an athlete’s actual environment. These devices were used while the player held an almost stationary posture, so the sensor readings were precise, but they do not represent the athlete’s actual playing field. The trade-off between measurement precision and realistic playing-field conditions has long remained unresolved [9]. Wearables, also called wearable devices or wearable technologies, are small electronic and computing devices, or wireless communication devices, that can be integrated into clothing, accessories, or the human body.

These devices can be worn on the body and can also be linked to other technologies that interact with the human body. Some devices, such as implanted microchips, are invasive. Wearables differ from smartphones and tablets because they capture athletes’ biometric and physiological data through scanning and other sensors. They are compact and portable, so they are easy to attach to the body, and they are lightweight, low-power, and hands-free [10]. Annual orders for wearable technology grew steadily from 2016 to 2021–2022. However, as Fig. 3 shows, sales rose sharply from 2019 to 2022. The worldwide COVID-19 pandemic was one of the main causes of this rise, although other factors also contributed. The pandemic disrupted everyday life, which led to a greater emphasis on health and well-being.

As people became more aware of their own well-being, demand grew for wearable technology that tracks and monitors numerous health metrics. These devices, ranging from fitness trackers to smartwatches with integrated health sensors, let people stay connected, keep an eye on their activity levels, and check their vital signs. This led to the term “Internet of Wearable Things” (IoWT), which highlights the integration of wearable technology into the networked system of connected devices and data exchange [11]. The global effects of COVID-19 accelerated the development and widespread adoption of chips and wearable technology. These devices were essential for contact tracing, identifying symptoms, and monitoring people’s health. Wearables with temperature sensors, heart rate monitors, and oxygen saturation measurement proved indispensable in the fight against the pandemic. Growing acceptance of such devices drove dramatic growth of the wearable device market during this period. Research and surveys examining the rise of wearables over the last ten years have identified eight product categories: wearables, smart jewellery, health monitoring devices, smartwatches, smart clothing, virtual and augmented reality headsets, sports and fitness trackers, and implantable devices.

Products in the sports, fitness, health, and lifestyle categories, such as smartwatches, have consistently attracted the most customer attention and interest [12]. These devices provide functions such as step counting, heart rate monitoring, sleep tracking, and stress management, so they appeal to health-conscious people who want to lead active lives. Integrating them with web and smartphone apps that provide data analysis, goal monitoring, and personalized insights further improves the user experience. Edge computing has emerged as a crucial data-processing technology that makes it possible to generate and analyze data locally and immediately. Studies and surveys [13] report that edge computing provides a multifaceted approach to data processing and storage that links the physical and digital domains. Fig. 1 shows the many uses of edge computing, including its combination with cloud computing, blockchain, the Internet of Things (IoT), and artificial intelligence (AI). Wearable technology can use edge computing to move certain processing tasks from the cloud to the edge of the network. This lowers latency and enables real-time analysis, which is especially useful for time-sensitive applications such as activity tracking, health monitoring, and sports performance evaluation.

Combining edge computing with other technologies, such as blockchain, helps ensure data security and integrity. Integration with the IoT enables simultaneous data exchange and smooth interaction between wearables and other smart devices in the environment. AI and cloud computing are also essential for sophisticated analytics, data storage, and the extraction of insights from the massive amounts of data that wearables gather. Edge computing, the influence of the COVID-19 pandemic, and the interconnection of wearable devices all help drive innovation in wearable technology. Growing awareness of personal health and well-being is increasing the demand for wearable technology, and developments in edge computing and related fields will reinforce this trend. The goals of this study are real-time data processing, data management, data quality, and communication. The cloud receives accurate, clean data so that it can be used to make better-informed decisions [14]. The research in [15] focuses on the accuracy and precision of wearable technology for real-time athlete monitoring during swimming. Its authors examine several wearable devices attached to different parts of the swimmer, such as body suits, caps, and the body itself.

One wearable technology included in that research is an optical sensor attached to the swimmer’s cap. It calculates several swimming-related metrics, including the swimmer’s speed and stroke count. The swimmer’s heartbeat is detected using optical sensors and smartwatches, and chest-worn sensors are also used for this purpose. The results show that keeping the sensors in direct contact with the skin is the best way to minimize artifacts and obtain more accurate readings. A significant challenge for underwater ECG recording is the loss of skin contact in the water. The research therefore highlights the need to gather data in the same environment in which the swimmer performs, and researchers must develop sensors that can gather data consistently while immersed in water. The research also emphasizes the importance of reducing noise interference from other sources, which can compromise the accuracy of the captured data. Cloud computing is distributed, so it offers a strong answer to the computation- and data-intensive needs of artificial intelligence (AI). AI now relies heavily on the cloud for large-scale processing and scalable storage. Machine learning, which extracts information from enormous datasets, is often regarded as the root of modern AI. Deep learning, a more sophisticated branch of machine learning, enables more complex analysis, including intricate data processing and knowledge extraction [16]. Integrating AI with cloud computing has both benefits and drawbacks.

Cloud services have access to vast amounts of data, yet some AI models may need only a portion of it. On the other hand, information flows smoothly as neural networks grow over time and models share knowledge to overcome obstacles. This ongoing learning process makes AI and cloud computing work together more harmoniously, improving productivity and efficiency [17]. Swimming could benefit greatly from using cloud computing and AI to their full potential. Improving swimming movement skills is one of the main areas where this integration could have a revolutionary effect. Cloud-based resources can efficiently store and analyze widely dispersed data from wearables, underwater sensors, and other sources. This data can then be fed into AI algorithms and neural networks to assess swimmers’ stroke mechanics, overall performance, and movement patterns and to derive important insights about them.

The cloud’s scalable storage makes it possible to accumulate enormous volumes of data over time, so AI models can be trained continually and improve. Pooling what several models have learned can offset the limitations of individual algorithms and make evaluations of swimmers’ movement patterns more thorough and precise [18]. The cloud’s processing power lets AI algorithms analyze this data quickly, so coaches and swimmers can receive immediate feedback and tailored suggestions. Together, artificial intelligence and cloud computing support personalized training plans and efficiency optimization while advancing our understanding of swimmers’ movement techniques. Coaches can use AI-powered statistics to pinpoint each swimmer’s areas for growth and customize training routines accordingly. The cloud’s continual learning process lets the AI models evolve alongside the swimmers, adapting to their individual needs and changing tactics.

4 Investigative Techniques

4.1 The Structure

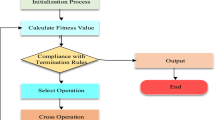

The first step in putting the fundamental framework into practice is the wearable technology, in particular a gyroscope, a muscle movement sensor, and a heartbeat sensor. Data sampling is performed at the edge level to obtain correct findings and minimize erroneous readings. Reducing the possibility of noise interference in this way helps ensure the authenticity and dependability of the data gathered from the wearable devices [19, 20]. The edge device acts as a receiver: it gathers data from the wearable devices and sends it in bulk to the cloud computing infrastructure. The data is then processed and stored in the cloud and analyzed with a profile model based on mobility and health databases. Figure 2 shows the overall structure. This framework is used to automate the creation of swimmers’ profiles. A swimmer’s profile incorporates their motion style, heart rate, and muscular motions [17]. As mentioned previously, the figure illustrating the AI implementation shows how cloud computing is applied to the dataset. The gyroscope is essential for determining the athlete’s motion and angle. Artificial intelligence divides the movements into five groups: swimming, turning, block, diving, and underwater [21]. This categorization is performed with the unsupervised k-means algorithm.

A deep neural network (DNN) is a type of artificial neural network with multiple layers between the input and output layers [22, 23]. In blockchain networks, the stored data includes transaction information and other vital records. Storage sustainability is crucial because these networks generate increasing amounts of data over time, and maintaining this data can be resource-intensive [24, 25]. In clustered networks, a central control unit, sometimes called a base station or sink node, oversees network operations and manages clusters [26, 27]. In communication networks, clustering helps to organize the network and optimize resource allocation [28, 29]. In control systems, the gain determines how strongly the system responds to changes or errors in the desired movement [30, 31].

The k-means algorithm makes it easier to categorize movements into these predetermined groups.

Edge computing plays a crucial role in the swimming-pool setting. Even while submerged, athletes remain continuously connected to supporting computers. The wearable devices stay in continuous contact with the swimmer’s skin, so data are captured immediately and stored on storage devices linked to the edge computer. This technique minimizes artifacts and noise while preserving the integrity of the signals. Moreover, edge computing devices combine high processing power with low power consumption. Edge computing also gives the cloud a clean and dependable dataset, which helps ensure that data are sent efficiently and saves time and money.
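The edge-to-cloud hand-off described above can be sketched as follows: samples are buffered locally and then sent as a clean batch. This is a minimal Python illustration, not the authors’ implementation. The `EdgeBuffer` class, the `(timestamp, sensor, value)` record layout, and the `upload` callback are all hypothetical. A real deployment would add error handling and retries and would use a network client in place of the callback.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# (timestamp in seconds, sensor name, reading): a hypothetical record layout.
Sample = Tuple[float, str, float]


@dataclass
class EdgeBuffer:
    """Collects sensor samples on the edge device and forwards them in bulk."""
    batch_size: int
    upload: Callable[[List[Sample]], None]  # hypothetical hook that sends one batch to the cloud
    pending: List[Sample] = field(default_factory=list)

    def add(self, sample: Sample) -> None:
        """Buffer one sample and flush automatically once a full batch is ready."""
        self.pending.append(sample)
        if len(self.pending) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        """Send whatever is buffered as one batch, then start a fresh buffer."""
        if self.pending:
            self.upload(self.pending)
            self.pending = []
```

Batching trades a little latency for fewer transmissions. In practice, `batch_size` would be tuned to the link and to the sensors’ sampling rates.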

4.2 Data Flow

As shown in Fig. 2, the flow diagram divides the comprehensive system into three steps: data capture, data pre-processing, and the AI algorithm. Several sensors attached to the swimmer’s body collect the required data, including gyroscopes, pulse sensors, and muscle contraction sensors. These wearable devices are securely attached to the swimmer’s body so that the data gathered are accurate and reliable. The gyroscope sensor provides vital movement-related data. It measures angular velocity, from which the swimmer’s orientation can be derived and, together with the other sensors, the swimmer’s path through the water can be estimated. The gyroscope records the swimmer’s precise rotational movements, which allows a full assessment of the swimmer’s style and effectiveness. The heartbeat sensor, in contrast, continuously monitors the swimmer’s heart rate during exercise or swimming. This information is needed to assess the swimmer’s heart rate and exertion levels. By regularly monitoring swimmers’ heart rates, trainers and instructors can modify training regimens to optimize efficiency and avoid undue exertion.
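The sketch below gives a rough illustration of how an orientation angle can be derived from gyroscope output. It integrates single-axis angular velocity with the trapezoidal rule. It assumes samples in degrees per second taken at a fixed interval, and the function name is hypothetical. Integration turns any gyroscope bias into drift, so practical systems usually fuse gyroscope data with accelerometer readings, and often with magnetometer readings as well.

```python
from typing import List, Sequence


def integrate_gyro(omega_dps: Sequence[float], dt_s: float,
                   theta0_deg: float = 0.0) -> List[float]:
    """Single-axis angle estimate (degrees) from angular-velocity samples (deg/s)
    spaced dt_s seconds apart, using the trapezoidal rule."""
    angles = [theta0_deg]
    for prev, curr in zip(omega_dps, omega_dps[1:]):
        # Area of the trapezoid under omega(t) between consecutive samples.
        angles.append(angles[-1] + 0.5 * (prev + curr) * dt_s)
    return angles
```

For example, a constant 90 deg/s held for one second (101 samples at 10 ms) integrates to a 90 degree rotation.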

The muscle motion sensor also measures and records the swimmer’s repeated muscle contractions throughout strokes and movements. Analyzing this data provides important information about the swimmer’s muscle engagement and coordination, which shows where technique and overall effectiveness in the water can be developed. Combining these sensors produces a large, dynamic dataset that captures several facets of the swimmer’s performance. The two further stages of the system, data pre-processing and the AI process, are built on this data. The ultimate goal of this comprehensive monitoring system is to record crucial data that provides significant insight into the swimmer’s technical proficiency and physiological responses.

The swimmer’s pulse is recorded from the start of the swim to analyze the swimmer’s cardiovascular response throughout the exercise. By evaluating the swimmer’s effort and endurance, trainers and coaches can tailor training regimens and maximize performance. At the same time, the apparatus records the swimmer’s muscular actions and analyzes in detail the coordination and synchronization of the muscles used in different strokes. This information supports a detailed analysis of the swimmer’s technique to find flaws or potential improvements. The device also captures how the swimmer moves their body segments, concentrating on body alignment and synchronization during swimming. This element provides details on the swimmer’s body alignment and movement through the water, which can help reduce drag and improve the swimmer’s speed and efficiency.
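One common way to turn the pulse or ECG stream into heart-rate values is to convert the interval between successive R-wave detections into beats per minute, that is, 60 divided by the R-R interval in seconds. The sketch assumes that the R-peak sample indices have already been detected. `heart_rate_bpm` is a hypothetical helper, not part of the described system.

```python
from typing import List, Sequence


def heart_rate_bpm(r_peaks: Sequence[int], fs_hz: float) -> List[float]:
    """Instantaneous heart rate (beats per minute) from the sample indices of
    successive detected R-waves, given the sampling frequency fs_hz."""
    rates = []
    for a, b in zip(r_peaks, r_peaks[1:]):
        rr_s = (b - a) / fs_hz  # R-R interval in seconds
        if rr_s <= 0:
            raise ValueError("R-peak indices must be strictly increasing")
        rates.append(60.0 / rr_s)
    return rates
```

At a 250 Hz sampling rate, R-peaks 125 samples apart (0.5 s) correspond to 120 beats per minute.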

Combining these data points produces a thorough picture of the swimmer’s technical and physical characteristics at every performance level. With this detailed recording approach, coaches and trainers can analyze and improve the swimmer’s training methods, stroke mechanics, and overall performance, with the aim of achieving the best outcomes.
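A hypothetical container that combines the three data streams into a per-swimmer profile might look like the following. The field names, the normalized muscle-activity scale, and the summary statistics are illustrative assumptions, because the paper does not specify its profile schema.

```python
from collections import Counter
from dataclasses import dataclass, field
from statistics import mean
from typing import List


@dataclass
class SwimmerProfile:
    """Hypothetical per-swimmer record combining heart rate, muscle activity,
    and movement-category labels."""
    swimmer_id: str
    heart_rates_bpm: List[float] = field(default_factory=list)
    muscle_activity: List[float] = field(default_factory=list)  # assumed normalized to 0..1
    motion_labels: List[str] = field(default_factory=list)      # e.g. "swimming", "turning"

    def summary(self) -> dict:
        """Aggregate statistics; None where a stream has no data yet."""
        return {
            "swimmer_id": self.swimmer_id,
            "mean_hr_bpm": mean(self.heart_rates_bpm) if self.heart_rates_bpm else None,
            "peak_hr_bpm": max(self.heart_rates_bpm, default=None),
            "mean_muscle_activity": mean(self.muscle_activity) if self.muscle_activity else None,
            "motion_counts": dict(Counter(self.motion_labels)),
        }
```

Keeping the raw streams and computing summaries on demand lets the same record feed both coach-facing statistics and later model training.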

4.3 Computation at the Edge Level

After the initial data gathering, the process moves on to edge-level data cleaning and processing. Edge computing is split into two distinct phases, each with its own function. In the first phase, the collected signals are sampled appropriately to avoid spurious motion readings or pulses. This sampling procedure ensures that the data correctly represent the swimmer’s movements and heartbeats, reducing the possibility of errors or incorrect readings. In the second phase, the data are sorted and processed with the k-means method, which divides the data into discrete groups so that a well-organized analysis can be performed. Identifying trends and patterns in the data and assigning the appropriate labels or annotations can support the examination of the swimmer’s efficiency. The sampling rate is chosen on the basis of the maximum allowable error, which optimizes the sampling procedure.

This error, denoted \(\varepsilon \left(e\right)\), is the difference between the final output of the algorithm at a given time \({e}\) and the signal received from the sensor. Closely regulating the sampling rate and reducing the error allow the data to be collected and analyzed reliably, with high-quality results. Temporal continuity is also preserved when the sampling rate (Ts) is increased while the input remains constant. Fig. 3 illustrates this temporal continuity, showing the link between the sampling rate and the smooth representation of time throughout data processing. The error can be computed using Eq. 1.

In Eq. 1, \(\varepsilon \left(e\right)\) denotes the error and \({e}\) denotes the particular time instant.
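The sketch below makes the error \(\varepsilon(e)\) concrete under one possible reading of it. It assumes that the algorithm output at time e is the most recent sample, held until the next one arrives (zero-order hold), and that ε(e) is the difference between that held value and the true signal. The function measures the worst-case error on a fine time grid for a given sampling rate. The function `max_hold_error` and the choice of zero-order hold are assumptions and are not the paper’s Eq. 1.

```python
import math
from typing import Callable


def max_hold_error(signal: Callable[[float], float], fs_hz: float,
                   duration_s: float, fine_steps: int = 10_000) -> float:
    """Worst |epsilon(e)| over [0, duration_s] when the signal is sampled at fs_hz
    and each sample is held until the next one (zero-order hold)."""
    ts = 1.0 / fs_hz                             # sampling interval
    worst = 0.0
    for k in range(fine_steps + 1):
        e = duration_s * k / fine_steps          # evaluation time on a fine grid
        held = signal(math.floor(e / ts) * ts)   # latest sample taken at or before e
        worst = max(worst, abs(held - signal(e)))
    return worst
```

For a 5 Hz unit sinusoid, raising the sampling rate from 50 Hz to 500 Hz shrinks the worst-case error roughly tenfold. In each case the error stays below 2π × 5 × (1/f_s).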

In signal processing, the Nyquist criterion plays a major role in determining the sampling rate needed for accurate signal reconstruction. It states that the sampling rate must exceed twice the highest frequency component of the input signal. Satisfying the Nyquist criterion ensures that the reconstructed signal accurately represents the original input. However, some systems or devices may not be able to process signals in accordance with the Nyquist criterion, and in such circumstances edge computation proves to be a useful substitute. Edge computing carries out computational operations directly on edge sensors or devices located close to the data source. These edge devices have the computing capacity needed for local signal processing, so edge computing can overcome the limitations of devices that cannot handle signals according to the Nyquist criterion. It enables real-time analysis and processing of the input signal, which removes the need for conventional techniques that require a higher sampling rate and still delivers prompt and accurate results. Edge computing therefore offers a workable alternative for devices that cannot satisfy the Nyquist criterion on their own. Moving computation closer to the data source keeps signal processing dependable and efficient even when the Nyquist condition cannot be satisfied.
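The first step of the Nyquist approach is to find the highest significant frequency and the corresponding minimum sampling rate. A plain discrete Fourier transform is enough to sketch it. This illustrative Python sketch makes two assumptions. First, a "significant" component is any bin with at least 5% of the magnitude of the strongest non-DC bin, which is an arbitrary threshold. Second, a naive O(N²) DFT stands in for an FFT library. The function can only detect components below half the rate at which the window was captured, so the input must already be adequately sampled.

```python
import cmath
import math
from typing import Sequence


def highest_frequency_hz(x: Sequence[float], fs_hz: float,
                         rel_threshold: float = 0.05) -> float:
    """Frequency of the highest DFT bin whose magnitude is at least rel_threshold
    times the strongest non-DC bin. Naive O(N^2) DFT, for short windows only."""
    n = len(x)
    if n < 2:
        raise ValueError("need at least two samples")
    mags = [abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
            for k in range(n // 2 + 1)]      # bins from 0 Hz up to fs/2
    peak = max(mags[1:])                     # skip the DC bin
    top = max(k for k in range(1, len(mags)) if mags[k] >= rel_threshold * peak)
    return top * fs_hz / n                   # bin index -> Hz


def nyquist_rate_hz(x: Sequence[float], fs_hz: float) -> float:
    """Minimum sampling rate, 2 * f_max, implied by the Nyquist criterion."""
    return 2.0 * highest_frequency_hz(x, fs_hz)
```

Consider a 1 s window sampled at 200 Hz that contains 3 Hz and 20 Hz sinusoids. The sketch estimates f_max as 20 Hz, so the implied minimum rate is 40 Hz.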

When edge computing is applied to the Nyquist criterion, the lowest sampling rate, denoted \({f}_{min}\), is determined by the highest frequency component of the system. This highest component is denoted \(\mathcal{G}\), and the corresponding angular frequency is \(2\pi \mathcal{G}\).

In the equation above, n denotes the number of bits handled by the edge computer. The greatest rate of change of \(\mathcalligra{f}\left({e}\right)\), denoted \(\mathcal{F}\), occurs where the derivative of \(\mathcalligra{f}\left({e}\right)\) is maximized. The value of \(\mathcal{F}\) can therefore be determined using Eq. 3.
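Eqs. 2 and 3 are not reproduced in this version, so the block below is a worked example rather than a reconstruction. It writes out only the standard relations that are consistent with the surrounding definitions:

- the Nyquist rate in terms of \(\mathcal{G}\);
- the maximum rate of change \(\mathcal{F}\);
- the value of \(\mathcal{F}\) for a single sinusoid of assumed amplitude \(A\);
- the hold-error bound linking \(\mathcal{F}\) to a maximum allowable error \(\varepsilon_{max}\) and the sampling interval \(T_s = 1/f_s\).

The symbols \(A\), \(\omega_{\mathcal{G}}\), \(\varepsilon_{max}\), and \(T_s\) are introduced here, and the bit count n is not modeled.

```latex
% (a) Nyquist lower bound on the sampling rate (sampling must strictly exceed it)
f_{min} = 2\mathcal{G}, \qquad \omega_{\mathcal{G}} = 2\pi\mathcal{G}

% (b) greatest rate of change of the sensor signal
\mathcal{F} = \max_{e}\left|\frac{d\,\mathcalligra{f}(e)}{de}\right|

% (c) worked case: one sinusoid of amplitude A at the highest frequency
\mathcalligra{f}(e) = A\sin(2\pi\mathcal{G}e)
\;\Rightarrow\; \frac{d\,\mathcalligra{f}(e)}{de} = 2\pi\mathcal{G}A\cos(2\pi\mathcal{G}e)
\;\Rightarrow\; \mathcal{F} = 2\pi\mathcal{G}A

% (d) zero-order-hold error between samples T_s apart (mean value theorem)
|\varepsilon(e)| \le \mathcal{F}\,T_s
\;\Rightarrow\; T_s \le \frac{\varepsilon_{max}}{\mathcal{F}}

% numeric example: \mathcal{G} = 5 Hz, A = 1, \varepsilon_{max} = 0.1
\mathcal{F} = 10\pi\ \mathrm{s^{-1}}, \qquad
T_s \le \frac{1}{100\pi}\ \mathrm{s} \approx 3.18\ \mathrm{ms}
```

In this example, the error criterion (about 314 Hz) demands a far higher rate than the Nyquist rate of 10 Hz. The reason is that held samples are compared with the signal between sampling instants rather than reconstructed by ideal interpolation.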

Denoising the cardiac signal is another challenge, because swimming introduces substantial interference into the signal.

A windowed analysis is used to remove interference from the cardiac signal. The window is defined once the locations of the R-waves and the dominant QRS scale have been determined. According to Eq. 4, the window length is an integer function of the dominant QRS scale, the QRS complex itself, and the position of each detected R-wave.

In the equation above, \(f\) represents the sampling frequency and the sample index varies according to Eq. 5. \({\mathcal{Q}}_{\mathcalligra{j}}\) is the \({\mathcalligra{j}}\)th detected \(\mathcal{H}\)-wave (R-wave), and \(\beta\) is one of the equation’s constant parameters.

With the window defined in this way, its size varies from 1 up to a maximum of \(\gamma .\gamma \mathcal{P}\mathcal{H}\mathcal{V}\), which is reached between each pair of consecutive R-wave detections.

The denoising window then slides along the noisy signal to reduce the noise, and at each position the mean of the samples in the window, centred on the current sample, is taken as the denoised value. Treating the ECG signal as a discrete-time function \(\mathcalligra{x}(\mathcalligra{y})\), where \(\mathcalligra{y}\) is the sample index, the denoised value of \(\mathcalligra{x}(\mathcalligra{y})\) at the \({\mathcalligra{y}}\)th sample is obtained using Eq. 6.
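Since Eqs. 4 to 6 are not reproduced in this section, the following is only a minimal sketch of the idea they describe, under stated assumptions. The window is centred on each sample. Its integer half-width is 0 at a detected R-wave, which gives a one-sample window, and it grows with the distance to the nearest R-wave up to a cap. The denoised value is the window mean. The constants `gamma` and `max_half` and the linear growth rule are hypothetical stand-ins for the paper's parameters.

```python
import numpy as np

def window_half_widths(n_samples: int, r_peaks, gamma: float = 0.5, max_half: int = 20) -> np.ndarray:
    """Hypothetical integer half-width per sample.

    0 at each R-peak (one-sample window), growing as gamma * distance to the
    nearest R-peak and capped at max_half, so it is largest between beats.
    """
    idx = np.arange(n_samples)
    peaks = np.asarray(r_peaks)
    dist = np.min(np.abs(idx[:, None] - peaks[None, :]), axis=1)  # distance to nearest R-peak
    return np.minimum(np.floor(gamma * dist).astype(int), max_half)

def adaptive_mean_denoise(x, r_peaks, gamma: float = 0.5, max_half: int = 20) -> np.ndarray:
    """Replace each sample by the mean of a window centred on it (clipped at the signal edges)."""
    x = np.asarray(x, dtype=float)
    half = window_half_widths(len(x), r_peaks, gamma, max_half)
    out = np.empty_like(x)
    for n, h in enumerate(half):
        lo, hi = max(0, n - h), min(len(x), n + h + 1)
        out[n] = x[lo:hi].mean()
    return out
```

With `gamma` below 1 the half-width is always smaller than the distance to the nearest R-wave. The averaging window therefore never reaches across an R-peak, so the sharp QRS complex is not smeared, while the flatter segments between beats are smoothed more heavily.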

4.4 Pre-processing Algorithms

Algorithm 1 carries out signal processing on the Raspberry Pi using the Nyquist method. First, an analog-to-digital converter (ADC) is attached to the Raspberry Pi to capture the sensor signals. Once a signal has been acquired, its highest frequency component is identified and the Nyquist frequency (half the sampling frequency) is computed. A digital low-pass filter is then applied to remove noise above the highest frequency component of interest, which also prevents aliasing in the next step. Finally, the signal is decimated, lowering the sample rate to twice the highest frequency component (the Nyquist rate) and reducing the computational load on later processing steps. On the Raspberry Pi, this procedure provides efficient signal processing and noise reduction.
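The steps above can be sketched in NumPy as follows. This is an illustration under assumptions, not the code run on the Raspberry Pi. The highest frequency component \(\mathcal{G}\) is taken to be the highest FFT bin whose magnitude reaches 1% of the spectral peak, an arbitrary threshold. The low-pass filter is an ideal FFT brick-wall filter rather than a practical FIR or IIR design. Decimation keeps every q-th sample, using the largest integer q that keeps the new rate at or above \(2\mathcal{G}\). The ADC acquisition step is not modelled, and all function names are hypothetical.

```python
import numpy as np

def highest_significant_freq(x, fs, rel_threshold=0.01):
    """Highest frequency (Hz) whose spectral magnitude reaches rel_threshold * peak."""
    x = np.asarray(x, dtype=float)
    spectrum = np.abs(np.fft.rfft(x - np.mean(x)))     # remove DC before searching
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    significant = np.nonzero(spectrum >= rel_threshold * spectrum.max())[0]
    return float(freqs[significant[-1]])

def fft_lowpass(x, fs, cutoff):
    """Ideal (brick-wall) low-pass filter: zero every FFT bin above cutoff Hz."""
    X = np.fft.rfft(np.asarray(x, dtype=float))
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    X[freqs > cutoff] = 0.0
    return np.fft.irfft(X, n=len(x))

def nyquist_decimate(x, fs, rel_threshold=0.01):
    """Find G, low-pass below the new Nyquist frequency, then keep every q-th sample."""
    f_max = highest_significant_freq(x, fs, rel_threshold)
    q = max(1, int(fs // (2.0 * f_max)))                # largest q with fs / q >= 2 * G
    new_fs = fs / q
    filtered = fft_lowpass(x, fs, cutoff=new_fs / 2.0)  # anti-aliasing before decimation
    return filtered[::q], new_fs, f_max
```

For a test signal containing 5 Hz and 12 Hz components sampled at 1 kHz for one second, the sketch finds \(\mathcal{G}=12\) Hz, chooses q = 41, and returns 25 samples at about 24.4 Hz.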

As described by the equation above, once the edge computer has sampled and received the data in real time, the data must be categorized and labelled for further processing. The ANN algorithm is as follows:

Note: The pseudocode provides an overall outline of the Artificial Neural Network (ANN) algorithm. Depending on the details of the implementation, further functions or operations may be needed to compute new centres, assign points to cluster centres, calculate distances, and establish convergence criteria.
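The note lists the pieces a K-means implementation needs: initial centres, distance calculation, assignment to the nearest centre, recomputation of centres, and a convergence test. A minimal NumPy sketch of standard Lloyd's K-means with those pieces is shown below. The random initialization, tolerance, and function name are illustrative choices, not details from the paper.

```python
import numpy as np

def kmeans(points, k, n_iter=100, tol=1e-6, seed=0):
    """Lloyd's K-means: return (centres, labels) for an (n_points, n_features) array."""
    points = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    # initialise the centres with k distinct data points chosen at random
    centres = points[rng.choice(len(points), size=k, replace=False)]

    def assign(c):
        # Euclidean distance from every point to every centre; pick the nearest centre
        dists = np.linalg.norm(points[:, None, :] - c[None, :, :], axis=2)
        return np.argmin(dists, axis=1)

    for _ in range(n_iter):
        labels = assign(centres)
        # new centre = mean of the points assigned to it (keep the old centre if empty)
        new_centres = np.array([
            points[labels == j].mean(axis=0) if np.any(labels == j) else centres[j]
            for j in range(k)
        ])
        shift = np.linalg.norm(new_centres - centres)
        centres = new_centres
        if shift < tol:  # convergence: the centres have stopped moving
            break
    return centres, assign(centres)
```

On two well-separated groups of three points each, `kmeans(points, k=2)` places one centre at the mean of each group.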

We used the K-means method to categorize the five data points in our dataset into \(\mathcal{S}\) clusters. Because K-means is an unsupervised learning approach, it can categorize unlabelled data without predetermined annotations. In our solution, a Raspberry Pi serves as the edge computing device. After the data have been sampled and categorized on the edge device, they are sent to the cloud for further intelligent processing. Bulk transfer over Wi-Fi carries the data from the edge device to the cloud efficiently. Each transmission covers one complete swimming cycle: the data are sent to the cloud computing infrastructure as soon as the swimmer completes a cycle. In the cloud, convolutional neural networks (CNNs), a class of deep learning algorithms, are used to assess and learn from the athletes’ swimming motions. CNNs work well with complex data such as images or time series, and their implementation usually calls for specialized hardware such as GPUs and substantial computing power. Using the large-scale processing capabilities of cloud computing, the CNN algorithm can efficiently evaluate the transmitted data and derive meaningful insights into the swimmer’s movements. Figure 4 shows the general procedure and operation of the CNN algorithm in the cloud computing environment, highlighting the layers and processes involved in processing the swimmer’s data.

The two-dimensional convolution of two matrices \(\mathcalligra{g}\) and \(f\) can be calculated as

\[\mathcal{H}\left[\mathcalligra{k},\mathcalligra{t}\right]=\left(f*\mathcalligra{g}\right)\left[\mathcalligra{k},\mathcalligra{t}\right]=\sum_{\mathcalligra{i}}\sum_{\mathcalligra{m}}\mathcalligra{g}\left[\mathcalligra{i},\mathcalligra{m}\right]f\left[\mathcalligra{k}-\mathcalligra{i},\mathcalligra{t}-\mathcalligra{m}\right]\]

Here \(\mathcal{H}\left[\mathcalligra{k},\mathcalligra{t}\right]\) is the value of the output matrix at position (\(\mathcalligra{k},\mathcalligra{t}\)), that is, the result of convolving \(\mathcalligra{g}\) and \(f\) at (\(\mathcalligra{k},\mathcalligra{t}\)); \(\left(f*\mathcalligra{g}\right)\left[\mathcalligra{k},\mathcalligra{t}\right]\) is an equivalent notation for the same convolution. \(\mathcalligra{g}\left[\mathcalligra{i},\mathcalligra{m}\right]\) is the element of \(\mathcalligra{g}\) at coordinates (\(\mathcalligra{i},\mathcalligra{m}\)), and \(f\left[\mathcalligra{k}-\mathcalligra{i},\mathcalligra{t}-\mathcalligra{m}\right]\) is the element of \(f\) at coordinates (\(\mathcalligra{k}-\mathcalligra{i},\mathcalligra{t}-\mathcalligra{m}\)). To obtain the output value at point (\(\mathcalligra{k},\mathcalligra{t}\)), each element of \(\mathcalligra{g}\) is multiplied by the corresponding element of \(f\), and the double sum over \(\mathcalligra{i}\) and \(\mathcalligra{m}\) adds these products over all valid positions of \(\mathcalligra{g}\) within \(f\). The indices \(\mathcalligra{i}\) and \(\mathcalligra{m}\), which range over the dimensions of \(\mathcalligra{g}\), give the relative positions of the elements of \(\mathcalligra{g}\) within \(f\). Together, these definitions describe a basic signal and image processing operation that is used to filter two-dimensional input and extract features from it.
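The double sum can be checked directly with a small loop-based implementation. The sketch below computes the full convolution, summing only over index pairs where \(f[k-i, t-m]\) lies inside \(f\). The function name is hypothetical, and the loops favour readability over speed.

```python
import numpy as np

def conv2d_full(f, g):
    """Full 2-D convolution H[k, t] = sum_i sum_m g[i, m] * f[k - i, t - m]."""
    f = np.asarray(f, dtype=float)
    g = np.asarray(g, dtype=float)
    out_rows = f.shape[0] + g.shape[0] - 1
    out_cols = f.shape[1] + g.shape[1] - 1
    H = np.zeros((out_rows, out_cols))
    for k in range(out_rows):
        for t in range(out_cols):
            total = 0.0
            for i in range(g.shape[0]):
                for m in range(g.shape[1]):
                    r, c = k - i, t - m
                    if 0 <= r < f.shape[0] and 0 <= c < f.shape[1]:  # only valid overlaps
                        total += g[i, m] * f[r, c]
            H[k, t] = total
    return H
```

For example, convolving `[[1, 2], [3, 4]]` with the kernel `[[1, 1]]` gives `[[1, 3, 2], [3, 7, 4]]`. Deep learning libraries such as PyTorch implement their convolution layers as cross-correlation, that is, without flipping the kernel. Because the kernel weights are learned, this difference does not limit what a CNN can represent.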

Algorithm 3, the Hybrid Artificial Neural Network for Swimmer Motion Clustering, is designed to classify and cluster swimmer movements based on the collected data. The software first gathers and prepares the swimmer’s movement data. An unsupervised artificial neural network (ANN), a self-organizing map (SOM), is then trained to group similar movements without the need for labelled data. Next, a supervised ANN, such as a multilayer perceptron (MLP), is trained on representative samples from each cluster to classify swimmer movements and assign them to distinct classes corresponding to different swimming styles, and its performance is assessed on a validation dataset. Finally, the hybrid ANN is applied in real time to cluster swimmer movements, combining the outputs of the supervised and unsupervised ANNs to achieve accurate and efficient classification.
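Only the unsupervised stage of Algorithm 3 is sketched here: a minimal one-dimensional self-organizing map that groups similar movement feature vectors without labels. The lattice size, the learning-rate and neighbourhood schedules, and the function names are assumptions for illustration. The supervised MLP stage, which would be trained on representative samples from each cluster, is omitted.

```python
import numpy as np

def train_som(data, n_nodes=4, n_epochs=50, lr0=0.5, sigma0=1.0, seed=0):
    """Train a minimal 1-D self-organizing map and return its node weight vectors."""
    data = np.asarray(data, dtype=float)
    rng = np.random.default_rng(seed)
    weights = data[rng.choice(len(data), size=n_nodes, replace=False)].copy()
    grid = np.arange(n_nodes)                     # node positions on a 1-D lattice
    total_steps = n_epochs * len(data)
    step = 0
    for _ in range(n_epochs):
        for x in data[rng.permutation(len(data))]:
            frac = step / total_steps
            lr = lr0 * (1.0 - frac)                     # learning rate decays linearly
            sigma = max(sigma0 * (1.0 - frac), 1e-3)    # neighbourhood radius shrinks
            bmu = int(np.argmin(np.linalg.norm(weights - x, axis=1)))  # best-matching unit
            h = np.exp(-((grid - bmu) ** 2) / (2.0 * sigma ** 2))      # Gaussian neighbourhood
            weights += lr * h[:, None] * (x - weights)  # pull BMU and neighbours toward x
            step += 1
    return weights

def som_clusters(data, weights):
    """Cluster label of each sample = index of its best-matching SOM node."""
    data = np.asarray(data, dtype=float)
    dists = np.linalg.norm(data[:, None, :] - weights[None, :, :], axis=2)
    return np.argmin(dists, axis=1)
```

With two nodes and two clearly separated groups of feature vectors, each group ends up mapped to its own node. In practice the number of nodes would have to be chosen to suit the movement groups expected in the data.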

We propose a hybrid strategy in which features are extracted by the layers of a first model and passed to a second model for modelling and learning. Researchers have turned to 1D CNNs because of their ability to extract spatial and discriminative features from data, while LSTM networks have proven effective with sequential and time-series data. Combining the two, we use a 1D CNN to extract features, which are then passed to an LSTM for modelling and learning. The two 1D CNN layers differ in their number of filters: the first layer has 64 filters and the second has 128. Both layers use a kernel size of 3 and the ReLU activation function, and they are followed by a max pooling layer with a pool size of 2. The resulting features then pass through two LSTM layers of 64 units each, followed by a flatten layer and a dense layer with a softmax activation function. The model is trained with the Adam optimizer at a learning rate of 0.0001. Figure 4 depicts the main structure of the proposed model.
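To make the layer description concrete without depending on a deep learning framework, the sketch below traces tensor shapes and trainable-parameter counts through the described stack, using the standard formulas for Keras-style Conv1D, LSTM, and Dense layers with biases. Several inputs are assumptions, not values reported in the paper: an input window of 128 time steps with 6 sensor channels, 'valid' padding, both LSTM layers returning full sequences (so the flatten layer has a sequence to flatten), and 4 output classes, one per stroke described later.

```python
def conv1d(shape, filters, kernel):
    """'valid' Conv1D: length shrinks by kernel - 1; params = kernel * C_in * filters + biases."""
    length, channels = shape
    return (length - kernel + 1, filters), kernel * channels * filters + filters

def max_pool1d(shape, pool):
    length, channels = shape
    return (length // pool, channels), 0

def lstm(shape, units):
    """LSTM returning the full sequence: 4 gates, each with input, recurrent and bias weights."""
    length, features = shape
    return (length, units), 4 * (units * (features + units) + units)

def flatten(shape):
    length, channels = shape
    return (length * channels,), 0

def dense(shape, units):
    (features,) = shape
    return (units,), features * units + units

def cnn_lstm_summary(window=128, channels=6, n_classes=4):
    """Return (layer name, output shape, parameter count) for each layer of the stack."""
    shape = (window, channels)
    rows = []
    for name, layer in [
        ("conv1d_64_k3", lambda s: conv1d(s, 64, 3)),
        ("conv1d_128_k3", lambda s: conv1d(s, 128, 3)),
        ("maxpool_2", lambda s: max_pool1d(s, 2)),
        ("lstm_64_a", lambda s: lstm(s, 64)),
        ("lstm_64_b", lambda s: lstm(s, 64)),
        ("flatten", flatten),
        ("dense_softmax", lambda s: dense(s, n_classes)),
    ]:
        shape, params = layer(shape)
        rows.append((name, shape, params))
    return rows

if __name__ == "__main__":
    rows = cnn_lstm_summary()
    for name, shape, params in rows:
        print(f"{name:15s} {str(shape):12s} {params:>8d}")
    print("total params:", sum(p for _, _, p in rows))
```

Under these assumptions the model has 124,228 trainable parameters, about 40% of them in the first LSTM layer.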

While athletes are engaged in physical activity, small sensors integrated with smart tools and ubiquitous computing devices gather various forms of data. These sensors allow data to be collected in real time and from any location, producing an extensive dataset that records the full spectrum of the athletes’ motions and physiological responses.

Edge and cloud computing are used to implement advanced data processing techniques. Edge computing makes it possible to process data instantly at the source, which is essential for giving coaches and athletes real-time feedback. Cloud computing provides the processing capacity required for more intricate analyses, including deep learning procedures that examine large datasets to identify important patterns and insights.

5 Result and Discussion

The method for analyzing and categorizing swimmers’ motions is divided into three primary phases. In the first phase, the signal is sampled and denoised to ensure reliable results: the signals from the muscle movement sensors and the gyroscope are recorded, and noise and other artifacts that reduce data quality are removed. The second phase annotates and organizes the data. The pre-processed signals are evaluated and labels are assigned to different movements, such as butterfly, breaststroke, and freestyle; any residual noise or unwanted data is also filtered out to improve the accuracy of the subsequent analysis. The last phase classifies the swimmers’ movements using an AI-based classification system. A deep learning algorithm is trained on the labelled and cleaned data and can then recognize new movements from the features and patterns it has extracted. By dividing the process into these three stages (sampling and denoising, cleaning and annotation, and AI-based classification), the method provides a thorough and organized way to evaluate and categorize swimmers’ movements accurately.

5.1 Sampling of Data

In edge computing, the Nyquist criterion was used to ensure accurate data sampling. According to the criterion, the sample rate must be at least twice the highest frequency component present in the data. Following this rule, the edge computer obtains enough samples to represent the original signal accurately. The gyroscope data were recorded reliably when sampling was performed at the edge level: the edge-level sampling approach captured the gyroscope measurements more accurately and efficiently, producing a more faithful depiction of the actual motion. By combining edge computing with the Nyquist criterion, the method ensured that the sampled gyroscope data gave a thorough and trustworthy representation of the original signal.

5.2 Applying Sampled Data to Heartbeat Sensor

To ensure precise sampling of the heart rate data, the Nyquist criterion was also applied to the heartbeat sensor; Fig. 5 shows its use in sampling the cardiac signal. Because swimming motions are dynamic and influence an athlete’s heart rate, accurate and timely heartbeat data collection is essential for the convolutional neural network (CNN) classification process. To capture the heartbeat data efficiently, it was sampled 80 times per frequency. This high sampling rate made it possible to depict realistically how the athlete’s heart rate varies across different swimming positions and actions. By taking the heartbeat data into account alongside the other sensor data, the CNN classifier may make better judgements and improve the overall accuracy of swimmer movement classification.

5.3 KNN Algorithm for Unsupervised Classification

Figure 6 illustrates how the mean of five data points is obtained using the K-nearest neighbours (KNN) approach. The data distribution shown in the figure includes the athlete’s heart rate and movement at each stage of the swimming activity. The KNN approach calculates the average value of a given data point’s closest neighbours. This captures the athlete’s overall pulse and motion patterns, providing important insights into their performance throughout the various swimming phases.
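The neighbour-averaging step described above can be sketched as follows. The feature vectors (for example, heart rate and a movement measure per swimming stage), the use of Euclidean distance, and the default of k = 5 neighbours are assumptions for illustration; the function name is hypothetical.

```python
import numpy as np

def knn_mean(train_x, train_y, query, k=5):
    """Average the targets of the k training points nearest to `query` (Euclidean distance)."""
    train_x = np.asarray(train_x, dtype=float)
    train_y = np.asarray(train_y, dtype=float)
    dists = np.linalg.norm(train_x - np.asarray(query, dtype=float), axis=1)
    nearest = np.argsort(dists)[:k]          # indices of the k closest points
    return train_y[nearest].mean(axis=0)
```

For one-dimensional points at 0, 1, 2, 10 and 11 with identical targets, a query at 1.2 with k = 3 averages the targets of 1, 2 and 0 and returns 1.0.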

The accuracy and error graphs for the K-nearest neighbours (KNN) algorithm are shown in Fig. 7: Fig. 7a plots accuracy and Fig. 7b plots the error rate against the values. The error rate is inversely related to the values, decreasing as the values increase, whereas accuracy is directly correlated with them, rising as the values increase. The graphs thus show how effective the KNN algorithm is at increasing accuracy and lowering the error rate.

Following bulk data transmission and batch processing, the cloud system trains convolutional neural networks (CNNs) on the data. Figure 8 shows the training and validation curves, which detail the CNN’s performance during the training phase. The graph illustrates how the system iterates over the data during training to obtain the best fit. The system uses 8 hidden convolutional layers, 28,000 collected data points, and 20,000 epochs to reach convergence. The curves indicate that the model recognizes swimmer movements well, reaching its highest accuracy in predicting the athlete’s motion.

Figure 9 shows the algorithm’s real-time prediction of the athlete’s actions. This capability makes it possible to use the system for both athlete healthcare and coaching sessions.

Coaches can use the algorithm’s ability to identify and classify different swimmer behaviours accurately to evaluate athletes’ performances and provide tailored feedback and instruction. In addition, the system’s accurate predictions offer crucial insights into athletes’ health and wellbeing, enabling proactive measures to optimize training, prevent injuries, and enhance overall sports performance.

A swimmer may be identified by their distinct motions or swimming techniques using the yaw, pitch, and roll angles. Through the examination of these angles’ variations over time, we are able to pinpoint certain trends and attributes linked to various swimming methods.
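One way to turn variations over time into numbers a classifier can use is to summarize each angle over a stroke cycle. The sketch below computes the standard deviation and peak-to-peak range of yaw, pitch, and roll. The choice of these two statistics and the function name are assumptions, and the angles are assumed to arrive in degrees from a sensor-fusion step that is not shown. `np.unwrap` removes artificial jumps where an angle wraps from +180° to -180°.

```python
import numpy as np

def angle_features(yaw, pitch, roll):
    """Summarize one stroke cycle of orientation angles given in degrees."""
    feats = {}
    for name, series in (("yaw", yaw), ("pitch", pitch), ("roll", roll)):
        # unwrap so that a step from +179 deg to -179 deg counts as +2 deg, not -358 deg
        s = np.degrees(np.unwrap(np.radians(np.asarray(series, dtype=float))))
        feats[f"{name}_std"] = float(np.std(s))                 # spread around the mean
        feats[f"{name}_range"] = float(np.max(s) - np.min(s))   # peak-to-peak excursion
    return feats
```

Following the qualitative stroke descriptions, a freestyle cycle would be expected to show a small yaw range and a large roll range, whereas a backstroke cycle would show an almost constant pitch.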

In the simulation shown in Fig. 9, the swimmer’s orientation angles vary with the different movements performed in the pool. The categorization that follows, derived from the trained simulation model, is proposed for the swimmer’s movements.

1. Front crawl (freestyle)

Yaw: The swimmer maintains an almost constant yaw angle, with only a few lateral head movements.

Pitch: During the stroke, breathing changes the angle of inclination: the head rises slightly above the water’s surface on an inhale and falls during an exhale.

Roll: With each stroke, the body rotates evenly left and right about its longitudinal axis.

2. Breaststroke

Yaw: As in freestyle, the swimmer maintains a fairly constant yaw angle.

Pitch: The pitch angle varies throughout the glide phase as the swimmer stretches the body forward into position, bringing the head nearer the surface. The head is raised gently while the arms are still recovering.

Roll: The breaststroke has a distinctive circular action. During the arm pull, the swimmer rotates the body from side to side, and the head follows suit.

3. Butterfly

Yaw: The yaw angle may vary somewhat as the swimmer makes undulating body movements, particularly during the dolphin kick.

Pitch: The pitch angle changes dynamically throughout the stroke; the head is raised above the waterline during both the underwater and arm recovery phases.

Roll: The head follows the body’s movement as the body rotates left and right during the butterfly stroke.

4. Backstroke

Yaw: The swimmer faces upward and maintains a nearly constant yaw angle throughout the stroke.

Pitch: Because the swimmer’s head stays close to the water’s surface, the angle of inclination is almost constant.

Roll: The body rotates uniformly left and right about its longitudinal axis during the backstroke.

The findings of the trials show that the proposed method for enhancing swimmer movement techniques using cloud computing and artificial intelligence with deep learning offers several significant advantages. First, with powerful algorithms such as convolutional neural networks (CNNs), the system can accurately classify and predict swimmer actions in real time, providing coaches and players with quick feedback and analysis. Second, edge computing makes it possible to sample data efficiently and reduce noise, producing high-quality input for the artificial intelligence algorithms. Third, the scalability of cloud computing lets the system handle large volumes of data, enabling ongoing learning and improvement over time. Moreover, the system’s capacity to gather and evaluate information from wearables such as heartbeat monitors and gyroscopes deepens knowledge of swimmers’ health and technique. This integrated approach thus gives coaches and players access to a wealth of data, enabling targeted training, performance optimization, and enhanced athlete care.

The three-stage approach used to evaluate and classify swimmers’ movements marks notable progress in the field of sports analytics. First, the signals from gyroscopes and muscle movement sensors are sampled and denoised, which ensures high-quality data by removing artifacts and noise. This careful cleansing is essential because it creates a solid basis for further investigation. Next, the data are annotated and organized by analyzing the pre-processed signals and assigning labels to the various swimming strokes, such as freestyle, butterfly, and breaststroke. This stage is essential for data accuracy, since it guarantees that clean, well-organized datasets serve as the foundation for all subsequent analyses.

6 Conclusion

In conclusion, our study combined edge and cloud computing to make full use of the data processing capabilities of artificial intelligence. Edge computing addressed the problems of data cleansing and sampling, while the use of artificial neural networks (ANNs) at the edge level made it easier to categorize swimmer movement groups. Python was used for data annotation, and a Raspberry Pi transferred the data to the cloud, where they were further classified into movements. The results showed that accuracy improved and the error rate dropped as the amount of data increased, suggesting continued progress in unsupervised categorization with each swimmer cycle and batch. In addition, the performance of the convolutional neural networks (CNNs with LSTM) in the cloud improved when the dataset changed. Thanks to its increased precision and scalability, the system can support real-time training of many swimmers and track each athlete’s health status. The existing framework does, however, have limitations when it comes to implementing new methods and real-time learning. Even so, this study lays the groundwork for a precise and scalable approach with great potential to improve swimmer monitoring and training.

Data Availability

The datasets used and/or analyzed during the current study are available from the corresponding author upon reasonable request.

Funding

The authors received no funding for this project.

Author information

Contributions

Jia Zhou: conceptualization, methodology, formal analysis, validation, resources, supervision, writing—original draft, writing—review and editing. Yabin Shi: formal analysis, validation, resources, supervision, writing—original draft, writing—review and editing.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhou, J., Shi, Y. Risk Control Analysis of Digital Financial Engineering Based on 6G Physical Information System. Wireless Pers Commun (2024). https://doi.org/10.1007/s11277-024-11240-x

DOI: https://doi.org/10.1007/s11277-024-11240-x