Abstract

Palm-print recognition systems are extensively deployed in a variety of applications, ranging from forensics to mobile phones. This paper proposes a new feature extraction technique for robust palm-print based recognition. The method combines the angle information of an edge operator with multi-scale uniform patterns, which extracts texture patterns at different angular spaces and spatial resolutions, thus making the extracted uniform patterns less sensitive to pixel-level values. Further, an optimal artificial neural network structure is developed for classification, which helps maintain high classification accuracy while significantly reducing the computational complexity. The proposed method is tested on the standard PolyU, IIT-Delhi and CASIA palm-print databases. The method yields an equal error rate of 0.26% and a classification accuracy of 98.52% on the PolyU database.

1 Introduction

Biometric systems are among the most reliable and robust methods for human authentication. A biometric system uses certain physiological or behavioral traits for security identification and access [1]. Ever-increasing digitization has made the use of biometric systems almost a necessity. In recent years, research has progressed in the fields of fingerprint [2, 3], iris [4], gait [5, 6], palm-print [7], ear [8], voice [9], and face [10,11,12] recognition for human verification and authentication. Face recognition is one of the most flexible biometric modalities, working even when the subject is unaware of being scanned; however, it is limited by problems related to expression, pose and illumination. Fingerprint is a widely used biometric modality because it is easy and inexpensive to capture and recognize, but manual workers and elderly people often fail to provide fingerprint images of acceptable quality. Iris is another effective biometric, but its data acquisition system is costly and inconvenient [13].

Among these, the palm-print is a widely accepted biometric trait that offers several advantages over other biometrics [14]. The use of palm-prints for user access and human authentication has increased in the last decade due to their unique and stable characteristics along with a rich feature set (large area). The palm-print region (the region of the hand between the fingers and the wrist) carries a unique pattern for every individual in the form of ridges, valleys, geometry and discriminatory line patterns [15, 16]. In the recent past, a number of algorithms have been proposed to extract efficient and accurate features.

1.1 Literature Review

Existing approaches can be broadly classified into three main categories: texture-based, line-based and subspace-based methods [17].

Palm Code, which is based on the computational architecture of Iris Code, extracts the phase information of the palm-print using a Gabor filter [18, 19]. The phase is quantized into bits, and two Palm Codes are compared by the bitwise Hamming distance. Palm Code features are highly correlated, which reduces the individuality of Palm Codes and hence the performance of the palm-print identification system.

The Fusion Code method [20] was proposed to overcome the correlation problem associated with Palm Codes. The phase information of palm-print images is extracted using multiple elliptical Gabor filters with different orientations. The resulting features are merged to produce a single feature vector called the Fusion Code. The similarity of two Fusion Codes is then computed by the normalized Hamming distance, and a dynamic threshold replaces the fixed threshold for the final decision. Ordinal features [21] were proposed by fusing the best performing methods, such as Fusion Code and Competitive Code.

It has been observed that coding-based methods cannot fully exploit the scale features of the palm-print. The existing literature has demonstrated that the orientations of palm lines carry more stable and discriminative features than magnitude features. Jia et al. [22] proposed the histogram of oriented lines (HOL), a variant of the histogram of oriented gradients (HOG). The method is insensitive to variations of illumination.

Mokni et al. [23] proposed a feature extraction method that extracts the line and geometric features. The method is robust in terms of orientation and re-parameterization.

Luo et al. [24] proposed an image descriptor called local line directional patterns (LLDP). Different LLDP variations, based on the modified finite Radon transform (MFRAT) and the real part of Gabor filters, are investigated to extract robust orientation features.

The methods discussed above cannot exploit specific attributes of palm-prints, such as orientation and scale features, which restricts their recognition performance.

Texture-based approaches extract texture features from the given palm images. Texture features are not extracted uniformly, owing to variations in scale, orientation or other visual appearance. The local binary pattern (LBP), a powerful local image descriptor that extracts uniform patterns, is widely used in personal authentication [25].

Tamrakar and Khanna [26] proposed uniform LBP (ULBP) for palm-print recognition. It offers a uniform appearance with restricted discontinuities. The method can handle occlusion of up to 36%. On the basis of average entropy, the images are classified as occluded or non-occluded, and the match score is calculated only for non-occluded images.

Guo et al. [27] presented a collaborative model named hierarchical multiscale LBP (HM-LBP) for palm-print recognition. The method is robust to rotation, gray-scale and illumination changes.

The high dimensionality of HM-LBP may be overcome by applying principal component analysis (PCA) for dimensionality reduction.

Li and Kim [28] proposed a local descriptor named local micro-structure tetra pattern (LMTrP) for palm-print recognition. The descriptor takes advantage of the direction as well as the thickness of local descriptors. The method is largely insensitive to variations in translation, rotation, and blurriness.

Zhang et al. [29] proposed a palm-print recognition method that combines weighted adaptive center symmetric LBP (WACS-LBP) with weighted sparse representation-based classification (WSRC). One of the main rationales of this method is that it reduces the classification problem to a simpler one with fewer classes. Five parameters of the method are decided empirically by cross-validation, so the method is limited by parameter selection. A descriptor based on the edge gradient tends to give better recognition performance because the edge gradient is more consistent than the pixel intensity. Hence, the edge gradient gives better results than the original LBP for face and expression recognition [30].

Michael et al. [31] proposed a gradient operator to obtain the directional response of the palm-print. LBP extracts the texture description of the palm pattern in different directions, and a probabilistic neural network is applied for matching. The method is limited by some inherent problems: it is gray-scale variant, produces non-directional patterns, and is sensitive to certain texture patterns.

The literature suggests that the methods discussed above cannot successfully exploit discriminant orientation features and discriminant scale features at the same time.

The main contributions of the paper are:

- This paper proposes a new descriptor named multi-scale edge angles LBP (MSEALBP) for palm-print recognition. Sobel vertical and horizontal edges of the ROIs are used to produce the edge angle images. Then, a local binary pattern with multiple scales (MSLBP) is applied to the Sobel edge angle images. The resulting patterns are divided into non-overlapping blocks, and statistical calculations are performed to form a texture vector. Different experiments are performed to validate the proposed method.

- The paper uses an optimization technique that improves the accuracy of the classifier by tuning the network parameters. The extracted texture features are applied as input to the artificial neural network (ANN). The method has good generalization capacity and low training cost. Levenberg–Marquardt is employed to develop an optimized network, and the ANN structure is optimized to determine the network parameters. In addition, new information can be added at any time without the need to re-train the whole network.

The overall aim of this paper is to improve recognition performance by combining Sobel direction angles with the multi-scale LBP. Comparative experiments were carried out to demonstrate the accuracy of the proposed approach. Its high discrimination capacity and computational simplicity make it well suited to online recognition frameworks.

The organization of the paper is as follows: Sect. 2 explains the proposed multi-scale edge angle LBP method along with the theoretical part of the LBP, multi-scale LBP and the fundamentals of the artificial neural network for palm-print recognition. Section 3 details the experimental results, discussions and comparisons of the proposed methodology on three large-scale publicly available datasets. Finally, Sect. 4 concludes the findings of the proposed work and gives future directions.

2 Proposed Methodology

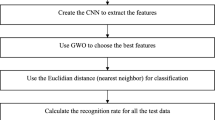

This section elaborates the proposed multi-scale palm-print recognition process. The block diagram of the proposed scheme is shown in Fig. 1.

The first step is preprocessing, which extracts an aligned and stable ROI (region of interest) from the input palm-print images. The resulting ROI images are filtered with the Sobel gradient operator in both vertical and horizontal directions to produce directional angle images. The directional angle images are then passed through multi-scale LBP to produce uniform patterns of palm-prints. The uniform images are divided into non-overlapping blocks of size 5 × 5 pixels, and the coefficient of variation (CV) of each block is computed. The CV values of all blocks are concatenated to form a 1-D vector for a palm-print. The resulting vector is then fed as input into the ANN [32]. PSO is used to determine the network parameters.

2.1 Preprocessing

Pre-processing is an important step for palm-print recognition and has a significant impact on the recognition outcome. Existing palm-print ROI extraction algorithms share a common criterion of choosing points in and around the fingers to segment the palm region [18, 33,34,35]. In this paper, a distance-based ROI extraction method is used, which reduces the effects of pose variation and hand rotation [34]. Figure 2 shows the respective ROI extraction steps. Initially, an original hand image is selected from the available palm-print database. Then, a low-pass filter (Gaussian smoothing) is applied to the original image to suppress initial image abnormalities. Thresholding (multilevel Otsu’s method) is applied to the filtered image to obtain a binarized image [33]. The binarized image is used to obtain the boundary of the hand. A point-finding algorithm locates the key points (finger tips and finger valleys), as these points are insensitive to rotation of the image caused during image acquisition [18]. Further, a reference point within the palm is chosen as the centroid using the valley points of the index finger and the middle finger. A square region is formed around the centroid as shown in Fig. 2e. The resulting square region is the region of interest, extracted from the image as shown in Fig. 2f.
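The binarization step can be illustrated with a short sketch. It is a simplification under stated assumptions: it implements the classical single-threshold Otsu criterion rather than the multilevel variant named above, it omits the Gaussian smoothing and key-point search, and the function names `otsu_threshold` and `binarize` are hypothetical.

```python
import numpy as np

def otsu_threshold(image, bins=256):
    """Single-level Otsu threshold: maximise the between-class variance."""
    values = np.asarray(image, dtype=np.float64).ravel()
    hist, edges = np.histogram(values, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    prob = hist / hist.sum()
    w0 = np.cumsum(prob)             # weight of the dark class for a cut after each bin
    w1 = 1.0 - w0                    # weight of the bright class
    mu = np.cumsum(prob * centers)   # cumulative (unnormalised) mean
    mu_total = mu[-1]
    valid = (w0 > 0) & (w1 > 0)      # skip cuts that leave one class empty
    between = np.zeros(bins)
    between[valid] = (mu_total * w0[valid] - mu[valid]) ** 2 / (w0[valid] * w1[valid])
    return centers[int(np.argmax(between))]

def binarize(image):
    """Boolean hand mask: True where the pixel is brighter than the threshold."""
    img = np.asarray(image, dtype=np.float64)
    return img > otsu_threshold(img)

# Synthetic example: dark background (10) on the left, bright "hand" (200) on the right.
demo = np.full((10, 10), 10.0)
demo[:, 5:] = 200.0
mask = binarize(demo)
```

On a real hand image the mask boundary would then be traced to find the finger tips and valleys; that part depends on the point-finding algorithm of [18] and is not sketched here.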

2.2 Local Binary Patterns (LBP)

The standard LBP was first introduced by Ojala et al. [25] as a texture descriptor that extracts uniform patterns of images. Figure 3 shows an example of an LBP code. First, the ROI image is segmented into 3 × 3 sub-blocks.

In each sub-block, the value of the center pixel is compared with the values of its eight surrounding neighbor pixels. Each comparison yields a logical value, i.e., either ‘0’ or ‘1’, as depicted in Fig. 3.

Further, the binary number is converted into its decimal equivalent using the weighted sum given in Eq. (1):

\[ LBP = \sum\limits_{p = 0}^{7} s\left( g_{p} - g_{c} \right)2^{p} , \quad s(x) = \begin{cases} 1, & x \ge 0 \\ 0, & x < 0 \end{cases} \tag{1} \]

where \(g_{c}\) and \(g_{p}\) are the gray values of the center pixel and of the \(p^{th}\) pixel in its circular neighborhood within the 3 × 3 sub-block, respectively, and \(s\) is the LBP thresholding function. LBP is limited by some inherent problems: it is gray-scale and rotation variant, produces non-directional patterns, and is sensitive to certain texture patterns.
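As an illustration of the thresholding and weighted sum described above, the following minimal sketch computes the basic 8-neighbour LBP code for every interior pixel of a gray image. The clockwise neighbour ordering starting at the top-left pixel, and hence the bit weights, are assumptions, since the ordering used in Fig. 3 is not reproduced here; the function name `lbp_3x3` is hypothetical.

```python
import numpy as np

def lbp_3x3(image):
    """Basic LBP code (0..255) for each interior pixel of a 2-D gray image."""
    img = np.asarray(image, dtype=np.float64)
    rows, cols = img.shape
    center = img[1:-1, 1:-1]
    # Neighbour offsets (row, col), clockwise from the top-left pixel (assumed order).
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.uint8)
    for p, (dr, dc) in enumerate(offsets):
        neighbour = img[1 + dr : rows - 1 + dr, 1 + dc : cols - 1 + dc]
        # s(g_p - g_c) = 1 when the neighbour is at least as bright as the centre;
        # the bit is weighted by 2^p through the left shift.
        codes |= (neighbour >= center).astype(np.uint8) << p
    return codes

# One 3 x 3 sub-block with centre value 6.
patch = np.array([[6, 5, 2],
                  [7, 6, 1],
                  [9, 8, 7]])
code = lbp_3x3(patch)[0, 0]  # bits 0, 4, 5, 6, 7 are set -> 241
```

With this ordering the neighbours 6, 7, 8, 9 and 7 pass the threshold, giving 1 + 16 + 32 + 64 + 128 = 241.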

2.3 Multi-scale LBP (MSLBP)

A gray-scale and rotation invariant texture operator based on LBP, i.e., multi-scale LBP, was proposed to enhance the LBP features [36].

It uses circular neighborhoods with different numbers of sampling points (P) and different radius values (R). Figure 4 shows LBP with different (P, R) sets. The MSLBP is computed as given in Eq. (2)

The multi-scale LBP is sensitive to certain patterns of texture features and also produces non-directional patterns.
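The role of the (P, R) sets can be sketched as follows. This is a plain circular LBP with bilinear interpolation evaluated at several scales; it does not apply the uniform or rotation-invariant pattern mapping, so it should be read as an assumption-laden illustration rather than the operator of Eq. (2). The names `circular_lbp` and `multi_scale_lbp` and the default scale list are hypothetical.

```python
import numpy as np

def circular_lbp(image, P=8, R=1.0):
    """LBP code using P samples on a circle of radius R (bilinear interpolation)."""
    img = np.asarray(image, dtype=np.float64)
    H, W = img.shape
    m = int(np.ceil(R))                       # margin that keeps every sample inside
    rows, cols = np.mgrid[m:H - m, m:W - m]
    center = img[m:H - m, m:W - m]
    codes = np.zeros(center.shape, dtype=np.int64)
    for p in range(P):
        angle = 2.0 * np.pi * p / P
        # Offsets are rounded to remove floating-point noise such as sin(pi) != 0.
        dr = np.round(-R * np.sin(angle), 10)
        dc = np.round(R * np.cos(angle), 10)
        r, c = rows + dr, cols + dc
        r0 = np.clip(np.floor(r).astype(int), 0, H - 2)
        c0 = np.clip(np.floor(c).astype(int), 0, W - 2)
        fr, fc = r - r0, c - c0
        value = (img[r0, c0] * (1 - fr) * (1 - fc)
                 + img[r0, c0 + 1] * (1 - fr) * fc
                 + img[r0 + 1, c0] * fr * (1 - fc)
                 + img[r0 + 1, c0 + 1] * fr * fc)
        # Small tolerance so interpolated samples equal to the centre count as ">=".
        codes += (value >= center - 1e-9).astype(np.int64) << p
    return codes

def multi_scale_lbp(image, scales=((8, 1), (8, 2), (16, 2))):
    """Dictionary of LBP maps, one per (P, R) pair."""
    return {(P, R): circular_lbp(image, P, R) for P, R in scales}

flat = np.full((9, 9), 7.0)
maps = multi_scale_lbp(flat)   # a flat image sets every bit at every scale
```

Larger radii shrink the output map because a margin of ceil(R) pixels is dropped on each side.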

2.4 Proposed Multi-scale Edge Angles LBP (MSEALBP)

Multi-scale edge angles LBP is proposed to extract effective features of palm-prints. It produces uniform patterns as well as gray-scale and rotation invariant texture information. Because it uses phase information, it produces directional patterns that are less sensitive to pixel-level values. First, the ROI image is filtered with a gradient operator mask, such as Roberts, Prewitt or Sobel, in both horizontal and vertical directions.

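The magnitude and phase information are computed by combining the horizontal and vertical operator responses as given in Eqs. (3) and (4):

\[ \left| G \right| = \sqrt {G_{x}^{2} + G_{y}^{2} } \tag{3} \]

\[ \theta = atan2\left( {G_{y} ,G_{x} } \right) \tag{4} \]

where \(\left| G \right|\) is the gradient magnitude, \(G_{x}\) and \(G_{y}\) are the responses of the horizontal and vertical operators, \(\theta\) represents the angle direction and \(atan2\) is the four-quadrant inverse tangent as discussed in [37].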
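A minimal sketch of the magnitude and angle computation is given below. It assumes the standard 3 × 3 Sobel masks with a particular sign convention and computes responses only for interior pixels; the masks of Fig. 5 are not reproduced here, and the helper names are hypothetical.

```python
import numpy as np

# Standard Sobel masks (sign convention is an assumption).
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=np.float64)
SOBEL_Y = SOBEL_X.T

def correlate3x3(img, kernel):
    """Valid-mode 3 x 3 correlation implemented with shifted slices."""
    H, W = img.shape
    out = np.zeros((H - 2, W - 2))
    for i in range(3):
        for j in range(3):
            out += kernel[i, j] * img[i:i + H - 2, j:j + W - 2]
    return out

def sobel_magnitude_angle(image):
    """Return (|G|, theta) with theta = atan2(G_y, G_x) in radians."""
    img = np.asarray(image, dtype=np.float64)
    gx = correlate3x3(img, SOBEL_X)
    gy = correlate3x3(img, SOBEL_Y)
    return np.hypot(gx, gy), np.arctan2(gy, gx)

# A uniform gain (e.g. brighter illumination) scales the magnitude
# but leaves the angle unchanged.
rng = np.random.default_rng(0)
roi = rng.integers(0, 256, size=(8, 8)).astype(np.float64)
mag1, ang1 = sobel_magnitude_angle(roi)
mag3, ang3 = sobel_magnitude_angle(3.0 * roi)
```

The comparison of `ang1` with `ang3` illustrates why the angle image is less sensitive to pixel-level intensity changes than the magnitude image.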

Angle features describe the gradients of certain patterns more effectively, and with less sensitivity to pixel values, than magnitude features. The magnitude can be affected by noise, brightness and range problems [38]. Also, the directional information produced by the magnitude calculation is not as robust as that of the angle calculation [39, 40]. Hence, the angle direction of the Sobel operator can be used to achieve consistent performance. Figure 5 shows the Sobel horizontal operator \(G_{x}\) and the Sobel vertical operator \(G_{y}\). The experimental results show that the angle direction gives better results than the magnitude. Figure 6 shows the resulting sample images of the proposed MSEALBP.

Fig. 6 Multi-scale edge angle LBP operator results. Each row shows a palm-print, from the top: PolyU palm-print, IIT-Delhi hand image and CASIA palm-print databases, respectively. Each column depicts, from the left: original palm images, ROI extracted images, horizontal edge images, vertical edge images and multi-scale LBP of the angle images, respectively.

Equation (2) is modified to calculate the proposed MSEALBP on the edge angle direction as follows:

where \(gt_{p}\) and \(gt_{c}\) are the \(p{\text{th}}\) neighbor pixel value and the center pixel value, respectively, of each sub-block of the Sobel angle direction image.

The performance of the proposed approach is evaluated using different values of \(P\) and \(R\) for the palm-print patterns.

Before the blocking operation, each pixel of the ROI image is assigned its MSEALBP value. The resulting images are then divided into non-overlapping blocks.

Equations (6–8) are used to compute the coefficient of variation of each block.

where \(n\) is the number of pixels in each block, \(bl\) is the block size (here 5 × 5 pixels), \(M_{bl}\) is the average of the block pixels, \(i\) is the index of a pixel in a block, \(STD_{bl}\) is the standard deviation and \(CV_{bl}\) is the coefficient of variation. The CV values of a palm-print are concatenated to form a 1-D vector for the subsequent classification stage.
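The block statistics can be sketched as below. Two choices are assumptions, because Eqs. (6–8) are not reproduced here: the standard deviation divides by \(n\) (population form), and border pixels that do not fill a complete block are cropped (a 128 × 128 image with 5 × 5 blocks then yields 25 × 25 = 625 values). The function name `block_cv_features` is hypothetical.

```python
import numpy as np

def block_cv_features(pattern_image, block=5):
    """1-D vector of coefficient-of-variation values, one per non-overlapping block."""
    img = np.asarray(pattern_image, dtype=np.float64)
    H, W = img.shape
    Hc, Wc = (H // block) * block, (W // block) * block   # crop incomplete blocks
    blocks = img[:Hc, :Wc].reshape(Hc // block, block, Wc // block, block)
    blocks = blocks.transpose(0, 2, 1, 3).reshape(-1, block * block)
    mean = blocks.mean(axis=1)          # M_bl
    std = blocks.std(axis=1)            # STD_bl, dividing by n (assumption)
    cv = np.zeros_like(mean)
    np.divide(std, mean, out=cv, where=mean != 0)   # CV_bl = STD_bl / M_bl
    return cv

# Tiny example with 2 x 2 blocks, listed row by row.
toy = np.array([[1, 3, 2, 2],
                [1, 3, 2, 2],
                [5, 5, 2, 4],
                [5, 5, 2, 4]], dtype=np.float64)
features = block_cv_features(toy, block=2)   # [0.5, 0.0, 0.0, 1/3]
```

Blocks with zero mean are assigned a CV of 0 in this sketch to avoid division by zero.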

2.5 Artificial Neural Network (ANN)

Artificial neural network-based systems are broadly used in image processing, pattern recognition and classification [41,42,43,44]. The 1-D feature vector is fed as input to the classification algorithm. In conventional classifiers, the network structure is taken to be fixed, which is known as an un-optimized network structure [45, 46].

The accuracy of a fixed network structure depends on the network parameters: (a) the number of hidden layers \((HL_{n} )\) and (b) the number of neurons in each hidden layer \((NHL_{n} )\). When these parameters are not chosen properly, accuracy is poor for some categories [47].

The paper uses an optimization technique that improves the accuracy of the classifier by tuning the network parameters. Levenberg–Marquardt [48] is employed to develop an optimized network. The ANN structure is optimized to determine the number of hidden layers and the number of neurons in each hidden layer. The PSO optimization technique is used for this purpose, and palm-print classification is then carried out.

2.5.1 Optimization Scheme

Kennedy and Eberhart [49] introduced particle swarm optimization (PSO) as an optimization algorithm in 1995. Wang et al. [50] suggested different methods of combining PSO with other search algorithms. The best positions of the particles are searched to select the optimum weights, and a fitness value is assigned to each particle. Every particle possesses a velocity, which can be decomposed into a swarm component, an inertial component and a cognitive component.

In this paper, PSO is effectively employed to get optimal values of \((HL_{n} )\) and \((NHL_{n} )\) of the neural structure to maximize the fitness function (accuracy).

The updated velocity of the PSO is computed as given in Eq. (9),

The updated particle position is given as

where \(a_{1}\) and \(a_{2}\) are acceleration coefficients. The cognitive component ensures exploitation of the particle’s own best position \(p\_b \, (t)\). The global component is determined using the global best position \(g\_b \, (t)\) among the population. \(R_{1}\) and \(R_{2}\) are two random numbers in [0, 1]. The inertia weight \(w(t)\) controls the contribution of the previous velocity. During the experiment, 60% of the samples are used to minimize the error function by adjusting the weight vector, and the remaining samples are used for testing.

The steps of the ANN with PSO are given as follows:

Step 1 Initialize parameters.

Maximum iteration \((I_{{_{\max } }} )\) = 500, population size \((p_{{_{s} }} )\) = 30, \(a_{1}\) = 1.5, \(a_{2}\) = 2 and \(w\) = 1. Initialize the ranges of the two parameters, the number of hidden layers \((HL_{n} )\) and the number of neurons in each hidden layer \((NHL_{n} )\), as

\((HL_{n} )_{min} = 1\), \((HL_{n} )_{max} = 10\), \((NHL_{n} )_{min} = 1\), \((NHL_{n} )_{max} = 50\);

Step 2 Initialize population.

\({\text{HL}}_{n} value = randi((HL_{n} )_{min} , (HL_{n} )_{max} )\);

Step 3 Fitness computation.

Update particles best position as

Update global best: \({\text{if}} (bestfitness (t) > g\_b(t))\),\({\text{g}} \_b (t) = bestfitness (t);\)

Step 4 Update velocity as given in Eq. (9)

Update position as given in Eq. (10)

Update best position as

Update global best with maximum iteration as \(p \, (t).\)

Step 5 Repeat step 4 while \({\text{iteration}} \le {\text{maximum iteration}}\).
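The steps above can be sketched as a small search over \((HL_{n} , NHL_{n} )\). The sketch uses the canonical PSO update (inertia term plus cognitive and global terms) and rounds and clips positions to the integer ranges of Step 1; whether this matches Eqs. (9) and (10) term by term is an assumption. The fitness is a toy stand-in, `toy_fitness`, because training the ANN and measuring its accuracy is outside the scope of a short example; both function names are hypothetical.

```python
import numpy as np

def pso_search(fitness, lower, upper, n_particles=30, n_iter=500,
               a1=1.5, a2=2.0, w=1.0, seed=0):
    """Maximise `fitness` over integer positions within [lower, upper]."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=np.float64)
    upper = np.asarray(upper, dtype=np.float64)
    dim = lower.size
    # Step 2: random integer initial population (upper bound inclusive).
    x = rng.integers(lower.astype(int), upper.astype(int) + 1,
                     size=(n_particles, dim)).astype(np.float64)
    v = np.zeros_like(x)
    # Step 3: initial fitness, personal bests and global best.
    p_best = x.copy()
    p_best_fit = np.array([fitness(p) for p in x], dtype=np.float64)
    g_idx = int(np.argmax(p_best_fit))
    g_best, g_best_fit = p_best[g_idx].copy(), p_best_fit[g_idx]
    for _ in range(n_iter):
        # Step 4: velocity and position updates.
        r1 = rng.random((n_particles, dim))
        r2 = rng.random((n_particles, dim))
        v = w * v + a1 * r1 * (p_best - x) + a2 * r2 * (g_best - x)
        x = np.clip(np.rint(x + v), lower, upper)
        fit = np.array([fitness(p) for p in x], dtype=np.float64)
        improved = fit > p_best_fit
        p_best[improved] = x[improved]
        p_best_fit[improved] = fit[improved]
        g_idx = int(np.argmax(p_best_fit))
        if p_best_fit[g_idx] > g_best_fit:
            g_best, g_best_fit = p_best[g_idx].copy(), p_best_fit[g_idx]
    return g_best.astype(int), float(g_best_fit)

def toy_fitness(p):
    """Hypothetical stand-in for classifier accuracy, peaking at (3, 20)."""
    return -((p[0] - 3) ** 2 + ((p[1] - 20) / 5.0) ** 2)

# Ranges from Step 1: 1..10 hidden layers, 1..50 neurons per layer.
best, best_fit = pso_search(toy_fitness, [1, 1], [10, 50], n_iter=50)
```

In the actual pipeline the fitness call would train a network with the candidate structure and return its accuracy, which makes each evaluation far more expensive than this toy function.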

3 Results and Discussion

3.1 Database

The proposed framework is validated on three large-scale publicly accessible palm-print databases: (a) PolyU palm-print [51], (b) CASIA palm-print [52] and (c) IIT-Delhi palm-print [53].

The PolyU database consists of palm images of 193 individuals acquired in two separate sessions. This database has a total of 7752 images corresponding to 386 individual palms. Each individual contributed 10 samples of each hand in each of the two sessions.

The CASIA database contains a total of 5502 palm-print images captured from 312 different subjects using a self-designed device. Each individual provides eight samples of each hand (both left and right).

The IIT-Delhi database consists of a total of 4080 palm images of 230 individuals, with each individual providing 6–7 samples of each hand (both left and right). These images are acquired with varying hand poses at an image size of 800 × 600 pixels. The IIT-Delhi database also contains auto-cropped (150 × 150 pixel) ROI images.

3.2 Experimental Setup

This section describes the experimental setup used to evaluate the proposed method and compare it with other state-of-the-art methods. For all three databases, 60% of the images are used for training and the remaining images are used for testing. There is no overlap between training and testing samples. The extracted ROI is of size 128 × 128 pixels for all the databases.

The Cumulative Match Characteristic (CMC) curve and classification accuracy are considered for evaluating the accuracy of the biometric framework. The EER is the rate at which the false reject and false accept errors are equal. The performance of the classifier is evaluated using sensitivity, accuracy and specificity as defined in Eqs. (11–13):

\[ Sensitivity = \frac{tp}{tp + fn} \tag{11} \]

\[ Accuracy = \frac{tp + tn}{tp + tn + fp + fn} \tag{12} \]

\[ Specificity = \frac{tn}{tn + fp} \tag{13} \]

where \({\text{tp}}\) = True Positive, \(tn\) = True Negative, \({\text{fp}}\) = False Positive and \({\text{fn}}\) = False Negative.
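The evaluation measures can be illustrated with a short sketch. The confusion-matrix ratios follow the usual definitions; the EER routine is one common estimate (threshold sweep over the observed scores, taking the point where FAR and FRR are closest) and assumes that higher scores indicate a better match. The function names and the example scores are hypothetical, not values from the experiments.

```python
import numpy as np

def classification_metrics(tp, tn, fp, fn):
    """Return (sensitivity, specificity, accuracy) from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    return sensitivity, specificity, accuracy

def equal_error_rate(genuine_scores, impostor_scores):
    """Approximate EER and its threshold, assuming higher score = better match."""
    genuine = np.asarray(genuine_scores, dtype=np.float64)
    impostor = np.asarray(impostor_scores, dtype=np.float64)
    thresholds = np.unique(np.concatenate([genuine, impostor]))
    # FAR: impostors accepted at threshold t; FRR: genuine users rejected at t.
    far = np.array([(impostor >= t).mean() for t in thresholds])
    frr = np.array([(genuine < t).mean() for t in thresholds])
    idx = int(np.argmin(np.abs(far - frr)))
    return float((far[idx] + frr[idx]) / 2.0), float(thresholds[idx])

# Illustrative numbers only.
sens, spec, acc = classification_metrics(tp=90, tn=95, fp=5, fn=10)
eer, thr = equal_error_rate([0.9, 0.8, 0.7, 0.4], [0.1, 0.2, 0.3, 0.6])
```

In the example, one genuine score (0.4) falls below and one impostor score (0.6) reaches the threshold 0.6, so FAR and FRR are both 25%.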

The experiments are conducted in MATLAB (R2020a) on a Dell Precision Tower 5810 with an Intel Xeon processor and two 2-GB Nvidia Quadro K620 GPUs, running 64-bit Windows 10.

Various edge analysis techniques such as Roberts, Prewitt and Sobel have been examined on the three palm-print databases to decide the gradient operator for filtering the ROI images. The summary of parameters used in the proposed method is listed in Table 1.

After extracting the ROI in the preprocessing step, the Sobel horizontal and vertical edge operators are applied to the ROI images to compute the magnitude and angle. As the mask weights of the Sobel operator are higher than those of Roberts and Prewitt, it reveals the key palm-print features more clearly [31]. Table 2 compares the EER for magnitude and angle of the different edge detection methods. With the Sobel operator, the EER for the PolyU database decreased from 2.79% using the magnitude to 0.26% with the angle, and for the IIT-Delhi database from 4.01% to 3.25%. Likewise, the EER decreased from 5.11% using the magnitude to 2.76% with the angle for the CASIA database.

The angle outperforms the magnitude in terms of EER because the magnitude is easily affected by the illumination, imaging contrast and camera gain of an image. Furthermore, the Sobel angular approach uses the ratio of the outputs of the vertical and horizontal operators, so the angle patterns carry more effective information than the magnitude patterns.

The results of Sobel are better than those of Prewitt because Sobel is less affected by image contrast. For the Prewitt operator, the EER for the PolyU database decreased from 1.31% using the magnitude to 1.05% with the angle. The Prewitt and Sobel operators use both horizontal and vertical edges, while Roberts considers only diagonal edges. For the Roberts operator, the EER for the PolyU database increased from 1.53% using the magnitude to 2.19% with the angle, and from 8.19% to 9.28% for the IIT-Delhi database.

Multi-scale LBP (MSLBP) as discussed in Sect. 2.3 is applied on the resulting Sobel edge angle images to obtain the uniform patterns of palm-prints.

The MSLBP is tested for different values of neighbor pixels (P) and radius (R) on the PolyU, CASIA and IIT-Delhi palm-print databases for the Roberts, Prewitt and Sobel operators, as listed in Tables 3, 4 and 5 respectively.

The best recognition performance in terms of EER is achieved using the Sobel angles LBP with a multi-scale parameter of P = 8 and R = 2 for all three palm-print databases.

The EER achieved for P = 8, R = 2 is 0.26%, 2.76% and 3.25% on the PolyU, CASIA and IIT-Delhi databases respectively. As the number of neighboring pixels (P) relates to the amount of processed information, increasing its value increases the redundant information processed. Similarly, increasing the radius (R) beyond 2 causes a loss of micro-texture information, while decreasing R below 1 also captures very fine texture together with embedded noise. To obtain the CV, the uniform images obtained from MSLBP are divided into N × N non-overlapping blocks.

The performance of the proposed framework is analyzed over block sizes of 3 × 3, 5 × 5, 7 × 7, 9 × 9, 11 × 11, 13 × 13 and 15 × 15 on all three palm-print databases, as shown in Fig. 7. The best recognition performance is achieved for a block size of 5 × 5; the experiments show that making the block larger or smaller than this size raises the error rate. The best EER values, obtained with a block size of 5 × 5, are 0.26%, 2.76% and 3.25% for the PolyU, CASIA and IIT-Delhi palm-print databases respectively. Table 6 shows the performance of the suggested neural network structure in terms of accuracy, sensitivity and specificity.

The method yields an accuracy of 98.52%, a specificity of 98.60% and a sensitivity of 92.50% on the PolyU database for P = 8 and R = 2. Similarly, the CASIA and IIT-Delhi databases yield accuracies of 97.85% and 95.32% respectively.

The Cumulative Match Characteristic (CMC) curves are created by collecting the ANN output values from the summation layer and remapping these values according to the ANN classifications [54]. Figure 8 compares the CMC curves of the proposed method with those of other methods, such as LBP, LDP, DGLBP, HOL, VGG-16 and VGG-19, on the PolyU, CASIA and IIT-Delhi palm-print databases.

The method is compared with the existing methods discussed in the literature and yields better performance in terms of EER and accuracy. Table 7 lists the EER comparison of the proposed method with existing methods.

Directional gradient LBP (DGLBP) proposed by Michael et al. [31] gives an EER of 1.52%, 6.45% and 8.34% on PolyU, CASIA and IIT-Delhi databases respectively.

The neural network-based methods VGG-16 and VGG-19 yield EERs of 5.14% and 5.29% respectively. The best recognition performance is observed for the proposed method, with EERs of 0.26%, 2.76% and 3.25% on the PolyU, CASIA and IIT-Delhi databases respectively. The proposed method delivers better results because it produces uniform patterns and rotation invariant texture information, which enhances the performance.

A comparative analysis of the proposed framework with the state-of-the-art methods in terms of accuracy is listed in Table 8. Tarawneh et al. [57] proposed neural network-based methods that yield an accuracy of 98.12% for VGG-16 and 98.02% for VGG-19. The accuracy of the proposed classifier is 98.52% for the PolyU database, 97.85% for the CASIA database and 95.32% for the IIT-Delhi database.

Table 9 shows the False Acceptance Rate (FAR) and False Rejection Rate (FRR) of the proposed method for all the databases. A descriptor based on the edge gradient tends to give better recognition performance because the edge gradient is more consistent than the pixel intensity. Hence, the edge gradient gives better results than the original LBP for face and expression recognition [48]. Accordingly, the proposed method performs better, with an EER of 0.26% and an accuracy of 98.52% on the PolyU database.

4 Conclusion

This paper presents a feature extraction method for palm-print recognition that integrates the multi-scale LBP with angle directions from the Sobel filter. The method can detect uniform patterns at different angular spaces and spatial resolutions. An EER of 0.26% on the PolyU palm-print database was obtained with P = 8 and R = 2. The combination of angle direction and multi-scale local binary patterns produces directional patterns that are less sensitive to pixel-level values. The texture details are classified by an LM-trained multi-layer perceptron neural network. The ANN is optimized with respect to \(HL_{n}\) and \(NHL_{n}\) through an optimization technique (PSO) to maximize the accuracy of the classifier. The experimental analysis was carried out on three large-scale publicly available palm-print databases.

The optimized neural structure gives an accuracy of 98.52%, a specificity of 98.60% and a sensitivity of 92.50% on the PolyU database. The results show that the optimized neural structure gives better classification than other ANN structures.

The results show that the proposed approach improves performance compared with other state-of-the-art methods. The best recognition results were achieved with an EER of 0.26% and an accuracy of 98.52% on the PolyU database.

The total execution time is approximately 1 s, which is fast enough for real-time applications. The method also possesses good generalization ability with little training cost.

Data Availability

The datasets generated and/or analyzed during the current study are not publicly available because of ongoing research work, but are available from the corresponding author on reasonable request.

References

Jain, A. K., Ross, A., & Prabhakar, S. (2004). An introduction to biometric recognition. IEEE Transactions on Circuits and Systems for Video Technology, 14(1), 4–20.

Valdes-Ramirez, D., Medina-Pérez, M. A., & Monroy, R. (2021). An ensemble of fingerprint matching algorithms based on cylinder codes and mtriplets for latent fingerprint identification. Pattern Analysis and Applications, 24, 433–444. https://doi.org/10.1007/s10044-020-00911-7

Nachar, R., Inaty, E., Bonnin, P. J., et al. (2020). Hybrid minutiae and edge corners feature points for increased fingerprint recognition performance. Pattern Analysis and Applications, 23, 213–224. https://doi.org/10.1007/s10044-018-00766-z

Langoni, V., & Gonzaga, A. (2020). Evaluating dynamic texture descriptors to recognize human iris in video image sequence. Pattern Analysis and Applications, 23, 771–784. https://doi.org/10.1007/s10044-019-00836-w

Lima, V. C. D., Melo, V. H. C., & Schwartz, W. R. (2021). Correction to: Simple and efficient pose-based gait recognition method for challenging environments. Pattern Analysis and Applications, 24, 509. https://doi.org/10.1007/s10044-020-00945-x

Lima, V. C. D., Melo, V. H. C., & Schwartz, W. R. (2021). Simple and efficient pose-based gait recognition method for challenging environments. Pattern Analysis and Applications, 24, 497–507. https://doi.org/10.1007/s10044-020-00935-z

Aguado-Martínez, M., Hernández-Palancar, J., Castillo-Rosado, K., et al. (2021). Document scanners for minutiae-based palmprint recognition: A feasibility study. Pattern Analysis and Applications, 24, 459–472. https://doi.org/10.1007/s10044-020-00923-3

Kamboj, A., Rani, R., Nigam, A., et al. (2021). CED-Net: Context-aware ear detection network for unconstrained images. Pattern Analysis and Applications, 24, 779–800. https://doi.org/10.1007/s10044-020-00914-4

Ajmera, P., Jadhav, R., & Holambe, R. S. (2011). Text-independent speaker identification using Radon and discrete cosine transforms based features from speech spectrogram. Pattern Recognition, 44(10–11), 2749–2759.

Kagawade, V. C., & Angadi, S. A. (2021). Savitzky-Golay filter energy features-based approach to face recognition using symbolic modeling. Pattern Analysis and Applications. https://doi.org/10.1007/s10044-021-00991-z

Ayeche, F., & Alti, A. (2021). HDG and HDGG: An extensible feature extraction descriptor for effective face and facial expressions recognition. Pattern Analysis and Applications. https://doi.org/10.1007/s10044-021-00972-2

Nakouri, H. (2021). Two-dimensional Subclass Discriminant Analysis for face recognition. Pattern Analysis and Applications, 24, 109–117. https://doi.org/10.1007/s10044-020-00905-5

Qingqiao, H., Siyang, Y., Huiyang, N., et al. (2020). An end to end deep neural network for iris recognition. Procedia Computer Science, 174, 505–517.

Zhang, S., & Gu, X. (2013). Palmprint recognition based on the representation in the feature space. Optik, 124, 5434–5439.

Jing, L., Jian, C., & Kaixuan, L. (2013). Improve the two-phase test samples representation method for palmprint recognition. Optik, 124(24), 6651–6656.

Ali, M. M., Yannawar, P., & Gaikwad, A. T. (2016, March). Study of edge detection methods based on palmprint lines. In International Conference on Electrical, Electronics, and Optimization Techniques (pp. 1344–1350).

You, J., Li, W. X., & Zhang, D. (2002). Hierarchical palmprint identification via multiple feature extraction. Pattern Recognition, 35, 847–859.

Zhang, D., Kong, W. K., You, J., et al. (2003). On-line palmprint identification. IEEE Transactions on Pattern Analysis and Machine Intelligence, 25(9), 1041–1050.

Diaz, M. R., Travieso, C. M., Alonso, J. B., et al. (2004). Biometric system based in the feature of hand palm. In Proceedings of International Carnahan Conference on Security Technology (pp. 136–139).

Kong, A., Zhang, D., & Kamel, M. (2006). Palmprint identification using feature-level fusion. Pattern Recognition, 39(3), 478–487.

Sun, Z., Tan, T., Wang, Y., et al. (2005). Ordinal palmprint representation for personal identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 279–284).

Jia, W., Hu, R. X., Lei, Y. K., et al. (2014). Histogram of oriented lines for palmprint recognition. IEEE Transactions on Systems, Man, and Cybernetics: Systems, 44(3), 385–395.

Mokni, R., Hassen, D., & Monji, K. (2017). Combining shape analysis and texture pattern for palmprint identification. Multimedia Tools and Applications, 76, 23981–24008.

Luo, Y. T., Zhao, L. Y., Bob, Z., et al. (2016). Local line directional pattern for palmprint recognition. Pattern Recognition, 50, 26–44.

Ojala, T., Pietikäinen, M., & Harwood, D. (1996). A comparative study of texture measures with classification based on featured distributions. Pattern Recognition, 29(1), 51–59.

Tamrakar, D., & Khanna, P. (2015). Occlusion invariant palmprint recognition with ULBP histograms. In International Conference on Image and Signal Processing (pp. 491–500).

Guo, X., Zhou, W., & Yanli, Z. (2017). Collaborative representation with HM-LBP features for palmprint recognition. Machine Vision and Applications, 28, 283–291.

Li, G., & Kim, J. (2017). Palmprint recognition with local micro-structure tetra pattern. Pattern Recognition, 61, 29–46.

Zhang, S., Wang, H., Wenzhun, H., et al. (2018). Combining modified LBP and weighted SRC for palmprint recognition. Signal, Image and Video Processing, 12, 1035–1042.

Karanwal, S., & Diwakar, M. (2021). Neighborhood and center difference-based-LBP for face recognition. Pattern Analysis and Applications, 24, 741–761. https://doi.org/10.1007/s10044-020-00948-8

Michael, G. K. O., Connie, T., & Jin, A. T. B. (2008). Touch-less palm print biometrics: Novel design and implementation. Image and Vision Computing, 26(12), 1551–1560.

Shorrock, S., Yannopoulos, A., Dlay, S., et al. (2000). Biometric verification of computer users with probabilistic and cascade forward neural networks (pp. 267–272). Advances in Physics.

Connie, T., Jin, A. T. B., Ong, M. G. K., et al. (2005). An automated palmprint recognition system. Image and Vision Computing, 23(5), 501–515.

Nigam, A., & Gupta, P. (2015). Designing an accurate hand biometric based authentication system fusing finger knuckle print and palmprint. Neurocomputing, 151(1), 120–132.

Jaswal, G., Amit, K., & Ravinder, N. (2018). Multiple feature fusion for unconstrained palm print authentication. Computers and Electrical Engineering, 72, 53–78.

Ojala, T., Pietikäinen, M., & Mäenpää, T. (2002). Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Transactions on Pattern Analysis and Machine Intelligence, 24(7), 971–987.

Ukil, A., Shah, V. H., & Deck, B. (2011). Fast computation of arctangent functions for embedded applications: A comparative analysis. In IEEE International Symposium on Industrial Electronics (pp. 1206–1211).

Huang, T., Burnett, J., & Deczky, A. (1975). The importance of phase in image processing filters. IEEE Transactions on Acoustics, Speech, and Signal Processing, 23(6), 529–542.

Oppenheim, A. V., & Lim, J. S. (1981). The importance of phase in signals. Proceedings of the IEEE, 69(5), 529–541.

Mazumdar, D., Mitra, S., Ghosh, K., et al. (2021). Analysing the patterns of spatial contrast discontinuities in natural images for robust edge detection. Pattern Analysis and Applications. https://doi.org/10.1007/s10044-021-00976-y

Junli, L., Gengyun, Y., & Guanghui, Z. (2012). Evaluation of tobacco mixing uniformity based on chemical composition. In 31st Chinese Control Conference (pp. 7552–7555).

Fausett, L. V., & Hall, P. (1994). Fundamentals of neural networks: Architectures, algorithms, and applications. Prentice-Hall.

Woo, W., & Dlay, S. (2005). Regularised nonlinear blind signal separation using sparsely connected network. IEE Proceedings-Vision, Image and Signal Processing, 152(1), 61–73.

Kou, J., Xiong, S., & Wan, S. (2010). The incremental probabilistic neural network. Sixth International Conference on Natural Computation, 3, 1330–1333.

Kwak, C., Ventura, J. A., & Tofang-Sazi, K. (2000). A neural network approach for defect identification and classification on leather fabric. Journal of Intelligent Manufacturing, 11, 485–499.

Li, Y., & Zhang, C. (2016). Automated vision system for fabric defect inspection using Gabor filters and PCNN. SpringerPlus. https://doi.org/10.1186/s40064-016-2452-6

Wei, P., Liu, C., Liu, M., Gao, Y., & Liu, H. (2018). CNN-based reference comparison method for classifying bare PCB defects. The Journal of Engineering, 2018(16), 1528–1533. https://doi.org/10.1049/joe.2018.8271

Liu, F., Su, L., Fan, M., Yin, J., He, Z., & Lu, X. (2017). Using scanning acoustic microscopy and LM-BP algorithm for defect inspection of micro solder bumps. Microelectronics Reliability, 79, 166–174. https://doi.org/10.1016/j.microrel.2017.10.029

Kennedy, J., & Eberhart, R. C. (1995). Particle swarm optimization. In IEEE International Conference on Neural Networks, Piscataway (pp. 1942–1948).

Wang, D., Tan, D., & Liu, L. (2017). Particle swarm optimization algorithm: An overview. Soft Computing, 22, 387–408.

PolyU palmprint database. Available at http://www.comp.polyu.edu.hk/~biometrics/

CASIA palm-print image database. Available at http://biometrics.idealtest.org/

IIT Delhi touchless palmprint database. Available at http://www4.comp.polyu.edu.hk/~csajaykr/IITD/Database_Palm.htm

Al-Nima, R. R. O., Dlay, S. S., Woo, W. L., et al. (2016). A novel biometric approach to generate ROC curve from the probabilistic neural network. In 24th Signal Processing and Communication Application Conference, SIU (pp. 141–144).

Wang, X., Gong, H., Zhang, H., Li, B., et al. (2016). Palmprint identification using boosting local binary pattern. International Conference on Pattern Recognition, 3, 503–506.

Jabid, T., Kabir, M. H., & Chae, O. (2010). Robust facial expression recognition based on local directional pattern. ETRI Journal, 32(5), 784–794.

Tarawneh, A. S., Chetverikov, D., & Hassanat, A. B. (2018). Pilot comparative study of different deep features for palmprint identification in low-quality images. https://arxiv.org/abs/1804.04602

Funding

The authors have not received any funding.

Author information

Contributions

All authors contributed to the study conception and design. The first draft of the manuscript was written by Poonam Poonia and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Poonia, P., Ajmera, P.K. Robust Palm-print Recognition Using Multi-resolution Texture Patterns with Artificial Neural Network. Wireless Pers Commun 133, 1305–1323 (2023). https://doi.org/10.1007/s11277-023-10819-0

DOI: https://doi.org/10.1007/s11277-023-10819-0