Abstract

Data replication is an important mechanism for managing data distribution. Its general purpose is to place data in different locations so that it is available to requesters as quickly as possible. Storing multiple copies of data in multiple locations increases availability, fault tolerance, load balancing, and scalability, and decreases bandwidth consumption and response time. In this paper, data replication scenarios are classified by type of method, number of replicas, replica location, energy-aware replication, architecture, replication management, and criteria for selecting the best replica. The parameters of a good replication strategy are identified; a strategy should simultaneously reduce access time, reduce bandwidth consumption, increase the availability of storage resources, and balance replicas. Based on an examination and analysis of recent papers, solutions are classified into dynamic, meta-heuristic, multiple-criteria decision-making, and machine learning solutions. Our analysis shows that multiple-criteria decision-making methods perform best.

1 Introduction

Because huge amounts of information are produced in all fields, it is difficult to maintain and manage data and to access it quickly and effectively. Distributed data storage systems play a fundamental role in increasing data availability. Large amounts of data are created for various purposes, and this data must be managed. In a system whose data is not available locally, data is shared to make better use of it and to increase system performance. Data replication is a method used to improve performance and availability and to reduce system latency.

Data replication strategies are among the most important factors affecting the performance of grid systems. A replication strategy specifies which data should be replicated, when, and where, in order to increase system performance. The data replication problem in distributed systems is NP-hard, so replication solutions aim to reach a state close to optimal. There is therefore a growing need for algorithms that determine a near-optimal replication configuration according to the available resources.

Figure 1 shows a diagram of the study process of data replication, categorized based on past studies. As Fig. 1 shows, this flowchart analyzes and categorizes the challenges and their solutions reported in previous works.

There are two types of replication: static and dynamic. In dynamic replication, resource accesses and requests may change while replication is running. In static replication, the number of replicas and the nodes that hold them are determined at the beginning of the life cycle, during the initial design. After that, no further replication or migration takes place [1], and each replica remains available at the same location until the user deletes it manually or its lifetime expires. The disadvantage of this method is that it cannot adapt to changes in user behavior, which makes it unsuitable when there are large amounts of data and many users; its advantages are that it avoids the overhead of dynamic algorithms and that scheduling is fast [2]. In dynamic replication, replicas are created according to users' file access patterns and changes in the environment, new replicas are placed on nodes according to their storage capacity and bandwidth [3], and because access patterns change, replication is performed periodically [4].

The number of replicas in different locations can be fixed from the beginning, or different data items can be replicated a different number of times. The latter approach relies on the observation that data used most recently, according to the access pattern, is likely to be used again in the future. Popular data is therefore identified by analyzing user access requests and access history and assigning weights, and it is placed close to the users who issued the most requests. This approach increases availability and reduces system bandwidth consumption.

Various papers have addressed where to place data replicas. Some determine the location randomly, distributing data among data centers. Another group places replicas according to the proximity of the data center to the users who issue the most access requests for the data. A third category is owner-based: data is replicated in data centers near the data owner's location. A final group is traffic-based: data centers that carry the most traffic are preferred for data replication.
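
To make the four placement policies concrete, here is a minimal Python sketch. The data center names, the per-data-center request and traffic counts, and the function names are hypothetical; the surveyed papers do not prescribe this interface, and the request-based policy is approximated by the highest request count per data center.

```python
import random

def place_random(data_centers, rng=random):
    """Random policy: every data center is equally likely to receive the replica."""
    return rng.choice(data_centers)

def place_request_based(requests_by_dc):
    """Request-based policy: the data center serving the users who issue the
    most requests, approximated here by the highest request count."""
    return max(requests_by_dc, key=requests_by_dc.get)

def place_owner_based(owner_dc):
    """Owner-based policy: replicate in the data center next to the data owner."""
    return owner_dc

def place_traffic_based(traffic_by_dc):
    """Traffic-based policy: prefer the data center that carries the most traffic."""
    return max(traffic_by_dc, key=traffic_by_dc.get)

# Hypothetical per-data-center statistics for one data item.
requests = {"dc-eu": 120, "dc-us": 300, "dc-asia": 80}
traffic = {"dc-eu": 900, "dc-us": 450, "dc-asia": 200}
print(place_request_based(requests))  # dc-us
print(place_traffic_based(traffic))   # dc-eu
```

The policies differ only in which signal they maximize, which is why request-based and traffic-based placement can pick different data centers for the same data item.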

Energy-oriented replication strategies are part of green computing, which aims to reduce environmental pollution through better storage, lower temperatures, and lower energy consumption. To minimize energy consumption, the total number of active servers should be minimized and the utilization of replicas should be taken into account. Replication strategies can help reduce energy consumption while maintaining high computing capacity. However, energy consumption grows in direct proportion to the number of data replicas, which directly affects the performance and the cost of creating and maintaining new replicas [94]. The main issue is therefore deciding how many replicas are needed and where to place them.
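
Minimizing the number of active servers is essentially a bin-packing problem. The sketch below uses the first-fit decreasing heuristic, a standard bin-packing technique that is not taken from [94] or any surveyed paper, to consolidate replicas onto as few servers as possible. Replica sizes and the server capacity are hypothetical, and a real energy-aware strategy would also weigh replica utilization and placement.

```python
def first_fit_decreasing(replica_sizes, server_capacity):
    """Assign replicas to as few servers as possible (first-fit decreasing).

    Returns one list of replica sizes per active server.
    """
    servers = []  # each entry: [remaining capacity, assigned sizes]
    for size in sorted(replica_sizes, reverse=True):
        if size > server_capacity:
            raise ValueError(f"replica of size {size} exceeds server capacity")
        for server in servers:
            if server[0] >= size:  # first active server with enough room
                server[0] -= size
                server[1].append(size)
                break
        else:  # no active server fits: power on a new one
            servers.append([server_capacity - size, [size]])
    return [assigned for _, assigned in servers]

placement = first_fit_decreasing([4, 8, 1, 4, 2, 1], server_capacity=10)
print(len(placement), placement)  # 2 [[8, 2], [4, 4, 1, 1]]
```

Sorting replicas largest first lets small replicas fill the gaps left on already active servers, so fewer servers need to stay powered on.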

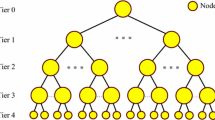

In data replication, the best node can be identified based on network delay and user requests, but the network architecture may impose constraints that make the resulting placement unsuitable. Data replication models in the grid follow peer-to-peer, multi-layer, hierarchical, and hybrid graph architectures. The data replication mechanism depends entirely on the architecture of the distributed system, which can support several types of architecture, so the choice of architecture determines the form of replication.

Data replication is an important mechanism for fast data access and a technique for managing large distributed data. The goal is to place replicas in different locations so that multiple copies of a particular file exist at different sites [1]. Data replication is an effective way to improve load balancing and response time when using distributed data. By storing replicas in different locations, it achieves high performance and availability and reduces bandwidth consumption and cost, while replication transparency lets users treat the multiple replicas of the same source as a single copy.

Based on an examination and analysis of recent papers, solutions are classified into dynamic, meta-heuristic, multiple-criteria decision-making, and machine learning solutions. The most important meta-heuristic algorithms, which are explained in this paper, are the genetic algorithm, ant colony optimization, artificial cloning, the firefly algorithm, and particle swarm optimization (PSO).

Unlike previous review papers, which usually examine replication separately in data grids, distributed databases, clouds, or distributed systems, this article does not focus on a single type of distributed system but categorizes the work according to the common issues raised across them. Researchers in different fields who intend to optimize replication can therefore apply the ideas presented in this paper to their problems. One of the main contributions is that researchers can map the important replication challenges across all types of distributed systems. The paper also introduces recent replication challenges and the most widely used and up-to-date methods for solving them.

The rest of this paper is organized as follows. Sect. 2 presents background on replication and its challenges and problems. Sect. 3 describes the different types of solutions based on previously reviewed papers. Sect. 4 compares the methods to find the best solution approach and offers suggestions for solving existing problems, and Sect. 5 presents the conclusion and open issues.

2 Data Replication Overview and Challenges

This section presents and categorizes the important replication challenges reviewed in various articles.

2.1 Types of Data Replication

Much research has aimed to improve data replication strategies in distributed file systems in order to achieve the desired availability and, above all, data reliability in the distributed environment.

Kingsy Grace and Animegalai [6] describe static replication. In this method, the replication process is fixed from the beginning and treated as part of the configuration: replica locations are determined during the initial design, and at execution time the replicas are placed at those predetermined locations. The applied policy then remains constant until the end, regardless of changes in the application's access pattern or the network architecture. The disadvantage of this method is poor resource utilization and reduced efficiency: if the static replication policy assumes 50 users at the beginning and the number of users later grows, traffic increases. The method therefore suits workloads whose user requests are predictable in advance, and the only way to solve the problem is a redesign; its advantage is simplicity.

Static replication was first applied in the Hadoop Distributed File System [7], where, if the user does not define the replication, each data block is replicated automatically three times. Two replicas are placed near the user's node, and the third is placed randomly on another node. If more than three replicas are needed, the additional replicas are assigned randomly. Static replication reduces latency and increases data availability, but it cannot adapt to all situations, and it does not take the storage capacity of the nodes into account.

In [2], a static centralized data replication algorithm is presented. The method first determines the area in which to place the data replicas and then minimizes the distance between the requester and the node that holds the requested data. It reduces internal bandwidth consumption, balances storage consumption across cluster nodes, and reduces cluster overhead. A probabilistic model is proposed to capture the relationship between data block access, data location, and network traffic. Simulations on a Hadoop cluster show that the method keeps storage consumption balanced across the cluster and reduces internal bandwidth consumption.

The data replication mechanism in the Google File System also uses a static distributed cloud data replication algorithm. In a Google File System cluster, the master places chunk replicas on chunk servers with below-average disk space utilization and spreads them across racks according to a placement policy. The master considers three criteria when deciding where to create a replica [9]:

1- Chunk servers whose disk space utilization is below the average are selected to hold the new replica.

2- The number of recent replica creations on each chunk server is limited.

3- Replicas of a chunk are spread across racks (a rack-aware placement policy).

A chunk is re-replicated when the number of its replicas in the system falls below the value set by the user.
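
The three GFS placement criteria and the re-replication trigger can be sketched as a filter-and-spread procedure. This is a minimal Python illustration under stated assumptions, not GFS code: the `ChunkServer` fields, the `max_recent` limit of 2, and the use of "at or below average" utilization are hypothetical choices.

```python
from dataclasses import dataclass

@dataclass
class ChunkServer:
    name: str
    rack: str
    disk_utilization: float  # fraction of disk in use (0.0 to 1.0)
    recent_creations: int    # replicas created on this server recently

def choose_chunk_servers(servers, num_replicas, max_recent=2):
    """Pick servers for new replicas using the three GFS-style criteria."""
    avg = sum(s.disk_utilization for s in servers) / len(servers)
    # Criteria 1 and 2: at-or-below-average utilization, few recent creations.
    candidates = [s for s in servers
                  if s.disk_utilization <= avg and s.recent_creations < max_recent]
    candidates.sort(key=lambda s: s.disk_utilization)
    chosen, used_racks = [], set()
    # Criterion 3: first take at most one server per rack.
    for s in candidates:
        if len(chosen) == num_replicas:
            break
        if s.rack not in used_racks:
            chosen.append(s)
            used_racks.add(s.rack)
    # If there are fewer racks than replicas, fill up with the remaining candidates.
    for s in candidates:
        if len(chosen) == num_replicas:
            break
        if s not in chosen:
            chosen.append(s)
    return [s.name for s in chosen]

def needs_rereplication(live_replicas, replication_goal):
    """A chunk is re-replicated when its replica count drops below the goal."""
    return live_replicas < replication_goal

servers = [
    ChunkServer("a", "r1", 0.20, 0),
    ChunkServer("b", "r1", 0.30, 0),
    ChunkServer("c", "r2", 0.35, 0),
    ChunkServer("d", "r3", 0.90, 0),  # above average utilization
    ChunkServer("e", "r2", 0.10, 5),  # too many recent creations
]
print(choose_chunk_servers(servers, 3))  # ['a', 'c', 'b']
print(needs_rereplication(2, 3))         # True
```

Server `c` is chosen before the less loaded `b` because it sits on a different rack, which is the point of the rack-aware criterion.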

In [10], a method based on the VCG mechanism is proposed. Using the concept of acceleration and the locations from which files are accessed, it creates replicas and places them on the node that requests the data most often.

[11] proposes a dynamic, decentralized data replica placement and management technique that runs on edge nodes to decrease transmission latency and improve user experience. To balance storage cost against latency reduction, it considers the location, access frequency, latency improvement, and cost of placing replicas on edge nodes. In particular, its load guarantee for edge nodes prevents overload by dynamically adding or removing replicas on edge nodes according to request frequency, and the decentralized, dynamic placement reduces transmission costs. Experimental results show that the algorithm significantly reduces transmission latency and balances the load on edge nodes.

[12] proposes a method for dynamic data replication in the cloud that performs replication while maintaining data availability in the system. It consists of two stages: file request handling and replication operations. In the first stage, a replication list is created for the replica locations; in the second, the resulting replicas are optimized.

Shvachko et al. presented the Hadoop Distributed File System (HDFS) [13]. HDFS is similar to the Google File System, except that it is lightweight and open source. Like a GFS cluster, an HDFS cluster consists of three components: a NameNode (the master), multiple DataNodes that store data, and clients. An application can specify the number of replicas of a file, and the block size can also be configured.

In [14], a Byzantine fault tolerance (BFT) framework is presented that uses replication as its fault tolerance approach. It selects voluntary nodes based on their quality-of-service characteristics and performance reliability. Tests in various cloud environments show that robustness is guaranteed when up to f out of 3f + 1 available nodes are faulty. [15] claims that the number of replicas can be reduced by lowering this requirement to 2f + 1 while keeping the same properties as traditional algorithms, and reducing the number of replicas reduces the cost of cloud infrastructure.
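
The replica counts behind these two bounds are simple arithmetic. The helper names below are hypothetical; they compute only how many replicas each bound requires and how many faults a given group size tolerates, not a BFT protocol.

```python
def replicas_needed(f, classic=True):
    """Replicas needed to tolerate f faulty replicas:
    3f + 1 for classic BFT, 2f + 1 for the reduced bound."""
    if f < 0:
        raise ValueError("f must be non-negative")
    return 3 * f + 1 if classic else 2 * f + 1

def faults_tolerated(n, classic=True):
    """Largest f such that n replicas still satisfy the chosen bound."""
    return (n - 1) // 3 if classic else (n - 1) // 2

for f in range(1, 4):
    print(f, replicas_needed(f), replicas_needed(f, classic=False))
# 1 4 3
# 2 7 5
# 3 10 7
```

The saving grows linearly with f: each additional tolerated fault costs three replicas under the classic bound but only two under the reduced one.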

[16] presents a fault tolerance system that improves on traditional BFT. The model uses passive and active replicas, and the system state is updated periodically. The results show that the system tolerates the failure of up to f out of 2f + 1 replicas; that is, with f + 1 active replicas and no intrusion, it guarantees execution.

Dynamic replication must answer three questions [5]: which data file should be replicated; when, and how many, new replicas should be created according to user needs, with the aim of reducing waiting time and increasing speed; and where new replicas should be placed according to the success rate of system tasks and bandwidth.

In many articles, dynamic replication methods are divided according to two parameters:

• Dynamic replication based on distance.

[55] presents collaborative networks called NC, which operate under the supervision of a set of nodes and are based on nearest-replica selection. The selection mechanism, called Rigel, determines the best replica and the shortest access time to each site, and components called the NC Computing System and the NC Storage Infrastructure offer users a scalable replica selection solution. The method reduces response time and improves availability, but it does not provide scalability, fault tolerance, reliability, or load balancing, nor does it reduce bandwidth consumption.

[56] presents a simple method based on the k-nearest-neighbor (KNN) rule that selects the best replica using local information, namely the files transferred previously. It predicts the transfer time between sites, and the predicted transfer time is used to choose the best replica among the different sites.
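
A minimal sketch of KNN-based replica selection, assuming file size is the only feature and that each site keeps a history of (size, transfer time) pairs from previous transfers. The site names, history format, and k = 3 are hypothetical, and [56] may use different features.

```python
def knn_predict(history, file_size, k=3):
    """Predict transfer time as the mean time of the k past transfers whose
    file sizes are closest to file_size."""
    nearest = sorted(history, key=lambda h: abs(h[0] - file_size))[:k]
    return sum(t for _, t in nearest) / len(nearest)

def best_replica(histories, file_size, k=3):
    """Return the site with the lowest predicted transfer time."""
    predictions = {site: knn_predict(h, file_size, k)
                   for site, h in histories.items() if h}
    return min(predictions, key=predictions.get)

# Hypothetical histories: (file size in MB, observed transfer time in s).
histories = {
    "site-a": [(100, 10.0), (200, 21.0), (300, 29.0), (1000, 100.0)],
    "site-b": [(100, 5.0), (200, 11.0), (300, 14.0)],
}
print(knn_predict(histories["site-a"], 200))  # 20.0
print(best_replica(histories, 200))           # site-b
```

Because the prediction uses only locally recorded transfers, no extra network probing is needed before choosing a replica.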

• Dynamic replication based on response time.

When the same file is placed on different sites, its response time and QoS differ from site to site. The quality factors considered are reliability, security, and response time. This model improves availability, response time, and reliability, but it does not address fault tolerance, scalability, load balancing, or bandwidth consumption [57].

[58] presents a data replication service named GRESS whose replica selection reduces response time. Response time is calculated from parameters such as bandwidth and access latency. The model does not provide scalability, fault tolerance, load balancing, or reliability.
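
The response-time estimate can be sketched as access latency plus transfer time. The formula `latency + size / bandwidth` and the site figures below are simplifying assumptions for illustration, not GRESS's exact cost model.

```python
def estimated_response_time(file_size_mb, bandwidth_mb_s, latency_s):
    """Simplified estimate: access latency plus size divided by bandwidth."""
    return latency_s + file_size_mb / bandwidth_mb_s

def select_replica(sites, file_size_mb):
    """sites maps a site name to (bandwidth in MB/s, latency in s)."""
    return min(sites, key=lambda s: estimated_response_time(file_size_mb, *sites[s]))

# Hypothetical sites holding the same file.
sites = {"s1": (10.0, 0.01), "s2": (50.0, 0.5), "s3": (20.0, 0.1)}
print(select_replica(sites, 1))    # s1: low latency wins for small files
print(select_replica(sites, 100))  # s2: high bandwidth wins for large files
```

The example shows why both parameters matter: the best replica for a small file can be the worst choice for a large one.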

[59] presents replica selection algorithms that adapt to network bandwidth fluctuations in wide-area environments, using dynamic network information to choose among replicas of the same file. For each replica site, the bandwidth and access latency observed for previously transferred files are recorded. These algorithms do not address scalability, fault tolerance, load balancing, or reliability.

[60] presents MLFU, which combines two dynamic replication methods, MFU (most frequently used) and LFU (least frequently used), both widely used in settings such as disk and cache management. If there is enough storage space for replication, one of the two methods is invoked to select the file. Finally, LFU is used to select a destination cluster that has enough space to hold the replica.
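
The two frequency rules can be sketched as follows. This is a generic MFU/LFU illustration with hypothetical file names, sizes, and access counts, not the exact MLFU procedure of [60]: MFU picks the most accessed file that is not yet stored locally, and LFU is shown here in its common cache-eviction role, choosing which local files to remove when space is short.

```python
def pick_file_to_replicate(access_counts, already_local):
    """MFU: the most frequently accessed file that is not yet stored locally."""
    candidates = {f: c for f, c in access_counts.items() if f not in already_local}
    return max(candidates, key=candidates.get) if candidates else None

def make_room_lfu(local_files, sizes, access_counts, free_space, needed):
    """LFU: evict least frequently used local files until `needed` space is free.

    Returns (evicted files, resulting free space); the caller must check whether
    enough space was actually freed.
    """
    evicted = []
    for f in sorted(local_files, key=lambda f: access_counts.get(f, 0)):
        if free_space >= needed:
            break
        evicted.append(f)
        free_space += sizes[f]
    return evicted, free_space

counts = {"a": 50, "b": 5, "c": 30, "d": 1}
sizes = {"a": 40, "b": 20, "c": 10, "d": 15}
local = {"b", "d"}
target = pick_file_to_replicate(counts, local)
print(target)                                                     # a
print(make_room_lfu(local, sizes, counts, 10, sizes[target]))     # (['d', 'b'], 45)
```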

Ribeiro et al. presented a dynamic replication method called Latest Access Largest Weight (LALW) [61]. The method is built around a data replication management component. File weights are evaluated using the half-life concept: the more frequently a file is accessed, the greater its weight, and older accesses count progressively less. A popular file is selected for replication, and the appropriate number of replicas and their locations are calculated. The results show that the method performs better than the compared approaches.
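
The half-life idea can be sketched by halving the contribution of each access interval as it ages. The weighting `count * 2**-age` and the file names are assumptions illustrating the general concept; the exact LALW formula may differ.

```python
def half_life_weight(counts_per_interval):
    """counts_per_interval is ordered oldest first; the newest interval has age 0,
    and each older interval counts half as much as the next newer one."""
    n = len(counts_per_interval)
    return sum(c * 2 ** -(n - 1 - i) for i, c in enumerate(counts_per_interval))

def most_popular(history):
    """history maps a file name to its access counts per interval (oldest first)."""
    return max(history, key=lambda f: half_life_weight(history[f]))

history = {
    "f1": [40, 0, 0],  # heavily used long ago: weight 10.0
    "f2": [0, 0, 12],  # used only recently: weight 12.0
    "f3": [4, 8, 4],   # steady use: weight 9.0
}
print(most_popular(history))  # f2
```

`f1` has the most accesses in total, but halving old intervals lets the recently popular `f2` win, which is how the half-life concept tracks shifting access patterns.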

[39] presents a hierarchical replication method based on data grid clustering, also called LALW, which extends the bandwidth-hierarchy-based HRS strategy. HRS does not consider data access patterns and therefore cannot adapt to changing user access behavior. This method reduces the cost of transferring replicas from one cluster to another.

[62] presents an adaptive data replication algorithm called PFRF, designed for a star-shaped network with limited storage space. In each period, the algorithm calculates file access rates to decide whether replica locations must change as user behavior changes. It improves access and reduces bandwidth consumption.

Shorfuzzaman et al. presented PBRP, a popularity-based replica placement method for hierarchical data grids [63]. The popularity of a file among clients is determined from its access rate, and to reduce access delay, replicas are placed close to the clients. The method depends on a popularity threshold that is computed dynamically from the data request arrival rate. Simulations show that the method performs better in execution time and bandwidth consumption than other dynamic replication methods.
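
A dynamic popularity threshold can be sketched as a multiple of the mean number of requests per file in the current period, so that it rises and falls with the request arrival rate. The mean-based threshold and the `factor` parameter are assumptions for illustration, not PBRP's published formula.

```python
def popular_files(access_counts, factor=1.0):
    """Return files whose request count exceeds factor x the mean count
    in the current period (threshold recomputed every period)."""
    if not access_counts:
        return []
    threshold = factor * sum(access_counts.values()) / len(access_counts)
    return sorted(f for f, c in access_counts.items() if c > threshold)

period = {"a": 10, "b": 2, "c": 6, "d": 2}  # hypothetical requests this period
print(popular_files(period))              # ['a', 'c']
print(popular_files(period, factor=1.5))  # ['a']
```

Raising `factor` makes replication more selective, trading lower storage use for fewer files served from nearby replicas.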

2.2 The Number of Replications

The number of replicas can be chosen in two ways. In the first, the same value is used for all data: every data item is replicated a fixed number of times in the system. In the second, the number of replicas of each data item is determined dynamically in each period according to its popularity. To make optimal use of system resources and increase system efficiency, rarely used data is replicated less and frequently accessed data is replicated more, so each item's replica count can change from period to period.

[17] presents a dynamic data replication strategy (D2RS) that analyzes the relationship between availability and the number of replicas. It detects popular data using a threshold, calculates the appropriate number of replicas to maximize effectiveness, and then places the replicas evenly among nodes close to the users who have made the most requests.

[18] presents cost-effective dynamic replication management (CDRM), which models the relationship between data availability and the number of replicas on the Hadoop file system platform. The minimum number of replicas for each data item is calculated so as to meet the required availability, and data is placed according to the changing workload and capacity of the storage nodes. Placement considers each node's capacity and blocking probability, which reflects how many connections the node can accept at that moment to serve requests. By adjusting the number of replicas and their locations according to load changes and node capacity, the method dynamically redistributes load among nodes in a heterogeneous cloud. The results show that data availability increases as the number of replicas increases.
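
Under the common simplifying assumption that replicas fail independently, the probability that at least one of r replicas is available is 1 - p^r for a per-replica failure probability p. The sketch below searches for the smallest r that meets a target availability; the function names and the `max_replicas` cap are hypothetical, and CDRM's own model may include further factors.

```python
def availability(replica_failure_probs):
    """Probability that at least one replica is available (independent failures)."""
    unavailable = 1.0
    for p in replica_failure_probs:
        unavailable *= p
    return 1.0 - unavailable

def min_replicas_for_availability(failure_prob, target, max_replicas=20):
    """Smallest replica count whose availability reaches the target."""
    for r in range(1, max_replicas + 1):
        if availability([failure_prob] * r) >= target:
            return r
    raise ValueError("target availability not reachable within max_replicas")

print(min_replicas_for_availability(0.1, 0.995))  # 3
print(min_replicas_for_availability(0.2, 0.99))   # 3
```

Each extra replica multiplies the unavailability by p, which is why availability rises quickly with the first few replicas and then saturates.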

[19] presents a centralized replication algorithm called RCP that predicts the required number of replicas for each file in each region. When there is not enough storage space for a new replica, RCP deletes the least used file.

[20] proposes a Markov-based replication method for cloud computing in which a gray Markov chain prediction model calculates the number of replicas required for each file block. Replica placement is then decided by a genetic algorithm with fast sorting. The algorithm improves response time, write latency, read throughput, and workload balance across nodes.

[21] presents a data-aware replication algorithm that calculates the number of replicas from the data in the system and uses the knapsack problem to reduce replication cost by moving replicas from high-cost nodes to low-cost nodes.
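
The knapsack idea can be sketched as a 0/1 knapsack that chooses which replicas to host on a node with limited capacity so that the total benefit, for example the cost saved by serving data locally, is maximized. Item names, sizes, and benefits are hypothetical, and [21] may formulate the problem differently.

```python
def knapsack_select(items, capacity):
    """items: list of (name, integer size, benefit).
    Returns (best total benefit, sorted names of chosen replicas)."""
    n = len(items)
    # best[i][c]: max benefit using the first i items within capacity c.
    best = [[0] * (capacity + 1) for _ in range(n + 1)]
    for i, (_, size, benefit) in enumerate(items, start=1):
        for c in range(capacity + 1):
            best[i][c] = best[i - 1][c]  # skip item i
            if size <= c:                # or take it if it fits
                best[i][c] = max(best[i][c], best[i - 1][c - size] + benefit)
    # Backtrack to recover which items were chosen.
    chosen, c = [], capacity
    for i in range(n, 0, -1):
        if best[i][c] != best[i - 1][c]:
            name, size, _ = items[i - 1]
            chosen.append(name)
            c -= size
    return best[n][capacity], sorted(chosen)

replicas = [("f1", 5, 10), ("f2", 4, 40), ("f3", 6, 30), ("f4", 3, 50)]
print(knapsack_select(replicas, capacity=10))  # (90, ['f2', 'f4'])
```

Dynamic programming is exact for integer sizes; for very large capacities, a greedy benefit-per-size heuristic is a common cheaper alternative.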

2.3 How to Determine the Data Location

In [2], the CRMS method addresses the important challenge of replica placement. Placing data near the locations that issue the most access requests saves the bandwidth used to transfer data between users and the system. To reduce internal traffic, the method places replicas according to measured user access patterns. Experiments on a cluster similar to the Hadoop file system show that the method reduces internal bandwidth consumption while keeping storage balanced across the cluster.

Kumar et al. presented SWORD, a workload-aware data placement method that reduces system resource consumption [23]. SWORD uses query span to measure load. In each step, it runs LMBR to move part of the data between storage devices so that query span is reduced as much as possible. Data is placed so that the system can answer all requests with the fewest transactions and the least use of resources and bandwidth. With incremental repartitioning, data is moved step by step to achieve maximum benefit at minimum cost. The reported results show a 31% improvement while migrating 21% of the data, and a good balance between the degree of parallelism and response time.

In [24], a flexible, highly fault-tolerant data replication method called RFH is presented. It is a decentralized, traffic-based algorithm in which the decision to replicate, migrate, or delete data on each virtual machine is based on the node's weight. The weight is calculated from the traffic passing through the node: the node carrying the most routing traffic is selected as the destination for replication or migration, so data is replicated on nodes according to the traffic they see. A query is a request for information sent by a client; as it is routed toward its target, it generates traffic in the data centers, and RFH places replicas along these necessary routing paths. Because request-based placement approaches perform poorly when traffic changes, RFH was designed to keep providing reasonable performance under such changes. Simulation results show that this traffic-based policy performs better than request-based, owner-based, and random placement methods.

Janpet et al. proposed RADR, a method for shortening access time [25]. Replicas are stored in data centers to prevent data loss, and the method is aware of data center locations. It is inexpensive, has no storage limitations, and expands accessibility. Its disadvantage is that it does not specify how to replicate data to data centers close to users, which would give users a shorter path to the data.

[26] presents DRDS, a quality-of-service-aware dynamic replica deletion method that aims to save disk space and maintenance cost. Extra replica blocks consume more disk space and significantly increase maintenance costs, without necessarily helping to meet quality-of-service requirements. Experimental results show that the method saves disk space and reduces maintenance cost for distributed storage systems while increasing system availability and efficiency. The method provides a function called OEF that calculates the appropriate number of replicas needed to meet users' performance and availability requirements. It consists of three steps:

1- Determining the minimum number of copies for each file to guarantee the availability of user data in the system.

2- Calculating the appropriate number of replications that should be maintained in the system to ensure performance requirements.

3- Identifying non-essential duplicates and removing them from disks to free up storage space.

2.4 Replication by the Energy-oriented Method

Recent research shows that large-scale data centers consume large amounts of electricity [22]. In data centers, computing resources, power distribution, and cooling systems work together to provide a high level of reliability, and each consumes part of the energy. Two methods can reduce this energy consumption: turning components off, and scaling down their performance so that they do not run at maximum capacity. For greater reliability, high performance, and low latency, data should be replicated close to the physical infrastructure of cloud applications or close to clients.

In [27], an energy-efficient data replication method is presented that replicates data between data centers and within the data center. The purpose of this method is to reduce system-level energy consumption, network bandwidth consumption, and communication delays in the data center network.

[28] presents a packet-level network simulator focused on cloud communication and designed for energy-aware data centers, which provides information about the energy consumption of data center components (servers, switches, etc.). Built on the NS2 network simulator, it fully implements the link layer protocol and also allows other protocols to be simulated. Its disadvantage is that it suits only small-scale data centers, because simulation requires a lot of time and memory.

[29] introduces a secure dynamic replica deployment strategy based on a Competitive Swarm Optimizer (CSO) that improves energy consumption and network bandwidth usage. An intelligent fuzzy inference system with four inputs (centrality, energy, storage utilization, and load) determines the appropriate data center for each new replica. Evaluated with CloudSim, the strategy reduces total energy consumption and response time compared with related algorithms, and it significantly outperforms previous replication methods in storage utilization, effective network utilization, average delay, load variance, number of replicas, efficiency, and bandwidth consumption.

Seguela et al. [8] compared different data replication strategies: some aim to minimize energy consumption, while others aim to maximize profit. The results showed that few strategies address both issues at once, even though industry strongly demands lower energy consumption. The PEPR strategy [133] reduces cost without affecting energy consumption; Bro et al. [132] reduced cost at the expense of higher energy consumption; and MORM [134] consumes less energy but incurs higher cost because of its large number of replicas.

2.5 Architecture Models of Data Replication

Distributed system architectures take two forms, flat and hierarchical. The architectures described in the following sections strongly affect data replication and its results.

2.5.1 Decentralized Peer-to-peer Architecture

In this type of network, each peer can act as both a server and a client. Nodes operate independently, without intervention from a central authority, and typically have enough capacity to act as server and client at the same time. This decentralized structure is more volatile than other distributed system architectures, because nodes can connect to any part of the network and leave without notice. Replication strategies for this architecture must account for the highly dynamic nature of peer-to-peer networks [30].

Kavita et al. [31] presented a decentralized method for creating replicas in a peer-to-peer network, in which replication decisions are based on a set of measurements. Compared with other methods, it has no single point of failure and does not depend on any central monitoring scheme. One of its disadvantages is that it can create unnecessary replicas.

In [32], a dynamic replication strategy for data availability in large-scale peer-to-peer networks is presented. In this method, the peers holding replicas automatically perform decentralized replication when availability is requested, so that a threshold level of availability is always maintained. Because decisions are made in a decentralized way, there is no single point of failure. The method is suitable for finding the number of replicas and the best host for each replica; it improves availability and reduces response time and bandwidth consumption, but it does not address scalability, reliability, fault tolerance, or load balancing.
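The threshold idea can be illustrated with a minimal sketch. It assumes that each peer holding a replica is independently online with a known probability, so the availability of a file is 1 − ∏(1 − p_i); this independence model and the names `file_availability`, `replicas_needed`, and `target` are hypothetical and are not taken from [32].

```python
from math import prod

def file_availability(online_probs):
    """Probability that at least one replica holder is online,
    assuming peers fail independently (an illustrative assumption)."""
    return 1.0 - prod(1.0 - p for p in online_probs)

def replicas_needed(online_probs, candidate_prob, target):
    """Extra replicas, each on a peer that is online with probability
    `candidate_prob`, needed to lift availability to at least `target`."""
    if candidate_prob <= 0.0:
        raise ValueError("new replicas must have a non-zero online probability")
    probs = list(online_probs)
    extra = 0
    while file_availability(probs) < target:
        probs.append(candidate_prob)  # hypothetically place one more replica
        extra += 1
    return extra

# Two holders, each online half of the time, give availability 0.75;
# reaching 0.95 needs three more replicas on similar peers.
print(file_availability([0.5, 0.5]))
print(replicas_needed([0.5, 0.5], 0.5, 0.95))
```

Each peer could run such a check locally and trigger replication when the estimate falls below the threshold, which keeps the decision decentralized.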

Chalal et al. presented a site-priority replication strategy in [33]. In this method, identical replicas are placed far from each other, while different replicas are placed near each other. Dijkstra’s algorithm is used to find the shortest path between two sites. The method focuses on efficiency and reduces access time: it increases availability and reduces response time and bandwidth consumption, but it does not improve scalability, reliability, fault tolerance, or load balance.
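Because [33] relies on Dijkstra’s algorithm to find the shortest path between sites, a minimal sketch of that step follows. It assumes the network is given as an adjacency dictionary with non-negative link costs (for example, latencies); the site names and costs are made up for illustration.

```python
import heapq

def dijkstra(graph, source):
    """Shortest-path cost from `source` to every reachable site.
    `graph` maps a site to a dict {neighbour: non-negative link cost}."""
    dist = {source: 0}
    heap = [(0, source)]
    while heap:
        d, site = heapq.heappop(heap)
        if d > dist.get(site, float("inf")):
            continue  # stale heap entry: a shorter path was already found
        for neighbour, cost in graph.get(site, {}).items():
            nd = d + cost
            if nd < dist.get(neighbour, float("inf")):
                dist[neighbour] = nd
                heapq.heappush(heap, (nd, neighbour))
    return dist

# Hypothetical four-site network with symmetric link costs.
network = {
    "A": {"B": 4, "C": 1},
    "B": {"A": 4, "C": 2, "D": 5},
    "C": {"A": 1, "B": 2, "D": 8},
    "D": {"B": 5, "C": 8},
}
print(dijkstra(network, "A"))  # {'A': 0, 'B': 3, 'C': 1, 'D': 8}
```

The resulting distances could then feed a placement rule such as keeping identical replicas far apart, although the exact rule of [33] is not reproduced here.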

A peer-to-peer model is proposed in [34]. In this model, peers operate independently within a peer group, and all peers cooperate to provide a common set of services. Peers can join or leave the group at any time. The model targets availability, reliability, scalability, and response time, and it takes the number of nodes and the average bandwidth consumption as its basic evaluation parameters. It increases availability and scalability and reduces response time and bandwidth consumption, but it does not improve reliability, fault tolerance, or load balancing.

2.5.2 Decentralized Multilayer Architecture

This architecture has a tree structure. It is used to build data grids and provides cost-effective techniques for sharing resources among users [35].

Ranganathan et al. presented six different replication strategies for different access patterns: (1) No Replication: only the root holds the file; (2) Best Client: a replica is created at the client that accesses the file most often; (3) Cascading: a replica is created on the path towards the best client; (4) Plain Caching: the requesting client stores a local copy of the file on its first request; (5) Caching plus Cascading: a combination of plain caching and cascading; (6) Fast Spread: a copy of the file is stored at every node along the path to the requesting client. Response time and bandwidth consumption were evaluated. This model provides scalability, availability, and reliability and reduces response time and bandwidth consumption, but it does not improve fault tolerance or load balance [36].
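Three of these strategies can be sketched on a tree given as child-to-parent links. This is a minimal illustration, assuming a single file, a list of client requests, and hypothetical helper names (`path_to_root`, `best_client`, `cascading_target`, `fast_spread`); it is not the simulator used in [36].

```python
from collections import Counter

def path_to_root(parent, node):
    """Nodes from `node` up to and including the root of the hierarchy."""
    path = [node]
    while parent[node] is not None:
        node = parent[node]
        path.append(node)
    return path

def best_client(accesses):
    """Best Client: the client that requested the file most often."""
    return Counter(accesses).most_common(1)[0][0]

def cascading_target(parent, holder, accesses):
    """Cascading: the next node one level below `holder` on the path
    towards the best client (`holder` must be an ancestor of it)."""
    path = path_to_root(parent, best_client(accesses))
    idx = path.index(holder)
    return path[idx - 1] if idx > 0 else holder

def fast_spread(parent, requester):
    """Fast Spread: every node on the path root -> requester gets a copy."""
    return list(reversed(path_to_root(parent, requester)))

# Hypothetical hierarchy: root -> r1, r2; r1 -> c1, c2; r2 -> c3.
parent = {"root": None, "r1": "root", "r2": "root",
          "c1": "r1", "c2": "r1", "c3": "r2"}
accesses = ["c1", "c2", "c1", "c3", "c1"]
print(cascading_target(parent, "root", accesses))  # r1
print(fast_spread(parent, "c3"))                   # ['root', 'r2', 'c3']
```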

In [37], the available memory at different nodes and the available bandwidth between nodes are taken into account. The method achieves optimal access to global data through optimal access to local data, and each node can reliably perform replication locally within its own area. This model provides scalability and availability and reduces response time and bandwidth consumption, but it does not improve fault tolerance, load balance, or reliability.

Chen et al. proposed a dynamic replication algorithm for data grids called PHFS, an enhanced version of Fast Spread. It tries to anticipate future needs and replicates data hierarchically to increase local availability and thereby improve performance. In addition to replicating data hierarchically across layers, the method exploits the multi-tier grid structure to obtain local access and is intended for read access to compressed grid data. Experiments show that it achieves better performance and lower delay than other methods [38].

2.5.3 Decentralized Hierarchical Architecture

Creating clusters in the network is one way to reduce data transmission over long-distance paths, since signaling and control from the upper level can then reduce the amount of data transferred.

In [39], an efficient hierarchical cluster scheduling (HCS) method and a hierarchical replication strategy (HRS) are proposed to exploit high bandwidth, fast data access, and low communication cost. The HCS scheduler considers the access cost and the number of jobs waiting in the queue of a node. Using an optimizer, HRS selects the best cluster region and then the best site within it, preferring the clusters with the lowest connection time and the lowest connection cost. This model improves availability and reduces response time, but it does not improve scalability, fault tolerance, reliability, or load balance, nor does it reduce bandwidth consumption.

Mansoori et al. presented an improved dynamic replication strategy based on the Latest Access Largest Weight (LALW) strategy, called the Dynamic Hierarchical Replication (DHR) algorithm, which reduces execution time. The algorithm places replicas at appropriate sites: the best site for a replica is the one with the highest number of accesses to that particular file, and the method tries to keep the number of replicas small. It builds a list of all the storage elements that have requested a file and places the replica in the first storage element on the list; because the replica is not placed at every requesting location, storage cost and execution time are reduced. If there is enough storage space, the selected file is replicated. If the file is already available in the local region, it is accessed remotely instead of being replicated. If there is not enough space and the requested file is not available locally, the algorithm builds a list of the least recently used replicas and removes files from that list until enough space is freed. Compared with other algorithms, it uses less storage space and, because it avoids creating unnecessary replicas, it performs better as file size increases. This method improves availability and reduces response time, but it does not improve scalability, fault tolerance, reliability, or load balance, nor does it reduce bandwidth consumption [40].
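The placement and replacement steps described for DHR can be sketched as follows. This is a simplified illustration, assuming per-site access counts, a free-space figure for the chosen site, and a last-access timestamp for each stored replica; the function names are hypothetical, and the eviction loop is a plain least-recently-used policy standing in for the exact rule in [40].

```python
def should_replicate(file, region_files):
    """Skip replication when the file already exists in the region;
    the request is then served by remote access within the region."""
    return file not in region_files

def choose_site(access_counts):
    """Best site for a new replica: the one that accessed the file most."""
    return max(access_counts, key=access_counts.get)

def make_room(replicas, free, needed):
    """Evict least recently used replicas until `needed` units fit.

    `replicas` maps file name -> (size, last_access_time) and is modified
    in place only if enough space can be freed. Returns the evicted files,
    or None when even evicting everything would not be enough.
    """
    evicted = []
    for name, (size, _) in sorted(replicas.items(), key=lambda kv: kv[1][1]):
        if free >= needed:
            break
        evicted.append(name)
        free += size
    if free < needed:
        return None
    for name in evicted:
        del replicas[name]
    return evicted

site = choose_site({"S1": 3, "S2": 7, "S3": 5})                    # 'S2'
stored = {"f1": (40, 10), "f2": (30, 5), "f3": (50, 20)}
print(site, make_room(stored, free=20, needed=60))                  # S2 ['f2', 'f1']
```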

In [41], a heuristic method that jointly schedules jobs and replicates files in different parts of a data grid is presented. It reduces the makespan, that is, the time to execute all the jobs, as well as the time to transfer all the files. These goals conflict, because improving one can worsen the other: using high-capacity links to achieve the lowest transfer time requires a lot of bandwidth in the system. The method is therefore highly dependent on the local scheduling of computing nodes. One disadvantage of this dynamic hierarchical replication approach is that it does not check data security when replicating and replacing files.

Perez et al. use a data replication method called the Branch Replication Scheme (BRS) to address scalability and the optimization of replication in data grid environments. In this method, each replica is formed as a set of sub-replicas organized in a hierarchical tree topology. The model is suited to parallel input/output techniques, and simulation results show improved data access performance and the feasibility of BRS for a data grid [42].

2.5.4 Decentralized Hybrid Architecture

This type of architecture combines several other architecture types. The authors of [43] studied the replication problem and provide a set of replication management services; their protocols achieve high availability while reducing bandwidth consumption, improving fault tolerance, and increasing scalability, reliability, and load balance. The model combines a tree structure with a ring topology. It provides availability, scalability, fault tolerance, reliability, and load balancing and reduces bandwidth consumption, but it does not reduce response time.

One replication method for hybrid networks is peer-to-peer content delivery. This method clusters users based on their predicted needs and their lifetime in the network. By replicating content at multiple locations, forwarding each request to the nearest replica, and preventing content from being lost when peers leave the network, it effectively reduces the search delay of user requests and increases system reliability. The method was simulated with the NS2 simulator and the results were analyzed. Taking various parameters into account in content replication for hybrid networks improved operational capacity and the use of available network resources and, more importantly, reduced customer response time [44].

In [45], the optimal allocation of sensitive data is analyzed. The solution uses a two-layer grid topology. The upper layer is represented by a general graph that contains a multi-cluster network, and replica allocation is split into two problems: determining the clusters in which replicas need to be shared, and optimally sharing within those clusters by determining the number of shared replicas required in the clusters and at other locations. This method improves availability but does not improve scalability, fault tolerance, reliability, or load balance, nor does it reduce bandwidth consumption or response time.

Rahman et al. present a multi-objective method for replica placement that uses the p-median and p-center models to select the nodes that host replicas. The p-median model places replicas so as to optimize the average request response time, while the p-center model selects replica nodes so as to reduce the maximum response time. Their strategy minimizes the p-median objective while also taking the p-center objective into account; in this way, they minimize the average response time without leaving any requester too far from a replica node. Simulation results show that the multi-objective strategy achieves a better response time than single-objective strategies based on the p-median or p-center model alone [46].
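The two objectives can be compared on a toy instance. The sketch below assumes a distance matrix whose rows are requesters and whose columns are candidate nodes, and it enumerates placements exhaustively, which is only feasible for small inputs. The bounded variant (minimize the p-median cost subject to a cap on the p-center cost) is one simple way to combine both models and is not necessarily the exact formulation of [46]; the function names are hypothetical.

```python
from itertools import combinations

def p_median_cost(dist, sites):
    """Average distance from each requester to its nearest replica."""
    return sum(min(row[s] for s in sites) for row in dist) / len(dist)

def p_center_cost(dist, sites):
    """Largest distance from any requester to its nearest replica."""
    return max(min(row[s] for s in sites) for row in dist)

def place(dist, p, objective):
    """Exhaustively pick the p candidate nodes minimising `objective`."""
    return min(combinations(range(len(dist[0])), p),
               key=lambda s: objective(dist, s))

def place_bounded(dist, p, max_center):
    """Minimise the p-median cost among placements whose p-center cost
    does not exceed `max_center`; None if no placement qualifies."""
    feasible = [s for s in combinations(range(len(dist[0])), p)
                if p_center_cost(dist, s) <= max_center]
    return min(feasible, key=lambda s: p_median_cost(dist, s)) if feasible else None

# Three requesters near node 0 and one remote requester near node 2.
dist = [[1, 4, 6], [1, 4, 6], [1, 4, 6], [10, 5, 2]]
print(place(dist, 1, p_median_cost))  # (0,)  best average, but worst case 10
print(place(dist, 1, p_center_cost))  # (1,)  best worst case
print(place_bounded(dist, 1, 6))      # (1,)
```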

The RTM and RCPL methods presented in [47] reconstruct the task graph for proper scheduling using two strategies: task duplication and task merging. With duplication, the time to recompute a task replaces the time to transfer its output. Merging tasks reduces communication cost when the communication cost between tasks is higher than their execution time. Efficient scheduling is needed to increase efficiency, and in grid networks the heterogeneity of resources increases the complexity of the scheduling algorithms that can be used.

Li et al. propose a dynamic replication strategy called Minimize Data Missing Rate (MinDmr). To measure and manage the availability of the whole system, it introduces two metrics, the System File Missing Rate (SFMR) and the System Byte Missing Rate (SBMR). The first is the ratio of the number of missing files to the total number of requested files, and the second is the ratio of inaccessible bytes to the total number of requested bytes. To improve SFMR or SBMR, each file is weighted according to its availability, its expected number of future accesses, its number of replicas, and its size. Files with lower weights are called cold data and files with higher weights are called hot data. During replica replacement, cold data is removed first, and hot data is more likely to be replicated. In the performance evaluation, this method achieves a better execution time than other strategies [48].
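The two metrics follow directly from their definitions, as the sketch below shows for a request log of `(file_size, was_available)` pairs. The `weight` function is hypothetical: it only reflects the qualitative idea that popular, poorly available, scarce, and small files count as hot; it is not the formula published in [48].

```python
def sfmr(requests):
    """System File Missing Rate: missing files / requested files.
    `requests` is a list of (file_size, was_available) pairs."""
    missing = sum(1 for _, ok in requests if not ok)
    return missing / len(requests)

def sbmr(requests):
    """System Byte Missing Rate: unavailable bytes / requested bytes."""
    total = sum(size for size, _ in requests)
    missing = sum(size for size, ok in requests if not ok)
    return missing / total

def weight(availability, expected_accesses, n_replicas, size):
    """Hypothetical weight: higher for popular, poorly available,
    scarce and small files (hot); lower values mark cold files."""
    return expected_accesses * (1.0 - availability) / (n_replicas * size)

log = [(100, True), (300, False), (100, True), (500, False)]
print(sfmr(log), sbmr(log))  # 0.5 0.8

# Eviction order during replacement: coldest (lowest weight) first.
files = {"a": (0.9, 10, 2, 100), "b": (0.5, 50, 1, 200)}
print(sorted(files, key=lambda f: weight(*files[f])))  # ['a', 'b']
```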

3 Proposed Solutions

The replication problems addressed in different articles have been solved in different ways, each with its own advantages and disadvantages; the common aim is to create as few replicas as possible while maximizing access to existing files [49–54]. In this paper, the available solutions are reviewed and grouped into four categories.

3.1 Dynamic Replication Algorithm

To evaluate the performance of replication mechanisms, the DRepsim simulator was presented together with two algorithms for multi-tier data grids that reduce data access response time: simple bottom-up (SBU) and aggregate bottom-up (ABU). SBU considers the file access history of individual sites, whereas ABU aggregates the file access history for the whole system. In ABU, a node sends the aggregated records of past accesses to the layer above it, and each layer repeats the process until the records reach the root. Popular files are replicated at the lowest level and less popular ones at higher levels, and simulation results show that ABU achieves lower response time and bandwidth consumption than SBU [64].
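The aggregation step that distinguishes ABU from SBU can be sketched as follows. The sketch assumes that each leaf keeps a per-file access count and knows its parent; the placement rule (replicate at the lowest node on the requester’s path whose aggregated count reaches a threshold) is a simplification for illustration, and the names are hypothetical.

```python
from collections import Counter

def aggregate_up(parent, leaf_counts):
    """ABU-style aggregation: every node receives the summed access
    counts of all leaves beneath it, all the way up to the root."""
    totals = {}
    for leaf, counts in leaf_counts.items():
        node = leaf
        while node is not None:
            totals.setdefault(node, Counter()).update(counts)
            node = parent[node]
    return totals

def replica_node(parent, totals, leaf, file, threshold):
    """Walk up from `leaf`; return the lowest node whose aggregated count
    for `file` reaches `threshold`, or None if no node qualifies."""
    node = leaf
    while node is not None:
        if totals.get(node, Counter())[file] >= threshold:
            return node
        node = parent[node]
    return None

# Hypothetical three-tier grid: root -> t1 -> {c1, c2}.
parent = {"root": None, "t1": "root", "c1": "t1", "c2": "t1"}
totals = aggregate_up(parent, {"c1": {"f": 3, "g": 1}, "c2": {"f": 2}})
print(totals["t1"]["f"])                              # 5
print(replica_node(parent, totals, "c1", "f", 4))     # t1
```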

In [65], an automatic replication algorithm for the grid environment is presented. It comprises algorithms for replica creation, deletion, selection, and update propagation. The automatic replication algorithm creates new replicas, the replica deletion algorithm frees storage space, the replica selection algorithm selects the best replica for read/write operations, and the update propagation algorithm is responsible for updating existing replicas. The proposed algorithm was tested on two types of grids, and the results show reduced access time and better use of storage.

In [66], a dynamic replication method called FIRE is presented. Each site keeps an access table that records the access times of files. Each site checks its table, and if the algorithm detects that a set of files is accessed by a group of local jobs, that is, that there is a strong correlation between a group of local jobs and a set of files, replication is triggered at that site.

In [67], a data replication algorithm named PDDRA is presented that improves on previous methods. Based on the file access history and the similarity between the behavior of grid members, it uses a tree structure to store file access sequences and can predict the future needs of grid sites before they request a sequence of files. Compared with six existing algorithms, PDDRA performs better in terms of access delay, response time, and bandwidth savings.

A replication method called BHR (Bandwidth Hierarchy based Replication) is presented in [68]. It maximizes network-level locality by dividing the network into several regions, which reduces data access time: because the network bandwidth between regions is lower than the bandwidth within regions, a required file that is already located inside the region can be accessed faster. Unnecessary replication is also avoided. A drawback of this method is that once a file is replicated within a region, requested files are not placed at the most appropriate sites; instead, they are replicated at all requesting sites.

In [69], eight dynamic replication methods were evaluated under different data grid settings. The results show that the technique used to select files for replication and the file access pattern strongly affect real-time network performance.

Zhang et al. proposed BDS, a near-optimal system for large-scale data replication between data centers (DCs). It is a multi-level overlay network with a fully centralized architecture, which allows a central controller to monitor the data delivery status of the intermediate servers and maintain an up-to-date global view, so that existing overlay paths can be fully exploited. In addition, each overlay path uses dynamic bandwidth separation to use the bandwidth left over by online traffic. With its multi-level overlay network and dynamic bandwidth separation, BDS significantly improves the performance of multicast data delivery between DCs [70].

3.2 Meta-heuristic Replication Algorithm

Given the NP-hard nature of the problem, heuristic and meta-heuristic solutions are among the best and most widely used approaches to replication problems.

3.2.1 Data Replication Based on Genetic Algorithm

The genetic algorithm is one of the most popular evolutionary techniques, and many of its components, such as the solution representation, the selection process, and the mutation operators, can be designed differently depending on the problem.

A genetic algorithm (GA) based replica placement strategy for the cloud environment is presented in [71]. The authors designed a graph with three types of vertices representing jobs, replicas, and nodes. Mappings from jobs to replicas are represented by edges between job and replica vertices and are used in the scheduling step. Mappings between replicas and nodes are represented by edges between replica and node vertices and determine the data replica locations. Using a genetic algorithm, the strategy finds the mappings with the best performance as a near-optimal solution. The performance evaluation shows that the proposed replica placement strategy achieves lower data transfer time than the random data placement used in the Hadoop distributed file system [72].

In [73], a genetic algorithm is presented for replica selection to increase the performance of the cloud system. The strategy determines the probabilistic information about replicas in the cloud using crossover and mutation operators together with feedback information. The genetic algorithm is applied in two main steps during replica selection. The advantage of this method is that the genetic algorithm finds the optimal solution in the end and is a distributed optimization method, so it can adapt to a distributed environment. To compute fitness, the algorithm considers network conditions and transmission time. Simulation results show that it performs well in terms of average execution time.

Al-Jadaan et al. enhanced the replica selection process in the data grid to ensure user satisfaction. Their strategy considers availability, that is, the ability to download the necessary data from a node even when problems such as malfunctions or network damage occur. The authors combine parameters that are not usually aggregated in replica selection, such as response time, availability, and security, and to do so they use a genetic clustering algorithm. The best replica is the one that simultaneously provides an appropriate response time, availability, and a reasonable level of security. If more than one site offers the best combination, the strategy chooses one of them at random by default. Test results show that it is more secure than previous strategies, and at the same time more reliable and efficient [74].

Almuni et al. presented a genetic-algorithm-based replica placement method to determine the best location for new replicas. The optimization offers two main improvements: first, it reduces data access time by taking the cost of reading files into account; second, it prevents network congestion by considering the load of sites. The fitness function is defined based on reading cost and storage cost, so the method can determine appropriate sites for different replicas that meet the needs of both the user and the resource provider [75].

Two meta-heuristic strategies, a genetic algorithm and ant colony optimization, have been used to select appropriate locations among many sites [76]. The main parameters of the ant colony algorithm are response time and file size, while the genetic algorithm considers response time, data availability, security, and load balance. Simulation results show that the genetic algorithm is 30% more efficient than the ant colony strategy.

In [77], a genetic-algorithm-based data placement algorithm is introduced to improve data access in the cloud environment. It first defines a mathematical model for data scheduling in the cloud system and then uses a fitness function based on the number of data accesses to evaluate each individual in the population. Roulette wheel selection is used to pick individuals with high fitness values. Experimental results show that the genetic algorithm can find an optimal data placement matrix in acceptable time and performs better than the Monte Carlo strategy.
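A genetic algorithm with roulette wheel selection can be sketched for a simplified placement problem. The sketch assumes one replica per file, a chromosome that lists the hosting site of each file, an access matrix `accesses[s][f]`, and a site distance matrix `dist[s][t]`; cost is the access-weighted distance and fitness is `1 / (1 + cost)`. These modelling choices, one-point crossover, per-gene mutation, and elitism are illustrative assumptions rather than the model of [77].

```python
import random

def placement_cost(placement, accesses, dist):
    """Total access cost: accesses[s][f] * dist[s][site holding f]."""
    return sum(accesses[s][f] * dist[s][site]
               for s in range(len(accesses))
               for f, site in enumerate(placement))

def roulette_select(population, fitnesses, rng):
    """Roulette wheel: pick an individual with probability proportional to fitness."""
    return rng.choices(population, weights=fitnesses, k=1)[0]

def genetic_placement(accesses, dist, pop_size=20, generations=50,
                      mutation_rate=0.1, seed=0):
    rng = random.Random(seed)
    n_sites, n_files = len(dist), len(accesses[0])
    cost = lambda p: placement_cost(p, accesses, dist)
    population = [[rng.randrange(n_sites) for _ in range(n_files)]
                  for _ in range(pop_size)]
    best = min(population, key=cost)
    for _ in range(generations):
        fitnesses = [1.0 / (1.0 + cost(p)) for p in population]
        children = [best[:]]  # elitism: keep the best placement found so far
        while len(children) < pop_size:
            a = roulette_select(population, fitnesses, rng)
            b = roulette_select(population, fitnesses, rng)
            cut = rng.randrange(1, n_files) if n_files > 1 else 0
            child = a[:cut] + b[cut:]  # one-point crossover
            for f in range(n_files):   # mutation: move a file to a random site
                if rng.random() < mutation_rate:
                    child[f] = rng.randrange(n_sites)
            children.append(child)
        population = children
        best = min(population, key=cost)
    return best

# Site 0 reads file 0 often and site 2 reads file 1 often.
accesses = [[10, 0], [0, 0], [0, 10]]
dist = [[0, 1, 2], [1, 0, 1], [2, 1, 0]]
print(genetic_placement(accesses, dist))
```

With this toy input the zero-cost placement stores file 0 at site 0 and file 1 at site 2; elitism guarantees the returned placement is never worse than the best one seen earlier.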

A genetic-algorithm-based replica placement strategy for cloud computing is presented in [78]. It rests on two observations. First, dependencies between files are important for reducing data transfer time: storing files with high mutual dependency together reduces data movement across data centers. Second, the transfer cost is related to file size, and a job often needs small input files but produces large output files; if those outputs are required by tasks in other data centers, a large amount of data must be transferred. In summary, after constructing the data dependency matrix, the strategy uses the genetic algorithm to determine the best replica locations, with the total transmission time as the fitness function. The results show that the algorithm reduces the amount of data movement compared with the K-means algorithm.
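A data dependency matrix of the kind mentioned above can be built from task inputs. The definition used here is an assumption for illustration: two files gain one unit of dependency each time a task reads both, and the diagonal counts how many tasks read each file; [78] may weight dependencies differently.

```python
def dependency_matrix(tasks, files):
    """dep[i][j] = number of tasks that use both files[i] and files[j]."""
    index = {f: i for i, f in enumerate(files)}
    dep = [[0] * len(files) for _ in files]
    for used in tasks:
        ids = [index[f] for f in set(used)]  # ignore duplicate mentions
        for i in ids:
            for j in ids:
                dep[i][j] += 1
    return dep

# Hypothetical workflow: three tasks reading subsets of files a, b, c.
tasks = [{"a", "b"}, {"a", "b", "c"}, {"c"}]
for row in dependency_matrix(tasks, ["a", "b", "c"]):
    print(row)
# [2, 2, 1]
# [2, 2, 1]
# [1, 1, 2]
```

A placement algorithm can then favor keeping strongly dependent files, here `a` and `b`, in the same data center.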

A cost-effective replica placement strategy for cloud systems consists of two main processes. First, a cost model is presented to decide the number of replicas and their locations; then, a cost-benefit analysis is applied to data management. Finally, the method produces real and fictitious replicas that minimize data management cost using a general algorithm. The results show that the method is very cost-effective compared with the no-replication scheme, the random strategy, and the minimum replica placement strategy [79].

A dynamic multi-objective optimization replication algorithm to improve system performance is presented in [80]. It considers file unavailability, data center load, and network transfer cost, where data center load is determined by processor capacity, memory, disk space, and network bandwidth. It then uses a fast permutation genetic algorithm to solve the multi-objective replica placement problem, with a binary encoding that determines both the storage locations and the number of replicas. In addition, replication is performed based on a reliability record table to ensure data availability. To handle file hot spots caused by back-to-back requests, the algorithm uses a replica transfer strategy. The evaluation results show that this method improves network resource utilization compared with the dynamic replica adjustment strategy.
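Multi-objective genetic algorithms typically compare candidate placements by Pareto dominance instead of a single score. The sketch below keeps only the non-dominated candidates, assuming each placement is summarized by an objective vector (unavailability, data center load, transfer cost) in which every objective is minimized; the labels and numbers are made up, and this is not the specific algorithm of [80].

```python
def dominates(a, b):
    """True if objective vector `a` is no worse than `b` in every objective
    and strictly better in at least one (all objectives are minimised)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def pareto_front(candidates):
    """Labels of candidate placements not dominated by any other candidate.
    `candidates` maps a placement label to its objective vector."""
    return [name for name, obj in candidates.items()
            if not any(dominates(other, obj)
                       for other_name, other in candidates.items()
                       if other_name != name)]

# (unavailability, load, transfer cost) for four hypothetical placements.
candidates = {
    "P1": (0.02, 0.6, 120),
    "P2": (0.01, 0.7, 150),
    "P3": (0.03, 0.8, 160),
    "P4": (0.02, 0.6, 110),
}
print(pareto_front(candidates))  # ['P2', 'P4']
```

P4 dominates P1 (equal unavailability and load, lower cost), P1 dominates P3, and P2 and P4 trade off availability against load and cost, so both remain on the front.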

3.2.2 Data Replication Based on the Ant Colony Algorithm

Based on an enhanced hybrid genetic and ant colony optimization algorithm, [81] proposes a design for a college scheduling system. First, the selection process of the conventional genetic algorithm is augmented with a fitness-enhanced elimination rule. A gene infection crossover approach is then proposed to guarantee that the average fitness value increases during evolution. The unneeded replication step of the standard genetic algorithm is then eliminated to speed up the algorithm. Finally, a parallel fuzzy adaptive mechanism is developed to improve the algorithm’s convergence and stability. In the ant colony part, the initial pheromone distribution is nonuniform because it depends on the position of the current raster cell relative to the starting point.

The authors of [82] developed a cost- and time-aware data replication model suitable for composing data-intensive services in the cloud. To calculate cost, the algorithm considers a usage-based model, a flat-rate subscription package, and a hybrid pricing model. It then uses ant colony optimization to reduce the cost of the data-intensive service solution based on replica cost and response time during replica selection. Simulation results show that the strategy efficiently solves the replica selection problem.

An ant-colony-based replica selection algorithm for the data grid environment is presented in [83]. It considers three important criteria in replica selection: (1) disk input/output throughput, which is related to disk seek time, since a lower seek time means a shorter data retrieval time; (2) network status, that is, the bandwidth available for transferring the replica; and (3) the load of the site that holds the requested replica. The evaluation results show that the algorithm reduces average access time, especially in data-intensive environments.
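The selection and reinforcement steps of an ant-colony replica selector can be sketched with the standard ACO transition rule, p_i ∝ τ_i^α · η_i^β, where τ is pheromone and η is heuristic desirability. How the three criteria are folded into η is an assumption here (bandwidth divided by load and seek time), as are the evaporation rate and the reinforcement rule; none of these are the exact definitions used in [83].

```python
import random

def heuristic(bandwidth, load, seek_time):
    """Hypothetical desirability: high bandwidth, low site load and
    low disk seek time make a replica more attractive."""
    return bandwidth / ((1.0 + load) * seek_time)

def selection_probabilities(pheromone, eta, alpha=1.0, beta=2.0):
    """Standard ACO rule: p_i proportional to tau_i**alpha * eta_i**beta."""
    scores = [t ** alpha * e ** beta for t, e in zip(pheromone, eta)]
    total = sum(scores)
    return [s / total for s in scores]

def choose_replica(pheromone, eta, rng=random, alpha=1.0, beta=2.0):
    """Sample one replica index according to the ACO probabilities."""
    probs = selection_probabilities(pheromone, eta, alpha, beta)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

def update_pheromone(pheromone, chosen, access_time, rho=0.1):
    """Evaporate every trail by rho, then reinforce the chosen replica
    in inverse proportion to the access time it delivered."""
    return [(1 - rho) * t + (1.0 / access_time if i == chosen else 0.0)
            for i, t in enumerate(pheromone)]

# Two replicas with equal bandwidth and seek time; the second site is loaded.
eta = [heuristic(100, 0, 1), heuristic(100, 1, 1)]
print(selection_probabilities([1.0, 1.0], eta))  # roughly [0.8, 0.2]
```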

Yang et al. describe a replica selection strategy based on ant colony optimization to reduce access delay, in which the worker node is modeled as a searching ant and the files required by a job as food; replicas stored at different sites therefore correspond to different routes to the food. Each replica is assigned a value whose magnitude indicates its probability of being selected. The strategy considers important factors such as replica host load and available bandwidth in the selection process. Simulation results show that the algorithm improves effective network utilization and average execution time [84].

In [85], replica selection based on ant colony optimization is proposed to increase cloud system performance. The authors consider a graph in which each node represents a data center and edges connect the data centers. Initially, all ants choose a data center at random because no pheromone has been deposited yet. After the first ant finds the required data file, other ants are attracted to the data center that holds it. To choose a replica, ants use pheromone information defined from the replica’s historical read accesses and its size. Simulation results show that this method further reduces access time.

Shojaatmand et al. used the ant colony algorithm to select the most appropriate replica in the data grid environment [86]. Each file has two names: a logical file name (LFN) and a physical file name (PFN). The LFN is independent of the file’s location, whereas the PFN identifies a specific replica of the file. The strategy uses pheromone information to indicate how good a replica is. While moving through the network, ants collect statistical information about the nodes that hold the required replica; each ant stores the collected information at the nodes, and other ants use it to find a better pheromone path. The pheromone is defined based on available bandwidth and file size. Simulation results show that the strategy reduces response time compared with the no-replication strategy.

3.2.3 Data Replication Based on the Bee Colony Algorithm

The authors of [87] introduced a honeybee-colony-based replication strategy for cloud computing that assumes a three-level hierarchical topology. The first level consists of regions connected with low bandwidth; the second level comprises the local network of each region, connected with higher bandwidth than the first level; and the third level consists of the sites of each LAN, connected with high bandwidth. In the algorithm, if a new site (food source) offers better quality, that is, more nectar, the bee stays there and its effort index is incremented by one. Quality is defined as the probability that files exist at the site. After the worker bees finish the search phase, they choose the best site based on the number of requests for a file. Experiments show that the strategy reduces the average job time compared with other algorithms.

Taheri et al. use the bee colony optimization algorithm for simultaneous job scheduling and data replication in grid systems [88]. The scheduling algorithm orders jobs by length, so the longest job has the highest priority. Each node is advertised by a certain number of bees. If a newly allocated job (bee) yields a higher profit than an existing bee, it replaces that bee. Bees that are less than 80% similar to the dancing bees are replaced, which prevents all bees from becoming biased towards a particular bee type; as a result, different types of bees are available on the dance floor to advertise a node for a variety of jobs. For replication, the method sorts all files by size, so the largest file has the highest priority. The results show that the proposed strategies reduce transmission time and resource usage.

A replication strategy based on the artificial bee colony (ABC) algorithm is introduced in [89]. In the first phase, the algorithm solves a minimum-cost problem, formulated as a knapsack problem, to determine an optimal low-cost placement of replicas. The main goal is to find a solution that lets consumers obtain and store replicas through the shortest path at lower cost while balancing the load in the system. In the second phase, the data centers run the ABC algorithm to find an optimal sequence of data replication that supports the best low-cost path. Experimental results show that the strategy reduces data transmission compared with other replication strategies.
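The knapsack formulation of the first phase can be illustrated with the classic 0/1 dynamic program. The sketch assumes each candidate replica has an integer size and a benefit score (for example, the access cost it saves), and a data center has a fixed integer storage capacity; the benefit values are hypothetical, and the ABC phase of [89] is not shown.

```python
def knapsack_replicas(items, capacity):
    """0/1 knapsack: choose replicas (name, size, benefit) that fit in
    `capacity` storage units and maximise the total benefit."""
    best = [0] * (capacity + 1)         # best[c]: max benefit within capacity c
    choice = [[] for _ in range(capacity + 1)]
    for name, size, benefit in items:
        for c in range(capacity, size - 1, -1):  # descending: each item used once
            if best[c - size] + benefit > best[c]:
                best[c] = best[c - size] + benefit
                choice[c] = choice[c - size] + [name]
    return best[capacity], choice[capacity]

# Hypothetical candidates: (replica, size in storage units, benefit).
candidates = [("f1", 3, 30), ("f2", 4, 50), ("f3", 2, 15)]
print(knapsack_replicas(candidates, 6))  # (65, ['f2', 'f3'])
```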

3.2.4 Data Replication Based on the Firefly Algorithm

The firefly algorithm was developed to solve multimodal global optimization problems. The flashing light of fireflies is their main characteristic and serves two main purposes: attracting mating partners and warning potential predators. The flashing follows some physical laws. The objective function is encoded in the brightness of each firefly. When a firefly is in the vicinity of another, the two interact: attractiveness is proportional to brightness, and both attractiveness and perceived brightness decrease as the distance increases. In other words, a dimmer firefly is attracted towards a brighter one [90].
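The attraction rule can be written with the commonly used textbook form, in which attractiveness decays as β(r) = β₀·exp(−γr²) and firefly i moves by β(r)·(x_j − x_i) plus a random step scaled by α. The sketch minimizes a generic function (lower value means brighter); the parameter defaults and the helper names are illustrative assumptions, not settings from [90] or [91].

```python
import math
import random

def attractiveness(beta0, gamma, r):
    """beta(r) = beta0 * exp(-gamma * r^2): attraction fades with distance."""
    return beta0 * math.exp(-gamma * r * r)

def move_towards(xi, xj, beta0=1.0, gamma=1.0, alpha=0.0, rng=random):
    """Move firefly i towards a brighter firefly j, plus a uniform
    random step in [-alpha/2, alpha/2] per coordinate."""
    beta = attractiveness(beta0, gamma, math.dist(xi, xj))
    return [a + beta * (b - a) + alpha * (rng.random() - 0.5)
            for a, b in zip(xi, xj)]

def firefly_minimise(f, positions, iterations=50, **kw):
    """Minimise f: a firefly with a lower f value is brighter, and every
    firefly moves towards each brighter one in turn."""
    positions = [list(p) for p in positions]  # work on a copy
    for _ in range(iterations):
        for i in range(len(positions)):
            for j in range(len(positions)):
                if f(positions[j]) < f(positions[i]):
                    positions[i] = move_towards(positions[i], positions[j], **kw)
    return min(positions, key=f)

sphere = lambda x: sum(v * v for v in x)
print(move_towards([0.0, 0.0], [1.0, 0.0]))  # first coordinate is exp(-1)
print(firefly_minimise(sphere, [[2.0, 0.0], [0.0, 1.0], [3.0, 3.0]]))
```

With `alpha=0` the brightest firefly never moves, so the best objective value found can only stay the same or improve.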

In [91], the replication step in the data grid environment was enhanced with the firefly algorithm. The strategy represents the grid as an M × N matrix, where M is the number of sites and N is the number of files, and it uses the total cost of reading and writing as its objective. In every generation, the quality of each candidate’s position in the search for the best sites is determined by its own experience and the collective knowledge of the swarm. Simulation results show that the strategy reduces storage consumption.

3.2.5 Data Replication Strategies Based on Particle Swarm Optimization Algorithm

Particle swarm optimization (PSO) is inspired by the social behavior of bird flocking and fish schooling. Each particle is defined by its position and velocity in the search space. In each iteration, the velocity of each particle is updated based on its own best-known position and the best global position found by the swarm. To decrease the number of data transfers across cloud data centers, Kchaou et al. propose a job scheduling and data placement technique. It reduces data transfers during workflow execution by combining the Interval Type-2 Fuzzy C-Means (IT2FCM) clustering method with the PSO meta-heuristic. The strategy is evaluated by simulation with several well-known scientific workflows of various sizes, and the results show that it is more reliable than recent state-of-the-art methods [92].
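The velocity update described above is usually written as v ← w·v + c1·r1·(pbest − x) + c2·r2·(gbest − x), followed by x ← x + v. Here w is the inertia weight, c1 and c2 weight the cognitive and social terms, and r1 and r2 are uniform random numbers. The sketch below is a minimal global-best PSO. The parameter values and the toy objective are assumptions, and it is not the IT2FCM-based method of [92].

```python
import random


def pso_minimize(f, dim, n=20, iters=60, w=0.7, c1=1.5, c2=1.5,
                 bounds=(-5.0, 5.0), seed=1):
    """Minimal global-best PSO; returns the best value and best-so-far history."""
    rng = random.Random(seed)
    lo, hi = bounds
    xs = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n)]
    vs = [[0.0] * dim for _ in range(n)]
    pbest = [x[:] for x in xs]          # each particle's best-known position
    pval = [f(x) for x in xs]
    g = min(range(n), key=lambda i: pval[i])
    gbest, gval = pbest[g][:], pval[g]  # best position found by the swarm
    history = [gval]
    for _ in range(iters):
        for i in range(n):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vs[i][d] = (w * vs[i][d]
                            + c1 * r1 * (pbest[i][d] - xs[i][d])   # cognitive term
                            + c2 * r2 * (gbest[d] - xs[i][d]))     # social term
                xs[i][d] = min(hi, max(lo, xs[i][d] + vs[i][d]))
            val = f(xs[i])
            if val < pval[i]:
                pbest[i], pval[i] = xs[i][:], val
                if val < gval:
                    gbest, gval = xs[i][:], val
        history.append(gval)
    return gval, history


def sphere(x):
    """Toy objective with its minimum 0 at the origin."""
    return sum(v * v for v in x)


print(pso_minimize(sphere, dim=2)[0])
```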

To address the security of cloud-based data and automatic data updates, [93] presents an adaptive data segmentation and replication algorithm based on particle swarm optimization. The method divides a replica into several fragments and places them using the concept of coloring, so an attacker who compromises a single data center cannot infer where the other fragments are located in the cloud system. Replica placement takes place in two stages. In the first stage, candidate data centers are selected through a centrality-based process to improve retrieval time. In the second stage, the PSO algorithm selects the data center, and the update process is carried out at the same time. The objective function is defined by read and write times. The comparative results show that the method improves the average response time.

In [94], an energy-aware data replication algorithm for the cloud is developed. The author combines PSO, chosen for its strong global search capability, with tabu search (TS), chosen for its strong local search capability. Particle fitness values are obtained from the total cost, including read, write, and energy costs, and all particles move iteratively until the maximum number of iterations is reached. The resulting hybrid algorithm (HPSOTS) models replica placement as a 0–1 decision problem and minimizes total energy and cost. The experimental results show the strength of HPSOTS, particularly for small storage capacities.
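The local-search half of a hybrid like HPSOTS can be sketched as a tabu search over 0–1 placement vectors. Each move flips one bit. Recently flipped positions are tabu unless the flip beats the best cost found so far (the aspiration criterion). The cost function below is a hypothetical stand-in that combines the storage energy of each replica with distance-weighted reads. This sketch omits the PSO component and is not the authors' implementation.

```python
from collections import deque


def placement_cost(x, dist, reads, energy):
    """Hypothetical cost: storage energy of each replica + distance-weighted reads."""
    holders = [k for k, bit in enumerate(x) if bit]
    if not holders:
        return float("inf")  # at least one replica is required
    read_cost = sum(r * min(dist[i][k] for k in holders) for i, r in enumerate(reads))
    return sum(energy[k] for k in holders) + read_cost


def tabu_search(cost, x0, iters=50, tenure=3):
    """Tabu search over 0-1 vectors using single-bit-flip moves."""
    x = list(x0)
    best, best_cost = x[:], cost(x)
    tabu = deque(maxlen=tenure)  # positions flipped in the last `tenure` moves
    for _ in range(iters):
        candidates = []
        for k in range(len(x)):
            y = x[:]
            y[k] = 1 - y[k]
            c = cost(y)
            # A tabu move is allowed only if it beats the best cost (aspiration)
            if k not in tabu or c < best_cost:
                candidates.append((c, k, y))
        if not candidates:
            break
        c, k, x = min(candidates)  # best admissible neighbor, even if worse
        tabu.append(k)
        if c < best_cost:
            best, best_cost = x[:], c
    return best, best_cost


# Hypothetical 3-site example
dist = [[0, 4, 6], [4, 0, 3], [6, 3, 0]]
reads = [5, 1, 4]
energy = [4, 4, 4]
print(tabu_search(lambda x: placement_cost(x, dist, reads, energy), [0, 0, 0]))
```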

A replica selection strategy based on the OPS-LRU approach for the data grid environment is presented in [95]. In this method, each file-location request is modeled as a bird looking for food. The bird decides where to search based on the calls of the flock, and the sound of a call weakens with distance. The strategy considers performance measures such as the success rate and the cost of the system. The network cost depends on three factors: time, bandwidth, and file size. If the selected node does not have enough space, the policy removes files according to the least recently used (LRU) approach. The simulation results show that this method can decrease response time in large distributed systems.
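The LRU eviction step can be sketched as follows. The storage node is assumed to have a fixed capacity in arbitrary size units, and the class and method names are hypothetical. The bird-flock search that chooses the node is not modeled here.

```python
from collections import OrderedDict


class ReplicaStore:
    """Storage node that evicts least recently used replicas when full."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.used = 0
        self.files = OrderedDict()  # name -> size, least recently used first

    def access(self, name):
        """Record a read; returns True on a local hit."""
        if name in self.files:
            self.files.move_to_end(name)  # mark as most recently used
            return True
        return False

    def store(self, name, size):
        """Store a new replica, evicting LRU replicas as needed; returns evicted names."""
        if size > self.capacity:
            raise ValueError("replica larger than node capacity")
        if name in self.files:
            self.access(name)
            return []
        evicted = []
        while self.used + size > self.capacity:
            old, old_size = self.files.popitem(last=False)  # least recently used
            self.used -= old_size
            evicted.append(old)
        self.files[name] = size
        self.used += size
        return evicted


node = ReplicaStore(capacity=100)
node.store("a", 40)
node.store("b", 40)
node.access("a")            # "b" is now the least recently used replica
print(node.store("c", 50))  # "b" is evicted to make room
```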

Awad et al. use two multi-objective swarm intelligence algorithms, multi-objective particle swarm optimization (MOPSO) and multi-objective ant colony optimization (MOACO), to optimize the selection and placement of data replicas in the cloud environment. MOPSO selects the best data to replicate according to data popularity, and a temporal decay feature is used to improve the model. MOACO finds the best replica location based on minimum distance, number of data transfers, and replica availability. The strategy was simulated in CloudSim, which was configured to model different kinds of data centers (DCs) with different structures. The results show that MOPSO selects replicas better than the compared algorithms. In addition, MOACO achieves more data accesses, lower cost, and lower bandwidth consumption than the compared algorithms [96].
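Multi-objective searches such as MOPSO and MOACO rely on Pareto dominance. One candidate dominates another if it is no worse on every objective and strictly better on at least one. The sketch below filters hypothetical candidate data centers down to the non-dominated set for the three placement objectives named above. Distance and number of transfers are minimized, and availability is maximized. It shows only the dominance test, not MOACO itself, and the site names and values are invented.

```python
def dominates(a, b):
    """True if objectives a Pareto-dominate objectives b.

    Each tuple is (distance, transfers, availability): the first two are
    minimized and availability is maximized (assumed encoding).
    """
    no_worse = a[0] <= b[0] and a[1] <= b[1] and a[2] >= b[2]
    better = a[0] < b[0] or a[1] < b[1] or a[2] > b[2]
    return no_worse and better


def pareto_front(candidates):
    """Return the names of candidate sites not dominated by any other site."""
    return [name for name, obj in candidates.items()
            if not any(dominates(other, obj)
                       for o_name, other in candidates.items() if o_name != name)]


sites = {
    "dc1": (10, 3, 0.99),
    "dc2": (5, 4, 0.95),
    "dc3": (12, 5, 0.97),  # dominated by dc1
    "dc4": (5, 4, 0.90),   # dominated by dc2
}
print(pareto_front(sites))
```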

3.3 Multi-Criteria Decision Making

Replicating data in a grid requires critical and sensitive decisions, chiefly which data should be replicated, when, and where. Different data replication techniques are defined by how they answer these questions [97].

Multi-criteria decision-making (MCDM) is a technique for choosing one solution from a set of candidate solutions. It evaluates the candidates against several criteria to reach an efficient solution. MCDM methods are therefore helpful whenever many preselected solutions are available and the best one must be chosen based on multiple criteria.

As described in [98], the analytic hierarchy process (AHP) is one of the most popular MCDM methods and uses the various available options to make decisions. In AHP, criteria are compared on a relative scale, so they may be expressed in different units. The method relies on pairwise comparisons, so its result depends primarily on the judgment and perception of the user. The judgments are accepted only if the consistency ratio is below a threshold value. Otherwise, the decision-makers should consider other data or revise their judgments.
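The pairwise comparisons and the consistency check can be illustrated with a short sketch. It derives AHP priority weights with the geometric-mean (row) approximation and estimates λmax from A·w ≈ λmax·w. It then computes Saaty's consistency index CI = (λmax − n)/(n − 1) and consistency ratio CR = CI/RI. The random index values follow Saaty's commonly cited table, and CR < 0.1 is the usual acceptance threshold. The comparison matrices are hypothetical.

```python
import math

# Saaty's random consistency index for matrix sizes 1..9
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}


def ahp_weights(A):
    """Priority vector (geometric-mean method) and consistency ratio of A.

    A[i][j] states how much more important criterion i is than criterion j,
    with A[j][i] = 1 / A[i][j].
    """
    n = len(A)
    gm = [math.prod(row) ** (1.0 / n) for row in A]
    total = sum(gm)
    w = [g / total for g in gm]
    # Estimate the principal eigenvalue lambda_max from A w ≈ lambda_max w
    Aw = [sum(A[i][j] * w[j] for j in range(n)) for i in range(n)]
    lam = sum(Aw[i] / w[i] for i in range(n)) / n
    ci = (lam - n) / (n - 1) if n > 1 else 0.0
    cr = ci / RANDOM_INDEX[n] if RANDOM_INDEX[n] > 0 else 0.0
    return w, cr


# Perfectly consistent judgments: criterion 0 is twice as important as 1, four times as 2
A = [[1, 2, 4], [0.5, 1, 2], [0.25, 0.5, 1]]
w, cr = ahp_weights(A)
print([round(x, 3) for x in w], cr < 0.1)
```

A cyclic set of judgments such as [[1, 2, 0.5], [0.5, 1, 2], [2, 0.5, 1]] gives CR ≈ 0.43, so the decision-makers would be asked to revise it.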

3.3.1 Multi-Criteria Decision Making Methods (MCDM)

[99] describes several common characteristics of MCDM problems. First, an MCDM problem involves several objectives expressed through relevant criteria, and the decision-makers must handle conflicting relationships between these criteria. Second, the criteria are expressed in different dimensions (units of measurement), which prevents a trivial aggregation of the decision matrix. Finally, MCDM problems consider multiple options that represent socioeconomic decisions at different levels. Together, these characteristics mean that the decision-maker faces a multidimensional problem, and the decision can be supported by different types of MCDM methods.

MCDM methods mainly aim to quantify the relative merits of the available options and to rank them. However, different MCDM methods may give different results; for example, the same set of options may receive different rankings. This can be attributed to the different mathematical operations each method performs. Therefore, the MCDM method best suited to a particular case must be identified [100].

MCDM methods are divided into multi-objective decision-making (MODM) and multi-attribute decision-making (MADM) methods. MODM methods deal with continuous optimization problems, in which the best option is selected from an infinite set of feasible options, whereas MADM methods can handle only discrete sets of options. In practice, papers usually use the term MCDM to refer to MADM methods [101].

MADM methods can be classified into methods based on a value function, on a reference point, and on outranking relations [102]. Value-function techniques include simple additive weighting (SAW), weighting methods, multi-attribute utility theory (MAUT), and the weighted aggregated sum product assessment (WASPAS). These techniques aggregate the normalized values of the decision matrix using the criteria weights.
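To illustrate a value-function method, the sketch below implements WASPAS with the usual linear normalization: x/max for benefit criteria and min/x for cost criteria. It combines the weighted sum model (WSM) and the weighted product model (WPM) into the joint score Q = λ·WSM + (1 − λ)·WPM. Setting λ = 1 reduces it to simple additive weighting. The three candidate replica sites, their criteria (bandwidth, latency, free storage), and the weights are hypothetical.

```python
import math


def waspas(matrix, weights, benefit, lam=0.5):
    """WASPAS scores (higher is better); lam=1.0 reduces to simple additive weighting.

    matrix[i][j]: positive value of alternative i on criterion j.
    benefit[j]: True if larger is better, False for cost criteria.
    """
    cols = list(zip(*matrix))
    # Linear normalization into (0, 1], with 1 for the best value of each criterion
    norm = [
        [v / max(cols[j]) if benefit[j] else min(cols[j]) / v for j, v in enumerate(row)]
        for row in matrix
    ]
    scores = []
    for r in norm:
        wsm = sum(w * v for w, v in zip(weights, r))         # weighted sum model
        wpm = math.prod(v ** w for w, v in zip(weights, r))  # weighted product model
        scores.append(lam * wsm + (1 - lam) * wpm)
    return scores


# Hypothetical sites A, B, C: (bandwidth in Mbps, latency in ms, free storage in GB)
sites = [(100, 20, 500), (50, 10, 800), (80, 40, 200)]
weights = [0.4, 0.4, 0.2]
benefit = [True, False, True]  # latency is a cost criterion
print(waspas(sites, weights, benefit))           # WASPAS with lam = 0.5
print(waspas(sites, weights, benefit, lam=1.0))  # simple additive weighting
```

With these values both variants rank site B first, because B has the lowest latency and the most free storage.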

[103] presents the Technique for Order of Preference by Similarity to Ideal Solution (TOPSIS) and VIKOR as examples of reference-point methods, in which the distance between each option and the ideal solution is measured. TOPSIS relies on the Euclidean distance, whereas VIKOR uses the Manhattan and Chebyshev distances. Multi-Objective Optimization on the basis of Ratio Analysis (MOORA) combines the ideal-solution approach with the value-function approach. Evaluation based on Distance from Average Solution (EDAS) uses the average solution as the reference point.
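A minimal TOPSIS sketch is shown below, assuming vector normalization, crisp weights, and hypothetical replica-site data. Bandwidth and free storage are benefit criteria, and latency is a cost criterion. Each alternative's closeness coefficient is C = d− / (d+ + d−), where d+ and d− are the Euclidean distances to the ideal and anti-ideal solutions.

```python
import math


def topsis(matrix, weights, benefit):
    """Closeness coefficient of each alternative in [0, 1] (higher is better)."""
    n_crit = len(weights)
    # Vector-normalize each criterion column, then apply the weights
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) for j in range(n_crit)]
    v = [[weights[j] * row[j] / norms[j] for j in range(n_crit)] for row in matrix]
    cols = list(zip(*v))
    ideal = [max(c) if benefit[j] else min(c) for j, c in enumerate(cols)]
    anti = [min(c) if benefit[j] else max(c) for j, c in enumerate(cols)]
    scores = []
    for row in v:
        d_plus = math.dist(row, ideal)   # Euclidean distance to the ideal solution
        d_minus = math.dist(row, anti)   # Euclidean distance to the anti-ideal solution
        scores.append(d_minus / (d_plus + d_minus))
    return scores


# Hypothetical sites A, B, C: (bandwidth in Mbps, latency in ms, free storage in GB)
sites = [(100, 20, 500), (50, 10, 800), (80, 40, 200)]
weights = [0.4, 0.4, 0.2]
benefit = [True, False, True]
print([round(c, 3) for c in topsis(sites, weights, benefit)])
```

On this data, TOPSIS ranks site A slightly ahead of site B (about 0.687 versus 0.666). A plain weighted sum with max-normalization (scores 0.725, 0.800, 0.470) would prefer site B instead, which illustrates that different MCDM methods can rank the same options differently.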

Outranking methods, which are based on preference relations, were presented by Gabos et al. in [104]. ELECTRE is one of the most significant of these techniques. It works with a set of outranking relations, which means that it does not necessarily produce a complete ranking of the options but can eliminate some of them.

[105] presents the PROMETHEE method, which has two variants: PROMETHEE I produces a partial ranking, and PROMETHEE II produces a complete ranking. All calculations are performed in the Visual PROMETHEE software.
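A minimal PROMETHEE sketch follows, under stated assumptions. It uses the simplest ("usual") preference function, in which any strictly better value on a criterion counts as full preference, and it computes the positive, negative, and net outranking flows. PROMETHEE I compares alternatives on the (φ+, φ−) pair, and PROMETHEE II ranks them by the net flow φ = φ+ − φ−. This is not the Visual PROMETHEE software, and the data are hypothetical.

```python
def promethee(matrix, weights, benefit):
    """PROMETHEE flows with the 'usual' (strict) preference function.

    Returns (phi_plus, phi_minus, phi_net); PROMETHEE I compares the
    (phi_plus, phi_minus) pairs, and PROMETHEE II ranks by phi_net.
    """
    n = len(matrix)

    def pi(a, b):
        # Aggregated preference of alternative a over alternative b
        total = 0.0
        for j, w in enumerate(weights):
            d = matrix[a][j] - matrix[b][j]
            if not benefit[j]:
                d = -d  # for cost criteria, smaller values are preferred
            total += w * (1.0 if d > 0 else 0.0)
        return total

    phi_plus = [sum(pi(a, b) for b in range(n) if b != a) / (n - 1) for a in range(n)]
    phi_minus = [sum(pi(b, a) for b in range(n) if b != a) / (n - 1) for a in range(n)]
    phi_net = [p - m for p, m in zip(phi_plus, phi_minus)]
    return phi_plus, phi_minus, phi_net


# Hypothetical sites A, B, C: (bandwidth in Mbps, latency in ms, free storage in GB)
sites = [(100, 20, 500), (50, 10, 800), (80, 40, 200)]
weights = [0.4, 0.4, 0.2]
benefit = [True, False, True]
print(promethee(sites, weights, benefit)[2])
```

Here the net flows are 0.4, 0.2, and −0.6, so PROMETHEE II ranks A above B above C. As always with net flows, they sum to zero.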

The approach described in [106] replicates services on one or more virtual machines, and the volume of user requests at any particular time plays a significant role. The mechanism improves load balancing across virtual machines and lets consumers select the service that best fits their needs. However, this proliferation of replicas can saturate storage space. The authors therefore propose an MCDM-based method that selects virtual machines whose storage is overloaded and deletes redundant copies of the same service without degrading load balance. Results from the CloudSim simulator show that the strategy improves response time and performs well.

Objective functions can be defined in a variety of ways [107]. That work combines goal programming, compromise programming, and the reference-point formulation, and it uses summation and lexicographic methods of mathematical programming to analyze decisions.

[108] provides a more detailed review of the use of different MCDM techniques in location selection. When the focus is on replication, the set of options is finite and is defined by the candidate locations. MADM methods therefore apply, and [108] refers to them as MCDM methods throughout the article. Here, the choice of replica location can be expressed as an MCDM problem. Among the possible MADM techniques, it is necessary to choose methods that aggregate decision information using different principles (e.g., normalization, utility functions) to ensure the robustness of the analysis.

3.3.2 Placement Selection and MCDM

[109, 110] present work on the use of MCDM techniques in location selection, where MADM is applied to discrete option sets (locations). [109] incorporated fuzzy logic and preference relations into distribution-center location selection to accommodate uncertainty in the decision information. Triangular fuzzy numbers were used to map linguistic variables to fuzzy values and to represent ratings, criteria weights, and utility values. A fuzzy priority matrix was then built to compare the options step by step.
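The sketch below shows how linguistic ratings can be mapped to triangular fuzzy numbers (l, m, u). The ratings are aggregated with crisp criteria weights and then defuzzified by the centroid (l + m + u)/3 to rank candidate locations. The five-level scale, the criteria, and the ratings are hypothetical. Note that [109] itself compares options with a fuzzy priority matrix rather than this simple centroid ranking.

```python
# Hypothetical five-level linguistic scale mapped to triangular fuzzy numbers (l, m, u)
SCALE = {
    "very poor": (0.0, 0.0, 0.25),
    "poor": (0.0, 0.25, 0.5),
    "fair": (0.25, 0.5, 0.75),
    "good": (0.5, 0.75, 1.0),
    "very good": (0.75, 1.0, 1.0),
}


def fuzzy_score(ratings, weights):
    """Weighted sum of the TFN ratings (crisp weights), returned as a TFN."""
    l = sum(w * SCALE[r][0] for r, w in zip(ratings, weights))
    m = sum(w * SCALE[r][1] for r, w in zip(ratings, weights))
    u = sum(w * SCALE[r][2] for r, w in zip(ratings, weights))
    return (l, m, u)


def centroid(tfn):
    """Defuzzify a triangular fuzzy number by its centroid (l + m + u) / 3."""
    return sum(tfn) / 3.0


weights = [0.5, 0.3, 0.2]  # hypothetical criteria: cost, accessibility, demand
sites = {
    "site A": ["good", "fair", "very good"],
    "site B": ["fair", "very good", "good"],
}
for name, ratings in sites.items():
    print(name, round(centroid(fuzzy_score(ratings, weights)), 3))
```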

In [110], a fuzzy MCDM method based on the analytic network process is proposed to solve the retail location selection problem. In [116], fuzzy MCDM is applied to the hospitality sector. That work presents a fuzzy MCDM method for hotel location selection that considers variables such as geographic characteristics, traffic conditions, hotel requirements, and performance in terms of operations management.

In [111], AHP and VIKOR are used to choose a restaurant location. In [112], a fuzzy method based on PROMETHEE is presented for wind farm site selection, and the location selection problem is also addressed with MODM.

In [113], an integer programming model is used to optimize a power grid. The decision variables include the location and capacity of the transformers. The model parameters include annual investment costs, voltage fluctuations, feeder loads, and substations. Data envelopment analysis (DEA) is combined with the integer programming model to identify the most promising location for investment and project implementation. DEA defines the efficiency frontier based on several criteria, while the integer programming model determines the optimal combination of decisions with respect to that frontier.

Another application of integer programming can be found in [114], which identifies the nodes of a distribution network according to the locations of distributors and retailers.

In [115], a fuzzy multi-objective model is used to select the number and locations of fire stations at an international airport. To handle the complexity of the fuzzy problem, a genetic algorithm was combined with an enumeration method.

In [117], mathematical programming is used for plant location problems in different fields, and in [118], AHP and multi-objective programming are used to solve the restaurant location selection problem. Several studies also focus on point-of-sale location problems (e.g., stores or restaurants). [119] discusses the main principles underlying the retail location problem, and [120] analyzes the rents attributable to store sites. For analyzing the competitive environment of retail activities, the multiplicative competitive interaction (MCI) approach is supported.

In [121], the SERVQUAL service quality measurement approach is applied to restaurant performance to evaluate the quality of hospitality management services. The study also examines strategic management issues in light of globalization, multinational companies, and corporate strategies.

[122] presents an approach that integrates fuzzy numbers with the analytic hierarchy process to select the most suitable store location, and it uses multi-objective integer programming to determine the optimal locations of new outlets. Their model considers criteria such as customer flow and the presence of competitors. Because of the nature of such problems, Boolean logic is not suitable, so fuzzy logic is used instead [123].