Abstract

A wireless sensor networks (WSN’s) has stimulated significant research work among the researchers in monitoring and tracking tasks. It’s a quite challenging task that needs to cope up with various conflicting issues such as energy efficiency, network lifetime, connectivity, coverage, etc. in WSN’s for designing various applications. This paper explores the recent work and efforts done in addressing the various issues in WSN’s. This paper focused on basic concepts regarding the WSN’s and discusses meta-heuristics and heuristics algorithms for solving these issues with recent investigations. Various optimization algorithms in the context of WSN, routing algorithms, and clustering algorithms were discussed with details of earlier work done. This paper delivers various Multi-Objective Optimization approaches deeply for solving issues and summarizes the recent research work and studies. It provides researchers an understanding of the various issues, trade-offs between them, and meta-heuristics and heuristics approach for solving these issues. A glimpse of open research challenges has also been provided which will be helpful for researchers. This paper also gives an insight into various issues, open challenges that still exist in WSN’s with their heuristics and meta-heuristics solutions and also focuses on various conflicting issues as well.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Wireless sensor networks have multiple numbers of sensor nodes having a small size, inexpensive, low power, and multiple functions which communicate to each other over short-range wirelessly. In this, sensor nodes were installed randomly in the required field for monitoring and detection tasks depending upon the applications. WSN’s is basically based on some attributes such as energy efficiency, load balance, power consumptions, network coverage, latency, and much more of these attributes that need to be optimized to maintain the quality of services. In practical implementation these attributes conflict with each other hence they must be optimize which will enhance their overall performance in real-time applications. Real-time applications have multiple tasks which shows us that we can’t depend upon single objective optimization which is popular in conventional WSN, multiple-objective optimizations [1] is the realistic approach that fulfills the real problems. Multiple objectives such as load balancing between the nodes, more energy efficiency, less delay, low power consumption, storage have to be considered in that [2][3]. There are many issues in WSN’s which need to be resolved, various heuristic and meta-heuristics algorithms came into the picture which resolves these issues.

Recent advancements in wireless sensor networks have designed the sensor nodes with low energy consumption, better lifetime, reliability, cost-effectiveness, and fit for various applications. In the sensors node, the energy of sensor nodes was limited, and recharging them always is not possible thus the lifetime of sensor nodes depends on the battery. For today’s scenario, the applications need to focus on multiple objectives, for fulfilling the user requirements. Energy efficiency is the major concern in WSN because a major amount of energy is consumed in data transmission and thus proper route selection schemes need to follow for efficient energy consumption. Thus various routing algorithms were used in the wireless networks such as hierarchical-based; optimized routing algorithms and data-based routing algorithms. For proper routing, we need to divide the sensor nodes into different parts known as clusters by using clustering algorithms. These clusters have one cluster heads which are responsible for transmitting the data to the base station. Many applications need different objectives to be fulfilled thus various approaches were used accordingly to tackle those issues. In WSN, various issues occur and for solving these issues we use heuristic and meta-heuristics approaches such as optimization techniques, clustering algorithms, and routing algorithms.

1.1 Contribution of the Paper

In this survey paper, we focused on the issues in wireless sensor networks, conflicting criteria among the various attributes in WSN’s, heuristics, and meta-heuristics approaches such as optimization techniques, routing algorithms, and clustering algorithms for solving the issues in WSN’s. In this survey paper, we have also provided an up-to-date review of various applications, issues, conflicting among different issues, approaches to solve these issues i.e. heuristics and meta-heuristics. The main highlights of this survey paper are listed below:

-

We provide a depth discussion on the basics of WSN, applications of WSN and discussed the various issues deeply occur.

-

We provide deep and clear discussion on the conflicting trade-off between the various issues occurs in the wireless sensor network and also provide an up-to-date review on the various trade-off among various issues giving the details on the earlier work that has been done.

-

Research work is done up-to-date that has been presented in the literature review where summarization of various optimization algorithms, routing algorithms, and clustering algorithms has been done.

-

We present a comprehensive survey on the various MOO (multi-objective optimization) algorithms for solving the multi-objective problems and also describe the algorithms in the context of WSN where these can be used.

-

We present various types of routing algorithms i.e. hierarchical based, location-based, data-based explaining them deeply and clarify the hierarchical based algorithm in the context of WSN their performance in the table.

-

We present various attributes needed for clustering and a deep discussion of various clustering algorithms has been given how they can be useful for solving the issues in the WSN.

-

We highlight various open research problems and future trends regarding the advancement made in WSN for solving the issues.

-

In this survey paper, detailed discussion on the various issues, trade-off among the conflicting issues which arises in various applications, various applications in WSN and for solving the issues mention various optimization techniques, routing algorithms, and clustering algorithms were discussed in depth. Which gives an understanding about the various algorithms (heuristic and Meta-heuristics) developed for solving the issues in WSN and a depth knowledge to understand different trade-off arises in the application.

1.2 Paper Organization

The other remaining sections of this paper have been organized as follows: In Sect. 2 we have discussed the literature surveys related to various algorithms for solving the issues in WSN’s. In Sect. 3 fundamentals of WSN’s including sensor nodes and their applications have been discussed. In Sect. 4 we have deeply discussed the issues in wireless sensor networks. In Sect. 5 the previous works done by the researcher including recent year works have been discussed deeply and also the trade-off between the issues has been elaborated. In Sect. 6 the fundamental of MOO techniques including the MOO techniques, algorithms and tools has been discussed deeply. In Sect. 7 the routing algorithms have been discussed thoroughly and their types have been also elaborated. In Sect. 8 various types of clustering algorithms used for resolving WSN’s issues have been discussed. In Sect. 9 we have discussed briefly the challenges in the wireless sensor networks area for the future followed by a conclusion in Sect. 10. For the reader’s clarity and understanding of the structure of this paper, its whole structure has been represented in Fig. 1.

2 Review on Multi-Objective Optimization

Much research has been done in WSN’s were based on single objectives such as energy efficiency, load balance, congestion control, data collections, security and privacy, localization, sink mobility management, MAC protocols, and much more. Recently, the researchers were now focusing on multi-objective optimization which solves the conflicting attributes performances. Many multi-objective algorithms were used such as genetic algorithms, swarm optimization, mathematical optimizations, SIOA’s which understand the behavior of animals and solves the complex problems related to them. One of the algorithms in SIOA’s is the colony optimization algorithm. The author in [4] has discussed various multi-objective approaches and gives a proper review of them. In [5] various criteria regarding the implementation of nodes were discussed and the trade-off between the performances of routing protocol has been review in [6]. Most recently the authors reviewed the various multi-objective approaches for solving the WSN problem in [4]. Table 1 below shows the recent contributions made on multi-objective approaches which give readers a clear understanding of recent work done:

Various works were done earlier regarding the classical approaches followed in WSN for resolving the issues and many contributions were given in routing and the clustering algorithms. Author [7] focuses on the topology and factors affecting the chain and cluster-based routing protocols. A comparison between various chain-based routing protocols has been presented there. In [8] a survey was done on routing protocols based on their network topology, reliability, communication model was classified accordingly. In this classification, each feature's design issues, their requirement, and features were also given. How the trade-off between network lifetime and application requirement was addressed in [9], so in this, the author first focus on the requirements of the application, and routing protocols or techniques were selected accordingly. In [10] the author's present survey on hierarchal routing protocols where their logical classification was given and also tree-based, area-based, grid-based, and chain-based hierarchal routing protocols were discussed. In [11] authors' heterogeneous routing protocols are discussed based on user requirements along with their different parameters. Comparison has also been shown between routing protocols [12] considering the cluster head selection, energy consumption, and energy efficiency security issues. In [13] authors surveyed the opportunistic routing protocols addressing their advantages, metrics and also discussed other approaches for energy efficiency which enhances reliability, network utilization and saves energy. In [14] author discussed the multipath routing protocols for wireless multimedia sensor network which enhances data rate by reducing the congestion and transmission delay. Many researchers also contribute to clustering algorithms by designing new approaches and all these contributions were summarized in Table 2 below for understanding the main contribution of researchers.

3 Fundamental of Wireless Sensor Networks

3.1 Sensor Node

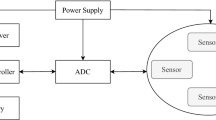

There are numbers of nodes or we say that thousands of nodes in WSN which are spatially distributed having a low cost, small size, multiple-functionality, low power communicating each other within the short-range [9]. Each node in WSN consists of sensors, an A/D converter, radio transceiver, memory, processor, and power supply. They have an additional component that is dependent on the applications and by integrating these sensor nodes they become multi-functional for specific applications. One of the most important challenges in the WSN is to implement these multi-functional components into one for fulfilling the different application requirements such as network coverage, load balancing, congestion control, network coverage, a lifetime of the network, delay. Figure 2 shows the basic architecture of the sensor node in WSN.

Generally, sensor nodes were grouped into clusters and each of them has a cluster head having more power and resources than other nodes. All the other nodes gather the information, transfer it to the cluster head, and via wireless hop-to-hop cluster head send the information to a base station or sink node. There may small or large size WSN’s where single or multiple cluster heads depend upon the base station. The base station has to collect the information from various cluster heads and send it to the users which needed them through the gateway by using the Internet [10]. WSN relies on the ZigBee protocol for communication having the IEEE 802.15.4 standards having both physical layer and MAC layer specification. It coexists with other communication protocols such as IEEE 802.11 WLAN, 802.15.1 Bluetooth communication, due to which it also faces the challenge of co-channel communication by both different communication protocols which affect its performance.

3.2 Applications

Wireless sensor networks have many applications in the multi-disciplinary domain such as in agriculture, bio-medical, industry, environment, urban, ecology, environment, public health, traffic, and many more. Sensor nodes in WSN can be implemented randomly in many applications and when these sensor nodes are deployed in a truculent environment they must have high-efficiency energy for extending their lifetime of the network [11]. There are two types of applications on which WSN’s work: one is monitoring where it supervises, controls the operations, and analyses the system in real-time, and the other is tracking where it tracks the changes in the behavior of the person, animals, or events. The application area of WSN’s applicable in almost every field and widely used. The glimpses of all the applications are given in Fig. 3.

The detailed discussion on applications of WSN’s is given in Fig. 2. In the environment, the WSN plays a major role in detecting fires in the forest, eruptions, earthquakes, flood detection, air pollution measurement, pollution detection, etc. [12, 13]. In agriculture mostly irrigation system management, disease detection, yields prediction, temperature, and soil moisture measurement, monitoring humidity, crop quality measurement. They also detect animals and wildlife for better management. In the medical field WSN can be used in monitoring the health issues of patients from a distance, diagnosis, swallow tablet inside body monitoring [14] and WSN also helps in monitoring the human functions such as retina, skin surgery, fitness issues, eating habit [15]. The applications WSN’s spread almost in every field we can also see the railways tracking system, railway management system, track beds, wheels, their staff [16] and in military WSN also plays an important role tracking the target locations, tank movement, enemy troops, intrusion detection, various attacks detection, weather detection, detection in night or dark areas [17]. New technology i.e. Internet of Things mostly depends upon sensors for sensing the information and communicating it over the internet. In IoT, WSN has been widely used such as smart homes, smart parking, smart agriculture, smart grid, and the smart city much more. Recently cybercrime department also used sensors for their investigation and integrated more methods to resolve the issues [18].

4 Issues in WSN’s

In this section, various issues of WSN’s have been discussed. Earlier many research works have been done exploring the various aspects of the WSN’s such as energy conservation [19], network lifetime [20], their protocols [21], and many more. But now the researchers' interest moves towards the multiple attributes and multiple objective optimizations to provide a better quality of services to the users. Different applications have their requirement regarding the quality of services, some of them are common which were used by almost all applications and this seeks the attention of the researchers. The various metrics which were common are energy efficiency, area coverage, network lifetime, numbers of nodes that are active, load balancing, network coverage quality. By considering the multiple attributes they may conflicts with each other therefore there must be proper optimization algorithms that reduce these conflicts and increase the performance. There are various issues in WSN’s but some of the issues were worth need be noticing for better QoS. Figure 4 represents the various important issues necessary for maintaining the QoS:

4.1 Network Coverage

In the context of WSN, the network coverage means the sensing range while the network connectivity means the communication range. It is one of the most important attributes of wireless sensor networks. Many models have been designed or proposed for different applications, the characteristic of network coverage varies with the models. The Sensing-disk model is one of the most commonly used models where all points within the disk towards the nodes were considered to be covered if Euclidean distance is less than the sensing range of the node [22]. There are three types of coverage found in the WSN namely, the first is area-coverage: here the deployment area is considered where every point in the area must be sensed or observed by at least one sensor. The second is point coverage: in this, it ensures particular points were sensed by at least one sensor and the third is the barrier coverage: here the detection of barrier between the sensing node is determined.

It is considered that finding the optimal positions of nodes for maximum coverage is the NPC problem [23]. There are many other ways to solve the optimization problem of coverage sub-optimally.

4.2 Network Connectivity

Network connectivity is another issue in the WSN which also plays an important role in the performance of WSN [24]. For communication, it is only required that one active node is within the communication range of other active so that they form a communication network. In cluster-based architecture, all nodes communicate to each other within the cluster via a single-hop connection and with the help cluster head, all other nodes in that cluster can communicate to other nodes in other clusters. Cluster heads need to be active always as in the clusters only cluster head collects the information from all the nodes and transfer it. In cluster architecture, issues related to connectivity hook between the nodes present as a particular number of nodes only a cluster head can handle, and coverage issues are related to the ability that at least one sensor can sense the particular node.Let assume a rectangular area grid of size \(x\mathrm{*}y\), let \({R}_{Comm}\) and \({R}_{Sense}\) denote the coverage range and connectivity range of the j sensor node. Below a function [25] represent assure that each sensor node is placed in the range of another sensor node and it can also prevent the sensors not to become too close to any other sensor node. Here \({R}_{Comm}-{R}_{Sense}\)> 0 for the connectivity of the sensor nodes.

Network connectivity is closely related to network efficiency and network coverage. To understand this point, let discuss this energy saving can be done by the management of dynamic energy saving. In this, some node put to sleeping mode and other remaining nodes can remain active to perform the task or provide the service continuously. In this approach, the basic problem is to minimize the active node to provide tasks while maintaining the quality of service. This requirement is very crucial in WSN, but in [26, 27] authors suggested that if \({R}_{Comm}\) >\(2{R}_{Sense}\) then the network will remain connected and the relationship between coverage and connectivity is very tight so we need to carefully optimize these to achieve our goal [28,29,30]. Many authors have given depth knowledge about the relationship between connective, coverage, and energy efficiency [26,27,28,29,30].

4.3 Network Life-time

It is yet another important issue in WSN for performance metrics. Many research works have been done in this direction of solving the problem of network lifetime in WSN by energy conservation. In the sensor node, the energy source life is limited, thus the replacement and recharging of the sensor node are not that possible. It is important to maximize the network life-time, by having efficient energy consumption. [31].The description of every sensor node is important, as a failure of any sensor node energy may cause the network to be partitioned which may affect its performance. Thus the network lifetime can be defined as the time from the first time the application activates to the time when some sensor node failed due to its energy source in clusters.

4.4 Energy Consumption

In WSN every sensor node consumes some energy in data collection, data processing, and data transmission. As above we already discuss that sensor node have a limited network lifetime and thus energy consumption is a critical task in WSN’s. Different sensors node has heterogeneous functionality and power for data processing thus the energy consumption not only depend upon the Shannon capacity but it also depends upon the functionalities of these sensors node. Thus the energy consumption of any given path P can be defined by given function [32]:

where \({t}_{i}^{access}\) is the data collections duration time, \({t}_{i}^{process}\) is the data processing time duration, \({Energy}_{i}^{operate}\) and \({Energy}_{i}^{t}\) were the operation power and transmission power. \(N\) The total number of the sensor nodes and \({t}^{message}\) denotes transmission time of the message.

4.5 Energy Efficiency

Energy efficiency is another important issue in WSN’s which is very closely related to the network lifetime [33]. As we have a limited lifetime of sensor nodes so we have to utilize them efficiently so that the network lifetime increases. Energy efficiency can be defined as the ratio of power transmission to power. We can also express it in term of the equation:

where \(Z\) denotes the communication bandwidth, \({p}_{i}\) represents the power transmission of \(i\) node and \({\gamma }_{i}\) denotes the SINR at receiver in respect to \(i\) node.

Two methods can be used one method is to schedule some nodes to sleeping mode and the remaining node to active mode. The second method is to adjust dynamically the range of sensing in the nodes.

4.6 Network Latency

Latency means how much time the sensor nodes take for transmitting the data from one point to another and in WSN there is fixed bandwidth for transferring the data. If there are a large number of nodes then the latency of the network decreases as the data can be transferred easily but we need to also consider other factors which were affected by this. There must be traffic on the network due to increase sensor nodes or much energy consumed by them. The delay from the source node to the destination node for transferring the data can be given by [34, 35]:

where \(S_{{source}}\) and \(S_{{sink}}\) denotes the source and destination nodes, \(D_{q} {\text{~}},{\text{~}}D_{p}\) and \(D_{t}\) are the transmission delay, propagation delay, and queue delay. And in the second equation for easiness, all the delays were combined and denoted by \(C\).

4.7 Differentiated Detection Level

This is another major issue necessary in WSN; in this, the different threshold value of detection probability is different for different locations or region of deployment. Such as the deployment of sensors node underwater required high detection in comparison to the ground level deployment. The sensitive region required high detection threshold value than the low sensitive region, so we can say that in a less sensitive area low detection probabilities have to be maintained by deploying fewer sensors node. Thus different geographical region has different requirements for the deployment of sensor node and the sensing requirement were not ideal under same supervision region.

[36,37,38] has given the probabilistic model for detection level, let us assume that \(d\left( {\left( {x,y} \right),\left( {p,q} \right)} \right)\) denote the Euclidean distance between co-ordinates \(\left( {a,b} \right){\text{~}}and{\text{~}}\left( {j,k} \right)\). Here n represents the physical characteristics of the sensing device and \(S_{r}\) is the sensing range.

4.8 Number of Nodes

In WSN’s every sensor, the node has deployment, production, maintenance cost, thus if the number of sensors node increases then cost will increase, and deployment complexity increases. There should be a proper balance of the sensors node and sensitivity required in the deployment area so that the performance of WSN’s would not affect. Here is the formula is given by [7] for optimizing the sensor node deployment:

Here \(N\mathrm{\text{'}}\) is an active node and \(N\) is the total number of nodes.

4.9 Fault Tolerance

Due to physical condition or energy source loss sensor nodes may fail and thus affects the performance of the networks. Replacement of the battery is difficult so the network has to be fault-tolerant in reducing the number of failed sensor nodes [5, 39]. Fault tolerance can be defined as the ability of the network to work without any interruption in failure of nodes; with help of routing protocols, this can be implemented. Fault tolerance can be formulated with the help of the Poisson distribution formula by the probability of not having failure [9]:

where \(\lambda _{n}\) the failure rate of \(n\) node and \(t\) is time duration. Many research studies have been done in this direction for finding the \(k\) connectivity of the sensors node [26, 27, and 40].

4.9.1 Fair Rate Allocations

Fair rate allocation of different sensor nodes must be at a high priority so that we cannot end up cutting off the many sources at the networks, thus only allowing those nodes whose energy source cost is very low. Thus to guarantee that all node's information reaches the receiver node fair rate allocation is necessary. By only maximizing the throughput will not guarantee the performance of applications it will only cut off some nodes' source rate that has been allocated to them [41]. All tasks were important in the WSN thus we need to understand the priority of the task and accordingly maximize the throughput. [41] Suggested a method for fare rate allocation which is the network utility maximization (NUM). Suppose \({Utility}_{i}\left({x}_{i}\right)\) is the utility function of sensors node \(i\) at a rate \(\left({x}_{i}\right)\). The utility function for a specific class of fair rare allocation has been equated [42] given below:

For proportional fairness, we have \(\alpha =1\) and for max–min fairness, we had \(\alpha >1\).

4.9.2 Detection Accuracy

High accuracy is a must for WSN’s for achieving the goal for what the sensor networks design for. High accuracy is possible due to detection of accuracy which is directly related to latency of network and timely delivery of the information. Proper information should be reached at the destination within the time limit. Node \(n\) get energy signal \({e}_{n}\left(x\right)\) from the location \(x\) and \({K}_{o}\) is the emitted energy. Where \({d}_{n}^{p}\) is Euclidean distance from node \(p\). The formula for the energy signal can be given as:

4.9.3 Network Security

Attacks on sensor nodes are common, some of them were deployed to an area where the physical attack on sensor nodes can be done to make harmful actions. By unauthorized access of data, the data can be used for unethical work. Much research work has been done in network security to secure the network. Two types of security concerns are there one is context-based and other data-based privacy concerns. In context-based concern, the context of the data was taken for privacy concerns such as timing, location, header much more, and in data-based concern data collected in WSN’s is a major concern (Table 3).

5 Literature Review of Trade-Off between Issues in WSN

5.1 Coverage-VS-Lifetime Trade-Off

Many attributes affect the performance of WSN’s thus many optimization algorithms were applied to tradeoff the conflicting between these objectives. The conflicting reason between coverage and network lifetime will be explained in this section. Optimizing the coverage means extending the monitored areas relative to the whole area. We can also define it as spreading the areas by deploying the sensors nodes at far locations so that area coverage is more and avoid the overlapping of sensors nodes. With this large number of sensors, nodes were deployed as a result relay transmission increases for nodes directly communicating to the base station and this results in loss of energy of sensors nodes which decreases their lifetime. In contrast, optimizing the network lifetime means sensor nodes directly communicate to the base with few hops so that they use their energy for their transmissions which increases network lifetime. Many research works have been done to achieve these two objectives and they are summarized in Table 4 below.

5.2 Latency –VS-Energy Trade-Off

Reducing the energy consumption needs sensed data transmitted over a reduced distance via a single hop. In contrast, minimizing delay requires that the numbers of intermediate nodes from source to destination should be reduced. If we reduced the search space then it will cause unbalanced load balancing between the nodes and this will cause a reduction inefficient energy consumption [43], [44]. Thus it is necessary to solve this problem together, various literature reviews and research works have been done for solving the trade-off between latency-vs-energy. Table 5 shows the various literature review and works done.

5.3 Lifetime-VS-Application Performance Trade-Off

In various applications, its performance depends upon various attributes in some applications number data transfer rate is the trademark for its performance. Thus the performance of certain applications cannot be determined but for certain applications, the higher data transfer means better performance. But the higher data rates result in increased cost for communication and need more sensing thus were reducing the network lifetime. Thus to balance between these two issues various research works and reviews has been done which were elaborated in Table 6.

5.4 Number of Nodes

Implementing a large number of nodes will results in increased cost, more energy consumption, and the detection of the whole system will not be possible. Thus increasing the number of nodes will lead to many issues thus for reducing this many multi-objective algorithms NSGA-II were used. Various literature review and research work contributing to solving this trade-off have been given in Table 7.

5.5 Reliability Issues

The main aim of implementing WSN’s is for transmitting and capturing the images, video, and audio to the destination with reliability. But some of these WSN’s applications need proper implementation of QoS. During routing, QoS faces some critical issues such as energy consumption, delay, latency, bandwidth, reliability, and each node's energy status. Thus we need proper routing protocols which were also discussed later in this paper to maintain the QoS. Various research works and surveys that focus on the multiple trade-offs between nodes centering the reliability issues were summarized in Table 8.

5.6 Trade-off among other Metrics

As we have discussed metrics having conflicting behavior and difficulty to achieve all of them together. Several metrics need to be addressed for achieving MOO. If we try to understand the scenario then we may get to know for different deployment different metrics were important not all of them always be important. According to the decision-makers based on the actual situation or implementation of WSN various routing algorithms were deployed or used for making a tradeoff between conflicting metrics. In WSN basically, the accuracy and network longevity were inversely proportional to each other due to energy consumed by the nodes and the applications want more data for their processing. In Table 9 many algorithms were proposed with multiple objectives and the scope of their applications was general and large scale (Table 10, 11).

6 Techniques of Multi-Objective Optimization (MOO)

6.1 Strategies in Multi-Objective Optimization

In human life, the concept of optimization can be applied in every field. Resources are very limited thus optimization is important. Optimization means making the best or efficient use of available resources. In computer science and engineering, many research works have been done on data analysis, modeling, and simulations. This branch of computer science aims of finding the value of variables which is maximum or minimum for multi-objective or single objective function [45]. There are three components of optimization techniques namely[46]: Optimization strategies where the mathematical formulation has been done, second is the optimization algorithms and third is the simulation, below Fig. 5 is describing the three components of optimization techniques.

First, the problem that has been taken has to be exhibit in mathematical formulation by which we can develop its mathematical model. One of the most important concern is for designing the optimization algorithms because one single algorithm is not sufficient for all problem solution we need multiple algorithms which accurately solves the problem. Many types of optimization algorithms exist such as:

-

Heuristic algorithms provide the optimized solutions for the problem.

-

A bio-inspired algorithm such as genetic algorithms, swarm intelligence and are using widely as they give the optimal solutions.

-

Iterative methods such as the Newton-quasi method, gradient descent, sequential quadratic programming much more.

-

Some of the finitely terminating algorithms are also there.

6.2 Multi-Objective Optimization Algorithms

Various studies have been done in this direction of optimization problems and many algorithms had been developed for solving the multi-objective problem in wireless sensors networks. In optimization algorithms, objective functions need to be carefully chosen for designing the specific optimization algorithm. Careful choice of optimization algorithms is also necessary for solving complex wireless sensor networks. Many optimization algorithms exist, the detailed analysis of these algorithms were discussed in this section:

6.2.1 Mathematical Programming

Mathematical modeling in multi-objective optimization includes weighted sum, goal-based programming (GP), and \(\varepsilon\)—constraints methods.

6.2.1.1 Weighted–Sum Method

Linear weighted sum method is easy to implement and can solve the complex problem the only things need to be taken care of is that the proper weight was chosen so that the optimal solution can be obtained. Linear weighted sum multiplies the variables with proper chosen weight so it can scalarize multi-attribute value to a single objective value. In this method we first apply the normalization to each attributes to achieve the desired balanced between the different attributes that have different units and properties. Then we assign the weights according to the need or importance of the particular attributes. Finally, we get the result based on Pareto-optimal solutions by solving the multi-objective problem.

This method is very to apply just we need to choose the right weights for the attributes because we have to calculate a single value for the single attribute. The range of the weights assigns lies between \([\mathrm{0,1}]\) and the sum is equal to 1. There is no correspondence between weight and solutions; it uses subjective weights to result sometimes in a poor result. Not knowing priori about weights assigns then decision-maker will not get an optimal result and the results were also acceptable. For example: if the decision-maker didn’t know that which is appropriate for which attributes, then he will be not able to adjust the weights to get the optimal results. It is worth noticing that if the decision-maker has a priori about weight preference then he will get the optimal solution based on Pareto-principle. AHP is the powerful method of multi-criteria decision-making [47,48,49,50,51]. AHP has been widely used as it first decomposes the complex or large problem into a sub-problem then analyzes the main problem and after that, it obtains optimal solutions [52, 53].

6.2.1.2 \(\boldsymbol{\varepsilon }\)– Constraints problem

This is another method of mathematical programming it optimizes an objective function with some variables for the constraints on these variables. Here the objective functions can be cost or energy function which needs to be minimized or it can be reward or utility function that is maximized. This method can be formulated as [54]:

where \(f{\text{~}}\left( x \right)\) is the selected function of the objective such that \(x = 1,2, \ldots \ldots ,n\) and remaining is the constraint of objective.

This method sometimes gives the weak Pareto solutions and for certain string conditions, it also gives strict Pareto solutions. This method is not useful when we have more than two objectives because the decision is not obtained as optimal as it can be.

6.2.1.3 Goal Programming (GP)

It is the branch of multi-criteria decision analysis (MCDA) which is the generalization or extension of linear programming to handle the conflicting multiple objectives. Here each of the attributes has given a target or goal value that needs to be achieved and deviations from the given goal measured whether it’s below or above. The non-achievable values were minimized by the decision-maker in the optimization function. In other words, each attribute has given a target o goal value that is achievable; the deviations of non-achievable values were minimized in the achievement function. Many earlier methods were widely used such as MINIMAX, weighted-sum and lexicographic (preemptive) [55,56,57, 2, 58,59,60]. The major advantage of this method is the ease of simplicity and its use. It is used to solve the problem with multiplicity objectives in decision making.

6.2.2 Nature-inspired Algorithms:

Nature-inspired algorithms such as evolutionary multi-objective algorithms [61, 62], swarm intelligence algorithms [63] were sometimes used to solve the multi-objective optimization problem. In realistic situations, some problems may have a complex structure, large number of variables that may not match up with the standard form of mathematical programming. Thus to solve the realistic physical problem in WSN nature-inspired algorithms were used. This nature-inspired metaheuristic approach combined the concept of social science, biology; physics even include the concept of artificial intelligence which increases the performance of optimization algorithms. It also matches up with the complex structure and well suited to solve realistic WSN problems. They give more accurate results but they are very costly as compared to mathematical programming. Nature-inspired algorithms were discussed in this section to brief their meaning:

6.2.2.1 Evolutionary Algorithms

They were also abbreviated as EA’s; it is the family of metaheuristics algorithms. The concept is based on the survival of the fittest in the populations. The main goal of evolutionary algorithms is to select the fittest candidate by calculating the fitness function repeatedly by exploration and exploitation methods. They find in the single execution of algorithms the Pareto-optimal solution dealing with a suitable set of solutions and less responsive to the continuity of PF in comparison with the mathematical programming. [62] discuss the most powerful algorithm of the multi-objective evolutionary algorithm in solving the problem.

-

Genetic Algorithms (GA) Genetic algorithms are the branch of evolutionary algorithms [64]. The algorithms were based on genetics and evolutionary, very efficiently used for solving many optimization problems including the MOO. In comparison with mathematical programming, genetic algorithms handle the more complex problem, implement them parallel. They can deal with any kind of objective function such as linear, non-linear, continuous, stationary, dynamic, discontinuous and these advantages made the genetic algorithms widely used in solving the MOO problems in WSN’s.

-

In WSN, MOGA [65,66,67] has been widely used among all algorithms in MOO, they operated by generation –by generation and obtain the Pareto-optimal solutions throughout the evolution of generations. The author in [65] dictated that MOGA can be used for static sensors implementation in the interesting area which maximizes both the network lifetime and network coverage area. NSGA [68], NGPA [69], and SEPA [61] were also used and a literature review has been in solving MOO.

-

Differential evolution (DE) It’s one of the powerful algorithms of the evolutionary family, developed by storm and price [70]. Its step was similar to the evolutionary algorithms. First, it randomly generates the populations for optimization, then to enhance the performance crossover done between the candidates after generating the donor vector. Finally, the selection is done to determine whether the generated population or candidate is capable of moving to next-generation [71]. The basic step of differential evolution is given in Fig. 6:

-

Artificial Immune System (AIS) Artificial immune system is a computational method inspired by the biological immune system. It is another branch of evolutionary algorithm and solves complex problems. It enhances the local search and avoids premature convergence. It develops improved solutions to the problem by applying clonal selection, immune network theory, and other immune concepts. In general AIS optimization algorithms has a population of individuals known as anti-bodies and other population known as objectives to which anti-bodies try to match it. Those were called antigens. There are many techniques in AIS only the difference is that how these techniques were applied. CSA algorithm or CLONALG develops the anti-bodies by the concept of clonal selection. In this, each individual is cloned and those who have high affinity were selected.

AIS has a similar framework as evolutionary algorithms and can be easily implemented in the MOO and evolutionary optimization problem. Only the difference between AIS and EA’s is the generation of the population. In EA the populations were generated by mutation but in AIS the populations were reproduced by cloning and the child was the exact copy of their parents.

-

Imperialist Competitive Algorithm (ICA) It is another algorithm of evolutionary algorithm, based on imperialistic competition of socio-political evolution. Similar to the evolutionary algorithms, it also starts with the generation of the initial population known as country. The countries were divided into two groups based on their powers one is imperialists and the other is colonies. After the initial generation of populations, the colonies will start moving towards their imperialists and after this, the competitions among the imperialists start. The competition increases gradually the power of strong empires and decreases the power of weak empires, as result the weak empire will their colonies and merges. ICA, lastly formed into a state having the only empire in the world and other countries will be its colonies. ICA is suitable for a single optimization problem and it has been successfully applied to many research works [72, 73]. New methods were developed for multi-objective imperialist competition algorithms and a novel work MOICA was proposed [74] for node deployment handling. Finding the best countries from the Pareto solution will give more coverage and diversity in the solutions, thus it has high significance on the optimization problems that have a large number of objectives.

6.2.2.2 Swarm Intelligence Optimization Algorithms

Swarm intelligence is a branch of artificial intelligence (A.I), adventure the collective behavior of the self-organized and decentralized system of bird flocks, ant colonies, and fish school. It relies on two fundamental concepts one is self-organizing and the other is a division of labor. Self-organizing is defined as the capacity of the system to derive its components and agents without any external help into a suitable form. Self-organizing is based on four properties: positive feedback, negative feedback, fluctuation, and multiple interactions. Positive and negative feedback was used for stabilization and amplification, fluctuation is used for randomness to occur and multiple interactions occur when swarm intelligence has to share information within search space among themselves. Division of labor is the execution of various tasks simultaneously by individuals. With this swarm intelligence is capable to tackle the complex problem that is performed by the individuals. These were the low-cost interacting agents found in a small environment or society’s capability of making the decision. Many algorithms are there in SIOA’s have been developed such as Ant-Colony organization (ACO) [75], Artificial Bee Colony (ABC) [76], Particle Swarm Intelligence (PSO) [77].

-

Ant-Colony Optimization (ACO) As its name says on what concept this algorithm builds. It is inspired by the foraging behavior of the real ant species. Consist of four components mainly: ant, pheromone, daemon action, and decentralized control. To mimic the exploration and exploitation of search space ants were used and pheromones are a chemical that spread by ants as they move in the path whose intensity changes over time. Ants spread or drop the pheromones as they travel, quantities of these pheromones indicate the trial made by ants. The direction chosen by ant indicates the high intensity of pheromones and is considered as global memory for the system. Daemon actions are used to gather global information which a single ant can’t gather and is used to decide whether it’s necessary to add more pheromones or not. The decentralized control is used for making the algorithms more flexible and robust. The algorithm is capable of solving the optimization problem of discrete and combinatorial in engineering, proposed by Dorigo [78, 79]. To understand the basic principle of the ACO algorithm, let us consider two paths A and B from nest to food source. Let assume \({n}_{A}\) and \({n}_{B}\) where the number of ants in the desired path at time \(t\), \(P_{A} {\text{~}}and{\text{~}}P_{B}\) be the probability of choosing paths. Then, the probability of chosen path by ant at the time:

$$P_{A} = {\text{~}}\frac{{\left[ {k + n_{{A{\text{~}}}} \left( t \right)} \right]^{\alpha } }}{{\left[ {k + n_{{A{\text{~}}}} \left( t \right)} \right]^{\alpha } + \left[ {k + n_{{B{\text{~}}}} \left( t \right)} \right]^{\alpha } }}$$(14)and we can also write it as:

$${\text{~~}}P_{A} = 1 - P_{B}$$(15)where \(k\) the degree of attraction is an unexplored branch by ant and \(\alpha\) is a bias towards pheromones for decision.Particle Swarm Optimization (PSO) It is another swarm intelligence algorithm introduced by Kennedy and Eberhard. It mimics the behavior of birds flocking and fish to dictate the particle to find optimal solutions. Three principles were followed in particle swarm intelligence: separation, alignment, and cohesion. Separation is done for avoiding the crowded flock mates, alignment is moving towards the direction of local flockmates moved generally and cohesion is the moving towards the position of local flockmates. In PSO, the birds or particles adjust their flying direction based on their owns experience and neighbor experience [80]. It is capable of solving stochastic optimization problems after several improvements made by researchers. The particles in PSO can be calculated as [80]:

$$S_{{i,d{\text{~}}}} \left( {t_{1} } \right) = wV_{{i,d}} \left( t \right) + C_{1} r_{1} \left( t \right)\left[ {P_{{i,d{\text{~}}}} \left( t \right) - X_{i} \left( t \right)} \right] + C_{2} r_{2} \left( t \right)\left[ {P_{d} \left( t \right) - X_{i} \left( t \right)} \right]{\text{~}}$$(16)$$X_{{i,d{\text{~}}}} \left( {t_{1} } \right) = X_{{i,d}} \left( t \right) + V_{{i,d}} \left( t \right)$$(17)where \({C}_{1}\), \({C}_{2}\) are the constants and \(w\) is the inertia weight. \({P}_{d}\) Is the best position of the particle, whereas \({r}_{1}\left(t\right)\) and \({r}_{2}\left(t\right)\) were the random particle generated and at the \(t\) iteration velocity and distance update their position according to both equations.

Particles population generated here relies upon archive for storing the Pareto solution and choosing the best global solution among them. This is the key difference between multi-objective and earlier single-objective problems. The concept of PSO is relatively new in the WSN, thus people were adopting this new method for multi-objective optimization problems. The author in [81] developed a new approach of MOO based on PSO for node generation improving maximum network coverage, lifetime, and connectivity and it also shows the accurate result with low error transmission.

-

Artificial Bee-Colony Optimization Problem (ABCOP) This optimization algorithm is based on the nature of honey bee the way they collect their food and move. It is also known as ABC algorithm, in this, the food source represents the optimization solutions and the nectare shows the quality of those possible solutions. It consists of three bees namely: employed bee, onlookers’ bee, and scouts bees having three phases [25] [33]. For each food source, there is only one employed bee thus the number of employed bee is equal to the number of food sources in the swarm.

-

Employed bees In the first step randomly distributed food sources have been allotted to each employed bee, then the employed bee flies to the produced food source determines the neighbor source of food, and thus calculates the nectare. If the calculated nectare is better than the previous one then the employed remember the current food source else it remembers the old ones.

-

Onlooker bee After the employed bees completed the entire task stated above, employed bees return to the hive and share their food source location and nectare to the onlooker. The onlooker then calculates the nectare collected by all employed bees and then remembers whose calculated value is higher. If the nectare of food source is higher then the onlooker will remember this position and forgets the previous one same as employed bees.

-

Scout This is the last phase, after completing the above task, the left-over food sources of employed bees were found and these left-over will become a scout. The scout produced new food sources without considering any experience to replace the left-over ones.

6.2.2.3 Artificial Neural Network Optimization Algorithms (ANN)

Also known as ANN based on neurobiology and works similarly as biological neuron works. It is used for processing and estimating the large inputs which are unknown and generates the results [82]. Biological neurons consist of axons, dendrites, and soma, each neuron connects with another neuron with the help of synapse junction of dendrites and axon. The axon performs actions that pass from the soma to the next level of the axon. We can relate the biological neuron to artificial neuron in the same manner, in artificial neuron the signals having the weights corresponds to the synapse and these signals were then processed. Function \(f(x)\) is the weighted sum of all the incoming signals and the output produced represents the axon. We can relate the biological neuron to the WSN nodes such as the sensor node converts the physical signal into an electrical signal which is processed by weighted signal corresponds to synapse. The function which processes the signals represents the soma of biological neuron and it sends output via radio link corresponds to the axon, this represents that biological neuron was strongly represented the similar structure of the sensors node. We can model the whole sensors node from an ANN and we can also rely on them for the outputs.

6.2.2.4 Reinforcement Learning Optimization Algorithms

It is a mathematical framework in which agents interact with the environment learns from it, perform actions and structure the problem into the Markov decision process [83]. The most important technique of reinforcement learning is Q learning which describes in the figure below where we can see an agent which continuously performs an action and gets a reward based on its performance. The reward can be calculated as:

Here \({R}_{{S}_{t+1}{a}_{t+1}}\) represent the overall reward, \({r}_{{S}_{t}{a}_{t}}\) represent the immediate reward after action and \(\alpha\) is the learning rate which can be between \(0\le \alpha \le 1\) determines how fast learning is done. This algorithm can be widely used in WSN’s for solving the optimization problem [84] [85]. Below Fig. 7 shows the basic state:

6.3 Software Tool for Optimization

There are many software tools available that can be used for optimization algorithms. Many of these algorithms were open-source available online.

7 Routing Algorithms in WSN’s

WSN consists of large numbers of nodes that transfer data from source node to destination and has numerous applications in various fields such as business, industry, agriculture, environment, air quality control, and much more. These applications while performing the activity consume a large amount of energy and data processing [86]. Currently, the research has been directed towards efficient energy consumptions, load balancing, better network life, and finding the best path for sending data. Authors [87, 88] give their contribution by making energy efficiency in WSN by using green computing and proposed an energy-efficient industry IoT three-layer architecture [87]. In this, the author used MAC which makes energy conservation by switching nodes to sleep or wake node depending upon the condition and this simulation proves to minimize the energy consumption in the industry and reduced variable neighborhood search (RVNS) queue-based architecture was proposed by [88] to solve the issue related to data processing, collection, and analysis. This RVNS provides better data analysis and efficient energy consumption in WSN. To elaborate further firstly we need to understand the architecture of WSN which describes to us how nodes were connected, how data were transferred, and much more. In designing WSN the most important issue is energy consumption because the nodes which were implemented are mostly non-rechargeable and their replacement was much difficult, similarly the route selection is also important which shows how our data were transmitted. Their many issues in WSN such as load balancing, energy efficiency, cluster head selection, congestion control, network lifetime, and for these issued clustering algorithms (hierarchical) proved to be very promising.

Currently, cluster head-based routing algorithms were now getting more attention, in this sensor nodes were divided into clusters and one of them was selected as cluster head (CH) which main node [89].

-

Taxonomy of various routing algorithms in WSN In wireless sensor networks many of the routing algorithms were used recently for solving many issues and providing them the optimized solutions. Below we have discussed the various routing protocols and their classifications.

-

Structure-based routing algorithm This is one type of classification of routing algorithms; in this, the structure of the network is very important. In this how the nodes were associated with each other and transmit the data is significant for designing the network. The taxonomy of routing algorithms is shown in Fig. 8: hierarchical routing, data-centric routing, location-based routing algorithms, and QoS-based routing algorithm.

7.1 Data-Centric Algorithms

In data-centric algorithms when data sent from the source node to the destination node then nodes in between them perform the aggregation on the data or information from multiple sources and sends the aggregated data or information to the destination nodes this saves energy consumption. But have limited memory for data processing. Here data recovery and data circulation were the main objectives of data-centric algorithms.

7.1.1 Flooding Algorithm

It is the basic routing algorithm that uses multi-hopes as a routing metric. It follows a very simple procedure: when the packet is received by a node, it will broadcast to all its neighbor nodes and this process will continue unless all nodes will receive the packets. In flooding the concept is very simple it only rebroadcast the packet to nodes as it doesn’t require any information about the neighbor node. Thus this algorithm is considered as a simple algorithm but nothing comes without cost, it has many issues from being simple:

-

Duplication In flooding whenever a packet is received by the node it will broadcast to all its neighbors without concerning that node has already received that packet from another node. Thus it does not restrict delicacy and energy consumption becomes high.

-

Information overlapping As in WSN, numerous nodes have been deployed to cover the large area of the network and sense the area. Sometimes the same area is sensed by more than one sensor and they send the same information due to which information overlapped which becomes similar to the duplication of information.

-

The blindness of resources In WSN resources were limited, here whenever the node received packets it will broadcast them to the neighbor without taking care of available energy.

7.1.2 Gossiping Algorithm

Gossiping is the modified version of flooding, when a node received packets it will not broadcast its entire neighbor but it will randomly send the packets to some of its neighbors [90]. The stochastic method is used by the gossip algorithm although it will not solve the duplication problem it surely reduces the data duplication problem. It will not solve the issue of data overlapping and blindness of resources. There are chances of having data delivery failure such that it may be possible that a particular node not receive the packet.

7.1.3 SPIN Algorithm

SPIN stands for “sensors protocol for information via Negotiation” is another algorithm in the family of routing [91]. Different types of SPIN algorithms are there such as Point-to-point SPIN (SPIN PP), energy consumption awareness SPIN (SPIN EC), broadcast network SPIN (SPIN BC), and reliability SPIN (SPIN RL) and SPIN uses negotiation approach to resolve the duplication, information overlapping and resources blindness issues from flooding algorithm. Here sensor nodes send packets to every bode describing the type of data, not original data, and negotiate with them. Nodes that are interested only to those the packets will be sent as a result they reduce the data duplication and data overlapping. In addition to this sensor, node monitors their energy which also limits resource blindness.

The basic algorithm of a SPIN family is SPIN-PP which point to point SPIN, there are three-phase: the advertisement phase, request phase, and transmission phase. In the first phase when the node receives the packets it will advertise this to every node containing the type of data rather than the data itself. After that nodes which were interested, they respond by sending the request message to the sensor node. In the last phase, the data were transmitted to the node interested. By this SPIN-PP decrease, the duplication of data and overlapping but blind resource is still there. To reduce the problem of resource blindness SPIN-EC puts energy conservation as an important metrics for sensor node, it set a threshold value of energy for communication, and if the nodes energy level is less than the threshold value then the communication cannot be done. SPIN-PP and SPUN-EC deal with point-to-point communication but sometimes we need to communicate with more than one at a time for this SPIN-BC to come into the picture.

SPIN-BC uses a three-way handshaking phase with some modification of broadcast: sensor node advertises its packet to all neighbor nodes within its range and the interested node sends the request message with a random time setter. The request message contains the address of the advertisement node in a broadcast manner and a random time setter is used here for avoiding the collision between the nodes. The advertiser node sends the packet to the nodes and rejects other node requests for the same data. SPIN-BC is not that much reliable as it does not track the delivery of packets hence SPI N –RL provides reliability over SPIN-BC. In this, if the node not received data after a certain time then the node understand that some error occurs then it sends again the request message for data transmission.

7.1.4 SPMS Algorithm

SPMS stands for Shortest Path Minded SPIN which a modified version of SPIN. It uses the shortest distances for communication [92] than others because when distance increases the energy consumption also increases. SPMS uses multi-hop and has similar steps as that of SPIN: advertisement phase, request phase, and delivery phase. Unlike SPIN, SPMS does not directly send the advertisement if it finds a node at the shortest distance. It means that if the sensor node has two neighbors then the request message will send by the node having the shortest distance and waits for a particular time then request from other nodes it can receive.

7.1.5 Direct Diffusion

As we have seen that SPIN has many advantages over flooding and gossiping. Sometimes user wants some information from the sensor node thus to fulfill this direct diffusion algorithm used. In this, the communication starts from the sink node which needs to seek some information from the sensor node, and the sensor node provides that data to the user. It has four phases: interest propagation, gradient setup, reinforcement, and delivery of data [93]. Direct diffusion is a query-based algorithm so when there is a query generated only then the communication starts this is the disadvantage that it contains.

In this user placed the request to the sensor node for data it wants with its features, the sensor node transmits the required data to the user. The intermediate node will aggregate the data or send it as it is and the node which receives the interest data will create a gradient and broadcast it. Gradient node will create multiple paths from the source node to sink node, due to which each node has now received the data of interest, and if any node already that data then it can also send that to the sink node. There are multiple paths thru which the data can be received to the sink node. The sink node can receive the data from multiple paths, in this case, the sink node can reinforce the path from which it wants to receive the data based on some priority such as path having less delay, the better quality of data, and based on this sink node will select the path. This is performed by every node which receives the data and the node does it based on local rules.

7.2 Hierarchical Based Algorithms

In this, the nodes were not identical and perform various functions, high energy level nodes are used for transferring the data, and low energy level nodes can be used for sensing the data. These algorithms provide high energy efficiency, simple topology design, less energy consumption, load balancing, and provide us the maximum network lifetime. It is good for WSN but has constraints on energy. Energy saving is the most important role of clusters in sensor nodes and its central focus is on the energy conservation of sensor nodes in multi-hop implementation. When sensor nodes transfer data from one network to another the energy gets depleted and thus there is a need to focus on energy saving of the sensor nodes. In Table 12 some of the hierarchical based algorithms discussed:

7.2.1 LEACH-SWDN Algorithm

Low Energy Adaptive Clustering Hierarchy (LEACH) is well-known heuristic algorithms in WSN for clustering and an adaptive method for hierarchical routing algorithm. It uses the probabilistic approach for selecting the cluster heads (CH) after a particular round and after the selection of cluster head, all other nodes based on their nearness goes to their CHs. Here the probability is predefined and introduces the concept of cluster heads (CH). Random selection of CHs does not increase the network lifetime as they consume more energy, hence some advancements were made by researchers to enhance the LEACH algorithm. The author in [94] proposed LEACH- SWDN (Sliding window and random numbers of node) where the sliding window is used for interval by generating the random numbers of a node. Interval of the sliding window is determined by averaging the random numbers of nodes (non-CH) and nodes energy, thus it optimally places the cluster heads (CH) based on living nodes, and the energy consumption is reduced in comparison with LEACH, A-LEACH, and LEACH-DCHS algorithms. In [95] author proposed a Quadrature-LEACH algorithm for improving network lifetime, throughput, and stability for the homogenous network. Here clusters networks were divided into four quadrants and further they were divided into subsectors thus the distribution of sensor nodes is better and solves the problem of energy depletion. This algorithm provides better network coverage and energy efficiency than LEACH, SEP, and DEEC. The author in [96] proposed an enhanced algorithm ERP (Evolutionary Routing Protocol) which improves energy efficiency and network lifetime for heterogeneous networks. An evolutionary algorithm with a better fitness function proves to be very effective for efficient energy consumption, load balancing, network lifetime (Table 13).

7.2.2 C-RPL Algorithm

In WSN many different tasks such as sensing the information, collecting the data from different sources, and processing the data are done. In RPL network is divided into several RPL instances and how this division takes place is not explained by the RPL. Thus [97] developed C-RPL which is known as Collective-RPL and in this, each instance has a focus to reduce energy consumption. In C-RPL nodes were not predefined as they were constructed according to the system condition, locations, and occurrences. C-RPL by using coalition gives high performance but with increasing power consumption, thus by using fairness analysis α in this the performance and energy consumption trade-off are reduced. When compared to RPL, C-RPL gives better performance with reduced energy consumption in MATLAB simulation.

7.2.3 ZEEP Algorithm

ZEEP stands for Zonal based on Energy Efficient Routing Protocols was proposed to enhance the network lifetime and reduce the intra and inter-cluster transmission distance between the nodes. WSN has issues related to network connectivity, coverage, bandwidth which affects its performance then it requires the careful setting of the network. In [98] author has proposed an Optimized-ZEEP algorithm where two stages of clustering are presents. At initial stage, the fuzzy inference system is present which uses a Fuzzy logic controller for selecting cluster head (CH) taking inputs as the energy of each node, its density, mobility, and distance from a base station. For promoting the best CH genetic algorithms were used. In the second stage of clustering genetic algorithms were applied for optimally balancing the CHs distribution in the network. In comparison to ZEEP, OZEEP has better efficiency and less packet loss.

7.2.4 MTPCR Algorithm

The signal strength of received packets and the contentions that occurs in the contention-based MAC layer were the two factors that affect the transmission bandwidth as they cause more power consumption. Author [99] has proposed Power-aware routing protocols known as MTPCR (Minimum Transmission power consumption routing) which discovers the routing path for transmission consuming less power. With the help of neighboring nodes, MTPCR analyzes the power consumption during transmission and uses path maintenance for optimal path bandwidth. This helps in the reduction of power consumption and node density is used to decide whether or not to activate the path maintenance. The comparison is conducted in terms of throughput, energy consumption during data transmission, and path discovery with AODV, DSR, MMBCR, xMBCR, and PAMP, simulation results show that MTPCR is better than these.

7.2.5 AOVD Algorithm

AOVD algorithm stands for Ad hoc On-Demand Distance Vector algorithm supports multicasting and unicasting within a uniform network. It is an on-demand and table-driven protocol and the packet size is uniform. Each route has its lifetime which means after a certain time it expires if not used and the route is maintained only when it is used. AOVD maintains only one route between the source node and destination node. The author in [100] proposed a multi-cast routing protocol known as Extended-AOVD algorithm based on distributed minimum transmission (DMT). In Extended AOVD each sensor node has fixed power and the same communication range. Here if the received packet has less power than the threshold power it is accepted and for route, optimization EAOVD forwards the routes which connect to the multi-receiver. EAOVD as compared to AOVD, LEACH algorithm is better in terms of performance, packet loss, throughput, energy consumption, and network delay.

7.2.6 PHASeR Algorithm

PHASeR stands for Proactive highly ambulatory sensor routing algorithm is proposed by [101] for radiation mapping. It uses blind forwarding methods to send the messages in a multipath manner and Hop-count gradient is used for blind forwarding in PHASeR. In this, all nodes listen to the communication and only the receiving nodes have the right to decide that whether or not to send the received information further in the network. PHASeR is mathematically analyzed in terms of mobility, traffic load, and scalability. This algorithm gives better performance as compared to AOVD, OLSR.

7.2.7 HEED-ML Algorithm

HEED stands for Hybrid energy-efficient distributed clustering protocol, several neighboring nodes, and residual energy were two factors for selecting the CHS. It does not depend on the density of WSN and used single-hop transmission. Intra-communication cost and residual energy were two parameters for the selection of CHs, where residual energy is first and AMRP (average minimum reachability power) is the second parameter which is a tie-breaker. Author [102] has developed the HEED algorithm and it is also known as a multi-level HEED (HEEDML). Node density and distance were the two add-on parameters necessary for CHs selection in fuzzy deployment. After the CHs are selected then aggregation on data and after that transmission is done. HEED gives better network throughput, reduces delay, energy consumption, and transmission in comparison to others.

7.2.8 EA-FSR Algorithm

Author [103] has developed the Energy-Aware fisheye state routing (EA-FSR) algorithm. It takes energy as the basic parameters for selecting the neighboring nodes rather than distance and the energy of all neighboring nodes was compared to find which nodes have the highest energy. Here with basic FSR, an energy-aware path mechanism is used and the packets were forwarded to nodes considering the efficient energy parameters. EA- FSR performs better than FSR with reduced energy consumption and better performance.

7.2.9 EGRC Algorithm

A novel approach has been proposed by [104] for reliable transmission in underwater acoustic sensor networks (UASNs) as energy consumption is considered to be an important issue in underwater networks. EGRC focuses on complex parameters needed in underwaters such as 3-D dynamics of node density Topology, CHs, mobility, and delay. The 3-D network is developed for EGRC with 3-D small cubes as clusters and the density of node depends upon the size of small cubes. The selection of CHs depends on the residual energy and smallest distance from the base station, later a dynamic mechanism is introduced where small cubes become the CHs on a rotation basis for a particular time. After this, an efficient search algorithm comes where it gives an optimal route path by considering end-to-delay, energy metrics, and distance. In NS2 this algorithm is simulated with Aqua-Sim package, where EGRC gives better performance in terms of energy efficiency and network lifetime in comparison to LEACH, EL-LEACH, VBF, and L2-ABF.

7.2.9.1 PSO Algorithm

Swarm intelligence-based algorithms are self-organizing; self-associated having cooperative behavior among particles and they also give feedback (positive and negative). PSO (Particle swarm intelligence) is a swarm intelligence-based algorithm where the swarm is represented in the form of groups of particles. During the transmission of data, a swarm will be moved to find out the optimal path. The author in [105] found the solutions to two common problems of optimization i.e. clustering and energy-efficient routing by giving linear and non-linear formulas for these two.

7.2.9.2 QoS-PSO Algorithm

The author in [106] introduces QoS in PSO by using the intelligent agent. QoS-PSO is an agent-assisted routing algorithm where QoS is an adaptive value in the PSO algorithm. Here the routing state of every node, changes in network topology, and communication of network were examined by an intelligent agent. This algorithm performance is better for large networks and has better scalability.

7.2.9.3 PSO-ECHS Algorithm

PSO-based energy-efficient cluster head selection algorithm (PSO-ECHS) is implemented by [107] and here fitness function is used in PSO. Cluster head selection is done by considering various parameters such as intra-cluster distance, sink distance, and residual energy for calculating weight function. This algorithm gives better performance than LEACH, LEACH-C.

7.3 Location-Based Routing Algorithm

In a routing algorithm where the focus is on finding the path between pairs of nodes so that data can be transmitted with less delay, various types of algorithms exist in the routing family. Data-centric and hierarchical-based routing algorithms focus on the information of the network for finding the paths but in a location-based routing algorithm, the geographical location of nodes is used to transmit the data. This type of algorithm is mainly used where the location of sensor nodes is necessary such as environmental analysis, forest monitoring, and environment monitoring where the location plays an important role. Now the things that come to mind how does a node get to know about the location information so it can be done by using on-chip GPS or location system or location algorithms. On-chip GPS on every node is not as much feasible because it consumes more energy; cost so various location systems and algorithms were used. Classification of a location-based algorithm is a uni-cast location-based and multi-cast location-based algorithm. In uni-cast location, the sender sends the data to a specific node whose address is known by its but in multi-cast routing, the data were sends to multiple nodes known to sensor nodes.

There are some of the schemes used by location-based routing algorithms to forward the packets in the direction of the path, so any of the one schemes are chosen by the algorithm. In NFP (Nearest with forwarding progress) [108] packets will be sent to the nearest node in the direction of the path moving toward the destination and in greedy forwarding packets will be sent to that node from where the number of hops will be minimized in direction of the destination. MRF (Most forwarding progress with radius) [109] send the packets to that node from its radius coverage which will move toward the destination direction and in compass routing [110] in this the node which will make a smaller angle with two straight lines will be chosen for forwarding the packets.

7.3.1 GPSR Algorithm

Greedy perimeter stateless routing algorithm (GPSR) [111] uses both greedy and perimeter scheme for forwarding the packets to the node but both were not used at the same time. It is a uni-cast routing algorithm that uses a perimeter routing-based scheme only when a greedy algorithm is not applicable. Each node has information about the location of their neighbor and packets sends from the source node have source and destination node address in that. Here when a packet is forwarded to a node then the greedy scheme chooses the best promising node in the direction of the destination node. Sometimes there exists a void region where no neighbor is found despite having a shorter distance than then perimeter scheme has been chosen there. GPSR is a very simple routing algorithm that only needs the location information of the neighboring nodes. Normally it used the greedy scheme where it needs the information of the neighboring nodes to sends the packets but in case the neighbor node is not available then the perimeter scheme is used using the right thumb rule for forwarding the node.

7.3.2 GAF Algorithm

GAF (Geography Adaptive Fidelity) [112] is another location-based routing algorithm with energy awareness. It is also a uni-cast routing algorithm where the entire network is divided into the grid and only one node can transmit the data to other grid as in clusters. There is one main node to which all other nodes forward the data and that main node transmitting the data to other cells and they have the information about the other cell's neighbors. The only main node is active and all other nodes were in sleep mode.

This algorithm is very simple: in this initially, all odes were in the discovery mode where the node waits for the data from other nodes within a cell for a random amount of time after the time expire the node comes in an active mode where it starts transmitting the data to other cells for a random time. During the activation mode, the node broadcasts the data so another cell node comes to an active node for forwarding the data. After that, the node comes in discovery mode where it discovers the data for random time after the time expires the node comes in sleep mode.

7.3.3 GEAR Algorithm

GEAR (Geography and energy-aware routing) [113] is a routing protocol that forwards the data to a particular region rather than the entire network. It has two working phases of operation: first, the packet is forwarded towards the destination based on location and energy awareness information. Second, the when packet reached destination nodes, diffusion of packets within the target is done by recursive restricted forwarding.