Abstract

As the number of smartphone users grows rapidly, continuous sensor data are increasingly available, which has attracted enormous interest in sensor-based human activity recognition (HAR). Recognizing human activities is particularly important for detecting abnormal activities and tracking a person's physical activity, especially in healthcare applications, among many others. In this paper, HAR analysis is conducted with three machine learning algorithms (support vector machines (SVM), decision tree, and random forest) using smartphone sensor data. Machine learning algorithms are capable of identifying and differentiating between human activities using mobile phone sensor data. The smartphone sensor (gyroscope and accelerometer) data were recorded for different human activities at the Koneru Lakshmaiah Education Foundation University campus, Guntur, India, and analysed with the SVM, decision tree, and random forest algorithms. To evaluate the accuracy of the machine learning algorithms, the F1 score is estimated for each smartphone sensor individually and for the sensors combined. The results indicate that the proposed machine learning methods can derive a relation between the type of activity, the algorithm, the smartphone sensor data, and the corresponding accuracy. The outcome of this work could be beneficial for detecting abnormal activity features of older people with a smartphone device.


1 Introduction

Smartphones have a wide range of sensors that record our interaction with the surroundings. Apart from providing telecommunication, sensors such as cameras, gyroscopes, accelerometers, magnetometers, and temperature and humidity sensors capture characteristics of the environment. Wireless sensor networks (WSNs) have likewise been widely applied in many fields, such as smart homes, industrial control, telemedicine, and disaster relief [1, 2], and there is a growing need to develop control models for the data planes of software-defined WSNs [3]. The availability of continuous and instantaneous smartphone sensor data about daily life enables us to better understand human behaviour and interactions. Activity recognition and human behaviour cognition are among the most sought-after applications of smartphone sensor data. Human activity recognition plays a vital role in medicine and surveillance, especially in monitoring the health of the elderly, whose health condition can be tracked continuously based on their current activity, as inferred from sensor data using machine learning techniques [4]. HAR has multiple other applications, including assisting patients with cognitive disabilities, human fall detection, human–computer interaction, and ambient intelligence [5]. Human activity monitoring with sensors has been used extensively for health monitoring, sports science, navigation, emergency response, etc. [6, 7]. Combinations of the latest positioning technologies and sensors have been used to capture human movements in natural environments [8]. Human activities are often tracked with individual, dedicated sensors attached to a person, which can be inconvenient while travelling and working. Smartphone sensors, which are also capable of detecting such abnormalities, are comparatively less expensive than dedicated sensors. A least-squares support vector machine (LS-SVM) algorithm has been implemented for HAR [8]: Ling Pei et al. [8] found that motion states were recognized with an accuracy of up to 92.9% using LS-SVM classifiers. However, an appropriate machine learning algorithm still needs to be developed for complex HAR feature classification. In this work, tree-based methods (decision tree and random forest) are evaluated for predicting HAR features. Tree-based methods make no assumption about the distribution of the data. The simplest such model is the decision tree; the random forest algorithm, an improved extension of the decision tree, is also applied to the smartphone sensor data. The decision tree is a supervised learning algorithm with a predefined target variable that is mostly used for classification, whereas random forest algorithms can handle large sets of attributes. Accelerometer and gyroscope data were acquired with a mobile phone at the Koneru Lakshmaiah Education Foundation, located in Guntur district, Andhra Pradesh, India. The outcome of this work would be useful for characterizing human activity recognition using smartphone sensor data.

2 Experimental Setup

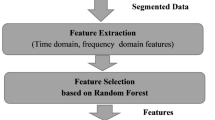

In this paper, we implemented machine learning algorithms on data collected through mobile phone sensors; the sensor-logging applications store the data as CSV files. The following human activities were investigated with the machine learning algorithms:

1. Run or walk
2. Climbing up or down the stairs

The data collected from the sensors were grouped in CSV format for simple classification, and the grouped data were given as input to the proposed machine learning algorithms. The proposed algorithms were evaluated with the Gini index (Fig. 1).

A Honor 10 Lite mobile phone was used to collect the sensor data at the KLEF University campus, Guntur, India, on 7 November 2019. The data were collected using the Sensor Data Collector and Sensor Record CSV mobile applications available on the Google Play Store. The sampling rate is 100 samples per second (Figs. 2, 3).

2.1 Accelerometer

A mobile phone accelerometer is used to track the smartphone's orientation (Table 1). An accelerometer measures linear acceleration rather than angular motion: it is an electro-mechanical device that measures the acceleration force induced by motion or gravity, and when the phone is static, the direction of gravity reveals how the device is tilted. The accelerometer signal has been instrumental in distinguishing running from walking. The accelerometer in the mobile phone we used is the ST-LIS3DH.
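As a minimal sketch of this distinction (plain Python, not the authors' code), the magnitude of the acceleration vector does not depend on how the phone is held, whereas tilt (roll and pitch) can be estimated from the gravity vector only when the device is static. The axis convention (z pointing out of the screen) and the helper names are assumptions for illustration.

```python
import math

STANDARD_GRAVITY = 9.80665  # m/s^2


def magnitude(ax, ay, az):
    """Length of the acceleration vector; independent of phone orientation."""
    return math.sqrt(ax * ax + ay * ay + az * az)


def tilt_from_gravity(ax, ay, az):
    """Estimate (roll, pitch) in degrees from a static accelerometer reading.

    Valid only when the phone is not accelerating, so that the sensor
    measures gravity alone; during walking or running this assumption fails.
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.sqrt(ay * ay + az * az))
    return math.degrees(roll), math.degrees(pitch)


print(round(magnitude(0.0, 0.0, STANDARD_GRAVITY), 3))  # 9.807 (lying flat)
roll, pitch = tilt_from_gravity(0.0, STANDARD_GRAVITY, 0.0)
print(round(roll, 1))  # 90.0 (phone rolled onto its side)
```

Because the magnitude is orientation-independent, it is a common starting feature when the phone's position in a pocket or hand is not controlled.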

2.2 Gyroscope

The gyroscope complements the accelerometer in determining how the device is positioned, providing another level of accuracy. The gyroscopes inside phones are based on Micro-Electro-Mechanical Systems (MEMS), miniaturized versions of the gyroscope design mounted on a printed circuit board (PCB) (Table 2).
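Because a MEMS gyroscope reports angular velocity rather than an angle, orientation changes are obtained by integrating its output over time. The hypothetical helper below sketches this with the rectangle rule, assuming readings in rad/s and the 100 Hz sampling rate given for the recordings. In practice, sensor bias makes such an integrated angle drift, which is one reason gyroscope and accelerometer readings are combined.

```python
SAMPLE_RATE_HZ = 100.0        # sampling rate reported for the recordings
DT = 1.0 / SAMPLE_RATE_HZ     # 10 ms between samples


def integrate_rate(rates_rad_s, dt=DT):
    """Cumulative rotation angle (rad) from angular-velocity samples (rad/s),
    using the rectangle rule: angle += omega * dt."""
    angles = []
    angle = 0.0
    for omega in rates_rad_s:
        angle += omega * dt
        angles.append(angle)
    return angles


# Two seconds (200 samples) of a steady 0.5 rad/s turn about one axis.
angles = integrate_rate([0.5] * 200)
print(round(angles[-1], 6))  # 1.0 (radians)
```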

The data collected from these sensors give a total of six parameters. The accelerometer provides acceleration values along the x, y, and z directions, and the gyroscope provides angular velocity about the x, y, and z axes, which correspond to roll, pitch, and yaw, respectively. Together, these values describe the motion and orientation of a person while moving. They are recorded against time (in milliseconds) in a CSV (comma-separated values) sheet. The collected data are raw sensor readings that vary continuously with time; they are then processed, grouped, and classified by the algorithm applied to the recorded smartphone dataset.
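One way to turn such six-channel recordings into classifier inputs is sketched below: parse the CSV text, estimate the sampling rate from the millisecond timestamps, and compute the mean and standard deviation of every channel over fixed-length windows. The column names (`time_ms`, `acc_x`, `acc_y`, `acc_z`, `gyro_x`, `gyro_y`, `gyro_z`), the 1 s window (100 samples), and the 50% overlap are hypothetical choices; the paper does not specify the CSV layout of the logging apps or the features used.

```python
import csv
import io
import statistics

# Hypothetical column layout: timestamp in ms followed by the six channels.
CHANNELS = ["acc_x", "acc_y", "acc_z", "gyro_x", "gyro_y", "gyro_z"]


def load_rows(text):
    """Parse CSV text into a list of {column: float} dictionaries."""
    return [{k: float(v) for k, v in row.items()}
            for row in csv.DictReader(io.StringIO(text))]


def sampling_rate_hz(rows):
    """Estimate the sampling rate from the median gap between timestamps."""
    times = [r["time_ms"] for r in rows]
    gaps = [b - a for a, b in zip(times, times[1:])]
    return 1000.0 / statistics.median(gaps)


def window_features(rows, window=100, step=50):
    """Mean and (population) standard deviation of each channel per window."""
    features = []
    for start in range(0, len(rows) - window + 1, step):
        chunk = rows[start:start + window]
        vec = []
        for ch in CHANNELS:
            values = [r[ch] for r in chunk]
            vec += [statistics.fmean(values), statistics.pstdev(values)]
        features.append(vec)
    return features


# Synthetic 3 s recording at 10 ms intervals (100 Hz), for illustration only.
lines = ["time_ms," + ",".join(CHANNELS)]
for i in range(300):
    lines.append(",".join([str(i * 10)] + [str((i % 7) * 0.1)] * 6))
rows = load_rows("\n".join(lines))
X = window_features(rows)
print(sampling_rate_hz(rows), len(X), len(X[0]))  # 100.0 5 12
```

Each window then becomes one labelled sample (e.g. walking = 0, running = 1) for the classifiers in Sect. 3.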

3 Algorithms Under Evaluation

In this paper, HAR analysis is conducted with three different machine learning algorithms (Support Vector Machines (SVM), Decision tree, and random forest methods) based on smartphone sensors.

3.1 Support Vector Machine (SVM)

The SVM is one of the classic supervised learning techniques. It maximizes the geometric margin while minimizing the classification error, mainly the empirical error; because of this property, it is known as a maximum-margin classifier [9]. Hyperplanes play a significant role in SVM classifiers. A hyperplane is a subspace with one dimension less than the space surrounding it (a line in two dimensions, a plane in three). For any given data, several hyperplanes may separate the data points; the SVM classifier identifies the best hyperplane, the one that produces the largest separation (margin) between the classes, and uses it as the decision function to classify the data [10]. SVM classifiers are categorized into linear and non-linear classifiers.
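The maximum-margin idea can be illustrated with a linear SVM trained by Pegasos-style stochastic subgradient descent on the regularized hinge loss. This is a plain-Python sketch on hypothetical, already standardized two-feature data (walking = -1, running = +1), not the solver used in the paper; in practice a library implementation such as scikit-learn's `sklearn.svm.SVC` would normally be used, and a non-linear SVM would replace the dot product with a kernel.

```python
def train_linear_svm(X, y, lam=0.01, epochs=200):
    """Pegasos-style training of a linear SVM.

    X: list of feature vectors; y: labels in {-1, +1}.
    A constant 1.0 is appended to every sample so the last weight acts as a
    bias term (it is regularized too, a simplification in this sketch).
    """
    data = [(list(x) + [1.0], label) for x, label in zip(X, y)]
    w = [0.0] * len(data[0][0])
    t = 0
    for _ in range(epochs):
        for x, label in data:  # fixed order keeps the sketch deterministic
            t += 1
            eta = 1.0 / (lam * t)  # decreasing step size 1 / (lambda * t)
            margin = label * sum(wi * xi for wi, xi in zip(w, x))
            w = [(1.0 - eta * lam) * wi for wi in w]  # regularization shrink
            if margin < 1.0:  # hinge loss active: move towards the sample
                w = [wi + eta * label * xi for wi, xi in zip(w, x)]
    return w


def predict(w, x):
    """Sign of the decision function w . [x, 1]."""
    score = sum(wi * xi for wi, xi in zip(w, list(x) + [1.0]))
    return 1 if score >= 0.0 else -1


# Hypothetical standardized features (e.g. mean and spread of acceleration).
X_train = [(-1.0, -0.8), (-1.2, -1.0), (-0.9, -1.1),   # walking
           (1.0, 0.9), (1.1, 1.2), (0.8, 1.0)]         # running
y_train = [-1, -1, -1, 1, 1, 1]

w = train_linear_svm(X_train, y_train)
print([predict(w, x) for x in X_train])  # [-1, -1, -1, 1, 1, 1]
```

Samples with margin below 1 keep pulling the hyperplane, which is how the hinge loss pushes the classifier towards a wide margin.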

3.2 Decision Tree

The decision tree is a tree-based algorithm that is, by definition, a supervised learning technique, although it is sometimes also described as an unsupervised technique because it helps to cluster the data. It uses a tree-like model of decisions [11]. A decision tree is drawn top-down, with its root at the top. The model and its outputs are easy to visualize and interpret. Non-linear relationships between parameters do not degrade the accuracy of the result, which is one of the algorithm's significant features.

Decision-tree learning is the construction of a decision tree from class-labelled training tuples. A decision tree is a flowchart-like structure in which each internal (non-leaf) node denotes a test on an attribute, each branch represents an outcome of the test, and each leaf (terminal) node holds a class label. Tree models in which the target variable takes a discrete set of values are called classification trees; in these structures, the leaves represent class labels, and the branches represent conjunctions of features that lead to those labels. The root node (decision node) is the topmost node of the tree. Internal nodes (chance nodes) represent the outcomes available at that point; the top edge of a node connects to its parent node and the bottom edge to its child nodes. A leaf node (final node) holds the resulting value. Branches are paths from the root node through internal nodes, and they define the classification decision rules.

Splitting is a significant component of the decision tree algorithm: a parent node is split into child nodes that are purer with respect to the target variable, and this recursive process continues until a stopping criterion is met [12]. Measures such as entropy, information gain, and the Gini index are used as splitting criteria. Stopping rules must be considered to avoid overfitting, which would make the model unreliable, and the correct input variables must be identified before training and splitting into child nodes (Fig. 4), so that the model does not lack robustness. Overfitting can be prevented by constraining the minimum number of records in a leaf node, the minimum number of records in a node before splitting, and the maximum depth of any leaf node from the root.

Pruning is an alternative to stopping rules. In this technique, the model is optimized by removing nodes that carry little useful information. Pruning is further classified into two types: pre-pruning and post-pruning. In pre-pruning, the tree-growing procedure is adjusted so that redundant nodes are not generated. Post-pruning takes place after the decision tree is built and removes redundant branches to improve accuracy when the algorithm is applied to a validation dataset [13].
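The splitting and stopping rules described above can be sketched as a small recursive classification tree. At each node it chooses the feature and threshold that minimize the size-weighted Gini impurity of the children, and it stops at pure nodes, at a maximum depth, or when no split leaves at least `min_leaf` records in each child. Pruning is not implemented, and the two features and their values are hypothetical (walking = 0, running = 1).

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(X, y, min_leaf):
    """Return the (feature, threshold) with the lowest weighted child Gini, or None.
    min_leaf must be at least 1 so that no child is empty."""
    best, best_score = None, gini(y)
    for f in range(len(X[0])):
        for thr in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= thr]
            right = [lab for row, lab in zip(X, y) if row[f] > thr]
            if len(left) < min_leaf or len(right) < min_leaf:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if score < best_score:
                best, best_score = (f, thr), score
    return best


def build_tree(X, y, depth=0, max_depth=3, min_leaf=1):
    """Leaves are class labels; internal nodes are (feature, threshold, left, right)."""
    majority = Counter(y).most_common(1)[0][0]
    if gini(y) == 0.0 or depth >= max_depth:  # stopping rules
        return majority
    split = best_split(X, y, min_leaf)
    if split is None:
        return majority
    f, thr = split
    li = [i for i, row in enumerate(X) if row[f] <= thr]
    ri = [i for i, row in enumerate(X) if row[f] > thr]
    return (f, thr,
            build_tree([X[i] for i in li], [y[i] for i in li], depth + 1, max_depth, min_leaf),
            build_tree([X[i] for i in ri], [y[i] for i in ri], depth + 1, max_depth, min_leaf))


def predict_tree(node, x):
    while isinstance(node, tuple):
        f, thr, left, right = node
        node = left if x[f] <= thr else right
    return node


# Hypothetical window features: [spread of acceleration, mean rotation rate].
X = [[0.5, 0.2], [0.6, 0.3], [0.4, 0.25],    # walking (0)
     [2.0, 0.9], [2.2, 1.1], [1.9, 1.0]]     # running (1)
y = [0, 0, 0, 1, 1, 1]
tree = build_tree(X, y, max_depth=3, min_leaf=1)
print(tree)  # (0, 0.6, 0, 1)
print(predict_tree(tree, [0.55, 0.2]), predict_tree(tree, [2.1, 1.0]))  # 0 1
```

On this toy data a single split on the first feature already yields two pure leaves, so the depth limit is never reached.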

3.3 Random Forest

As its name implies, the random forest consists of a large number of individual decision trees that operate as an ensemble. Each tree in the random forest outputs a class prediction, and the class with the most votes becomes the model's prediction [3]. The key to this classifier's success is the low correlation between the individual trees. The random forest classifier is an advancement of the decision tree and is implemented in two steps: first, a forest is created from random samples of the data; second, the predictions of the trees created in the first step are combined. As the name suggests, random data are selected from the dataset, and a decision tree is constructed for each selected set. Each tree yields a prediction, a voting mechanism is applied, and the prediction with the maximum number of votes is taken as the final prediction; the remaining (redundant) predictions are discarded in the voting process. N samples are drawn at random to create each tree, and the trees together form the forest. For K input variables, a value k << K is chosen, and at each node k variables are selected at random (Fig. 5); k remains constant as the forest grows [12]. This algorithm is generally more accurate than a single decision tree, and it reduces overfitting, which is one of the significant disadvantages of using a decision tree.
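The two steps (growing trees on random samples, then voting) can be sketched in plain Python as follows. Each tree is grown on a bootstrap sample of size N drawn with replacement (the standard choice in Breiman's formulation, assumed here), only k randomly chosen features out of K are examined at each split, and the forest predicts by majority vote. The data and the settings `n_trees=25`, `k=2`, and `max_depth=4` are hypothetical; scikit-learn's `RandomForestClassifier` (`n_estimators`, `max_features`) is a production implementation of the same idea.

```python
import random
from collections import Counter


def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def grow_tree(X, y, k, rng, depth=0, max_depth=4):
    """Grow a Gini tree, examining only k random features at each split."""
    majority = Counter(y).most_common(1)[0][0]
    if gini(y) == 0.0 or depth >= max_depth:
        return majority
    best, best_score = None, gini(y)
    for f in rng.sample(range(len(X[0])), k):  # random feature subset, k << K
        for thr in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= thr]
            right = [lab for row, lab in zip(X, y) if row[f] > thr]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if score < best_score:
                best, best_score = (f, thr), score
    if best is None:
        return majority
    f, thr = best
    li = [i for i, row in enumerate(X) if row[f] <= thr]
    ri = [i for i, row in enumerate(X) if row[f] > thr]
    return (f, thr,
            grow_tree([X[i] for i in li], [y[i] for i in li], k, rng, depth + 1, max_depth),
            grow_tree([X[i] for i in ri], [y[i] for i in ri], k, rng, depth + 1, max_depth))


def predict_tree(node, x):
    while isinstance(node, tuple):
        f, thr, left, right = node
        node = left if x[f] <= thr else right
    return node


def fit_forest(X, y, n_trees=25, k=2, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]  # bootstrap: N draws with replacement
        forest.append(grow_tree([X[i] for i in idx], [y[i] for i in idx], k, rng))
    return forest


def predict_forest(forest, x):
    votes = Counter(predict_tree(tree, x) for tree in forest)  # one vote per tree
    return votes.most_common(1)[0][0]


# Hypothetical 4-feature windows (K = 4): walking (0) and running (1).
X = [[1.0, 0.2, 5.0, 0.1], [1.1, 0.3, 5.2, 0.2], [0.9, 0.25, 4.9, 0.15], [1.05, 0.22, 5.1, 0.12],
     [3.0, 1.2, 8.0, 0.9], [3.2, 1.1, 8.3, 1.0], [2.9, 1.3, 7.9, 0.95], [3.1, 1.15, 8.1, 1.1]]
y = [0, 0, 0, 0, 1, 1, 1, 1]
forest = fit_forest(X, y, n_trees=25, k=2, seed=0)
print(predict_forest(forest, [0.8, 0.1, 4.5, 0.05]),
      predict_forest(forest, [3.05, 1.2, 8.2, 1.0]))  # 0 1
```

Bootstrapping and per-split feature sampling are what decorrelate the trees; averaging their votes is what reduces the variance of a single tree.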

4 Accuracy Computations

The performance of the proposed machine learning algorithms was evaluated using the Gini index and the confusion matrix.

4.1 Gini Index

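The Gini index is determined by subtracting the sum of the squared class probabilities from one [13]. Entropy, which underlies information gain, is obtained by multiplying the probability of each class by the base-2 logarithm of that probability, summing over the classes, and negating the result. Information gain tends to favour attributes with many distinct values, which produce many small partitions. The Gini index lies between 0 and 1 (its maximum for C equally likely classes is 1 − 1/C), and it is faster to compute than entropy because it requires no logarithm [3].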

4.1.1 Computations Involved

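$$\text{Gini} = 1 - \sum_{i=1}^{C} p_i^{2}$$

$$\text{Entropy} = -\sum_{i=1}^{C} p_i \log_2 p_i$$

where $p_i$ indicates the probability of class $i$ and $C$ is the number of classes.

Entropy, like the Gini index, measures node impurity and thereby determines how the decision tree chooses to split the data.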
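The impurity measures defined in this section translate directly into code. The sketch below computes the Gini index and entropy of a node from its labels, and the information gain of a candidate split (parent entropy minus the size-weighted entropy of the children); the helper names are illustrative.

```python
import math
from collections import Counter


def class_probabilities(labels):
    n = len(labels)
    return [count / n for count in Counter(labels).values()]


def gini_index(labels):
    """Gini = 1 - sum_i p_i^2."""
    return 1.0 - sum(p * p for p in class_probabilities(labels))


def entropy(labels):
    """Entropy = -sum_i p_i log2 p_i (only classes present, so p_i > 0)."""
    return sum(-p * math.log2(p) for p in class_probabilities(labels))


def information_gain(parent, children):
    """Parent entropy minus the size-weighted entropy of the child nodes."""
    n = len(parent)
    return entropy(parent) - sum(len(c) / n * entropy(c) for c in children)


node = [0] * 5 + [1] * 5  # 5 walking (0) and 5 running (1) windows
print(gini_index(node), entropy(node))             # 0.5 1.0
print(information_gain(node, [[0] * 5, [1] * 5]))  # 1.0
```

For a balanced two-class node the Gini index reaches its maximum of 0.5 (that is, 1 − 1/C) while entropy reaches 1 bit, and a split into two pure children removes all of it.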

4.2 Confusion Matrix

For a binary problem, the confusion matrix has four values: true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN). Four essential metrics are calculated from this matrix: accuracy, precision, recall, and F1-score [3].
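A minimal sketch of these metrics for the walk (0) / run (1) labelling used in Sect. 5, treating running as the positive class (an assumption): accuracy = (TP + TN)/(TP + TN + FP + FN), precision = TP/(TP + FP), recall = TP/(TP + FN), and F1 is the harmonic mean of precision and recall. The labels below are hypothetical.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (TP, TN, FP, FN) for a binary problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return tp, tn, fp, fn


def scores(tp, tn, fp, fn):
    """Accuracy, precision, recall and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return accuracy, precision, recall, f1


y_true = [1, 1, 1, 1, 0, 0, 0, 0]  # hypothetical labels: 1 = running, 0 = walking
y_pred = [1, 1, 1, 0, 0, 0, 0, 1]
counts = confusion_counts(y_true, y_pred)
print(counts)           # (3, 3, 1, 1)
print(scores(*counts))  # (0.75, 0.75, 0.75, 0.75)
```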

5 Results and Discussions

The raw sensor data from the accelerometer and gyroscope are plotted against time in Fig. 6, and the change in the type of motion between running and walking in our test data can be seen in Fig. 7. This change in behaviour was used to classify the smartphone sensor data points.

The accelerometer and gyroscope data individually depict the change in human behaviour (Fig. 8). As the plots of each sensor's individual axes show (Fig. 9), the raw data are sufficient to recognize the type of human motion. In general, such unprocessed data can be used, with human involvement, to identify features and to differentiate behaviours such as walking, running, climbing upstairs, going downstairs, and standing still. To distinguish the two activities here, we denote walking as 0 and running as 1.

Figures 10 and 11 show the accuracy of activity recognition between walking and running when the decision tree algorithm is used with a 20% training set; the point of change in activity is identified for each sensor (Fig. 11). Similarly, Figs. 12 and 13 show the accuracy of activity recognition between walking and running with a 20% training set using the random forest algorithm. Figures 13 and 14 show the accuracy of activity recognition between walking and running when the SVM algorithm is used with a 20% training set (Fig. 15).

We tested two pairs of activities: running versus walking, and climbing up versus down the stairs. The training-set fraction was set to four values, 20%, 40%, 60%, and 80%, for the accelerometer and gyroscope X, Y, Z data with each of the SVM, decision tree, and random forest algorithms. Table 3 shows the accuracy results for the run-or-walk data. Among these algorithms, SVM is the least accurate, and the accuracy of the decision tree algorithm is slightly better than that of the random forest algorithm. Random forest reduces the variance component of the error more than the bias component; hence, a single decision tree may perform better on a given training dataset, whereas random forest is often better on a random collection of validation data. As Table 3 shows, the decision tree results become more accurate as more data are provided to the algorithm, except in the first case, which we attribute to the large quantity of test data available. The highest accuracies of 83.79%, 90.016%, and 89.19% are achieved for the gyroscope, and 84.39%, 95.48%, and 94.59% for the accelerometer run-or-walk data, using the SVM, decision tree, and random forest algorithms, respectively. The total accuracies are 87.56%, 95.58%, and 94.43% for SVM, decision tree, and random forest, respectively. Climbing up and getting down the stairs are very similar activities (Table 4).
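The protocol of varying the training fraction (20%, 40%, 60%, 80%) and testing on the remaining samples can be sketched as follows. The paper does not state whether the data were shuffled or stratified before splitting, so the seeded random shuffle here is an assumption, as are the placeholder data; with scikit-learn, `train_test_split(X, y, train_size=frac)` performs a comparable split.

```python
import random


def split_by_fraction(X, y, train_fraction, seed=0):
    """Shuffle indices, keep train_fraction of them for training, rest for testing."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    n_train = round(train_fraction * len(X))
    train, test = idx[:n_train], idx[n_train:]
    return ([X[i] for i in train], [y[i] for i in train],
            [X[i] for i in test], [y[i] for i in test])


X = [[float(i)] for i in range(1000)]  # placeholder feature vectors
y = [i % 2 for i in range(1000)]       # placeholder labels (0 = walk, 1 = run)
for frac in (0.2, 0.4, 0.6, 0.8):
    X_tr, y_tr, X_te, y_te = split_by_fraction(X, y, frac)
    print(frac, len(X_tr), len(X_te))
# 0.2 200 800 / 0.4 400 600 / 0.6 600 400 / 0.8 800 200
```

Each classifier would then be fitted on `X_tr, y_tr` and scored on `X_te, y_te` for every fraction; note that at 20% training the test set is four times larger than the training set.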

For these activities, the highest accuracies are 65.21%, 67.11%, and 67.25% for the gyroscope and 57.13%, 59.51%, and 60.25% for the accelerometer, using SVM, decision tree, and random forest, respectively. The prediction accuracy of the random forest using only accelerometer data grows as the training set increases, but this does not hold in the other cases.

6 Conclusion

The results indicate that the accuracies of the SVM, decision tree, and random forest algorithms differ by modest margins for running or walking, as the features do not change much and the data share many similar features. In contrast, climbing up and down produces many spikes in the dataset, which makes accurate classification challenging. In this case, the random forest algorithm proved to be the most accurate, and its accuracy increases with the training-to-testing ratio, which indicates that the algorithm performs better with more training. The accuracy of the algorithms is analysed using the Gini index. The test-set predictions follow the actual sensor data more closely for the random forest and decision tree methods than for the SVM method, and random forest is often better on a random collection of validation data, which is generally the case in practice. As walking and running were easy to differentiate, both tree-based algorithms (DT and RF) proved robust; for climbing up or down, as the data change unevenly, the differentiation was considerably less accurate, and we believe that more training on such datasets can improve the prediction accuracy. The accuracy of an algorithm also depends on the type of activity under consideration. In the future, more features and larger training datasets will be investigated.

References

Abu-Mahfouz, A. M., & Hancke, G. P. (2018). Localised information fusion techniques for location discovery in wireless sensor networks. International Journal of Sensor Networks, 26, 12–25.

Ali, J., Khan, R., Ahmad, N., & Maqsood, I. (2012). Random forests and decision trees. International Journal of Computer Science Issues (IJCSI), 9, 272.

Arize, A. C., Bakarezos, P., Kasibhatla, K. M., Malindretos, J., & Panayides, A. (2014). The Gini coefficient: Decomposition and overlapping. Journal of Advanced Studies in Finance, 5, 47.

Durgesh, K. S., & Lekha, B. (2010). Data classification using support vector machine. Journal of Theoretical and Applied Information Technology, 12, 1–7.

El-Naqa, I., Yang, Y., Wernick, M. N., Galatsanos, N. P., & Nishikawa, R. M. (2002). A support vector machine approach for detection of microcalcifications. IEEE Transactions on Medical Imaging, 21, 1552–1563.

Frank, K., Vera-Nadales, M. J., Robertson, P., & Angermann, M. (2010). Reliable real-time recognition of motion related human activities using MEMS inertial sensors. In Proceedings of the 23rd international technical meeting of the satellite division of the institute of navigation (ION GNSS 2010), pp. 2919–2932.

Géron, A. (2019). Hands-on machine learning with Scikit-Learn, Keras, and TensorFlow: Concepts, tools, and techniques to build intelligent systems. O'Reilly Media.

Istepanian, R. S. H. (1999). Telemedicine in the United Kingdom: Current status and future prospects. IEEE Transactions on Information Technology in Biomedicine, 3, 158–159.

Luštrek, M., & Kaluža, B. (2009). Fall detection and activity recognition with machine learning. Informatica, 33.

Pan, J., Li, S., & Xu, Z. (2012). Security mechanism for a wireless-sensor-network-based healthcare monitoring system. IET Communications, 6, 3274–3280.

Patel, N., & Upadhyay, S. (2012). Study of various decision tree pruning methods with their empirical comparison in WEKA. International Journal of Computer Applications, 60.

Pei, L., Guinness, R., Chen, R., Liu, J., Kuusniemi, H., Chen, Y., et al. (2013). Human behavior cognition using smartphone sensors. Sensors, 13, 1402–1424.

Susi, M., Borio, D., & Lachapelle, G. (2011). Accelerometer signal features and classification algorithms for positioning applications. In Proceedings of the 2011 international technical meeting of the institute of navigation, pp. 158–169.


About this article

Cite this article

Sri Harsha, N.C., Anudeep, Y.G.V.S., Vikash, K. et al. Performance Analysis of Machine Learning Algorithms for Smartphone-Based Human Activity Recognition. Wireless Pers Commun 121, 381–398 (2021). https://doi.org/10.1007/s11277-021-08641-7


DOI: https://doi.org/10.1007/s11277-021-08641-7