Abstract

In recent years, there has been a great increase in usage of cloud data centers which leads the energy consumption growth by about 10% a year continuously. Further, due to the increase in temperature of cloud data center, the hardware failure rate increases and maintenance cost is also increased. Hotspots and an irregular temperature distribution are the major research issues. Therefore, accurate and reliable thermal management of the data center is a challenging task. In order to select appropriate and efficient thermal management approach, this survey presents a state of art review on the development in this field, discusses the classification of thermal management approaches and thermal mapping models. This review also reveals the thermal management and heat management strategies at data center level to make the data centre more energy efficient. Further, it discusses various thermal aware task scheduling strategies. In thermal management, computing equipments are scheduled with the objective to minimize the hotspot and cooling cost. Finally, evaluation metrics for measuring thermal efficiency and open research issues in this field are provided. Both researchers and academicians find this review useful since it presents the significant research in the field of thermal management.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Cloud computing provisions computing capacities including networks, storage, hardware and software as services under a usage based payment model [1,2,3]. Cloud computing is defined as “A model for dynamic provisioning of computing services that employ virtual machine technologies for consolidation [4]”. Thus, power consumption is the major concern in cloud data centres. From 2007 to 2030, the electricity consumption is going to increase 76% [5]. As per Gartner report [6], a data centre is predicted to take energy equivalent to 25,000 households. Further, the total energy cost for data centre was 11.5 billion in 2010 and energy bills increases in every five years by Mckinsey report [7]. Natural Resources Defence Council (NRDC) in August 2014, published a report to outline the criteria of data centres efficiency [8] and the results show that by 2020, data centres in United States will consumes 140 billion KWH electricity which is equivalent to generation of 50 power plants. Their analysis in collaboration with Anthesis represents that approximately 30% of servers are underutilized or not required [8] but consuming power. The server which is in idle state approximately consumes 50% of the total power [9]. Moreover, in data centres, the effective resource usage is only 20–30% [10]. Therefore, idle/underutilized resources result in significant energy wastage.

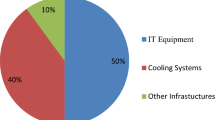

Data centres are highly energy intensive than normal office environment. In a data centre, energy consumption is mainly occurred due to computing and cooling systems. Energy consumption by the computing is attributed to the IT infrastructure. The energy consumption of computing servers is dependent on the workload and resource usage [11]. Further, due to the large use of computing resources in the data centre, devices produce a massive amount of heat which increases the thermal signature. Normally, to maintain temperature within limit/threshold, CRAC units is used which further increases the power consumption. Therefore, cooling facilities causes significant energy usage that is near to 50% of total energy cost. However, if temperature is not maintained, reliability of hardware equipment is decreased and due to this, QoS is breached. Thus, thermal management is a challenging issue due to the scale and complexity of cloud data centres.

Thermal awareness is the process of temperature distribution in a data centre and it is important to enhance the energy as well as cooling efficiency and decreases the probability of server failure. Temperature and power has a direct relationship to each other so, thermal management techniques are of great importance in designing energy efficient data centres [12]. Increased temperature always leads to higher cooling cost; increases the leakage current which enhances power consumption of servers. Further, overheating of components causes thermal cycling that increases the hardware failures and due to this, total cost of ownership is increased. Therefore, it is mandatory to maintain the temperature for reducing the energy cost and maximizing reliability [13]. Overall, thermal management is of great concern for minimizing energy consumption and optimizing the performance of data centres.

Over the last few years, we can find much work being done on thermal management. Recently, various survey articles have been proposed which is relevant to thermal management system as shown in Table 1. Kong et al. [14] summarizes recent thermal management approaches for microprocessors that affect on micro architecture level. By using a hierarchical organisation, they provided a detailed overview of thermal management techniques. They characterize, classify, and describe thermal management techniques for temperature monitoring, floor planning, micro architectural techniques, cooling techniques, and thermal reliability/security. Sharma et al. [15] provided the taxonomy of reliability/energy efficient approaches and shows their trade-off in the cloud environment. They characterize and classify failures of resources, fault tolerance and energy efficient techniques. However, they do not concentrate on the cooling management approaches to lessen the energy consumption in data centres. Lee et al. [16] highlights the advantage of proactive heat imbalance approach for thermal management against reactive temperature-based schemes. Sheikh et al. [17] provides the overview of thermal-aware task scheduling techniques for multi-core systems. They focused on managing temperature through task scheduling and provide a joint minimization of performance and temperature considering constraints. However, our survey provides the following:

-

Identifies the thermal management approaches and mapping models to deal with the hotspot.

-

Identifies the important aspects of thermal management techniques in the scope of the data centres.

-

Classifies the thermal management approaches on the basis of server power, heat flow and different strategies used to minimize the heat generation so that hotspot occurrence are decreased.

-

Specified the evaluation metrics to measure the thermal efficiency of the system and suggested the open research issues for the researchers and academicians to work in this field.

This paper consists of different sections. Section 2 describes the approaches for thermal management. Section 3 is about proactive and reactive thermal management techniques. Section 4 is related to thermal mapping models. Section 5 describes the thermal management strategies at data centre level. Further, Sect. 6 presents the evaluation metrics for measuring the thermal efficiency and Sect. 7 presents the open research issues for the researchers working in this field. Finally, Sect. 8 provides the conclusion.

1.1 Methodology of Paper Selection

1.1.1 Sources for Searching Articles

We have searched the articles under heading “Thermal management in cloud data centers” from the various publishing houses such as Google Scholar, Elsevier, Science Direct, Taylor and Francis, Web of science databases from 2005 to 2021.

1.1.2 Search Strategy

The search keywords are helpful in refining the search and selection process. Research papers which are written in the English language are considered. Firstly, 2313 articles related to “Resource Management in cloud data centers” topic were available in Web of Science on dated 24 November 2020. Figure 1a represents a pie chart that shows the statistics with title “Resource management in cloud data centers”. After that, search was to be confined by considering the targeted topic “Thermal management in cloud data centers” (search within first topic)”. Then 179 articles are provided on the basis of specific keywords which are related to our survey. Keywords such as Cloud Computing (32), Green computing (28), Energy Efficiency (26), Data Centers (24), Cooling (18), Scheduling (16), Power Management (13), Temperature Control (13), and Quality of Service (9) are considered. Figure 1b represents a pie chart that shows the statistics with title “Thermal management in cloud data centers”. Finally, we have selected 112 papers for our review from these on the basis of research contributions.

1.1.3 Inclusion/Exclusion Criteria of Research Papers

The most important research articles are chosen after checking the title, abstract and full content of the research papers which is done to ensure that the specified results are related to the current field. The inclusion and exclusion criteria of papers are shown in Fig. 2.

1.1.4 Research Issues and Motivation

A number of research topics\issues had been framed to plan the review. Table 2 presents the important issues involved in relation to the sub topics of the review and their motivation.

2 Thermal Management Approaches

A number of researches have been done in the area of thermal management to ensure efficient operation of cooling system. To deal with the hotspot, cooling equipments must ensure that data centre temperature must be within the threshold value. Overall, thermal management can be categorized into two approaches:

2.1 Mechanical Based

Mechanical based approach mainly focused on how to circulate cool air effectively by having efficient cooling infrastructure. It basically studies the design of data centre, air flow models and cooling system design. Design of data centre plays a main role in thermal management. A data centre is normally organised with the hot-aisle and cold-aisle structure and use raised floor tile to circulate cool air as shown in Fig. 3. Computing devices placed on a raised floor tiles, organised into number of rows in order to divide intake of cold air from cold aisles and hot air exhaust from hot aisles. Cooling systems used chilled CRAC unit that supply a cool air to maintain all the servers below a redline temperature/threshold. The cool air enters from the front side of the rack and pick up the heat and removed from the data centre by the air conditioner intakes. In paper [18], the authors provide a methodology of air flow management for perforated tiles and raised floor in a data centre.

2.2 Software Based

Software based design mainly concentrated on how to distribute or migrate jobs so that cooling cost is minimized. In paper [19], the authors proposed energy saving techniques by distributing the workload to energy efficient nodes. Further, in paper [11], authors used the power budget concept that is the product of temperature and power. They developed two scheduling algorithms to distribute workload fairly and effectively on the basis of power budget. In paper [20], the authors proposed the power aware scheduling algorithm by focusing on the fact that minimization of the total energy consumption is not enough but minimizing energy at different providers is must. The solution reduces the energy consumption by different providers while guaranteeing the QoS constraints.

3 Proactive Versus Reactive Thermal Management

Thermal management techniques attempt to maintain thermal profile and control data centre cooling equipments to maintain the energy efficiency. Here, we classify the techniques for thermal management as proactive and reactive thermal management as shown in Fig. 4.

3.1 Proactive Thermal Management

In proactive thermal management, there is a requirement of prediction and estimation of temperature in advance. Therefore, a lot of training is required for temperature estimation and thermal profiling. Proactive thermal management is predictive in nature. It estimates the heat generation in advance and manage the CRAC unit accordingly. The main goal of proactive thermal management is to minimize the thermal anomalies and at the same instant minimizing the power consumption. It minimizes the risk of damaging hardware components because different actions like adjusting fan speed, adjusting air compressor cycle, placing workloads are taken in advance as per the prediction. Due to this, risk of overheating and excessive cooling is prevented. Proactive thermal management solutions use measurements and predictive models to avoid undesired thermal behaviour [16].

In research paper [21], the authors considered HPC applications that used proactive cross layer mechanism for maximizing resource utilization, minimizing energy consumption and improve heat extraction. They used thermal aware virtual machine consolidation for achieving a highest coefficient of performance (COP) of cooling. To estimate the future temperature of the data centre in advance, there are various methods described as follows.

3.1.1 Application Basis

Comprehensive knowledge is required about the behaviour of the application workload. Depending on the application behaviour, its resource usage is predicted i.e. CPU, memory, or I/O requirements. If the usage pattern of workload and specification of the system is known in advance then estimation of heat dissipation is very easy [16]. The resource usage pattern is obtained by analysing the selected workload behaviour over period of time that can be used to calculate power utilization and in turn the heat generation.

3.1.2 By Collecting Databases

In proactive model, various parameters like temperature estimation, heat imbalance etc. are stored in databases to control the cooling system in advance before damaging the hardware component of the system due to heat imbalance. This information is collected from experimental log history and stored in the database. In future, that database is beneficial to adjust the parameters like fan speed, workload placement etc.

3.1.3 VM Placement

Virtual machine (VM) placement is the method of choosing appropriate physical host machine for a virtual machine [16]. Suitable host selection is essential to increase energy efficiency, quality of service constraints and proper resource utilization in cloud systems. Hypervisor or virtual machine monitor control all the VM operations. However, VM placement is different from one cloud service provider to another. The placement techniques for VM consolidation have two major goals i.e. power saving and quality of service (QoS) [22,23,24]. In power saving approach [25, 26], the main aim is to obtain a virtual machine to physical machine mapping that makes the system energy-efficient with proper resource utilization. However, in later case, proper VM-PM mapping is required in order to achieve QoS requirement of application users. VM placement approaches can be classified in two ways as:

-

Static VM placement is the placement technique in which the VMs mapping is done statically before actual scheduling and is fixed throughout the period of time. There is no need to recalculate mapping further.

-

Dynamic VM placement is the placement technique in which the placement is done dynamically on the basis of instantaneous system load. Dynamic VM placement further can be classified as reactive and proactive:

-

(a)

Reactive VM placement: In this VM placement, initial placement changes when the system moves to some undesired condition. These changes occur because of the issues related to system performance, maintenance, application workload or other service level constraints.

-

(b)

Proactive VM placement: It changes the selected host machine for the particular VM as per the initial placement before the system actually reaches the specific condition [19].

-

(a)

3.1.4 Resource Provisioning

Resource provisioning techniques observe workload on the number of VMs and accommodate them on the set of resources used by VMs. In paper [27], the authors proposed a dynamic and adaptive approach for adjustment of resources like CPU and memory to each VM on a server to efficiently use the available resources.

3.2 Reactive Thermal Management

Reactive thermal management takes a corrective action when the temperature exceeds the threshold temperature. In reactive approach, different parameters like compressor’s duty cycle and changing the speed of fan are the way to control the temperature. Both can be changed according to user’s specification. In this, users do not have any previous knowledge regarding how much heat is going to generate in future. It just changes the compressor’s duty cycle and speed of fan to adjust the temperature when needed [16]. In paper [28], the authors considered various reactive thermal management approaches considering the energy efficiency and performance trade-off for high performance computing (HPC) workloads. Virtual migration is the important technique to lessen hotspot in data centres and their focus is to lessen the energy consumption, minimizing the usage of data centre resources and the undesired thermal behaviour to obtain QoS. In paper [29], the authors considered how to distribute tasks to the servers using XINT algorithm with the aim to minimize the inlet temperature using task assignment. Redline temperature is considered to minimize the cooling requirement and maximize supply temperature. Computational fluid dynamics (CFD) simulation was carried out to check the temperature variations after workload distribution. Further, the concept of thermal set points was introduced in paper [30] to obtain high performance with low power cost. HVAC power was used to provide cooling zone. Thermal-aware power optimisation in data centers (TAPO-DC) measure the high and low chillier set points based on its utilization levels and achieves power reduction. TAPO-server mechanism was used to adjust thermal set points, leakage power and server fan. Through IBM power 750 servers, 5.4% of total server power reduction is achieved. In paper [31], the authors focused on efficient load provisioning with varied cooling/inlet air temperatures for a cluster of servers. Their aim is to enhance energy efficiency by limiting the outlet air temperature with in specified range. Here, MPC method was used to achieve optimal solution. They used a power model based on server on and off status. They developed control architecture for a cluster and MPC optimizer that assigns load to active server only as turned off server consumes no power. Base approach keeps all servers busy all the time and distribute load to active servers while minimum approach distributes load to minimum number of servers.

Reactive thermal management approaches can be further classified as follows.

3.2.1 Hotspot Management

Thermal imbalance always interferes with efficient cooling operations. Normally, due to the thermal effects, heat recirculation is extracted in between the servers that can create local hot spots. Hotspot creates a probability of redlining server by increasing the maximum temperature; it creates a negative impact on reliability and degrades performance. Thus, hotspot management is essential for achieving the energy efficiency and reliability. In papers [32, 33], job placement algorithms were proposed that consider the thermal behaviour of a data centre to avoid hot spots. These algorithms do not consider the cooling behaviour; they only concentrate the thermal effects in the data centre.

3.2.2 VM Migration

VM migration is the method of VM movement from one host to another. When VM wants some resources as per the requirement and these resources are not available in physical machines, then migrate the VM to another physical machine (PM). The other reason of VM migration is to provide superior layout of the physical machines and data centres. Virtual machine migration is the important characteristic in virtualization which allows the movement of virtual machines from one physical host to another. The VM migration process has four stages. Firstly, select the heavily-loaded/lightly-loaded physical machines then choose the virtual machines to migrate. Afterwards, a PM is chosen to host the VM that meet all important needs like CPU, storage, and bandwidth. Finally, virtual machine placement process is performed where the selected virtual machines are migrated to the target PM.

Further, VM migration is divided into two phases: offline migration and online migration. In offline migration, firstly stop the currently running virtual machines and then perform migration of resources status like memory and current CPU status. Then restart the VM at the new physical server. When migration of the virtual machines is done, the services which are being provided during migration will be terminated. While in the live or online migration, the services continue their running process during the migration without any disturbance [34]. There are two important parameters to calculate the live migration performance [35]. One is migration time (Tmig) i.e. total time between the starting time of a migration and restart the VM at target PM. This migration time is the total time taken to perform the migration of the VM memory. When the VM will stop or slow down their working due to the migration, that time is known as downtime (Tdown). The main motivation is to minimize the migration time and downtime [34]. Online migration consists of two mechanisms: the pre-copy [36] and the post-copy migration [37]. Pre copy [36, 38] migration can be performed in different stages: firstly, copy the VM’s memory from origin to the target iteratively. The original VM will continue running during VM memory copy process. Secondly, system will stop their working from the origin VM and then copy the current state of CPU and dirty memory remains from the origin VM to target VM known as stop and copy stage. Finally, restart the VM on target machine known as restart stage.

Similarly, the post-copy migration [39, 40] also includes three stages: firstly, quit the original VM, then copy the current CPU status to the target VM. Secondly, resume the target VM. Finally, copy the VM memory as per the requirement. When the virtual machine starts again, the memory of VM is blank. If any VM attempts to access the memory page that has yet to be copied, then the respective memory page can be fetched from the origin VM. The migration time is maximum in case of pre-copy migration and the downtime is maximum in case of post-copy migration. However, Pre-copy requires more bandwidth as they transfer the memory pages from origin to target VM iteratively. In paper [41], the authors developed an approach to reduce power usage by identifying memory page characteristics. In papers [42, 43], authors deal with the energy savings by performing migration of the workload to the data centres where less electricity cost occur.

3.2.3 Dynamic Voltage and Frequency Scaling (DVFS)

Dynamic Voltage and Frequency Scaling (DVFS) is an efficient technique to reduce CPU power dissipation. In DVFS, operating system dynamically adjusts the frequency/voltage of processor at run time in order to achieve lower power consumption. It was also used to minimize the energy cost and dealing with the slack time of parallel tasks [44]. In paper [44], the authors proposed a two time scale control method (TTSC) to minimize power consumption of data centre using DVFS enabled processors for optimizing frequency settings and task assignments. They divided the optimization problem into two sub-problems i.e. DVFS and IT workload assignment, cooling system management. To evaluate the proposed model of thermal dynamics, they compared model with the CFD simulation. The main concern of underutilization and over-provisioning of the servers was emphasised in paper [45]. To avoid excessive over-provisioning, the authors proposed the peak power budget management among servers using DVFS and memory/disk scaling. In paper [46], the authors proposed scheduling algorithm for VMs using DVFS in a cluster to minimize the power consumption. In paper [47], the authors apply DVFS technique to memory management which achieves 14% of energy savings. In paper [48], the authors investigate the benefits of DVFS for the energy-efficiency of flash-based storage devices. They developed a heuristic algorithm and evaluate its efficacy considering performance and energy consumption by saving energy up to 20–30%. Authors in [49] proposed an algorithm considering performance constraints to avoid temperature threshold value using DVFS technique. The algorithm schedules the tasks to different number of cores and calculates the frequency at run time to ensure the system’s performance. Thus, DVFS is an effective technique for energy-aware problems.

3.2.4 VMM Configuration

VMM configuration is an alternate technique to VM migration and DVFS to deal with the hotspot. Xen hypervisor provides control mechanism considering the characteristics of workload for assigning it to particular CPU and memory [50]. VMMs allow processor of virtual machines to be allotted to physical processor using two different techniques: without-pinning and with-pinning. In without-pinning technique, VMM determine how virtual CPU’s are assigned to physical CPU’s. On the other hand, pinning technique is used to allow hard assignment of virtual CPU's to one or more physical CPU's.

4 Thermal Mapping Models

In order to determine the thermal map of data centre, there are three different models.

4.1 Analytical Model

This model is based on cyber physical and heat transfer properties of the data centres [13, 51]. This approach calculates the temperature of the server using the physical and heat transfer properties. It incurs less overhead to calculate temperature estimation.

4.2 Predictive Model

This model [52] uses the machine learning techniques and neural network to predict the temperature at diverse locations in a data centre.

4.3 Computational Fluid Dynamics (CFD) Model

This model provides best accuracy but slow in real time decision making [53]. CFD model is used to model the temperature evolution in a data centre. Some research works [11, 51] shows that the complexity of CFD based model is very high, thus online scheduling is not possible. Therefore, new online scheduling algorithms have been developed with less complexity namely: Sensor based fast thermal evaluation model [32], Weatherman—an automated online predictive thermal mapping [54] and Generic Algorithm and Quadratic Programming [51].

5 Thermal Management at Data Centre Level

Cloud data centre comprised of number of racks to situate the servers for processing workloads. During the workload’s execution, server produces heat. Sometimes, the heat generated by processors is above the specified threshold, then cooling management is necessary to maintain temperature/hotspot of the data centres. In data centers, thermal aware task scheduling techniques are considered to diminish the hotspot and set temperature of CRAC. Data center computing and cooling equally consume a large amount of electric power. So there is a need to optimize the thermal management at data centre level and it can be classified as shown in Fig. 5.

5.1 Power Modelling

Minimizing the power consumption of data centres is challenging and tedious issue as amount of data and computing applications are increasing day by day. Thus, the demand of storage media and computing servers is increasing to process them fastly within the specified time period. So, data centres need a large amount of power to process the computing application which results in more electricity costs and a huge carbon footprint. It is mainly categorised into server power and CRAC power as shown in Fig. 6.

5.1.1 Server Power

Server power is primarily due to various components like: CPU, storage, and peripherals. Environmental protection agency (EPA) in year 2006 stated that the whole power consumption of servers at data centres was 61 billion Kilowatt hour which is equivalent to entire power cost of 5.8 million United States households [55]. The power consumption of servers can be reduced via power-aware capacity provisioning techniques like DVFS and dynamic power management (DPM). In literature, many algorithms are proposed to consider the problems of energy consumption using DVFS technique in cloud environment are depicted in Table 3.

5.1.1.1 Dynamic Voltage and Frequency Scaling (DVFS)

DVFS technique minimizes the power consumption of processors by frequency and voltage scaling at run time. The concept behind DVFS is to eliminate power-wastage during idle slots/low workload periods by decreasing the processor’s voltage and frequency level so that the processor will have significant work at every time that leads to minimization in the power usage [67]. DVFS regulates the voltage level of the processor for task execution so that power consumption is reduced while guaranteeing the user’s defined timing constraints. In DVFS enabled processors, the power consumption (pc) of CPU is as follows:

where f = frequency, ceff = capacitance and vdd = voltage.

The frequency (f) is calculated as:

where vt = threshold voltage, l = hardware parameter.

Finally, the energy consumption to execute ith task with cpu cycles (ci), is

There exist several researches works on energy efficiency using DVFS. These works can be divided in the following categories:

-

1.

Online versus offline schemes: Online scheme for voltage and frequency assignment [68, 69] is suitable for dynamic workload distribution and adjust the processor control accordingly while offline [70, 71] is suitable for those applications whose workload characteristics are controlled and repeatable.

-

2.

Inter task versus intra task: In inter-task approach [69,70,71,72], available slack time is redistributed dynamically among all ready tasks, while in the intra-task [73] approach, available slack time is reallocated in the same task.

In literature, numerous researchers have formulated algorithms using DVFS to save energy as presented in Table 2. In Ref. [46], the authors proposed a scheduling algorithm for parallel task execution over DVFS-enabled clusters. They used slack time concept for non-critical jobs for voltage scaling and achieved the energy savings without increasing the scheduling length.

In paper [55], authors proposed energy aware duplication scheduling algorithms (EAD and PEBD) for minimizing energy consumption on homogeneous cluster. They considered task scheduling with communication cost. Their main objective is to eradicate communication costs for scheduling the tasks between the different processors and their successors on the same processor. If task has several successors, then task duplication occurs and it obtains the data from their antecedents with low communication cost resulting in redundant duplications of tasks, due to which bad schedule is achieved.

In paper [56], authors proposed energy aware scheduling algorithm using DVFS technique in heterogeneous grid computing systems with the aim to reduce the energy consumption while considering QoS constraints along with green computing specification to satisfy SLA agreement. The algorithm works in different stages: Firstly, deadline is distributed to all tasks such that each task is finished within sub-deadline. Second is ordering phase in which scheduling solution sequence is generated so that there is no violation in precedence constraints. At last, selection of best processor with suitable voltage and frequency level is done so that the energy consumption will be reduced while meeting the specified deadline. They achieve energy efficiency up to 68% with 30% increase in execution time/makespan.

In paper [57], authors designed the green energy-efficient scheduling algorithm using DVFS technique which used the concept of priority job scheduling as it is used to choose VM for executing jobs and the VM are chosen as per their weight. This algorithm increases the resource utilisation to lessen the energy consumption of servers and avoid the excess resource usage. This algorithm is not advantageous for heavy amount of workload. DVFS technique is used in paper [57] to find the operating frequency of the CPU so that workflow application deadline is satisfied. They considered different number of factors for energy efficiency i.e. energy cost, workload, carbon emission rate and CPU power efficiency. They use meta-scheduling policies that allocate application to selected data centres and determine the assigned CPU frequency.

In paper [59], the authors present an energy-aware heuristics in cloud environment for efficient management of data centres. Authors present the architectural principles in support of resource allocation policies and energy management. These methods diminish the energy consumption of the data center, while considering the QoS constraints.

Authors in [61] proposed a holistic energy efficient framework (Green GPU) for heterogeneous architectures. It consists of two phases: (1) based on the current workload characteristics; Green GPU dynamically distributes the current workload to the GPU and CPU to complete the execution at the same time, (2) based on their utilizations, Green GPU controls the GPU frequency and memory for maximizing the energy savings with minimum performance degradation.

In paper [62], authors formulated the optimization problem with the purpose to minimize the energy consumption and introduced a novel dynamic idleness prediction (DIP) technique in which future VM’s requirement are considered when scheduling the virtual machine on a physical host. They provide an energy efficient solution to minimize the energy consumption namely Smart VM overprovision that is independent on VM live migration.

In paper [63], authors proposed an energy-efficient dynamic scheduling algorithm for VM’s under deadline constraints in heterogeneous cloud environment. To process VM, an optimal frequency exists for each and every physical machine. The PM which is having high performance to power ratio will be assigned the VM’s to minimize energy consumption.

In [64], authors proposed two scheduling algorithms in multiprocessor systems such as Energy Gradient-based Multiprocessor Scheduling (EGMS) algorithm and Energy Gradient-based Multiprocessor Scheduling with Intra-task Voltage scaling (EGMSIV) Algorithm with DVS capabilities for scheduling precedence constraint graphs. To achieve the energy efficient schedule EGMS use the energy gradient concept. Based on the energy gradient value, selected task is mapped to the new processor/voltage level so that energy consumption is minimized for the new schedule. EGMS do not use intra-task voltage scaling as it creates overheads. EGMSIV use the concept of linear programming to initiate the intra-task voltage scaling to further reduce the energy consumption.

Authors in paper [60] proposed energy-conscious task consolidation algorithms namely ECTC and MaxUtil. Their main objective is to increase resources utilization and minimize the energy consumption. These two heuristics assign task to those resources in which energy consumption during task’s execution is less.

In paper [73], DVFS is considered as an effective approach to save energy consumption. They developed a Relaxation-based IteRative Algorithm (RIRA) considering frame-based tasks for DVFS-enabled heterogeneous platforms: dependent platform with adjustment of run time, dependent platform without adjusting run time, independent platform.

5.1.1.2 Dynamic Power Management (DPM)

DPM is a technique that reconfigures the computing equipments dynamically to assign the services on fewer active components and placing a reduced amount of load on those components. DPM switch off the respective computing equipments when they are idle. DPM techniques are classified into two categories: Predictive and Stochastic.

Predictive techniques make the relationship between the past history and instantaneous workload demand and accordingly, it predicts upcoming workload of the system. Based on future workload prediction, some of the components are switched on or off. These techniques do not perform well when no previous information about the workload is available or it is changing rapidly. In paper [74], authors proposed a predictive DPM to predict the future temperature during the execution of a specific application. Further, they improved the accuracy of workload prediction by using another predictor which minimizes the number of mispredictions.

Stochastic techniques are more complex to implement but they guarantee the optimality within the performance bounds. In this technique, uncertainty of workload is considered. Reference [75] focused on the arrival time of requests and different states of devices like idle, sleep or standby are modelled stochastically. They provide a power and performance trade-off. Further, energy-aware scheduling technique has been proposed in [76] for multi-core systems. This simulation-based approach dynamically calculates the best task allocation and execution pattern and reduces energy consumption by 27–41%.

5.1.2 CRAC Power

For a large data centre, the total energy cost per year increases significantly of which the approximately half is corresponding to cooling cost. Cooling power is a power to eliminate the heat produced by the IT equipment. CRAC has power distribution unit, air flow, range of air temperature. The CRAC power mode affects the CRAC power consumption and the efficiency of CRAC is determined by Coefficient of Performance (COP). COP is a function of cold air supply temperature (ts) i.e. defined as the amount of heat removal and the energy consumption by the cooling units (pc). There is an assumption that the energy consumed by the computational resources gets converted into heat directly, so, the amount of heat removal is basically decided by the power taken by the IT equipment (pi). Therefore, COP can be defined as follows:

Therefore, COP impact on total power consumption (Ptotal) i.e. computing and cooling power and is represented as follows

where \(p_{total}\) = Total power consumption of Data Centres.

Therefore, the efficiency of data center [58] is expressed as:

The value of COP depends on the physical design of cloud data centers and thermodynamics feature.

COP specifies the energy needed for cooling devices with regard to computational energy. It calculates the energy consumed which is used to supply cold air in the cloud data centre at a fixed temperature. Equation (7) signifies that by increasing ts, it is necessary to increase the efficiency of cooling devices and in this way, reduction in cooling power occurs. There are two different models of CRAC unit operation: fixed model and dynamic model. In fixed model, the CRAC supply the cool air at fixed temperature [77] while in dynamic model, CRAC supply cool air dynamically. The temperature of cold supply air is dependent on CRAC’s mode and thermostat temperature. When the thermostat temperature is high, then ts is also high then in turn less cooling is required and due to this energy consumption is less. Further, in an overcooled data centre, in order to lessen power consumption, the CRAC thermostat set temperature can be increased. Authors in [78] proposed an approach to reduce the heat removed by every CRAC unit, while satisfying the thermal constraints of data centre.

5.2 Heat Flow Modelling

The main challenge in thermal management is the prediction of the heat profile and temperature at different locations all over the data centre. It is calculated using the thermal topology of cloud data centre. Thermal topology determines the heat profile and indicates how and where heat flows in the data centre. Understanding the thermal topology and prediction of heat profile is a difficult process [54]. The thermal topology consists of the room dimensions, cooling distribution and the heat generation by servers [11]. The heat model significantly impacts the thermal efficiency and power efficiency too. The authors in [79] developed heat transfer model for online scheduling in data centres and considered different parameters like reliability, cooling cost and performance loss. They developed thermal aware scheduling algorithm (TASA) on homogeneous computer system and allocate computer resources for incoming workload for reducing temperature in a data centre based on ambient temperature and temperature profiles. When a job executes on some compute node, temperature of that compute node is increased due to job execution. Due to this, the compute nodes disperse heat to the environment. Heat transfer process of node is represented with CRAC thermal model, compute node thermal model, network model and heat recirculation as shown in Fig. 7.

5.2.1 CRAC Thermal Model

CRAC thermal model elaborates the heat exchange process on the basis of air flow. The thermodynamics principle is beneficial to know the process of heat exchange in the data centre. This model is based upon inlet/outlet CRAC air temperature. The difference among these temperatures can be used to compute the instantaneous amount of heat generation. In paper [16], the authors make a heat model which is used to maintain coordination among heat generation and heat extraction process in the server. It takes decision through different parameters and affects the overall thermal efficiency. Depending upon various parameters, scheduler may be biased toward microprocessor and some server level. In paper [32], the authors use the energy conservation law to calculate the heat. For maintaining uniform temperature at outlets, the power budget assigned to each of the machine/server is given as:

where pk is the total power budget of server, ρ: density of air (1.19 kg/m3), f: air flow rate, C: air capacity, \(T_{o}\): server outlet temperature, \(T_{i}\): server inlet temperature.

In paper [32], the authors show that heat by each outlet (Qo) is the sum of heat entered through each inlet (Qi) and the power Pk used by the node. Therefore, based upon the energy conservation law, calculation of heat dissipation is calculated as follows:

where \(Q_{o}\) = Heat flow out from server, \(Q_{i}\) = Heat flow inside from server.

As mentioned above, based upon the law of thermodynamics, cooling power consumption is typically determined by the air density and its flow rate. Therefore, heterogeneity of servers does not affect the cooling power consumption. However, different server may have different heat dissipation as per the assigned workload. Thus, in order to consider this, compute node thermal model can be used.

5.2.2 Compute Node Thermal Model

A precise and useful modelling of temperature is necessary to precisely describe the thermal actions of an application. The compute node thermal model is dependent on heat transfer to the ambient environment and the RC circuit and the relationship is shown as:

where \(pow(t)\) represents power consumption of computing resource at time instance t, \(T_{d}\): die temperature, \(R_{k}\) and \(C_{k}\): resistance and capacitance of the kth system, \(T_{a}\): ambient temperature, \(\frac{dT}{{dt}}\): rate of change of temperature.

The temperature of Rk*Pow(t) + Ta (i.e. stable state) is achieved after the specific t time period. Many authors [79,80,81] used the concept of RC model to find the instantaneous temperature of the compute node. Equation (7) needs the thermal and power profile of the job executed on the compute node. The model needs the thermal signature i.e. total heat dissipated by executing job. By combining it with the instantaneous temperature of node, total heat produced by the node during job execution is calculated. This information can further be used for scheduling jobs without violating thermal constraints.

In paper [78], authors use the compute node thermal model for scheduling of jobs with backfilling. They considered the thermal profiles and power consumption of each job to calculate the current node temperature. Further, in [79, 80] they evaluated temperature by thermal sensors and the readings were used to find the compute node temperature.

5.2.3 Thermal Network Model

This is a technique of heat modelling between the compute node thermal model and CRAC thermal model. In thermal network model, nodes in a data centre comprised of one or two networks. There are two types of networks: Information Technology (IT) network and Cooling Technology (CT) network. Total power consumed by the server is the important factor in Information Technology and Cooling Technology networks. Heterogeneity of the equipments is an important factor of heat modelling. The thermal network models do not take into consideration the air flow. But it considers only the heat exchange among the devices. This model has high flexibility. In paper [82], authors present the data centre from cyber-physical perspectives. They considered the IT network as cyber and CT network as physical dynamics. Authors developed a model of cyber-physical dynamics to consider the impact of coordinating the IT control with CT control at the data centre level. Thermal network model is used to monitor the thermostat temperature of CRAC unit for scheduling jobs. However, thermostat temperature setting affects the whole data centre. Thus, changing the thermostat temperature frequently is not practically possible as it may cause the hardware damage to computer room air conditioner (CRAC) unit.

5.2.4 Heat Recirculation

This is the process of mixing of hot air from the server outlets and cool air from CRAC unit. In cloud data centers, cold air temperature always changes with respect to time. To maintain cold air temperature, uniformly distributed temperature is required every time. Further, consumption of resources will be reduced that is needed in heat recirculation and performance of data center is also improved with respect to QoS. In paper [29], authors proposed scheduling algorithm to reduces the inlet temperature which in turn minimizes the heat recirculation and cooling cost for homogeneous data centers. The task scheduling policy minimizes the cooling system’s outlet power consumption by making the inlet temperatures of each and every active server as equal as possible.

5.3 Thermal Management Strategies

In data centre architecture, temperature of the cold air that reaches to the server inlet gets different from the cold air temperature due to the mixing of the cold air from the floor tiles with the extracted hot air from the back of server. This process is called heat recirculation. Heat recirculation uniformly provides cool air temperature throughout the data centre.

Modern servers use thermal sensors to monitor the internal CPU temperature and inlet cold air temperature. These thermal sensors are placed at front and back of the racks in the data centre. For every server, thermal status can be examined that is also known as the thermal map. These thermal maps provide the information that helps in application task scheduling, VM migration and cooling management. Scheduling process and heat management are the two main strategies in data centre thermal management in order to increase the energy efficiency and minimizing heat recirculation. Strategies for data centre thermal management are shown in Fig. 8.

5.3.1 Thermal Aware Task Scheduling

Task scheduling is a process that can be statically applied at design time or dynamically at run time [20, 83, 84]. Static scheduling is beneficial for those applications which are not frequently changeable and based on the behavioural prediction of the system [20, 85]. Conversely, dynamic scheduling is based upon the instantaneous status of the system at run time. Moreover, hybrid method has the benefits of both static and dynamic scheduling that make it more effective [84].

Thermal aware task scheduling is a cyber physical approach because it considers the computing as well as the cooling performance [25]. The main goal of this is to create schedules which needs less cooling and makes the system energy-efficient. This approach is known as the thermal-aware job scheduling. Previously, Power-aware techniques were used that concentrate all workload to some active nodes and allowing idle nodes to switch into deactivated state or turn off. Due to which high heat dissipation occurs in small regions that leads to hotspot occurrence. However, thermal aware scheduling techniques do not concentrate workload to few nodes while they consider the minimization of total heat which is generated by active servers. Due to this, chances of hotspot occurrence and cooling load are lowered [86].

Two main criteria of scheduling process are: build scheduling queue, choose optimal processor. The main concept of thermal management is to estimate the thermal effects corresponding to specific task placement and afterwards, selection of placement with best effects is done. The motivation is to decrease the maximum temperature of the compute nodes and minimize the heat imbalance in thermal distribution. Decreasing compute node power also decrease the temperature of nodes therefore reduces cooling cost and relieves the load on cooling infrastructure. These compute nodes temperature and thermal distribution can be calculated by considering ambient temperature [79].

Data centre uses a large amount of electricity for cooling the equipments that is the main reason behind the increase in power usage effectiveness (PUE) which is a metric for grading the data centre energy efficiency. PUE is calculated by dividing the total energy usage with the energy consumed by computing nodes. A data centre with PUE near to 1 signifies that computing energy consumption is high in comparison to cooling energy. However, it is theoretically possible only when zero energy is consumed by cooling systems. For energy efficient data centre, PUE can be decreased by efficient scheduling approaches considering the thermal constraints. In Google data centres, cooling power distribution uses 6% of the total power consumption. Hence, Google data centre have small power usage effectiveness (PUE). Analysis in 2014 shows that an average data centre which is having 1.7 PUE implies that cooling power distribution consumes 40% power consumption in a data centre [87].

Three types of thermal aware task scheduling algorithms are available: (i) Quality of Service (QoS) based (ii) Optimization based and (iii) Temperature-reactive and (iv) Temperature-proactive scheduling.

-

(i)

QoS-Based: It schedules the tasks on efficient resources to enhance the execution performance of data centre. In this, scheduler takes care of the temperature and reduce overcooling load using different thermal management technique. Additionally, SLA maintenance depending on QoS parameter is introduced.

-

(ii)

Optimisation based: It schedules workload with the help of heat-recirculation and thermal aware techniques. Server based methods minimizes peak inlet temperature which increases due to heat recirculation. Reduction in recirculation of heat is possible only by placing less workload on the server which is near to the floor. Previously, zigzag scheme is employed for workload execution till threshold is attained.

-

(iii)

Temperature-reactive Scheduling: To avoid overheating of servers, all important decisions are made reactively based on the observed temperature. In paper [11], the authors schedule the workload dynamically to cool servers by observing temperature to avoid overheating of the server. Paper [88] considers the influence of instantaneous temperature on the performance of the server and allocates resources for Map-Reduce workloads.

-

(iv)

Temperature-proactive Scheduling: Many authors have developed proactive scheduling approaches considering power consumption and application workload based on the heat recirculation and thermal profile [29, 32, 51]. The authors in paper [29] developed the genetic algorithm for proactive scheduling to minimize the inlet air temperature of the servers. A number of temperature-proactive scheduling algorithms were discussed in [11]. These algorithms are mainly focused on distribution of power budget to all servers with the objectives to minimize the heat recirculation. Thermal profiles are maintained for several servers called pods that is beneficial in taking judgement for power budget. Each pod is giving power budget in total heat recirculation. Only one pod is active at one time using these profiles.

Thermal aware task scheduling can further be categorised into microprocessor level and server level.

Microprocessor level: In paper [89], the authors developed scheduling approach in which task profile is created as per the power consumption on the available microprocessor cores and move the tasks pre-emptively from the task list to maintain the proper load balancing. The task under execution that violates the thermal constraints is pre-empted and migrated to another core. Many researcher have proposed different thermal management techniques considering cooperation among hardware and software [90, 91]. Tasks running on a microprocessor shows the hotness of task are that are calculated using regression analysis and predict the hotspot occurrence possibility. To avoid the hot tasks from exceeds the threshold value, clock gating is used. In paper [92], a microprocessor scheduling was proposed, where the authors considered various factors like hotspots, scheduling performance as well as reliability. ILP objective function is defined with two major goals i.e. minimizing the hotspot occurrence and minimization of the thermal-spatial gradient that are achieved by decreasing the scheduling workload on nearby cores.

Server Level: In [32], the authors proposed proactive thermal-aware algorithms like uniform outlet profile (UOP) which is based upon compute node thermal model. This algorithm is same as the One-Pass-Analog by [11] with the main aim of keeping the outlet temperature to be uniform. The total workload is distributed into smaller number of tasks. One-pass-analog algorithm gives priority to the power budget. This does not give importance to allocation of tasks. The UOP assigns workload to servers to maintain uniformity of total outlet hot air temperature. Firstly, UOP considers the power consumption of idle servers and require a thorough knowledge of power consumption by the server during task execution. Secondly, dividing a task into equal parts is not a easy task. The coolest inlet algorithm will prefer some servers having lowest inlet temperature to place load whereas the Uniform-Workload algorithm [11] assigns the uniform power budget for all the servers to maintain uniform outlet air temperature in the data centre. In Ref. [32], Minimal Computing Energy (MCE) algorithm is proposed that is same as the Coolest Inlet algorithm [11]. By Using the minimum number of servers at full utilization creates hotspot which leads to excessive cooling. Due to this, PUE (Power Usage Efficiency) is increased. Another algorithm known as Uniform load (UL) which assigns equal load to each node. These are of two types: optimal and non-optimal.

Optimal: Algorithm is optimal if it switches off the idle servers. Optimal algorithms save more power in this state. In paper [11], authors found more energy saving by shutting down the servers.

Non-Optimal: Algorithm is non optimal if it does not switch off the idle/inactive servers and consumes unnecessary power. In paper [32], there are no criteria of shutting down the servers.

In literature, numerous researchers have proposed thermal-aware scheduling algorithms to maintain thermal profiles as shown in Table 4. In paper [93], authors proposed thermal aware job scheduler (tDispatch) i.e. deployed on top of Hadoop’s cluster for map-reduce applications. tDispatch investigated the behaviour of I/O jobs and CPU-intensive jobs to show thermal impact on hadoop cluster nodes. They consider applications’ characteristics and resource usage pattern for its scheduling decisions. Their main aim is to minimize the CPU and disk temperature of hadoop cluster nodes.

In paper [94], authors proposed thermal and power aware job scheduler to increase energy efficiency by considering computing energy, migration energy and cooling energy. They prepared a frequency assignment and task allocation model to exhibit the relationship between tasks and frequencies. Afterwards, they design an algorithm considering thermal-aware and DVFS techniques (TSTD) which allocate different tasks to the suitable node and adjust their frequency accordingly to minimize the total energy consumption of data centres.

In paper [95], authors proposed the task scheduling algorithm which jointly deals with the fault tolerance, thermal control and energy efficiency. Fault variable is used to adjust task execution time with fault recovery overhead and there is another variable for energy efficiency which indicates the energy saving occur and further it will be maximized for each task. DVFS technique is used with task sequencing order to meet the thermal requirements. They alternate the cold and hot task at suitable frequency to attain thermal control.

In paper [96], authors considered the problem of scheduling tasks to optimize makespan for microprocessor systems under thermal constraints. They select that processor which is having high allocation probability for the task and it is calculated by considering workload of the processor and temperature profiles. They classify the task into hot and cold task category. Hot tasks are performed at scaled frequency while cold tasks perform their execution at maximum frequency. To minimize the peak temperature, tasks are executed alternatively on the processor.

In paper [97], authors propose a consolidation technique which is used to optimize the energy consumption by minimizing the IT and cooling power consumption both. They present a thermo-electric model that considers server’s utilization with the power consumption. They proposed approximation algorithms and heuristics such as thermal aware VM placement optimization algorithm considering the VM migrations count. The proposed solutions not only find a good VM placement consolidated solution, but also diminish the cooling system’s cost.

The work in paper [98] proposes power and thermal aware scheduling algorithm to optimize individual tasks reaching their deadlines. Their main aim is to minimize the energy consumption while guaranteeing the performance does not go below specified threshold. They proposed an optimisation technique by considering performance states of cores.

In paper [99], authors proposed a scheduling algorithms, policies and resource management policies to improve energy efficiency and thermal mapping of data centres. They considered power model in which power consumption was considered mainly for CPU/memory and cooling model in which thermal profile relating to air flow and inlet/outlet temperature for different levels are considered. They recommended further work on CFD simulations, and considered heat recirculation in the data centres as well as DVFS mechanism to improve energy efficiency.

In paper [100], the authors considered the batch job scheduling for distributed data centres and explored the advantage of electricity price variations. Online scheduling algorithm is proposed which optimizes the fairness and energy cost subject to queuing delay constraints. Algorithm does not have the need of any information about the arrival of workload or electricity cost.

In paper [101], authors minimized the thermal heat recirculation of homogeneous data centres subjected to server allocation constraints. They proposed a technique that distributes the workload across the data centre to reduce heat removal inefficiencies and formation of hot spot to minimize cooling costs. They proposed an optimization model that finds the allocation of workloads to nodes with less number of nodes and reduce the heat recirculation by considering special recirculation bound on the individual contribution to each node. All the above works used homogeneous data centre to optimize the cooling cost in thermal manner. Some authors use heterogeneous platform for optimality of the tasks allocation under thermal constraints as explained further.

In paper [102], authors formulated a mixed integer linear programming (ILP) problem with temperature and reliability constraints to minimize the makespan. They proposed a scheme to find the assignment and scheduling of tasks in heterogeneous platform. They analyse the effect of task assignment on makespan, reliability, and temperature.

In paper [103], authors designed an energy efficient temperature aware scheduling technique for heterogeneous systems. This technique works in two phases. In first phase, the energy optimality of task to processor was performed and designs a heuristic to lessen the energy consumption under deadline constraints. In second phase, temperature minimization of the processor was investigated and designed a slack distribution heuristic to develop temperature profiles of processor under thermal constraints.

Authors in paper [104] considered deadline based task allocation scheme (DBTAS) in heterogeneous multicore processor considering deadline constraints for thermally challenged tasks. They use the hotspot model with DVFS capabilities. The main aim of DBTAS scheme is provide optimal allocation of task to each core for every migration time interval to minimize the power consumption by using scaled voltage/frequency while maintaining temperature and deadline constraints.

In paper [105], authors minimizes the computing and cooling energy in a cloud data center by considering deadline constraints by using DVFS technique. Authors divide the application deadline to all the workflow tasks to finish the task execution and then choose the processor for task execution.

5.3.2 Heat Management

The thermal dynamics always takes into consideration the different parameter like heat recirculation and cooling. To minimize the heat recirculation, maximum number of jobs was scheduled to the regions having maximum cooling efficiency so that jobs will perform without any interruption and cooling efficiency will be improved [51]. Heat management of data centres have focused on two fundamental approaches:

-

(i)

Management of Heat Generation [21]: This approach focused on how to balance or migrates workload to avoid overheating in a data centre. In paper [108], the authors proposed a solution for dynamic thermal management to reduce temperature with low performance overhead by providing self-adjustable temperature threshold.

-

(ii)

Management of Heat Extraction [51]: This improves the efficiency of cooling system by supplying cool air inside the data centre. Heat extraction is due to air circulation that depends on racks layout inside the data center and the location of fans and air vents in the CRAC unit. Heat extraction is done by the fans in the server /CRAC unit. Whenever there is difference in the rate of heat generation and heat extraction, it will result into heat imbalance. If heat generation rate is more than extraction rate, increase in temperature occurs due to which thermal hotspot is generated and server will operate in unsafe temperature range. On the other hand, if generation rate is less than extraction rate, that result in overcooling and a significant decrease in temperature [21] which leads to energy-inefficiency.

6 Evaluation Metrics for Thermal Efficiency

The usage of cloud computing infrastructure is increasing exponentially, so it becomes necessary to observe and determine the performance of cloud data centre on regular basis. Thermal management metrics [103] determines the environmental circumstances of the data center and also determine how air flows within a data centres from cooling units to air vents. So we have recognized different metrics for thermal aware scheduling.

-

Temperature Range: This metric indicates the difference between the supply air temperature (\(T^{s}\)) and return air temperature (\(T^{r}\)).

Units: F

$$T = T^{r} - T^{s}$$(13)The range for ambient temperature for system reliability is 68–75 °F (20–25 °C). The essential requirement is that IT equipments should not be operated in data centre where the ambient room temperature has exceeded 85 °F (30 °C).

Recommended

Allowable

Temperature range

64–80 °F

59–90 °F

-

Air Flow Efficiency: Air flow efficiency metric characterized by considering total fan power requirement. This metric show how air is efficiently stimulated from supply to return throughout the CDC and it also considers system efficiency of fans. The units of air flow efficiency are: W/cfm [W/l-s-1]. If the value of this metric is high, it means fan system is not efficient.

$$f_{a}^{eff} = \frac{{f_{p} *1000}}{{f_{a} }}$$(14)where \(f_{a}^{eff}\) stands for air flow efficiency, \(f_{p}\) Complete fan power with supply and return measured in kW, \(f_{a}\) Complete airflow of fans measured in cfm.

-

Return Temperature Index (RTI): This metric measures the energy performance of air management. To improve the air management, the main goal is to separate hot and cold air aisles. By doing this, RTI value can reach to 100% (ideal). This metric also diminishes air flow rate [109].

RTI value (%)

Significance

100

Ideal

< 100

Supply air by-pass the racks

> 100

Air recirculation from hot aisle

Units: %

$$RTI = \frac{{T_{r} - T_{s} }}{{T_{r}^{outlet} - T_{r}^{inlet} }} \times 100$$(15)where \(T_{r}^{inlet}\) racks inlet temperature, \(T_{r}^{outlet}\) racks outlet temperature.

-

Supply and Return Heat Index: These parameters are used to quantify the heat extraction by CRAC in cloud data centres [110]. These are dimensionless and scalable parameters which is used at rack level or data centre level.

$$HI^{supply} = \frac{\delta h}{{h + \delta h}}$$(16)$$HI^{return} = \frac{h}{h + \delta h}$$(17)where h = heat dissipation from all racks, \(\delta h\) = enthalpy rise of cold air.

-

And there exists a relationship between supply and return heat index and this is given as:

The value of \(HI^{return}\) close to 1 indicates high cooling efficiency.

7 Open Issues

In last decade, a lot of progress has been achieved in thermal management. But there are still various open issues that need to be addressed. Based on the current research in thermal management in cloud, we have identified different open issues that are still to be explored. Following open issues have been identified from the existing literature:

-

The problem occurred with cooling data centres is the recirculation of hot air from outlets to their inlets which creates hotspot and an uneven temperature distribution at the inlets.

-

Temporal fluctuations in temperature along with hotspots have bad effect on reliability of the system. Thus, in dynamic environment, prediction of temperature accurately is also a crucial issue as the thermal models complexity is high. Hence, there is a requirement of new approaches for the optimization of thermal effect.

-

Different thermal aware task scheduling algorithms have been proposed by considering fixed CRAC supply air temperature. So, to save more energy in CDC, there is a emergent need to consider varying value of CRAC supply air temperature based on data centre’s overall status.

-

Another motivating issue for thermal-aware scheduling would be the minimization of heat dissipation with the aim to obtain energy savings. Further, it leads to savings in the total cost of ownership (TOC).

-

Dual optimization of temperature and performance is also needed with the optimization of energy consumption.

-

DVFS is an important and efficient technique in the area of energy efficiency by minimizing the operating frequency of the processor and supply voltage during the task’s execution. Recent researches show that decrease in the supply voltage and operating frequency to save the energy has negative consequences on the system’s reliability because it increases transient failure of the resources. If frequency/voltage adjustment is not done appropriately then processor’s performance is degraded. DVFS implementation is dependent on the correct and precise estimation of number of tasks and execution time. But prediction about the number of tasks is most challenging one.

-

In live VM migration, intelligent approaches are required for optimal VM placement which determines the priority of the pages during the VM migration to avoid page fault rate to be increased.

-

Smart prediction techniques are required in stop and copy VM migration phase because exact prediction can minimize the server downtime.

8 Conclusion

Although cloud computing environment are widely popular now-a-days, there are still plenty of research gaps to be addressed. The increase in the size of data centres results in huge energy consumption and its growth rate is expected to accelerate in following years. This consumption is due to power supply of system resources, cooling device and other maintenance costs. Thus, effective thermal management is required for reducing the computing power consumption along with the hotspot reduction. In this paper, we have explored different thermal management approaches that drive researchers to design the mechanisms to make system energy efficient. This survey focused on various thermal management techniques like proactive and reactive, thermal mapping models, different strategies such as power modelling, heat modelling and discussed the thermal aware scheduling techniques in the data centre. The aim of the survey is to help the researchers to identify key research issues that are yet to be explored in future.

References

Qian, L., Luo, Z., Du, Y., & Guo, L. (2009). Cloud computing: An overview. In IEEE international conference on cloud computing (pp. 626–631). Springer, Berlin.

Hauck, M., Huber, M., Klems, M., Kounev, S., Müller-Quade, J., Pretschner, A., et al. (2010). Challenges and opportunities of cloud computing. Karlsruhe Reports in Informatics. https://doi.org/10.5445/IR/1000020328.

JoSEP, A. D., & KAtz, R., KonWinSKi, A., Gunho, L. E. E., PAttERSon, D., & RABKin, A. . (2010). A view of cloud computing. Communications of the ACM, 53(4), 50–58.

Cloud Computing—The Business Perspective-02-09-02.pdf.

World Energy Outlook, 2009 FACT SHEET. http://www.iea.org/weo/docs/weo2009/factsheetsWEO2009.pdf.

Gartner report, financial times, 2007.

Kaplan, J. Forrest, W., & Kindler, N. (2008). Revolutionizing data center energy efficiency. Technical Report (p. 15), McKinsey Company.

Delforge, P. (2014) America’s data centers are wasting huge amounts of energy. Natural Resources Defense Council (NRDC). Available www.nrdc.org/energy/data-center-efficiency-assessment.as.

Barroso, L. A., & Hölzle, U. (2007). The case for energy-proportional computing. Computer, 40(12), 33–37.

Liu, J., Zhao, F., Liu, X., & He, W. (2009). Challenges towards elastic power management in internet data centers. In 2009 29th ieee international conference on distributed computing systems workshops (pp. 65–72). IEEE.

Moore, J. D., Chase, J. S., Ranganathan, P., & Sharma, R. K. (2005). Making scheduling “cool”: temperature-aware workload placement in data centers. In USENIX annual technical conference, general track (pp. 61–75).

Donald, J., & Martonosi, M. (2006). Techniques for multicore thermal management: Classification and new exploration. ACM SIGARCH Computer Architecture News, 34(2), 78–88.

Wang, L., Khan, S. U., & Dayal, J. (2012). Thermal aware workload placement with task-temperature profiles in a data center. The Journal of Supercomputing, 61(3), 780–803.

Kong, J., Chung, S. W., & Skadron, K. (2012). Recent thermal management techniques for microprocessors. ACM Computing Surveys (CSUR), 44(3), 1–42.

Sharma, Y., Javadi, B., Si, W., & Sun, D. (2016). Reliability and energy efficiency in cloud computing systems: Survey and taxonomy. Journal of Network and Computer Applications, 74, 66–85.

Lee, E. K., Kulkarni, I., Pompili, D., & Parashar, M. (2012). Proactive thermal management in green datacenters. The Journal of Supercomputing, 60(2), 165–195.

Sheikh, H. F., Ahmad, I., Wang, Z., & Ranka, S. (2012). An overview and classification of thermal-aware scheduling techniques for multi-core processing systems. Sustainable Computing: Informatics and Systems, 2(3), 151–169.

Arghode, V. K., Kang, T., Joshi, Y., Phelps, W., & Michaels, M. (2017). Measurement of air flow rate through perforated floor tiles in a raised floor data center. Journal of Electronic Packaging, 139(1), 011007-1-011007–8.

Masdari, M., Nabavi, S. S., & Ahmadi, V. (2016). An overview of virtual machine placement schemes in cloud computing. Journal of Network and Computer Applications, 66, 106–127.

Singh, A. K., Shafique, M., Kumar, A., & Henkel, J. (2013). Mapping on multi/many-core systems: Survey of current and emerging trends. In 2013 50th ACM/EDAC/IEEE design automation conference (DAC) (pp. 1–10). IEEE.

Lee, E. K., Viswanathan, H., & Pompili, D. (2015). Proactive thermal-aware resource management in virtualized HPC cloud datacenters. IEEE Transactions on Cloud Computing, 5(2), 234–248.

Bobroff, N., Kochut, A., & Beaty, K. (2007). Dynamic placement of virtual machines for managing SLA violations. In 2007 10th IFIP/IEEE international symposium on integrated network management (pp. 119–128). IEEE.

Beloglazov, A., & Buyya, R. (2010). Energy efficient resource management in virtualized cloud data centers. In 2010 10th IEEE/ACM international conference on cluster, cloud and grid computing (pp. 826–831). IEEE.

Beloglazov, A., & Buyya, R. (2012). Managing overloaded hosts for dynamic consolidation of virtual machines in cloud data centers under quality of service constraints. IEEE Transactions on Parallel and Distributed Systems, 24(7), 1366–1379.

Beloglazov, A., & Buyya, R. (2010). Adaptive threshold-based approach for energy-efficient consolidation of virtual machines in cloud data centers. MGC @ Middleware, 4, 1890799–1890803.

Song, W., Xiao, Z., Chen, Q., & Luo, H. (2013). Adaptive resource provisioning for the cloud using online bin packing. IEEE Transactions on Computers, 63(11), 2647–2660.

Song, Y., Sun, Y., Wang, H., & Song, X. (2007). An adaptive resource flowing scheme amongst VMs in a VM-based utility computing. In 7th IEEE international conference on computer and information technology (CIT 2007) (pp. 1053–1058). IEEE.

Rodero, I., Viswanathan, H., Lee, E. K., Gamell, M., Pompili, D., & Parashar, M. (2012). Energy-efficient thermal-aware autonomic management of virtualized HPC cloud infrastructure. Journal of Grid Computing, 10(3), 447–473.

Tang, Q., Gupta, S. K., & Varsamopoulos, G. (2007). Thermal-aware task scheduling for data centers through minimizing heat recirculation. In 2007 ieee international conference on cluster computing (pp. 129–138). IEEE.

Huang, W., Allen-Ware, M., Carter, J. B., Elnozahy, E., Hamann, H., Keller, T., et al. (2011). TAPO: Thermal-aware power optimization techniques for servers and data centers. In 2011 International green computing conference and workshops (pp. 1–8). IEEE.

Zhu, H., Wang, J., Song, M., & Fang, Q. (2015). Thermal-aware load provisioning for server clusters by using model predictive control. In 2015 ieee conference on control applications (CCA) (pp. 336–340). IEEE.

Tang, Q., Mukherjee, T., Gupta, S. K., & Cayton, P. (2006). Sensor-based fast thermal evaluation model for energy efficient high-performance datacenters. In 2006 Fourth international conference on intelligent sensing and information processing (pp. 203–208). IEEE.

Bash, C., & Forman, G. (2007). Cool job allocation: Measuring the power savings of placing jobs at cooling-efficient locations in the data center. In USENIX annual technical conference (vol. 138, p. 140).

Sun, G., Liao, D., Anand, V., Zhao, D., & Yu, H. (2016). A new technique for efficient live migration of multiple virtual machines. Future Generation Computer Systems, 55, 74–86.

Sarker, T. K., & Tang, M. (2013). Performance-driven live migration of multiple virtual machines in datacenters. In 2013 IEEE international conference on granular computing (GrC) (pp. 253–258). IEEE.

Liu, H., Jin, H., Xu, C. Z., & Liao, X. (2013). Performance and energy modeling for live migration of virtual machines. Cluster Computing, 16(2), 249–264.

Goudarzi, H., Ghasemazar, M., & Pedram, M. (2012). SLA-based optimization of power and migration cost in cloud computing. In 2012 12th IEEE/ACM international symposium on cluster, cloud and grid computing (CCGRID 2012) (pp. 172–179). IEEE.

Callegati, F., & Cerroni, W. (2013). Live migration of virtualized edge networks: Analytical modeling and performance evaluation. In 2013 IEEE SDN for future networks and services (SDN4FNS) (pp. 1–6). IEEE.

Zhang, W., Lam, K. T., & Wang, C. L. (2014). Adaptive live VM migration over a wan: Modeling and implementation. In 2014 IEEE 7th international conference on cloud computing (pp. 368–375). IEEE.

Clark, C., Fraser, K., Hand, S., Hansen, J. G., Jul, E., Limpach, C., et al. (2005). Live migration of virtual machines. In Proceedings of the 2nd conference on symposium on networked systems design and implementation (vol. 2, pp. 273–286).

Jin, H., Deng, L., Wu, S., Shi, X., & Pan, X. (2009). Live virtual machine migration with adaptive, memory compression. In 2009 ieee international conference on cluster computing and workshops (pp. 1–10). IEEE.

Rao, L., Liu, X., Xie, L., & Liu, W. (2010). Minimizing electricity cost: optimization of distributed internet data centers in a multi-electricity-market environment. In 2010 Proceedings IEEE INFOCOM (pp. 1–9). IEEE.

Qureshi, A., Weber, R., Balakrishnan, H., Guttag, J., & Maggs, B. (2009). Cutting the electric bill for internet-scale systems. In Proceedings of the ACM SIGCOMM 2009 conference on data communication (pp. 123–134).

Fang, Q., Wang, J., Gong, Q., & Song, M. (2017). Thermal-aware energy management of an HPC data center via two-time-scale control. IEEE Transactions on Industrial Informatics, 13(5), 2260–2269.

Ranganathan, P., Leech, P., Irwin, D., & Chase, J. (2006). Ensemble-level power management for dense blade servers. ACM SIGARCH Computer Architecture News, 34(2), 66–77.