Abstract

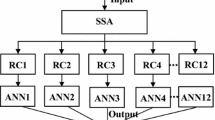

Rainfall, which is one of the most important hydrologic processes, is influenced by many meteorological factors like climatic change, atmospheric temperature, and atmospheric pressure. Even though there are several stochastic and data driven hydrologic models, accurate forecasting of rainfall, especially smaller time step rainfall forecasting, still remains a challenging task. Effective modelling of rainfall is puzzling due to its inherent erratic nature. This calls for an efficient model for accurately forecasting daily rainfall. Singular Spectrum Analysis (SSA) is a time series analysis tool, which is found to be a very successful data pre-processing algorithm. SSA decomposes a given time series into a finite number of simpler and decipherable components. This study proposes integration of Singular Spectrum Analysis (SSA), Auto Regressive Integrated Moving Average (ARIMA) and Artificial Neural Network (ANN) into a hybrid model (SSA-ARIMA-ANN), which can yield reliable daily rainfall forecasts in a river catchment. In the present study, spatially averaged daily rainfall data over Koyna catchment, Maharashtra has been used. In this study SSA is proposed as a data pre-processing tool to separate stationary and non-stationary components from the rainfall data. Correlogram and Kwiatkowski–Phillips–Schmidt–Shin (KPSS) test has been used to validate the stationary and non-stationary components. In the developed hybrid model, the stationary components of rainfall data are modelled using ARIMA method and non-stationary components are modelled using ANN. The study of statistical performance of the model shows that the hybrid SSA-ARIMA-ANN model could forecast the daily rainfall of the catchment with reliable accuracy.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Development of reliable and accurate hydrologic time series models has gained the attention of researchers over past few decades. The changing climatic phenomenon and its impact on the ecosystem have made it imperative to develop an accurate hydrologic model with the help of which, water resources systems can be better managed. Out of the several hydrologic models, the mostly used are those relying on either stochastic methods or Artificial Intelligence (AI) technique which have their own advantages and disadvantages. In stochastic modelling, forecasts are deciphered based on the statistical characteristics of the past data (Box and Jenkins 1976). ARIMA model, which is the most widely used stochastic model for forecasting time series, has great flexibility. In the case of stochastic models, for yielding reliable results, the data has to be stationary (Machiwal and Jha 2012). A time series is said to be stationary only if all its statistical properties are all constant with time. A homogeneous non-stationary time series can be reduced to a stationary time series, by taking a proper degree of differencing (Wei 2006). However, in ARIMA model, the differencing can reduce only small-scale non-stationary process to a stationary process (Shahin et al. 1993). Also, the output of ARIMA model depends heavily on the expertise of analyst in modelling (Machiwal and Jha 2012).

Artificial Intelligence (AI) based time series models have gained popularity over the last few decades. These models are based on the input-output relationships (Solomatine and Ostfeld 2008). Most widely used AI based models include Artificial Neural Network (ANN), Genetic Programming (GP), and Model Tree (MT). Among them, ANN has gained widespread acceptance from researchers in various fields. ANN models are highly flexible so that, any combination of different algorithms can be developed according to the complexity of the data. However, the major drawback of ANN is that it is a grey box model and outliers present in the data can critically affect the reliability of the model. It is also reported by many researchers that, for climatic data to yield reliable result by ANN, the data need to be pre-processed (ASCE Task Committee 2000a; Ramírez et al. 2006).

Thus, development of a hydrologic model that can utilize the advantages of these stochastic and AI models and can overcome their disadvantages, can be extremely helpful. Singular Spectrum Analysis (SSA) is a non-parametric, model free time series analysis tool, that can decompose the time series into small interpretable components. SSA is comparatively a new tool in time series modelling and is now gaining widespread response from various field of applications such as image processing, filling gaps, and change point detection (Rodrıguez-Aragon and Zhigljavsky 2010; Afshar and Bigdeli 2011). SSA can be interpreted as a combination of multi-variate statistics, multi-variate geometry, signal processing and classical time series analysis (Elsner and Tsonis 1996). Various components of a time series such as trend, periodic component, cyclic component and noise can be successfully extracted using SSA (Alexandrov 2009; Unnikrishnan and Jothiprakash 2015). SSA can be successfully used as a data pre-processing algorithm in time series modelling (Zhang et al. 2011; Rodrigues and de Carvalho 2013).

The primary aim of this study is to develop a hydrologic model coupling stochastic and data driven approach that can successfully model daily rainfall data. SSA is a data adaptive time series tool, which can be developed as a viable algorithm to integrate stochastic and AI models, thereby effectively utilizing the advantages of both these models. Hence, in the present study, an integrated SSA-ARIMA-ANN model is proposed to model daily rainfall data. The performance of the integrated model is analysed and presented in this study. It was observed that the developed SSA-ARIMA-ANN model could forecast the daily rainfall with reliable accuracy. It can also be noticed that there is no lag effect in the forecasted rainfall data which is common during hydrologic modelling using ANN. The computational time and cost in the developed SSA-ARIMA-ANN model is desirably low when compared to that of stochastic or data driven technique which, when used independently, would require a much more complex model to achieve the forecast performance obtained here in this study. There is a wide scope that the developed SSA-ARIMA-ANN model can be further refined for a more efficient modelling of rainfall data.

2 Study Area

The study area considered in the case study is Koyna catchment, situated on the West Coast of Maharashtra, India, lies between the latitude of 170 0’ N to 170 59’ N and longitude of 730 2′ E to 730 35′ E and is depicted in Fig. 1. There are nine rain gauge stations in the catchment and the locations of all the stations are shown in the figure. The catchment is monsoon fed (south west monsoon from June to September) and during non-monsoon period, there is no rainfall or very little rainfall in the catchment. The average annual rainfall in the catchment is 4654 mm that occur over an area of 891.78 km2.

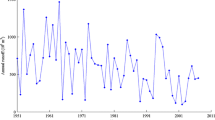

The observed daily rainfall data from 1st January 1961 to 31st December 2013 of all the nine rain gauge stations in Koyna catchment has been collected from the Koyna Irrigation Division Office, Government of Maharashtra, India. The daily rainfall observations for all the rain gauge stations were available during this time and there is no missing data within this time frame. It is observed from the data that, the maximum rainfall contribution to the catchment in terms of average annual rainfall, is from Valavan station, which is 6370 mm and minimum contribution by the Bamnoli station, which is 2970 mm. The average daily rainfall data of the catchment is obtained by spatially averaging the observed daily rainfall data of the nine rain gauge stations in the catchment by means of Thiessen polygon method.

In the present study, the daily rainfall data pertaining to the year 2013 is exclusively used for verifying the forecast performance of the model. In the pre-processing and calibration phase of the model, only the daily rainfall data from 1961 to 2012 is used.

3 Methodology Adopted in the Study

In the present algorithm, SSA is used as a data pre-processing tool that can separate the stationary and non-stationary components from the rainfall data. ARIMA part of the model is designed to model the stationary components and ANN is developed to model the non-stationary components. The detailed description of the methodology adopted in the present study is given below.

3.1 Singular Spectrum Analysis

SSA is a non-parametric, model free time series tool, which can decompose a time series into separable components by means of Singular Value Decomposition (SVD). SSA involves decomposition and reconstruction of a time series. In the decomposition phase, the first step is embedding, in which, the time series is converted into a matrix called trajectory matrix- based on the chosen window length. The order of that matrix will be (N-L + 1) x L, where L is the selected window length and N is the time series length. Window length (L) is a number that must be chosen by the analyst, which will then decide, the order of trajectory matrix upon which the multi variate statistics has to be performed. For example, if the time series under consideration has observations at 6-time steps, and if window length is chosen as 2, then the trajectory matrix will be of order 5 × 2.

After embedding, SVD is applied on the matrix and as a result, the matrix is converted into several small matrices called Eigen Triples (ET). These Eigen Triples include Eigen functions, principal components, and Eigen values. The number of Eigen triples will be equal to the window length chosen.

In order to reconstruct a time series from the decomposed components, significant Eigen Triples need to be selected. This procedure of selecting appropriate components is called grouping. Here, grouping has to be carried out based on the analyst’s experience and expertise in time series modelling (Golyandina et al. 2001). The same grouping procedure cannot be used for all SSA applications. The grouping procedure adopted for data pre-processing will be different from the procedure used for forecasting.

After grouping, the grouped matrices (Eigen triples) are transformed to a time series by means of diagonal averaging. In this mathematical procedure, the matrix elements are averaged over the anti-diagonals to get the time series. Thus, at the end of the diagonal averaging, a new time series is generated. The detailed mathematical explanations of this method can be seen in various literatures (Elsner and Tsonis 1996; Golyandina et al. 2001).

In the present study, SSA has been used as the data pre-processing algorithm by which the non-stationary components from the rainfall data are being separated. Trend and periodicities which are responsible for most of the non-stationary character in the data, have been extracted and separated from the rainfall data using SSA. After pre-processing, the stationary components of the rainfall data have been modelled using ARIMA method and the separated non-stationary components are modelled using ANN proposed in the study.

3.1.1 SSA in Trend Separation

The analysis of periodogram of Eigen functions, based on the criteria described in Unnikrishnan and Jothiprakash (2015), has been used in the present study to separate the non-linear trend component from the daily rainfall time series. In this approach, the trend in data is characterised as low frequency fluctuations which are identified from the periodogram analysis as explained below. Hence, the primary aim of this approach is to identify the SVD components (Eigen Triples) corresponding to low frequency fluctuations.

Periodogram is the spectral density estimate of a time series and from its analysis, the dominant frequency in a time series can be deduced. SSA can decompose a time series into multiple SVD components, where each component is a time series. Hence, by carrying out periodogram analysis of each of the Eigen functions, the dominant frequency of each of the Eigen function can be identified and the Eigen functions corresponding to low frequency fluctuation can be selected as responsible for the trend in the data. The mathematical expression used for trend separation is given below.

The Fourier representation of a time series Y = y1,y2,….yN can be written as

where yn is the time series data at time step n; c0,ak,bk,cN/2 are Fourier coefficients; k ∈ N, N is the time series length, 1 ≤ n ≤ N and cN/2 = 0 if N is an odd number. The periodogram of Y at the frequencies ω = k/N can be defined as

For the given ω0 ∈ (0, 0.5) the contribution of low frequencies from the interval [0, ω0] can be defined as

where \( {\pi}_Y^N\left(\omega \right)={\sum}_{k:0\le k/N\le \omega }{I}_Y^N\left(k/N\right),\omega \in \left[0,0.5\right] \) can be defined as the cumulative contribution of the frequencies [0,ω].

The Eigen functions corresponding to low frequency fluctuations are selected as responsible factor for trend component. The condition satisfying the low frequency fluctuations is given below:

where F0 and ω0 are two parameters ω0 ∈ (0, 0.5) and F0 ∈ [0, 1] and Uj is the corresponding Eigen vector.

where Y is the time series under consideration of length N and \( {M}_Y^N \) is the median of the values of periodogram of Y.

The optimal value of low frequency contribution (F0) can be determined using the following equation

Where

F is defined in Eq. 3. T(ω0, F0) is the trend component that can be extracted from parameters F0 and ω0. ΔF is the search step and ΔR is the threshold. The details for the equations for determining the parameters are explained in Alexandrov (2009) and Unnikrishnan and Jothiprakash (2015).

3.1.2 SSA in Periodic Component Separation

To select the SVD components that are significant for periodic component, grouping based on analysis of peak of periodogram of Eigen functions has been used in the present study. A time series with a strong periodic component will depict a single recognizable peak in its periodogram at that specific period (Unnikrishnan and Jothiprakash 2018b). Thus, in the present study, to identify the dominant periods in the decomposed components, the periodogram of each of the adjacent pairs of Eigen function has been plotted. The Eigen functions with recognizable single peak in the periodogram have been identified as components which are to be grouped in the grouping stage of SSA for periodic component separation.

3.2 ARIMA Process

The ARIMA forecasting equation is a linear equation in which, the predictors consist of lags of the dependent variable known as “autoregressive” terms and lags of the forecast errors known as “moving average” terms. In ARIMA modelling, differencing is carried out which can deduce an integrated version of a stationary series. A non-seasonal ARIMA model is classified as an “ARIMA(p,d,q)” model, where: p is the number of autoregressive terms, d is the number of non-seasonal differences needed for achieving stationary character, and q is the number of moving average terms.

The forecasting equation is constructed as follows. First, let y denote the dth difference of Y, which means:

In terms of y, the general forecasting equation is:

where Y is the time series under consideration and y is the differenced time series. For various selected difference values the relation between Y and y is given above in Eqs. (8), (9) and (10). Ŷt is the forecast of Y at time t. μ is the mean of the process, ϕ refers to the AR operator and θ refers to the MA operator.

-

ϕi, i = 1,..p are real constants with ϕp≠0

-

θj, j = 1,…q are real constants with θq≠0

The degree of differencing in ARIMA depends on the degree of variation of the time series from stationary character. However, this method is effective only if the degree of non-stationary character is low. The order of differencing can be selected based on critical evaluation of the time series plot and correlogram. An over differenced time series may produce less stable modelling results (Machiwal and Jha 2012). Therefore, the selection of order of differencing is quite critical in obtaining a stable model. The number of autoregressive (p) and moving average (q) parameters, also need to be selected based on the characteristics of the time series. The order of the model is selected in such a way that the model is effective and parsimonious. A parsimonious model will have the fewest number of parameters and the greatest number of degree of freedom among all the stochastic models (Machiwal and Jha 2012). Thus, the order of the model needs to be carefully selected in order to get an accurate forecast of the time series (Box and Jenkins 1976; Yajima 1985).

3.3 Artificial Neural Networks (ANN)

ANNs are data driven AI models that work on the concept of human nervous system. Like the human nervous system, if the ANN model is trained appropriately, it can handle all kind of situations arising in its field of knowledge. There are nodes/neurons in the ANN model similar to the human neurons (Zhang et al. 1998; ASCE Task Committee 2000a).

There are input, hidden and output layers in ANN. The number of hidden layers, and the number of nodes within them depend on the complexity of the data (ASCE Task Committee 2000a). The number of nodes in the input layer is determined based on the dimension of the problem i.e., the number of input parameters required to produce output from the system (Zhang et al. 1998; ASCE Task Committee 2000a, b; Dawson and Wilby 2001).

3.4 Kwiatkowski–Phillips–Schmidt–Shin (KPSS) Test

KPSS test is a statistical test, based on linear regression, for checking the stationary character of a time series. The null hypothesis of the test is that the time series is stationary and alternative hypothesis is that there is a unit root in the time series. Unit root implies that the statistical properties of a time series are not constant with time, which is the condition for a non stationary time series. The detailed mathematical description of the test can be seen in Kwiatkowski et al. (1992).

4 Results and Discussion

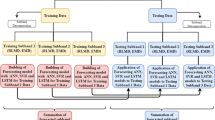

The present study mainly involves three stages, which include SSA modelling of daily rainfall data to separate trend and periodic component, ARIMA modelling of residual data and ANN modelling of the extracted trend and periodic component.

4.1 Rainfall Data Pre-Processing Using SSA

In the current study, an SSA model is developed to extract the trend and periodic component from the daily rainfall data. Selection of window length is a crucial step in SSA. In the present study, window length is selected based on the results of a sensitivity analysis carried out by Unnikrishnan and Jothiprakash (2015). In that sensitivity analysis, a time series was analysed using different window lengths and when compared, window length proportional to the period of the prevailing periodic component was identified to be the optimum window length. Hence, here in this study also, window length is selected accordingly. The correlogram of the Koyna daily rainfall data upto a time lag of 1000 days is given in Fig. 2. Correlogram is the autocorrelation function plot which gives a measure of the inter dependence between observations. From the Fig. 2, it can be noticed that the pattern of the correlogram repeats after every 365 days, which indicates, the presence of a prevailing periodic component with period 365 days. Hence a window length of 365 is selected in the present study.

To extract the trend component from the daily rainfall time series, periodogram analysis was integrated with SSA. Upon SVD of the rainfall data, the data is decomposed into ‘L’ number of SVD components, where ‘L’ is the window length, which in this study is 365. These 365 SVD components are numbered from 1 to 365, in the order of the share of each component in the time series. The share of each component is a measure of the corresponding Eigen value. In the study, the periodogram of each of the decomposed Eigen functions were analysed. The criterion for selection of the trend component is given in Eq. 4. Each of the Eigen functions is a time series and this criterion is checked for each of the 365 Eigen functions. Thus, by carrying out periodogram analysis within SSA, only the first Eigen function satisfied the criteria given in Eq. 4 and thus is selected as the significant trend SVD component. Thus, in the grouping stage of SSA, only first Eigen Triple was selected and after diagonal averaging, trend is extracted from the rainfall data.

The periodic component was extracted by analysing the peak of periodogram of Eigen functions. Periodogram of all the Eigen functions were plotted and those with recognizable single peak are selected as significant for periodic component. Based on this criterion, only 2nd, 3rd, 4th, 5th, 6th and 7th Eigen functions showed single peak in the periodogram, other Eigen functions either showed no peak or multiple peaks. Hence, 2 to 7 Eigen triples were selected in grouping stage, as significant for periodic component, and by diagonal averaging, the periodic component was extracted from the rainfall data using SSA. The details and mathematical description of the methodology, used for periodic component extraction using SSA is explained in Unnikrishnan and Jothiprakash (2018b). The extracted trend and periodic component from the Koyna rainfall data for a period of 1st January 1961 to 31st December 2012 are given in Fig. 3. The trend and periodic component extracted together will contribute to most of the non-stationaries in the data.

To check stationary character of the residual Koyna data after removing the trend and periodic component, graphical analysis such as analysis of correlogram as well as statistical analysis such as Kwiatkowski–Phillips–Schmidt–Shin (KPSS) test were performed. Correlogram of observed Koyna daily rainfall series for a lag of 1000 days is given in Fig. 2. It is evident from the correlogram that, the observed daily rainfall series, is a non-stationary series. Correlogram of the residual rainfall data after removing trend and periodicity is given in Fig. 4. It can be observed from the figure that the correlogram dies out rapidly, which is the property of a stationary time series.

At 95% confidence level, the results of KPSS test showed that the observed rainfall series is non-stationary whereas, the residual series after removing the trend and periodic component is stationary. Thus, both the correlogram analysis and KPSS test confirm that by separating the trend and periodic component from the data, the residual Koyna daily rainfall series became a stationary series.

Thus, trend and periodicity component has been extracted using SSA from the observed rainfall time series and a residual rainfall series, devoid of non-stationary character, is obtained on which ARIMA method can be applied. In the present study, hybrid SSA-ARIMA-ANN single time step ahead daily rainfall forecasting model has been developed. The stationary and non-stationary components forecasted by ARIMA and ANN respectively are added together to get the forecasted rainfall of the Koyna catchment.

4.2 Modelling of Non-stationary Components Using ANN

A three-layered ANN model with one input layer, one hidden layer and an output layer has been selected in the present study. Levenberg-Marquardt learning algorithm with log sigmoid activation function in the hidden layer and linear transfer function in the output layer is used in the study. ANN (3–10-1) model with three input nodes, ten hidden neurons and one output node has been selected based on trial and error procedure, so that best performance measures are achieved during the calibration phase of the ANN modelling.

During the calibration phase of the ANN, the sum of, the extracted trend and periodic component of the Koyna daily rainfall data for the time period from 1st January 1961 to 31st December 2012, has been given as input for the ANN model, which will utilize 70% of the given data for training, 15% for validation and 15% of the data for testing the model. This complete phase is known as calibration phase in the present study. The training of the model was continued until the Mean Squared Error (MSE) and Coefficient of Correlation (R) reached desirable values. During the calibration phase, the target and the output of the model are well correlated with R value of 1, which is exceptionally good. This high value of R in the calibration phase may be due to the absence of noise in the data. The high value of R and the minimum MSE showed that the model is well trained.

The performance of the model in forecasting the non-stationary components can be ascertained only by testing its performance for an unfamiliar data belonging to the same time series. This phase is called revalidation phase in the present study. In the revalidation phase, one day ahead forecast of non-stationary components of rainfall, pertaining to the year 2013, in Koyna catchment, is simulated from the previous three day’s non-stationary components and the forecast output is shown in Fig. 5. It can be noticed that the forecasted non-stationary components are having negative values. It is also to be noticed that, the extracted periodic component of the Koyna daily rainfall data also contains negative values. The sum of all the components in a time series will negate this effect of negative values in the components and will help in reducing the adverse effect of large number of zeros in data during modelling. It is also to be noted that, individual components of a series can be negative indicating the direction of movement of the data. The performance measures of the model have been given in Table 1. It can be observed that all the performance measures are showing exceptional results with very low error values and high correlation measures in ANN modelling of the non-stationary components. This high performance can be attributed to the absence of noise in the data.

4.3 Modelling of the Residual Rainfall Data Using ARIMA

The residual Koyna series after removing the trend and periodic components are modelled by ARIMA process. ARIMA process was modelled to forecast the next day rainfall. By trial and error procedure, the ARIMA model of (3, 1, 3) has been selected for the purpose. For modelling, the residual series for a period from 1st January 1961 to 31st December 2012, has been used and one-year data has been kept for validation of the performance of the model in forecasting.

Thus, forecasting of the residual series is carried out for a period of one year from 1st January 2013 to 31st December 2013 by the modelled ARIMA (3,1,3) for the Koyna residual series. The stationary components forecasted using ARIMA process is given in Fig. 6. The performance measures of the ARIMA in forecasting the residual time series is given in Table 1. It can be noticed that the performance of the model is slightly inferior to the ANN model, which can be attributed to the presence of noise in the residual data.

4.4 Modelling of Daily Rainfall Data Using SSA-ARIMA-ANN

The addition of both the ARIMA and ANN forecasted series, gives the final forecasted rainfall series that can be validated with the observed rainfall series. The observed and forecasted Koyna daily rainfall series using SSA-ARIMA-ANN model from 1st January 2013 to 31st December 2013 along with the corresponding scatter plot is given in Fig. 7. The Fig. 7 shows a good correlation between the observed and forecasted rainfall.

The performance measures of the model are given in Table 1. It shows that the model yielded good performance measures. It is also observed that the performance of ANN in forecasting the trend and periodicity component is exceptionally good compared to the performance of ARIMA. The presence of noise in the stochastic part of the time series, which was modelled by ARIMA, may be the reason for the slightly inferior performance of ARIMA. The Coefficient of Determination (R2) and Nash Sutcliffe efficiency (E) of the model in forecasting are in good agreement with values of 0.68 and 0.69. Mean Positive Error (MPE) and Mean Negative Error (MNE) are statistical measures proposed by Unnikrishnan and Jothiprakash (2018a) that can identify over prediction and under prediction by the model. A higher value of MPE indicates over prediction and a higher value of MNE indicates under prediction of values by the model. A slightly higher value of MNE in the present study indicates that the SSA-ARIMA-ANN model is slightly under predicting some of the rainfall values. This can be attributed to the presence of large number of zero values in the rainfall data which corresponds to the non-monsoon period. The %MP is the percentage error in predicting peak values by the model. The %MP of the SSA-ARIMA-ANN model in the present study is −22% which is acceptable as far as daily rainfall data is concerned.

To compare the performance of the developed SSA-ARIMA-ANN model, the performance of ANN model with same architecture, as used in the present study, without data pre-processing has been studied. In Unnikrishnan and Jothiprakash (2018a), the same rainfall data has been modelled using ANN of same architecture without data pre-processing. The results of the modelling performance of the ANN showed that, the model developed in the present study could perform better than the ANN model without pre-processing. It was also noticed that lag effect, which was present in the forecast by ANN model, was absent in the SSA-ARIMA-ANN model forecasts. The forecast performance measures of the ANN model without data pre-processing have been given in Table 1. It is observed that the ANN model without pre-processing has a percentage error in peak value of −70.3%, which shows that, the model under predicts the peak rainfall with a higher error. Also, a high value of MPE in the model shows that, the model is overpredicting rainfall. MNE is slightly lesser than that of SSA-ARIMA-ANN which shows that the SSA-ARIMA-ANN model is slightly under predicting. However, a lesser value of R2 value and E shows that the overall forecast performance of ANN model without data pre-processing is inferior to the SSA-ARIMA-ANN model.

Thus, it is beneficial to integrate SSA, ARIMA and ANN for hydrologic modelling which can yield reliable rainfall forecasts with reduced computational time and cost.

5 Conclusions

An integrated SSA-ARIMA-ANN model has been developed in the present study to forecast daily rainfall time series. In the present study, the daily rainfall pertaining to Koyna catchment in Maharashtra is used as a case study. A new modelling approach combining stochastic and data driven approach is proposed in the study. SSA is used as a data pre-processing tool, where it separated the stationary and non-stationary components in the rainfall data. In the SSA-ARIMA-ANN model, the non-stationary components were modelled using ANN and the stationary components were modelled using ARIMA. The performance of the SSA-ARIMA-ANN model was compared with that of ANN model without data pre-processing and it was observed that the SSA-ARIMA-ANN model performed better than the ANN model in forecasting the daily rainfall data. It was observed that the developed SSA-ARIMA-ANN model could perform even better with good performance measures in forecasting. There is also great potential in the model to be further refined to yield much better rainfall forecasts.

References

Afshar K, Bigdeli N (2011) Data analysis and short term load forecasting in Iran electricity market using singular spectral analysis (SSA). Energy 36:2620–2627. https://doi.org/10.1016/j.energy.2011.02.003

Alexandrov T (2009) A method of trend extraction using singular Spectrum analysis. Revstat-statistical J 7:1–22

ASCE Task Committee (2000a) Artificial neural networks in hydrology I : preliminary concepts. J Hydrol Eng 5:115–123

ASCE Task Committee (2000b) Artificial neural networks in hydrology II: hydrologic applications. J Hydrol Eng 5:124–137

Box GEP, Jenkins GM (1976) Time Series Analysis- Forecasting and Control, 2nd edn. Colarado State University, Holden day, San Francisco

Dawson CW, Wilby RL (2001) Hydrological Modelling using artificial neural networks. Prog Phys Geogr 25:80–108. https://doi.org/10.1177/030913330102500104

Elsner JB, Tsonis AA (1996) Singular Spectrum analysis - a new tool in time series analysis. Plenum press, New York

Golyandina N, Nekrutkin V, Zhigljavsky A (2001) Analysis of time series structure - SSA and related techniques. CRC press LLC

Kwiatkowski D, Phillips PCB, Schmidt P, Shin Y (1992) Testing the null hypothesis of stationarity against the alternative of a unit root. J Econom 54(1–3):159–178

Machiwal D, Jha MK (2012) Hydrologic time series analysis: theory and practice. Springer, Capital Publishing company

Ramírez MCV, Ferreira NJ, de Campos Velho HF (2006) Linear and Nonlinear Statistical Downscaling for Rainfall Forecasting Over Southeastern Brazil. Weather 21:969–989. https://doi.org/10.1175/WAF981.1

Rodrigues PC, de Carvalho M (2013) Spectral modeling of time series with missing data. Appl Math Model 37:4676–4684. https://doi.org/10.1016/j.apm.2012.09.040

Rodrıguez-Aragon L, Zhigljavsky A (2010) Singular spectrum analysis for image processing. Stat Interface 3:419–426

Shahin M, Van Oorschot HJ, De Lange S (1993) Statistical analysis in water resources engineering. A.A Balkema, Rotterdam

Solomatine DP, Ostfeld A (2008) Data driven Modelling: some past experiences and new approaches. J Hydroinf 10:3–22

Unnikrishnan P, Jothiprakash V (2015) Extraction of nonlinear rainfall trends using singular Spectrum analysis. J Hydrol Eng 20:05015007. https://doi.org/10.1061/(ASCE)HE.1943-5584.0001237

Unnikrishnan P, Jothiprakash V (2018a) Data-driven multi-time-step ahead daily rainfall forecasting using singular spectrum analysis-based data pre-processing. J Hydroinf 20:645–667. https://doi.org/10.2166/hydro.2017.029

Unnikrishnan P, Jothiprakash V (2018b) Daily rainfall forecasting for one year in a single run using singular Spectrum analysis. J Hydrol 561:609–621. https://doi.org/10.1016/j.jhydrol.2018.04.032

Wei WWS (2006) Time series analysis Univariate and multivariate methods, 2nd edn. Pearson Education, United states of America

Yajima Y (1985) Estimation of the degree of differencing of an ARIMA process. Ann Inst Stat Math 37

Zhang G, Eddy Patuwo B, Hu MY (1998) Forecasting With Artificial Neural Networks. Int J Forecast 14:35–62. https://doi.org/10.1016/S0169-2070(97)00044-7

Zhang Q, Wang B-D, He B et al (2011) Singular Spectrum analysis and ARIMA hybrid model for annual runoff forecasting. Water Resour Manag 25:2683–2703. https://doi.org/10.1007/s11269-011-9833-y

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Unnikrishnan, P., Jothiprakash, V. Hybrid SSA-ARIMA-ANN Model for Forecasting Daily Rainfall. Water Resour Manage 34, 3609–3623 (2020). https://doi.org/10.1007/s11269-020-02638-w

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11269-020-02638-w