Abstract

Nonprofit studies have increasingly used experiments to understand individuals’ charitable giving decisions. One significant gap between experimental settings and the real world is the way in which individuals obtain the income that they use for charitable donations. This study examined the relationship between individuals’ income sources and their charitable giving decisions. To do so, we conducted a laboratory experiment with 188 college students and asked them to donate both from windfall money and from money earned in a real task. The findings showed that participants donated a larger share of their funds to charities when those funds derived from windfall gains. Implications for conducting experiments and motivating donors are also discussed.

Introduction

Recently, public and nonprofit management studies have frequently used experiments to examine the relationship between organizations and individuals (Kim et al. 2017; Li and Van Ryzin 2017; Van Ryzin et al. 2017). A frequent question concerns whether the experimental findings have external validity, that is, whether they can be generalized. Although some studies have found no significant difference between behavior in experimental settings and in the real world (Benz and Meier 2008; Hainmueller et al. 2015; Levitt and List 2007), making experimental settings more comparable to the field and producing results that generalize better remains a methodological challenge for scholars.

Efforts such as changing stake sizes (Carpenter et al. 2005), selecting different subjects (Fehr and List 2004), and altering the choice sets (List 2007a) have been made to reduce the differences between experimental settings and the real world. However, the extent to which insights from experimental studies can be generalized when individuals use their own money remains unresolved (Carlsson et al. 2013; Smith 2010). Conducting field experiments is an expensive and less tightly controlled solution, and well-designed laboratory experiments can complement field experiments and observational empirical studies. For example, scholars usually use observational data to study the governance of common-pool resources such as fisheries, forests, and pastoral and water resources (for example, see Ostrom 1990). More recently, laboratory experiments have also been used to study the governance of common-pool resources (Janssen et al. 2010). The lower costs and tighter control of laboratory settings allow a degree of replicability that is more difficult to achieve in field settings (Falk and Heckman 2009). In addition, the use of student participants should not be a concern if the main purpose is to find “the best way to isolate the causal effect of interest” (Falk and Heckman 2009, p. 536). Therefore, instead of going to the field, an alternative way to enhance generalizability is to make the laboratory settings “closer” to the real world.

With respect to experimental studies of charitable giving, one large gap between laboratory settings and the field or the real world is the way in which individuals acquire the endowments or incomes they use for charitable donations. In laboratory experiments, participants usually receive windfall money before donating. In field experimental settings or the real world, people normally have to earn money before making donations to nonprofit organizations. Therefore, a methodological challenge is to design a more realistic laboratory setting in which the windfall effect, if any, can be identified and controlled.

Some studies have documented behavioral inconsistency attributable to different income sources; for example, participants gave more to charities when they received windfall money (Carlsson et al. 2013; Danková and Servátka 2015). Other studies have found no significant evidence of the windfall effect in public good experiments (Cherry et al. 2005; Clark 2002). Scharf (2014) further argued that giving windfall money might reduce donors’ happiness, which suggests that there might be no windfall effect. These mixed empirical results invite more studies of the role of windfall money in individual charitable giving decisions.

In this study, we replicated the previous studies by using a laboratory experiment in a Chinese context. We introduced a simple real task design that addressed the methodological challenge and provided a new empirical answer to the question: Do participants donate more to charities when they obtain windfall money? We also suggest new experimental designs that can be used in future studies and discuss the implications of our findings.

Literature Review

Studies of nonprofit management have begun to use more experiments recently (for a systematic literature review, see Li and Van Ryzin 2017). For example, Kim et al. (2017) showed the way in which different experiments, such as online survey experiments, laboratory experiments, and field experiments, can be used to examine nonprofits’ fundraising strategies and individuals’ charitable giving decisions. The advantages of experimental studies include the ease of identifying the effect of a certain treatment and the ability to explore the causal relationship between the treatment and the effect (List 2007b). Experimental studies, however, suffer because their results are difficult to generalize.

One significant gap between experimental settings and the real world is the inconsistency in the decisions people make in the two situations (List 2007b, 2008). For example, in the real world, very few people donate 20% of their income to charities. According to the IRS 2014 Statistics of Income, in the most recent tax year for which finalized data are available, people with an adjusted gross income (AGI) less than 25,000 dollars reported the highest average charitable deduction rate (CDR) of 12.3%, followed by those whose AGI was between 25,000 and 50,000 (CDR 6.8%), and those whose AGI exceeded 2,000,000 (CDR 5.6%), respectively (IRS n.d.). However, giving 20% of one’s income to a charity or another person is the average in experiments (Camerer 2003).

Levitt and List (2007) listed “scrutiny, context, stakes, selection of subjects, and restrictions on time horizons and choice sets” as factors that contribute to the behavioral inconsistency found between experiments and real-world scenarios. To reduce the differences between experimental settings and the real world, scholars have replicated the same experiment in different cultures (Henrich et al. 2001), used different stake sizes (Carpenter et al. 2005), recruited different subjects (Fehr and List 2004), altered the choice sets (List 2007a), and experimented in the field (Gneezy and List 2006; Mason 2016; Shang and Croson 2009; Soetevent 2011). As Levitt and List (2007) proposed, well-designed field experiments could bridge randomization and a more representative setting of the real world.

However, it is still unclear whether experimental results can be generalized when participants use their own money. There are several different but related issues about using one’s own money in experiments. First, unsurprisingly, participants tend to give more when they are using hypothetical dollars rather than real money. For example, survey experiments usually grant participants a number of hypothetical dollars to use for further distribution (Kim et al. 2017; Kim and Van Ryzin 2014). Laboratory and field experiments can solve this problem in part by providing participants with real money (Carlsson et al. 2013; Kim et al. 2017; Mason 2013). Several field experimental studies examined the effects of social information (Shang and Croson 2009), payment choice (Soetevent 2011), and image motivation (Mason 2016) on charitable giving decisions when individuals used their earned money. However, field experiments usually cost more and are less tightly controlled than laboratory experiments. Some scholars prefer laboratory experiments because laboratory results are similar to and correlated with field findings (Benz and Meier 2008; Carlsson et al. 2013). In addition, laboratory experiments are usually cheaper and can be more tightly controlled (Falk and Heckman 2009).

Second, even when real money is used in experiments, it remains unclear in what way windfall money influences the generalizability of the results. The windfall effect, in which people give more when they obtain windfalls, could be the result of different endowment sources: people in experiments usually are given windfall money before making giving decisions, while people in the real world usually donate money they have earned. It also may result from the fact that people use different mental accounts for windfall and earned money (Arkes et al. 1994). Some studies have argued that people who obtained windfall money gave more because they received money effortlessly (Konow 2010) and those who felt that earned money legitimized their incomes gave less because earned incomes evoked less generous behavior (Cherry et al. 2002). However, Scharf (2014) argued that giving earned money brought a greater level of happiness to donors, while giving “windfall money” reduced it. Therefore, there may be no windfall effect in charitable giving, as some studies have found no significant evidence of the windfall effect in public good experiments (Cherry et al. 2005; Clark 2002). Thus, the mixed results of studies of the windfall effect necessitate more experimental studies.

Third, while scholars have noted that individuals’ income levels play significant roles in their charitable giving decisions (Gazley and Dignam 2010; Peck and Guo 2015; Wang and Ashcraft 2014), they largely have ignored the role of income sources. Generally, individuals with higher incomes are more likely to give and, when they do, they tend to give more (Peck and Guo 2015). However, the income effect varies for different types of nonprofits. For example, Wang and Ashcraft (2014) found no significant relationship between income levels and money donated to associations. What is the effect of income sources on charitable giving decisions? One implication of the “easy come, easy go” effect is that individuals who earn incomes through stock markets or lotteries may give more to charities than those who earn salaries in their occupations (Carlsson et al. 2013). Introducing real tasks in experiments allowed us to test and compare the effects of two income sources, windfall and earned money, on charitable giving.

If researchers can create a laboratory experimental setting in which people have to earn income through real tasks before donating to charities, they can reduce the difference between laboratory experiments and the real world where people usually have to earn money before giving to charities. By introducing real tasks, which simulate the real-world scenarios, researchers can increase the ability to generalize the experimental results and, thus, partially address the methodological challenge of laboratory experiments. Some researchers have introduced real tasks in experiments that ask participants to “work” to earn money before making further decisions (Carlsson et al. 2013; Cherry et al. 2005; Kroll et al. 2007). For example, the real task that Carlsson et al. (2013) used in their experiments was having participants respond to a lengthy survey. However, the heterogeneity in the participants’ ability to answer the questions might have an endogenous influence on the amount of money earned and donated. Therefore, simple real tasks are preferred in experiments (e.g., Abeler et al. 2011).

Based on the review of related literature, we suggest that introducing simple real tasks that require participants to earn money before donating in laboratory experimental settings can not only address the methodological challenge of using windfall money but also provide new empirical evidence on the windfall effect.

Experimental Design

The experiments were conducted between September and November 2013 at Beijing Normal University (BNU), China, which has approximately 26,400 students. One hundred and eighty-eight participants were recruited from the BNU BBS platform, which other researchers use frequently to recruit student participants (e.g., Zhou et al. 2013). Of the participants, 74.25% were female, 46.11% were from low-income families, 57.06% were undergraduates, 32.93% were from urban areas, and 32.34% were members of the Chinese Communist Party. It should be noted that 72.90% of BNU students in 2013 were female (Li et al. 2013). The fact that BNU’s student body was not gender balanced in 2013 explains why the experiment included more female participants.

The experiment used a within-subject design and a dictator game that asked each participant, who served as the dictator, to give money to a charitable organization [for similar experimental settings of dictator games, see Carlsson et al. (2013) and Eckel and Grossman (1996)]. Each participant received 15 CNY (approximately 2.5 US dollars in 2013) for taking part in the experiment.

Each participant was assigned two tasks: Task 1, acquire 15 CNY and give an amount to a charitable organization, and Task 2, earn money through a real task and then give an amount to the charity chosen. To reduce the order effect, the order of Task 1 and Task 2 was randomized, such that participants performed either Task 1 first or Task 2 first. The real task involved participants counting the correct number of 0s in each of 15 rows within 90 s (Fig. 1). The number of 0s was generated randomly by Z-Tree, a software package used in developing and conducting experiments (Fischbacher 2007). Participants received 1.5 CNY for counting the correct number of 0s in a row. If a participant counted the number of 0s in all 15 rows correctly, he/she received a total of 22.5 (1.5 × 15) CNY. We used this simple counting task because it is easy to control and helps rule out other noise in the process.
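
The payment rule for the real task can be sketched in a few lines of Python. This is an illustrative sketch only, not the Z-Tree program used in the experiment; the function name `task_earnings` and the constant names are hypothetical labels for the values stated above.

```python
# Illustrative sketch; names are hypothetical and not taken from the Z-Tree program.
PAY_PER_ROW_CNY = 1.5  # payment for each correctly counted row
N_ROWS = 15            # number of rows shown in the counting task


def task_earnings(correct_rows: int) -> float:
    """Return earnings in CNY for a given number of correctly counted rows."""
    if not 0 <= correct_rows <= N_ROWS:
        raise ValueError("correct_rows must be between 0 and 15")
    return PAY_PER_ROW_CNY * correct_rows


print(task_earnings(15))  # maximum earnings: 1.5 * 15 = 22.5 CNY
```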

Usually, the dictator game requires participants to allocate money only to a particular nonprofit organization (Carlsson et al. 2013; Eckel and Grossman 1996). In this experiment, participants were asked to donate to one of five charities that were working on disaster relief and recovery after the 2013 Ya’an Earthquake in China, regardless of whether the money was from a windfall endowment or was earned. The choice of charities varied to enhance the generalizability of the experimental results by reducing the possibility that all of the participants had a particular attitude toward a charity chosen by the researchers. Such a possibility would otherwise distort the findings and thus weaken the external validity of the results. However, when allowed to choose one of five charities, participants might donate to different charities for different causes or make equal contributions to all charities to avoid the risks of investing in a single one (Null 2011). Therefore, the experiment specified that all donations would be used for the 2013 Ya’an Earthquake relief and recovery and asked each participant to choose only one. Before participants did so, they were given an introduction to all five charities, as well as information about the Ya’an Earthquake relief and recovery program. The five foundations represented various types of foundations in China (Ma et al. 2017), including two national public foundations (China Red Cross Foundation and China Fupin Foundation), two national private foundations (Nandu Foundation and Tencent Foundation), and one local public foundation (Shenzhen One Foundation: see Table 1 for a summary).

The information given about the Ya’an program read:

On April 20th, 2013, an earthquake in Ya’an resulted in 193 deaths, 25 missing, 12211 injured, and more than 10 billion CNY in economic loss. Today, Ya’an is no longer in the headlines. However, according to the Chinese Ministry of Civil Affairs, more than 20 billion CNY are needed to rebuild Ya’an. Ya’an needs your help. The donations you make in the experiment will be used to rebuild Ya’an through your chosen organization(s). Thank you very much for your support.

All the participants received the information above after they entered the BNU laboratory. They were then given the rules and context of the experiment. To reduce the degree of “money illusion,” participants were told that 1 CNY in the experiment equaled 1 CNY in reality. The experiment was conducted through the Z-Tree platform (Fischbacher 2007) after participants understood the above information fully.

Results

Donation rates were used to measure the levels of participants’ charitable giving. The absolute values of donations were not used, because they differed in the two tasks. In Task 1, participants were granted a windfall of 15 CNY before giving, while in Task 2, participants earned various amounts of money (maximum 22.5 CNY) based on their performance on the counting task. Giving rates, on the other hand, allowed a comparison of the results across tasks. The giving rates were calculated with the following formula:

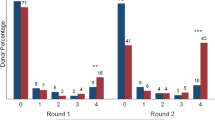
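
As a minimal sketch, assuming the giving rate is the amount donated divided by the money available in that task (the 15 CNY windfall in Task 1, or the earnings in Task 2), the calculation can be written as follows. The function name `giving_rate` is hypothetical, and the source does not say how participants with zero earnings were treated, so this sketch simply rejects that case.

```python
def giving_rate(donation_cny: float, available_cny: float) -> float:
    """Percentage of the available money that was donated.

    Assumption: rate = donation / available money * 100.
    """
    if available_cny <= 0:
        # The source does not describe this case; rejecting it is an assumption.
        raise ValueError("available_cny must be positive")
    if not 0 <= donation_cny <= available_cny:
        raise ValueError("donation must lie between 0 and the available money")
    return 100.0 * donation_cny / available_cny


# Hypothetical participant who donates 3 CNY in each task.
print(giving_rate(3.0, 15.0))  # Task 1, windfall of 15 CNY: 20.0
print(giving_rate(3.0, 22.5))  # Task 2, maximum earnings of 22.5 CNY
```

The same absolute donation yields a lower rate when the available money is larger, which is why rates rather than amounts are compared across tasks.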

Figure 2 shows the frequencies (left), cumulative distributions (middle), and means of charitable giving (right) for the two tasks. The left figure shows how many participants gave at different giving rates. The middle figure shows the cumulative distributions of giving rates of both tasks. The right figure shows a comparison of the mean giving rates between two tasks.

Participants who received windfall endowments gave significantly more to charities than did those who earned their money in the real task (counting numbers). On average, participants who received windfall money gave 24.61% of their money to their chosen charities, while participants who earned their money gave only 19.15% (the right figure). The differences in the average giving rates between the two tasks were significant (t = 2.0, p = 0.05). The Mann–Whitney–Wilcoxon (MWW) test also showed a significant difference in the cumulative distributions of the two tasks (W = 20462, p = 0.01). Cumulatively, participants who received windfall money donated significantly higher portions of their endowments to charities than did those who earned their money (the middle figure). An ANOVA and the OLS estimation produced similar results (Table 2). The results thus demonstrated the existence of the windfall effect (Table 2).
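
The two comparisons reported above, a difference in mean giving rates and a rank-based comparison of their distributions, can be illustrated with a short standard-library sketch. The source does not specify which variant of the t test was used, so the Welch form here is an assumption. The function names are hypothetical, and the toy giving rates are made up for illustration and are not the experimental data.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Two-sample t statistic allowing unequal variances (Welch form)."""
    standard_error = sqrt(variance(a) / len(a) + variance(b) / len(b))
    return (mean(a) - mean(b)) / standard_error


def mann_whitney_u(a, b):
    """U statistic for sample a: pairs with a > b count 1, ties count 0.5."""
    return sum(1.0 if x > y else 0.5 if x == y else 0.0 for x in a for y in b)


# Hypothetical giving rates in percent, NOT the experimental data.
windfall = [0.0, 20.0, 33.3, 50.0, 10.0]
earned = [0.0, 10.0, 20.0, 13.3, 5.0]

print(welch_t(windfall, earned))
print(mann_whitney_u(windfall, earned))  # 17.5 of a possible 25 pairs
```

This sketch computes only the test statistics; a statistics package would also supply the corresponding p values.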

Tobit models were used to check the robustness of the experimental results. Before participants took part in the experiment, they were asked to answer several sociodemographic questions, including gender, family income, whether or not they were a member of the Chinese Communist Party (CCP), and whether or not they were a graduate student. The results of the Tobit models showed that being female, coming from a higher-income family, being a CCP member, and being a graduate student were not associated significantly with giving rates in either task (Table 3). An additional multinomial model tested the sociodemographics against the dependent variable, the change in giving rates between the two tasks. The dependent variable was coded “more,” “the same,” or “less” according to whether a participant gave more, the same, or less with windfall money than with earned money. In the multinomial model, the category “the same” was used as the reference. The additional multinomial test reconfirmed that the sociodemographic characteristics above had no significant influence on giving rates (Table 3), which supports the robustness of the experimental results.
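
The coding of the dependent variable for the multinomial model can be sketched as follows. The function name `code_change` is hypothetical, and the exact-equality comparison is an assumption about how equal giving rates were identified.

```python
def code_change(windfall_rate: float, earned_rate: float) -> str:
    """Code the change in giving rate with windfall money relative to earned money."""
    if windfall_rate > earned_rate:
        return "more"
    if windfall_rate < earned_rate:
        return "less"
    return "the same"  # reference category in the multinomial model


# Hypothetical participants as (windfall rate, earned rate) pairs in percent.
for rates in [(30.0, 20.0), (10.0, 10.0), (5.0, 15.0)]:
    print(rates, code_change(*rates))
```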

Conclusion

This study used a simple real task laboratory experiment to test the effect of endowment heterogeneity on individuals’ charitable giving decisions. The experiment used a within-subject design with two tasks presented in randomized order: Task 1 asked participants to donate after receiving a windfall endowment, while Task 2 asked participants to donate after earning money through a real task. The experimental results showed that individuals gave significantly larger shares of their money to charities when they received windfall endowments. Several tests confirmed the robustness of the windfall effect.

Two implications can be drawn from the experimental results. First, a methodological implication is that future experimental studies of charitable giving must consider the windfall effect. Using real tasks such as counting numbers or completing survey questionnaires in laboratories can help reduce the difference between experimental settings and the real world. Simple real tasks are preferred, however, because they limit the influence of differences in participants’ abilities on the money earned and donated.

Second, one practical implication is that, to develop more effective fundraising strategies, charitable organizations must understand that not only income levels, but also income sources, are important in individuals’ giving decisions. Charitable organizations and public goods benefit considerably from windfall money, such as stock market gains (List and Peysakhovich 2011), lottery awards (Lange et al. 2007), and bequest giving (James III 2015; McGranahan 2000; Sargeant and Shang 2011). Thus, charities may wish to consider customizing fundraising strategies to nudge donors who are more likely to have windfall money. For example, charities can show advertisements on lottery tickets and in investor communication messages by working with financial companies’ corporate social responsibility (CSR) departments.

The study is not without limitations. Almost all experimental studies face the “scalability” challenge (Al-Ubaydli et al. 2017). First, the participants in the experiment were recruited only from BNU and were significantly gender-unbalanced. Although gender and other sociodemographic characteristics were not associated significantly with giving rates in either task (Table 3), the composition of the sample does limit the ability to generalize the findings. Second, the endowment size was relatively small (15 CNY for the windfall endowment and a maximum of 22.5 CNY for the real task). Changing the amount of endowment money, as Carpenter et al. (2005) suggested, might produce different results. Field experiments could address both limitations, but their administrative burden and high costs (Al-Ubaydli et al. 2017) may deter scholars from conducting them.

More studies, particularly experiments that alter the sample of participants and the size of endowments, are needed. Collaborations with actual organizations could be an alternative solution to the high costs of running field experiments (List 2007b). A less expensive alternative is to compare the laboratory experimental results with findings from other datasets. We plan to validate this study in the future by examining other data, such as those from the Research Infrastructure for Chinese Foundations (Ma et al. 2017) and the Chinese General Social Survey (CGSS n.d.), and then comparing the experimental results on the windfall effect with the findings obtained from those datasets.

References

Abeler, J., Falk, A., Goette, L., & Huffman, D. (2011). Reference points and effort provision. American Economic Review, 101(2), 470–492.

Al-Ubaydli, O., List, J. A., & Suskind, D. L. (2017). What can we learn from experiments? Understanding the threats to the scalability of experimental results. American Economic Review: Papers and Proceedings, 107(5), 282–286.

Arkes, H. R., Joyner, C. A., Pezzo, M. V., Nash, J. G., Siegel-Jacobs, K., & Stone, E. (1994). The psychology of windfall gains. Organizational Behavior and Human Decision Processes, 59(3), 331–347.

Benz, M., & Meier, S. (2008). Do people behave in experiments as in the field?—Evidence from donations. Experimental Economics, 11(3), 268–281.

Camerer, C. F. (2003). Behavioral game theory: Experiments in strategic interaction. Princeton, NJ: Princeton University Press.

Carlsson, F., He, H., & Martinsson, P. (2013). Easy come, easy go: The role of windfall money in lab and field experiments. Experimental Economics, 16(2), 190–207.

Carpenter, J., Verhoogen, E., & Burks, S. (2005). The effect of stakes in distribution experiments. Economics Letters, 86(3), 393–398.

CGSS. (n.d.). Chinese general social survey—Digital chronicle of Chinese social change. Retrieved July 17, 2017, from http://www.chinagss.org/.

Cherry, T. L., Frykblom, P., & Shogren, J. F. (2002). Hardnose the dictator. American Economic Review, 92(4), 1218–1221.

Cherry, T. L., Kroll, S., & Shogren, J. F. (2005). The impact of endowment heterogeneity and origin on public good contributions: Evidence from the lab. Journal of Economic Behavior & Organization, 57(3), 357–365.

Clark, J. (2002). House money effects in public goods experiments. Experimental Economics, 5(3), 223–232.

Danková, K., & Servátka, M. (2015). The house money effect and negative reciprocity. Journal of Economic Psychology, 48, 60–71.

Eckel, C. C., & Grossman, P. J. (1996). Altruism in anonymous dictator games. Games and Economic Behavior, 16(2), 181–191.

Falk, A., & Heckman, J. J. (2009). Lab experiments are a major source of knowledge in the social sciences. Science, 326(5952), 535–538.

Fehr, E., & List, J. A. (2004). The hidden costs and returns of incentives—Trust and trustworthiness among CEOs. Journal of the European Economic Association, 2(5), 743–771.

Fischbacher, U. (2007). Z-Tree: Zurich toolbox for ready-made economic experiments. Experimental Economics, 10(2), 171–178.

Gazley, B., & Dignam, M. (2010). The decision to give: What motivates individuals to support professional associations. Washington, DC: ASAE & the Center for Association Leadership.

Gneezy, U., & List, J. A. (2006). Putting behavioral economics to work: Testing for gift exchange in labor markets using field experiments. Econometrica, 74(5), 1365–1384.

Hainmueller, J., Hangartner, D., & Yamamoto, T. (2015). Validating vignette and conjoint survey experiments against real-world behavior. Proceedings of the National Academy of Sciences of the United States of America, 112(8), 2395–2400.

Henrich, J., Boyd, R., Bowles, S., Camerer, C., Fehr, E., Gintis, H., et al. (2001). In search of homo economicus: Behavioral experiments in 15 small-scale societies. American Economic Review, 91(2), 73–84.

IRS. (n.d.). SOI tax stats—individual statistical tables by size of adjusted gross income. Retrieved July 25, 2017, from https://www.irs.gov/uac/soi-tax-stats-individual-statistical-tables-by-size-of-adjusted-gross-income.

James, R., III. (2015). The family tribute in charitable bequest giving: An experimental test of the effect of reminders on giving intentions. Nonprofit Management and Leadership, 26(1), 73–89.

Janssen, M. A., Holahan, R., Lee, A., & Ostrom, E. (2010). Lab experiments for the study of social-ecological systems. Science, 328(5978), 613–617.

Kim, M., Mason, D. P., & Li, H. (2017). Experimental research for nonprofit management: Charitable giving and fundraising. In O. James, S. Jilke, & G. G. Van Ryzin (Eds.), Experiments in public management research: Challenges & contributions (pp. 415–436). Cambridge: Cambridge University Press.

Kim, M., & Van Ryzin, G. G. (2014). Impact of government funding on donations to arts organizations: A survey experiment. Nonprofit and Voluntary Sector Quarterly, 43(5), 910–925.

Konow, J. (2010). Mixed feelings: Theories of and evidence on giving. Journal of Public Economics, 94(3–4), 279–297.

Kroll, S., Cherry, T. L., & Shogren, J. F. (2007). The impact of endowment heterogeneity and origin on contributions in best-shot public good games. Experimental Economics, 10(4), 411–428.

Lange, A., List, J. A., & Price, M. K. (2007). Using lotteries to finance public goods: Theory and experimental evidence. International Economic Review, 48(3), 901–927.

Levitt, S. D., & List, J. A. (2007). What do laboratory experiments measuring social preferences reveal about the real world? Journal of Economic Perspectives, 21(2), 153–174.

Li, L., Tian, G., & Wang, Z. (2013). Universities have more female students in China? Retrieved July 8, 2017, from http://www.jyb.cn/high/gjsd/201310/t20131017_555945.html.

Li, H., & Van Ryzin, G. G. (2017). A systematic review of experimental studies in public management journals. In O. James, S. Jilke, & G. G. Van Ryzin (Eds.), Experiments in public management research: Challenges & contributions (pp. 20–36). Cambridge: Cambridge University Press.

List, J. A. (2007a). Field experiments: A bridge between lab and naturally occurring data. The B.E. Journal of Economic Analysis & Policy, 5(2), 1–47.

List, J. A. (2007b). On the interpretation of giving in dictator games. Journal of Political Economy, 115(3), 482–493.

List, J. A. (2008). Introduction to field experiments in economics with applications to the economics of charity. Experimental Economics, 11(3), 203–212.

List, J. A., & Peysakhovich, Y. (2011). Charitable donations are more responsive to stock market booms than busts. Economics Letters, 110(2), 166–169.

Ma, J., Wang, Q., Dong, C., & Li, H. (2017). The research infrastructure of Chinese foundations, a database for Chinese civil society studies. Scientific Data, 4, 170094. https://doi.org/10.1038/sdata.2017.94.

Mason, D. P. (2013). Putting charity to the test: A case for field experiments on giving time and money in the nonprofit sector. Nonprofit and Voluntary Sector Quarterly, 42(1), 193–202.

Mason, D. P. (2016). Recognition and cross-cultural communications as motivators for charitable giving: A field experiment. Nonprofit and Voluntary Sector Quarterly, 45(1), 192–204.

McGranahan, L. M. (2000). Charity and the bequest motive: Evidence from seventeenth-century wills. Journal of Political Economy, 108(6), 1270–1291.

Null, C. (2011). Warm glow, information, and inefficient charitable giving. Journal of Public Economics, 95(5–6), 455–465.

Ostrom, E. (1990). Governing the commons: The evolution of institutions for collective action. Cambridge: Cambridge University Press.

Peck, L. R., & Guo, C. (2015). How does public assistance use affect charitable activity? A tale of two methods. Nonprofit and Voluntary Sector Quarterly, 44(4), 665–685.

Sargeant, A., & Shang, J. (2011). Bequest giving: Revisiting donor motivation with dimensional qualitative research. Psychology and Marketing, 28(10), 980–997.

Scharf, K. (2014). Impure prosocial motivation in charity provision: Warm-glow charities and implications for public funding. Journal of Public Economics, 114, 50–57.

Shang, J., & Croson, R. (2009). A field experiment in charitable contribution: The impact of social information on the voluntary provision of public goods. The Economic Journal, 119(540), 1422–1439.

Smith, V. L. (2010). Theory and experiment: What are the questions? Journal of Economic Behavior & Organization, 73(1), 3–15.

Soetevent, A. R. (2011). Payment choice, image motivation and contributions to charity: Evidence from a field experiment. American Economic Journal: Economic Policy, 3(1), 180–205.

Van Ryzin, G., Riccucci, N., & Li, H. (2017). Representative bureaucracy and its symbolic effect on citizens: A conceptual replication. Public Management Review, 19(9), 1365–1379.

Wang, L., & Ashcraft, R. F. (2014). Organizational commitment and involvement: Explaining the decision to give to associations. Nonprofit and Voluntary Sector Quarterly, 43(s2), s61–s83.

Zhou, Y., Lian, H., Chen, Y., Zuo, C., & Ye, H. (2013). Social role, heterogeneous preferences and public goods provision. Economic Research, 1, 123–136.

Acknowledgements

We thank Rene Bekkers, Weigang Fu, Haoran He, Zhiwei Liu, Alex Ingrams, Gregg G. Van Ryzin, and Sanjay Pandey for helpful comments. We also thank Shuying Wang for help with the z-Tree programming. For all remaining errors, the authors may blame each other.

Funding

The authors acknowledged the financial support from the Shanghai Institute of Finance and Law.

Ethics declarations

Conflict of interest

The authors declared no potential conflicts of interests with respect to the research, authorship, and/or publication of this article.

Cite this article

Li, H., Liang, J., Xu, H. et al. Does Windfall Money Encourage Charitable Giving? An Experimental Study. Voluntas 30, 841–848 (2019). https://doi.org/10.1007/s11266-018-9985-y

DOI: https://doi.org/10.1007/s11266-018-9985-y