Abstract

The appearance of consciousness in the universe remains one of the major mysteries unsolved by science or philosophy. Absent an agreed-upon definition of consciousness, or even a convenient system in which to test theories of consciousness, a confusing heterogeneity of theories proliferates. To clarify this complicated discourse, we here interpret various frameworks for the scientific and philosophical study of consciousness through the lens of social insect evolutionary biology. To do so, we first discuss the notion of a forward test versus a reverse test, analogous to the normal and revolutionary phases of the scientific process. Contemporary theories of consciousness are forward tests for consciousness, in that they strive to become a means to classify the level of consciousness of arbitrary states and systems. Yet no such theory of consciousness has earned sufficient confidence to be used as a forward test in ambiguous settings. What is needed now is thus a legitimate reverse test for theories of consciousness, to provide internal and external calibration of different frameworks. A reverse test for consciousness would ideally be a method for referencing theories of consciousness to a tractable (and non-human) model system. We introduce the Ant Colony Test (ACT) as a rigorous reverse test for consciousness. We show that social insect colonies, though disaggregated collectives, fulfill many of the prerequisites for conscious awareness met by humans and honey bee workers. A long lineage of philosophically neutral neurobehavioral, evolutionary, and ecological studies on social insect colonies can thus be redeployed for the study of consciousness in general. We suggest that the ACT can provide insight into the nature of consciousness, and we highlight the ant colony as a model system for ethically performing clarifying experiments about consciousness.

1 Introduction: scientific study of consciousness

1.1 Rewards and challenges for scientific theories of consciousness

Human beings are conscious. Few non-philosophers find this statement contentious. Each healthy human being on this planet, presumably by virtue of their central nervous system (as opposed to, e.g., their teeth), has an ongoing subjective experience. Yet no scientific or philosophical consensus exists on what exactly consciousness is, or which physical systems are conscious (Van Gulick 2018). A successful theory of consciousness should provide unique explanations and testable predictions about phenomenal consciousness, and ideally would not suffer from unwarranted anthropocentric biases. Such a theory could be used to determine which systems possess what kind of awareness (here equivalent to phenomenological consciousness, as described below). Using such a theory to resolve the phenomenological status of various human states (e.g. dreaming, fetus, adult in coma), animals (e.g. dogs, cows, insects), and non-biological systems (e.g. computers, countries, ant colonies) would have a tremendous impact on practical ethics. However, no scientific theory of consciousness has risen to a level of sophistication, coherence, or utility that would allow it to be used in such a “test-like” fashion (Schwitzgebel 2018a; Van Gulick 2018). Further complicating the picture, contemporary theories of consciousness are heterogeneous in their axioms, structure, predictions, and implications. This currently makes comparisons between theories difficult, if not simply irrelevant.

No single definition of consciousness exists; we here discuss phenomenological awareness. Nuanced pluralistic frameworks exist for the study of observable biological phenomena such as animal behavior (Longino 2000, 2013; Mitchell 2002). However, the scientific study of consciousness is specifically hindered from reaching a state of coherent pluralism by a heterogeneity of definitions for “consciousness” (Morin 2006; Bayne et al. 2016; Fazekas and Overgaard 2016; Dehaene et al. 2017; Smith 2018; Van Gulick 2018). Despite the murkiness of the term itself, two types of “consciousness” can be differentiated: phenomenological consciousness and access consciousness (Block 1995a, b). Phenomenological consciousness refers to the experience of consciousness, consisting of the private and subjective experiences (qualia) that an entity might be having (Nagel 1974; Toadvine 2018). This sort of conscious awareness, at least sometimes, exists in adult humans (Nagel 1974; Searle 2002; Koch et al. 2016; Tononi et al. 2016; Goff 2017, but see Aaronson 2014; Dennett 2001). Access consciousness more mundanely refers to the ability of a stimulus to influence the future actions of the biological system (e.g. by eliciting a self-report or motor behavior), without making any claims about whether a subjective experience arises from the stimulus (Block 1995b, 2007). For example, subliminal stimuli enter access consciousness but not phenomenological consciousness, because we can be influenced by such stimuli without being aware of perceiving them. Here we focus on phenomenological consciousness rather than mere access consciousness, and consider whether diverse systems might genuinely have such awareness.

The “Hard Problem” makes things hard. The famously intractable “Hard Problem of consciousness” is a question about how consciousness arises from physical matter (Chalmers 2017), essentially asking which kinds of physical materials might be capable of hosting conscious awareness (e.g. neurons in a brain? silicon CPU in a computer? ants in a colony?). Even if we were to answer the “Hard Problem”, we would still be faced with the fundamental question of defining the spatial boundary of a conscious system (Fekete et al. 2016). For example, it is commonly said that consciousness arises from the brain, but clearly not all parts of the brain are required for consciousness (Feuillet et al. 2007). All biological systems are composed of multiple complex scales of organization (Noble 2013; Laubichler et al. 2015) and nested within larger biophysical systems (Gilbert et al. 2012). The theoretical difficulty that already exists in studying measurable biological phenotypes is exacerbated in the scientific study of consciousness, which frames consciousness as a system-level phenotype that can be studied in an evolutionary (Søvik and Perry 2016) and developmental framework. However, the only thing that researchers seem to agree on is that “consciousness” cannot be directly measured. In other words, a distinctively non-measurable trait of a biological system (level of consciousness) is being studied as if it were a measurable trait (e.g. wing length). In studying consciousness as a trait within its phylogenetic context, some work deploys a biological epistemological pluralism (Seth et al. 2006), akin to pluralistic frameworks for animal behavior (Kellert et al. 2006; Longino 2000, 2013; Tabery et al. 2014). However, as stated, studies of consciousness face the additional limitation that the relevant internal states of systems (i.e. the extent of conscious awareness) are hidden, unlike the externally visible motor outputs of behavior (Tinbergen 1963; Gordon 1992; Silvertown and Gordon 1989). Thus something other than epistemological or methodological pluralism is required to circumvent some of the fundamental issues facing the scientific study of consciousness.

It is an interesting and paradoxical time for the scientific study of consciousness in natural and designed systems. A sizeable fraction of scientists seem to ignore the fact that diametrically opposed viewpoints to their own are also tenable given exactly the same experimental results. Other scientists implicitly or explicitly believe that the collection of more data will resolve the neural basis of consciousness in the future, despite some evidence suggesting otherwise (Jonas and Kording 2017; Overgaard 2017). And yet other scientists may be simply unaware that logical and coherent arguments exist for consciousness in disaggregated entities such as the United States (Schwitzgebel 2015) and other “non-living” systems (Schwitzgebel 2018a). More experiments in human and animal brains will certainly be of biomedical and theoretical significance, but the irreconcilable heterogeneity of theories regarding “consciousness” may prevent any incontrovertible conclusions from being reached empirically by new experiments.

What seems to be needed is a novel and integrative approach to reconcile apparent axiomatic differences among scientific theories of consciousness. As social insect researchers, the authors noticed that social insect colonies are often invoked as exemplars of the kind of disaggregated system that cannot have consciousness (i.e. excluded a priori from consideration), yet are also referenced by lay persons as examples of higher-order “hive mind”-type consciousness. Both ends of this discourse (colonies are certainly not conscious; colonies are certainly conscious) are alien to the field of social insect behavior, yet commonly found in popular media (Decker 2013; Lasseter and Stanton 1998; Marvel 2015). Behavioral ecological research in the social insects addresses collective outcomes at the level of the colony through non-metaphysical means. Philosophically, such a stark bifurcation in the perception of ant colonies seems to require resolution, or clarification via empirical results.

At time scales perceptible to a human observer, a social insect colony exhibits behavior that is often described as “hive mind”-like, essentially implying a collectively conscious entity, in works of both science and science fiction (Wheeler 1911; Hofstadter 1981; Seeley and Levien 1987; O’Sullivan 2010; Wilson 2017). Yet the field of social insect behavior never appeals to metaphysical accounts of colony behavior, focusing instead on the neurological and algorithmic processes by which collective outcomes occur (Gordon 2014; Theraulaz 2014; Friedman and Gordon 2016; Feinerman and Korman 2017), as well as the psychological processes by which humans observe and define ant colony behavior (Gordon 1992). This stark contrast between the scientific realism of social insect ethological studies and less-grounded perspectives outside of the field motivates us to inject empirical information about social insect colony behavior back into the scientific discussion of consciousness. Our goal is not to support or deny the existence of consciousness in colonies; we remain agnostic on the issue here. Rather, we use the ant colony as a test to explore whether the current contenders for a scientific theory of consciousness provide us with consistent and useful information about the world.

1.2 Forward tests and reverse tests

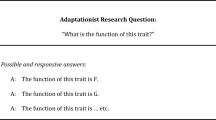

To help clarify the nature and utility of the Ant Colony Test for the scientific study of consciousness, we here discuss a dichotomy between “forward tests” and “reverse tests”. A “forward test” is the state of scientific operation that begins with a validated tool and goes into the world to explore a diversity of objects (Fig. 1a). This is normal science in the Kuhnian sense (Bird 2013; Matthes et al. 2017). A forward test is the usual (post-calibration) mode of operation for e.g. meter sticks or scales. In the normal operating mode, a forward test is used uncritically to accumulate new data within the previously established paradigm (measuring the height or weight of objects, for example). A forward test might be considered the first data-collecting step in performing scientific induction (generalizing theory from empirical observations). In contrast, a “reverse test” is when a diversity of objects is brought to bear on a test for the purpose of tool calibration or assessment of internal/external coherence (Fig. 1b). A reverse test might be considered part of the deductive process, since it begins with theories about the world (e.g. objects don’t change in height or mass between sequential weighings), and then compares these theory-driven assumptions to empirical observations. This is revolutionary science in the Kuhnian sense, when the tools and methodology of a paradigm are challenged in a manner that can retroactively change the interpretation of previous results.

a Forward tests and b reverse tests, shown here in the context of a scale that determines the weight of a block. c A forward test for consciousness would be akin to a “scale” for determining the amount or type of consciousness of an arbitrary system. In all images, gray objects are trusted or assumed to be known, while red objects have unknown reliability or aspects

Operation of a forward test can reveal the need for a reverse test. To give a low-level example: if a trusted scale is used for repeated weighings of the same object and gives different results, the scale is no longer trusted. Then the mode of operation transitions from forward testing (trusted scale, unknown object weight) to reverse testing (unknown reliability of scale, requires objects of known weight to calibrate). At a higher level, a forward test can be a theoretical criterion rather than a measure of some physical trait. The presence of anomaly and need for reverse testing at a lower level can percolate through organizational or conceptual hierarchies to induce a fractal paradigm shift (Sihn 1997).
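The scale example can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not a method from the literature: the names `is_consistent`, `calibrate` and `corrected_mass`, the standard-deviation tolerance, and the linear gain-plus-offset error model are all hypothetical choices. Repeated weighings that disagree mark the point where forward testing breaks down; objects of trusted mass are then used to recalibrate the scale (the reverse test), after which forward testing can resume.

```python
from statistics import mean, pstdev


def is_consistent(readings, tolerance):
    """Forward-test sanity check (hypothetical): repeated weighings of the
    same object should agree to within `tolerance` (population std. dev.)."""
    return pstdev(readings) <= tolerance


def calibrate(reference_masses, readings):
    """Reverse test: fit reading = gain * true_mass + offset by ordinary least
    squares, using objects whose masses are trusted (assumed linear error)."""
    mx, my = mean(reference_masses), mean(readings)
    sxx = sum((x - mx) ** 2 for x in reference_masses)
    sxy = sum((x - mx) * (y - my) for x, y in zip(reference_masses, readings))
    gain = sxy / sxx
    offset = my - gain * mx
    return gain, offset


def corrected_mass(reading, gain, offset):
    """Resume forward testing with the recalibrated scale."""
    return (reading - offset) / gain
```

For example, three weighings of one block reading 10.0, 10.4 and 9.5 fail a tolerance of 0.1, while reference blocks of 1, 2 and 5 units that read 1.6, 2.7 and 6.0 yield a gain of 1.1 and an offset of 0.5, so a later reading of 6.0 is corrected back to 5.0.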

A forward test for consciousness would look like a metric that could be used to determine the conscious state of an arbitrary system (Fig. 1c), such as the presence of language, or a brain, or self-reflexive behavior. Indeed, the holy grail for the scientific study of consciousness would be a robust forward test for consciousness in the universe. However, no such forward test exists, even one that could answer a simple binary “is this system conscious or not” (Schwitzgebel 2018a, b), let alone anything more nuanced (Moor 2012; Bayne et al. 2016; Van Gulick 2018).

1.3 The Ant Colony Test (ACT)

Here, we examine a range of theories of consciousness through the lens of what we have dubbed the “Ant Colony Test” (ACT), i.e. does the theory predict or allow for consciousness in ant colonies? The ACT is thus a reverse test for theories of consciousness. As a reverse test, the function of the ACT is to ascertain the internal and external coherence of forward test-like theories of consciousness, such as the notion that a brain, or language ability, or empathy, is necessary or sufficient for conscious awareness.

The ACT is not simply a thought experiment (Gedankenexperiment), though pure thought experiments have been tremendously influential in the study of conscious systems (the Turing test, Searle’s “Chinese Room”, the brain in a vat, etc.). Rather, with ant colonies we can perform experiments that are informative and tractable. Unlike brains, social insect colonies can be ethically manipulated (Gordon 1989), divided (Winston et al. 1990), or drugged (Friedman et al. 2018). Additionally, the tremendous evolutionary and ecological diversity of ant colonies (Ward 2014) provides researchers with natural experiments with which to explore various hypotheses.

2 The ACT and several frameworks for consciousness

Here we apply the Ant Colony Test (ACT) to various scientific frameworks for consciousness. As discussed above, contemporary theoretical frameworks for consciousness amount to “forward tests”, since each focuses on a specific suite of salient aspects of a system, such as neuronal connectivity, that are causal or diagnostic of system consciousness. We focus on four genres of forward tests for consciousness, coming from four distinct fields. First, we consider neurobiological frameworks for consciousness of two types: structural models that focus on micro- or macro-histology, and functionalist models that emphasize dynamical aspects of brain function. Second, within a behaviorist framework we consider the trait of egocentric spatial awareness specifically. Third, we consider cognitive frameworks for consciousness, such as models that emphasize the role of emotional states or cognitive biases. Lastly, we consider mathematical frameworks that controversially formalize “consciousness” as (proxied by) something quantifiable about a system. Two kinds of mathematical frameworks are presented, dealing either with decision-making and long-term planning, or with information-theoretic calculations. For each of these four kinds of frameworks we juxtapose the ACT against their forward tests, and evaluate what insights can be gleaned.

2.1 Neurobiological frameworks

Neuroscience. Diverse contemporary authors conclude that neuroscience either supports (Dawkins 2012; Fabbro et al. 2015; Barron and Klein 2016; Mashour 2018) or denies (Dennett 1996, 2017; Sapolsky 2017) the existence of consciousness in humans and other brainy organisms. While some neuroscientists (and an eager science media) herald the unstoppable power of basic brain research to unlock the secrets of consciousness (Koch 2014; Koch et al. 2016), other researchers consider this question to be nothing more than the last evaporating vestige of vitalism in biology. For example, Professor Robert Sapolsky memorably claimed that “If free will lurks in those interstices [of the brain yet unexplored by neuroscience], those crawl spaces are certainly shrinking” (Sapolsky 2004). Yet for those who constrain consciousness to occur only in brains, the “hard problem” falls squarely within the scope of neuroscience. In this case, the task is to identify the brain region (Crick and Koch 2005) or dynamic states (Calabrò et al. 2015; Tsuchiya et al. 2015; Mashour 2018) associated with consciousness, a challenging and unfinished project (Kurthen et al. 1998). We consider here forward tests for a neuroscientific basis of consciousness that rely on either structural or functional findings from neuroscience.

Structural neuroscience. Structural neuroscientific frameworks focus on the anatomical correlates of consciousness, such as brain regional anatomy at a gross scale (Crick and Koch 2005), or neural circuit microarchitecture at the histological scale (De Sousa 2013; Grossberg 2017; Key and Brown 2018; Lacalli 2018). The ACT has little to say about such neuroanatomical theories: since an ant colony is not literally a brain (despite salient similarities, Hofstadter 1981), there is no possibility of consciousness in an ant colony according to any neuroanatomical theory. Neuroanatomical theories are, unsurprisingly, neurochauvinist (Walter 2009) and thus a priori exclude other interesting systems from scientific consideration (Baluška and Levin 2016; Dehaene et al. 2017). The ACT suggests that theories of consciousness merely implicating human brain regions or aspects of cellular anatomy (such as anything related to the quantum brain, Hameroff 2012; Hameroff and Penrose 2014) will have limited carryover into other species, and even less carryover into systems with radically different architectures (e.g. computers, Turing 1950; or nations, Schwitzgebel 2015).

Functional neuroscience. Functionalist neuroscientific frameworks are worthy of more in-depth discussion in the context of the ACT. Functionalist frameworks abstract away from specific molecular details and brain regions to focus on the dynamical properties that might underlie conscious experience (Ross and Spurrett 2004; Shoemaker 1993), such as self-reference (Hofstadter 2007), recurrence (Barron and Klein 2016), and global binding patterns of neural activity (Crick and Koch 1990; Singer 1999; Uhlhaas et al. 2009). Dynamic brain imaging studies use technologies such as electroencephalography (EEG) or functional magnetic resonance imaging (fMRI) in humans to study the plausible neural correlates of consciousness (Calabrò et al. 2015; Koch et al. 2016; Tsuchiya et al. 2015). It is beyond the scope of this work to discuss the specific brain regions and statistical patterns that have been implicated in conscious awareness. We merely note that rigorous consideration of the available evidence by disciplinary experts does not appear to yield any unambiguous conclusions (Mashour 2018), or even to support the notion that brain imaging is the correct means to study consciousness in humans. What is important to note is that all brain imaging paradigms agree that the neural correlates of consciousness change through time and can be proxied by dynamic observables of brain function. In other words, these neuroscientific forward tests of consciousness assume that static synaptic connectivity patterns are insufficient to give rise to consciousness: a dead brain in a freezer has the same synaptic connections as a living brain, yet is unresponsive and probably unconscious. Apparently something more than mere synaptic connectivity, something dynamic, is required to generate even the illusion of consciousness (Dennett 2001; Mangan 1993).
The focus on neural dynamics arising from static connectivity patterns motivates the search for oscillatory processes occurring on the timescale at which people normally become consciously aware of events: on the order of hundreds of milliseconds, as opposed to nanoseconds or hours. A classic example is the emphasis on rapid gamma-band neural synchrony as a proxy for awareness (Doesburg et al. 2009). However, despite some correlations between neural binding patterns and aspects of consciousness in humans, it is not clear exactly how correlated firing rates of neurons might lead to phenomenological awareness (Chalmers 1996; LaRock 2006), which is a primary reason why functionalist neuroscientific theories of consciousness are mired in controversy over even the most basic questions in the field (Calabrò et al. 2015; Koch et al. 2016; Mashour 2018; Tsuchiya et al. 2015).

ACT meets functionalism. The ACT as a reverse test can clarify some of the assumptions and implications of functionalist neuroscientific theories of consciousness. There is a large literature on synchronized collective rhythms in social insect colonies, where collective rhythms occur over time scales ranging from seconds to annual seasons (Bochynek et al. 2017; Cole 1991; Gordon 1983; Hayashi et al. 2012; Phillips et al. 2017; Richardson et al. 2017). Like neural rhythms, these oscillations in colony collective behavior are an emergent outcome of interactions among system subunits (Hofstadter 1981). Indeed, the dynamics of transient ensembles of neurons and of task groups of ant workers show qualitative and quantitative similarities (Hofstadter 1981; Davidson et al. 2016). In both brains and ant colonies, higher-order outcomes are shaped by evolution to optimize the adaptive response to ecologically important stimuli (Constant et al. 2018; Gordon 2014; Sapolsky 2017). As noted above, a hardline anthropocentric interpretation of the brain imaging studies would conclude that only humans, or neurologically similar species like apes, are conscious, which has low generalizability, especially when considered from an evolutionary perspective (Mashour and Alkire 2013; Søvik and Perry 2016). Abstracting away from the exact neural circuitry or brain regions apparently involved in consciousness in humans is a move towards Functionalism, and highlights the role of information-integrating processes in generating human awareness (Bayne and Carter 2018). Should we take this Functionalist turn, then ant colonies do indeed appear to exhibit multi-scale oscillatory processes that could be considered similar evidence for colony consciousness.
In other words, if oscillations in the brain are (a proxy for) consciousness, then similar but slower processes in ant colonies could be considered the basis of (a proxy for) consciousness as well. Those who think that such multi-scale oscillations are not even proxies for consciousness in ant colonies must additionally explain why we can take similar neural oscillations to be causal of, or correlated with, human consciousness (Crick and Koch 1990; Doesburg et al. 2009; Schmidt et al. 2018).
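To make the substrate- and timescale-neutrality of such synchrony measures concrete, here is a minimal Python sketch. It assumes phase synchrony is summarized by the Kuramoto order parameter, r = |mean(exp(iθ))|, a standard synchrony index swapped in here for illustration; the studies cited above use a variety of measures. The function names, the 40 Hz (gamma-band) period, and the 20-minute colony activity cycle are hypothetical values chosen only to show that the index ignores both the substrate and the time scale.

```python
import cmath
import math


def event_phases(times, period):
    """Convert event times to phases (radians) within a cycle of `period`."""
    return [2 * math.pi * (t % period) / period for t in times]


def phase_synchrony(phases):
    """Kuramoto order parameter: 1 = perfectly aligned phases,
    close to 0 = phases spread evenly around the cycle."""
    return abs(sum(cmath.exp(1j * p) for p in phases) / len(phases))


# Hypothetical neural spikes on a 25 ms (40 Hz, gamma-band) cycle, in seconds
neuron_r = phase_synchrony(event_phases([0.001, 0.026, 0.051], period=0.025))

# Hypothetical bursts of ant activity on a 20-minute (1200 s) colony cycle
colony_r = phase_synchrony(event_phases([30.0, 1230.0, 2430.0], period=1200.0))

# Evenly spread phases give no synchrony, whatever the system
spread_r = phase_synchrony([0.0, 2 * math.pi / 3, 4 * math.pi / 3])
```

Both `neuron_r` and `colony_r` come out at (numerically) 1 and `spread_r` at about 0: the index reports the same degree of synchrony whether the units are neurons firing every few milliseconds or ants active every few minutes, which illustrates why a purely dynamical criterion cannot, on its own, separate the two cases.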

2.2 Spatial sense

Spatial awareness and organismal consciousness. In two articles, Barron and Klein (Barron and Klein 2016; Klein and Barron 2016) (henceforth B&K and K&B) set forth a framework for subjective experience in animals organized around the concept of a spatial sense. There they convincingly argue that an “integrated and egocentric representation of the world from the animal’s perspective is sufficient for subjective experience” (Barron and Klein 2016, p. 4900). The philosophical argument behind this is that if an organism has an internal model of how the world is supposed to behave in response to its motion, the discrepancy between the expected output of the model and the actual input from the organism’s sensory systems is sufficient for the organism to have a subjective experience of its own movement through the world. This basal system, with subjective experience built into it, has then been elaborated through evolution into more complex forms of subjective experience and lies at the center of animal sentience. B&K go on to show how the neurobiological systems in the mammalian brain (Barron and Klein 2016; Klein and Barron 2016) are organized to enable a spatial sense like the one described above. It is precisely the kinds of neural circuits involved in this ability that are believed to be necessary for consciousness in humans (although some disagree; Hill 2016; Key 2016; Mallatt and Feinberg 2016). They then carefully establish a model for spatial processing in insect brains that aligns with what is currently known about the mammalian system, and argue that this can be taken as evidence of subjective experience in insects as well (Barron and Klein 2016; Klein and Barron 2016).
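The core comparison in B&K's argument, between what an internal model of self-motion predicts and what the senses report, can be illustrated with a toy Python sketch. This is our own hypothetical illustration, not B&K's model: the 2-D position, the heading-and-step motor command, and the function names are all assumptions, and the sketch says nothing about whether such a mismatch signal is accompanied by experience.

```python
import math


def predict_position(position, heading_rad, step):
    """Hypothetical egocentric forward model: where the agent expects to be
    after moving `step` units along `heading_rad`."""
    x, y = position
    return (x + step * math.cos(heading_rad), y + step * math.sin(heading_rad))


def self_motion_discrepancy(position, heading_rad, step, sensed_position):
    """Distance between the model's expected position and the sensed one."""
    px, py = predict_position(position, heading_rad, step)
    sx, sy = sensed_position
    return math.hypot(sx - px, sy - py)


# Moving one unit east from the origin: the world behaves as expected
expected = self_motion_discrepancy((0.0, 0.0), 0.0, 1.0, sensed_position=(1.0, 0.0))

# Same command, but a hypothetical gust of wind displaces the agent northward
surprised = self_motion_discrepancy((0.0, 0.0), 0.0, 1.0, sensed_position=(1.0, 0.3))
```

A zero discrepancy means the model of self-motion fully accounts for the sensory input; a nonzero value (here 0.3 units) flags a change not caused by the agent's own movement. Nothing in the sketch depends on the computation being carried out by neurons.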

B&K never state specifically that their theory of animal experience is a forward test of consciousness, yet in both of their articles they implicitly use it as such (Barron and Klein 2016; Klein and Barron 2016), e.g. “One of the virtues of the account we have endorsed is that it also gives an evidence-based argument for where to draw the line between the haves and have-nots” (Barron and Klein 2016, p. 4905). In addition, since their publication, several other authors have extended their line of reasoning to argue for sentience in a wide range of other animals (Shanahan 2016; Søvik and Perry 2016). The arguments that B&K build roughly amount to a forward test for sentience in insects; that is the whole point of their argument (Barron and Klein 2016). But in the original paper they go on to speculate about where one might find consciousness beyond insects. They deny consciousness to cube jellyfish on the grounds that their nervous system is entirely decentralized, so there is nowhere for spatial information to be integrated (Barron and Klein 2016), and this argument is presented in much the same manner in the second article (Klein and Barron 2016). Further, nematodes are not considered sentient either. K&B acknowledge the centralized nervous system of nematodes, but argue that nothing in their behavior suggests any awareness of their surroundings: e.g. they do not hunt for known objects in their environment, but rather respond to immediate stimuli around them (though this is not strictly true; Hendricks 2015; Shtonda and Avery 2006; Sokolowski 2010; Stern et al. 2017).

In the second article, K&B revisit the nematode and take the argument slightly further: they acknowledge that many different types of information are integrated simultaneously in the nematode nervous system, but as there is no concept of space, no subjective experience can exist (Klein and Barron 2016). In both papers B&K open the door slightly for sentient crustaceans, based on the similarity of nervous systems between crustaceans and insects (not surprisingly, given that both are arthropods). Other authors have used B&K’s line of reasoning to argue for sentience in cephalopods and more basal invertebrates (Mather and Carere 2016; Søvik and Perry 2016). As with all theories of sentience, many disagree with the arguments made by B&K, but it is clear that the theory makes very specific predictions about what is required for sentience to exist and evolve, and as such we predict that many will use it as an attempt at a forward test of consciousness in the years to come.

What happens if we apply the ACT to the criteria of K&B, that is, apply the criteria of K&B to a colony of ants? This is a very different question from applying the criteria to an individual ant. Individual ants, like bees, do have a spatial sense, and as insects possess all the brain structures and circuitry pertinent to B&K’s argument (Fleischmann et al. 2018; Kim and Dickinson 2017; Wehner 2003). So we suspect that K&B would claim that an ant worker has a level of consciousness similar to that of a bee worker. To apply this theory at the colony level, we must ask whether or not the colony, as a whole, has a spatial sense. It is tempting to dismiss this out of hand as a nonsense question: how can a whole colony of anything have a sense of its environment? But to dismiss the question without further investigation would be akin to dismissing a priori the spatial sense of a human or a single ant. After all, brains are simply made up of a bunch of individual neurons, so how can the entire organism have a sense of anything either? This is where the reverse test properties of the ACT start to emerge. Does the substrate matter (e.g. neurons versus ants), or is the processing of information that allows the spatial sense to exist the important factor for the model? It is hard to see anything in what B&K write that would limit the described spatial sense to systems that process information with neurons. It just so happens that two independent systems (vertebrates and insects) have evolved the same neuronal solution to similar spatial navigation problems, though other systems might find non-neuronal solutions.

Does the ant colony display the kind of spatial sense described by B&K as required for consciousness? Tentatively, it is tempting to say yes. Colonies behave as if they have awareness of their surrounding area: they exploit spatial resources in an efficient manner (Gordon 1995; Portha et al. 2002; Adler and Gordon 2003; Flanagan et al. 2013; Robinson 2014). Does the colony suffer from the same problem as the jellyfish, that is, a lack of integration of information? Given that the regulation of colony resource exploitation is a collective process in which no ant necessarily “knows” the entire outcome, it would be hard to argue that information about space is not integrated at the colony level.

B&K present a further requirement that is more applicable to the ant colony: not only must information be integrated, but the integration must be centralized. How could information processing be centralized in a colony? Perhaps one could imagine that all the information would have to be integrated in one or a few ants (i.e. these central ants would somehow possess all the information vital for spatial processing, either individually or shared amongst them). How does this compare to the situation in the nervous system? Do we imagine that one or a small number of neurons is where spatial information is integrated? Are those few neurons then the “seat” of subjective experience, or is their mere presence in a nervous system sufficient for more remote neurons to take part in the sea of experience? One could imagine that the lack of centralization could be used to argue why (current) computers cannot be said to be conscious, even if behaviorally they appear to process spatial information in accordance with K&B. While information is integrated in a computer, this integration does not occur in any centralized way (i.e. all transistors are equally important, none has privileged information that others lack, and the information is fully distributed). Further discussion of informational integration from the perspective of Integrated Information Theory is in a later section.

Further supporting the idea that ant colonies possess spatial awareness, ant colonies are fooled by spatial illusions (Sakiyama and Gunji 2013, 2016), much as humans are fooled by visual illusions (Alexander 2017; Brancucci et al. 2011; Guterstam et al. 2015; Pereboom 2016). Some take the human neurological response to a sensory illusion as evidence that a change in conscious experience is occurring (Brancucci et al. 2011; Guterstam et al. 2015; Pereboom 2016; Alexander 2017). Should we then take the susceptibility of an ant colony to a spatial illusion as evidence of conscious experience at the colony level? Again, one might claim that an ant colony has no central integration of information, and hence no awareness of the illusion. However, only a small fraction of the brain’s neurons may be involved in processing the visual signals transduced by sensory cells, yet the activity of just these few affects the whole organism. It would not make sense to say that any skin cell or single neuron “fell for an illusion”. Similarly, it does not make sense to say that any single worker was fooled; the illusion occurred via a colony-level process. Thus we cannot explain away the colony’s susceptibility to illusion by reductionistically appealing to individual worker behavior. In both humans and ant colonies, being fooled arises as a system property (Baluška and Levin 2016). The spatially organizing activity of colonies arises from interactions among individuals, most of which might not be directly involved in the organizational task. For example, the work of a small fraction of nestmates (trash workers) can alter the distribution of colony waste, leading all other workers to alter their behavior through stigmergy (Gordon and Mehdiabadi 1999; Theraulaz and Bonabeau 1999; Theraulaz 2014).
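To make the stigmergy example concrete, the following is a minimal Python sketch, not a model from the studies cited above. It swaps in a Deneubourg-style pick-up/drop rule (a standard toy model of ant corpse clustering) on a one-dimensional ring of nest cells; the function names, the neighborhood-based density measure, and all parameter values (`k1`, `k2`, `capacity`, colony size) are hypothetical. A few “waste workers” each follow only local rules, yet the resulting waste layout is a colony-level pattern that no individual worker represents.

```python
import random


def pick_up_prob(f: float, k1: float = 0.1) -> float:
    """Chance of picking up an item; high where local density f is low (isolated items)."""
    return (k1 / (k1 + f)) ** 2


def drop_prob(f: float, k2: float = 0.3) -> float:
    """Chance of dropping a carried item; high where local density f is high (existing piles)."""
    return (f / (k2 + f)) ** 2


def simulate_waste_sorting(n_cells=60, n_items=120, n_workers=6,
                           steps=50_000, capacity=10, seed=1):
    """Hypothetical toy model: a few waste workers move items around a ring of nest cells.

    Returns (initial_cells, final_cells, items_still_carried).
    """
    rng = random.Random(seed)
    cells = [0] * n_cells
    for _ in range(n_items):                      # scatter waste at random
        cells[rng.randrange(n_cells)] += 1
    initial = cells.copy()
    pos = [rng.randrange(n_cells) for _ in range(n_workers)]
    carrying = [False] * n_workers

    def density(c: int) -> float:
        # Perceived density: items in this cell and its two neighbors (ring wraps), capped at 1
        local = cells[c - 1] + cells[c] + cells[(c + 1) % n_cells]
        return min(1.0, local / capacity)

    for _ in range(steps):
        w = rng.randrange(n_workers)              # one randomly chosen worker acts per step
        c = pos[w]
        f = density(c)
        if carrying[w]:
            if rng.random() < drop_prob(f):
                cells[c] += 1
                carrying[w] = False
        elif cells[c] > 0 and rng.random() < pick_up_prob(f):
            cells[c] -= 1
            carrying[w] = True
        pos[w] = (c + rng.choice((-1, 1))) % n_cells   # random walk to a neighboring cell
    return initial, cells, sum(carrying)


before, after, carried = simulate_waste_sorting()
print("occupied cells before:", sum(c > 0 for c in before))
print("occupied cells after: ", sum(c > 0 for c in after), "| items in transit:", carried)
```

The rule is set up so that items tend to leave sparse regions and accumulate where waste is already dense, so consolidation into fewer piles is the intended tendency; the exact counts depend on the seed and the hypothetical parameters, so the printout is illustrative only.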

Putting B&K through the ACT thus reveals one issue that must be clarified before their framework can serve as a more useful forward test: it is not clear what it means for information processing to be centralized, nor why centralization should be necessary. As far as we can tell, integrating information centrally does not alter the behavioral output in any way.

2.3 Cognitive frameworks

2.3.1 Emotional experience

It is perhaps no coincidence that when people paraphrase Thomas Nagel’s now famous statement, “An organism has conscious mental states if and only if there is something that it is like to be that organism—something that it is like for the organism to be itself” (Nagel 1974), they commonly replace “something that it is like” with “feels like something” to be a particular organism. That turn of phrase, which introduces the concept of “feeling”, is immediately understandable, though perhaps tautological: to assume that being a system “feels like” anything at all is already to presume that the system is having some sort of conscious, felt experience. Arguments for consciousness that appeal to emotion thus appear unable to resolve scientific questions about consciousness rigorously, since they are essentially preloaded by the thinker’s axioms to yield whatever conclusions are desired (consistent with the thinker’s value system). Despite this apparent logical weakness, many consider the ability to feel a key, if not the key, characteristic of consciousness (Damasio 2000; Panksepp 2004, 2011). From an animal welfare perspective, this trait has often been treated as the defining criterion for deciding what rights animals should be granted (Dennett 1995; Singer 1975). Like all phenomena related to sentience, emotions are not directly measurable. By definition, emotional qualia are private experiences of the subjects having them. This makes it difficult to assess the emotional state of individuals that cannot report what they are feeling, i.e. all beings except communicatively capable humans. To circumvent this limitation, the field of affective neuroscience has inferred feelings in non-human species by studying the neural correlates of human emotions, so-called affective states (Fabbro et al. 2015; Panksepp 2011).
This research paradigm has served as a useful wedge for proponents of animal welfare, culminating in the Cambridge Declaration on Consciousness (Allen and Trestman 2017; Low et al. 2012). The ability of animals to feel pain or suffer has since been recognized in the legislation of many countries (e.g. all member states of the European Union; European Commission 2016).

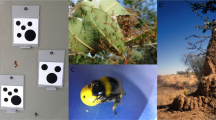

Cognitive bias as a forward test for the ability to feel

Because it is not always tractable to study the neural responses of animals directly, and because the nervous systems of some animals differ fundamentally in structure from those of humans, behavioral indicators for inferring affective states across systems have been developed, such as mirror tests (Newen and Vogeley 2003; Toda and Platt 2015) or paradigms involving gambling with ambiguous stimuli (Perry et al. 2016). The most commonly used indicator is so-called “cognitive bias”. In cognitive bias paradigms, animals are trained to associate one cue (or range of cues) with a reward and another cue (or range of cues) with something aversive. The animal is then given a treatment intended to affect its emotional state (e.g. something meant to make it anxious), before being presented with an ambiguous cue intermediate between the cues (or ranges of cues) previously associated with reward and punishment. A change in behavior, such as greater reluctance to approach the ambiguous cue following an anxiety-inducing treatment, is interpreted as evidence that an emotional state has biased the animal’s cognitive decision (Mendl et al. 2009). Cognitive bias tests thus allow researchers to infer both positive and negative emotional states in many kinds of animals. The paradigm has been used to infer emotional states in a wide range of vertebrates (Bethell 2015), negative emotions in honey bees (Perry et al. 2016) and crayfish (Fossat et al. 2014), and, more recently, positive emotions in bumble bees (Alem et al. 2016).
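The logic of a cognitive bias readout can be sketched in a few lines of Python. The normalization below, which places the response to the ambiguous cue on a scale from 0 (treated like the punished cue) to 1 (treated like the rewarded cue), is one common way of scoring such data and is used here as an assumption; the class and function names and the response rates in the usage example are hypothetical, not data from the studies cited.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CueResponses:
    """Proportion of trials on which subjects approached each cue (hypothetical numbers)."""
    positive: float   # cue previously paired with reward
    negative: float   # cue previously paired with something aversive
    ambiguous: float  # intermediate cue presented at test


def normalized_bias(r: CueResponses) -> float:
    """Where the ambiguous response falls between the trained anchors: 0 = like negative, 1 = like positive."""
    span = r.positive - r.negative
    if span <= 0:
        raise ValueError("the trained cues were not discriminated, so bias is undefined")
    return (r.ambiguous - r.negative) / span


def bias_shift(control: CueResponses, treated: CueResponses) -> float:
    """Treated minus control; a negative value reads as a 'pessimistic' shift after treatment."""
    return normalized_bias(treated) - normalized_bias(control)


control = CueResponses(positive=0.9, negative=0.1, ambiguous=0.5)
treated = CueResponses(positive=0.9, negative=0.1, ambiguous=0.3)  # e.g. after an anxiety-inducing treatment
print(f"bias shift: {bias_shift(control, treated):+.2f}")
```

With these made-up numbers the example prints a shift of -0.25. Scoring the normalized position rather than raw approach rates keeps a general drop in activity (fewer approaches to every cue) from being misread as a bias specific to the ambiguous cue.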

Some have argued that it makes no sense to infer emotions in invertebrates on the basis of cognitive bias (Giurfa 2013), on the grounds that simpler mechanisms, i.e. cognition without sentience, could explain the behavior. However, there seems to be no good argument for holding that cognitive bias experiments allow us to infer emotional states in mammals but not in other animals. So far, every study of this phenomenon in invertebrates has also included evidence that the same kinds of neurochemicals are involved in producing these states as in mammals (Barron et al. 2010).

Putting cognitive bias through the ACT

No one has yet explicitly tried cognitive bias experiments with a colony of ants, although in principle such experiments are entirely feasible. Colonies regulate their foraging based on external cues (Cooper et al. 1985; Núñez and Giurfa 1996) and modulate their behavioral responses to external perturbations (Gordon 1986; Gordon et al. 1992). One can therefore imagine a setup in which a colony learns to forage more in response to one cue and less in response to another. How would we interpret the result if, after some treatment, the colony displayed a bias in its response to an ambiguous cue? The treatment could be pharmacological, such as dopamine (Friedman et al. 2018), a dopamine antagonist (Perry et al. 2016), or an opiate (Entler et al. 2016), or ecological (such as an increase in humidity or ambient light), and would be intended to shift the emotional state of the colony in a positive or negative direction. One could object that in such a situation the experimenter is merely drugging individual ants, not the entire colony. If so, should we also consider caffeine to influence only individual neurons, rather than the human as a whole? When a human takes a drug that “alters their level of consciousness”, only a small fraction of their cells (e.g. neurons expressing a certain receptor type) might be directly affected. However, the behavior of the whole body might be altered by the change in function of those few cells (not unlike the case of visual illusions). By the same logic, if drugging a small fraction of workers alters the behavior of the colony, such a treatment would be as “consciousness-altering” as it is in humans. One can also imagine that shaking the whole colony would put it in an agitated or pessimistic state, causing it to forage less in response to an ambiguous cue, and to display short-term panic behaviors followed by medium- to long-term coping behavior.
It is tempting to conclude immediately that such a result would tell us nothing about the emotional state of the colony. But how is this criticism different from one levied against single honey bees? Rejecting cognitive bias at the colony level, perhaps by reductionistically locating causality solely in changes in individual worker brains, would be akin to reducing organismal learning to some subset of neural changes. One might claim that colonies perform cognition (computation) at an organismal level of complexity sufficient for consciousness, but that these behaviors are simply not accompanied by qualia. Perhaps Daniel Dennett would call such a system a “p-zombie colony”. How would we ever differentiate p-zombie colonies from qualia-laden, conscious colonies? In other words, whoever rejects conscious experience at the colony level despite the appearance of cognitive bias in experiments also loses the grounds for analogous cognitive bias-based claims about emotional experience in a range of species. In a paper on pessimistic honey bees, Bateson et al. state that “[i]t is logically inconsistent to claim that the presence of pessimistic cognitive biases should be taken as confirmation that dogs or rats are anxious but to deny the same conclusion in the case of honeybees” (Bateson et al. 2011). If a case for conscious honey bees can in fact be made (Barron and Klein 2016), what would prevent us from saying that it is equally inconsistent not to apply the same standard to an ant colony?
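Whether drugging a few workers could change the behavior of the colony as a whole can be explored with a toy response-threshold model, sketched below in Python under explicit assumptions: each worker forages when the cue plus a social term (proportional to the fraction of nestmates already foraging, standing in for encounter-based recruitment) exceeds its own threshold, and the hypothetical “drug” simply raises the thresholds of every tenth worker. The model, its names, and all parameter values are illustrations, not a model from the studies cited. The point it makes is narrow: with social coupling, untreated workers also forage less after a minority is treated.

```python
def colony_foraging(cue, thresholds, coupling):
    """Iterate to the colony's equilibrium: returns (fraction foraging, per-worker foraging states)."""
    n = len(thresholds)
    frac, active = 0.0, [False] * n
    # For coupling >= 0 the foraging fraction can only rise from zero, so this converges within n + 1 rounds
    for _ in range(n + 2):
        stimulus = cue + coupling * frac
        active = [stimulus > t for t in thresholds]
        new_frac = sum(active) / n
        if new_frac == frac:
            break
        frac = new_frac
    return frac, active


N = 1000
base = [(i + 0.5) / N for i in range(N)]      # evenly spread individual thresholds
treated_ids = set(range(0, N, 10))            # hypothetical: every 10th worker is drugged
drugged = [t + 0.5 if i in treated_ids else t for i, t in enumerate(base)]


def untreated_rate(active):
    """Fraction of the untreated workers that are foraging."""
    states = [a for i, a in enumerate(active) if i not in treated_ids]
    return sum(states) / len(states)


AMBIGUOUS_CUE, COUPLING = 0.5, 0.3
ctrl_frac, ctrl_active = colony_foraging(AMBIGUOUS_CUE, base, COUPLING)
drug_frac, drug_active = colony_foraging(AMBIGUOUS_CUE, drugged, COUPLING)
print(f"colony foraging:   control {ctrl_frac:.3f}  treated {drug_frac:.3f}")
print(f"untreated workers: control {untreated_rate(ctrl_active):.3f}  "
      f"treated {untreated_rate(drug_active):.3f}")
```

Setting the coupling to 0.0 turns the colony into a mere aggregate of independent workers; in that case the untreated workers’ behavior is unchanged by the treatment, which is the contrast the argument above relies on.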

2.4 Mathematical frameworks

The logic of the preceding behavioral and neurobiological sections rests on the fundamental assumption that biological creatures are the only plausibly conscious systems. There is a degree of bio- or neuro-chauvinism in the suggestion that only (certain) biological systems can exhibit self-awareness, yet such strong claims are rarely specified or directly supported in this research. Tethering theories of consciousness to biological systems implicitly carries the axiomatic burden of explaining why non-neural or non-biological systems cannot be conscious. Mathematical theories of consciousness tend to emphasize abstract informational and causal aspects of system function rather than biological idiosyncrasies such as cytoskeletal architecture or regional brain activity. This abstraction ideally yields a numerical or graded scale that quantifies the extent or type of consciousness in a system. The appeal of a numerical value that “measures” consciousness is undeniable to some and unthinkable to others. However, the benefits of such formal frameworks are clear in comparison to boutique theories that apply only to humans or neural systems. First, numerical accounts of consciousness may allow more objective and better-informed medical and ethical decision-making in humans, for example by quantitatively resolving questions about the experiential awareness of humans before birth or in a coma. Second, mathematical abstractions might provide a less biased metric of the extent of consciousness in other biological systems, so that consciousness could be studied in a truly phylogenetic framework (Mashour and Alkire 2013; Barron and Klein 2016; Søvik and Perry 2016). Third, they might allow meaningful quantification of the level of consciousness in unconventional systems such as “artificially intelligent” computers (Moor 2012) or countries (Schwitzgebel 2015).
Generally, these mathematical theories formalize some quantitative proxy of a system’s capacity for consciousness by appealing to the internal causal power, or the capacity for informational integration, of static or dynamical aspects of the system.

First, we consider Integrated Information Theory (IIT) as a forward test for consciousness, and then reflect on what the ACT, as a reverse test, reveals about IIT and probably about other theories of consciousness based on the topological (Bayne and Carter 2018) or geometric (Fekete et al. 2016) integration of information. IIT is a mathematical theory of consciousness to be commended for its intellectual boldness (Bayne et al. 2016; Tononi et al. 2016; Tononi and Koch 2015), though it is not without critics (Bayne 2018; Schwitzgebel 2018b). IIT approaches consciousness from the opposite direction to many other scientific theories. Rather than focusing on the commonalities of biological systems agreed to be conscious and then using inductive logic to explain how consciousness arises in them, IIT begins with five axioms about consciousness (basic statements taken as given) and then deduces which systems might have how much consciousness. The quantity of consciousness in a system is measured by a single number, denoted Φ, reflecting the system’s capacity to integrate information irreducibly. IIT predicts that systems with larger values of Φ are more conscious, though it should be added that Φ has not been calculated for any real biological system, so it is not clear what specific values would mean. This definition leads to some counterintuitive conclusions about which systems are conscious (Aaronson 2014), though this may be a general feature of all frameworks that attempt to quantify consciousness (Schwitzgebel 2018a). According to IIT, consciousness occurs at the maximum of Φ over all possible spatial and temporal partitionings of the system, a value denoted Φmax; the subsystem with the highest Φmax is the one at which consciousness occurs.
Information integration certainly occurs within the brain of an ant or bee worker, which is the basis for B&K’s claims of minimal self-awareness in individual workers (Barron and Klein 2016). However, genuinely emergent forms of cognition arise at the level of the colony (Marshall et al. 2009; Feinerman and Korman 2017), enabled by the patterns of information transfer among workers (Gordon 2010). The appearance of irreducible informational processes at the colony level seems to support a non-trivial increase in Φ in an integrated colony compared to an aggregate of separated workers, suggesting that Φmax occurs at the colony level. While it is too early to claim that IIT demonstrates consciousness in an ant colony, it does seem that the colony would have a higher Φmax than an aggregate of individual workers, and would thus represent a conscious entity.
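IIT’s Φ is defined over a system’s cause-effect structure and is intractable to compute for anything resembling a real colony, so the Python sketch below swaps in a much cruder, purely statistical stand-in: the minimum, over all bipartitions of a system, of the mutual information (in bits) between the two parts’ states. It is not Φ, it does not attempt IIT’s further maximization over subsystems and grains (Φmax), and the three toy joint distributions are hypothetical. It only illustrates the contrast drawn above: a system built from internally coupled but mutually isolated modules (an aggregate of separated workers) scores zero as a whole, because some cut separates it at no informational cost, whereas a system whose parts are all coupled does not.

```python
import itertools
import math
from collections import defaultdict


def mutual_information(joint, part_a):
    """I(A;B) in bits between the units in part_a and all remaining units."""
    def split(state):
        a = tuple(x for i, x in enumerate(state) if i in part_a)
        b = tuple(x for i, x in enumerate(state) if i not in part_a)
        return a, b

    p_a, p_b = defaultdict(float), defaultdict(float)
    for state, p in joint.items():
        a, b = split(state)
        p_a[a] += p
        p_b[b] += p
    mi = 0.0
    for state, p in joint.items():
        if p > 0:
            a, b = split(state)
            mi += p * math.log2(p / (p_a[a] * p_b[b]))
    return mi


def min_bipartition_mi(joint):
    """Information shared across the 'cheapest' cut of the system into two non-empty parts."""
    n = len(next(iter(joint)))
    cuts = (set(c) for k in range(1, n // 2 + 1)
            for c in itertools.combinations(range(n), k))
    return min(mutual_information(joint, part_a) for part_a in cuts)


# Hypothetical four-unit systems, each given as {joint state: probability}
independent = {s: 1 / 16 for s in itertools.product((0, 1), repeat=4)}
two_modules = {(a, a, b, b): 0.25 for a in (0, 1) for b in (0, 1)}  # two coupled pairs, no link between pairs
fully_coupled = {(0, 0, 0, 0): 0.5, (1, 1, 1, 1): 0.5}

for name, joint in [("independent", independent), ("two modules", two_modules),
                    ("fully coupled", fully_coupled)]:
    print(f"{name:>13}: {min_bipartition_mi(joint):.2f} bits")
```

The first two systems score 0 bits and the fully coupled one scores 1 bit, even though each module in the second system is internally integrated; only coupling across the would-be cut raises the whole-system value.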

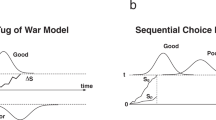

Second, we consider the Free Energy Principle (FEP) as a forward test for consciousness in dynamical systems, and then reflect on how the ACT serves as a reverse test for FEP. Specifically, we discuss FEP in the context of long-term planning as an attribute of conscious systems, an idea framed in less quantitative ways by other sources (Proverbs 6:6–8). Grounded in the dynamics of physical systems, FEP is a far-reaching framework that treats biological systems as dynamic multilevel processes that fundamentally perform statistical inference on themselves and their environment (Friston 2012, 2017a; Kirchhoff et al. 2018; Ramstead et al. 2017). Successful persistence of a system is equivalent to, and underlain by, the act of self-evidencing (Friston 2018). The persistence of any dissipative system amid the chaos and struggle of our entropic world directly implies an adequate generative model of the system’s ecological context (Constant et al. 2018). FEP has implications in many physical and biological domains, though here we are concerned mainly with what FEP says about conscious and self-aware systems. According to FEP, a system becomes increasingly sentient as its “temporal thickness” (its ability to perform successful inference over long time scales) increases (Friston 2017b, 2018; Friston et al. 2018). Ant colonies certainly appear to plan for the future amid uncertainty (Crompton 1954; Hölldobler and Wilson 1990; Hasegawa et al. 2016). Simply put, the ability of an ant colony to plan over the long term (e.g. months to years) amid stochastic environmental conditions is crucial for colony survival and fitness (Gordon 2013). This suggests that effective colony-level temporal models have been shaped by natural selection over millions of years across the more than 14,000 extant ant species, consistent with FEP’s framing of consciousness as a natural process of multilevel inference (Friston 2017b).
Because of the deep temporal colony-level models that allow long-term planning of ant colony behavior, FEP would appropriately consider the ant colony a true “self”.

According to recent contributions, self-awareness goes beyond mere temporal thickness in two important and related ways (Friston 2018; Friston et al. 2018). The first is the ability to generate and entertain counterfactual scenarios, and then to test these counterfactuals using active inference (Bruineberg et al. 2016). For example, while reading, you might imagine that this sentence could have been written differently (which makes you conscious according to FEP), whereas an automated text-scraping program would read the sentence without considering alternatives (and hence is not conscious). In an interview, Dr. Karl Friston notes that the ant colony performs long-term planning, but that it is not clear whether it entertains counterfactuals (Friston et al. 2018). Perhaps a colony-level counterfactual occurs when the dynamic interaction network of the colony (Gordon 2010) simulates a state in which the colony is hungrier than it actually is, e.g. to stimulate foraging (Johnson and Linksvayer 2010). The second prerequisite of FEP for conscious awareness, beyond long-term planning via counterfactuals, is an internal model that differentiates self-caused events from events beyond the system’s causal reach. For biological systems that engage with an environment rich in similar agents (e.g. humans interacting with humans, Friston 2018; or worms interacting with worms, Friston et al. 2018), entertaining long-term generative models of the environment entails separating the causal influence of oneself from that of other, similar selves. Ant colonies often interact with other nearby colonies of the same or different species (Adler et al. 2018).
Interactions among conspecific colonies are crucial for ecological success, since neighboring colonies compete directly, and colonies adjust their behavior over timescales of days to years in response to the presence of nearby colonies (Gordon 1995; Adler and Gordon 2003). Thus ant colonies do seem to have thick temporal models of their environment, probably including the influence of conspecific neighbors, together with an ability to distinguish causes under the colony’s control from causes beyond it. In summary, FEP might support the notion of a rudimentary self-awareness, or genuine self-consciousness, for ant colonies, given their ability to perform long-term planning, possibly to entertain counterfactuals, and to maintain a collective model of self versus non-self. However, few experiments have investigated counterfactuals or colony-level temporal models in the social insects, so more work is required.

3 Overview of the “Ant Colony Test” across disciplines

We have presented the ACT as a reverse test for theories of consciousness. The ACT has advantages over other thought experiments for theories of consciousness (Turing 1950; Moor 2012; Cole 2014; Fekete et al. 2016) in that a large body of empirical work on ant colonies already exists, and there are growing possibilities for further neurobehavioral experiments to test theories of consciousness (Alem et al. 2016; Perry et al. 2016). The ant colony is thus a useful model system for future philosophical and scientific work on consciousness. Colonies are embedded within nests that exist within ecosystems, just as brains are embedded within bodies that exist within ecosystems. Thus ant colonies, like human organisms, represent encultured extended phenotypes that perform extensive niche construction. Also like individual organisms, colonies together with their built environments are the unit of selection and the bearer of fitness (Wheeler 1911; Linksvayer 2015; Boomsma and Gawne 2017). From ecological and evolutionary perspectives, it therefore stands to reason that emergent self-reflexive dynamics might arise at the scale of the entire colony (beyond even that of the worker), for the same reason that such dynamics have been selected for in organisms such as humans.

Although neuro-centric frameworks for consciousness exclude ant colonies a priori, several other frameworks discussed above do predict that awareness might occur at the level of the colony. We are then left either to accept the claim that ant colonies are phenomenologically aware entities, or to acknowledge that current approaches to investigating consciousness scientifically are individually inadequate and collectively inconsistent. This unresolved bifurcation implies that, while current disciplinary approaches to the study of consciousness may provide fascinating insights into the physiology of the brain and the statistics of information processing by cognitive systems, they may be unable to answer fundamental questions about the nature of consciousness. We do not dispute the soundness of the scientific investigations conducted by most consciousness researchers, but we hold that measurable proxies of consciousness are limited or ambiguous at best, and misleading at worst.

We therefore invite the authors and proponents of theories that allow for conscious colonies either to (1) explain why their theory remains plausible if they reject the idea that colonies are genuinely conscious despite fulfilling the theory’s requirements, or (2) elaborate on the character of colony consciousness and explore how a disaggregated entity like a colony can give rise to a unified conscious experience.

4 Conclusion: go to the ant, thou philosopher

Humans have long been fascinated by the ineffable nature of their own conscious awareness, and have extended this questioning to ask whether other systems might have similar conscious experiences. Today, the interdisciplinary field of “consciousness studies” addresses these mysteries through a diversity of philosophical, cognitive, biological, and mathematical frameworks. Many of these frameworks fall into the category of what we call a “forward test”, in that they aim to provide a metric for assessing the level of consciousness of arbitrary human states or non-human systems. However, the lack of coherent theory in this area makes research progress chaotic at best and confusing at worst. What is needed now are reverse tests: questions, experiments, and perspectives that can group or differentiate the various frameworks for studying consciousness.

In service of the challenge of developing a scientific theory of consciousness, we took an empirical and pluralistic approach, assessing how diverse “theories of consciousness” might judge the status of awareness in an ant colony. As answers to the question of whether a colony is or is not conscious, the results were predictably unsatisfying, since resolving the actual phenomenological status of a colony was not our agenda. Instead, applying the ACT reveals that, even without definitively answering whether colonies are conscious, a rigorous reverse test for theories of consciousness might be useful to the field. This work is just the beginning of a more formal meta-analysis of consciousness and collective behavior across scales in the natural and designed worlds: the foraging trip of a thousand nestmates begins with a single pheromone trail.

References

Aaronson, S. (2014). Giulio Tononi and me: A phi-nal exchange. Shtetl-Optimized (blog). https://www.scottaaronson.com/blog/?p=1823. Accessed May 30, 2018.

Adler, F. R., & Gordon, D. M. (2003). Optimization, conflict, and nonoverlapping foraging ranges in ants. The American Naturalist, 162(5), 529–543.

Adler, F. R., Quinonez, S., Plowes, N., & Adams, E. S. (2018). Mechanistic models of conflict between ant colonies and their consequences for territory scaling. The American Naturalist, 192(2), 204–216.

Alem, S., Perry, C. J., Zhu, X., Loukola, O. J., Ingraham, T., Søvik, E., et al. (2016). Associative mechanisms allow for social learning and cultural transmission of string pulling in an insect. PLoS Biology, 14(10), e1002564.

Alexander, S. (2017). Why are transgender people immune to optical illusions? Slate Star Codex. http://slatestarcodex.com/2017/06/28/why-are-transgender-people-immune-to-optical-illusions/. Accessed October 5, 2018.

Allen, C., & Trestman, M. (2017). Animal consciousness. In E. N. Zalta (Ed.) The Stanford encyclopedia of philosophy. Metaphysics Research Lab, Stanford University. https://plato.stanford.edu/archives/win2017/entries/consciousness-animal/. Accessed January 2, 2019.

Animal welfare - Food Safety - European Commission. (2016). Food safety. https://ec.europa.eu/food/animals/welfare_en. Accessed October 23, 2018.

Baluška, F., & Levin, M. (2016). On having no head: Cognition throughout biological systems. Frontiers in Psychology, 7, 902.

Barron, A. B., & Klein, C. (2016). What insects can tell us about the origins of consciousness. Proceedings of the National Academy of Sciences of the United States of America, 113(18), 4900–4908.

Barron, A. B., Søvik, E., & Cornish, J. L. (2010). The roles of dopamine and related compounds in reward-seeking behavior across animal phyla. Frontiers in Behavioral Neuroscience, 4, 163.

Bateson, M., Desire, S., Gartside, S. E., & Wright, G. A. (2011). Agitated honeybees exhibit pessimistic cognitive biases. Current Biology, 21(12), 1070–1073.

Bayne, T. (2018). On the axiomatic foundations of the integrated information theory of consciousness. Neuroscience of Consciousness, 2018(1), niy007.

Bayne, T., & Carter, O. (2018). Dimensions of consciousness and the psychedelic state. Neuroscience of Consciousness, 2018(1), niy008.

Bayne, T., Hohwy, J., & Owen, A. M. (2016). Are there levels of consciousness? Trends in Cognitive Sciences, 20(6), 405–413.

Bethell, E. J. (2015). A “how-to” guide for designing judgment bias studies to assess captive animal welfare. Journal of Applied Animal Welfare Science, 18(sup1), S18–S42.

Bird, A. (2013). Thomas Kuhn. In E. N. Zalta (Ed.) The Stanford encyclopedia of philosophy. Metaphysics Research Lab, Stanford University. https://plato.stanford.edu/archives/fall2013/entries/thomas-kuhn/. Accessed January 2, 2019.

Block, N. (1995a). On a confusion about a function of consciousness. The Behavioral and Brain Sciences, 18(2), 227–247.

Block, N. (1995b). How many concepts of consciousness? The Behavioral and Brain Sciences, 18(2), 272–287.

Block, N. (2007). Consciousness, accessibility, and the mesh between psychology and neuroscience. The Behavioral and Brain Sciences, 30(5–6), 481–499.

Bochynek, T., Tanner, J. L., Meyer, B., & Burd, M. (2017). Parallel foraging cycles for different resources in leaf-cutting ants: A clue to the mechanisms of rhythmic activity. Ecological Entomology, 42(6), 849–852.

Boomsma, J. J., & Gawne, R. (2017). Superorganismality and caste differentiation as points of no return: How the major evolutionary transitions were lost in translation. Biological Reviews of the Cambridge Philosophical Society. https://doi.org/10.1111/brv.12330.

Brancucci, A., Franciotti, R., D’Anselmo, A., Della Penna, S., & Tommasi, L. (2011). The sound of consciousness: Neural underpinnings of auditory perception. The Journal of Neuroscience, 31(46), 16611–16618.

Bruineberg, J., Kiverstein, J., & Rietveld, E. (2016). The anticipating brain is not a scientist: The free-energy principle from an ecological-enactive perspective. Synthese, 195(6), 2417–2444.

Calabrò, R. S., Cacciola, A., Bramanti, P., & Milardi, D. (2015). Neural correlates of consciousness: What we know and what we have to learn! Neurological Sciences, 36(4), 505–513.

Chalmers, D. (1996). On the search for the neural correlate of consciousness. Cogprints. http://cogprints.org/227/. Accessed October 4, 2018.

Chalmers, D. (2017). The hard problem of consciousness. In S. Schneider & M. Velmans (Eds.), The Blackwell companion to consciousness (Vol. 4, pp. 32–42). Chichester, UK: Wiley.

Cole, B. J. (1991). Short-term activity cycles in ants: Generation of periodicity by worker interaction. The American Naturalist, 137(2), 244–259.

Cole, D. (2014). The Chinese room argument. Stanford encyclopedia of philosophy. https://stanford.library.sydney.edu.au/entries/chinese-room/. Accessed October 8, 2018.

Constant, A., Ramstead, M. J. D., Veissière, S. P. L., Campbell, J. O., & Friston, K. J. (2018). A variational approach to niche construction. Journal of the Royal Society Interface, 15(141), 20170685.

Cooper, P. D., Schaffer, W. M., & Buchmann, S. L. (1985). Temperature regulation of honey bees (Apis mellifera) foraging in the Sonoran desert. The Journal of Experimental Biology, 114(1), 1–15.

Crick, F., & Koch, C. (1990). Towards a neurobiological theory of consciousness. Seminars in the Neurosciences, 2, 263–275.

Crick, F. C., & Koch, C. (2005). What is the function of the claustrum? Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences, 360(1458), 1271–1279.

Crompton, J. (1954). Ways of the ant. Boston: Houghton Mifflin.

Damasio, A. (2000). The feeling of what happens: Body and emotion in the making of consciousness (1st ed.). Wilmington: Mariner Books.

Davidson, J. D., Arauco-Aliaga, R. P., Crow, S., Gordon, D. M., & Goldman, M. S. (2016). Effect of interactions between harvester ants on forager decisions. Frontiers in Ecology and Evolution, 4, 115.

Dawkins, M. S. (2012). Why animals matter: Animal consciousness, animal welfare, and human well-being (1st ed.). Oxford: Oxford University Press.

De Sousa, A. (2013). Towards an integrative theory of consciousness: Part 1 (neurobiological and cognitive models). Mens Sana Monographs, 11(1), 100–150.

Decker, K. S. (2013). Ender’s Game and philosophy: The logic gate is down. Hoboken: Wiley.

Dehaene, S., Lau, H., & Kouider, S. (2017). What is consciousness, and could machines have it? Science, 358(6362), 486–492.

Dennett, D. (2001). Are we explaining consciousness yet? Cognition, 79(1–2), 221–237.

Dennett, D. C. (1995). Animal consciousness: What matters and why. Social Research, 62(3), 691–710.

Dennett, D. C. (1996). Darwin’s dangerous idea: Evolution and the meanings of life. New York City: Simon and Schuster.

Dennett, D. C. (2017). From bacteria to Bach and back: The evolution of minds. New York City: W.W. Norton.

Doesburg, S. M., Green, J. J., McDonald, J. J., & Ward, L. M. (2009). Rhythms of consciousness: Binocular rivalry reveals large-scale oscillatory network dynamics mediating visual perception. PLoS ONE, 4(7), e6142.

Entler, B. V., Cannon, J. T., & Seid, M. A. (2016). Morphine addiction in ants: A new model for self-administration and neurochemical analysis. The Journal of Experimental Biology, 219(Pt 18), 2865–2869.

Fabbro, F., Aglioti, S. M., Bergamasco, M., Clarici, A., & Panksepp, J. (2015). Evolutionary aspects of self- and world consciousness in vertebrates. Frontiers in Human Neuroscience, 9, 157.

Fazekas, P., & Overgaard, M. (2016). Multidimensional models of degrees and levels of consciousness. Trends in Cognitive Sciences, 20(10), 715–716.

Feinerman, O., & Korman, A. (2017). Individual versus collective cognition in social insects. The Journal of Experimental Biology, 220(Pt 1), 73–82.

Fekete, T., van Leeuwen, C., & Edelman, S. (2016). System, subsystem, hive: Boundary problems in computational theories of consciousness. Frontiers in Psychology, 7, 1041.

Feuillet, L., Dufour, H., & Pelletier, J. (2007). Brain of a white-collar worker. The Lancet, 370(9583), 262.

Flanagan, T. P., Pinter-Wollman, N. M., Moses, M. E., & Gordon, D. M. (2013). Fast and flexible: Argentine ants recruit from nearby trails. PLoS ONE, 8(8), e70888.

Fleischmann, P. N., Rössler, W., & Wehner, R. (2018). Early foraging life: Spatial and temporal aspects of landmark learning in the ant Cataglyphis noda. Journal of Comparative Physiology. A, Neuroethology, Sensory, Neural, and Behavioral Physiology. https://doi.org/10.1007/s00359-018-1260-6.

Fossat, P., Bacqué-Cazenave, J., De Deurwaerdère, P., Delbecque, J.-P., & Cattaert, D. (2014). Anxiety-like behavior in crayfish is controlled by serotonin. Science, 344(6189), 1293–1297.

Friedman, D. A., & Gordon, D. M. (2016). Ant genetics: Reproductive physiology, worker morphology, and behavior. Annual Review of Neuroscience, 39(1), 41–56.

Friedman, D. A., Pilko, A., Skowronska-Krawczyk, D., Krasinska, K., Parker, J. W., Hirsh, J., et al. (2018). The role of dopamine in the collective regulation of foraging in harvester ants. iScience. https://doi.org/10.1016/j.isci.2018.09.001.

Friston, K. (2012). The history of the future of the Bayesian brain. NeuroImage, 62(2), 1230–1233.

Friston, K. (2017a). Sentient dynamics: The kiss of chaos through the Markov blanket. Chaos and Complexity Letters, 11(1), 117–140.

Friston, K. (2017b). Consciousness is not a thing, but a process of inference. Aeon. https://aeon.co/essays/consciousness-is-not-a-thing-but-a-process-of-inference. Accessed October 19, 2017.

Friston, K. (2018). Am I self-conscious? (or does self-organization entail self-consciousness?). Frontiers in Psychology, 9, 579.

Friston, K., Martin, F., & Friedman, D. A. (2018). Of woodlice and men. ALIUS Bulletin, 2, 17–43.

Gilbert, S. F., Sapp, J., & Tauber, A. I. (2012). A symbiotic view of life: We have never been individuals. The Quarterly Review of Biology, 87(4), 325–341.

Giurfa, M. (2013). Cognition with few neurons: Higher-order learning in insects. Trends in Neurosciences, 36(5), 285–294.

Goff, P. (2017). Consciousness and fundamental reality. New York: Oxford University Press.

Gordon, D. M. (1983). The relation of recruitment rate to activity rhythms in the harvester ant, Pogonomyrmex barbatus (F. Smith) (Hymenoptera: Formicidae). Journal of the Kansas Entomological Society, 56(3), 277–285.

Gordon, D. M. (1986). The dynamics of the daily round of the harvester ant colony (Pogonomyrmex barbatus). Animal Behaviour, 34(5), 1402–1419.

Gordon, D. M. (1989). Dynamics of task switching in harvester ants. Animal Behaviour, 38(2), 194–204.

Gordon, D. M. (1992). Wittgenstein and ant-watching. Biology & Philosophy, 7(1), 13–25.

Gordon, D. M. (1995). The development of an ant colony’s foraging range. Animal Behaviour, 49(3), 649–659.

Gordon, D. M. (2010). Ant encounters: Interaction networks and colony behavior. Princeton: Princeton University Press.

Gordon, D. M. (2013). The rewards of restraint in the collective regulation of foraging by harvester ant colonies. Nature, 498(7452), 91–93.

Gordon, D. M. (2014). The ecology of collective behavior. PLoS Biology, 12(3), e1001805.

Gordon, D. M., Goodwin, B. C., & Trainor, L. E. H. (1992). A parallel distributed model of the behaviour of ant colonies. Journal of Theoretical Biology, 156(3), 293–307.

Gordon, D. M., & Mehdiabadi, N. J. (1999). Encounter rate and task allocation in harvester ants. Behavioral Ecology and Sociobiology, 45(5), 370–377.

Grossberg, S. (2017). Towards solving the hard problem of consciousness: The varieties of brain resonances and the conscious experiences that they support. Neural Networks: The Official Journal of the International Neural Network Society, 87, 38–95.

Guterstam, A., Björnsdotter, M., Gentile, G., & Ehrsson, H. H. (2015). Posterior cingulate cortex integrates the senses of self-location and body ownership. Current Biology: CB, 25(11), 1416–1425.

Hameroff, S. (2012). How quantum brain biology can rescue conscious free will. Frontiers in Integrative Neuroscience, 6, 93.

Hameroff, S., & Penrose, R. (2014). Consciousness in the universe: A review of the “Orch OR” theory. Physics of Life Reviews, 11(1), 39–78.

Hasegawa, E., Ishii, Y., Tada, K., Kobayashi, K., & Yoshimura, J. (2016). Lazy workers are necessary for long-term sustainability in insect societies. Scientific Reports, 6, 20846.

Hayashi, Y., Yuki, M., Sugawara, K., Kikuchi, T., & Tsuji, K. (2012). Rhythmic behavior of social insects from single to multibody. Robotics and Autonomous Systems, 60(5), 714–721.

Hendricks, M. (2015). Neuroecology: Tuning foraging strategies to environmental variability. Current Biology: CB, 25(12), R498–R500.

Hill, C. S. (2016). Insects: Still looking like zombies. Animal Sentience: An Interdisciplinary Journal on Animal Feeling, 1(9), 20.

Hofstadter, D. R. (1981). Prelude… ant fugue. In D. R. Hofstadter & D. C. Dennett (Eds.), The mind's I: Fantasies and reflections on self and soul (p. 149). New York: Basic Books.

Hofstadter, D. R. (2007). I am a strange loop. New York: Basic Books.

Hölldobler, B., & Wilson, E. O. (1990). The ants. Cambridge: Harvard University Press.

Johnson, B. R., & Linksvayer, T. A. (2010). Deconstructing the superorganism: Social physiology, groundplans, and sociogenomics. The Quarterly Review of Biology, 85(1), 57–79.

Jonas, E., & Kording, K. P. (2017). Could a neuroscientist understand a microprocessor? PLoS Computational Biology, 13(1), e1005268.

Kellert, S. H., Longino, H. E., & Waters, C. K. (Eds.). (2006). Scientific pluralism. Minneapolis: University of Minnesota Press.

Key, B. (2016). Why fish do not feel pain. Animal Sentience, 1(3), 1.

Key, B., & Brown, D. (2018). Designing brains for pain: Human to mollusc. Frontiers in Physiology, 9, 1027.

Kim, I. S., & Dickinson, M. H. (2017). Idiothetic path integration in the fruit fly Drosophila melanogaster. Current Biology: CB, 27(15), 2227–2238.e3.

Kirchhoff, M., Parr, T., Palacios, E., Friston, K., & Kiverstein, J. (2018). The Markov blankets of life: Autonomy, active inference and the free energy principle. Journal of the Royal Society, Interface/the Royal Society, 15(138), 20170792.

Klein, C., & Barron, A. B. (2016). Insect consciousness: Commitments, conflicts and consequences. Animal Sentience: An Interdisciplinary Journal on Animal Feeling, 1(9), 21.

Koch, C. (2014). Neuronal “superhub” might generate consciousness. Scientific American Mind. https://doi.org/10.1038/scientificamericanmind1114-24.

Koch, C., Massimini, M., Boly, M., & Tononi, G. (2016). Neural correlates of consciousness: Progress and problems. Nature Reviews Neuroscience, 17(5), 307–321.

Kurthen, M., Grunwald, T., & Elger, C. E. (1998). Will there be a neuroscientific theory of consciousness? Trends in Cognitive Sciences, 2(6), 229–234.

Lacalli, T. (2018). Amphioxus neurocircuits, enhanced arousal, and the origin of vertebrate consciousness. Consciousness and Cognition. https://doi.org/10.1016/j.concog.2018.03.006.

LaRock, E. (2006). Why neural synchrony fails to explain the unity of visual consciousness. Behavior and Philosophy, 34, 39–58.

Lasseter, J., & Stanton, A. (1998). A bug’s life. USA. https://www.imdb.com/title/tt0120623/. Accessed January 2, 2019.

Laubichler, M. D., Stadler, P. F., Prohaska, S. J., & Nowick, K. (2015). The relativity of biological function. Theory in Biosciences = Theorie in den Biowissenschaften, 134(3–4), 143–147.

Linksvayer, T. A. (2015). The molecular and evolutionary genetic implications of being truly social for the social insects. In A. Zayed & C. F. Kent (Eds.), Advances in insect physiology (Vol. 48, pp. 271–292). Cambridge: Academic Press.

Longino, H. E. (2000). Toward an epistemology for biological pluralism. In R. Creath & J. Maienschein (Eds.), Biology and epistemology (pp. 261–286). Cambridge: Cambridge University Press.

Longino, H. E. (2013). Studying human behavior. Chicago: University of Chicago Press. http://www.press.uchicago.edu/ucp/books/book/chicago/S/bo13025491.html. Accessed December 11, 2017.

Low, P., Panksepp, J., Reiss, D., Edelman, D., Van Swinderen, B., & Koch, C. (2012). The Cambridge declaration on consciousness. In Francis Crick Memorial Conference, Cambridge, England.

Mallatt, J., & Feinberg, T. E. (2016). Insect consciousness: Fine-tuning the hypothesis. Animal Sentience: An Interdisciplinary Journal on Animal Feeling, 1(9), 10.

Mangan, B. (1993). Dennett, consciousness, and the sorrows of functionalism. Consciousness and Cognition, 2(1), 1–17.

Marshall, J. A. R., Bogacz, R., Dornhaus, A., Planqué, R., Kovacs, T., & Franks, N. R. (2009). On optimal decision-making in brains and social insect colonies. Journal of the Royal Society, Interface/the Royal Society, 6(40), 1065–1074.

Marvel. (2015). Ant-Man (film). Wikipedia, the free encyclopedia. https://en.wikipedia.org/w/index.php?title=Ant-Man_(film)&oldid=862864493. Accessed October 9, 2018.

Mashour, G. A. (2018). The controversial correlates of consciousness. Science, 360(6388), 493–494.

Mashour, G. A., & Alkire, M. T. (2013). Evolution of consciousness: Phylogeny, ontogeny, and emergence from general anesthesia. Proceedings of the National Academy of Sciences of the United States of America, 110(Suppl 2), 10357–10364.

Mather, J. A., & Carere, C. (2016). Cephalopods are best candidates for invertebrate consciousness. Animal Sentience: An Interdisciplinary Journal on Animal Feeling. http://animalstudiesrepository.org/cgi/viewcontent.cgi?article=1127&context=animsent. Accessed January 2, 2019.

Matthes, J., Davis, C. S., & Potter, R. F. (Eds.). (2017). Normal science and paradigm shift. In The international encyclopedia of communication research methods (Vol. 55, pp. 1–17). Hoboken, NJ, USA: Wiley.

Mendl, M., Burman, O. H. P., Parker, R. M. A., & Paul, E. S. (2009). Cognitive bias as an indicator of animal emotion and welfare: Emerging evidence and underlying mechanisms. Applied Animal Behaviour Science, 118(3), 161–181.

Mitchell, S. D. (2002). Integrative pluralism. Biology and Philosophy, 17(1), 55–70.

Moor, J. H. (2012). The Turing test: The elusive standard of artificial intelligence. Berlin: Springer.

Morin, A. (2006). Levels of consciousness and self-awareness: A comparison and integration of various neurocognitive views. Consciousness and Cognition, 15(2), 358–371.

Nagel, T. (1974). What is it like to be a bat? The Philosophical Review, 83(4), 435–450.

Newen, A., & Vogeley, K. (2003). Self-representation: Searching for a neural signature of self-consciousness. Consciousness and Cognition, 12(4), 529–543.

Noble, D. (2013). A biological relativity view of the relationships between genomes and phenotypes. Progress in Biophysics and Molecular Biology, 111(2–3), 59–65.

Núñez, J. A., & Giurfa, M. (1996). Motivation and regulation of honey bee foraging. Bee World, 77(4), 182–196.

O’Sullivan, J. (2010). Collective consciousness in science fiction. Foundations. http://search.proquest.com/openview/036b39d2d8caf299d7e01c8ed5ada97b/1?pq-origsite=gscholar&cbl=636386. Accessed January 2, 2019.

Overgaard, M. (2017). The status and future of consciousness research. Frontiers in Psychology, 8, 1719.

Panksepp, J. (2004). Affective neuroscience: The foundations of human and animal emotions. Oxford: Oxford University Press.

Panksepp, J. (2011). Toward a cross-species neuroscientific understanding of the affective mind: Do animals have emotional feelings? American Journal of Primatology, 73(6), 545–561.

Pereboom, D. (2016). Illusionism and anti-functionalism about phenomenal consciousness. Journal of Consciousness Studies, 23(11–12), 172–185.

Perry, C. J., Baciadonna, L., & Chittka, L. (2016). Unexpected rewards induce dopamine-dependent positive emotion–like state changes in bumblebees. Science, 353(6307), 1529–1531.

Phillips, Z. I., Zhang, M. M., & Mueller, U. G. (2017). Dispersal of Attaphila fungicola, a symbiotic cockroach of leaf-cutter ants. Insectes Sociaux, 64(2), 1–8.

Portha, S., Deneubourg, J.-L., & Detrain, C. (2002). Self-organized asymmetries in ant foraging: A functional response to food type and colony needs. Behavioral Ecology: Official Journal of the International Society for Behavioral Ecology, 13(6), 776–781.

Proverbs. (n.d.). Proverbs 6:6–8 (New International Version). Bible Gateway. https://www.biblegateway.com/passage/?search=Proverbs+6%3A6-8&version=NIV. Accessed October 8, 2018.

Ramstead, M. J. D., Badcock, P. B., & Friston, K. J. (2017). Answering Schrödinger’s question: A free-energy formulation. Physics of Life Reviews. https://doi.org/10.1016/j.plrev.2017.09.001.

Richardson, T. O., Liechti, J. I., Stroeymeyt, N., Bonhoeffer, S., & Keller, L. (2017). Short-term activity cycles impede information transmission in ant colonies. PLoS Computational Biology, 13(5), e1005527.

Robinson, E. J. H. (2014). Polydomy: The organisation and adaptive function of complex nest systems in ants. Current Opinion in Insect Science, 5, 37–43.

Ross, D., & Spurrett, D. (2004). What to say to a skeptical metaphysician: A defense manual for cognitive and behavioral scientists. The Behavioral and Brain Sciences, 27(5), 603–627.

Sakiyama, T., & Gunji, Y.-P. (2013). The Müller–Lyer illusion in ant foraging. PLoS ONE, 8(12), e81714.

Sakiyama, T., & Gunji, Y.-P. (2016). The Kanizsa triangle illusion in foraging ants. BioSystems, 142–143, 9–14.

Sapolsky, R. M. (2004). The frontal cortex and the criminal justice system. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences, 359(1451), 1787–1796.