Abstract

Frequent itemset mining (FIM) is the crucial task in mining association rules that finds all frequent k-itemsets in the transaction dataset from which all association rules are extracted. In the big-data era, the datasets are huge and rapidly expanding, so adding new transactions as time advances results in periodic changes in correlations and frequent itemsets present in the dataset. Re-mining the updated dataset is impractical and costly. This problem is solved via incremental frequent itemset mining. Numerous researchers view the new transactions as a distinct dataset (partition) that may be mined to obtain all of its frequent item sets. The extracted local frequent itemsets are then combined to create a collection of global candidates, where it is possible to estimate the support count of the combined candidates to avoid re-scanning the dataset. However, these works are hampered by the growth of a huge number of candidates, and the support count estimation is still imprecise. In this paper, the Closed Candidates-based Incremental Frequent Itemset Mining approach, or CC-IFIM, has been proposed to decrease candidate generation and improve the accuracy of the global frequent itemsets that are retrieved. The proposed approach is able to prune several produced candidates in earlier steps before performing any further computations. To improve the accuracy of the computation of the support count of the produced candidates, the similarity between partitions has been evaluated using just the local closed candidates rather than all candidates. The experimental findings demonstrated that the CC-IFIM approach is superior to its competitors in terms of efficiency and accuracy.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Association rule mining (ARM) is one of the key tasks in data mining. It discovers interesting correlations among set of items in large transaction datasets. ARM has developed into a powerful tool with immense potential and wide applications such as market basket analysis, medical diagnosis, bioinformatics, fraud detection and the internet of things.

Formally, consider \(\mathcal {I}=\{I_1, I_2, \dots , I_m\}\) is a set of m items and a dataset \(\mathcal {D}=\{T_1, T_2, \dots , T_n\}\) is a set of n transactions, where \(T_i \subseteq \mathcal {I}\), \(\text {ARM}\) is a process of mining all strong association rules (AR) by performing the following two tasks [1]. Firstly, generate all frequent k-itemsets (\({\mathcal {F}}{\mathcal {I}}\)); \(k=1, 2, \dots , m\). The k-itemset X (a set of k items) is called frequent if \(\text {sup}(X)\ge \text {minsup} \times n\); \(\text {sup}(X)={|T_i; X \subseteq T_i|}\) and \(\text {minsup}\) is give threshold (say \(80\%\)). Secondly, from the extracted \({\mathcal {F}}{\mathcal {I}}\), the strong association rules (AR) have been mined; an association \(R\in AR\) on the form \(R:X \rightarrow Y\); \(X\cup Y \in {\mathcal {F}}{\mathcal {I}}\), \(X \cap Y =\phi\). The rule R is strong if \(\text {sup}p(R)>\text {minsup}\) and \(\text {conf}(R)>\text {minconf}\); \(\text {sup}(R) =\frac{\text {sup}(X \cup Y)}{n}\) and \(\text {conf}(R) =\frac{\text {sup}(X \cup Y)}{\text {sup}(X)}\), where \(\text {minsup}\) and \(\text {minconf}\) are given thresholds.

Frequent itemsets mining (\(\text {FIM}\)) is the crucial task in AR that finds all \({\mathcal {F}}{\mathcal {I}}\) among a set of items in \(\mathcal {D}\). The exponential complexity of \(\text {FIM}\) attracts researchers to develop new algorithms to improve it. In huge datasets, the traditional algorithms, such as Apriori [1], FP-growth [9], Eclat [19] and Quick-Apriori [17] require exponential time and memory for mining all \({\mathcal {F}}{\mathcal {I}}\). In several applications, transaction datasets are growing continuously, so adding incremental transactions to the original dataset requires repeating the mining processes. These kinds of algorithms are considered static because they have no way to merge the new transactions without re-mining the updated datasets. Due to their exponential complexity, traditional mining approaches fail to address the continuously increasing dataset.

To overcome these obstacles, incremental frequent itemset mining (\(\text {IFIM}\)) is utilized. There are two categories for \(\text {IFIM}\) approaches: the Apriori-based [4, 5, 20] and the tree-based approaches [9, 10, 13, 15]. Apriori-based approaches suffer from the I/O overhead of scanning the dataset many times and the cost of computation and memory of generating a huge number of candidates. As well as FP tree-based approaches suffer from complex operations for adjusting trees [18].

Nowadays where the number of transactions is very huge and continuously changing, all the above approaches are not applicable because they cannot get the results in an efficient way. Therefore, some approaches [3, 18] introduced approximated solutions for \(\text {IFIM}\). These approaches can be utilized in mining FI as well. For \(\text {IFIM}\), the \(\text {FPMSIM}\) approximated approach [18] considers the new transactions as a separate dataset to be mined, from which all local \({\mathcal {F}}{\mathcal {I}}\) are mined. Subsequently, the extracted local \({\mathcal {F}}{\mathcal {I}}\)s of both the original and the incremental datasets are combined together to produce global candidates. The support count of each candidate is estimated using a kind of statistical model such as Jaccard similarity between the produced candidates of the original and incremental datasets. Although the approach [18] has good results, it produces many candidate itemsets that can be pruned before any further computation. As well as, the estimating of the support count of all candidates is imprecise, which causes the loss of global \({\mathcal {F}}{\mathcal {I}}\). The purpose of this paper is to address the issues raised in the \(\text {FPMSIM}\) [18]. CC-IFIM, or closed candidates-based incremental frequent itemset mining, is the name of the proposed approach. The suggested approach makes use of a pruning mechanism to eliminate certain early, pointless candidates. The CC-IFIM approach measures the similarity between just the closed candidates of the original and incremental datasets rather than all produced candidates in order to increase the estimated accuracy of the support count of all candidates. The experimental results on five benchmark datasets showed that the CC-IFIM approach is more efficient and accurate than the \(\text {FPMSIM}\) approach.

The remainder of this paper is organized as follows: The related works are discussed in Sect. 2, where \(\text {FPMSIM}\), which is the source of CC-IFIM, is given specific attention. Section 3 introduces the proposed approach CC-IFIM. Section 4 discusses the experimental results. Finally, Sect. 5 concludes the paper.

2 Related work

In this section, the incremental frequent itemsets mining is classified into two categories: exact approaches that find all \({\mathcal {F}}{\mathcal {I}}\) (Sect. 2.1), and approximated approach that tries to find approximated \({\mathcal {F}}{\mathcal {I}}\) denoted by \({\mathcal {F}}{\mathcal {I}}_\text {{approx}}\); \({\mathcal {F}}{\mathcal {I}}_\text {{approx}} \subseteq {\mathcal {F}}{\mathcal {I}}\) (Sect. 2.2).

2.1 Exact approaches

The exact approach can be classified into two major categories: Apriori-based and FP-tree-based as shown in the following two subsections:

2.1.1 Apriori-based

Apriori-based approach firstly scans the incremental dataset to extract its \({\mathcal {F}}{\mathcal {I}}\)s, then re-scanning the original dataset to get the exact support of each candidate, as in FUP [4], FUP2 [5]. However, re-scanning the original dataset causes I/O cost overload.

In 2001, Zhou et al. [20] developed the MAAP algorithm, which utilizes the characteristics of Apriori to improve the overall performance. In the Apriori algorithm, high-level itemsets are joined by low-level itemsets, where in the Apriori algorithm uses high-level itemsets to induct low-level itemsets. MAAP compares frequent patterns with new transactions to generate new association rules. In addition, MAAP can improve the performance of FUP [4]; however, the problem of re-scanning the dataset still exists.

2.1.2 FP tree-based

Rather than employing the generate-and-test strategy of Apriori-based algorithms, the tree-based framework constructs an extended prefix-tree structure, called Frequent Pattern tree (FPtree), to capture the content of the transaction database [9].

In 2008, Hong et al. [10] proposed a fast and effective method for updating the structure of the FP-tree, called the FUFP (Fast Updated Frequent Pattern) algorithm, in which only frequent items are saved in the FUFP-tree. The FUFP algorithm can quickly update and modify the tree by dividing items. When the original large item becomes smaller, it will be directly deleted from the FUFP-tree. Instead, as the original item gets larger, it is added to the end of the head table in descending order. But it needs to re-scan the original dataset to find the transactions of the newly enlarged items and insert them into the FUFP tree.

In 2014, two remarkably efficient algorithms are introduced: FIN [7] and PrePost+ [8] with POC tree and PPC tree, respectively. These two structures are prefix trees and similar to FP-tree, Moreover, both algorithms employ two additional data structures called Nodeset and N-list, respectively, to significantly improve mining speed. However, N-list consumes a lot of memory, and for some datasets, Nodeset’s cardinality grows significantly [6].

The preceding issue was resolved in 2016 by Deng, by proposing an algorithm called dFIN [6] that is based on a new data structure called DiffNodeset instead of Nodeset. In contrast to Nodeset, the DiffNodeset of each k-itemset \((k \ge 3)\) is extracted by the difference between the DiffNodesets of two (k-1)- itemsets. Numerous investigations demonstrate that DiffNodeset’s cardinality is lower than Nodeset’s. As a result, the dFIN algorithm is quicker than Nodeset-based algorithms. However, the calculation of the difference between two DiffNodesets can be time-consuming for some datasets.

In 2017, Huynh et al. [11] proposed a tree structure IPPC (Incremental Pre-Post-Order Coding) algorithm which supports incremental tree construction, and an algorithm for incrementally mining frequent itemsets, IFIN (Incremental Frequent Itemsets Nodesets). Through experiments, algorithm IFIN has demonstrated its superior performance compared to FIN [7] and PrePost+ [8]. However, in the case of datasets comprising a large number of distinct items but just a small percentage of frequent items for a certain support threshold, we investigate that IPPC tree becomes to lose its advantage in running time and memory for its construction compared to other trees such as POC and PPC of algorithms FIN and PrePost+.

In 2021, Satyavathi et al. [14] proposed the FIN_INCRE algorithm by enhancing FIN algorithm [7] for efficient mining of incremental association rules which require scanning of the original dataset only once. After scanning the dataset, it generates POC-Tree and from which it produces item sets that occur frequently. Then, they are used to generate association rules. When some new instances are inserted into the original dataset, the algorithm scans only the newly added instances. Then, it updates POC-Tree and frequent itemsets before actually updating the mined association rules.

However, FP tree-based approaches suffer from complex operations for adjusting FP-tree.

2.2 Approximated approaches

Regardless of using Apriori [2] or FP-Tree [9], the key of these approaches are reducing both I/O cost and a complex generating of FP-trees.

In 2017, Li et al. [12] proposed a three-way decision update pattern (TDUP) approach along with a synchronization mechanism for this issue. With two support-based measures, all possible itemsets are divided into positive, boundary, and negative regions. TDUP efficiently updates frequent itemsets and reduces the cost of re-scanning. However, TDUP may miss some potential frequent itemsets with incremental data updates.

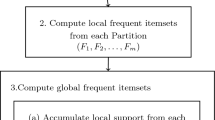

In 2021, Xun et al. [18], developed an approach for incremental frequent itemsets mining based on frequent pattern tree and multi-scale called \(\text {FPMSIM}\). A partitioning-based \(\text {FPMSIM}\) is valid for not only parallel programming of mining all \({\mathcal {F}}{\mathcal {I}}\)’s, but also for addressing the problem of incremental frequent itemsets mining. In general, As shown in Algorithm 1, \(\text {FPMSIM}\) divides the dataset into scales or partitions using a multi-scale concept in which each partition may have the same essence and characteristics. Using FP-growth [2] and for each partition, the local frequent itemsets \({\mathcal {F}}{\mathcal {I}}\) have been extracted. The union of all local frequent itemsets is considered as global candidates C. To estimate the support of global candidates, Jaccard method [16] is used for measuring the similarities between scales or partitions. Finally, the global frequent itemsets are candidates whose candidates with support is greater than or equal to global minsup. In big data, this approach is efficient and scalable which produced an acceptable \({\mathcal {F}}{\mathcal {I}}_\text {{approx}}\) compared with the exact \({\mathcal {F}}{\mathcal {I}}\), however, it produces many candidate itemsets that can be pruned before any further computation. Additionally, the estimating of the support count of all candidates is imprecise, which causes the loss of global \({\mathcal {F}}{\mathcal {I}}\). Consequently, miss some potential frequent itemsets.

3 The proposed approach CC-IFIM

In this section, an improved approach of \(\text {FPMSIM}\) approach [18] has been introduced. This approach has been called Closed Candidates-based Frequent Itemset Mining and denoted(CC-IFIM). Although \(\text {FPMSIM}\) and CC-IFIM have some common phases there are a lot of differences between them, The core difference is ignoring the division of the dataset into scales, which requires an additional cost for getting further information as in \(\text {FPMSIM}\). This kind of division is not applicable in most cases of real applications.

Consider a transaction dataset \(\mathcal {D}\) and minimum support count \(\text {minsup}_{\mathcal {D}}\). Algorithm 2 shows the steps of extracting \({\mathcal {F}}{\mathcal {I}}\), where CC-IFIM works as follows:

-

1.

Divide \(\mathcal {D}\) into partitions \(\mathcal {P}\)=\(\{P_1,P_2,\dots ,P_k \}\) where:

-

\(\bigcup \nolimits _{j=1}^{k} P_j\) = \(\mathcal {D}\); k the number of partitions.

-

For \(i\ne j\), \(P_i\) \(\cap\) \(P_j\) = \(\varnothing\).

-

Each \(P_j\) has its own \(\text {minsup}\) called \(\text {minsup}_{P_j}\).

-

-

2.

Using Apriori [2] or FP-tree [9] algorithm, \(\forall\) \(P_j \in \mathcal {P}\) mine its frequent itemsets \({\mathcal {F}}{\mathcal {I}}^j\) =\(\{f_1^j, f_2^j, \dots , f^j_{q_j}\}\). Where, each frequent set \(f\in\) \({\mathcal {F}}{\mathcal {I}}^{j}\) is a local frequent itemset of \(\mathcal {D}\); \(\text {sup}p_j(f)\ge \text {minsup}_{P_j}\), while it is called a global candidate itemset of \(\mathcal {D}\).

-

3.

Generate a set of candidates denoted by \(\mathcal {C}_{\mathcal {D}}\); \(\mathcal {C}_{\mathcal {D}}\)= \(\bigcup \nolimits _{j=1}^{k} {\mathcal {F}}{\mathcal {I}}^j\). Let q is the number of generated candidates (i.e., \(q=\mid \mathcal {C}_{\mathcal {D}} \mid\)). Each itemset \(c\in\) \(\mathcal {C}_{\mathcal {D}}\) is called a candidate itemset of \(\mathcal {D}\).

-

4.

Build a matrix \(S \in {\mathbb {R}}^{q\times k}\); in which the rows represent the candidates \(c_i\); \(i=1, 2,\dots , q\) and columns represent the partitions \(p_j\); \(j=1, 2,\dots , k\). The element S(i, j); \(1 \le i \le q\), \(1 \le j \le k\) of the matrix S is determined as follows:

$$\begin{aligned} S(i,j)=\bigg \{ \begin{array}{ll} {\text {sup}}_j(c_i) &{}\quad {\text {if}}\ c_i \in {\mathcal {F}}{\mathcal {I}}^{j} \\ {{0}} &{}\quad {\text {otherwise}}\\ \end{array} \end{aligned}$$(3) -

5.

An extra column \(m\in {\mathbb {R}}^{q\times 1}\) with dimension q is associated to the matrix S as follows:

$$\begin{aligned} m(i)=\mid p ^+(i) \mid , i=1, 2,\dots , q \end{aligned}$$(4)where \(p^+(i) =\{j=S(i,j)\ne 0\}\) gives the partition j in which \(c_i\) is frequent (i.e., \(\text {sup}_j(c_i) \ge 0\)).

-

6.

Extract closed candidates Using the candidates’ \(\mathcal {C}_D\) and the matrix S, which contains local frequent itemsets, extract only the closed candidates’ \({\mathcal {C}}{\mathcal {C}}_{D}\) which contains all local closed frequent itemset (Definition 1).

Definition 1

(local closed frequent itemset) A local frequent itemset \(c_i \in \mathcal {C}_D\) is a local closed frequent itemset, if there is no local frequent itemset \(c_{i'}\in \mathcal {C}_D\); \(c_{i'}\supset c_i\) and \(S(i,j)= S(i',j); j=1,2,\dots ,k\).

-

7.

Using \({\mathcal {C}}{\mathcal {C}}_D\), build its corresponding new matrix called \(S_\text {{closed}} \in {\mathbb {R}}^{q_c \times k}; q_c = \mid {\mathcal {C}}{\mathcal {C}}_D \mid\), \(q_c \le q\), and \(S_\text {{closed}}(i,j)=S(i,j);\ cc_i \in {\mathcal {C}}{\mathcal {C}}_D\) and \(j=1,2,\dots ,k\). In CC-IFIM, the matrix \(S_\text {{closed}}\) is used for creating the similarity matrix \(M_{{\mathcal {C}}{\mathcal {C}}}\) as shown in the next step instead of the matrix S as in \(\text {FPMSIM}\).

-

8.

Using \({\mathcal {C}}{\mathcal {C}}_D\), build a symmetric similarity matrix \(M_{\mathcal {C}}{\mathcal {C}}\in {\mathbb {R}}^{k\times k}\), where \(M_{\mathcal {C}}{\mathcal {C}}(j,j')\) is the similarity between the partitions \(P_j\) and \(P_{j'}\) for all \(j,j'=1,2, \dots , k\) and \(j \ne j'\). The three versions of similarity such as Jaccard, Dice, or Cosine [16] has been used from which the more accurate method will be chosen as a suitable similarity measurement. The similarity Jaccard matrix is calculated as follows:

$$\begin{aligned} { M_{{\mathcal {C}}{\mathcal {C}}}(j,j')=\frac{|c_i\in {\mathcal {C}}{\mathcal {C}}_D;\, S_\text {{closed}}(i,j) \ne 0\ \text {and}\ S_\text {{closed}}(i,j') \ne 0 |}{|c_i\in {\mathcal {C}}{\mathcal {C}}_D;\, S_\text {{closed}}(i,j)\ne 0\ or\ S_\text {{closed}}(i,j') \ne 0 |} } \end{aligned}$$(5)Simply, you can read \(S_\text {{closed}}(i,j) \ne 0\) by the candidate \(c_i\) is frequent at partition j. Therefore, \(M_{{\mathcal {C}}{\mathcal {C}}}(j,j')\) is the number of closed candidates that are frequent in both partitions j and \(j'\) divided by the number of candidates that are frequent in at least one partition. In CC-IFIM, we discovered that the Jaccard similarity matrix is not the suitable method for measuring the similarity between partitions as shown in the experimental result section. The similarity Dice matrix is calculated as follows:

$$\begin{aligned} { M_{{\mathcal {C}}{\mathcal {C}}}(j,j')=2\frac{|c_i\in {\mathcal {C}}{\mathcal {C}}_D;\, S_\text {{closed}}(i,j) \ne 0\ and\ S_\text {{closed}}(i,j') \ne 0 |}{|c_i\in {\mathcal {C}}{\mathcal {C}}_D; \,S_\text {{closed}}(i,j)\ne 0 |+ |c_i\in {\mathcal {C}}{\mathcal {C}}_D; S_\text {{closed}}(i,j') \ne 0 |} } \end{aligned}$$(6)The Cosine similarity method is calculated as follows:

$$\begin{aligned} { M_{\mathcal {C}}{\mathcal {C}}(j,j')=\frac{|c_i\in {\mathcal {C}}{\mathcal {C}}_D;\, S_\text {{closed}}(i,j) \ne 0\ and\ S_\text {{closed}}(i,j') \ne 0 |}{\sqrt{|c_i\in CC_D; \,S_\text {{closed}}(i,j)\ne 0 |} . \sqrt{|c_i\in {\mathcal {C}}{\mathcal {C}}_D; \,S_\text {{closed}}(i,j') \ne 0 |}} } \end{aligned}$$(7)Based on any version of similarity method, obviously \(M_{\mathcal {C}}{\mathcal {C}}(j,j)=1\), \(M_{\mathcal {C}}{\mathcal {C}}(j,j')=M_{\mathcal {C}}{\mathcal {C}}(j',j)\). The value or coefficient \(M_{\mathcal {C}}{\mathcal {C}}(j,j')\) ranges between 0 and 1. \(M_{\mathcal {C}}{\mathcal {C}}(j,j')=1\) means the partitions j and \(j'\) are similar, while \(M_{\mathcal {C}}{\mathcal {C}}(j,j')=0\) means the partitions j and \(j'\) are dissimilar and there is no intersection between them.

-

9.

Pruning step After creating matrix \(M_{\mathcal {C}}{\mathcal {C}}\), we back again to \(\mathcal {C}_D\) and its corresponding matrix S. Before estimating the global support, a new hypothesis was used in earlier step to predict whether the candidate \(c_i\) is globally frequent or not. Therefore, \(\forall c_i \in \mathcal {C}_D\), \(\text {Critical}\_\text {sup}\) can be calculated as follows:

$$\begin{aligned} \text {Critical}\_\text {sup}(c_i)= \sum _{j=1}^{k} S'(i,j) \end{aligned}$$(8)where

$$\begin{aligned} S'(i,j)=\Bigg \{ \begin{array}{ll} {\text {minsup}}_j-1 &{}\quad {\text {if}}\ S(i,j)=0 \\ {S(i,j)} &{}\quad {\text {otherwise}}\\ \end{array} \end{aligned}$$(9)Since, at \(S(i,j)=0\), \(c_i\) is infrequent at partition j. Therefore, the most expected support value should be \(\text {minsup}_j-1\) that is the largest number less than \(\text {minsup}_j\). Remove row i from table S, consequently remove candidate \(c_i\) from \(\mathcal {C}_D\) if its \(\text {Critical}\_\text {sup}(c_i) < \text {minsup}\). This step reduces the computation and memory cost for building the matrix S.

-

10.

To estimate the support of each candidate \(c_i\in \mathcal {C}_D\) at any partition j with \(S(i,j)=0\). The estimated support S(i, j) is computed according to the following equation:

$$\begin{aligned} S(i,j) =\Bigg \{ \begin{array}{ll} {\text {sup}}_{\text {app}}=\frac{1}{m(i)}\sum _{j' = 1, j' \ne j} ^{k} M(j',j) \times S(i,j') &{}\quad \text {if}\ {\text {sup}}_{\text {app}} \le {\text {minsup}}_j \\ {\text {minsup}}_j &{}\quad {\text {otherwise}} \\ \end{array} \end{aligned}$$(10) -

11.

For each candidate \(c_i\in \mathcal {C}_D\), estimate a global support using the following equation:

$$\begin{aligned} {\text {sup}}(c_i)=\sum _{j=1}^{k}S(i,j) \end{aligned}$$(11) -

12.

Add \(c_i\) to the global frequent itemsets \({\mathcal {F}}{\mathcal {I}}\), if \(c_i\) satisfies the following condition:

$$\begin{aligned} {\text{sup}}(c_i)\ge \text {minsup}_{D} \end{aligned}$$(12)

The following example is used for discussing each step of CC-IFIM for extracting \({\mathcal {F}}{\mathcal {I}}\) and the differences between \(\text {FPMSIM}\) and CC-IFIM. Consider the transaction dataset \(\mathcal {D}\) (Fig. 1) that contains sixteen transactions (\(n = 16\)) and five items \(\mathcal {I}=\{a,b,c,d,e\}\) (\(|\mathcal {I} |= 5\)). And \(\text {minsup}=50\%\ of\ \mathcal {D}=0.5*16=8\). CC-IFIM works as follows:

-

1.

Firstly, \(\mathcal {D}\) is divided into four partitions (\(k=4\)): \(P_1, P_2, P_3\), and \(P_4\) (Fig. 1). Where \(\text {minsup}_{P_j}=50\% of\ P_j=0.5 \times 4=2; j=1,2,3,4\).

-

2.

For each partition \(P_j\), mine its frequent itemsets \({\mathcal {F}}{\mathcal {I}}^j\) (Fig. 2).

-

3.

Generate the candidates \(\mathcal {C}_D=\bigcup \nolimits _{j=1}^{4} {\mathcal {F}}{\mathcal {I}}^j\) and its corresponding matrix S (Fig. 3).

-

4.

Build a matrix S (Fig. 3) according to Eq. 3. For instance, the candidate itemset \(c_i = \{bc\}\) is frequent at partitions \(P_1\), \(P_2\), and \(P_4\) with \(S(i,1)=2\), \(S(i,2)=2\), and \(S(i,4)=3\). While this itemset \(\{bc\}\) is infrequent at partitions \(P_3\), therefore \(S(i,3)=0\).

-

5.

Adding the column m (Fig. 3) that contains the number of partitions in which the itemset is frequent. Where at candidate \(c_i=\{a\}\), \(m(i)=3\) means \(\{a\}\) is frequent at three partitions (as shown at partitions 1, 2, 4).

-

6.

Generate the closed candidates \({\mathcal {C}}{\mathcal {C}}_D\) (Definition 1). For instance, the candidates \(c_i=\{ab\}\) and \(c_{i'}=\{abc\}\) are frequent and have the same support count at partitions \(P_1\), \(P_2\), and \(P_4\). where, \(c_{i'}\supset c_i\), and \(S(i,j)=S(i',j);j=1,2,4\). According to Definition 1, \(\{bc\}\) is non-closed candidate. Therefore \(\{bc\}\) is not included in \({\mathcal {C}}{\mathcal {C}}_D\) due to the two frequent itemsets \(\{bc\}\) and \(\{abc\}\) share the same information. So, the closed candidate abc is enough to calculate the similarity between partitions. While, the candidate \(c_i=\{ac\}\) is a closed candidate because \(c_i=\{ac\}\) and its the only super candidate \(c_{i'}=\{abc\}\) not have the same support at corresponding partitions, as shown \(S(i,j) \ne S(i',j); j=1,2\) which violates Definition 1. Therefore, \({\mathcal {C}}{\mathcal {C}}_D=\{a, b, c, d, e, ab, ac, ce, abc\}\).

-

7.

From a matrix S, extract only a matrix \(S_\text {{closed}}\) that corresponds to \({\mathcal {C}}{\mathcal {C}}_D\) (Fig. 3).

-

8.

Using the \({\mathcal {C}}{\mathcal {C}}_D\) and its matrix \(S_\text {{closed}}\) (Fig. 3) and based on Jaccard similarity method, calculate the similarity coefficients between each pair of partitions. According to Eq. (5), the matrix \(M_{\mathcal {C}}{\mathcal {C}}\) (Fig. 3) has been created. \(M_{\mathcal {C}}{\mathcal {C}}(1,2) =M_{\mathcal {C}}{\mathcal {C}}(2,1) =\frac{7}{9}=0.7778\), means 7 and 9 are the numbers of the intersection and union between two partitions \(P_1\) and \(P_2\), respectively.

-

9.

Apply pruning step (Fig. 3), for the itemset \(c_i=\{ad\}\), \(S(i, 1)=S(i, 2)=2\), \(S(i, 3)= S(i, 4)=0\), this mean the itemset \(\{ad\}\) is infrequent at \(P_3\) and \(P_4\). Then using our hypothesis \(S'(i, j') = \text {minsup}-1= 2-1=1; j'=3,4\) then \({\text{Critical}}\_\text {sup}p(ad)= 2+2+1+1=6 < \text {minsup}_{\mathcal {D}}=8\). This means the candidate \(\{ad\}\) is a global infrequent itemset. Therefore, in CC-IFIM, the itemset \(\{ad\}\) must be ignored from \(\mathcal {C}_D\). While the candidate \(\{a\}\), its \({\text{Critical}}\_\text {sup}p(a)= 3+4+1+3=11 > \text {minsup}_\mathcal {D}=8\). Therefore, the candidate a may be valid as a frequent global itemset. Finally, \(\mathcal {C}_D=\{a, b, c, d, e, ab, ac, bc, ce, abc\}\). The shaded cells in \({\mathcal {C}}{\mathcal {C}}_D\) represent the ignored candidates (Fig. 3). As well as, the values that correspond to the pruned candidates are ignored.

-

10.

Using \(M_{\mathcal {C}}{\mathcal {C}}\) and Eq. (5), estimate the support of all candidates \(c_i \in \mathcal {C}_D; S(i, j)=0; j=1, 2, 3, 4\) (Fig. 3). As shown, for itemset \(c_i=\{a\}\), \(S(i,3) =\frac{1}{m(i)}\sum _{j = 1, j \ne 3} ^{4} M_{{\mathcal {C}}{\mathcal {C}}}(j,3) \times S(i,j)=\) \(\frac{1}{3}(M_{{\mathcal {C}}{\mathcal {C}}}(1,3) \times S(i,1) + M_{{\mathcal {C}}{\mathcal {C}}}(2,3) \times S(i,2)+ M_{{\mathcal {C}}{\mathcal {C}}}(4,3) \times S(i,4)) =\) \(\frac{1}{3}(0.55556 \times 3 + 0.3333 \times 4+ 0.44444 \times 3)=1.4444\). The resulted S is shown in CC-IFIM partition at the middle of Fig. 3.

-

11.

For each candidate \(c \in \mathcal {C}_D\), estimate its global support (Fig. 3). As shown, \(sup(a)=3+4+1.4444.3=11.444 > \text {minsup}=8\), then the itemset \(\{a\}\) is global frequent and has been added to \({\mathcal {F}}{\mathcal {I}}\).

-

12.

Finally \({\mathcal {F}}{\mathcal {I}}=\{a,b,c,d,e,ab,ac,bc,ce,abc\}; sup(c)\ge \text {minsup}; c \in {\mathcal {F}}{\mathcal {I}}\).

-

Incremental dataset Finally, this approach will also deal with any incremental datasets as a new partition \(P_2\), where \(P_1\) is the original dataset. Similarly as shown in Algorithm 2, \({\mathcal {F}}{\mathcal {I}}^1\) and \({\mathcal {F}}{\mathcal {I}}^2\) are extracted for the partitions \(P_1\) and \(P_2\), respectively. All the steps as shown in Algorithm 2 have been applied to \(P_1\) and \(P_2\). Where, form the candidates \(\mathcal {C} ={\mathcal {F}}{\mathcal {I}}^1 \bigcup {\mathcal {F}}{\mathcal {I}}^2\). Then form a matrix S for the two partitions \(P_1\) and \(P_2\) as mentioned earlier. Find the closed candidates \({\mathcal {C}}{\mathcal {C}}\), then the similarity matrix \(M_{{\mathcal {C}}{\mathcal {C}}}\) that is corresponding to the closed candidates from \({\mathcal {C}}{\mathcal {C}}\) (Definition 1). Apply the pruning step, then Using \(M_{{\mathcal {C}}{\mathcal {C}}}\) estimate the support of zero entries in matrix \(S_{\mathcal {C}}\) to find the global support of all candidates. From \({\mathcal {F}}{\mathcal {I}}\) that satisfies \(\text {miusup}\) threshold.

-

The Differences between traditional, \(\text {FPMSIM}\),and CC-IFIM approaches The traditional approach is applied to the transaction dataset \(\mathcal {D}\) (Fig. 1), where the extracted \({\mathcal {F}}{\mathcal {I}}_\text {{Original}}=\{a,b,c,d,e,ab,ac,bc,ce,abc\}\). Using \(\text {FPMSIM}\), the similarity matrix \(M_{\mathcal {C}}\) (shown at \(\text {FPMSIM}\) partition at the right of Fig. 3) is measured based on the original matrix S that corresponds \(\mathcal {C}_D\) instead of \(M_{{\mathcal {C}}{\mathcal {C}}}\) as in CC-IFIM. In \(\text {FPMSIM}\) that is based on Jaccard similarity, the matrix \(M_C\) is calculated according to the following equation:

$$\begin{aligned} { M_{\mathcal {C}}(j,j')=\frac{|c_i\in \mathcal {C}_D;\; S(i,j) \ne 0\ \text {and}\ S(i,j') \ne 0 |}{|c_i\in \mathcal {C}_D;\; S(i,j)\ne 0\ \text {or}\ S(i,j') \ne 0 |} } \end{aligned}$$(13)The reason of calculating similarity matrix based on \({\mathcal {C}}{\mathcal {C}}_D\) instead of \(\mathcal {C}_D\) returns to the following property: if there are two frequent itemsets X and Y have the same support at all the corresponding partitions, and \(X\subset Y\), There are the same and share the same information. Therefore, using X and Y for computing the similarity between two partitions j and \(j'\) decrease the coefficient \(M(j,j')\) because the denominator is increased twice, one for X and one for Y, consequently, the calculated similarity coefficient is decreased. Instead, we have to ignore X and keep only Y, consequently, the denominator is increased once which leads to increase the similarity coefficient compared to the previous. This means that the computed similarity based only on local closed candidates is more realistic and better. For example, Using \({\mathcal {C}}{\mathcal {C}}_D\), \(M_{{\mathcal {C}}{\mathcal {C}}}(1,2) =7/9=0.7778\). Where, the number of itemsets which are frequent in \(P_1\) and \(P_2\) (intersection) is 7. Where, the intersected itemsets in \({\mathcal {C}}{\mathcal {C}}_D\) are \(\{a,b,c,d,ab,ac,abc\}\). while the union is 9 which is \(\{a,b,c,d,e,ab,ac,ce,abc\}\). Similarly, but using \(\mathcal {C}_D\), \(M_\mathcal {C}(1,2)=9/17=0.52941\). As shown in (Fig. 3) the coefficients of matrix \(M_{\mathcal {C}}{\mathcal {C}}\) are greater than the coefficients of matrix \(M_\mathcal {C}\). Therefore, the estimated support of CC-IFIM is greater than \(\text {FPMSIM}\).

Back to the example, it is worth noting that, for calculating the similarity matrix, we consider the whole candidates in \(\mathcal {C}_D\) before applying the pruning step as in our proposed approach. Based on \(M_{\mathcal {C}}\), the corresponding S is calculated (shown at the \(\text {FPMSIM}\) partition at the right of Fig. 3), and the global support is estimated. Finally, the extracted \({\mathcal {F}}{\mathcal {I}}_{\text {FPMSIM}}=\{a,b,c,d,e,ab,ac,ce\}\). As shown, \({\mathcal {F}}{\mathcal {I}}_{\text {FPMSIM}} \subset {\mathcal {F}}{\mathcal {I}}_\text {{Original}}\), where there are two frequent itemsets missing in \(\text {FPMSIM}\). While in CC-IFIM, \({\mathcal {F}}{\mathcal {I}}_\text {{proposed}} ={\mathcal {F}}{\mathcal {I}}_\text {{Original}}\). Finally, as shown in Fig. 3, it is clear that there is waste of time and memory used for processing useless thirteen candidates as in FPMSIM compared to our proposed approach CC-IFIM.

4 Experimental results

In this section, we compare CC-IFIM with \(\text {FPMSIM}\) [18]. We have implemented both two approaches using Python. The implemented approaches have been applied to various real datasets and synthetic datasets (as shown in Table 1) (URL:http://fimi.ua.ac.be.) which are widely used for performance evaluation in the pattern mining area.

Accidents and Mushroom are dense datasets, while Retail is a sparse dataset. Generally, a dense dataset is composed of relatively long transactions and a small number of items, and a sparse dataset is characterized by relatively short transactions and a large number of items.

we have used a synthetic sparse dataset T10I4D100K and a real dataset, online news portal click-stream data (Kosarak). The efficiency (running time) of the CC-IFIM compared to \(\text {FPMSIM}\) is shown in Sect. 4.1. Section 4.2 introduces the rich comparative study that shows the accuracy of CC-IFIM compared to \(\text {FPMSIM}\) using three measurements; coverage, precision, and average support error.

4.1 Efficiency of CC-IFIM

Using several \(\text {minsup}\) thresholds on the mentioned datasets:

-

Running time Since the generation of \({\mathcal {F}}{\mathcal {I}}\) of each part is similar in both FPMSIM and CC-IFIM, the running time of \({\mathcal {F}}{\mathcal {I}}\) generation is not included in the comparisons. Figure 4 shows the running time in seconds of CC-IFIM compared to \(\text {FPMSIM}\). In CC-IFIM, the running time is calculated starting from pruning \(\mathcal {C}_D\), getting \({\mathcal {C}}{\mathcal {C}}_D\), calculating \(M_{{\mathcal {C}}{\mathcal {C}}}\), then estimating the zeros entries in the matrix S. While in \(\text {FPMSIM}\), the running time is calculated starting from calculating \(M_{\mathcal {C}}\), then estimating the zeros entries in the matrix S. All results show CC-IFIM is better than \(\text {FPMSIM}\). Where in Mushroom, Accidents, Retail, T10I4D100K, and T10I4D100K datasets, CC-IFIM reduced the execution time of \(\text {FPMSIM}\) around 10–12%, 10–42%, 16–34%, 10–18%, and 10–18%, respectively. In Kosarak dataset, CC-IFIM reduced the execution time of \(\text {FPMSIM}\) around 10–13%. Although our approach consumes time for extracting the closed candidates, our approach with the pruning step is more efficient than FPMSIM.

-

Memory consumption Fig. 5 shows the memory consumption of CC-IFIM compared to \(\text {FPMSIM}\). All results show CC-IFIM uses memory more efficiently than \(\text {FPMSIM}\). As a result, our approach consumed less memory than FPMSIM.

4.2 Accuracy of CC-IFIM Using \(M_{{\mathcal {C}}{\mathcal {C}}}\)

The coverage, precision, and average support error of CC-IFIM are evaluated against \(\text {FPMSIM}\) [18].

The coverage is calculated using the following equation:

where \({\mathcal {F}}{\mathcal {I}}_{app}\) is the approximated \({\mathcal {F}}{\mathcal {I}}\) using any approach. \(fP{\mathcal {F}}{\mathcal {I}}\) is the false positive \({\mathcal {F}}{\mathcal {I}}\). The precision reflects the effect of false positive and false negative itemsets on the accuracy of experimental [18], which is defined as:

\(fN{\mathcal {F}}{\mathcal {I}}\) is the false negative \({\mathcal {F}}{\mathcal {I}}\).

The support estimation error is the average of the difference between the estimated support \(\text {sup}^*(f)\) of the frequent itemsets f in CC-IFIM and the real support \(\text {sup}(f)\) of these itemsets as follows:

Figures 6 and 7 shows coverage, precision, and \(\text {avg}\_\text {sup}\_\text {error}\) among \(\text {FPMSIM}\) and three versions of CC-IFIM based on Jaccard, DICE and Cosine, respectively. These results have been tested using several \(\text {minsup}p\). On Mushroom, Retail, T10I4D100K and T40I10D100K datasets, the overall coverage and precision of CC-IFIM are better than \(\text {FPMSIM}\). This means the similarity based on \({\mathcal {C}}{\mathcal {C}}_D\) is more accurate of estimating the global itemsets support than based on \(\mathcal {C}_D\) as in \(\text {FPMSIM}\). As shown in the last column, that shows the average calculations, CC-IFIM based on Dice or Cosine similarity is better than using Jaccard similarity. It is worth noting that, although the avg_supp_error is increasing on CC-IFIM, it is very normal due to the increasing of the number of \({\mathcal {F}}{\mathcal {I}}\). In Mushroom dataset, avg_supp_error recorded 0% at some \(\text {minsup}\) thresholds, this does not indicate that the estimation support values are identical to an actual support values. It means CC-IFIM and \(\text {FPMSIM}\) didn’t estimate any support values, where all values of a matrix S is non-zero.

For big data, Kosarak dataset, the traditional algorithm fails to mine all \({\mathcal {F}}{\mathcal {I}}s\), according to heap memory exception. Therefore, we can not able to calculate coverage, precision and avg_supp_error, while the approximated approaches are the suitable solution to mine \({\mathcal {F}}{\mathcal {I}}s\). The comparative study has been made between CC-IFIM and FPMISM only. Figure 8 shows that the number of extracted \({\mathcal {F}}{\mathcal {I}}\)s by CC-IFIM is more than \({\mathcal {F}}{\mathcal {I}}\)s by \(\text {FPMSIM}\). As shown, at \(\text {minsup}=0.6\%\) CC-IFIM approach can mine 1332 frequent itemset from 1465 candidates, while FPMISM mines only 1309. Moreover, \(CC-IFIM\) approach pruned 131 candidates.

-

Incremental dataset Figs. 9, 10, 11 and 12 are another way of visualization that show the comparison of \(\text {FPMSIM}\) and three versions of CC-IFIM on Mushroom, Retail, T40I4D100K and T10I4D100K datasets, respectively.

Figures13 and 14 shows the accuracy CC-IFIM compared to \(\text {FPMSIM}\) for the incremental datasets with different partition size to the original. Where the size of incremental dataset is \(30\%\) of the dataset. Similarly Figs. 15 and 16 where the size of incremental dataset is \(20\%\) of the dataset. These figure shows that CC-IFIM outperforms \(\text {FPMSIM}\).

Coverage, precision and avg_sup_error among \(\text {FPMSIM}\) and three versions of CC-IFIM based on Jaccard, DICE and Cosine for T10I4D100K and Fig. 11 datasets after dividing them into 70% original dataset and 30% incremental dataset

5 Conclusion

The incremental frequent itemset mining approach is an estimated good choice for the huge datasets that are rapidly expanding by periodically adding new transactions. In which, the approach frequent pattern tree and multi-scale incremental frequent itemset, \(\text {FPMSIM}\), is used. It splits the dataset into several components, applies frequent itemset mining separately, then uses Jaccard similarity based on all local frequent itemsets the global frequent itemsets have been mined. However, it faces two problems: performance and efficiency. In this paper, we developed this approach to be more efficient and accurate. In CC-IFIM, the performance has been increased by applying some prior candidate pruning. While the accuracy has been increased by applying similarity measurements on local closed frequent itemsets instead of local frequent itemsets. As well as using another similarity methods such as Dice or Cosine which is remarkably increasing the accuracy of CC-IFIM. The key contributions of this study are the introduction of a pruning approach for reducing the number of candidates, as well as a novel definition of closed itemsets termed local closed frequent itemsets, on which similarity methods rely. Future research will focus on establishing the scientific rationale for the ideal number of partitions in division processes. Also closed and maximal incremental frequent itemsets will be covered. Additionally, investigate several different similarity models in order to propose a new one.

Data availability

The datasets used during the current study are available in the public (the Frequent Itemset Mining Implementations) repository, [http://fimi.ua.ac.be]. The generated source codes of the proposed approach are available from the corresponding author on reasonable request.

References

Agrawal R, Imieliński T, Swami A (1993) Mining association rules between sets of items in large databases. In: Proceedings of the 1993 ACM SIGMOD International Conference on Management of Data, pp. 207–216

Agrawal R, Srikant R et al (1994) Fast algorithms for mining association rules. In: Proceedings 20th International Conference Very Large Data Bases. VLDB 1215:487–499

Chen R, Zhao S, Liu M (2020) A fast approach for up-scaling frequent itemsets. IEEE Access 8:97141–97151

Cheung DW, Han J, Ng VT, Wong C (1996) Maintenance of discovered association rules in large databases: an incremental updating technique. In: Proceedings of the Twelfth International Conference on Data Engineering, pp. 106–114

Cheung DW, Lee SD, Kao B (1997) A general incremental technique for maintaining discovered association rules. Database Syst Adva Appl 97:185–194

Deng ZH (2016) Diffnodesets: an efficient structure for fast mining frequent itemsets. Appl Soft Comput 41:214–223

Deng ZH, Lv SL (2014) Fast mining frequent itemsets using nodesets. Expert Syst Appl 41(10):4505–4512

Deng ZH, Lv SL (2015) Prepost+: an efficient n-lists-based algorithm for mining frequent itemsets via children-parent equivalence pruning. Expert Syst Appl 42(13):5424–5432

Han J, Pei J, Yin Y, Mao R (2004) Mining frequent patterns without candidate generation: a frequent-pattern tree approach. Data Min Knowl Disc 8(1):53–87

Hong TP, Lin CW, Wu YL (2008) Incrementally fast updated frequent pattern trees. Expert Syst Appl 34(4):2424–2435

Huynh VQP, Küng J, Dang TK (2017) Incremental frequent itemsets mining with ippc tree. In: International Conference on Database and Expert Systems Applications, Springer, pp. 463–477

Li Y, Zhang ZH, Chen WB, Min F (2017) Tdup: an approach to incremental mining of frequent itemsets with three-way-decision pattern updating. Int J Mach Learn Cybern 8(2):441–453

Lv D, Fu B, Sun X, Qiu H, Liu X, Zhang Y (2017) Efficient fast updated frequent pattern tree algorithm and its parallel implementation. In: 2017 2nd International Conference on Image, Vision and Computing (ICIVC), IEEE, pp. 970–974

Satyavathi N, Rama B (2021) Dynamic and incremental update of mined association rules against changes in dataset. Innov Comput Sci Eng 25:115–121

Sun J, Xun Y, Zhang J, Li J (2019) Incremental frequent itemsets mining with FCFP tree. IEEE Access 7:136511–136524

Thada V, Jaglan V (2013) Comparison of Jaccard, dice, cosine similarity coefficient to find best fitness value for web retrieved documents using genetic algorithm. Int J Innov Eng Technol 2(4):202–205

Wael Z, Yasser K, Elham E, Fayed G (2009) Mining association rule with reducing candidates generation. In: Proceedings of Fourth International Conference on Intelligent Computing and Information Systems (ICICIS2009). ACM

Xun Y, Cui X, Zhang J, Yin Q (2021) Incremental frequent itemsets mining based on frequent pattern tree and multi-scale. Expert Syst Appl 163:113805

Zaki MJ (2000) Scalable algorithms for association mining. IEEE Trans Knowl Data Eng 12(3):372–390

Zhou Z, Ezeife C (2001) A low-scan incremental association rule maintenance method based on the apriori property. In: Conference of the Canadian Society for Computational Studies of Intelligence, Springer, pp. 26–35

Acknowledgments

The authors acknowledge the role of the Artificial Intelligence and Cognitive Science Laboratory at the Department of Mathematics, Faculty of Science, Ain Shams University, Cairo, Egypt.

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB).

Author information

Authors and Affiliations

Contributions

All authors contributed equally to this work.

Corresponding author

Ethics declarations

Conflict of interest

All authors declare that there is no conflict of interest.

Ethical approval

Not applicable.

Consent for publication

Not applicable.

Consent to participate

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Magdy, M., Ghaleb, F.F.M., Mohamed, D.A.E.A. et al. CC-IFIM: an efficient approach for incremental frequent itemset mining based on closed candidates. J Supercomput 79, 7877–7899 (2023). https://doi.org/10.1007/s11227-022-04976-5

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11227-022-04976-5