Abstract

Advances in information technologies have resulted in people spending increasing amounts of time staring at electronic screens. Long-term use of computers, mobile phones, and tablets can cause eye soreness and fatigue, and can also contribute to more serious conditions including myopia, cataracts, and glaucoma. This study assesses changes in brain wave activity detected by eight electrodes targeting different brain regions to identify the brain wave patterns in the regions associated with visual fatigue under various visual presentation methods. Furthermore, linear discriminant analysis and Min–Max scaling techniques are applied to develop an assessment model that quantifies visual fatigue. Finally, experiments are run to assess the impact of screen size (smartphone, tablet, computer) and visual presentation mode (2D, 3D, AR, VR) on visual fatigue. This study finds that (1) the brain wave features which influence the reaction to 2D and 3D imaging are the delta and theta waves at the prefrontal Fp1 and Fp2 poles. When viewing AR images, the alpha bands at the O1 and O2 poles of the occipital lobe show a relatively clear impact, while the delta and theta waves at the C3 pole in the left central area are associated with VR images; (2) larger screens cause greater visual fatigue, indicating that excessive visual stimulation increases visual loading and thus produces greater visual fatigue; (3) VR can cause quite severe visual fatigue, along with motion sickness based on sensory mismatch. Therefore, it is recommended to avoid viewing experiences that are inconsistent with the brain’s physiological experience, such as walking while viewing a mobile phone, or reading in a moving car. The proposed visual fatigue assessment model provides easy and objective quantification of visual fatigue indicators, contributing to the reduction of risk for eye injury and disease.

1 Introduction

Given the high prevalence of Internet applications and mobile devices, people are surrounded by screens of different sizes, and computers and smartphones are integral to both work and leisure life. Looking at digital screens for extended periods can cause eyes to feel sore and tired, but can also result in dizziness, headache, and nausea, and can also contribute to eye diseases including myopia, cataracts, and glaucoma. In recent years, digital imaging technologies have advanced far beyond simple 2D renderings, with three-dimensional (3D) display technologies, 3D movies, and other visualization modes gradually becoming integrated into daily life. Technologies such as augmented reality (AR) and virtual reality (VR) are widely used in education and entertainment applications, and are used to allow consumers to view cars, houses, and other potential purchases from a distance but still with a sense of presence. However, few studies have examined the impact of such technology usage on visual fatigue, and the potential effects of long-term use on human eye comfort remain unclear. Therefore, it is increasingly important to understand the impact of these digital imaging methods on eye fatigue.

Moreover, most people ignore eye fatigue until the condition begins to seriously impact daily life, thus losing the opportunity for early intervention and treatment. An objective assessment tool for the real-time detection and evaluation of eye fatigue is therefore needed. However, most previous research on visual fatigue detection has depended on questionnaires and scales, which cannot directly or quickly measure the degree of visual fatigue, making it impossible to provide immediate detection and feedback. Such approaches are also easily biased by subjective perception and individual experience, thus leading to accuracy problems [1,2,3]. The electroencephalogram (EEG) can directly display brain wave states, and EEG response in the visual areas of the brain can be measured to identify eye fatigue. Thus, changes in brain wave characteristics can be used to evaluate eye fatigue [4,5,6,7].

This study combines the Butterworth filter (BF), fast Fourier transform (FFT), linear discriminant analysis (LDA), and Min–Max scaling techniques to develop a model for assessing visual fatigue based on EEG signals. Visual fatigue is quantified and expressed numerically before being classified as mild, moderate, or severe. The real-time detection and evaluation of eye fatigue could be used to trigger prompts and reminders to modify screen usage behavior, thus potentially reducing the incidence of eye strain caused by intensive screen use. In addition, to understand the impact of screen size and visualization type on the eyes, experimental scenarios are designed with different screen sizes (smartphones, tablets, and computers) and visualization types (2D, 3D, AR, and VR), combined with a conventional visual fatigue questionnaire to observe the impact of screen size and presentation mode on visual fatigue. The research results can provide insight into the varying effects of screen size and visualization type, thus facilitating appropriate adjustment and self-protection to prevent excessive strain and reduce the risk of serious eye damage.

2 Literature review

2.1 Visual fatigue

Visual fatigue is caused by improper extended eye use (such as close-up or excessive viewing behavior), or by external environmental stimuli (such as flickering or insufficient lighting, or inability to fix on the visual target). Short-term eye fatigue can cause sore or dry eyes, poor focus, blurred vision, image persistence, eye redness, or increased eye secretions. Long-term fatigue can cause objects to become unrecognizable at close range, or incorrect color recognition, and can even cause significant physical and mental conditions that can lead to eye damage including glaucoma, cataracts, dry eye, cornea whitening, and refractive distortion.

Visual display terminals (VDTs) generally refer to monitors found in computers, mobile phones, and tablet computers. VDT syndrome is caused by overuse of such monitors and causes discomfort to the eyes and other parts of the body. Decreased vision and dry eye can also lead to poor posture, causing soreness in the shoulders, back, and neck, and can also contribute to loss of appetite, anxiety, and depression [8, 9]. Studies have found that prolonged use of electronic screens is an important cause of visual fatigue, and may contribute to false myopia and other vision-related problems [10,11,12].

Previous research has found that factors such as viewing distance, font size, background color, screen brightness, ambient brightness, contrast, usage time, and binocular parallax in 3D stereo images can cause users to experience symptoms of visual fatigue and discomfort [13,14,15,16,17].

2.2 Relationship between brain waves and visual fatigue

Electroencephalographic (EEG) signals reflect changes in the current generated by cells in the cerebral cortex, indicating synaptic activity of brain neurons and thus the state of the brain [18]. The cerebral cortex can be divided into three functional areas: sensory, motor, and cognitive [19, 20]. The sensory areas receive and process sensory information, and include the visual cortex of the occipital lobe, the auditory cortex of the temporal lobe, and the primary somatosensory cortex of the postcentral gyrus of the parietal lobe, which receives tactile information from the various parts of the body [20, 21].

Although visual fatigue cannot be directly measured, previous research indicates that visual fatigue is reflected by EEG signals, and changes in degree of fatigue are also reflected in the intensity of brain wave band activity. Chen et al. [6] found that viewing 3D screens is prone to causing visual fatigue, and is associated with significant changes in the relative energy in the α, β, and δ bands. They also found that the level of visual fatigue is clearly associated with changes in brain wave activity in the frontal lobe. Chen et al. [7] established a 3D visual fatigue assessment model by calculating the gravity frequency and power spectral entropy of brain waves at the left forehead electrode point (Fp1). Kim and Lee [22] also found 3D visual fatigue affects EEG activity in the beta band (14–25 Hz). Park et al. [23] found that watching 3D movies was more likely to cause visual fatigue than 2D movies, and found that alpha wave activity was higher in the frontal lobe (i.e., F3 and F4 poles).

2.3 Related works

Previous visual fatigue assessment has mostly been conducted using scales, with the degree of fatigue determined in terms of the subjective perception of the subject [3]. The subjective visual fatigue scale developed by Heuer et al. [1] has been widely used in studies on visual fatigue. Later research explored the impact of additional factors on eye fatigue, such as visual stress, eye pain, body pain, and image blurring [24]. Previous studies have also suggested that critical flicker fusion frequency (CFF), blink frequency, eye movement, and spatial frequency adaptability can be used as objective indicators of visual fatigue [14, 25, 26].

Some more recent studies have noted that visual fatigue is related to the δ, θ, α, and β brain wave bands [6]. Studies based on event-related potentials (ERP) [27] or steady-state visual evoked potentials (SSVEP) [28] have explored how visual fatigue relates to different brain regions and to the intensity of EEG activity. Studies using functional magnetic resonance imaging (fMRI) to evaluate visual fatigue found that excessive visual stimulus increases the neuronal activity of the frontal eye fields [5, 29]. Previous studies have also explored the relationship between heart rate variability (HRV) and visual fatigue [7, 30]. Furthermore, heart–brain synchronization, heartbeat evoked potential (HEP), and brain wave event-related potential (ERP) have been used to assess visual fatigue resulting from viewing of 3D images [23].

3 Methods

3.1 Linear discriminant analysis

Linear discriminant analysis (LDA) combines statistics and machine learning to find linear combinations of features that separate two or more classes. In addition to being used for classification [31], it is also used for dimension reduction [32]. LDA projects labeled samples from an n-dimensional space onto a smaller k-dimensional subspace (where k ≤ n − 1) while maintaining class-discriminatory information. Following the Fisher criterion, it seeks a projection matrix W, composed of a set of optimal discriminant vectors, that maximizes the ratio of between-class scatter to within-class scatter. The objective function J(W) is defined as follows:

\[ J(W) = \frac{\left| W^{\text{T}} S_{b} W \right|}{\left| W^{\text{T}} S_{w} W \right|} \tag{1} \]

where \(S_{b}\) is the between-class scatter (the degree of dispersion among the groups), and \(S_{w}\) is the within-class scatter (the degree of aggregation within the groups), which are defined as follows:

\[ S_{b} = \sum_{i = 1}^{C} N_{i} \left( \mu_{i} - \mu \right)\left( \mu_{i} - \mu \right)^{\text{T}} , \qquad S_{w} = \sum_{i = 1}^{C} \sum_{j = 1}^{N_{i}} \left( x_{j}^{i} - \mu_{i} \right)\left( x_{j}^{i} - \mu_{i} \right)^{\text{T}} \]

where C represents the number of categories, N is the total number of data samples, \(N_{i}\) is the number of samples in the ith category, \(\mu\) is the mean of all data, \(\mu_{i}\) is the mean of the ith category, and \(x_{j}^{i}\) is the jth sample of the ith category.

From Eq. (1), we see that W is the eigenvector matrix of \(S_{w}^{ - 1} S_{b}\), where the optimal projection direction is obtained by solving the following eigenvalue problem:

\[ S_{w}^{ - 1} S_{b} W = \lambda W \]

where λ is the Lagrange multiplier.

After obtaining W, a discriminant function can be established by the equation \(y = W^{{\text{T}}} x\) as follows:

\[ y = w_{1} x_{1} + w_{2} x_{2} + \cdots + w_{n} x_{n} \]

where y is the discriminant score, \(x_{i}\) are the features or variables, and \(w_{i}\) are the discriminant weights. The discriminant score of an observation can be obtained using the discriminant function, and the observation can then be classified accordingly.
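The projection described in this section can be illustrated with a short NumPy sketch. It is a minimal illustration under stated assumptions, not the implementation used in this study: the function name `fisher_lda`, the toy two-class data, and the use of a pseudo-inverse to guard against a singular within-class scatter matrix are all choices made for the example.

```python
import numpy as np

def fisher_lda(X, y, k=1):
    """Return the top-k Fisher discriminant directions as columns of W.

    Hypothetical helper written for illustration only.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    mu = X.mean(axis=0)                      # overall mean
    n_features = X.shape[1]
    S_w = np.zeros((n_features, n_features))  # within-class scatter
    S_b = np.zeros((n_features, n_features))  # between-class scatter
    for label in np.unique(y):
        X_i = X[y == label]
        mu_i = X_i.mean(axis=0)
        centered = X_i - mu_i
        S_w += centered.T @ centered
        diff = (mu_i - mu).reshape(-1, 1)
        S_b += len(X_i) * (diff @ diff.T)
    # Eigenvectors of S_w^{-1} S_b; pinv is an assumption made here to
    # tolerate a singular S_w.
    eigvals, eigvecs = np.linalg.eig(np.linalg.pinv(S_w) @ S_b)
    order = np.argsort(eigvals.real)[::-1]
    return eigvecs[:, order[:k]].real

# Toy data: two well-separated classes in two dimensions (synthetic).
rng = np.random.default_rng(0)
low = rng.normal(loc=[2.0, 2.0], scale=0.5, size=(50, 2))
high = rng.normal(loc=[5.0, 4.0], scale=0.5, size=(50, 2))
X = np.vstack([low, high])
y = np.array([0] * 50 + [1] * 50)

W = fisher_lda(X, y, k=1)
scores = X @ W  # discriminant scores, y = W^T x for each sample
```

The sign of each eigenvector is arbitrary, so the scores are meaningful only up to a sign flip; what matters is that the two classes are well separated along the projected axis.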

3.2 Min–Max scaling

The data used for analysis suffer from inconsistent variable ranges. To process data in different ranges, feature scaling can be used to normalize the independent variables so that their ranges are uniform. The Min–Max scaling method rescales data from different ranges to a fixed interval, usually [0, 1] or [−1, 1]. For the interval [0, 1], the following formula is used:

\[ x^{\prime} = \frac{x - \min (x)}{\max (x) - \min (x)} \]

where x is the original value, \(x^{\prime}\) is the normalized value, and \(\min (x)\) and \(\max (x)\) are the minimum and maximum of the variable over all samples.
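As a small illustration of Min–Max scaling, the following Python sketch rescales a list of values to a chosen interval. The function name `min_max_scale` and the sample values are hypothetical and are not taken from the experimental data.

```python
def min_max_scale(values, new_min=0.0, new_max=1.0):
    """Linearly rescale values so that min -> new_min and max -> new_max."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values identical: the scaling formula would divide by zero.
        raise ValueError("all values are identical; scaling is undefined")
    span = hi - lo
    return [new_min + (v - lo) * (new_max - new_min) / span for v in values]

raw = [2.0, 4.0, 6.0, 10.0]                 # illustrative values only
unit = min_max_scale(raw)                   # [0.0, 0.25, 0.5, 1.0]
signed = min_max_scale(raw, -1.0, 1.0)      # [-1.0, -0.5, 0.0, 1.0]
```

Because the minimum and maximum are taken from the data, a single extreme value can compress all other scaled values into a narrow band, which is worth keeping in mind when the scaled value is interpreted as a score.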

4 Experiment

4.1 Participants

Four experimental scenarios were designed, one each for 2D, 3D, AR, and VR. After explaining the experimental process, 30 subjects provided informed consent and met the following criteria: vision over 0.8, right-handed, and without color recognition disability or other eye diseases.

In the 2D experiment, the subjects watched a 110-min-long animated movie on a smartphone, a tablet computer, and a desktop computer, with respective screen sizes of 5, 9, and 19 inches. All viewings took place at a similarly suitable distance. The 18 subjects (9 men and 9 women) were randomly divided into three experimental groups of 6 (3 men and 3 women). Subjects were aged 18–27 years with a mean age of 20.6 years (SD 2.35). In the 3D experiment, ten subjects [5 men and 5 women, aged 18–24 with an average age of 20.1 years (SD 1.91)] played the 3D game “Minecraft” on a 19-inch computer screen. In the VR experiment, eight subjects [4 men and 4 women, aged 19–25 with a mean age of 21.25 years (SD 2.12)] played the VR version of Minecraft using an Oculus Rift virtual reality headset. The game was run on the Microsoft Xbox One platform, using an Xbox One Wireless Gamepad. In the AR experiment, 12 subjects [6 men and 6 women, aged 19–25 years with an average age of 22 years (SD 2.09)] played an AR shooting game, in which the embedded camera of a smartphone (5″ screen) or a tablet computer (9″ screen) is used to view a virtual environment overlaid on the physical environment. Table 1 summarizes demographic data for all participants.

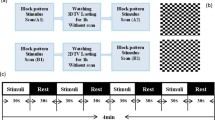

4.2 Experimental procedure

This study examines the impact of different visualization modes (2D, 3D, AR and VR) and screen sizes (smartphone, tablet, and computer) on visual fatigue. Participants either watched videos or played games in seven experimental scenarios: 2D mobile video, 2D tablet video, 2D computer video, 3D computer game, AR mobile game, AR tablet game, and VR computer game. Brain wave data were collected by EEG, and participants also completed a visual fatigue questionnaire and a three-dimensional visual fatigue questionnaire [1, 24]. EEG recordings and questionnaires were administered to the experimental groups both before and after the visual stimulus to assess the different visual fatigue impacts of the various stimuli and viewing conditions. To avoid interference, in all experimental scenarios participants were seated individually in a quiet room. The 2D experiment lasted 110 min, while the 3D, AR, and VR experiments lasted 1 h without interruption. Participants were told they could stop the experiment at any time if they began to feel dizzy or nauseous.

The experimental design is divided into two parts: (1) the combined effect of different visual stimuli and screen size on visual fatigue and (2) the effect of screen size on visual fatigue. However, the study is subject to certain limitations imposed by the experimental equipment and EEG instruments. For example, the camera operation of AR and VR games needs to be matched with body movement. Nevertheless, the experimental design controls the impact of other environmental factors as much as possible. The experimental process was designed with reference to previous studies [7, 33]. Before the experiment, EEG measurements were taken of each participant for five minutes with their eyes continuously shut. Participants also completed a visual fatigue questionnaire to collect basic participant information and a baseline value for visual fatigue. Then, the various experimental groups either watched videos or played games. To prevent excessive eye fatigue, participants were verbally asked every 10 min if they were able to continue without interrupting the experiment [34, 35]. After the experiment, participants were again subjected to five minutes of EEG recording, and two questionnaires were completed to identify changes in visual fatigue before and after the experiment. EEG data were continuously collected from the 2D and 3D groups, but this was not feasible for the AR and VR groups due to interference from the visualization equipment and/or regular head shaking during game play. Figure 1 shows the experimental process.

4.3 Data collection

4.3.1 Brain–computer interfaces

As shown in Fig. 2, this study uses the BR8 PLUS eight-channel electroencephalogram instrument produced by Brain Rhythm Inc., with electrodes placed at Fp1, Fp2, Fz, C3, C4, Pz, O1, and O2. As shown in Fig. 3, the reference electrode and the circuit grounding system are connected to the left and right earlobes to measure the EEG signal. The measurement frequency range is 0.12–125 Hz, and the sampling frequency is 1000 Hz.

4.3.2 Visual fatigue measurement questionnaire

To demonstrate that brain waves can effectively reflect degree of visual fatigue, an energy-based indicator for measuring the degree of visual fatigue is needed. Therefore, a visual fatigue questionnaire [1] was used to collect the participants’ subjective feelings of visual fatigue. The visual fatigue questionnaire has six questions with a 10-point Likert scale: (1) “I have difficulty seeing”; (2) “I have a strange feeling around the eyes”; (3) “My eyes feel tired”; (4) “I feel numb”; (5) “I have a headache”; (6) “I feel dizzy looking at the screen.” The results of the six questions are summed to produce a subjective visual fatigue score.

3D visual stimulation can cause visual fatigue due to stereoscopic perception [36, 37], which is also featured in the visual presentation of AR and VR [38, 39]. Stereoscopic perception easily leads to visual fatigue, along with physical symptoms including headache, nausea, and dizziness [40]. A three-dimensional visual fatigue questionnaire [24] was used to evaluate visual fatigue and physical discomfort caused by 3D visual stimuli. This questionnaire uses a five-point scale to score 29 questions that assess four independent factors: visual stress (VS) (15 items), eye pain (EP) (10 items), body pain (BP) (4 items), and image blurring factor (IBF) (3 items) (Table 2).

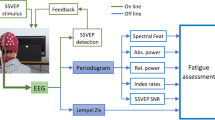

4.4 A visual fatigue assessment model

4.4.1 EEG signal processing and features

A Butterworth filter was used to filter out the frequency bands vulnerable to interference, while retaining the required brain wave frequency band (0.5–30 Hz) [41]. Fast Fourier transform is used to convert the time-domain brain wave signal into the frequency domain, which is used to extract the δ (0.5–4 Hz), θ (4–8 Hz), α (8–13 Hz), and β (13–30 Hz) brain wave bands. Electroencephalogram data were divided into 10 s samples [42] to observe changes in EEG signal values. This study took the average values of all signals in four frequency bands at eight electrode points (Fp1, Fp2, Fz, C3, C4, Pz, O1, and O2) [6, 43, 44], for a total of 32 brain wave features.
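The band feature extraction described above can be sketched in a few lines of NumPy. This is an illustrative sketch only: the helper name `band_features`, the use of the mean FFT amplitude as the per-band value, and the synthetic test signal are assumptions, and the Butterworth band-pass step is omitted because the band limits are applied directly in the frequency domain here.

```python
import numpy as np

# Band limits in Hz as given in the text.
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
}

def band_features(segment, fs):
    """Mean FFT amplitude per band for one single-channel segment.

    Hypothetical helper; the mean-amplitude definition is an assumption.
    """
    segment = np.asarray(segment, dtype=float)
    # Remove the DC offset before transforming to the frequency domain.
    spectrum = np.abs(np.fft.rfft(segment - segment.mean()))
    freqs = np.fft.rfftfreq(segment.size, d=1.0 / fs)
    return {
        name: float(spectrum[(freqs >= lo) & (freqs < hi)].mean())
        for name, (lo, hi) in BANDS.items()
    }

fs = 1000                       # sampling frequency in Hz, as in the text
t = np.arange(10 * fs) / fs     # one 10 s sample
# Synthetic signal: strong 10 Hz (alpha) plus weaker 20 Hz (beta) component.
signal = np.sin(2 * np.pi * 10 * t) + 0.2 * np.sin(2 * np.pi * 20 * t)
features = band_features(signal, fs)
```

Applying such a function to each of the eight electrode channels would yield the 8 × 4 = 32 features per 10 s sample mentioned in the text.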

4.4.2 Feature selection

This study collects brain wave data in response to different visual presentations (2D, 3D, AR, VR) and then categorizes eye fatigue as mild, moderate, or severe. ANOVA is performed to identify important brain wave characteristics that can distinguish different degrees of visual fatigue. The analysis results for the 3D group showed that the delta and theta waves of the prefrontal lobe (Fp1 and Fp2) were significantly different (p < 0.001). Past studies have also shown that 3D visual fatigue has significant effects in the frontal, central, and occipital regions, with the frontal lobe being the most significant [7]. The analysis results for the 2D group found significant characteristics similar to those of the 3D group, namely the delta (p < 0.001) and theta (p < 0.01) bands of the prefrontal lobe (Fp1 and Fp2), indicating little difference in the visual fatigue characteristics of the 2D and 3D groups. In the AR group, the delta and theta bands of the prefrontal lobe (Fp1 and Fp2) did not reach a level of significance, but the alpha band (p < 0.05) of the occipital lobe (O1 and O2) did, indicating that viewing AR images had different brain stimulation effects than those found with 2D and 3D. The VR group, however, showed significant differences in the delta (p < 0.001) and theta (p < 0.05) bands in the left central area (C3), which differs from the other three visual presentation methods.
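To make the feature screening step concrete, the following pure-Python sketch computes the one-way ANOVA F statistic for a single feature across fatigue groups. The function name `one_way_anova_f` and the numbers are hypothetical and are not the study's data; obtaining a p value additionally requires the F distribution, for example through `scipy.stats.f_oneway`.

```python
def one_way_anova_f(groups):
    """Return (F statistic, between-group df, within-group df)."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of the samples around their own group mean.
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Illustrative values of one brain wave feature in three fatigue groups.
mild = [1.0, 2.0, 3.0]
moderate = [2.0, 3.0, 4.0]
severe = [5.0, 6.0, 7.0]
f_stat, df_b, df_w = one_way_anova_f([mild, moderate, severe])  # F = 13.0, df = (2, 6)
```

A feature would be retained when its F statistic is large enough for the corresponding p value to fall below the chosen significance level.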

4.4.3 Visual fatigue assessment model

Brain wave analysis found similar brain wave patterns for 2D and 3D stimulation, while AR and VR stimulated different brain regions and bands. Therefore, this study used significantly different brain wave characteristics to develop three models for visual fatigue assessment.

Linear discriminant analysis was used to map the sample points of the above-mentioned multi-dimensional brain wave features onto a single-dimensional axis, and a discriminant function was established to calculate the discriminant score corresponding to this axis, that is, the yi value which is the degree of visual fatigue. Equation (5) is a discriminant function established by 2D and 3D brain wave characteristics and experimental data.

To assess the degree and significance of the value of yi in the entire sample data, all sample points must be assigned to the same scaling range through normalization. This study uses the Min–Max scaling method to rescale all values to [0, 1] [45]. To determine the distribution of all values, the discriminant scores of all sample points need to be calculated to obtain the maximum and minimum values.

For example, for subject a, the EEG feature values for Fp1.Delta, Fp1.Theta, Fp2.Delta, and Fp2.Theta are 9.112610565, 2.122571031, 10.18418902, and 2.201798311, respectively. Eq. (5) gives \(y_{{\text{a}}}\) as 6.2011, and the discriminant scores of all sample points are calculated to obtain the maximum value (8.396579) and the minimum value (− 0.014483).

The visual fatigue assessment index Va is obtained by applying Min–Max scaling to the discriminant score and then multiplying by 100. Therefore, the visual fatigue degree of subject a is estimated to be about 33.8065.

4.4.4 Effectiveness evaluation of visual fatigue model

In the 2D and 3D experiments, the brain wave data of 10 subjects were selected from the original training data set, and the experimental data were divided into four segments based on the viewing time of each participant. Together with the brain wave data recorded before and after the experiment, this gives six stages. Under normal circumstances, visual fatigue increases with the length of viewing [12]. The average degree of visual fatigue at each stage of the experiment is calculated using the proposed brain wave-based evaluation model and plotted in Fig. 4a. It can be seen that 8 out of the 10 participants show a continuous increase in eye fatigue. The dashed lines in different colors represent the data for each subject, and the solid black line is the average trend of all data. Using the same experimental procedure, the brain wave data of 5 additional participants were collected for testing (all male, mean age 20.4 years, SD 1.52), with the results shown in Fig. 4b. It can be seen that 4 of the 5 participants experienced continuously increasing eye fatigue. For the AR and VR experiments, only brain wave data before and after the experiment were collected, and Figs. 5 and 6 show that the degree of visual fatigue increased significantly over these two stages. Of the 12 AR game participants, 11 experienced increased fatigue, and all 8 VR game participants showed increased fatigue. The trend shown in the above results is similar to the results of the visual fatigue questionnaire, indicating that the proposed evaluation model is reliable and accurate for assessing visual fatigue, and thus can be used as an auxiliary tool for visual fatigue detection.

5 Analysis and results

5.1 Effects of visualization type on visual fatigue

This study uses visual fatigue questionnaire scores [1] and brain wave data as indicators for assessing visual fatigue. It then explores the impact of visualization mode (2D, 3D, AR, VR) and screen size (smartphones, tablets, and computer screens) on visual fatigue in different experimental scenarios. For changes in the degree of visual fatigue, a paired-samples t test was used to detect whether the differences in the visual fatigue score and the brain wave index before and after the experiment were significant. Table 3 shows that the results for 2D movies, 3D games, AR games, and VR games are consistent, and most of the brain wave evaluation indicators and scale scores show significant differences (p < 0.01), with the average degree of post-experiment visual fatigue significantly higher than prior to the experiment, indicating that eye fatigue indeed increases with viewing time using the tested visualization methods. The pre/post differences in the mean brain wave evaluation index and scale score are larger for the 3D and VR groups than for the other visual presentation methods (2D, AR). VR games produce greater visual fatigue than 3D games, which may indicate that the type of visual stimulus has a significant effect on the degree of visual fatigue. The average value of the brain wave evaluation index for AR game play also showed an increase, but it did not reach the level of statistical significance.
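The paired-samples comparison can be sketched as follows. The function name `paired_t` and the before/after scores are hypothetical and serve only to illustrate the computation; converting the statistic to a p value requires the t distribution, for example through `scipy.stats.ttest_rel`.

```python
import math

def paired_t(before, after):
    """Return (t statistic, degrees of freedom) for paired measurements."""
    diffs = [a - b for b, a in zip(before, after)]
    n = len(diffs)
    mean_d = sum(diffs) / n
    # Sample variance of the differences (n - 1 in the denominator).
    var_d = sum((d - mean_d) ** 2 for d in diffs) / (n - 1)
    t_stat = mean_d / math.sqrt(var_d / n)
    return t_stat, n - 1

# Illustrative fatigue scores of four participants before and after viewing.
before = [20.0, 25.0, 30.0, 22.0]
after = [30.0, 33.0, 41.0, 35.0]
t_stat, dof = paired_t(before, after)
```

A positive t statistic here means that the post-experiment scores are higher on average than the pre-experiment scores for the same participants.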

5.2 Physical discomfort caused by visual fatigue

The 3D visual fatigue questionnaire is used to explore the effect of visual fatigue on physical discomfort, using the paired-samples t test to identify significant differences in the four visual fatigue symptom scores before and after the experiment. The results summarized in Table 4 show significant differences for the 2D, 3D, AR, and VR visualizations across the four physical symptoms (p < 0.05), especially in terms of visual stress and eye pain (p < 0.01). For AR game play, the body pain dimension is found to be very significant (p < 0.001). A detailed comparison of the various questions related to body pain shows significant differences for “shoulder stiffness” and “neck stiffness” (p = 0.001). In the 3D and VR groups, the average value of the “dizziness” response was high, and subjects were more likely to stop the experiment because of dizziness or headache, possibly due to 3D motion sickness caused by stereoscopic discomfort.

5.3 Comparison of physical discomfort caused by visual fatigue

The 2D video-watching group is also assessed in terms of the impact of different screen sizes (smartphones, tablets, and computer screens) on visual fatigue, using the visual fatigue questionnaire score and brain wave assessment indicators, followed by analysis of covariance (ANCOVA). The pre-experimental data are set as covariates to prevent influence from inconsistent initial visual fatigue values for each participant, and to reduce the influence of individual differences and experiment time. Levene’s test was used to test the homogeneity of variance, giving p = 0.289 (scale score) and p = 0.507 (EEG index), so the homogeneity assumption was not rejected. Table 5 shows that the effect of screen size on visual fatigue reached a level of significance. In Tukey post hoc tests, although the subjective scale scores were not significant, smartphone and computer screens showed significant differences in terms of brain wave evaluation indicators (p = 0.022). Comparing the adjusted means, the visual fatigue of the computer screen group (85.507) is higher than that of the other two groups (67.710, 74.934), indicating that screen size is positively associated with the degree of visual fatigue.

5.4 Discussion

5.4.1 Brain wave characteristics

In this study, different brain regions and features were identified for different visual presentation methods. Among them, 2D and 3D have similar features, namely the delta and theta bands of the prefrontal lobe (Fp1 and Fp2). AR differs from 2D and 3D, with the alpha band of the occipital lobe (O1 and O2) as its characteristic feature. This may be because the visual stimulation and usage experience of AR differ from those of 2D and 3D: the visual stimulation comes from virtual objects presented in the real world, which causes the difference in AR brain wave characteristics. Finally, an important brain wave feature of VR is found in the left central region (C3), indicating that the effect of VR on the brain is quite different from that of the other visual presentation methods. Past research shows that some people experience “VR motion sickness” (cybersickness) [46, 47], and that the parietal lobe, occipital lobe, and motor areas are related to motion sickness [48,49,50]. The motor areas are located in the gyri immediately anterior and posterior to the central sulcus (C3 and C4) and are responsible for part of the movement and somatosensory functions. The visual stimulation of VR may lead the brain to perceive a conflict between body movement and visual input, causing sensory mismatch [51,52,53].

5.4.2 Different visualization modes

Among the different visualization modes tested, 3D and VR viewing resulted in greater visual fatigue than 2D and AR. Most people are highly accustomed to passive viewing of 2D visualizations, while AR visualizations are more dynamic and integrated into the user’s physical response, and the resulting greater visual processing requirements may lead to greater fatigue. Likewise, the visual misalignment and stereoscopic effects of 3D and VR impose significant visual processing demands on the brain and thus contribute to feelings of fatigue.

Experimental results show the use of VR can cause a type of motion sickness, called simulator sickness or cybersickness. Symptoms include nausea, eye fatigue, and dizziness [54, 55]. The most widely accepted reason for motion sickness is sensory mismatch, which occurs when the brain's sensory input is inconsistent with expectations or predictions generated by previous experience, and the severity of motion sickness depends on the degree of sensory mismatch [51,52,53, 56].

However, many people now view their mobile phone screens while walking or in moving cars, which exposes them to a similar sensory mismatch between the static visual input and the bodily sense of movement, and can thus result in similar feelings of discomfort. Although this study finds that smaller screens produce less visual fatigue, incorrect screen usage can also be quite harmful to the eyes, and further research is needed. In addition, AR is prone to cause shoulder and neck soreness. AR applications require users to hold and actively point the camera of their mobile device to interact with the application content, moving and rotating the device to access different viewpoints, and continuous extended usage can contribute to neck and shoulder fatigue.

5.4.3 Different screen sizes

This study explores the effects of different screen sizes on visual fatigue from watching 2D videos. Computer screens (19 inches) result in greater visual fatigue than smartphone or tablet computer screens, and the degree of fatigue is found to increase with screen size. Past research has suggested that smaller screens are more taxing on the eyes [11, 57], but those studies focused on the impact of text size on reading speed. The present experiment focuses on the viewing of videos, which is less taxing than text reading and may account for this discrepancy. Future studies should examine the impact of various visual stimuli on visual fatigue.

6 Conclusions

The main contributions of this study are as follows: (1) Brain wave measurements and questionnaire responses were used to assess the impact of extended 2D, 3D, AR and VR visual stimulation on eye fatigue, using ANOVA to identify brain regions and features that reflect the impact of the various visualization modes, providing a useful reference for continuing research on factors contributing to visual fatigue. (2) A brain wave-based visual fatigue assessment model is developed based on different brain wave characteristics, and the degree of visual fatigue is quantified in a fixed range, providing a more objective alternative to questionnaires for assessing visual fatigue. This can assist clinicians in making diagnoses, and also help users adjust their screen usage behaviors to protect against excessive use and reduce the risk of eye injury. (3) The effects of different screen sizes and visualization modes on visual fatigue are discussed, providing new insight. Experimental results show that screen size is positively related to degree of visual fatigue, and that 3D and VR viewing place considerable strain on the eyes, brain, and other parts of the body. Thus, users of these technologies should carefully monitor their discomfort level and duration of use, and minimize additional body movement while viewing. In addition, the research results provide some suggestions for protecting the eyes of the public. Users should avoid watching a screen while the body is in motion (such as looking at a mobile phone while walking or using transportation), and should limit watching movies or playing games that use 3D or VR visual rendering technology. The larger the screen, the greater the distance that should be kept between the eyes and the screen.

Change history

03 November 2020

The original article has been corrected: in Figure 4, the label for part (b) was missing.

References

Heuer H, Hollendiek G, Kröger H, Römer T (1989) Die Ruhelage der Augen und ihr Einfluß auf Beobachtungsabstand und visuelle Ermüdung bei Bildschirmarbeit (Rest position of the eyes and the influence of observation distance and visual fatigue on VDT work). Z Exp Angew Psychol 36(4):538–566

Li HO, Seo J, Kham K, Lee S (2008a) Method of measuring subjective 3D visual fatigue: a five-factor model. In: Digital holography and three-dimensional imaging (p. DWA5). Optical Society of America. https://doi.org/10.1364/DH.2008.DWA5

Gutiérrez J, Pérez P, Jaureguizar F, Cabrera J, García N (2011) Subjective assessment of the impact of transmission errors in 3DTV compared to HDTV. In: 3DTV IEEE Conference: The True Vision-Capture, Transmission and Display of 3D Video (3DTV-CON). pp 1–4. https://doi.org/10.1109/3DTV.2011.5877209

Yano S, Ide S, Mitsuhashi T, Thwaites H (2002) A study of visual fatigue and visual comfort for 3D HDTV/HDTV images. Displays 23(4):191–201. https://doi.org/10.1016/S0141-9382(02)00038-0

Kim D, Jung YJ, Kim E, Ro YM, Park H (2011) Human brain response to visual fatigue caused by stereoscopic depth perception. In: 2011 International Conference on IEEE Digital Signal Processing (DSP). pp 1–5. https://doi.org/10.1109/ICDSP.2011.6004997

Chen C, Li K, Wu Q, Wang H, Qian Z, Sudlow G (2013) EEG-based detection and evaluation of fatigue caused by watching 3DTV. Displays 34(2):81–88. https://doi.org/10.1016/j.displa.2013.01.002

Chen C, Wang J, Li K, Wu Q, Wang H, Qian Z, Gu N (2014) Assessment visual fatigue of watching 3DTV using EEG power spectral parameters. Displays 35(5):266–272. https://doi.org/10.1016/j.displa.2014.10.001

Wang AH, Chen MT (2000) Effects of polarity and luminance contrast on visual performance and VDT display quality. Int J Ind Ergon 25(4):415–421. https://doi.org/10.1016/S0169-8141(99)00040-2

Lin CC, Huang KC (2006) Effects of ambient illumination and screen luminance combination on character identification performance of desktop TFT-LCD monitors. Int J Ind Ergon 36(3):211–218. https://doi.org/10.1016/j.ergon.2005.11.004

Lin YH, Chen CY, Lu SY, Lin YC (2008) Visual fatigue during VDT work: effects of time-based and environment-based conditions. Displays 29(5):487–492. https://doi.org/10.1016/j.displa.2008.04.003

Lee DS, Ko YH, Shen IH, Chao CY (2011) Effect of light source, ambient illumination, character size and interline spacing on visual performance and visual fatigue with electronic paper displays. Displays 32(1):1–7. https://doi.org/10.1016/j.displa.2010.09.001

Chu C, Rosenfield M, Portello JK, Benzoni JA, Collier JD (2011) A comparison of symptoms after viewing text on a computer screen and hardcopy. Ophthalmic Physiol Opt 31(1):29–32. https://doi.org/10.1111/j.1475-1313.2010.00802.x

Kooi FL, Toet A (2004) Visual comfort of binocular and 3D displays. Displays 25(2):99–108. https://doi.org/10.1016/j.displa.2004.07.004

Lee EC, Heo H, Park KR (2010) The comparative measurements of eyestrain caused by 2D and 3D displays. IEEE Trans Consum Electron 56(3):1677–1683. https://doi.org/10.1109/TCE.2010.5606312

Bando T, Iijima A, Yano S (2012) Visual fatigue caused by stereoscopic images and the search for the requirement to prevent them: a review. Displays 33(2):76–83. https://doi.org/10.1016/j.displa.2011.09.001

Lee H, Whang KW (2012) A quantitative measurement of LCD and PDP TVs for human visual preference and fatigue. Displays 33(1):1–6. https://doi.org/10.1016/j.displa.2011.08.001

Wang Q, Xu H, Gong R, Cai J (2015) Investigation of visual fatigue under LED lighting based on reading task. Optik 126(15–16):1433–1438. https://doi.org/10.1016/j.ijleo.2015.04.033

Nunez PL, Srinivasan R (2007) Electroencephalogram. Scholarpedia 2(2):1348. https://doi.org/10.4249/scholarpedia.1348

Rakic P (1988) Specification of cerebral cortical areas. Science 241(4862):170–176. https://doi.org/10.1126/science.3291116

Kandel ER, Schwartz JH, Jessell TM, Siegelbaum SA, Hudspeth AJ (2013) Principles of neural science, 5th edn. McGraw-Hill Medical, New York. ISBN:9780071390118

Ribas GC (2010) The cerebral sulci and gyri. Neurosurg Focus 28(2):E2. https://doi.org/10.3171/2009.11.FOCUS09245

Kim YJ, Lee EC (2011) EEG based comparative measurement of visual fatigue caused by 2D and 3D displays. In: International Conference on Human–Computer Interaction. https://doi.org/10.1007/978-3-642-22095-1_59

Park S, Won MJ, Lee EC, Mun S, Park MC, Whang M (2015) Evaluation of 3D cognitive fatigue using heart–brain synchronization. Int J Psychophysiol 97(2):120–130. https://doi.org/10.1016/j.ijpsycho.2015.04.006

Li HO (2010) Human factor research on the measurement of subjective three dimensional fatigue. J Broadcast Eng 15(5):607–616. https://doi.org/10.5909/JBE.2010.15.5.607

Mourant RR, Chantadisai LR (1981) Visual fatigue and cathode ray tube display terminals. Hum Factors 23(5):529–540. https://doi.org/10.1177/001872088102300503

Lunn R, Banks WP (1986) Visual fatigue and spatial frequency adaptation to video displays of text. Hum Fact J Hum Fact Ergon Soc 28(4):457–464. https://doi.org/10.1177/001872088602800407

Li HO, Seo J, Kham K, Lee S (2008b) Measurement of 3D visual fatigue using event-related potential (ERP): 3D oddball paradigm. In: IEEE 2008 3DTV Conference: The True Vision-Capture, Transmission and Display of 3D Video. https://doi.org/10.1109/3DTV.2008.4547846

Sakurada T, Kawase T, Komatsu T, Kansaku K (2015) Use of high-frequency visual stimuli above the critical flicker frequency in a SSVEP-based BMI. Clin Neurophysiol 126(10):1972–1978. https://doi.org/10.1016/j.clinph.2014.12.010

Chen C, Wang J, Li K, Liu Y, Chen X (2015) Visual fatigue caused by watching 3DTV: an fMRI study. Biomed Eng Online 14(1):1. https://doi.org/10.1186/1475-925X-14-S1-S12

Park S, Won MJ, Mun S, Lee EC, Whang M (2014) Does visual fatigue from 3D displays affect autonomic regulation and heart rhythm? Int J Psychophysiol 92(1):42–48. https://doi.org/10.1016/j.ijpsycho.2014.02.003

Pang S, Ozawa S, Kasabov N (2005) Incremental linear discriminant analysis for classification of data streams. IEEE Trans Syst Man Cybern Part B Cybern 35(5):905–914. https://doi.org/10.1109/TSMCB.2005.847744

Zhong F, Zhang J (2013) Linear discriminant analysis based on L1-norm maximization. IEEE Trans Image Process 22(8):3018–3027. https://doi.org/10.1109/TIP.2013.2253476

Li J, Chen C, Liu Y, Chen X (2016) Small-world brain functional network altered by watching 2D/3DTV. J Vis Commun Image R 38:433–439. https://doi.org/10.1016/j.jvcir.2016.03.023

Iatsun I, Larabi MC, Fernandez-Maloigne C (2015) Investigation and modeling of visual fatigue caused by S3D content using eye-tracking. Displays 39:11–25. https://doi.org/10.1016/j.displa.2015.07.001

Nalivaiko E, Davis SL, Blackmore KL, Vakulin A, Nesbitt KV (2015) Cybersickness provoked by head-mounted display affects cutaneous vascular tone, heart rate and reaction time. Physiol Behav 151:583–590. https://doi.org/10.1016/j.physbeh.2015.08.043

Kim H, Lee J (2015) A study on the possibility of implementing a real-time stereoscopic 3D rendering TV system. Displays 40:24–34. https://doi.org/10.1016/j.displa.2015.05.001

Vinnikov M, Allison RS, Fernandes S (2016) Impact of depth of field simulation on visual fatigue: Who are impacted? and how? Int J Hum Comput Stud 91:37–51. https://doi.org/10.1016/j.ijhcs.2016.03.001

Slobounov SM, Ray W, Johnson B, Slobounov E, Newell KM (2015) Modulation of cortical activity in 2D versus 3D virtual reality environments: an EEG study. Int J Psychophysiol 95(3):254–260. https://doi.org/10.1016/j.ijpsycho.2014.11.003

Soto C, Vargas M, Uribe-Quevedo A, Jaimes N, Kapralos B (2015) AR stereoscopic 3D human eye examination app. In: 2015 IEEE International Conference on Interactive Mobile Communication Technologies and Learning (IMCL). https://doi.org/10.1109/IMCTL.2015.7359594

Lambooij MT, IJsselsteijn WA, Heynderickx I (2007) Visual discomfort in stereoscopic displays: a review. In: Proceedings of the SPIE 6490, Stereoscopic Displays and Virtual Reality Systems XIV, 64900I. https://doi.org/10.1117/12.705527

Sayed K, Kamel M, Alhaddad M, Malibary HM, Kadah YM (2017) Characterization of phase space trajectories for brain–computer Interface. Biomed Signal Process Control 38:55–66. https://doi.org/10.1016/j.bspc.2017.05.007

Meyberg S, Werkle-Bergner M, Sommer W, Dimigen O (2015) Microsaccade-related brain potentials signal the focus of visuospatial attention. NeuroImage 104:79–88. https://doi.org/10.1016/j.neuroimage.2014.09.065

Jap BT, Lal S, Fischer P, Bekiaris E (2009) Using EEG spectral components to assess algorithms for detecting fatigue. Expert Syst Appl 36(2):2352–2359. https://doi.org/10.1016/j.eswa.2007.12.043

Jap BT, Lal S, Fischer P (2011) Comparing combinations of EEG activity in train drivers during monotonous driving. Expert Syst Appl 38(1):996–1003. https://doi.org/10.1016/j.eswa.2010.07.109

de Amorim RC, Hennig C (2015) Recovering the number of clusters in data sets with noise features using feature rescaling factors. Inform Sci 324:126–145. https://doi.org/10.1016/j.ins.2015.06.039

Regan EC, Price KR (1994) The frequency of occurrence and severity of side-effects of immersion virtual reality. Aviat Space Environ Med 65(6):527–530

Cobb SV, Nichols S, Ramsey A, Wilson JR (1999) Virtual reality-induced symptoms and effects (VRISE). Presence Teleoper Virtual Environ 8(2):169–186. https://doi.org/10.1162/105474699566152

Chen YC, Duann JR, Chuang SW, Lin CL, Ko LW, Jung TP, Lin CT (2010) Spatial and temporal EEG dynamics of motion sickness. NeuroImage 49(3):2862–2870. https://doi.org/10.1016/j.neuroimage.2009.10.005

Ko LW, Wei CS, Jung TP, Lin CT (2011) Estimating the level of motion sickness based on EEG spectra. In: International Conference on Foundations of Augmented Cognition. https://doi.org/10.1007/978-3-642-21852-1_21

Lin CT, Tsai SF, Ko LW (2013) EEG-based learning system for online motion sickness level estimation in a dynamic vehicle environment. IEEE Trans Neural Netw Learn Syst 24(10):1689–1700. https://doi.org/10.1109/TNNLS.2013.2275003

Treisman M (1977) Motion sickness: an evolutionary hypothesis. Science 197(4302):493–495. https://doi.org/10.1126/science.301659

Reason JT (1978) Motion sickness adaptation: a neural mismatch model. J R Soc Med 71(11):819. https://doi.org/10.1177/014107687807101109

Bos JE, Bles W, Groen EL (2008) A theory on visually induced motion sickness. Displays 29(2):47–57. https://doi.org/10.1016/j.displa.2007.09.002

Stanney KM, Kennedy RS, Drexler JM (1997) Cybersickness is not simulator sickness. Proc Hum Factors Ergon Soc Annu Meet 41(2):1138–1142. https://doi.org/10.1177/107118139704100292

LaViola JJ (2000) A discussion of cybersickness in virtual environments. ACM SIGCHI Bull 32(1):47–56. https://doi.org/10.1145/333329.333344

Choi MH, Alquzi MB, Hong M (2014) Assessment of human perceptual sensitivity to physically non-conforming motion in virtual environments. J Supercomput 69(3):1311–1323. https://doi.org/10.1007/s11227-014-1169-y

Lin H, Wu FG, Cheng YY (2013) Legibility and visual fatigue affected by text direction, screen size and character size on color LCD e-reader. Displays 34(1):49–58. https://doi.org/10.1016/j.displa.2012.11.006

Acknowledgement

The authors thank the Ministry of Science and Technology of Taiwan for Grant MOST 107-2410-H-025-010-MY2.

Cite this article

Lee, CC., Chiang, HS. & Hsiao, MH. Effects of screen size and visual presentation on visual fatigue based on regional brain wave activity. J Supercomput 77, 4831–4851 (2021). https://doi.org/10.1007/s11227-020-03458-w

DOI: https://doi.org/10.1007/s11227-020-03458-w