Abstract

We analyze the computational efficiency of approximate Bayesian computation (ABC), which approximates a likelihood function by drawing pseudo-samples from the associated model. For the rejection sampling version of ABC, it is known that multiple pseudo-samples cannot substantially increase (and can substantially decrease) the efficiency of the algorithm as compared to employing a high-variance estimate based on a single pseudo-sample. We show that this conclusion also holds for a Markov chain Monte Carlo version of ABC, implying that it is unnecessary to tune the number of pseudo-samples used in ABC-MCMC. This conclusion is in contrast to particle MCMC methods, for which increasing the number of particles can provide large gains in computational efficiency.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Approximate Bayesian computation (ABC) is a family of algorithms for Bayesian inference that address situations where the likelihood function is intractable to evaluate but where one can obtain samples from the model. These methods are now widely used in population genetics, systems biology, ecology, and other areas, and are implemented in an array of popular software packages (Tavare et al. 1997; Marin et al. 2012). Let \(\varvec{\theta }\in \Theta \) be the parameter of interest with prior density \(\pi (\varvec{\theta })\), \(\varvec{y}_{\mathrm {obs}}\in \mathcal {Y}\) be the observed data and \(p(\varvec{y}|\varvec{\theta })\) be the model. The simplest ABC algorithm first generates a sample \(\varvec{\theta }{^\prime } \sim \pi (\varvec{\theta })\) from the prior, then generates a pseudo-sample \(\varvec{y}_{\varvec{\theta }}{^\prime } \sim p( \cdot | \varvec{\theta }{^\prime })\). Conditional on \(\varvec{y}_{\varvec{\theta }'} = \varvec{y}_{\mathrm {obs}}\), the distribution of \(\varvec{\theta }{^\prime }\) is the posterior distribution \(\pi ( \varvec{\theta }| \varvec{y}_{\mathrm {obs}})\propto \pi (\varvec{\theta }) p(\varvec{y}_{\mathrm {obs}}| \varvec{\theta })\). For all but the most trivial discrete problems, the probability that \(\varvec{y}_{\varvec{\theta }}{^\prime } = \varvec{y}_{\mathrm {obs}}\) is either zero or very small. Thus the condition of exact matching of pseudo-data to the observed data is typically relaxed to \(\Vert \eta (\varvec{y}_{\mathrm {obs}}) - \eta (\varvec{y}_{\varvec{\theta }}{^\prime })\Vert < \epsilon ,\) where \(\eta \) is a low-dimensional summary statistic, \(\Vert \cdot \Vert \) is a distance function, and \(\epsilon >0\) is a tolerance level. The resulting algorithm gives samples from the target density \(\pi _\epsilon \) that is proportional to \(\left[ \pi (\varvec{\theta })\int \mathbf {1}_{\{\Vert \eta (\varvec{y}_{\mathrm {obs}}) - \eta (\varvec{y})\Vert <\epsilon \}}p(\varvec{y}|\varvec{\theta }) d\varvec{y}\right] \). If the tolerance \(\epsilon \) is small enough and the statistic(s) \(\eta \) good enough, then \(\pi _{\epsilon }\) can be a good approximation to \(\pi (\varvec{\theta }|\varvec{y}_{\mathrm {obs}})\).

A generalized version of this method (Wilkinson 2013) is given in Algorithm 1, where \(K(\eta )\) is an unnormalized probability density function, M is an arbitrary number of pseudo-samples, and c is a constant satisfying \(c \ge \sup _\eta K(\eta )\).

Using the “uniform kernel” \(K(\eta ) = \mathbf {1}_{\{\Vert \eta \Vert < \epsilon \}}\) and taking \(M= c=1\) yields the version described above. Algorithm 1 yields samples \(\varvec{\theta }^{(t)}\) from the kernel-smoothed posterior density

Although Algorithm 1 is nearly always presented in the special case with \(M=1\) (Wilkinson 2013), it is easily verified that \(M > 1\) still yields samples from \(\pi _K(\varvec{\theta }| \varvec{y}_{\mathrm {obs}})\).

Many variants of Algorithm 1 exist. For any unbiased, nonnegative estimator \(\hat{\mu }(\varvec{\theta })\) of \(\pi _K( \varvec{\theta }| \varvec{y}_{\mathrm {obs}})\) and any reversible transition kernel q on \(\Theta \), Algorithm 2 gives a Markov chain Monte Carlo (MCMC) version that is based on the pseudo-marginal algorithm of (Andrieu and Roberts 2009) (see also Marjoram et al. 2003; Wilkinson 2013; Andrieu and Vihola 2014). To obtain an algorithm that is similar to Algorithm 1, a good choice for \(\hat{\mu }(\varvec{\theta })\) is

where \(M \in \mathbb {N}\). When we refer to Algorithm 2, we always use this family of weights unless stated otherwise. Despite the fact that the expression (2) depends on M, as \(n\rightarrow \infty \) the distribution of \(x^{(n)}\) in Algorithm 2 converges to the same distribution \(\pi _K\) under mild conditions (Andrieu and Vihola 2014). Although the choice of M in (2) generally does not affect the limiting distribution of \(x^{(n)}\), it does affect the evolution of the stochastic process \(\{ x^{(n)} \}_{n \in \mathbb {N}}\).

We address the effect of M on the efficiency of Algorithm 2 when using the weight (2). Increasing the number of pseudo-samples M decreases the variance of 2, which one might think could improve the efficiency of Algorithm 2. Indeed, increasing M does decrease the asymptotic variance of the associated Monte Carlo estimates (Andrieu and Vihola 2014). However, increasing M also increases the computational cost of each step of the algorithm. Although the tradeoff between these two factors is quite complicated, our main result in this paper gives a good compromise: the choice \(M=1\) yields a running time within a factor of two of optimal. We use natural definitions of running time in the contexts of serial and parallel computing, which are extensions of those used by Pitt et al. (2012), Sherlock et al. (2013), and Doucet et al. (2014), and which capture the time required to obtain a particular Monte Carlo accuracy. Our definition in the serial computing case is the number of pseudo-samples generated, which is an appropriate measure of running time when drawing pseudo-samples is more computationally intensive than the other steps in Algorithm 2, and when the expected computational cost of drawing each pseudo-sample is the same, i.e. when there is no computational discounting due to having pre-computed relevant quantities.

Several authors have drawn the conclusion that in many situations the approximately optimal value of M in pseudo-marginal algorithms (a class of algorithms that includes Algorithm 2) is obtained by tuning M to achieve a particular variance for the estimator \(\hat{\pi }_{K,M}(\varvec{\theta }| \varvec{y}_{\mathrm {obs}})\) (Pitt et al. 2012; Sherlock et al. 2013; Doucet et al. 2014). This often means a value of M that is much larger than 1. We demonstrate that in Algorithm 2 such a tuning process is unnecessary, since near-optimal efficiency is obtained by using estimates based on a single pseudo-sample (Proposition 4 and Corollary 5); these estimates are lower-cost and higher-variance than those based on several pseudo-samples. This result assumes only that the kernel \(K(\eta )\) is the uniform kernel \(\mathbf {1}_{\{\Vert \eta \Vert < \epsilon \}}\) (the most common choice). In particular, and in contrast to earlier work, it does not make any assumptions about the target distribution \(\pi (\varvec{\theta }| \varvec{y}_{\mathrm {obs}})\).

Our result is in contrast to particle MCMC methods (Andrieu et al. 2010), which Flury and Shephard (2011) demonstrated could require thousands, millions or more particles to obtain sufficient accuracy in some realistic problems. This difference between particle MCMC and ABC-MCMC is largely due to the interacting nature of the particles in particle MCMC, allowing for better path samples.

2 Efficiency of ABC and ABC-MCMC

For a measure \(\mu \) on space \(\mathcal {X}\) let \(\mu (f) \equiv \int f(x) \mu (dx)\) be the expectation of a real-valued function f with respect to \(\mu \) and let \(L^2(\mu ) =\{f: \mu (f^2) < \infty \}\) be the space of functions with finite variance. For any reversible Markov transition kernel H with stationary distribution \(\mu \), any function \(f\in L^2(\mu )\), and Markov chain \(X^{(t)}\) evolving according to H, the MCMC estimator of \(\mu (f)\) is \(\bar{f}_n \equiv \frac{1}{n} \sum _{t=1}^{n} f(X^{(t)})\). The error of this estimator can be measured by the asymptotic variance:

which is closely related to the autocorrelation of the samples \(f(X^{(t)})\) (Tierney 1998).

If H is geometrically ergodic, v(f, H) is guaranteed to be finite for all \(f \in L^2(\mu )\), while in the non-geometrically ergodic case v(f, H) may or may not be finite (Roberts and Rosenthal 2008). When v(f, H) is infinite our results still hold but are not informative. The fact that our results do not require geometric ergodicity distinguishes them from many results on efficiency of MCMC methods (Guan and Krone 2007; Woodard et al. 2009). When \(X^{(t)}\mathop {\sim }\limits ^{\mathrm{iid}}\mu \), we define \(v(f) = \mathrm {Var} \left( f(X^{(t)}) \right) \).

We will describe the running time of Algorithms 1 and 2 in two cases: first, when the computations are done serially, and second, when they are done in parallel across M processors. Using (3), the variance of \(\bar{f}_n\) from a single (reversible) Markov chain H of length n is roughly v(f, H) / n, so to achieve variance \(\delta >0\) in the serial context we need \(v(f,H)/\delta \) iterations. Similarly, the variance of \(\bar{f}_{n}\) from a collection of M reversible Markov chains, each run for n steps, is roughly v(f, H) / (Mn). Thus, to achieve variance \(\delta >0\) in the parallel context we need to run each Markov chain for \(n > v(f,H)/(\delta M)\) iterations.

Although our definitions make sense for any function f of the two values \(( \varvec{\theta }^{(t)}, T^{(t)} )\) described by Algorithm 2, throughout the rest of the note we restrict our attention to functions that depend only on the first coordinate, \(\varvec{\theta }^{(t)}\). That is, when discussing Algorithm 2 or other pseudo-marginal algorithms, we restrict our attention to functions that satisfy \(f(\varvec{\theta },T_{1}) = f(\varvec{\theta },T_{2})\) for all \(\varvec{\theta }\) and all \(T_{1}, T_{2}\). We slightly abuse this in our notation, not distinguishing between a function \(f: \Theta \mapsto \mathbb {R}\) of a single variable \(\varvec{\theta }\) and a function \(f: \Theta \times \mathbb {R}^{+} \mapsto \mathbb {R}\) of two variables \((\varvec{\theta }, T)\) that only depends on the first coordinate.

Let \(Q_M\) be the transition kernel of Algorithm 2; like all pseudo-marginal algorithms, \(Q_M\) is reversible (Andrieu and Roberts 2009). We assume that drawing pseudo-samples is the slowest part of the computation, and that drawing each pseudo-sample takes the same amount of time on average (as also assumed in Pitt et al. 2012; Sherlock et al. 2013; Doucet et al. 2014). Then the running time of \(Q_M\) in the serial context can be measured as the number of iterations times the number of pseudo-samples drawn in each iteration, namely \(C_{f,Q_M,\delta }^{\mathrm{ser}} \equiv M v(f,Q_M)/\delta \). In the context of parallel computation across M processors, we compare two competing approaches that utilize all the processors. These are: (a) a single chain with transition kernel \(Q_M\), where the \(M>1\) pseudo-samples in each iteration are drawn independently across M processors; and (b) M parallel chains with transition kernel \(Q_1\). The running time of these methods can be measured as the number of required Markov chain iterations to obtain accuracy \(\delta \), namely \(C_{f,Q_M,\delta }^{\mathrm{M-par}} \equiv v(f,Q_M)/\delta \) utilizing method (a) and \(C_{f,Q_1,\delta }^{\mathrm{M-par}} \equiv v(f,Q_1)/(\delta M)\) utilizing method (b). Since both measures of computation time are based on the asymptotic variance of the underlying Markov chain, they ignore the initial ‘burn-in’ period and are most appropriate when the desired error \(\delta \) is small. Other measures of computation time should be used if the Markov chains are being used to get only a very rough picture of the posterior (e.g. to locate, but not explore, a single posterior mode). Note, however, that in practice there is typically no burn-in period for Algorithm 2, since it is usually initialized using samples from ABC (Marin et al. 2012).

For Algorithm 1 the running time, denoted by \(R_M\), is defined analogously. However, we must account for the fact that each iteration of \(R_M\) yields one accepted value of \(\varvec{\theta }\), which may require multiple proposed values of \(\varvec{\theta }\) (along with the associated computations, including drawing pseudo-samples). The number of proposed values of \(\varvec{\theta }\) to get one acceptance has a geometric distribution with mean equal to the inverse of the marginal probability \(p_{acc}(R_M)\) of accepting a proposed value of \(\varvec{\theta }\). So, similarly to \(Q_M\), the running time of \(R_M\) in the context of serial computing can be measured as \(C_{f,R_M,\delta }^{\mathrm{ser}} \equiv M v(f)/(\delta \, p_{acc}(R_M))\), and the computation time in the context of parallel computing can be measured as \(C_{f,R_M,\delta }^{\mathrm{M-par}} \equiv v(f)/(\delta \, p_{acc}(R_M))\) utilizing method (a) and \(C_{f,R_1,\delta }^{\mathrm{M-par}} \equiv v(f)/(\delta M p_{acc}(R_1))\) utilizing method (b).

Using these definitions, we first state the result that \(M=1\) is optimal for ABC (Algorithm 1). This result is widely known but we could not locate it in print, so we include it here for completeness.

Lemma 1

The marginal acceptance probability of ABC (Algorithm 1) does not depend on M. For \(M>1\) the running times \(C_{f,R_M,\delta }^{\mathrm{ser}}\) and \(C_{f,R_M,\delta }^{\mathrm{M-par}}\) of ABC in the serial and parallel contexts satisfy \(C_{f,R_M,\delta }^{\mathrm{ser}} = M C_{f,R_1,\delta }^{\mathrm{ser}}\) and \(C_{f,R_M,\delta }^{\mathrm{M-par}} = M C_{f,R_1,\delta }^{\mathrm{M-par}}\) for any \(f \in L^2(\pi _K)\) and any \(\delta >0\).

Proof

The marginal acceptance probability of Algorithm 1 is

which does not depend on M. The results for the running times follow immediately. \(\square \)

Our contribution is to show a similar result for ABC-MCMC. A potential concern regarding Algorithm 2 is raised by Lee and Latuszynski (2013), who point out that this algorithm is generally not geometrically ergodic when q is a local proposal distribution, such as a random walk proposal. This is due to the fact that in the tails of the distribution \(\pi _{K}\), the pseudo-data \(y_{i,\varvec{\theta }}\) are very different from \(y_{\mathrm {obs}}\) and so the proposed moves are rarely accepted. This problem can be fixed in several ways. Lee and Latuszynski (2013) give a sophisticated solution that involves choosing a random number of pseudo-samples at every step of Algorithm 2, and they show that this modification increases the class of target distributions for which the ABC-MCMC algorithm is geometrically ergodic. One consequence of our Proposition 4 is that a simpler ‘obvious’ fix to the problem of geometric ergodicity does not work: increasing the number of pseudo-samples used in Algorithm 2 from 1 to any fixed number M has no impact on the geometric ergodicity of the algorithm.

Our main tool in analyzing Algorithm 2 will be the results of Andrieu and Vihola (2014). Two random variables X and Y are convex ordered if \(\mathbb {E}[\phi (X)] \le \mathbb {E}[\phi (Y)]\) for any convex function \(\phi \); we denote this relation by \(X \le _{cx} Y\). Let \(H_{1}, H_{2}\) be the transition kernels of two pseudo-marginal algorithms with the same proposal kernel q and the same target marginal distribution \(\mu \). Denote by \(T_{1,x}\) and \(T_{2,x}\) the estimators of the unnormalized target used by \(H_{1}\) and \(H_{2}\) respectively. Recall the asymptotic variance v(f, H) from (3); although f could be a function on \(\mathcal {X}\times \mathbb {R}^+\), we restrict our attention to functions f on the non-augmented state space \(\mathcal {X}\). Then if \(T_{1,x} \le _{cx} T_{2,x}\) for all x, Theorem 3 of Andrieu and Vihola (2014) shows that \(v(f, H_1) \le v(f, H_2)\) for all \(f \in L^{2}(\mu )\). As shown in Section 6 of that work, this tool can be used to show that increasing M decreases the asymptotic variance of Algorithm 2:

Corollary 2

For any \(f \in L^{2}(\pi _K)\) and any \(M \le N \in \mathbb {N}\) we have \( v(f, Q_M) \ge v( f, Q_{N}).\)

In the appendix we give a similar comparison result for the alternative version of ABC-MCMC described in Wilkinson (2013).

We will show that, despite Corollary 2, it is not generally an advantage to use a large value of M in Algorithm 2. To do this, we first give a result that follows easily from Theorem 3 of Andrieu and Vihola (2014). For any \( 0 \le \alpha < 1\) and \(i \in \{1,2\}\), define the estimator

\(T_{i,x,\alpha }\) has the same mean as \(T_{i,x}\), but is a worse estimator. In particular, Proposition 1.2 of Leskelä and Vihola (2014) implies \(T_{i,x} \le _{cx} T_{i,x,\alpha }\) for any \(0 \le \alpha \le 1\) and any \(i \in \{1,2\}\) (Eq. (4) gives the coupling required by that proposition). We have:

Theorem 3

Assume that \(H_2\) is nonnegative definite. For any \( 0 \le \alpha < 1\), if \(T_{1,x}\le _{cx} T_{2,x,\alpha }\) for all x, then for any \(f \in L^{2}(\mu )\) we have

Theorem 3 is proven in the appendix. The assumption that \(H_2\) is nonnegative definite is a common technical assumption in analyses of the efficiency of Markov chains (Woodard et al. 2009; Narayanan and Rakhlin 2010), and is done here so we can compare \(v(f,H_{2})\) to v(f). It can easily be achieved, for example, by incorporating a “holding probability” (chance of proposing to stay in the same location) of 1/2 into the proposal kernel q (Woodard et al. 2009).

Theorem 3 yields the following bound for Algorithm 2, proven in the appendix.

Proposition 4

If \(K(\eta ) = \mathbf 1 _{\{\Vert \eta \Vert < \epsilon \}}\) for some \(\epsilon >0\), and if the transition kernel \(Q_M\) of Algorithm 2 is nonnegative definite, then for any \(f \in L^2(\pi _K)\) we have

This yields the following bounds on the running times:

Corollary 5

Using the uniform kernel and assuming that \(Q_M\) is nonnegative definite, the running time of \(Q_1\) is at most twice that of \(Q_M\), for both serial and parallel computing:

Corollary 5 implies that, under the condition that a uniform kernel is being used and that all pseudosamples have the same computational cost, it is only possible to improve the running time of the Markov chain by a factor of 2 by choosing \(Q_M\) rather than \(Q_1\). Thus, under these reasonable conditions, there is never a strong reason to use \(Q_{M}\) over \(Q_{1}\), and in fact there can be a strong reason to use \(Q_{1}\) over \(Q_{M}\).

2.1 Simulation study

We now demonstrate these results through a simple simulation study, showing that choosing \(M>1\) is seldom beneficial. We consider the model \(\varvec{y}| \varvec{\theta }\sim \mathcal {N}(\varvec{\theta }, \sigma _y^2)\) for a single observation \(\varvec{y}\), where \(\sigma _y\) is known and \(\varvec{\theta }\) is given a standard normal prior, \(\varvec{\theta }\sim \mathcal {N}(0, 1)\). We apply Algorithm 2 using proposal distribution \(q(\varvec{\theta }| \varvec{\theta }^{(t-1)}) = \mathcal {N}(\varvec{\theta }; 0, 1)\), summary statistic \(\eta (\varvec{y}) = \varvec{y}\), and \(K(\eta ) = \mathbf {1}_{\{\Vert \eta \Vert < \epsilon \}}\) equal to the uniform kernel with bandwidth \(\epsilon \), and subsequently study the algorithm’s acceptance rate, which due to the independent proposal is analogous to effective sample size. We start by exploring the case where \(\varvec{y}_{\mathrm {obs}}=2\) and \(\sigma _y=1\), simulating the Markov chain for 5 million iterations. Figure 1 (left) shows the acceptance rate per generated pseudo-sample as a function of M. Large M does not provide a benefit in terms of accepted \(\varvec{\theta }\) samples per generated pseudo-sample, and can even decrease this measure of efficiency, supporting the result of Corollary 5. In fact, replacing the uniform kernel with a Gaussian kernel (not shown) leads to indistinguishable results. In Fig. 1 (right) we look at the \(\epsilon \) which results from requiring that a fixed percentage (\(0.4~\%\)) of \(\varvec{\theta }\) samples are accepted per unit computational cost. This means that for \(M=1\) we require that \(0.4~\%\) of samples are accepted, while for \(M=64\) we require that \(0.4\times 64 = 25.6~\%\) of samples are accepted.

Left Acceptance rate per pseudo-sample. Lines correspond to \(\epsilon =0.5^2\) (black) through \(\epsilon =0.5^6\) (light grey), spaced uniformly on log scale. Right The \(\epsilon \) resulting from requiring that \(0.4~\%\) of \(\varvec{\theta }\) samples are accepted per unit computational cost. Lines correspond to different computational savings for pseudo-samples beyond the first, in the range \(\delta = 1\) (black, no cost savings), 2, 4, 8, 16 (light grey)

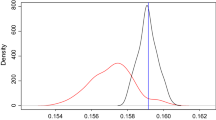

Sensitivity of the relationship between the bias and M to changes in the likelihood. Each curve is normalized by dividing by its respective \(\epsilon \) for \(M=1\). Left: Varying \(\varvec{y}_{\mathrm {obs}}= 2\) (light grey), 4, 6, 8 (black). Right Varying \(\sigma _y = 0.01\) (black), 0.05, 0.1, 0.5, 1, 2 (light grey)

In certain cases, there is an initial fixed computational cost to generating pseudo-samples, after which generating subsequent pseudo-samples is computationally inexpensive. If \(\varvec{y}\) is a length-n sample from a Gaussian process, for instance, there is an initial \(O(n^3)\) cost to decomposing the covariance, after which each pseudo-sample may be generated at \(O(n^2)\) cost. In the case with discount factor \(\delta >1\) (representing a \(\delta \times \) cost reduction for all pseudo-samples after the first), the pseudo-sampling cost is \((1+(M-1)/\delta )\), so we require that \(0.4\times (1+(M-1)/\delta )\%\) of \(\varvec{\theta }\) samples are accepted. For example, a discount of \(\delta =16\) with \(M=64\) requires that \(2.0~\%\) of samples are accepted. Figure 1 shows that, for \(\delta =1\), larger M results in larger \(\epsilon \); in other words, for a fixed computational budget and no discount, \(M=1\) gives the smallest \(\epsilon \). For discounts \(\delta >1\), however, increasing M can indeed lead to reduced \(\epsilon \).

We further explore discounting in Fig. 2, which uses a discount of \(\delta = 8\) and varies \(\varvec{y}_{\mathrm {obs}}\) from 2 to 8 (left plot) and \(\sigma _y\) from 0.01 to 2 (right plot). In both cases the changes are meant to induce a divergence between the prior and the likelihood, and hence the prior and posterior. In these figures, the requisite \(\epsilon \) are scaled such that at \(M=1\) all normalized \(\epsilon \) are 1. Figure 2 (left) shows that as \(\varvec{y}_{\mathrm {obs}}\) grows, the benefit associated with using higher values of M shrinks and eventually disappears. This is because for large \(\varvec{y}_{\mathrm {obs}}\) a large value of \(\epsilon \) is required in order to frequently get a nonzero value for the approximated likelihood and thus a reasonable acceptance rate; for instance, the unnormalized value of \(\epsilon \) is 0.08 when \(\varvec{y}_{\mathrm {obs}}=2\) and \(M=1\), while \(\epsilon =7.64\) when \(\varvec{y}_{\mathrm {obs}}=8\) and \(M=1\). As such, the increased diversity from multiple samples is dwarfed by the scale of \(\epsilon \). In Fig. 2 (right) we examine sensitivity of our conclusions to \(\sigma _y\). For large \(\sigma _y\), additional (discounted-cost) pseudo-samples provide a benefit, because they improve the accuracy of the approximated likelihood. However, for small values of \(\sigma _y\), the variability of the pseudo-samples \(y_{i,\varvec{\theta }{^\prime }}\) is low and so additional pseudo-samples do not provide much incremental improvement to the likelihood approximation. In summary, we only find a benefit of increasing the number of pseudo-samples M in cases where there is a discounted cost to obtain those pseudo-samples, and even then the benefit can be decreased or eliminated when \(\varvec{y}_{\mathrm {obs}}\) is extreme under typical proposed values of \(\varvec{\theta }\), or when the variability of \(\varvec{y}\) under the model is low.

3 Discussion

In this paper, we have shown that despite the true likelihood leading to reduced asymptotic variance relative to the approximated likelihood constructed through ABC, in practice one should stick with simple, single pseudo-sample approximations rather than trying to accurately approximate the true likelihood with multiple pseudo-samples. Our results are obtained by bounding the asymptotic variance of Markov chain Monte Carlo estimates, which takes into account the autocorrelation of the Markov chain. This is not to say that \(M=1\) is optimal in all situations. In many cases, there is a large initial cost to the first pseudo-sample, with subsequent samples drawn at a much reduced computational cost. In this case \(M>1\) can lead to improved performance.

We hope that this work not only provides practical guidance on the choice of the number of pseudo-samples when using ABC, but also that it might lead to future research in the analysis of these algorithms. As a specific example, it would be fruitful to pursue the results in this paper extended to non-uniform kernels such as the popular Gaussian kernel. The main difficulty in doing so is extending the explicit calculation (9) in the proof of Proposition 4. Although we have found it possible to obtain similar bounds for specific kernels, target distributions and proposal distributions via grid search on a computer, and simulations suggest that our conclusions hold in greater generality, we have not been able to extend this inequality to general kernels and general target distributions simultaneously.

References

Andrieu, C., Roberts, G.O.: The pseudo-marginal approach for efficient Monte Carlo computations. Ann. Stat. 37, 697–725 (2009)

Andrieu, C., Vihola, M.: Establishing some order amongst exact approximations of MCMCs. arXiv preprint, arXiv:1404.6909v1 (2014)

Andrieu, C., Doucet, A., Holenstein, R.: Particle Markov chain Monte Carlo methods. J. R. Stat. Soc. 72, 269–342 (2010)

Doucet, A., Pitt, M., Deligiannidis, G., Kohn, R.: Efficient implementation of Markov chain Monte Carlo when using an unbiased likelihood estimator. arXiv preprint, arXiv:1210.1871v3 (2014)

Flury, T., Shephard, N.: Bayesian inference based only on simulated likelihood: particle filter analysis of dynamic economic models. Econom. Theory 27(5), 933–956 (2011)

Guan, Y., Krone, S.M.: Small-world MCMC and convergence to multi-modal distributions: from slow mixing to fast mixing. Ann. Appl. Probab. 17, 284–304 (2007)

Latuszyński, K., Roberts, G.O.: CLTs and asymptotic variance of time-sampled Markov chains. Methodol. Comput. Appl. Probab. 15(1), 237–247 (2013)

Lee, A., Latuszynski, K.: Variance bounding and geometric ergodicity of Markov chain Monte Carlo kernels for approximate Bayesian computation. arXiv preprint, arXiv:1210.6703 (2013)

Leskelä, L., Vihola, M.: Conditional convex orders and measurable martingale couplings. arXiv preprint, arXiv:1404.0999 (2014)

Marin, J.-M., Pudlo, P., Robert, C.P., Ryder, R.J.: Approximate Bayesian computational methods. Stat. Comput. 22, 1167–1180 (2012)

Marjoram, P., Molitor, J., Plagnol, V., Tavaré, S.: Markov chain Monte Carlo without likelihoods. Proc. Natl. Acad. Sci. 100(26), 15324–15328 (2003)

Narayanan, H., Rakhlin, A.: Random walk approach to regret minimization. In: Lafferty, J.D., Williams, C.K.I., Shawe-Taylor, J., Zemel, R.S., Culotta, A. (eds.) Conference proceedings of NIPS, Advances in Neural Information Processing Systems, vol. 23. Curran Associates, Inc., http://papers.nips.cc/book/advances-in-neural-information-processing-systems-23-2010 (2010)

Pitt, M.K., Silva, R.d S., Giordani, P., Kohn, R.: On some properties of Markov chain Monte Carlo simulation methods based on the particle filter. J. Econom. 171(2), 134–151 (2012)

Roberts, G.O., Rosenthal, J.S.: Variance bounding Markov chains. Ann. Appl. Probab. 18, 1201–1214 (2008)

Sherlock, C., Thiery, A.H., Roberts, G.O., Rosenthal, J.S.: On the efficiency of pseudo-marginal random walk Metropolis algorithms. arXiv preprint, arXiv:1309.7209 (2013)

Tavare, S., Balding, D.J., Griffiths, R., Donnelly, P.: Inferring coalescence times from DNA sequence data. Genetics 145(2), 505–518 (1997)

Tierney, L.: A note on Metropolis-Hastings kernels for general state spaces. Ann Appl Probab 8, 1–9 (1998)

Wilkinson, R.D.: Approximate Bayesian computation (ABC) gives exact results under the assumption of model error. Stat. Appl. Genet. Mol. Biol. 12(2), 129–141 (2013)

Woodard, D.B., Schmidler, S.C., Huber, M.: Conditions for rapid mixing of parallel and simulated tempering on multimodal distributions. Ann. Appl. Probab. 19, 617–640 (2009)

Acknowledgments

The authors thank Alex Thiery for his careful reading of an earlier draft, as well as Pierre Jacob, Rémi Bardenet, Christophe Andrieu, Matti Vihola, Christian Robert, and Arnaud Doucet for useful discussions. This research was supported in part by U.S. National Science Foundation grants 1461435, DMS-1209103, and DMS-1406599, by DARPA under Grant No. FA8750-14-2-0117, by ARO under Grant No. W911NF-15-1-0172, and by NSERC.

Author information

Authors and Affiliations

Corresponding author

Appendices

Proofs

Proof of Theorem 3

Denote by \(H_{2,\alpha }\) the transition kernel of the pseudo-marginal algorithm with proposal kernel q, target marginal distribution \(\mu \), and estimator \(T_{2,x,\alpha }\) of the unnormalized target. If we denote by \(\{ (X_{t}^{(1)}, T_{t}^{(1)}) \}_{t \in \mathbb {N}}\) and \(\{ (X_{t}^{(2)}, T_{t}^{(2)}) \}_{t \in \mathbb {N}}\) the Markov chains driven by the kernels \(H_{2,\alpha }\) and \(\alpha \mathrm {I} + (1-\alpha ) H_2\) respectively, then \(\{ X_{t}^{(1)} \}_{t \in \mathbb {N}}\) and \(\{ X_{t}^{(2)} \}_{t \in \mathbb {N}}\) have the same distribution.

If \(T_{1,x} \le _{cx} T_{2,x,\alpha }\) then by Theorem 3 of Andrieu and Vihola (2014),

for any \(f \in L^2(\mu )\). We also have

where the first inequality follows from Corollary 1 of Latuszyński and Roberts (2013) and the second follows from the fact that \(H_{2}\) has nonnegative spectrum. Combining this with (6) yields the desired result.\(\square \)

Proof of Proposition 4

For any \(M\ge 1\), let \(T_{M,\varvec{\theta }}\) be the estimator \(\hat{\pi }_{K,M}(\varvec{\theta }|\varvec{y}_{\mathrm {obs}})\) of the target \(\pi _K\), so that \(T_{M,\varvec{\theta },\alpha }\) is \(T_{M,\varvec{\theta }}\) handicapped by \(\alpha \) as defined in (4) of the main document. To obtain (5) of the main document, by Theorem 3 it is sufficient to take \(\alpha = 1-\frac{1}{M}\) and show that \(T_{1,\varvec{\theta }} \le _{cx} T_{M,\varvec{\theta },\alpha }\). By Proposition 2.2 of Leskelä and Vihola (2014), it is furthermore sufficient to show that, for all \(c \in \mathbb {R}\),

Let \(\mathrm {Bin}(n,\psi )\) denote the binomial distribution with n trials and success probability \(\psi \). For a given point \(\varvec{\theta }\in \Theta \), let \(\tau = \tau (\varvec{\theta }) \equiv \mathbb {P}[T_{1,\varvec{\theta }} \ne 0] = \int \mathbf 1 _{\{\Vert \eta (\varvec{y}_{\mathrm {obs}}) - \eta (\varvec{y})\Vert < \epsilon \}} p(\varvec{y}|\varvec{\theta }) d \varvec{y}\). Noting that \(\frac{T_{1,\varvec{\theta }}}{\pi (\varvec{\theta })} \in \{0, 1 \}\), we may then write \(T_{1,\varvec{\theta }}\) and \(T_{M,\varvec{\theta },\alpha }\) as the following mixtures

where \(\delta _0\) is the unit point mass at zero. Denote \(T_{1,\varvec{\theta }}' = \frac{T_{1,\varvec{\theta }}}{\pi (\varvec{\theta })} \) and \(T_{M,\varvec{\theta },\alpha }'=\frac{T_{M,\varvec{\theta },\alpha }}{\pi (\varvec{\theta })}\). We will check condition (8) for \(T_{1,\varvec{\theta }}', T_{M,\varvec{\theta },\alpha }'\) and \(0 \le c \le 1\), then separately for \(c < 0\) and \(c > 1\). For \(0 \le c \le 1\), we compute:

For \(c < 0\), we have

and the analogous calculation gives the same conclusion for \(c \ge M\). Finally, For \(1< c < M\), note

Also, the functions \(f_1(c) \equiv \mathbb {E}[\vert T_{1,\varvec{\theta }}' - c \vert ]\) and \(f_2(c) \equiv \mathbb {E}[\vert T_{M,\varvec{\theta },\alpha }' - c \vert ]\) are continuous, convex and piecewise linear. For \(c \ge 1\), they satisfy

where the derivative of \(f_{2}\) exists. Combining inequalities (10) and (11), we conclude that

for all \(1 < c < M\). Thus we have verified (8) and the proposition follows.\(\square \)

Analysis of an alternative ABC-MCMC method

We give a result analogous to Corollary 2 for the version of ABC-MCMC proposed in Wilkinson (2013), given in Algorithm 3 below. The constant c can be any value satisfying \(c \ge \sup _{\varvec{y}} K(\eta (\varvec{y}_{\mathrm {obs}}) - \eta (\varvec{y}))\).

Lemma 6 compares Algorithm 3 (call its transition kernel \(\tilde{Q}\)) to \(Q_\infty \).

Lemma 6

For any \(f\in L^2(\pi _K)\) we have \( v(f, \tilde{Q}) \ge v( f, Q_{\infty })\).

Proof

Both \(\tilde{Q}\) and \(Q_\infty \) have stationary density \(\pi _K\), so by Theorem 4 of Tierney (1998), it suffices to show that \(Q_\infty (\varvec{\theta },A\backslash \{\varvec{\theta }\}) \ge \tilde{Q}(\varvec{\theta },A\backslash \{\varvec{\theta }\})\) for all \(\varvec{\theta }\in \Theta \) and measurable \(A \subset \Theta \). Since \(\tilde{Q}\) and \(Q_\infty \) use the same proposal density q, it is furthermore sufficient to show that for every \(\varvec{\theta }^{(t-1)}\) and \(\varvec{\theta }'\), the acceptance probability of \(Q_\infty \) is at least as large as that of \(\tilde{Q}\). Since \(p(\cdot |\varvec{\theta })\) is a probability density,

So the acceptance probability of \(\tilde{Q}\), marginalizing over \(\varvec{y}_{\varvec{\theta }'}\), is

The acceptance probability of the Metropolis-Hastings algorithm is

Using (12), (13), and (14), \(a_{\mathrm {ABC}}/a_{\mathrm {MH}} \le 1\).\(\square \)

Rights and permissions

About this article

Cite this article

Bornn, L., Pillai, N.S., Smith, A. et al. The use of a single pseudo-sample in approximate Bayesian computation. Stat Comput 27, 583–590 (2017). https://doi.org/10.1007/s11222-016-9640-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11222-016-9640-7