Abstract

The virtue of quality is not itself a subject; it depends on a subject. In the software engineering field, quality means good software products that meet customer expectations, constraints, and requirements. Across the numerous approaches, methods, descriptive models, and tools that have been developed, software practitioners have reached a level of consensus. However, in the model-driven engineering (MDE) field, which has emerged from software engineering paradigms, quality continues to be a great challenge since the subject is not fully defined. The use of models alone is not enough to manage all of the quality issues at the modeling language level. In this work, we present the current state of, and some relevant considerations regarding, quality in MDE by identifying current categories in quality conception and by highlighting quality issues in real applications of model-driven initiatives. We identified 16 categories in the definition of quality in MDE. From this identification, by applying an adaptive sampling approach, we discovered the five most influential authors of the works that propose definitions of quality. These include (in order): the OMG standards (e.g., MDA, UML, MOF, OCL, SysML), the ISO standards for software quality models (e.g., ISO 9126 and ISO 25000), Krogstie, Lindland, and Moody. We also discovered families of works about quality, i.e., works that belong to the same author or topic. Seventy-three works were found with evidence of a mismatch between the academic/research field of quality evaluation of modeling languages and actual MDE practice in industry. We demonstrate that this field does not currently solve the quality issues reported in industrial scenarios. The evidence of the mismatch was grouped into eight categories: four for academic/research evidence and four for industrial reports.
These categories were detected based on the scope proposed in each academic/research work and on the questions and issues raised by real practitioners. We then proposed a scenario to illustrate quality issues in a real information system project in which multiple modeling languages were used. To evaluate the quality of this MDE scenario, we chose one of the most cited and influential quality frameworks, which we identified while establishing the categories of quality definition for MDE. We demonstrated that the selected framework falls short in addressing the quality issues. Finally, based on the findings, we derive eight challenges for quality evaluation in MDE projects that current quality initiatives do not address sufficiently.


1 Introduction

Conceptual models are the main artifacts for handling the high complexity involved in current information system (IS) development processes. The cognitive nature of models makes them inherently suited to handling the issues that arise from the presence of several stakeholders/viewpoints, abstraction levels, and organizational challenges in an IS project. Model-driven engineering (MDE) is a software engineering paradigm that promotes the use of conceptual models as the primary artifacts of a complete engineering process. MDE focuses on business and organizational concerns, so that technological aspects are the result of operations over models via transformations or mappings.

An underlying foundation for working with models was proposed in the first version of the Model-Driven Architecture (MDA) specification of the Object Management Group (OMG 2003). This specification defined the basic principles for working with and managing models. These can be summarized in two main features: the specification of three abstraction levels (see footnote 1), namely the computation-independent model (CIM), the platform-independent model (PIM), and the platform-specific model (PSM); and the definition of model transformation operations. However, the growing number of communities of model-driven practitioners and the lack of a common consensus regarding model management (due to conceptual divergences among practitioners) have produced challenges in the usage and management of models. The MDA 1.0.1 specification has become insufficient to address these challenges (see Section 2.1). Paradoxically, some of the derived challenges were formulated in IS frameworks prior to the official release of the MDA specification.
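To make the two MDA features concrete, the following minimal Python sketch shows a platform-independent entity (PIM), a model-to-model transformation that derives a platform-specific relational table (PSM) from it, and a model-to-text step that generates code. The classes, the type mapping, and the choice of a relational platform are hypothetical illustrations; they are not defined by the MDA specification, and the CIM level is omitted.

```python
from dataclasses import dataclass, field


@dataclass
class PimEntity:
    """Hypothetical PIM element: an entity with abstract attribute types."""
    name: str
    attributes: dict[str, str]  # attribute name -> abstract type


@dataclass
class PsmTable:
    """Hypothetical PSM element: a table for a relational platform."""
    name: str
    columns: dict[str, str] = field(default_factory=dict)  # column -> SQL type


# Assumed mapping from abstract PIM types to platform-specific SQL types.
TYPE_MAP = {"String": "VARCHAR(255)", "Integer": "INTEGER", "Boolean": "BOOLEAN"}


def pim_to_psm(entity: PimEntity) -> PsmTable:
    """Model-to-model transformation: derive a PSM table from a PIM entity."""
    table = PsmTable(name=entity.name.lower())
    # A platform-specific concern (a surrogate key) appears only at the PSM level.
    table.columns["id"] = "INTEGER PRIMARY KEY"
    for attr, abstract_type in entity.attributes.items():
        table.columns[attr] = TYPE_MAP[abstract_type]
    return table


def psm_to_code(table: PsmTable) -> str:
    """Model-to-text transformation: generate DDL from the PSM."""
    cols = ", ".join(f"{col} {sql_type}" for col, sql_type in table.columns.items())
    return f"CREATE TABLE {table.name} ({cols});"


customer = PimEntity("Customer", {"name": "String", "active": "Boolean"})
print(psm_to_code(pim_to_psm(customer)))
```

Keeping the type mapping outside the PIM reflects the intent of the separation: retargeting to another platform would change `TYPE_MAP` and the transformations, not the PIM itself.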

One of the most critical concerns for the model-driven paradigm is the difficulty of adopting it in real contexts. Several reports have pointed out issues in model-driven adoption that are related to the misalignment between model-driven principles and the real context (Burden et al. 2014; Whittle et al. 2013, 2014). These include the overload imposed by model-driven tools, the lack of traceability mechanisms, and the lack of support for adopting model-driven strategies in organizational/development processes. Evidence from model-driven works and real applications reveals symptoms that call for quality assessment of models. In Giraldo et al. (2014), the authors demonstrated the wide divergence in quality conception for MDE.

This work presents the results of a 3-year literature review on the conceptualization of quality in MDE. Unlike other reviews on the same topic (most of which are summarized in Goulão et al. 2016), we focus on the identification of explicit definitions of quality for MDE, as well as on the perception of quality in model-driven projects by real practitioners and its associated support in the academic/research field. This focus is important considering that, in the engineering field, high quality is determined through an assessment that takes an artifact under evaluation and checks whether or not it is in accordance with its specification (Krogstie 2012c). Due to the specific features of the MDE paradigm, it is necessary to establish the impact of the MDE specification on the current quality initiatives for this paradigm.

This paper presents the current state of quality conception in model-driven contexts and several factors that influence it. These include the subjectivity of practitioners, the misalignment between real applications of model-driven scenarios and the research effort, and the implication that quality in model-driven scenarios must be considered as part of an integral quality evaluation process. This paper builds upon previous works by the authors (Giraldo et al. 2014, 2015) and makes the following contributions:

(i) An analysis of the quality issues detected in both academic/research and industrial contexts, performed in order to determine whether current research works on quality in MDE meet the requirements of real scenarios of model-driven usage. This analysis was performed through a structured literature review using backward snowballing (Wohlin 2014) on scientific publications and gray literature (non-scientific publications).

(ii) A demonstration of quality issues in MDE in a real scenario. This demonstration shows that current proposals on quality in MDE do not cover quality issues that are implicit in IS projects, such as suitability for multiple-view support, the organizational adoption of modeling efforts, and the derivation of software code as a consequence of a systematic process, among others.

(iii) A set of challenges regarding quality that model-driven works must consider and address, based on the identified categories and on the alignment between industry and research. This set is derived from the literature reviews and should be integrally considered by any quality evaluation proposal in order to guide model-driven practitioners in detecting and managing quality issues in MDE projects.
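The backward snowballing procedure (Wohlin 2014) named in contribution (i) can be pictured as an iterative traversal of reference lists that stops when no new paper is included. The following Python sketch assumes a hypothetical map from paper identifiers to their reference lists and a hypothetical `include` predicate standing in for the reviewers' manual inclusion/exclusion judgment.

```python
from collections import deque
from typing import Callable


def backward_snowball(
    start_set: set[str],
    references: dict[str, list[str]],
    include: Callable[[str], bool],
) -> set[str]:
    """Backward snowballing: follow reference lists until no new paper is included.

    start_set  -- IDs of the papers in the initial (start) set
    references -- hypothetical map from a paper ID to the IDs in its reference list
    include    -- the inclusion/exclusion decision for a candidate paper
    """
    included = set(start_set)
    evaluated = set(start_set)
    queue = deque(start_set)
    while queue:
        paper = queue.popleft()
        for cited in references.get(paper, []):
            if cited in evaluated:
                continue  # each candidate is judged only once
            evaluated.add(cited)
            if include(cited):
                included.add(cited)
                queue.append(cited)  # its reference list is examined in a later iteration
    return included


refs = {"P1": ["P2", "P3"], "P2": ["P4"], "P3": ["P6"], "P4": ["P5"]}
print(sorted(backward_snowball({"P1"}, refs, include=lambda p: p != "P3")))
```

Excluded papers are not expanded, so in the usage example `P6` is never reached because it is only cited by the excluded `P3`.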

The remainder of this article is structured as follows: Section 2 describes quality in MDE contexts and includes an extension of a previous systematic literature review (Giraldo et al. 2014) to identify the main categories of quality conceptualization in MDE to date. Section 3 shows the results of a literature review that determines the mismatch between the quality conceptions in research and those of industrial practitioners and communities of model-driven practitioners. Section 4 presents a real example in which multiple modeling languages are used to conceive and manage a real information system. This scenario highlights quality issues in modeling languages and the insufficiency of a quality evaluation proposal in MDE for revealing them. Section 5 describes some of the challenges that quality evaluation in MDE must address, based on the reported findings and evidence. Finally, Section 6 presents our conclusions.

2 Quality issues in MDE

2.1 Evolution and limitations of the MDA standard

The model-driven paradigm does not have a common conception; instead, there is a plethora of interpretations based on the goals of each model-driven community. The most neutral and accepted reference for model-driven initiatives is the MDA specification, which reflects the OMG's vision of model-driven scenarios. It serves as a common reference for roles and operations on models.

Even though the MDA guide 1.0.1 (OMG 2003) has been a key specification for model-driven contexts, its lack of updates for over a decade has contributed to the emergence of new challenges for model-driven practitioners, each of which has been addressed by individual efforts and initiatives. Also, this guide did not provide an explicit definition of quality in models and modeling languages, despite defining key concepts (Table 1) for using models as the main artifacts in a software/system construction process.

The MDA guide 2.0 (OMG 2014) released in June 2014 takes into account some of the current model challenges, including issues such as communication, automation, analytics, simulation, and execution. The MDA guide 2.0 defines the implicit semantic data in the models (which is associated with diagrams of models) to support model management operations. Although the MDA 2.0 guide essentially preserves the basic principles of model usage and transformation, it also complements the specification of some key terms and adds new features for the management of models. Table 1 shows the differences in some of the key modeling terms between MDA 1.0 and MDA 2.0. One of the most important refinements of MDA 2.0 is the explicit definition of model as information.

The MDA guide 2.0 attempts to address current model challenges, including quality assessment of models through analytics of semantic data extracted from models (model analytics). However, this specification does not prescribe how to perform analytics of this kind or quality assessment of models.

Clearly, the refinement of key concepts that is presented in Table 1 (depicted in bold) demonstrates that the MDA guide 2.0 attempts to tackle new challenges that are implicit in modeling tasks. However, this effort is not sufficient considering that the MDA guide does not specify how to identify and manage semantic data derived from models; this guide is only a preliminary (or complementary) descriptive application of model-driven standards.

In addition, most of the current challenges for the model-driven paradigm had already been raised by researchers in earlier information system frameworks. In fact, IS frameworks such as FRISCO (Falkenberg et al. 1996) (from IFIP; see footnote 2) define key aspects of the model-driven approach. These include the use of models themselves (conceptual modeling), the definition of information systems, the use of representations (models) to denote information systems, the definition of computerized information systems, and abstraction level zero, given by the presence of processors.

FRISCO gives MDA an opportunity to consider the communicative factor, which is commonly reported as a key consequence of model use (Hutchinson et al. 2011b). In 1996, FRISCO suggested the need for harmonizing modeling languages and presented the suitability and communicational aspects of modeling languages. Communication between stakeholders is critical for harmonization purposes, since it allows important quality issues to be discussed from different views (Shekhovtsov et al. 2014). FRISCO also suggested relevant features for modeling languages (expressiveness, arbitrariness, and suitability).

These FRISCO challenges raise new concerns for model-driven practitioners. For example, suitability requires the use of a variety of modeling languages, and communication requires these languages to be compatible and harmonized. Although suitability implies that a diversity of modeling languages is needed, many of the differences between modeling languages that arise from this diversity are unjustified.

MDA was the first attempt to standardize the model-driven paradigm, by defining three essential abstraction levels (see footnote 1) for any model-driven project and by specifying model transformations between higher and lower levels. Even though MDA has been widely accepted by software development and model-driven communities, whether MDA can meet current MDE challenges and trends remains an open question.

Generally, despite the specification of the most relevant features of models and modeling languages, there is an evident lack of a specification of when an artifact belongs to MDE. Such a specification is needed to establish whether or not model-based proposals are aligned with the MDE paradigm beyond the presence of notational elements. There is no evidence of a quality proposal that is aligned with MDE itself.

2.2 A literature review about models and modeling language quality categories

At the RCIS 2014 conference, we first presented the preliminary results of a systematic literature review (SR) that was performed over 21 months with the goal of identifying the main categories of quality definition in MDE (Giraldo et al. 2014). This review is ongoing, since we are attempting to demonstrate the diversity of the resulting definitions, including the most recent ones.

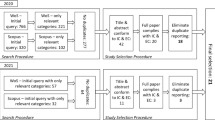

Figure 1 summarizes the SR protocol, which follows the Kitchenham guidelines (Kitchenham and Charters 2007) to ensure a rigorous and formal search on this topic. As depicted in Fig. 1, the protocol was enriched with an adaptive sampling approach (Thompson and Seber 1996) in order to find the primary authors on quality in MDE (see Section 2.5).

This SR addressed the following research questions:

- RQ1: What does quality mean in the context of MDE literature?
- RQ2: What does it mean to say that an artifact conforms to the principles of MDE?

While the main research question is RQ1, RQ2 focuses on model compliance, i.e., whether or not the artifacts of the identified works belong to the model-driven paradigm. For this analysis, we considered modeling artifacts such as models and modeling languages. From RQ1, we derived the search string depicted as follows:

The population of this work is made up of the primary studies published in journals, book sections, or conference papers, where an explicit definition about quality in model-driven contexts can be identified. The date range for this work includes contributions from 1990 until now. In order to identify these primary studies, we defined the search string that is presented above. All logical combinations were valid for identifying related works about quality in model-driven contexts. This search string was operationalized according to several configuration options (advanced mode) of each search engine. The information about the selected studies (bibliographical references) was extracted directly from the search engine.

The main sources of the studies were:

- Scientific databases and search engines such as ACM Digital Library, IEEE Xplore, Springer, ScienceDirect, Scopus, and Wiley. These include conference proceedings and associated journals.
- Indexing services such as Google Scholar and DBLP.
- Conference proceedings: CAiSE, ER (Conceptual Modeling), RCIS, ECMFA, MODELS, RE, HICSS, ECSA, and MODELSWARD.
- Industrial repositories such as OMG and IFIP.

For this review process, a minimal set of criteria was defined in order to include/exclude studies. These are as follows:

Inclusion criteria

- Studies from fields such as computer science, software engineering, business, and engineering.
- Studies whose title, abstract, and/or keywords contain at least one word belonging to each dimension of the search string (what, in which, and where).

Exclusion criteria

- Studies belonging to fields other than computer science, software engineering, model-driven engineering, and conceptual modeling (e.g., biology, chemistry, etc.).
- Studies whose title/abstract/keywords do not cover at least two dimensions of the search string configuration.
- Studies related to models in areas/fields other than software construction and enterprise/organizational views (e.g., water models, biological models, VHDL models, etc.).
- Studies related to artificial grammars and/or language processing.
- Studies not related to MDA/MDE technical spaces (Bézivin and Kurtev 2005) (e.g., data schemas, XML processing, ontologies).
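The dimension-based criteria above can be expressed as a small screening function. In this Python sketch, the terms assigned to each dimension are hypothetical (the actual search string is not reproduced here), a plain substring match is used for brevity, and the "manual review" outcome for studies covering exactly two dimensions is an assumption, since the criteria above neither include nor exclude them explicitly.

```python
# Hypothetical terms per search-string dimension; the actual search string
# used in the review is not reproduced in this sketch.
DIMENSIONS = {
    "what": ("quality", "evaluation", "assessment"),
    "in which": ("model", "modeling language", "metamodel"),
    "where": ("mde", "model-driven", "mda"),
}


def matched_dimensions(text: str) -> set[str]:
    """Return the dimensions for which at least one term occurs in the text."""
    lowered = text.lower()
    return {dim for dim, terms in DIMENSIONS.items() if any(t in lowered for t in terms)}


def screen(title: str, abstract: str, keywords: list[str]) -> str:
    dims = matched_dimensions(" ".join([title, abstract, *keywords]))
    if len(dims) == len(DIMENSIONS):
        return "include"        # inclusion: a term from every dimension
    if len(dims) < 2:
        return "exclude"        # exclusion: fewer than two dimensions covered
    return "manual review"      # assumption: two dimensions left to the reviewers


print(screen("Quality of models in MDE", "", []))
print(screen("Water models for river basins", "", []))
```

The field-based criteria (e.g., biology or VHDL models) still require human judgment; the sketch only covers the lexical part of the screening.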

Due to the variety of studies, a classification schema was defined in order to differentiate and analyze them. Here, RQ2 plays a key role in this literature review, because evaluating the model-driven compliance feature allows us to focus on the main artifacts of the modeling process: models and modeling languages. Quality definitions differ for these two artifacts. In fact, the SEQUAL framework (arguably the most complete work on quality in MDE) defines the quality of models (Krogstie 2012c) and the quality of modeling languages (Krogstie 2012b) separately. The first definition is based on seven quality levels (Physical, Empirical, Syntactic, Semantic and Perceived Semantic, Pragmatic, Social, and Deontic). The second definition is based on six quality categories (Domain appropriateness, Comprehensibility appropriateness, Participant appropriateness, Modeller appropriateness, Tool appropriateness, and Organizational appropriateness).

All of the detected studies were analyzed using the questions in Table 2, which were defined in accordance with RQ2. We answered all of the questions in this table for each detected quality study. These questions identify whether or not quality studies address the scope of MDE compliance. For studies that do not offer a quality definition, we identified the type of study proposed, based on categories detected previously in our research.

2.3 Results

Table 3 presents the results of applying the search string to the databases. A second filtering step was necessary to discard studies that appear in the search results but do not contribute to this research. This step was performed using the abstracts of the studies. These studies were considered not pertinent to this research despite their presence in the search results of academic databases: they contain terms from the search string, in accordance with the inclusion criteria defined above, but they do not explicitly provide any method or definition regarding quality in MDE or the support for multiple modeling languages. In fact, such works appear in the results of the search string, but they cover other topics that are aligned with model-driven approaches. We also discarded duplicate studies that appeared in the results of searches on multiple databases. Our analysis was made on 176 relevant studies. A summary of the analysis is presented in Fig. 2.

This filtering is particularly important because it reflects the broad implications of the terms model and quality. Although these discarded works are model-driven compliant, they reflect the ambiguity of model-driven compliance (even without full MDA compliance): the mere existence of models may be taken as enough to claim compliance with the model-driven paradigm. The filtering also shows how generally the terms model and quality are used in software engineering and related areas, which results in a diversity of works that support initiatives under those terms.

During the analysis of the 176 primary studies, we checked whether each paper offered an explicit definition of quality, or at least a conceptual framework from which a definition of quality could be derived by applying some theory. Of the 176 studies, 29 (16.48% of the target population) provide a definition of quality in model-driven contexts. The number of papers that provide a definition of quality is relatively low with respect to the number of identified and filtered studies. This suggests that quality is usually treated as the result of applying a specific approach. In those cases, quality is reduced to specific dimensions (e.g., metrics, detection of defects, increased productivity, cognitive effectiveness, etc.).

Of the 29 studies that provide definitions of quality, 21 (11.93% of all studies) define quality in terms of models. Eighteen of these (10.23%) present the quality of models in terms of diagrams (mostly UML), and only one study (0.57%) defines the quality of textual models. In addition, 15 of the 29 quality studies (8.52%) define quality at the modeling language level: 11 studies (6.25%) at the concrete syntax level, 14 studies (7.95%) at the abstract syntax level, and 10 studies (5.68%) at the language semantics level. Of the 29 quality studies, 8 (4.55%) share the quality definition between models and modeling languages. Similarly, we detected 4 other studies (2.27%) whose definitions of quality do not consider model or language artifacts. These studies are associated with category 1 presented in Section 2.4, which proposes a quality model for a specific model-driven approach.
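All percentages in this subsection are computed over the 176 analyzed studies rather than over the 29 quality studies, which is why, for example, 21 studies correspond to 11.93%. The following Python snippet recomputes them from the counts reported above; the dictionary labels are our own shorthand.

```python
TOTAL_STUDIES = 176  # relevant studies retained after filtering


def share(count: int, total: int = TOTAL_STUDIES) -> str:
    """Percentage of the total, rounded to two decimals as in the text."""
    return f"{100 * count / total:.2f}"


breakdown = {
    "explicit definition of quality": 29,
    "quality of models": 21,
    "quality of diagrammatic models": 18,
    "quality of textual models": 1,
    "quality of modeling languages": 15,
    "concrete syntax level": 11,
    "abstract syntax level": 14,
    "language semantics level": 10,
    "shared between models and languages": 8,
    "no model or language artifact": 4,
    "no explicit definition of quality": 147,
}
for label, count in breakdown.items():
    print(f"{label}: {count} ({share(count)}%)")
```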

On the other hand, 147 studies (83.52% of the identified studies) do not provide an explicit definition of quality in model-driven contexts. These studies are model-driven proposals that address specific aspects of quality, such as methodological frameworks, experiments, and processes. Of these works:

- Five studies (2.84%) present specific adoptions of standards such as ISO 9126 and ISO 25010, descriptive models such as CMMI, and approaches such as goal-question-metric (GQM) to support the operationalization of techniques applied in model-driven contexts (including model transformations).
- Seventy-eight of the 176 identified studies (44.32%) propose methodologies to perform tasks in model-driven contexts that are commonly framed in quality assurance processes (e.g., behavioral verification of models, performance models, guidelines for quality improvement in model transformations, OCL verifications, checklists, model metrics and measurement, etc.).
- Fourteen studies (7.95%) report tools built to evaluate and/or support the applicability of specific quality initiatives in model-driven contexts.
- Twenty-nine studies (16.48%) describe designed experiments or empirical procedures to evaluate quality features of models, mostly oriented toward their understandability.
- Twelve studies (6.82%) report specific dissertations about quality procedures in model-driven contexts, such as data quality, complexity, application of agile methodology principles, evaluation of languages, etc.
- Six studies (3.41%) extend predefined model-driven proposals, such as metamodels, insertion of constraints into complex system design processes, definition of contracts for model substitutability, model-driven architecture extension, etc.
- Seven studies (3.98%) propose domain-specific languages (DSLs) for specific tasks related to model management or model transformations.
- Four studies (2.27%) report model-driven experiences in industrial automation contexts, where models become useful mechanisms to generate software with a higher level of quality, defined as the presence of specific considerations at the modeling level prior to software production.
- Fourteen studies (7.95%) define frameworks for multiple purposes, such as measuring processes, quality of services, enrichment of languages, validation of software implementations according to their design, etc.

The existence of these studies indicates that the terms quality and model are often used as pivots to highlight specific initiatives that cover only certain dimensions of quality and MDE.

2.4 Identified categories of the definition of quality in MDE

In this research, a category is a set of established practices, activities, or procedures for evaluating the quality of models, regardless of their level of formality and the modeling languages involved. A summary of the categories defined according to RQ1 is presented in Table 4 (see footnote 3). The categories reflect the grouping of the identified quality works. In contrast to the previous report (Giraldo et al. 2014), in this extension we found six new categories for quality in MDE, which are highlighted in Table 4.

Category 1: quality model for MDWE. This quality model defines and describes a set of quality criteria (usability, functionality, maintainability, and reliability) for the model-driven web engineering (MDWE) approach. The model also defines the weights for each element of the quality criteria set and the relation of the elements to user information needs (MDE, web modeling, tool support, and maturity).
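One way to picture how weighted criteria yield an overall assessment is a weighted mean of per-criterion scores. In the following Python sketch, only the four criterion names come from the quality model; the weights, the scores, and the weighted-mean aggregation rule are hypothetical assumptions, not the model's actual definitions.

```python
# Criteria taken from the MDWE quality model; the weights and scores are
# hypothetical, and the weighted mean is an assumed aggregation rule.
WEIGHTS = {
    "usability": 0.20,
    "functionality": 0.40,
    "maintainability": 0.25,
    "reliability": 0.15,
}


def weighted_quality(scores: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Aggregate per-criterion scores in [0, 1] into one weighted score in [0, 1]."""
    if set(scores) != set(weights):
        raise ValueError("scores must cover exactly the weighted criteria")
    if any(not 0.0 <= s <= 1.0 for s in scores.values()):
        raise ValueError("scores must lie in [0, 1]")
    total_weight = sum(weights.values())
    return sum(weights[c] * scores[c] for c in weights) / total_weight


tool = {"usability": 0.5, "functionality": 1.0, "maintainability": 0.0, "reliability": 1.0}
print(round(weighted_quality(tool), 2))
```

Dividing by the total weight keeps the result in [0, 1] even if the weights do not sum exactly to one.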

Category 2: SEQUAL framework. This is a semiotic framework derived from the initial framework proposed by Lindland et al. Quality is discussed on seven levels: physical, empirical, syntactic, semantic, pragmatic, social, and deontic. The framework also explains how the different quality types build upon each other.

Category 3: 6C framework. These works propose the 6C quality framework, which defines six classes of model quality goals: correctness, completeness, consistency, comprehensibility, confinement, and changeability. This framework emerges as a grouping element that contains model quality definitions and modeling concepts from previous works, such as those of Lindland, Krogstie, Sølvberg, Nelson, and Monarchi.

Category 4: UML guidelines. In this work, the quality of a model is defined in terms of style guide rules. The quality of a model is not subject to conformance to individual rules, but rather to statistical knowledge embodied as threshold values for attributes and characteristics. These thresholds come from quality objectives that are set according to the specific needs of applications. From the quality point of view, only deviations from these values lead to corrections; otherwise, the model is considered to have the expected quality. While the style guide notifies the user of all rule violations, non-quality is detected only when a combination of metrics reaches critical thresholds.
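The distinction between notifying every rule violation and flagging non-quality only on a combination of threshold breaches can be sketched as follows. The metric names, threshold values, and the rule "at least two thresholds exceeded" are hypothetical assumptions chosen for illustration; in the approach described above, thresholds come from application-specific quality objectives.

```python
from dataclasses import dataclass, field

# Hypothetical critical thresholds; in the style-guide approach they come from
# project-specific quality objectives rather than fixed values.
THRESHOLDS = {"operations": 20, "attributes": 15, "inheritance_depth": 4}


@dataclass
class ElementReport:
    name: str
    rule_violations: list[str] = field(default_factory=list)
    metrics: dict[str, float] = field(default_factory=dict)


def assess(element: ElementReport, thresholds: dict[str, float] = THRESHOLDS,
           min_exceeded: int = 2) -> dict:
    """Report every rule violation, but flag non-quality only on a metric combination."""
    exceeded = [m for m, limit in thresholds.items() if element.metrics.get(m, 0) > limit]
    return {
        "warnings": list(element.rule_violations),      # all violations are notified
        "exceeded": exceeded,
        "non_quality": len(exceeded) >= min_exceeded,   # assumed combination rule
    }


order = ElementReport("Order", ["name not in CamelCase"], {"operations": 25, "attributes": 18})
item = ElementReport("Item", ["missing documentation"], {"operations": 25})
print(assess(order)["non_quality"], assess(item)["non_quality"])
```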

Category 5: model size metrics. Quality is defined in terms of model size metrics (MoSMe). The quality evaluation considers defect density through model size measurement. Size is generally captured by the height, width, and depth dimensions, which indicates that a single size measure is not sufficient to describe an entity.
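Because a single size measure does not describe a model adequately, defect density can be reported against several size measures at once. The following Python sketch does this; the chosen measures (classes, diagrams, use cases) and the counts are hypothetical and are not the MoSMe metric set.

```python
def defect_densities(defects: int, sizes: dict[str, int]) -> dict[str, float]:
    """Defect density relative to each size measure (defects per unit of size).

    sizes -- hypothetical size measures of one model; zero-sized measures are skipped.
    """
    return {measure: defects / size for measure, size in sizes.items() if size > 0}


model_sizes = {"classes": 40, "diagrams": 6, "use_cases": 0}
print(defect_densities(12, model_sizes))
```

Skipping zero-sized measures avoids a division by zero when a model has no elements of a given kind.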

Category 6: quality in model transformations. The work presented in Amstel (2010) defines the quality of model transformation through internal and external qualities. The internal quality of a model transformation is the quality of the transformation artifact itself. The quality attributes that describe the internal quality of a model transformation are understandability, modifiability, reusability, modularity, completeness, consistency, and correctness. The external quality of a model transformation is the quality change induced on a model by the model transformation. The work proposes a direct quality assessment for internal quality and an indirect quality assessment approach for external quality, but only if it is possible to make a comparison between the source and the target models.
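The indirect assessment of external quality can be pictured as evaluating the same metrics on the source and the target model and reporting the difference. In this Python sketch, the dictionary-based model representation and the two metrics are hypothetical; they are not the metric set defined by Amstel (2010).

```python
from typing import Callable

# Hypothetical model representation: class name -> list of attribute names.
Model = dict[str, list[str]]
Metric = Callable[[Model], float]


def class_count(model: Model) -> float:
    return float(len(model))


def avg_attributes(model: Model) -> float:
    return sum(len(attrs) for attrs in model.values()) / len(model) if model else 0.0


def external_quality(source: Model, target: Model, metrics: dict[str, Metric]) -> dict[str, float]:
    """Quality change induced by a transformation: metric(target) - metric(source)."""
    return {name: metric(target) - metric(source) for name, metric in metrics.items()}


source = {"A": ["x", "y"], "B": ["z"]}
target = {"A": ["x"], "B": ["z"], "C": ["y"]}  # e.g., after an extract-class refactoring
print(external_quality(source, target, {"classes": class_count, "avg_attributes": avg_attributes}))
```

The comparison is only meaningful when both models can be measured with the same metrics, which mirrors the condition stated above.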

Another work associated with this category is presented in Merilinna (2005). This work proposes a specific tool that automates the quality-driven model transformation approach proposed in Matinlassi (2005). To do this, the authors propose a procedure that consists of the development of a rule description language, the selection of the most suitable CASE tool for performing the transformations, and the design and implementation of a tool extension for that CASE tool.

In addition, in the work presented in Grobshtein and Dori (2011), quality is a consequence of an OPM2SysML view generation process that uses an algorithm with its respective software application. Thus, quality is defined as the effectiveness with which OPM is faithfully translated into SysML.

Category 7: empirical evidence about the effectiveness of modeling with UML. The identified works do not provide a definition of quality in models; instead, they contain a synthesis of empirical evidence about the effectiveness of modeling with UML, defining it as a combination of positive (benefits) and negative (costs) effects on overall project productivity and quality. These works contribute to quality in models by showing the need for quality assurance methods based on the level of quality required in different parts of the system, and by including consistency and completeness dimensions as part of quality assurance practices, as a consequence of the communicational purposes of (UML) models.

Category 8: understandability of UML. This is an empirical study that evaluates the effect of structural complexity on the understandability of UML statechart diagrams. The report presents three dimensions of structural complexity that affect understandability. The authors also define a set of nine metrics for measuring the structural complexity of UML statechart diagrams. This work is part of a broader body of empirical research on quality in modeling with UML diagrams, which includes works such as Piattini et al. (2011).

Category 9: application of model quality frameworks. This is an empirical study that evaluates and compares feature diagram languages and their semantics. The method relies on formally defined criteria and terminology, based on the highest standards in engineering formal languages defined by Harel and Rumpe, and on a global language quality framework: Krogstie's SEQUAL framework.

Category 10: quality from structural design properties. Quality assurance is the measurement of structural design properties such as coupling or complexity, based on a UML-oriented representation of components. UML design modeling is a key technology in MDA, and UML design models naturally lend themselves to design measurement. The internal quality attributes of relevance in model-driven development are structural properties of UML artifacts. The specific structural properties of interest are coupling, complexity, and size. An example is reported in Mijatov et al. (2013), where the authors propose an approach to validate the functional correctness of UML activities through the executability of a subset of UML provided by the fUML standard.
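The structural properties named above can be computed directly from a design model. The following Python sketch derives size and a coupling count (in the spirit of coupling-between-objects) from a simplified class representation; the representation is hypothetical, complexity is left out, and the measures are illustrative rather than the specific metrics used in the cited works.

```python
from dataclasses import dataclass, field


@dataclass
class UmlClass:
    """Hypothetical, simplified view of a UML class in a design model."""
    name: str
    attributes: list[str] = field(default_factory=list)
    operations: list[str] = field(default_factory=list)
    depends_on: set[str] = field(default_factory=set)  # associations/dependencies


def structural_metrics(classes: list[UmlClass]) -> dict[str, dict[str, int]]:
    """Size (attributes + operations) and coupling (distinct coupled classes)."""
    names = {c.name for c in classes}
    metrics = {}
    for c in classes:
        outgoing = {d for d in c.depends_on if d in names and d != c.name}
        incoming = {o.name for o in classes if c.name in o.depends_on and o.name != c.name}
        metrics[c.name] = {
            "size": len(c.attributes) + len(c.operations),
            "coupling": len(outgoing | incoming),
        }
    return metrics


design = [
    UmlClass("Order", ["id", "date"], ["total"], {"Customer", "Product"}),
    UmlClass("Customer", ["name"]),
    UmlClass("Product", ["price"], ["discount"]),
]
print(structural_metrics(design))
```

Counting both incoming and outgoing dependencies means that `Customer` is coupled to `Order` even though it declares no dependencies itself.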

Category 11: quality of metamodels. Works of this kind propose specific languages and tools to check desired properties on metamodels and to visualize the problematic elements (i.e., the non-conforming parts of metamodels). The validation is performed over real metamodel repositories. When the evaluation is done, feedback is delivered to both MDE practitioners and metamodel tool builders.
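The kind of checks these works perform can be sketched in a few lines. The following Python example encodes a handful of desired properties (a naming convention, resolvable references, abstract classes that have subclasses, and acyclic inheritance) and returns the non-conforming elements so that they can be highlighted. The metaclass representation and the property set are hypothetical; they are not the languages or tools proposed in the cited works.

```python
from dataclasses import dataclass, field


@dataclass
class MetaClass:
    """Hypothetical, simplified metaclass: name, abstractness, supertypes, references."""
    name: str
    abstract: bool = False
    supertypes: list[str] = field(default_factory=list)
    references: dict[str, str] = field(default_factory=dict)  # feature -> target metaclass


def _in_inheritance_cycle(name: str, by_name: dict[str, MetaClass]) -> bool:
    """True if following supertypes from `name` leads back to `name`."""
    stack, seen = list(by_name[name].supertypes), set()
    while stack:
        current = stack.pop()
        if current == name:
            return True
        if current in seen or current not in by_name:
            continue
        seen.add(current)
        stack.extend(by_name[current].supertypes)
    return False


def check_metamodel(classes: list[MetaClass]) -> list[tuple[str, str]]:
    """Return (element, problem) pairs for the non-conforming parts of a metamodel."""
    by_name = {c.name: c for c in classes}
    problems = []
    for c in classes:
        if not c.name[:1].isupper():
            problems.append((c.name, "name does not start with an uppercase letter"))
        for feature, target in c.references.items():
            if target not in by_name:
                problems.append((c.name, f"reference '{feature}' targets unknown class '{target}'"))
        if c.abstract and not any(c.name in o.supertypes for o in classes):
            problems.append((c.name, "abstract class has no subclasses"))
        if _in_inheritance_cycle(c.name, by_name):
            problems.append((c.name, "inheritance cycle"))
    return problems


metamodel = [
    MetaClass("Node", abstract=True),
    MetaClass("edge", references={"src": "Node", "dst": "Vertex"}),
    MetaClass("A", supertypes=["B"]),
    MetaClass("B", supertypes=["A"]),
]
for element, problem in check_metamodel(metamodel):
    print(f"{element}: {problem}")
```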

-

Category 12—formal quality methods: This category is related to the ARENA formal method reported in Morais and da Silva (2015), which allows the quality and effectiveness of modeling languages to be evaluated. The reported selection process was performed over a set of user-interface modeling languages. The framework is a mathematical formula whose parameters are predefined properties specified by the authors.

-

Category 13—quality factors of business process models: In Heidari and Loucopoulos (2014), the authors proposed the quality evaluation framework (QEF) method to assess the quality of business processes through their models. This method could be applicable to any business process notation; however, its first application was reported on BPMN models. The framework relates and measures business process quality factors (such as resource efficiency, performance, and reliability) that are inherent properties of a business process concept and can be measured by quality metrics.

In this category, the SIQ framework (Reijers et al. 2015) is also identified for the evaluation of business process models. SIQ distinguishes three categories for evaluating models: syntactic, semantic, and pragmatic. This invites an association of SIQ with previous quality frameworks such as SEQUAL (category 2) and some works of Moody; however, the authors clarify that the SIQ categories are not the same as those previously defined in the other quality frameworks. The authors show that SIQ is a practical framework for performing quality evaluation that has links with previous quality frameworks, and that it attempts to integrate concepts and guidelines from research in the BPM domain.

A complete list of works on quality for business process modeling is presented in De Oca et al. (2015). This work reports a systematic review for identifying relevant works that address quality aspects of business process models. The works were classified using the CMQF framework (Nelson et al. 2012), which is a combination of SEQUAL and the Bunge-Wand-Weber ontology.

-

Category 14—quality procedures derived from IS success evaluation framework: The authors in Maes and Poels (2007) proposed a method to measure the quality of modeling artifacts through the application of a previous framework of Seddon (1997) for evaluating the success of information systems. The method proposes a selection of four related evaluation model variables: perceived semantic quality (PSQ), perceived ease of understanding (PEOU), perceived usefulness (PU), and user satisfaction (US). This method is directly associated with a manifestation of the perceived semantic quality (category 2) described in Krogstie et al. (1995).

-

Category 15—a quality patterns catalog for modeling languages and models: The authors in Sayeb et al. (2012) propose a collaborative pattern system that capitalizes on the knowledge about the quality of modeling languages and models. To support this, the authors introduce a web management tool for describing and sharing the collaborative quality pattern catalog.

-

Category 16—an evaluation framework for DSMLs that are used in a specific context: the authors in Challenger et al. (2015) formulate a specific quality evaluation framework for languages employed in the context of multi-agent systems (MAS). Their systematic evaluation procedure is a comparison of a modeling proposal with a hierarchical structure of dimension/sub-dimension/criteria items. The lower level (criteria) defines specific MAS characteristics. For this category, quality is a dimension that has two sub-dimensions: the general DSML assessment sub-dimension (with criteria such as domain scope, suitability, domain expertise, domain expressiveness, effective underlying generation, abstraction-viewpoint orientation, understandability, maintainability, modularity, reusability, well-written, and readability) and the user perspective sub-dimension (with criteria such as developer ease, and advantages/disadvantages). Both sub-dimensions are addressed by qualitative analysis; it is assumed that this type of analysis is performed with case studies that are designed with experimental protocols.

2.5 Adaptive sampling

Using the principles of the adaptive sampling approach defined in Thompson and Seber (1996), we analyzed the identified papers in order to explore clustered populations of studies about quality in models. We reviewed the bibliographical references of each study to detect reference authors or works (i.e., previous studies, published before the analyzed study, that are cited in the identified quality studies). We defined reference authors or reference works as those cited by at least two of the identified quality studies written by different authors.

To do this, we built Tables 5 and 6, where the rows refer to the authors of the identified quality studies and the columns contain the referenced authors or works. A black-filled (i, j) cell in Tables 5 and 6 indicates that the author of column j has influenced the authors of row i; that is, the i-author(s) cite the j-author(s) in the analyzed quality study(ies).

In Table 5, the columns (or j-authors) correspond to the same authors of the quality studies; this was done intentionally in order to show the influence of these authors on the analyzed quality studies. Table 5 shows that Krogstie (category 2) is the author with the most influence on the quality works analyzed: his work influences 50% of the identified quality studies, followed by Lange (category 5) with 31.3%. Two special cases occur in the columns of Krogstie and Mohagheghi (category 3): they appear as authors of identified quality papers, but the analyzed studies also cite other works by them that were not retrieved in the searches of the academic databases. We wanted to highlight these other works of the authors that influence the analyzed studies.
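The construction of Tables 5 and 6 and the reference-work criterion can be sketched as follows: each row (the author group of an identified quality study) maps to the set of authors or works it cites, a cell (i, j) is 1 when row i cites column j, and a column counts as a reference work when at least two rows cite it. The function names and example data are hypothetical, and the sketch assumes each row is a distinct author group, so that counting rows amounts to counting citing studies by different authors. Percentages are computed over all rows; for instance, if there were 16 rows, a column cited by 8 of them would yield 50%.

```python
def citation_matrix(citations: dict[str, set[str]]) -> tuple[list[str], dict[str, list[int]]]:
    """Build the (i, j) matrix: a cell is 1 when row i cites column j (a black cell in the tables)."""
    columns = sorted({work for cited in citations.values() for work in cited})
    rows = {row: [int(col in cited) for col in columns] for row, cited in citations.items()}
    return columns, rows


def reference_works(citations: dict[str, set[str]], min_rows: int = 2) -> dict[str, float]:
    """Columns cited by at least `min_rows` distinct rows, with the percentage of rows citing each."""
    columns, rows = citation_matrix(citations)
    total = len(rows)
    result = {}
    for j, col in enumerate(columns):
        n = sum(cells[j] for cells in rows.values())
        if n >= min_rows:
            result[col] = 100 * n / total
    return result


# Hypothetical rows (author groups of quality studies) and the authors/works they cite
citations = {
    "Author group A": {"Krogstie", "OMG"},
    "Author group B": {"Krogstie", "ISO 9126"},
    "Author group C": {"OMG"},
    "Author group D": {"Lindland"},
}

columns, rows = citation_matrix(citations)
print(columns)
for row, cells in rows.items():
    print(row, cells)
print(reference_works(citations))
```

Here "ISO 9126" and "Lindland" are each cited by a single row, so they do not qualify as reference works under the two-study threshold.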

Table 5 also shows the studies that are referenced, created, or influenced by works of the same author. These studies do not influence other authors or proposals for quality in models. However, Table 5 also reveals communities of quality researchers on topics such as model metrics and guidelines, mainly applied to UML. Works led by Lange, Chaudron, and Hindawi contribute to the consolidation of these research communities. This community phenomenon was originally reported in Budgen et al. (2011) and is described in works such as Lange and Chaudron (2006), Lange et al. (2003), Lange and Chaudron (2005), and Lange et al. (2006). In fact, the works of Lange presented in Budgen et al. (2011) suggest that most model quality problems are related to the design process, which indicates that such conflicts arise in all viewpoint-based forms of modeling, not just UML.

In Table 6, the columns represent other authors or works identified in the review of the bibliographical references of each quality study. As Table 6 shows, the OMG specifications and the ISO 9126 standard are the most important industrial references that influence the formulation of quality studies.

The OMG specifications were cited by 68.8% of the authors of the identified categories. The most cited OMG specification was MDA, followed by the UML, MOF, OCL, and SysML specifications. Citing the OMG standards suggests that the works are MDA compliant, but this does not necessarily mean an explicit adoption of, or alignment with, the MDA initiative itself. The ISO standards (cited by 50% of the works) are used to support quality model proposals based on their taxonomy of characteristics, sub-characteristics, and quality attributes, and this taxonomy is also used for evaluation purposes. This kind of adoption excludes the quality dimensions involved in the ISO standards (process quality, internal quality, external quality, and quality in use).
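To make the taxonomy-based use of the ISO standards concrete, the sketch below encodes a fragment of the ISO/IEC 9126-1 hierarchy (two characteristics with some of their sub-characteristics) as a tree whose leaves are model-level quality attributes, and scores it bottom-up. The leaf attributes and their normalized scores are hypothetical, and the unweighted-mean aggregation is an assumption for illustration; the standard itself does not prescribe this aggregation.

```python
from statistics import mean

# Characteristics and sub-characteristics follow ISO/IEC 9126-1; the leaf
# attributes and their normalized scores (0..1) are hypothetical.
quality_model = {
    "Usability": {
        "Understandability": {"diagram_legibility": 0.8, "naming_consistency": 0.6},
        "Learnability": {"notation_familiarity": 0.5},
    },
    "Maintainability": {
        "Analysability": {"traceability_links": 0.9},
        "Changeability": {"coupling_score": 0.7, "modularity_score": 0.5},
    },
}


def aggregate(node) -> float:
    """Score a node as the unweighted mean of its children; leaves are attribute scores."""
    if isinstance(node, dict):
        return mean(aggregate(child) for child in node.values())
    return node


for characteristic, subtree in quality_model.items():
    print(characteristic, round(aggregate(subtree), 3))
print("Overall", round(aggregate(quality_model), 3))
```

A weighted mean, or thresholds per attribute, would be equally valid choices; the point is only that the hierarchy gives a structure along which measurements can be rolled up.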

Lindland’s quality framework (Lindland et al. 1994) is one of the reference frameworks most frequently used and cited by the authors of the primary studies (43.75%). This framework was one of the first quality proposals, and it takes into account syntactic, semantic, and pragmatic quality regarding goals, means, activities, and modeling properties. Krogstie’s quality framework (an evolution of Lindland’s framework) is recognized as the work with the most influence on contemporary works about the quality of models. In the case of Krogstie and Moody (cited by 31.25% of the works), the authors of the analyzed studies cited early papers in which these authors presented the first versions and applications of their approaches. Finally, it is important to highlight the references to Kitchenham’s works, which support the application of systematic review guidelines and analysis procedures in empirical software engineering.
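Lindland et al. (1994) state their quality goals in set-theoretic terms, with M the set of statements in the model, L the statements expressible in the language, and D the statements that are correct and relevant about the domain: syntactic correctness requires M \ L = ∅, validity requires M \ D = ∅, and completeness requires D \ M = ∅. The sketch below checks these three goals on small, finite sets of statements written as strings. This finite encoding is a simplifying assumption (L and D are generally not enumerable), the framework's notion of feasible (rather than total) validity and completeness is ignored, and pragmatic quality, which concerns how the audience interprets the model, is not covered.

```python
def lindland_violations(model: set[str], language: set[str], domain: set[str]) -> dict[str, set[str]]:
    """Return the statements that violate each set-theoretic goal of Lindland et al. (1994)."""
    return {
        "syntactic": model - language,   # M \ L: statements not allowed by the language
        "validity": model - domain,      # M \ D: statements that are incorrect or irrelevant
        "completeness": domain - model,  # D \ M: domain statements missing from the model
    }


# Hypothetical, finite toy sets of statements
language = {"Customer places Order", "Order has Lines", "Order has Discount"}
domain = {"Customer places Order", "Order has Lines", "Customer has Address"}
model = {"Customer places Order", "Order has Discount", "Order ->> Lines"}

for goal, violations in lindland_violations(model, language, domain).items():
    print(goal, sorted(violations))
```

An empty set for a goal means the model satisfies it; in practice, the framework treats total validity and completeness as ideals and asks for feasible levels instead.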

2.6 Other findings

As a consequence of the searches performed, we identified studies belonging to the same authors or topics. These were sets of related works with specific approaches for evaluating quality over models, such as model metrics, defect detection, cognitive evaluation procedures, checklists, and other works about quality frameworks. This distinction is particularly important for our research because of the presence of these works in the search results; however, most of them do not contribute a formal definition of quality in models. Instead, they focus on specific topics that are considered in quality strategies.

The identified families are the following:

-

Understandability of UML diagrams (Piattini et al.).

-

SMF approach (Piattini et al.).

-

NDT (University of Sevilla, Spain)

-

SEQUAL Framework (Krogstie)

-

Constraint—Model verification (Cabot et al., and others) (Chenouard et al. 2008; González et al. 2012; Tairas and Cabot 2013; Planas et al. 2016).

-

OOmCFP (Pastor et al.) (Marín et al. 2010; Marín et al. 2013; Panach et al. 2015a).

-

6C Framework (Mohagheghi et al.).

These families show how the interpretation of quality is reduced to specific procedures or approaches, in a way similar to the mismatches and limitations surrounding the term software quality. Because of this, some authors such as Piattini et al. (2011) suggest the need for more empirical research to develop (at least) a theoretical understanding of the concept of quality in models.

2.7 Discussion

Section 2.4 answered RQ1 (the meaning of quality in the MDE literature). The obtained categories of quality were classified in accordance with the schema defined in Table 2 (derived from RQ2). Despite the many model-driven works, tools, modeling languages, etc., the concept of quality has only been ambiguously defined by the MDE community. Most quality proposals focus primarily on the evaluation of UML for a wide variety of interests and goals.

Works about quality in MDE are limited to specific initiatives of the researchers, with no applicability beyond the research or the specific works considered. This contrasts with the relative maturity of quality definitions such as the one presented in Section 2.2 (SEQUAL framework).

The low number of works on quality and the diversity of quality categories reflect specific quality frameworks and the respective communities that support these quality concepts. The high number of results in the searches performed indicates misconceptions about quality, due to the wide spectrum of model engineering in terms of its ease of application (any model can conform to MDE) and the lack of mechanisms to determine when something is in accordance with MDE.

There are many definitions of quality in models in the literature, but there is also dispersion and general disagreement about quality in MDE contexts, as demonstrated by the multiple categories of quality in MDE presented in Section 2.4.

MDE requires a definition of quality that is aligned with the principles and main motivations of this approach. Extrapolating software quality approaches alone is insufficient, because we move from a concrete level (code production, software quality assurance activities) to a higher level of abstraction that supports specific modeling domains.

Traditional evaluations of UML are not enough for a full understanding of quality in models: UML is oriented to functional software features, and it is an object-oriented modeling approach. UML is the de facto software modeling approach, but evaluating quality in models only in terms of UML excludes the overall spectrum of MDE initiatives. Likewise, a quality evaluation focused on cognitive effectiveness could restrict the overall quality in models to the diagram and notational levels.

The quality proposals analyzed do not consider how to reduce the complexity added by model quality activities (experiments, changes in syntax and semantics, evaluation of quality features at a high level of abstraction, etc.).

The quality evaluation categories reported do not take into account the implications at the tool level. Tools are a particularly important issue because a language can be explained through its associated tool. New challenges related to the tools that support MDE initiatives have emerged; an example can be seen in Köhnlein (2013). In the proposals, tools are limited to validation cases with no applicability beyond the proposal itself. Also, the lack of reports about the validation and use of the quality proposals indicates that they were formulated at a preliminary stage of research.

2.8 The relationship between quality in MDE and V&V

Verification and validation procedures (commonly referred to as V&V) are key strategies in the software quality area for avoiding, detecting, and fixing defects and quality issues in software products. These procedures are applied throughout the life cycle of the software product before its release.

MDE also takes advantage of V&V procedures by applying them to modeling artifacts (i.e., languages, models, and transformations) in order to find issues before the generation of artifacts such as source code or other models. One of the most representative examples of V&V in the MDE literature is the MoDEVVa (model-driven engineering, verification and validation) workshop of the ACM/IEEE MODELS conference.

Thirteen of the 16 categories of quality in MDE are associated with specific V&V procedures in MDE reported by the authors; notable examples are the studies reported in Mijatov et al. (2013) (category 10) and López-Fernández et al. (2014) (category 11), which appear in the proceedings of the MoDEVVa workshop (MoDEVVa 2013 and MoDEVVa 2014, respectively). The remaining three categories (2, 3, and 15) provide guidance for evaluating quality in modeling artifacts; works in these categories must be interpreted in order to be applied in specific evaluation scenarios.

3 A mismatch analysis between industry and academy field on MDE quality evaluation

Quality in models and modeling languages was considered in several ontological IS frameworks even before the formulation of the model-driven architecture (MDA) specification by the Object Management Group (OMG), as mentioned above. The ISO 42010 standard (612, 2011) states that architecture descriptions are supported by models, but it recognizes that evaluating the quality of the architecture (and its descriptions) is the subject of further standardization efforts.

The survey artifact proposed in the CMA workshop of the MODELS conference presents a set of key features for all modeling approaches, considering issues related to the modeling paradigm involved, the notation, views, etc. This is a valuable effort to harmonize the study of modern modeling approaches, and it suggests high-level features to analyze in modeling languages. However, some key issues such as usability, expressiveness, completeness, and abstraction management (which are key in ontological frameworks) are poorly described. The support for transformations between models, the role of tools in a model-driven context, and diagrams as the main interaction mechanism between models and users also require better descriptions.

The above evidence shows that quality in MDE is a recognized factor in the adoption of model-driven initiatives in real contexts, e.g., software, IS, or complex engineering development processes. Therefore, the use (or consideration) of the MDE paradigm in industrial scenarios is an important source for detecting quality issues, given that these issues would affect the adoption of model-driven initiatives. It is also important to identify how well current MDE quality proposals support model-driven industrial communities and practitioners.

For this reason, we performed a complementary literature review in order to find evidence of the mismatch between the research field of modeling language quality evaluation and actual MDE practice in industry. In Giraldo et al. (2015), we presented the preliminary results of a literature review. This search is currently ongoing.

3.1 Literature review process design

We performed a structured literature review using the backward snowballing approach. This approach has been shown to yield results similar to those of search-string-based searches in terms of conclusions and patterns found (Jalali and Wohlin 2012), and we did not want to miss valuable gray literature. Gray literature is not published commercially and is seldom peer-reviewed (e.g., reports, theses, technical and commercial documentation, scientific or practitioner blog posts, official documents), but it may contain facts that complement those of conventional scientific publications.

Figure 3 summarizes the literature review protocol that was performed. This literature review is an extension of a previous systematic review reported in Section 2.2. The snowballing sampling approach helps to identify additional works from an initial reference list. This list was obtained from an initial keyword search. We use the snowballing procedure reported in Wohlin (2014) to address the following research questions:

-

RQ1: What are the main issues reported in MDE adoption for industrial practice that affect modeling quality evaluation?

-

RQ2: What is the focus of works on modeling quality evaluation in the corresponding research field?

-

RQ3: Does the term model quality evaluation have a similar meaning in both the industrial level and the academic/research level?

-

RQ4: Is there a clear correspondence between industrial issues of modeling quality and trends in the identified research?

Our snowballing search method was performed as follows:

1. The initial searches were done on scientific databases and search engines such as Scopus, ACM Digital Library, IEEE Xplore, Springer, ScienceDirect, and Wiley, including conference proceedings and associated journals. We used the following search string:

$$(\textit{MDE} \lor \textit{Model-driven*}) \land (\textit{real adoption} \lor \textit{adoption issues} \lor \textit{problem report})$$

2. From the resulting works, we chose articles that explicitly report on the applicability of the MDE paradigm in real contexts.

3. For those relevant works, quality issues were identified, and their reference lists were reviewed to find related works reporting quality issues. This iteration was repeated until no new works were identified.

4. To complement the quality issues detected, we analyzed web portals of software development communities, such as blogs, technical web sites, forums, social networks, and portals accessed from Google web search, using strings similar to those used in the scientific database searches. Our goal was to identify manifestations of model quality from software practitioners who work under specific technical and business constraints.
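Steps 1 to 3 above can be sketched as a small pipeline: a predicate that evaluates the boolean search string against a title, a relevance filter standing in for step 2, and a loop that keeps following reference lists until no new works appear (step 3). The catalog, the reference lists, and the `is_relevant` flag are hypothetical; in the actual review, relevance was judged by reading the full text, and step 4 (web portals) is not modeled. Treating the `*` wildcard as a prefix match and the phrases as plain substrings is also an assumption.

```python
import re

# Hypothetical catalog: work id -> (title, explicitly reports MDE applicability in a real context?)
catalog = {
    "W1": ("Model-driven engineering: real adoption in industry", True),
    "W2": ("MDE adoption issues in embedded systems", True),
    "W3": ("A new UML profile for banking", False),
    "W4": ("Interviews with MDE practitioners", True),
    "W5": ("Tool support for DSL editors", False),
    "W6": ("Practitioner blog: why our model-driven project stalled", True),
}
# Hypothetical backward links: work -> works it cites
references = {"W1": ["W3", "W4"], "W2": ["W4"], "W4": ["W5", "W6"]}


def matches_query(text: str) -> bool:
    """(MDE OR Model-driven*) AND (real adoption OR adoption issues OR problem report)."""
    t = text.lower()
    topic = re.search(r"\bmde\b", t) is not None or "model-driven" in t  # prefix match covers "*"
    concern = any(p in t for p in ("real adoption", "adoption issues", "problem report"))
    return topic and concern


def is_relevant(work: str) -> bool:
    """Step 2 stand-in: keep works that explicitly report MDE applicability in real contexts."""
    return catalog[work][1]


def backward_snowball(seeds: list[str]) -> set[str]:
    """Step 3: follow reference lists of relevant works until no new works are found."""
    included: set[str] = set()
    frontier = [w for w in seeds if is_relevant(w)]
    while frontier:
        work = frontier.pop()
        if work in included:
            continue
        included.add(work)
        frontier.extend(r for r in references.get(work, []) if r not in included and is_relevant(r))
    return included


seeds = [w for w, (title, _) in catalog.items() if matches_query(title)]
print("seeds:", seeds)
print("included:", sorted(backward_snowball(seeds)))
```

In this toy run, W4 and W6 do not match the search string and are reached only through reference lists, which is what the snowballing iteration adds over a keyword search alone.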

Several inclusion/exclusion criteria were applied on the search results to identify relevant works for our analysis. These criteria are as follows:

Inclusion criteria

-

Works that explicitly present a manifestation of quality in a model-driven issue. Examples of these manifestations are model transformation tool problems, misalignment of model-driven principles with specific business concerns, and skepticism about the real application and sufficiency of model-driven approaches, among others.

-

Reports that include an approach to identify model-driven issues in real applications (e.g., interviews with people that perform roles within an IS project, questionnaires, or description about real experiences).

-

Works that relate (and/or perform) a literature review approach on the applicability of model-driven approaches in real scenarios.

-

For non-academic works (web portals), we checked the impact and quality of the posted information. This was done by reviewing the forum messages, the academic references used, and the level of the community that supports those portals in terms of technological reports, conference-related mentions, and participants’ profiles.

-

For non-academic works (web portals), we checked the link between authors and participants and well-known companies that report the application of model-driven approaches (e.g., MetaCase, Mendix, Integranova, etc.), and academic/industrial conferences related to model-driven and IS topics (e.g., CodeGeneration Conference, RCIS, CAiSE, MODELS, etc.).

Exclusion criteria

-

Works that report application cases of model-driven compliance approaches or initiatives (notations, application on a specific domain, guidelines, etc.), but whose main focus is the promotion of those specific approaches, without considering the collateral effects of their application.

Each included work was analyzed in order to find quality evidence (i.e., explicit sentences) in the reported adoption of the model-driven approach. Because of the kind of works detected and the level of formality of their sources, it was necessary to access the full content of each work to determine the relevance of each contribution to the expectations formulated in our research questions. Although the search strings used common terms, we only accepted works that report on the applicability of MDE.

More information about reported quality issues can be found in the technical report available in Giraldo et al. (2016). This report presents all the works with their associated statements that support the detected quality issues. During the review of these issues, we found that quality evidence could be categorized as follows:

Industrial issues (RQ1)

-

Industrial issue 01: Implicit questions derived from the MDE adoption itself.

-

Industrial issue 02: Organizational support for the MDE adoption.

-

Industrial issue 03: MDA not enough.

-

Industrial issue 04: Tools as a way to increase complexity.

Academic/research issues (RQ2)

-

A/R issue 01: UML as the main language to apply metrics over models and defect prevention strategies.

-

A/R issue 02: Hard operationalization of model-quality frameworks.

-

A/R issue 03: Software quality principles extrapolated at modeling levels.

-

A/R issue 04: Specificity in the scenarios for quality in models.

Sections 3.2 and 3.3 describe in depth the above categories related to RQ1 and RQ2, respectively. Section 3.4 presents the results of the mismatch related to RQ3 and RQ4.

3.2 Detected categories for industrial quality issues

In response to RQ1, we now present the four categories that we defined for grouping the sentences of industrial quality issues. In Giraldo et al. (2016), 240 quality sentences from industrial sources are reported. These affect the perception of model-driven initiatives and, therefore, their quality. Each category groups sentences from several sources that share a common quality issue. These categories were used to facilitate the analysis of the industry-academy mismatch.

The MDA is not enough category groups the sentences that report the inability of the MDA specification to resolve questions about the use and application of models and modeling languages (see Section 2.1). The Implicit questions derived from the MDE adoption itself category groups sentences reporting open questions that remain unresolved when a model-driven initiative (with its associated set of languages, models, transformations, and tools) is applied in a specific context.

The Tools as a way to increase complexity category groups the sentences that report explicit problems in the use and application of model-driven tools (e.g., tools based on the Eclipse EMF-GMF frameworks and associated projects). Tools are the main mechanism for creating and managing models by the application of modeling languages. Finally, the Organizational support for the MDE adoption category groups the sentences that report issues in the organizational adoption of model-driven initiatives.

In the following, we describe each category in more detail:

3.2.1 MDA is not enough

As a reference architecture, MDA provides the foundation for the use and transformation of models in order to generate software across three predefined abstraction levels. A definition of quality in models that rests only on alignment with MDA would not be enough, because compliance with the guidelines of this architecture is the minimum criterion expected for managing models, and it must be implicitly supported by current tools and model-driven standards.

A real consequence of this MDA insufficiency is presented in Hutchinson et al. (2014). The authors identify the lack of consensus on the best language and tool as a pending issue that is not covered by the MDA specification. This issue affects real scenarios where a combination of languages is used to support specific industrial tasks. The model-driven community has recognized the lack of structural updates to the MDA specification in the last decade, which results in imprecise semantic definitions over models and transformations (Cabot). The MDA Guide revision 2.0 (OMG 2014), released in June 2014, still exhibits these issues.

3.2.2 Implicit questions derived from the MDE adoption itself

This category covers concerns about the suitability of languages and tools (Hutchinson et al. 2014; Staron 2006), new development processes derived from MDE adoption (Hutchinson et al. 2014), MDE deployment (Hutchinson et al. 2011a), the scope of the MDE application (Aranda et al. 2012; Whittle et al. 2014), and implicit questions about how and when an MDE approach is applied, e.g., when and where to apply MDE? (Burden et al. 2014), and which MDE features mesh most easily with features of organizational change? which create most problems? (Hutchinson et al. 2011a). The correct usage of the modeling foundations in current modeling approaches is also questioned (Whittle et al. 2014).

3.2.3 Tools as a way to increase complexity

The lack of support for MDE tools and of trained people means that great effort is required to adapt them to the context of the organization, probably with less than optimal results (Burden et al. 2014). This issue leads to problems with the following: customization, tailoring, and interoperability among modeling tools (Burden et al. 2014; Mohagheghi et al. 2013b), management of traceability with several tools (Mohagheghi et al. 2013b), the high level of expertise and effort required to develop an MDE tool (Burden et al. 2014; Mohagheghi et al. 2013b), tool integration (Baker et al. 2005; Burden et al. 2014; Mohagheghi and Dehlen 2008b; Mohagheghi et al. 2013a), the dissatisfaction of MDE practitioners with the available tools (Tomassetti et al. 2012), the lack of technological maturity of the tools (Mohagheghi et al. 2013a), the scaling of the tools to large system development (Mohagheghi and Dehlen 2008b), poor user experience (Mohagheghi et al. 2009b), too many dependencies for adopting MDE tools (Whittle et al. 2013), and poor performance (Baker et al. 2005).

3.2.4 Organizational support for the adoption of MDE

This category represents issues related to commitments, costs, especially training (Hutchinson et al. 2014), resistance to change (Aranda et al. 2012), the alignment and adaptation of MDE with how people and organizations work (Burden et al. 2014; Whittle et al. 2014), and organizational decisions based on diverging expert opinions (Hutchinson et al. 2011b).

The main concern of these works is the misalignment between model-driven principles and organizational elements. Most of the works on model-driven compliance are related to technical adoption, such as modeling tools, model-transformation consistency, and the incorporation of models into software development scenarios. However, due to the lack of an explicit model-driven process, final model users may not be able to manage organizational issues completely within a model-driven approach.

3.3 Detected categories for academic/research quality issues

In response to RQ2, we propose another four categories that group the works on quality evaluation in the academic/research field according to their focus. Seventy-one issues from this field were reported in Giraldo et al. (2016). The categories reflect the intentions of researchers in the model-driven field for managing quality issues. They are as follows:

3.3.1 Hard operationalization of model-quality frameworks

High abstraction and specific model issues influence the operationalization of model quality frameworks (i.e., the instrumentation of a framework by a software tool). Therefore, quality rules or procedures may not be fully implementable by operational mechanisms such as XSD schemas, EMF Query support, etc. Störrle and Fish (2013) present an attempt to operationalize the Physics of Notations evaluation framework (Moody 2009); however, this operationalization (and any similar proposal) could be ambiguous as a consequence of the lack of precision and detail of the framework itself.

An example of a model quality assurance tool is reported in Arendt and Taentzer (2013), where an operational process for assessing quality through static model analysis is presented. Rather than operationalizing a model quality framework as such, the authors use a quality framework such as 6C (Mohagheghi et al. 2009a) as a conceptual basis for deriving a quality assurance tool.

The lack of full operationalizations of model quality evaluation frameworks shows that model evaluation is still more an art than a science (Nelson et al. 2005), and that current specifications for evaluating quality in models and modeling languages continue to be complex procedures for language designers and final model users.

3.3.2 Defects and metrics mainly in UML

Most of the quality proposals for models focus their effort on applying metrics to UML models and defining guidelines to detect and avoid defects in UML diagrams. This trend is a direct consequence of limiting the model-driven paradigm to UML terms.

This limitation stems from the specific model-driven vision of the OMG, which promotes the model-driven approach through UML, a language that offers a set of modeling notations covering multiple aspects of business and systems modeling. MDA also promotes extending UML through profiles, tailoring the core UML capabilities within a unified tooling environment (OMG 2003, 2014).

However, this vision contrasts with the low incidence of UML as the main artifact in software and IS development processes. Clear and recent evidence is reported in Petre (2013), where the main trend regarding the use of UML among a group of software experts was No Usage (No UML); the second most representative trend was that UML models were useful artifacts for specific and personal tasks but were discarded once explanatory tasks were completed. Very few of the participating experts mentioned UML in code-generation tasks. This vision also contrasts with recent evidence of UML being removed from recognized development environments due to its lack of use, as reported in Krill (2016).

Ambiguity in UML persists because of the specific meanings and interpretations that model practitioners apply to it. This ambiguity directly hinders the full adoption of UML as a standard in software and information systems development communities. Moreover, there is no link between the quality issues reported for UML and OMG's standardization effort for UML. The complexity of the formal UML specifications adds to the confusion among model-driven practitioners.

3.3.3 Specificity in the scenarios for quality in models

The most relevant works on this issue each define model quality from a specific focus. The quality frameworks formulated in Krogstie (2012a) and Lindland et al. (1994) have a semiotic foundation, because domain representation relies on the use of signs. Other works, such as Mohagheghi and Dehlen (2008a) and Mohagheghi et al. (2009a), propose desirable features (goals) for models. Some proposals are specific to the scope of the research performed (e.g., Domínguez-Mayo et al. 2010).

Some of the classical procedures for verifying the quality of conceptual models concern the cognitive effectiveness of notations (generally UML models). As a result, the motivation for quality is limited to evaluating (and possibly intervening in) a notation.

3.3.4 Software quality principles extrapolated at modeling levels

Within the MDE literature, some proposals extrapolate specific approaches for evaluating software quality to the model level, on the grounds that MDE is an approach within software engineering. The reported software quality approaches include the use of metrics, defect detection in models, the application of software quality hierarchies (in terms of characteristics, sub-characteristics, and quality attributes), best practices for implementing high-quality models, and model transformations. There is even a research area oriented to evaluating the usability of modeling languages (Schalles 2013), where the usability of diagrams is prioritized as the main quality attribute of models.
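A software quality hierarchy of the kind mentioned above can be pictured as a tree whose leaves are measured quality attributes and whose inner nodes are sub-characteristics and characteristics. The sketch below is a minimal illustration that assumes scores normalized to [0, 1] and an unweighted mean at every level; actual standards and frameworks define their own measures and aggregation rules. The `QualityNode` type and all node names and scores in the usage example are hypothetical.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import List


@dataclass
class QualityNode:
    """A characteristic, sub-characteristic, or measured attribute (hypothetical)."""
    name: str
    score: float = 0.0  # used only by leaves; assumed to lie in [0, 1]
    children: List["QualityNode"] = field(default_factory=list)

    def evaluate(self) -> float:
        """Leaves return their measured score; inner nodes average their children."""
        if not self.children:
            return self.score
        return mean(child.evaluate() for child in self.children)


# Usage: a hypothetical characteristic with two sub-characteristics.
usability = QualityNode("usability", children=[
    QualityNode("learnability", children=[
        QualityNode("notation symbols documented", 1.0),
        QualityNode("examples available", 0.5),
    ]),
    QualityNode("operability", children=[
        QualityNode("editor support", 0.5),
    ]),
])
print(usability.evaluate())  # 0.625
```

The unweighted mean keeps the example short; it also hides the assumption, implicit in any such extrapolation, that attributes defined for software products are meaningful when measured on models.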

The main motivation for this extrapolation is the relative maturity of software quality initiatives. Moody (2005) suggests formulating quality frameworks for conceptual models based on the explicit adoption of the ISO 9126 standard, because it is widely used in real scenarios and makes the properties of a product or service recognizable. Kahraman and Bilgen (2013) present a set of artifacts formulated to support the evaluation of domain-specific languages (DSLs). These instruments are derived from an integration of the CMMI model, the ISO 25010 standard, and the DESMET approach. The success of a DSL is defined as a combination of related characteristics that must be collectively possessed (by combining practices from CMMI and the ISO 25010 hierarchy). Proposals of this kind assume that there is a relation between organizational process improvement efforts, their maturity levels, and the quality of DSLs.

Software quality involves a strategy for producing software that ensures user satisfaction, absence of defects, compliance with budget and time constraints, and the application of standards and best practices for software development. However, software quality is a ubiquitous concern in software engineering (Abran et al. 2013); therefore, in the MDE context, adopting the MDE approach requires additional effort.

3.4 Findings in the literature review of mismatch

For this literature review, journal papers were the main source of quality issues in both contexts (industrial and research), as shown in Fig. 4. However, for the industrial context, specialized websites (gray literature) make significant contributions to the discussion of quality from a practitioner's perspective. We found 49 industrial works and 24 academic/research works, for a total of 73 analyzed works.

To answer RQ3, Table 7 presents the identified works classified into the categories described in Section 3.1. The mismatches found show that model-driven practitioners perceive the quality of models and modeling languages in different ways, depending largely on the application context in which modeling approaches are used.

Figure 5 shows the percentage of quality issues detected in the industrial works analyzed. From a real software engineering perspective, one would initially assume that model-driven tools, and their consequences for development and organizational environments, have the highest impact. However, in the industrial works analyzed, the issue implicit questions derived from the MDE adoption itself emerged as the primary quality concern regarding the applicability of models and modeling languages. This issue stems from the great ambiguity about when something is in MDE (or when something is MDE compliant) and from the open questions raised when models are applied.

Clearly, industrial publications show a marked trend toward discussing the deficiencies, consequences, and support of the modeling act itself before the use of specific modeling tools. In addition, quality issues related to tools are evident in the detected works. Beyond the consequences of applying model-driven initiatives, tools become a key artifact for perceiving, measuring, and managing quality issues in modeling languages, taking into account organizational, interactional, and technical concerns.

The results in Fig. 6 highlight academic and research works that address industrial issues such as implicit questions derived from the MDE adoption itself and tools as a way to increase complexity. Some statements from academic and research sources are aligned with industrial issues. However, Fig. 6 shows that the percentage of works addressing industrial issues is lower than the combined percentage of works that promote the specific interests of researchers in this field. This shows that model-driven researchers tend to focus on theoretical work, and that these industrial issues are of little interest or relevance to them. This lack of research support widens the conceptual and methodological gaps in the real application of model-driven initiatives and adds to the confusion in the model-driven paradigm.

An example of this theoretical emphasis is the relative proximity between the industrial issue implicit questions derived from the MDE adoption itself and the academic/research issue defects and metrics mainly in UML. Many efforts target the quality management of models by intervening in modeling practices in UML as the de facto language for software analysis and design. There is clearly a gap between these quality trends and the reports on the real usage and applicability of UML, such as the study reported in Petre (2013).

Academic/research works also consider the inherent complexity of deriving concrete tools from theoretical quality frameworks for models and languages, given the high level of abstraction involved in them. In contrast, industrial works do not report specific quality issues related to the academic/research categories. Therefore, in answer to RQ4, the above evidence demonstrates a very significant difference between industrial and academic/research scenarios in their perceptions of, and efforts regarding, quality in modeling languages and models. This gap between the industrial and academic communities calls for a method that resolves the problems in industry that are not covered by current methods.

In both the academic/research and industrial contexts, subjectivity and the particularities of the application scenarios play an important role in the derivation of quality issues in model-driven initiatives. Figure 7 shows the main intention of the analyzed works, depending on whether each work was written for academic/research or industrial purposes. These intentions are personal opinions, studies, or approaches. The main sources for the industrial context are opinions and interactions on websites reported in the gray literature. This is valuable because these resources show real experiences of attempts to use model-driven initiatives in real software projects.

In the academic and research field, there is a strong trend (41.67% of reported works) toward specific model-driven initiatives promoted by practitioners. These initiatives include DSLs, model-driven approaches, operations on models (e.g., searching over models, establishing the level of detail of models), and specific considerations for model transformations (e.g., BPMN models to Petri nets). Although several modeling language quality issues were extracted from formal studies performed by researchers, it is important to note how quality issues also serve as excuses (or pivots) for promoting specific model-driven initiatives.
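As a quick check on the 41.67% figure, and assuming the percentage is taken over the 24 academic/research works identified in this review, it corresponds to ten works:

$$
\frac{10}{24} = 0.41\overline{6} \approx 41.67\%, \qquad 0.4167 \times 24 = 10.0008 \approx 10
$$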

In summary, current academic/research methods have not solved the quality issues for MDE reported in industry (Section 3.2). It seems that researchers have not yet addressed these problems satisfactorily. Therefore, we consider it necessary to list the open challenges and to define in greater depth the research roadmap proposed in Giraldo et al. (2015) in order to cover these issues comprehensively. Thus, in Section 4, we present a real scenario that depicts quality issues associated with Sections 3.2.2 and 3.2.4. Then, in Section 5, we present a set of challenges inferred from the evidence reported in both literature reviews above.

4 The sufficiency of current quality evaluation proposals

In this section, we present a scenario in which multiple modeling languages are applied. The case is a finished project previously developed by the authors: the implementation of an information system for institutional academic quality management. In this IS project, quality issues were empirically demonstrated, and no quality evaluation methods were used during the execution of this model-driven project. The full specification of the case is presented in Appendix A.

The objective of this scenario is to demonstrate that applying an existing quality method does not reveal all of the modeling quality issues of the project, even when the analysis is executed as a post-mortem task. For this empirical study, we chose the Physics of Notations (PoN) (Moody 2009), the most widely cited modeling language quality evaluation framework in the literature. We show that, despite its many useful features, this framework is insufficient to cover all the needs that arise when evaluating the quality of (sets of) modeling languages in MDE projects. The identification of these uncovered needs serves as additional input for the definition of a research roadmap in Section 5.

A post-mortem analysis was performed to evaluate the quality of the set of modeling languages employed in the project (Flowchart, UML, E/R, and architecture languages). Appendix A.1 presents the models obtained in the project. Each PoN principle was applied to these models to determine whether or not they meet it. Appendix A.2 presents the results of the quality assessment with the PoN framework.
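The principle-by-principle procedure described above amounts to filling in a checklist per modeling language. The sketch below records such a checklist over the nine PoN principles named in Moody (2009) and reports, per language, the principles judged as not met; it rejects incomplete checklists so that no principle is silently skipped. It is a minimal sketch: the judgments themselves remain manual, the function name `unmet_principles` is hypothetical, and the example judgments are invented for illustration and are not the results reported in Appendix A.2.

```python
from typing import Dict, List

# The nine principles of the Physics of Notations (Moody 2009).
PON_PRINCIPLES = (
    "Semiotic Clarity",
    "Perceptual Discriminability",
    "Semantic Transparency",
    "Complexity Management",
    "Cognitive Integration",
    "Visual Expressiveness",
    "Dual Coding",
    "Graphic Economy",
    "Cognitive Fit",
)


def unmet_principles(assessment: Dict[str, Dict[str, bool]]) -> Dict[str, List[str]]:
    """Return, per modeling language, the PoN principles judged as not met.

    `assessment` maps a language name to {principle: met?}, as recorded by an
    evaluator during a post-mortem review.
    """
    report = {}
    for language, judgments in assessment.items():
        missing = [p for p in PON_PRINCIPLES if p not in judgments]
        if missing:
            raise ValueError(f"{language}: principles not assessed: {missing}")
        report[language] = [p for p in PON_PRINCIPLES if not judgments[p]]
    return report


# Hypothetical judgments for illustration only (not the Appendix A.2 results).
example = {
    "Flowchart": {**{p: True for p in PON_PRINCIPLES}, "Dual Coding": False},
}
print(unmet_principles(example))  # {'Flowchart': ['Dual Coding']}
```

By construction, a checklist like this evaluates each notation in isolation: it has no place to record issues that span several languages, abstraction levels, or stakeholder viewpoints.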

Table 8 summarizes the quality issues detected in the proposed scenario. Although applying the PoN framework allows some quality issues in the modeling scenario to be detected, other critical quality issues were not detected by this method. PoN meets its goal of analyzing the concrete syntax of the modeling languages under evaluation. However, other quality issues arise from factors such as multiple modeling languages, different abstraction levels, several stakeholders, and viewpoints.

A single quality framework may be insufficient to comprehensively address all quality issues in MDE projects. Even though there are guidelines that support the application of individual quality methods while avoiding subjective criteria that influence the final results of a PoN analysis (e.g., da Silva Teixeira et al. 2016), there are no systematic guidelines for using quality methods for MDE in combination.

5 Open challenges in the evaluation of the quality of modeling languages in MDE contexts

Sections 2, 3, and 4 presented the problems and questions that remain regarding the evaluation of quality issues in the MDE field. The current state of model-driven applicability and use, together with the associated quality issues, creates several challenges that affect the adoption of the model-driven paradigm. It is not enough to evaluate quality from a prescriptive perspective, as is proposed in most of the quality categories identified in Section 2.4. Any method for evaluating quality in models and modeling languages must incorporate the realities of MDE itself.

These realities are not unfamiliar to the model-driven community. In the following, we have highlighted in bold the terms and sentences that represent them; they were taken from recognized sources that provide definitions of models. A quick overview of some classical model definitions reveals the presence of the subject as a fundamental element of the model itself. This holds for the unifying axiom of a model as a concept for understanding a subject or phenomenon in the form of a description, specification, or theory:

-

OMG MDA guide 1.0 (OMG 2003): A model of a system is a description or specification of that system and its environment for a certain purpose. A model is often presented as a combination of drawings and text. The text may be in a modeling language or in a natural language. A model is also a formal specification of the function, structure, and/or behavior of an application or system.

-