Abstract

We review novel data analysis techniques developed or adapted for the field of coronal seismology. We focus on methods from the last ten years that were developed for extreme ultraviolet (EUV) imaging observations of the solar corona, as well as for light curves from radio and X-ray. The review covers methods for the analysis of transverse and longitudinal waves; spectral analysis of oscillatory signals in time series; automated detection and processing of large data sets; empirical mode decomposition; motion magnification; and reliable detection, including the most common pitfalls causing artefacts and false detections. We also consider techniques for the detailed investigation of MHD waves and seismological inference of physical parameters of the coronal plasma, including restoration of the three-dimensional geometry of oscillating coronal loops, forward modelling and Bayesian parameter inference.

1 Introduction

During the recent decade, a number of new instruments for observing the solar corona were commissioned, including space-borne instruments such as the Atmospheric Imaging Assembly on-board the Solar Dynamics Observatory (SDO/AIA, Lemen et al. 2012), the TESIS experiment on the CORONAS-PHOTON spacecraft (Kuzin et al. 2011), and the Interface Region Imaging Spectrograph (IRIS, De Pontieu et al. 2014), as well as ground-based optical instruments such as the Coronal Multi-Channel Polarimeter (CoMP, Tomczyk et al. 2008). These additional observational capabilities triggered a new wave of research in the field of coronal seismology, the results of which are discussed in detail in other reviews of this series.

Among the current observational instruments, the most important is SDO/AIA, an imaging instrument that continuously observes the Sun and provides full-disk images in 7 channels in the EUV band with a cadence of 12 seconds, as well as in 2 channels in the UV range with a cadence of 24 seconds. The spatial resolution is around one arcsecond, with a pixel size of 0.6 arcseconds for all channels. In contrast to the previous-generation instrument TRACE, SDO/AIA has higher sensitivity and a wider field-of-view, and observes the Sun simultaneously in several filters that are sensitive to different temperatures. Higher sensitivity allows for precise measurements of the brightness variations associated with MHD waves. Thanks to the field-of-view covering the whole solar disk and the almost-continuous observational setup, every event is recorded and can be investigated afterwards. The simultaneous high-cadence observations at multiple wavelengths allow the differential emission measure (DEM) to be computed, and hence the density and temperature in coronal structures to be estimated.

Since seismological information comes from spatially- and temporally-resolved observations of the solar corona, a typical workflow of data analysis consists of three steps:

1. Dimension reduction in order to extract a time-series corresponding to some physical quantity (e.g. displacement or brightness of a coronal structure);

2. Spectral analysis of the time-series obtained in the previous step;

3. Inferring physical properties of the coronal plasma from parameters of the oscillatory signals obtained with spectral analysis.

Although imaging data obtained by SDO/AIA are essentially 3D, with two spatial and one temporal dimension, traditional analysis techniques such as Fast Fourier Transform (FFT) and Continuous Wavelet Transform (CWT), as well as a number of novel techniques reviewed here (see Sect. 2), are designed to process 1D time-series. Therefore, before applying these techniques, one needs to reduce the number of dimensions. A typical approach to dimension reduction is to put an artificial slit across or along the oscillating structure (depending upon the polarisation of the analysed oscillation) and produce a 2D distribution of the emission intensity in the time-distance space. Then, the dimensions are reduced further by extracting an oscillation profile (e.g. by measuring the structure’s position or its brightness at every instant of time). This approach is very common and is also used by some of the advanced data analysis techniques reviewed here (see Sects. 3.2 and 4). However, for some techniques the spatial information in imaging data is essential and is used without dimension reduction (see Sects. 3.1, 5, and 6).
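As an illustration of the slit-based dimension reduction described above, the following minimal Python sketch samples a data cube along a straight slit to build a time-distance map and then extracts a crude oscillation profile. The function names, the `(time, y, x)` array layout, and the brightest-pixel tracking are assumptions made for this example only; published pipelines typically use more careful position estimates (e.g. fitting a Gaussian across the loop).

```python
import numpy as np
from scipy.ndimage import map_coordinates


def time_distance_map(cube, start, end, n_points=100):
    """Sample a (time, y, x) data cube along a straight slit.

    start, end : (x, y) pixel coordinates of the slit ends (hypothetical inputs).
    Returns an array of shape (n_time, n_points).
    """
    x = np.linspace(start[0], end[0], n_points)
    y = np.linspace(start[1], end[1], n_points)
    coords = np.vstack([y, x])  # map_coordinates expects (row, column) order
    # Bilinear interpolation of every frame at the slit positions
    return np.array([map_coordinates(frame, coords, order=1) for frame in cube])


def track_position(td_map):
    """Crude oscillation profile: index of the brightest slit point in each frame."""
    return np.argmax(td_map, axis=1)
```

The resulting 1D profile is the kind of time-series that the spectral techniques of Sect. 2 take as input.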

The rapid development of coronal MHD seismology and the new observational capabilities have caused new challenges for the research field, which are listed below:

1. Oscillatory signals in observational time series need to be discriminated from the noise and background on which they are superimposed;

2. Oscillatory signals associated with MHD waves and oscillations are often non-stationary and only a few oscillation cycles are observed;

3. Modern instruments such as SDO/AIA produce a large amount of observational data that needs to be stored and processed;

4. Spatial and temporal resolution of the available data is limited;

5. MHD seismology problems are usually ill-posed and the available observational information needed to infer model parameters is often limited and incomplete.

Time-series analysis is one of the basic elements of typical data processing pipelines in the field of coronal seismology. For instance, a time-series can represent the coronal loop displacement or its brightness variation associated with an MHD wave. Such an oscillatory signal is always superimposed on noise and non-periodic background processes. Therefore, it should be discriminated from the noise and the background trend. The methods of detecting oscillatory signals in time-series are discussed in Sect. 2.

In contrast to classical helioseismology, coronal seismology often deals with the rapidly-evolving medium of the solar corona. The characteristic evolution time of coronal structures (e.g. coronal loops) is comparable to the typical periods of MHD oscillations. Thus, the key parameters of an oscillating structure, such as density and temperature, can change significantly during an oscillation event, causing pronounced amplitude and frequency modulation and hence non-stationarity of the observed oscillatory signals. The analysis of such signals with traditional spectral analysis, based on decomposition of the signal into fixed basis functions such as in Fourier or wavelet transforms, is less reliable and can lead to both false positive and false negative detections of oscillatory phenomena (Allian et al. 2019). Thus, there is a need for new data processing techniques targeted at the analysis of non-stationary signals modulated in both frequency and amplitude, including the adoption of approaches intensively used in other research fields. One such method, empirical mode decomposition (EMD), is discussed in Sect. 2.1.

The majority of coronal MHD seismology research is concentrated on case studies where one or several individual events are analysed in detail. However, there is a need for much broader statistical studies covering a large number of events and covering several years of observations. The gathering of such a statistical sample requires new techniques with automated processing of a large amount of data. Thus, we need data processing techniques with the capabilities of fast automated detection and analysis for imaging observations of MHD waves in the coronal plasma. One of the responses to this challenge is the Northumbria University Wave Tracking (NUWT) code aimed at automatic detection of transverse oscillations in time-distance plots. This technique is described in Sect. 3.2.

Despite there being a large volume of observational data from instruments observing the solar corona in different bands, including EUV and radio, the available observables are incomplete in terms of seismological inference of the physical parameters of coronal plasma. For instance, the determination of the coronal magnetic field from kink oscillations of coronal loops requires measurements of: the oscillation period of the fundamental kink mode, density, internal-to-external density ratio (density contrast), and the length of the oscillating coronal loop. From these four required parameters only the oscillation period can be measured directly, whereas the measurement of the other three parameters has some ambiguities because the observed EUV emission is optically thin. For instance, the estimation of the density contrast requires measurement of the background plasma density, which is complicated by the line-of-sight integration effects of the optically-thin EUV emission of coronal plasma. Such observational limitations are a source of uncertainty that should be quantified and propagated to the inference results. The solution of this problem is addressed by the Bayesian analysis discussed in Sect. 7. Likewise, the reconstruction of the 3D geometry of the oscillating coronal structure is crucial for the estimation of loop length and is a non-trivial problem. Solutions to this are discussed in Sect. 5.
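To make the dependence on the four observables explicit, the sketch below evaluates the magnetic field from the fundamental kink mode. It relies on assumptions that are not stated in the text above: a straight, thin (long-wavelength) magnetic cylinder in a low-beta plasma with the same field strength inside and outside, for which the kink speed is \(C_{\mathrm{k}} = 2L/P = C_{\mathrm{A}}\sqrt{2/(1+\rho _{\mathrm{e}}/\rho _{\mathrm{i}})}\). The function name and the SI-unit interface are hypothetical.

```python
import numpy as np

MU0 = 4e-7 * np.pi  # vacuum permeability in SI units (H/m)


def kink_magnetic_field(period, loop_length, rho_internal, density_contrast):
    """Magnetic field (tesla) from the fundamental kink mode (thin-tube, low-beta sketch).

    period            : oscillation period [s]
    loop_length       : loop length [m]
    rho_internal      : internal mass density [kg/m^3]
    density_contrast  : internal-to-external density ratio (dimensionless)
    """
    kink_speed = 2.0 * loop_length / period   # C_k = 2L/P for the fundamental mode
    ratio = 1.0 / density_contrast            # rho_e / rho_i
    # C_k = C_A * sqrt(2 / (1 + rho_e/rho_i)) with C_A = B / sqrt(mu0 * rho_i)
    return kink_speed * np.sqrt(MU0 * rho_internal * (1.0 + ratio) / 2.0)
```

In this approximation the inferred field scales linearly with the loop length and only weakly with the density contrast, which illustrates how uncertainties in the indirectly measured quantities propagate into the result.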

As mentioned, the analysis of observations of the coronal plasma in EUV and radio is complicated by line-of-sight effects. Thus, the interpretation of such observations requires accounting for these effects by modelling of the radiation transfer in the coronal plasma. This problem is addressed in Sect. 6 where forward modelling of EUV emission is discussed.

Another problem of available observations in the EUV band is the limited spatial resolution. This is especially important for the low-amplitude decayless kink oscillations of coronal loops recently discovered in SDO/AIA data (see Sect. 12 in Nakariakov et al. 2021). These oscillations are characterised by very small spatial displacements of the oscillating loops, usually smaller than the pixel size of the SDO/AIA instrument, and are therefore hard to detect and analyse. This challenge motivated the development of the motion magnification algorithm which is discussed in Sect. 3.1.

This review is organised as follows: Firstly, we discuss methods for detection and preliminary analysis of waves and oscillations in different kinds of data. Section 2 is devoted to the processing of time-series data, while the analysis of transverse and longitudinal motions in imaging data is reviewed in Sects. 3 and 4, respectively. We then consider advanced data analysis techniques used for the detailed investigation of MHD waves and seismological inference of physical parameters of coronal plasma. Methods for restoration of the 3D geometry of oscillating coronal loops are reviewed in Sect. 5, forward modelling of the instrumental response upon waves and oscillations is considered in Sect. 6, and inference of the physical parameters of coronal plasma within the Bayesian paradigm is discussed in Sect. 7.

This review does not cover all existing data processing methods used in solar physics. Instead, we focus only on the novel techniques developed in the last ten years that are connected with the subject of MHD seismology via EUV observations. Therefore, many methods beyond the scope of our review are not considered here. These are mainly methods developed more than ten years ago that have already become standard tools, such as wavelet analysis (Torrence and Compo 1998) and tools based on it (Sych and Nakariakov 2008). Since our focus is on EUV data, we do not cover new advances in forward modelling and analysis of radio observations (Nita et al. 2011; Kuznetsov et al. 2015). Also, we do not cover new promising techniques based on machine-learning, which can be used for forecasting solar flares and space weather events (see e.g. Bobra and Couvidat 2015; Camporeale 2019; Benvenuto et al. 2020), since these have not been applied in coronal seismology yet.

2 Detection and Analysis of Waves and Oscillations in Time-Series Data

The most popular traditional techniques for the analysis of oscillatory signals in time-series data are Fast Fourier transform (FFT), Continuous Wavelet Transform (CWT), and least squares fitting. All of these techniques are based on mode decomposition or some sort of matching of an analysed signal with a model or a set of models. Least-squares fitting does this directly by fitting the data with a predefined model depending on a set of free parameters. The model parameters are tuned until the best match (the lowest \(\chi ^{2}\)-criterion) between the model and data is found.
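A minimal sketch of such a least-squares fit is given below. The exponentially damped sinusoid on a constant background is a hypothetical model chosen only for illustration, and the helper names are not taken from any package discussed in this review; `scipy.optimize.curve_fit` performs the weighted minimisation.

```python
import numpy as np
from scipy.optimize import curve_fit


def damped_sine(t, amplitude, period, damping_time, phase, offset):
    """Hypothetical model: exponentially damped sinusoid on a constant background."""
    return (amplitude * np.exp(-t / damping_time)
            * np.sin(2.0 * np.pi * t / period + phase) + offset)


def fit_damped_sine(t, y, sigma, p0):
    """Weighted least-squares fit.

    sigma : array of 1-sigma measurement errors, same length as y
    p0    : initial guess for (amplitude, period, damping_time, phase, offset)
    Returns best-fitting parameters, their 1-sigma errors, and the reduced chi^2.
    """
    popt, pcov = curve_fit(damped_sine, t, y, p0=p0, sigma=sigma, absolute_sigma=True)
    residuals = (y - damped_sine(t, *popt)) / sigma
    chi2_reduced = np.sum(residuals**2) / (len(t) - len(popt))
    return popt, np.sqrt(np.diag(pcov)), chi2_reduced
```

The outcome depends on the initial guess `p0`, because the \(\chi ^{2}\) surface of an oscillatory model generally has several local minima in the period and phase.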

FFT and CWT are based on the decomposition of an oscillatory signal into a set of modes using a predefined basis. The Fast Fourier Transform decomposes the input signal into a set of harmonic functions which are not modulated and therefore have constant frequencies and amplitudes. The most valuable benefits from the FFT analysis are gained when the FFT basis coincides with a set of natural modes of the physical system which is investigated. A good example of such a system is acoustic waves in the solar interior. The natural modes of the Sun as an acoustic resonator are almost-pure, very narrow-band harmonic waves which can be observed for months and even years at the same frequencies. Therefore, FFT has become the main tool in the seismology of the solar interior (helioseismology) and has allowed a lot of valuable information about the internal structure of the Sun to be obtained.

In contrast to the solar interior, both the characteristic evolution time and the lifetime of the structures observed in the solar corona are several tens of minutes or even less, which is comparable to typical periods of MHD oscillations observed in the corona. Moreover, the number of observed natural modes (harmonics) of coronal oscillations is always very limited. Usually, only the fundamental mode and 1–2 of its overtones can be detected. Thus, the oscillatory processes in the solar corona are expected to be rather short-lived and to exhibit a very limited number of harmonics (2–3) modulated in both frequency and amplitude due to fast evolution of oscillating plasma structures, even if their natural modes are pure sinusoids.

Since the classical FFT analysis does not have temporal resolution, it is not capable of resolving different physical modes having similar frequencies but separated in the time domain. Since the coronal oscillations are observed as quasi-harmonic signals localised in both the time and frequency domain, a natural choice for their analysis is the CWT which provides a representation of the oscillating power of a 1D signal in a 2D time-frequency domain. Like FFT, CWT is a decomposition of the signal into a set of oscillatory modes, but CWT modes are rather short wave-trains as opposed to the plane sinusoidal waves used by FFT.
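The time-frequency representation provided by the CWT can be sketched with a few lines of NumPy. The function below is a minimal Morlet-wavelet implementation evaluated in the Fourier domain, using the normalisation and the scale-to-period conversion of Torrence and Compo (1998); the function name and its interface are assumptions for this example, and no significance testing or cone-of-influence masking is included.

```python
import numpy as np


def morlet_cwt_power(x, dt, periods, omega0=6.0):
    """Morlet wavelet power of x, shape (len(periods), len(x)), computed via FFT."""
    x = np.asarray(x, dtype=float)
    omega = 2.0 * np.pi * np.fft.fftfreq(x.size, dt)   # angular frequencies
    x_hat = np.fft.fft(x - x.mean())
    # Convert the requested Fourier periods to Morlet wavelet scales
    scales = np.asarray(periods) * (omega0 + np.sqrt(2.0 + omega0**2)) / (4.0 * np.pi)
    power = np.empty((scales.size, x.size))
    for i, s in enumerate(scales):
        # Fourier transform of the scaled Morlet wavelet (non-zero for omega > 0 only)
        psi_hat = (np.pi ** -0.25 * np.sqrt(2.0 * np.pi * s / dt)
                   * np.exp(-0.5 * (s * omega - omega0) ** 2) * (omega > 0))
        power[i] = np.abs(np.fft.ifft(x_hat * psi_hat)) ** 2
    return power
```

For a signal made of two wave trains that occupy different time intervals, each column of the returned array peaks at the period present at that instant, whereas a single Fourier spectrum of the whole series shows both peaks without any information on when each occurred.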

Despite the successful applications of FFT and CWT in coronal seismology, both techniques suffer from the same drawback: their predefined modes do not always match the physical modes of oscillating structures such as coronal loops. These modes, in turn, are known for only a few specific coronal magnetic plasma configurations (e.g. magnetic cylinders with a sharp boundary and plasma slabs with a smooth boundary determined by the symmetric Epstein profile). A possible first step to alleviate this problem is described by Rial et al. (2019), who considered how to determine the normal modes of a physical system by iterative application of time-dependent numerical simulations and the CEOF (Complex Empirical Orthogonal Function) analysis of simulation results. Provided the numerical models are realistic enough, this should produce normal mode characteristics comparable to observed loop oscillation events. Additionally, waves in coronal structures are subject to damping that introduces amplitude modulation, which is different from both the constant amplitude of the FFT modes and from the predefined symmetric shape of a wavelet. Moreover, loop parameters such as length or temperature evolve in time, causing a corresponding evolution of the resonant frequencies. These circumstances stimulated the development and adaptation of new time-series analysis techniques, such as EMD, which do not use a predefined set of basis functions. Instead, the oscillatory modes are obtained empirically from the signal itself, potentially allowing for the extraction of true physical modes. The detailed description of the EMD analysis is given in Sect. 2.1.

Below, we present individual tools for time-series analysis, including EMD (Sect. 2.1) and the software packages for automated time-series analysis AFINO (Sect. 2.2) and Stingray (Sect. 2.3). Following this, we discuss possible pitfalls of time-series processing that may cause detection of false periodicities even in pure noise (Sect. 2.4), and summarise the discussion using the example of searching for quasi-periodic pulsations (QPPs) in flaring lightcurves (Sect. 2.5).

2.1 Empirical Mode Decomposition for Analysis of Oscillatory Processes on the Sun and Stars

A novel promising method for the detection and analysis of quasi-periodic and non-stationary oscillatory patterns in astrophysical observations, in particular various oscillations in the solar and stellar atmospheres, is empirical mode decomposition (EMD, Huang et al. 1998). Originally designed for geophysical applications (see e.g. Huang and Wu 2008, for review), its potential for astrophysical application was revealed rather recently, thus demonstrating an excellent example of scientific knowledge transfer. In this section, we briefly outline the basic principles of EMD, overview examples of its application for processing of solar, stellar, and magnetospheric observations, describe current trends in the further development of the method, highlight its advantages in comparison with more traditional and well-elaborated Fourier and wavelet approaches, and summarise known shortcomings and perspectives for improvement.

Treating a signal of interest as an ensemble of various active time scales defined by the local extrema, EMD reduces the signal into a number of intrinsic mode functions (IMF) which are smooth quasi-periodic functions with approximately zero mean and having slowly varying amplitude and period (see examples in Fig. 1b). IMFs are extracted from the original signal through the so-called sifting process, iteratively searching for and extracting local time scales from the input time-series. More specifically, for each IMF the sifting procedure runs through:

- Constructing the upper and lower envelopes of the input signal via connecting, for example by cubic spline interpolation, all the local maxima and minima;

- Obtaining the mean of these two envelopes and subtracting it from the input signal;

- Repeating the two previous steps until a condition determining the end of the sifting process for each individual intrinsic mode is met. This stopping criterion could be implemented via evaluation of the standard deviation between two consecutive sifting iterations, known as a shift factor, or by limiting the maximum number of sifting iterations by some reasonable value;

- Once this stopping condition is met, the resultant intrinsic mode is subtracted from the input signal and the whole procedure repeats for the next intrinsic mode, until a non-oscillatory residue is reached.

As a result of this sifting process, each detected IMF should approximately satisfy the following conditions:

- The number of zero-crossings and extrema differ by no more than one;

- The local mean of the upper and lower envelopes is zero.

Thus, the basis of decomposition in the EMD analysis is derived locally from the data and is not prescribed a priori. The local nature of EMD makes it adaptive and therefore entirely different and independent from other, more conventional Fourier transform based techniques. Namely, intrinsic modes are not necessarily harmonic, can be highly non-stationary, and their number is small (\(\sim \log _{2}(N)\), where \(N\) is the length of a time-series) in comparison with the number of harmonics revealed by the discrete Fourier transform. For instance, EMD decomposes the time series shown in Fig. 1a into eight IMFs (five of them are shown in Fig. 1b) and a non-oscillatory residual or trend (dashed red line in Fig. 1c).
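The sifting procedure described above can be sketched in Python as follows. This is a simplified illustration rather than a reference implementation: the end points of the series are used as extra envelope knots (a crude boundary treatment assumed here), the shift factor is taken as a normalised squared difference between consecutive iterations (one possible choice), and all function and parameter names are hypothetical.

```python
import numpy as np
from scipy.interpolate import CubicSpline
from scipy.signal import argrelextrema


def _envelope_mean(h):
    """Mean of the cubic-spline envelopes, or None if h has too few extrema."""
    imax = argrelextrema(h, np.greater)[0]
    imin = argrelextrema(h, np.less)[0]
    if imax.size < 3 or imin.size < 3:
        return None
    t = np.arange(h.size)
    # Assumption: pin both envelopes to the first and last samples
    kmax = np.concatenate(([0], imax, [h.size - 1]))
    kmin = np.concatenate(([0], imin, [h.size - 1]))
    upper = CubicSpline(kmax, h[kmax])(t)
    lower = CubicSpline(kmin, h[kmin])(t)
    return 0.5 * (upper + lower)


def emd(x, max_modes=10, shift_factor=0.05, max_sift=50):
    """Return (list of intrinsic modes, residue) for a 1D signal x."""
    residue = np.asarray(x, dtype=float).copy()
    modes = []
    for _ in range(max_modes):
        if _envelope_mean(residue) is None:   # residue is no longer oscillatory
            break
        h = residue.copy()
        for _ in range(max_sift):
            mean = _envelope_mean(h)
            if mean is None:
                break
            h_new = h - mean                  # subtract the envelope mean
            sd = np.sum((h - h_new) ** 2) / np.sum(h ** 2)
            h = h_new
            if sd < shift_factor:             # stopping criterion
                break
        modes.append(h)
        residue = residue - h                 # continue with what is left
    return modes, residue
```

By construction, the sum of the returned modes and the residue reproduces the input signal exactly, which is the completeness property of the decomposition.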

Fig. 1 Application of the EMD and FFT methods to a synthetic signal, shown in black in panel (a) and consisting of the exponential trend (red dashed), a non-stationary oscillatory component with decaying amplitude and shortening period (blue), and coloured noise with the power-law index \(\alpha =1\) (see Eq. (1)). Panel (b) shows the first five EMD-revealed intrinsic mode functions of the original signal, including a statistically significant mode 5 which retains the non-stationary properties of the input oscillatory signal. The red dashed boxes illustrate the phenomenon of mode mixing in modes 2 and 3. Panel (c) shows the EMD-revealed significant mode 5 (blue) superimposed onto the EMD-revealed trend (red dashed) of the original signal. Panels (d) and (e) show the representation of the original signal with the EMD-revealed trend subtracted in the Fourier spectrum and in the EMD spectrum, with the latter defined by Eq. (1) as dependence of the EMD total modal energy on the mean modal period. The green, red, and yellow circles in the EMD spectrum correspond to the total energies and mean periods of each EMD-revealed mode, including one statistically significant mode and one mode associated with the aperiodic trend of the original signal. The blue solid lines in both panels (d) and (e) show best-fitting of the corresponding spectra by a power law function, see Eq. (1). The red solid lines in both panels show the 95% confidence levels, above which the oscillatory components are considered as statistically significant (see Kolotkov et al. 2016, for details).

On the other hand, the completeness of the obtained empirical modes, i.e. the possibility of recombining them to restore the initial signal, and their approximate orthogonality are discussed in detail in Sect. 6 of Huang et al. (1998). We also note here that in reality even two pure sinusoidal waves with different periods are not exactly orthogonal because of the finite data length. The detected empirical modes are typically characterised by increasing intrinsic time scales, with the last modes representing the longest-term variations of the input signal. Subtraction of the shortest-period modes from the original signal is equivalent to its low-pass filtering or smoothing, while the aperiodic residue (or a combination of several longest-period modes) represents a slowly-varying trend, thus allowing one to use EMD for self-consistent detrending and smoothing. The analysis of the instantaneous period and amplitude of each locally narrow-band intrinsic mode obtained with EMD can be performed, for example, with the Hilbert transform. A combination of EMD and the Hilbert transform for observational signal processing is known as the Hilbert–Huang transform approach.
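A minimal sketch of the Hilbert-transform step is shown below, using `scipy.signal.hilbert` to build the analytic signal of a single intrinsic mode. The function name is hypothetical, and the estimate is meaningful only for locally narrow-band modes; values near the ends of the series are usually affected by edge effects.

```python
import numpy as np
from scipy.signal import hilbert


def instantaneous_amplitude_period(mode, dt):
    """Instantaneous amplitude and period of a narrow-band mode sampled with step dt."""
    analytic = hilbert(np.asarray(mode, dtype=float))   # mode + i * H[mode]
    amplitude = np.abs(analytic)
    phase = np.unwrap(np.angle(analytic))
    frequency = np.gradient(phase, dt) / (2.0 * np.pi)  # cycles per unit time
    with np.errstate(divide="ignore"):
        period = 1.0 / frequency   # infinite or negative where the phase stalls or reverses
    return amplitude, period
```

Applied to each mode of a decomposition, this yields the time-dependent amplitude and period that characterise non-stationary oscillations.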

An interesting experiment aiming at comparative analysis of the efficiency of various state-of-the-art methods, including EMD and the Fourier and wavelet transform based techniques, for the detection and analysis of quasi-periodic pulsations (QPPs) in solar and stellar flares was performed in Broomhall et al. (2019). Due to their essentially irregular nature and relatively short lifetime (usually lasting for only a few oscillation cycles), the question of robust and reliable detection of QPPs remains open (see also Sect. 2.5 of this review and Zimovets et al. (2021) for more details on the phenomenon of QPPs in solar and stellar flares). The best practice revealed by Broomhall et al. (2019) is that QPPs demonstrating different observational properties should be analysed with different methods. For example, EMD was found to perform very efficiently for detecting QPPs with non-stationary periods, which were not detected by the other methods based on the assumption of harmonic basis functions or their wavelets. A combination of several independent techniques can also help to increase the reliability of detection. Figure 1 demonstrates an example of applying the EMD analysis to a synthetic signal imitating a QPP in the decay phase of a solar flare and consisting of a monotonic trend and a non-stationary oscillation with varying period and amplitude, and contaminated by coloured noise. EMD has successfully detected and recovered both the trend and the oscillatory signal as the only significant modes in the decomposition.

Thus, due to its advantages in processing irregular and short-lived oscillatory signals, EMD has been extensively used for detection and analysis of solar and stellar oscillations on various time scales and of different physical natures. For example, the above-mentioned phenomenon of QPPs in solar flares with periods from a few seconds to tens of minutes was detected with EMD in e.g. Kolotkov et al. (2015b), Nakariakov et al. (2019), Kashapova et al. (2020), Kupriyanova et al. (2020), including QPPs in the most powerful solar flare of cycle 24 (Kolotkov et al. 2018b). Likewise, EMD was successfully employed for studying QPPs in stellar flares (e.g. Doyle et al. 2018; Jackman et al. 2019) and for establishing the analogy between solar and stellar flares via QPPs (e.g. Cho et al. 2016). The use of EMD has also allowed for an advancement of our understanding of MHD waves and oscillations in solar coronal loops (Terradas et al. 2004) and at chromospheric and transition region heights (Narang et al. 2019) with typical periods of a few minutes, and for identification of quasi-periodic oscillatory modes in long-lived solar facular regions with periods ranging from several minutes to a few hours (Kolotkov et al. 2017; Strekalova et al. 2018). Oscillatory variabilities in the longer-term solar proxies with periods from about a month up to the entire solar cycle were found with EMD in 10.7 cm solar radio flux and sunspot records (Zolotova and Ponyavin 2007; Mei et al. 2018), coronal Fe XIV emission (Deng et al. 2015), flare activity index and occurrence rate of coronal mass ejections (Deng et al. 2019; Gao et al. 2012), total and surface solar irradiance (Li et al. 2012; Lee et al. 2015; Bengulescu et al. 2018), helioseismic frequency shift (Kolotkov et al. 2015a), spatio-temporal dynamics of the solar magnetic field (Vecchio et al. 2012) and in the Sun-as-a-star observations of the solar mean magnetic field (Xiang and Qu 2016), solar radius data (Qu et al. 2015), and also in direct numerical simulations of convection-driven dynamos (Käpylä et al. 2016).

Another important practical application of EMD draws on its ability to self-consistently detect aperiodic or low-frequency trends in observational time-series. For example, Nakariakov et al. (2010) obtained solar flare trends with EMD which allowed for a simultaneous detection of highly anharmonic QPPs of a symmetric triangular shape in the microwave and hard X-ray flare fluxes. Likewise, Hnat et al. (2016) used EMD to remove nonharmonic trends associated with short large amplitude magnetic field structures (SLAMS) in the in-situ measurements of the magnetic field in the terrestrial quasi-parallel foreshock by the multi-spacecraft Cluster mission. This allowed for the first direct observational detection of nonlinear wave trains on scales smaller than those of SLAMS and numerical modelling of them in terms of the derivative nonlinear Schrödinger equation. Thus, the application of EMD could provide an additional method for detrending in the areas of solar and heliospheric physics for which the presence of low-frequency background variations in observational time-series is crucially important (see e.g. the problem of a long-term trend in the total solar irradiance measurements, Fröhlich 2009).

Similarly to the conventional Fourier-based decomposition methods in which the presence of noise in the analysed signal leads to the appearance of spurious peaks in the power spectrum, whose significance can be assessed through the application of well elaborated and robust statistical techniques (see Sect. 2.5 of this review and also Vaughan 2005; Pugh et al. 2017a), not all the modes revealed by EMD necessarily represent statistically-significant oscillatory processes. In other words, the significance of the EMD-revealed intrinsic modes must be tested in comparison with the background noise in a similar manner to the corresponding tests in the Fourier and wavelet methods. For example, properties of white noise in the EMD analysis were studied in Wu and Huang (2004). However, the solar and heliospheric observations are often contaminated by a combination of white and coloured noise (e.g. Inglis et al. 2015; Ireland et al. 2015), with white/coloured noise dominating at higher-/lower-frequency parts of the spectrum, respectively. These noisy components could manifest different physical processes of natural or instrumental origin. For example, coloured, i.e. frequency-dependent, noise may be associated with spontaneous regimes of small-scale magnetic reconnection, for which the characteristic distribution of the released energy is found to obey a power-law dependence (e.g. Bárta et al. 2011).

This urgent need for incorporating statistics of coloured noise in the EMD analysis was recently addressed by Kolotkov et al. (2016) through the development of a method for assessing the statistical significance of the intrinsic EMD modes in comparison with a power-law distributed background. Namely, the fact that EMD operates as an approximate dyadic filter bank (Flandrin et al. 2004), i.e. the frequency coverage in each intrinsic mode function decreases approximately by a factor of 2 with the mode number so that the higher-frequency modes occupy a broader range of frequencies, allowed for re-writing the distribution of the Fourier spectral power \(S\) over frequencies \(f\) for a power-law distributed noise, \(S\propto 1/f^{\alpha }\) with typical \(\alpha \gtrsim 0\), in terms of the total energy of each EMD mode \(E_{\mathrm{m}} = \sum _{i} x_{i}^{2}\) and dominant modal period \(P_{\mathrm{m}}\) as

\[ E_{\mathrm{m}} P_{\mathrm{m}}^{1-\alpha } = \mathrm{const}. \tag{1} \]

Equation (1) can be referred to as the mean EMD power spectrum of a power-law distributed noise. The particular forms of Eq. (1) for white, pink (flicker), and red noises can be obtained by substituting \(\alpha =0\), \(\alpha =1\), and \(\alpha =2\) into it, respectively. A similar relationship was obtained by Franzke (2009) in the application to oscillations in climatological data, but for the background noise modelled as an autoregressive process of the first order.
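For a dyadic filter bank the bandwidth of each mode is proportional to its central frequency, so for \(S\propto 1/f^{\alpha }\) the modal energy is expected to scale as \(E_{\mathrm{m}}\propto P_{\mathrm{m}}^{\alpha -1}\). The sketch below computes the modal energy and a dominant period for each mode and estimates \(\alpha \) from a straight-line fit in log-log space. Taking the dominant period from the peak of the Fourier spectrum is an assumption of this example (other definitions are possible), and the function names are hypothetical.

```python
import numpy as np


def modal_energy_and_period(mode, dt):
    """Total energy sum(x_i^2) and dominant period (Fourier peak) of one mode."""
    mode = np.asarray(mode, dtype=float)
    energy = np.sum(mode ** 2)
    power = np.abs(np.fft.rfft(mode - mode.mean())) ** 2
    freqs = np.fft.rfftfreq(mode.size, dt)
    k = np.argmax(power[1:]) + 1          # skip the zero-frequency bin
    return energy, 1.0 / freqs[k]


def estimate_alpha(energies, periods):
    """Power-law index from log E_m = (alpha - 1) * log P_m + const."""
    slope, _ = np.polyfit(np.log(periods), np.log(energies), 1)
    return slope + 1.0
```

In practice the fit should exclude modes that are suspected to carry a signal or a trend, since these bias the estimated noise index.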

An additional fact about EMD is that the instantaneous amplitudes of intrinsic mode functions obtained from pure noise samples are normally distributed, as was demonstrated for the models of white (Wu and Huang 2004) and coloured (Kolotkov et al. 2016) noise. Combined with the definition of the total energy of each EMD mode as a sum of all instantaneous amplitudes squared, this allowed Kolotkov et al. (2016) to show that the total EMD modal energy of coloured noise obeys a chi-squared distribution with the mean value determined by Eq. (1). The number of degrees of freedom (DoF) in this distribution is greater than 2, which ensures the existence of both upper and lower confidence levels, and was shown to depend on the modal oscillation period. In the method of Kolotkov et al. (2016) this dependence was established heuristically, i.e. from numerical experiments. Thus, all the EMD modes with total energies situated outside the confidence interval of this distribution possess properties statistically different to noise and hence can be considered as statistically significant. Revealing the properties of white and coloured noise in the EMD analysis and developing the noise testing scheme radically improves the ability of EMD to detect quasi-oscillatory signals in observations. Figure 1 demonstrates detection of a statistically significant non-stationary oscillation in a synthetic time-series by EMD and Fourier analysis. An example of real data processing is shown in Fig. 2 where, out of ten oscillatory modes detected with EMD in the time-series of the average magnetic field in a solar facula, only a single mode was found to possess statistically-significant properties, representing a non-stationary oscillation with the period increasing from about 80 min to 230 min.
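The significance test outlined above can be sketched with `scipy.stats.chi2`. The example assumes that the modal energy of noise, normalised by its mean and multiplied by the number of DoF, follows a chi-squared distribution with that number of DoF. Both the mean energy (from the fitted noise spectrum) and the DoF (which in the original method depends on the modal period and is calibrated numerically) are treated here as user-supplied inputs; the function names are hypothetical.

```python
import numpy as np
from scipy.stats import chi2


def energy_confidence_bounds(mean_energy, dof, confidence=0.95):
    """Two-sided bounds for the modal energy of noise with the given mean and DoF."""
    tail = 0.5 * (1.0 - confidence)
    lower = mean_energy * chi2.ppf(tail, dof) / dof
    upper = mean_energy * chi2.ppf(1.0 - tail, dof) / dof
    return lower, upper


def is_significant(energy, mean_energy, dof, confidence=0.95):
    """True where the modal energy lies outside the noise confidence interval."""
    lower, upper = energy_confidence_bounds(mean_energy, dof, confidence)
    return (energy < lower) | (energy > upper)
```

Because the DoF differ from mode to mode, the bounds have to be evaluated separately for each modal period, which produces the curved confidence levels seen in EMD spectra.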

An example of the EMD analysis of oscillatory variations of the average magnetic field (the black line in the right-hand panel) in a solar facula (adapted from Kolotkov et al. 2017). The left-hand panel shows the EMD energy spectrum, that is, the dependence of the total energy of each intrinsic mode revealed by EMD (the black, green, and red circles) on the corresponding modal dominant period. The blue and pink solid lines show the 99% confidence intervals for white noise (with the spectral power-law index \(\alpha =0\)) and pink noise (\(\alpha =1\)), respectively, obtained with the method developed by Kolotkov et al. (2016). The dashed blue and pink lines show best fits of the corresponding EMD modal energies by Eq. (1). The red circle indicates the EMD mode with statistically significant properties, which is shown in the right-hand panel in red. The yellow circle indicates a slowly varying trend that was subtracted from the original signal.

Despite being developed just a few decades ago, EMD has already been unequivocally proven as an efficient and powerful method for revealing non-stationary and irregular quasi-periodic patterns in observational time-series of various natures. The test of statistical significance developed by Kolotkov et al. (2016) enabled a more rigorous and thus meaningful use of EMD for solar, stellar, and magnetospheric observations. This opens up clear perspectives for exploiting the potential of EMD for processing the upcoming data from recently commissioned and future observational instruments, such as, for example, Parker Solar Probe and Solar Orbiter (De Pablos et al. 2021). On the other hand, the full-scale application of the method also requires understanding of the realm of its capability and pitfalls. Below, we summarise a few known shortcomings of EMD and provide suggestions for further potential improvements:

- Sometimes, for example due to poor time resolution, other peculiarities of the analysed signal, or improper settings of the decomposition algorithm, EMD is unable to properly distinguish between local time scales, causing the so-called mode-mixing (also known as mode-leakage) problem. It manifests either as the appearance of widely disparate time scales in a single intrinsic mode or as a signal of a similar time scale residing in different intrinsic modes. An example of apparent mode-mixing can be seen in Fig. 1b, where it is marked by red dashed rectangles. In particular, the mode-mixing problem can adversely affect the dyadic nature of EMD and thus undermine the applicability of Eq. (1) and compromise the entire analysis. To suppress such inter-mode leakage, the noise-assisted ensemble empirical mode decomposition (EEMD) was proposed (see Huang and Wu 2008, for details). Although EEMD is indeed able to cope with the mode-mixing problem, its application is time-consuming and would benefit from parallelisation.

- The assessment of statistical significance by the method of Kolotkov et al. (2016) fails if the EMD-revealed intrinsic mode contains an insufficient number of oscillation cycles (see e.g. Broomhall et al. 2019). In this case, such low-frequency modes are better attributed to a slowly-varying trend of the original signal and excluded from the list of potential oscillations.

- The choice of the internal EMD parameter known as the shift factor, which determines the criterion for stopping the sifting process for each individual intrinsic mode and thus controls the sensitivity of the decomposition, is another currently unresolved issue of EMD. For example, if the shift factor is chosen too low, which corresponds to a large, exponentially growing number of sifting iterations, then the decomposition reduces to the Fourier transform in the limit of infinite run time (Wang and Jiang 2010). In contrast, if the shift factor is too high, the sifting process is stopped too early and the IMFs appear undersifted, i.e. highly obscured by noise. Hence, a procedure for optimising the choice of the shift factor is still needed.

2.2 AFINO Code

Most studies that search for oscillatory phenomena in solar data make use of popular techniques such as wavelets or periodograms. The Automated Flare Inference of Oscillations (AFINO) method (Inglis et al. 2015, 2016; Murphy et al. 2018; Hayes et al. 2020) takes an alternative approach to this problem, instead searching for oscillations in time-series data by fitting and comparing various models to the Fourier power spectral density of the signal. AFINO is an open source tool written in Python and is publicly available.

The approach taken by AFINO is motivated by several factors. First, many time-series in nature, and in particular in astrophysics, exhibit power laws in the Fourier power spectrum (McHardy et al. 2006; Cenko et al. 2010; Gruber et al. 2011; Huppenkothen et al. 2013), a feature that must be carefully accounted for when using techniques such as a periodogram or wavelet (e.g. Vaughan 2005, 2010). Secondly, while it is common to prepare signals for analysis by performing running mean subtractions or pre-filtering of the initial time-series, such practices require arbitrary choices that can lead to inaccurate results and also cause a reproducibility problem (see also Sect. 2.4 for a more detailed discussion of these issues). Finally, the AFINO algorithm was designed to enable quick analysis of a large number of time-series for statistical studies, something difficult to achieve with methods that require manual fine-tuning. Given these motivations, the AFINO method instead analyses the full Fourier spectrum of an input signal, avoiding manual intervention and providing fast, reproducible results.

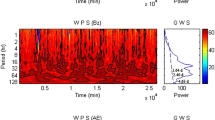

The AFINO methodology is described in detail in Inglis et al. (2015, 2016). First, an evenly-sampled input time series is acquired, normalised and multiplied by a window function. The choice of window function is not critical, and a number of popular functions may be used (for example the Hanning window, or the Blackman-Harris window). Second, AFINO fits a set of candidate models to the Fourier power spectrum of the modified signal using the maximum likelihood method, using tools available in SciPy. In the Inglis et al. (2015, 2016) formulation, one of these models includes a Gaussian enhancement in frequency space, representing a quasi-stationary oscillation, while other ‘null hypothesis’ models include variations on a power-law in frequency space. Third, these models are compared using the Bayesian Information Criterion (BIC) (Schwarz 1978). The BIC is defined as \(BIC = -2 \ln (L) + k \ln (n)\), where \(L\) is the maximum likelihood, \(n\) is the number of spectral data points and \(k\) is the number of free model parameters. Thus, BIC can be calculated directly from the maximum likelihood. The BIC includes a penalty term associated with adding extra free parameters, the idea being that a simpler model will be preferred unless there is substantial evidence in favour of a more complex alternative. The last major step is to perform an additional check to test whether any of the applied models were acceptable using a \(\chi ^{2}\)-like parameter derived by Nita et al. (2014). This is done since the BIC comparison itself does not make an explicit determination of whether any of the chosen models are an acceptable fit. Once this is complete, the evidence for an oscillatory signature in the data can be assessed based on the BIC values. An illustrative example of the analysis of a synthetic signal mimicking a QPP in a stellar flare is presented in Fig. 3. The AFINO code has successfully detected a predefined oscillatory component and correctly identified its period. 
The presence of the oscillation was assessed based on the BIC values computed for two competing models: an oscillatory signal superimposed on power-law noise, and power-law noise alone.
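The model comparison step can be illustrated with a short sketch. It is not the AFINO code: the function names, the parametrisation of the two models (a power law plus a constant, with and without a Gaussian bump in log-frequency), the Nelder-Mead optimiser and the synthetic test signal are all assumptions made for illustration. The fit maximises the Whittle likelihood, in which each periodogram ordinate is exponentially distributed about the model, and the BIC follows directly from the minimised negative log-likelihood.

```python
import numpy as np
from scipy.optimize import minimize


def power_law(f, p):
    # p = (ln amplitude, spectral index, ln constant level)
    return np.exp(p[0]) * f ** (-p[1]) + np.exp(p[2])


def power_law_with_bump(f, p):
    # Adds a Gaussian enhancement in ln(f): p[3:] = (ln height, ln f0, ln width)
    bump = np.exp(p[3] - 0.5 * ((np.log(f) - p[4]) / np.exp(p[5])) ** 2)
    return power_law(f, p[:3]) + bump


def neg_log_likelihood(p, model, f, power):
    # Whittle likelihood for exponentially distributed periodogram ordinates
    with np.errstate(all="ignore"):
        s = model(f, p)
        nll = np.sum(np.log(s) + power / s)
    return nll if np.isfinite(nll) else np.inf


def fit_and_bic(model, p0, f, power):
    res = minimize(neg_log_likelihood, p0, args=(model, f, power),
                   method="Nelder-Mead")
    # BIC = -2 ln(L) + k ln(n), with res.fun = -ln(L)
    return 2.0 * res.fun + len(p0) * np.log(len(f)), res.x


# Synthetic test signal (assumption): 16-sample period in white noise
rng = np.random.default_rng(0)
n = 512
t = np.arange(n)
signal = np.sin(2 * np.pi * t / 16.0) + rng.standard_normal(n)
f = np.fft.rfftfreq(n)[1:]
power = 2.0 * np.abs(np.fft.rfft(signal)[1:]) ** 2 / n

level = np.log(np.median(power))
k_peak = np.argmax(power)
p0_plain = [level, 0.0, level]
p0_bump = p0_plain + [np.log(power[k_peak]), np.log(f[k_peak]), np.log(0.05)]

bic_plain, _ = fit_and_bic(power_law, p0_plain, f, power)
bic_bump, p_bump = fit_and_bic(power_law_with_bump, p0_bump, f, power)
delta_bic = bic_plain - bic_bump  # positive values favour the bump model
```

A lower BIC indicates the preferred model; the acceptability of either fit would still need a separate goodness-of-fit check, as noted above.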

The AFINO technique applied to a synthetic flare signal with a known oscillation period of 13 s. The flare input time series is shown in the left panel. The right panel shows the Fourier Power Spectral Density (PSD) in blue, with the best-fit model superimposed in green. Using the Bayesian Information Criterion (BIC) comparison, AFINO finds that the model ‘M1’ that contains a Gaussian enhancement is strongly preferred over the other candidate models, a single power law (model M0) and a broken power law (model M2). The oscillation period is correctly identified with a frequency of 0.074 Hz, corresponding to a period of 13.5 s. Adapted from Broomhall et al. (2019), Fig. 5.

One caveat of this approach is that the choice of models is empirical. In particular, a power law with a Gaussian enhancement is used to represent a discrete oscillation signal, while in reality this may be an incomplete or inaccurate model of the signal. Another limitation of this method is that many oscillatory signals on the Sun (and in other phenomena) evolve significantly over time. Such signals are likely to be overlooked by this approach that searches for localised frequency enhancements in the Fourier power spectrum of the entire signal. The performance of many QPP detection methods, including AFINO, was recently assessed by Broomhall et al. (2019) using test data sets, thus revealing the positives and negatives of this technique. On the positive side, AFINO was found to produce few false positive results, i.e. detections claimed by the method were genuinely present in the data. However, the downside was that this approach produced more false negatives than other methods, meaning a number of real signals were missed.

2.3 Stingray Code

Another recently released Python package that provides time-series analysis tools is Stingray (Huppenkothen et al. 2019). Stingray is a general-purpose tool that provides a range of Fourier analysis techniques used in astrophysical spectral-timing analysis. The package was written in Python, and is fully open source, version controlled and publicly available. The package has already been used in a variety of recent studies of astrophysical phenomena (e.g. Brumback et al. 2018; Kennedy et al. 2018; Pike et al. 2019; Bachetti and Huppenkothen 2018).

Stingray was developed as a means to unify the distribution and use of time-series analysis tools in astronomy, which historically has been fractured. It aims to provide the core tools needed to analyse time-series data in a wide variety of contexts. Stingray provides a framework for data manipulation and analysis in the form of a LightCurve class, which can be constructed from arbitrary time-series data. Via this object, many basic tasks can be easily performed, including addition and subtraction, time-shifting, re-binning, truncation and concatenation of time-series, as well as functionality to save data in certain formats. Stingray also provides an Events class, which is used for single-event measurements such as individual photon counts.

From these objects, Stingray can generate cross spectra and power spectra for the desired time-series, and calculate confidence limits. Another tool generated by Stingray of particular interest in the study of solar pulsations and oscillations is the dynamic Fourier power spectrum. This spectrum can be used to study the variation in Fourier power of a signal over time, similar to a wavelet spectrum. In the dynamic power spectrum, a time-series is divided into a number of segments, and the Fourier power spectrum calculated for each one. These individual power spectra are then plotted sequentially in vertical slices to visualise the change in power over time, a common need when studying non-stationary signals.
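The construction of a dynamic power spectrum is simple enough to sketch directly. The snippet below uses plain NumPy rather than the Stingray interface, so the function name and its arguments are hypothetical, and the two-tone test signal is only an illustration of a non-stationary oscillation.

```python
import numpy as np


def dynamic_power_spectrum(signal, dt, segment_size):
    """Split `signal` into consecutive segments of `segment_size` samples and
    return (frequencies, segment mid-times, power[n_freq, n_segments])."""
    signal = np.asarray(signal, dtype=float)
    n_seg = signal.size // segment_size
    segments = signal[: n_seg * segment_size].reshape(n_seg, segment_size)
    # Remove the mean of each segment so the zero-frequency bin carries no power
    segments = segments - segments.mean(axis=1, keepdims=True)
    power = np.abs(np.fft.rfft(segments, axis=1)) ** 2
    freqs = np.fft.rfftfreq(segment_size, d=dt)
    times = (np.arange(n_seg) + 0.5) * segment_size * dt
    # Drop the zero-frequency bin; one column (vertical slice) per segment
    return freqs[1:], times, power[:, 1:].T


# Test signal: the period doubles from 8 to 16 samples halfway through
t = np.arange(1024)
signal = np.where(t < 512, np.sin(2 * np.pi * t / 8), np.sin(2 * np.pi * t / 16))
freqs, times, dps = dynamic_power_spectrum(signal, dt=1.0, segment_size=128)
first_peak = freqs[np.argmax(dps[:, 0])]
last_peak = freqs[np.argmax(dps[:, -1])]
```

The segment length sets the trade-off: longer segments give finer frequency resolution but coarser time resolution.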

In addition to these core tools, Stingray includes additional features that allow for the modelling of time-series in a variety of ways. It can be used to fit models to the Fourier power spectra of signals, a useful feature in the search for oscillations. A variety of comparison metrics are implemented, including the likelihood ratio test (LRT), the Akaike Information Criterion (AIC) (Akaike 1974), and the Bayesian Information Criterion (BIC) (Schwarz 1978). For this model fitting, Stingray implements a full Bayesian Markov Chain Monte Carlo (MCMC) framework, allowing the full posterior probability distributions to be estimated (see Fig. 4).

An example of Stingray modelling tools being used to fit the Fourier power spectrum of the X-ray binary GX 339-4 and reveal a QPO signature. Left: the black data points show the averaged power spectrum of GX 339-4 from 15 different time segments. The red line shows the best-fit model, consisting of a power law plus a Lorentzian and a constant. Right: the marginal posterior distributions of the six model parameters. Reproduced from Huppenkothen et al. (2019), Fig. 4.

Stingray also includes a simulation package, which enables the creation of simulated time-series from an input power spectrum. This feature is particularly useful for validation and testing of analysis methods, such as that performed by Broomhall et al. (2019). For example, using Stingray a user can quickly generate a sample time-series from a white-noise background spectrum and a red-noise background spectrum for comparison, and test the performance of their chosen analysis technique in both scenarios.

2.4 Pitfalls of Time-Series Pre-Processing

In order to reduce noise, remove a trend, or stabilise the signal, researchers often perform preliminary processing of a time-series before applying actual spectral analysis. Such preprocessing may include the following steps:

- Trend removal;

- Noise suppression;

- Various kinds of normalisation.

All these operations may introduce new time scales to the time-series and, thus, may cause false positive detections of oscillatory components. For instance, a common detrending procedure involves subtracting from the signal its version smoothed by a boxcar filter. Here, the width of the boxcar filter defines a new time scale, which can appear in the result of the subsequent spectral analysis as a false periodicity.

To illustrate these caveats, we present a practical exercise demonstrating how smoothing and normalising the observational time-series modifies its Fourier power spectrum and can lead to the erroneous detection of oscillations in a pure noisy input signal.

Figure 5 shows a sample of synthetic red noise with the Fourier spectral power \(S\) distributed over frequencies \(f\) as \(S\propto f^{-2}\), together with its Fourier power spectrum and the corresponding 99% confidence interval estimated following a procedure analogous to that described in Sect. 2.5 and references therein. The total length of the signal is 256 (in some arbitrary time units) and the amplitude is also set arbitrarily. As expected, none of the Fourier peaks obtained from the pure red noise sample is seen to be statistically significant.

Top and middle rows: a synthetic red noise sample represented in the time and Fourier domains. The solid blue (dashed red) line in the time domain indicates the original signal smoothed over 10 (40) time units. The solid blue and red lines in the Fourier domain show the best-fit of the Fourier spectrum by a power-law model and the 99% confidence level, respectively. The bottom row shows the red noise sample smoothed and normalised to its trend, also in the time and Fourier domains

We now smooth the original signal over 10 time units using a basic boxcar smoothing technique. This is effectively equivalent to suppressing higher-frequency fluctuations in the original signal, which is evident from the Fourier power spectrum of the smoothed signal (see Fig. 5). Indeed, the Fourier spectrum of the smoothed red noise changes its shape in the shorter-period (higher-frequency) part, where the oscillation energy is filtered out by smoothing. As before, all the Fourier peaks remain well below the noise confidence level. We also apply the same smoothing method to obtain a slowly-varying trend of the original signal. In this case, we smooth it over 40 time units.

As the next step, we normalise the smoothed red noise sample to its slowly varying trend. This causes the resulting signal to oscillate about unity with a relatively stable period of about 30 time units and with no signatures of decay. In the Fourier domain, after the application of this data processing, the periods shorter than 10 time units and those longer than 40 time units are severely suppressed, which leads to the artificial enhancement of the group of Fourier periods at around 30 time units, well above the 99% level of statistical significance. Thus, the resulting spectrum behaves markedly differently from that of the original unprocessed pure red noise, and hence it cannot be used for detecting any potential oscillations.
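The exercise above is easy to reproduce. The sketch below is not the code used to produce Fig. 5: the helper names, the random seed, the constant offset that keeps the trend positive, and the periodic treatment of the series edges are assumptions made to keep the example short. It synthesises red noise in the Fourier domain, smooths it over 10 time units, obtains a trend by smoothing over 40 time units, and compares the periodograms before and after normalisation to the trend.

```python
import numpy as np


def periodic_boxcar(x, width):
    """Running mean over `width` samples, treating the series as periodic
    (a simplification that avoids edge effects)."""
    left = width // 2
    padded = np.pad(x, (left, width - 1 - left), mode="wrap")
    return np.convolve(padded, np.ones(width) / width, mode="valid")


def periodogram(x):
    """Periods and Fourier power of the mean-subtracted series (unit time step)."""
    power = np.abs(np.fft.rfft(x - np.mean(x))) ** 2
    return 1.0 / np.fft.rfftfreq(len(x))[1:], power[1:]


# Red noise (S proportional to f^-2) synthesised in the Fourier domain
rng = np.random.default_rng(1)
n = 256
freqs = np.fft.rfftfreq(n)
coeffs = np.zeros(freqs.size, dtype=complex)
coeffs[1:] = (rng.standard_normal(n // 2)
              + 1j * rng.standard_normal(n // 2)) / freqs[1:]
red = np.fft.irfft(coeffs, n=n)
red = 10.0 + red / red.std()  # arbitrary amplitude, positive offset

smoothed = periodic_boxcar(red, 10)   # suppresses periods shorter than ~10
trend = periodic_boxcar(red, 40)      # slowly varying trend
normalised = smoothed / trend         # the problematic step

periods, p_raw = periodogram(red)
_, p_smooth = periodogram(smoothed)
_, p_norm = periodogram(normalised)

in_band = (periods > 10) & (periods < 128)
band_fraction = p_norm[in_band].sum() / p_norm.sum()
peak_period = periods[np.argmax(p_norm)]
```

Plotting `p_raw`, `p_smooth` and `p_norm` against `periods` on logarithmic axes shows how the processed spectrum departs from the original power law; the band in which the power concentrates is set by the two smoothing windows, not by the data.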

We stress that the appearance of such a nice and apparently significant quasi-periodic pattern in a pure synthetic power-law distributed noise sample should not be associated with any oscillatory physical process or characteristic time scale, and is caused by a combination of the two data processing tricks which are smoothing and subsequent normalisation to the trend. As a power-law distributed noise is a common feature of solar and heliospheric observations (Sects. 2.1, 2.2 and references therein), and, on the other hand, smoothing and various manipulations with the trend are rather common data processing approaches, through this quick example we illustrate that:

- It is fine to use smoothing procedures to get rid of high-frequency noise in the analysed observational signal. It may also be safe to use smoothing to obtain its long-term trend, provided that sufficient diligence is applied to the choice of the smoothing window;

- However, normalisation of such a smoothed signal to its trend introduces an element of nonlinearity, i.e. interaction of the signal with itself;

- As Fourier analysis strictly requires the oscillating system to be linear, the resulting signal no longer possesses the spectral properties of the original signal, which makes any claim of significance of oscillations in the normalised signal meaningless;

- On the other hand, such a normalisation to the trend may be safely used for the physical interpretation of quasi-periodic patterns whose statistical significance was assessed independently.

2.5 Detection of Quasi-Periodic Pulsations in Flaring Light-Curves

Quasi-Periodic Pulsations (QPPs) manifest as a pronounced oscillatory pattern in the electromagnetic radiation detected in solar and stellar flares. The oscillatory signals are detected over a range of periods and show period modulation, coupled with apparent amplitude modulation. The physical mechanism(s) underpinning QPPs in flares are currently undergoing intensive theoretical study (e.g., see reviews by McLaughlin et al. 2018; Kupriyanova et al. 2020; Zimovets et al. 2021). The specific properties of QPPs lead to challenges in their detection and analysis. The first is the low quality of QPPs, where the quality, or Q-factor, is defined as the number of full periods. A study of QPP signals observed in the same solar active region found that QPPs typically have only two to ten full periods (Nakariakov et al. 2019). The second is that the parameters of a QPP (period, amplitude) can vary significantly in time. This non-stationarity could be caused by multi-modality of the QPP, by the temporal evolution of the plasma parameters during the flare, or by both (Nakariakov et al. 2019; Kupriyanova et al. 2020). Given these challenges, different methods are used for the detection and analysis of QPPs in flare emission. Among them are standard methods utilising periodic basis functions (FFT and CWT) as well as fully empirical methods such as EMD, described in Sect. 2.1 (see Broomhall et al. 2019, for a comprehensive review). Each method has its own advantages and disadvantages, and the question arises as to which technique is most appropriate for detecting a quasi-periodic signal with a certain combination of observational properties.

As an example, let us consider the FFT method applied to the full flare light curve, or a time-series containing a flare, with a low-frequency trend, a QPP and different kinds of noise. Here, the Fourier spectrum takes the form of a power-law function. In the spectrum, the lowest-frequency component (trend) has the maximum spectral power while the highest-frequency white noise has the minimum spectral power. In addition, the noise component is distributed according to a power law, \(S \propto f^{-\alpha }\). In particular, a power-law index \(\alpha = 0\) corresponds to uncorrelated (or white) noise, \(\alpha = 1\) denotes correlated flicker (or pink) noise, and \(\alpha = 2\) means correlated red noise. Often, a combination of different \(\alpha \) values at the lower and higher Fourier frequencies can form a broken power-law spectrum (Inglis et al. 2015; Pugh et al. 2017b). The colour of the noise in the full time series is defined empirically by fitting its Fourier spectrum and estimating its power-law index, \(\alpha \). A spectral peak in the Fourier spectrum is considered significant if its power is higher than the noise level (or significance level), which is defined by performing the \(\chi ^{2}\) test (Vaughan 2005; Pugh et al. 2017a).
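A simplified version of this significance test can be sketched as follows. The function names are hypothetical, and the sketch rests on two stated assumptions: the periodogram ordinates divided by the true noise spectrum are exponentially distributed with unit mean (a \(\chi ^{2}\) law with two degrees of freedom, halved), and the uncertainty of the fitted power law is neglected, which the full procedures cited above do take into account. The additive Euler constant corrects the known bias of a least-squares fit to the logarithm of the periodogram.

```python
import numpy as np


def fit_power_law(freqs, power):
    """Least-squares fit of ln(power) = ln(c) - alpha * ln(f).

    Returns alpha and the bias-corrected noise model: for exponentially
    distributed ordinates E[ln I] = ln S - gamma, so gamma is added back.
    """
    slope, intercept = np.polyfit(np.log(freqs), np.log(power), 1)
    model = np.exp(intercept + np.euler_gamma) * freqs ** slope
    return -slope, model


def peak_threshold(n_freq, false_alarm=0.01):
    """Factor by which a peak must exceed the noise model so that the chance of
    any of `n_freq` independent noise ordinates doing so equals `false_alarm`."""
    per_bin = 1.0 - (1.0 - false_alarm) ** (1.0 / n_freq)
    return -np.log(per_bin)


def significant_peaks(freqs, power, false_alarm=0.01):
    """Boolean mask of periodogram ordinates above the significance level."""
    _, model = fit_power_law(freqs, power)
    return power > peak_threshold(len(freqs), false_alarm) * model
```

For 128 frequency bins and a 1% false alarm probability the threshold is roughly 9.5 times the noise model, which shows why modest peaks above a red-noise background are rarely significant.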

The FFT method of the full time-series is appropriate for analysing a high-quality QPP with a stable period or with slightly varying periods, and in these cases, there is a high probability of the QPP being detected. However, if the period of the QPP is varying significantly with time, the FFT method can give spurious results. For example, the spectral power of a QPP with a significantly-growing period will be distributed over an interval between the start and end values of the period, instead of the formation of a localised peak in the periodogram. Therefore, the significance of such a QPP will be underestimated, and these QPPs will be mistakenly sifted out.

In contrast, if we consider a short time-series containing a low-quality QPP, the number of data points in the Fourier spectrum will be small. This can lead to an incorrect estimation of the \(\alpha \) value and, consequently, to overestimation or underestimation of the significance of the QPP power. For example, assuming a white noise background spectrum when analysing a time-series that actually contains red noise can lead to a false detection, i.e. the lower frequencies appear to be significant. Therefore, a correct estimation of the statistical significance of the QPP is an obligatory task.

However, the presence of a low-frequency trend adds uncertainty to this task. The shape of the simplest flare consists of a fast rise and a subsequent gradual decay. An empirical template of the simplest white light flare on an M-type star fits the decay phase of the flare with a broken exponential function (Davenport et al. 2014). An analytical template of the solar flare in soft X-rays represents the trend as a combination of a Gaussian function at the rise phase and exponential relaxation at the decay phase (Gryciuk et al. 2017). A curious result is that the Fourier spectrum of the flare trend obtained by Gryciuk et al. (2017) has a slope similar to that of the Fourier spectrum of red noise (Nakariakov et al. 2019). Thus, it is difficult to determine whether the slope of the Fourier spectrum is caused by the flare trend or by background red noise. It is possible that the detrending procedure (the subtraction of the trend from the full time-series) could help to resolve this uncertainty.

Anyone who studies QPPs faces a conceptual problem: to detrend or not to detrend. On the one hand, when analysing the full time-series (including the trend) with the FFT method, a number of real QPPs turn out to be below the significance level. The presence of a trend affects the slope of the Fourier spectrum, resulting in an incorrect estimation of the noise parameters and, as a consequence, in an incorrect estimation of the significance level. This problem can be resolved by analysing not the full time-series, but its high-frequency component only. The high-frequency component can be obtained with direct methods of Fourier filtration (Inglis and Nakariakov 2009) or wavelet filtration (Dolla et al. 2012) or with an indirect method, i.e. the detrending approach. There are several methods for defining the trend. In the ideal case, the analytical form of the trend is known (e.g. the previously mentioned exponential decay phase). In this case, the parameters of the trend could be estimated, for example, with the least-squares technique. However, most flares have a more complicated shape which is difficult to fit with such an analytical function. Therefore, the trend could be defined with a smoothing procedure, such as smoothing with a running average or convolving the time-series with a polynomial (Savitzky-Golay) smoothing kernel (the IDL routine SAVGOL.pro). The potential of the EMD method for obtaining the flare trends is discussed in Sect. 2.1.
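As an illustration of the smoothing-based detrending approach, the sketch below uses the Savitzky-Golay filter available in SciPy as a stand-in for the IDL routine mentioned above. The function name, the window length, the polynomial order and the synthetic flare-like signal are assumptions chosen for the example; in a real analysis the window length is exactly the kind of arbitrary choice that must be handled with care (see Sect. 2.4).

```python
import numpy as np
from scipy.signal import savgol_filter


def detrend_savgol(flux, window, polyorder=2):
    """Estimate a slowly varying trend with a Savitzky-Golay filter and
    return (detrended signal, trend). `window` must be odd and > polyorder."""
    trend = savgol_filter(flux, window_length=window, polyorder=polyorder)
    return flux - trend, trend


# Synthetic light curve (assumption): exponential decay plus a 20-sample QPP
t = np.arange(600.0)
flux = 100.0 * np.exp(-t / 200.0) + 2.0 * np.sin(2 * np.pi * t / 20.0)

residual, trend = detrend_savgol(flux, window=61)
power = np.abs(np.fft.rfft(residual)) ** 2
periods = 1.0 / np.fft.rfftfreq(t.size)[1:]
peak_period = periods[np.argmax(power[1:])]
```

The window should be several times longer than the expected QPP period so that the oscillation is left in the residual rather than absorbed into the trend.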

On the other hand, there is a danger that the trend could be determined incorrectly, e.g. if the smoothing was performed over an inappropriately large window. This could lead to the appearance of a false peak (or peaks) in the Fourier spectrum (see also Sect. 2.4). For this reason, some studies do not apply a detrending procedure (e.g., Pugh et al. 2017a; Dominique et al. 2018).

Given these common pitfalls in QPP detection, including detrending, trimming, coloured noise, possible stationary or non-stationary periodicities, one must be careful to deploy appropriate techniques to reliably identify QPPs and minimise false detections. Broomhall et al. (2019) reviewed multiple techniques for detecting QPPs, including Gaussian Process Regression (Foreman-Mackey et al. 2017), wavelet analysis, AFINO/Automated Flare Inference of Oscillations (see Sect. 2.2), Smoothing and Periodogram (with a trimmed versus untrimmed signal), EMD/Empirical Mode Decomposition (see Sect. 2.1), forward modelling of QPP signals with the use of MCMC and Bayesian analysis, and Periodogram-based Significance Testing. Several recommendations were articulated to help avoid these key pitfalls, which include using simulations to test the robustness of the detection method, taking red noise into account during detections, being cautious with detrending, including only trimming the data around the QPPs if this benefits detection, using EMD for non-stationary signals, and then using the wavelet analysis on the detrended and EMD-decomposed signal.

3 Methods for Detecting and Analysing Periodic Transverse Displacements of Coronal Structures

3.1 Enhancing Transverse Motions with the DTℂWT-Based Motion Magnification

It was discovered (e.g. Anfinogentov et al. 2015) that the coronal loops and other structures seen in SDO/AIA EUV images support omnipresent low amplitude transverse periodic motions. In most cases, these motions have an amplitude less than the pixel size. Therefore, they are difficult to recognize by eye and require advanced data analysis methods for processing. A possible solution for the analysis of low amplitude motions in imaging data is a technique called motion magnification. Motion magnification aims to magnify low amplitude transverse motions to make them visible by eye and available for traditional data processing methods such as investigating oscillatory patterns in time-distance plots.

The first implementations of motion magnification were based on explicit estimation of the velocity field and subsequent warping of individual images (Liu et al. 2005). However, such approaches were found to be computationally heavy and prone to producing artefacts. The further development of the motion magnification algorithms led to the Eulerian video magnification method developed by Wu et al. (2012). Their approach eliminates explicit computation of the velocity field. Instead, the algorithm uses a temporal broadband band-pass filter to extract and amplify intensity variations associated with small transverse motions. The main limitations of this approach are small magnification factors, and image distortions due to amplification of the brightness noise and brightness variations not related to transverse motions.

The current generation of motion magnification algorithms is so-called phase-based motion magnification. It relies on the decomposition of the images into complex 2D wavelets or wavelet-like pyramids. The elements of such a decomposition are localised in space and wave-number domains. Thus, every element of such a decomposition is characterised by its location, spatial scale, orientation, and complex amplitude. The latter encodes the brightness and position of individual structures present in the original images. The brightness is related to the absolute value of complex amplitudes, while any transverse motions cause variations of the phase. In the case of small displacements these two dependencies are linear and decoupled, allowing for measuring and magnifying small transverse motions in time-resolved imaging data. The first implementation of phase-based motion magnification was proposed by Wadhwa et al. (2013). Their algorithm decomposes a sequence of images into Complex Steerable Pyramids (see Simoncelli et al. 1992; Simoncelli and Freeman 1995, for the detailed description), which are a complex wavelet-like 2D spatial decomposition.
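The principle that displacements are encoded in the phase can be demonstrated in one dimension. The sketch below is a deliberately simplified illustration, not the algorithm of Wadhwa et al. (2013) or any solar implementation: it replaces the localised complex wavelets by a global Fourier transform, so it can only magnify a single rigid shift of the whole profile, and the function name and test profile are hypothetical.

```python
import numpy as np


def magnify_shift(reference, frame, factor):
    """Amplify the displacement of `frame` relative to `reference` by `factor`
    by scaling the Fourier phase difference between the two profiles."""
    ref_hat = np.fft.rfft(reference)
    frm_hat = np.fft.rfft(frame)
    # Phase change of each spatial harmonic caused by the displacement
    dphase = np.angle(frm_hat * np.conj(ref_hat))
    # Add (factor - 1) extra copies of that phase change; amplitudes untouched
    magnified_hat = frm_hat * np.exp(1j * (factor - 1.0) * dphase)
    return np.fft.irfft(magnified_hat, n=len(frame))


def centroid(x, profile):
    return np.sum(x * profile) / np.sum(profile)


# A Gaussian "loop cross-section" displaced by 0.2 px, magnified ten times
x = np.arange(128.0)


def gaussian(centre):
    return np.exp(-0.5 * ((x - centre) / 3.0) ** 2)


reference = gaussian(64.0)
frame = gaussian(64.2)
magnified = magnify_shift(reference, frame, factor=10.0)
shift_before = centroid(x, frame) - centroid(x, reference)
shift_after = centroid(x, magnified) - centroid(x, reference)
```

The brightness profile is preserved because only the phases are modified, which mirrors the decoupling of brightness and position described above; the approach breaks down once the phase change of the dominant harmonics exceeds \(\pi\), i.e. for large displacements.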

Anfinogentov and Nakariakov (2016) developed an original phase-based motion magnification algorithm tuned for the analysis of EUV images of the solar corona. Their algorithm uses the two-dimensional dual tree complex wavelet transform (DTℂWT) developed by Selesnick et al. (2005). The distinct features of DTℂWT are perfect reconstruction, good shift invariance, and computational efficiency. Moreover, DTℂWT is implemented in several programming languages and is published as open-source software (e.g. Wareham et al. 2014). Since DTℂWT is a 2D wavelet decomposition, its individual elements are localised in both the space and wave-number domains, allowing for independent processing of structures in images with different locations (several loops in an active region), spatial scales (an individual thin strand in a thick multi-stranded loop) and orientations (overlapping loops with different orientations).

Like 1D wavelets, the 2D wavelet transform has a trade-off between spatial and wave-number resolutions. The limiting cases of such a trade-off are spatial Fourier transform (perfect wave-number resolution, no spatial resolution) and the image itself (perfect spatial resolution, no wave-number resolution). The lower spatial resolution of individual wavelet elements allows for higher magnification coefficients, but sacrifices the ability to resolve different motions in neighbouring structures. The motion magnification code developed by Anfinogentov and Nakariakov (2016) follows the opposite approach. The algorithm uses basic DTℂWT elements with higher spatial and lower wave-number resolution to allow accurate resolution of motion in neighbouring structures, which is more important than the high magnification coefficient while analysing complex images of solar corona with an active background and overlapping coronal loops.

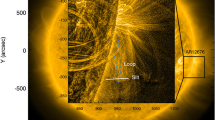

The motion magnification algorithm (Anfinogentov and Nakariakov 2016) was tested on synthetic data sets in order to reveal its potential usage in coronal seismology. The synthetic sequence of images has four distinct loops: a steady loop oscillating at a single frequency, a loop slowly moving and oscillating at the same time, a loop exhibiting exponentially decaying transverse oscillations, and a fourth loop oscillating at two different frequencies simultaneously. The data was superimposed with a randomised non-uniform background and contaminated with photon noise. Figure 6 illustrates the synthetic data sets and the results obtained by motion magnification with the magnification factor of 10. All transverse motions that were put in the model are clearly seen in the time-distance map produced from the magnified data (Fig. 6d), unlike in the original data (Fig. 6c), where they are visually not recognisable. An example of detecting decayless kink oscillations in a coronal loop observed by SDO/AIA in 171 Å channel at 2013-01-21 15:50:12 UT is shown in Fig. 7. The oscillating patterns are clearly visible in magnified time-distance plots, unlike in the original data where they are barely noticeable.

Detection of synthetic signals by the method of DTℂWT-based motion magnification. Panel (a) shows a frame with four loops superimposed with a time-varying, spatially non-uniform background. Loop 1 oscillates harmonically with a constant period of 30 frames and an amplitude of 0.2 px. Loop 2 oscillates with a constant amplitude of 0.2 px; its major radius increases with time, and the oscillation period increases with the increase in the loop length. Loop 3 performs a decaying oscillation with an initial amplitude of 1 px and a damping time of 40 frames. Loop 4 performs a two-harmonic oscillation with a period ratio of 3; the amplitude of the longer-period oscillation is 0.2 px, and that of the shorter-period oscillation is 0.1 px. The white vertical line shows the location of the slit for time-distance maps. Panel (b) shows the same frame as (a) but after the application of the DTℂWT-based motion magnification. Panel (c) gives the time-distance plot made along the slit shown in panel (a) for the original synthetic data. Panel (d) shows the time-distance plot after the application of the DTℂWT-based motion magnification. The figure is taken from Anfinogentov and Nakariakov (2016).

EUV image (a) of the coronal loop system observed in the 171 Å SDO/AIA channel on 21 January 2013. The right panels show time-distance plots made from the original data (b) and from data processed with the motion magnification technique with magnification factors \(k = 3\) (c) and \(k = 9\) (d). The vertical green line in plots (b–d) indicates the instant of time when the image (a) was taken. The artificial slit used for the time-distance plot construction is indicated by the straight white line in panel (a). The distance is measured along the slit, starting at its left edge. The figure is taken from Anfinogentov and Nakariakov (2016).

The DTℂWT-based motion magnification algorithm is aimed at analysing different kinds of oscillatory signals, including non-harmonic and non-stationary quasi-periodic displacements. It is therefore crucial to provide linear and uniform magnification with respect to the amplitude and period of the original signal. A set of synthetic tests revealed that the DTℂWT-based motion magnification demonstrates a linear dependence of the magnified amplitude on the original one for cases in which the periodic displacements in the original data do not exceed 2–3 pixels. However, displacements larger than 1–2 pixels do not need any magnification to be analysed with traditional techniques. It was also found that the oscillation frequency has no effect on the actual magnification factor in a broad range of periods, allowing for reliable analysis of multi-modal and non-harmonic transverse oscillations in coronal loops and other structures.
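
To make the notion of linear magnification concrete, the following minimal sketch applies a toy 1D analogue to a displacement time series. It assumes, as a simplification, that magnification amplifies the departure of a displacement from its running mean by a factor `k`; the actual code operates on DTℂWT coefficients of image sequences and is not reproduced here. The names `running_mean`, `magnify_displacement` and `width` are hypothetical and chosen for illustration only.

```python
import math

def running_mean(x, width):
    """Centred boxcar average; the window is truncated at the ends of the series."""
    half = width // 2
    out = []
    for i in range(len(x)):
        lo, hi = max(0, i - half), min(len(x), i + half + 1)
        out.append(sum(x[lo:hi]) / (hi - lo))
    return out

def magnify_displacement(x, k, width):
    """Toy 1D analogue (assumption): amplify the departure from the slow trend by k."""
    trend = running_mean(x, width)
    return [t + k * (xi - t) for xi, t in zip(x, trend)]

# Signal resembling Loop 1 of the synthetic test: period 30 frames, amplitude 0.2 px.
small = [0.2 * math.sin(2 * math.pi * t / 30) for t in range(300)]
double = [2.0 * s for s in small]

# Window of 33 frames, i.e. 10% longer than the period (illustrative choice).
out_small = magnify_displacement(small, k=10, width=33)
out_double = magnify_displacement(double, k=10, width=33)
```

Because the operation is linear, doubling the input amplitude doubles the output. The effective magnification is close to, but not exactly, `k`, since a running mean does not remove the oscillation from the trend completely.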

The motion magnification method has several limitations. First of all, the method can be applied only to steady or slowly moving structures, since it gathers information from a fixed time window covering several subsequent images, and an oscillating structure should persist during this time window. The width of this window is a parameter of the algorithm called the smoothing width. It should be tuned to be shorter than the characteristic evolution time of the oscillating structure (e.g. a coronal loop) but longer than the longest timescale of the investigated motions. Secondly, the projected amplitude of transverse motions should not exceed 1–2 pixels, otherwise the motion-magnified data will have significant distortion. Also, the background brightness noise, which is always present in the data, will be magnified alongside the real transverse motions and may cause distortion in the processed images. Keeping in mind these limitations, Anfinogentov and Nakariakov (2016) suggest the following strategy for data analysis using motion magnification.

- Process the data cube of interest with a magnification coefficient of 10 and a smoothing width corresponding to the expected period of the oscillation.
- Make several time-distance plots from the magnified and original data.
- If there is a significant distortion in the magnified data in comparison to the original one, reduce the magnification coefficient and try again.
- Examine the time-distance plots and estimate the main (longest) oscillation period of interest.
- Set the smoothing width parameter to be slightly larger (for example, by 10%) than the main oscillation period and process the original data again, obtaining the final result.
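
The strategy above can be sketched as a short driver. This is an illustration under stated assumptions, not the interface of the published code: `magnify` and `is_distorted` are hypothetical callables supplied by the user (the latter stands in for the visual comparison of time-distance plots), and the 10% margin is the example value quoted in the last step.

```python
import math

def smoothing_width_frames(period_s, cadence_s, margin=0.10):
    """Smoothing width in frames: the main period plus a fractional margin."""
    frames = (1.0 + margin) * period_s / cadence_s
    return int(math.ceil(frames - 1e-9))  # tolerance guards against float round-off

def magnify_with_fallback(cube, magnify, is_distorted, width, k_start=10, k_min=2):
    """Lower the magnification coefficient until the result is judged undistorted.

    `magnify(cube, k, width)` and `is_distorted(original, magnified)` are
    hypothetical user-supplied callables, not part of any published package.
    """
    for k in range(k_start, k_min - 1, -1):
        magnified = magnify(cube, k, width)
        if not is_distorted(cube, magnified):
            return k, magnified
    raise ValueError("no magnification coefficient in range gave an undistorted result")

# Stand-ins for demonstration only: pretend that distortion appears for k > 6.
width = smoothing_width_frames(period_s=300, cadence_s=12)
k_used, _ = magnify_with_fallback(
    cube=None,
    magnify=lambda cube, k, width: k,
    is_distorted=lambda original, magnified: magnified > 6,
    width=width,
)
```

In practice the distortion check is made by eye on time-distance plots, so the loop would normally be run interactively rather than automatically.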

Following this strategy gives reliable results and avoids the adverse effects that can cause distortions (Zhong et al. 2021). The practical application of the motion magnification method to transverse oscillations in coronal loops has made it possible to obtain new seismological information from low-amplitude kink oscillations. In particular, the presence of a second harmonic was detected for the first time in the decayless regime of kink oscillations (Duckenfield et al. 2018). The analysis of omnipresent decayless kink oscillations simultaneously observed in several loops of a single active region allowed for mapping the Alfvén speed (and potentially the magnetic field) in the corona (Anfinogentov and Nakariakov 2019). Li et al. (2020) discovered low-amplitude transverse oscillations with a growing period in a diffuse loop observed by SDO/AIA at 171 Å, associated with the QPP event observed during the rising phase of a solar flare by the Nobeyama Radioheliograph at 17 GHz. Thus, motion magnification allows for seismological analysis of the solar corona using the low-amplitude transverse motions of coronal loops in non-flaring active regions, and for investigating weak oscillatory responses of coronal structures to eruptive events in nearby active regions.

3.2 Automatic Detection of Transverse Motion with the NUWT Code

NUWT (Northumbria University Wave Tracking) is an image-processing algorithm, specifically an automated Gaussian-fitting method, that can accurately identify individual brighter/darker features in time-distance diagrams and track their evolution through time. The fundamental framework of NUWT was reported in Morton and McLaughlin (2013), who compared and contrasted Hi-C (Cirtain et al. 2013) and SDO/AIA observations of transverse MHD waves in active regions, and in Morton et al. (2013), who demonstrated the connection between convectively-driven photospheric flows and incompressible chromospheric waves. NUWT has also been used to analyse the fine-scale structure of moss in an active region (Morton and McLaughlin 2014), including the first direct observation of physical displacements of the moss fine structure in a direction transverse to its central axis. NUWT was used by Morton et al. (2014) for statistical studies in the highly-dynamic solar chromosphere, which allowed for the determination of the chromospheric kink wave velocity power spectra. Morton et al. (2015) used NUWT with data from the CoMP instrument (Tomczyk et al. 2008) to reveal that counter-propagating transverse waves exist in open coronal magnetic fields.

Thurgood et al. (2014) used NUWT to report the first direct measurements of transverse wave motions in solar polar plumes and evaluate their energy contributions. The authors considered the solar north pole as seen by SDO/AIA in 171 Å on 6 August 2010 at 00:00 UTC. A slit of 200 pixels (87 Mm) at an altitude of 8.71 Mm was used to create a time-distance diagram. The time-distance diagram was characterised by intermittent, bright streaks (corresponding to the locations of the over-dense plume structures), which exhibited clear signs of transverse motion. NUWT extracted the transverse displacements, periods and velocity amplitudes of 596 distinct oscillations observed in these time-distance diagrams. The measurements made for the slit at an altitude of 8.71 Mm are shown in Fig. 8 (left) and, as a typical example, four fits are shown in further detail in Fig. 8 (right).

(Left) Time-distance map made from the slit at 8.71 Mm for the whole time series. A selection (i.e. not the full sample) of longer-period fits that were made to the oscillating features is overlaid in white. (Right) Close-up view of fitted oscillations. The centres of the blue vertical error bars show the feature centre at each time, as determined by the Gaussian fitting routine, and the bars show the \(\pm \sigma \) uncertainty on that position. The blue dashed curve through the feature is the best sinusoidal fit to the feature centre, from which the wave parameters are derived.

The NUWT code can be downloaded via Morton et al. (2016a). It has been further automated and improved by Weberg et al. (2018), who also tested and calibrated the algorithm using synthetic data that included noise and rotational effects. The calibration indicated an accuracy of 1%–2% for displacement amplitudes and 4%–10% for wave periods and velocity amplitudes. Here, we briefly review the basic operation of the code (see Weberg et al. 2018, for details). There are six steps in the NUWT data processing pipeline:

1. Data acquisition and preprocessing. After acquiring the data, relevant instrument-specific corrections and processing should be performed, which could include rotating, rescaling, co-alignment, de-spiking and suppression of random noise.

2. Slit extraction. A two-dimensional time–distance diagram is constructed by extracting the data from a virtual slit. The intensity uncertainties in the time–distance diagram are also collated, including expected contributions from standard sources (e.g. photon noise, dark current). These uncertainties (data errors) influence the uncertainties on model parameters in step 3, and so it is important that accurate values are extracted or estimated.

3. Feature identification. Local maxima are located in the time–distance diagram by comparing values to their nearest neighbours, via a crawling algorithm. Then, the gradients on either side of the determined location are checked against a user-adjustable threshold gradient. If the gradient is sufficient, the point is considered a local maximum. This step also removes spurious peaks due to noise. Once pixels containing local maxima are determined, their position is refined to sub-pixel accuracy by fitting the local neighbourhood with a Gaussian model, using a nonlinear least-squares fitting method (Markwardt 2009) which takes into account the intensity uncertainties. For SDO/AIA 171 Å, the fitting is weighted by intensity errors taken as \(\sigma_{\mathrm{noise}}(F) \approx \sqrt{2.3 + 0.06F}/\sqrt{5}\) DN as per Yuan and Nakariakov (2012), where \(F\) is the pixel intensity of the unaltered Level 1 data and \(\sqrt{5}\) arises because the time-distance diagrams are constructed from an average of five neighbouring slices. The uncertainty on the position of a local maximum is taken as the \(\sigma \) estimate on the position of the apex of the fitted Gaussian, combined in quadrature with the uncertainty on position due to sub-pixel jitter.

4. Thread tracking. Local intensity maxima are traced through the time series by connecting the maxima into ‘threads’. This is achieved using a nearest-neighbour method that scans a user-adjustable search box in both time and space. A given peak cannot be assigned to more than one thread. Threads containing fewer than a minimum number of data points are rejected. For SDO/AIA, 20 data points is found to be a reasonable cut-off, which means that threads persisting for less than 240 s are rejected. Fits that depend on a set of points including a jump of more than 2.5 pixels from point to point are discounted since, for SDO/AIA, this would constitute an instantaneous transverse velocity of greater than 100 km s\(^{-1}\), which is an order of magnitude greater than the representative velocity amplitudes measured for all samples (even when this criterion is relaxed). Such jumps are artefacts of the thread-following algorithm.

5. Application of FFT. A split cosine bell windowing function is applied to the time series and then the FFT is applied. The output power spectrum is corrected to account for the signal lost due to windowing. If required, gaps within each thread can be filled using linear interpolation before the transform.

6. Filtering waves and calculating observables. The significant wave components of the FFT power spectrum are selected. Every peak with power greater than the significance threshold is identified as a different wave propagating on the same structure. The tracking routine picks up threads of variable lengths, and so longer threads can often be fitted with different oscillations during different stages of their lifetime. In addition, shorter-period oscillations are often seen superimposed upon longer-period trends. Where multiple fits to different subsections of a longer thread are possible, all such fits are taken and contribute to the sample. Wave displacement amplitudes, \(\xi \), and periods, \(P\), are calculated from the power spectrum, and the velocity amplitudes are calculated using \(v = 2\pi \xi / P\).
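
Several of the steps above can be illustrated with a short sketch. It is not the NUWT code, and it swaps in simpler techniques: three-point Gaussian interpolation replaces the weighted nonlinear least-squares fit of step 3, a direct discrete Fourier transform replaces the FFT, only the single strongest spectral peak is returned instead of all peaks above a significance threshold, and a thread containing a large jump is rejected outright. All function names and the taper fraction `alpha` are assumptions made for illustration, as is the choice to compensate for the window by dividing by the sum of its values.

```python
import cmath
import math

def aia171_noise_sigma(flux_dn, n_avg=5):
    """Intensity error in DN for SDO/AIA 171 A after averaging n_avg slices."""
    return math.sqrt(2.3 + 0.06 * flux_dn) / math.sqrt(n_avg)

def subpixel_peak(profile, i):
    """Refine local maximum i by three-point Gaussian interpolation.

    Simplified stand-in for a full Gaussian fit: exact for a noise-free
    Gaussian, and valid only for positive intensities.
    """
    lm, l0, lp = (math.log(profile[j]) for j in (i - 1, i, i + 1))
    return i + 0.5 * (lm - lp) / (lm - 2.0 * l0 + lp)

def thread_is_valid(positions, min_points=20, max_jump_px=2.5):
    """Reject threads that are too short or contain a large point-to-point jump."""
    jumps = (abs(b - a) for a, b in zip(positions, positions[1:]))
    return len(positions) >= min_points and all(j <= max_jump_px for j in jumps)

def split_cosine_bell(n, alpha=0.4):
    """Window that is flat in the middle, with cosine tapers over a fraction alpha."""
    edge = alpha * (n - 1) / 2.0
    window = []
    for i in range(n):
        d = min(i, n - 1 - i)  # distance to the nearest end of the series
        window.append(1.0 if d >= edge else 0.5 * (1.0 - math.cos(math.pi * d / edge)))
    return window

def dominant_wave(displacement_km, cadence_s, alpha=0.4):
    """Return (amplitude in km, period in s, velocity amplitude in km/s) of the strongest peak."""
    n = len(displacement_km)
    mean = sum(displacement_km) / n
    window = split_cosine_bell(n, alpha)
    x = [(d - mean) * w for d, w in zip(displacement_km, window)]
    gain = sum(window)  # dividing by this compensates for amplitude lost to the taper
    best_k, best_amp = 1, 0.0
    for k in range(1, n // 2):
        coeff = sum(xj * cmath.exp(-2j * math.pi * k * j / n) for j, xj in enumerate(x))
        amp = 2.0 * abs(coeff) / gain
        if amp > best_amp:
            best_k, best_amp = k, amp
    period_s = n * cadence_s / best_k
    return best_amp, period_s, 2.0 * math.pi * best_amp / period_s  # v = 2*pi*xi/P

# Synthetic thread: 100 km amplitude, 240 s period, 12 s cadence, 100 samples.
thread = [100.0 * math.sin(2.0 * math.pi * 12.0 * j / 240.0) for j in range(100)]
xi_km, period_s, v_km_s = dominant_wave(thread, cadence_s=12.0)
```

The synthetic thread spans a whole number of periods, so the spectral peak falls exactly on a frequency bin. For real threads the period resolution is limited by the thread length, which is one reason the calibrated accuracy quoted above is lower for periods than for displacement amplitudes.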

The NUWT automated algorithm unlocks a wide range of statistical studies that would be impractical to conduct by hand (i.e. extremely labour-intensive). For example, NUWT has been used by Morton et al. (2019) to reveal that the Sun’s internal acoustic modes (p-modes) make a basal contribution to the Alfvénic wave flux in the solar corona, delivering a spatially-ubiquitous input to the coronal energy balance that is sustained over the solar cycle. Weberg et al. (2020) used NUWT to make direct measurements of key parameters of transverse waves in a coronal hole, and reported how those measurements change with altitude through the low corona. Interestingly, this enabled them to derive a relative density profile for the coronal hole environment but, crucially, without the use of spectroscopic data.