Abstract

Over the last several decades, various indicators and proxies have been proposed to measure research output and its impact for different units of assessment, such as individuals, institutions, journals and countries. Institutional assessment is one such area that has always been, and will remain, a key challenge for a multitude of stakeholders. Various international rankings and bibliometric indicators have been explored for institutional assessment, though each has attracted criticism. Most existing indicators, including h-type indicators, focus mainly on research output and/or the citations it receives. They do not reveal the expertise of institutions in different subject areas, which is crucial for understanding an institution's research portfolio. Recently, expertise measures such as the x and x(g) indices were introduced to determine the expertise of institutions in a specific discipline or field by considering their strengths in finer thematic areas of that discipline or field. In this work, an adaptation of the x-index, the \(x_{d}\)-index, is proposed to determine the overall scholarly expertise of an institution from its publication pattern and its strength in different coarse thematic areas. This indicator helps identify the core expertise areas of an institution and the diversity of its research portfolio. Two variants of the indicator, the field-normalized \(x_{d}\)(FN)-index and the fractional \(x_{d} \left( f \right)\)-index, are also introduced to address the effects of field bias and collaboration on the computation of expertise diversity. Using correlation analysis, the framework determines the most suitable version of the indicator for research portfolio management. The indicators and the associated framework are demonstrated on a dataset of 136 institutions.
Rank correlation analysis shows no significant difference between \(x_{d}\) and its field-normalized and fractionally counted variants in this particular dataset, making \(x_{d}\) the most suitable indicator in this case. The article also discusses how the framework can support effective management of an institution's research portfolio by expanding its diversity, and how it can help national policymakers manage the country's scholarly ecosystem.

Introduction

The institutional organization of science, along with its intellectual and social organization, has played, and will likely continue to play, a very important role in the advancement of science. This growth is supported by various funding sources, including government and non-governmental agencies. Until a few decades ago, such funding was largely ‘trust-based’, with little use of systematic methods to assess institutions for funding. However, with the growth of scientometrics and increased awareness of its potential, funding agencies began to adopt more rigorous performance assessment methods. Performance-based funding is mainly intended to achieve two goals: (i) to establish both 'vertical differentiation' and 'functional specialization' among institutions and (ii) to promote horizontal diversity and pluralism within the system (Sörlin, 2007). Examples include (i) the Research Excellence Framework (REF) in the UK (de Boer et al., 2015), (ii) the Australian government's allocation of AUD 80 million to universities through performance-based funding (Maslen, 2019) and (iii) the adoption of the Norwegian funding model at the national level by Norway, Belgium (Flanders), Denmark, Finland and Portugal (Sivertsen, 2016). This change in funding patterns pushed most institutions to strive for continuous improvement in performance.

The quest for institutional assessment has also led to the creation of various university ranking frameworks, such as the international QS, THE, ARWU and CWTS rankings. Most of these global ranking systems use different kinds of data, including (i) the number of publications in highly reputed journals, (ii) the number of faculty and alumni with prestigious awards such as Nobel Prizes and Fields Medals (Shanghai Ranking’s Academic Ranking of World Universities, 2021) and/or (iii) other information such as grants. These methodologies are prone to bias and have attracted several criticisms, including the use of inappropriate criteria (Billaut et al., 2010), sensitivity to the weights placed on different factors (Jeremic et al., 2011), an anchoring effect on the ratings (Bowman & Bastedo, 2011) and excessive emphasis on reputation surveys (Anowar et al., 2015).

Additionally, these international rankings often struggle with inclusivity, particularly for institutions in developing countries. This has prompted several countries to establish their own national ranking systems, such as the National Institutional Ranking Framework (NIRF) in India. However, these systems do not fully evaluate institutional research performance, as they overlook (i) the overall diversity and strength of thematic expertise at a broad level and (ii) the diversity and strength of expertise within specific disciplines. Expertise diversity matters for institutions. It fosters innovative problem-solving by integrating varied perspectives and approaches, which can lead to creative and groundbreaking solutions. Diverse expertise supports a comprehensive understanding of complex issues, as different disciplines contribute unique insights and methodologies. It also enhances the adaptability and resilience of institutions, enabling them to respond effectively to new challenges and changes in the research landscape. Further, a diverse pool of expertise fosters a collaborative environment in which interdisciplinary integration can thrive, leading to more robust and impactful research outcomes. By promoting relevant and inclusive research, expertise diversity helps findings and innovations address a wide range of societal needs and challenges, making institutions more effective and responsive to global issues. If the first limitation is addressed properly, the result can inform institutional policymakers in managing the overall research portfolio, help national policymakers plan portfolio management of the nation's scholarly ecosystem and plan institutionalization to boost overall scholarly output, and help funding agencies assess research for overall scholarly expertise and diversity.
Addressing the second limitation systematically can inform institutional policymakers in managing research portfolios at the level of disciplines, and help national policymakers and funding agencies assess research for ‘thrust area/national priority area’ funding and institutionalization.

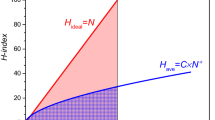

In the search for a suitable indicator of the expertise diversity of institutions, we examine scientometric indicators in popular use, such as the h-index (Hirsch, 2005), g-index (Egghe, 2006) and P-index (Prathap, 2010). These indicators combine research output and citation data into a composite value. These and several other indicators are often viewed in the context of institutional size, which has led to discussion and debate about the size-dependence and size-independence of indicators (Katz, 2000; Waltman et al., 2016; Tijssen et al., 2018; Prathap, 2020; Glänzel & Moed, 2013; Waltman, 2016; Szluka et al., 2023). Further, differences in citation practices across subject areas have been recognized, and various normalizations have been suggested (Leydesdorff & Bornmann, 2011; Waltman & van Eck, 2019). These indicators have been used to assess different units (individuals, institutions, etc.). However, all of them are limited to measuring the research output and citations of institutions, and none captures the research expertise diversity of institutions. Indicators of interdisciplinarity and disciplinary diversity, proposed and investigated in many previous scientometric studies (Glänzel & Debackere, 2022; Leydesdorff et al., 2018, 2019; Rafols & Meyer, 2010; Wagner et al., 2011), also deserve attention in this context. However, these indicators are primarily defined for individual articles or journals and do not focus on institutions. Thus, existing indicators fall short of capturing the expertise diversity of institutions, and an appropriate indicator is needed that measures the expertise diversity and thematic strength of institutions from suitable data.

For this purpose, the network and natural language processing (NLP) based framework introduced by Lathabai et al. (2021a, 2021b) offers a useful starting point. The authors devised a set of expertise indicators, namely the x-index and the x(g)-index, inspired by the principles of the h-index (Hirsch, 2005) and the g-index (Egghe, 2006). The x-index is designed to determine the core competency areas of an institution, and the x(g)-index, together with the x-index, can determine its potential core competency areas (at the level of fine thematic areas within a discipline). Lathabai et al. (2022) later developed this framework into a recommendation system for institutional collaboration within a discipline. The concept of thematic strengths and expertise determination also lends itself to determining overall expertise and diversity.

This work thus proposes a framework for determining the expertise diversity of institutions from their research outputs. The framework differs from the earlier x-index (Lathabai et al., 2021a, 2021b): instead of fine thematic areas within a discipline, it considers coarse thematic areas across the overall scholarly literature. This requires a classification mechanism that assigns the scholarly literature indexed in a database to coarse thematic areas. Because WoS provides such a classification, we use the WoS database for data collection and WoS categories to map the scholarly literature to coarse-level thematic areas.

The ‘expertise diversity index’ or \(x_{d}\)-index can then be computed; it identifies the top \(x_{d}\) categories that each have a strength of at least \(x_{d}\). These are the core competency areas (at the coarse level) of an institution. The index is intended to express the thematic diversity of an institution's research portfolio.

However, an expertise diversity indicator based on an attribute such as full citation (or altmetric) counts may be biased by (i) the tendency of some fields (subject categories) to attract more citations than others and (ii) the tendency of collaborative works (works resulting from collaborative research between institutions) to attract more citations. Therefore, our framework supports the computation of different versions of the expertise diversity indicator that retain the merits of the original indicator while reducing the effect of these biases. Further, a rank correlation-based method is incorporated into the framework to determine the most suitable indicator for research portfolio management at the institutional level and scholarly ecosystem management at the national level. The data and methodology used in the proposed framework are described next.

Data and methodology

Data

The data were collected from the Web of Science (WoS) database, as it is widely regarded as a reliable source of article metadata. For the study, 136 Indian institutions were taken from a list of institutions ordered by the total number of articles published during the study period, in descending order. The list excluded institutional systems such as the IIT System and the CSIR System as aggregate entities and included only the individual institutions and laboratories within these systems. The selected institutions are listed in Appendix A. Using this list, article metadata were fetched from the WoS database institution-wise. Along with the publications, the WoS categories to which they belong were also retrieved. WoS subject categories were chosen because they are curated by experts, have been in use for a long time and constitute a reliable source. The proposed framework, however, can use any other subject classification scheme, including national science classification systems. Mapping research works to subject categories enables the creation of a work (paper)-category affiliation network and the computation of thematic strengths using a network approach, where suitable attributes such as citations or altmetric scores can be used to compute strength (details are given in the ‘Methodology’ subsection).

The study covers the period 2011–2020, although the framework can be applied to a longer or shorter time span if needed. A total of 467,550 articles for this period were downloaded from the database. The data were then cleaned to remove missing or inconsistent records. For example, publication records without an associated subject category were removed, as were duplicate entries. The cleaned data consisted of 418,831 publications for all 136 institutions taken together.
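The cleaning step can be sketched as follows. This is a minimal illustration, not the pipeline used in the study: it assumes each record is a dictionary keyed by WoS export field tags, with `UT` as the accession number and `WC` as the semicolon-separated list of WoS categories, and it treats a record lacking either field as missing data. The function name `clean_records` is hypothetical.

```python
def clean_records(records):
    """Drop records lacking a WoS category or accession number, and remove duplicates.

    Assumes (hypothetically) that each record is a dict with the WoS field tags
    "UT" (accession number) and "WC" (categories separated by ";").
    """
    seen = set()
    cleaned = []
    for rec in records:
        uid = (rec.get("UT") or "").strip()
        # Category names contain commas (e.g. "Chemistry, Physical"), so split on ";"
        cats = [c.strip() for c in (rec.get("WC") or "").split(";") if c.strip()]
        if not uid or not cats:
            continue  # missing identifier or no subject category mapping
        if uid in seen:
            continue  # duplicate entry: keep the first occurrence only
        seen.add(uid)
        cleaned.append({**rec, "WC": cats})
    return cleaned


records = [
    {"UT": "WOS:A", "WC": "Chemistry, Physical; Physics, Applied"},
    {"UT": "WOS:A", "WC": "Chemistry, Physical"},  # duplicate accession number
    {"UT": "WOS:B", "WC": ""},                      # no category mapping
    {"UT": "WOS:C", "WC": "Optics"},
]
print([r["UT"] for r in clean_records(records)])  # ['WOS:A', 'WOS:C']
```

Keying duplicates on the accession number rather than on titles avoids false matches between distinct papers with similar titles, at the cost of relying on the export providing `UT` for every record.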

The proposed method relies only on publication data and therefore focuses on the research output of institutions. Other factors included in indexing and ranking frameworks such as the Times Higher Education ranking, the ARWU ranking and the QS ranking are not considered. This also excludes factors that can be manipulated, keeping the focus solely on the research output of the institution.

Computing field-normalized indicator scores (here, field-normalized citations) and determining the effect of field bias (bias induced by differences in citation distributions across fields or subject categories) requires publication and citation information for all WoS categories during the period 2011–2020. However, the mean-field citation (average citations in a WoS category), against which the full-count citation is normalized, is extremely difficult to obtain with a normal subscription to Clarivate Analytics’ WoS product. Therefore, we instead used the mean-field citations of each WoS category for all publications from the country of analysis (here, India) during 2011–2020. This also eliminates any country-wise variation in citation distributions across WoS categories (i.e., citations received in a subject category by publications from well-resourced countries may differ significantly from those received by publications from less well-resourced countries). After data cleaning, there were 672,639 publications from India (not confined to the 136 institutions). From these data, the mean-field indicator (citation) scores of all fields (WoS categories) associated with the original dataset of 136 institutions can be retrieved and used to convert full-count scores to field-normalized scores. Other details of normalization are discussed in the Methodology subsection.

Similarly, the effect of collaboration needs to be taken into account. The number of collaborating institutions associated with each publication in the dataset of 136 institutions is determined from the ‘Author Address’ field in the WoS files. With this information, full-count citation scores can be converted to fractional counts. More details are given in the Methodology subsection.
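One way to count the institutions on a publication is sketched below. It is a heuristic under stated assumptions rather than the procedure used in this study: it assumes the WoS `C1` (author address) format, in which bracketed author lists precede each address and addresses are separated by semicolons, and it takes the first comma-separated element of each address as the institution name. Name variants of the same institution are not harmonized, so in practice the counts would need checking. The function name `count_institutions` is hypothetical.

```python
import re


def count_institutions(c1_field):
    """Count distinct institutions in a WoS-style author address string.

    Heuristic: remove bracketed author lists, split addresses on ";", and take
    the first comma-separated element of each address as the institution name.
    """
    without_authors = re.sub(r"\[[^\]]*\]", "", c1_field or "")
    institutions = set()
    for address in without_authors.split(";"):
        name = address.split(",")[0].strip()
        if name:
            institutions.add(name)
    # Every record in the dataset is affiliated with at least the focal institution.
    return max(len(institutions), 1)


c1 = ("[Rao, A.; Singh, B.] Banaras Hindu Univ, Dept Chem, Varanasi, India; "
      "[Kumar, C.] Univ Delhi, Dept Phys, Delhi, India; "
      "[Singh, B.] Banaras Hindu Univ, Inst Sci, Varanasi, India")
print(count_institutions(c1))  # 2
```

The bracketed author lists are removed first because author names themselves contain commas and semicolons, which would otherwise be mistaken for address separators.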

Methodology

The schematic diagram of the proposed framework is shown in Fig. 1. The framework requires a reliable scholarly data source with substantial coverage. The database must provide suitable indicators of the impact of papers and a suitable subject classification scheme to which papers are mapped. Together, the mapping and the indicators enable the determination of the expertise diversity of an actor as follows. The mapping determines the affiliation of an actor's publications (here, an institution's) with thematic areas (here, broad or coarse areas represented by subject categories). Thus, each actor’s scholarly profile can be represented as an unweighted affiliation network. As this is a work (publication) to category (thematic area) affiliation network, we denote it as the W–C network. This representation offers the flexibility to inject values of suitable indicators (such as citations), whether they are provided directly by the database, obtained from external sources or derived as in Lathabai et al. (2017), and enables thematic strengths to be computed in terms of these indicators. The injection process, in which the chosen indicator values are assigned as weights to the links from publications to thematic areas, converts the unweighted network into a weighted network. Weighted indegree analysis of the subject categories (thematic areas) in the weighted network then gives the strength of an actor in every thematic area, where strength is the sum of the chosen indicator values of the publications in that area. For instance, if total citations (full counting) is chosen as the indicator for thematic strength (as it is one of the simplest indicators of scholarly impact), injecting total citations as weights converts the network into a weighted network denoted as the W–C* network.
Weighted indegree analysis then provides the thematic strength (sum of citations) in every thematic area, as the weighted indegree of a category equals the sum of the weights of the links pointing to it. The \(x_{d}\)-index can then be computed in an h-index-like fashion. The formal definition of the expertise diversity indicator is given next.
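The weighted indegree computation on the W–C* network can be sketched in a few lines of Python. Because a category's weighted indegree is simply the sum of the weights on the links pointing to it, the sketch accumulates those sums directly instead of building a graph object; a graph library would give the same result. The paper layout (a dictionary with `categories` and `citations`) and the function names are assumptions made for illustration. The `weight` argument stands for the injected indicator, so the same function can take full citations or a modified score.

```python
from collections import defaultdict


def full_citations(paper, category):
    """Default injected weight: the paper's full citation count."""
    return paper["citations"]


def thematic_strengths(papers, weight=full_citations):
    """Weighted indegree of every category node in a W–C* network.

    papers: list of dicts with "categories" (list of str) and "citations" (number).
    weight: function (paper, category) -> weight of the link from paper to category.
    """
    strengths = defaultdict(float)
    for paper in papers:
        for category in paper["categories"]:
            # Each W->C link carries the injected weight; summing incoming link
            # weights gives the weighted indegree (thematic strength) of the category.
            strengths[category] += weight(paper, category)
    return dict(strengths)


papers = [
    {"categories": ["Optics", "Physics, Applied"], "citations": 5},
    {"categories": ["Optics"], "citations": 3},
]
print(thematic_strengths(papers))  # {'Optics': 8.0, 'Physics, Applied': 5.0}
```

A paper mapped to several categories contributes its full weight to each of them, mirroring one weighted link per W–C affiliation.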

\({\varvec{x}}_{{\varvec{d}}}\)-index: An actor has an \(x_{d}\)-index value of \(x_{d}\) if it has published articles in at least \(x_{d}\) broad thematic areas and has a strength of at least \(x_{d}\) in each of those \(x_{d}\) areas. These \(x_{d}\) areas are considered the \(x_{d}\)-core competent areas of the actor. A high \(x_{d}\)-index value indicates that the actor’s research portfolio is more diverse.
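The definition translates into the same procedure used for the h-index: sort the thematic strengths in descending order and find the largest rank \(x\) at which the strength is still at least \(x\). The sketch below assumes the strengths are already available as a mapping from category to strength (for example, the weighted indegrees of the W–C* network); the function names are hypothetical, and ties at the cut-off are broken arbitrarily when listing the core areas.

```python
def xd_index(strengths):
    """Largest x such that at least x thematic areas each have strength >= x."""
    x = 0
    for rank, value in enumerate(sorted(strengths.values(), reverse=True), start=1):
        if value >= rank:
            x = rank
        else:
            break  # strengths are descending, so no larger rank can qualify
    return x


def core_areas(strengths):
    """The x_d core competent areas: the x_d strongest categories."""
    ranked = sorted(strengths, key=strengths.get, reverse=True)
    return ranked[: xd_index(strengths)]


toy = {"Optics": 10, "Chemistry, Physical": 4, "Physics, Applied": 3, "Ecology": 1}
print(xd_index(toy))    # 3
print(core_areas(toy))  # ['Optics', 'Chemistry, Physical', 'Physics, Applied']
```

Because only the sorted strengths matter, the same function applies unchanged to field-normalized or fractionally counted strengths, which may be non-integer.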

As mentioned in the Introduction, suitable indicators such as citations can be used to determine thematic strengths, either as full counts or in modified forms. If full citation counts are used, the \(x_{d}\)-index will be prone to a bias induced by differences in citation distributions across fields, which can be termed field bias. In addition, the publications of an actor, be it an institution or an individual, may result from collaboration with one or more other actors, although actors may also have sole-authored works. Collaboration may therefore induce a bias that favors some of the evaluated actors, known as inflationary bias (George et al., 2020; Hagen, 2008). To tackle these biases, our framework offers the flexibility to compute different variants of the \(x_{d}\)-index, defined next.

As field bias is caused by variations in the citations attracted by publications belonging to different fields (represented by WoS categories), normalizing citations can reduce field bias to an extent. That is, field-normalized citation scores, rather than full citation counts, are injected into the W–C network. The field-normalized citation score (Haunschild et al., 2022; Lundberg, 2007; Rehn et al., 2007; Waltman et al., 2011) of a publication w that belongs to a category c can be computed as follows:

\[ \mathrm{cit}_{FN}(w, c) = \frac{\mathrm{cit}(w)}{\mathrm{mfc}(c)} \]

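where cit(w) is the full-count citations of w and mfc(c), the mean-field citations, is the average number of citations received by publications in category c, which can be computed as:

\[ \mathrm{mfc}(c) = \frac{1}{N_{c}} \sum_{w' \in c} \mathrm{cit}(w') \]

where \(N_{c}\) is the number of publications belonging to category c.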

Computing mfc requires the total number of publications (in the whole database) belonging to category c and the citations received by all of them. These values are difficult to obtain through a normal subscription to the database. Therefore, we instead considered all publications from the country of analysis (here, India). This is reasonable because the framework, with the help of the proposed indicators, assesses only institutions belonging to one country or region. In addition, it may neutralize the influence of country-wise differences in the citations attracted by works in a field.
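The normalization can be sketched as two small functions: one computes the mean-field citations mfc of each category from the country-level reference set, and the other returns the field-normalized weight of a W–C link. Two choices here are assumptions, not details stated in the study: a publication assigned to several categories counts fully towards each category's mean, and a category that is absent from, or uncited in, the reference set gets a weight of zero. The dictionary layout and function names are hypothetical.

```python
from collections import defaultdict


def mean_field_citations(reference_papers):
    """mfc per category: average citations of reference-set papers in that category.

    Assumption: a paper in several categories counts fully towards each of them.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for paper in reference_papers:
        for category in paper["categories"]:
            totals[category] += paper["citations"]
            counts[category] += 1
    return {category: totals[category] / counts[category] for category in totals}


def field_normalized_weight(paper, category, mfc):
    """Field-normalized citation score of the link from paper to category."""
    mean = mfc.get(category, 0.0)
    # Assumption: a category absent from, or uncited in, the reference set gets weight 0.
    return paper["citations"] / mean if mean > 0 else 0.0


country = [  # toy stand-in for all publications from the country of analysis
    {"categories": ["Optics"], "citations": 4},
    {"categories": ["Optics", "Ecology"], "citations": 2},
    {"categories": ["Ecology"], "citations": 0},
]
mfc = mean_field_citations(country)
print(mfc)                                                       # {'Optics': 3.0, 'Ecology': 1.0}
print(field_normalized_weight({"citations": 6}, "Optics", mfc))  # 2.0
```

A score of 2.0 reads as "twice the average citations of the category in the reference set", which is what makes strengths comparable across fields with different citation cultures.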

Thematic strengths computed by injecting field-normalized citations as weights are field-normalized thematic strengths. The expertise diversity indicator computed from such strengths is the field-normalized expertise diversity index, denoted as the \(x_{d} \left( {\text{FN}} \right)\)-index.

As inflationary bias is caused by allocating full credit for a publication, and thereby for the impact it earns, to every actor involved, fractional credit allocation (Lindsey, 1980; Price, 1981) may help reduce this bias. Thus, for each publication, the number of institutions affiliated with it is retrieved from the address field of the WoS file. Full citation counts (or any other indicator scores) can be converted to fractionally counted scores by dividing the full count by the number of affiliated institutions. The fractionally counted citation score of a publication w affiliated with k different institutions can be expressed as:

\[ \mathrm{cit}_{f}(w) = \frac{\mathrm{cit}(w)}{k} \]
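The fractional counting step is sketched below for a single publication. The function splits the full citation count equally among the k distinct affiliated institutions, so the credits of all institutions add up to the original count, whereas full counting would give each institution the whole count. The function name and the input format (a list of institution names, possibly with repeats from multiple addresses) are illustrative assumptions.

```python
def fractional_credit(citations, institutions):
    """Split a publication's citations equally among its k distinct institutions."""
    distinct = sorted(set(institutions))
    k = len(distinct)
    if k == 0:
        raise ValueError("a publication needs at least one affiliated institution")
    share = citations / k  # cit(w) / k
    return {institution: share for institution in distinct}


credit = fractional_credit(12, ["Banaras Hindu Univ", "Univ Delhi", "Banaras Hindu Univ", "IISc"])
print(credit)                # {'Banaras Hindu Univ': 4.0, 'IISc': 4.0, 'Univ Delhi': 4.0}
print(sum(credit.values()))  # 12.0
```

Under full counting the three institutions would together claim 36 citations for this single paper; the fractional scheme keeps the total at 12, which is the inflation it is meant to remove.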

Thematic strengths computed by injecting fractionally counted citations as weights are fractionally counted thematic strengths. The expertise diversity indicator computed from such strengths is the fractionally counted expertise diversity index, denoted as the \(x_{d} \left( f \right)\)-index.

Selection of appropriate indicator

Once the original indicator and its variants have been computed (only two variants are demonstrated here, but the framework can accommodate further variants reflecting other possible biases), the most suitable one can be identified using a module based on Spearman’s rank correlation, discussed next.

Spearman’s rank correlation between the \(x_{d}\)-index and each of its variants needs to be computed. If the correlation coefficient for a pair indicates a strong correlation, there is no significant difference between the expertise diversity rankings assigned by the original (full counting-based) \(x_{d}\)-index and that variant. If the correlation is only weak or moderate, the rankings differ significantly owing to an underlying bias (field bias, inflationary bias or another bias). A strong correlation can be identified using a reasonable threshold of 0.7 or 0.75: if Spearman’s rank correlation coefficient is above the threshold, the correlation is treated as strongly positive. Otherwise, it is moderate or weak, suggesting a substantial effect of the bias underlying full counting. For instance, if the correlation between the \(x_{d}\)-index and the \(x_{d} \left( {\text{FN}} \right)\)-index is above 0.75, there is no significant effect of field bias and hence no significant difference in ranking. In that case, the \(x_{d}\)-index is the suitable indicator, because the variant offers no added benefit. If no variant exhibits a moderate or weak correlation with the \(x_{d}\)-index, the \(x_{d}\)-index is the most appropriate indicator for research portfolio management at the institutional level and scholarly ecosystem management at the national level. If a variant produces a significantly different ranking, it can be used instead of the \(x_{d}\)-index. If more than one variant exhibits a weak or moderate correlation, the one with the lowest correlation coefficient can be taken for further analysis. Once the suitable indicator is determined, we can examine how it can be used for these tasks.
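The selection module can be sketched as follows. To keep the example self-contained, Spearman's coefficient is computed directly as the Pearson correlation of average ranks (which handles ties); `scipy.stats.spearmanr` would serve equally well. The decision rule follows the description above with a threshold of 0.75: if every variant correlates above the threshold with the \(x_{d}\)-index, the \(x_{d}\)-index is kept; otherwise the variant with the lowest coefficient is chosen. The function names are hypothetical, and the sketch assumes that no indicator is constant across actors (which would leave the coefficient undefined).

```python
def average_ranks(values):
    """Ranks starting at 1; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = mean_rank
        start = end + 1
    return ranks


def spearman(x, y):
    """Spearman's rho as the Pearson correlation of average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


def select_indicator(xd_scores, variants, threshold=0.75):
    """Return the indicator to use and the correlation of each variant with x_d."""
    rho = {name: spearman(xd_scores, scores) for name, scores in variants.items()}
    not_strong = {name: r for name, r in rho.items() if r <= threshold}
    if not not_strong:
        return "x_d", rho  # no variant changes the ranking substantially
    return min(not_strong, key=not_strong.get), rho


toy_xd = [9, 7, 6, 4, 2]  # toy values for five actors
choice, rho = select_indicator(toy_xd, {"x_d(FN)": [8, 7, 5, 6, 1], "x_d(f)": [9, 6, 6, 4, 3]})
print(choice)  # x_d (both toy variants correlate above 0.75)
```

Averaging tied ranks matters here because \(x_{d}\)-index values are small integers, so ties between institutions are common.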

Information about the core competent and non-core competent areas might help an actor to adopt strategies for diversity expansion by prioritizing thematic areas to nurture through investment, capacity building, and planning collaboration with suitable partners. Apart from this, our framework can be used by national-level policymakers to determine actors who are contributing more to the overall expertise diversity of the national scholarly ecosystem in the following way:

For a nation, an actor can be taken as a scholarly entity that represents a unit of knowledge production just like a thematic area is taken as a unit of knowledge production at the actor’s level. Then, just like strength in a thematic area of an actor helped find the expertise diversity of that actor, the ‘expertise diversity’ index (at the actor’s level) can be used to determine the expertise diversity of the scholarly ecosystem of the nation. This is a kind of nested expertise formation that offers room for the development of nested expertise indices. Here, we develop two nested expertise indices namely the nested \(x_{d}\)-index or \(xx_{d}\)-index and nested \(x\left( g \right)_{d}\)-index or \(xx\left( g \right)_{d}\)-index with the principles of h and g indices. These are defined as:

-

\({\varvec{xx}}_{{\varvec{d}}}\)-index: A nation has an \(xx_{d}\)-index value of \(xx_{d}\) if it has at least \(xx_{d}\) actors, each with an \(x_{d}\)-index of at least \(xx_{d}\) (i.e., at least \(xx_{d}\) expertise diversity areas). These \(xx_{d}\) actors can be considered the core competent-diverse actors, or actors with high expertise diversity. A high \(xx_{d}\)-index value indicates that the nation’s scholarly research portfolio is more diverse and richer.

-

\({\varvec{xx}}\left( {\varvec{g}} \right)_{{\varvec{d}}}\)-index: A nation is supposed to have an \(xx\left( g \right)_{d}\)-index value of \(xx\left( g \right)_{d}\) if it has at least \(xx\left( g \right)_{d}\) actors whose expertise diversity indices taken together average at least \(xx\left( g \right)_{d}\). Then, the \(xx\left( g \right)_{d} - xx_{d}\) actors that follow the top \(xx_{d}\) high expertise diversity actors can be considered as potential core competent-diverse actors of the country or actors with potential expertise diversity.
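Both nested indices can be computed from the list of actor-level \(x_{d}\)-index values with the usual h-index and g-index procedures, as sketched below. Requiring the top \(g\) values to average at least \(g\) is checked equivalently as a cumulative sum of at least \(g^{2}\). Capping \(xx(g)_{d}\) at the number of actors, and the function names, are assumptions for illustration.

```python
def xx_d_index(xd_values):
    """h-type: largest n such that at least n actors have an x_d-index >= n."""
    n = 0
    for rank, value in enumerate(sorted(xd_values, reverse=True), start=1):
        if value >= rank:
            n = rank
        else:
            break
    return n


def xx_g_d_index(xd_values):
    """g-type: largest g such that the top g actors' x_d-indices average >= g.

    Averaging at least g is checked as a cumulative sum of at least g * g;
    g is capped at the number of actors.
    """
    g, total = 0, 0
    for rank, value in enumerate(sorted(xd_values, reverse=True), start=1):
        total += value
        if total >= rank * rank:
            g = rank
    return g


toy = [10, 8, 5, 4, 3, 1]  # toy x_d-index values of six actors
print(xx_d_index(toy), xx_g_d_index(toy))  # 4 5
```

As with the h- and g-indices, the g-type value is never smaller than the h-type value, which is what leaves room for the intermediate group of potential core competent-diverse actors.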

Using these two indices, the list of actors (ordered according to their \(x_{d}\)-index) can be grouped into three classes:

-

Class 1 or Core competent-diverse: the top \(xx_{d}\) actors in the ordered list (each with \(x_{d}\)-index ≥ \(xx_{d}\))

-

Class 2 or Potential core competent-diverse: the next \(xx\left( g \right)_{d} - xx_{d}\) actors, i.e., those ranked from \(xx_{d} + 1\) to \(xx\left( g \right)_{d}\)

-

Class 3: the remaining actors, ranked below \(xx\left( g \right)_{d}\)
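The three-way classification can be sketched as below. The sketch assumes the classes are read as positions in the list of actors ordered by \(x_{d}\)-index, consistent with the count of \(xx\left( g \right)_{d} - xx_{d}\) potential core competent-diverse actors in the definition above; ties in \(x_{d}\)-index are broken alphabetically, which is an arbitrary choice. The function name and the toy values are hypothetical.

```python
def classify_actors(xd_by_actor, xx_d, xx_g_d):
    """Assign class 1, 2 or 3 by position in the list ordered by x_d-index.

    Positions 1..xx_d -> class 1 (core competent-diverse),
    xx_d+1..xx_g_d -> class 2 (potential core competent-diverse), rest -> class 3.
    Ties in x_d-index are broken alphabetically (an arbitrary choice).
    """
    ordered = sorted(xd_by_actor, key=lambda actor: (-xd_by_actor[actor], actor))
    classes = {}
    for position, actor in enumerate(ordered, start=1):
        if position <= xx_d:
            classes[actor] = 1
        elif position <= xx_g_d:
            classes[actor] = 2
        else:
            classes[actor] = 3
    return classes


toy = {"A": 10, "B": 8, "C": 5, "D": 4, "E": 3, "F": 1}  # toy x_d-index values
print(classify_actors(toy, xx_d=4, xx_g_d=5))
# {'A': 1, 'B': 1, 'C': 1, 'D': 1, 'E': 2, 'F': 3}
```

With \(xx_{d} = 74\) and \(xx\left( g \right)_{d} = 91\) for the 136 institutions reported in the Results, this position-based rule yields 74, 17 and 45 institutions in classes 1, 2 and 3.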

This classification helps national policymakers to formulate policies for strengthening the scholarly ecosystem (i) by nurturing potential core competent-diverse institutions for possible enhancement of their expertise diversity so that they can be converted into core competent-diverse institutions, (ii) by planning incentivization and systematic programs to encourage class 3 institutions to upgrade as class 2 and (iii) by encouraging class 1 institutions to further expand their expertise diversity.

Results

Demonstration of computation of \(x_{d}\)-index

To demonstrate the framework, we chose the Indian scholarly ecosystem, with institutions from India as actors. As WoS is one of the most credible and comprehensive scholarly databases, we collected publication data for 136 institutions from it.

To illustrate the \(x_{d}\)-index computation, we use Banaras Hindu University (BHU) as an example. The cleaned dataset contains 11,780 research papers published by BHU from 2011 to 2020. These papers were assigned to various categories, including 1084 to 'Materials Science, Multidisciplinary', 778 to 'Chemistry, Multidisciplinary' and 765 to 'Chemistry, Physical'. BHU’s research spanned 207 of the 254 WoS categories. We mapped these publications to their categories, creating an unweighted affiliation network (W–C network) whose nodes represent publications and categories. Next, we injected citation data, converting it into a citation-weighted network (W–C* network) that reflects the thematic strengths of BHU’s research based on citation counts. Using the W–C* network, we conducted a weighted indegree analysis to compute the thematic strengths across the 207 categories. We sorted these categories by citation count and calculated the \(x_{d}\)-index in h-index fashion. BHU’s \(x_{d}\)-index was found to be 140, indicating that BHU has published papers in 140 categories, each with at least 140 citations. This index highlights BHU’s core research strengths and diversity.

The University of Delhi had the highest \(x_{d}\)-index (156), reflecting a highly diverse and strong research portfolio, while the Inter University Accelerator Center had the lowest (36), indicating a more specialized focus. The \(x_{d}\)-index values of all 136 institutions are given in Appendix A.

Table 1 lists the \(x_{d}\)-index values of the top 10 institutions to illustrate the relationship between the \(x_{d}\)-index and the total publications of an institution. One may be tempted to assume that institutions with more publications have higher \(x_{d}\)-index values. This is true to some extent, but not always. As Table 1 shows, the Indian Institute of Science has the most publications (18,099) but not the highest \(x_{d}\)-index (132). The institutions with the highest \(x_{d}\)-index values are the University of Delhi (156) and Banaras Hindu University (140). Notably, the total publications of these two institutions are considerably lower than those of several other institutions (such as IIT Kharagpur, IIT Madras and IIT Bombay), which have lower \(x_{d}\)-index values. Thus, the \(x_{d}\)-index represents the research diversity of an institution, which does not depend on research output volume alone; rather, it depends more on the institution’s publishing activity across many thematic areas or categories.

To shed more light on this, we computed Spearman's correlation between the \(x_{d}\)-index and total publications, and between the \(x_{d}\)-index and the number of WoS categories associated with an institution (scatter plots of these variables are shown in Fig. 2, Appendix B). The Spearman correlation between the \(x_{d}\)-index and total publications is 0.706. This indicates that institutions with more publications are more likely to have a high \(x_{d}\)-index, i.e., competency in more thematic areas. However, institutions with more publications cannot always be expected to have competencies in more diverse areas, just as actors with more publications do not always have higher h-indices. We also computed the Spearman correlation between the h-index and total publications, which was 0.78, higher than the correlation between the \(x_{d}\)-index and total publications. Like the h-index, which is size-dependent and bounded by total publications, the \(x_{d}\)-index is size-dependent, but it is bounded by the total number of thematic areas (here, WoS categories) rather than by total publications. The Spearman correlation of 0.955 (a very strong relationship) between the \(x_{d}\)-index and the number of WoS categories strengthens the view that the \(x_{d}\)-index depends more on publishing activity across many thematic areas than on publication volume.

Comparison of \(x_{d}\)-index with its variants and determination of suitable indicator

Now, to check the effect of biases such as field bias and inflationary bias, we computed the \(x_{d}\)(FN) and \(x_{d} \left( f \right)\) indices of all 136 institutions. The Spearman rank correlation coefficient is 0.888 between \(x_{d}\) and \(x_{d}\)(FN), and 0.975 between \(x_{d}\) and \(x_{d} \left( f \right)\). Both values are above 0.75, indicating a strong correlation. Thus, in our dataset, full counting does not appear to have induced any significant field bias or inflationary bias, and the \(x_{d}\)-index can be used for further analysis.

Nested expertise indicator and national scholarly ecosystem

As mentioned in the methodology section, the concept of the \(x_{d}\)-index can be extended to the national level to determine the expertise diversity of the national scholarly ecosystem. Two indices, the \(xx_{d}\)-index and the \(xx\left( g \right)_{d}\)-index, were computed to be 74 and 91, respectively, enabling the classification of the set of institutions into three classes. The \(xx_{d}\) value indicates that the country has at least 74 institutions with \(x_{d}\)-index values of at least 74; these are the 74 core competent diverse institutions. There are 17 (91 − 74) potential core competent diverse institutions, and the remaining 45 institutions in the set are class 3 institutions. Further analysis based on this classification is provided in the Discussion section of the article.
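The two nested indices can be sketched as an h-type and a g-type threshold applied to the list of institutional \(x_{d}\) values. The sketch assumes that \(xx_{d}\) is the largest n such that n institutions have \(x_{d} \ge n\), and that \(xx\left( g \right)_{d}\) follows the g-index logic of Egghe (2006), i.e., the largest g such that the top g institutions have \(x_{d}\) values summing to at least \(g^{2}\), capped at the number of institutions. These are illustrative assumptions; the methodology section gives the authoritative definitions. If they match, applying the functions to the 136 \(x_{d}\) values in Appendix A should return 74 and 91.

```python
def nested_h(xd_values):
    """xx_d: largest n such that at least n institutions have x_d >= n."""
    ranked = sorted(xd_values, reverse=True)
    n = 0
    for position, value in enumerate(ranked, start=1):
        if value >= position:
            n = position
        else:
            break
    return n


def nested_g(xd_values):
    """xx(g)_d (assumed g-type): largest g <= len(xd_values) such that the
    top g x_d values sum to at least g**2."""
    ranked = sorted(xd_values, reverse=True)
    running_sum = 0
    g = 0
    for position, value in enumerate(ranked, start=1):
        running_sum += value
        if running_sum >= position ** 2:
            g = position
    return g


# Toy x_d values for five hypothetical institutions.
toy = [10, 8, 5, 4, 3]
print(nested_h(toy), nested_g(toy))  # 4 5
```

Because each of the top \(xx_{d}\) values is at least \(xx_{d}\), their sum is at least \(xx_{d}^{2}\), so under these definitions the g-type value can never fall below the h-type value; the difference between them is the size of the moderate class.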

The framework developed in this study is distinctive in that it can efficiently determine, from the scholarly publication list of an institution alone, how many different fields/categories the institution is working on. The proposed \(x_{d}\)-index indicates the expertise diversity of an institution and thus the richness of its research portfolio. We now analyze the proposed index further by correlating it with international rankings and with other indices, such as the h-index and g-index.

Comparison with popular ranking systems

An accurate comparative validation of the framework against existing ranking systems and frameworks that are not purely based on publication data is not possible. A further hindrance is the underrepresentation of Indian institutions in these rankings. Despite this, we attempt such a comparative validation using the institutions common to each pair of lists (‘\(x_{d}\)-based list vs. existing ranking-based list’), in the hope that it can still signal the novelty/non-obviousness of the proposed framework. The existing ranking-based lists are taken from the latest editions of the popular international overall rankings: QS, ARWU, THE and the CWTS Leiden Ranking (based on indicator ‘P’). For each system, the overall rank assigned to institutions is used, not the rank on any particular component. As already mentioned, Indian institutions are underrepresented in most of these rankings due to multiple factors, so less data are available. Hence, only a small number of the 136 institutions appear in these rankings and can serve as commonly listed institutions.

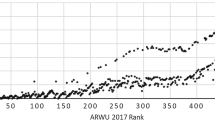

For the commonly listed institutions, relative ranks are used to compute the correlation. Suppose institutions A and B are listed in both the \(x_{d}\)-based ranking and the CWTS Leiden Ranking, with A ranked 7 and 40, and B ranked 9 and 38, in the \(x_{d}\)-based ranking and the CWTS Leiden Ranking, respectively. Since many institutions that appear in one ranking are absent from the other, the ranks are recomputed within the set of common institutions; suppose this gives A relative ranks of 3 and 4 and B relative ranks of 5 and 2 in the \(x_{d}\)-based ranking and the CWTS Leiden Ranking, respectively. The pairs of relative-ranked lists formed in this manner are used for the correlation analysis. The Spearman correlation coefficient \(\rho\) of \(x_{d}\) with each of these relative rankings is given in Table 2, along with the number of commonly listed institutions. The ARWU (Shanghai) ranking, the Times Higher Education ranking and the QS World University Rankings all have low correlations with the proposed \(x_{d}\)-based ranking, possibly because these rankings combine many factors in their overall scores. The CWTS Leiden Ranking shows a strong positive correlation, likely because it places more emphasis on publication-based factors than the others. The variants of the \(x_{d}\)-index show a similar pattern of correlation with the major ranking systems. These results show that the \(x_{d}\)-index framework can generate rankings that differ substantially from most prevalent ranking systems.
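The relative-ranking step can be sketched as follows: keep only the institutions present in both lists, re-rank them 1 to n within that common set, and apply the no-ties Spearman formula \(\rho = 1 - 6\sum_i d_i^{2} / \left( n\left( n^{2} - 1 \right) \right)\). Institutions A and B carry the ranks from the example in the text; C to G and their ranks are hypothetical, chosen so that the relative ranks of A and B match that example. The sketch assumes both source rankings are strict (no tied ranks).

```python
def relative_ranks(ranking, common):
    """Re-rank institutions in `common` by their order in `ranking` (1 = best)."""
    ordered = sorted(common, key=lambda inst: ranking[inst])
    return {inst: position for position, inst in enumerate(ordered, start=1)}


def spearman_strict(r1, r2):
    """Spearman's rho for two strict rankings over the same n >= 2 items."""
    n = len(r1)
    d_squared = sum((r1[k] - r2[k]) ** 2 for k in r1)
    return 1 - 6 * d_squared / (n * (n * n - 1))


# A and B follow the example in the text; the other entries are hypothetical.
xd_rank = {"C": 1, "F": 2, "D": 4, "A": 7, "E": 8, "B": 9}
leiden_rank = {"G": 5, "D": 12, "B": 38, "E": 39, "A": 40, "C": 55}

common = sorted(xd_rank.keys() & leiden_rank.keys())  # F and G are dropped
rel_xd = relative_ranks(xd_rank, common)
rel_leiden = relative_ranks(leiden_rank, common)

print(rel_xd["A"], rel_leiden["A"])  # 3 4
print(rel_xd["B"], rel_leiden["B"])  # 5 2
print(round(spearman_strict(rel_xd, rel_leiden), 2))  # -0.4
```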

Advantage of \(x_{d}\)-index over major h-type indicators

Since the \(x_{d}\)-index framework was designed on the h-index principle (Hirsch, 2005), the correlation between the two indices was also computed. The Spearman rank correlation between the \(x_{d}\)-index and the h-index is \(\rho\) = 0.5786, and that between the \(x_{d}\)-index and the g-index (Egghe, 2006) is \(\rho\) = 0.4207. These moderate-to-weak correlations suggest that the institutional h-index, if used to rank institutions by their output, might produce substantially different results, and that such an assessment would not help to determine the expertise diversity of institutions.

Adaptability and flexibility of the framework

It is useful to consider the possible range of values of the proposed \(x_{d}\)-index. In the present case, since the institutional data are obtained from the WoS database and the WoS subject classification scheme is used to compute expertise diversity, the values range from 0 to 254. There are 254 subject categories in WoS, so an institution would need a sufficient number of cited publications (\(\ge 254\)) in every one of these categories to reach an \(x_{d}\)-index value of 254. Conversely, the lowest possible value is 0, which occurs if an institution has no publications or none of its publications are mapped to any subject category. The range of \(x_{d}\)-index values will, however, differ if another subject classification scheme is used. For example, the Dimensions database uses a subject classification comprising 22 two-digit FoR fields from the ANZSRC 2020 Fields of Research (Footnote 1) and 171 subcategories. Therefore, if the Dimensions classification is used at the level of subcategories, the \(x_{d}\)-index values may range from 0 to 171. A national subject classification scheme could also be used to categorize the publication data of institutions; in that case, the range of \(x_{d}\)-index values will be determined by the number of subject categories it provides. A finer subject category mapping is also possible. Hence the proposed \(x_{d}\)-index framework can be adapted to different settings.
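The bounds discussed above follow from the h-type construction: an \(x_{d}\) value of x requires at least x categories, each with strength at least x, so \(x_{d}\) can never exceed the number of categories in the scheme. The sketch below assumes, purely for illustration, that an institution’s strength in a category is its h-index computed over the citation counts of its publications mapped to that category (consistent with, but not confirmed by, the requirement of at least 254 cited publications per category stated above). The category names are WoS-style labels and the citation counts are hypothetical.

```python
def h_type(values):
    """Largest h such that at least h of the values are >= h."""
    ranked = sorted(values, reverse=True)
    # In descending order, value >= position holds only for a prefix.
    return sum(1 for position, value in enumerate(ranked, start=1) if value >= position)


def xd_index(citations_by_category):
    """x_d sketch: h-type threshold over per-category strengths.

    ASSUMPTION: strength in a category = h-index of the institution's
    publications mapped to it (a publication may count in several categories).
    """
    strengths = [h_type(cites) for cites in citations_by_category.values()]
    return h_type(strengths)


# Hypothetical citation counts per subject category for one institution.
institution = {
    "Chemistry, Physical": [10, 9, 8, 3, 1],  # strength 3
    "Physics, Applied": [4, 4, 4, 4],         # strength 4
    "Ecology": [5, 2],                        # strength 2
    "Mathematics": [1, 0],                    # strength 1
}
xd = xd_index(institution)
print(xd)  # 2
assert 0 <= xd <= len(institution)  # bounded by the number of categories
```

With the 254 WoS categories the same bound gives the 0 to 254 range; with the 171 Dimensions subcategories it gives 0 to 171.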

Challenges in validation of ability to reflect diversity

The ability of the framework to capture diversity of expertise could, in principle, be validated by correlating the \(x_{d}\)-index with suitable existing diversity measures. However, measures such as Shannon entropy do not accommodate items that overlap across classes, whereas here a publication may be mapped to multiple subject categories; computing Shannon entropy (or similar measures) is therefore not appropriate in this case. As there are no other suitable diversity measures applicable at the institutional level, such a validation is not feasible in the present case.
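A small example makes the overlap problem concrete. Shannon entropy \(H = -\sum_i p_i \ln p_i\) needs shares \(p_i\) that sum to 1 over non-overlapping classes. When publications can carry several categories, shares computed per publication sum to more than 1, and renormalizing by the number of category assignments turns the distribution into one over assignments rather than over publications. The publications and category labels below are hypothetical.

```python
from collections import Counter

# Hypothetical publications, each mapped to one or more subject categories.
publications = [
    {"Ecology"},
    {"Ecology", "Environmental Sciences"},
    {"Mathematics"},
    {"Mathematics", "Computer Science, Theory & Methods"},
]

counts = Counter(cat for cats in publications for cat in cats)
n_publications = len(publications)    # 4 distinct publications
n_assignments = sum(counts.values())  # 6 category assignments

# Share of publications in each category: a publication counts in every category it carries.
shares = {cat: c / n_publications for cat, c in counts.items()}
print(n_publications, n_assignments, round(sum(shares.values()), 2))  # 4 6 1.5
```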

Discussion

Validation

The proposed \(x_{d}\)-index is intended to measure the expertise diversity of institutions. It is, however, relevant to examine whether it actually measures the research diversity of institutions. Since suitable benchmark diversity indicators for comparison appear to be lacking, we rely on an alternative: we select a few institutions and verify their diversity against details available in the public domain. As a high-diversity institution, we take the University of Delhi (\(x_{d}\)-index = 156), and as a low-diversity institution, the Indian Institute of Science Education and Research Bhopal (IISER Bhopal) (\(x_{d}\)-index = 59). The institutional webpages show that the University of Delhi (Footnote 2) is a multi-disciplinary university with academic departments in diverse areas, including science, arts, social science, commerce, law, engineering and medical science. This high research expertise diversity is reflected in its \(x_{d}\)-index value of 156. On the other hand, IISER Bhopal (Footnote 3), with about ten academic departments, mainly in the sciences and engineering sciences, has an \(x_{d}\)-index value of 59. Thus, institutions with diverse research expertise obtain higher \(x_{d}\)-index values than institutions with a specific disciplinary focus (such as engineering and medical institutions). The \(x_{d}\)-index value is also not determined by the publication volume of an institution alone. For example, IISc Bangalore, IIT Kharagpur, IIT Madras and IIT Bombay all have higher publication volumes than Banaras Hindu University (BHU), yet BHU has a higher \(x_{d}\)-index value than all of them.
Though the exercise we carried out (i.e., verifying the academic/research portfolio diversity of selected institutions with high \(x_{d}\) values and some with relatively low values) is minimal, we believe that if it were done in a more rigorous or exhaustive manner, sufficient affirmative evidence could be gathered. Nevertheless, the current exercise at least strengthens the conjecture that the \(x_{d}\)-index is a potential candidate for one of the true measures of expertise diversity of institutions (even if it is not ‘the’ true measure). Also, several relevant influencing factors should be considered in the decision-making process, along with the actions recommended in the following subsection for portfolio management, especially for enhancing the expertise diversity of institutions. In fact, whether diversity expansion is really needed should be decided in the first place by the concerned authorities at the institutional and national levels.

Applicability at different levels

As already discussed, the overall expertise index (\(x_{d}\)-index) computed using WoS categories indicates the number of core competency areas (at a coarse level) of an institution. The higher the value of the \(x_{d}\)-index, the higher the diversity of the institution. The h-index (in this case, the institutional h-index), which inspired the development of the \(x_{d}\)-index, neither helps to determine core competency at a coarse level (or at any level, for that matter) nor indicates the diversity of the research portfolio of an institution. Moreover, the correlation between h and \(x_{d}\) is only moderate. This argues against information redundancy and supports the novelty of the \(x_{d}\)-index. So, when the scholarly output of an institution is assessed with the objective of determining its overall scholarly productivity and research expertise diversity, the framework devised in this work, which uses a coarse-level thematic classification (WoS categories) and computes the \(x_{d}\)-index, is suitable. However, when fine-level thematic strength within a discipline is required, a framework that uses the x-index is recommended. In other words, the proposed framework is useful for studying an institutional research portfolio, whereas the x-index based framework should be used to understand the discipline-level research portfolio of an institution. The x-index based assessment will help to decide whether an existing department has to start a new academic course/research lab, train an existing faculty member/researcher in certain thematic areas, or pick a suitable faculty member/researcher/group to collaborate with peers working on the concerned thematic areas in other institutions.
The proposed \(x_{d}\)-index framework can be used to gain insights that will help to determine whether an institution needs to altogether start a new department (hire faculty members, start academic/research programs and various allied activities, etc.) to work towards the expansion of its scholarly diversity.

Moreover, the application of the \(x_{d}\)-index can be extended to computing national-level expertise and diversity in the form of nested (coarse level) expertise indices, such as the nested \(x_{d}\)-index or \(xx_{d}\)-index and the nested \(x\left( g \right)_{d}\)-index or \(xx\left( g \right)_{d}\)-index. As already mentioned, India’s \(xx_{d}\)-index is 74, which indicates that India has at least 74 institutions with at least 74 coarse level core competency areas each. These can be considered the core competent-diverse institutions of the country, or institutions with high expertise diversity. In addition, there are 17 institutions (\(xx\left( g \right)_{d} - xx_{d}\) = 91 − 74 = 17) that can be considered potential core competent-diverse institutions of the country, or institutions with potential expertise diversity. Thus, the nested expertise (diversity) indices at coarse level partition the dataset of 136 institutions into three categories: high (core competent-diverse), moderate (potential core competent-diverse) and low (the rest), consisting of 74, 17 and 45 institutions, respectively. This is a kind of classification of the scholarly (organizational) portfolio of the country, which may help national level policymakers to devise strategies for strengthening the diversity of the national scholarly ecosystem. This classification, together with the classification of each institution’s research portfolio into core competency areas, might help institutional level policymakers to devise strategies for enhancing research portfolio diversity. Some directions for action by national and institutional decision-makers, based on the analysis of the set of 136 institutions in India, are given below:

National level policymakers

National level policymakers are informed of the 74 most core competent and diverse institutions and the 17 potential core competent and diverse institutions in the national scholarly ecosystem, which encompasses the science and technology ecosystem. This information can be used to:

-

Develop policies for establishing new regional research collaborations among academic and research institutions (A2A), between academic and research institutions and government research institutions (A2G), and between academic and research institutions and industry (A2I), and even for establishing regional innovation clusters,

-

Develop policies to facilitate international collaboration (academic and industrial) to enhance the expertise of 74 core competent and diverse institutions as well as to enhance the expertise and expand the diversity of 17 potential core competent and diverse institutions.

Institutional level policymakers

Institutional level policymakers are informed of the overall classification obtained using the nested expertise and diversity indices and of the category/class to which their institution belongs. Institutions in different categories can use this information to form their own policies for research portfolio management (expansion of diversity, enhancement of expertise, or both), in addition to continually strengthening the thematic areas already in their core competency list through measures such as seeking international and industrial collaboration in these or other areas.

-

Institutions in the different categories of expertise diversity:

-

May primarily look for the improvement of diversity by enhancing some relatively strong areas other than core competency coarse level thematic areas by pairing with suitable institutions in the appropriate category having these areas as core competency areas,

-

May secondarily look for the improvement of diversity by enhancing some relatively strong areas other than core competency coarse level thematic areas by pairing with suitable institutions in the appropriate category having these areas as core competency areas,

-

May thirdly consider expanding diversity by also focusing on some weak areas, or even starting work in areas where they currently have little presence, with an eye to longer-term benefits, by pairing with suitable institutions in the appropriate category having these areas as core competency areas.


Usefulness for subject-focused institutions

This indicator can thus provide an expertise assessment based on the subject diversity of an institution. Although for most institutions this would be useful for increasing the overall diversity and strength of their research portfolio, it would also help subject-focused institutions, such as medical colleges and business schools, to analyze which categories they are lacking in within their own subject field. For example, the Indian Institute of Science (IISc) Bangalore could use the proposed method to identify any STEM fields in which it may be lacking, rather than focusing on subject categories in the Arts and Humanities. Even though the framework can assess the overall diversity of the research portfolio of an institution, institutions themselves can use the method to select the subject areas most similar to their current research portfolio.
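One way an institution might apply this is sketched below: compute \(x_{d}\) from per-category strengths, treat the categories whose strength reaches \(x_{d}\) as its coarse-level core competency areas, and list the categories in a chosen focus set (for example, STEM categories) that fall outside that core, strongest first. The helper name `focus_gaps`, the rule “strength \(\ge x_{d}\)” for core membership, and all strengths are illustrative assumptions, not definitions from this work.

```python
def h_type(values):
    """Largest h such that at least h of the values are >= h."""
    ranked = sorted(values, reverse=True)
    return sum(1 for position, value in enumerate(ranked, start=1) if value >= position)


def focus_gaps(strengths, focus):
    """Return x_d and the focus categories outside the assumed core set.

    strengths: category -> precomputed strength (hypothetical values here).
    focus: categories the institution wants to cover, e.g. STEM categories.
    """
    xd = h_type(strengths.values())
    core = {cat for cat, s in strengths.items() if s >= xd}  # ASSUMED core rule
    gaps = [cat for cat in focus if cat not in core]
    # Strongest non-core categories first (a heuristic ordering).
    gaps.sort(key=lambda cat: strengths.get(cat, 0), reverse=True)
    return xd, gaps


strengths = {
    "Physics, Applied": 40,
    "Chemistry, Physical": 35,
    "Materials Science, Multidisciplinary": 30,
    "Ecology": 5,
    "Mathematics": 2,
    "History": 1,
}
stem_focus = ["Physics, Applied", "Chemistry, Physical",
              "Mathematics", "Computer Science, Theory & Methods"]
print(focus_gaps(strengths, stem_focus))
# (4, ['Mathematics', 'Computer Science, Theory & Methods'])
```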

Limitation

The proposed framework has certain limitations arising from the choice of database, the choice of indicator used to reflect the strength of an institution, etc. The coverage and granularity of the subject classification scheme of the chosen database, and the accuracy with which papers are mapped to subject categories, may affect the results to some extent. In the case study used to demonstrate the framework, the results depend on the coverage of WoS and the subject classification available in WoS. The broad area ‘Life Sciences and Biomedicine’ contains more categories, while the broad areas ‘Technology’, ‘Physical Sciences’ and ‘Arts & Humanities’ contain fewer. Therefore, institutions working mainly in ‘Life Sciences and Biomedicine’ may have an undue advantage over institutions focusing on other broad areas. However, the extent of this advantage is not known, and more dedicated research is required in this direction. Another database (such as Dimensions) and classification scheme (such as ANZSRC) could also be used, and a comparative analysis of the variability of results would be worthwhile.

Potential for collaboration recommendations

A further possible application of this indicator is a recommendation system for collaborations between institutions. As mentioned before, we classified the set of institutions into three classes: Class 1 (High), Class 2 (Moderate) and Class 3 (Low). This classification was based on the previously computed \(xx_{d}\)-index and \(xx\left( g \right)_{d}\)-index values of 74 and 91. Institutions ranked 1 to 74 in non-increasing order of \(x_{d}\)-index were placed in Class 1, those ranked 75 to 91 in Class 2, and the rest in Class 3. The recommendations for diversity expansion would therefore vary from class to class, depending on each institution’s initial expertise diversity, i.e., its \(x_{d}\)-index. The recommendation framework would first identify two sets of institutions: (i) institutions with very high diversity, which need not be sent recommendations, and (ii) institutions with very low diversity, which cannot easily be recommended as collaborators for diversity expansion.
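The class assignment described above can be sketched as a simple partition of institutions sorted in non-increasing order of \(x_{d}\). Ties in \(x_{d}\) at a class boundary are resolved here by input order, which is an assumption since the text does not specify tie handling; the institution names and values in the toy example are hypothetical.

```python
def classify(xd_by_institution, xx_d, xx_g_d):
    """Assign each institution to a diversity class by its rank on x_d."""
    # sorted() is stable even with reverse=True, so ties keep their input order.
    ranked = sorted(xd_by_institution, key=xd_by_institution.get, reverse=True)
    classes = {}
    for position, inst in enumerate(ranked, start=1):
        if position <= xx_d:
            classes[inst] = "Class 1 (High)"
        elif position <= xx_g_d:
            classes[inst] = "Class 2 (Moderate)"
        else:
            classes[inst] = "Class 3 (Low)"
    return classes


def class_sizes(n_institutions, xx_d, xx_g_d):
    """Sizes of Class 1, Class 2 and Class 3."""
    return xx_d, xx_g_d - xx_d, n_institutions - xx_g_d


toy = {"P": 9, "Q": 7, "R": 5, "S": 3, "T": 2}
print(classify(toy, xx_d=2, xx_g_d=3))
print(class_sizes(136, 74, 91))  # (74, 17, 45)
```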

Conclusion

This work presents a newly devised indicator, the \(x_{d}\)-index, which indicates the overall scholarly expertise diversity in the research portfolio of an institution. Unlike the h-index, which motivated its design, its novelty lies in its ability to determine diversity. Also, unlike the x-index based framework of Lathabai et al. (2021a, 2021b), the proposed framework based on the \(x_{d}\)-index (which can be treated as a kind of upward integration serving the purpose of determining the overall scholarly expertise of institutions) is much simpler, as it does not require an NLP module. Thus, the proposed index provides a novel framework for assessing the expertise diversity of institutions. To accommodate the effect of biases such as field bias and inflationary bias, the framework provides for computing variants of the \(x_{d}\)-index and includes a correlation-based module to determine the suitable indicator for research portfolio management of institutions and scholarly ecosystem management of a nation/region. In the demonstration of the framework on 136 institutions from India, the \(x_{d}\)-index is found to be the most appropriate of the three indices considered. The correlation studies show that the proposed indicator differs from traditional indices such as h and g. Further, analysis of the \(x_{d}\)-index values for the given dataset shows that it can be one of the potential candidates for a true measure of expertise diversity of institutions. The \(x_{d}\)-index values of a set of institutions in a country can be used to categorize them into different classes of expertise diversity, using nested (coarse level) expertise indices such as the \(xx_{d}\) and \(xx\left( g \right)_{d}\) indices, as explained earlier.
A nation’s scholarly portfolio can be classified into classes such as high expertise diversity class (or high) accommodating top \(xx_{d}\) institutions, potential expertise diversity class (or moderate) that consists of institutions ranking in the range [\(xx_{d}\) + 1, \(xx\left( g \right)_{d}\)] and the rest can be treated as low expertise diversity category. This classification may help the institutions to explore possibilities to forge effective policies for research portfolio management of the institution. This classification may aid national level policymakers to manage the scholarly portfolio in an effective way to strengthen the scholarly ecosystem that includes the Science and Technology ecosystem of the nation.

Because the indicator uses the Web of Science (WoS) database, it is limited by the WoS subject category scheme. Given WoS’s emphasis on STEM fields, it often overlooks research in the Arts and Humanities, particularly work not published in the venues it indexes. Additionally, the dataset contains data only from Indian institutions, and the framework is demonstrated on this dataset only. These limitations affect the indicator, and we plan to address them more comprehensively in future research; databases like Dimensions and classification schemes like ANZSRC will be useful for such studies. Similarly, the choice of indicator (say, the altmetric attention score instead of citations) might produce slightly different results (in terms of rankings). However, using the altmetric attention score might change the nature of \(x_{d}\), in which case it can be denoted \(x_{d\left( a \right)}\), where ‘a’ stands for ‘attention’. While \(x_{d}\) helps to estimate expertise diversity, \(x_{d\left( a \right)}\) may help to quantify attention diversity and to identify thematic areas that have received a high level of attention. Thus, the framework offers room for a series of further worthwhile endeavours.

Data availability

The datasets generated during and/or analyzed during the current study will be made available on reasonable request.

References

Anowar, F., Helal, M. A., Afroj, S., Sultana, S., Sarker, F., & Mamun, K. A. (2015). A critical review on World University ranking in terms of top four ranking systems. Lecture Notes in Electrical Engineering, 312, 559–566.

Billaut, J. C., Bouyssou, D., & Vincke, P. (2010). Should you believe in the Shanghai ranking? Scientometrics, 84(1), 237–263.

Bowman, N. A., & Bastedo, M. N. (2013). Anchoring effects in world university rankings: Exploring biases in reputation scores. In Higher Education in the Global Age (pp. 271–287). Routledge.

de Boer, H. F., Jongbloed, B. W., Benneworth, P. S., Cremonini, L., Kolster, R., Kottmann, A., ... & Vossensteyn, J. J. (2015). Performance-based funding and performance agreements in fourteen higher education systems.

Egghe, L. (2006). An improvement of the h-index: The g-index. ISSI Newsletter, 2(1), 8–9.

George, S., Lathabai, H. H., Prabhakaran, T., & Changat, M. (2020). A framework towards bias-free contextual productivity assessment. Scientometrics, 122, 127–157.

Glänzel, W., & Debackere, K. (2022). Various aspects of interdisciplinarity in research and how to quantify and measure those. Scientometrics, 127(9), 5551–5569.

Glänzel, W., & Moed, H. F. (2013). Opinion paper: Thoughts and facts on bibliometric indicators. Scientometrics, 96, 381–394.

Hagen, N. T. (2008). Harmonic allocation of authorship credit: Source-level correction of bibliometric bias assures accurate publication and citation analysis. PLoS ONE, 3(12), e4021.

Haunschild, R., Daniels, A. D., & Bornmann, L. (2022). Scores of a specific field-normalized indicator calculated with different approaches of field-categorization: Are the scores different or similar? Journal of Informetrics, 16(1), 101241.

Hirsch, J. E. (2005). An index to quantify an individual’s scientific research output. Proceedings of the National Academy of Sciences of the United States of America, 102(46), 16569–16572.

Jeremic, V., Bulajic, M., Martic, M., & Radojicic, Z. (2011). A fresh approach to evaluating the academic ranking of world universities. Scientometrics, 87(3), 587–596.

Katz, J. S. (2000). Scale-independent indicators and research evaluation. Science and Public Policy, 27(1), 23–36.

Lathabai, H. H., Prabhakaran, T., & Changat, M. (2017). Contextual productivity assessment of authors and journals: A network scientometric approach. Scientometrics, 110(2), 711–737.

Lathabai, H. H., Nandy, A., & Singh, V. K. (2021a). Expertise-based institutional collaboration recommendation in different thematic areas. CEUR Workshop Proceedings (Vol. 2847).

Lathabai, H. H., Nandy, A., & Singh, V. K. (2021b). x-index: Identifying core competency and thematic research strengths of institutions using an NLP and network based ranking framework. Scientometrics, 126(12), 9557–9583.

Lathabai, H. H., Nandy, A., & Singh, V. K. (2022). Institutional collaboration recommendation: An expertise-based framework using NLP and network analysis. Expert Systems with Applications, 209, 118317.

Leydesdorff, L., & Bornmann, L. (2011). How fractional counting of citations affects the impact factor: Normalization in terms of differences in citation potentials among fields of science. Journal of the American Society for Information Science and Technology, 62(2), 217–229.

Leydesdorff, L., Wagner, C. S., & Bornmann, L. (2018). Betweenness and diversity in journal citation networks as measures of interdisciplinarity—A tribute to Eugene Garfield. Scientometrics, 114, 567–592.

Leydesdorff, L., Wagner, C. S., & Bornmann, L. (2019). Interdisciplinarity as diversity in citation patterns among journals: Rao-Stirling diversity, relative variety, and the Gini coefficient. Journal of Informetrics, 13(1), 255–269.

Lindsey, D. (1980). Production and citation measures in the sociology of science: The problem of multiple authorship. Social Studies of Science, 10(2), 145–162.

Lundberg, J. (2007). Lifting the crown—citation z-score. Journal of Informetrics, 1(2), 145–154.

Maslen, G. (2019). New performance-based funding system for universities. University World News. Retrieved January 10, 2022, from https://www.universityworldnews.com/post.php?story=20190822085127986

Nandy, A., Lathabai, H. H., & Singh, V. K. (2023). \(x_{d}\)-index: An overall scholarly expertise index for the research portfolio management of institutions. Paper presented at the 19th International Conference on Scientometrics and Informetrics (ISSI 2023), July 2023, Bloomington, Indiana, USA.

Prathap, G. (2010). Is there a place for a mock h-index? Scientometrics, 84(1), 153–165.

Prathap, G. (2020). Journal indicators from a dimensionality perspective. Scientometrics, 122(2), 1259–1265.

Price, D. D. S. (1981). Multiple authorship. Science, 212(4498), 986–986.

Rafols, I., & Meyer, M. (2010). Diversity and network coherence as indicators of interdisciplinarity: Case studies in bionanoscience. Scientometrics, 82(2), 263–287.

Rehn, C., Kronman, U., & Wadskog, D. (2007). Bibliometric indicators—definitions and usage at Karolinska Institutet. Karolinska Institutet, 13, 2012.

Shanghai Ranking’s Academic Ranking of World Universities methodology. (2021). Retrieved January 10, 2021, from https://www.shanghairanking.com/methodology/arwu/2021

Sivertsen, G. (2016). Publication-based funding: The Norwegian model. In Research assessment in the humanities (pp. 79–90). Springer, Cham.

Sörlin, S. (2007). Funding diversity: Performance-based funding regimes as drivers of differentiation in higher education systems. Higher Education Policy, 20(4), 413–440.

Szluka, P., Csajbók, E., & Győrffy, B. (2023). Relationship between bibliometric indicators and university ranking positions. Scientific Reports, 13(1), 14193.

Tijssen, R. J., & Winnink, J. J. (2018). Capturing ‘R&D excellence’: Indicators, international statistics, and innovative universities. Scientometrics, 114, 687–699.

Wagner, C. S., Roessner, J. D., Bobb, K., Klein, J. T., Boyack, K. W., Keyton, J., Ismael, R., & Börner, K. (2011). Approaches to understanding and measuring interdisciplinary scientific research (IDR): A review of the literature. Journal of Informetrics, 5(1), 14–26.

Waltman, L. (2016). A review of the literature on citation impact indicators. Journal of Informetrics, 10(2), 365–391.

Waltman, L., van Eck, N. J., van Leeuwen, T. N., Visser, M. S., & van Raan, A. F. (2011). Towards a new crown indicator: Some theoretical considerations. Journal of Informetrics, 5(1), 37–47.

Waltman, L., van Eck, N. J., Visser, M., & Wouters, P. (2016). The elephant in the room: The problem of quantifying productivity in evaluative scientometrics. Journal of Informetrics, 10(2), 671–674.

Waltman, L., & van Eck, N. J. (2019). Field normalization of scientometric indicators. In Springer handbook of science and technology indicators (pp. 281–300). Springer.

Funding

This work is partly supported by extramural research Grant No.: MTR/2020/000625 from Science and Engineering Research Board (SERB), India, and by HPE Aruba Centre for Research in Information Systems at BHU (No.: M-22-69 of BHU), to the third author.

Ethics declarations

Conflict of interests

The authors declare that the manuscript complies with the ethical standards of the journal and there is no conflict of interests whatsoever.

Additional information

Previous publication: This work is an extended version of the paper presented at the 19th International Conference on Scientometrics and Informetrics, held in Bloomington, USA, during 2–5 July 2023 (see Nandy, Lathabai, & Singh, 2023).

Appendices

Appendix A

| S. no | Institution | Total publications | \(x_{d}\)-index | \(x_{d}\)(FN)-index | \(x_{d} \left( f \right)\)-index |
|---|---|---|---|---|---|
| 1 | ACADEMY OF SCIENTIFIC INNOVATIVE RESEARCH ACSIR | 9748 | 98 | 49 | 77 |
| 2 | ALAGAPPA UNIVERSITY | 2244 | 80 | 37 | 71 |
| 3 | ALIGARH MUSLIM UNIVERSITY | 6283 | 118 | 51 | 102 |
| 4 | ALL INDIA INSTITUTE OF MEDICAL SCIENCES AIIMS NEW DELHI | 8320 | 103 | 50 | 85 |
| 5 | AMITY UNIVERSITY NOIDA | 2279 | 100 | 32 | 73 |
| 6 | AMRITA VISHWA VIDYAPEETHAM | 2602 | 97 | 40 | 75 |
| 7 | ANDHRA UNIVERSITY | 1905 | 78 | 24 | 60 |
| 8 | ANNA UNIVERSITY | 8874 | 109 | 62 | 97 |
| 9 | ANNAMALAI UNIVERSITY | 3746 | 94 | 44 | 86 |
| 10 | BANARAS HINDU UNIVERSITY BHU | 11,139 | 139 | 74 | 124 |
| 11 | BHARATHIAR UNIVERSITY | 4068 | 96 | 47 | 85 |
| 12 | BHARATHIDASAN UNIVERSITY | 3000 | 87 | 40 | 73 |
| 13 | BIRLA INSTITUTE OF TECHNOLOGY MESRA | 2101 | 83 | 32 | 73 |
| 14 | BIRLA INSTITUTE OF TECHNOLOGY SCIENCE PILANI BITS PILANI | 4348 | 109 | 49 | 93 |
| 15 | BOSE INSTITUTE | 1933 | 67 | 28 | 55 |
| 16 | CHRISTIAN MEDICAL COLLEGE HOSPITAL CMCH VELLORE | 2514 | 68 | 32 | 52 |
| 17 | COCHIN UNIVERSITY SCIENCE TECHNOLOGY | 2182 | 85 | 29 | 70 |
| 18 | CSIR CENTRAL DRUG RESEARCH INSTITUTE CDRI | 3030 | 69 | 31 | 58 |
| 19 | CSIR CENTRAL ELECTROCHEMICAL RESEARCH INSTITUTE CECRI | 2203 | 51 | 26 | 45 |
| 20 | CSIR CENTRAL FOOD TECHNOLOGICAL RESEARCH INSTITUTE CFTRI | 1881 | 52 | 23 | 45 |
| 21 | CSIR CENTRAL GLASS CERAMIC RESEARCH INSTITUTE CGCRI | 1607 | 50 | 23 | 45 |
| 22 | CSIR CENTRAL LEATHER RESEARCH INSTITUTE CLRI | 1926 | 64 | 28 | 53 |
| 23 | CSIR CENTRAL SALT MARINE CHEMICAL RESEARCH INSTITUTE CSMCRI | 1971 | 55 | 28 | 50 |
| 24 | CSIR CENTRE FOR CELLULAR MOLECULAR BIOLOGY CCMB | 977 | 56 | 21 | 48 |
| 25 | CSIR INDIAN INSTITUTE OF CHEMICAL BIOLOGY IICB | 1933 | 70 | 29 | 59 |
| 26 | CSIR INDIAN INSTITUTE OF CHEMICAL TECHNOLOGY IICT | 6028 | 72 | 35 | 61 |
| 27 | CSIR INSTITUTE OF GENOMICS INTEGRATIVE BIOLOGY IGIB | 1380 | 68 | 26 | 49 |
| 28 | CSIR NATIONAL CHEMICAL LABORATORY NCL | 4868 | 69 | 32 | 60 |
| 29 | CSIR NATIONAL INSTITUTE INTERDISCIPLINARY SCIENCE TECHNOLOGY NIIST | 2004 | 59 | 28 | 54 |
| 30 | CSIR NATIONAL INSTITUTE OF OCEANOGRAPHY NIO | 1924 | 54 | 23 | 43 |
| 31 | CSIR NATIONAL PHYSICAL LABORATORY NPL | 3251 | 63 | 31 | 51 |
| 32 | DELHI TECHNOLOGICAL UNIVERSITY | 1843 | 90 | 34 | 73 |
| 33 | DR B R AMBEDKAR NATIONAL INSTITUTE OF TECHNOLOGY JALANDHAR | 1539 | 70 | 30 | 63 |
| 34 | GAUHATI UNIVERSITY | 1634 | 76 | 24 | 58 |
| 35 | GOVT MED COLL | 1008 | 57 | 20 | 39 |
| 36 | GURU NANAK DEV UNIVERSITY | 3245 | 89 | 35 | 77 |
| 37 | ICAR INDIAN AGRICULTURAL RESEARCH INSTITUTE | 4428 | 65 | 31 | 50 |
| 38 | ICAR INDIAN VETERINARY RESEARCH INSTITUTE | 2533 | 54 | 25 | 41 |
| 39 | ICAR NATIONAL DAIRY RESEARCH INSTITUTE | 2054 | 45 | 21 | 37 |
| 40 | INDIAN ASSOCIATION FOR THE CULTIVATION OF SCIENCE IACS JADAVPUR | 4413 | 53 | 28 | 49 |
| 41 | INDIAN INSTITUTE OF ENGINEERING SCIENCE TECHNOLOGY SHIBPUR IIEST | 3109 | 82 | 39 | 73 |
| 42 | INDIAN INSTITUTE OF SCIENCE EDUCATION RESEARCH IISER BHOPAL | 1718 | 59 | 23 | 44 |
| 43 | INDIAN INSTITUTE OF SCIENCE EDUCATION RESEARCH IISER KOLKATA | 2801 | 79 | 33 | 68 |
| 44 | INDIAN INSTITUTE OF SCIENCE EDUCATION RESEARCH IISER MOHALI | 1643 | 60 | 27 | 47 |
| 45 | INDIAN INSTITUTE OF SCIENCE IISC BANGALORE | 17,021 | 131 | 77 | 119 |
| 46 | INDIAN INSTITUTE OF TECHNOLOGY IIT BHU VARANASI | 4823 | 97 | 48 | 86 |
| 47 | INDIAN INSTITUTE OF TECHNOLOGY IIT BOMBAY | 12,815 | 121 | 67 | 110 |
| 48 | INDIAN INSTITUTE OF TECHNOLOGY IIT DELHI | 12,051 | 127 | 65 | 115 |
| 49 | INDIAN INSTITUTE OF TECHNOLOGY IIT GANDHINAGAR | 1545 | 72 | 23 | 62 |
| 50 | INDIAN INSTITUTE OF TECHNOLOGY IIT GUWAHATI | 8061 | 114 | 59 | 106 |
| 51 | INDIAN INSTITUTE OF TECHNOLOGY IIT HYDERABAD | 2990 | 87 | 37 | 73 |
| 52 | INDIAN INSTITUTE OF TECHNOLOGY IIT INDORE | 2959 | 83 | 38 | 75 |
| 53 | INDIAN INSTITUTE OF TECHNOLOGY IIT KANPUR | 9191 | 115 | 60 | 99 |
| 54 | INDIAN INSTITUTE OF TECHNOLOGY IIT KHARAGPUR | 14,637 | 136 | 78 | 124 |
| 55 | INDIAN INSTITUTE OF TECHNOLOGY IIT MADRAS | 12,944 | 126 | 70 | 110 |
| 56 | INDIAN INSTITUTE OF TECHNOLOGY IIT PATNA | 1681 | 70 | 29 | 68 |
| 57 | INDIAN INSTITUTE OF TECHNOLOGY IIT ROORKEE | 9916 | 123 | 66 | 112 |
| 58 | INDIAN INSTITUTE OF TECHNOLOGY IIT ROPAR | 1615 | 74 | 30 | 61 |
| 59 | INDIAN INSTITUTE OF TECHNOLOGY INDIAN SCHOOL OF MINES DHANBAD | 5547 | 99 | 49 | 91 |
| 60 | INDIAN SPACE RESEARCH ORGANISATION ISRO | 3596 | 72 | 30 | 63 |
| 61 | INDIAN STATISTICAL INSTITUTE | 3275 | 93 | 34 | 75 |
| 62 | INDIRA GANDHI CENTRE FOR ATOMIC RESEARCH IGCAR | 3521 | 70 | 29 | 58 |
| 63 | INSTITUTE OF CHEMICAL TECHNOLOGY MUMBAI | 3668 | 66 | 32 | 58 |
| 64 | INTER UNIVERSITY ACCELERATOR CENTRE | 1602 | 36 | 19 | 27 |
| 65 | JADAVPUR UNIVERSITY | 8823 | 115 | 64 | 100 |
| 66 | JAMIA HAMDARD UNIVERSITY | 2785 | 78 | 31 | 62 |
| 67 | JAMIA MILLIA ISLAMIA | 3957 | 110 | 47 | 89 |
| 68 | JAWAHARLAL INSTITUTE OF POSTGRADUATE MEDICAL EDUCATION RESEARCH | 1265 | 51 | 20 | 41 |
| 69 | JAWAHARLAL NEHRU CENTER FOR ADVANCED SCIENTIFIC RESEARCH JNCASR | 2926 | 66 | 34 | 57 |
| 70 | JAWAHARLAL NEHRU TECHNOLOGICAL UNIVERSITY HYDERABAD | 1592 | 71 | 23 | 55 |
| 71 | JAWAHARLAL NEHRU UNIVERSITY NEW DELHI | 4524 | 103 | 48 | 91 |
| 72 | KALINGA INSTITUTE OF INDUSTRIAL TECHNOLOGY KIIT | 958 | 85 | 31 | 69 |
| 73 | KALYANI UNIVERSITY | 2023 | 79 | 28 | 66 |
| 74 | KASTURBA MEDICAL COLLEGE MANIPAL | 1272 | 70 | 24 | 50 |
| 75 | KURUKSHETRA UNIVERSITY | 1583 | 76 | 26 | 62 |
| 76 | L V PRASAD EYE INSTITUTE | 1408 | 39 | 12 | 26 |
| 77 | LOVELY PROFESSIONAL UNIVERSITY | 1558 | 78 | 30 | 62 |
| 78 | LUCKNOW UNIVERSITY | 1882 | 80 | 28 | 68 |
| 79 | MADURAI KAMARAJ UNIVERSITY | 2189 | 73 | 29 | 59 |
| 80 | MAHARAJA SAYAJIRAO UNIVERSITY BARODA | 2088 | 87 | 31 | 69 |
| 81 | MAHARSHI DAYANAND UNIVERSITY | 1535 | 72 | 26 | 63 |
| 82 | MAHATMA GANDHI UNIVERSITY KERALA | 1506 | 66 | 26 | 56 |
| 83 | MALAVIYA NATIONAL INSTITUTE OF TECHNOLOGY JAIPUR | 2023 | 79 | 34 | 65 |
| 84 | MANIPAL ACADEMY OF HIGHER EDUCATION MAHE | 5492 | 124 | 50 | 99 |
| 85 | MAULANA AZAD MEDICAL COLLEGE | 1050 | 44 | 20 | 38 |
| 86 | MOTILAL NEHRU NATIONAL INSTITUTE OF TECHNOLOGY | 1817 | 74 | 35 | 68 |
| 87 | NATIONAL CENTRE FOR BIOLOGICAL SCIENCES NCBS | 1474 | 64 | 24 | 41 |
| 88 | NATIONAL INSTITUTE OF MENTAL HEALTH NEUROSCIENCES INDIA | 2323 | 60 | 25 | 43 |
| 89 | NATIONAL INSTITUTE OF PHARMACEUTICAL EDUCATION RESEARCH S A S NAGAR MOHALI | 1413 | 57 | 26 | 50 |
| 90 | NATIONAL INSTITUTE OF TECHNOLOGY CALICUT | 1720 | 78 | 28 | 71 |
| 91 | NATIONAL INSTITUTE OF TECHNOLOGY DURGAPUR | 2210 | 79 | 35 | 69 |
| 92 | NATIONAL INSTITUTE OF TECHNOLOGY KARNATAKA | 2614 | 82 | 32 | 77 |
| 93 | NATIONAL INSTITUTE OF TECHNOLOGY KURUKSHETRA | 1532 | 71 | 28 | 59 |
| 94 | NATIONAL INSTITUTE OF TECHNOLOGY ROURKELA | 4617 | 107 | 51 | 96 |
| 95 | NATIONAL INSTITUTE OF TECHNOLOGY SILCHAR | 1515 | 66 | 27 | 59 |
| 96 | NATIONAL INSTITUTE OF TECHNOLOGY TIRUCHIRAPPALLI | 3892 | 81 | 44 | 71 |
| 97 | NATIONAL INSTITUTE OF TECHNOLOGY WARANGAL | 2055 | 68 | 30 | 64 |
| 98 | PHYSICAL RESEARCH LABORATORY INDIA | 2043 | 42 | 18 | 33 |
| 99 | PONDICHERRY UNIVERSITY | 2998 | 95 | 38 | 77 |
| 100 | POST GRADUATE INSTITUTE OF MEDICAL EDUCATION RESEARCH PGIMER CHANDIGARH | 5911 | 85 | 41 | 70 |
| 101 | PUNJAB AGRICULTURAL UNIVERSITY | 2134 | 56 | 22 | 44 |
| 102 | PUNJABI UNIVERSITY | 2057 | 96 | 32 | 78 |
| 103 | RAJA RAMANNA CENTRE FOR ADVANCED TECHNOLOGY | 1634 | 46 | 19 | 42 |
| 104 | RASHTRASANT TUKADOJI MAHARAJ NAGPUR UNIVERSITY | 1443 | 66 | 24 | 51 |
| 105 | SANJAY GANDHI POSTGRADUATE INSTITUTE OF MEDICAL SCIENCES | 2148 | 65 | 31 | 52 |
| 106 | SARDAR VALLABHBHAI NATIONAL INSTITUTE OF TECHNOLOGY | 1977 | 78 | 37 | 74 |
| 107 | SATHYABAMA INSTITUTE OF SCIENCE TECHNOLOGY | 1315 | 72 | 24 | 62 |
| 108 | SAVITRIBAI PHULE PUNE UNIVERSITY | 3993 | 98 | 42 | 82 |
| 109 | SETH GORDHANDAS SUNDERDAS MEDICAL COLLEGE KING EDWARD MEMORIAL HOSPITAL | 938 | 51 | 19 | 35 |
| 110 | SHANMUGHA ARTS SCIENCE TECHNOLOGY RESEARCH ACADEMY SASTRA | 3116 | 92 | 45 | 82 |
| 111 | SHIVAJI UNIVERSITY | 2332 | 70 | 31 | 56 |
| 112 | SIKSHA O ANUSANDHAN UNIVERSITY | 1855 | 78 | 29 | 67 |
| 113 | SN BOSE NATIONAL CENTRE FOR BASIC SCIENCE SNBNCBS | 1816 | 49 | 20 | 40 |
| 114 | SREE CHITRA TIRUNAL INSTITUTE FOR MEDICAL SCIENCES TECHNOLOGY SCTIMST | 1253 | 64 | 28 | 51 |
| 115 | SRI VENKATESWARA UNIVERSITY | 2276 | 74 | 31 | 59 |
| 116 | SRM INSTITUTE OF SCIENCE TECHNOLOGY CHENNAI | 4329 | 105 | 44 | 90 |
| 117 | SSN COLLEGE OF ENGINEERING | 1419 | 58 | 23 | 50 |
| 118 | ST JOHN S NATIONAL ACADEMY OF HEALTH SCIENCES | 1140 | 60 | 21 | 40 |
| 119 | TATA MEMORIAL CENTRE TMC | 2223 | 63 | 28 | 47 |
| 120 | TATA MEMORIAL HOSPITAL | 1894 | 59 | 27 | 46 |
| 121 | TEZPUR UNIVERSITY | 2618 | 89 | 38 | 82 |
| 122 | THAPAR INSTITUTE OF ENGINEERING TECHNOLOGY | 4650 | 98 | 48 | 88 |
| 123 | UGC DAE CONSORTIUM FOR SCIENTIFIC RESEARCH | 2137 | 43 | 21 | 34 |
| 124 | UNIVERSITY COLLEGE OF MEDICAL SCIENCES | 924 | 53 | 20 | 41 |
| 125 | UNIVERSITY OF ALLAHABAD | 2209 | 93 | 31 | 76 |
| 126 | UNIVERSITY OF BURDWAN | 2025 | 83 | 32 | 74 |
| 127 | UNIVERSITY OF CALCUTTA | 6958 | 122 | 53 | 103 |
| 128 | UNIVERSITY OF DELHI | 11,906 | 153 | 72 | 134 |
| 129 | UNIVERSITY OF HYDERABAD | 5081 | 100 | 42 | 82 |
| 130 | UNIVERSITY OF JAMMU | 1502 | 65 | 21 | 56 |
| 131 | UNIVERSITY OF KASHMIR | 1726 | 89 | 30 | 72 |
| 132 | UNIVERSITY OF MADRAS | 3124 | 89 | 38 | 75 |
| 133 | UNIVERSITY OF MYSORE | 2060 | 74 | 27 | 58 |
| 134 | UNIVERSITY OF RAJASTHAN | 1566 | 75 | 25 | 60 |
| 135 | VELLORE INSTITUTE OF TECHNOLOGY | 7529 | 118 | 62 | 104 |
| 136 | VISVESVARAYA NATIONAL INSTITUTE OF TECHNOLOGY NAGPUR | 1975 | 78 | 29 | 67 |
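
The abstract says the most suitable variant is chosen by rank correlation analysis between \(x_{d}\) and its variants. The sketch below illustrates that step under several assumptions. It uses Spearman's rank correlation, with tied values receiving average ranks, as the correlation measure. It covers only the first ten rows of Appendix A. The helper names (`average_ranks`, `pearson`, `spearman_rho`, `APPENDIX_A_SAMPLE`) are hypothetical. This is not the authors' code, and it does not reproduce their analysis of all 136 institutions.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical subset: the first ten rows of Appendix A,
# institution -> (x_d, x_d(FN), x_d(f)). Illustration only.
APPENDIX_A_SAMPLE: Dict[str, Tuple[int, int, int]] = {
    "ACADEMY OF SCIENTIFIC INNOVATIVE RESEARCH ACSIR": (98, 49, 77),
    "ALAGAPPA UNIVERSITY": (80, 37, 71),
    "ALIGARH MUSLIM UNIVERSITY": (118, 51, 102),
    "ALL INDIA INSTITUTE OF MEDICAL SCIENCES AIIMS NEW DELHI": (103, 50, 85),
    "AMITY UNIVERSITY NOIDA": (100, 32, 73),
    "AMRITA VISHWA VIDYAPEETHAM": (97, 40, 75),
    "ANDHRA UNIVERSITY": (78, 24, 60),
    "ANNA UNIVERSITY": (109, 62, 97),
    "ANNAMALAI UNIVERSITY": (94, 44, 86),
    "BANARAS HINDU UNIVERSITY BHU": (139, 74, 124),
}


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values ascending (1-based); tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # i..j are 0-based sorted positions
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (vx * vy) ** 0.5


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho, computed as the Pearson correlation of average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    return pearson(average_ranks(x), average_ranks(y))


if __name__ == "__main__":
    rows = list(APPENDIX_A_SAMPLE.values())
    x_d = [r[0] for r in rows]
    x_d_fn = [r[1] for r in rows]
    x_d_f = [r[2] for r in rows]
    print(f"rho(x_d, x_d(FN)) = {spearman_rho(x_d, x_d_fn):.3f}")
    print(f"rho(x_d, x_d(f))  = {spearman_rho(x_d, x_d_f):.3f}")
```

Using average ranks lets the same function handle institutions with tied index values, which occur in the full table (for example, several institutions share an \(x_{d}\)-index of 78). When there are no ties, the result equals the textbook formula \(1 - 6\sum d_i^2 / (n(n^2 - 1))\).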

Appendix B

See Fig. 2.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Nandy, A., Lathabai, H.H. & Singh, V.K. \(x_{d}\)-index and its variants: a set of overall scholarly expertise diversity indices for the research portfolio management of institutions. Scientometrics (2024). https://doi.org/10.1007/s11192-024-05131-y
