Abstract

The ‘quality’, ‘prestige’, and ‘impact’ of a scholarly journal are largely determined by the number of citations its articles receive. Publication and citation metrics play an increasingly central (but contested) role in academia, often influencing who gets hired, who gets promoted, and who gets funded. These metrics are also of interest to institutions, as they may affect government funding and an institution's position in influential university rankings. Within this context, researchers around the world are experiencing pressure to publish in the ‘top’ journals. But what if a journal is considered a ‘top’ journal and a ‘bottom’ journal at the same time? We recently came across such a case, and wondered if it was just an anomaly, or if it was more common than we might assume. This short communication reports the findings of our investigation into the nature and extent of this phenomenon in SCImago Journal & Country Rank (SJR) and Journal Citation Reports (JCR), both of which produce influential citation-based metrics. In analyzing around 25,000 journals and 12,000 journals respectively, we found that they are commonly placed into multiple subject categories. If citation-based metrics are an indication of broader concepts of research/er quality, which is so often implied or inferred, then we would expect that journals would be ranked similarly across these categories. However, our findings show that it is not uncommon for journals to attract citations to differing degrees depending on their category, resulting in journals that may at the same time be perceived as both ‘high’ and ‘low’ quality. This study is further evidence of the illogicality of conflating citation-based metrics with journal, research, and researcher quality, a continuing and ubiquitous practice that impacts thousands of researchers.

Introduction

Citation-based metrics are commonly used as a proxy measure of the quality, prestige, and impact of scholarly journals, the articles published in them, and the researchers who author those articles. Publication outputs and their citations weigh heavily in international and national university rankings, which influence the public image of a higher education institution (HEI), and in turn its ability to attract students and staff (Robinson-Garcia et al., 2019). Where an HEI receives government funding, the amount received may be dependent on the ‘performance’ of its faculty, which again often takes into account publication- and citation-based metrics (Jonkers & Zacharewicz, 2016). As a result, some HEIs offer monetary rewards for publication in ‘top’ journals, meaning those that garner more citations (Abritis & McCook, 2017). On an individual level, the number of articles a researcher publishes, the number of citations those publications attract, and the perceived quality of the journals in which they appear (generally determined by the number of citations a journal's articles attract) all play a role in determining who gets hired, who gets promoted, and who receives tenure (McKiernan et al., 2019). This is despite an array of arguments against the unquestioned application of such metrics to measure research/er quality (e.g. Fire & Guestrin, 2019; Jain et al., 2021; Mañana-Rodríguez, 2015; McKiernan et al., 2019; Nicholas et al., 2017; Niles et al., 2020; Rice et al., 2020; Tian et al., 2016).

In a recent study, my co-authors and I compared the internationality of journals in the field of higher education that were in the highest quartile of citations (Q1)—those thought to be of the highest quality and afforded the highest level of prestige—with those in the lowest quartile of citations (Q4), according to the commonly used SCImago Journal & Country Rank (SJR) (Mason et al., 2021), which uses data from the Scopus database. The study concluded that Q1 journals were more likely to include studies from and about a small number of core anglophone countries. On the other hand, Q4 journals were “more likely to include research and researchers from outside of the core anglophone countries, making an important contribution to the diversity of scholarship beyond the dominating western and English-language discourse” (p. 1).

Notwithstanding the significance of that finding, an interesting observation was made during the data collection phase that was mentioned briefly in the paper. The observation was that one of the journals included in the corpus was ranked simultaneously as both a Q1 journal and a Q4 journal. The journal referred to is Christian Higher Education (CHE), published by Taylor & Francis. The journal falls under two subject categories: Education (under the broader subject area of Social Sciences), and Religious Studies (under the broader subject area of Arts and Humanities). In 2018, CHE was ranked Q1 in Religious Studies, but among journals in Education, it was ranked Q4. This makes the journal one that is likely considered to be ‘high prestige’, ‘high quality’, and ‘high impact’, and at the same time may be perceived to be ‘low prestige’, ‘low quality’, and ‘low impact’. Considering that the content of the journal is the same regardless of what subject category it is placed into, conflating an SJR indicator with quality (or impact or prestige) in the case of CHE appears nonsensical. Is it a journal of high quality, or limited quality? Would a paper in this journal be valued by HEIs, or not? Would it be looked at favorably by a tenure review board or a funding agency, or not? The answer to these questions cannot logically be both.

We conducted a thorough review of the literature to try to determine how common it was for journals to belong to different quartiles under the various disciplines in which they are categorized. We identified several studies that make reference to journals being attributed to more than one quartile, in relation to either the SJR or the Journal Citation Reports (JCR) indicator, a separate but also widely used citation-based journal metric that uses data from the Web of Science database (Liu et al., 2016; Miranda & Garcia-Carpintero, 2019; Pajić, 2015; Vȋiu & Păunescu, 2021). In each of these papers attention is given to the methodological implications of this phenomenon, but detailed quantification of the extent or nature of this inconsistency across the database was not within their scope. We therefore conducted this small study in the spirit of curiosity, to determine whether the phenomenon was merely an anomaly or more common than we might expect, and developed the following research questions (RQ), within the context of all journals in both the SJR and the JCR databases:

- RQ1 How common is it for journals to be placed into multiple subject categories?
- RQ2 How common is it for journals to fall into different quartiles?
- RQ3 How common is it for journals to fall into non-adjacent quartiles?
- RQ4 How common is it for journals to fall into quartiles that cross the ‘top’ and ‘bottom’ half of journals?

We believe the answers to these questions may be consequential, and it is surprising that they have yet to be answered in a systematic way, despite requiring relatively uncomplicated data collection, data cleaning, and descriptive analyses. Perhaps this simplicity is the very reason: while such a study is of interest to us as curious researchers, it might not meet the narrow expectations of a full-length (publishable) scholarly paper. So, we welcome the opportunity to publish our findings as a ‘short communication’, a somewhat undervalued genre in academic journals, but an important channel to publish small but otherwise robust studies (Joaquin & Tan, 2021).

Journal classification and ranking

Scientific literature databases use various classification systems to categorize their entries in order to organize large volumes of publications, and to facilitate their search and retrieval. Some systems, such as the Chinese Library Classification, classify at the publication level, although most classification systems are established at the journal level (Shu et al., 2019). The largest and most well-known international databases are Scopus and Web of Science, which have some important similarities. Both use a journal-level classification system, and both are multidisciplinary in nature, with a broad coverage across many research fields. Journals are classified into different subject categories based on their title, scope, or citation patterns (Gómez-Núñez et al., 2011). Though the processes for determining categorization are generally not made available in any detail, they may include a mix of manual and algorithmic approaches. While the actual categorizations used by SJR and JCR are different, they both adopt a hierarchical structure with a large number of lower-level subject areas organized under a smaller number of broader disciplinary themes, and both allow their various journals to potentially be given multiple classifications (Wang & Waltman, 2016). The journals within each lower-level category are divided into quartiles according to the number and weight of citations they have received in the previous two-year (JCR) or three-year (SJR) period. Those in the top quartile are given a Q1 ranking, those in the second highest quartile are given a Q2 ranking, and so on.
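To make the mechanics concrete, the following is a minimal sketch of a rank-based quartile split within a single category. It is a simplification under stated assumptions, not the procedure used by SJR or JCR (which, as noted, is not published in detail): the function name `assign_quartiles`, the journal names, and all scores are hypothetical, and ties are simply broken by input order. It illustrates how one journal with one citation score can land in different quartiles depending on the journals it is compared against.

```python
import math


def assign_quartiles(scores):
    """Return {journal: 'Q1'..'Q4'} from citation scores within ONE category.

    Simplified rank-based split (an assumption, not the SJR/JCR method);
    ties are broken by input order.
    """
    ranked = sorted(scores, key=scores.get, reverse=True)  # highest score first
    n = len(ranked)
    return {
        journal: f"Q{math.ceil(4 * position / n)}"
        for position, journal in enumerate(ranked, start=1)
    }


# Hypothetical data: "Journal X" has the same score in both categories,
# but the other journals in each category differ.
category_one = {"Journal X": 0.5, "A": 0.4, "B": 0.3, "C": 0.2}
category_two = {"W": 2.0, "Y": 1.5, "Z": 1.0, "Journal X": 0.5}

quartile_one = assign_quartiles(category_one)["Journal X"]
quartile_two = assign_quartiles(category_two)["Journal X"]
print(quartile_one, quartile_two)  # Q1 Q4
```

Because the quartile is defined relative to the other journals in the category, the same score places the hypothetical journal first in one category and last in the other.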

In reality, a journal quartile ranking indicates no more than relative citation attraction within a specific subject category, a design that allows for the different definitions and conceptualizations of ‘quality’ in different disciplines and traditions. It is nevertheless regularly conflated with broader journal quality, article quality, and researcher quality. In reference to JCR indicators, Vȋiu and Păunescu (2021) note that they have “become popular not only as a retrospective but also as an a priori impact assessment tool. Problematically, it is used to assess the distinct articles published in a journal and the merit of individual scholars” (p. 1496). While the evidence of this is pervasive, it is often subtle. For example, a quick online search found multiple cases of academic libraries listing bibliometric databases under ‘journal quality indicators’ and similar headings. Researchers are encouraged to publish in journals of a certain ‘standard’, often with reference to citation-based metrics. For example, in Australia, institutional guidelines for researchers at Murdoch University (2021) state, “ideally, you want your articles to be published in journals that are ranked in either Q1 or Q2”. As a result, the pressure to publish in highly cited journals is felt by researchers around the world and at all career stages (e.g. Fernández-Navas et al., 2020).

SCImago Journal & Country Rank (SJR)

In order to answer the research questions in relation to SJR, we began by downloading data for all publications within the freely available platform in mid 2021, with data current as at 2020. After excluding conference proceedings, book series, trade journals, and outlets without a calculated quartile ranking, a total of 24,978 journals were identified for analysis. As shown in Table 1, half of the journals identified were published in Northern Europe and Northern America. While one quarter of journals were published by either Elsevier, Springer, Wiley, or Taylor & Francis, the majority were published by one of thousands of smaller publishers.

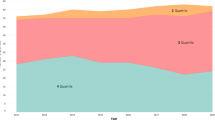

The almost 25 thousand journals included in the analysis were from across 308 of the possible 311 subject categories used by SJR at the time of data collection (this has increased to 313 at the time of writing). Missing only were journals from Dental Assisting, Respiratory Care, and Reviews and References (Medical). One-third of all journals (n = 7561) in the sample were designated to a single subject category, meaning that the majority were designated to more than one (Table 2). This ranged from two up to 13 different subject categories, with an average of 2.4 per journal. These subject categories are further organized under 27 broader subject areas, all of which were represented in the sample. Half of all journals crossed more than one broader subject area. Thus, in response to RQ1, it is common for journals to be categorized into more than one subject area, which includes crossing broad disciplinary boundaries.
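The per-journal category counts behind RQ1 can be sketched as follows. This is an illustration only: it assumes each journal's categories arrive as a single text field of semicolon-separated `Category name (Q#)` entries, which is an assumption about the export layout rather than something stated in this paper. The helper name `parse_categories` and the second journal are hypothetical; the Christian Higher Education entry mirrors the 2018 rankings described in the Introduction.

```python
import re
from statistics import mean

# Assumed entry format: "Category name (Q#)"; the category name itself
# may contain parentheses, e.g. "Reviews and References (Medical) (Q2)".
ENTRY = re.compile(r"^(?P<category>.+?)\s*\((?P<quartile>Q[1-4])\)$")


def parse_categories(field):
    """Split one journal's category field into (category, quartile) pairs."""
    pairs = []
    for raw in field.split(";"):
        match = ENTRY.match(raw.strip())
        if match:  # entries without a quartile ranking are skipped
            pairs.append((match["category"], match["quartile"]))
    return pairs


journals = {
    "Christian Higher Education": "Education (Q4); Religious Studies (Q1)",
    "Hypothetical Journal": "Oncology (Q2)",
}

categories_per_journal = {
    name: len(parse_categories(field)) for name, field in journals.items()
}
single_category = sum(1 for count in categories_per_journal.values() if count == 1)
average_categories = mean(categories_per_journal.values())
print(single_category, average_categories)
```

Skipping entries without a quartile matches the exclusion of outlets without a calculated quartile ranking, although how such entries appear in the raw data is again an assumption.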

Table 3 shows the breakdown of journals against the number of quartile rankings attributed to them. The majority of journals (n = 17,779, 71%) were ranked within a single quartile, of which just over half were assigned to one subject category (n = 10,218, 57%), and as such could only be assigned to one quartile. Around one quarter of all journals were ranked in two different quartiles (n = 6592, 26%), with the majority of those (n = 6264, 95%) being placed in adjacent quartiles. Just over 2% of all journals were ranked in three or more quartiles, including 17 journals that were ranked within all four, meaning that they were simultaneously ranked as Q1, Q2, Q3, and Q4 journals. In response to RQ2, around one-third of all journals (n = 7192, 29%) were assigned to more than one quartile, and therefore this is far from a rare occurrence.
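A tally of this kind can be sketched in a few lines. The rows below are hypothetical, and the variable names are illustrative; the point is only that a journal's distinct quartiles are collected as a set, so a journal in two categories with the same quartile still counts as a single-quartile journal.

```python
from collections import Counter, defaultdict

# Hypothetical (journal, category, quartile) rows, one per category assignment.
rows = [
    ("Journal A", "Education", 1),
    ("Journal B", "Education", 2),
    ("Journal B", "Linguistics", 2),  # two categories, one quartile
    ("Journal C", "Education", 4),
    ("Journal C", "Religious Studies", 1),
    ("Journal D", "Oncology", 2),
    ("Journal D", "Hematology", 3),
]

# Collect the set of distinct quartiles held by each journal.
quartiles_by_journal = defaultdict(set)
for journal, _category, quartile in rows:
    quartiles_by_journal[journal].add(quartile)

# Number of journals holding 1, 2, 3, or 4 distinct quartiles.
distribution = Counter(len(held) for held in quartiles_by_journal.values())

# Share of journals ranked in more than one quartile.
multi_quartile_share = sum(
    count for size, count in distribution.items() if size > 1
) / len(quartiles_by_journal)
print(dict(distribution), multi_quartile_share)
```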

Next we disaggregated the journal data according to the makeup of their quartile rank indicators, providing answers to RQ3 and RQ4. Looking at Table 4, we can see that a total of 935 journals (4%) were placed in non-adjacent quartiles. Further, over 3000 journals (n = 3214, 13%) crossed the central line between the ‘top’ and ‘bottom’ half of journals by citation, including 62 journals that are assigned at the same time within the highest quartile of citations (Q1), and the lowest quartile of citations (Q4).
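The groupings used for RQ3 and RQ4 can be expressed as simple tests on each journal's set of quartiles. The sketch below rests on an assumption about the definitions: ‘non-adjacent’ is read as a gap of two or more steps between a journal's best and worst quartile (so a journal ranked Q1, Q2, and Q3 also counts), and crossing the central line is read as holding at least one of Q1/Q2 together with at least one of Q3/Q4. The function name `describe` and the dictionary keys are hypothetical.

```python
def describe(quartiles):
    """Classify one journal's set of quartile numbers (1 = Q1 ... 4 = Q4)."""
    held = set(quartiles)
    best, worst = min(held), max(held)
    return {
        # Assumed reading: best and worst rankings are two or more steps apart.
        "non_adjacent": worst - best >= 2,
        # At least one ranking in the top half and one in the bottom half.
        "crosses_midline": best <= 2 and worst >= 3,
        # Simultaneously in the highest and the lowest quartile.
        "top_and_bottom": best == 1 and worst == 4,
    }


for example in ({1, 2}, {2, 3}, {1, 3}, {1, 4}):
    print(sorted(example), describe(example))
```

Under this reading, a journal ranked Q2 and Q3 sits in adjacent quartiles yet still crosses the central line, which is why the count for RQ4 can exceed the count for RQ3.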

Journal Citation Reports (JCR)

To answer the same questions in regard to JCR, the subscription-based platform was accessed in early 2022 and all journal data from 2020 were downloaded. Journals from the Social Sciences Citation Index (SSCI) and Science Citation Index Expanded (SCIE) were included, both of which are assigned an impact factor and corresponding quartile ranking. Fifty-seven journals that did not provide details of a quartile ranking were removed. The platform provides much less detailed journal-level data than SJR, and as such we are unable to similarly report publisher details in this section.

The more than 12 thousand journals included in the analysis were from across all of the possible 254 categories used by JCR. Around half of all journals (n = 6709) in the sample were designated to a single subject category, with one third designated to two categories, and others designated to between three and six categories (Table 5). These categories are further organized under 21 broader disciplinary categories, which JCR refers to as ‘groups’ (referred to as ‘subject areas’ by SJR). The lower-level categories may fall under more than one of these broader groups. In this sample, a slight majority of all journals were placed into more than one broader group. As with SJR, it is common for journals to be categorized in the JCR into more than one category at both upper and lower levels.

The majority of JCR journals (n = 9867, 80%) were ranked within a single quartile, of which two thirds (n = 6709, 68%) were assigned to one subject category (Table 6). One-fifth of journals were placed in different quartiles across the different categories they were assigned, and Table 7 shows that 352 journals (3%) were placed simultaneously within non-adjacent quartiles, and 1131 (9%) crossed the line between the top and bottom half of cited journals.

Implications

Journals are often multidisciplinary in nature, and thus being assigned to more than one category is a necessity. Our investigation, which was born out of curiosity regarding what seemed to be a peculiarity in our data, found that it is not uncommon for journals to be placed into different quartiles across the different categories to which they may be assigned. This in itself is understandable, as there are many factors that influence how and why articles are cited (both valid and spurious). However, if citations are a valid measure of broader notions of research/er quality, then we would expect that journals would be ranked similarly across their different categories because the content remains the same. This is clearly not the case, with many journals in both SJR and JCR assigned to more than one quartile ranking. This includes journals assigned to divergent quartiles. We define such journals as those that cross the central line where journal quality (or lack thereof) is arbitrarily but often determined, as well as those that are (also) placed in non-adjacent quartiles, making the “rather arguable assumption that a couple of citations more or less is making a big difference” to research quality even more contentious (Pajić, 2015, p. 990). Such divergent cases involve 3214 (around 13%) of SJR journals, and 1131 (around 9%) of JCR journals, and the many more researchers who contribute to their articles.

For researchers still developing a career and identity, “publications in journals with a high impact factor and number of citations are seen as important signs of recognition”, and thus play an important role in the accumulation of credibility (Hessels et al., 2019, p. 135). A failure to publish in such journals may be manifested as misplaced feelings of inadequacy, but may also have tangible impacts on career progression. It may also affect the ways in which researchers engage with their work, leading them to favor scholarly publishing over other forms of research dissemination that may not be held in the same regard and for which there is less support (Merga & Mason, 2021). In a recent study, Niles et al. (2020) found that while researchers most value journal readership in deciding where to publish, they believe that their peers most value metrics. Broader discourse in scholarly publishing that centers citations as the main indicator of quality may result in engagement in practices that may not align with scholars’ own values, particularly for those in precarious employment. It may empower researchers to know that journals may be perceived as ‘top’ journals and ‘bottom’ journals with the same content, a clear challenge to the notion that citation indicators can accurately measure research/er quality.

There are some efforts in place at different levels to limit the role that citation metrics play in research/er evaluation. The San Francisco Declaration on Research Assessment (DORA) (Raff, 2013), and the Leiden Manifesto (Hicks et al., 2015), are both international initiatives aimed at challenging the inappropriate application of quantitative metrics, both providing a range of actions and recommendations to facilitate change. These international movements have led to the development of various national and institutional policies. For example, China has recently banned institutions from offering financial incentives for publication, a practice which has resulted in an increase in inappropriate publication and citation practices (Mallapaty, 2020). In an Australian example, an alliance between schools of computer science in four universities has published a position statement that begins:

This alliance advocates practical and robust approaches for evaluating research, aligned to those of DORA. Venue [e.g. journal] impact factors and rankings are not measures of the scientific quality nor impact of an article’s research. We strongly discourage inclusion of such rankings in job applications, promotion applications, and other career (-progression) and evaluation processes (Australian Computing Research Alliance, 2022, para. 3).

While the phenomenon of journals being ranked differently across subject categories is common, we believe that it may not be commonly known, or at least the implications have not been engaged with to any serious degree. Although the point of reference for citation-based metrics is, in principle, other journals within the same category, the reality in modern academia is that citations are regularly perceived to be a proxy measure of research quality and researcher success, and there are various systems and practices that enforce this. One way this is perpetuated is through the common practice of journals promoting their ‘best quartile’ on their homepages. Indeed, the SJR platform provides an automated function for journals to share their best quartile. We have yet to find a case where a journal promotes their indicators across the multiple subject categories that may make up the multidisciplinarity of their journal.

Studies in various disciplines have shown that there are many more factors at play than article quality when articles are cited (or not), including the race (e.g. Chakravartty et al., 2018), gender (e.g. Dion et al., 2018), and national affiliation of authors (e.g. Nielsen & Andersen, 2021). Studies published in English (e.g. Liang et al., 2013) and those that report significant results (e.g. Jannot et al., 2013) are more likely to be cited than otherwise, and certain types of studies are privileged over others (e.g. Mendoza, 2012; Slyder et al., 2011; Vanclay, 2013). Further, not all citations equate to a positive evaluation of the original source. Garfield (1964) identified 15 different reasons why authors might cite other works, which in addition to positive applications, also included negative applications such as to criticize previous work, to disclaim previous work, or to dispute specific claims.

It is important that citation metrics are shown and seen in ways that reflect their true function. Our study has shown that a journal may attract different rates of citation by researchers in different fields, resulting in cases where journals may be placed in different quartiles across their different categorizations. This is understandable and even to be expected if quartile rankings are used in a valid way, that is, to measure a journal’s ability to attract citations within a specific category. When defined in this way, it invites reflection on why some journals and articles attract more citations, and to “interrogate how just these [citation] practices are” (Citational Justice Collective et al., 2021, p. 360). However, and despite some counter efforts, the conflation of citation metrics with broader concepts of research/er quality continues. That a journal, with the same content, can be perceived to be both of high quality and low quality at the same time shows the illogicality of such conflation. This adds to the many existing arguments against the continued and ubiquitous application of citation-based metrics as a proxy measure of journal, article, and research quality, and their influence on individual researchers’ prospects for employment, promotion, funding, and tenure.

References

Abritis, A., & McCook, A. (2017). Cash bonuses for peer-reviewed papers go global. Science. https://doi.org/10.1126/science.aan7214

Australian Computing Research Alliance. (2022). Joint statement on rankings in computer science. Retrieved from https://www.unsw.edu.au/news/2022/02/statement-on-evaluating-the-quality-of-computer-science-research

Chakravartty, P., Kuo, R., Grubbs, V., & McIlwain, C. (2018). #CommunicationSoWhite. Journal of Communication, 68(2), 254–266. https://doi.org/10.1093/joc/jqy003

Citational Justice Collective, Molina León, G., Kirabo, L., Wong-Villacres, M., Karusala, N., Kumar, N., Bidwell, N., Reynolds-Cuéllar, P., Borah, P.P., Garg, R., Oswal, S.K., Chuanromanee, T., & Sharma, V. (2021). Following the trail of citational justice: critically examining knowledge production in HCI. CSCW'21: Companion Publication of the 2021 Conference on Computer Supported Cooperative Work and Social Computing. 360–363. https://doi.org/10.1145/3462204.3481732

Dion, M., Sumner, J., & Mitchell, S. (2018). Gendered citation patterns across political science and social science methodology fields. Political Analysis, 26(3), 312–327. https://doi.org/10.1017/pan.2018.12

Fernández-Navas, M., Alcaraz-Salarirche, N., Pérez-Granados, L., & Postigo-Fuentes, A. (2020). Is qualitative research in education being lost in Spain? Analysis and reflections on the problems arising from generating knowledge hegemonically. The Qualitative Report, 25(6), 1555–1578. https://doi.org/10.46743/2160-3715/2020.4374

Fire, M., & Guestrin, C. (2019). Over-optimization of academic publishing metrics: Observing Goodhart’s Law in action. GigaScience, 8(6), giz053. https://doi.org/10.1093/gigascience/giz053

Garfield, E. (1964). Can citation indexing be automated? In M.E. Stevens, V.E. Giuliano, & L.B. Heilprin (Eds.), Statistical Association Methods for Mechanized Documentation (pp. 189–192). National Bureau of Standards. Retrieved from http://garfield.library.upenn.edu/essays/V1p084y1962-73.pdf

Gómez-Núñez, A. J., Vargas-Quesada, B., de Moya-Anegón, F., & Glänzel, W. (2011). Improving SCImago Journal & Country Rank (SJR) subject classification through reference analysis. Scientometrics, 89, 741–758. https://doi.org/10.1007/s11192-011-0485-8

Hessels, L. K., Franssen, T., Scholten, W., & de Rijcke, S. (2019). Variation in valuation: How research groups accumulate credibility in four epistemic cultures. Minerva, 57, 127–149. https://doi.org/10.1007/s11024-018-09366-x

Hicks, D., Wouters, P., Waltman, L., de Rijcke, S., & Rafols, I. (2015). Bibliometrics: The Leiden Manifesto for research metrics. Nature, 520, 429–431. https://doi.org/10.1038/520429a

Jain, A., Khor, K. S., Beard, D., Smith, T. O., & Hing, C. B. (2021). Do journals raise their impact factor or SCImago ranking by self-citing in editorials? A bibliometric analysis of trauma and orthopaedic journals. ANZ Journal of Surgery, 91(5), 975–979. https://doi.org/10.1111/ans.16546

Jannot, A.-S., Agoritsas, T., Gayet-Ageron, A., & Perneger, T. V. (2013). Citation bias favoring statistically significant studies was present in medical research. Journal of Clinical Epidemiology, 66(3), 296–301. https://doi.org/10.1016/j.jclinepi.2012.09.015

Jones, T., Huggett, S., & Kamalski, J. (2011). Finding a way through the scientific literature: Indexes and measures. World Neurosurgery, 76(1/2), 36–38. https://doi.org/10.1016/j.wneu.2011.01.015

Jonkers, K., & Zacharewicz, T. (2016). Research performance based funding systems: A comparative assessment. JRC science for policy report. European Commission. Retrieved from https://publications.jrc.ec.europa.eu/repository/bitstream/JRC101043/kj1a27837enn.pdf

Joaquin, J. J., & Tan, R. R. (2021). The lost art of short communications in academia. Scientometrics, 126, 9633–9637. https://doi.org/10.1007/s11192-021-04192-7

Liang, L., Rousseau, R., & Zhong, Z. (2013). Non-English journals and papers in physics and chemistry: Bias in citations? Scientometrics, 95, 333–350. https://doi.org/10.1007/s11192-012-0828-0

Liu, W., Hu, G., & Gu, M. (2016). The probability of publishing in first-quartile journals. Scientometrics, 106, 1273–1276. https://doi.org/10.1007/s11192-015-1821-1

Mallapaty, S. (2020). China bans cash rewards for publishing papers. Nature, 579, 18. https://doi.org/10.1038/d41586-020-00574-8

Mañana-Rodríguez, J. (2015). A critical review of SCImago Journal & Country Rank. Research Evaluation, 24(4), 343–354. https://doi.org/10.1093/reseval/rvu008

Mason, S., Merga, M. K., González Canché, M. S., & Mat Roni, S. (2021). The internationality of published higher education scholarship: How do the ‘top’ journals compare. Journal of Informetrics, 15(2), 101155. https://doi.org/10.1016/j.joi.2021.101155

McKiernan, E. C., Schimanski, L. A., Muñoz Nieves, C., Matthias, L., Niles, M. T., & Alperin, J. P. (2019). Meta-research: Use of the Journal Impact Factor in academic review, promotion, and tenure evaluations. eLife, 8, e47338. https://doi.org/10.7554/eLife.47338

Mendoza, M. (2012). Differences in citation patterns across areas, article types and age groups of researchers. Publications, 9(4), a47. https://doi.org/10.3390/publications9040047

Merga, M. K., & Mason, S. (2021). Perspectives on institutional valuing and support for academic and translational outputs in Japan and Australia. Learned Publishing, 34(3), 305–314. https://doi.org/10.1002/leap.1365

Miranda, R., & Garcia-Carpintero, E. (2019). Comparison of the share of documents and citations from different quartile journals in 25 research areas. Scientometrics, 121, 479–501. https://doi.org/10.1007/s11192-019-03210-z

Murdoch University. (2021). Measure research quality and impact—research guide. Retrieved from https://libguides.murdoch.edu.au/measure_research/journal

Nicholas, D., Rodríguez-Bravo, B., Watkinson, A., Boukacem-Zeghmouri, C., Herman, E., Xu, J., & Swigon, M. (2017). Early career researchers and their publishing and authorship practices. Learned Publishing, 30, 205–217. https://doi.org/10.1002/leap.1102

Nielsen, M. W., & Andersen, J. P. (2021). Global citation inequality is on the rise. Proceedings of the National Academy of Sciences (PNAS), 118(7), e2012208118. https://doi.org/10.1073/pnas.2012208118

Niles, M. T., Schimanski, L. A., McKiernan, E. C., & Alperin, J. P. (2020). Why we publish where we do: Faculty publishing values and their relationship to review, promotion and tenure expectations. PLoS ONE, 15(3), e0228914. https://doi.org/10.1371/journal.pone.0228914

Pajić, D. (2015). On the stability of citation-based journal rankings. Journal of Informetrics, 9(4), 990–1006. https://doi.org/10.1016/j.joi.2015.08.005

Raff, J. W. (2013). The San Francisco declaration on research assessment. Biology Open, 2(6), 533–534. https://doi.org/10.1242/bio.20135330

Rice, D. B., Raffoul, H., Ioannidis, J. P. A., & Moher, D. (2020). Academic criteria for promotion and tenure in biomedical sciences faculties: Cross sectional analysis of international sample of universities. The BMJ, 369, m2081. https://doi.org/10.1136/bmj.m2081

Robinson-Garcia, N., Torres-Salinas, D., Herrera-Viedma, E., & Docampo, D. (2019). Mining university rankings: Publication output and citation impact as their basis. Research Evaluation, 28(3), 232–240. https://doi.org/10.1093/reseval/rvz014

Schotten, M., el Aisati, M., Meester, W. J. N., Steiginga, S., & Ross, C. A. (2017). A brief history of Scopus: the world’s largest abstract and citation database of scientific literature. In F. J. Cantú-Ortiz (Ed.), Research Analytics (pp. 31–58). Auerbach Publications.

Shu, F., Julien, C. A., Zhang, L., Qiu, J., Zhang, J., & Larivière, V. (2019). Comparing journal and paper level classifications of science. Journal of Informetrics, 13(1), 202–225. https://doi.org/10.1016/j.joi.2018.12.005

Slyder, J. B., Stein, B. R., Sams, B. S., Walker, D. M., Beale, B. J., Feldhaus, J. J., & Copenhaver, C. A. (2011). Citation pattern and lifespan: A comparison of discipline, institution, and individual. Scientometrics, 89, a955. https://doi.org/10.1007/s11192-011-0467-x

Tian, M., Su, Y., & Ru, X. (2016). Perish or publish in China: Pressures on young Chinese scholars to publish in internationally indexed journals. Publications, 4(9), 1–16. https://doi.org/10.3390/publications4020009

United Nations. (1998). Standard country or area codes for statistics use, 1999 (Revision 4). Retrieved from https://unstats.un.org/unsd/methodology/m49

Vanclay, J. K. (2013). Factors affecting citation rates in environmental science. Journal of Informetrics, 7(2), 265–271. https://doi.org/10.1016/j.joi.2012.11.009

Vȋiu, G. A., & Păunescu, M. (2021). The lack of meaningful boundary differences between journal impact factor quartiles undermines their independent use in research evaluation. Scientometrics, 126, 1495–1525. https://doi.org/10.1007/s11192-020-03801-1

Wang, Q., & Waltman, L. (2016). Large-scale analysis of the accuracy of the journal classification systems of Web of Science and Scopus. Journal of Informetrics, 10, 347–364. https://doi.org/10.1016/j.joi.2016.02.003

Ethics declarations

Conflict of interests

The authors have no relevant financial or non-financial interests to disclose.

About this article

Cite this article

Mason, S., Singh, L. When a journal is both at the ‘top’ and the ‘bottom’: the illogicality of conflating citation-based metrics with quality. Scientometrics 127, 3683–3694 (2022). https://doi.org/10.1007/s11192-022-04402-w

DOI: https://doi.org/10.1007/s11192-022-04402-w