Abstract

This article analyzes the effect of country size, level of funding, method of financing, and ways of collaboration on scientific publication output in terms of the number of articles published and citations received in the scientific literature across national research systems. This article takes an initial step toward integrating these aspects into one analysis, because they have previously been studied in isolation as separate issues. The study encompasses European countries using data provided by Clarivate Analytics, the European Commission and Eurostat. Based on the empirical analysis, three conclusions emerge. Firstly, we have to reject the proposition that the function of scientific production exhibits increasing returns to scale. Secondly, transnationally coordinated research projects have a strong positive effect on countries’ number of articles and citations. Smaller countries participate proportionally more in transnationally coordinated research and are therefore more affected by the phenomenon of hyperauthorship. This explains why several small nations perform above their weight in impact relative to spending. Thirdly, the share of competitive project-based funding does not affect the number of articles published but has a U-shaped relationship with research impact per article, pointing toward two alternative financing strategies for maximizing impact based on a high or a low share of project-based funding. Based on the analysis, we present strengths and weaknesses of European countries’ research systems for policy purposes.

Introduction

What characteristics of research systems influence scientific output? That question has a long tradition in the economics of science. Numerous works throughout the last decades have shown that bibliometric data can be used to answer this type of question and to compare the performance and effectiveness of research systems across countries (King, 2004; May, 1997). Garfield (1979), one of the founders of bibliometrics, held the opinion that if the scientific literature reflects research activities, then a comprehensive multidisciplinary reference index provides an appropriate overview of them.

Previous research using cross-national and historical comparison has shown that investments in research and development (R&D) do not solely determine the differential growth of scientific productivity and visibility (Powell & Dusdal, 2017). Many other factors affect bibliometric research impact besides the level of funding such as size (Allik et al., 2020), hyperauthorship (mass authorship) (Hirv, 2019; Thelwall, 2020), collaboration (Adams, 2012; Wagner et al., 2018) and the way of financing (Auranen & Nieminen, 2010; Dialogic/Empirica, 2014; Jonkers & Zacharewicz, 2015). These factors have not been studied simultaneously, but rather incoherently as separate issues. This article takes an initial step toward integration by using Lepori’s (2011) theoretical structure of public research funding to bring relevant characteristics together systematically. In this scheme, public funding is viewed as a multi-level, multi-actor system in which stable patterns are mostly formed through collective interaction among actors and where coordination among actors is critical to system performance. The scheme gives an overview of public research funding, distinguishing between four organizational layers—the policy layer, funding agencies, performing organizations and research groups/individual researchers—as well as two main allocation methods, core funding to research organizations and project funding to research groups.

Despite the complexities of research funding systems and the vast differences between them, they fall under the theoretical structure of public research funding proposed by Lepori (2011). In order to contribute to research on scientific productivity, we investigate simultaneously how returns to scale, level of funding, way of financing and forms of collaboration (e.g., international and industry–university collaboration) affect research impact in terms of the number of research articles published and citations received. Based on our adaptation of Lepori’s (2011) work on the research system’s theoretical structure, we have three research hypotheses on what could affect countries’ scientific impact. These hypotheses could bring a new perspective to debates on the science production function’s returns to scale and effects of competitive funding as well as explain why some small countries perform significantly better than expected based on their R&D spending.

Firstly, we propose that the size of the research system affects countries’ citation rates positively. In larger research systems, there is more room for specialization and diversification, which are associated with higher research impact (Herron et al., 2016; Parker et al., 2013). We argue that digital innovations have significantly reduced transaction costs of collaboration (Goldfarb & Tucker, 2019) and increased the role of specialization and diversification. Since co-authorship has increased from 1900 onward (Lariviere et al., 2015), it is also reasonable to assume that increasing returns to scale, described by total number of researchers in the system, is associated with higher scientific impact.

Secondly, we propose that transnationally coordinated research has a positive effect on the country’s citation rates per article. This question has increased in relevance as the relationship between public funding and scientific output has become more complicated than previously believed. Scholars have recently found that research done in consortia has significantly higher citation impact than the world average (Thelwall, 2020), and small countries participate proportionally more in consortia than larger ones (Must, 2014). This could explain why several small nations perform above their weight in impact relative to spending (ibid).

Thirdly, we propose that the financing model for research organizations and individuals (e.g., core versus project-based funding) has a significant impact on the number of research articles published and citations received. Many have noted that countries differ considerably in terms of the efficiency of turning financial inputs into bibliometrically measurable outputs (Guan & Zuo, 2014; Leydesdorff & Wagner, 2009; Rousseau & Rousseau, 1997). We expect the share of project-based funding to increase the number of citations per article but also to decrease the number of articles published as a consequence of higher transaction costs.

In this study, we first give an overview of the literature on factors affecting national scientific impact. Secondly, we construct a regression model to test the proposed hypotheses. Relevant metrics are retrieved from Clarivate Analytics, the European Commission and Eurostat. Based on the results, we discuss the strengths and weaknesses of the national research systems of European countries.

General scheme of the research system

Around 1960, Nelson (1959) and Arrow (1962) laid the foundation for the economics of science. These two works stress that scientific knowledge is non-rival (its use by one party does not reduce its availability to others) and non-excludable, which makes science a public good and prevents firms from obtaining exclusive benefits through investment. As a result, most of this public good is produced in publicly funded basic research, which typically leads to peer-reviewed publications as an output (Sugimoto & Larivière, 2018). Garfield (1979) developed the publishing and referencing concept as a method to evaluate research, thereby creating the science of bibliometrics. The computer age has made bibliometrics easy to use; however, its simplified use has raised legitimate concerns among critics that it mechanizes science evaluation and overlooks the intrinsic value of what is being evaluated (Bornmann & Haunschild, 2017).

Determinants of the scientific productivity of countries described in this study are based on our adaptation of Lepori’s (2011) work on the theoretical structure of research systems. The theoretical framework of how different aspects interact with each other and can influence countries’ scientific impact is outlined in Fig. 1. Our adaptation gives an overview of the public research system by distinguishing among five layers—scale layer, policy layer, funding agencies, performing organizations, and research groups/individual researchers—and between two allocation methods (core and project-based funding) for how resources are brought down to research groups and individuals. We made three substantial changes in comparison to Lepori’s (2011) initial scheme. Firstly, we put research impact to the top of the scheme which was previously left implicit. Secondly, we added the scale layer to reflect possible size advantages of larger research systems due to increasing returns to scale. Thirdly, we added the possibility to participate in transnationally coordinated research to the policy layer.

Fig. 1 An overall view of public research systems. Source: author’s adaptation based on Lepori’s (2011) model

Based on the scheme elaborated in Fig. 1, we investigate how financial resources are brought down from the policy layer to the layer of research groups and individuals (core vs project-based funding) and the overall effect on research systems at the country level. We also take into consideration the collaboration activities of research groups and individuals such as university–industry collaborations (UIC) and collaborations with scientists from abroad.

Determinants of the scientific productivity of countries

In this article, we focus only on factors brought out in our adaptation of Lepori’s (2011) scheme of research systems and leave factors such as language barriers (Van Leeuwen et al., 2001), political stances (Gantman, 2012), and history (Allik et al., 2020) aside. We assume these factors have no effect because of the current homogeneity of Europe. Previous empirical findings on how factors mentioned in our adaptation can affect a country’s scientific impact are outlined in the following.

Returns to scale

An awareness of the production function’s returns to scale is crucially important in order to allocate resources efficiently. Schumpeter (1942) argues that only large firms could induce technological change because small firms are incapable of allocating the optimal level of resources for R&D. It is stated that in some cases, interactions among researchers, fixed setup costs (ibid; Romer, 2012) or even choice of research topics could lead to a situation where doubling capital and labor more than doubles the output. Although Schumpeter received heavy criticism (Fisher & Temin, 1973), his theory has stayed relevant since.
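For orientation, the notion of returns to scale can be stated formally. The following is a textbook sketch rather than a formula taken from the studies cited here; the symbols \(F\), \(X\), \(\lambda\) and \(k\) are introduced only for illustration, and homogeneity of the production function is an assumption.

```latex
F(\lambda X) = \lambda^{k} F(X), \qquad \lambda > 1,
\qquad
\begin{cases}
k > 1 & \text{increasing returns to scale} \\
k = 1 & \text{constant returns to scale} \\
k < 1 & \text{decreasing returns to scale}
\end{cases}
```

With \(\lambda = 2\), doubling all inputs multiplies output by \(2^{k}\), which exceeds 2 exactly when \(k > 1\).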

Recent findings on returns to scale in science production are primarily based on university-level data, where results are mixed. All the possible returns to scale are presented in those studies, including (1) increasing (Koshal & Koshal, 1999; Laband & Lentz, 2003); (2) decreasing (Worthington & Higgs, 2011); and (3) constant returns to scale (Abramo et al., 2012; Bonaccorsi & Daraio, 2005; Clermont et al., 2015).

Results from the macro-level (country) are also mixed. Some state that returns to scale is country-dependent and varies from decreasing to increasing (Guan & Zuo, 2014; Sharma & Thomas, 2008), while others find that smaller countries seem to have an advantage over larger ones in producing highly cited articles (Allik et al., 2020). On the other hand, others reject constant and decreasing returns to scale for publications and citations and point toward only increasing returns to scale (Crespi & Geuna, 2008). A possible explanation for why some studies have shown increasing returns in small countries and decreasing in large ones could come from the methodology of how research publications were counted. The full counting scheme is found to boost the position of more internationally tied countries with a relatively weak science base in relation to scientifically stronger but less internationally connected countries (Gauffriau et al., 2007).

Including international collaboration could bring out the significance of increasing returns to scale on the country level. Recent research has found that digital innovations have significantly reduced the transaction costs of collaboration (Goldfarb & Tucker, 2019) and increased the role of specialization and diversification. In larger economies, there is more room for both, which are associated with higher research impact (Cimini et al., 2014; Herron et al., 2016; Parker et al., 2013).

Expenditure on R&D

Expenditure on R&D is the most significant factor determining the number of articles and their citations. According to Griliches (1979), the scientific output \(Y\) is a function \(F\left( {X, \;K,\; u} \right)\) of conventional inputs of production \(X\), such as labor and capital, the current level of technological knowledge \(K\), and unmeasured determinants of output and productivity \(u\). Expenditure on R&D covers both capital and labor used in the production of science. Causality is argued from the observation that funding is required to pay for researchers’ time, for other resources needed to conduct research, and for publishing the results (Shelton, 2020).

The link between financial resources available and scientific impact has been repeatedly demonstrated in recent literature (Docampo & Bessoule, 2019; Shelton, 2020; Vinkler, 2018). It is a general understanding that national GDP has a linear or exponential relationship with scientific publications (Allik, 2013; Docampo & Bessoule, 2019; King, 2004; May, 1997; Mueller, 2016). Although national GDP and expenditure on R&D are highly correlated, they are not the same. It is assumed that R&D expenditures do not depend on real needs because economically well-off countries can afford to spend more money on scientific research, while developing countries can afford less (Vinkler, 2008).

Governmental R&D expenditures (GERD) are found to be directly proportional to the number of scientific articles published (Cimini et al., 2014; Shelton, 2020) and are the main source of the heterogeneity in the scientific output of the EU countries (Pastor & Serrano, 2016). Also, it is noted that more government spending does not necessarily result in more citations because additional government funding is not always absorbed by authors and institutions who produce highly cited research (Leydesdorff et al., 2018).

Large transnationally coordinated research projects

The relationship between public funding and scientific output has become more complicated than previously believed due to the prevalence of large publicly funded, transnationally coordinated R&D projects. For example, in 2010, approximately 3.8 percent of EU Member States’ R&D budgets was directed to these projects (Eurostat, 2010). Transnationally coordinated R&D projects are seen as policy instruments through which states pursue their policy objectives (Reale et al., 2013). Smaller states compensate for their lack of size and specialization by funding and participating in this type of collaboration (ibid). Furthermore, many modern scientific challenges cannot be solved without international networks involving a large number of countries and sometimes even thousands of collaborators (Adams, 2012; Castelvecchi, 2015).

Transnationally coordinated research projects are linked with hyperauthorship (mass authorship), which has been described as a postmodern perversion of science (Cronin, 2001), because it is doubtful that all collaborators could have possibly written, edited and approved the final work. The exact threshold that marks the start of hyperauthorship is open to debate. Based on King’s (2012) and Pacchioni’s (2018) suggestions, we interpret articles with more than 50 authors as hyper-authored.

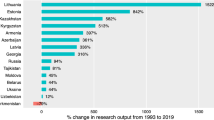

As with hyperauthorship, there is no agreed way to measure the output of transnationally coordinated R&D projects. One method is through a proxy. As we can see in Fig. 2, the total number of hyper-authored articles and investments made in transnationally coordinated research are very strongly correlated (r = 0.88). Therefore, hyper-authored articles are a reasonably good proxy for measuring the scientific output of transnationally coordinated research.

Large publishing consortia produce highly cited research, but, at the same time, it is harder to evaluate them bibliometrically. Determining the allocation of credit when publishing in consortia is especially a problem for smaller countries that participate more actively in consortia relative to their size than medium-sized or large countries (Must, 2014). For example, in the case of Estonia, articles with up to 50 authors account for 66 percent of total citations (Hirv, 2019). In addition, an increase in consortia publishing during the decade 2005–2015 led to a significant increase in overall impact (ibid), even though Estonian expenditures on R&D were decreasing during the observed period (Lauk & Allik, 2018).

Additionally, we have to keep in mind that although countries’ participation rates in transnationally coordinated research could be similar, the projects in which countries participate could be different. We expect the effect of transnational coordination to vary between countries.

Core versus project-based funding

There are two main ways to fund research: project-based funding and core funding. According to Eurostat, project funding is part of government budget allocations for R&D (GBARD) provided to a group or individual to carry out an R&D activity with a limited scope, budget and time frame, typically based on the submission of a project proposal specifying the research activities to be carried out (Eurostat, 2020). On the other hand, core (institutional) funding is defined as the part of GBARD granted to institutions with no direct selection of R&D projects or programs to be carried out. Under this type of funding, the receiving institution, not the funding organization, has authority over the R&D projects that are to be carried out (ibid). In the case of core funding, research agencies are skipped, and a state allocates money from the state budget to research organizations, such as universities, for their normal functioning. It is important to note that the divide between project-based and core funding has narrowed since some countries have introduced performance-based funding formulas (Klein, 2015; Salomon-Fernandez, 2014). When project-based and core funding are compared, researchers usually do not focus on absolute amounts of funding but rather on the balance between these two methods.

The share of project-based funding varies notably in European countries (Jongbloed & Lepori, 2015; Reale, 2017), demonstrating significant differences between countries on how they are pursuing research excellence, economic and social impact (Lepori et al., 2007; Van Steen, 2012). As noted by many authors (Guan & Zuo, 2014; Leydesdorff & Wagner, 2009; Rousseau & Rousseau, 1997), countries differ considerably in terms of the efficiency of turning (financial) input into bibliometrically measurable output. Some of these differences could be explained by the variation of funding scheme used.

Competitive funding mechanisms for project-based funding have a number of common features: a call for proposals, a panel assessment, peer review, scoring, award of funding for a limited time and follow-up (OECD, 2018). According to García and Sanz-Menéndez (2005), research allocation, especially in the case of project-based funding, goes through the scientist’s credibility cycle. The scientist’s credibility cycle is a relationship between production, communication and collective evaluation of the results that expands through the process of competing for funding to carry out research. In the setting of project-based funding, government agencies select the agent who best meets their goals. In contrast, research agents decide where to apply for funding and strategically develop their competencies, depending on the available sources of funds (Lepori et al., 2009). The competition takes place concerning quality, while the price is fixed. Research groups need to decide if it is reasonably feasible to achieve the level of quality required and if its cost is worth the price, depending on the other options available and the perceived position of potential competitors. In the evaluation of research proposals, government agencies take into account agent credibility (reputation), which is based on their past scientific achievements (usually number of articles and citations received) (García & Sanz-Menéndez, 2005).

The evidence that excellence in science is linked to competition between researchers is mixed (Auranen & Nieminen, 2010; Dialogic/Empirica, 2014; Jonkers & Zacharewicz, 2015). Although the broader conclusion is that increased competition in research funding allocation is associated with higher performance, the underlying situation is more complicated and subject to contextual factors (Georghiou, 2013), leaving the question of which is the most effective way to improve research performance open to discussion. The main advantages of project-based funding reported in the literature include the following: (1) a tendency to increase the quality and relevance of research proposals; (2) a way to ensure research proposals meet a minimum standard of quality; (3) an opportunity for researchers to test their ideas among peers; and (4) a fair and impartial way of distributing funds (OECD, 2018). On the other hand, project-based funding also has potential side effects. For example, with regard to the quality of science, it has been found that competition-based funding leads to the emergence of mainstream, thematically stable but also lower-quality science (Laudel, 2006; Sandstrom & Van den Besselaar, 2018) and also puts considerable costs on the system (Cocos & Lepori, 2020; Gross & Bergstrom, 2019).

We suggest that there are transaction costs in the case of project-based funding in the form of a decreased number of research articles. Competitive project-based funding via funding agencies does guarantee research quality, but it comes with a cost. In addition to higher monetary costs, it also has non-monetary transaction costs, such as time spent on writing research grants and follow-up monitoring. Obtaining funds from any source requires the actors to apply for funds, negotiate and conclude contracts, monitor the project’s implementation and submit reports to the funder (Raudla et al., 2015). The time that goes into these administrative duties is the time that does not go into doing research.

International collaboration

Alongside funding and way of financing, collaboration has been found to play a crucial role in the innovation process (De Solla Price & Beaver, 1966). From 1900 onward, co-authorship and international collaboration have been increasing (Lariviere et al., 2015) and are seen as crucial to success in science (Adams, 2013; Figg et al., 2006; Glanzel, 2001; Glänzel & Schubert, 2005; Moed, 2005). For example, the fraction of papers that are entirely ‘homegrown’ is falling in countries that are at the forefront of science (Wagner & Jonkers, 2017).

International collaboration allows scientists to combine conventional production inputs, such as labor and capital, and exchange technological knowledge internationally. In many instances, international collaboration is necessary because specific technological knowledge and/or capital needed for scientific breakthroughs are difficult or impossible to find within country borders. In the current age, internationally collaborated projects account for at least 20 percent of nationally funded research (Wagner & Jonkers, 2017).

International co-authorship has been very thoroughly researched. On average, publications with international collaboration have higher citation rates than domestic papers (Adams, 2013; Wagner et al., 2018). It is found that openness among advanced science systems is strongly correlated with impact—the more internationally engaged a nation is in terms of co-authorship and researcher mobility, the higher the impact of scientific work (Wagner et al., 2018). Also, it is noted that when the effect of the number of authors is taken into account, the difference in citation impacts between international and domestic publications becomes significantly smaller (Puuska et al., 2013).

University–industry collaboration

In the present era, we cannot overlook university–industry collaboration (UIC). National governments support knowledge transfer from universities to industry as part of their innovation and competitiveness agenda. According to Leydesdorff and Henry (1996), economic, scientific and social development takes place in academia, industry and government. Existing literature on UIC has focused predominantly on how firms can benefit from collaborations with academic institutions, and the effects on academia have been covered to a lesser extent.

University–industry articles are more cited on average than single-university research, indicating that university researchers can often increase their research impact by collaborating with industry researchers (Abramo et al., 2009; Lebeau et al., 2008; Morillo, 2016). We have to keep in mind that this is not the case every time, even though there tend to be positive expectations from collaboration (Bloch et al., 2019). Moreover, papers that mention having received industry funding receive more citations if their results are favorable to industry (Farshad et al., 2013; Kulkarni et al., 2007).

Data

Metrics used in this article are retrieved from Eurostat, European Commission reports and the online InCites database, a research analytics solution provided by Clarivate Analytics (2018). InCites has the advantage of extensive coverage of recognized and widely read scientific journals. The present study uses metrics from 2008 to 2018 and covers 30 countries (28 EU Member States plus Iceland, Norway and Switzerland). For the basis of this analysis, the Essential Science Indicators (ESI) scheme from the InCites database is used. Selected documents include research and review articles from the Science Citation Index Expanded and Social Science Citation Index. The articles’ country identification is based on the article’s address section. If there are two country addresses, then it is read as a product of international collaboration, and an article is designated to all collaborated parties. Unfortunately, due to InCites limitations (Todeschini & Baccini, 2016), fractional counting, as recommended by numerous experts in the field (Gauffriau & Larsen, 2005; Leydesdorff et al., 2018), could not be applied.
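The difference between whole (full) counting, as used here, and fractional counting can be illustrated with a minimal sketch. The input format, the country codes and the toy data are hypothetical, and splitting credit equally across countries is only one of several fractional schemes discussed in the literature.

```python
from collections import defaultdict


def count_articles(articles):
    """Return (full, fractional) article counts per country.

    `articles` is a list of sets; each set holds the countries found in
    one article's address section (hypothetical input format).
    """
    full = defaultdict(float)
    fractional = defaultdict(float)
    for countries in articles:
        if not countries:
            continue  # skip records without any country address
        share = 1.0 / len(countries)
        for country in countries:
            full[country] += 1.0          # whole credit to every country
            fractional[country] += share  # one credit split equally
    return dict(full), dict(fractional)


# Hypothetical toy data, not taken from InCites.
articles = [{"EE"}, {"EE", "DE"}, {"EE", "DE", "FR", "UK"}]
full, fractional = count_articles(articles)
print(full)
print(fractional)
```

Under whole counting the country totals add up to more than the number of articles, whereas the fractional totals add up to exactly the number of articles, which is why whole counting tends to favor countries with many international co-authorships.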

In addition to the number of articles, we measure articles’ scientific impact via their citations. Using the number of articles and their citations as an output of production is common practice in the field of scientometrics (Waltman, 2016). Variables used in the study are normalized in order to avoid problems with the difference in scale between countries. For example, GBARD for each country is expressed per capita. Detailed descriptions of dependent and independent variables used in the econometric models, their sources and normalization are shown in Table 1.

Descriptive statistics of the main variables are shown in Table 2. Missing values in GBARD (3) and RESEARCHERS (13) are dealt with by using linearly interpolated and extrapolated values. In the case of COMPETITION, where a majority of observations (166) are missing, we use country averages for the entire period because core funding is relatively stable (Zacharewicz et al., 2019) and countries are not required to regularly report it to Eurostat.
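The two gap-filling strategies described above can be sketched as follows. This is an illustrative reading, assuming equally spaced annual observations and at least two observed values per country; the function names are hypothetical and the exact procedure used in the study may differ.

```python
def fill_linear(values):
    """Fill None entries by linear interpolation; extrapolate at the ends.

    Assumes equally spaced years and at least two observed values.
    """
    known = [(i, v) for i, v in enumerate(values) if v is not None]
    if len(known) < 2:
        raise ValueError("need at least two observed values")
    filled = list(values)
    for i, v in enumerate(values):
        if v is not None:
            continue
        # Index of the first known point to the right of position i.
        right = next((k for k, (j, _) in enumerate(known) if j > i), None)
        if right is None:        # gap after the last observation
            (x0, y0), (x1, y1) = known[-2], known[-1]
        elif right == 0:         # gap before the first observation
            (x0, y0), (x1, y1) = known[0], known[1]
        else:                    # gap between two observations
            (x0, y0), (x1, y1) = known[right - 1], known[right]
        filled[i] = y0 + (y1 - y0) * (i - x0) / (x1 - x0)
    return filled


def country_mean(values):
    """Average of the observed (non-None) values, applied to every year."""
    observed = [v for v in values if v is not None]
    return sum(observed) / len(observed)


# Hypothetical series for illustration only.
gbard = fill_linear([None, 10.0, None, 14.0, None])
competition = country_mean([None, 0.3, 0.5])
```

Interpolation suits variables with few gaps, such as GBARD and RESEARCHERS, while a period average is a simpler choice when most observations are missing and the variable is believed to be stable.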

The sample average for CNCI is 132.5, with a minimum of 56 and a maximum of 232. On average, countries included in the analysis published 1542 articles per million inhabitants. Research system size measured by full-time researchers varies greatly. The sample average is approximately 58,000, but the country with the smallest research system has only around 500 full-time researchers. The proportion of hyper-authored articles is low, but in the case of smaller countries, it can peak as high as 18 percent. In general, countries are more tilted toward institutional funding, as seen in a moderately low share of project-based funding. The data also confirms that international collaboration has become the norm. The mean share of internationally co-authored articles exceeds 50 percent, varying between 32 and 86 percent. As expected, the number of publications with industry collaboration is low, averaging slightly above four.

The correlation matrix of main variables is presented in Table 3. Based on correlations, the best predictor of research impact per article is international collaboration (r = 0.75). The number of articles has the strongest correlation with GBARD (r = 0.87).

Model

In order to deal with the time-invariant variables and to get the most information out of the given data, we use a within-between random effects (REWB) model proposed by Allison (2009), a random effects (RE) model that yields both between-group and within-group estimates.
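The core data step behind a within-between model can be sketched as follows: each explanatory variable is split into a country mean (the between part) and the yearly deviation from that mean (the within part). The long-format layout, the key names and the toy panel are hypothetical and serve only as an illustration.

```python
from collections import defaultdict


def within_between(rows, var):
    """Split `var` into a country mean (between) and a deviation (within).

    `rows` is a list of dicts with keys "country", "year" and `var`
    (a hypothetical long-format panel layout).
    """
    groups = defaultdict(list)
    for row in rows:
        groups[row["country"]].append(row[var])
    means = {c: sum(v) / len(v) for c, v in groups.items()}
    out = []
    for row in rows:
        mean = means[row["country"]]
        out.append({
            "country": row["country"],
            "year": row["year"],
            var + "_between": mean,            # constant within a country
            var + "_within": row[var] - mean,  # sums to zero per country
        })
    return out


# Hypothetical toy panel, not the study's data.
panel = [
    {"country": "A", "year": 2012, "x": 1.0},
    {"country": "A", "year": 2013, "x": 3.0},
    {"country": "B", "year": 2012, "x": 5.0},
    {"country": "B", "year": 2013, "x": 5.0},
]
parts = within_between(panel, "x")
```

Country B shows why a variable that does not change over time cannot yield a within estimate: its deviations are all zero, so only the between part carries information.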

The econometric models constructed, where the dependent variable is CNCI or number of articles published, are described as follows:

whereby

\(\overline{\ln \text{RESEARCHERS}_{i}},\; \overline{\ln \text{GBARD}_{i}},\; \overline{\text{HYPER}_{i}},\; \overline{\text{COLLAB}_{i}},\; \overline{\text{INDUSTRY}_{i}},\; \overline{\text{COMP}_{i}}\) and \(\overline{\text{COMP}_{i}^{2}}\) represent cluster means for country \(i\). When the country-specific mean is subtracted from each variable’s value at time \(t\), the resulting differences become fully independent explanatory variables, just as in the fixed effects (FE) model. Therefore, the coefficients \(\beta_{1}\)–\(\beta_{5}\) have the same interpretation as in the FE model, since they capture within effects. More importantly, the coefficients \(\gamma_{1}\)–\(\gamma_{7}\) represent between effects, as they capture a change in any of the country-specific means, which are time-invariant. Time dummies \(\delta_{t}\) are also included in the models to take into account macroeconomic trends.
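As a rough orientation, a generic within-between specification consistent with the notation described above could be written as follows. This is only a sketch: the intercept \(\alpha\), the generic regressors \(x_{k}\) and \(z_{m}\), the country random effect \(u_{i}\) and the error term \(\varepsilon_{it}\) are notation assumed for illustration, and the assignment of individual variables to specific \(\beta\) and \(\gamma\) coefficients is not implied.

```latex
y_{it} = \alpha
       + \sum_{k} \beta_{k} \left( x_{k,it} - \overline{x}_{k,i} \right)
       + \sum_{m} \gamma_{m} \, \overline{z}_{m,i}
       + \delta_{t} + u_{i} + \varepsilon_{it}
```

Here \(y_{it}\) stands for either dependent variable (CNCI or the number of articles), the first sum collects the within effects, and the second sum collects the between effects of the country means.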

All explanatory variables other than the number of full-time researchers \(\left( \text{RESEARCHERS}_{i} \right)\) and project-based funding \(\left( \text{COMP}_{i} \right)\) are time-varying. The number of researchers did not vary sufficiently to create a within-cluster variable. We interpret the country’s random effect \(u_{i}\) as a country-specific ‘unknown ability’. The square of project-based funding is added to allow for a nonlinear effect. Variables used in the models are either measured in percentage points or transformed into logarithmic form to ensure the comparability of variables. A logarithmic unit is chosen for its familiarity: for small changes, a log point is approximately equal to one percent.
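The closeness of log points and percent changes for small changes, and the way the approximation degrades for large ones, can be checked with a short sketch. The function name is hypothetical and the chosen percentages are arbitrary.

```python
import math


def log_points(pct_change):
    """Change in 100 * ln(x) caused by a `pct_change` percent change in x."""
    return 100.0 * math.log(1.0 + pct_change / 100.0)


# Compare a few percent changes with their log-point equivalents.
for pct in (1, 5, 10, 50):
    print(f"{pct:>3}% change = {log_points(pct):6.2f} log points")
```

The gap between the two measures is negligible for a one percent change but grows with the size of the change, so coefficients on logged variables are best read as percent effects only for modest changes.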

It is important to recognize that there are lags between changes in research funding and outputs (publications), and between outputs and their impact (King, 2004). Therefore, we use a lagged funding variable and assume that a change in expenditures affects output with a 4-year lag (t–4). For example, expenditures made on research in 2008 would affect research output in 2012. The length of the lag was chosen based on a panel vector autoregression model (Abrigo & Love, 2016).
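The alignment implied by a 4-year lag can be sketched as follows, pairing output in year t with funding in year t–4. The dictionaries, the function names and the values are hypothetical and used only for illustration.

```python
def lag_by_year(series, lag=4):
    """Shift a year -> value mapping forward by `lag` years.

    The value observed in year t - lag becomes available under key t.
    """
    return {year + lag: value for year, value in series.items()}


def align(funding, output, lag=4):
    """Pair output in year t with funding in year t - lag.

    Output years without a lagged funding observation are dropped.
    """
    lagged = lag_by_year(funding, lag)
    return {t: (lagged[t], output[t]) for t in sorted(output) if t in lagged}


# Hypothetical values for illustration only.
funding = {2008: 100.0, 2009: 110.0}
output = {2012: 1500, 2013: 1550, 2014: 1600}
pairs = align(funding, output)
```

One consequence of lagging is visible in the example: output years for which no funding observation exists four years earlier cannot be used in estimation.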

Results

The main results are shown in Table 4. We find that project-based funding does not vary within groups and therefore cannot be used to create a within-group regressor. In interpreting the results, we are mostly interested in the between-group estimates (BE) because those are more relevant for comparing countries.

Researchers

Surprisingly, the results do not confirm the proposition that the size of the research system has an effect on research impact.

GBARD

When GBARD increases within-group by one percent, the number of research articles per million inhabitants increases by 0.84 percent. The corresponding between-group estimate is 0.18 percent. The significant difference between the within and between estimates hints at one or more underlying contextual factors. For example, whole counting could benefit internationally well-connected countries with a relatively weaker research base more than scientifically advanced but less internationally connected countries.

A one percent between-group increase in GBARD increases citation impact per article by 0.16 points, confirming that GBARD is the main source of heterogeneity between EU countries’ scientific output (Pastor & Serrano, 2016). For example, when holding other variables constant, the country with the lowest level of funding (Bulgaria) would have a citation impact of only 112, whereas the country with the highest level of funding (Luxembourg) would have a citation impact of 151.

Hyperauthorship

A one percentage point within-group increase in the proportion of hyper-authored articles increases the number of articles published per million inhabitants by 1.7 percent. In addition, transnationally coordinated research has a significant positive effect on citation rates. A one percentage point increase in the proportion of transnationally coordinated research articles increases CNCI by 3.19 points, which explains why several small nations perform above their weight in citations relative to spending. For example, holding other variables constant, the country with the highest proportional participation rate in transnationally coordinated research (Cyprus) gains 29 CNCI points over the country with the lowest proportional participation rate (United Kingdom). It is also important to highlight that the effect of transnationally coordinated research on citations is homogeneous across countries (i.e., the within- and between-group estimates do not differ).

Competition

Contrary to our expectations, the share of project-based funding does not affect the number of research articles produced. On the other hand, project-based funding has a nonlinear U-shaped relationship with citation impact, showing that there are two alternative financing strategies to maximize research impact per article, namely a strong preference toward either core or project-based funding. A U-shaped relationship is, to some extent, consistent with the mixed results found previously. Firstly, a U-shaped relationship is easy to miss: when a model’s specification does not allow for nonlinearity, the actual U-shaped relationship shows up as a non-significant linear relationship. Secondly, in a sample without enough observations with a high share of project-based funding, it can seem that project-based funding is only associated with mainstream, thematically stable, and lower quality science (Laudel, 2006). Thirdly, when there are not enough observations with a low share of project-based funding, only a positive association with research quality would emerge.
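The three ways in which a U-shape can be misread are easy to reproduce with a toy example. The sketch below is purely illustrative: the impact curve, its coefficient of 0.02 and its minimum at a 50 percent share are hypothetical and are not estimates from this study.

```python
def ols_slope(xs, ys):
    """Slope b of the simple least-squares line y = a + b * x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


def impact(share):
    """Hypothetical U-shaped impact curve with its minimum at a 50% share."""
    return 100.0 + 0.02 * (share - 50.0) ** 2


full = [10.0, 30.0, 50.0, 70.0, 90.0]       # shares spread over the whole range
low_only = [x for x in full if x <= 50.0]   # sample lacking high-share countries
high_only = [x for x in full if x >= 50.0]  # sample lacking low-share countries

slope_full = ols_slope(full, [impact(x) for x in full])
slope_low = ols_slope(low_only, [impact(x) for x in low_only])
slope_high = ols_slope(high_only, [impact(x) for x in high_only])
print(slope_full, slope_low, slope_high)
```

A straight line fitted to the full range has a slope of essentially zero, the sample without high-share observations yields a negative slope, and the sample without low-share observations yields a positive one, which mirrors the three cases described above.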

Collaboration

A one percentage point increase in collaboration decreases the number of articles produced within-group by 1.7 percent, but the between-group change is insignificant. This difference suggests that contextual factors are at work.

A one percentage point increase in international collaboration increases between-group citation impact by 1.31 points, which aligns with the understanding that collaboration is crucial to success in science. When holding other variables constant, the country with the highest level of international collaboration (Luxembourg) would have a citation impact of 165, while the country with the lowest level (Poland) would have a citation impact of 104. We have to keep in mind that larger countries probably have more domestic collaboration, which is to some extent, if not fully, interchangeable with international collaboration.

Industry

UIC is also an important predictor of the between-group difference in research impact. A one percentage point increase in UIC increases between-group citation impact by 4.07 points. When holding other variables constant, the country with the lowest level of UIC (Romania) would have a citation impact of 129, whereas the country with the highest level of UIC (Switzerland) would have a citation impact of 155.
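As a back-of-envelope reading of the between-group figures quoted in this section, a reported CNCI gap can be divided by the corresponding coefficient to see how large a difference in the underlying share it implies. The sketch below assumes that the reported predictions stem from the linear terms alone; the coefficients and CNCI values are those quoted in the text, whereas the implied gaps are derived here and are not reported in the study.

```python
def implied_gap(cnci_gap, points_per_pp):
    """Percentage-point difference implied by a CNCI gap under a linear effect."""
    return cnci_gap / points_per_pp


# (CNCI gap between the two named countries, coefficient in CNCI points per pp)
reported = {
    "hyperauthorship (Cyprus vs United Kingdom)": (29.0, 3.19),
    "international collaboration (Luxembourg vs Poland)": (165.0 - 104.0, 1.31),
    "UIC (Switzerland vs Romania)": (155.0 - 129.0, 4.07),
}

gaps = {name: implied_gap(gap, coef) for name, (gap, coef) in reported.items()}
for name, gap in gaps.items():
    print(f"{name}: about {gap:.1f} percentage points")
```

Because the inputs are rounded, the implied gaps are indicative only; they mainly show that the spread between countries is far wider for international collaboration than for hyperauthorship or UIC.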

Country level results

The significant factors forming the basis of our discussion of countries’ strengths and weaknesses are presented in Table 5, where countries are ranked based on their CNCI. All linearly interpretable variables are presented as a heatmap with green indicating strengths and red weaknesses. The column describing the importance of the funding scheme is left colorless because of the nonlinearity and is visualized separately. The country’s random effect (\(u_{\text{i}}\)) is interpreted as a country-specific ‘unknown ability.’

We see that countries from the former Eastern Bloc are strongly disadvantaged by low governmental expenditures on R&D. The problem of underfinancing is the largest in Romania, followed by Bulgaria and Latvia. Countries with smaller research systems, such as Cyprus, Estonia, Bulgaria and Iceland, participate proportionally far more in transnationally coordinated research than others. This is the reason why these countries are surprisingly successful in terms of citations when whole counts are used.

The share of project-based funding has a U-shaped relationship with research impact measured in citations. Figure 3 shows the predicted citation impacts of countries when other variables are held constant at their respective means. If policymakers are interested only in citations, then a strong tilt toward either core or project-based funding is preferable. As seen in the figure, only Malta, Italy, Latvia and Estonia are strongly tilted to either extreme.

Most microstates collaborate more internationally than large countries. Luxembourg is the most active in this regard, followed by Iceland, Cyprus and Malta. Larger countries collaborate less internationally, probably because they can find suitable partners and the necessary know-how within their own borders.

UIC is especially frequent in rich countries where expenditures on R&D are also high. This is understandable because more prosperous economies are more knowledge-based and more dependent on new knowledge and information (OECD, 2006). This is most evident in Switzerland, Belgium and Scandinavian countries (Denmark, Sweden, Norway, Iceland). The former Eastern Bloc countries are notably lagging in UIC.

As for unknown ability (\(u_{\text{i}}\)), Greece, Iceland and Romania have a noticeable advantage, while Slovakia has a significant disadvantage. Unfortunately, what lies behind these numbers is only speculation, hence the name. Iceland has a tiny research system, yet it has ten of the 3000 names on Thomson Reuters’ list of the most cited researchers, a share out of all proportion to the country’s population (Iceland Monitor, 2015). A large value for Greece could possibly be explained by strong connections in scientific networks that remain despite the turmoil caused by economic decline (Trachana, 2013). Romania’s large positive value could be caused by scientists who are more influential than expected given the country’s shortcomings. A large negative value for Slovakia could be caused by brain drain, which has been noted as a problem by the chairman of the Slovak Academy of Sciences (Zubașcu, 2020).

Conclusion

This article analyzed the interplay of size, funding and collaboration in determining bibliometric outputs on the country level. Previous studies have addressed these factors incoherently as separate issues. This article has taken an initial step toward integration by using an adaptation of Lepori’s (2011) theoretical structure of public research funding to bring relevant characteristics together systematically. The hypotheses raised add a new perspective on how research system structure affects scientific output and explain why some microstates perform significantly better than expected based on their R&D spending.

The first research proposition—that the science production function has increasing returns to scale—was unsupported by evidence. No association was seen in the case of the number of articles published or citations received per article.

Secondly, as proposed, transnationally coordinated research projects have a positive association with citations. Larger countries invest more in transnationally coordinated research and have more hyper-authored articles in absolute terms. However, hyper-authored articles make up a much lower proportion of their total articles. This creates a problem when comparing countries: smaller ones are more affected by hyperauthorship, which gives a misleading picture of a country’s contribution to the scientific world (Hirv, 2019; Zanotto et al., 2016). As a result, several small nations perform above their weight in impact relative to spending, although their motive for such collaborations remains unclear: is it better science, learning or something else?

Although transnationally coordinated research boosts countries’ impact significantly, policymakers need to keep in mind that most of it is artificial because of problems with credit allocations that occur with mass-authorship. Fractionalization of articles would significantly diminish the overall output of microstates with a higher prevalence of international collaboration, especially with hyperauthorship.
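The difference between whole and fractional counting can be illustrated with a small sketch. The data are hypothetical, and the equal split among participating countries used here is only one of several fractionalization schemes (credit could also be divided by author or by institution).

```python
from collections import Counter


def count_articles(articles, fractional=False):
    """Credit countries for articles under whole or fractional counting.

    `articles` is a list of lists, each holding the countries on one article.
    Whole counting gives every participating country one full article;
    fractional counting splits one article equally among its countries.
    """
    credit = Counter()
    for countries in articles:
        unique = set(countries)
        share = 1.0 / len(unique) if fractional else 1.0
        for country in unique:
            credit[country] += share
    return dict(credit)


# Hypothetical data: one consortium paper with 20 countries plus domestic papers.
consortium = [f"C{i}" for i in range(18)] + ["Small", "Large"]
articles = [consortium] + [["Small"]] * 2 + [["Large"]] * 50

whole = count_articles(articles)
frac = count_articles(articles, fractional=True)
print(whole["Small"], frac["Small"])
print(whole["Large"], frac["Large"])
```

In this toy example the single consortium paper accounts for a third of the small country’s whole-count output but only about two percent of the large country’s, so fractionalization reduces the small country’s total far more in relative terms.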

Thirdly, results show that not only the level of funding but also the way of financing is an important factor determining research impact. Surprisingly, project-based funding does not affect the number of research articles published, but, at the same time, has a U-shaped association with citations per article. This highlights that there are two alternative financing strategies to maximize research impact per article—strong preference toward core or project-based funding.

The reason why this relationship is U-shaped with regard to citations per article could be the Matthew effect in financing (Bol et al., 2018), where early success increases the chances of future success. High impact research is concentrated in a few premier institutions in countries where the overall research impact is higher than average (Prathap, 2017). In the case of core funding, institutions get a sufficient amount directly from the government, but in the case of a high share of project-based funding, research groups may get the same amount in the end by winning a higher number of research grants. Also, because of path dependence, there is pressure to publish well-cited articles. Scientists may combine funds from different grants to achieve a sufficient level of funding in order to cope with a high level of competitiveness (Laudel, 2006). The pressure to publish is probably lower with a balanced strategy because core funding is more stable than project-based funding.

In addition, science seems to be undergoing a taxonomic change in which impact is no longer measured by citations but in terms of impact on all sectors of society (Bornmann & Haunschild, 2017). A balanced funding strategy gives a lower impact in terms of citations per article, but it could have positive effects on culture and the economy, which we did not cover here.

As the last step of the analysis, we created an overview of the factors in which countries are performing well and those in which they are lagging. As mentioned, the former Eastern Bloc countries are strongly disadvantaged by shortcomings in R&D expenditures. However, as the results show, this is not the only reason why they lag. In most cases, they have significantly less UIC. UIC is especially frequent in countries with high living standards where expenditures on R&D are also high, such as Switzerland, Belgium and Scandinavian countries. Although UIC is associated with higher research impact, it seems to go hand in hand with the economic structure and cannot be forced by policy in the short run. Less developed countries will probably catch up in UIC when their economic structure is advanced enough for a more knowledge-based economy. Microstates compensate for their shortcomings by participating more in international collaboration and transnationally coordinated research than larger countries do. When it comes to financing, only Malta, Italy, Latvia and Estonia gain in impact thanks to a strong preference toward institutional or project-based funding.

A limitation of the bibliometric method used in this study is that it measures innovativeness through the number of articles and citations that show relevance and standing at a current timepoint compared to other countries and overlooks comparisons between time periods. One way to compare innovativeness in different periods is to look at the number of macro-innovations made. Macro-innovations, as defined by Surry (2008), are innovations that impact a broad spectrum of people or processes within an organization, often requiring significant modifications to the organization’s structures and policies. These types of innovations have been declining since the end of the Industrial Revolution (Huebner, 2005; Woodley of Menie, Figueredo & Sarraf, 2019). Therefore, standings based only on the current independent variables may be inadequate in explaining long-term science production.

References

Abramo, G., Cicero, T., & D’Angelo, C. A. (2012). Revisiting size effects in higher education research productivity. Higher Education, 63(6), 701–717. https://doi.org/10.1007/s10734-011-9471-6

Abramo, G., D’Angelo, C. A., Di Costa, F., & Solazzi, M. (2009). University–industry collaboration in Italy: A bibliometric examination. Technovation, 29(6–7), 498–507. https://doi.org/10.1016/j.technovation.2008.11.003

Abrigo, M. R., & Love, I. (2016). Estimation of panel vector autoregression in Stata. The Stata Journal, 16(3), 778–804. https://doi.org/10.1177/1536867X1601600314

Adams, J. (2012). The rise of research networks. Nature, 490(7420), 335–336. https://doi.org/10.1038/490335a

Adams, J. (2013). Collaborations: The fourth age of research. Nature, 497(7451), 557–560. https://doi.org/10.1038/497557a

Allik, J. (2013). Factors affecting bibliometric indicators of scientific quality. Trames: A Journal of the Humanities and Social Sciences, 17(3), 199–214. https://doi.org/10.3176/tr.2013.3.01

Allik, J., Lauk, K., & Realo, A. (2020). Factors predicting the scientific wealth of nations. Cross-Cultural Research. https://doi.org/10.1177/1069397120910982

Allison, P. D. (2009). Fixed effects regression models. SAGE Publications.

Arrow, K. (1962). The rate and direction of inventive activity: Economic and social factors. Princeton University Press.

Auranen, O., & Nieminen, M. (2010). University research funding and publication performance—An international comparison. Research Policy, 39(6), 822–834. https://doi.org/10.1016/j.respol.2010.03.003

Bloch, C., Ryan, T. K., & Andersen, J. P. (2019). Public-private collaboration and scientific impact: An analysis based on Danish publication data for 1995–2013. Journal of Informetrics, 13(2), 593–604. https://doi.org/10.1016/j.joi.2019.03.003

Bol, T., de Vaan, M., & van de Rijt, A. (2018). The Matthew effect in science funding. Proceedings of the National Academy of Sciences, 115(19), 4887–4890. https://doi.org/10.1073/pnas.1719557115

Bonaccorsi, A., & Daraio, C. (2005). Exploring size and agglomeration effects on public research productivity. Scientometrics, 63(1), 87–120. https://doi.org/10.1007/s11192-005-0205-3

Bornmann, L., & Haunschild, R. (2017). Does evaluative scientometrics lose its main focus on scientific quality by the new orientation towards societal impact? Scientometrics, 110(2), 937–943. https://doi.org/10.1007/s11192-016-2200-2

Castelvecchi, D. (2015). Physics paper sets record with more than 5000 authors. Nature. https://doi.org/10.1038/nature.2015.17567

Cimini, G., Gabrielli, A., & Labini, F. S. (2014). The scientific competitiveness of nations. PLoS ONE, 9(12), e113470. https://doi.org/10.1371/journal.pone.0113470

Clarivate Analytics. (2018). InCites indicators handbook. Clarivate Analytics.

Clermont, M., Dirksen, A., & Dyckhoff, H. (2015). Returns to scale of Business Administration research in Germany. Scientometrics, 103(2), 583–614. https://doi.org/10.1007/s11192-015-1561-2

Cocos, M., & Lepori, B. (2020). What we know about research policy mix. Science and Public Policy, 47(2), 1–11. https://doi.org/10.1093/scipol/scz061

Crespi, G. A., & Geuna, A. (2008). An empirical study of scientific production: A cross country analysis, 1981–2002. Research Policy, 37(4), 565–579. https://doi.org/10.1016/j.respol.2007.12.007

Cronin, B. (2001). Hyperauthorship: A postmodern perversion or evidence of a structural shift in scholarly communication practices? Journal of the American Society for Information Science and Technology, 52(7), 558–569. https://doi.org/10.1002/asi.1097

De Solla Price, D. J., & Beaver, D. (1966). Collaboration in an invisible college. American Psychologist, 21(11), 1011–1018. https://doi.org/10.1037/h0024051

Dialogic/Empirica. (2014). The effectiveness of national research funding systems. Utrecht/Bonn: Dialogic/Empirica. Retrieved from https://www.dialogic.nl/wp-content/uploads/2016/12/2013.109-1422.pdf.

Docampo, D., & Bessoule, J.-J. (2019). A new approach to the analysis and evaluation of the research output of countries and institutions. Scientometrics, 119(2), 1207–1225. https://doi.org/10.1007/s11192-019-03089-w

Eurostat. (2010). R&D budget statistics—transnationally coordinated research. Retrieved 19 Nov 2019, from https://ec.europa.eu/eurostat/statistics-explained/index.php?title=Archive:R_%26_D_budget_statistics_-_transnationally_coordinated_research.

Eurostat. (2020). Government budget allocations for R&D (GBARD). Retrieved from https://ec.europa.eu/eurostat/cache/metadata/en/gba_esms.htm#contact1616163588011.

Farshad, M., Sidler, C., & Gerber, C. (2013). Association of scientific and nonscientific factors to citation rates of articles of renowned orthopedic journals. European Orthopaedics and Traumatology, 4(3), 125–130. https://doi.org/10.1007/s12570-013-0174-6

Figg, W. D., Dunn, L., Liewehr, D., Steinberg, S. M., Thurman, P., Barrett, C., & Birkinshaw, J. (2006). Scientific collaboration results in higher citation. Pharmacotherapy, 26(6), 759–767. https://doi.org/10.1592/phco.26.6.759

Fisher, F. M., & Temin, P. (1973). Returns to scale in research and development: What does the Schumpeterian hypothesis imply? Journal of Political Economy, 81(1), 56–70. https://doi.org/10.1086/260006

Gantman, E. R. (2012). Economic, linguistic, and political factors in the scientific productivity of countries. Scientometrics, 93(3), 967–985. https://doi.org/10.1007/s11192-012-0736-3

García, C. E., & Sanz-Menéndez, L. (2005). Competition for funding as an indicator of research competitiveness. Scientometrics, 64(3), 271–300. https://doi.org/10.1007/s11192-005-0251-x

Garfield, E. (1979). Citation indexing: Its theory and application in science. John Wiley & Sons.

Gauffriau, M., & Larsen, P. O. (2005). Counting methods are decisive for rankings based on publication and citation studies. Scientometrics, 64(1), 85–93. https://doi.org/10.1007/s11192-005-0239-6

Gauffriau, M., Larsen, P. O., Maye, I., Roulin-Perriard, A., & von Ins, M. (2007). Publication, cooperation and productivity measures in scientific research. Scientometrics, 73(2), 175–214. https://doi.org/10.1007/s11192-007-1800-2

Georghiou, L. (2013). Effectiveness of national research systems. Discussion paper, Brussels. Retrieved from https://era.gv.at/object/document/366/attach/background_paper_session_1.pdf.

Glänzel, W. (2001). National characteristics in international scientific co-authorship relations. Scientometrics, 51(1), 69–115. https://doi.org/10.1023/A:1010512628145

Glänzel, W., & Schubert, A. (2005). Analysing scientific networks through co-authorship. In H. F. Moed, W. Glänzel, & U. Schmoch (Eds.), Handbook of quantitative science and technology research (pp. 257–276). Springer.

Goldfarb, A., & Tucker, C. (2019). Digital economics. Journal of Economic Literature, 57(1), 3–43. https://doi.org/10.1257/jel.20171452

Griliches, Z. (1979). Issues in assessing the contribution of research and development to productivity growth. The Bell Journal of Economics, 10(1), 92–116. https://doi.org/10.2307/3003321

Gross, K., & Bergstrom, C. T. (2019). Contest models highlight inherent inefficiencies of scientific funding competitions. PLOS Biology, 17(1), e3000065. https://doi.org/10.1371/journal.pbio.3000065

Guan, J., & Zuo, K. (2014). A cross-country comparison of innovation efficiency. Scientometrics, 100(2), 541–575. https://doi.org/10.1007/s11192-014-1288-5

Herron, P., Mehta, A., Cao, C., & Lenoir, T. (2016). Research diversification and impact: The case of national nanoscience development. Scientometrics, 109(2), 629–659. https://doi.org/10.1007/s11192-016-2062-7

Hirv, T. (2019). Research consortia determine a significant part of the bibliometric visibility of Estonian science. TRAMES: A Journal of the Humanities and Social Sciences, 23(3), 287–308. https://doi.org/10.3176/tr.2019.3.02

Huebner, J. (2005). A possible declining trend for worldwide innovation. Technological Forecasting and Social Change, 72(8), 980–986. https://doi.org/10.1016/j.techfore.2005.01.003

Iceland Monitor. (2015). Iceland has 10 of the world’s top scientists. Retrieved 5 June 2020, from https://www.mbl.is/ferdalog/frettir/2015/09/18/iceland_has_10_of_the_world_s_top_scientists/.

Jongbloed, B., & Lepori, B. (2015). Funding of research in higher education: Mixed models and mixed results. In The Palgrave international handbook of higher education policy and governance (pp. 439–462). Palgrave Macmillan. https://doi.org/10.1007/978-1-137-45617-5_24

Jonkers, K., & Zacharewicz, T. (2015). Performance based funding: A comparative assessment of their use and nature in EU Member States. Working paper. https://doi.org/10.2791/134058.

Jonkers, K., & Zacharewicz, T. (2016). Research performance based funding systems: A comparative assessment. Publications Office of the European Union. https://doi.org/10.2791/659483

King, C. (2012). Multiauthor papers: Onward and upward. Science Focus, 7, 62–64.

King, D. A. (2004). The scientific impact of nations. Nature, 430(6997), 311–316. https://doi.org/10.1038/430311a

Klein, S. G. (2015). Using performance-based funding to incentivize change. RTI International.

Koshal, R. K., & Koshal, M. (1999). Economies of scale and scope in higher education: A case of comprehensive universities. Economics of Education Review, 18(2), 269–277. https://doi.org/10.1016/S0272-7757(98)00035-1

Kulkarni, A. V., Busse, J. W., & Shams, I. (2007). Characteristics associated with citation rate of the medical literature. PLoS ONE, 2(5), e403. https://doi.org/10.1371/journal.pone.0000403

Laband, D. N., & Lentz, B. F. (2003). New estimates of economies of scale and scope in higher education. Southern Economic Journal, 70(1), 172–183. https://doi.org/10.2307/1061638

Larivière, V., Gingras, Y., Sugimoto, C. R., & Tsou, A. (2015). Team size matters: Collaboration and scientific impact since 1900. Journal of the Association for Information Science and Technology, 66(7), 1323–1333. https://doi.org/10.1002/asi.23266

Laudel, G. (2006). The art of getting funded: How scientists adapt to their funding conditions. Science and Public Policy, 33(7), 489–504. https://doi.org/10.3152/147154306781778777

Lauk, K., & Allik, J. (2018). A puzzle of Estonian science: How to explain unexpected rise of the scientific impact. Trames: A Journal of the Humanities and Social Sciences, 22(4), 329–344. https://doi.org/10.3176/tr.2018.4.01

Lebeau, L.-M., Laframboise, M.-C., Larivière, V., & Gingras, Y. (2008). The effect of university–industry collaboration on the scientific impact of publications: The Canadian case, 1980–2005. Research Evaluation, 17(3), 227–232. https://doi.org/10.3152/095820208X331685

Lepori, B. (2011). Coordination modes in public funding systems. Research Policy, 40(3), 355–367. https://doi.org/10.1016/j.respol.2010.10.016

Lepori, B., Masso, J., Jabłecka, J., Sima, K., & Ukrainski, K. (2009). Comparing the organization of public research funding in central and eastern European countries. Science and Public Policy, 36(9), 667–681. https://doi.org/10.3152/030234209X479494

Lepori, B., van den Besselaar, P., Dinges, M., van der Meulen, B., Potì, B., Reale, E., Slipsersaeter, S., & Theves, J. (2007). Indicators for comparative analysis of public project funding: Concepts, implementation and evaluation. Research Evaluation, 16(4), 243–255. https://doi.org/10.3152/095820207X260252

Leydesdorff, L., & Etzkowitz, H. (1996). Emergence of a Triple Helix of university–industry–government relations. Science and Public Policy, 23(5), 279–286. https://doi.org/10.1093/spp/23.5.279

Leydesdorff, L., & Wagner, C. (2009). Macro-level indicators of the relations between research funding and research output. Journal of Informetrics, 3(4), 353–362. https://doi.org/10.1016/j.joi.2009.05.005

Leydesdorff, L., Bornmann, L., & Wagner, C. S. (2018). The relative influences of government funding and international collaboration on citation impact. Journal of the Association for Information Science and Technology, 70(2), 198–201. https://doi.org/10.1002/asi.24109

May, R. M. (1997). The scientific wealth of nations. Science, 275(5301), 793–796. https://doi.org/10.1126/science.275.5301.793

Moed, H. F. (2005). Citation analysis in research evaluation. Springer.

Morillo, F. (2016). Public-private interactions reflected through the funding acknowledgements. Scientometrics, 108(3), 1193–1204. https://doi.org/10.1007/s11192-016-2032-0

Mueller, C. (2016). Accurate forecast of countries’ research output by macro-level indicators. Scientometrics, 109(2), 1307–1328. https://doi.org/10.1007/s11192-016-2084-1

Must, Ü. (2014). The impact of multi-authored papers: The case of a small country. COLLNET Journal of Scientometrics and Information Management, 8(1), 41–47. https://doi.org/10.1080/09737766.2014.916874

Nelson, R. R. (1959). The simple economics of basic scientific research. Journal of Political Economy, 67(3), 297–306. https://doi.org/10.1086/258177

OECD. (2006). Knowledge-based economy. Retrieved 11 Feb 2020, from https://stats.oecd.org/glossary/detail.asp?ID=6864.

OECD. (2018). Effective operation of competitive research funding systems. OECD Publishing.

Pacchioni, G. (2018). The overproduction of truth: Passion, competition, and integrity in modern science. Oxford University Press.

Parker, J. N., Allesina, S., & Lortie, C. J. (2013). Characterizing a scientific elite (B): Publication and citation patterns of the most highly cited scientists in environmental science and ecology. Scientometrics, 94, 469–480. https://doi.org/10.1007/s11192-012-0859-6

Pastor, J. M., & Serrano, L. (2016). The determinants of the research output of universities: Specialization, quality and inefficiencies. Scientometrics, 109(2), 1255–1281. https://doi.org/10.1007/s11192-016-2102-3

Powell, J. J., & Dusdal, J. (2017). Science production in Germany, France, Belgium, and Luxembourg: Comparing the contributions of research Universities and Institutes to science, technology, engineering, mathematics, and health. Minerva, 55(4), 413–434. https://doi.org/10.1007/s11024-017-9327-z

Prathap, G. (2017). Scientific wealth and inequality within nations. Scientometrics, 113(2), 923–928. https://doi.org/10.1007/s11192-017-2511-y

Puuska, H.-M., Muhonen, R., & Leino, Y. (2013). International and domestic co-publishing and their citation impact in different disciplines. Scientometrics, 98(2), 823–839. https://doi.org/10.1007/s11192-013-1181-7

Raudla, R., Karo, E., Valdmaa, K., & Kattel, R. (2015). Implications of project-based funding of research on budgeting and financial management in public universities. Higher Education, 70(6), 957–971. https://doi.org/10.1007/s10734-015-9875-9

Reale, E. (2017). Analysis of national public research funding-PREF. Final Report. https://doi.org/10.2760/19140

Reale, E., Lepori, B., Nedeva, M., Thomas, D., Primeri, E., Chassagneux, E., & Laredo, P. (2013). Investments in joint and open research programmes and analysis of their economic impact (JOREP). Directorate General for Research and Innovation.

Romer, D. (2012). Advanced macroeconomics (Ed.). McGraw-Hill Education.

Rousseau, S., & Rousseau, R. (1997). The scientific wealth of European nations: Taking effectiveness into account. Scientometrics, 42(1), 75–87. https://doi.org/10.1007/BF02465013

Salomon-Fernandez, Y. (2014). The Massachusetts community college performance-based funding formula: A new model for New England? New England Journal of Higher Education.

Sandström, U., & Van den Besselaar, P. (2018). Funding, evaluation, and the performance of national research systems. Journal of Informetrics, 12(1), 365–384. https://doi.org/10.1016/j.joi.2018.01.007

Schumpeter, J. (1942). Capitalism, socialism and democracy. Harper & Brothers.

Sharma, S., & Thomas, V. (2008). Inter-country R&D efficiency analysis: An application of data envelopment analysis. Scientometrics, 76(3), 483–501. https://doi.org/10.1007/s11192-007-1896-4

Shelton, R. D. (2020). Scientometric laws connecting publication counts to national research funding. Scientometrics, 123(1), 181–206. https://doi.org/10.1007/s11192-020-03392-x

Sugimoto, C. R., & Larivière, V. (2018). Measuring research: What everyone needs to know. Oxford University Press.

Surry, D. U. (2008). Innovations in learning technology. In L. A. Tomei (Ed.), Encyclopedia of information technology curriculum integration (pp. 413–417). IGI Global. https://doi.org/10.4018/978-1-59904-881-9

Thelwall, M. (2020). Large publishing consortia produce higher citation impact research but coauthor contributions are hard to evaluate. Quantitative Science Studies, 1, 290–302. https://doi.org/10.1162/qss_a_00003

Todeschini, R., & Baccini, A. (2016). Handbook of bibliometric indicators: Quantitative tools for studying and evaluating research. John Wiley & Sons.

Trachana, V. (2013). Austerity-led brain drain is killing Greek science. Nature, 496(7445), 271–271. https://doi.org/10.1038/496271a

Van Steen, J. (2012). Modes of public funding of research and development: Towards internationally comparable indicators. OECD Publishing.

Van Leeuwen, T. N., Moed, H. F., Tijssen, R. J., Visser, M. S., & Van Raan, A. F. (2001). Language biases in the coverage of the Science Citation Index and its consequences for international comparisons of national research performance. Scientometrics, 51(1), 335–346. https://doi.org/10.1023/A:1010549719484

Vinkler, P. (2008). Correlation between the structure of scientific research, scientometric indicators and GDP in EU and non-EU countries. Scientometrics, 74(2), 237–254. https://doi.org/10.1007/s11192-008-0215-z

Vinkler, P. (2018). Structure of the scientific research and science policy. Scientometrics, 114(2), 737–756. https://doi.org/10.1007/s11192-017-2568-7

Wagner, C. S., & Jonkers, K. (2017). Open countries have strong science. Nature, 550(7674), 32–33. https://doi.org/10.1038/550032a

Wagner, C. S., Whetsell, T., Baas, J., & Jonkers, K. (2018). Openness and impact of leading scientific countries. Frontiers in Research Metrics and Analytics. https://doi.org/10.3389/frma.2018.00010

Waltman, L. (2016). A review of the literature on citation impact indicators. Journal of Informetrics, 10(2), 365–391. https://doi.org/10.1016/j.joi.2016.02.007

Woodley of Menie, M. A., Figueredo, A. J., & Sarraf, M. A. (2019). Slowing life history (K) can account for increasing micro-innovation rates and GDP growth, but not macro-innovation rates, which declined following the end of the Industrial Revolution. Behavioral and Brain Sciences. https://doi.org/10.1017/S0140525X19000098

Worthington, A. C., & Higgs, H. (2011). Economies of scale and scope in Australian higher education. Higher Education, 61(4), 387–414. https://doi.org/10.1007/s10734-010-9337-3

Zacharewicz, T., Lepori, B., Reale, E., & Jonkers, K. (2019). Performance-based research funding in EU Member States—a comparative assessment. Science and Public Policy, 46, 105–115.

Zanotto, S. R., Haeffner, C., & Guimaraes, J. A. (2016). Unbalanced international collaboration affects adversely the usefulness of countries’ scientific output as well as their technological and social impact. Scientometrics, 109, 1789–1814.

Zubașcu, F. (2020). Call to reform Slovakia’s R&D system. Science|Business. Retrieved 10 Apr 2020 from https://sciencebusiness.net/news/call-reform-slovakias-rd-system.

Acknowledgements

The author thanks Bradley Loewen for proofreading and anonymous reviewers for helpful comments.

Cite this article

Hirv, T. The interplay of the size of the research system, ways of collaboration, level, and method of funding in determining bibliometric outputs. Scientometrics 127, 1295–1316 (2022). https://doi.org/10.1007/s11192-021-04232-2

DOI: https://doi.org/10.1007/s11192-021-04232-2