Abstract

The ever-increasing number of published scientific articles has prompted the need for automated, data-driven approaches to summarizing the content of scientific articles. The Computational Linguistics Scientific Document Summarization Shared Task (CL-SciSumm 2019) has recently fostered the study and development of new text mining and machine learning solutions to the summarization problem customized to the academic domain. In CL-SciSumm, a Reference Paper (RP) is associated with a set of Citing Papers (CPs), all containing citations to the RP. In each CP, the text spans (i.e., citances) have been identified that pertain to a particular citation to the RP. The task of identifying the spans of text in the RP that most accurately reflect the citance is addressed using supervised approaches. This paper proposes a new, more effective solution to the CL-SciSumm discourse facet classification task, which entails identifying for each cited text span what facet of the paper it belongs to from a predefined set of facets. It proposes also to extend the set of traditional CL-SciSumm tasks with a new one, namely the discourse facet summarization task. The idea behind is to extract facet-specific descriptions of each RP consisting of a fixed-length collection of RP’s text spans. To tackle both the standard and the new tasks, we propose machine learning supported solutions based on the extraction of a selection of discriminating words, called pivot words. Predictive features based on pivot words are shown to be of great importance to rate the pertinence and relevance of a text span to a given facet. The newly proposed facet classification method performs significantly better than the best performing CL-SciSumm 2019 participant (i.e., the classification accuracy has increased by + 8%), whereas regression methods achieved promising results for the newly proposed summarization task.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Thanks to the advances in Information Systems, multimedia processing, and Web-based architectures, in the last decade digital libraries have significantly extended the volume of managed data, the number and kind of provided functionalities, and the user interfaces. They nowadays play a fundamental role in academic research. In fact, researchers can easily access the full-text of the most relevant scientific publications in electronic form. In parallel, scientometric data (e.g., author and co-author relationships, citation and co-citation information) have become easily accessible and usable for various purposes.

Scientists who are interested in enlarging their collaboration network can get in touch with other colleagues through various social platforms [e.g., ResearchGate, Academia.edu (Ovadia 2014)], which recommend links and papers based on citation networks. Within the current scenario, however, the in-depth exploration of the content of scientific papers still remains extremely time-consuming, because it mainly relies on manual content retrieval Saggion and Ronzano 2017.

The Computational Linguistics Scientific Document Summarization Shared Task (CL-SciSumm) (Chandrasekaran et al. 2020) is an yearly research challenge focused on text mining and summarization of scientific papers. It fosters the joint analysis of paper full-text and scientometric data in order to gain insights into the analyzed content. More specifically, it aims at bringing together the summarization community to address challenges in scientific communication summarization. The tasks proposed in the fifth edition of the challenge, i.e., CL-SciSumm BIRNDL 2019, address an advanced analysis of a set of topics, where each topic consists of a Reference Paper (RP) and a set of Citing Papers (CPs), all containing citations to the RP. In each CP, the text spans, denoted as citances, have been identified that pertain to a particular citation to the RP. The main CL-SciSumm task (1A) is to identify the spans of text in the RP (namely, the cited text spans) that most accurately reflect the citance.

This paper addresses a specific task of CL-SciSumm BIRNDL 2019, namely the discourse facet classification (task 1B). The considered task entails identifying for each cited text span in RP what discourse facet it belongs to. Facets are selected from a predefined set (i.e., Aim, Method, Results, Hypothesis, Implication). We formulate the task as a classical binary classification problem, i.e., for each cited text span we predict whether a specific discourse facet is pertinent or not. The classifier is trained on a labeled dataset consisting of various cited and citing text spans’ descriptors. Among the dataset features, the most discriminating ones for classification purposes rely on the concept of pivot words. In a nutshell, the occurrence of these particular words, extracted a priori from the training set, is likely to determine the discourse facet of a text span. The experiments carried out on benchmark data have shown that the proposed classification approach performs significantly better than the winner of the CL-SciSumm 2019 contest (e.g., classifier accuracy has increased by + 8%).

Once the discourse facets have been correctly identified, synthetic descriptions of the RP tailored to each facet would be desirable. Faceted summaries are deemed as useful for gaining insights into specific aspects covered by the paper. In fact, exploring per-facet summaries helps readers to explore particular aspects with limited human effort (i.e., without perusing the entire scientific paper). Hence, we propose to generate a fixed-length summary per facet consisting of the most salient text spans in RP pertaining to that facet. To our best knowledgeFootnote 1 the discourse facet summarization task is new. We propose a machine learning solution based on regression methods. Specifically, we predict the overlap level between each text span in the RP and the expected per-facet summary. Notably, the results confirm the importance of using the pivot words to drive the summarization process (e.g., Rouge-2 F-measure between 0.30 and 0.40 on most of the analyzed facets). This reinforces the hypothesis that using the pivot words as text span descriptors is particularly effective in this particular scenario.

The innovative contribution of this paper can be summarized as follows:

-

A new machine learning approach, based on the analysis of the pivot words, to identifying the most likely discourse facet of each cited text span in the RP.

-

A new facet summarization task, which focuses on extracting summaries of the RP’s text spans pertaining to a specific facet.

-

A machine learning strategy to solve the newly proposed facet summarization task, which relies on regression models and also considers the presence of pivot words as predictive feature in the supervised models.

The rest of the paper is organized as follows. “Related works” and “Computational linguistics scientific document summarization shared tasks” sections overview the related literature and the official CL-SciSumm 2019 Shared Tasks, respectively. “The newly proposed task: discourse facet summarization” section formalizes the newly proposed faceted summarization task. “Presented methods” section thoroughly describes the presented method, while “Experimental results” section summarizes the experimental results. Finally, “Conclusions and future works” section draws conclusions and discusses future works.

Related works

This work is an extended version of the paper presented by La Quatra et al. (2019). The preliminary version describes a submission to the 2019 CL-SciSumm Shared tasks (Chandrasekaran et al. 2020), which is based on an ensemble of traditional classification and regression models. The submission achieved very good results on summarization task 2 (i.e., 1st out of 104 runs against the community summary), whereas got halfway ranks on tasks 1A and 1B (i.e., 36th out of 98 submitted runs for task 1A, 57th over 98 submitted runs for task 1B). The achieved results have pushed us, on one hand, to deepen the search of effective solutions for a specific task (i.e., task 1B: discourse facet classification) and, on the other hand, to explore further extensions of the original problem related to the area of scientific paper summarization. Compared to our previous submission, this extended version (1) presents a new methodology to identify the facets of each cited text span of the RP, (2) proposes a new task, called discourse facet summarization, (3) describes a method to solve the newly proposed task.

A huge body of work has been devoted to proposing new summarization algorithms. Summarization focuses on identifying the most significant content from the body of a document (Nenkova and McKeown 2012). Extractive methods pick existing parts of the original document, such as words, phrases, or sentences, whereas abstractive methods produce summaries including also newly generated content. Summarization techniques have already been applied to documents related to a variety of contexts, such as news articles (Cagliero et al. 2019; Giannakopoulos et al. 2015; Giannakopoulos 2013), tweets (Naik et al. 2017, 2018), learning documents (Cagliero et al. 2019; Baralis and Cagliero 2018), and scientific papers (Collins et al. 2017a; Nikolov et al. 2018). The latter application context (scientific paper summarization) is the main target of this work. Notice that, unlike news articles, scientific papers are usually enriched with bibliographic references. In our context, a citing paper contains a reference to a given cited one. A subset of sentences of the citing paper pertaining to a given citation will be denoted as citing sentences, whereas the sentences in the cited paper that are most likely related to the citation will be denoted as cited sentences. Scientific papers can be summarized in many different ways: (1) by the abstract and title that the author provides (Nikolov et al. 2018; Kim et al. 2016; Lloret et al. 2013), (2) by the text snippets in the citing papers that pertain to it (Schwartz and Hearst 2006; Nakov et al. 2004; Qazvinian and Radev 2010), (3) by the text snippets including the most representative content in a semantic link network (Sun and Zhuge 2018), (4) by the presentation slides (Sun and Zhuge 2018), or (5) by the highlight statements submitted along with the manuscript (Collins et al. 2017b). The contribution of this paper provides one step further towards the use of text spans in the cited papers surrounding a citation.

The task of summarizing a scientific paper using its corresponding set of citation sentences is called citation-based summarization (Qazvinian and Radev 2008). It entails aggregating all the citation sentences that cite a paper and ranking them based on various criteria (e.g., coherence, readability) (Abu-Jbara and Radev 2011). Unlike (Abu-Jbara and Radev 2011; Qazvinian and Radev 2008), in the CL-SciSumm Shared tasks and in the newly extension proposed in the present work the paper summary does not include citing sentence but only the sentences of the cited paper.

A pilot study of the Biomedical Summarization Track of the Text Analysis Conference 2014Footnote 2 indicates that most citations clearly refer to one or more specific aspects of the cited paper (usually Aim, Method, Result/Data, or Conclusion) (Ronzano and Saggion 2016). Considering this insight could help analysts to create more coherent citation-based summaries. They recommend to identify the cited text span first, then identify the facet, and finally create a unique summary covering all the aspects. The newly proposed facet summarization task differs from those presented in Ronzano and Saggion (2016) because it focuses on creating facet-specific, fixed-length summaries (a separate summary for each facet). Hence, each summary covers a separate aspect of the paper and provides deep knowledge about that aspect.

Citation-Sensitive In-Browser Summariser (CSIBS) systems (e.g.,Wan et al. 2009a, b, 2010) generate facet-weighted previews, which vary according to the section of the text that the user is reading. This approach is complementary to the method proposed for the faceted summarization task, as the facet-specific summaries can be provided as detailed overview.

The participants to the previous editions of the Computational Linguistic Scientific Document Summarization Shared Tasks have proposed different solutions to automatically identify the discourse facets in the reference papers. For example, the winners of the 2018 challenge edition (Jaidka et al. 2018) have proposed to predict the syntactic distance between the candidate and actual cited text spans using regression models. Parallel attempts have been devoted to applying binary classifiers that combine various text embedding features (Davoodi et al. 2018; Wang et al. 2018; Ma et al. 2018). For example, the approach presented in Wang et al. (2018) has integrated contextualized word vectors trained on GoogleNews and on the ACL Antology Network collections. Alternative solutions adopted advanced word- or sentence-based distance metrics such as the Word Mover’s Distance and the Earth Mover’s Distance (Li et al. 2018), the IDF-weighted Average Embedding based similarity, and the Smooth Inverse Frequency based similarity distances (Baruah and Kolla 2018). This paper proposes to use the concept of pivot words, proposed, with different formalizations, by Wan et al. (2008), Fu et al. (2019). As shown in “Experimental results” section, the solution to the facet classification task (1B) presented in this paper performs significantly better than the winner of the 2019 CL-SciSumm Shared task 1B.

Computational linguistics scientific document summarization shared tasks

This section introduces the official tasks of the fifth edition of the Computational Linguistics Scientific Document Summarization Shared Task (i.e., CL-SciSumm 2019) (Chandrasekaran et al. 2020). The proposed challenges focus on text mining and summarization of scientific papers and follow up on the tasks proposed in the previous edition (CL-SciSumm 2018 Kumar et al. 2018). The CL-SciSumm 2019 tasks’ descriptions, the approaches proposed by the participants, and the achieved results have been presented at the joint workshop on Bibliometric-enhanced IR and NLP for Digital Libraries (BIRNDL@SIGIR 2019).

Description of the data

The corpus of scientific papers released by the organizers of the CL-SciSumm 2019 Shared task consists of the full-text of 40 academic papers ranging over various topics (mostly from scientific research areas). The corpus is available online at https://github.com/WING-NUS/scisumm-corpus.

To support computational linguistics analyses, the text of each paper is partitioned into sentences (according to the presence of in-text punctuation marks) and sections (according to the original structure of the paper) (Jaidka et al. 2016). Furthermore, the information about the citation network is known. Specifically, for each citation the citing and the cited (reference) papers are enriched with the following information: (1) The text spans in the citing paper that pertain to the citation (i.e., hereafter denoted as citances). (2) The text span in the cited paper that are referenced by the citation. (3) The discourse facets of the link between citing and cited text spans, which belong to the following set of predefined categories: Aim, Hypothesis, Implication, Results, Method. The text spans (1) and (2) may include one or more consecutive sentences and may belong to many facets. Each paper contains, on average, 20 citations.

Official CL-SciSumm 2019 tasks

The goal of the CL-SciSumm 2019 Shared Task is threefold. First, given a Reference Paper, it aims at identifying the text spans that are referenced by each of the corresponding citances (hereafter denoted as task 1A). It entails automatically linking the text spans belonging to the reference and citing papers, respectively. Secondly, for each cited text span it identifies the discourse facet it belongs to (task 1B). It entails addressing a multi-label text classification problem (Tan et al. 2018). Lastly, it generates a unique summary per reference paper, consisting of its most salient text spans. It is a supervised text summarization problem, hereafter denoted as task 2. A more formal description of the proposed tasks is given below.

Notation Let rp be a reference scientific paper and let CP be the set of scientific papers citing rp (hereafter denoted as citing papers). Given an arbitrary citing paper \(\mathrm{cp}\in \mathrm{CP}\), let \(c_{\mathrm{CP}}\) = {\(c_1, c_2, \ldots , c_n\)} be the citances in cp that pertain to any citation to rp (i.e., the text spans where citations to rp are placed).

The CL-SciSumm summarization challenge is comprised of the following tasks

-

Task 1A: cited text span identification For each citance \(c_j\) (1\(\le j \le n\)), identify the spans of text rp(\(c_j\)) in the reference paper (hereafter denoted as cited text spans) that are most likely to be pertinent to \(c_j\). The cited text spans can be either a single sentence or a sequence of sentences.

-

Task 1B: discourse facet classification For each cited text span, identify the discourse facets it belongs to from a predefined set of facets.

-

Task 2: reference paper summarization Produce a short summary of the reference paper (no longer than 250 words), consisting of a selection of the most salient text spans.Footnote 3 Although the text spans included in the summary are not necessarily referenced by any citance, the summarization process should be driven by the knowledge extracted at the previous steps (i.e., the citation links generated as output of task 1A and the facets discovered for task 1B).

The paper summaries produced as output of task 2 are compared with the following three different types of summaries: (1) the abstract summary of the reference paper, which was written by the authors of the research paper. (2) the community summary, which collects all the referenced text spans in the reference paper. (3) a human-written summary, written by the annotators of the CL-SciSumm annotation effort.

The newly proposed task: discourse facet summarization

We propose to extend the set of the Computational Linguistics Scientific Document Summarization Shared Tasks with a new one called Discourse Facet Summarization. In coherence with the notation used by the task proponents (Chandrasekaran et al. 2020), we will also denote it as task 2B.

The goal is to produce a summary of the reference paper tailored to a specific discourse facet. Unlike in task 2, whose aim is to extract general-purpose summaries, in the newly proposed task each summary will consist of a selection of RP’s text spans containing the key information related to a given discourse facet.

Similar to other summarization tasks, the idea behind is to provide a synthetic overview on the RP content. The peculiarity of the proposed task is the use of facet labels to drive the selection of the summary content. As mentioned in Nenkova and McKeown (2012), facet-specific summaries can be explored to quickly grasp all aspects of a scientific paper with limited human effort.

Let \(\mathcal {F}\) be the set of predefined facets (i.e., Aim, Hypothesis, Implication, Results, Method) and let rp be the reference paper. The discourse facet summarization task entails generating facet-specific, fixed-length summaries \(\mathcal {S}\)(\(F_k\)) of rp, one for each facet in \(\mathcal {F}\). \(\mathcal {S}\)(\(F_k\)) consists of a selection of text spans in rp. Notice that the correct labeling produced by the facet classification is assumed to be unknown.

Presented methods

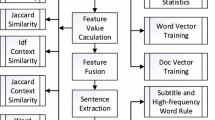

This section thoroughly describes the methods proposed to tackle both the official task 1B (cited text span classification) and the newly proposed task 2B (discourse facet summarization).

The section is organized as follows. “Text parsing and preprocessing” and “Feature engineering” sections describes the data preparation and feature engineering steps. “Discourse facet classification (Task 1B)” section presents the new methodology, based on pivot word extraction, to address task 1B. Finally, “Discourse facet summarization (Task 2B)” section presents the regression-based approach proposed to address the new task 2B.

Text parsing and preprocessing

We process the text of the scientific papers and the related citation network to tailor the input data to the subsequent analyses. First, we perform a parsing of the text, provided in xml format, and store the structure of the text (e.g., the organization of the text into sentences and sections). Then, the input text is tokenized into separate words and the less relevant or non-informative words (e.g., conjunctions, prepositions) are removed. Word tokenization and stop-word removal were based on English vocabulary provided by the Natural Language Toolkit (Bird et al. 2009). Such data are then used in the subsequent stages of the system both for the classification and the summarization task.

Feature engineering

The feature engineering step aims at generating a rich description of the cited and citing text spans. The selected features will be used to discriminate a sentence as pertinent and relevant for a given facet.

For each pair of cited and citing sentences the features for the classifier belongs to three different classes.

- \(S_1\):

-

Relative position of the cited text span in the RP

- \(S_2\):

-

Relative position of the citing text span in the CP

- \(S_3\):

-

Cited text span length (expressed in number of words)

- \(S_4\):

-

Presence of non-alphabetic characters in the cited text span

- \(S_5\):

-

Section where the cited text span is placed (encoded using the IMRaD standard Sollaci and Pereira 2004)

- \(P_1-P_n\):

-

Number of pivot words peculiar to facet \(F_k\) [1 \(\le\) k \(\le\) n] that occur in the cited text span

- \(P_{n+1}-P_{2n}\):

-

Number of pivot words peculiar to facet \(F_k\) [1 \(\le\) k \(\le\) n] that occur in the citing text span

- \(P^{\mathrm{W}2\mathrm{V}}_1-P^{\mathrm{W}2\mathrm{V}}_n\):

-

Distance between the cited text span and the pivot words peculiar to facet \(F_k\) [1 \(\le\) k \(\le\) n] in the Word2Vec latent space (Mikolov et al. 2013) (computed using the Word Mover Distance (WMD) Kusner et al. 2015)

- \(P^{\mathrm{W}2\mathrm{V}}_{n+1}-P^{\mathrm{W}2\mathrm{V}}_{2n}\):

-

Distance between the citing text span and the pivot words peculiar to facet \(F_k\) [1 \(\le\) k \(\le\) n] in the Word2Vec latent space (Mikolov et al. 2013) (computed using the Word Mover Distance (WMD) Kusner et al. 2015)

- PR:

-

PageRank (Page et al. 1999) of the cited text span in the SciBERT vector similarity graph (vector similarity is computed using the cosine similarity).

Features denoted as \(S_*\) describe structural and syntactical features related to the text spans. They are considered as they provide basic textual properties.

Features denoted as \(P_*\) and \(P^{\mathrm{W}2\mathrm{V}}_*\) indicate the syntactic and semantic coherence of the text span with a set of pivot words generated using the state-of-art approach presented by Fu et al. (2019). Pivot words are collections of words from all sentences peculiar to a specific facet. Hence, their probability of occurrence within a text is likely to be related to the membership of the text to that facet. The idea behind using pivot words in facet classification and summarization is to identify the units of text that are most discriminating while deciding the membership of a text span to a given facet. We extract a separate set of pivot words for each facet. Features \(P_1-P_{2n}\) indicate the number of occurrences of the pivot word set in each text span, while features \(P^{\mathrm{W}2\mathrm{V}}_{1}-P^{\mathrm{W}2\mathrm{V}}_{2n}\) denote the semantic similarity between the pivot word set and the text span in the Word2Vec latent space. Using two complementary text representations (i.e., frequency-based and Deep NLP-based) allows us to capture both syntactic and semantic pertinence of the text span to the facet under analysis. To tailor word embeddings to the context of scientific papers, the embedding vector representation of text are trained on the large collection of scientific papers introduced in Collins et al. (2017b).

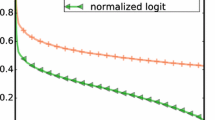

Feature PR indicates the centrality of a cited text span in the reference paper. It indicates the relative importance of a text span compared to all the other ones in the RP. The similarity graph consists of undirected weighted graph, where each node is a sentence in the RP while edges indicate that the two vector representations are similar in terms of SciBERT embedding vectors (Beltagy et al. 2019). SciBERT is an established sentence embedding model trained on a large corpus of scientific documents. For each sentence, the vector representation is extracted considering last hidden layer of the SciBERT architecture. Pairwise vector similarity is computed using the cosine similarity (Tan et al. 2018) and indicates the semantic relatedness between the two text snippets. The similarity scores are normalized per paper to range in the interval [0, 1]. Figure 1 shows the distribution of the similarity scores between sentence pairs across the analyzed data collection, which fits, to a good approximation, a Gaussian distribution, with mean 0.75 and standard deviation 0.1. Notice that in the graph-based representation the edges representing least similar sentences are kept to guarantee PageRank algorithm convergence.

Discourse facet classification (Task 1B)

For each cited text span we decide whether to assign it to a given facet using a classification approach. Specifically, the data model described in “Feature engineering” section is used to train a multi-class classifier. Let rp be an arbitrary reference paper and let \(\mathbf {C}\) be a target variable taking values in \(\mathcal {F}\). The classifier is an arbitrary function \(\mathcal {G}\): \(\mathrm{rp} \rightarrow \mathbf {C}\) indicating for each cited text span in rp the assigned facet.

To accomplish task 1B, we tested various classification methods (i.e., Gradient Boosting, Multi-Layer Perceptron, AdaBoost Tan et al. 2018). The considered classifiers are able to capture both linear and non-linear trends in the analyzed data.

Discourse facet summarization (Task 2B)

Given a facet \(F_k\) and a collection of reference papers, the facet summarization task entails learning a model able to extract a fixed-length, facet-specific summary of a new reference paper rp. The summary consists of a subset of rp’s text spans pertaining to facet \(F_k\).

Hence, we formulate the aforesaid problem as a regression task.

Regression target For each text span of the reference paper we first compute the full set of features defined in “Feature engineering” section. Then, we choose as target variable the level of overlap of the text span with the expected summary content using the Rouge-L precision (Lin and Hovy 2003).

Rouge is an established evaluation tool for summarization algorithms. It indicates the unit overlap (in terms of N-grams) between the generated and the expected summary. Rouge-L indicates the overlap between the longest common sub-sequence. Its use in evaluating supervised summarization techniques is established (Collins et al. 2017b).

Objective function The objective is to maximize the overlap between the text span and the expected summary.

Model application We iteratively pick, in a greedy way, the top-ranked sentences (i.e., the one maximizing the predicted overlap score). To generate summaries of the same paper tailored to different facet, a separate regression model is trained for each facet.

Tested models To accomplish task 2B, we used Linear Regression, Decision Tree Regressor, Random Forest Regressor, AdaBoost Regressor, Multi-Layer Perceptron Regressor, and Gradient Boosting Regressor (Tan et al. 2018).

Experimental results

We empirically analyzed the performance of the proposed approach on the benchmark dataset released for the CL-SciSumm 2019 Shared Task (Jaidka et al. 2019). All the experiments were run on a machine equipped with Intel® Xeon® X5650, 32 GB of RAM and running Ubuntu 18.04.1 LTS.

Experimental design

We tested the performance of the proposed approach on benchmark data provided by the organizers of the CL-SciSumm 2019 Shared Task. Specifically, we considered the Training-Set-2018 collection (https://github.com/WING-NUS/scisumm-corpus), which consists of 40 annotated papers. We applied an 75–25% hold-out validation strategy. Specifically, we divided it into two parts: 30 papers (along with the corresponding citation network) was used for training, whereas the remaining 10 for test. To make the results fully reproducible, we made the train-test dataset splits publicly available to the research community (see “Contribution to the research community” section).

Algorithms To accomplish both tasks we used the implementations available in the Scikit-Learn libraryFootnote 4 (Pedregosa et al. 2011) and we tested various parameter settings to suit them to the underlying data distributions.

To extract the pivot words we replicated the methodology described in Fu et al. (2019) to the best of our understanding starting from the implementation available in the official repository of the project.Footnote 5

Evaluation metrics We evaluated the system performance on task 1B using three established information retrieval measures, i.e., precision, recall, and F-measure (Leskovec et al. 2014). The precision is the fraction of cited text spans pertinent to the given facet among all the retrieved pertinent text spans, whereas the recall, is the fraction of pertinent text spans that have been retrieved over the total number of pertinent text spans. Finally, the F-measure is the harmonic mean of precision and recall. Since task 1B is a multi-class classification problem, we separately report (1) macro-average (i.e., the average over all the class), (2) micro-average (i.e., the average computed considering both true positives, false negatives and false positives), and (3) weighted average (i.e., the weighted average per class).

To evaluate the performance of the summarization approach on the newly proposed task 2B, we exploited F-measure of the Rouge-2 which denote the overlap in terms of bi-grams (Lin and Hovy 2003). For each facet we consider as reference summary the sentences of the community summary that have been classified as pertinent to the given facet.

Preliminary data characterization

We first analyzed the frequency of occurrence of the facet labels in the analyzed data. The distribution of the facets in the collection is reported in Table 1. It shows that using predictive models to predict Hypothesis is practically unfeasible, because the number of training data is too limited. Hence, hereafter we will report the results achieved only on the other facets.

We analyzed also the importance of the input features in the classification process using a standard feature weighting method.Footnote 6

Figure 2 summarizes the importance of different features in the classification task. Notice that, since we address a multi-class problem, each feature has multiple relevance scores, one for each candidate facet. Hence, due to the inherent characteristics of the addressed task, the scores reported in Fig. 2 are aggregated over all the facets.

The features related to the similarity with the pivot words in the Word2Vec space appear to be the most discriminating ones. This confirms the hypothesis that pivot words are potentially useful for classifying the cited text spans. However, notice that the simple count of the number of pivot words is weakly correlated with the target class as it is sensitive to the presence of noise in the input data.

Results on discourse facet classification

We compare the performance of the proposed approach, namely Pivot-Based Classification, the with that of (1) the best performing approach presented in the latest edition (2019) of the CL-SciSumm Shared Task 1B, i.e., CIST (Li et al. 2019)Footnote 7 and (2) the previous version of the proposed method (Poli2Sum), presented in La Quatra et al. (2019).

In Table 2 we report the results achieved on task 1B. The results were obtained by applying the model described in “Discourse facet classification (Task 1B)” section. The Weighted Average F-measure is around 8% higher than those of CIST and 14% higher than Poli2Sum (La Quatra et al. 2019). This indicates that the classifiers based on pivot words are able to identify the given facets more accurately than previous approaches.

Results on discourse facet summarization

We have compared the performance of multiple summarization models, each one trained using a different regression method. Each model is trained to predict, as target value, the Rouge-L precision score with respect to the reference summary. Figure 3 reports the F-Measure Rouge-2 scores. The bars coloured in blue indicate the performance of the regression models trained on the full feature set (see “Feature engineering” section). Conversely, the orange bars indicate the performance of the models trained by excluding the pivot word features. The comparison between blue and orange bars allows us to highlight the impact of pivot words on the summarizer performance. Excluding the pivot words results in a significant performance drop for all the considered facets and algorithms. This indicates that pivot words are of great importance even for tackling the summarization task.

In red we plotted also the performance of a baseline ranking method, which picks the top ranked sentences in order of decreasing PageRank score until the constraint on the maximum summary length is met. For the sake of clarity, in the plots we have reported only the Rouge score achieved by the best ranking function (i.e., the PageRank computed on the SciBERT vector similarity graph).

As expected, all the supervised methods outperform the baseline strategy. The MultiLayer Perceptron and the ensemble methods (ABR, RFR, GBR) achieved similar performance (Rouge-2 F-measure around 0.30).

Applicability of neural network models

In the previous evaluation we have considered just a simple fully-connected Neural Network Model, i.e., MultiLayer Perceptron (MLP). This kind of models require a relatively large number of training data to avoid data overfitting. To gain insights into the reliability of the Neural Network model, we have tested the performance of the simple MLP model on paper collections of various size. The results, for the most frequent facets, are shown in Fig. 4. By increasing the number of papers, the performance increases roughly linearly. Thus the models seem to be not affected by data overfitting.

Notice that as soon as a sufficient amount of training data will become available, various state-of-the-art deep summarization models (e.g., Cheng and Lapata 2016; Nallapati et al. 2017; Kedzie et al. 2018) will become eligible for tackling the newly proposed summarization task.

Qualitative evaluation

We validated the outcomes of the facet classification and summarization processes with the help of a domain expert. Table 3 reports some examples of facet annotations produced by the classification strategy. For example, the Method annotation was assigned to a cited text span describing the features used in the proposed methodology. Notice that the citing text also refers to the feature model. The Aim annotation was correctly assigned to a sentence where the authors clarify the objectives of their research work.

Table 4 compares the automatically generated and expected summaries. The automatically selected content reflects, to a large extent, the meaning of the expected summary.

Contribution to the research community

To foster further contributions on the newly proposed facet summarization task, at https://git.io/JvOe7 we have released (1) the list of pivot words extracted from the the Scisumm collection, (2) the facet summaries generated from Scisumm, (3) the automatic facet assignments from ScisummNet (Yasunaga et al. 2017, 2019) (i.e., a larger collection of 1000 papers, for which the manual annotations are missing).

Conclusions and future works

This paper addresses the problems of assigning facets to cited texts spans and summarizing the cited paper with text spans pertaining to a specific facet. The classification problem is part of the CL-Scisumm Shared tasks. We propose a new machine learning approach, based on the concept of pivot words, that has achieved performance superior to the best performing participant to the 2019 CL-Scisumm Shared Task 1B. The facet summarization problem addressed in this paper is new (to our best knowledge). We deem the newly proposed task as relevant to provide a facet-specific overview of the reference paper.

The results of the 2019 CL-Scisumm have highlighted the great potential of deep learning methods in tackling summarization problems tailored to the academic domain (Chandrasekaran et al. 2020). In light of these results, as a future work we plan to perform a semi-automatic, human-driven validation method that allows us to use the larger ScisummNet collection (1000 papers Yasunaga et al. 2017, 2019). This would enable the use of various deep summarization methods to address task 2B.

Notes

The formulated task has not been included among the official tasks of the CL-SciSumm challenges.

This task was optional in the BIRNDL CL-SciSumm 2019 challenge.

We exploit the feature importance function of Scikit-Learn to measure the relevance of each input feature to the classification phase.

The approach presented by Li et al. (2019) has been re-implemented at the best of the authors’ understanding.

References

Abu-Jbara, A., & Radev, D. (2011). Coherent citation-based summarization of scientific papers. In Proceedings of the 49th annual meeting of the association for computational linguistics: Human language technologies —HLT ’11 (Vol. 1, pp. 500–509). USA: Association for Computational Linguistics.

Baralis, E., & Cagliero, L. (2018). Highlighter: Automatic highlighting of electronic learning documents. IEEE Transactions on Emerging Topics in Computing, 6(1), 7–19. https://doi.org/10.1109/TETC.2017.2681655.

Baruah, G., & Kolla, M. (2018). Klick labs at CL-SciSumm 2018. In BIRNDL@SIGIR, “CEUR” workshop proceedings (Vol. 2132, pp. 134–141). CEUR-WS.org.

Beltagy, I., Lo, K., & Cohan, A. (2019). Scibert: A pretrained language model for scientific text. In Proceedings of the 2019 conference on empirical methods in natural language processing and the 9th international joint conference on natural language processing (EMNLP-IJCNLP) (pp. 3606–3611).

Bird, S., Klein, E., & Loper, E. (2009). Natural language processing with Python: Analyzing text with the natural language toolkit. Sebastopol: O’Reilly Media, Inc.

Cagliero, L., Farinetti, L., & Baralis, E. (2019). Recommending personalized summaries of teaching materials. IEEE Access, 7, 22729–22739. https://doi.org/10.1109/ACCESS.2019.2899655.

Cagliero, L., Garza, P., & Baralis, E. (2019). ELSA: A multilingual document summarization algorithm based on frequent itemsets and latent semantic analysis. ACM Transactions on Information Systems, 37(2), 21:1–21:33. https://doi.org/10.1145/3298987.

Chandrasekaran, M. K., Yasunaga, M., Radev, D., Freitag, D., & Kan, M. -Y. (2019). Overview and results: CL-SciSumm SharedTask. In Proceedings of the 4th joint workshop on bibliometric-enhanced information retrieval and natural language processing for digital libraries (BIRNDL 2019) @ SIGIR 2019 (p. 2019). Paris: France.

Cheng, J., & Lapata, M. (2016). Neural summarization by extracting sentences and words. In Proceedings of the 54th annual meeting of the association for computational linguistics (Long papers) (Vol. 1, pp. 484–494). Berlin, Germany: Association for Computational Linguistics. https://doi.org/10.18653/v1/P16-1046. https://www.aclweb.org/anthology/P16-1046.

Collins, E., Augenstein, I., & Riedel, S. (2017). A supervised approach to extractive summarisation of scientific papers. In Proceedings of the 21st conference on computational natural language learning (CoNLL 2017) (pp. 195–205). Vancouver, Canada: Association for Computational Linguistics. https://doi.org/10.18653/v1/K17-1021. https://www.aclweb.org/anthology/K17-1021.

Collins, E., Augenstein, I., & Riedel, S. (2017). A supervised approach to extractive summarisation of scientific papers. In Proceedings of the 21st conference on computational natural language learning (CoNLL 2017) (pp. 195–205).

Davoodi, E., Madan, K., & Gu, J. (2018). CLSciSumm shared task: On the contribution of similarity measure and natural language processing features for citing problem. In BIRNDL@SIGIR, “CEUR” workshop proceedings (Vol. 2132, pp. 96–101). CEUR-WS.org.

Fu, Y., Zhou, H., Chen, J., & Li, L. (2019). Rethinking text attribute transfer: A lexical analysis. In K. van Deemter, C. Lin, & H. Takamura (Eds.), Proceedings of the 12th international conference on natural language generation, INLG 2019, October 29–November 1, 2019 (pp. 24–33). Tokyo, Japan: Association for Computational Linguistics. https://aclweb.org/anthology/papers/W/W19/W19-8604/.

Giannakopoulos, G. (2013). Multi-document multilingual summarization and evaluation tracks in ACL 2013 multiling workshop. In Proceedings of the multiling 2013 workshop on multilingual multi-document summarization (pp. 20–28). Association for Computational Linguistics. http://www.aclweb.org/anthology/W13-3103.

Giannakopoulos, G., Kubina, J., Conroy, J. M., Steinberger, J., Favre, B., Kabadjov, M. A., Kruschwitz, U., & Poesio, M. (2015). MultiLing 2015: Multilingual summarization of single and multi-documents, on-line fora, and call-center conversations. In Proceedings of the “SIGDIAL” 2015 conference, the 16th annual meeting of the special interest group on discourse and dialogue, 2–4 September 2015 (pp. 270–274). Prague, Czech Republic. http://aclweb.org/anthology/W/W15/W15-4638.pdf.

Jaidka, K., Chandrasekaran, M. K., Rustagi, S., & Kan, M. -Y. (2016). Overview of the CL-SciSumm 2016 shared task. In Proceedings of joint workshop on bibliometric-enhanced information retrieval and NLP for digital libraries.

Jaidka, K., Yasunga, M., Chandrasekaran, M., Radev, D., & Kan, M. -Y. (2018). The CL-SciSumm shared task 2018: Results and key insights (pp. 1–10).

Jaidka, K., Yasunaga, M., Chandrasekaran, M. K., Radev, D., & Kan, M. Y. (2019). The CL-SciSumm shared task 2018: Results and key insights. arXiv preprint arXiv:1909.00764.

Kedzie, C., McKeown, K., & Daumé III, H. (2018). Content selection in deep learning models of summarization. In Proceedings of the 2018 conference on empirical methods in natural language processing (pp. 1818–1828).

Kim, M., Moirangthem, D. S., & Lee, M. (2016). Towards abstraction from extraction: Multiple timescale gated recurrent unit for summarization. In Rep4NLP@ACL (pp. 70–77). Association for Computational Linguistics.

Kumar Chandrasekaran, M., Jaidka, K., & Mayr, P. (2018). Joint workshop on bibliometric-enhanced information retrieval and natural language processing for digital libraries (BIRNDL 2018). In The 41st international ACM SIGIR conference on research & development in information retrieval, SIGIR ’18 (pp. 1415–1418). New York, NY, USA: ACM. https://doi.org/10.1145/3209978.3210194.

Kusner, M. J., Sun, Y., Kolkin, N. I., & Weinberger, K. Q. (2015). From word embeddings to document distances. In Proceedings of the 32nd international conference on international conference on machine learning—ICML’15 (Vol. 37, pp. 957-966). JMLR.org.

La Quatra, M., Cagliero, L., & Baralis, E. (2019). Poli2sum@CL-SciSumm-19: Identify, classify, and summarize cited text spans by means of ensembles of supervised models (pp. 233–246). https://www2.scopus.com/inward/record.uri?eid=2-s2.0-85071194418&partnerID=40&md5=e8f54672c3477c87a07010397cc60d28.

Leskovec, J., Rajaraman, A., & Ullman, J. D. (2014). Mining of massive datasets (2nd ed.). New York, NY: Cambridge University Press.

Li, L., Chi, J., Chen, M., Huang, Z., Zhu, Y., & Fu, X. (2018). CIST@CLSciSumm-18: Methods for computational linguistics scientific citation linkage, facet classification and summarization. In BIRNDL@SIGIR, “CEUR” workshop proceedings (Vol. 2132, pp. 84–95). CEUR-WS.org.

Li, L., Zhu, Y., Xie, Y., Huang, Z., Liu, W., Li, X., & Liu, Y. (2019). Cist@ CLSciSumm-19: Automatic scientific paper summarization with citances and facets. In BIRNDL@SIGIR.

Lin, C. -Y., & Hovy, E. (2003). Automatic evaluation of summaries using N-gram co-occurrence statistics. In Proceedings of the North American chapter of the association for computational linguistics on human language technology (Vol. 1, pp. 71–78).

Lloret, E., Romá-Ferri, M. T., & Palomar, M. (2013). Compendium: A text summarization system for generating abstracts of research papers. Data & Knowledge Engineering, 88, 164–175. https://doi.org/10.1016/j.datak.2013.08.005.

Ma, S., Jin, X., & Zhang, C. (2018). Automatic identification of cited text spans: A multi-classifier approach over imbalanced dataset. Scientometrics, 116(2), 1303–1330.

Mikolov, T., Sutskever, I., Chen, K., Corrado, G. S., & Dean, J. (2013). Distributed representations of words and phrases and their compositionality. In Advances in neural information processing systems (pp. 3111–3119).

Naik, A. P., & Bojewar, S. (2017). Tweet analytics and tweet summarization using graph mining. In 2017 international conference of electronics, communication and aerospace technology (ICECA) (Vol. 1, pp. 17–21). https://doi.org/10.1109/ICECA.2017.8203674.

Naik, S., Lade, S., Mamidipelli, S., & Save, A. (2018). Tweet summarization: A new approach. In 2018 second international conference on inventive communication and computational technologies (ICICCT) (pp. 1022–1025). https://doi.org/10.1109/ICICCT.2018.8473327.

Nakov, P. I., Schwartz, A. S., & Hearst, M. A. (2004). Citances: Citation sentences for semantic analysis of bioscience text. In In Proceedings of the SIGIR’04 workshop on search and discovery in bioinformatics.

Nallapati, R., Zhai, F., & Zhou, B. (2017). Summarunner: A recurrent neural network based sequence model for extractive summarization of documents. In Proceedings of the thirty-first AAAI conference on artificial intelligence, AAAI’17 (pp. 3075–3081). AAAI Press.

Nenkova, A., & McKeown, K. (2012). A survey of text summarization techniques. In C. C. Aggarwal & C. Zhai (Eds.), Mining text data (pp. 43–76). Berlin: Springer.

Nikolov, N. I., & Pfeiffer, M., & Hahnloser, R. H. R. (2018). Data-driven summarization of scientific articles. In Proceedings of the 7th international workshop on mining scientific publications, LREC 2018.

Ovadia, S. (2014). ResearchGate and Academia.edu: Academic social networks. Behavioral & Social Sciences Librarian, 33(3), 165–169. https://doi.org/10.1080/01639269.2014.934093.

Page, L., Brin, S., Motwani, R., & Winograd, T. (1999). The PageRank citation ranking: Bringing order to the web, Technical report. Stanford InfoLab.

Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., et al. (2011). Scikit-learn: Machine learning in Python. Journal of Machine Learning Research, 12, 2825–2830.

Qazvinian, V., & Radev, D. R. (2008). Scientific paper summarization using citation summary networks. In Proceedings of the 22nd international conference on computational linguistics (Coling 2008) (pp. 689–696). Manchester, UK: Coling 2008 Organizing Committee. https://www.aclweb.org/anthology/C08-1087.

Qazvinian, V., & Radev, D. R. (2010). Identifying non-explicit citing sentences for citation-based summarization. In Proceedings of the 48th annual meeting of the association for computational linguistics, ACL ’10 (pp. 555–564). USA: Association for Computational Linguistics.

Ronzano, F., & Saggion, H. (2016). An empirical assessment of citation information in scientific summarization. In E. Métais, F. Meziane, M. Saraee, V. Sugumaran, & S. Vadera (Eds.), Natural language processing and information systems (pp. 318–325). Cham: Springer.

Saggion, H., & Ronzano, F. (2017). Scholarly data mining: Making sense of scientific literature. In 2017 ACM/IEEE joint conference on digital libraries (JCDL) (pp. 1–2). https://doi.org/10.1109/JCDL.2017.7991622.

Schwartz, A. S., & Hearst, M. (2006). Summarizing key concepts using citation sentences. In Proceedings of the HLT-NAACL BioNLP workshop on linking natural language and biology, LNLBioNLP ’06 (pp. 134–135). USA: Association for Computational Linguistics.

Sollaci, L. B., & Pereira, M. G. (2004). The introduction, methods, results, and discussion (IMRAD) structure: A fifty-year survey. Journal of the Medical Library Association, 92(3), 364.

Sun, X., & Zhuge, H. (2018). Summarization of scientific paper through reinforcement ranking on semantic link network. IEEE Access, 6, 40611–40625. https://doi.org/10.1109/ACCESS.2018.2856530.

Tan, P. N., Steinbach, M., Karpatne, A., & Kumar, V. (2018). Introduction to data mining (2nd ed.). New York: Pearson.

Wan, S., Dale, R., Dras, M., & Paris, C. (2008). Seed and grow: Augmenting statistically generated summary sentences using schematic word patterns. In Proceedings of the 2008 conference on empirical methods in natural language processing (pp. 543–552).

Wan, S., Paris, C., & Dale, R. (2009). Whetting the appetite of scientists: Producing summaries tailored to the citation context. In Proceedings of the 9th ACM/IEEE-CS joint conference on digital libraries (pp. 59–68). ACM.

Wan, S., Paris, C., & Dale, R. (2010). Invited paper: Supporting browsing-specific information needs: Introducing the citation-sensitive in-browser summariser. Web Semantics, 8(2–3), 196–202. https://doi.org/10.1016/j.websem.2010.03.002.

Wan, S., Paris, C., Muthukrishna, M., & Dale, R. (2009). Designing a citation-sensitive research tool: An initial study of browsing-specific information needs. In Proceedings of the 2009 workshop on text and citation analysis for scholarly digital libraries (NLPIR4DL) (pp. 45–53). Suntec City, Singapore: Association for Computational Linguistics. https://www.aclweb.org/anthology/W09-3606.

Wang, P., Li, S., Wang, T., Zhou, H., & Tang, J. (2018). “NUDT” @ CLSciSumm-18. In Proceedings of the 3rd joint workshop on bibliometric-enhanced information retrieval and natural language processing for digital libraries “(BIRNDL” 2018) co-located with the 41st international “ACM” “SIGIR” conference on research and development in information retrieval “(SIGIR” 2018) (pp. 102–113). Ann Arbor, USA.

Yasunaga, M., Kasai, J., Zhang, R., Fabbri, A., Li, I., Friedman, D., & Radev, D. (2019). ScisummNet: A large annotated corpus and content-impact models for scientific paper summarization with citation networks. In Proceedings of AAAI 2019.

Yasunaga, M., Zhang, R., Meelu, K., Pareek, A., Srinivasan, K., & Radev, D. R. (2017). Graph-based neural multi-document summarization. In Proceedings of CoNLL 2017.

Acknowledgements

The research leading to these results has been partly funded by the Smart-Data@PoliTO center for Big Data and Machine Learning technologies. Computational resources were provided by HPC@POLITO, a project of Academic Computing within the Department of Control and Computer Engineering at the Politecnico di Torino (http://www.hpc.polito.it).

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

La Quatra, M., Cagliero, L. & Baralis, E. Exploiting pivot words to classify and summarize discourse facets of scientific papers. Scientometrics 125, 3139–3157 (2020). https://doi.org/10.1007/s11192-020-03532-3

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11192-020-03532-3