Abstract

The ratio of the total number of citations to the total number of cited papers was called “Garfield’s Constant” in some of the earlier works of Eugene Garfield. Later, he himself realized that the ratio changes over time, but he remained confident that some deeper regularity might be found behind it. In the present paper, a systematic analysis of this indicator, the Garfield Ratio, is attempted, and its application in journal-level analysis is presented.


Introduction

The term “Garfield’s Constant” was coined semi-self-ironically by Eugene Garfield in a Current Contents essay in 1976 (Garfield 1976). The concept itself had already appeared in Garfield’s classic 1972 Science paper (Garfield 1972), with reference to the Science Citation Index 1970 Guide & Journal Lists (Science Citation Index 1971). In Garfield’s own words: “By the time we had completed the 1964 Science Citation Index (SCI), we were aware that there was a surprising near-constancy in the ratio of 1.7 between references processed each year and the number of different items cited by those references. Very early we began to call the 1.7 ratio ‘the citation constant’” (Garfield 1976).

Garfield was confident that some deeper regularity might be found behind this ‘constant’: “Obviously a changing number cannot be called a constant. But if the SCI were a real random sample of the total literature or achieved ‘complete’ coverage, we then would observe a constant, I believe, or at least be able to explain why we didn’t” (Garfield 1976).

Garfield remained preoccupied with the near-constancy of the citations-per-cited-paper ratio for a long time. In 1998, he wrote: “Another modest contribution I made to the microtheory of citation is Garfield’s constant. Actually, we know that this ‘constant’ is really a ratio. That ratio is remarkably ‘stable’ considering how much the literature has grown. Due to continuous growth of source journal coverage and increasing references cited per paper, the ratio of citations to published papers increased about 75% from 1945 to 1995—from 1.33 to 2.25 over the past 50 years. It is the inflation of the literature which increases the ratio each year” (Garfield 1998).

Although Garfield’s Constant has been mentioned a few times in the literature, it was mainly treated as a historical curiosity (Bensman 2007) or as just another example of the various laws of bibliometrics (Holden et al. 2005). Some citations to Garfield (1976) were found to refer to passages of the essay other than those concerning the constant (Rushton and Endler 1979; van Leeuwen 2012).

In the present paper, we revisit Garfield’s idea in an attempt to breathe new life into it, or else to let it sleep a while longer until the real Prince comes (Braun et al. 2010).

The number of citations per cited publication indicator

Irrespective of its ‘near-constancy’ property, the number of citations per cited publication indicator deserves attention in its own right. Whether used for individuals, groups, journals or countries, it has a certain ‘elitist’ feature: it completely disregards uncited papers, so it does not punish unsuccessful attempts and thereby encourages risk-taking. In this respect it behaves similarly to the h-index; on the other hand, it fully takes into account the actual citation rates of the cited publications, a feature whose absence is often criticized in the h-index (see e.g., Alonso et al. 2009). It would therefore be an obvious and straightforward choice whenever these features are preferred.
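To make the contrast concrete, here is a minimal Python sketch (our illustration, not taken from the cited works) that computes the citations per cited publication indicator, the ordinary mean citation rate and the h-index for small hypothetical citation-count lists. The function names and the numbers are invented for the example.

```python
from typing import Sequence


def citations_per_cited(counts: Sequence[int]) -> float:
    """Citations per cited publication: uncited papers are simply left out."""
    cited = [c for c in counts if c > 0]
    return sum(cited) / len(cited) if cited else 0.0


def mean_citation_rate(counts: Sequence[int]) -> float:
    """Ordinary mean: every paper, cited or not, enters the denominator."""
    return sum(counts) / len(counts) if counts else 0.0


def h_index(counts: Sequence[int]) -> int:
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(counts, reverse=True)
    # With counts in decreasing order, "c >= rank" holds only for a prefix,
    # so counting the papers that satisfy it gives h.
    return sum(1 for rank, c in enumerate(ranked, start=1) if c >= rank)


# Hypothetical publication sets (citation counts per paper).
base = [10, 5, 3, 1]
with_uncited = base + [0, 0, 0, 0]  # same papers plus four uncited attempts
one_star = [100, 5, 3, 1]           # top paper far more highly cited

for name, counts in [("base", base), ("with_uncited", with_uncited), ("one_star", one_star)]:
    print(name, citations_per_cited(counts), mean_citation_rate(counts), h_index(counts))
# base 4.75 4.75 3
# with_uncited 4.75 2.375 3
# one_star 27.25 27.25 3
```

Adding uncited papers halves the mean but leaves the citations per cited publication value untouched, while the h-index ignores the extra citations of the top paper, which the per-cited indicator fully reflects.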

Nevertheless, the number of citations per cited publication indicator has been used quite infrequently in practical evaluations, mainly in papers on rather peripheral topics: New Zealand psychology (White and White 1977; White 1979), Nordic cancer research (Luukonen-Gronow and Suutarinen 1988), Croatian journals (Andreis and Jokic 2008) or Polish history (Kolasa 2012), to mention a few.

Garfield himself also used it, under the name “cited impact”, in a rather specialized study of crystallography research, without any reference to Garfield’s Constant (Garfield 1990).

A specific technical advantage of the number of citations per cited publication indicator over other citation averages (e.g., the impact factor) is that it does not require the total number of papers (“citable items”), which can often be a substantial source of uncertainty. Non-citable items remain uncited and are thereby automatically excluded from the calculation.
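A minimal Python sketch of this point, using a hypothetical journal-year in which it is unclear whether three editorials count as citable items. As the text assumes, these non-citable items are uncited. The item IDs and citation counts are invented for illustration; exact fractions are used so the values can be read off directly.

```python
from fractions import Fraction

# Hypothetical citation counts per item: "A*" are research articles,
# "E*" are editorials whose "citable item" status is debatable (and uncited).
counts = {"A1": 12, "A2": 7, "A3": 0, "A4": 5, "E1": 0, "E2": 0, "E3": 0}
total = sum(counts.values())  # 24 citations

# Impact-factor-style mean: the result depends on the citable-item decision.
mean_if_editorials_citable = Fraction(total, len(counts))  # 24/7
n_articles = sum(1 for item in counts if item.startswith("A"))
mean_if_articles_only = Fraction(total, n_articles)        # 24/4

# Citations per cited item: the denominator counts items with at least one
# citation, so the article/editorial classification is never consulted.
n_cited = sum(1 for c in counts.values() if c > 0)
per_cited = Fraction(total, n_cited)                       # 24/3

print(mean_if_editorials_citable, mean_if_articles_only, per_cited)  # 24/7 6 8
```

The two impact-factor-style means differ only because of a classification decision, whereas the per-cited value needs no such decision.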

The Garfield Factor and the Garfield Ratio

The term “Garfield Factor” was proposed by Vinkler (2000, 2012) to distinguish the original Journal Citation Reports journal impact factor (the number of citations in a given year to items published in the journal in the two previous years, divided by the number of citable items published in those years) from various other variants of mean journal citation rates (e.g., with publication and/or citation windows different from the original). In a similar vein, we take the liberty of calling the ratio defined by Garfield (the number of citations in a given year per cited item published in all previous years) the Garfield Ratio.
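In symbols, the two definitions can be restated as follows. The notation is ours, introduced only for this restatement: c_y(i) is the number of citations item i receives in year y, P_t is the set of items published in year t, A_t is the number of citable items published in year t, and C_y(X) is the total number of year-y citations to a set of items X.

```latex
\begin{aligned}
C_y(X) &= \sum_{i \in X} c_y(i), \\
\mathrm{GF}_y &= \frac{C_y\left(P_{y-1} \cup P_{y-2}\right)}{A_{y-1} + A_{y-2}}, \\
\mathrm{GR}_y &= \frac{C_y\left(\bigcup_{t<y} P_t\right)}
  {\left|\left\{\, i \in \bigcup_{t<y} P_t : c_y(i) > 0 \,\right\}\right|}.
\end{aligned}
```

The two indicators thus differ both in the publication window (the two previous years versus all previous years) and in the denominator (citable items versus items actually cited in year y).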

The Garfield Ratio values of the complete Science Citation Index (SCI) database were published until 2000 in the Comparative Statistical Summary section of the Citation Index volumes (Institute for Scientific Information 2001). They are displayed in Fig. 1. Except for the decade 1960–1970 and the first half of the 1980s, the overall Garfield Ratio increased continuously. In this sense, the values found in the SCI 1970 volume may have been deceptive, as they suggested Garfield’s “constant” hypothesis.

To compare the time dependence of the Garfield Ratio with that of a familiar indicator, we also plotted the annual average impact factor (Garfield Factor) values in Fig. 1. The Garfield Ratio increases at a slower rate and fluctuates less than the Garfield Factor. Apparently, the ever-increasing publication window (from the indefinite past up to the citation year) lends the indicator a certain inertia and stability.

Garfield Ratio as journal indicator

The Garfield Ratio can also be determined for each journal separately, by dividing the total number of citations received in a given year by all papers of the journal by the number of its cited papers. The features of this indicator are partly similar to and partly markedly different from those of the usual journal citation indicators, such as the Garfield Factor (JCR Impact Factor) or the h-index (Braun et al. 2005, 2006).
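A minimal Python sketch of the journal-level calculation, assuming the raw input is one (journal, cited item ID) pair per citation given in the citing year, e.g. as extracted from the reference lists of all citing papers. The record format, the function name and the sample data are hypothetical. Only citation records are needed: uncited papers never appear in them, so neither the number of published nor the number of citable papers is required.

```python
from collections import Counter, defaultdict


def garfield_ratio_by_journal(cited_refs: list[tuple[str, str]]) -> dict[str, float]:
    """Garfield Ratio per journal for one citing year.

    cited_refs holds one (journal, cited_item_id) pair per citation made in
    that year (hypothetical record format).
    """
    per_item = Counter(cited_refs)              # citations per (journal, item)
    totals: dict[str, int] = defaultdict(int)   # citations per journal
    n_cited: dict[str, int] = defaultdict(int)  # distinct cited items per journal
    for (journal, _item), n in per_item.items():
        totals[journal] += n
        n_cited[journal] += 1
    return {j: totals[j] / n_cited[j] for j in totals}


# Hypothetical citation records for one year.
refs = [
    ("J1", "p1"), ("J1", "p1"), ("J1", "p2"),
    ("J2", "q1"), ("J2", "q1"), ("J2", "q1"), ("J2", "q2"),
]
print(garfield_ratio_by_journal(refs))  # {'J1': 1.5, 'J2': 2.0}
```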

Some features of the Garfield Ratio, the Garfield Factor and the SCImago version of the h-index (the “journal’s number of articles (h) that have received at least h citations over the whole period”; as published at www.scimagojr.com/journalrank.php) are compared in Table 1.

The table illustrates that a few simple indicators can fulfil various requirements, and the Garfield Ratio may be a reasonable choice for certain evaluation tasks.

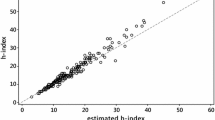

The Garfield Ratios (GR) of more than 5000 journals were calculated from the Web of Science data for the year 2000. This year was chosen simply because of data availability. For the same year, the Garfield Factors (GF) from the Journal Citation Reports and the SCImago h-index values were also compiled. Figures 2 and 3 show the correlation of the Garfield Ratio with the other two journal citation indicators. The correlation between the GR and the GF is strong, while that between the GR and the h-index is much weaker (in fact, weaker than that between the GF and the h-index).
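The paper does not state which correlation coefficient underlies Figs. 2 and 3. As a hedged sketch, the Python code below computes both the Pearson coefficient and a Spearman rank coefficient (tied values receive average ranks) for paired journal indicator values; a rank coefficient is often preferred for skewed citation indicators. The function names are ours, and the sample values are hypothetical, not data from the paper.

```python
import math
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of paired values (both series must be non-constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def average_ranks(values: Sequence[float]) -> list[float]:
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of positions i..j, shifted to 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical paired values for five journals (not data from the paper).
gr = [3.1, 5.4, 2.2, 8.0, 4.7]
gf = [1.2, 2.9, 0.8, 4.1, 3.0]
print(pearson(gr, gf), spearman(gr, gf))
```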

More telling than the mere scatter plots is the list of top-ranked journals according to the various indicators (Table 2).

Clearly, the Garfield Ratio favors review journals as much as, or even more than, the Garfield Factor does: 11 of them are among the top 20. These journals are evidently undervalued in the h-index ranking because of their small size. Journals ranked significantly higher by the Garfield Ratio than by the Garfield Factor may contain a higher percentage of citable but uncited papers, which lowers the mean citation rate but leaves the citations per cited paper indicator unaffected. The journals that appear in the top 20 of all three rankings deserve to be named in full: Cell, Nature Genetics and Nature Medicine. They are truly the crème de la crème.
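The reasoning about citable but uncited papers can be made explicit with a simple identity (our notation): for a set of N papers that receive C citations in total, of which N_cited papers are cited at least once, the mean citation rate factors into the citations per cited paper and the cited share s.

```latex
\bar{c} \;=\; \frac{C}{N}
\;=\; \underbrace{\frac{C}{N_{\text{cited}}}}_{\text{citations per cited paper}}
\cdot \underbrace{\frac{N_{\text{cited}}}{N}}_{s}
\qquad\Longrightarrow\qquad
\frac{C}{N_{\text{cited}}} = \frac{\bar{c}}{s}
```

For example, two journals with the same mean of 4 citations per paper but cited shares of 0.8 and 0.4 would have citations per cited paper of 5 and 10, respectively. The identity holds exactly only when both ratios use the same publication and citation windows; since the Garfield Factor uses a two-year window and counts citable items, it serves here only as a rough guide.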

The Garfield Ratio at individual journal level

We saw in Fig. 1 that, except for two short periods, the overall Garfield Ratio is indeed not constant but increases persistently. This does not necessarily mean that the Garfield Ratio of every single journal follows a similar pattern: the coverage of the database may well change in a way that favors journals with higher Garfield Ratios. A closer look at some examples may help to clarify the situation.
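The coverage effect can be illustrated with a toy Python sketch using hypothetical numbers: two journals whose own ratios never change, with the database-level value taken as total citations over total cited items. Adding the higher-ratio journal to the coverage raises the database-level value although neither journal changes. The sketch assumes that each cited paper belongs to exactly one journal, so the pooled ratio is a cited-item-weighted mean of the journal ratios.

```python
def pooled_ratio(journals: list[tuple[int, int]]) -> float:
    """Database-level ratio: total citations divided by total cited items.

    Each tuple is (citations, cited_items) for one journal. Assuming every cited
    paper belongs to exactly one journal, this equals the cited-item-weighted
    mean of the individual journal ratios.
    """
    total_citations = sum(c for c, _ in journals)
    total_cited = sum(n for _, n in journals)
    return total_citations / total_cited


# Hypothetical journals whose own ratios stay fixed from year to year.
low = (200, 100)   # ratio 2.0
high = (400, 100)  # ratio 4.0

print(pooled_ratio([low]))        # 2.0  (coverage: low-ratio journal only)
print(pooled_ratio([low, high]))  # 3.0  (coverage extended to the high-ratio journal)
```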

Figures 4, 5 and 6 present the time dependence of the Garfield Ratio and the Garfield Factor for the journals Scientometrics, Journal of Radioanalytical and Nuclear Chemistry and Journal of Membrane Biology (representing the social, physical and biomedical sciences, respectively).

We are well aware that three examples do not allow for any far-reaching conclusions, so we only record our initial impressions.

- The Garfield Ratio and the Garfield Factor run roughly parallel. Both indicators reflect, in a sense, the current “citation potential” of the topic. This may be highlighted by the launch of a new publication channel in the field: in the case of Scientometrics, the Journal of Informetrics, which was established in 2007 and soon became the second largest citing source of Scientometrics after Scientometrics itself.

- The Garfield Ratio is more stable and less prone to fluctuations than the Garfield Factor; over decade-long periods it may show “near-constant” behavior.

- Although the Garfield Ratio shows an overall increasing tendency in two of the three examples, the case of the Journal of Membrane Biology shows that a persistent decrease is also possible. This does not necessarily mean a loss of interest in the topic. The frequency of the key term “membrane biology” increased steadily over the same period, but the literature is extremely scattered, with the 205 papers published in 155 different journals.
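The paper does not specify how stability was judged. One simple, hedged way to quantify the impression is the mean absolute year-to-year relative change of each series, sketched in Python below. The function name and the short series are hypothetical and are not the data behind Figs. 4, 5 and 6.

```python
from typing import Sequence


def mean_abs_relative_change(series: Sequence[float]) -> float:
    """Average of |x_t / x_(t-1) - 1| over consecutive years (needs >= 2 values)."""
    if len(series) < 2:
        raise ValueError("need at least two yearly values")
    changes = [abs(curr / prev - 1) for prev, curr in zip(series, series[1:])]
    return sum(changes) / len(changes)


# Hypothetical yearly values for one journal (not taken from the paper).
garfield_ratio = [2.0, 2.1, 2.0, 2.2]
garfield_factor = [1.0, 1.5, 1.0, 1.6]

print(round(mean_abs_relative_change(garfield_ratio), 3))   # 0.066
print(round(mean_abs_relative_change(garfield_factor), 3))  # 0.478
```

A lower value indicates a smoother series. Because the measure is scale-free, it allows indicators of different magnitudes to be compared.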

Concluding remarks

To become such an outstandingly successful and influential personality as Eugene Garfield turned out to be requires a long list of extraordinary virtues. Among them, perspicacity and foresight were his particular strengths. It is no mere chance that he can be considered the forefather of so many later developments in information science and technology. Therefore, even ideas that he later modified or partly withdrew are worth serious consideration.

Garfield’s Constant changed over time, as Garfield himself admitted, and he preferred to call it a ratio. In this paper we have shown that the Garfield Ratio (the number of citations per cited paper) is a rather undervalued simple indicator that can be usefully applied at various levels of analysis. Its time behavior results from complex factors such as literature growth and obsolescence, and it is worth studying even though the original “constant hypothesis” turned out to be invalid.

References

Alonso, S., Cabrerizo, F. J., Herrera-Viedma, E., & Herrera, F. (2009). h-Index: A review focused in its variants, computation and standardization for different scientific fields. Journal of Informetrics, 3(4), 273–289.

Andreis, M., & Jokic, M. (2008). An impact of Croatian journals measured by citation analysis from SCI-expanded database in time span 1975–2001. Scientometrics, 75(2), 263–288.

Bensman, S. J. (2007). Garfield and the impact factor. Annual Review of Information Science and Technology, 41(1), 93–155.

Braun, T., Glänzel, W., & Schubert, A. (2005). A Hirsch-type index for journals. The Scientist, 19(22), 8.

Braun, T., Glänzel, W., & Schubert, A. (2006). A Hirsch-type index for journals. Scientometrics, 69(1), 169–173.

Braun, T., Glänzel, W., & Schubert, A. (2010). On Sleeping Beauties, Princes and other tales of citation distributions. Research Evaluation, 19(3), 195–202.

Garfield, E. (1972). Citation analysis as a tool in journal evaluation. Science, 178, 471–479.

Garfield, E. (1976). Is the ratio between number of citations and publications cited a true constant? Current Contents, #6, 5–7. Reprinted in: Garfield, E. (1977). Essays of an Information Scientist, 2, 419–425.

Garfield, E. (1990). Journal citation studies. 52. The multifaceted structure of crystallography research. Part 2. A global perspective. Current Contents, #37, 3–11. Reprinted in: Garfield, E. (1990). Essays of an Information Scientist: Journalology, KeyWords Plus, and other essays, 13, 337–345.

Garfield, E. (1998). Random thoughts on citationology. Its theory and practice. Scientometrics, 43(1), 69–76.

Holden, G., Rosenberg, G., & Barker, K. (2005). Tracing thought through time and space: A selective review of bibliometrics in social work. Social Work in Health Care, 41(3/4), 1–34.

Institute for Scientific Information. (1971). Science Citation Index 1970: Guide & Journal Lists (p. 14). Philadelphia: Institute for Scientific Information.

Institute for Scientific Information. (2001). Science Citation Index 1995–2000: Comparative statistical summary. Philadelphia: Institute for Scientific Information.

Kolasa, W. M. (2012). Specific character of citations in historiography (using the example of Polish history). Scientometrics, 90(3), 905–923.

Luukonen-Gronow, T., & Suutarinen, P. (1988). Bibliometric analysis of Nordic cancer research: a report on study data. FPR-Publication No. 8. Copenhagen: Nordic Council of Ministers.

Rushton, J. P., & Endler, N. S. (1979). More to-do about citation counts in British psychology. Bulletin of the British Psychological Society, 32(March), 107–109.

van Leeuwen, T. (2012). Discussing some basic critique on Journal Impact Factors: Revision of earlier comments. Scientometrics, 92(2), 443–455.

Vinkler, P. (2000). Evaluation of the publication activity of research teams by means of scientometric indicators. Current Science, 79(5), 602–612.

Vinkler, P. (2012). The Garfield impact factor, one of the fundamental indicators in scientometrics. Scientometrics, 92(2), 471–483.

White, M. J. (1979). Scholarly impact of New Zealand psychology (1970–1977). New Zealand Psychologist, 8(1), 67–75.

White, M. J., & White, K. G. (1977). Citation analysis of psychology journals. American Psychologist, 32(5), 301–305.


Cite this article

Schubert, A., Schubert, G. Whatever happened to Garfield’s constant?. Scientometrics 114, 659–667 (2018). https://doi.org/10.1007/s11192-017-2527-3
