Abstract

This article analyses the development of effectiveness and efficiency of German business schools’ research production between 2001 and 2009. The results suggest that effectiveness for most of the examined business schools increases initially. Then, however, a declining trend in the further course of time can be observed. Similar tendencies can be stated considering efficiency, even though they are slightly less pronounced. An analysis of the reasons for these observations reveals that the initial positive developments of effectiveness and of efficiency are mainly due to technology advances, whereas the following decreases are basically a result of technology backwardness. In regard to different types of business schools, a strong relation between the reputation of a school and the research effectiveness of that school becomes apparent. With reference to geographical regions, Western and Southern German business schools feature higher effectiveness than their Northern or Eastern counterparts do. This statement, however, is not valid in terms of efficiency.


Introduction

Against the background of growing numbers of students and increasing research expenditure, as well as stronger competition and the targeted fiscal consolidation on the basis of the so-called debt brake in the federal state constitutions of Germany, it is imperative for universities to ensure an effective and efficient allocation of resources. Assessing whether and to what extent universities do so requires a definition and discussion of the elements of performance in higher education. It is undisputed, and recorded accordingly in the higher education acts of the federal states, that the three overriding task areas of scientific institutions in higher education are research, teaching and self-management. Determining the effectiveness and efficiency of these tasks requires the definition, selection, acquisition and purposeful aggregation of appropriate indicators. Concerning research, the indicators used are typically based on the input and output of the research process. Transparent comparisons of appropriate input and output indicator values allow the benefits and costs of universities to be compared. The research ranking of the Centre for Higher Education (CHE) is such a comparison. The CHE compares German university departments of the same research subject with one another. This is done by a specific evaluation and merging of certain selected input and output indicators as well as data specifically collected for that purpose (Berghoff et al. 2011). The research rankings are the subject of controversy in the literature (see, e.g., Ahn et al. 2007; Marginson and van der Welde 2007; Stolz et al. 2010; Clermont and Dirksen 2016). Despite the criticism, by determining the indicators used in the ranking, the CHE provides a comprehensive, up-to-date and advanced database, which facilitates further empirical analyses of the effectiveness and/or efficiency of research of business schools (BuSs) in Germany.

During the survey years of the CHE, business research in Germany has changed decisively with respect to numerous influencing factors. Many BuSs have increased in size due to more diverse funding programmes of the German federal and state governments, as part of the so-called ‘Excellence Initiative’. Furthermore, young scientists must acquire third-party funds and, above all, place articles in top journals in order to even be considered when applying for a professorship at the end of their qualification process (Schrader and Hennig-Thurau 2009, p. 202). In addition, for scientists who have already been appointed to professorships, the pressure to publish research results in (top) journals and to acquire third-party funds is increasing. This is due to the reform of performance-related salary in Germany, which results in performance-related targets (Bort and Schiller-Merkens 2010, p. 340). Owing to these changes, an analysis of the research effectiveness and efficiency of BuSs in Germany over time promises deeper insights into possible changes and their reasons. To date, however, such an analysis has rarely been carried out, which leads to the following research question:

- Which developments and/or changes do the research effectiveness and research efficiency of German BuSs show over time with respect to the criteria collected by the CHE?

To calculate the effectiveness and efficiency of BuSs, I use the non-parametric procedure of data envelopment analysis (DEA), which has been methodically established in the university context (see, e.g., the analyses by Ahn et al. 1988; Johnes 2006; Fandel 2007; Gutierrez 2007; Dyckhoff et al. 2013). DEA measures the relative efficiency of Decision Making Units (DMUs; here BuSs) on the basis of a comparison of inputs and outputs, without the need for an explicit indication of weighting factors. The weighting factors are determined endogenously within the model. Changes in the degrees of effectiveness or of efficiency calculated by DEA can, on the one hand, result from actual improvements or deterioration in effectiveness and/or efficiency, and therefore as a result of changes in the performances of the members of the organisation. On the other hand, changes can also be based on technological improvements or setbacks, because the research production technology underlying the relevant observation period varies. Therefore, I will look at the following research question:

- To which effects are the resulting changes in the degrees of effectiveness and of efficiency attributable?

In addition to the aforementioned questions, it is also of interest to distinguish between different groups of BuSs, in order to analyse whether there are differences in development between these subgroups. For this reason, in a first step, BuSs which are renowned for research are regarded, i.e. BuSs that have an appropriate research reputation. An additional investigation of different types of universities is also generally desirable, e.g. public and private universities. However, only three private universities are among the used datasets, which is why such an investigation does not appear useful. In Germany, another type of university is the university of technology (TU), which primarily offers engineering and science courses. Although this type of university focuses on courses in natural sciences and engineering, it is increasingly offering other courses, such as business studies. Due to the different focus of this type of university, the TU group is analysed separately. In addition, in Germany, universities fall under the responsibility of the individual federal states. That is, each of the sixteen German federal states can decide autonomously on the controlling of its universities, which is one reason why a detailed analysis of the development of universities in the individual federal states would appear fundamentally appropriate. Therefore, I will address the following research question:

- What differences in the development of research effectiveness and efficiency can be determined between renowned BuSs, BuSs of TUs, and BuSs in different geographical regions of the German federal states?

To answer these research questions, the paper is structured as follows: In the next section I give a short overview of the literature on research performance measurement (in Germany) before I introduce the research design. After that I discuss the development of the degrees of effectiveness and of efficiency of BuSs over time and identify the reasons for these developments. Next I analyse whether the type of university and its reputation influence the degrees of effectiveness or of efficiency. The paper concludes with implications and limitations.

Literature overview

There are numerous articles which deal with questions of management control of university services. With regard to management control of teaching, Höfer-Diehl (2014) describes the structures and instruments which are discussed in the (German) literature. Rassenhövel (2010) analyses the performance of research and teaching from the theoretical standpoint of decision-orientated production theory and gives an overview of the relevant literature. Performance assessment and the analysis of its influencing factors are also discussed in an international context (e.g. Keeney et al. 2006; Beerkens 2013; Zhang et al. 2013; Barham et al. 2014).

However, there are only a few studies which specifically address the question of effectiveness and/or efficiency development. These studies differ from one another, first, in the organisational units of universities considered. While the majority of studies analyse universities in their entirety (such as Johnes 2008; Worthington and Lee 2008; García-Aracil 2013), only a few examine individual departments (e.g. Bolli and Farsi 2015). Second, due to the heterogeneity of higher education systems in different countries, the studies can be differentiated according to the countries being examined. In this sense, studies on the efficiency development of universities have been conducted for the UK (such as Thanassoulis et al. 2011; Flegg and Allen 2007), Italy and Spain (e.g. Agasisti and Pérez Esparrells 2010), Australia (such as Horne and Hu 2008) and Switzerland in conjunction with Germany (e.g. Olivares and Schenker-Wicki 2012). To aggregate performance indicators for an efficiency analysis over time, different methods are used, most notably parametric frontier techniques, such as stochastic frontier analysis (e.g. Horne and Hu 2008), or non-parametric frontier techniques, such as DEA (e.g. Thanassoulis et al. 2011).Footnote 1

Concerning German BuSs, Ahn et al. (2007) examine the effects of different aggregation methods on research performance, whereas Dyckhoff et al. (2013) analyse effectiveness and efficiency, as well as balance and/or specialisation of single German BuSs. In contrast, Dyckhoff et al. (2009), Albers (2015) and Clermont et al. (2015) investigate the relation between research productivity and the size of German BuSs, while Albers and Bielecki (2012) identify factors which influence the reputation of institutions of higher education.

In respect of the major purpose of this paper—the analysis of the research effectiveness and efficiency development of German BuSs—the work of Bielecki and Albers (2012) represents a starting point. The authors examine the research efficiency of BuSs by using DEA and CHE research data from 2008 and 2011. To compare the resulting degrees of efficiency, the authors create rankings of the individual year groups and compare them with the help of rank correlation coefficients. They show that the calculated degrees of efficiency and the resulting rankings barely differ between the years involved. Major differences are noticeable, however, when looking at the development of single performance criteria.

Nevertheless, this study has weaknesses in terms of the datasets generated, as well as in terms of the application of the DEA method. Due to the possible double count in the indicators “national publications” and “international publications” (Clermont and Dyckhoff 2012), the authors deduct, for example, the international publications from the national ones in order to generate German-language publications. This approach, however, is not valid, because the international publications are measured as numbers, whereas the national publications are measured as weighted publication points. Furthermore, a different number of BuSs is involved in the DEA analysis for the data from 2008 and from 2011. This can cause distortions within intertemporal comparisons, since DEA results depend on the indicators included and on the number and the type of DMUs. Therefore, changes in intertemporal comparisons can also be attributed to the fact that other DMUs were included in the analysis. In addition, the ranking of DMUs on the basis of degrees of efficiency calculated by DEA is a matter of controversy in the literature. However, the improved practices for determining rankings by using DEA (e.g., on the basis of cross-efficiencies, see Doyle and Green 1994) were not implemented by Bielecki and Albers (2012). Due to these problems, distortions in the results and the interpretations of this study are both possible and probable. Therefore, the main purpose of the present study has not yet been achieved in the literature.

Study design

Database

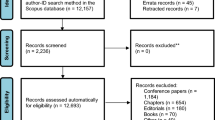

So far, the CHE has published research performance data of German BuSs four times: in 2002, 2005, 2008 and 2011.Footnote 2 The data of a survey year always refer to the three years preceding the data collection. For example, research performance data for the ranking in 2011 were collected throughout 2010 and refer to the period from 1st January 2007 to 31st December 2009. To characterise research profiles, and for the final determination of research-orientated BuSs, the respective outputs from CHE are operationalised and assessed using absolute indicators.Footnote 3 Since 2008, the CHE has considered the six research performance indicators named in Table 1 (four outputs, two inputs).

The indicator PP-nat states the publications in journals, conference proceedings and books and is determined by a query in the German literature database WISO with the names of BuS scientists holding a Ph.D. degree. The names are submitted by a BuS itself. The generated publications are converted into publication points, in which the number of authors, the length as well as the type of each article and, in the case of journal articles, the quality of the publication medium on the basis of the JOURQUAL2 journal ranking are considered. The numbers of internationally visible publications have been considered as indicators since the 2008 ranking. The Pub-int indicator was determined during survey year 2007 with the names of Ph.D. scientists submitted by the BuSs, and in 2010 with the names of the BuSs (institution-related), in both cases with the US-American database Web of Science. Here, the numbers of publications are not converted into publication points. The amount of third-party funds expended and the numbers of Ph.D. dissertations were determined again for all periods. Both values are measured by surveying the BuSs. Additionally, the two input indicators—numbers of professors and of research assistants—are generated by surveying the BuSs. The numbers of professors and research assistants involve only those positions resulting from federal state funding.

The indicator values for all BuSs were provided to me in a non-aggregated form directly by the CHE. However, only the datasets from the ranking publications from 2005 onwards are included in the following analysis, i.e. the datasets relate to the period from 1st January 2001 until 31st December 2009. The dataset of the first survey in 2001 (ranking published in 2002) can be considered only as a pioneer dataset, on the basis of which the indicator design and collection were gradually improved. Due to collection as well as validity and consistency aspects, this dataset is only of limited suitability for empirical analysis (Gilles 2005, p. 130ff.; Dyckhoff et al. 2009, p. 27f.), which is why this dataset is not included in the subsequent analyses.

However, further modifications to the data material are necessary. First, the Pub-int indicator was not collected for the 2005 ranking. Because the effectiveness and efficiency evaluation is relative to the indicators included, using different indicators in the individual years would make it impossible to tell whether changes result merely from the inclusion of a new indicator. Hence, the analyses are initially performed without the Pub-int indicator. In the subsequent part of each analysis step, the Pub-int indicator is integrated into the calculations, and the resulting changes are analysed.

A further modification is required because the ranked BuSs vary between the survey years. This is, for example, on account of some universities now boycotting participation in the ranking; e.g. the universities of Hamburg and Cologne. Since an analysis of effectiveness and of efficiency with DEA is also relative to the BuSs (as DMUs) included, the variations in degrees of effectiveness and of efficiency could be misinterpreted when the number of BuSs varied between the survey years. To avoid such misinterpretations, only those 48 BuSs are analysed which participated in all survey years and whose datasets are completely available in relation to the indicators used. The latter exclusion is necessary because it cannot be clarified as to whether an incomplete dataset is because BuSs have, for example, acquired no third-party funds during the survey period, or because BuSs cannot or do not want to make this information available.

The resulting datasets used for the subsequent analysis of the survey years 2004 (2005 ranking), 2007 (2008 ranking) and 2010 (2011 ranking) are shown in Tables 6, 7 and 8 in the “Appendix”. All specified indicator values are absolute data over the entire three-year period. For simplification purposes, they will be referred to as the data and the effectiveness or the efficiency in 2005, 2008 and 2011 in the following, meaning the respective periods 2001–2003, 2004–2006 and 2007–2009.

Moreover, the 48 BuSs are arranged in different subgroups. While the TUs are relatively easy to define, the surveys carried out by the CHE are consulted to determine those BuSs which are renowned for their research. In every round of ranking data collection, the BuS professors are asked to name five BuSs that they perceive to be strong in research, excluding their own BuS. In this article, those BuSs are termed ‘(research) renowned’ that ranked among the ten BuSs with the highest reputation indicator values in at least two of the three CHE survey rounds. This results in nine BuSs, which are denoted in bold font in the tables of the “Appendix”.
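
The selection rule for renowned BuSs can be illustrated with a minimal Python sketch. The function name `renowned_schools`, the data layout (one dictionary of reputation values per survey round) and the example values are assumptions made purely for illustration; they are not taken from the CHE data, and ties in reputation values are not treated specially.

```python
from collections import Counter

def renowned_schools(rounds, top_n=10, min_rounds=2):
    """Return the schools that rank among the top_n reputation values
    in at least min_rounds of the given survey rounds."""
    appearances = Counter()
    for reputation in rounds:
        # Sort school names by reputation value, highest first.
        ranked = sorted(reputation, key=reputation.get, reverse=True)
        appearances.update(ranked[:top_n])
    return sorted(name for name, count in appearances.items()
                  if count >= min_rounds)

# Hypothetical reputation values for four schools in three survey rounds.
example_rounds = [
    {"A": 30, "B": 20, "C": 10, "D": 5},
    {"A": 25, "B": 5, "C": 15, "D": 10},
    {"A": 18, "B": 12, "C": 9, "D": 2},
]
# With a top-2 cut-off instead of the top-10 used in the article:
print(renowned_schools(example_rounds, top_n=2))  # ['A', 'B']
```

In this toy example, school C reaches the top two only once and is therefore not classified as renowned.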

The number of universities in certain federal states is too small for a meaningful analysis of the developments of BuSs at the level of individual federal states. Therefore, BuSs are not allocated to the individual federal states, but are divided into Northern, Southern, Eastern and Western German regions. This results in the following allocations, with the number of allocated BuSs specified in parenthesesFootnote 4:

- Northern Germany (7): Bremen, Hamburg, Mecklenburg-Western Pomerania, Lower Saxony and Schleswig–Holstein
- Southern Germany (13): Baden-Württemberg and Bavaria
- Eastern Germany (13): Berlin, Brandenburg, Saxony, Saxony-Anhalt and Thuringia
- Western Germany (15): Hessen, North Rhine-Westphalia, Rhineland-Palatinate and Saarland.

Methodology

Since effectiveness is not a well-known concept and often confused with efficiency, the difference between the two concepts has to be made clear before describing the methods used to calculate them.Footnote 5 Effectiveness and efficiency are used as evaluation criteria for the rationality of human behaviours or activities with respect to their targeted purpose (Ahn and Neumann 2014). A decision maker acts in a purpose-rational way, if he considers purposes, means as well as positive and/or negative side effects of an economic activity or alternative in the decision making process (Weber 1978). Purposes reflect the original motive for an economic activity and are specified by fundamental objectives (Keeney 1992), whereas means are the resources used to conduct this activity. Any other results of performing an economic activity, which are caused unintentionally, are called (positive or negative) side effects, e.g. the emission of greenhouse gases in production processes. The definitions of effectiveness and efficiency are essentially based on those elements. The degree of effectiveness describes the extent to which the fundamental objectives are achieved. In contrast, the degree of efficiency refers to the relation between the operationalised fundamental objectives and the resources used plus any relevant positive and/or negative side effects. That is, both effectiveness and efficiency are relative to the regarded fundamental objectives, and to the considered activities or alternatives as well. In our case, the output indicators of research indicate the fundamental objectives, and the input indicators illustrate the resources used.

To calculate the degrees of effectiveness and of efficiency of the BuSs considered, DEA and advanced analysis techniques are used in the following. There are four basic radial DEA models, which are distinguished by whether constant or variable returns to scale are postulated (CCR models vs. BCC models) and whether an input or an output orientation is adopted (Charnes et al. 1978; Banker et al. 1984).Footnote 6 Analogous to the calculation of degrees of efficiency with DEA, it is also possible to calculate degrees of effectiveness. The difference is that only the outputs of BuSs are taken into account, while the input is set to the value of one for each BuS (Dyckhoff and Gilles 2004; Ahn and Neumann 2014). Unfortunately, the degrees of effectiveness and of efficiency of individual periods calculated by DEA cannot be compared directly with each other, or at best only to a limited extent. This is essentially because DEA determines a deterministic, empirical research technology from the current input and output data. The research technology and hence the relevant benchmarking partners can vary between different periods, which limits comparability accordingly. For the same reason, ranking the DMUs on the basis of their degrees of efficiency and subsequently comparing rank correlations is questionable.Footnote 7 However, window analysis and the Malmquist index provide ways of comparing DEA degrees of effectiveness and of efficiency over time.
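
To illustrate how degrees of effectiveness and of efficiency can differ in an output-orientated model with constant returns to scale, the following Python sketch may help. It is not the implementation used in this study: it assumes exactly two strictly positive output indicators and a single aggregated staff input (the study uses two inputs and up to four outputs, which would require a general linear programming solver), and all names and figures are hypothetical. Under these assumptions, an optimal solution uses at most two reference units, so the radial output expansion can be found by checking all pairs.

```python
from itertools import combinations_with_replacement

def max_output_expansion(reference, target):
    """Largest factor phi such that phi * target is still covered by a convex
    combination of two reference points (two positive outputs, unit input)."""
    best = 0.0
    for p, q in combinations_with_replacement(reference, 2):
        a = (p[0] / target[0], p[1] / target[1])
        b = (q[0] / target[0], q[1] / target[1])
        weights = [0.0, 1.0]
        # Weight at which both output ratios of the mixture are equal.
        denom = (a[0] - b[0]) - (a[1] - b[1])
        if denom != 0:
            s = (b[1] - b[0]) / denom
            if 0.0 < s < 1.0:
                weights.append(s)
        for s in weights:
            phi = min(s * a[0] + (1 - s) * b[0], s * a[1] + (1 - s) * b[1])
            best = max(best, phi)
    return best

def degrees(outputs, inputs=None):
    """Degrees of effectiveness (inputs=None, i.e. unit input for every DMU)
    or of efficiency (outputs divided by the single input)."""
    if inputs is None:
        points = dict(outputs)
    else:
        points = {k: (y[0] / inputs[k], y[1] / inputs[k])
                  for k, y in outputs.items()}
    reference = list(points.values())
    return {k: 1.0 / max_output_expansion(reference, pt)
            for k, pt in points.items()}

# Hypothetical data: two output indicators and one staff figure per school.
outputs = {"A": (10, 2), "B": (2, 10), "C": (5, 5), "D": (4, 4)}
staff = {"A": 5, "B": 5, "C": 5, "D": 2}
effectiveness = degrees(outputs)        # D: approximately 0.667
efficiency = degrees(outputs, staff)    # D: approximately 1.0
```

In this toy example, school D is small and therefore not effective in absolute terms, but it is efficient once its low staff input is taken into account; school C shows the reverse pattern.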

The window analysis developed by Charnes et al. (1985) is in principle an advanced DEA analysis; ‘advanced’ in the sense that a time window is spanned within which the identified activities of BuSs are included in the analysis. For example, the datasets from 2005 and 2008 are evaluated together in one DEA analysis. That is, the efficient boundary is determined from these two datasets and the degrees of efficiency result from the relevant distance of the data points from this boundary. Hence, a BuS receives two degrees of efficiency, one for 2005 and another for 2008. However, it is implicitly assumed during such analyses that there are no technical changes in research production between the considered periods (Asmild et al. 2004, p. 70), i.e. an analysis of the causes of changes in degrees of effectiveness or of efficiency is not possible.
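
The pooling idea behind window analysis can be sketched in a few lines of Python. The sketch assumes the simplest possible setting, one input and one output under constant returns to scale, in which the efficient boundary is simply the best output-input ratio in the pooled data. The function name `window_scores` and the figures are hypothetical and serve only as an illustration.

```python
def window_scores(period_a, period_b):
    """Score both periods against one boundary built from the pooled data.

    period_a, period_b: dict mapping a school name to (input, output).
    Returns a dict mapping each school name to (score_a, score_b).
    """
    pooled = list(period_a.values()) + list(period_b.values())
    # With one input and one output under constant returns to scale,
    # the efficient boundary is the best output/input ratio.
    best_ratio = max(y / x for x, y in pooled)
    scores = {}
    for name in period_a:
        xa, ya = period_a[name]
        xb, yb = period_b[name]
        scores[name] = ((ya / xa) / best_ratio, (yb / xb) / best_ratio)
    return scores

# Hypothetical (staff, publication points) data for two schools.
data_2005 = {"X": (10, 50), "Y": (20, 60)}
data_2008 = {"X": (10, 80), "Y": (25, 100)}

scores = window_scores(data_2005, data_2008)
print(scores)  # {'X': (0.625, 1.0), 'Y': (0.375, 0.5)}

# A school has improved within the window if its later score is higher.
improved = [name for name, (s_a, s_b) in scores.items() if s_b > s_a]
print(improved)  # ['X', 'Y']
```

Because both periods are measured against the same boundary, the two scores of a school are directly comparable, at the cost of assuming an unchanged technology within the window.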

The Malmquist index (Malmquist 1953; Caves et al. 1982a, b) is a method that enables the calculation of potential technical changes.Footnote 8 In this index, the degrees of efficiency of two different survey points in time t and t + 1 are set into a ratio, from which a percentage development of efficiency can be derived. Caves et al. (1982b) deduce two Malmquist index values M, one in relation to the technology \(t \, \left( {M_{t}^{0} } \right)\) and one in relation to the technology \(t + 1 \, \left( {M_{t + 1}^{0} } \right)\). Let \(\theta_{a,b}^{0}\) be the degree of efficiency determined by DEA of a considered BuS 0 with its activity data at time b and the underlying research technology at time a, where \(a,b \in \left\{ {t, \, t + 1} \right\}\). The two Malmquist indexes are then

\[M_{t}^{0} = \frac{\theta_{t,t + 1}^{0}}{\theta_{t,t}^{0}} \quad \text{and} \quad M_{t + 1}^{0} = \frac{\theta_{t + 1,t + 1}^{0}}{\theta_{t + 1,t}^{0}}. \tag{1}\]

Färe et al. (1992, 1994) merge these two index values into a factor \(M_{t,t + 1}^{0}\) by calculating the geometric mean:

\[M_{t,t + 1}^{0} = \sqrt{M_{t}^{0} \cdot M_{t + 1}^{0}} = \underbrace{\frac{\theta_{t + 1,t + 1}^{0}}{\theta_{t,t}^{0}}}_{\text{(EC)}} \cdot \underbrace{\sqrt{\frac{\theta_{t,t + 1}^{0}}{\theta_{t + 1,t + 1}^{0}} \cdot \frac{\theta_{t,t}^{0}}{\theta_{t + 1,t}^{0}}}}_{\text{(TC)}}. \tag{2}\]

(EC) is the ratio of the degree of efficiency in t + 1 and that in t resulting from the “normal” application of DEA. It indicates the (pure) efficiency change. (TC) corresponds to a technology change, which is calculated as the distance between the efficient boundaries of technologies in t and t + 1 from the perspective of the considered BuS 0. Because the activities of BuS 0 at times t and t + 1 can be, and usually are, in different positions in the multidimensional polyhedron spanned by the DEA (=empirical technology), it is necessary to consider the distance between the efficient boundaries separately from the perspective of both time t and time t + 1. A disadvantage of the Malmquist index calculated with DEA is that it is defined only for two points in time, i.e. the consideration of a third period, t + 2, requires two separate analyses. Therefore, the development of effectiveness and efficiency is analysed separately from 2005 to 2008 and from 2008 to 2011.
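
The decomposition into (EC) and (TC) can be made concrete with a short Python sketch. It assumes the usual Färe et al. form, in which the Malmquist index is the geometric mean of the two period-specific indexes and factors into EC × TC; the function and argument names are hypothetical, and the four degrees of efficiency in the example are invented. Note that a degree measured against the technology of the other period can exceed one.

```python
from math import sqrt

def malmquist(tech_t_data_t, tech_t_data_t1, tech_t1_data_t, tech_t1_data_t1):
    """Return (EC, TC, M) from four DEA degrees of efficiency.

    tech_a_data_b is the degree of efficiency of the activity observed at
    time b, measured against the research technology of time a.
    """
    # (Pure) efficiency change: own-period degree in t+1 over that in t.
    ec = tech_t1_data_t1 / tech_t_data_t
    # Technology change: geometric mean of the boundary shift, seen from
    # the activity in t+1 and from the activity in t.
    tc = sqrt((tech_t_data_t1 / tech_t1_data_t1)
              * (tech_t_data_t / tech_t1_data_t))
    return ec, tc, ec * tc

# Invented degrees for one school: unchanged own-period efficiency (0.8),
# but each activity looks worse against the later technology.
ec, tc, m = malmquist(0.8, 1.0, 0.64, 0.8)
print(ec, tc, m)  # approximately 1.0, 1.25, 1.25
```

In this example the whole improvement of about 25 % stems from the shift of the efficient boundary (TC), while the school's position relative to its contemporaneous boundary (EC) is unchanged.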

When calculating the degrees of efficiency within the framework of the Malmquist index, BuSs can lie outside the data envelopment at one of the observed points in time; the corresponding optimisation problems can therefore be infeasible.Footnote 9 For radial projections, this problem can always be solved if a linear envelopment is presumed, which is why constant returns to scale are presumed in the following analyses. To ensure comparability between the Malmquist index analyses and the window analyses, the latter are likewise based on linear envelopments.

It still needs to be clarified whether the radial degrees of efficiency are calculated in an input-orientated or an output-orientated way. From a short-term or medium-term perspective, output orientation seems plausible, because in Germany the scientific staff of a BuS can be regarded as almost fixed in the short term and can be changed (if at all) only in the long term. Thus, input-orientated models only make sense from a long-term perspective, and output-orientated models are used in the following investigations.

Development of effectiveness and efficiency

Table 2 records the descriptive analysis of BuSs and the formed subgroups, in terms of each of the three three-year groups investigated and each of the five indicators. From the arithmetic means it becomes evident that the renowned BuSs have the highest average values in all indicators, with regard to all year groups considered. On the other hand, the average characteristics of the Northern German BuSs in almost all indicators and for all year groups are the lowest. While BuSs of TUs display, in comparison, average sizes within most of the average characteristics, their values stand out in terms of third-party funds. This result is generally explainable: As third-party funds are often considered to be important performance criteria within the engineering departments of TUs, their relevance is foregrounded by the university administration, possibly also among other departments, and thus scientific disciplines.

As regards the average development of the values, it is striking that the size of the BuSs (i.e. the number of professors and research assistants), the number of internationally visible publications, the number of Ph.D. dissertations and the amount of third-party funds are increasing. For example, from 2005 to 2011, the average third-party funds increased by about 59 % and the average number of Ph.D. dissertations by almost 50 %. In terms of third-party funds, both the renowned BuSs and BuSs of TUs increased disproportionately compared to the average of all BuSs: the renowned BuSs by 67 % and BuSs of TUs even by 88 %. In the case of Ph.D. dissertations, BuSs of TUs increased their average number of doctoral degrees by 93 %, from 29 (2005) to 56 (2011).

Differences in the development of third-party funds and Ph.D. dissertations also become apparent in relation to the region of the universities. While BuSs in Eastern and Western Germany are increasing disproportionately compared to the overall average in terms of both indicators, especially in the case of third-party funding (84 and 94 %, respectively), there are almost no changes in Northern BuSs, and Southern BuSs lie slightly below the overall average. However, it must be noted that at Southern BuSs, the number of Ph.D. dissertations and the amount of third-party funds already exhibit high absolute values. Possibly, these results are attributable to positive incentive effects through the collection of data by the CHE and the transition to performance-based allocations of resources. That is, the BuSs anticipate this type of measurement and increase the corresponding activity types. Nevertheless, the corresponding staff deployment has also increased in the period, which is taken into account in the analysis of efficiency development.

An opposite trend is noticeable when inspecting the nationally visible publications. While the average values for all observed groups increased between 2005 and 2008, they decreased in 2011. Thereby, the average publication points are falling even below the values from 2005. This basic development applies to all subgroups considered. Publication points between 2005 and 2011 only remained fairly constant for BuSs in Eastern Germany.

Figure 1 shows the results of aggregating the data with a window analysis. The top two diagrams display the degrees of effectiveness; the lower two display the respective degrees of efficiency. The left-hand diagrams refer to the comparisons between 2005 and 2008, i.e. the effective or efficient boundary is composed of a linear combination of datasets from 2005 and 2008. Accordingly, the right-hand diagrams refer to comparisons of the degrees of effectiveness or of efficiency in 2008 and 2011. The degrees of effectiveness and of efficiency for those BuSs renowned for their research are represented by a triangle, the degrees for BuSs of TUs by a circle, and the others by a rhombus. The BuSs which are positioned above the marked angle bisectors have improved in each comparison, whereas those positioned below them have deteriorated, and BuSs on the angle bisector have the same degree of effectiveness or of efficiency in both observed years.

It becomes apparent that the majority of the BuSs improved their degrees of effectiveness between 2005 and 2008. Only 13 of the 48 BuSs had a lower degree in 2008 than in 2005. The effective boundary, too, is composed exclusively of data points from 2008. It can be further established that especially those BuSs renowned for their research obtained high degrees of effectiveness as well as of efficiency in each year and, with one exception, improved between 2005 and 2008. The degrees of efficiency for most of the BuSs are better than or equal to the corresponding degrees of effectiveness. This suggests that the improvements in the targets (i.e. in the single output indicators) were not “bought” by an excessive increase in staff numbers. There are only a few BuSs for which the reverse holds: Their degrees of efficiency are lower than the corresponding degrees of effectiveness, which indicates a comparatively high use of resources in the corresponding years. The development of the degrees of efficiency resembles that of the degrees of effectiveness. On the one hand, most BuSs improved between 2005 and 2008. On the other hand, degrees of efficiency declined for many BuSs between 2008 and 2011. In contrast to the effectiveness analysis, no concrete statements about individual groups are possible.Footnote 10

If the number of internationally visible publications is taken into account when comparing the years 2008 and 2011, the degrees of effectiveness of many BuSs increase (18 BuSs in 2008 and 35 BuSs in 2011). On average, degrees of effectiveness rise by 5 % in 2008 and by 17 % in 2011. With regard to the degrees of efficiency, an increase is noticeable for similar numbers of BuSs (20 BuSs in 2008 and 33 BuSs in 2011); at 4 % (2008) and 15 % (2011), the average gain is slightly lower than for the degrees of effectiveness. To begin with, the increase in the degrees of effectiveness and of efficiency is an effect of DEA itself: if an additional indicator is integrated, the degrees of effectiveness and of efficiency of each BuS can only stay the same or improve in the models chosen here. However, the increase is relatively low for many BuSs, whereas substantial changes result for some. For example, the BuS of the university in Frankfurt (Main) benefits in particular from the inclusion of the international publications, since its degree of effectiveness increases by 288 % in 2008 and by 40 % in 2011. In terms of the degrees of efficiency, larger changes (>40 %) are hardly observable: only one BuS benefitted more from the inclusion, with an increase of 43 %.Footnote 11

Reasons for the development of effectiveness and efficiency

For a detailed analysis of the reasons for the developments, Fig. 2 shows the results of a Malmquist index calculation. The x-axis shows the (pure) changes in effectiveness or efficiency (EC), and the y-axis the related technology changes (TC), both according to Eq. (2). The four indifference curves show exemplary constant Malmquist index values, resulting from the multiplication of the two components (EC × TC). Again, the top two diagrams represent effectiveness and the bottom two diagrams efficiency. The left-hand diagrams compare the years 2005 and 2008, while the right-hand diagrams compare 2008 and 2011.
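The relationship between the two axes and the curves of constant Malmquist index can be illustrated with a minimal sketch. It relies only on the multiplicative decomposition stated above (Malmquist index = EC × TC); the function names and all numbers are hypothetical and not taken from the article's data.

```python
def malmquist_index(ec: float, tc: float) -> float:
    """Malmquist index as the product of the (pure) change EC and the technology change TC."""
    if ec <= 0 or tc <= 0:
        raise ValueError("EC and TC must be positive ratios")
    return ec * tc


def tc_on_iso_curve(mi: float, ec: float) -> float:
    """TC value at which a unit with the given EC lies on the curve of constant index mi."""
    if mi <= 0 or ec <= 0:
        raise ValueError("mi and ec must be positive")
    return mi / ec


# Hypothetical school: it catches up by 10 % while the boundary falls back by 20 %.
mi = malmquist_index(ec=1.10, tc=0.80)
print(round(mi, 2))

# Points (EC, TC) on the curve MI = 1, i.e. no overall change.
curve = [(ec, round(tc_on_iso_curve(1.0, ec), 3)) for ec in (0.5, 0.8, 1.0, 1.25, 2.0)]
print(curve)
```

In this hypothetical case the index is below one, so the overall development is negative although the school moved closer to the boundary.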

Concerning effectiveness, the (research) technology changes positively for all BuSs between 2005 and 2008. This means that a technological improvement is noticeable across all BuSs: for a given input, consistently more output could be created in 2008 than in 2005. In the comparison of 2008 and 2011, this is no longer the case. In the industrial sector and (partially) in the service sector, such changes can indeed be attributed to technology improvements, e.g. if automation makes it possible to produce more cars with the same amount of resources. In the context of research performance in business administration, however, technical improvements or automation are not to be expected, in particular with regard to the output indicators used in this study. Hence, the term “technological improvements” in the DEA or Malmquist index context is not to be interpreted as technical improvements in the production technology of a BuS, but rather as positive changes in the behaviour of the researchers employed by these BuSs.
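How a boundary shift (TC) is separated from a unit's own movement relative to the boundary (EC) can be sketched for the simplest possible case. The example assumes a single input, a single output, constant returns to scale and the common geometric-mean decomposition of Färe et al. (1994); whether this matches the article's Eq. (2) in every detail is an assumption, and the data are invented.

```python
from math import sqrt


def frontier(units):
    """Best observed output-per-input ratio (constant returns to scale assumed)."""
    return max(y / x for x, y in units.values())


def decompose(name, period0, period1):
    """Return (EC, TC, MI) for one unit between two periods.

    Each period maps a unit name to an (input, output) pair.
    """
    f0, f1 = frontier(period0), frontier(period1)
    x0, y0 = period0[name]
    x1, y1 = period1[name]
    # Distance of an activity to a boundary = own ratio / best ratio.
    d0_0 = (y0 / x0) / f0  # period-0 activity against period-0 boundary
    d1_1 = (y1 / x1) / f1  # period-1 activity against period-1 boundary
    d0_1 = (y1 / x1) / f0  # period-1 activity against period-0 boundary
    d1_0 = (y0 / x0) / f1  # period-0 activity against period-1 boundary
    ec = d1_1 / d0_0
    tc = sqrt((d0_1 / d1_1) * (d0_0 / d1_0))
    return ec, tc, ec * tc


# Hypothetical data: unit -> (staff, publication points).
p2005 = {"A": (10, 20), "B": (10, 10)}
p2008 = {"A": (10, 30), "B": (10, 12)}

ec, tc, mi = decompose("B", p2005, p2008)
print(round(ec, 3), round(tc, 3), round(mi, 3))
```

For the hypothetical unit B this gives EC = 0.8, TC = 1.5 and a Malmquist index of 1.2: B produces 20 % more with the same staff, but because the best observed practice improved by 50 %, B falls further behind the boundary.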

Between 2008 and 2011, a technological improvement was observable for only a few BuSs, and the extent of technology change for these few BuSs was significantly lower than in the previous period. Looking at (pure) effectiveness changes, a reverse trend can be determined: while only twelve BuSs experienced a positive change in their effectiveness between 2005 and 2008, this number increased significantly to 26 BuSs between 2008 and 2011. Hence, the positive development of BuSs between 2005 and 2008 was mainly due to technological improvements, whereas the corresponding negative developments between 2008 and 2011 result from technological setbacks.

In terms of efficiency, a similar picture appears, even if it is not as clear-cut as for effectiveness. For 38 BuSs, technological improvements result between 2005 and 2008 within their relevant areas. Between 2008 and 2011, this is the case for fewer BuSs, but for considerably more of them than with regard to effectiveness: 25 BuSs, i.e. more than half, are located in an area which is subject to technological improvements. At the same time, (pure) efficiency change is declining. Overall, the efficiency improvements between 2005 and 2008 can likewise be attributed to technology improvements. The negative development in 2011, however, does not result from technological setbacks alone, but also from a negative change in efficiency, i.e. from a now less favourable ratio of weighted outputs to weighted inputs of individual BuSs.

In addition to the above statements, BuSs can be subdivided into up to six different groups (A to F in Fig. 2). Because technical progress was realised for all BuSs, only three groups emerge between 2005 and 2008, whereas all six groups emerge between 2008 and 2011. A crucial boundary for interpreting these groups is whether the Malmquist index value is greater or less than one, i.e. whether the overall development of effectiveness and/or efficiency is positive (Malmquist index greater than one) or negative (Malmquist index less than one). The meanings of the individual groups are listed in Table 3. Based on this subdivision, the effects which have contributed to the overall positive or negative development of BuSs can be determined.
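That exactly six groups are possible follows from simple arithmetic: of the eight combinations of EC, TC and Malmquist index lying above or below one, two cannot occur, because two factors above one cannot yield a product below one and vice versa. The sketch below illustrates this with descriptive labels; it deliberately does not use the letters A to F, since their assignment is defined in Table 3, and it treats values of exactly one as "not greater than one", which is an assumption made here.

```python
from itertools import product


def classify(ec: float, tc: float) -> str:
    """Label a unit by the signs of its EC, TC and resulting Malmquist index."""
    mi = ec * tc
    ec_part = "EC > 1" if ec > 1 else "EC <= 1"
    tc_part = "TC > 1" if tc > 1 else "TC <= 1"
    mi_part = "MI > 1" if mi > 1 else "MI <= 1"
    return f"{ec_part}, {tc_part}, {mi_part}"


# Hypothetical grid of EC and TC values on both sides of one.
values = (0.5, 0.9, 1.1, 2.0)
labels = sorted({classify(ec, tc) for ec, tc in product(values, repeat=2)})

for label in labels:
    print(label)
print(len(labels))
```

When technology improves for every unit (TC > 1 throughout), only three of these labels remain, which corresponds to the three groups observed for the first period.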

In terms of effectiveness, BuSs are distributed evenly over groups A, D and E between 2005 and 2008. While approximately two-thirds of BuSs improved their aggregated output, only about one-third reached a performance above technological improvement. In the comparison of 2008 and 2011, the number of BuSs increased whose aggregated performance rose more strongly than technological improvement did (Group E) and/or rose despite technological setbacks (Group F). The allocation of individual BuSs to groups varied strongly between the observation periods; only the allocations of a few BuSs remained constant. A total of 12 BuSs moved from groups A and D (2005/08) to Group F (2008/11). These BuSs thus improved significantly in comparison to the others.

A reverse trend becomes evident for the efficiency development. While 20 BuSs are in Groups E and F in the comparison between 2005 and 2008, this number drops to only 9 BuSs for the 2008/11 comparison. This shows a more pronounced decline in the aggregated performance than for effectiveness, where an increase was detected. Somewhat less movement between groups becomes apparent when the two periods are compared with each other. The stronger movements take place from Group E to A (i.e. from a high performance to a significant decline in the aggregated performance values) and from Group D to B (both marked by a decline in the aggregated performance data).

Fig. 3 shows the results when the number of internationally visible publications is included in the investigations as an additional output indicator for comparing the years 2008 and 2011. The upper diagram displays effectiveness developments and the bottom diagram the corresponding efficiency developments. When comparing the effectiveness diagram of Fig. 3 with those of Fig. 2, the coordinates of the individual BuSs move mainly along the technology change axis (y-axis). As a result, the identified groups B, C and F are now significantly less pronounced, especially in favour of groups A and E. This shows that there is a positive trend in the number of internationally visible publications, which, at least as regards effectiveness, apparently offsets the technological setbacks due to the decline in the points of nationally visible publications. Interestingly, this effect cannot be observed for efficiency: the allocation of BuSs to the various groups, as well as the size of these groups, remains almost constant. In particular, it cannot be detected whether the relevant range of the efficient boundary for BuSs shifts accordingly. Hence, the relevance of including the Pub-int indicator is put into perspective when the input factors are also taken into account.

Influence of reputation, type of university and regional allocations on the development of effectiveness and efficiency

Table 4 presents (for the window analysis and for the components of the Malmquist index) the average degrees of effectiveness and of efficiency of the BuSs and the developments in terms of all BuSs and BuSs of the individual subgroups.

In terms of the degrees of effectiveness of the window analyses,Footnote 12 the average degree of effectiveness of renowned BuSs is significantly higher than the average of all BuSs during all periods, while BuSs of TUs mostly correspond to the average. It is also striking that BuSs in Northern and Eastern Germany are on average less effective than those in Southern and Western Germany (in all three periods). For the years 2005/2008, a strong development of the renowned BuSs resulted, which is, on average, clearly 24 % higher than the results of the other groups of BuSs. The renowned BuSs also have a declining average effectiveness change (Malmquist index), which is, however, less pronounced than in other groups. Between 2008/11, the development of the renowned BuSs declined. Only the BuSs of TUs and Eastern German BuSs recorded positive developments, although in terms of technology they recorded setbacks, as did all the groups observed.

A similar effect can be determined when looking at efficiency. The renowned BuSs show higher degrees of efficiency than the average, but not to the same extent as for the degrees of effectiveness. The degrees of efficiency of BuSs of TUs are slightly above average, whereas differences between BuSs from different regions of Germany are practically not discernible. While BuSs in Northern Germany have comparatively weak degrees of effectiveness, their realised outputs are in a comparatively favourable relation to the input used: their average degree of effectiveness is 25 % in 2005, well below the average of all BuSs, while their degree of efficiency is 64 % and therefore higher than average. Concerning the development of efficiency, no notable anomalies can be determined when comparing 2005/08. With reference to 2008/11, BuSs in Eastern Germany remain fairly constant, whereas on average all other BuSs decrease significantly.

To analyse whether there are indeed statistically significant influence factors, appropriate linear regressions are performed.Footnote 13 The degrees of effectiveness and of efficiency as well as the values of EC and TC are modelled as dependent variables, whereas reputation, BuSs of TUs and the regional allocation represent the independent variables (each defined as binary). Because each BuS is allocated to exactly one region, which results in perfect collinearity, one of the regions is selected as the reference category for the analysis. Thus, the results must always be interpreted relative to one region, for example from the perspective of the Western German BuSs; Table 5 gives an example with the degree of effectiveness in 2005 as the dependent variable.
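The collinearity problem and its remedy can be sketched as follows. The example assumes that the regressions contain an intercept, which the text does not state explicitly; the region labels, function names and the example rows are hypothetical.

```python
REGIONS = ("North", "East", "South", "West")


def full_dummies(region):
    """One 0/1 indicator per region; for every BuS these sum to 1, like an intercept column."""
    if region not in REGIONS:
        raise ValueError(f"unknown region: {region}")
    return [1 if region == r else 0 for r in REGIONS]


def reference_coded(region, reference):
    """Indicators for every region except the reference region (the omitted category)."""
    if region not in REGIONS or reference not in REGIONS:
        raise ValueError("unknown region or reference")
    return [1 if region == r else 0 for r in REGIONS if r != reference]


def design_row(renowned, technical_univ, region, reference="West"):
    """Regressor row: intercept, reputation dummy, TU dummy, three region dummies."""
    return [1, int(renowned), int(technical_univ)] + reference_coded(region, reference)


# Every BuS belongs to exactly one region, so the four dummies always add up to 1.
print([sum(full_dummies(r)) for r in REGIONS])

# With "West" as reference, the remaining columns are North, East and South.
print(design_row(renowned=True, technical_univ=False, region="North"))
print(design_row(renowned=False, technical_univ=True, region="West"))
```

Each region coefficient then measures the difference to the omitted region, which is why the results change their reading, but not their fit, when another region is chosen as reference.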

Altogether, 80 regression analyses (20 dependent variables from the perspective of all four regions) were calculated. For the sake of clarity, I refrain from presenting all tables and summarise the main results: although ultimately only a few influence factors are included in the regression analyses, a high proportion of the variance between the degrees of effectiveness for 2005 can be explained. Furthermore, there is a significant and high positive correlation between reputation and degree of effectiveness, as well as significant negative correlations between location in Northern or Eastern Germany and degrees of effectiveness. Therefore, the findings on the degrees of effectiveness in 2005 in Table 4 can also be confirmed from a statistical perspective. This also applies to the statements concerning the degrees of effectiveness in 2008 and 2011, whereby reputation in particular has a significant and high positive impact.

In relation to the degrees of efficiency, significant positive correlations with reputation emerged for all years, but they are less pronounced than for the degrees of effectiveness. Additionally, for the degrees of efficiency in 2005, there is a slightly less significant positive correlation (0.130) between BuSs of TUs and the degrees of efficiency. Concerning the allocation of BuSs to different regions in Germany, hardly any significant correlations with the calculated degrees, developments and changes can be derived. The explanatory power of the individual models decreases when looking at degrees of efficiency: only between 16 and 19.5 % of the variance can be explained. The regression models for the values of EC and TC yield no statistically reliable findings, i.e. at least for these variables, the factors included have no statistically verifiable correlation.

Implications, limitations and outlook

First of all, it can be stated that effectiveness increased for many BuSs between 2001/03 and 2004/06; between 2004/06 and 2007/09, however, the degrees of effectiveness declined. In regard to the causes, the improvements between 2001/03 and 2004/06 are mainly due to technological improvements. The deterioration between 2004/06 and 2007/09 is primarily due to technological setbacks. Technological improvements and/or setbacks here mean that the relevant area of the effective or efficient boundary for the BuSs has shifted, i.e. with the same input, more output can be generated after technological improvements and less output after technological setbacks. In the context of research performance in business administration, technological improvements or setbacks mean positive or negative changes in the behaviour of the researchers employed by the BuSs considered.

The reasons for these observations can ultimately only be speculated on. However, against the background of a changing academic landscape, this result appears plausible in principle. Public and internal discussions about the measurability of research performance increased significantly over this period. At the same time, target agreements were concluded at an increasing number of universities, which, among other things, are measured in a similar way to the measures in the CHE ranking (Jaeger 2006; Weichslerbaumer 2007). Jobs were created at the universities in management control and also as “ranking representatives”, resulting in some universities compiling ranking reports. As a result, most professors and their research assistants will have made more effort to boost their measurable research performance. The increasing pressure on professors and young scientists to present “measurable” research results when applying for a professorship could also explain the result.

However, the increasing effectiveness between 2001/03 and 2004/06 could also be an effect of a change in the CHE's data collection method. In the publication data of the 2005 ranking, only publications written by professors were included. Since the 2008 survey, the publications of all staff holding doctoral degrees have been evaluated, thus also including those of post-docs and professorship candidates. That is, more scientists are now integrated into the evaluation, so that the quantity of publications and the degree of effectiveness increase. On the other hand, many publications are written by post-docs in cooperation with their supervising professor. The real impact of these survey-related differences cannot be analysed, however, because the CHE does not make the raw data of its publication analysis available.

The technological setback behind the decreases in efficiency between 2004/06 and 2007/09 could also be a consequence of changes, particularly in researchers’ publication behaviour. To a certain extent, the CHE scoring system favours longer publications, e.g. articles in (conference) proceedings or monographs. The willingness to produce such publications may, however, decline, especially among young scientists, due to current professorial appointment practice, for which publications in international (top) journals are more relevant (Schlinghoff 2002, p. 143; Hennig-Thurau et al. 2004, p. 520; Leininger 2008, p. 39). Journal articles are typically much shorter than books and monographs and therefore gain fewer points. The comparatively high amount of work involved in writing a monograph, together with less appreciation, could have led to a shift of work effort in favour of writing journal articles. The significant decrease in nationally visible publication points supports this interpretation. When internationally visible (journal) publications are integrated into the dataset, the decline in degrees of effectiveness is accordingly weaker. Although the growth of internationally visible publications does not currently compensate for the decline in nationally visible publication points, the similarly pronounced efficiency development suggests that the higher degrees of effectiveness tended not to be achieved through increased numbers of staff.

Above all, the analyses of different influence factors reveal a high correlation between the reputation of a BuS and its degrees of effectiveness and of efficiency. The explanatory power of the regression models is, however, considerably higher for degrees of effectiveness. As the (research) reputation stems from the survey of professors, this suggests that reputation as perceived by this peer group is a result of the absolute number of research publications. Ultimately, this argument also seems plausible, because publications by individual researchers and their locations are probably more easily perceived than the number of publications in relation to the number of resident professors of a BuS and their research assistants.

With regard to the location of BuSs, only a few statements can be made. Although corresponding effects become apparent in an average observation—particularly with regard to a positive development of Eastern German BuSs—they do not stand up to the statistical analyses conducted here. In principle it only becomes apparent that Western German and Southern German BuSs exhibit higher degrees of effectiveness than those in other parts of Germany; for efficiency, this statement is however untenable.

Limitations of the analyses stem from the use of datasets from the CHE and the application of DEA. The CHE does provide a comprehensive database, and due to its repeated implementation (also in relation to other subject areas) the data collection has been tested. However, it cannot be ruled out that distortions arise, for example, due to the delimitation of what counts as a “business professor”. The differing numbers of named professorships over the course of time suggest that the classification of an overlapping professorship, such as Business Informatics, does not always follow uniform criteria.Footnote 14 Distortions are also possible in relation to the publication indicators. The CHE collects the BuSs’ publication data based on literature databases. In this respect, it has been found that none of the literature databases provides full coverage of business-relevant journals from a German point of view (Clermont and Schmitz 2008; Clermont and Dyckhoff 2012). In relation to the time comparison and the nationally visible publication points, it must be kept in mind that, in addition to the already mentioned difference in the integrated personnel, a quality weighting of journal articles has been applied when collecting publication points since the 2008 survey, which was not yet done for the 2005 survey.

The results of the DEA depend mainly on the indicators included and the comparability of the DMUs compared. In this respect, BuSs are clearly characterised by different individual situations (e.g. public vs. private universities).Footnote 15 The commitment to academic teaching and/or the differing number of courses offered is not considered in the present datasets. Therefore, despite the multidimensionality of the integrated output factors, the analyses ultimately cover only partial productivities of the entire academic performance.

The requirements for the comparability of BuSs are, however, greater the more periods are incorporated into the window analysis. Because an empirical production function and the resulting effective or efficient boundary are formed from the individual activity data for different points in time, it is assumed (usually implicitly) that the technologies of the various years are comparable. In particular, changes to the environment of BuSs as well as changes to the indicator surveys can limit the comparability of the years with each other. However, only two periods of three years each were compared with each other in the presented investigations; furthermore, the Malmquist index values were compared with those of the window analysis. The Malmquist index removes the deficiency in the comparability of activity data to some extent, because effectiveness and efficiency degrees are always determined in relation to the technology of the particular year. Since, in addition, the results of the window analyses and the Malmquist index strongly correlate with each other, the developments in effectiveness and efficiency seem to be appropriately robust.
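The pooling that underlies a window analysis can be illustrated with a deliberately reduced sketch. It assumes one input, one output and constant returns to scale, so that the boundary is simply the best output-per-input ratio across all pooled observations; the data and names are invented and do not reproduce the article's multi-output models.

```python
def window_scores(periods):
    """Score every (unit, year) observation against one boundary pooled over all years.

    periods maps a year to {unit: (input, output)}. Each unit-year is treated
    as a separate observation, which implicitly assumes comparable technologies.
    """
    pooled = {
        (unit, year): y / x
        for year, units in periods.items()
        for unit, (x, y) in units.items()
    }
    best = max(pooled.values())
    return {key: ratio / best for key, ratio in pooled.items()}


# Hypothetical data: year -> unit -> (staff, publication points).
periods = {
    2005: {"A": (10, 20), "B": (10, 10)},
    2008: {"A": (10, 30), "B": (10, 12)},
}

scores = window_scores(periods)
for key in sorted(scores):
    print(key, round(scores[key], 3))

# Observations that form the pooled boundary.
print([key for key, score in scores.items() if score == 1.0])
```

In this invented example the pooled boundary consists of a single 2008 observation, so all 2005 observations are measured against a later, more demanding benchmark. This is exactly why comparability of the years matters.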

Another methodological limitation could result from the assumption about the underlying returns to scale of the empirical production technology. To avoid infeasibilities, constant returns to scale were assumed in the current investigations. This assumption is not without problems, as it implies, for example, that a doubling of inputs leads to a doubling of outputs. On the other hand, Clermont et al. (2015) demonstrate, on the basis of the CHE research data examined here and likewise using DEA, that constant returns to scale do exist for medium-sized BuSs in Germany. Nevertheless, there is also a trend towards decreasing returns to scale for larger BuSs with more than 18 professorships.Footnote 16
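What the assumption of constant returns to scale means for the evaluation of schools of different size can be shown with a small worked example. It is a sketch with one input, one output and a single hypothetical benchmark school; none of the numbers stem from the CHE data.

```python
def crs_score(staff, output, benchmark):
    """Score of a unit against one benchmark that is scaled proportionally to its size."""
    bench_staff, bench_output = benchmark
    # Constant returns to scale: k times the staff is expected to yield k times the output.
    expected = bench_output * (staff / bench_staff)
    return output / expected


benchmark = (6, 12)  # hypothetical best practice: 6 professorships, 12 publication points

print(crs_score(6, 12, benchmark))   # the benchmark itself
print(crs_score(12, 24, benchmark))  # doubled input and doubled output
print(crs_score(24, 36, benchmark))  # large school with less than proportional output
```

The first two schools receive the same score of 1.0, whereas the large school is rated at 0.75 because it is compared with a proportionally scaled small school. If returns to scale are in fact decreasing for large BuSs, part of this shortfall reflects size rather than weaker performance.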

Possibilities for future research arise particularly with regard to the database currently used. On the one hand, the current analyses can be extended to the next survey years within the CHE research data in order to investigate the consequences of measures such as the Excellence Initiative and further research funding on business research performance. On the other hand, supplementary data from the area of academic teaching would be desirable. In terms of interactions between research and teaching, both positive and negative effects of teaching on research productivity are possible: teaching is a time-consuming task, but the contact and debates with students may also increase the research performance of a BuS. A structured analysis of this question and of its development over time has yet to take place. This is probably because, so far, there has been a lack of meaningful indicators for academic teaching. The teaching indicators discussed in business administration refer either to graduate numbers (which implies an unproblematic link between students’ performance and the performance of their lecturers) or to pure satisfaction indicators from students (Ahn et al. 2012).

Notes

A detailed substantive analysis of the results of the mentioned studies is not undertaken here, since, due to the use of different aggregation methods and indicators as well as country-specific features, the results are not comparable with each other. However, Bolli and Farsi (2015) give a detailed description of the results of some of these studies.

Although research performance data were collected for 2014 by the CHE, these data are no longer evaluated in a research performance ranking. Rather, only certain and only relative research performance data are incorporated into the so-called “Multifaceted Excellence” ranking. As the absolute data values necessary for the subsequent analyses could not be currently provided by the CHE, the data of 2014 are not integrated into the following analyses.

Based on these indicators, the CHE also determines corresponding relative indicators. Because the exact CHE procedure is irrelevant for the focus of the present article, I refrain from an explicit representation and refer to the CHE publications; cf. Berghoff et al. (2011).

As there is no recognised and/or clear mapping of the German federal states into north, south, east and west, I divide Germany into four approximately equal-sized areas from the geographical centre with two diagonal lines. Those federal states which are clearly in one of these entities and/or take up the largest area within an entity have accordingly been allotted to that entity.

For a broader discussion of the concepts of effectiveness and efficiency, see Ahn and Dyckhoff (2004).

For an overview of alternative ranking options by means of DEA, see Hosseinzadeh Lotfi et al. (2013).

For an overview, albeit an older one, of definitions and applications of the Malmquist index, see Färe et al. (1998).

Basically, the location of a BuS outside the data envelopment means that this BuS is allotted a degree of efficiency greater than 100 %. Thus, the BuS considered must reduce its outputs in order to be projected onto the efficient boundary; within this context, the DEA literature discusses super efficiencies (cf. Banker et al. 1989; Andersen and Petersen 1993). Calculating super efficiency degrees is not always possible: infeasibilities occur if a BuS cannot be projected onto the efficient boundary.

The degrees of effectiveness and of efficiency for individual years mentioned in Table 4 relate to the effective and/or efficient boundary that is made up of the activity data from the periods 2005/08 and 2008/11. For simplicity, these are referred to only as degrees of effectiveness and of efficiency in the following descriptions; in each case, the corresponding window analysis is meant.

In the DEA literature, subsequent statistical investigations of the calculated degrees of effectiveness and/or of efficiency are known as two-stage DEA (Liu et al. 2013, p. 12). However, this term is ambiguous, because efficiency analyses of multi-stage production processes are also subsumed under it, e.g. Cook et al. (2010).

For a more detailed analysis, see Clermont and Dirksen (2016).

In the datasets analysed, there are only three BuSs of private universities, but they do perform significantly better.

Albers (2015), however, determines increasing returns to scale. His analyses are based on a modified database and use a varied methodological approach, which is why direct comparisons are not given here.

References

Agasisti, T., & Pérez-Esparrells, C. (2010). Comparing efficiency in a cross-country perspective: The case of Italian and Spanish state universities. Higher Education, 59(1), 85–103.

Ahn, T., Charnes, A., & Cooper, W. W. (1988). Some statistical and DEA evaluations of relative efficiencies of public and private institutions of higher learning. Socio-Economic Planning Sciences, 22(6), 259–269.

Ahn, H., Clermont, M., Dyckhoff, H., & Höfer-Diehl, Y. (2012). Entscheidungsanalytische Strukturierung fundamentaler Studienziele: Generische Zielhierarchie und Fallstudie. Zeitschrift für Betriebswirtschaft, 82(11), 1229–1257.

Ahn, H., & Dyckhoff, H. (2004). Zum Kern des Controllings: Von der Rationalitätssicherung zur Effektivitäts- und Effizienzsicherung. In E. Scherm & G. Pietsch (Eds.), Controlling: Theorien und Konzeptionen (pp. 501–525). München: Vahlen.

Ahn, H., Dyckhoff, H., & Gilles, R. (2007). Datenaggregation zur Leistungsbeurteilung durch Ranking: Vergleich der CHE- und DEA-Methodik sowie Ableitung eines Kompromissansatzes. Zeitschrift für Betriebswirtschaft, 77(6), 615–643.

Ahn, H., & Neumann, L. (2014). Measuring effectiveness: A DEA approach under predetermined targets. International Journal of Business Analytics, 1(1), 16–28.

Albers, S. (2015). What drives publication productivity in German business faculties? Schmalenbach Business Review, 67(1), 6–33.

Albers, S., & Bielecki, A. (2012). Wovon hängt die Leistung in Forschung und Lehre ab? Eine Analyse deutscher betriebswirtschaftlicher Fachbereiche basierend auf den Daten des Centrums für Hochschulentwicklung. http://hdl.handle.net/10419/57428. Accessed 5 January 2016.

Andersen, P., & Petersen, N. C. (1993). A procedure for ranking efficient units in data envelopment analysis. Management Science, 39(10), 1261–1264.

Asmild, M., Paradi, J. C., Aggarwall, V., & Schaffnit, C. (2004). Combining DEA window analysis with the Malmquist index approach in a study of the Canadian banking industry. Journal of Productivity Analysis, 21(1), 67–89.

Banker, R. D., Charnes, A. C., & Cooper, W. W. (1984). Some models for estimating technical and scale inefficiencies in data envelopment analysis. Management Science, 30(9), 1078–1092.

Banker, R. D., Das, S., & Datar, S. M. (1989). Analysis of cost variances for management control in hospitals. Research in Governmental and Nonprofit Accounting, 5, 269–291.

Barham, B. L., Foltz, J. D., & Prager, D. L. (2014). Making time for science. Research Policy, 43(1), 21–31.

Beerkens, M. (2013). Facts and fads in academic research management: The effect of management practices on research productivity in Australia. Research Policy, 42(9), 1679–1693.

Berghoff, S., Giebisch, P., Hachmeister, C.-D., Hoffmann-Kobert, B., Hennings, M., & Ziegele, F. (2011). Vielfältige Exzellenz 2011: Forschung, Anwendungsbezug, Internationalität, Studierendenorientierung im CHE Ranking. Gütersloh: CHE.

Bielecki, A., & Albers, S. (2012). Eine Analyse der Forschungseffizienz deutscher betriebswirtschaftlicher Fachbereiche basierend auf den Daten des Centrums für Hochschulentwicklung (CHE). http://hdl.handle.net/10419/57429. Accessed 5 January 2016.

Bolli, T., & Farsi, M. (2015). The dynamics of productivity in Swiss Universities. Journal of Productivity Analysis, 44(1), 21–38.

Bort, S., & Schiller-Merkens, S. (2010). Publish or Perish. Zeitschrift Führung und Organisation, 79(5), 340–346.

Caves, D. W., Christensen, L. R., & Diewert, W. E. (1982a). Multilateral comparisons of output, input, and productivity using superlative index numbers. Economic Journal, 92(1), 73–86.

Caves, D. W., Christensen, L. R., & Diewert, W. E. (1982b). The economic theory of index numbers and the measurement of input, output, and productivity. Econometrica, 50(6), 1393–1414.

Charnes, A. C., Clark, C. T., Cooper, W. W., & Golany, B. (1985). A development study of data envelopment analysis in measuring the efficiency of maintenance units in the U.S. air forces. Annals of Operations Research, 2(1), 95–112.

Charnes, A. C., Cooper, W. W., & Rhodes, E. (1978). Measuring the efficiency of decision making units. European Journal of Operational Research, 2(6), 429–441.

Clermont, M., & Dirksen, A. (2016). The measurement, evaluation, and publication of performance in higher education: An analysis of the CHE research ranking of business schools in Germany from an accounting perspective. Public Administration Quarterly, 40(1), 133–178.

Clermont, M., Dirksen, A., & Dyckhoff, H. (2015). Returns to scale of business administration research in Germany. Scientometrics, 103(2), 583–614.

Clermont, M., & Dyckhoff, H. (2012). Erfassung betriebswirtschaftlich relevanter Zeitschriften in Literaturdatenbanken. Betriebswirtschaftliche Forschung und Praxis, 64(3), 324–346.

Clermont, M., & Schmitz, C. (2008). Erfassung betriebswirtschaftlich relevanter Zeitschriften in den ISI-Datenbanken sowie der Scopus-Datenbank. Zeitschrift für Betriebswirtschaft, 78(10), 987–1010.

Cook, W. D., Liang, L., & Zhu, J. (2010). Measuring performance of two-stage network structures by DEA: A review and future perspective. Omega, 38(6), 423–430.

Cooper, W. W., Seiford, L. M., & Tone, K. (2007). Data envelopment analysis: A comprehensive text with models, applications, references and DEA-solver software (2nd ed.). New York: Springer.

Doyle, J. R., & Green, R. H. (1994). Efficiency and cross-efficiency in DEA: Deviations, meanings and uses. Journal of the Operational Research Society, 45(5), 567–578.

Dyckhoff, H., Clermont, M., Dirksen, A., & Mbock, E. (2013). Measuring balanced effectiveness and efficiency of German business schools’ research performance. Zeitschrift für Betriebswirtschaft, Special Issue, 3(2013), 39–60.

Dyckhoff, H., & Gilles, R. (2004). Messung der Effektivität und Effizienz produktiver Einheiten. Zeitschrift für Betriebswirtschaft, 74(8), 765–783.

Dyckhoff, H., Rassenhövel, S., & Sandfort, K. (2009). Empirische Produktionsfunktion betriebswirtschaftlicher Forschung: Eine Analyse der Daten des Centrums für Hochschulentwicklung. Zeitschrift für betriebswirtschaftliche Forschung, 61(1), 22–56.

Fandel, G. (2007). On the performance of universities in North Rhine-Westphalia, Germany: Government’s redistribution of funds judged using DEA efficiency measures. European Journal of Operational Research, 176(1), 521–533.

Färe, R., Grosskopf, S., Lindgren, B., & Roos, P. (1992). Productivity changes in Swedish pharmacies 1980–1989: A non-parametric Malmquist approach. Journal of Productivity Analysis, 3(1), 85–101.

Färe, R., Grosskopf, S., Norris, M., & Zhang, Z. (1994). Productivity growth, technical progress, and efficiency change in industrialized countries. American Economic Review, 84(1), 66–83.

Färe, R., Grosskopf, S., & Roos, P. (1998). Malmquist productivity indexes: A survey of theory and practice. In R. Färe, S. Grosskopf, & R. R. Russell (Eds.), Index numbers: Essays in honour of Sten Malmquist (pp. 127–190). Dordrecht: Springer.

Flegg, A. T., & Allen, D. O. (2007). Does expansion cause congestion? The case of the older British Universities, 1994–2004. Education Economics, 15(1), 75–102.

García-Aracil, A. (2013). Understanding productivity changes in public universities: Evidence from Spain. Research Evaluation, 22, 351–368.

Gilles, R. (2005). Performance Measurement mittels Data Envelopment Analysis: Theoretisches Grundkonzept und universitäre Forschungsperformance als Anwendungsfall. Lohmar, Cologne: Eul.

Gutierrez, M. (2007). Messung der Effizienz von Professuren mittels Data Envelopment Analysis. Zeitschrift für Betriebswirtschaft, Special Issue, 5(2007), 101–130.

Hennig-Thurau, T., Walsh, G., & Schrader, U. (2004). VHB-JOURQUAL: Ein Ranking von betriebswirtschaftlich-relevanten Zeitschriften auf der Grundlage von Expertenurteilen. Zeitschrift für betriebswirtschaftliche Forschung, 56(9), 520–545.

Höfer-Diehl, Y. (2014). Hochschulcontrolling: Bezugsrahmen und Instrumente zur Sicherung der Lehreffektivität. Hamburg: Kovac.

Horne, J., & Hu, B. (2008). Estimation of cost efficiency of Australian universities. Mathematics and Computers in Simulation, 78(2), 266–275.

Hosseinzadeh Lotfi, F., Jahanshahloo, G. R., Khodabakhshi, M., Rostamy-Malkhalifeh, M., Moghaddas, Z., & Vaez-Ghasemi, M. (2013). A review of ranking models in data envelopment analysis. Journal of Applied Mathematics, 2013.

Jaeger, M. (2006). Steuerung an Hochschulen durch interne Zielvereinbarungen: Aktueller Stand der Entwicklungen. Die Hochschule, 2006(2), 55–66.

Johnes, J. (2006). Measuring teaching efficiency in higher education: An application of data envelopment analysis to economics graduates from UK universities 1993. European Journal of Operational Research, 174(1), 443–456.

Johnes, J. (2008). Efficiency and productivity change in the English higher education sector from 1996/97 to 2004/5. The Manchester School, 76(6), 653–674.

Keeney, R. L. (1992). Value-focused thinking: A path to creative decisionmaking. Cambridge, MA: Harvard University Press.

Keeney, R. L., See, K. E., & von Winterfeldt, D. (2006). Evaluating academic programs: With applications to U.S. graduate decision science programs. Operations Research, 54(5), 813–828.

Leininger, W. (2008). Publikationsverhalten in den Wirtschaftswissenschaften. In Alexander von Humboldt-Stiftung (Ed.), Publikationsverhalten in unterschiedlichen wissenschaftlichen Disziplinen: Beiträge zur Beurteilung von Forschungsleistungen (pp. 39–40). Bonn: Alexander von Humboldt-Stiftung.

Liu, J. S., Lu, L. Y. Y., Lu, W.-M., & Lin, B. J. Y. (2013). Data envelopment analysis 1978–2010: A citation-based literature survey. Omega, 41(1), 3–15.

Malmquist, S. (1953). Index numbers and indifference curves. Trabajos de Estadística, 4(2), 209–242.

Marginson, S., & van der Wende, M. (2007). To rank or to be ranked: The impact of global rankings in higher education. Journal of Studies in International Education, 11(3–4), 306–329.

Olivares, M., & Schenker-Wicki, A. (2012). The dynamics of productivity in the Swiss and German University sector: A non-parametric analysis that accounts for heterogeneous production. http://papers.ssrn.com/sol3/papers.cfm?abstract_id=2139364. Accessed 5 January 2016.

Rassenhövel, S. (2010). Performancemessung im Hochschulbereich: Theoretische Grundlagen und empirische Befunde. Wiesbaden: Gabler.

Schlinghoff, A. (2002). Personalauswahl an Universitäten: Die Berufungspraxis deutscher wirtschaftswissenschaftlicher Fakultäten in den neunziger Jahren. Zeitschrift für Betriebswirtschaft, Special Issue, 2(2002), 139–147.

Schrader, U., & Hennig-Thurau, T. (2009). VHB-JOURQUAL2: Methods, results, and implications of the German Academic Association for Business Research’s journal ranking. Business Research, 2(2), 180–204.

Stolz, I., Hendel, D. D., & Horn, A. S. (2010). Ranking of rankings: Benchmarking twenty-five higher education ranking systems in Europe. Higher Education, 60(5), 507–528.

Thanassoulis, E., Kortelainen, M., Johnes, G., & Johnes, J. (2011). Costs and efficiency of higher education institutions in England: A DEA analysis. Journal of the Operational Research Society, 62(7), 1282–1297.

Weber, M. (1978). Economy and society: An outline of interpretive sociology. Berkeley, CA: University of California Press.

Weichselbaumer, J. (2007). Hochschulinterne Steuerung über Zielvereinbarungen: Ein prozessbegleitender ökonomisch-methodischer Ansatz an der TU München. Zeitschrift für Betriebswirtschaft, Special Issue, 5(2007), 157–172.

Worthington, A. C., & Lee, B. L. (2008). Efficiency, technology and productivity change in Australian universities, 1998–2003. Economics of Education Review, 27(3), 285–298.

Zhang, H., Patton, D., & Kenney, M. (2013). Building global-class universities: Assessing the impact of the 985 Project. Research Policy, 42(3), 765–775.

Cite this article

Clermont, M. Effectiveness and efficiency of research in Germany over time: an analysis of German business schools between 2001 and 2009. Scientometrics 108, 1347–1381 (2016). https://doi.org/10.1007/s11192-016-2013-3
