Abstract

It is now generally accepted that institutions of higher education and research, largely publicly funded, need to be subjected to some benchmarking process or performance evaluation. Currently there are several international ranking exercises that rank institutions at the global level, using a variety of performance criteria such as research publication data, citations, awards and reputation surveys. In these ranking exercises, the data are combined in specified ways to create an index which is then used to rank the institutions. These lists are generally limited to the top 500–1000 institutions in the world. Further, some criteria (e.g., the Nobel Prize), used in some of the ranking exercises, are not relevant for the large number of institutions that are in the medium range. In this paper we propose a multidimensional ‘Quality–Quantity’ Composite Index for a group of institutions using bibliometric data, which can be used for ranking and for decision making or policy purposes at the national or regional level. The index is applied here to rank Central Universities in India. The ranks obtained compare well with those obtained with the h-index and partially with the size-dependent Leiden ranking and University Ranking by Academic Performance. A generalized model for the index using other variables and variable weights is proposed.

Introduction

Scientific performance is a multidimensional process with several different facets. However, for purposes of evaluation, administrators or directors of institutions sometimes require that the institutions be arranged in order of merit in a ranked list. This requires the creation of composite indicators based on measurable parameters (Vinkler 2006; Torres Salinas et al. 2011) which reflect merit in accordance with human judgement.

At present there are several global performance ranking exercises of universities and higher education and research institutes based on a variety of variables. The ranked lists published cover the top 500–750 or so institutions in the world. In the case of a particular region or country, for instance a developing country, these lists may not cover a meaningful section of total institutions, and are useful only insofar as one can celebrate the status of the few top performing institutions in the country that make it to the international rank lists. While it may be considered important to aspire to these ranked lists or work towards inclusion, it may be more meaningful to design simpler indices based on local data that can rank institutions within the country or region for local decision making or policy purposes.

Bibliometric indicators measure the volume and quality of research output in terms of published papers and citations, and these and derived indicators are now routinely used in evaluation. We propose to do the same using basic and some derived indicators to obtain a ‘Quality–Quantity’ Composite Index (QQCI) for the set of 39 Central Universities in India. Access to a citation database is required, and we have chosen to work with the Web of Science (WoS), which is an accepted standard in bibliometric work. The objective of this kind of national ranking exercise is to show where each university stands, which can lead to a kind of self-assessment, and course correction if need be, at the level of national institutions. It may also assist national policy and funding decisions. The attempt is also to keep the methodology simple to ensure easy replication of results, possibly as an annual exercise.

International ranking exercises and related work

Our position is that global ranking exercises are not suitable for developing countries or institutions in the mid-range for reasons listed above. The Academic Ranking of World Universities (ARWU)Footnote 1 considers number of Nobel prizes, Fields medals, highly cited researchers—variables which are relevant mainly for some prestigious universities. In fact in the ARWU ranking, the top 500 institutions are found in only 35 countries. The USA has a dominant position, with 85 percent of the top 20 institutions, 51 percent of the top 100 institutions, and 45 percent of the top 200 institutions (Liu and Cheng 2005; Liu et al. 2005). The US bias has been noted by others such as Van Raan (2005), who also points out pitfalls in ranking institutions. For example, the cleaning and merging of institution names can be difficult due to numerous alternative versions of an institution’s name as it appears in the citation database. While this would be a problem common to any ranking exercise, in ARWU there is also the major question of disambiguation of names of scientists (to find highly cited researchers) which continues to be a crucial problem in bibliometrics. In the initial exercise of the Shanghai ranking, only 3 institutions from India found a place in the list (Liu et al. 2005). ARWU considers over 1000 institutions and produces a list of 500. Another difficulty in this ranking is the problem of awards—should the current institution or affiliation of the awardee be considered, or the institution at the time of the award, or the institution where the award winning work was done? Similar problems can be envisaged for allocation of credit for alumni.

The Leiden RankingFootnote 2 based exclusively on bibliometric indicators uses field normalization and normalization with respect to year. While this is desirable, it is difficult to perform if one is working exclusively with national data, and would require processing of citation data at the global scale. The Leiden Ranking uses papers, citations and collaboration, and offers separate ranked lists for each of these. It offers both size-dependent and size independent ranking based on different criteria (Waltman and Van Eck 2012; Waltman et al. 2012). When it comes to Indian institutions, only four institutions appear in the recent Leiden ranking.

The Webometrics or Web RankingFootnote 3 is based entirely on Web visibility and is not related to our objective of research performance based ranking (Aguillo et al. 2008).

Since 2009, SCIMago Institution Ranking (SIR)Footnote 4 has been publishing ranks for institutes worldwide based on different research indicators such as research output, international collaboration and impact from the SCOPUS database. This ranking is published by a group of researchers in a Spain-based research organization, consisting of researchers from different institutions such as University of Granada, Spanish National Research Council (CSIC), Charles III University of Madrid. At present SCIMago ranks over 5000 institutions. However, access to the results is password controlled.

The TimesFootnote 5 and QSFootnote 6 Ranking use other data on internationalization and reputation surveys as part of the ranking exercise, which would need time-consuming and expensive surveys. They both use perceptual scores, which in addition to being highly subjective are not necessarily representative of demographic and cultural variations of responders. Furthermore, while evaluation of teaching, a key function of a university, is important, objective measures of teaching that can be quantified have not been developed. It is unrealistic at present to include teaching appraisals as part of quantified ranking exercises, particularly in the Indian context.

A relatively recent entrant into the global ranking exercises is URAP,Footnote 7 which was started in 2009 in Turkey. The University Ranking by Academic Performance (URAP) Research Laboratory was established at the Informatics Institute of Middle East Technical University in 2009. The main objective of URAP was to develop a ranking system for the world universities based on academic performance determined by quality and quantity of scholarly publications. A yearly World Ranking of 2000 Higher Education Institutions has been released since 2010. Its coverage is much larger than the earlier ranking exercises. We have found some overlap of institutions between the URAP list for India and the set of Indian Central Universities, and URAP has been included in our comparison for validating our results.

Apart from the standard global ranking exercises, there are other sites where institutions are rated in different disciplines. The website—Mapping Scientific Excellence (www.excellencemapping.net) has a tool that rates global higher education and research institutions in different disciplines in terms of ‘best paper rate’ and ‘best journal rate’ (Bornmann et al. 2015). In addition, country specific characteristics like GDP, population and perceived corruption can be introduced as co-variates (Bornmann et al. 2014). Both ranking lists and geographical maps are given, indicating whether the performance is below average or above average. Starting with year 2005, the website is currently in its fourth version covering the publication years 2008–2012. We found 10–20 Indian institutions in these lists, but since they were subject specific and did not include many Central Universities, we were unable to compare them directly with our results.

Other published works try to assess the ranking systems and also suggest alternative methods and improvements for ranking research competence of institutions. Jeremic et al. (2011) present ideas for evaluating the Shanghai University’s Academic Ranking of World Universities (ARWU) and propose the I-distance method for ranking. Molinari and Molinari (2008) tried to provide a metric which removes the size dependence and proposed an index as a complement to the h-index, to compare the scientific production of institutions of different sizes. García et al. (2012) introduced ranking of research output of universities based on the multidimensional prestige of influential fields, with flexibility to reset different thresholds and obtain different levels of ranking. In Matthews (2012), different ranking methods were assessed to look at the positions of a set of South African Universities. In a similar study, Geraci and Esposti (2011) presented and compared different rankings used to evaluate the prestige and merit of Italian universities, and also proposed a new approach that combines both national and international standing of Italian universities. Lazaridis (2010) presented a study to evaluate the research performance of departments of universities using the mean h-index. Liu and Liu (2005) reported methodologies used in rankings proposed by different Chinese Universities and addressed the characteristics inherent to those rankings. Billaut et al. (2010) take a critical approach and present a criticism of ARWU by attempting to show that the criteria used in ARWU are not relevant and that the aggregation methodology suffers from major problems. Torres Salinas et al. (2011) have developed a bidimensional Quality–Quantity index and used it to rank Spanish universities in two fields of science.

Despite the abovementioned and other studies, the problem of developing countries whose Universities do not find representation in the global ranking exercises has not been given enough attention. This provides the motivation for the current study, where we have adopted a methodology somewhat similar to Torres Salinas (Torres Salinas et al. 2011).

Need for regional or national ranking exercises

As stated before, it is felt that for countries or regions that are not at the core of the global science system or are on its periphery, there is a need for regional or national level ranking exercises based on data that is readily available and can be processed without undue difficulty. A Quality–Quantity ranking was done recently by us for Computer Science departments in India (Uddin and Singh 2015; Singh et al. 2015). On the other hand, some developed countries like Italy have more than one single ranking exercise, both government initiated and private (Geraci and Esposti 2011). This kind of situation leads to a problem of too much ranking information, as the criteria and objectives of the exercises are different and can lead to very different ranked lists, requiring further consolidation.

In India, an early start in this direction was made with the project National Mapping of Science at NISTADS, New Delhi in the late 1990s, funded by the Department of Scientific and Industrial Research (Basu and Nagpaul 1998). Ranked lists of Indian institutions in 12 broad subject areas were produced and 5000 copies distributed as a booklet at the Indian Science Congress held in the first week of January each year, in order for the results to be accessible to a large number of scientists and administrators (Basu et al. 2000). Absolute numbers of papers from the Science Citation Index were used and journal impact factors used as a proxy for quality, as was the practice at the time. The objective was to reach scientists, administrators, principals and vice-chancellors in India and elicit interest among them in using bibliometric evidence for policy formulation. The other motive was to reach the scientists and institutions who could then assess where they stood, and it was hoped that this exercise would lead to a virtuous cycle of introspection and non-pecuniary incentives. The exercise was continued for 3 years before being discontinued. While ongoing, it generated a lot of interest in individuals, professors and heads of institutions, at a time when global rankings were few in number. Since then there have been sporadic national assessment exercises (Gupta 2011), but without a single established methodology. Recently the Government of India has defined a new framework for ranking Universities and colleges (NIRF 2015) which will take into account parameters such as faculty-student ratio, faculty qualification (e.g., Ph.D. degree), library and other facilities, sports and extra-curricular facilities, publications, citations, patents, performance in examinations, outreach and inclusivity (for women, socially disadvantaged, disabled etc.), peer evaluation and application-to-seat ratio. However, the ranking exercise is yet to be initiated.

Our objective here is to propose a single QQCI and to demonstrate its utility by using it to rank a given set of institutions in India. As a test case we have taken 39 Central Universities in India. The Composite Index is calculated for each University and the ranking is done on this basis. To validate our methodology we have tested it against ranks obtained from an accepted indicator, the h-index. A scientist has index h if h of his/her Np papers have at least h citations each, and the other (Np-h) papers have fewer than h citations each (Hirsch 2005). Although defined initially for individual scientists, it can be used in an analogous way for institutions (Huang 2012). The reason for using the h-index for validation is that it is also a ‘Quality–Quantity’ indicator. We neither expect nor demand total agreement, but expect the h-index to act as a rough guide to check that our ranking is not totally out of line. In addition, we check our ranking against the Leiden ranking which is also based exclusively on bibliometric indicators as ours is, and URAP which has more institutions from India as well as the same sort of bibliometric indicator based ranking system.
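The definition of the h-index quoted above can be illustrated with a minimal sketch. The function name `h_index` and the citation counts are hypothetical and chosen only for illustration; they are not taken from the data of this study.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)  # most cited paper first
    h = 0
    for position, count in enumerate(ranked, start=1):
        if count >= position:
            h = position  # the first `position` papers all have >= position citations
        else:
            break
    return h


# Hypothetical citation counts for the papers of one institution.
example_counts = [25, 8, 5, 3, 3, 1, 0]
print(h_index(example_counts))
```

For an institution, the same function would be applied to the citation counts of all papers carrying that institution's affiliation.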

The Central Universities in India

The Central Universities in India have been instituted with the specific mandates of academic excellence and inclusion with central government support. Some of them were existing reputed universities given the status of Central Universities, while others are newly founded universities located in each state to give an equitable geographical spread to institutes of higher education in the country. They are funded by the University Grants Commission (UGC)Footnote 8 set up in 1953 by the Government of India to initiate a planned development of higher education in the country. The UGC worked with a stated mandate to promote quality education, but with regard to the concerns of access, equity, quality, excellence, relevance and value based education.Footnote 9 Under the UGC’s oversight and advisory framework, higher education institutions are divided into different categories: Central Universities, deemed-to-be Universities, State Universities, Colleges and Distance Education Centres. Each Central University is established by an individual Act of Parliament and exercises autonomy in its academic and administrative affairs in keeping with the provisions of the relevant Act. Their entire maintenance and development expenditure is met by the Central Government. Given their special status, it is important to see how the Central Universities have been performing.

The Central Universities were set up in various stages. At the time of the 11th 5-year plan in 2009, there were 19 (+4) Central Universities. It was recommended by the Parliamentary Standing Committee on Human Resource Development that Central Universities be set up in all the remaining states, including the Northeastern states and Sikkim. At present there are 39 Central Universities spread out all over India (see Fig. 1) in addition to some other central institutions of a different nature. The Indira Gandhi National Open University (IGNOU) (an institution devoted to distance education), the Indian Maritime University, and the South Asian University (internationally administered by the South Asian SAARC countries) have not been included in the study as they follow different models.

Data and methodology

The data on research publications has been taken from the Web of Science (Science Citation Index-Expanded) for the years 1990–2014 (Table 1). Data on faculty size for the year 2014 is obtained from the University Grants Commission note on vacant positionsFootnote 10 (UGC 2014). A total of 59,339 papers (58,781 unique, after eliminating duplicates) with 590,227 citations were downloaded for the study. As can be seen from Table 1 (sorted by number of papers), the Central Universities vary significantly both in size and date of inception. Out of 39 Universities, 16 were established as recently as 2009, while 7 more were established earlier but given Central University status between 1988 and 2009. The older Universities are the University of Allahabad, established in 1887 and given Central University status in 2005, Banaras Hindu University established in 1916, Aligarh Muslim University and Jamia Milia Islamia in 1920 and Vishwa Bharati in 1939. We have taken a 25 year time period for evaluating the Universities. We will try to explain our choice in the following way. These Central Universities have never been evaluated together as India does not have a regular ranking exercise in place as yet. So, there is interest in the question of what has been the contribution of the Universities over their lifetime. We took a period for which bibliometric data was available (in SCI-expanded)—though of course the older Universities are very much older than this period. The last year of data should have been left out as per guidelines suggested in the literature (Bornmann 2014).

The older Central Universities have had a longer period to establish themselves and gain in reputation. Is the reputation upheld in a research assessment? To assess the Universities we take bibliometric measures of research productivity and citation and combine them into a Composite Index as explained in the next section. Data used are shown in Table 1, where all the Central Universities are sorted on the basis of their Total Papers (TP) in descending order. Further, in this table Total Citations (TC), Number of highly cited papers in the top 1% of cited papers (HiCP)Footnote 11 and International Collaborative Papers (ICP) are shown. Here ICP refers to papers having at least one author from a different country (as seen from the author affiliation field). Along with these statistics some calculated values are also shown: Average Citations Per Paper (ACPP), Citations Per Capita (CPC) and the well known h-index. Here, ACPP is the direct ratio of TC to TP and CPC is the ratio of TC to Faculty Size.

Quality-quantity Composite Index: design

The Composite Index proposed here is based on bibliometric indicators, specifically, number of papers, citations, highly cited papers (relative, i.e., top 1% cited within the set of papers considered) and internationally collaborative papers, with at least one author located outside India. The number of papers represents quantity of research output as one component of research performance. Citations are used as a proxy for quality. Highly cited papers are a reliable indicator of qualitative performance as they derive from citations. Internationally collaborative papers, while not directly a measure of research excellence, have been found to attract a higher number of citations (Glänzel 2001). These are size-dependent or extensive variables. In other words, larger institutions are expected to have larger values of the extensive variables.

For each university there are four size-dependent or extensive variables: TP, TC, HiCP and ICP. In addition we have two size-independent or intensive variables, ACPP and CPC, which are taken as quality indicators. These are of the form output/input. CPC is not used in the main index simply because yearwise figures for faculty size were not available. Numbers were available only for the year 2014 (though faculty size did not change very significantly during the last 5 years), and have been used in the variable CPC as an estimate. As will be indicated later, CPC is used as a measure of quality to create a modified version of the Composite Index.

To obtain the Quality–Quantity Composite Index (QQCI) we first reduce the values of each variable to a score lying between 0 and 1 by taking the ratio of the value to the maximum value of the corresponding field in the dataset. We take the average of the scores of the four extensive variables, called the Q score, and then take the product of the Q score individually with the scores of the intensive variables ACPP and CPC.
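The equations referred to in the text as Eqs. (1)–(3) are not reproduced in this version. Following the verbal description above, they can plausibly be written as below. The subscript notation (i for a university, max for the largest value of a variable in the dataset) is an assumption made here for illustration and may differ from the original typesetting.

```latex
\begin{align}
Q_{\mathrm{score}}(i) &= \frac{1}{4}\left(\frac{TP_i}{TP_{\max}} + \frac{TC_i}{TC_{\max}} + \frac{HiCP_i}{HiCP_{\max}} + \frac{ICP_i}{ICP_{\max}}\right) \tag{1}\\
QQCI(1)_i &= Q_{\mathrm{score}}(i) \times \frac{ACPP_i}{ACPP_{\max}} \tag{2}\\
QQCI(2)_i &= Q_{\mathrm{score}}(i) \times \frac{CPC_i}{CPC_{\max}} \tag{3}
\end{align}
```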

Each Composite Index QQCI is therefore related to two independent quality parameters Average Citations Per Paper and Citations Per Capita (Faculty).

Q score in Eq. (1) can in principle be generalized by using different weights for the extensive variables, viz., papers, citations, highly-cited papers and international collaboration, depending on the stated objectives of the rating agency. The formula for the index contains a linear combination of extensive variables, here specifically with equal coefficients. According to our formulation, the coefficients of the extensive or size-dependent variables can be varied by a user (weights or coefficients being fractions with a total of 1) depending on the objectives, i.e., are they interested in an evaluation based primarily on total output or total citations? For smaller Universities, international collaboration may be too small to be relevant. Based on these considerations a rating agency may choose to give appropriate weights to the different extensive variables (of course the weights must not be altered across Universities or time for several consecutive assessments if they are to be compared). The weights used would need to be specified when anyone wants to undertake the ranking. We believe this feature to be a strength of the model, making it more general and flexible for a variety of applications and contexts. This was part of our objective. The intensive or size-independent variables cannot be aggregated and are not weighted in this way.

The generalized model can be useful when, for example, more emphasis is desired to be given to say publications over citation or vice versa. In general to keep the rank order stable or consistent these weights should not be changed too frequently. In our case we have decided to give equal weightage to publication, citation, highly cited papers and foreign collaboration. Flexibility is important for national or regional ranking where some institutions may have very small values for some variables. For example, foreign collaboration may be given less weight if it is desired that smaller institutions are not penalized for absence of foreign collaboration. We suggest using equal weights initially, unless there are well argued, convincing reasons for adopting other weights.
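A minimal sketch of the generalized Q score is given below, assuming the extensive variables have already been scaled to scores between 0 and 1. The function name `weighted_q_score`, the example scores and the alternative weights are hypothetical and serve only to illustrate the weighting. They are not recommended values.

```python
def weighted_q_score(scores, weights):
    """Weighted mean of the scaled extensive-variable scores of one institution."""
    if set(scores) != set(weights):
        raise ValueError("scores and weights must cover the same variables")
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must add up to 1")
    return sum(weights[name] * scores[name] for name in scores)


# Hypothetical scaled scores for one university (value / dataset maximum).
scores = {"TP": 0.5, "TC": 0.4, "HiCP": 0.5, "ICP": 0.25}

# Equal weights, as used in this paper.
equal = {"TP": 0.25, "TC": 0.25, "HiCP": 0.25, "ICP": 0.25}

# Illustrative alternative: foreign collaboration given less weight.
low_icp = {"TP": 0.3, "TC": 0.3, "HiCP": 0.3, "ICP": 0.1}

print(weighted_q_score(scores, equal))
print(weighted_q_score(scores, low_icp))
```

With equal weights the function reduces to the plain average of the four scores. Whatever weights are chosen, the same set has to be applied to every institution in the comparison.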

To summarize, the mean value of a set of extensive variables relating to quantity is multiplied by an intensive quality variable to obtain the Quality–Quantity Composite Index. To provide a rationale for this procedure we suggest an analogy: the procedure may be interpreted as being analogous to taking a basket of goods (the extensive variables) and multiplying the quantity with the price (the intensive variables) to obtain total value (Quantity * Quality ~ Value). The procedure adopted to obtain the Composite Index provides a better rationale than simply summing over the effect of all the variables.

The design has some similarity to the work of Torres Salinas et al. (2011) who have also considered a bidimensional ‘Quality–Quantity’ index. However, they use the geometric means of three ‘quantity’ indicators and three ‘quality’ indicators, viz. Total papers, Total citations and h-index for quantity, and the ratio of papers in the top JCRFootnote 12 quartile, citations per paper, and proportion of papers that belong to the top 10% most cited (highly cited) papers for quality. A Composite Index is finally created as the product of the quality and quantity indices.

Our approach differs from international rankings that use awards like the Nobel prize, internationalization of student and faculty, alumni, etc., variables that may not be immediately relevant for many of the universities in our set.

Since the quality variable can be either ACPP or CPC, the two possible values for the product are QQCI(1) which is obtained by taking the product of Q score with ACPP, and QQCI(2) obtained from taking a similar product with CPC. Since yearwise data on faculty size was not available, QQCI(2) has not been explored in detail, although it has been computed (for a shorter period of 5 years) with limited data to demonstrate the methodology. Wherever Composite Index or QQCI is mentioned without specifying which one, it is to be understood as QQCI(1).
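The computation of QQCI(1) and QQCI(2) can be sketched as follows, assuming equal weights for the four extensive variables. The helper names `max_scaled` and `qqci` and all figures for the three universities are hypothetical; none of the numbers come from Table 1.

```python
def max_scaled(values):
    """Divide each value by the dataset maximum, giving scores in [0, 1]."""
    top = max(values)  # assumes at least one non-zero value
    return [v / top for v in values]


def qqci(records, quality_key):
    """Return {name: Q score * scaled quality score} for every record."""
    extensive = ["TP", "TC", "HiCP", "ICP"]
    scaled = {key: max_scaled([r[key] for r in records]) for key in extensive}
    quality = max_scaled([r[quality_key] for r in records])
    index = {}
    for i, record in enumerate(records):
        # Q score: plain average of the four scaled extensive variables.
        q_score = sum(scaled[key][i] for key in extensive) / len(extensive)
        index[record["name"]] = q_score * quality[i]
    return index


# Hypothetical universities, for illustration only.
records = [
    {"name": "A", "TP": 1000, "TC": 10000, "HiCP": 10, "ICP": 200, "faculty": 500},
    {"name": "B", "TP": 500, "TC": 4000, "HiCP": 5, "ICP": 50, "faculty": 200},
    {"name": "C", "TP": 100, "TC": 500, "HiCP": 0, "ICP": 10, "faculty": 100},
]
for r in records:
    r["ACPP"] = r["TC"] / r["TP"]       # Average Citations Per Paper
    r["CPC"] = r["TC"] / r["faculty"]   # Citations Per Capita

qqci_1 = qqci(records, "ACPP")  # quality taken from ACPP
qqci_2 = qqci(records, "CPC")   # quality taken from CPC
print(qqci_1)
print(qqci_2)
```

Because every score is a ratio to the dataset maximum, the index values are relative to the set of institutions being ranked and change if the set changes.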

Validation

To validate our results we have ranked the institutions by the Composite Index and compared with ranks obtained from the h-index (Hirsch 2005), which is an accepted measure of quality and quantity. Although defined originally for comparing the performance of individuals, the h-index has been used at the level of institutions as well (Huang 2012; Huang and Lin 2011; Huang and Chi 2010). The h-index is known to have several shortcomings (Costas and Bordons 2007), but it was thought to be a suitable comparison since both are ‘Quality–Quantity’ indices with respect to papers and citations, albeit calculated differently. We assume that if the new index produces a rank list that is approximately the same as that produced by the h-index, its validity is established.

We denote by RACPP the rank of an institution calculated from the product of Q score and ACPP score, as in Eq. (2). Similarly, RCPC denotes the rank calculated from the product of Q score and CPC score, as in Eq. (3). RCPC is calculated for the time period 2010–2014, as QQCI(2) is calculated for the same period. Further, Rh-index denotes the rank calculated from the h-index.

Results

Correlation

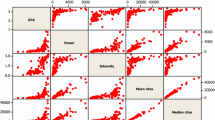

We first test for correlation between the variables. The variables, both extensive and intensive, are well correlated. A cross correlation across the 6 data variables shows high correlation between them indicating that high productivity (measure of research output) and high citation go hand in hand in this data set (Table 2). According to Liu in his paper on the Shanghai Ranking, good correlation in their dataset apparently showed that they were using a cohesive data set (Liu et al. 2005).

Composite Index

In the interest of completeness, intermediate values of Q score and other scores leading up to the index values are given in Table 3. In this table the data is shown for two time periods, the full period 1990–2014 and the recent period 2010–2014. The two periods indicate performance over the entire time span and also in the recent past. The data in this table is sorted according to the Q score of the 1990–2014 period in descending order. As mentioned earlier, faculty size is available only for the year 2014, so it would not be correct to calculate the CPC score for 1990–2014 with the faculty strength of 2014. But it has been used as an estimated value for the last 5 years, i.e., 2010–2014, since faculty size is not expected to change too much in 5 years. Thus, QQCI(2) is computed for the period of the last 5 years (2010–2014) only. QQCI(1) is computed for both the whole time period (1990–2014) and the recent 5 year block (2010–2014).

The Composite Index QQCI(1) is compared to the h-index of the Universities and found to vary as a cubic polynomial in h (R² = 0.9996) as seen in Fig. 2 for the full time period, i.e., 1990–2014. This relationship has also been observed by others (Nishy et al. 2012). However, the significance of a cubic relationship is not immediately clear.

Ranks

Ranks are assigned for the full period (1990–2014) on the basis of the Composite Index using both Average Citations Per Paper (ACPP) and Citations Per Capita (CPC) in turn to obtain ranks RACPP and RCPC. The relationship between the Composite Index and rank is shown in Fig. 3 for RACPP. For high values of Composite Index (high rank) the curve falls rapidly, and levels off later. This shows that there will be better discrimination of ranks at the higher levels and the results will be less accurate at lower ranks. We conclude from Fig. 3 that about 15 of the top performing Universities can be assessed, while the remaining have too small a value of the Composite Index (<0.005) to be distinguished well using the Composite Index.

It is also seen in Fig. 4 that the relationship between the cumulative value of the Composite Index QQCI(1) (1990–2014) and the corresponding rank RACPP is Bradfordian in nature (Bradford 1934). This indicates that the Composite Index(1) incorporates the skew distribution properties of a scientometric variable. As mentioned earlier, QQCI(2) has data on faculty size for only 1 year, and so this analysis is omitted for QQCI(2).

RACPP and RCPC are then compared to ranks obtained from the h-index (the Rh-index). As seen in Fig. 5, RACPP is very well correlated with Rh-index. Note that up to about rank 15 the ranks are identical. Beyond this the method does not yield reliable results.

RCPC is less well correlated with Rh-index, which is to be expected since the additional factor of faculty size has been included in RCPC which does not feature in h-index. Note that the ranks RCPC and Rh-index rarely match, either for the first 15 universities or the rest.

The exercise is repeated for short term data, 2010–2014. It is found that the Spearman rank correlation is less for the short term data (Table 4). This was done to ascertain whether ranking can be done at frequent intervals of time, and if the results would be stable. We find that the correlation is better for longer time intervals.
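The comparison of two rank lists can be sketched as below. This is a simplified illustration that assumes no tied scores, in which case the Spearman coefficient reduces to 1 − 6Σd²/(n(n² − 1)). The function names and the index and h-index values for the four universities are hypothetical, not values from Tables 3 or 4.

```python
def ranks_from_scores(scores):
    """Assign rank 1 to the highest score; assumes no tied scores."""
    order = sorted(scores, key=scores.get, reverse=True)
    return {name: position for position, name in enumerate(order, start=1)}


def spearman_rho(rank_a, rank_b):
    """Spearman rank correlation for two tie-free rankings of the same items."""
    n = len(rank_a)
    d_squared = sum((rank_a[name] - rank_b[name]) ** 2 for name in rank_a)
    return 1 - 6 * d_squared / (n * (n * n - 1))


# Hypothetical Composite Index and h-index values for four universities.
composite = {"U1": 0.9, "U2": 0.4, "U3": 0.1, "U4": 0.05}
h_values = {"U1": 60, "U2": 35, "U3": 12, "U4": 20}

r_composite = ranks_from_scores(composite)
r_h = ranks_from_scores(h_values)
print(spearman_rho(r_composite, r_h))
```

With tied scores, which are likely among the lower-ranked universities, average ranks and the general correlation formula would be needed instead.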

Normalization by field and by year

In this paper, rank is calculated based on the scores QQCI(1) and QQCI(2) where the first score is the product of the average Q score and the score of ACPP, while the second is where the product of Q score is taken with the score of Citations Per Capita. Raw citation counts have been used. It is known that citation levels vary across disciplines, and also by age of publication, and it is generally accepted that some form of normalization is needed to obtain parity across disciplines (Waltman et al. 2011; Leydesdorff and Opthof 2010). However, normalization requires analyzing citations of all papers in the field (for a given year) at the world level, to obtain average citation levels and to calculate expected citation rates of papers. This is beyond the scope of this exercise, as we are only dealing with national data, while field normalization would require using world papers in a given field.
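To make the data requirement concrete, one widely used form of field and year normalization is the mean normalized citation score, sketched below. The symbols are introduced here for illustration only: n is the number of papers of an institution, c_j the citations received by paper j, and e_j the expected citations, i.e., the world average for papers of the same field and publication year. This quantity is not computed in the present study.

```latex
\mathrm{MNCS} = \frac{1}{n}\sum_{j=1}^{n}\frac{c_j}{e_j}
```

Obtaining each e_j is what requires citation data for all world papers in the field, which is why the step is not feasible with national data alone.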

Comparison with h-index

In order to compare our index with a standard, accepted measure of quality we have chosen the h-index for the period of 1990–2014. It depends on both quality (citations) and quantity (papers) and increases with time (Hirsch 2005). The h-index, though defined initially by Hirsch for individual authors, has also been used at the level of institutions (Huang 2012; Huang and Lin 2011; Huang and Chi 2010). Huang found that the ranking of Universities based on h-index alone compares favourably with the ARWU ranking by the Shanghai Jiao Tong University, which is based on a number of factors such as awards and international character, in addition to citations (ARWU Methodology 2011).

The Composite Index(1) has a good correlation with the h-index, as seen earlier from Figs. 2 and 5, which increases our confidence in the new index. What are the advantages, if any, of using this indicator over the h-index? Firstly, one can work with aggregated numbers such as total papers and citations, and one does not have to know the citations of individual papers as would be required to assign h-index to an institution. (The procedure for finding h-index cannot be easily automated for a large number of institutions, although it is available as a built-in function in the WoS.) Secondly, our Quality–Quantity Index QQCI includes quantities like percentage of highly cited papers and number of internationally collaborative papers, bringing other dimensions into the performance assessment. Another problem with the h-index is that it increases with time and would therefore give older institutions an advantage. To eliminate this advantage, one could suggest that h-index be computed over shorter, more recent intervals in time. However, this is not easy to implement as citations take several years to accumulate. The h-index also penalizes institutions that are not prolific but have high citations, whereas our method takes the average citation per paper into account. In summary, we have tried to argue that our method may be computationally simpler than the h-index, while it gives a ranking that does not differ too much from the h-index ranking and does not suffer from some of the latter’s deficiencies.
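The contrast between the two data requirements can be illustrated with a short sketch. The h-index needs the citation count of every individual paper, whereas ACPP needs only two aggregates. The two citation lists below are hypothetical and serve only to show how a small, highly cited output is treated by each measure.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for position, cites in enumerate(ranked, start=1):
        if cites >= position:
            h = position
        else:
            break
    return h


def acpp(citations):
    """Average citations per paper; only total citations and total papers matter."""
    return sum(citations) / len(citations) if citations else 0.0


# Hypothetical institutions (illustration only).
prolific = [5] * 20        # many papers, modest citations each
selective = [40, 35, 30]   # few papers, highly cited

h_prolific, h_selective = h_index(prolific), h_index(selective)
acpp_prolific, acpp_selective = acpp(prolific), acpp(selective)
```

Here the h-index places the prolific institution higher (5 against 3), while ACPP places the selective institution higher (35 against 5), which is the penalty on less prolific institutions referred to above.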

Comparison with international rankings

Next we compare our results with international rankings in a limited way since most of the Indian Universities do not get included in the top 500 or so institutions as rated by different ranking agencies. Comparisons are made for the short period 2010–2014 since most of the global rankings fall in nearby time periods. The ranking for 1990–2014 is shown in the last column of Table 5. As the Leiden rankings are entirely based on bibliometric research indicators, we chose to compare our results QQCI(1) and QQCI(2) with the Leiden ranking, both size-dependent and size-independent (Table 5). Of course the Leiden ranking is based on 2014 data while our ranking is based on 1990–2014 data. The default values for Leiden indicators P (10 %) and PP (10 %) have been taken.

The ranks given by the Composite Index(1), i.e., QQCI(1) for the longer time period, are reasonably consistent with ranks based on the h-index. If we compare with the 2015 Leiden ranking we are closer to the size-dependent than the size-independent ranking. Only four Indian Central Universities are in the Leiden list. We note that they are also the top four Universities according to our Composite Index ranks QQCI(1) and QQCI(2), but not in the same order. Considering the time period, the Leiden 2015 ranking needs to be compared with the 2010–2014 ranking. We have included just 25 Universities in our list, as many of the smaller or very recently established universities have very small outputs and variables in the calculation of the Composite Index can be zero. In Table 5 we show URAP ranks for 2010–2011 and 2015. There are 9 Indian institutions in 2010–2011 and 10 in 2015. The URAP ranks in 2010–2011 are generally comparable with those for QQCI(1) in 2010–2014, with one notable exception, the Central University of Kerala, which we rank at 25 for 2010–2014, while URAP ranks it at 8. The top 5 Universities in both time periods based on Composite Index are University of Delhi, Banaras Hindu University, University of Hyderabad, Aligarh Muslim University and Jawaharlal Nehru University. Small changes in rank position are seen between the longer time period and the more recent period.

Conclusions

In summary, the Composite Index takes into account not only aggregate papers and citations, but also the distribution in the top cited papers, and internationally collaborative papers. It has been shown that international collaboration raises impact of organizations (see for example, Basu and Aggarwal 2001). These are the extensive variables representing both quantity and quality, while Average Citations Per Paper (ACPP) and Citations Per Capita (CPC) are the intensive variables representing quality. It would have been desirable to include a financial variable as a further measure of efficiency, but the data are not readily available and often not available on time.

Our main conclusion in this study is that it is possible to design simple Composite Indices that can be used to rank institutions on a national, regional or local basis (say state level), when institutions need to be ranked for purposes where only a local relative position is required, and such a scheme is useful, e.g., when these institutions are not covered by global rankings. The Composite Index includes more factors than the h-index does, such as international collaboration. It incorporates both quantity of research output (papers) and quality (citations). Another quality factor is also introduced through the faculty size in the rank RCPC. Other factors both extensive and intensive (e.g., funding, awards) can also be introduced in a natural extension of the formulation. An index obtained as demonstrated may be useful for funding and policy decisions, but more importantly in our opinion to inform the scholar or bench level scientist where an institution stands in its own context.

It appears from our study that the older, more established universities are still performing at the highest levels, and occupy the highest ranks among the Central Universities. A university which is relatively new but occupies a high rank is the University of Hyderabad.

The QQCI rank lists for Central Universities in the two time periods, a long period (1990–2014) and a short and recent one (2010–2014), are found to be fairly stable. The first four Universities correspond to the ones identified in the Leiden 2015 Ranking. Three out of the four Central Universities that made it to the top Universities in India (as rated by Leiden University) are old, well established Universities: University of Delhi established in 1922, Banaras Hindu University in 1916 and Aligarh Muslim University in 1920. The Composite Index ranks the older and larger Universities, the University of Delhi and Banaras Hindu University, the highest, but also includes the more recent and smaller University of Hyderabad (which has the highest citation rate), indicating that both quality and quantity are incorporated. Other rank positions of the Central Universities can be seen from Table 5.

We conclude that the more recently established Central Universities have still some way to go before they can all be included in ranking exercises. In a study of Italian Universities, Geraci and Esposti (2011) observed that the older universities there have had time to establish themselves and are now considered among the reputed institutions. This is also largely the case in India. Looking at the ranks for the longer and shorter time intervals, we identify the top Universities that have improved their ranks in the recent period (Table 5, columns 7 and 9), namely Aligarh Muslim University (rank 4–3), Jamia Millia Islamia (rank 6–5) and Tezpur University (rank 11–7). Universities that have fallen in rank among the top Central Universities are University of Hyderabad (rank 3–4), Jawaharlal Nehru University (rank 5–6) and Pondicherry University (rank 7–8). Universities that have fallen by at least 3 rank positions are North Eastern Hill University (NEHU) (rank 8–11), Tripura University (rank 14–22), Dr. Harisingh Gour Vishwavidyalaya (HGV) (rank 15–29) and Nagaland University (NLU) (rank 19–28). Other universities that have improved their ranks by at least 3 rank positions are Babasaheb Bhimrao Ambedkar University, Lucknow (BBAU) (rank 16–12), Central University of Tamil Nadu (CUTN) (rank 22–18) and Sikkim University (SKU) (rank 23–20).
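The rank movements quoted above can be tabulated with a few lines of code. The sketch below uses only the rank pairs given in this paragraph (long period first, recent period second); the helper names and the threshold parameter are illustrative assumptions.

```python
# (rank for 1990-2014, rank for 2010-2014), as quoted in the paragraph above.
RANKS = {
    "Aligarh Muslim University": (4, 3),
    "Jamia Millia Islamia": (6, 5),
    "Tezpur University": (11, 7),
    "University of Hyderabad": (3, 4),
    "Jawaharlal Nehru University": (5, 6),
    "Pondicherry University": (7, 8),
    "North Eastern Hill University": (8, 11),
    "Tripura University": (14, 22),
    "Dr. Harisingh Gour Vishwavidyalaya": (15, 29),
    "Nagaland University": (19, 28),
    "Babasaheb Bhimrao Ambedkar University": (16, 12),
    "Central University of Tamil Nadu": (22, 18),
    "Sikkim University": (23, 20),
}


def rank_shifts(ranks):
    """Return {name: long_rank - short_rank}; a positive value is an improvement."""
    return {name: long_rank - short_rank
            for name, (long_rank, short_rank) in ranks.items()}


def movers(ranks, threshold=3):
    """Names that improved or fell by at least `threshold` rank positions."""
    shifts = rank_shifts(ranks)
    improved = sorted(n for n, s in shifts.items() if s >= threshold)
    fallen = sorted(n for n, s in shifts.items() if s <= -threshold)
    return improved, fallen


improved, fallen = movers(RANKS)
```

With a threshold of 3 positions, the improved group contains BBAU, CUTN, Sikkim University and Tezpur University, and the fallen group contains HGV, Nagaland University, NEHU and Tripura University.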

A shortcoming in the study is that field and year based normalization has not been attempted. It was assumed that in our case of the Central Universities, the broad base of disciplines covered by each one of them may lessen the requirement for normalization. This assumption cannot be proved here. It is based on the idea that if Universities were highly specialised in terms of discipline, the need for normalization would be greater than if all were broadly general and covered a large number of disciplines. The assumption needs to be verified in future by a more detailed study. One way to address this issue is to produce subject-based rank lists (as was done earlier by Basu et al. (2000) and is currently being done by URAP and some others) or a fine-grained thematic area-based ranking (Uddin et al. 2016). Such a ranking is useful as it identifies the strengths of the Universities in different disciplines rather than trying to achieve parity through normalization. Another shortcoming is that, due to non-availability of the full data on faculty size, the quality factor CPC could not be properly developed. However, the method has been indicated and can be used if data are available.

In summary, our study identifies institutions with high performance in research by defining a Composite Index which addresses both quality and quantity of research output, as well as output quality as a function of faculty size. The Index is easily generalizable to include weights for different output measures, or even include other outputs (e.g., awards, patents) not included here. We compare ranks obtained using the Composite Index (based on Average Citations Per Paper) with ranks using h-index, and show that the ranks are well correlated (almost identical) in the higher rank range (0–15). (For lower ranks, the Composite Index falls below 0.005 and fails to differentiate sufficiently between the ranks, see Fig. 3). The rank list also correctly identifies the first four Central Universities in the size-dependent Leiden ranking, though the rank order is different. The agreement with URAP, which includes 10 Central Universities, is reasonably good. The ranks were not compared with other global rankings as they include awards and reputation surveys, apart from the fact that very few, if any, Indian or Central Universities appear in their lists.

One problem with publication and citation data is that they are highly skewed. This means that citation statistics can be dominated by a few highly cited papers. This can be avoided by using percentiles or percentile rank classes. In future studies, the authors will consider using percentiles and percentile-based indicators to rank Universities (Bornmann 2013; Bornmann and Marx 2014).
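The effect of skewness, and the way a percentile-based indicator dampens it, can be shown with a small sketch. It computes the share of an institution's papers that fall in the top 10 % of a reference set and compares this with the mean. The function name, the simple handling of ties and all numbers are assumptions made for illustration; this is not the procedure of Bornmann (2013).

```python
def top_share(institution_cites, reference_cites, top_percent=10):
    """Share of an institution's papers at or above the citation threshold
    that marks the top `top_percent` per cent of the reference set."""
    if not institution_cites or not reference_cites:
        raise ValueError("both citation lists must be non-empty")
    ranked = sorted(reference_cites, reverse=True)
    # Number of reference papers in the top class (at least one).
    k = max(1, len(ranked) * top_percent // 100)
    threshold = ranked[k - 1]
    in_top = sum(1 for c in institution_cites if c >= threshold)
    return in_top / len(institution_cites)


def mean(values):
    return sum(values) / len(values)


# Hypothetical reference set: 100 papers with 1..100 citations.
reference = list(range(1, 101))

# Institution A: one exceptional paper; institution B: consistently strong.
inst_a = [500, 2, 1, 1]
inst_b = [95, 92, 93, 10]

mean_a, mean_b = mean(inst_a), mean(inst_b)
share_a = top_share(inst_a, reference)
share_b = top_share(inst_b, reference)
```

Institution A has the higher mean (126 against 72.5) only because of a single paper, whereas the top 10 % share (0.25 against 0.75) reflects the more consistent performance of institution B.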

We conclude that the Composite Index, as it is defined, is able to reproduce accepted rank lists. It incorporates variables like international collaboration, which the h-index does not. As explained, the formulation can be generalized to include additional relevant variables, and weights of the terms adjusted, for flexibility in assigning priority to different factors in the assessment exercise. (The above statement is made with the caveat that ranks obtained with the Composite Index as defined here, with equal weights for the factors, have been validated by comparison with the h-index and Leiden ranking. A similar validation may be necessary once the Index is generalized.) We suggest that the Composite Index can be usefully considered as a multidimensional research performance ranking tool, requiring bibliometric data, that can easily be adopted in national or regional contexts for performance evaluation of research institutions which do not find a place in global and well-known ranking lists.

Notes

No. of papers of an institution in top 1 % of cited papers, or HiCP, where cited papers includes only papers from the current set of institutions.

Journal Citation Reports, Thomson Reuters, https://jcr.incites.thomsonreuters.com/.

References

Academic Ranking of World Universities. (2011). Ranking methodology. Available at: www.arwu.org/ARWUMethodology2007.jsp. Accessed May 11, 2015.

Aguillo, I. F., Ortega, J. L., & Fernández, M. (2008). Webometric ranking of world universities: Introduction, methodology, and future developments. Higher Education in Europe, 33(2–3), 233–244.

Basu, A., & Aggarwal, R. (2001). International collaboration in science in India and its impact on institutional performance. Scientometrics, 52(3), 379–394.

Basu, A., Aggarwal, R., Kumar, D., Kathuria, G., Jha, S., & Rani, T. (2000). Scientific productivity…where do we stand? Second brief report on bibliometric indicators of Indian science, NISTADS Report, January 2000.

Basu, A., & Nagpaul, P. S. (1998). National mapping of science. NISTADS Report # REP-248/98.

Billaut, J. C., Bouyssou, D., & Vincke, P. (2010). Should you believe in the Shanghai ranking? An MCDM view. Scientometrics, 84(1), 237–263.

Bornmann, L. (2013). How to analyze percentile impact data meaningfully in bibliometrics? The statistical analysis of distributions, percentile rank classes, and top-cited papers. Journal of the Association for Information Science and Technology, 64(3), 587–595.

Bornmann, L., Bowman, B. F., Bauer, J., Marx, W., Schier, H., & Palzenberger, M. (2014). Bibliometric standards for evaluating research institutes in the natural sciences. In B. Cronin & C. R. Sugimoto (Eds.), Beyond bibliometrics (pp. 201–224). Massachusetts Institute of Technology. ISBN: 978-0-262-52551-0.

Bornmann, L., & Marx, W. (2014). How to evaluate individual researchers working in the natural and life sciences meaningfully? A proposal of methods based on percentiles of citations. Scientometrics, 98(1), 487–509.

Bornmann, L., Moritz, S., Felix de Moya, A., & Rüdiger, M. (2014b). What is the effect of country-specific characteristics on the research performance of scientific institutions? Using multi-level statistical models to rank and map universities and research-focused institutions worldwide. Journal of Informetrics, 8(3), 581–593.

Bornmann, L., Moritz, S., Felix de Moya, A., & Rüdiger, M. (2015). Ranking and mapping of universities and research-focused institutions worldwide: The third release of excellencemapping.net. Collnet Journal of Scientometrics and Information Management, 9(1), 65–72.

Bradford, S. C. (1934). Sources of information on specific subjects. Engineering, 137, 85–86. Reprinted in 1985 in Journal of Information Science, 10(4), 173–180.

Costas, R., & Bordons, M. (2007). The h-index: Advantages, limitations and its relation with other bibliometric indicators at the micro level, Special Issue on h-index. Journal of Informetrics, 1(3), 193–203.

García, J. A., Rodriguez-Sánchez, R., Fdez-Valdivia, J., Torres-Salinas, D., & Herrera, F. (2012). Ranking of research output of universities on the basis of the multidimensional prestige of influential fields: Spanish universities as a case of study. Scientometrics, 93(3), 1081–1099.

Geraci, M., & Esposti, D. M. (2011). Where do Italian universities stand? An in depth statistical analysis of national and international rankings. Scientometrics, 87(3), 667–681.

Glänzel, W. (2001). National characteristics in international scientific co-authorship relations. Scientometrics, 51(1), 69–115.

Gupta, B. M. (2011). Indian S&T during 15 years (1996–2010): A quantitative assessment using publication data. DESIDOC Journal of Library and Information Technology, 31(5), 359–370.

Hirsch, J. E. (2005). An index to quantify an individual’s scientific research output. PNAS, 102(46), 16569–16572.

Huang, M.-H. (2012). Exploring the h-index at the institutional level: A practical application in world university rankings. Online Information Review, 36(4), 534–547.

Huang, M. H., & Chi, P. S. (2010). A comparative analysis of the application of h-index, g-index, and a-index in institutional-level research. Journal of Library and Information Studies, 8(2), 1–10.

Huang, M.-H., & Lin, C. S. (2011). Counting methods and university ranking by h-index, ASIST 2011, October 9–13, 2011, New Orleans, LA, USA.

Jeremic, V., Bulajic, M., Martic, M., & Radojicic, Z. (2011). A fresh approach to evaluating the academic ranking of world universities. Scientometrics, 87(3), 587–596.

Lazaridis, T. (2010). Ranking university departments using the mean h-index. Scientometrics, 82(2), 211–216.

Leydesdorff, L., & Opthof, T. (2010). Normalization, CWTS indicators, and the Leiden rankings: Differences in citation behavior at the level of fields. arXiv preprint arXiv:1003.3977.

Liu, N. C., & Cheng, Y. (2005). The Academic Ranking of World Universities. Higher Education in Europe, 30(2), 127–136.

Liu, N. C., Cheng, Y., & Liu, L. (2005). Academic ranking of world universities using scientometrics: A comment to the Fatal Attraction. Scientometrics, 64(1), 101–109.

Liu, N. C., & Liu, L. (2005). University rankings in China. Higher Education in Europe, 30(2), 217–227.

Matthews, A. P. (2012). South African universities in world rankings. Scientometrics, 92(3), 675–695.

Molinari, A., & Molinari, J. (2008). Mathematical aspects of a new criterion for ranking scientific institutions based on the h-index. Scientometrics, 75(2), 339–356.

National Institutional Ranking Framework, NIRF. (2015). A methodology for ranking of universities and colleges in India. Ministry of Human Resource Development, MHRD https://www.nirfindia.org/Docs/Ranking%20Framework%20for%20Universities%20and%20Colleges.pdf. Accessed January 2016.

Nishy, P., Panwar, Y., Prasad, S., Mandal, G., & Prathap, G. (2012). An impact-citations-exergy (iCX) trajectory analysis of leading research institutions in India. Scientometrics, 91(1), 245–251.

Singh, V. K., Uddin, A., & Pinto, D. (2015). Computer science research: The Top 100 institutions in India and in the world. Scientometrics, 104(2), 529–553.

Torres Salinas, D., Moreno-Torres, J. G., Delgado-Lopez-Cozar, E., & Herrera, F. (2011). A methodology for institution-field ranking based on a bi-dimensional analysis. Scientometrics, 88(3), 771–786.

Uddin, A., Bhoosreddy, J., Tiwari, M., & Singh, V. K. (2016). A sciento-text framework to characterize research strength of institutions at fine-grained thematic area level. Scientometrics, 106(3), 1135–1150.

Uddin, A., & Singh, V. K. (2015). A Quantity–Quality composite ranking of Indian Institutions in CS Research. IETE Technical Review, 32(4), 273–283.

UGC Note on Vacant Positions. (2014). http://www.ugc.ac.in/pdfnews/0342004_vacant-position-CU-as-on-01-01-2014.pdf. Accessed on June 18, 2014.

Van Raan, A. F. J. (2005). Fatal attraction: Conceptual and methodological problems in the ranking of universities by bibliometric methods. Scientometrics, 62(1), 133–143.

Vinkler, P. (2006). Composite scientometric indicators for evaluating publications of research institutes. Scientometrics, 68(3), 629–642.

Waltman, L., Calero-Medina, C., Kosten, J., Noyons, E. C. M., Tijssen, R. J. W., Van Eck, N. J., et al. (2012). The Leiden ranking 2011/2012: Data collection, indicators and interpretation. Journal of the American Society for Information Science and Technology, 63(12), 2419–2432.

Waltman, L., & Van Eck, N. J. (2012). A new methodology for constructing a publication level classification system of Science. Journal of the American Society for Information Science and Technology, 63(12), 2378–2392.

Waltman, L., Van Eck, N. J., Leeuwen, T. N., Visser, M. S., & Van Raan, A. F. J. (2011). Towards a new crown indicator: An empirical analysis. Scientometrics, 87(3), 467–481.

Acknowledgments

This work is supported by research grants from Department of Science and Technology, Government of India (Grant: INT/MEXICO/P-13/2012) and University Grants Commission of India [Grant: F. No. 41-624/2012(SR)].

Ethics declarations

Conflict of interest

None.

Cite this article

Basu, A., Banshal, S.K., Singhal, K. et al. Designing a Composite Index for research performance evaluation at the national or regional level: ranking Central Universities in India. Scientometrics 107, 1171–1193 (2016). https://doi.org/10.1007/s11192-016-1935-0
