Abstract

One interesting phenomenon that emerges from the typical structure of social networks is the friendship paradox. It states that your friends have on average more friends than you do. Recent efforts have explored variations of it, with numerous implications for the dynamics of social networks. However, the friendship paradox and its variations consider only the topological structure of the networks and neglect many other characteristics that are correlated with node degree. In this article, we take the case of scientific collaborations to investigate whether a similar paradox also arises in terms of a researcher’s scientific productivity as measured by her H-index. The H-index is a widely used metric in academia to capture both the quality and the quantity of a researcher’s scientific output. It is likely that a researcher may use her coauthors’ H-indexes as a way to infer whether her own H-index is adequate in her research area. Nevertheless, in this article, we show that the average H-index of a researcher’s coauthors is usually higher than her own H-index. We present empirical evidence of this paradox and discuss some of its potential consequences.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

One interesting phenomenon that emerges from the typical structure of social networks is the friendship paradox (Feld 1991). It states that, on average, your friends have more friends than you do. This paradox basically exists because of the discrepancy on node degree values in typical social networks (Barabási and Albert 1999), in which individuals with a high number of friends are over-represented when averaging over them (Hodas et al. 2014). As a consequence, the friendship paradox can dramatically skew an individual’s local observation, making such an observation appear far more common than it is in reality (Centola 2010; Salganik et al. 2006).

In this context, identifying variations of this paradox in different ecosystems has been the topic of some important recent research efforts (Eom and Jo 2014; Hodas et al. 2013; Lerman et al. 2015). For instance, two new paradoxes have been verified on Twitter (Hodas et al. 2013): (1) the virality paradox that states that your friends receive more viral content than you do, and (2) the activity paradox that states that your friends post more frequently than you do. More recently, the friendship paradox was generalized to any complex network (Eom and Jo 2014) and its origins are highly correlated with the skewed distribution of node degree (i.e., the number of network friends) (Hodas et al. 2014). In a nutshell, these efforts suggest that any attribute that is highly correlated with node degree is likely to produce this kind of paradox (Eom and Jo 2014). Thus, in this article we take the case of scientific collaborations to investigate whether a similar paradox also arises in terms of a researcher’s scientific productivity as measured by her H-index.

The H-index (Hirsch 2005) is a metric originally proposed to measure a researcher’s scientific output. Its calculation is quite simple as it is based on the researcher’s set of most cited publications and the number of citations they have received. More specifically, a researcher has an H-index h if she has at least h publications that have received at least h citations. Thus, if a researcher has at least ten publications with at least ten citations, her H-index is 10.

Like any metric that attempts to summarize a complex and subjective evaluation in a single number, the H-index has its limitations, including being biased towards the researchers’ scientific lifetime, not accounting for the number of coauthors in the publications and ignoring the distinct citation patterns across different areas (Bornmann and Daniel 2005). Nevertheless, the H-index became popular as it provides a notion of both quality and quantity of a researcher’s scientific output in a simple and easy-to-compute metric. As a consequence, researchers are often tempted to evaluate themselves based on the H-index. Systems like Google ScholarFootnote 1 and ArnetMinerFootnote 2 help researchers track their publication impact and coauthors, as well as to maintain their profiles, where the H-index is clearly stamped. Thus, it is natural to assume that researchers may use their coauthors’ H-indexes as a way to estimate whether their own H-index is adequate in their respective research areas or within a department or university.

Despite recent efforts to generalize the friendship paradox (Eom and Jo 2014), it is still unclear whether a similar paradox actually happens when we consider the H-index in a coauthorship network. However, we have been able to show that the average H-index of a researcher’s coauthors is usually higher than her own H-index.

Next, we briefly discuss how we have estimated the H-index for researchers from distinct Computer Science research communities, and then provide empirical results that corroborates the existence of the H-index paradox.

Estimating H-index

In order to provide evidence of the H-index paradox, we need to be able to (1) identify the coauthors of a large set of researchers and (2) estimate the H-index of these researchers as well as of their respective coauthors.

We focus on constructing the coauthorship network of Computer Science researchers from different areas. To do that, we gathered data from DBLP,Footnote 3 as it offers its entire database in XML format for download. We gathered this data for those researchers who published in the flagship conferences of ten major ACM SIGs (Special Interest Groups):Footnote 4 SIGCHI, SIGCOMM, SIGCSE, SIGDOC, SIGGRAPH, SIGIR, SIGKDD, SIGMETRICS, SIGMOD and SIGPLAN.

There are several tools that measure the H-index of researchers, of which Google Scholar is today the most prominent one. However, in order to have a profile in this system, a researcher needs to sign up and explicitly create it. In a preliminary collection of part of the profiles of the DBLP authors, we found that less than 30 % of these authors had a profile at Google Scholar (Alves et al. 2013). Thus, this strategy would largely reduce our dataset.

To overcome this limitation, we used data from the SHINE (Simple HINdex Estimation) projectFootnote 5 to estimate the researchers’ H-index. SHINE provides a website that shows the H-index of almost 1800 Computer Science conferences. It was created based on a large scale crawl of Google Scholar. Its strategy consisted of searching for the title of all papers published in such conferences, thus effectively estimating their H-index based on the citations computed by Google Scholar. Although SHINE only allows searching for the H-index of conferences, their developers kindly allowed us to use its dataset to infer the H-index of researchers based on the citations received by their conference papers.

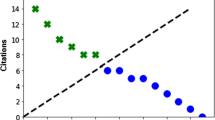

However, besides covering only conferences, SHINE does not track all existing conferences in Computer Science, which might cause the researchers’ H-index to be underestimated when computed with this data. To investigate this issue, we compared the H-index of researchers with a profile on Google Scholar with their estimated H-index based on the SHINE data. For this, we randomly selected ten researchers for each of the ACM SIG’s flagship conferences and extracted their H-indexes from their respective Google Scholar profiles. In comparison with the H-index we estimated from SHINE, the Google Scholar values are, on average, 50 % higher. Figure 1 shows the scatterplot for the two H-index measures. We can note, however, that although the SHINE H-index is lower, the two measures are highly correlated. The Pearson’s correlation coefficient is 0.85, indicating that the H-index estimations are proportional in both systems.

Table 1 summarizes the collected data, including the SIG, the conference acronym, the period considered (some conferences had their period reduced to avoid a hiatus in the data), the conference’s SHINE H-index and the total number of authors, publications and editions. This dataset is useful to our purposes, since it allows us to investigate the H-index paradox on real Computer Science communities, in which researchers might tend to compare themselves with their peers.

Comparing the H-index of a researcher with her coauthors’

Having estimated the H-index of each researcher, we can compare it with her coauthors’. Figure 2 shows the fraction of authors with an H-index that is lower than the average of their coauthors for the ten conferences we have considered. We note that even focusing on authors that have published in flagship conferences of ACM SIGs, the fraction of authors that are below average is quite high for all research communities analyzed, varying from 69 % (POPL) to 81 % (SIGDOC). When we look at the percentage of authors with at least one coauthor with a higher H-index than theirs, the numbers are higher than 90 % for most of the conferences.

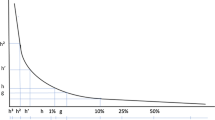

These results confirm the H-index paradox since one’s coauthors in a research community have, on average, a higher H-index than hers. The reasons behind the H-index paradox might be explained by the high correlation between node degree and H-index in a research community. Usually, high degree nodes tend to be senior researchers that not only advise a large number of students but also establish more collaborations, often with different groups along their career (Alves et al. 2013). To further investigate this issue, Fig. 3 shows the distribution of the number of authors as a function of the H-index. It clearly resembles a long tail distribution, thus suggesting that some authors disproportionally contribute to the average H-index. This disproportion on the average H-index might be even sharper with the typical structural properties of coauthorship networks, which are similar to many social networks (Huang et al. 2008; Mislove et al. 2007), i.e., they have a long tail degree distribution, in which highly connected authors create bridges across multiple highly connected components, leading to the properties of high clustering coefficient and short diameter. Finally, we measured the Pearson’s coefficient correlation between a researcher’s H-index and her degree. Such correlation is 0.36 a value that, although not very high, is positive, thus suggesting that a small number of researchers simultaneously have a high H-index and a large number of connections in the network.

Conclusions

In this article we have analyzed a variation of the well-known friendship paradox. By analyzing the average H-index of a researcher’s coauthors for different Computer Science research communities, we show that the H-index paradox arises because the H-index is positively correlated with node degree. One of the implications of the friendship paradox is the fact that it leads to systematic biases in our perceptions. Thus, similarly, the H-index paradox induces researchers to feel that they rank below average in comparison with their coauthors. This phenomenon is an instantiation of a sensation that occurs in different scenarios and is popularly captured by an expression that is common to many languages and cultures: the grass is always greener on the other side of the fence (Giansante et al. 2007).

References

Alves, B. L., Benevenuto, F., & Laender, A. H. F. (2013). The role of research leaders on the evolution of scientific communities. In Proceedings of the 22nd international conference on world wide web (companion volume) (pp. 649–656).

Barabási, A. L., & Albert, R. (1999). Emergence of scaling in random networks. Science, 286(5439), 509–512.

Bornmann, L., & Daniel, H. D. (2005). Does the h-index for ranking of scientists really work? Scientometrics, 65(3), 391–392.

Centola, D. (2010). The spread of behavior in an online social network experiment. Science, 329(5996), 1194–1197.

Eom, Y. H., & Jo, H. H. (2014). Generalized friendship paradox in complex networks: The case of scientific collaboration. Scientific Reports, 4. http://www.nature.com/articles/srep04603.

Feld, S. L. (1991). Why your friends have more friends than you do. American Journal of Sociology, 96(6), 1464–1477.

Giansante, S., Kirman, A., Markose, S., & Pin, P. (2007). The grass is always greener on the other side of the fence: The effect of misperceived signalling in a network formation process. In A. Consiglio (Ed.), Artificial markets modeling: Methods and applications. Lecture notes in economics and mathematical systems (Vol. 599, pp. 223–234). Berlin: Springer.

Hirsch, J. E. (2005). An index to quantify an individual’s scientific research output. Proceedings of the National Academy of Sciences of the United States of America, 102(46), 16569–16572.

Hodas, N. O., Kooti, F., & Lerman, K. (2013). Friendship paradox redux: Your friends are more interesting than you. In Proceedings of the international conference on web and social media (pp. 8–10).

Hodas, N. O., Kooti, F., & Lerman, K. (2014). Network weirdness: Exploring the origins of network paradoxes. In Proceedings of the international conference on web and social media (pp. 8–10).

Huang, J., Zhuang, Z., Li, J., & Giles, C. L. (2008). Collaboration over time: Characterizing and modeling network evolution. In Proceedings of the 2008 international conference on web search and data mining (pp. 107–116).

Lerman, K., Yan, X., & Wu, X. Z. (2015). The majority illusion in social networks. arXiv:1506.03022.

Mislove, A., Marcon, M., Gummadi, K. P., Druschel, P., & Bhattacharjee, B. (2007). Measurement and analysis of online social networks. In Proceedings of the 7th ACM SIGCOMM conference on internet measurement (pp. 29–42).

Salganik, M. J., Dodds, P. S., & Watts, D. J. (2006). Experimental study of inequality and unpredictability in an artificial cultural market. Science, 311(5762), 854–856.

Acknowledgments

This research was partially funded by InWeb—The Brazilian National Institute of Science and Technology for the Web (MCT/CNPq/FAPEMIG Grant 573871/2008-6), and by the authors’ individual grants from CAPES, CNPq and FAPEMIG.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Benevenuto, F., Laender, A.H.F. & Alves, B.L. The H-index paradox: your coauthors have a higher H-index than you do. Scientometrics 106, 469–474 (2016). https://doi.org/10.1007/s11192-015-1776-2

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11192-015-1776-2