Abstract

Within the past decade, there has been a growing number of studies examining undermatch—when students apply to or enroll in institutions less selective than their academic qualifications permit. To estimate undermatch, researchers must define institutions’ selectivity levels and determine which students are eligible to gain admission to these selectivity levels. Researchers examining undermatch have used different approaches to defining institutional selectivity and student qualifications. This, in turn, has produced a wide range of undermatch rates, and at times, conflicting or inconclusive findings for underrepresented students. As the body of literature on undermatch expands, the tradeoffs and limitations in estimation approaches must be better understood. Using a nationally representative sample of students (ELS:2002), this study empirically tested these differences in undermatch estimations using two different definitions of institutional selectivity and three distinct approaches of calculating student qualifications on the (1) distribution of students across qualification levels; (2) undermatch rates; and (3) likelihood of undermatch. Findings show that depending on the approach taken, the distribution of student qualifications, undermatch rates, and odds ratios in subsequent analyses can vary greatly. For underrepresented students, the difference in estimation methods can change their representation in various qualification levels, the gaps in undermatch rates, and the significance of results in their likelihood of undermatching. Implications for future undermatch research are discussed.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Research has shown many students do not always apply to or enroll in institutions they are qualified to attend, which constitutes undermatching (Bowen et al. 2009; Hoxby and Avery 2012; Roderick et al. 2011; Smith et al. 2012). In particular, underrepresented students (i.e., Black, Latino, low-income and potential first-generation college students) were found to be more likely to undermatch to less selective institutions than their non-underrepresented peers (Bowen et al. 2009; Hoxby and Avery 2012; Roderick et al. 2011; Smith et al. 2012). As one potential consequence of enrolling in less selective institutions, students who undermatch take longer to complete their degrees and are less likely to graduate from college than students who do not undermatch (Bowen et al. 2009; Wyner et al. 2009). These students therefore forfeit the personal benefits associated with getting a baccalaureate degree (Baum et al. 2013). From the societal perspective, failing to broaden access for students at matched institutions is an inefficient way to cultivate the nation’s human capital.

In many studies, postsecondary outcomes (e.g., four-year enrollment the fall after senior year or first-year GPA) are easily defined and observed. However, undermatch is an estimate of the highest level of selectivity for which a student is likely to gain admission compared to where a student applies or enrolls. To determine whether students undermatch, researchers must group colleges in some hierarchical fashion (e.g., Barron’s competitiveness index) and subsequently estimate a threshold for admission to each group based on a combination of college admissions predictors—typically academic ability indicators such as SAT scores, GPA, and advanced coursework (Bowen et al. 2009; Roderick et al. 2011). The actual application and/or enrollment decisions of students are then examined for a match on institutional selectivity based on estimated eligibility using these admissions rubrics.

However, there is little consistency or consensus in the undermatch literature around definitions of selectivity and estimations of student qualifications. Under the banner of undermatch, studies have defined institutional selectivity and their subsequent eligibility requirements in various ways, used datasets representing various populations, and have produced a range of undermatch rates—from 62 % in Chicago (Roderick et al. 2008) to 41 % of students nationally (Smith et al. 2012). There are also discrepancies across studies in the student characteristics that remain statistically significant in multivariate regression analyses. For example, one study that used a national sample of students found Black students were less likely to undermatch than White students (Smith et al. 2012), yet a study using data from Chicago Public Schools (CPS) found there to be no difference between Black and White student’s likelihood to undermatch net of other variables (Roderick et al. 2011). It is unknown to what extent the different, and sometimes conflicting, findings are associated with the differences in study samples or how undermatch is defined.

There is a lack of understanding in how various estimation approaches of undermatch may produce different findings in general, and for underrepresented students in particular. While one study examined the impact of alternative benchmarks for their chosen method of defining student qualifications on undermatch rates (Smith et al. 2012) and a recent essay challenged the ability to accurately categorize students by institutional selectivity (Bastedo and Flaster 2014), no study has empirically tested the implications of the variation in institutional selectivity definitions or methods for determining student qualifications on undermatch rates. However, previous research suggests that the even seemingly minor methodological differences that researchers employ can produce large differences in results (Wells et al. 2011).

The purpose of this study was to examine the implications of the various ways researchers have approached estimating undermatch, and what it could mean for underrepresented students. Findings from this study shed light on the various implications for the estimation of undermatch and prevailing definitions of such terms as selective institutions and academically qualified. Moreover, findings from this study are particularly salient as research intersects with practice and various stakeholders.

Review of the Literature

In order to review the existing literature on undermatch and its various approaches, I first summarize the undermatch rates reported by previous studies and compare results. I then walk through the ways in which previous researchers have defined institutional selectivity and estimated the student qualifications necessary to be admitted. I subsequently close this review with a discussion on the challenges of estimating undermatch.

Undermatch Rates

Previous studies have found rather high rates of undermatch across various student populations (Roderick et al. 2011; Smith et al. 2012). The Chicago Consortium found an overall undermatch rate of 62 % of its 2005 CPS graduates (Roderick et al. 2011). In a national sample of 2004 high school graduates, 41 % of students across all levels of academic ability undermatched (Smith et al. 2012). While Smith and his colleague’s undermatch estimates were lower than Roderick and her colleague’s rates, it is unclear whether the differences in undermatch rates are due to the samples (CPS students versus a national population of students) or the ways in which undermatch was defined.

Other undermatch studies focused specifically on high-achieving students (Bowen et al. 2009; Hoxby and Avery 2012; Roderick et al. 2009). A 2009 study of students enrolled in Advanced Placement (AP) and International Baccalaureate (IB) programs revealed that although CPS’s expansion of these programs led to two-thirds of their advanced program graduates possessing the ACT scores and GPA to be in contention for selective admissions, almost two-thirds (63 %) of these graduates did not enroll in selective institutions (Roderick et al. 2009). State-level analyses by Bowen et al. (2009) concluded that up to 40 % of the most academically qualified students in North Carolina did not enroll in selective institutions. In their study of high-achieving low-income college entrance exam-takers, Hoxby and Avery (2012) found that 53 % of students who scored in the 90th percentile on the SAT or ACT and were in the bottom income quartile were not submitting their exam scores to selective institutions for which they were within the SAT and GPA range to qualify. It is unclear whether high-achievers in Chicago fared worse than those in North Carolina, or even relative to all test takers in the nation. The ability to draw meaningful comparisons from the variations in the undermatch rates across studies is impossible, given the differences in samples and methodological approaches (discussed in the next section).

The likelihood of undermatch also varied by demographic characteristics (Bowen et al. 2009; Roderick et al. 2006, 2008; Smith et al. 2012). Researchers found Latino students undermatched at higher rates than their peers—a relationship that held even after considering multivariate controls of demographic, academic, and high school characteristics (Roderick et al. 2008; Smith et al. 2012). This pattern was not found for Black students. As explained by Smith and colleagues, Black students were less likely to undermatch than White students because fewer Black students are qualified to attend four-year institutions, “and so mechanically, they have less of an opportunity to undermatch” (Smith et al. 2012, p. 13).

Similarly, students with lower family income and whose parents have lower levels of education were also more likely to undermatch when compared to those with higher family income and whose parents have higher levels of education, respectively (Bowen et al. 2009; Smith et al. 2012). Bowen et al. (2009) noted that only one-third of highly qualified students who were first-generation college-goers attended a selective institution. When controlling for measures of demographic, academic, and high school characteristics the likelihood of undermatch by SES, parental education and family income remained (Bowen et al. 2009; Smith et al. 2012). However, one study found measures of SES and income (measured as mother’s education as well as proxies of neighborhood poverty and educational attainment) had no predictive power on the propensity to undermatch when controlling for other student and school-level variables (Roderick et al. 2011). This discrepancy in findings could be a result of differences in population, as poverty and SES measures may have distinct relationships in Chicago or in differences in the statistical approaches to estimating undermatch.

In trying to understand the literature on undermatch, it is impossible to reconcile the differences across studies due to different samples. Moreover, researchers employed different regression models to account for the observed variations in undermatch. Adding to the challenge, researchers have all used distinct ways of estimating undermatch, as detailed in the following section.

Estimating Undermatch

In order to estimate undermatch, researchers first grouped institutions by selectivity, then estimated the likelihood of students being admitted or qualified to attend these selectivity groupings. They subsequently compared the selectivity of institutions where students applied and enrolled to the selectivity of institutions where students were deemed qualified to attend. If students applied to or enrolled in institutions that were less selective than the institutional selectivity they were qualified to attend, then they were identified as having undermatched. While this approach may seem relatively straightforward, previous studies have varied in the ways they have (a) defined selectivity and (b) determined student qualifications.

Defining Selectivity

Many studies refer to the Barron’s Admissions Competitiveness Index as a common definition of selectivity (Bowen et al. 2009; Roderick et al. 2006; Smith et al. 2012), which includes seven levels of selectivity for four-year postsecondary institutions ranging from Most Competitive to Non-Competitive, as the first column in Table 1 illustrates. Barron’s compiled these ratings using SAT/ACT scores of admitted students, the GPA and class rank required for admission and the percentage of applicants accepted (Barron’s Educational Series Inc. 2004).

Some researchers have then collapsed the categories to suit their respective samples (Bowen et al. 2009; Roderick et al. 2011; Smith et al. 2012). Roderick et al. (2011) combined across Barron’s seven categories to produce five categories and added two-year institutions (Two Year, Nonselective, Somewhat Selective, Selective, and Very Selective). Smith et al. (2012) also used a similar grouping of five categories to determine match. In Bowen et al. (2009) study, the authors benchmarked the highest selectivity level against both the University of North Carolina-Chapel Hill (ranked by Barron’s as Most Competitive) and the less selective North Carolina State University (two selectivity levels below at Very Competitive).

Others researchers have taken a different approach to organizing institutions that did not include Barron’s or solely relying on exam scores. These researchers have benchmarked selectivity based on the median SAT or ACT scores of enrolled students at the institution (Dillon and Smith 2013; Hoxby and Avery 2012; Hoxby and Turner 2013). In their study of mismatch, Dillon and Smith (2013) crafted their own index of institutional quality using mean SAT/ACT, percent of applicants who were declined admission, the average salary of instructional faculty, faculty-student ratio, open-access status, and whether the institution reported SAT/ACT scores of incoming freshmen. Their study also included two additional analyses that measured college quality as SAT interquartile range as well as average SAT scores. In two related studies, Hoxby and Avery (2012) as well as Hoxby and Turner (2013) used the median SAT score to determine whether high-achieving low-income students were matching their applications and enrollments to suitable colleges.

The various ways researchers have defined institutional selectivity influences the thresholds for identifying qualified students and the subsequent rate of undermatch found in the sample. For those studies that used selectivity categories, too few selectivity categories would oversimplify the choice process and will suppress undermatch rates, whereas too many categories might result in narrow qualifications that might therefore overestimate undermatch rates. Given their propensity to undermatch relative to their peers, results for underrepresented student populations may be particularly sensitive to these thresholds.

Defining Academic Qualifications

The variation in qualifications has its own set of implications—if qualifications for admission into these selectivity levels are too narrow one will underestimate the prevalence of undermatch, but lax definitions might result in higher undermatch rates. Researchers have estimated qualifications for admission in several different ways. Using a combination of ACT scores, GPA, and a measure of course rigor (participation in IB or AP), the Chicago Consortium (2006) developed a rubric for identifying student eligibility for their four levels of institutional selectivity. These cutoffs were assigned by creating a grid of various combinations of GPAs and composite ACT scores similar to Fig. 1. In one of their approaches, the researchers assigned a selectivity level to every square on the grid by taking the mode of the selectivity level in which students enrolled for each combination of GPA and ACT scores. In other words, they identified the selectivity level in which students with each combination of GPA and ACT scores most frequently enrolled.

Establishing a similar, yet more restrictive rubric of exam scores and GPA to determine student eligibility, Bowen et al. (2009) identified students as academically qualified if their combination of SAT and GPA scores yielded 90 % acceptance rate at the most selective institutions. This was accomplished by creating a grid of GPA and SAT scores and calculating the acceptance rate for every selectivity level for every square on the grid. The authors assigned a selectivity level to each square on the grid by identifying the most selective level that had greater than a 90 % acceptance rate. The 90 % threshold was not empirically determined, but reasoned by the authors as simply a very good chance of gaining admission.

Smith et al. (2012) had a different approach. They computed the individual probabilities of students gaining admission to each level of selectivity using admissions data from a nationally representative dataset. Using a series of logistic regressions, they were able to create probability scores for each student for every selectivity level using students’ academic characteristics (weighted GPA, ACT/SAT scores, and whether students participated in Advanced Placement or International Baccalaureate). This method had the advantage of producing estimates that accounted for several characteristics rather than GPA and college entrance exam scores alone. Because this approach tailored each probability of getting into a selectivity category to every student, there were no across-the-board benchmarks (i.e. GPA or SAT cutoffs) for this method. However, the precision of these estimates is based on the researchers’ choice of admission predictors.

Others have used exam scores as a measure of qualifications (Dillon and Smith 2013; Hoxby and Avery 2012; Hoxby and Turner 2013). Dillon and Smith (2013) used the students’ scores on the Armed Forces Vocational Aptitude Battery (ASVAB). Students’ percentile in the distribution of scores determined their qualifications. The authors also used SAT/ACT scores relative to institutions’ average scores to determine qualifications, similar to other studies (Hoxby and Avery 2012; Hoxby and Turner 2013).

The variation in the methods used to estimate academic qualifications has important implications for underrepresented students. The undermatch rates for these populations are particularly sensitive to the qualification benchmarks set by researchers when considering underrepresented students are less likely to be in the most rigorous academic tracks or take the most rigorous courses (Adelman 2006; Bell et al. 2009; College Board 2012; Engberg and Wolniak 2010; Oakes1992), as well as demonstrate lower average levels of academic achievement than their non-underrepresented counterparts (National Center for Education Statistics 2010). Therefore, the use of GPA and ACT/SAT score benchmarks may adversely impact Black, Latino, low-income, and potential first-generation college students and may not reflect other considerations given in the admissions process, particularly at selective institutions.

Challenges of Estimating Undermatch

Regional differences embedded in the student choice process are part of the challenge in trying to understand undermatch. Because most of the existing studies have based student qualifications for admissions on observed application and/or enrollment behaviors and students tend to apply and enroll at institutions within a region, definitions of qualification benchmarks will vary with the regional landscape of post-secondary institutions. The qualification benchmarks that Roderick et al. (2006, 2008, 2009, 2011) and Bowen et al. (2009) used are linked to the higher education landscapes near and around Chicago and North Carolina, respectively. In the Chicago studies, the authors noted that most of the students enrolled at institutions in the studies’ Very Selective category were enrolled at University of Illinois, Urbana-Champaign. As Bowen et al. (2009) study focused on public institutions in North Carolina, the authors identified the two most selective public institutions in the state (University of North Carolina—Chapel Hill and North Carolina State University) for the most selective benchmark. Therefore, findings were highly relevant for (yet limited to) those particular populations and regions. However, should undermatch, particularly at the most selective institutions, be defined in such a localized way? Selective college recruitment and enrollment crosses state boundaries, with high-achieving students most willing to go the farthest (Mattern and Wyatt 2009). Therefore, a wider set of institutions and a more granular definition of selectivity should be considered, especially for high-achieving students.

Second, many of the current studies estimated undermatch based on what is observed and not necessarily what is possible. In all but one study (Bowen et al. 2009), students who did not have standardized test scores were excluded from the sample. In states or districts where college entrance exams are not taken by all students, this listwise deletion approach threatened external validity as underrepresented groups participate in college entrance exam-taking less than their nonunderrepresented counterparts. Therefore, imputing exam scores increases the number of students for whom qualifications can be estimated. The inclusion of these students allows for a more robust understanding of academic qualification and undermatch for students who may have opted out of taking college entrance exams (e.g., a student enrolling in a community college that does not require SAT or ACT scores) yet completed high school and indicated a desire to continue their education.

Finally, unlike other postsecondary outcomes of interest, undermatch requires a lot of information about where students applied, were accepted, and enrolled that is not typically collected at the student level. Most high schools do not track postsecondary application, admission, or enrollment information. For some districts or states, using postsecondary enrollment information through the National Student Clearinghouse might be the best approximation with regard to observing student behavior. These districts would potentially follow a method similar to Roderick et al. (2006) in using postsecondary enrollment data to create benchmarks. However, we do not know how close this next-best approach approximates qualifications and undermatch.

This study provides several conceptual and methodological contributions to the study of undermatch by using a large nationally representative dataset that addresses many of the aforementioned challenges. Data from the educational longitudinal study of 2002 (ELS:2002) allows for comparisons across definitions of selectivity and approaches of calculating qualifications, leveraging the breadth of selective institutions across the country for high-achieving students. In addition, the imputation of missing data allows for the estimation of undermatch for more students, and in particular students from underrepresented backgrounds.

Methods

The purpose of this study is to empirically test various definitions of selectivity and approaches to estimating student qualifications used to calculate undermatch rates by answering the following research questions:

-

(1)

How do different definitions of selectivity affect estimations of student eligibility by institutional selectivity? How does this affect the estimated eligibility of Black, Latino, low-income and potential first-generation college students?

-

(2)

How do rates of student undermatch differ by academic qualification benchmarks by institutional selectivity? How do rates vary as a result among Black, Latino, low-income and potential first-generation college students?

-

(3)

With the various ways in which undermatch is defined, how does the likelihood of undermatch vary for Black, Latino, low-income and potential first-generation college students?

Data and Sample

I examined the differences and possible limitations among a variety of undermatch estimation methods using a single national dataset, ELS:2002, a nationally representative longitudinal survey administered by the National Center for Education Statistics (NCES). NCES surveyed students as sophomores in 2002, seniors in 2004, and 2 years after they were scheduled to complete high school in 2006. NCES also administered surveys to participants’ parents, teachers, and other school personnel. Using two-level stratified sampling, NCES stratified schools by geography and urbanicity, with an oversampling of private schools; 750 schools were sampled in total, including public, Catholic and other private schools. At the second stage of the sampling, NCES sampled students within the selected schools, with an oversampling of Asians. The base year survey included 15,400 students, 13,500 parents, and 7,100 teachers (Ingels et al. 2007).

Following the approaches of Roderick et al. (2011) and Smith et al. (2012), the sample excludes students who are not traditionally college-bound (i.e., students who are enrolled in special education, or do not have on-time high school completion). In addition, the analytic sample is restricted to students who are present in all three waves (2002, 2004, 2006) of the data collection; seniors who indicated an intention to go to college, similar to previous work from Roderick et al. (2011); and students who did not attend vocational or alternative high schools. Students who did not enroll in college remain in the sample, as not enrolling in college is considered a form of undermatch (Smith et al. 2012). The resultant analytic sample includes 8,960 students. To arrive at a representative analytic sample of 2,029,340 high school seniors in 2004, I used the F2F1WT throughout the analyses provided by NCES.

Table 2 shows that while there was moderate loss of representation of low-income and first-generation students in the analytic sample, there was only a small loss in the representation of Black and Latino students. There was also a gain in female student representation. As expected, restricting the sample to college-bound students increased the means of the academic indicators for the sample.

There are many benefits to using ELS:2002 data. First, a national dataset allows one to consider four-year institutions nationwide and therefore establish a nuanced categorization of selectivity using the full range of Barron’s Admissions Competitiveness Index. Also, a nationally representative sample is generalizable to the population of students in the country on a college-bound path who also indicated an intention to enroll in college. Moreover, the dataset includes application and admission data that allow for predicting college admission. Finally, the analytic power of such a large dataset makes the analyses of subpopulations possible; a noted limitation in the Bowen et al. (2009) study that lacked adequate numbers of Latinos in the dataset.

Defining Undermatch

The outcome of interest in this study is the likelihood of undermatch at the time of enrollment based on various definitions of undermatch. In order to identify undermatch, I first established admission criteria for each level of selectivity, determined where each student is most likely to gain admission based on these criteria, and then determined the highest selectivity of the institutions to which they applied or enrolled. This study defined selectivity in two ways (see Table 3) and utilized three different methods to identify student qualifications. I then compared the selectivity level of the student’s enrollment (including no college) to the student’s qualification level. If the students’ qualifications level exceeds the selectivity of their enrollment, they were identified as having undermatched.

Defining Institutional Selectivity Categories

In this study I used the following two approaches to define selectivity:

Barron’s Categories

For the first method of defining institutional selectivity, I utilized Barron’s competitiveness categories, excluding specialized institutions (e.g., culinary or art institutions), and added two-year institutions. This resulted in six levels of institutional selectivity, Most Selective, Highly Selective, Very Selective, Selective, Nonselective, and Two-Year institutions (Barron’s Educational Services 2004).

Barron’s Categories Collapsed

Previous studies have collapsed Barron’s categories into four categories and added two-year institutions (Roderick et al. 2008; Smith et al. 2012). For the second definition of institutional selectivity I combined the two most selective categories, Most Selective and Highly Selective, into one. The five resulting categories are: Most Selective, Very Selective, Selective, Nonselective, and Two-Year institutions.

Defining Academic Qualifications

I used the following three alternative approaches in this study to estimate academic qualifications (see Fig. 1):

Enrollment Rate Method

Similar to Roderick et al. (2006) approach to defining student qualifications, the enrollment rate method employed postsecondary enrollment information to define student qualifications. I grouped students by combinations of SAT scores and GPA. Within each grouping or cell, the institutional selectivity that students most attended, or the mode, was selected as the qualification level. This method used the least amount of student information (GPA, SAT/ACT score, and enrollment). Similar to the first in a series of Chicago studies (Roderick et al. 2006), researchers who do not have application or admission data might use this approach.

Acceptance Rate Method

The second approach to determining students’ academic qualifications was similar to Crossing the Finish Line (Bowen et al. 2009), where acceptance rates for every selectivity level were calculated for a grid of GPA and SAT score combinations. Using the ELS:2002 sample, each cell on the grid was assigned the selectivity level with an acceptance rate greater than 80 %. For example, students with a combination of 3.0–3.5 GPA and between an 1,100 and 1,190 SAT score might have a 72 % admission rate at the most selective institutions, 79 % admission rate at the highly selective institutions, and 91 % admission rate at very selective institutions. Based on the threshold of an 80 % or greater acceptance rate, students with a GPA between 3.0 and 3.5 and SAT scores between 1,100 and 1,190 are designated as being qualified for Very Selective institutions. I subsequently assigned students to a qualification level based on where their GPA and SAT scores fell on the grid, as shown in Fig. 1. This approach used slightly more student information (GPA, SAT/ACT score, application and admission information) than the enrollment rate method. Further, I lowered the threshold from Bowen et al. 90 to 80 % in order to match the threshold for the predicted probability method (below). An 80 % likelihood of gaining admission remains relatively high.

Predicted Probability Method

ELS:2002 provided rich information on where students applied, were admitted and enrolled. Since the goal of estimating undermatch is to find the highest selectivity for which each student is likely to be admitted, I utilized the approach of Smith et al. study (2012) to predict the probability of admission based on students’ application and admission data. Smith et al. (2012) predict the probability of being admitted to every selectivity level for each student using SAT scores, GPA, and measures of course rigor as predictors. I identified the highest selectivity level with a probability of admission greater than 80 % as the student’s qualification level. While Smith and his colleagues used a 90 % threshold, I chose an 80 % threshold because the models used in this study included many more predictors, which improved the precision of the estimates. Moreover, an 80 % likelihood of gaining admission is still much greater than chance, and allows for the increased representation of Black, Latino, low-income and potential first-generation students. To determine a student’s qualification, for example, a student might have an 83 % likelihood of gaining admission to an institution in the Most Selective category, 86 % likelihood of gaining admission to a Very Selective institution, etc. In this example, I would assign the student to the Most Selective category because it is the highest selectivity rating with over 80 % likelihood. This approach used the most amount of information to predict admission, as it leveraged academic achievement, demographic characteristics [gender, race/ethnicity, parental education, parental income, extracurricular of activities in which the student has participated (student government, sports, community service)], as well as the admission and application data needed to determine the outcome. Table 4 describes the variables used for this study. As a result, the predicted probability approach yields the highest level of precision in determining the likelihood of student qualifications compared to other approaches.

Analytic Methods

In the first step in the analyses, I used multiple imputation in order to address missing data. I employed multiple imputation through the PROC MI procedure found in the SAS software package with an expectation maximization (EM) algorithm, which used all information available to produce estimates for missing data for the entire dataset. For the first two research questions, ten datasets of plausible values were averaged out to produce a single dataset of imputed values. For the third research questions, which utilized a regression approach, standard errors were adjusted using Markov Chain Monte Carlo (MCMC) algorithm. Listwise deletion was not selected for this analysis because the missingness of data was not completely at random—students who were Latino; whose parents do not have college degrees; and from lower income families were less likely to have SAT scores. However, this study assumes that the data are missing at random (i.e., the probability of missing SAT data is unrelated to SAT scores once one accounts for student demographics). Further, the SAT/ACT score is a necessary component in all three approaches to calculate student qualifications. Therefore, listwise deletion of students missing SAT scores would suppress the full extent of undermatch and threaten external validity.

Table 5 presents descriptive information on the distribution of students by select demographic characteristics and illustrates changes in representation of different groups resulting from the imputation of the sample. It shows multiple imputation increased the sample size by nearly 30 % (i.e., it preserved cases that represent 459,450 students that would have otherwise been eliminated from the analyses). The imputation of missing data on academic information increased representation of Latino and Black students, students whose families made less than $50 K, and students whose parents did not have a college degree. In addition, it also substantially increased representation of students who were academically lower performing (GPA less than 2.5 and SAT scores below 1000) yet who nonetheless completed high school and indicated a desire for postsecondary education. Subsequent results discussed represent the imputed sample.

To address the first research question, I compared the representation of students by race/ethnicity, parental education and family income across institutional selectivity for both selectivity definitions (Barron’s categories and Barron’s categories collapsed) and for all three approaches to estimating student qualifications (acceptance rate, enrollment rate and predicted probability). This produced six different qualification estimations.

In order to address the second research question, I calculated undermatch rates for the analytic sample using the three student eligibility approaches—enrollment rate, acceptance rate, predicted probability—that were paired with the two ways in which to define selectivity. This resulted in a three-by-two comparison of undermatch rates. They were examined by students’ race/ethnicity, parental education and family income.

I addressed the third research question using logistic regression (PROC SURVEYLOGISTIC), where I produced odds-ratios using various predictors of undermatch (i.e., GPA, SAT scores, highest level math course taken, number of AP/IB courses taken, and race/ethnicity) drawn from previous research (Bowen et al. 2009; Roderick et al. 2011; Smith et al. 2012). Undermatch was determined in the six different combinations of selectivity definitions and qualifications approaches: Barron’s categories and enrollment rate; Barron’s categories and acceptance rate; Barron’s categories and predicted probability; and Barron’s categories collapsed and acceptance rate, Barron’s categories collapsed and enrollment rate, Barron’s categories collapsed and predicted probability. This allowed for comparisons of estimates across student qualification approaches and definitions of institutional selectivity. The magnitude, direction, and significance of the odds-ratios were compared across the six models.

Findings

Comparison of Representation across Student Qualifications by Institutional Selectivity

As Fig. 1 illustrates, the six different approaches to estimating undermatch produced very different qualification levels for similarly abled students.Footnote 1 The most extreme example is for students who had GPAs within the range of 2.5–2.9 and SAT scores within 1200 and 1290. According to Fig. 1, this group of students were qualified to attend a selective, very selective, or highly selective institution, depending on the estimation approach.Footnote 2 These variations in classification resulted in dramatic shifts in student representation across the various selectivity levels for the different approaches to estimating undermatch, as illustrated in Table 6.

The representation of Black, Latino, first-generation, and low-income students varied across approaches. Generally, as the institutional selectivity increased, the representation of Black, Latino, low-income and first-generation students in the ranks of the qualified steadily decreased (e.g., Black student representation ranged from 0.3 vs. 28.4 % of students who were qualified to attend most selective versus 2-year institutions using the acceptance rate approach and Barron’s categories). However, when using the Barron’s categories (the left side of Table 6), the predicted probability increased the representation of Black, Latino, and low-income students in the more selective institutions. For example, Latinos represented 11.1 % of students who were qualified to attend most selective institutions when using the predicted probability approach versus 1.6 % with the acceptance rate method. There is a large dip in the representation of Black and Latino students who are qualified to attend highly selective institutions when estimating qualifications with the predicted probability approach and Barron’s categories (1.7 and 3.9 %, respectively). In fact, the representation for all racial/ethnic groups except White students is significantly reduced from the most selective to highly selective categories using this estimation. One possible explanation for this dip is that White students were highly represented in the applicant pool of highly selective institutions. Therefore, the logistic regression model used to predict the probability of gaining admission to a highly selective institution would have greater predictive power in estimating White students’ likelihood of admission than other groups.

Collapsing the Barron’s categories modestly increased the representation of Black, Latino, and first-generation students within the top selectivity categories and substantially increased the representation of low-income students for the acceptance rate and enrollment rate approaches. However, it decreased their representation for the predicted probability approach. For the Most Selective category, the result of collapsing the most and highly selective categories resulted in a drop of representation of Black students from 8.2 to 1.5 %; Latino students decreased by about half (11.1 to 5.3 %); students whose families earned $50 K or less remained stable (27.6 to 25.7 %); and students whose parents had no more than a high school degree decreased (17.5 to 11.7 %). In fact, collapsing the categories also resulted in changes (although some were slight) to almost the entire distribution of the predicted probability approach. The reason for these slight changes towards the lower end of the distribution is unclear.

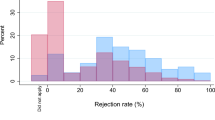

Comparison of Undermatch Rates

Table 7 illustrates undermatch rates by two classifications of selectivity and by three methods of identifying student qualifications for admission. Several patterns emerged when examining differences across the different methods of calculating undermatch. First, the enrollment rate method (columns 2 and 5) consistently produced lower undermatch rates than those produced by the predicted probability or acceptance rate methods. For example, the average undermatch rate was over 20 % points higher when using the predicted probability method than the enrollment rate approach. One possible explanation is the enrollment rate method leveraged the least amount of information when determining student qualifications (GPA, SAT score, and student enrollment) and used existing student behaviors—in other words, where students already go versus where they could go. When comparing students by their qualifications, undermatch rates were roughly 7 to 45 % points lower when using the enrollment rate method than the other two approaches.

Second, across demographic groups, the gaps in undermatch rates varied by estimation approach. The Black-White undermatch gap was largest using the acceptance rate method, irrespective of Barron’s definitions (e.g., 21 % points) and smallest using the enrollment rate method with Barron’s categories (12 % points). The gap between Latino and White student undermatch rates also varied widely from the near negligible 2 % points using the enrollment rate method and Barron’s collapsed categories to 14 % points using the predicted probability approach and Barron’s categories. The gap in undermatch rates between students whose families earned less than $50 K versus those who made over $100 K also fluctuated—from a 1 % point difference using the predicted probability rate approach and Barron’s categories to 11 % points using the acceptance rate method. Generally, the acceptance rate method produced bigger gaps than the other two approaches when considering undermatch rates by family income. This was also true for parental education, where the acceptance rate method produced the largest gaps between students whose parents were college educated and students whose parents either had no more than a high school diploma or some college (e.g., 10 % points, and 7 % points, respectively, using the Barron’s collapsed categories). Therefore, gaps in undermatch rates across different student populations are at the mercy of the estimation approach used.

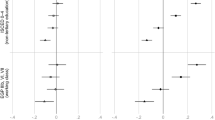

Comparison of Odds-Ratios

Table 8 compares the odds-ratios across various estimation approaches. With few exceptions, the magnitude and significance of the odds-ratios across definitions of selectivity were nearly indistinguishable (e.g., column 3 vs. column 6). However, there were differences in odds ratios across different estimation approaches to student qualifications (e.g., within columns 1–3 or columns 4–6). The acceptance and enrollment rate methods produced odds ratios similar in direction, magnitude and significance. The notable exceptions were the racial/ethnic dummy variables. However, the predicted probability approach had fewer predictors with odds-ratios significantly different than 1 and in some cases in the opposite direction as the acceptance and enrollment rate approaches (e.g., Algebra II/Geometry or SAT scores).

After controlling for demographic and academic characteristics, White students were more likely to undermatch than Black students in four out of the six models (e.g., odds-ratio = 0.67, p < 0.001 in model 2). Latino students were more likely to undermatch than White students only when using the enrollment rate approach (e.g., odds-ratio = 1.38, p < 0.01 in model 1). Moreover, students whose parents do not have a college degree were more likely to undermatch than students whose parents have a bachelor’s degree in almost every model (e.g., odds-ratio = 1.65, p < 0.001 for no more than a high school education in model 1). There was some variation with students whose parents had an associate’s degree. Also, students whose parents earned less than $100 K were generally more likely to undermatch than students whose family income was over $100 K (e.g., odds-ratio = 1.75, p < 0.001 for $0–$25 K in model 1) in all models except for students whose family income was $0–$25 K in the predicted probability models (models 3 and 6).

Although not consistent across all models, there was a split between the way in which academic achievement and academic preparation predict undermatch. The increase in the odds to undermatch was consistently associated with an increase in GPA (odds-ratios between 1.30 and 2.22 across the six models). However, the role of an increase in SAT scores varied across models. Moreover, the relationship between the highest math taken and the increased likelihood of undermatch remained unclear. Table 8 also shows that generally, for the enrollment and acceptance rate methods (columns 1, 2, 4 and 5) students who were on less rigorous math tracks were more likely to undermatch, and for the predicted probability models (columns 3 and 6) these same students were as likely or less likely to undermatch. Students were also less likely to undermatch as the number of AP and IB courses increased (odds-ratios between 0.84 and 0.93 across the six models).

Discussion

The study of undermatch is less than a decade old and what we know about undermatch—and what it means for underrepresented students—is largely inconclusive. This lack of consensus is largely due to each study’s distinct sample and approach to estimating undermatch. Using a single nationally representative sample, I empirically tested how various ways of estimating undermatch can produce differential results in (a) the distribution of student qualifications (b) the rates at which students are identified as having undermatched and (c) the predictive power of subsequent findings in multivariate analyses. In order to estimate undermatch, one must first group institutions in some hierarchical way (e.g., selectivity) and then determine for each student how their qualifications match up with that hierarchy. Drawing from previous research on undermatch, I identified two different ways that institutions were grouped by selectivity in the literature and three different ways in which student qualifications were determined. I then answered the research questions for each of the six combinations of institutional selectivity and academic qualification approaches by examining the differences in (a) distributions of student qualifications, (b) undermatch rates, and (c) odd ratios of a simple predictive model of undermatch. I paid particular attention to how these differences in results would reverberate for findings among underrepresented student. This study has several conclusions that have important implications for the study of undermatch.

Major Findings

First, the methods used to define qualifications, thresholds, and benchmarks distribute students differently across the selectivity spectrum as illustrated in Fig. 1 as well as Table 6. Generally, using student enrollment to determine the types of colleges students have access to functions as a conservative estimate and reflects the status quo through selection bias—as all students who are qualified to attend are not indeed applying and enrolling. The acceptance rate method uses more information (application and admission information) to predict where students are likely to gain admission; although it is limited by only using GPA and SAT scores (in a categorical way) to assign qualification levels. The predicted probability approach not only uses admission and application information, it also uses GPA and SAT scores in their continuous forms and allows for covariates to increase accuracy.

These variations underscore the challenge of gauging the types of institutions to which students are qualified to attend. For example, Fig. 1 shows that a person with a GPA between a 2.50 and 2.99 that has SAT scores between 1200 and 1290 could be categorized into three levels of qualification – selective, very selective, or highly selective institution—depending on the approach taken. As a second example, I point to the relative disappearance of the nonselective four-year category in Fig. 1. All three approaches had difficulty distinguishing the qualifications that would give students access to nonselective four-year institutions. This finding supports Bastedo and Flaster’s (2014) assertions that the determination of cutoffs gets quite murky.

For underrepresented students, this means that their representation across different selectivity groups fluctuated drastically. In the nonselective category, Black students represented one-quarter of students that were qualified to attend nonselective four-year colleges when using the predicted probability approach and Barron’s categories. Yet when using the enrollment rate approach, not one Black student was eligible for nonselective institutions. As Fig. 1 illustrates, only a small band of students with less than a 2.0 grade point average and with SATs between a 1,200 and 1,290 would qualify for nonselective institutions when employing the enrollment rate approach. The sample used for this study simply did not have any Black students who met these narrowly defined benchmarks. On the other end of the selectivity spectrum, the predicted probability approach seemed to favor underrepresented students at the most selective colleges. For example, low-income students (those whose families make less than $50 K) were 28 % of the students who are qualified to attend most selective colleges when employing the predicted probability approach, more than double the representation than the other two approaches. This approach accounted for other characteristics that would make low-income students likely to gain admission beyond SAT and GPA scores. Taken together, these findings underscore how the complications associated with determining student qualifications are especially salient when one attempts to disaggregate findings by student sub-groups, which is a primary goal of the work on undermatch. Moreover, how students are distributed across qualification levels undergirds every subsequent finding.

Second, I found there were variations in undermatch rates by estimation approach. This was expected, given the discussion above regarding the differences in how students were classified by qualifications. The enrollment rates approach produced the lowest undermatch rates, which is unsurprising given how conservatively it estimated student qualifications. The acceptance rate and predicted probability approaches yielded similar undermatch rates.

At first glance, the inability to pinpoint exact undermatch rates might argue against the utility of undermatch as a construct and cast it as somewhat arbitrary. Indeed, researchers have been “careful not to label students’ enrollment choices as ‘right’ or wrong’” (Bastedo and Flaster 2014, p. 2), and so the goal of research and policy efforts around undermatch is not to reduce it to zero. One might ask, so if it will never be zero but there is no absolute rate, then what is the use of measuring undermatch? In the absence of absolute terms, the utility of undermatch lies in the ability to compare across groups. That being said, the way in undermatch is estimated is critical, as it has the potential to either highlight or suppress inequities in college access and choice.

In particular, gaps in undermatch estimates between underrepresented groups and their non-underrepresented peers varied by approach. For example, across the six estimation approaches, the Black-White undermatch rate gap ranged from 12 to 21 % points; and the Latino-White gap in undermatch rates ranged from 2 to 14 % points. Interestingly, the gaps aren’t always in the direction we expect them to be. The findings show that White students undermatched at higher rates. But that is because students who have more options—in terms of selectivity categories—simply have more opportunities to undermatch (Smith et al. 2012). Said differently, if many Black, Latino, low-income and first-generation students are not eligible to attend selective institutions and march off to two-year institutions, then they haven’t undermatched. And considering the dearth of Black, Latino, first-generation, and low-income students in the more selective categories, comparing groups without considering the qualification levels masks these differences in representation that could lead to erroneous conclusions (e.g., there is no undermatch problem for Black students).

Third, the way in which undermatch was estimated mattered to findings in subsequent regression analyses, particularly for race and ethnicity. Table 8 shows that across the six different approaches, and after controlling for other student characteristics, the odds ratios for Latino students not only varied in significance and magnitude, but even direction. However, the odds-ratios for other underrepresented groups remained somewhat consistent. Many of the differences across odds ratios were found in the predicted probability columns. Smith et al. (2012) approach was most like the Barron’s categories collapsed and predicted probability, or column 6, and although they had other covariates in their model, they had similar findings for Black, first-generation students, and most income levels below $100 K.

Indeed, the variables I used to estimate the likelihood of admission in the predicted probability approach had implications in later regression analyses. Controlling for the highest math course taken when predicting admission, for example, suppressed differences in highest math when predicting undermatch. There is a tradeoff here. Because including a variable to predict admissions may preclude the utility of that variable in predicting undermatch, researchers may have to decide between the accuracy of admissions predictions (a function of the institution’s choice) or a better understanding of how a given variable contributes to undermatch (a function of the individual’s choice).

It is worth noting that academic preparation and achievement may not explain undermatch in similar ways. The first two models in Table 8 would suggest under-prepared students and high-achieving students are more likely to undermatch then their peers. However, the predicted probability models are largely driven by academic achievement and preparation, and show very divergent results. Future research should explore the potentially divergent roles that academic preparation and achievement may play, particularly for underrepresented students—and especially as academic preparation is a discernible policy lever. As attention to the study of undermatch grows, it is essential for researchers to better understand the tradeoffs made in estimating undermatch using different approaches and how this has implications for findings.

Finally, with the exception of Bowen et al. (2009), studies of undermatch have thus far not imputed for students who are missing SAT or ACT scores. Therefore students who could have qualified to attend, had they taken one additional step (the SAT or ACT) in high school are omitted from the estimates. These students tend to come from underrepresented backgrounds. In this study, imputing exam scores increased the analytic sample by 30 %. From the policy perspective, including these students who have not maximized on their presumptive potential provides policymakers with an accurate depiction of the talent that is not being capitalized.

Limitations

There are several limitations associated with estimating undermatch and its inherent assumptions. First, I assumed institutions within a selectivity grouping were homogenous with regard to likelihood of gaining admission, availability of aid, degree completion, and other institutional characteristics. Also, particularly at more selective institutions, a student could very well apply to only one institution within his or her qualification level yet not be admitted. In addition, the data is a decade old, as these were seniors in 2004. Surely, the higher education landscape has changed since. Nonetheless, these limitations to estimating undermatch are expected to affect the various approaches in similar ways. Moreover, including more variables in the predicted probability model could have resulted in different findings, although the purpose of the study was not to evaluate the odds-ratios in a substantive way, but rather in a comparative sense.

Implications for Future Research

Collapsing Barron’s categories made little difference to the overall undermatch rates or subsequent findings. While the representation of Black, Latino, and first-generation students in the most selective categories changed, the two most selective categories are also the smallest numerically. Therefore, combining the two categories was a rather minor change in reorganizing a small fraction of students and institutions. This finding is particularly relevant for researchers that decide to collapse these categories to improve power. Here, the tradeoff is masking the potential differences across the most selective institutions in order to gain statistical power. Future research should explore using substantially different definitions of institutional selectivity. Outcomes-based definitions could include not only selectivity, but institutional graduation rates (Roderick et al. 2006) and availability of financial aid as well. This would contribute to the understanding of student choice with direct linkages to postsecondary persistence and completion.

The notion of using selectivity to create a hierarchical structure of institutions falls apart at the less selective end. Because all three qualification approaches had difficulty with parsing out the students who would likely gain admission to nonselective four-year institutions from two-year colleges, future research should consider combining the two categories. Arguably, this merger would increase the heterogeneity of the institution types and their outcomes. However, this is the choice set for many middle-ability college-bound students. While researchers have steadily focused on the highest achieving students’ college choices, very little is known about how middle-ability students make their decisions and whether they are good matches. The middle-ability group is the less the sensational of the two, but they are a much larger share of college enrollments.

It is a common practice for college counselors to advise students to compare their SAT or ACT scores with the median scores at the institutions for which they are interested to see if they are a good “match.” Indeed, this was the approach replicated by recent undermatch studies (Hoxby and Avery 2012; Hoxby and Turner 2013). However, this study did not examine this approach. While I did impute SAT/ACT scores for students, many institutions do not report their median or interquartile range of SAT scores—particularly private colleges. Therefore I was unable to include this approach as part of the analyses. One advantage to this approach is there is no need to rely on Barron’s categories that have a lot of variation within them to make singular claims about where students are likely to gain admission. However, the challenge with comparing students’ position on an institution’s SAT score distribution implicitly argues for heterogeneity on a single student characteristic. In other words, if we were to decrease undermatch using this measure, we would be advocating for bringing in the tails of the SAT/ACT distribution at every institution (i.e., having more students with similar scores). This is not reflective of how admissions goals are crafted at many selective institutions. Moreover, many underrepresented students have lower exam scores, on average, and would invariably be regarded as overmatched, even if they are strong on other measures that colleges value in the admissions process. Overmatch was not examined in this study, as the main focus of most research studies and policy efforts lie in match or undermatch. Notwithstanding these limitations, future research should empirically examine the differences in undermatch rates for this approach and what it would mean for underrepresented students.

For all the approaches examined, the predicted probability method leveraged the most amount of information in order to estimate a student’s likelihood of gaining admission, which matters most at the top. For the most part, it increased representation of Black, Latino, low-income and first-generation students by taking stock of other qualities that are typically considered during the admissions process. However, it also the most expensive approach, as the cost of collecting so detailed information about students’ applications, admissions, high school course-taking, extra-curricular activities, etc. And there is still more data to collect. For example, we know very little about how financial aid offers, degree program availability, the presence of honors colleges, or the proximity of institutions influence match (and in turn, the disparities in the rates of match across different groups).

Conclusion

There are tradeoffs to consider and limitations to acknowledge when determining undermatch. Both the college choice and admissions processes are highly complex, thereby making the estimation of undermatch incredibly challenging. The purpose of this study was not to advocate for any single method, as the population of interest and availability of data would shape the approach researchers take in estimating undermatch. However, this study’s contributions lie in illuminating the differences that result from the choices researchers make. This study found that these differences in qualification and undermatch rates across approaches are not slight. Therefore, stakeholders should be both wary of making summative statements across studies and researchers should be careful in how they estimate and interpret undermatch. Nonetheless, undermatch is useful within a single study to compare across different groups. As such, the study of undermatch is incredibly important, despite the limitations of the literature as it stands, because many students are foregoing the opportunity to improve their college-going outcomes.

Notes

The predicted probability grids are used for illustrative purposes only, as this approach did not rely on GPA/SAT rubrics in the same way that the acceptance rate and enrollment rate approaches did. The grid for predicted probability shows the destination where most students with the given combination of GPA and SAT scores were qualified to have gone using the predicted probability approach, or the mode. There is variation within each cell as to the qualification level to which students were actually assigned.

Because there are very few students in the far reaches of the grids (e.g., with very low grade point averages and very high SAT scores) some smoothing had to occur, where starting from left to right and top to bottom, the highest selectivity superseded subsequent cells underneath it and to the right. This precluded the case of someone with lower GPA and/or SAT scores from being qualified for a more selective institution. In the case of the predicted probability grids, I did not adjust, or smooth, the grid in this manner because its purpose was illustrative and students were not assigned to qualification level based on the grid.

References

Adelman, C., (2006). The toolbox revisited: paths to degree completion from high school through college. Washington: U.S. Department of Education. http://www2.ed.gov/rschstat/research/pubs/toolboxrevisit/index.html?exp=3.

Barron’s Educational Series, Inc. (2004). Barron’s Profiles of American Colleges 2005 (26th ed.). New York: Author.

Bastedo, M., & Flaster, A. (2014). Conceptual and methodological problems in research on college undermatch. Educ Res, 20(10), 1–7. doi:10.3102/0013189X14523039.

Baum, S., Ma, J., Payea, K., (2013). Education pays: The benefits of higher education for individuals and society. (No. 12b-7104). From the College Board website: http://trends.collegeboard.org/education-pays.

Bell, A., Rowan-Kenyon, H. T., & Perna, L. W. (2009). College knowledge of 9th and 11th grade students: Variation by school and state context. J High Educ, 80(6), 663–685. doi:10.1353/jhe.0.0074.

Bowen, W. G., Chingos, M. M., McPherson, M. S., & Tobin, E. M. (2009). Crossing the finish line: Completing college at America’s public universities. Princeton: Princeton University Press.

College Board (2012). The 8th annual AP report to the nation. (No. 12b-5036). From the College Board website: http://apreport.collegeboard.org/sites/default/files/downloads/pdfs/AP_Main_Report_Final.pdf.

Dillon, E.W., & Smith, J.A. (2013). The determinants of mismatch between students and colleges. (NBER Working Paper Series 19286). From National Bureau of Economic Research website: http://www.nber.org/papers/w19286.

Engberg, M. E., & Wolniak, G. C. (2010). Examining the effects of high school contexts on postsecondary enrollment. Research in Higher Education, 51(2), 132–153. doi:10.1007/s11162-009-9150-y.

Hoxby, C., Avery, C., (2012). The missing “one-offs”: The hidden supply of high-achieving, low income students. (NBER Working Paper Series 18586). From National Bureau of Economic Research website: http://www.nber.org/papers/w18586.

Hoxby, C., Turner, S., (2013). Expanding college opportunities for high-achieving, low-income students. (SIEPR Discussion Paper no. 12-014). From Stanford Institute for Economic Policy Research website: http://siepr.stanford.edu/?q=/system/files/shared/pubs/papers/12-014paper.pdf.

Ingels, S.J., Pratt, D.J., Wilson, D., Burns, L.J., Currivan, D., Rogers, J.E., Hubbard-Bednasz, S., (2007). Education longitudinal study of 2002 (ELS:2002) base year to second follow-up data file documentation. (No. NCES 2008-347). Washington: U.S. Department of Education, National Center for Education Statistics.

Mattern, K., Wyatt, J., (2009). Student choice of college: How far do students go for an education? Journal of College Admission (203), 18–29. http://files.eric.ed.gov/fulltext/EJ838811.pdf.

National Center for Education Statistics. (2010). The Nation’s report card: Grade 12 reading and mathematics 2009 national and pilot state results. From U.S. Department of Education, National Center for Education Statistics website: http://nces.ed.gov/nationsreportcard/pubs/main2009/2011455.asp.

Oakes, J. (1992). Can tracking research inform practice? technical, normative, and political considerations. Educational Researcher, 21(4), 12–21. http://www.jstor.org/stable/1177206.

Roderick, M., Coca, V., & Nagaoka, J. (2011). Potholes on the road to college: High school effects in shaping urban students’ participation in college application, four-year college enrollment, and college match. Sociology of Education, 84, 178–211. doi:10.1177/0038040711411280.

Roderick, M., Nagaoka, J., Allensworth, E., (2006) From high school to the future: a first look at Chicago public school graduates’ college enrollment, college preparation, and graduation from four-year colleges. From Consortium on Chicago School Research website: http://ccsr.uchicago.edu/publications/high-school-future-first-look-chicago-public-school-graduates-college-enrollment.

Roderick, M., Nagaoka, J., Coca, V., (2008). From high school to the future: Potholes on the road to college. From Consortium on Chicago School Research website: http://ccsr.uchicago.edu/publications/high-school-future-potholes-road-college.

Roderick, M., Nagaoka, J., Coca, V., Moeller, E., (2009) From high school to the future: making hard work pay off: The road to college for students in CPS’s academically advanced programs. From Consortium on Chicago Research website: http://ccsr.uchicago.edu/publications/high-school-future-making-hard-work-pay.

Smith, J., Pender, M., & Howell, J. (2012). The full extent of student-college undermatch. Economics of Education Review, 32, 247–261. doi:10.1016/j.econedurev.2012.11.001.

Wells, R. S., Lynch, C. M., & Seifert, T. A. (2011). Methodological options and their implications: an example using secondary data to analyze Latino educational expectations. Research in Higher Education, 52(7), 693–716. doi:10.1007/s11162-011-9216-5.

Wyner, J.S., Bridgeland, J.M., Diiulio, J.J., Jr (2009) Achievement trap: How America is failing millions of high-achieving students from lower-income families. From Jack Kent Cooke Foundation website: www.jkcf.org/assets/files/0000/0084/Achievement_Trap.pdf.

Acknowledgments

The research reported here is based on data provided by the Educational Longitudinal Survey of 2002 and was supported in part by the Institute of Education Sciences, U.S. Department of Education, through Grant #R305B090015 to the University of Pennsylvania. The opinions expressed are those of the authors and do not represent the views of the Institute or the U.S. Department of Education.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Rodriguez, A. Tradeoffs and Limitations: Understanding the Estimation of College Undermatch. Res High Educ 56, 566–594 (2015). https://doi.org/10.1007/s11162-015-9363-1

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11162-015-9363-1