Abstract

Research with school-age readers suggests that the contributions of reading and language skills vary across reading comprehension assessments and proficiency levels. With a sample of 168 struggling adult readers, we estimated the explanatory effects of decoding, oral vocabulary, listening comprehension, fluency, background knowledge, and inferencing across three reading comprehension tests and across low, average, and high levels of performance. OLS regression models accounted for 66% of the variance in WJ Passage Comprehension scores with all competencies except listening comprehension as significant predictors; 43% of the variance in RAPID Reading Comprehension scores with decoding and listening comprehension as significant predictors; and 31% of the variance in RISE Reading Comprehension scores with decoding as a significant predictor. Quantile regression models and between-quantile slope comparisons showed that the effects of some predictors on reading comprehension varied across performance levels on one or more tests. Implications for instruction, assessment, and future research are discussed.



Introduction

Reading comprehension is a complex process that involves various competencies (Perfetti & Stafura, 2014). Research on the contributions of these competencies has primarily involved students in the K-12 grades and postsecondary programs (e.g., Aaron, Joshi, Gooden, & Bentum, 2008; Hannon, 2012) and has largely ignored the one in five adults in the United States who read at or below elementary levels (National Center for Education Statistics, 2019). One major challenge facing researchers and practitioners is the heterogeneity of the struggling adult reader population, especially in terms of reading comprehension (Comings & Soricone, 2007; Lesgold & Welch-Ross, 2012). Moreover, complicating the heterogeneity challenge, some norm-referenced assessments do not seem to function as expected for adults who struggle with reading (Nanda, Greenberg, & Morris, 2014; Pae, Greenberg, & Williams, 2012). The goal of this study was to address these issues by examining the effects of reading-related competencies on the reading comprehension performance of struggling adult readers across (a) different reading comprehension tests and (b) different levels of performance.

A theoretical framework for reading comprehension

Since there is no reading comprehension framework specific to struggling adult readers, we turn to theories that have been validated with children and adolescents who read below the high school level. The influential Simple View of Reading (SVR) emphasizes the contributions of decoding and linguistic comprehension to reading comprehension performance (Gough & Tunmer, 1986). Decoding refers to the basic process of translating print words to spoken language. Linguistic comprehension aids the reader in understanding oral language and can be subdivided into meaning-making at the word level (i.e., oral vocabulary) as well as the discourse level (i.e., listening comprehension) (Sabatini, Sawaki, Shore, & Scarborough, 2010; Tunmer & Chapman, 2012). Additionally, reading fluency may have a place in the SVR, because being able to read words efficiently frees up attentional resources and allows the reader to focus on processing the meaning of the text (Tilstra, McMaster, Van den Broek, Kendeou, & Rapp, 2009).

Moving beyond the SVR, the Direct and Inferential Mediation (DIME) framework highlights the importance of background knowledge and inference generation to reading comprehension (Cromley & Azevedo, 2007). Background knowledge, or prior knowledge, refers to the generic and specific knowledge encoded in an individual’s long-term memory (Graesser, Singer, & Trabasso, 1994). Inferencing refers to the skill of understanding implicit associations within a discourse (Kintsch, 1988). These competencies facilitate deep comprehension of the text content and help the reader make connections between what is known and what is learned. When the DIME framework was tested with children and adolescents, both background knowledge and inference explained variance in reading comprehension after controlling for decoding and oral vocabulary (Ahmed et al., 2016; Cromley & Azevedo, 2007).

Together, the SVR and DIME frameworks point to different competencies that may influence reading comprehension: decoding, oral vocabulary, listening comprehension, fluency, background knowledge, and inference. Across multiple investigations involving struggling adult readers, reading comprehension exhibited moderate to strong correlations with decoding, oral vocabulary, listening comprehension, and fluency (e.g., Braze et al., 2016; Mellard, Fall, & Woods, 2010; Talwar, Tighe, & Greenberg, 2018). Moreover, each of these four skills uniquely accounted for variance in reading comprehension scores for struggling adult readers (Braze et al., 2016; Greenberg, Levy, Rasher, Kim, Carter, & Berbaum, 2010; Mellard et al., 2010; Sabatini et al., 2010; Tighe, Little, Arrastia-Chisholm, Schatschneider, Diehm, Quinn et al., 2019). Although less work with this population has focused on background knowledge and inference, the limited findings suggest that both competencies are uniquely important to reading comprehension over and above the skills associated with the SVR (Talwar et al., 2018; Tighe, Johnson, & McNamara, 2017).

Reading comprehension tests

The measurement of the reading comprehension construct is an important issue to consider. Past research suggests that different reading comprehension assessments are not equivalent to one another. Multiple studies have shown that examinees can successfully answer some multiple-choice questions even without reading the source passage (Coleman, Lindstrom, Nelson, Lindstrom, & Gregg, 2010; Katz, Lautenschlager, Blackburn, & Harris, 1990; Keenan & Betjemann, 2006). Additionally, reading comprehension tests may be differentially impacted by word reading and oral language skills (Cutting & Scarborough, 2006; Keenan, Betjemann, & Olson, 2008; Nation & Snowling, 1997).

Specific to struggling adult readers, Mellard, Woods, Desa, and Vuyk (2015) reported that vocabulary made the largest unique contribution to reading comprehension performance on the Test of Adult Basic Education (TABE; CTB/McGraw-Hill, 1996), whereas working memory made the largest unique contribution to reading comprehension performance on the Comprehensive Adult Student Assessment System (CASAS, 2004). Similarly, Tighe et al. (2017) found that vocabulary was a significant predictor of performance on the Woodcock Reading Mastery Test Passage Comprehension subtest (Woodcock, 2011) but not on the Gates–MacGinitie Reading Test (MacGinitie, MacGinitie, Maria, & Dreyer, 2000), whereas the opposite pattern was observed for inferencing. These findings support the notion that literacy research and assessment should include multiple measures of reading comprehension and examine the constructs tapped by each measure (Keenan, 2016).

As computerized testing has become more common, it is valuable to explore reading performance across modes of administration. Studies with children and proficient adult readers have found that examinees score similarly on paper-based and computer-based tests targeting the same domain (Achtyes et al., 2015; Bodmann & Robinson, 2004; Srivastava & Gray, 2012). However, other investigations indicate that adolescents are less likely to identify important information within a passage if it is presented on a screen rather than on paper (Kobrin & Young, 2003) and that adolescents with higher test anxiety generally perform worse on computerized tests (Lu, Hu, Gao, & Kinshuk, 2016). In fact, according to a recent meta-analysis that largely included studies with college students, reading from screens is associated with lower comprehension performance than reading from paper, especially for expository texts (Clinton, 2019). Such mode comparison studies have not been conducted specifically with struggling adult readers. Individuals in this population may lack basic computer skills such as identifying specific keys on the keyboard and right-clicking the mouse (Olney, Bakhtiari, Greenberg, & Graesser, 2017), so it is important to investigate whether these deficits are related to performance on computerized tests.

The utility of quantile regression for reading comprehension research

As mentioned previously, struggling adult readers comprise a heterogeneous population (Comings & Soricone, 2007; Lesgold & Welch-Ross, 2012). Quantile regression presents a unique way to address the variability in reading levels among these adults. Unlike ordinary least squares (OLS) regression, which estimates the effect of a predictor at the average level of the criterion, quantile regression can estimate this effect at different quantiles (or locations) in the criterion distribution (Davino, Furno, & Vistocco, 2014; Koenker & Bassett, 1978; Koenker & Hallock, 2001). With school-age readers, quantile regression analyses have shown that the contributions of vocabulary, oral fluency, and motivation to reading comprehension can differ for readers at different proficiency levels (e.g., Cho, Capin, Roberts, & Vaughn, 2018; Frijters et al., 2018; Lonigan, Burgess, & Schatschneider, 2018). Most pertinently, Hua and Keenan (2017) examined the relations of word recognition and listening comprehension to reading comprehension across five reading comprehension measures. From the 0.1 quantile to the 0.9 quantile, the unique effect of listening comprehension increased on two tests, decreased on two other tests, and stayed the same on one test. Mixed trends were also observed across tests for the effect of word recognition.

Similar quantile regression work with struggling adult readers is strikingly sparse. As a proof of concept, Tighe and Schatschneider (2016) demonstrated with a sample of struggling adult readers that two component skills exhibit differential effects on reading comprehension at different quantiles of performance. Given that the adult literacy field serves a heterogeneous group of learners (Comings & Soricone, 2007; Lesgold & Welch-Ross, 2012), a high research priority should be the identification of competencies that are important at different reading proficiency levels.

The current study

Our main goal in this exploratory study was to examine the importance of different reading-related competencies for struggling adult readers, a historically underserved and understudied population. Informed by the SVR and DIME frameworks, we explored the contributions of decoding, oral vocabulary, reading fluency, listening comprehension, background knowledge, and inference to reading comprehension. We explored these contributions for different reading comprehension measures and different performance quantiles within each measure, based on prior research reporting differential findings across reading tests and proficiency levels (Hua & Keenan, 2017; Mellard et al., 2015; Tighe & Schatschneider, 2016). Our approach addressed gaps in the literature involving competencies that are understudied among struggling adult readers (e.g., background knowledge, inference) and the relative importance of different competencies across reading comprehension performance levels within this population. Two research questions guided our investigation:

1. For struggling adult readers, what are the joint and unique contributions of decoding, oral vocabulary, fluency, listening comprehension, background knowledge, and inference to performance on different reading comprehension tests?

2. To what extent do the effects of these predictors vary across levels of performance on different reading comprehension tests?

Methods

Participants

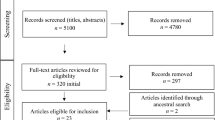

As part of a larger project (Institute of Education Sciences, U.S. Department of Education, Grant R305C120001), data were collected from individuals who attended adult literacy classes that targeted the third through seventh grade reading levels. For the purposes of the current study, we limited the sample to 168 adults who were native English speakers and had completed three reading comprehension measures. The sample included some 16- and 17-year-olds, because anyone who is at least 16 years of age and not attending high school can enroll in an adult literacy program. The mean age was 42.19 years (SD = 14.39). Participants attended adult literacy programs in two major metropolitan areas in the southeastern United States (57.1%) and in Ontario, Canada (42.9%). The major demographic groups were women (71.4%) and individuals who identified as Black or African American (76.8%). About a quarter (24.4%) of the sample reported having a paying job. The majority (73.8%) reported receiving at least one form of government support (e.g., food assistance, disability assistance). More detailed demographic information is reported in Table 1.

Procedure

Trained graduate research assistants administered measures of reading comprehension, decoding, oral vocabulary, fluency, listening comprehension, background knowledge, and inferencing to participants at their adult literacy program sites. Test administration was started at easier items to reduce testing anxiety. Basal and ceiling rules were followed. Each item was scored as correct or incorrect according to the scoring rules in the publishers’ examiner manuals. All scored measures underwent two additional rounds of checking. In addition to completing literacy measures, participants also answered questions about their demographic backgrounds and computer experience.

Measures

Reading comprehension

All participants completed three measures of reading comprehension.

WJ Passage Comprehension

One of the reading comprehension measures was the Passage Comprehension subtest of the Woodcock–Johnson III Normative Update (WJ; Woodcock, McGrew, & Mather, 2007). The items in the WJ Passage Comprehension (WJ-PC) subtest were connected texts consisting of one or two sentences with missing words indicated by blanks. The participant silently read each item and filled in the blank by speaking the missing word out loud. Administration started at Item 14. This measure was standardized on individuals 2 years old to over 80 years old and the internal reliability estimates ranged from 0.73 to 0.96 (McGrew, Schrank, & Woodcock, 2007).

RISE Reading Comprehension

Another reading comprehension measure was the Reading Comprehension subtest of the Reading Inventory and Scholastic Assessment (RISE), a Web-based assessment battery developed by the Educational Testing Service (Sabatini, Bruce, Steinberg, & Weeks, 2015). On the RISE Reading Comprehension (RISE-RC), the participant was instructed to read passages and answer multiple-choice questions about each passage with the passage still in view. All questions included three answer choices and the participant selected the answer by pressing the 1, 2, or 3 keys on the keyboard.

We used a version of the RISE battery that had been previously used extensively with adolescent readers. Internal reliability estimates on RISE-RC ranged from 0.72 to 0.82 for students in the sixth through eighth grades (Sabatini et al., 2015). The current study was the first to administer this version of the RISE to struggling adult readers. Each adult was administered one of two test forms. Therefore, their RISE-RC performance was analyzed using Scale Scores instead of raw scores. The IRT-based Scale Scores provide a way to link the two forms and ensure that all examinees’ performance is on a common scale (Sabatini et al., 2015).

RAPID Reading Comprehension

The third reading comprehension measure was the Reading Comprehension subtest of the Reading Assessment for Prescriptive Instructional Data (RAPID), a Web-based assessment battery developed by Lexia Learning (Foorman, Petscher, & Schatschneider, 2017). On the RAPID Reading Comprehension (RAPID-RC), the participant was instructed to read passages and answer multiple-choice questions about each passage with the passage still in view. All questions included four answer choices and the participant selected the answer using mouse clicks. Because the RAPID-RC is an adaptive test, not all participants were administered the same passages. Each participant’s starting passage was determined by performance on the other RAPID subtests, and administration continued until a precise estimate of performance was reached or the participant completed three passages.

To our knowledge, the current study was the first to administer the RAPID battery to struggling adult readers. Internal reliability estimates on RAPID-RC ranged from 0.83 to 0.93 for students in the third through ninth grades (Foorman et al., 2017). As recommended in the technical manual, the RAPID-RC Performance Scores were used for analyses (Foorman et al., 2017).

Decoding

WJ Word Attack

In the WJ Word Attack subtest, the participant read pseudowords out loud. Administration started at Item 4. This measure was standardized on individuals 4 years old to over 80 years old and the internal reliability estimates ranged from 0.78 to 0.94 (McGrew et al., 2007).

WJ Letter-Word Identification

In the WJ Letter-Word Identification subtest, the participant read real words out loud. Administration started at Item 33. This measure was standardized on individuals 4 years old to over 80 years old and the internal reliability estimates ranged from 0.88 to 0.99 (McGrew et al., 2007).

Challenge Word Test

In the Challenge Word Test (Lovett et al., 1994, 2000), the participant read aloud real words that contain multiple syllables. Administration started at Item 1. Because this is an experimental measure, no standardization information is available.

Oral vocabulary

WJ Picture Vocabulary

In the WJ Picture Vocabulary subtest, the participant looked at pictures and named the depicted objects or actions. Administration started at Item 15. This measure was standardized on individuals 2 years old to over 80 years old and the internal reliability estimates ranged from 0.70 to 0.93 (McGrew et al., 2007).

Fluency

WJ Reading Fluency

In the WJ Reading Fluency subtest, the participant was given a list of statements printed on paper and given 3 min to silently read as many statements as possible, decide if each statement is true or false, and circle “Y” (for “yes”) or “N” (for “no”) next to each statement. These statements were short, isolated sentences (3–11 words long) and consisted of high-frequency words. The technical manual describes WJ Reading Fluency as a measure of reading speed and semantic processing speed (McGrew et al., 2007). Informed by the manual, the item stimuli, and the timed nature of the task, we treated this measure as an assessment of reading efficiency. Administration started at Item 1. This measure was standardized on individuals 6 years old to over 80 years old and the internal reliability estimates ranged from 0.72 to 0.96 (McGrew et al., 2007).

Listening comprehension

CELF Understanding Spoken Paragraphs

In the Understanding Spoken Paragraphs subtest of the Clinical Evaluation of Language Fundamentals IV (CELF; Semel, Wiig, & Secord, 2003a), the participant listened to very short stories and then answered questions about them. Administration started at Item 1. This measure was standardized on individuals 5–21 years old and the internal reliability estimates ranged from 0.54 to 0.81 (Semel et al., 2003b).

Background knowledge

WJ General Information

The WJ General Information subtest had two subscales: Where and What. The examiner asked questions about where one would usually find certain objects and what one would usually do with certain objects, and the participant answered verbally. For both subscales, administration started at Item 1. This measure was standardized on individuals 2 years old to over 80 years old and the internal reliability estimates ranged from 0.82 to 0.96 (McGrew et al., 2007).

Inference

CASL Inference

In the Inference subtest of the Comprehensive Assessment of Spoken Language (CASL; Carrow-Woolfolk, 1999), the examiner read aloud short passages that have missing information and asked a question about the missing information in each item. The participant answered the question using world knowledge or clues in the passage. Administration started at Item 1. This measure was standardized on individuals 7–18 years old and the internal reliability estimates ranged from 0.86 to 0.90 (Carrow-Woolfolk, 2008).

Data analysis strategy

To answer the first research question, OLS regression models were estimated separately for each reading comprehension test. In each model, the dependent variable was reading comprehension and the independent variables were decoding, oral vocabulary, fluency, listening comprehension, background knowledge, and inference. All independent variables were entered simultaneously in a single step, because there were no control variables and because heterogeneous reader profiles have been observed in the struggling adult reader population (MacArthur, Konold, Glutting, & Alamprese, 2012; Mellard, Woods, & Lee, 2016; Talwar, Greenberg, & Li, 2020). Each independent variable was tested for significance at an alpha level of 0.05.
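To make the simultaneous-entry model and the "unique contribution" percentages reported in the Results concrete, the following is a minimal Python/NumPy sketch. It assumes that a predictor's unique contribution is its squared semipartial correlation, that is, the drop in R² when that predictor alone is removed from the full six-predictor model. The data are simulated and the variable names are illustrative; neither comes from the study.

```python
import numpy as np


def r_squared(X, y):
    """R^2 from an OLS fit of y on the columns of X plus an intercept."""
    X1 = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    resid = y - X1 @ beta
    return 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)


def unique_contributions(X, y, names):
    """Enter all predictors in one step, then return the full-model R^2 and,
    for each predictor, the drop in R^2 when it alone is removed
    (its squared semipartial correlation)."""
    r2_full = r_squared(X, y)
    drops = {
        name: r2_full - r_squared(np.delete(X, j, axis=1), y)
        for j, name in enumerate(names)
    }
    return r2_full, drops


# Simulated scores for illustration only; these are not the study's data.
rng = np.random.default_rng(0)
names = ["decoding", "vocabulary", "fluency", "listening", "background", "inference"]
X = rng.normal(size=(168, len(names)))
y = 0.6 * X[:, 0] + 0.2 * X[:, 1] + rng.normal(scale=0.7, size=168)

r2, drops = unique_contributions(X, y, names)
print(f"full-model R^2 = {r2:.2f}")
for name, d in drops.items():
    print(f"{name:>10}: unique R^2 = {d:.3f}")
```

Because all six predictors enter together, each drop reflects only the variance a predictor explains beyond the other five. Variance shared among predictors counts toward the full-model R² but toward no individual drop, which is why unique percentages typically sum to less than the total.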

Prior to estimating OLS regression models, the data were examined to determine whether the assumptions of linear regression were tenable. For each reading comprehension test, scatter plots indicated approximately linear relations with all independent variables. Scatter plots of residuals and fitted values did not exhibit a distinct pattern, which supported the assumption of homoscedasticity. Additionally, residuals appeared to be normally distributed as indicated by Q–Q plots and residual means of approximately zero.
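The checks above were visual. As a rough numeric complement, the sketch below (Python/NumPy, simulated data) computes the residual mean, the correlation underlying a normal Q–Q plot, and the correlation between absolute residuals and fitted values as a crude homoscedasticity screen. These summary numbers are illustrative assumptions, not part of the procedure described above.

```python
import numpy as np
from statistics import NormalDist


def residual_diagnostics(X, y):
    """Fit OLS with an intercept and return three numeric companions to the plots:
    residual mean, Q-Q correlation, and |residual|-vs-fitted correlation."""
    X1 = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    fitted = X1 @ beta
    resid = y - fitted

    # Normal Q-Q: sorted standardized residuals vs. normal quantiles at (i - 0.5) / n.
    n = len(resid)
    z = np.sort((resid - resid.mean()) / resid.std(ddof=1))
    theoretical = np.array([NormalDist().inv_cdf((i - 0.5) / n) for i in range(1, n + 1)])
    qq_corr = np.corrcoef(z, theoretical)[0, 1]

    # Crude heteroscedasticity screen: residual spread should not track fitted values.
    spread_corr = np.corrcoef(np.abs(resid), fitted)[0, 1]
    return resid.mean(), qq_corr, spread_corr


# Simulated, homoscedastic data for illustration only.
rng = np.random.default_rng(1)
X = rng.normal(size=(168, 6))
y = X @ np.array([0.6, 0.2, 0.1, 0.1, 0.1, 0.1]) + rng.normal(size=168)

mean_resid, qq_corr, spread_corr = residual_diagnostics(X, y)
print(f"residual mean = {mean_resid:.2e}, Q-Q r = {qq_corr:.3f}, spread r = {spread_corr:.3f}")
```

With an intercept in the model, the residual mean is zero by construction (up to rounding), so that particular check mainly guards against an omitted intercept; the Q–Q correlation and the spread correlation carry more information.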

To answer the second research question, quantile regression models were estimated separately for each reading comprehension test using the quantreg package (Koenker, 2020) in the R statistical environment (R Core Team, 2020). As in the OLS regression model, the dependent variable was reading comprehension and the independent variables were decoding, oral vocabulary, fluency, listening comprehension, background knowledge and inference. Model parameters were estimated at the 0.1, 0.5, and 0.9 quantiles, which correspond to low, average, and high levels of reading comprehension within the sample (Hua & Keenan, 2017). Additionally, between-quantile slope comparisons were conducted across the 0.1, 0.5, and 0.9 quantiles for each model.
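The analysis itself used R's quantreg package. To show what a quantile regression fit involves, the sketch below restates it in Python as the linear program of Koenker and Bassett (1978), solved with SciPy's `linprog` using the HiGHS solver. The article does not specify how the between-quantile slope comparisons were computed, so this sketch swaps in a simple paired bootstrap of the slope difference; that test is an assumption, not the authors' procedure. The data are simulated so that the slope of the first predictor grows with the quantile.

```python
import numpy as np
from scipy.optimize import linprog
from statistics import NormalDist


def quantile_fit(X, y, tau):
    """Linear quantile regression as an LP: minimize
    sum(tau * u_pos + (1 - tau) * u_neg) s.t. X1 @ beta + u_pos - u_neg = y."""
    n = len(y)
    X1 = np.column_stack([np.ones(n), X])
    p = X1.shape[1]
    cost = np.concatenate([np.zeros(p), np.full(n, tau), np.full(n, 1.0 - tau)])
    A_eq = np.hstack([X1, np.eye(n), -np.eye(n)])
    bounds = [(None, None)] * p + [(0, None)] * (2 * n)
    res = linprog(cost, A_eq=A_eq, b_eq=y, bounds=bounds, method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return res.x[:p]  # intercept, then one slope per column of X


def compare_slopes(X, y, j, tau_a, tau_b, n_boot=200, seed=0):
    """Difference in the slope of predictor j between two quantiles, with a
    paired-bootstrap standard error and a two-sided normal-approximation p value."""
    rng = np.random.default_rng(seed)
    n = len(y)
    diff = quantile_fit(X, y, tau_b)[j + 1] - quantile_fit(X, y, tau_a)[j + 1]
    boot = []
    for _ in range(n_boot):
        idx = rng.integers(0, n, size=n)  # one resample, reused for both quantiles
        boot.append(quantile_fit(X[idx], y[idx], tau_b)[j + 1]
                    - quantile_fit(X[idx], y[idx], tau_a)[j + 1])
    se = float(np.std(boot, ddof=1))
    p_value = 2 * (1 - NormalDist().cdf(abs(diff) / se))
    return diff, se, p_value


# Simulated data: the slope of column 0 rises with tau because the noise scale grows with it.
rng = np.random.default_rng(2)
X = rng.uniform(0, 2, size=(168, 2))
y = 0.5 * X[:, 0] + (0.2 + 0.5 * X[:, 0]) * rng.normal(size=168)

for tau in (0.1, 0.5, 0.9):
    print(tau, np.round(quantile_fit(X, y, tau), 2))
diff, se, p = compare_slopes(X, y, j=0, tau_a=0.1, tau_b=0.9, n_boot=50)
print(f"slope(0.9) - slope(0.1) = {diff:.2f} (SE {se:.2f}, p = {p:.3g})")
```

Refitting both quantiles on the same resample keeps the two slope estimates paired, so the bootstrap spread reflects their covariance rather than treating them as independent.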

Results

The reliability and mean performance of the current sample are reported in Table 2. Reliability for WJ-PC was estimated at 0.81. Reliability for RISE-RC was estimated at 0.63, which is similar to the estimate of 0.60 reported for fifth grade students but lower than the estimates for middle school students (Sabatini et al., 2015). Reliability could not be computed for RAPID-RC because we did not have access to item-level data from this adaptive assessment.
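The article does not state which reliability coefficient was computed. A common choice for item-level data is Cronbach's alpha, which is equivalent to KR-20 when items are scored 0/1; the sketch below assumes that coefficient. It also illustrates why no estimate was possible for RAPID-RC: the formula needs a complete examinee-by-item score matrix, which was not available for that adaptive test.

```python
from statistics import pvariance


def cronbach_alpha(items):
    """Cronbach's alpha. `items` holds one list of scores per item, with
    examinees in the same order in every list."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # each examinee's total score
    sum_item_var = sum(pvariance(item) for item in items)
    return k / (k - 1) * (1 - sum_item_var / pvariance(totals))


# Two identical items: perfectly consistent responses.
print(cronbach_alpha([[1, 0, 1, 0], [1, 0, 1, 0]]))  # 1.0
# Two unrelated items: no internal consistency.
print(cronbach_alpha([[1, 0, 1, 0], [1, 1, 0, 0]]))  # 0.0
```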

Correlations across measures are reported in Table 3. The correlation coefficients among WJ Letter-Word Identification, WJ Word Attack, and the Challenge Word Test ranged from 0.79 to 0.90. Due to these strong relationships, a decoding composite was computed for subsequent analyses by taking the mean of z-scores on these measures.
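A minimal sketch of the decoding composite: standardize each measure within the sample, then average the three z-scores for each participant. It assumes complete data and the sample standard deviation (n - 1 denominator); the article does not say how missing scores or the SD denominator were handled, and the scores below are hypothetical.

```python
from statistics import mean, stdev


def z_scores(scores):
    """Standardize within the sample (sample SD, n - 1 denominator)."""
    m, s = mean(scores), stdev(scores)
    return [(x - m) / s for x in scores]


def composite(*measures):
    """Per-participant mean of z-scores; each measure lists participants in the same order."""
    standardized = [z_scores(m) for m in measures]
    return [mean(person) for person in zip(*standardized)]


# Hypothetical raw scores for three participants on three decoding measures.
letter_word = [40, 50, 60]
word_attack = [15, 20, 25]
challenge_words = [5, 9, 10]
print(composite(letter_word, word_attack, challenge_words))
```

Standardizing first puts the three tests on a common scale, so a measure with a larger raw-score range does not dominate the average.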

Computer experience

Since RISE-RC and RAPID-RC are administered on computers, it was important to examine whether participants’ performance on these assessments was related to their computer experience. All but two participants indicated that they had used a computer before. Of those who had used a computer, approximately 41% said that they use a computer every day, 31% a few times a week, 13% once a week, and 15% less than once a week. One-way ANOVAs indicated no significant differences among these respondent groups on RISE-RC (F(3,162) = 2.59, p > 0.05) or RAPID-RC (F(3,162) = 2.01, p > 0.05). Additionally, participants reported the number of hours they usually use a computer per day, which ranged from 0 to 18 h, with a mean of 2.93 h (SD = 3.19). Number of hours of computer use was not significantly correlated with scores on either test (ps > 0.05). Since computer experience did not appear to be related to performance on RISE-RC and RAPID-RC, it was not included as a covariate in any subsequent analyses.
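The degrees of freedom reported for these ANOVAs follow from the design: four usage-frequency groups give k - 1 = 3, and the 166 respondents who had used a computer (168 minus the two who had not) give N - k = 162. The sketch below computes a one-way ANOVA F statistic from scratch on hypothetical scores; in practice a library routine such as SciPy's `scipy.stats.f_oneway` would normally be used.

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of score lists."""
    scores = [x for g in groups for x in g]
    grand_mean = mean(scores)
    group_means = [mean(g) for g in groups]
    k, n = len(groups), len(scores)
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical scores for two small groups.
print(one_way_anova([[1, 2, 3], [4, 5, 6]]))  # (13.5, 1, 4)
```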

Research Question 1: What are the joint and unique contributions of reading-related skills to performance on different reading comprehension tests?

The parameter estimates of the OLS regression model for each reading comprehension test are reported in Table 4.

OLS regression model for WJ Passage Comprehension

The model explained 66% of variance in WJ-PC performance (F(6,153) = 48.99, p < 0.001). Decoding, oral vocabulary, fluency, background knowledge, and inference had significant unique effects on reading comprehension scores. Approximately 10% of the reading comprehension variance was uniquely contributed by decoding, 2% by fluency, 2% by inference, 1% by background knowledge, and 1% by oral vocabulary.

OLS regression model for RISE Reading Comprehension

The same model was estimated for RISE-RC and explained 31% of variance in reading comprehension performance (F(6,153) = 11.65, p < 0.001). Only decoding had a significant unique effect on reading comprehension scores and uniquely contributed 12% of the reading comprehension variance.

OLS regression model for RAPID Reading Comprehension

Finally, the OLS regression model was estimated for RAPID-RC and explained 43% of variance in reading comprehension performance (F(6,153) = 19.09, p < 0.001). Decoding and listening comprehension had significant unique effects on reading comprehension scores. Approximately 14% of the reading comprehension variance was uniquely contributed by decoding and 4% was uniquely contributed by listening comprehension.
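The omnibus F tests for the three OLS models can be checked against the reported R² values. For a model with k predictors and df_resid residual degrees of freedom, F = (R²/k) / ((1 - R²)/df_resid), so R² = F·k / (F·k + df_resid). The short sketch below applies this identity to the three reported F statistics with k = 6 and df_resid = 153; the implied R² values (about 0.658, 0.314, and 0.428) agree with the rounded percentages. Because df_resid = n - k - 1, these degrees of freedom also imply that 160 participants contributed to each model.

```python
def f_from_r2(r2, k, df_resid):
    """Omnibus F for an OLS model with k predictors."""
    return (r2 / k) / ((1 - r2) / df_resid)


def r2_from_f(f_stat, k, df_resid):
    """Invert the omnibus F to recover R^2."""
    return f_stat * k / (f_stat * k + df_resid)


reported_f = {"WJ-PC": 48.99, "RISE-RC": 11.65, "RAPID-RC": 19.09}
for test, f_stat in reported_f.items():
    print(f"{test}: implied R^2 = {r2_from_f(f_stat, k=6, df_resid=153):.3f}")
```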

Research Question 2: Do the effects of predictors vary across levels of performance on each reading comprehension test?

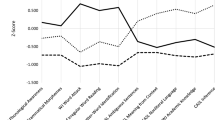

The quantile regression estimates are reported in Table 5.

Quantile regression model for WJ Passage Comprehension

For WJ-PC scores, the quantile regression model explained approximately 43% of the variance at the 0.1 quantile, 42% at the 0.5 quantile, and 49% at the 0.9 quantile. At the 0.1 and 0.5 quantiles, significant predictors were limited to decoding and oral vocabulary. At the 0.9 quantile, the significant predictors were decoding and background knowledge. Between-quantile slope comparisons indicated that the unique effect of decoding was stable across the 0.1, 0.5, and 0.9 quantiles of WJ-PC performance (ps > 0.05). The effect of oral vocabulary on reading comprehension was greater at the 0.5 quantile than at the 0.9 quantile (p = 0.022). Additionally, the effect of background knowledge on reading comprehension was greater at the 0.9 quantile than at the 0.1 quantile (p < 0.001).
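The article does not name the statistic behind the "variance explained" figures for the quantile models, and OLS R² does not carry over directly to quantile regression. One widely used analogue, assumed here, is Koenker and Machado's (1999) R1, which compares the check-function loss of the fitted model with that of an intercept-only model at the same quantile. A minimal pure-Python sketch:

```python
def check_loss(residual, tau):
    """Koenker-Bassett check function rho_tau(r)."""
    return tau * residual if residual >= 0 else (tau - 1) * residual


def pseudo_r1(y, fitted, tau):
    """R1 = 1 - V(full model) / V(intercept-only model) at quantile tau."""
    v_full = sum(check_loss(yi - fi, tau) for yi, fi in zip(y, fitted))
    # The best constant is a sample tau-quantile, which is always one of the
    # observed values, so searching over y finds the intercept-only minimum.
    v_null = min(sum(check_loss(yi - c, tau) for yi in y) for c in y)
    return 1 - v_full / v_null


y = [1, 2, 3, 4, 5]
print(pseudo_r1(y, fitted=y, tau=0.5))        # 1.0: perfect fit
print(pseudo_r1(y, fitted=[3] * 5, tau=0.5))  # 0.0: no better than the median
```

R1 measures fit at a single quantile rather than a share of total variance, so values computed at the 0.1, 0.5, and 0.9 quantiles describe local fit at each location.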

Quantile regression model for RISE Reading Comprehension

For RISE-RC scores, the quantile regression model explained approximately 15% of the variance at the 0.1 quantile, 17% at the 0.5 quantile, and 23% at the 0.9 quantile. At the 0.1 and 0.5 quantiles, decoding emerged as the only significant predictor of reading comprehension. At the 0.9 quantile, the significant predictors were decoding and background knowledge. Between-quantile slope comparisons indicated that the effect of decoding on reading comprehension was stable across quantiles (ps > 0.05), while the effect of background knowledge increased in magnitude from the 0.5 quantile to the 0.9 quantile (p = 0.039).

Quantile regression model for RAPID Reading Comprehension

For RAPID-RC scores, the quantile regression model explained approximately 14% of the variance at the 0.1 quantile, 28% at the 0.5 quantile, and 35% at the 0.9 quantile. At the 0.1 and 0.5 quantiles, decoding was the only significant predictor of reading comprehension. At the 0.9 quantile, decoding and listening comprehension emerged as significant predictors. Between-quantile slope comparisons indicated that the effect of decoding on reading comprehension increased in magnitude from the 0.1 quantile to the 0.5 quantile (p = 0.006), from the 0.1 quantile to the 0.9 quantile (p < 0.001), and from the 0.5 quantile to the 0.9 quantile (p = 0.003). Additionally, the effect of listening comprehension was greater at the 0.9 quantile than at the 0.1 quantile (p = 0.040) of reading comprehension.

Discussion

The aim of this study was to examine the shared and unique explanatory effects of reading-related competencies on reading comprehension performance across different tests and proficiency levels for a sample of adults who struggle with reading. Based on the SVR and DIME frameworks, we focused on the constructs of decoding, oral vocabulary, listening comprehension, fluency, background knowledge, and inference. The results suggest that the effects of certain competencies on reading comprehension were influenced by how comprehension was assessed. Furthermore, the magnitude of some effects changed across levels of performance. The only common finding across tests was that decoding made the largest unique contribution to reading comprehension performance.

Differential effects across tests

Similar to research with children (Cutting & Scarborough, 2006; Keenan et al., 2008), the current study found differences in predictor-comprehension relations across reading comprehension tests. With the exception of listening comprehension, all the other predictors made significant unique contributions to WJ-PC performance. Significant contributors to reading comprehension performance were limited to decoding and listening comprehension on RAPID-RC and to decoding alone on RISE-RC. Although decoding contributed significantly to performance on all three measures, the proportion of variance explained by decoding varied across measures. Likewise, the total variance explained in reading comprehension performance differed across measures (66% for WJ-PC, 43% for RAPID-RC, and 31% for RISE-RC).

There are a few possible explanations for the differential findings, which are rooted in method differences between WJ-PC and the computerized tests. First, given the multiple-choice format of the RISE-RC and RAPID-RC, an examinee could conceivably answer some questions correctly through informed guessing, without engaging in the subprocesses of deep comprehension (Coleman et al., 2010; Katz et al., 1990; Keenan & Betjemann, 2006). Second, the tests differ in response modality. To respond to an item on RISE-RC and RAPID-RC, the examinee has to click a mouse or press a key on a keyboard. In contrast, the items on WJ-PC require an oral response, which is similar to the response requirements of seven of the eight tests that were used to measure the predictor competencies, including the decoding tests. Examinees may behave differently, and may draw on different competencies, on a test that involves interaction with a human examiner than on a test taken silently at a computer. Third, the reading comprehension measures differed in the length of text stimuli: the WJ-PC featured short passages consisting of one or two sentences, whereas the RISE-RC and the RAPID-RC included longer texts. Word-level processing, as indexed by the decoding measures, may be more important for shorter passages, whereas longer texts may require competencies that were not measured in this study, such as working memory.

Despite these differential findings, the models for all three reading comprehension tests emphasize the importance of decoding to struggling adult readers. Decoding was the strongest predictor of success across tests regardless of administration mode and question format. Research with children in elementary grades indicates that much of the variance in reading comprehension at that developmental stage is carried by decoding skills (e.g., Hoover & Gough, 1990; Lonigan et al., 2018). Similarly, decoding influenced reading comprehension performance more than higher-level competencies for our sample of adults with elementary-level skills. Overall, the current adult sample demonstrated skill associations that are more reflective of developing young readers than proficient adult readers.

Differential effects across proficiency levels

A novel feature of our research design was the evaluation of predictors at low, average, and high levels of reading comprehension performance among struggling adult readers. These proficiency labels are relative to this particular sample; all of these adults would be classified as having reading difficulties. Although a handful of prior studies with children have used quantile regression to examine how the relations between reading-related skills and reading outcomes vary across performance levels (e.g., Cho et al., 2018; Hua & Keenan, 2017; Language and Reading Research Consortium [LARRC] & Logan, 2017), similar work in the adult literacy context is very limited (Tighe & Schatschneider, 2016).
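Quantile regression (Koenker & Bassett, 1978; Koenker & Hallock, 2001) estimates a separate set of coefficients for each conditional quantile τ of the outcome by minimizing the summed check loss ρ_τ(u) = u(τ - 1[u < 0]) of the residuals, instead of the sum of squared residuals minimized in OLS. The Python sketch below is only an illustration of that idea under stated assumptions; it is not the analysis code for this study (the references list R and the quantreg package). It assumes a single predictor, uses a brute-force search that relies on the property that some optimal line passes through two observations, and runs on a small hypothetical data set in which the spread of scores widens as the predictor increases.

```python
from itertools import combinations


def check_loss(residual: float, tau: float) -> float:
    """Check (pinball) loss rho_tau(u) = u * (tau - 1[u < 0])."""
    return residual * (tau - (1.0 if residual < 0 else 0.0))


def total_loss(xs, ys, intercept, slope, tau):
    """Sum of check losses for the line y = intercept + slope * x."""
    return sum(check_loss(y - (intercept + slope * x), tau) for x, y in zip(xs, ys))


def fit_quantile_line(xs, ys, tau):
    """Brute-force quantile regression with one predictor (illustration only).

    Some optimal solution of the underlying linear program interpolates at
    least two observations, so every line through a pair of points with
    distinct x values is evaluated and the one with the lowest loss is kept.
    Returns (intercept, slope).
    """
    best = None
    for (x1, y1), (x2, y2) in combinations(zip(xs, ys), 2):
        if x1 == x2:
            continue  # a vertical line cannot be written as y = a + b * x
        slope = (y2 - y1) / (x2 - x1)
        intercept = y1 - slope * x1
        loss = total_loss(xs, ys, intercept, slope, tau)
        if best is None or loss < best[0]:
            best = (loss, intercept, slope)
    if best is None:
        raise ValueError("need at least two distinct x values")
    return best[1], best[2]


# Hypothetical fan-shaped data: at each predictor value x, scores of 0, x, and 2x.
xs = [x for x in range(1, 6) for _ in range(3)]
ys = [k * x for x in range(1, 6) for k in (0, 1, 2)]

for tau in (0.1, 0.9):
    intercept, slope = fit_quantile_line(xs, ys, tau)
    print(f"tau={tau}: intercept={intercept}, slope={slope}")
# prints "tau=0.1: intercept=0.0, slope=0.0"
# prints "tau=0.9: intercept=0.0, slope=2.0"
```

In this toy example the predictor has no effect at the low quantile and a slope of 2 at the high quantile, which is the kind of pattern the between-quantile comparisons in this study were designed to detect. The exhaustive pair search grows roughly cubically with sample size, so practical software such as quantreg relies on linear-programming algorithms instead.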

Several findings concern the stability of predictors across levels of reading comprehension proficiency. First, the effect of oral vocabulary decreased between average and high levels of WJ-PC performance. This declining importance of oral vocabulary echoes a trend observed by Ahmed et al. (2016) in their investigation of the DIME model with adolescent readers in grades 7 through 12: as grade level increased in their sample, oral vocabulary had a progressively smaller effect on reading comprehension. This pattern is not surprising, because more proficient readers are adept at activating and integrating word meanings during text processing (Perfetti & Stafura, 2014), which would be expected to reduce the influence of word-level semantic representations on comprehension.

Second, the effect of background knowledge increased between low and high levels of performance on both WJ-PC and RISE-RC. Poor readers tend to have gaps in the knowledge domains generally covered in formal education (Strucker, 2013) and may be less likely to connect their prior knowledge to what they are reading. It is therefore not surprising that background knowledge was related to reading comprehension only at the 0.9 quantile on both tests. These results add nuance to past work with struggling adult readers reporting that background knowledge makes a unique contribution to reading comprehension scores (Talwar et al., 2018): this effect appears to exist only for relatively stronger comprehenders.

Third, the effect of decoding increased consistently across low, average, and high levels of RAPID-RC performance. This trend was not observed for WJ-PC or RISE-RC, on which decoding was a stable predictor of reading comprehension across proficiency levels. One possible explanation is that the adaptive algorithm of the RAPID amplifies the influence of word reading skills on comprehension performance: the starting passage administered to each examinee is determined by the examinee’s performance on the other RAPID subtests, one of which measures word recognition.

Finally, the effect of listening comprehension increased between low and high levels of RAPID-RC performance. This finding mirrors a trend reported in cross-sectional research with children and adolescents: as readers’ proficiency increases, listening comprehension accounts for a larger share of the variance in reading comprehension (Tilstra et al., 2009; Vellutino, Tunmer, Jaccard, & Chen, 2007). Because listening comprehension was a significant predictor of reading comprehension only at the 0.9 quantile, it appears that the adaptive algorithm did not unduly inflate this relationship.

Overall, the quantile regression results imply that oral language skills and higher-order competencies matter more at higher levels of reading comprehension performance. Adults with greater comprehension proficiency are also likely to have stronger decoding skills. For these more proficient readers, decoding may be a reliable competency that does not draw heavily on available mental resources, allowing the reader to focus on higher-level processing of text (Kendeou, van den Broek, Helder, & Karlsson, 2014; Perfetti & Hart, 2002).

Implications for assessment and instruction

Our findings suggest that different reading comprehension assessments do not target the same underlying construct for struggling adult readers. This pattern has also been observed with other tests, including those commonly administered in Adult Basic Education programs (Mellard et al., 2015; Tighe et al., 2017). Although such work is recent in the adult literacy context, comparable findings have been reported with child samples for at least two decades (Cutting & Scarborough, 2006; Keenan et al., 2008; Nation & Snowling, 1997). The format, modality, and intended purpose of a reading comprehension test should therefore be considered when evaluating adults for educational progress or for research purposes.

The findings also support delivering more targeted instruction in adult literacy programs. Instruction in both vocabulary and background knowledge has yielded gains in reading skills for children and adolescents (e.g., Dole, Valencia, Greer, & Wardrop, 1991; Scammacca, Roberts, Vaughn, & Stuebing, 2015), yet the quantile regression results suggest that emphasizing both areas may not be appropriate for all adult learners. Vocabulary instruction may be most beneficial for lower-level readers and can be interwoven with lessons on parsing new words and using contextual clues to infer word meanings (Bromley, 2007). Higher-skilled readers may benefit more from instruction in general and academic knowledge, which can improve their comprehension of academic texts (Ozuru, Dempsey, & McNamara, 2009) and better prepare them for high school equivalency tests (Strucker, 2013).

Limitations and future research

A caveat commonly noted in research with struggling adult readers is the heterogeneity of this population. Our results are likely applicable only to adults who are native speakers of English and who attend classes targeting third- through seventh-grade reading levels. Future research should examine the predictors of reading comprehension across assessments and proficiency levels with more skilled adults and with adults who are not native speakers of English. Some limited evidence suggests that the explanatory effects of certain competencies may differ in such populations (Herman, Cote, Reilly, & Binder, 2013; To, Tighe, & Binder, 2016).

Because of the ad hoc nature of this study, its design was shaped by the measures available from the larger project. The data analyzed here were collected at a single time point, so any inferences about important predictors of reading comprehension rest on correlations among observed variables. Many measures came from the WJ III Normative Update battery, which may have inflated their associations with WJ-PC scores. Additionally, WJ-PC differed from RISE-RC and RAPID-RC in modality (human-administered vs. computer-administered), question and response format (short oral response vs. multiple-choice), and text stimulus length (short vs. long passages). Because these differences co-occur, it is difficult to attribute the differential results to any single test characteristic.

Some of the measures used in the study were not normed on adults over the age of 21 years, which is potentially problematic because some child-normed tests may not function as expected for adult examinees (Nanda et al., 2014). In addition, the reliability of RISE-RC scores was low in our sample (0.63), and reliability could not be estimated for RAPID-RC. These reliability issues raise the possibility that one or both of these measures did not provide an accurate account of reading comprehension for the sample. Unfortunately, assessments specifically normed on struggling adult readers are scarce (Nanda et al., 2014; Tighe, 2019). Until this problem is remedied, research with this population should include multiple measures of key constructs and model latent factors, which would better account for measurement error.

In the analyses for the current study, we estimated separate OLS regression models for the three reading comprehension assessments. A multivariate regression approach, in which the three outcomes are modeled jointly, would also have been appropriate and statistically sound. We opted for separate models to allow for comparable quantile regression analyses.

A consideration for future research is the assessment of different types of background knowledge in relation to reading comprehension. The current study followed the measurement approach used in prior research with struggling adult readers, which is to administer assessments of general background knowledge spanning broad domains (Strucker & Davidson, 2003; Talwar et al., 2018). In contrast, researchers studying school-age and college readers typically measure specific knowledge that pertains to the content of the texts used to assess reading comprehension (e.g., Ahmed et al., 2016; Ozuru et al., 2009). Recent work with high school students indicates that general and topic-specific background knowledge exhibit differential explanatory effects on reading comprehension performance (McCarthy et al., 2018). Thus, it is important to explore different ways of assessing background knowledge with struggling adult readers and to evaluate these knowledge types in terms of their predictive utility for reading comprehension and their suitability as targets of instructional interventions.

An important future direction that arises from the current findings involves the computerized testing of reading comprehension with struggling adult readers. Although our results showed that responses to questions about computer experience were not related to performance on RAPID-RC and RISE-RC, it is possible that participants’ self-reports of computer experience were not accurate. This potential relationship between computer familiarity and test performance should be probed with behavioral measures of computer skills, such as the Northstar Digital Literacy Assessments (Minnesota Literacy Council, 2018). Additionally, the field will benefit from a test modality comparison study with struggling adult readers in which the same reading comprehension passages are administered on paper and on a screen (e.g., Mangen, Walgermo, & Brønnick, 2013). Furthermore, it would be valuable to investigate whether adaptive tests like the RAPID function appropriately for this population in terms of item difficulty and progression rules (Greenberg, Pae, Morris, Calhoon, & Nanda, 2009).

References

Aaron, P. G., Joshi, R. M., Gooden, R., & Bentum, K. E. (2008). Diagnosis and treatment of reading disabilities based on the component model of reading: An alternative to the discrepancy model of LD. Journal of Learning Disabilities, 41(1), 67–84.

Achtyes, E. D., Halstead, S., Smart, L., Moore, T., Frank, E., Kupfer, D. J., & Gibbons, R. (2015). Validation of computerized adaptive testing in an outpatient nonacademic setting: The VOCATIONS trial. Psychiatric Services, 66(10), 1091–1096.

Ahmed, Y., Francis, D. J., York, M., Fletcher, J. M., Barnes, M., & Kulesz, P. (2016). Validation of the direct and inferential mediation (DIME) model of reading comprehension in grades 7 through 12. Contemporary Educational Psychology, 44, 68–82.

Bodmann, S. M., & Robinson, D. H. (2004). Speed and performance differences among computer-based and paper-pencil tests. Journal of Educational Computing Research, 31(1), 51–60.

Braze, D., Katz, L., Magnuson, J. S., Mencl, W. E., Tabor, W., Van Dyke, J. A., et al. (2016). Vocabulary does not complicate the simple view of reading. Reading and Writing: An Interdisciplinary Journal, 29(3), 435–451.

Bromley, K. (2007). Nine things every teacher should know about words and vocabulary instruction. Journal of Adolescent & Adult Literacy, 50(7), 528–537.

Carrow-Woolfolk, E. (1999). Comprehensive assessment of spoken language. Bloomington, MN: Pearson Assessments.

Carrow-Woolfolk, E. (2008). Comprehensive assessment of spoken language: Manual. Torrance, CA: Western Psychological Services.

Cho, E., Capin, P., Roberts, G., & Vaughn, S. (2018). Examining predictive validity of oral reading fluency slope in upper elementary grades using quantile regression. Journal of Learning Disabilities, 51(6), 565–577.

Clinton, V. (2019). Reading from paper compared to screens: A systematic review and meta-analysis. Journal of Research in Reading, 42(2), 288–325.

Coleman, C., Lindstrom, J., Nelson, J., Lindstrom, W., & Gregg, K. N. (2010). Passageless comprehension on the Nelson–Denny reading test: Well above chance for university students. Journal of Learning Disabilities, 43(3), 244–249.

Comings, J., & Soricone, L. (2007). Adult literacy research: Opportunities and challenges (NCSALL occasional paper). Cambridge, MA: National Center for the Study of Adult Learning and Literacy. Retrieved July 2, 2020, from https://eric.ed.gov/?id=ED495439.

Comprehensive Adult Student Assessment System. (2004). CASAS technical manual. San Diego, CA: Author.

Cromley, J. G., & Azevedo, R. (2007). Testing and refining the direct and inferential mediation model of reading comprehension. Journal of Educational Psychology, 99(2), 311–325.

CTB/McGraw-Hill. (1996). Test of adult basic education. Monterey, CA: Author.

Cutting, L. E., & Scarborough, H. S. (2006). Prediction of reading comprehension: Relative contributions of word recognition, language proficiency, and other cognitive skills can depend on how comprehension is measured. Scientific Studies of Reading, 10(3), 277–299.

Davino, C., Furno, M., & Vistocco, D. (2014). Quantile regression: Theory and applications. West Sussex: Wiley.

Dole, J. A., Valencia, S. W., Greer, E. A., & Wardrop, J. L. (1991). Effects of two types of prereading instruction on the comprehension of narrative and expository text. Reading Research Quarterly, 26(2), 142–159.

Foorman, B. R., Petscher, Y., & Schatschneider, C. (2017). Reading assessment for prescriptive instructional data. Concord, MA: Lexia Learning.

Frijters, J. C., Tsujimoto, K. C., Boada, R., Gottwald, S., Hill, D., Jacobson, L. A., et al. (2018). Reading-related causal attributions for success and failure: Dynamic links with reading skill. Reading Research Quarterly, 53(1), 127–148.

Gough, P. B., & Tunmer, W. E. (1986). Decoding, reading, and reading disability. Remedial and Special Education, 7(1), 6–10.

Graesser, A. C., Singer, M., & Trabasso, T. (1994). Constructing inferences during narrative text comprehension. Psychological Review, 101(3), 371–395.

Greenberg, D., Levy, S. R., Rasher, S., Kim, Y., Carter, S. D., & Berbaum, M. L. (2010). Testing adult basic education students for reading ability and progress: How many tests to administer? Adult Basic Education and Literacy Journal, 4(2), 96–103.

Greenberg, D., Pae, H. K., Morris, R. D., Calhoon, M. B., & Nanda, A. O. (2009). Measuring adult literacy students’ reading skills using the Gray Oral Reading Test. Annals of Dyslexia, 59(2), 133–149.

Hannon, B. (2012). Understanding the relative contributions of lower-level word processes, higher-level processes, and working memory to reading comprehension performance in proficient adult readers. Reading Research Quarterly, 47(2), 125–152.

Herman, J., Cote, N. G., Reilly, L., & Binder, K. S. (2013). Literacy skill differences between adult native English and native Spanish speakers. Journal of Research and Practice for Adult Literacy, Secondary, and Basic Education, 2(3), 142–155.

Hoover, W. A., & Gough, P. B. (1990). The simple view of reading. Reading and Writing: An Interdisciplinary Journal, 2(2), 127–160.

Hua, A. N., & Keenan, J. M. (2017). Interpreting reading comprehension test results: Quantile regression shows that explanatory factors can vary with performance level. Scientific Studies of Reading, 21(3), 225–238.

Katz, S., Lautenschlager, G. J., Blackburn, A. B., & Harris, F. H. (1990). Answering reading comprehension items without passages on the SAT. Psychological Science, 1(2), 122–127.

Keenan, J. M. (2016). Assessing the assessments: Reading comprehension tests. Perspectives on Language and Literacy, 42(2), 17.

Keenan, J. M., & Betjemann, R. S. (2006). Comprehending the Gray Oral Reading Test without reading it: Why comprehension tests should not include passage-independent items. Scientific Studies of Reading, 10(4), 363–380.

Keenan, J. M., Betjemann, R. S., & Olson, R. K. (2008). Reading comprehension tests vary in the skills they assess: Differential dependence on decoding and oral comprehension. Scientific Studies of Reading, 12(3), 281–300.

Kendeou, P., van den Broek, P., Helder, A., & Karlsson, J. (2014). A cognitive view of reading comprehension: Implications for reading difficulties. Learning Disabilities Research & Practice, 29(1), 10–16.

Kintsch, W. (1988). The role of knowledge in discourse comprehension: A construction-integration model. Psychological Review, 95(2), 163–182.

Kobrin, J. L., & Young, J. W. (2003). The cognitive equivalence of reading comprehension test items via computerized and paper-and-pencil administration. Applied Measurement in Education, 16(2), 115–140.

Koenker, R. (2020). quantreg: Quantile Regression. R package version 5.55. Retrieved April 4, 2020, from https://CRAN.R-project.org/package=quantreg.

Koenker, R., & Bassett, G. W. (1978). Regression quantiles. Econometrica, 46(1), 33–50.

Koenker, R., & Hallock, K. F. (2001). Quantile regression. Journal of Economic Perspectives, 15(4), 143–156.

Language and Reading Research Consortium, & Logan, J. (2017). Pressure points in reading comprehension: A quantile multiple regression analysis. Journal of Educational Psychology, 109(4), 451.

Lesgold, A. M., & Welch-Ross, M. (Eds.). (2012). Improving adult literacy instruction: Options for practice and research. Washington, DC: National Academies Press.

Lonigan, C. J., Burgess, S. R., & Schatschneider, C. (2018). Examining the simple view of reading with elementary school children: Still simple after all these years. Remedial and Special Education, 39(5), 260–273.

Lovett, M. W., Borden, S. L., DeLuca, T., Lacerenza, L., Benson, N. J., & Brackstone, D. (1994). Treating the core deficits of developmental dyslexia: Evidence of transfer of learning after phonologically- and strategy-based reading training programs. Developmental Psychology, 30(6), 805.

Lovett, M. W., Steinbach, K. A., & Frijters, J. C. (2000). Remediating the core deficits of developmental reading disability: A double-deficit perspective. Journal of Learning Disabilities, 33(4), 334–358.

Lu, H., Hu, Y.-P., Gao, J.-J., & Kinshuk. (2016). The effects of computer self-efficacy, training satisfaction and test anxiety on attitude and performance in computerized adaptive testing. Computers & Education, 100, 45–55.

MacArthur, C. A., Konold, T. R., Glutting, J. J., & Alamprese, J. A. (2012). Subgroups of adult basic education learners with different profiles of reading skills. Reading and Writing: An Interdisciplinary Journal, 25, 587–609.

MacGinitie, W. H., MacGinitie, R. K., Maria, K., & Dreyer, L. G. (2000). Gates-MacGinitie Reading Tests IV. Itasca, IL: Houghton Mifflin Harcourt.

Mangen, A., Walgermo, B., & Brønnick, K. (2013). Reading linear texts on paper versus computer screen: Effects on reading comprehension. International Journal of Educational Research, 58, 61–68.

McCarthy, K., Guerrero, T., Kent, K., Allen, L., McNamara, D., Chao, S., et al. (2018). Comprehension in a scenario-based assessment: Domain and topic-specific background knowledge. Discourse Processes, 55(5–6), 510–524.

McGrew, K. S., Schrank, F. A., & Woodcock, R. W. (2007). Woodcock–Johnson III Normative Update: Technical manual. Rolling Meadows, IL: Riverside Publishing.

Mellard, D., Fall, E., & Woods, K. (2010). A path analysis of reading comprehension for adults with low literacy. Journal of Learning Disabilities, 43(2), 154–165.

Mellard, D., Woods, K., Desa, M., & Vuyk, M. (2015). Underlying reading-related skills and abilities among adult learners. Journal of Learning Disabilities, 48(3), 310–322.

Mellard, D. F., Woods, K. L., & Lee, J. H. (2016). Literacy profiles of at-risk young adults enrolled in career and technical education. Journal of Research in Reading, 39(1), 88–108.

Minnesota Literacy Council. (2018). Northstar Digital Literacy Assessments. St. Paul, MN: Author. Retrieved July 2, 2020, from https://www.digitalliteracyassessment.org/.

Nanda, A. O., Greenberg, D., & Morris, R. D. (2014). Reliability and validity of the CTOPP Elision and Blending Words subtests for struggling adult readers. Reading and Writing: An Interdisciplinary Journal, 27(9), 1603–1618.

Nation, K., & Snowling, M. (1997). Assessing reading difficulties: The validity and utility of current measures of reading skill. British Journal of Educational Psychology, 67(3), 359–370.

National Center for Education Statistics. (2019). Highlights of the 2017 U.S. PIAAC results web report (NCES 2020-777). Washington, DC: U.S. Department of Education. Retrieved July 2, 2020, from https://nces.ed.gov/surveys/piaac/current_results.asp.

Olney, A., Bakhtiari, D., Greenberg, D., & Graesser, A. C. (2017). Assessing computer literacy of adults with low literacy skills. Proceedings of the 10th International Conference on Educational Data Mining. Wuhan, China.

Ozuru, Y., Dempsey, K., & McNamara, D. S. (2009). Prior knowledge, reading skill, and text cohesion in the comprehension of science texts. Learning and Instruction, 19(3), 228–242.

Pae, H. K., Greenberg, D., & Williams, R. S. (2012). An analysis of differential response patterns on the Peabody Picture Vocabulary Test-IIIB in struggling adult readers and third-grade children. Reading and Writing: An Interdisciplinary Journal, 25(6), 1239–1258.

Perfetti, C., & Hart, L. (2002). The lexical quality hypothesis. In L. Verhoeven, C. Elbro, & P. Reitsma (Eds.), Precursors of functional literacy (Vol. 11, pp. 67–86). Amsterdam: John Benjamins.

Perfetti, C., & Stafura, J. (2014). Word knowledge in a theory of reading comprehension. Scientific Studies of Reading, 18(1), 22–37.

R Core Team. (2020). R: A language and environment for statistical computing. Vienna: R Foundation for Statistical Computing. Retrieved April 4, 2020, from https://www.R-project.org/.

Sabatini, J., Bruce, K., Steinberg, J., & Weeks, J. (2015). RISE reading components tests, RISE forms: Technical adequacy and test design, 2nd edition (Research Report No. RR-15-32). Princeton, NJ: Educational Testing Service. Retrieved July 2, 2020, from https://doi.org/10.1002/ets2.12076.

Sabatini, J. P., Sawaki, Y., Shore, J. R., & Scarborough, H. S. (2010). Relationships among reading skills of adults with low literacy. Journal of Learning Disabilities, 43(2), 122–138.

Scammacca, N. K., Roberts, G., Vaughn, S., & Stuebing, K. K. (2015). A meta-analysis of interventions for struggling readers in grades 4–12: 1980–2011. Journal of Learning Disabilities, 48(4), 369–390.

Semel, E., Wiig, E., & Secord, W. (2003a). Clinical evaluation of language fundamentals IV. San Antonio, TX: Pearson.

Semel, E., Wiig, E., & Secord, W. (2003b). Clinical evaluation of language fundamentals IV: Examiner’s manual. Bloomington, MN: Pearson.

Srivastava, P., & Gray, S. (2012). Computer-based and paper-based reading comprehension in adolescents with typical language development and language-learning disabilities. Language, Speech, and Hearing Services in Schools, 43(4), 424–437.

Strucker, J. (2013). The knowledge gap and adult learners. Perspectives on Language and Literacy, 39(2), 25–28.

Strucker, J., & Davidson, R. K. (2003). Adult reading components study (NCSALL research brief). Cambridge, MA: National Center for the Study of Adult Learning and Literacy. Retrieved July 2, 2020, from https://eric.ed.gov/?id=ED508655.

Talwar, A., Greenberg, D., & Li, H. (2020). Identifying profiles of struggling adult readers: Relative strengths and weaknesses in lower-level and higher-level competencies. Reading and Writing: An Interdisciplinary Journal, 33, 2155–2171.

Talwar, A., Tighe, E. L., & Greenberg, D. (2018). Augmenting the Simple View of Reading for struggling adult readers: A unique role for background knowledge. Scientific Studies of Reading, 22(5), 351–366.

Tighe, E. L. (2019). Integrating component skills in a reading comprehension framework for struggling adult readers. In D. Perin (Ed.), The Wiley handbook of adult literacy (pp. 89–106). Wiley-Blackwell.

Tighe, E. L., & Schatschneider, C. (2016). A quantile regression approach to understanding the relations among morphological awareness, vocabulary, and reading comprehension in adult basic education students. Journal of Learning Disabilities, 49(4), 424–436.

Tighe, E. L., Johnson, A. M., & McNamara, D. S. (2017). Predicting adult literacy students’ reading comprehension: Variations by assessment type [Paper presentation]. American Educational Research Association 101st Annual Meeting, San Antonio, TX, United States.

Tighe, E. L., Little, C. W., Arrastia-Chisholm, M. C., Schatschneider, C., Diehm, E., Quinn, J. M., et al. (2019). Assessing the direct and indirect effects of metalinguistic awareness to the reading comprehension skills of struggling adult readers. Reading and Writing: An Interdisciplinary Journal, 32(3), 787–818.

Tilstra, J., McMaster, K., Van den Broek, P., Kendeou, P., & Rapp, D. (2009). Simple but complex: Components of the simple view of reading across grade levels. Journal of Research in Reading, 32(4), 383–401.

To, N. L., Tighe, E. L., & Binder, K. S. (2016). Investigating morphological awareness and the processing of transparent and opaque words in adults with low literacy skills and in skilled readers. Journal of Research in Reading, 39(2), 171–188.

Tunmer, W. E., & Chapman, J. W. (2012). The simple view of reading redux: Vocabulary knowledge and the independent components hypothesis. Journal of Learning Disabilities, 45(5), 453–466.

Vellutino, F. R., Tunmer, W. E., Jaccard, J. J., & Chen, R. (2007). Components of reading ability: Multivariate evidence for a convergent skills model of reading development. Scientific Studies of Reading, 11(1), 3–32.

Woodcock, R. W. (2011). Woodcock reading mastery tests III. San Antonio, TX: Pearson.

Woodcock, R. W., McGrew, K. S., & Mather, N. (2007). Woodcock–Johnson III normative update. Rolling Meadows, IL: Riverside Publishing.

Acknowledgements

The research reported here is supported by the Institute of Education Sciences, U.S. Department of Education, through Grant R305C120001, Georgia State University. The opinions expressed are those of the authors and do not represent views of the Institute or the U.S. Department of Education.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Talwar, A., Greenberg, D., Tighe, E.L. et al. Examining the reading-related competencies of struggling adult readers: nuances across reading comprehension assessments and performance levels. Read Writ 34, 1569–1592 (2021). https://doi.org/10.1007/s11145-021-10128-7

DOI: https://doi.org/10.1007/s11145-021-10128-7