Abstract

To assess text comprehension and concept mastery, standards-aligned measures have moved from using multiple-choice questions to using source-based writing tasks (sometimes referred to as Reading-to-Write tasks). For example, it is now common for students to be asked to read a text and then to produce a written response, often a summary or argumentative essay. While this task involves comprehension of the source text, it remains unclear the degree to which these reading-to-write tasks also tap additional skills, such as academic language proficiency, which may support or hamper the writer’s ability to convey information acquired from reading. Given the lack of research focused on this question, in this study, we examined whether variability in early adolescents’ Core Academic Language Skills (CALS) contributes to the quality of their written summaries of science source texts. A total of 259 participants in grades four to eight were administered the Core Academic Language Skills Instrument (CALS-I) and the Global Integrated Scenario-Based Assessment (GISA), which included a reading comprehension test and a summary writing task, both based on the same scientific source text. Findings revealed that CALS, previously shown to be associated with reading comprehension, have a robust positive relation with early adolescents’ science summary writing quality, predicting unique variance over and above students’ source text comprehension and demographic characteristics. Results highlight the relevance of paying instructional attention not only to content but also to language skills when preparing students to become independent learners in a content area. In addition, these findings offer some evidence for CALS as a cross-modality construct relevant to both reading and writing at school.

Exploring the contribution of core academic language and reading comprehension skills to early adolescents’ written summaries

To better reflect the sorts of tasks that we hope for students to successfully complete in academic settings, the next generation of reading assessments has moved beyond a multiple-choice paradigm to include post-reading writing tasks (Graham & Harris, 2015). For example, asking students to read a text and generate a written response for an academic readership (such as a summary or argumentative essay) is now commonplace in daily instruction and on state-mandated and national assessments. These are sometimes referred to as ‘source-based’ writing tasks or ‘Reading-to-Write’ (RtW) tasks (Graham & Harris, 2017). In comparison to traditional reading comprehension measures, these newer assessments attempt to gather more robust evidence about whether students are learning the text comprehension skills required for participation in post-secondary education and in the workforce.

Yet what else does successful performance on a RtW task, such as writing a summary, tell us about our students’ skills? Arguably, beyond comprehension of the source text, multiple factors are associated with the ability to write high-quality summaries (working memory, attention, encoding skills), including the writer’s level of language skill (Abbott & Berninger, 1993; Berninger, Winn, MacArthur, Graham & Fitzgerald, 2006; Kellogg, 2008; Scott, 2009). In particular, we might hypothesize that knowledge of and ability to deploy the constellation of language forms found most often in academic texts and used to communicate abstract ideas in academic settings, often referred to as ‘academic language,’ would be particularly relevant when writing summaries in middle-grade classrooms. Against the backdrop of the wide adoption of Common Core-aligned assessments in U.S. schools [e.g., assessments developed by the Partnership for Assessment of Readiness for College and Careers (PARCC) and the Smarter Balanced Assessment Consortium (Smarter Balanced)], research that elucidates the language skills necessary to complete the Reading-to-Write tasks that dominate these assessments has never been timelier. In this study, we aim to examine academic language skills as an important source of individual differences that may impact middle graders’ performance in summarizing expository texts focused on science topics, which is a common RtW task. In the sections that follow, we selectively survey prior theoretical and empirical work that specifies the important role of linguistic skills in reading and writing performances, and we situate this study within a line of inquiry that has sought to examine the specific role of academic language skills in these literacy processes.

Study aims and links to a program of research

The claim that language skills contribute to the quality of students’ summaries may appear obvious. What is less obvious, however, is that students exhibit considerable individual differences in their mastery of the language required for participation in middle school literacy tasks. In our research programs, we examine the development of the language skills that support participation in the tasks that occur in academic discourse communities, with a particular focus on those needed to read and write texts. In our prior work, we have examined the development of these academic language skills by proposing an operational construct, Core Academic Language Skills (CALS), and by designing an instrument to capture these skills in students in grades four to eight, the CALS Instrument (CALS-I) (Uccelli, Barr, Dobbs, Phillips Galloway, Meneses, & Sánchez, 2015a). As we will discuss below in more detail, CALS refer to high-utility language skills hypothesized to support literacy across content areas. Our previous studies have found that even among eighth-grade students identified as English proficient by their school districts, there is great variability in students’ CALS knowledge. Furthermore, we document a positive association between academic language skills as captured by the CALS-I and levels of reading comprehension (Phillips Galloway & Uccelli, 2018; Uccelli et al., 2015a; Uccelli, Phillips Galloway, Barr, Meneses & Dobbs, 2015b; Uccelli & Phillips Galloway, 2017).

This paper extends this work by examining the important role played by these same academic language skills in early adolescents’ writing. Specifically, we focus on Core Academic Language Skills as an important source of potential individual differences affecting proficiency in one common RtW task: summarizing scientific expository texts (henceforth referred to as ‘science summaries’). In so doing, we add some support to the understanding of Core Academic Language Skills as a cross-modality construct relevant to both the comprehension and production of academic texts, and worthy of instruction in middle grade classrooms. Additionally, by exploring the relation between students’ CALS and their performance in a disciplinary writing task, we aim to highlight the relevance of cross-content academic language skills instruction as a complement to teaching disciplinary content and language when preparing students to become independent learners in a content area.

Existing theoretical models of discourse comprehension and production: academic language skills as key but understudied

To frame the role of language in science summarization, we consider theoretical representations of how discourse is understood and produced. Indeed, text comprehension and production have long been linked: in models of written discourse production, reading and listening comprehension play a supporting role as writers stop to reread what they have written or to refer to a source text (Hayes, 1996). In this study, to fully conceptualize the role of academic language in RtW tasks, we draw on Kintsch and colleagues’ model of text comprehension (Kintsch, 1994; van Dijk, Kintsch & van Dijk, 1983), as well as on Kim and Schatschneider’s (2017) developmental model of writing. These models are complementary and theoretically aligned: Kintsch’s Construction-Integration (CI) model focuses on reading comprehension, and Kim and Schatschneider’s (2017) model extends and adapts the CI model to generate an empirically supported, developmental model of writing. These models can be understood as expansions of the simple view of reading (SVR) and the simple view of writing (SVW), respectively. Whereas the SVR conceptualizes reading comprehension as the mathematical product of decoding and language comprehension (Gough & Tunmer, 1986), the SVW views writing as the product of transcription skills (for translating sounds into written symbols) and text generation skills (for producing and organizing ideas) (Juel, 1988; Juel, Griffith, & Gough, 1986). Both models complexify a simple view of reading or writing by further specifying the role of oral language skills. Below, we briefly describe both models. We use these models to inform our hypothesis regarding the role of academic language skills in the reading and writing processes involved in science summarization.
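The two simple views described above can be written compactly as products. The symbols below (RC, D, LC, W, T, TG) are shorthand introduced here for illustration only and do not come from the cited sources:

```latex
% Simple view of reading (SVR): reading comprehension as a product
\mathrm{RC} = D \times \mathrm{LC}
% Simple view of writing (SVW): writing as a product
W = T \times \mathrm{TG}
% RC = reading comprehension, D = decoding, LC = language comprehension
% W = writing, T = transcription skills, TG = text generation skills
```

One consequence of the multiplicative form is that a value of zero on either component yields a product of zero, so neither component can fully compensate for the absence of the other.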

When reading an informational science text with the goal of generating a summary, we can assume, following the CI model, that a reader engages with the text at three levels by integrating decoding, language comprehension, and higher-order skills. The reader accesses the source text at the “surface level” as she decodes the actual script, and at the “textbase level” to make meanings from words, sentences, and discourse features, including by comprehending the content-specific and general academic language contained in the text. The ultimate goal, however, is to build a “situation model” that melds the information in the text with the reader’s prior knowledge (Kintsch, 1994; van den Broek, Lorch, Linderholm & Gustafson, 2001; van Dijk et al., 1983). Analogously, as writers summarize a science text, we would expect them to engage in these three levels, relying on their transcription, language, and higher-order cognitive skills. Kim and Schatschneider (2017) provide the most recent empirical evidence to support a not-so-simple, three-level view of writing along the lines of Kintsch’s model. They examined transcription skills and text generation skills (oral discourse production, discrete language skills, and cognitive factors) as contributors to elementary school narrative writing quality (Kim & Schatschneider, 2017). Aligned with an expanded simple view of writing, Kim and Schatschneider (2017) found that oral discourse production skills (retelling quality) and transcription skills (spelling and handwriting fluency) directly predicted writing quality. All other discrete language skills (vocabulary and syntax) and cognitive factors (theory of mind, inferencing skills, working memory) were only indirectly associated with writing quality via discourse production and transcription skills. Compared to transcription skills, and perhaps not surprisingly, discourse production had the largest effect on writing quality.

As a hybrid task, science summaries draw on language skills (including academic language skills) at two points: students must call on their language knowledge first to comprehend the source text and then to repackage the information from the source text into a summary. It is not a simple matter of mirroring the source’s surface code and textbase: while summaries call for writers to represent the information in a source text accurately, an implicit expectation of summary writing is that writers ‘use their own words’ (Hood, 2008). Repackaging the information in the text often demands knowledge of academic language. Specifically, students must have enough academic language to represent the ideas in the source text adequately. It is possible that the source text serves as a scaffold (or at least has a priming effect) for writers who can access it, by offering an indication of what register of language is expected and by providing fodder in the form of language and language structures that might be transformed (Cumming et al., 2005; Cumming, Lai, & Cho, 2016; Gebril & Plakans, 2009).

This hypothesis that academic language skills play a dual role in summarization by supporting both text comprehension and production is not without theoretical grounding (Fitzgerald & Shanahan, 2000; Tierney & Shanahan, 1991; Shanahan, 2016). Fitzgerald and Shanahan (2000) suggest that knowledge of the attributes of texts (words, syntax, and text features) supports readers and writers at the word level as they decode and encode, and at the sentence and text levels as they comprehend and convey meaning. Empirical support for reading-writing links has continued to accumulate (Abbott & Berninger, 1993; Graham & Hebert, 2011; Graham et al., 2018). However, because the field has tended to focus on younger children rather than adolescent writers (Graham et al., 2017), the later-acquired language skills called upon for comprehending or constructing the ideas in an expository text have been minimally studied in comparison to the extensively investigated basic skills involved in decoding or encoding (Kent & Wanzek, 2016; Miller & McCardle, 2011; Nippold & Scott, 2009). In this study, we focus on the former: Core Academic Language Skills (CALS) comprise a narrow, but important, subset of the linguistic knowledge used by readers and writers as they comprehend and communicate meaning in academic settings. In the sections that follow, we speculate on the ways in which CALS, already demonstrated to support skilled reading (Uccelli et al., 2015a, b), may be instrumental in the writing of science summaries during the upper elementary and middle school years.

Core Academic Language Skills: hypothesized links to academic writing

Whether writing narrative or expository texts, individual differences in grammatical knowledge, morphology, and vocabulary predict differences in the quality of texts produced by child and adolescent writers (Ahmed, Wagner, & Lopez, 2014; Beers & Nagy, 2009, 2011; Berman & Nir-Sagiv, 2007, 2009; Berman & Ravid, 2009; Dockrell, Lindsay, Connelly, & Mackie, 2007; Kim, Al Otaiba, Folsom, Greulich, & Puranik, 2014; Olinghouse & Wilson, 2013). General oral language skills adequately support written expression in the early grades, where narrative writing is more prevalent. But additional language skills are required as students progress through schooling and are asked to write a broader array of text types (Common Core State Standards Initiative, 2010; Graham & Perin, 2007; Nippold & Sun, 2010). For example, during the period from upper-elementary through high school, intergenre comparisons between writers’ expository and narrative texts indicate that academic or ‘book-like’ language [passive voice, complex noun phrases, and multi-clausal structures, among others] is used more frequently when writing expository texts (Beers & Nagy, 2011; Berman & Nir-Sagiv, 2007; Hall-Mills & Apel, 2015; Jisa, Reilly, Verhoeven, Baruch, & Rosado, 2002; Nippold, 2007).

The task of learning the academic language needed to write expository texts is two-fold: students must gradually acquire the specialized language used in math, science and social studies to communicate disciplinary content; and, to participate in the broader academic discourse community, they must gradually master a crosscutting, academic register (Nagy & Townsend, 2012). Differences in how writers from diverse disciplinary communities use language are well documented, and have been brought to the attention of educators through disciplinary literacy approaches (Halliday & Martin, 2003; Moje, 2015; Snow, 2010). What is less frequently discussed is that academic texts also contain a ‘core’ set of grammatical and discourse features that have their genesis in a shared set of communicative demands faced by academic writers (i.e., precisely communicating abstract information and dynamic processes to a non-present readership) (Gee, 2014; Snow & Uccelli, 2009). For illustration, Hiebert (2013) finds that fewer than 1000 words accounted for two-thirds of the words in a cross-disciplinary corpus of textbooks like those used in schools. These commonalities extend beyond the lexical level (Biber & Gray, 2015).

Writers gradually learn this language, which we refer to as Core Academic Language Skills (CALS), as they participate in school discourse communities. CALS encompass a set of high-utility language skills needed to manage the linguistic features prevalent in academic texts across content areas, but which are infrequent in colloquial conversations (Uccelli et al., 2015a, b). CALS are a subset of the broader construct of ‘academic language proficiency,’ which also includes the academic language of the disciplines, and is one component of an individual’s overall language proficiency.

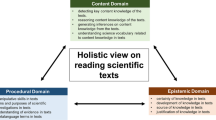

CALS include the domains of language skill delineated below. While our prior work discusses the relevance of these language skills to reading comprehension, here we highlight their role in text production. For each CALS domain, we draw evidence for its inclusion in the language skills that support skilled expository writing from two distinct bodies of research: (1) studies examining the relation of developing writers’ language skills (syntactic, morphology and vocabulary knowledge), often captured through assessment, to skilled writing; and (2) developmental linguistics studies that document the use of language features common in expository texts in students’ writing.

I. Unpacking/Packing dense information: Skill in employing the complex word- and sentence-level structures utilized by academic writers to facilitate concise communication. These structures include nominalizations, embedded clauses, and expanded noun phrases, all of which appear with greater frequency in expository texts.

- Word-level skills: Derivational morphology skills, which allow writers to concisely discuss abstract ideas by shifting a word’s grammatical category (e.g., to legalize → legalization), continue to develop in middle school and beyond (Apel, Wilson-Fowler, Brimo, & Perrin, 2012; Nagy, Berninger, Abbott, Vaughn & Vermeulen, 2003). In fact, derived words are more common in the writing of older versus younger children (4th grade versus 2nd grade) (Carlisle, 2010), and in students’ expository writing (Bar-Ilan & Berman, 2007). Morphology skills may also aid the writing process by supporting automaticity in spelling (Nagy, Berninger, & Abbott, 2006).

- Sentence-level skills: Previous studies also document relatively rapid development of syntactic packing skills during the period from elementary through middle school. Texts become more grammatically intricate as writers are better able to use embedded clauses, noun phrases, and prepositional phrases (Beers & Nagy, 2009, 2011; Berman & Verhoeven, 2002; Berman & Nir-Sagiv, 2007; Berman & Ravid, 2009; Nir-Sagiv & Berman, 2010).

II. Connecting ideas logically: Skill in using words or phrases known as ‘connectives’ and ‘discourse markers’ that signal to readers how ideas and concepts are related in academic texts (e.g., consequently, on the one hand…on the other hand). Developing the skill to use connectives in writing occurs over the middle grades, with mastery not apparent until the end of high school (Berninger, Mizokawa, Bragg, Cartwright, & Yates, 1994; Crowhurst, 1987; Salas, Llauradó, Castillo, Taulé, & Martí, 2016). Langer (1986), for instance, finds that for developing writers, the skill to organize entire texts often hinges on using organizational markers.

III. Tracking participants and ideas: Skill in using varied language when referring to the same participant, theme or idea across a text as a method for reducing repetition and creating cohesion for readers (e.g., Water evaporates at 100 degrees Celsius. This process…). Understanding of text cohesion appears to be supportive in both reading and in writing, with developing writers who are able to use reference skillfully also demonstrating stronger text comprehension skills (Cox, Shanahan & Sulzby, 1990).

IV. Organizing analytic texts: When writing, skill in organizing the sections of analytic texts, especially argumentative ones, in ways that approximate conventional academic text structures (e.g., thesis, argument, counterargument, conclusion) (Sanders & Schilperoord, 2006). These familiar structures support readers’ comprehension. For writers, awareness of these structures offers a road map for organizing thoughts and ideas during composing. Prior studies find that this knowledge of text macrostructures develops across adolescence (Berman & Verhoeven, 2002; Hall-Mills & Apel, 2015; Phillips Galloway & Uccelli, 2015). Curiously, though, for students in grades four to eight, command of global text structure in expository text construction tends to develop much more slowly than in narratives, potentially suggesting that the timelines for mastering local and global language features differ (Berman & Nir-Sagiv, 2007, 2009). Langer (1986) finds similar results in her study of 8- to 14-year-olds, who were able to make use of causal structures at the sentence level. Only with age and history in the practice of writing were developing writers able to use these structures to organize entire essays, often by using organizational markers.

V. Understanding/Expressing precise meanings: Skill in expressing precise meanings when writing to achieve communicative clarity. We focus, in particular, on skill in using and comprehending the language that makes thinking and reasoning visible to others, known as ‘metalinguistic vocabulary’ (e.g., hypothesis, generalization, argument). As early as grade four, students’ expository texts contain more of what Berman and Nir-Sagiv (2009) refer to as ‘school-like’ vocabulary than narrative texts written by the same students (Berman & Nir-Sagiv, 2007, 2009; Nippold & Scott, 2009; Nir-Sagiv, Bar-Ilan, & Berman, 2008).

VI. Understanding/Expressing a writer’s viewpoint: Skill in using written language to signal a viewpoint, especially the writer’s degree of certainty (e.g., Certainly, It is unlikely that). Indeed, the use of language that conveys the writer’s stance is linked with writing quality in persuasive essays (Uccelli, Dobbs, & Scott, 2013).

VII. Recognizing academic language: Skill in recognizing when academic language versus colloquial language is useful for completing a writing task. We situate this as a prerequisite for engaging in lexicogrammatical choice-making when writers are presented with the task of communicating with an academic readership, and must choose from among the language forms with which they are familiar (Ravid & Tolchinsky, 2002).

As an important clarification, we do not suggest that language skills outside the CALS domains are unimportant for science summarization. On the contrary, more general oral language skills, including basic early language skills, are essential building blocks that support successful text construction for middle graders. We focus on CALS because the majority of students have likely mastered English colloquial language skills by upper elementary school (at least for students not classified as English Learners). In contrast, during early adolescence, CALS display considerable individual variability.

To date, this construct has been operationalized as a psychometrically robust assessment for middle graders (the Core Academic Language Skills Instrument, CALS-I), which taps receptive knowledge of these domains (Uccelli et al., 2015a). In line with sociocultural perspectives (Street, 2005), our register-specific approach to assessment aims to surface whether students have had ample opportunity to acquire CALS, and to offer educators targeted information about which language forms to teach in order to scaffold participation in reading and writing practices characteristic of school discourse communities.

CALS: extending prior studies of language-writing connections

In this study, we extend prior studies of language-writing connections by adopting a hybrid measurement approach in which we directly assess students’ knowledge of a subset of school-relevant, academic language skills—Core Academic Language Skills (CALS)—that developmental linguistics studies examining students’ written texts find to be linked with writing quality during the middle grades. Prior studies that directly assess students’ language typically employ instruments originally designed for use in clinical or research applications that focus on formal linguistic levels (e.g., lexical or morpho-syntactic skills). By design, these instruments sample knowledge of a broad array of language forms, including (but not limited to) those found in academic texts. Studies from this generative line of research reveal relations between lexical, morphological, and syntactic skills and writing proficiency in a range of text types (Abbott & Berninger, 1993; Apel & Apel, 2011; Dockrell, Lindsay, & Palikara, 2011; Kim et al., 2014; Silverman et al., 2015).

In contrast, we designed the CALS-Instrument used in this study with the goal of capturing students’ knowledge of the word, sentence, and discourse forms that have the highest probability of appearing in school texts. This probabilistic approach to assessment design reflects our understanding that language exists along a continuum from ‘most likely’ to ‘least likely’ to co-occur with school learning tasks (Snow & Uccelli, 2009). In other words, it would be inaccurate to make categorical distinctions between ‘academic’ and ‘non-academic’ language. For this reason, the CALS-Instrument and measures of language used in prior studies assess some of the same language features; CALS is, after all, an important component of overall language proficiency. While it is beyond the scope of this study to examine this degree of overlap empirically, we suggest that measures like the CALS-I serve as an important complement to the broad measures of language frequently employed in the field.

By directly measuring CALS via an assessment, our approach also differs from developmental linguistics studies. These studies examine adolescents’ language only as manifested in their text production. This approach provides a window into whether students are able to marshal productive academic language within the context of a particular academic writing task (e.g., academic vocabulary, conceptualized also as Latinate vocabulary; complex syntactic structures; or certain transition and stance markers) (Berman & Nir-Sagiv, 2007; see Jisa, 2004, for examples in French; Nir-Sagiv & Berman, 2010). In this study, we use the CALS-I to directly measure knowledge of academic language, which we believe has advantages because the language an adolescent writer spontaneously produces in a text may not represent the full repertoire of language skills that writer has mastered.

Our focus on science summaries in a primarily monolingual, middle grade sample is also unique. Prior studies have extensively explored summary writing in adults learning English as an additional language (Cumming et al., 2005, 2016). Summary writing, though ubiquitous in middle school classrooms, has received little attention, with most studies of developing writers focused on narrative or argumentative writing tasks. We also include a socioeconomically-diverse sample as a result of our partnership with large public school districts in the U.S., which is unlike the majority of developmental linguistics studies that focus on middle class samples.

Finally, few studies have explored the role of language skills in reading and writing through the lens of a single operational construct, which is what we have sought to do with CALS across our research program (Uccelli et al., 2015a, b; Phillips Galloway & Uccelli, 2018). In this study, we use this measure to gain insight into whether these receptive academic language skills are linked with the quality of students’ written science summaries. Our prior research reveals that the academic language of text is challenging for large proportions of adolescents, even eighth graders who are classified as English proficient. Guided by this evidence and by the documented contribution of CALS to reading comprehension, we hypothesized that CALS may also significantly predict the quality of science summaries during early adolescence, offering some insight into the role of CALS in writing skill for middle grade students.

The current study

In the current study, we aim to examine the role played by fourth to seventh grade students’ academic language resources in producing written summaries of science texts they have read independently. To do so, we assessed students’ comprehension of the source text that they subsequently summarized as well as their knowledge of core academic language features using a group-administered, psychometrically robust measure, the Core Academic Language Skills Instrument (CALS-I) (Barr, Uccelli, & Phillips Galloway, 2018). In addition, we control for a series of student and task variables known to impact holistic summary quality: student characteristics (special education eligibility, grade, gender and free or reduced lunch eligibility) as well as features of the task performance (the topic of the summary, the summary’s length, the amount of text copied from the source text, and student’s spelling fluency). Specifically, we address this research question: Do Core Academic Language Skills predict writing quality of science summaries produced by early adolescents (grades 4–7)?

We anticipated that both students’ academic language and reading comprehension of the source text would predict summary quality, even after controlling for student characteristics and additional aspects of the task performance (the topic of the summary, the summary’s length, the amount of text copied from the source text, and student’s spelling fluency). Translation of ideas in a text to a source-based summary is generally assumed to hinge primarily on the reader’s level of source text comprehension. In this study, we posit that students’ academic language skills also play a role in skilled science summary writing given the explicit expectation that the writer use language not present in the source text. In addition to our main research question, these analyses also allowed us to begin to explore an understudied hypothesis: whether the same constellation of high-utility language skills (i.e., CALS) already demonstrated to play a role in text comprehension (see Uccelli et al., 2015a, b) would also contribute to academic writing proficiency. If this specific hypothesis proves to be correct, then results would not only highlight an important set of pedagogically-relevant language skills, but they would also offer additional evidence that instructional approaches that foster the language needed for reading and writing in an integrated manner may be promising.
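The idea of a predictor contributing "over and above" another can be illustrated by comparing two nested regression models and looking at the change in explained variance. The sketch below is only an illustration under loud assumptions: it uses ordinary least squares, a single baseline predictor, and synthetic data generated on the spot. It is not the model specification used in this study, and the variable names (`comprehension`, `cals`, `quality`) and effect sizes are hypothetical.

```python
import numpy as np


def r_squared(X, y):
    """R^2 of an ordinary least squares fit of y on X (intercept added here)."""
    design = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ coef
    ss_res = float(residuals @ residuals)
    ss_tot = float(((y - y.mean()) ** 2).sum())
    return 1.0 - ss_res / ss_tot


def incremental_r_squared(baseline, added, y):
    """Return (R^2 baseline, R^2 full, gain) for nested models."""
    r2_base = r_squared(baseline, y)
    r2_full = r_squared(np.column_stack([baseline, added]), y)
    return r2_base, r2_full, r2_full - r2_base


# Synthetic, hypothetical data: NOT the study's data or results.
rng = np.random.default_rng(0)
n = 259  # matches the reported sample size only for flavor
comprehension = rng.normal(size=n)
cals = 0.6 * comprehension + 0.8 * rng.normal(size=n)  # correlated predictors
quality = 0.4 * comprehension + 0.3 * cals + rng.normal(size=n)

r2_base, r2_full, gain = incremental_r_squared(
    comprehension.reshape(-1, 1), cals.reshape(-1, 1), quality
)
print(f"baseline R^2 = {r2_base:.3f}, full R^2 = {r2_full:.3f}, gain = {gain:.3f}")
```

In a design with students nested in classrooms, a multilevel model would likely be a better fit than plain OLS; the sketch only conveys the logic of unique variance.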

Method

This study was conducted within the context of a large multi-year study investigating predictors of skilled reading comprehension among upper elementary and middle grade students in a large sample (n ≈ 7000). While the larger investigation included 24 schools drawn from three districts in the Northeastern United States that were randomized to treatment and control conditions, data for the present study are from control schools only (n = 9 schools). Students in the sample were drawn from 68 classrooms, with an average of 4 students per classroom included in the analytic sample. Because a criterion for inclusion was completing the summary task and all data were collected within a single window, all data were complete.

Sample

All participants were in grades 4–7 in the second year of the study (n = 259 students). The sample reflected the demographics of the urban and semi-urban communities of the schools (13% Black, 62% White, 14% Latinx, 5% Asian, 5% Multiple Races) and consisted primarily of students from low-income families (61% eligible for free/reduced price lunch), with 4% of students designated as English learners (ELs). Finally, 11% of students in the sample were identified as eligible for special education services. Table 1 describes the sample.

Instruments and measures

Students were assessed in the spring at the end of the academic year. Trained research assistants administered the following measures as part of the students’ regular school day.

Core Academic Language Skills-Instrument The Core Academic Language Skills-Instrument (CALS-I) is a group-administered, paper-and-pencil assessment that was designed to capture the CALS elements delineated above in 4th–8th grade populations (Uccelli et al., 2015a, b). The CALS-I includes eight short tasks that span a range of formats: connecting ideas, tracking themes, organizing texts, breaking words, comprehending sentences, identifying definitions, interpreting epistemic or stance markers, and understanding metalinguistic vocabulary. The CALS-I has been normed following a rigorous psychometric process (see Barr et al., 2018). Two forms of the CALS-I, which are vertically aligned, were used in this study: CALS-I-Form 1 for grade six (α = .90, number of items = 49) and CALS-I-Form 2 for grade seven (α = .86, number of items = 46). Most items in the CALS-I are dichotomously scored (1 = correct, 0 = incorrect). Rasch item response theory analysis was used to generate factor scores using a vertically equated scale. These factor scores are used in the present analysis.

The Global Integrated Scenario-Based Assessment (GISA) Developed by Educational Testing Service, the GISA is a computer-based assessment that uses scenarios to motivate students’ reading and subsequent writing performance. Students are given a series of texts (e-mails, news articles, expository science texts) as well as a plausible purpose for reading (e.g., to decide if a wind farm is a good idea for your community). For students in grades 4 and 5, the source texts discussed satellites that orbit the earth. The texts read by 6th and 7th graders focused on the generation of wind power. Texts were designed to contain the same number of idea units and to follow the same text structure regardless of topic.

Reading comprehension task After reading the passages, students answer a series of comprehension questions, some requiring simple recall of details from the text and others requiring source-based inferences, distinguishing claims and evidence, integrating information across multiple texts, questioning, and predicting.

Summary writing task Finally, students produced summaries of the texts they had read. Writing research has long demonstrated that novice writers perform at higher levels when provided with prompts to say more (Bereiter & Scardamalia, 1987); this particular task posed a series of questions to students to elicit optimal performance. Students were told ‘you will read the first three paragraphs of a passage about (satellites or wind power). When you are done, you will write a short summary on what the passage is about. Here are some guidelines to keep in mind while you write: Your summaries: (1) should include all the main ideas from the passage and only the main ideas; (2) should not include your opinions or information outside the passage, even if the information is correct! (3) should be written in your own words; don’t just cut and paste sentences from the passages.’ The GISA is a computerized assessment that requires, on average, 45 minutes to complete. Though it is a relatively new instrument and psychometric analyses are still ongoing, evaluations suggest that GISA is a reliable and valid measure that can be used to assess students across the expected range of text comprehension levels (O’Reilly, Weeks, Sabatini, Halderman, & Steinberg, 2014; Sabatini, O’Reilly, Halderman & Bruce, 2014a, b). While the scenario-based approach is unique, the GISA demonstrates strong concurrent validity with other more traditional reading comprehension tests. GISA produces a single score, which is reported on a common, cross-form scale based on a large-scale study.

Written summary measures Once the data had been collected, the science summaries produced by students were scored and analyzed using the following measures. Prior to scoring, misspellings were corrected to ensure that raters were not biased in their scoring.

Quality of written summary Science summaries were scored for holistic quality using an adaptation of an existing 8-point rubric designed for classroom assessment purposes (available through Prentice Hall publishing). This holistic rubric uses a scale from 1 (low) to 4 (high) and evaluates two aspects of writing: (1) organization and (2) elements of summaries. Scores on both scales were summed to create a holistic score. Two ELA teachers with prior experience teaching in middle schools scored the essays. Scorers were not familiar with the CALS construct and were blind to the study’s main research questions. Inter-rater reliability was calculated after a third rater scored 20% of the data (Cohen’s κ = 0.74–0.92 across the summary tasks used in this study). Reliability of the summary tasks was also calculated using data from our larger study in which students completed multiple summaries (α = .69–.77).

Ratio of textual borrowing Because developing writers engage in textual borrowing to manage the demands of writing complex texts, we captured instances of direct textual copying from the source texts (Shi, 2004). Given our interest in examining whether students’ academic language skills were linked with the quality of their texts, we elected to use large amounts of copying from the source text as an exclusion criterion. Sections of text drawn from the source passage consisting of five continuous words or more were considered instances of textual borrowing in this study. The threshold of five words was set based on findings in the collocation and lexical bundles literature (on groups of words that routinely occur together in academic texts), which suggest that pairs and groups of up to four words tend to recur in academic discourse (Biber, Conrad, & Cortes, 2004). A program written in Python allowed for the identification of copied text. In our analysis, the number of copied words was divided by the total number of words in the student’s text to produce a percentage of borrowed text for each summary. This variable was used first as an exclusion criterion: students with more than 50% of the text borrowed from the source text were excluded from the sample, and their summaries were not scored for quality. Students whose texts contained less than 50% borrowed text were included in the analytic sample. Across the sample, the average amount of text copied from the source text was 9%. In our analysis, to control for the impact of copied text, this variable was used as a covariate in all models. We decided not to exclude students’ summaries that contained some amount of textual borrowing (below the 50% threshold) on theoretical grounds. Because textual borrowing is a potentially important strategy for language learning, and appears to be common in middle grade writing, we elected to include the summaries that contained some text borrowed from the source text.
While the texts that were 50% or more copied were not scored, we were able to examine whether those students who were excluded from the analysis differed from their peers in the same grade on other measures. Results suggested that students who engaged in higher proportions of textual copying did not, on average, have significantly lower academic language or reading comprehension scores at the 0.05 level, although CALS differences approached significance in the sample.
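
The Python program used for this step is not reproduced here. The following is a minimal sketch of how a five-word matching rule of this kind could be implemented; the tokenization rule and the names `tokenize` and `borrowed_ratio` are assumptions made for illustration, not the program used in the study.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into word tokens (assumed normalization)."""
    return re.findall(r"[a-z0-9']+", text.lower())


def borrowed_ratio(summary, source, min_run=5):
    """Share of summary words that sit inside a run of at least
    `min_run` consecutive words that also appears in the source."""
    summ = tokenize(summary)
    src = tokenize(source)
    if not summ:
        return 0.0
    # Every min_run-word window in the source, stored for fast lookup.
    src_windows = {
        tuple(src[i:i + min_run]) for i in range(len(src) - min_run + 1)
    }
    copied = [False] * len(summ)
    for i in range(len(summ) - min_run + 1):
        if tuple(summ[i:i + min_run]) in src_windows:
            # Mark every word in the matching window as borrowed.
            for j in range(i, i + min_run):
                copied[j] = True
    return sum(copied) / len(summ)


# Hypothetical texts, not drawn from the GISA passages.
source = "Wind turbines convert the kinetic energy of moving air into electricity for homes."
summary = "The passage says turbines convert the kinetic energy of moving air and that helps people."
ratio = borrowed_ratio(summary, source)
print(f"borrowed ratio: {ratio:.2f}; above 50% threshold: {ratio > 0.5}")
```

Under this sketch, overlapping five-word windows merge into a single longer borrowed run, so each copied word is counted once, and shared sequences of four words or fewer are never flagged.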

Ratio of misspellings Skill in spelling has been linked with extended text generation because ability to spell words accurately and efficiently frees up other resources that can be diverted to the task of translating thoughts to print (Graham, Berninger, Abbott, Abbott, & Whitaker, 1997). In this analysis, we generated a ratio of unconventionally-spelled words over conventionally spelled words to capture each writer’s spelling accuracy. We subsequently used this variable as a covariate in our analysis.
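
As an illustration only, the ratio described above can be sketched as follows. The word list (`lexicon`) and the function name are hypothetical; how spellings were judged conventional in the study is not specified here.

```python
def misspelling_ratio(tokens, lexicon):
    """Unconventionally spelled words divided by conventionally spelled words.

    `lexicon` is a hypothetical set of accepted spellings; the study's
    actual procedure for judging spellings is not described in this section.
    """
    unconventional = sum(1 for t in tokens if t.lower() not in lexicon)
    conventional = len(tokens) - unconventional
    if conventional == 0:
        # The ratio is undefined when no word is conventionally spelled.
        return float("inf") if unconventional else 0.0
    return unconventional / conventional


# Hypothetical example: 2 unconventional spellings over 5 conventional ones.
lexicon = {"wind", "make", "from", "moving", "air", "turbines", "electricity"}
tokens = ["wind", "turbins", "make", "electrisity", "from", "moving", "air"]
print(misspelling_ratio(tokens, lexicon))
```

Note that the denominator is the count of conventionally spelled words, not the total word count, so the ratio can exceed 1 for texts in which most words are misspelled.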

Length In this study, we include text length measured by total words as a predictor in all regression models. Interestingly, and across numerous studies, text length has been the best predictor of quality ratings.

Topic Students in elementary and middle school were asked to summarize science texts on different topics (wind power or satellites). While texts were designed to be comparable, we control for topic in our analysis given that prior studies suggest the potential for this to impact student writing.

Data analytic approach

Before conducting our analysis, we generated descriptive statistics by grade and by language status (English Learner and English Proficient) for the CALS-I scores, as well as for the other measures used in this study. To address our research question, multi-level hierarchical regression analyses were conducted, with summary writing quality scores as the outcome variable, CALS-I scores as the main predictor, and reading comprehension scores, summary features (topic, length, ratio of textual copying, misspelling ratio) and students’ socio-demographic characteristics as covariates. We explored the effects of socio-demographic characteristics by entering these variables as a block (race, English proficiency designation, SES, special education designation and grade). Race variables were dummy coded, with other races serving as the reference category. Next, we examined the contribution of summary features, including length (i.e., number of words), topic (satellites in grades 4 and 5; wind power in grades 6 and 7), ratio of textual copying, and ratio of misspellings. Subsequently, comprehension of the source text and our main predictor, academic language skills as captured by the CALS-I, were entered into the model. Because CALS is a broader skillset than the particular language represented in the reading comprehension task, we entered these two variables in separate steps. In addition, we examined interaction terms in this analysis, but none of these interactions was significant. Because students were nested in classrooms in which the instruction may vary, we elected to fit two-level models. We drew students randomly from 68 classrooms, with an average of four students per classroom included in the analytic sample. This design allowed for a sufficient sample at level two to conduct multi-level analysis (Maas & Hox, 2005). Prior to conducting this analysis, we centered continuous predictors.
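
For readers who prefer a formal statement, a two-level model of this kind can be written as below. This is a sketch under the assumption of a random intercept for classrooms and fixed slopes for all predictors; the notation is illustrative and is not taken from the original analysis. Here $Y_{ij}$ is the summary quality score of student $i$ in classroom $j$, $\mathrm{CALS}_{ij}$ and $\mathrm{RC}_{ij}$ are the centered CALS-I and reading comprehension scores, and $X_{kij}$ stands for the remaining covariates (socio-demographic characteristics and summary features).

```latex
\begin{align*}
\text{Level 1:}\quad & Y_{ij} = \beta_{0j} + \beta_{1}\,\mathrm{CALS}_{ij} + \beta_{2}\,\mathrm{RC}_{ij} + \sum_{k} \beta_{k+2}\, X_{kij} + \varepsilon_{ij}, & \varepsilon_{ij} &\sim N(0, \sigma^{2}) \\
\text{Level 2:}\quad & \beta_{0j} = \gamma_{00} + u_{0j}, & u_{0j} &\sim N(0, \tau_{00})
\end{align*}
```

In this formulation, $\tau_{00}$ is the between-classroom variance and $\sigma^{2}$ is the within-classroom (student-level) variance.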

Results

Preliminary descriptive analysis

We generated descriptive statistics for all measures before exploring our research question. Results revealed within- and across-grade variability. Because of the well-known impact of topic on writing performance, we control for topic in this analysis (4th and 5th graders wrote on the topic of satellites, while 6th and 7th graders wrote on the topic of wind power). For the upper elementary school sample, trends reflected the typical pattern of maturation from grade four to five, with students in grade five receiving higher scores on all measures. A notable feature of the sample was that sixth graders appeared to perform at higher levels on all measures when compared to seventh grade students (Table 2).

Notably, as displayed in Table 3, academic language proficiency (CALS-I scores) was strongly and significantly correlated with students’ levels of reading comprehension of the source texts from which they wrote their summaries (r = .74, p < 0.001). CALS-I scores were also significantly correlated with students’ writing quality scores, although the correlation was not as strong (r = .52, p < 0.001). This correlation was well within the expected range with a recent meta-analysis indicating that correlations between oral language measures and summary quality ranged from 0.23 to 0.55 (Kent & Wanzek, 2016). Given the text type, it is unsurprising that summary writing quality was significantly associated with reading comprehension of the source text (r = 0.55, p < 0.001). Summary length was also related to writing quality (r = 0.56, p < 0.001), mirroring findings in the writing literature that link text length with quality ratings. As anticipated, given the links between decoding and encoding skills, the misspelling ratio was negatively associated with reading comprehension skills (r = − 0.20, p < 0.01). We corrected misspellings before essays were scored for quality to prevent negatively biasing raters; therefore, we were not surprised that the presence of misspellings was not highly correlated with text quality in this analysis. However, Core Academic Language Skills (r = − 0.20, p < 0.001) were also negatively correlated with the misspelling ratio. Curiously, textual borrowing was positively associated with gender and grade, with females in the upper grades evidencing a higher rate of textual borrowing from the source text in their written summaries. 
While we can only speculate, a link between increased rates of textual borrowing and grade may be evidence of a gap between students’ growing metalinguistic awareness of a summary’s functional requirements (to include an accurate accounting of the information in the text) and the language they have to engage in these functions.

The role of Core Academic Language Skills in summary writing

To answer our question, we conducted a multi-level hierarchical linear regression predicting our outcome, holistic writing quality, in order to investigate the independent contribution of students’ academic language skills to the quality of students’ written science summaries. To address the nested structure of the data (students nested in classrooms and schools), we made use of Stata’s multi-level modeling features. Because our first unconditional (null) model suggested that very little variance was accounted for at the school level, we determined that a three-level model was not necessary, instead opting for a two-level model with students nested in classrooms. Prior to conducting our modeling, all continuous variables were mean-centered (Enders & Tofighi, 2007). Before testing our hypothesis of interest, a two-level unconditional (null) model was fit to the data (Table 4, Model 1). This allowed us to assess how much of the variance in students’ summary writing scores was accounted for at the classroom level. The intraclass correlation (ICC) for summary writing quality was .16 at the classroom level. This suggested that most of the variance in students’ summary writing quality scores (the remaining 84%, i.e., 1 − ICC = 0.84) was at the individual level.
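
To make the arithmetic explicit, the ICC of a two-level null model is the between-classroom variance divided by the total variance. The sketch below uses hypothetical variance components chosen only so that the ICC equals the reported .16; the fitted variance estimates themselves are not given in this section.

```python
def intraclass_correlation(between_var, within_var):
    """ICC for a two-level null model: share of total variance
    that lies between clusters (here, classrooms)."""
    total = between_var + within_var
    if total <= 0:
        raise ValueError("total variance must be positive")
    return between_var / total


# Hypothetical variance components (not the study's estimates).
between_classrooms = 0.16
within_classrooms = 0.84

icc = intraclass_correlation(between_classrooms, within_classrooms)
student_level_share = 1 - icc
print(f"ICC = {icc:.2f}; student-level share = {student_level_share:.2f}")
```

Only the ratio of the two components matters: any pair of values in the proportion 16 to 84 yields the same ICC.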

To examine the impact of each of our predictors, we entered the data in steps. In step one, we examined the impact of socio-demographic characteristics (EL status, SES, special education status and grade) on writing quality. As can be observed in Model 2 (Table 4), special education eligibility was a significant predictor of writing quality in the model, suggesting that students with a special education designation produced summaries that were evaluated to be of lower quality by human raters (coefficient = −0.62, p < 0.05). Free and reduced-price lunch eligibility, English Language Learner designation and grade were non-significant predictors of summary quality in this sample after accounting for the other predictors and classroom-level random effects. However, these variables were retained in all models to account for maturation and experience with school language. These student characteristics explained an additional 4% of the variance in summary writing quality at the student level (χ² = 21.53, p < .05).

In the third model, we added variables capturing the length of students’ summaries, the topic of the text summarized, students’ ratio of misspellings and ratio of borrowed text. All variables made a significant contribution to explaining writing quality, with the exception of topic, which was unsurprising given that the texts students read prior to writing were designed to be comparable. The ratio of unconventionally spelled words in the text made a significant negative contribution to students’ written summary quality scores (coefficient = −0.21, p < 0.01). Similarly, students whose texts contained a higher proportion of text directly borrowed from the source texts produced summaries that were assessed to be of lower quality (coefficient = −0.27, p < 0.01). Longer summaries were, not surprisingly, linked with higher written summary quality scores (coefficient = 0.03, p < 0.001). The addition of these text-level features explained an additional 31% of the variance in summary writing quality at the student level (Model 2 versus Model 3, χ² = 103.31, p < .001).

In the fourth model, CALS-I scores were added to the model and were found to explain an additional 17% of the variance in students’ summary quality scores (Model 3 versus Model 4, χ² = 51.99, p < .001). Even while controlling for all of the other variables in the model, academic language proficiency made a significant positive contribution to students’ summarization skills, suggesting that the quality of written summaries depends, in part, on the writer’s level of language skill (coefficient = 0.66, p < 0.001). In the final model, we added students’ levels of reading comprehension of the source text. In line with the hypothesis that summary quality would be impacted by the writer’s comprehension of the source text, this variable was a significant contributor to students’ writing quality scores, explaining an additional 2% of the variance in summary writing quality over and above the other variables (Model 4 versus Model 5, χ² = 12.02, p < .001). Together, the variables included in the model explained a large proportion of the total variance in summary quality (46%) at the student level (Model 5 versus Model 1, χ² = 167.32, p < .001), which constitutes a moderate effect. Notably, in the final model, the addition of the reading comprehension scores did not render CALS non-significant, suggesting that there is unique variance contributed by these two measures.

To examine whether the findings were robust, we also conducted an analysis in which CALS-I and reading comprehension scores were entered in reverse order (Table 4, Model 6). In this model, both CALS-I and reading comprehension scores remained significant, and patterns of association remained consistent with the prior model. In addition, given the high correlation between reading comprehension and CALS-I scores (0.74), we re-ran our final model omitting the multilevel component in order to generate variance inflation factors (VIF). VIF values greater than or equal to 10 are conventionally taken to indicate collinearity. In this analysis, VIF values were below three (CALS-I = 2.69; GISA, Reading Comprehension = 2.73). Finally, because the spelling variable included in the above analysis was potentially functioning as a suppressor variable (it was correlated with the independent variables, but not with the criterion variable), we re-ran the final model excluding this variable. Excluding the misspelling ratio from the model had no influence on the patterns of association or levels of statistical significance (see Table 4, Model 7).
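
The relationship between a VIF and the shared variance among predictors can be illustrated with a short sketch. It relies on the standard definition VIF = 1 / (1 − R²), where R² comes from regressing one predictor on the others; the function names are illustrative, and the two-predictor case is a simplification of the full model reported in Table 4.

```python
def vif_from_r_squared(r_squared):
    """Variance inflation factor for a predictor whose regression on
    the other predictors yields the given R-squared."""
    if not 0.0 <= r_squared < 1.0:
        raise ValueError("R-squared must be in [0, 1)")
    return 1.0 / (1.0 - r_squared)


def r_squared_from_vif(vif):
    """Invert the definition: shared variance implied by a reported VIF."""
    if vif < 1.0:
        raise ValueError("VIF cannot be below 1")
    return 1.0 - 1.0 / vif


# Simplified case: CALS-I and reading comprehension as the only two
# predictors, so R-squared is the squared correlation (r = .74).
print(round(vif_from_r_squared(0.74 ** 2), 2))

# Shared variance implied by the VIF values reported for the full model.
print(round(r_squared_from_vif(2.69), 3), round(r_squared_from_vif(2.73), 3))
```

With only the two correlated predictors, the VIF is about 2.21. The reported values of 2.69 and 2.73 are somewhat higher, which is what one would expect when the remaining covariates also share variance with each predictor, and both remain far below the threshold of 10.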

Discussion

Linguistic skills are hypothesized to be a key factor that supports or constrains a writer’s communication of “what must be said” (content) (Alamargot & Fayol, 2009). It is the case, however, that “what must be said” changes as writers advance through schooling. For primary- and intermediate-grade writers, oral language skills are strongly linked with writing skills, which makes sense given the relative overlap in the language used for writing and speaking (Abbott & Berninger, 1993; Kim & Schatschneider, 2017). For older writers, as the content communicated in speech and in writing diverges, writing tends to require a greater focus on using academic language to achieve cohesion and to communicate content (McCutchen, 1986). This led us to hypothesize that academic language skills, like those captured by the CALS-I, would play a central role in middle graders’ proficiency in writing science summaries, after controlling for students’ reading comprehension of the source text, the impact of student characteristics (grade, English proficiency status, SES, and special education status), and the features of students’ summaries (misspelling ratio, topic, length, ratio of copied text). We find that both academic language skills and comprehension of the source text make unique contributions to science summary writing quality for students in grades four to seven. This supports the hypothesis that motivated this study: that students’ school-relevant linguistic knowledge plays a central role in expressing text understanding in writing. Below, we discuss these results in relation to prior studies, reflecting on both their theoretical relevance and practical implications.

Comprehension of a source text contributes to the quality of science summaries

Our finding that reading comprehension of a source text independently predicts science summary writing quality adds further empirical support to models of composition that posit that the writer’s level of topic knowledge is linked with producing coherent texts (Flower & Hayes, 1981). This finding is not surprising, given the text type examined in this study (summaries), and in light of evidence from prior studies that consistently find that high-knowledge writers produce higher quality texts (Bereiter & Scardamalia, 1987; Cumming et al., 2016; DeGroff, 1987; McCutchen, 1986). Yet, this is still a noteworthy finding. A common practice in the high-stakes testing arena has been to provide a source text as a way to level the background knowledge playing field. This study suggests that providing textual supports may differentially benefit skilled readers.

Core Academic Language Skills also contribute to the quality of science summaries

We find that performance on the science summary task used in this study was impacted by students’ levels of Core Academic Language Skills. The relevance of academic language skills for students’ summarization of science texts is evident when we examine texts produced by two learners in this sample on the topic of wind turbines. While both writers evidence comprehension of the source text, one text communicates meaning more clearly via the use of Core Academic Language features (Fig. 1).

What this example makes clear is that learning to participate effectively in the academic discourse community requires both coming to understand the content and acquiring the language needed to package these ideas. Our finding regarding the role of academic language in summary writing converges with those from prior studies. Across studies conducted in elementary and middle school settings, writers with lower levels of vocabulary and syntactic knowledge produce texts that are evaluated to be of lower quality, potentially because they lack the language resources to express messages and ideas to readers (Apel & Apel, 2011; Dockrell et al., 2011; Kim et al., 2011; Laufer & Nation, 1995; Olinghouse & Wilson, 2013; Silverman et al., 2015). Though writing studies conducted with middle graders have not tended to examine students’ performances in Reading-to-Write tasks, research focused on English as an Additional Language writers in secondary contexts finds that struggling L2 writers often lack knowledge of high-utility academic vocabulary, syntax, grammar and discourse-level language forms (Ascención-Delaney, 2008; Connor & Kramer, 1995; Cumming et al., 2005, 2016). We observe a similar effect of academic language proficiency in this study for a predominantly English-proficient population.

In this study, we find that both students’ reading comprehension skills and Core Academic Language Skills make unique contributions to summary writing quality. Studies that have examined reading-writing-oral language relationships in middle graders find similar patterns of relations. Abbott and Berninger (1993), in their examination of predictors of composition quality for students in grades four, five and six, find that oral language and reading skill are unique predictors. In school settings, educators frequently use Reading-to-Write (RtW) tasks to assess students’ levels of text comprehension, but it must be acknowledged that these tasks also tap into students’ academic language skills. Additional tasks that isolate these distinct skill sets may be of use to the field. For example, text reconstruction tasks offer insight into text comprehension, without also assessing productive academic language skills (Davidi & Berman, 2014). Furthermore, the CALS-I used in this study was engineered to assess Core Academic Language Skills, while minimizing the demands on text comprehension, background knowledge, and perspective taking.

Support for CALS as a multimodal construct

It has long been suggested that reading and writing may involve a set of overlapping language skills (Fitzgerald and Shanahan, 2000); yet empirical studies have rarely explored the contribution of a common operational construct of language proficiency to text comprehension and production. The innovation of this study is that it offers some provisional evidence of a cross-modality construct of academic language proficiency. We focused only on summaries, which limits the conclusions that can be drawn regarding the contribution of CALS to the larger construct of academic writing. Despite these limitations, this study provides some initial support for the hypothesis that Core Academic Language Skills, which have been demonstrated in prior studies to support text comprehension (Phillips Galloway & Uccelli, 2018; Uccelli et al., 2015a, b), also contribute to writing proficiency in middle grade students.

To date, the pathways through which reading influences writing outcomes have been underspecified. However, amidst growing evidence of the mutually supportive role of reading and writing interventions (Graham & Hebert, 2011; Graham et al., 2018), studies are needed that make salient the malleable skills that support both processes and that should be the focus of intervention. For instance, Graham and colleagues (2018) find that text interactions (reading, reading and analyzing others’ texts, and being privy to peers’ evaluations of a text) are an important lever for improving the quality of students’ writing. While these text encounters foster multiple skills and competencies that impact writing performance, students’ Core Academic Language Skills are very likely among them. After all, academic language is more frequently encountered in print than in speech.

An instructional approach that would teach CALS across reading and writing instruction would align with Ravid and Tolchinsky’s (2002) theoretical argument that the development of literate skills provides language users with a broader spectrum of linguistic options, allowing them more flexibility. They refer to this as ‘linguistic literacy,’ and reason that a key to later language development is exposure to the language of print at school through reading. In the context of this study, this theory would suggest that students with a longer history of participation in school literacy tasks would have more CALS resources and greater metalinguistic awareness, and so would be better able to write science summaries that align with academic register expectations. In this study’s cross-sectional sample, we do observe a general upward trend from grades four to six in CALS and in the quality of students’ science summaries. The findings correspond with developmental linguistics studies conducted by Berman and colleagues, which find that when compared to elementary graders, the expository texts produced by older students contain a higher proportion of CAL features: longer clauses, more complex noun phrases, and a higher percentage of multi-syllabic, abstract words (Bar-Ilan & Berman, 2007; Berman & Verhoeven, 2002; see also Nippold & Scott, 2009 for a review of expository writing development). Though provisional in nature given the small sample used in this study, these results provide motivation for testing curricular approaches that develop CALS with the goal of supporting developing readers and writers.

In conclusion, we also call for caution in how the results of this study are interpreted. Traditionally, syntactic skills and discourse organization skills have been taught in school settings through prescriptive grammar instruction that sits outside authentic reading and writing tasks; we caution against this approach. Furthermore, this study should not be interpreted as suggesting that general oral language skills are not also relevant to skilled reading and writing—extant evidence attests to their importance. Future studies should explore the CALS-I and measures of general oral language skills concurrently, adding to the field’s understanding of how these skills diverge and overlap.

Limitations and future research directions

As with all studies, we faced limitations in our analysis. One lies in our inability to examine the relationship between CALS and other academic writing tasks. The tasks used in this study to capture reading comprehension and writing proficiency are, by design, narrow. We capture comprehension of a source text, specifically an expository science text, and students’ skill in summarizing it, allowing us to characterize reading and writing relationships as they interact during a writing performance. While we might imagine that CALS would facilitate writing persuasive and expository genres by supporting the expression of ideas precisely, concisely and with a degree of epistemic cautiousness, it was beyond the scope of the present study to examine this claim. As a result, we cannot speak to reading and writing relations at large. However, future studies might explore the RtW relationship across a range of text types (including non-academic texts), further illuminating the unique contribution of general writing skills and academic language.

The measure of summary writing used here might have been made more robust by having students write multiple summaries, offering greater insight into how consistently well students were able to write this text type. This might have increased the amount of variance that could be estimated in our model and, potentially, altered the size of the correlations. Despite this limitation, this study offers insight into how students’ comprehension of a particular text supported summarization of that text, which is, we argue, a unique and important contribution. Future studies might expand upon the findings from this study by increasing the number and type of summaries students produce.

Another intriguing possibility would be to examine this development over time. In this study, we have captured only a segment of the language-writing relationship by focusing on pre-adolescent and adolescent writers. Notably, and with important implications for how we understand the developmental end goal of CALS instruction, distinctions between everyday and academic language become less categorical with development: very skilled writers often purposefully use elements of conversational language in their academic texts (Beers & Nagy, 2011; Berman & Nir-Sagiv, 2007; Hall-Mills & Apel, 2015). In light of this, we envision CALS learning as an additive, concomitant developmental process in which language knowledge needed to participate in academic discourse communit(ies), like classrooms, is expanding alongside the language needed to navigate other social communities (Cummins, 2017; Gee, 2014). CALS is only one component of an individual’s overall language proficiency. Ideally, future studies might track not only the development of academic language, but also of rhetorical flexibility in students’ expository writing.

Although we included a large number of control variables, another limitation is that we lacked measures of other elements linked with writing proficiency, including working memory and additional language skills not captured by the CALS-I. Working memory is widely acknowledged to play a role in language production and has been linked with writing skill (Berninger, 1999; Gathercole & Baddeley, 2014); however, it was beyond the scope of this study to explore whether working memory skills constrained performance in this RtW task. Moreover, the CALS-I is designed to capture skills particularly relevant to academic reading and writing; because this study does not include general language measures, it is unclear how much additional variance might be explained by the inclusion of these variables or whether the CALS-I would remain a significant predictor. Future studies should explore the relationships among more general language skills and competencies, academic language, and reading and writing outcomes.

Conclusions

In conclusion, we have shown that comprehension of a source text predicts students’ skill in summarization, and that academic language skills play a facilitative role in science summary writing, an important academic text type to examine given how often students are asked to write summaries. While text-based writing is often assumed to serve primarily as an indication of students’ text understanding, this study complicates this thinking by suggesting that language skills may play a central role in students’ expression of information acquired through reading. Most centrally, however, this study links CALS, which has already been associated with text comprehension, to skilled academic writing, offering support for the assertion that academic language skills may be a multimodal construct. In closing, these findings raise important questions about how instruction might leverage them to support students in becoming successful readers and writers of academic text and full participants in the academic community.

References

Abbott, R. D., & Berninger, V. W. (1993). Structural equation modeling of relationships among developmental skills and writing skills in primary-and intermediate-grade writers. Journal of Educational Psychology, 85(3), 478.

Ahmed, Y., Wagner, R. K., & Lopez, D. (2014). Developmental relations between reading and writing at the word, sentence, and text levels: A latent change score analysis. Journal of Educational Psychology, 106(2), 419.

Alamargot, D., & Fayol, M. (2009). Modeling the development of written transcription. In R. Beard, D. Myhill, M. Nystrand, & J. Riley (Eds.), Handbook of writing development (pp. 23–47). London: Sage.

Apel, K., & Apel, L. (2011). Identifying intraindividual differences in students’ written language abilities. Topics in Language Disorders, 31(1), 54–72.

Apel, K., Wilson-Fowler, E. B., Brimo, D., & Perrin, N. A. (2012). Metalinguistic contributions to reading and spelling in second and third grade students. Reading and Writing, 25(6), 1283–1305.

Ascención-Delaney, Y. (2008). Investigating the reading-to-write construct. Journal of English for Academic Purposes, 7(3), 140–150.

Bar-Ilan, L., & Berman, R. A. (2007). Developing register differentiation: The Latinate-Germanic divide in English. Linguistics, 45(1), 1–35.

Barr, C., Uccelli, P., & Phillips Galloway, E. (2018). Core Academic Language Skills-Instrument (Technical Report, No. 1).

Barr, C., Uccelli, P., & Phillips Galloway, E. (under review). Design and validation of a measure of academic language proficiency.

Beers, S. F., & Nagy, W. E. (2009). Syntactic complexity as a predictor of adolescent writing quality: Which measures? Which genre? Reading and Writing, 22(2), 185–200.

Beers, S. F., & Nagy, W. E. (2011). Writing development in four genres from grades three to seven: Syntactic complexity and genre differentiation. Reading and Writing: An Interdisciplinary Journal, 24(2), 183–202.

Bereiter, C., & Scardamalia, M. (1987). An attainable version of high literacy: Approaches to teaching higher-order skills in reading and writing. Curriculum Inquiry, 17(1), 9–30.

Berman, R. A., & Nir-Sagiv, B. (2007). Comparing narrative and expository text construction across adolescence: A developmental paradox. Discourse Processes, 43(2), 79–120.

Berman, R., & Nir-Sagiv, B. (2009). Cognitive and linguistic factors in evaluating text quality: Global versus local. In V. Evans & S. Pourcel (Eds.), New directions in cognitive linguistics (pp. 421–440). Amsterdam: John Benjamins.

Berman, R. A., & Ravid, D. (2009). Becoming a literate language user: Oral and written text construction across adolescence. In D. R. Olson & N. Torrance (Eds.), Cambridge handbook of literacy (pp. 92–111). Cambridge: Cambridge University Press.

Berman, R. A., & Verhoeven, L. (2002). Developing text-production abilities across languages, genre, and modality. Written Languages and Literacy, 5(1), 1–44.

Berninger, V. W. (1999). Coordinating transcription and text generation in working memory during composing: Automatic and constructive processes. Learning Disability Quarterly, 23, 99–112.

Berninger, V. W., Mizokawa, D. T., Bragg, R., Cartwright, A., & Yates, C. (1994). Intraindividual differences in levels of written language. Reading & Writing Quarterly: Overcoming Learning Difficulties, 10(3), 259–275.

Berninger, V. W., Winn, W., MacArthur, C. A., Graham, S., & Fitzgerald, J. (2006). Implications of advancements in brain research and technology for writing development, writing instruction, and educational evolution. In C. A. MacArthur, S. Graham, & J. Fitzgerald (Eds.), Handbook of writing research (pp. 96–114). New York: Guilford Press.

Biber, D., Conrad, S., & Cortes, V. (2004). If you look at…: Lexical bundles in university teaching and textbooks. Applied Linguistics, 25(3), 371–405.

Biber, D., & Gray, B. (2015). Grammatical complexity in academic English: Linguistic change in writing. Cambridge: Cambridge University Press.

Carlisle, J. F. (2010). Effects of instruction in morphological awareness on literacy achievement: An integrative review. Reading Research Quarterly, 45(4), 464–487.

Common Core State Standards Initiative. (2010). Common core standards for English language arts and literacy in history/social studies, science, and technical subjects. Washington, DC: Council of Chief State School Officers (CCSSO).

Connor, U. M., & Kramer, M. G. (1995). Writing from sources: Case studies of graduate students in business management. In D. Belcher & G. Braine (Eds.), Academic writing in a second language: Essays on research and pedagogy (pp. 155–182). Norwood, NJ: Ablex.

Cox, B. E., Shanahan, T., & Sulzby, E. (1990). Good and poor elementary readers’ use of cohesion in writing. Reading Research Quarterly, 25(1), 47–65.

Crowhurst, M. (1987). Cohesion in argument and narration at three grade levels. Research in the Teaching of English, 21(2), 185–201.

Cumming, A., Kantor, R., Baba, K., Erdosy, U., Eouanzoui, K., & James, M. (2005). Differences in written discourse in independent and integrated prototype tasks for next generation TOEFL. Assessing Writing, 10(1), 5–43.

Cumming, A., Lai, C., & Cho, H. (2016). Students’ writing from sources for academic purposes: A synthesis of recent research. Journal of English for Academic Purposes, 23, 47–58.

Cummins, J. (2017). Teaching minoritized students: Are additive approaches legitimate? Harvard Educational Review, 87(3), 404–425.

Davidi, O., & Berman, R. A. (2014). Writing abilities of pre-adolescents with and without language/learning impairment in restructuring an informative text. In B. Arfé, J. Dockrell, & V. Berninger (Eds.), Writing development in children with hearing loss, dyslexia, or oral language problems: Implications for assessment and instruction. Oxford: Oxford University Press.

DeGroff, L. J. C. (1987). The influence of prior knowledge on writing, conferencing, and revising. The Elementary School Journal, 88(2), 105–118.

Dockrell, J. E., Lindsay, G., Connelly, V., & Mackie, C. (2007). Constraints in the production of written text in children with specific language impairments. Exceptional Children, 73(2), 147–164.

Dockrell, J. E., Lindsay, G., & Palikara, O. (2011). Explaining the academic achievement at school leaving for pupils with a history of language impairment: Previous academic achievement and literacy skills. Child Language Teaching and Therapy, 27(2), 223–237.

Enders, C. K., & Tofighi, D. (2007). Centering predictor variables in cross-sectional multilevel models: A new look at an old issue. Psychological Methods, 12(2), 121–138.

Fitzgerald, J., & Shanahan, T. (2000). Reading and writing relations and their development. Educational Psychologist, 35(1), 39–50.

Flower, L., & Hayes, J. R. (1980). The cognition of discovery: Defining a rhetorical problem. College Composition and Communication, 31(1), 21–32.

Flower, L. S., & Hayes, J. R. (1981). A cognitive process theory of writing. College Composition and Communication, 32, 365–387.

Galloway, E. P., & Uccelli, P. (in press). Developmental relationships between academic language and reading comprehension. Journal of Educational Psychology.

Gathercole, S. E., & Baddeley, A. D. (2014). Working memory and language. Hove: Psychology Press.

Gebril, A., & Plakans, L. (2009). Investigating source use, discourse features, and process in integrated writing tests. Spaan Fellow Working Papers in Second or Foreign Language Assessment, 7(1), 47–84.

Gee, J. P. (2014). Decontextualized language: A problem, not a solution. International Multilingual Research Journal, 8(1), 9–23.

Gough, P. B., & Tunmer, W. E. (1986). Decoding, reading, and reading disability. Remedial and Special Education, 7(1), 6–10.

Graham, S., Berninger, V. W., Abbott, R. D., Abbott, S. P., & Whitaker, D. (1997). Role of mechanics in composing of elementary school students: A new methodological approach. Journal of Educational Psychology, 89(1), 170–182.

Graham, S., & Harris, K. R. (2015). Common core state standards and writing: Introduction to the special issue. The Elementary School Journal, 115(4), 457–463.

Graham, S., & Harris, K. R. (2017). Reading and writing connections: How writing can build better readers (and vice versa). In C. Ng & B. Bartlett (Eds.), Improving reading and reading engagement in the 21st century (pp. 333–350). Singapore: Springer.

Graham, S., & Hebert, M. (2011). Writing to read: A meta-analysis of the impact of writing and writing instruction on reading. Harvard Educational Review, 81(4), 710–744.

Graham, S., Liu, K., Bartlett, B., Ng, C., Harris, K. R., Aitken, A., et al. (2017). Reading for writing: A meta-analysis of the impact of reading and reading instruction on writing. Review of Educational Research, 88(2), 243–284.

Graham, S., Liu, X., Bartlett, B., Ng, C., Harris, K. R., & Aitken, A. (2018). Reading for writing: A meta-analysis of the impact of reading interventions on writing. Review of Educational Research, 88(2), 243–284.

Graham, S., & Perin, D. (2007). A meta-analysis of writing instruction for adolescent students. Journal of Educational Psychology, 99(3), 445.

Halliday, M. A. K., & Martin, J. R. (2003). Writing science: Literacy and discursive power. Abingdon: Taylor & Francis.

Hall-Mills, S., & Apel, K. (2015). Linguistic feature development across grades and genre in elementary writing. Language, Speech, and Hearing Services in Schools, 46(3), 242–255.

Hayes, J. R. (1996). A new framework for understanding cognition and affect in writing. In C. M. Levy & S. Ransdell (Eds.), The science of writing: Theories, methods, individual differences, and applications (pp. 1–27). Mahwah, NJ: Lawrence Erlbaum.

Hiebert, E. H. (2013). Core vocabulary and the challenge of complex text. In S. B. Neuman & L. B. Gambrell (Eds.), Quality reading instruction in the age of common core standards (pp. 149–161). Newark, DE: International Reading Association.

Hood, S. (2008). Summary writing in academic contexts: Implicating meaning in processes of change. Linguistics and Education, 19(4), 351–365.

Jisa, H. (2004). Growing into academic French. In R. A. Berman (Ed.), Language development across childhood and adolescence (pp. 135–162). Amsterdam: Benjamins.

Jisa, H., Reilly, J., Verhoeven, L., Baruch, E., & Rosado, E. (2002). Cross-linguistic perspectives on the use of passive constructions in written texts. Journal of Written Language and Literacy, 5(2), 163–181.

Juel, C. (1988). Learning to read and write: A longitudinal study of 54 children from first through fourth grades. Journal of Educational Psychology, 80(4), 437.

Juel, C., Griffith, P. L., & Gough, P. B. (1986). Acquisition of literacy: A longitudinal study of children in first and second grade. Journal of Educational Psychology, 78(4), 243.

Kellogg, R. T. (2008). Training writing skills: A cognitive developmental perspective. Journal of Writing Research, 1(1), 1–26.

Kent, S. C., & Wanzek, J. (2016). The relationship between component skills and writing quality and production across developmental levels: A meta-analysis of the last 25 years. Review of Educational Research, 86(2), 570–601.

Kim, Y. S., Al Otaiba, S., Folsom, J. S., Greulich, L., & Puranik, C. (2014). Evaluating the dimensionality of first-grade written composition. Journal of Speech, Language, and Hearing Research, 57(1), 199–211.

Kim, Y. S., Al Otaiba, S., Puranik, C., Folsom, J. S., Greulich, L., & Wagner, R. K. (2011). Componential skills of beginning writing: An exploratory study. Learning and Individual Differences, 21(5), 517–525.

Kim, Y. S. G., & Schatschneider, C. (2017). Expanding the developmental models of writing: A direct and indirect effects model of developmental writing (DIEW). Journal of Educational Psychology, 109(1), 35–50.

Kintsch, W. (1994). Text comprehension, memory, and learning. American Psychologist, 49(4), 294.

Langer, J. A. (1986). Children reading and writing: Structures and strategies. New York City: Ablex Publishing.

Laufer, B., & Nation, P. (1995). Vocabulary size and use: Lexical richness in L2 written production. Applied Linguistics, 16(3), 307–322.

Maas, C. J., & Hox, J. J. (2005). Sufficient sample sizes for multilevel modeling. Methodology, 1(3), 86–92.

McCutchen, D. (1986). Domain knowledge and linguistic knowledge in the development of writing ability. Journal of Memory and Language, 25(4), 431–444.

Miller, B., & McCardle, P. (2011). Reflections on the need for continued research on writing. Reading and Writing, 24(2), 121–132.

Moje, E. B. (2015). Doing and teaching disciplinary literacy with adolescent learners: A social and cultural enterprise. Harvard Educational Review, 85(2), 254–278.