Abstract

Does rationality require imprecise credences? Many hold that it does: imprecise evidence requires correspondingly imprecise credences. I argue that this is false. The imprecise view faces the same arbitrariness worries that were meant to motivate it in the first place. It faces these worries because it incorporates a certain idealization. But doing away with this idealization effectively collapses the imprecise view into a particular kind of precise view. On this alternative, our attitudes should reflect a kind of normative uncertainty: uncertainty about what to believe. This view refutes the claim that precise credences are inappropriately informative or committal. Some argue that indeterminate evidential support requires imprecise credences; but I argue that indeterminate evidential support instead places indeterminate requirements on credences, and is compatible with the claim that rational credences may always be precise.

A traditional theory of uncertainty says that beliefs come in degrees. Degrees of belief (“credences”) have real number values between 0 and 1, where 1 conventionally represents certain belief, 0 represents certain disbelief, and values in between represent degrees of uncertainty. We have elegant, well-understood normative theories for credences: norms for how credences should hang together at a time, how they should change in response to new evidence, and how they should influence our preferences.

Many have argued, against the traditional theory, that rational subjects have imprecise credences. Instead of sharp, real number values like .4, rational agents have credences that are spread out over multiple real numbers, e.g. intervals like [.2, .8]. There are descriptive and normative versions of the view.

The descriptive (psychological) version argues that humans’ cognitive capacities don’t allow for infinitely sharp credences. In creatures like us, there’s typically no genuine psychological difference between having credence .8 and credence .8000000000000001. So we are better represented with imprecise credences.Footnote 1

The normative (epistemic) version argues that even idealized agents without our cognitive limitations shouldn’t have sharp credences. Epistemic rationality sometimes demands imprecise credences.Footnote 2

This paper concerns the latter view. The former view may be correct as a matter of psychological fact. Creatures like us may be better represented by some imprecise credence model or other. But this doesn’t tell us much about epistemology. Epistemic theories of imprecise credences say that, for evidential reasons, it can be irrational to have precise credences. But these theories typically represent ideal rationality in ways that go far beyond human cognitive capacities.

This paper concerns, instead, the contents of epistemic norms.Footnote 3 Does rationality require imprecise credences? Many hold that it does: imprecise evidence requires correspondingly imprecise credences. I’ll argue that this is false. Imprecise evidence, if such a thing exists, at most requires uncertainty about what credences to have. It doesn’t require credences that are themselves imprecise.

In Sects. 1 and 2, I summarize motivations for the imprecise view and put forward a challenge for it. Briefly, the view faces the same arbitrariness worries that were meant to motivate it in the first place. The imprecise view faces these challenges because it incorporates a certain idealization. But doing away with that idealization effectively collapses the imprecise view into a particular kind of precise view. On this alternative, our attitudes should reflect a kind of normative uncertainty: uncertainty about what to believe. I defend this proposal in Sect. 3.

In Sects. 4 and 5, we reach the showdown. Section 4 argues against the claim that precise credences are inappropriately informative or committal. Section 5 argues against the claim that indeterminate evidential support requires imprecise credences. Are there any reasons to go imprecise that don’t equally support going precise with normative uncertainty? The answer, I argue, is no. Anything mushy can do, sharp can do better.

1 Imprecise credences

1.1 What are imprecise credences?

Imprecise credences, whatever they are, are doxastic states that are more coarse-grained than precise credences. The psychological nature of imprecise credences is widely contested. How are they behaviorally manifested? How are they functionally distinct from precise credences?Footnote 4 Are nonideal agents like us capable of having imprecise credences—for example, interval or set-valued credences? No orthodox answer to these questions has emerged.

How should imprecise credences be modeled? Some have suggested representing imprecise credences with a comparative confidence ranking.Footnote 5 Some suggest using imprecise credence functions, from propositions (sentences, events, ...) to lower and upper bounds, or to intervals within [0, 1].Footnote 6 I’ll focus on a model, defended at length by Joyce (2010), that represents agents’ doxastic states with sets of precise probability functions.

I’ll use the following notation: C is the set of probability functions c that characterize an agent’s belief state; call C the agent’s “representor.” To represent imprecise credences toward individual propositions, we can say that C(A) is the set of probabilities assigned to A by some probability function in the agent’s representor: \(C(A) = \{c(A) : c \in C\}\).

A representor can be thought of as a committee of probability functions. The committee decides by unanimous vote. If the committee unanimously votes to assign A credence greater than .5, then the agent is more confident than not in A. If the committee unanimously votes that the agent should perform some action, then the agent is rationally required to perform that action.Footnote 7 And so on.
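To make the notation and the committee metaphor concrete, here is a minimal Python sketch. It assumes a toy three-outcome space for the Jellyfish case and a three-member representor; the outcomes, the numbers, and the helper names (`credence_set`, `unanimously_more_confident_than_not`) are hypothetical illustrations, not part of any musher’s official model.

```python
from fractions import Fraction

# Hypothetical toy outcome space for the next object pulled from the bag.
OUTCOMES = ("toothpaste", "jellyfish", "other")

# A hypothetical representor C: a list of precise probability functions over OUTCOMES.
C = [
    {"toothpaste": Fraction(4, 10), "jellyfish": Fraction(1, 10), "other": Fraction(5, 10)},
    {"toothpaste": Fraction(6, 10), "jellyfish": Fraction(1, 10), "other": Fraction(3, 10)},
    {"toothpaste": Fraction(8, 10), "jellyfish": Fraction(1, 20), "other": Fraction(3, 20)},
]

def prob(c, event):
    """Probability that the precise function c assigns to an event (a set of outcomes)."""
    return sum(c[o] for o in event)

def credence_set(C, event):
    """C(A) = {c(A) : c in C}: the values some member of the representor assigns to A."""
    return {prob(c, event) for c in C}

def unanimously_more_confident_than_not(C, event):
    """The 'committee' counts A as more likely than not only if every member gives A > 1/2."""
    return all(prob(c, event) > Fraction(1, 2) for c in C)

toothpaste = {"toothpaste"}
not_jellyfish = {"toothpaste", "other"}
print(sorted(credence_set(C, toothpaste)))
print(unanimously_more_confident_than_not(C, toothpaste))
print(unanimously_more_confident_than_not(C, not_jellyfish))
```

With these numbers, C(toothpaste) contains 2/5, 3/5, and 4/5, so the committee is not unanimously more confident than not that the next object is toothpaste, though it is unanimously confident that it won’t be a jellyfish.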

1.2 The epistemic argument for imprecise credences

Many proponents of imprecise credences claim that in the face of some bodies of evidence, it is simply irrational to have precise credences. These bodies of evidence are somehow ambiguous (they point in conflicting directions) or unspecific (they don’t point in any direction).Footnote 8 It’s an open question how widespread this kind of evidence is. Often it’s implicitly assumed that we can only have precise credences where we have knowledge of objective chances. Any evidence that doesn’t entail chances is imprecise, and requires imprecise credences.

Note that the dialectic here is somewhat different from more common debates about imprecise credences. In other contexts, fans of imprecise credences have faced the challenge of showing that imprecise credences are rationally permissible: for example, in debates over whether imprecise credences can serve as a guide to rational actionFootnote 9 or allow for inductive learning.Footnote 10

This paper concerns the evidentialist argument for imprecise credences. The argument is thought to show that imprecise credences aren’t merely permissible: in the face of imprecise evidence, they’re rationally required. Since the phrase “proponent of imprecise credences” doesn’t distinguish between these two views, we have to introduce new terminology. Proponents of rationally required imprecise credences will be called “mushers.”Footnote 11 I’ll defend the “sharper” view: that precise credences are always rationally permissible.

We’ll consider two examples that are commonly used to elicit the musher intuition:

Jellyfish. “A stranger approaches you on the street and starts pulling out objects from a bag. The first three objects he pulls out are a regular-sized tube of toothpaste, a live jellyfish, and a travel-sized tube of toothpaste. To what degree should you believe that the next object he pulls out will be another tube of toothpaste?” (Elga 2010, 1)

If there’s any such thing as imprecise evidence, this looks like a good candidate. Unless you have peculiar background beliefs, the evidence you’ve received will feel too unspecific or ambiguous to support any particular assignment of probability. It doesn’t obviously seem to favor a credence like .44 over a credence like .78 or .21. According to the musher, it would be epistemically inappropriate to assign any precise credence. Instead, you should take on a state that equally encompasses all of the probability functions that could be compatible with the evidence.

A second case:

Mystery Coin. You have a coin that was made at a factory where they can make coins of any bias. You have no idea whatsoever what bias your coin has. What should your credence be that the next time you toss the coin, it’ll land heads? (See e.g. Joyce 2010.)

This case is more theoretically loaded. There is a sharp credence that stands out as a natural candidate: .5. After all, you have no more reason to favor heads than to favor tails; your evidence is symmetric. Credence .5 in both heads and tails seems prima facie to preserve this symmetry.

But Joyce (2010) and others have argued that the reasoning that lands us at this answer is faulty. The reasoning presumably relies on the principle of indifference, which says that if there is a finite set of mutually exclusive possibilities and you have no reason to believe any one more than any other, then you should distribute your credence equally among them. But the principle of indifference faces serious (though perhaps not decisive) objections.Footnote 12 Without something like it, what motivates the .5 answer?

According to Joyce, nothing does. There’s no more reason to settle on a credence like .5 than a credence like .8 or .421. Joyce sees this as a case of imprecise evidence. If you knew the objective chance of the coin’s landing heads, then you should adopt a precise credence. But if you have no information about the chance, you should have an imprecise credence that includes all of the probabilities that could be equal to the objective chance of the coin’s landing heads, given your evidence. In this case, that may be the full unit interval [0, 1].Footnote 13

The musher’s assessment of these cases: any precise credence function would be an inappropriate response to the evidence. It would amount to taking a definite stance when the evidence doesn’t justify a definite stance. It would mean adopting an attitude that is in some way more informative than what the evidence warrants. It would mean failing to fully withhold judgment where judgment ought to be fully withheld.

Mushers in their own words:

[E]ven if men have, at least to a good degree of approximation, the abilities bayesians attribute to them, there are many situations where, in my opinion, rational men ought not to have precise utility functions and precise probability judgments. (Levi 1974, 394–395)

If there is little evidence concerning \(\Omega\) then beliefs about \(\Omega\) should be indeterminate, and probability models imprecise, to reflect the lack of information. (Walley 1991)

Precise credences ...always commit a believer to extremely definite beliefs about repeated events and very specific inductive policies, even when the evidence comes nowhere close to warranting such beliefs and policies. (Joyce 2010, 285)

[In Elga’s Jellyfish case,] you may rest assured that your reluctance to have a settled opinion is appropriate. At best, having some exact real number assessment of the likelihood of more toothpaste would be a foolhardy response to your unspecific evidence. (Moss 2014, 2)

Hillary Clinton will win the 2016 U.S. presidential election. Tesla Motors’ stock price will be over $310 on January 1, 2016. Dinosaurs were wiped out by a giant asteroid, rather than gradual climatic change. If you have a perfectly precise credence on any of these matters, you might be a little off your rocker. Having a precise credence for a proposition X means having opinions that are so rich and specific that they pin down a single estimate c(X) of the truth-value of X. ...If your evidence is anything like mine, it is too incomplete and ambiguous to justify such a rich and specific range of opinions. (Konek, forthcoming, 1)

The musher’s position in slogan form: imprecise evidence requires imprecise credences.Footnote 14

There are two strands of thought amongst mushers about the nature of imprecise evidence:

1. The most common assumption is that any evidence that doesn’t entail a precise objective chance for a proposition A is imprecise with respect to A.Footnote 15 The notion of imprecise evidence is often illustrated by contrasting cases like Mystery Coin with fair coin tosses; the form of evidence that appears in the Ellsberg paradox is another example.Footnote 16 In practice, this arguably means that unless evidence entails either A or its negation, it is imprecise with respect to A. There are arguably no realistic cases where it’s rational to be certain of precise, non-extremal objective chances.

2. Some mushersFootnote 17 seem to treat imprecise evidence as less ubiquitous, arising in cases where individual items of evidence pull hard in opposing directions: court cases where both the prosecution and defense have mounted compelling arguments with complex and detailed evidence; cases of entrenched disagreement among experts on the basis of shared evidence; and so on. These mushers generally allow that highly incomplete evidence is also imprecise. (For example, one’s attitude about the defendant’s guilt upon receiving a jury summons, before learning anything about the case; for another, the Jellyfish example above.) For this sort of musher, the notion of imprecise evidence is left both intuitive and vague: there may be no bright dividing line between precise and imprecise evidence, as there is for the first sort of musher.

I intend to cast a broad net, and my use of “imprecise evidence” is intended to be compatible with either interpretation. It’s the mushers’ contention that there are some bodies of evidence that intuitively place special demands on our credences: they require a kind of uncertainty or suspension of judgment beyond merely having middling credence in the relevant propositions. The musher holds that the best way of understanding the required form of uncertainty uses the notion of imprecise credences. My contention is that if any form of evidence does place these special demands, the special form of uncertainty they demand is better represented in other ways.

2 A challenge for imprecise credences

2.1 The arbitrariness challenge

In cases like the Jellyfish case, the musher claims that the evidence is too unspecific or ambiguous to support any precise credence. If you were to adopt a precise credence that the next item pulled from the bag would be toothpaste, what evidential considerations could possibly justify it over a nearby alternative?

But the musher faces a similar challenge. What imprecise credence is rationally permissible in the Jellyfish case? The musher is committed to there being one. Suppose their answer is that, given your total evidence, the rationally obligatory credence is the interval [.2, .8]. Then the musher faces the same challenge as the sharper: why is it rationally required to have credence [.2, .8]? What evidential considerations could possibly justify that particular imprecise credence over nearby alternatives? How could the evidence be so unambiguous and specific as to single out any one particular interval? This objection is discussed in Good (1962), Walley (1991), and Williamson (2014).Footnote 18

Three moves the musher could make:

First, the musher might go permissivist. They might say [.2, .8] and [.2, .80001] are both rationally permissible, given the relevant total evidence. We don’t need to justify adopting one over the other.

The problem: going permissivist undermines the most basic motivations for the musher position. The permissivist sharper can equally say: when the evidence is murky, more than one precise credence is rationally permitted. The musher was right, the permissivist sharper allows, that imprecise evidence generated an epistemic situation where any precise credence would be arbitrary. But any imprecise credence would also be arbitrary. So, thus far we have no argument that imprecise credences are epistemically better. (This may be why, as a sociological fact, mushers tend not to be permissivists.)

Moreover, the problem still re-emerges for the imprecise permissivist: which credence functions are rationally permissible, which are rationally impermissible, and what could possibly ground a sharp cutoff between the two? Suppose the greatest permissible upper bound for an imprecise credence in the toothpaste case is .8. What distinguishes permissible imprecise credences with an upper bound of .8 from impermissible imprecise credences with an upper bound of .80001? (You might say: go radically permissive; permit all possible sets of credences. But this is to abandon the musher view, since precise credences will be rationally permissible. Otherwise, what justifies permitting set-valued credences of cardinality 2 while forbidding those with cardinality 1?)

Second, the musher might say that in cases like the Jellyfish case, there’s only one rationally permissible credence, and it’s [0, 1]. The only way it would be permissible to form any narrower credence would be if you knew the objective chances.

Problem: given how rarely we ever have information about objective chances of unknown events—personally, I’ve never seen a perfectly fair coin—virtually all of our uncertain credences would be [0, 1]. Surely rational thinkers can be more confident of some hypotheses over others without knowing anything about chances.

One might object: even with ordinary coins, you do know that the chance of heads is not .9. So you needn’t have maximally imprecise credences. Reply: there’s no determinate cutoff between chances of heads I can rule out and those I can’t. So the musher still must explain how a unique imprecise credence could be rationally required, or how a unique set of imprecise credences could be rationally permissible. Mushers would still rely unjustifiably on sharp cutoff points in their models—cutoffs that correspond to nothing in the epistemic norms.

2.2 A solution: vague credences

Finally, the musher might say that set-valued credences are a simplifying idealization. Rational credences are not only imprecise but also vague. Instead of having sharp cutoffs, as intervals and other sets do, they have vague boundaries.

The sharp cutoff was generated by the conception of imprecise credences as simply representable by sets of real numbers. Set membership creates a sharp cutoff: a probability is either in or out. But evidence is often too murky: there isn’t a bright line between probabilities ruled out by the evidence and probabilities ruled in.

So imprecise credences should be represented with some sort of mathematical object more structured than mere sets of probabilities. We need a model that represents their vagueness. The natural way to achieve this is to introduce a weighting. We should allow some probabilities to have more weight than others. In the example above, probabilities nearer to 0 or 1 receive less weight than probabilities closer to the middle.Footnote 19

What does the weighting represent? It should just represent a vague version of what set membership represents for non-vague imprecise credences.Footnote 20 What is represented about an agent by the claim that a probability function c is in an agent’s representor C? Joyce (2010) writes: “Elements of C are, intuitively, probability functions that the believer takes to be compatible with her total evidence” (288).

It’s not clear what Joyce means by “compatible with her total evidence.” He certainly can’t mean that these probability functions are rationally permissible for the agent to adopt as her credence function. After all, the elements of C are all precise, and the musher holds that, in the face of imprecise evidence, precise credences are rationally impermissible.

So we have to interpret “compatible with total evidence” differently from “rational to adopt.” Leave it as an open question what it means for the moment. If imprecise credences are to be vague, we should assume this “compatibility” comes in degrees. Then we let the weighting over probabilities represent degrees of “compatibility.”

Note that imposing some structure on imprecise credences can solve problems beyond the arbitrariness challenge I’ve presented. One of the big challenges for fans of imprecise credences is to give a decision theory that makes agents behave in ways that are intuitively rational. A comparatively uncontroversial decision rule, E-admissibility, permits an agent to choose any option that maximizes expected utility according to at least one probability function in her representor. But, as Elga (2010) argues, E-admissibility leaves agents vulnerable to a variant of a diachronic Dutch book. Generating a decision rule that avoids this problem is difficult.Footnote 21
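The following sketch shows how E-admissibility can permit rejecting each of two bets that, taken together, guarantee a gain. The payoffs, the grid representor running from 0.1 to 0.8, and the function names are assumptions chosen for illustration in the spirit of Elga’s argument, not a reproduction of his example; each offer is also treated as a separate one-shot choice, which simplifies the diachronic setting.

```python
from fractions import Fraction

# Illustrative (assumed) payoffs on a proposition H.
BET_A = {"H": -10, "not_H": 15}   # pays off if H is false
BET_B = {"H": 15, "not_H": -10}   # pays off if H is true
REJECT = {"H": 0, "not_H": 0}

def expected_utility(p_h, option):
    """Expected payoff of an option under a precise probability p_h for H."""
    return p_h * option["H"] + (1 - p_h) * option["not_H"]

def e_admissible(representor, options):
    """Names of options that maximize expected utility for at least one member."""
    admissible = set()
    for p_h in representor:
        best = max(expected_utility(p_h, opt) for opt in options.values())
        admissible |= {name for name, opt in options.items()
                       if expected_utility(p_h, opt) == best}
    return admissible

# A hypothetical imprecise credence in H, represented by a finite grid from 0.1 to 0.8.
representor = [Fraction(k, 10) for k in range(1, 9)]

first = e_admissible(representor, {"accept": BET_A, "reject": REJECT})
second = e_admissible(representor, {"accept": BET_B, "reject": REJECT})

# Accepting both bets yields +5 whichever way H turns out,
# yet E-admissibility permits rejecting each bet when it is offered.
both = {state: BET_A[state] + BET_B[state] for state in ("H", "not_H")}
print(first, second, both)
```

Rejecting the first bet is E-admissible because it maximizes expected utility for members near 0.8, and rejecting the second is E-admissible because it does so for members near 0.1; an agent who takes both permitted options forgoes a sure gain.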

The added structure of weighted imprecise credences makes Elga’s pragmatic challenge easier to avoid. Probabilities that have greater weight within an imprecise credence plausibly have more effect on what actions are rational for an agent to perform. Probabilities with less weight have less bearing on rational action. We can use the weighting to generate a weighted average of the probabilities an imprecise credence function assigns to each possible state of the world. Then our decision rule could be to maximize expected utility according to the weighted average probabilities.Footnote 22
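A minimal sketch of the weighted-average rule, using hypothetical weights and the same kind of illustrative bets (again assumed numbers, not Elga’s): members of the representor near the middle get more weight, a member that is definitively ruled out simply gets weight 0, and the agent maximizes expected utility relative to the single weighted-average probability.

```python
from fractions import Fraction

# Illustrative (assumed) bets on a proposition H.
BET_A = {"H": -10, "not_H": 15}
BET_B = {"H": 15, "not_H": -10}

def weighted_average(weighted_representor):
    """Weighted average of the probabilities for H; weights need not be normalized."""
    total = sum(w for _, w in weighted_representor)
    return sum(p * w for p, w in weighted_representor) / total

def accepts(p_h, bet):
    """Maximize expected utility at one precise probability: accept iff EU > 0."""
    return p_h * bet["H"] + (1 - p_h) * bet["not_H"] > 0

# Hypothetical weighting over probabilities for H. Middle values get more weight;
# 0.95 is treated as ruled out, so it gets weight 0 (no separate set is needed).
weighted = [
    (Fraction(2, 10), 1), (Fraction(35, 100), 2), (Fraction(5, 10), 3),
    (Fraction(65, 100), 2), (Fraction(8, 10), 1), (Fraction(95, 100), 0),
]

p_bar = weighted_average(weighted)
print(p_bar, accepts(p_bar, BET_A), accepts(p_bar, BET_B))
```

Because the weighted average is a single number, the agent cannot reject both bets: rejecting the first requires an average of at least 3/5, and rejecting the second requires one of at most 2/5.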

Summing up: we’ve pieced together a model for imprecise credences that insulates the musher view from the same arbitrariness worries that beleaguer precise credences. We did so by representing imprecise credences as having added structure: instead of mere sets of probabilities, they include a weighting over those probabilities. Indeed, the sets themselves become redundant: whichever probabilities are definitively ruled out will simply receive zero weight. All we need is a weighting over possible probability functions.

So far so good. But there is a problem. How should we interpret this weighting? Where does it fit into our psychology of credences?

We introduced the weighting to reflect what Joyce calls “compatibility with the evidence.” That property isn’t all-or-nothing; it admits of borderline cases. The weighting reflects “degrees of compatibility.” But we already had something to fill this role in our psychology of credences, independently of imprecision. The ideally rational agent has credences in propositions about compatibility with the evidence.

And so the sharper will conclude: this special weighting over probability functions is just the agent’s credence in propositions about what credences the evidence supports. In other words, it represents the agent’s normative uncertainty about rationality. A probability gets more weight just in case the agent has higher credence that it is compatible with the evidence.Footnote 23

The structured musher view adds nothing new. It’s just an unnecessarily complicated interpretation of the standard precise credence model.

Now, there might be other strategies that the musher can pursue for responding to the arbitrariness worry. Perhaps there is some fundamental difference in the mental states associated with the weighted musher view and its sharper cousin. I don’t claim to have backed the musher into a corner.

I draw attention to the strategy of imposing weightings over probabilities in imprecise representors for two reasons. First, I think it’s the simplest and most intuitive strategy the musher can adopt for addressing the arbitrariness challenge. Second, it brings into focus an observation that I think is important in this debate, and that has so far been widely ignored: uncertainty about what’s rational can play the role that imprecise credences were designed for. Indeed, I’m going to try to convince you that it can play that role even better than imprecise credences can.

3 The precise alternative

3.1 The job of imprecise credences

Before showing how this sort of sharper view can undermine the motivations for going imprecise, let’s see what exactly the view amounts to.

Instead of having imprecise credences, I suggested, the rational agent will have a precise credence function that includes uncertainty about its own rationality and about the rationality of alternative credence functions. The weighting of each credence function c is just the agent’s credence that c is rational.

Take the probability functions that might be compatible with the evidence: \(c_1, c_2, \ldots , c_n\). The musher claims you should have the belief state \(\{c_1, c_2, \ldots , c_n\}\). The sharper view I want to defend says: you should have specific attitudes toward \(c_1, c_2, \ldots , c_n\). A key difference: the musher demands a special kind of attitude (a special vehicle of content). The sharper suggests an ordinary attitude toward a particular kind of content.
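One way to picture this contrast is as two different data structures. In the hypothetical Python sketch below, the musher’s state toward A is itself a set of values, while the sharper’s state is an ordinary precise credence in A together with precise credences in propositions of the form “\(c_i\) is rational.” Identifying each \(c_i\) by its value \(c_i(A)\), assuming exactly one candidate is rational (so those credences sum to 1), and the particular numbers are all simplifications made only for illustration.

```python
from dataclasses import dataclass
from fractions import Fraction

@dataclass(frozen=True)
class MusherState:
    """A special kind of attitude: the state toward A is a set of precise values."""
    values_for_a: frozenset

@dataclass(frozen=True)
class SharperState:
    """An ordinary attitude toward a special kind of content: one precise credence
    in A, plus precise credences in propositions of the form 'c_i is rational'."""
    credence_in_a: Fraction
    credence_c_i_rational: dict  # c_i(A) -> credence that c_i is the rational function

# Hypothetical candidates c_1, c_2, c_3, identified here only by the value each gives A.
candidates = (Fraction(3, 10), Fraction(1, 2), Fraction(7, 10))

musher = MusherState(frozenset(candidates))
sharper = SharperState(
    credence_in_a=Fraction(1, 2),  # arbitrary illustrative value
    credence_c_i_rational=dict(zip(candidates, (Fraction(1, 4), Fraction(1, 2), Fraction(1, 4)))),
)
print(musher, sharper)
```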

Imprecise credences are thought to involve a particular kind of uncertainty: whatever form of uncertainty is appropriate to maintain in the face of ambiguous or unspecific evidence. It is often said that this form of uncertainty makes it impossible to assign a probability to some proposition. I’ll discuss three forms of uncertainty about probability that may fit the bill.

First: uncertainty about objective probabilities.Footnote 24 The Mystery Coin case is a case where objective probabilities are unknown. In such cases, one’s uncertainty goes beyond the uncertainty of merely not knowing what will happen: for example, whether the coin will land heads.

Second, uncertainty about subjective probabilities. Examples like the Jellyfish case reveal that it can sometimes be difficult for ordinary agents like us to introspect our own credences. If I do, in fact, assign a precise credence to the proposition that the next item from the bag will be a tube of toothpaste, I have no idea what that credence is. Similarly if I assign an imprecise credence: I still don’t know. Of course, agents like us have introspective limitations that ideally rational agents may not have. It’s not obvious what ideal rationality requires: it might be that in some cases, rational agents should be uncertain about what their own credences are.Footnote 25 I leave this as an open possibility.

Finally, uncertainty about epistemic probabilities. In some cases, it’s unclear which way the evidence points. Epistemic probabilities are probabilities that are rationally permissible to adopt given a certain body of evidence.Footnote 26 They characterize the credences, or credal states, that are rational relative to that evidence. In the Jellyfish example, it’s hard to see how one’s evidence could point to a unique precise assignment of probability. It’s also hard to see how it could point to a unique imprecise assignment of probability. Indeed, the evidence may not determinately point at all.

So: there are three factors that may be responsible for the intuition that, in cases like Jellyfish and Mystery Coin, the evidence is imprecise: uncertainty about objective probabilities, uncertainty about subjective probabilities, and uncertainty about epistemic probabilities. Some cases of (putative) imprecise evidence may involve more than one of these forms of uncertainty. My primary focus will be on objective and epistemic probabilities.

3.2 Precise credences do the job better

On the musher view, I suggest, rational uncertainty about the objective and epistemic probabilities of a proposition A is reflected in an agent’s attitude toward A. But plausibly, the rational agent has doxastic attitudes not just toward A, but also toward propositions about the objective and epistemic probabilities of A. When evidence is imprecise, she will also be uncertain about these other propositions.

In the case of objective probabilities this should all be uncontroversial, at least to the friend of precise credences. But I want to defend a more controversial claim: that genuinely imprecise evidence, if there is such a thing, demands uncertainty about epistemic probabilities. This is a form of normative uncertainty: uncertainty about what’s rational. The norms of rationality are not wholly transparent even to ideally rational agents.

It’s compatible with even ideal rationality for an agent to be uncertain about what’s rational. [This thesis, which sometimes goes under the name Modesty, is defended in Elga (2007).]Footnote 27 An ideally rational agent can even be somewhat confident that her own credences are not rational. For example: suppose a rational agent is faced with good evidence that she tends to be overconfident about a certain topic and good competing evidence that she tends to be underconfident about that same topic. The appropriate response to this sort of evidence is to become relatively confident that her credences do not match the epistemic probabilities. (If the two competing forms of evidence are balanced, she won’t need to adjust her first-order credences at all. And no adjustment of them will reassure her that she’s rational.) So a rational agent may not know whether she has responded correctly to her evidence.Footnote 28
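The over/underconfidence example can be made concrete with a toy model. In this hypothetical Python sketch, the agent’s current credence is x; she splits her credence among three hypotheses about the rational credence: lower than x (she is overconfident), exactly x, and higher than x (she is underconfident). The numbers are assumptions, and so is the link between “balanced” evidence and “no adjustment,” which is modeled here as her expectation of the rational credence equaling x.

```python
from fractions import Fraction

# x: the agent's current credence in some proposition; d: size of the feared error.
x, d = Fraction(3, 5), Fraction(1, 10)

# Keys: candidate values for the rational credence; values: her credence in each.
hypotheses = {
    x - d: Fraction(2, 5),  # evidence of overconfidence: rational value is lower
    x: Fraction(1, 5),      # her current credence is exactly right
    x + d: Fraction(2, 5),  # evidence of underconfidence: rational value is higher
}

def expected_rational_value(hyps):
    """Her expectation of the rational credence."""
    return sum(value * weight for value, weight in hyps.items())

def credence_that_rational(hyps, y):
    """Her credence that y is the rational credence to have."""
    return hyps.get(y, Fraction(0))

# Balanced evidence: the expectation equals x, so no first-order adjustment is indicated.
print(expected_rational_value(hypotheses) == x)
# Yet she gives only 1/5 credence to x being rational, and no alternative value
# on a grid gets more than 2/5, so no adjustment would reassure her.
print(credence_that_rational(hypotheses, x))
print(max(credence_that_rational(hypotheses, Fraction(k, 100)) for k in range(101)))
```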

I claim that imprecise evidence, if it exists, requires uncertainty about epistemic probabilities. This is, of course, compatible with continuing to have precise credences.Footnote 29 Once we take into account uncertainty about objective and epistemic probabilities, it’s hard to motivate imprecision.

For example, consider the argument Joyce (2010) uses to support imprecise credences in the Mystery Coin case:

\(f_U\) [the probability density function determined by the principle of indifference] commits you to thinking that in a hundred independent tosses of the [Mystery Coin] the chances of [heads] coming up fewer than 17 times is exactly 17/101, just a smidgen (\(= 1/606\)) more probable than rolling an ace with a fair die. Do you really think that your evidence justifies such a specific probability assignment? Do you really think, e.g., that you know enough about your situation to conclude that it would be an unequivocal mistake to let $100 ride on a fair die coming up one rather than on seeing fewer than seventeen [heads] in a hundred tosses? (284)

Answer: No. If the Mystery Coin case is indeed a case of imprecise evidence, then I may be uncertain about whether my evidence justifies this (epistemic) probability assignment. If so, I will be uncertain about whether it would be an unequivocal mistake to bet in this way. After all, whether it’s an unequivocal mistake is determined by what credences are rational, not by whatever credences I happen to have.
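The arithmetic in Joyce’s example can be checked directly. Assuming, as \(f_U\) does, a uniform density over the coin’s bias, the probability of exactly k heads in n tosses is \(\binom{n}{k}\int_0^1 p^k(1-p)^{n-k}\,dp = \binom{n}{k}\frac{k!\,(n-k)!}{(n+1)!} = \frac{1}{n+1}\), so each of the 101 possible counts is equally likely. The short Python check below (the function name is hypothetical) computes this exactly with fractions.

```python
from fractions import Fraction
from math import comb, factorial

def prob_heads_count_uniform_bias(n, k):
    """P(exactly k heads in n tosses) when the bias is uniformly distributed on [0, 1].
    Uses C(n, k) * integral_0^1 p^k (1 - p)^(n - k) dp = C(n, k) * k! (n - k)! / (n + 1)!."""
    return Fraction(comb(n, k) * factorial(k) * factorial(n - k), factorial(n + 1))

# "Fewer than 17 heads" means 0, 1, ..., 16 heads.
p_fewer_than_17 = sum(prob_heads_count_uniform_bias(100, k) for k in range(17))
p_ace = Fraction(1, 6)  # rolling a one with a fair die

print(p_fewer_than_17, p_fewer_than_17 - p_ace)
```

This confirms Joyce’s figures: 17/101, which exceeds 1/6 by exactly 1/606.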

A rational agent might independently know what’s rational to believe. She might know the higher-order chances; she might learn from the Oracle what’s rational.Footnote 30 If the Oracle tells an agent that a certain precise credence is rational, then the agent should adopt that credence. In such cases, there’s no good reason to think of the evidence as imprecise. There’s nothing murky about it. Chances are just one of the many facts that evidence might leave unsettled.

So: contra the musher orthodoxy, I conclude that evidence that underdetermines chances isn’t necessarily imprecise evidence. What is distinctive about imprecise evidence? I claim: the distinctive feature of imprecise evidence is that it rationalizes uncertainty about rationality.

Whatever imprecise evidence might require of our attitudes about what’s rational, it only places a weak constraint: uncertainty. A question looms: which precise credences are rational? After all, the musher’s initial challenge to the sharper was to name any first order credences in the Jellyfish case that could seem rationally permissible.

The kind of view I’m advocating does not and should not offer an answer to that question. The view is that, in cases of imprecise evidence, rationality requires withholding judgment about which credences are rational. The view would be self-undermining if it also tried to answer the question of what credences are rational.Footnote 31

One might wonder: how does normative uncertainty of this sort translate into rational action? How should I decide how to behave when I’m uncertain what’s rational? The most conservative view is that normative uncertainty doesn’t affect how it’s rational to act, except insofar as it constrains what first-order credences are rational.Footnote 32 A simple example: suppose you are offered a bet about whether A. Then you should choose on the basis of your credence in A, not your credence in the proposition that your credence in A is rational. Of course, if it’s rational to be uncertain about whether your credences are rational, then even though your decisions are rational, you might not be in a position to know that they are.Footnote 33

Now we have a sharper view on the table. This view shows the same sensitivity to imprecise evidence as the musher view. It does all the epistemic work that the musher view was designed for. What’s more, the best response that the musher can give to address arbitrariness worries and decision theoretic questions will in effect collapse the musher view into a notational variant of this sharper view.

So are there any reasons left to go imprecise? In the remainder of this paper, I’m going to argue that there aren’t.

4 Undermining imprecision

There is a lot of pressure on mushers to move in the direction of precision. For example, there is pressure for mushers to provide decision rules that make agents with imprecise credences behave as though they have precise credences. Preferences that are inconsistent with precise credences are often intuitively irrational.Footnote 34 Various representation theorems purport to show that if an agent’s preferences obey intuitive rational norms, then the agent can be represented as maximizing expected utility relative to a unique precise probability function and utility function (unique up to positive affine transformation).
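The phrase “unique up to positive affine transformation” has a concrete decision-theoretic meaning: replacing a utility function u with a·u + b, for any a > 0 and any b, leaves the expected-utility ranking of options unchanged, because the probabilities sum to 1. The sketch below illustrates this with a hypothetical precise credence and hypothetical bets; none of the numbers come from the representation theorems themselves.

```python
from fractions import Fraction

def expected_utility(credence, utilities):
    """Expected utility of one option, given precise credences over states."""
    return sum(credence[state] * utilities[state] for state in credence)

def best_option(credence, options):
    """Name of the option with maximal expected utility."""
    return max(options, key=lambda name: expected_utility(credence, options[name]))

def positive_affine(options, a, b):
    """Rescale every utility u to a*u + b; requires a > 0."""
    if a <= 0:
        raise ValueError("a positive affine transformation needs a > 0")
    return {name: {state: a * u + b for state, u in utils.items()}
            for name, utils in options.items()}

# Hypothetical credences and bets, for illustration only.
credence = {"heads": Fraction(2, 5), "tails": Fraction(3, 5)}
options = {
    "bet_heads": {"heads": 10, "tails": -5},
    "bet_tails": {"heads": -5, "tails": 6},
    "decline": {"heads": 0, "tails": 0},
}

rescaled = positive_affine(options, 3, 7)
print(best_option(credence, options), best_option(credence, rescaled))  # bet_tails bet_tails
```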

Suppose rational agents must act as though they have precise credences. Then on the widely presupposed interpretivist view of credences—that whatever credences the agent has are those that best explain and rationalize her behavioral dispositions—the game is up. As long as imprecise credences don’t play a role in explaining and rationalizing the agent’s behavior, they’re a pointless complication.Footnote 35

But the musher might simply reject interpretivism. Even if rational agents are disposed to act as though they have precise credences in all possible situations, the musher might claim, epistemic rationality nevertheless demands that they have imprecise credences. Imprecise credences might play no role in determining behavior. Their role is to record the imprecision of one’s evidence.

This bullet might be worth biting if we had good reason to think that epistemic norms in fact do require having imprecise credences. So the big question is: is there any good motivation for the claim that epistemic norms require imprecise credences?

I’m going to argue that the answer is no. Whatever motivations there were for the musher view, they equally support the sharper view that I’ve proposed: that imprecise evidence requires uncertainty about rationality. I’ll consider a series of progressive refinements of the hypothesis that imprecise evidence requires imprecise credences. I’ll explain how the motivations for each can be accommodated by a sharper view that allows for uncertainty about epistemic and objective probabilities. This list can’t be exhaustive, of course. But it will show that the musher view has a dangerously low ratio of solutions to problems.

4.1 Do precise credences reflect certainty about chances?

In motivating their position, mushers often seem to identify precise credences with full beliefs about objective probabilities. Here’s an example:

A...proponent of precise credences...will say that you should have some sharp values or other for [your credence in drawing a particular kind of ball from an urn], thereby committing yourself...to a definite view about the relative proportions of balls in your urn...Postulating sharp values for [your credences] under such conditions amounts to pulling statistical correlations out of thin air. (Joyce 2010, 287, boldface added)

Another example:Footnote 36

[H]aving precise credences requires having opinions that are so rich and specific that they pin down a single estimate c(X) of the truth-value of every proposition X that you are aware of. And this really is incredibly rich and specific. It means, inter alia, that your comparative beliefs—judgments of the form X is at least as probable as Y—must be total. That is, you must either think X is at least as probable as Y, or vice versa, for any propositions X and Y that you are aware of. No abstaining from judgment. Same goes for your conditional comparative beliefs. You must either think that X given D is at least as probable as Y given \(D'\), or vice versa, for any propositions X and Y, and any potential new data, D and \(D'\). Likewise for your preferences. You must either think that bet A is at least as choiceworthy as bet B, or vice versa, for any A and B. (Konek, forthcoming, 2, boldface added)
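Konek’s contrast can be made concrete. The sketch below assumes one common way of reading comparative judgments off a set of probability functions: X counts as at least as probable as Y only if every function in the set agrees. With a single precise function every pair of propositions is comparable; with a hypothetical two-member set, neither comparison need hold.

```python
from fractions import Fraction

def at_least_as_probable(funcs, x, y):
    """X is judged at least as probable as Y iff every function in funcs agrees."""
    return all(c[x] >= c[y] for c in funcs)

def comparable(funcs, x, y):
    """True iff the judgment goes at least one way between X and Y."""
    return at_least_as_probable(funcs, x, y) or at_least_as_probable(funcs, y, x)

# Hypothetical credences in two propositions X and Y.
precise = [{"X": Fraction(1, 2), "Y": Fraction(1, 3)}]
credal_set = [
    {"X": Fraction(1, 2), "Y": Fraction(1, 3)},
    {"X": Fraction(1, 4), "Y": Fraction(2, 5)},
]

print(comparable(precise, "X", "Y"))     # True
print(comparable(credal_set, "X", "Y"))  # False
```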

Joyce and Konek seem to be endorsing the following principle:

-

CREDENCE/CHANCE: having credence n in A is the same state as, or otherwise necessitates, believing or having credence 1 that the chance of A is (or at some prior time was) n.Footnote 37

A flatfooted objection seems sufficient here: one state is a partial belief, the content of which isn’t about chance. The other is a full belief about chance. So surely they are not the same state.

More generally: whether someone takes a definite stance about the chance of A isn’t the kind of thing that can be read locally off of her credence in A. There are global features of an agent’s belief state that determine whether that credence reflects some kind of definite stance or whether it simply reflects a state of uncertainty.

For example, in the Mystery Coin case, someone whose credence in heads is .5 on indifference grounds will have different introspective beliefs and different beliefs about chance from someone who believes that the objective chance of heads is .5. The former can confidently believe: I don’t have any idea what the objective chance of heads is; I doubt the chance is .5; etc. Obviously, neither of these beliefs is rationally compatible with taking a definite position that the chance of heads is .5.

Another example: a credence \(c(A) = n\) doesn’t always encode the same degree of resiliency relative to possible new evidence. The resiliency of a credence is the degree to which it is stable in light of new evidence.Footnote 38 When an agent’s credence in A is n because she believes the chance of A is n, that credence is much more stubbornly fixed at n. Precise credences formed under uncertainty about chances are much less resilient in the face of new evidence.Footnote 39 For example, if your .5 credence in heads comes from indifference and you learn that the last three tosses of the coin have all landed heads, you’ll substantially revise your credence that the next toss will land heads. (After all, three heads is some evidence that the coin is biased toward heads.) But if your .5 credence comes from certainty that the chance of heads is .5, then your credence should be highly resilient in response to this evidence.
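A small Bayesian model shows how two agents with the same precise credence of .5 in heads can differ in resiliency. The sketch assumes a finite set of chance hypotheses, independent tosses, and that credence in heads equals expected chance; the three-point “spread” prior is a hypothetical stand-in for indifference. (With a continuous uniform prior, Laplace’s rule of succession would instead give 4/5 after three heads.)

```python
from fractions import Fraction

def predictive_heads(prior, heads, tails):
    """Credence that the next toss lands heads after observing the data.

    `prior` maps chance-of-heads hypotheses to credences. Each hypothesis is
    reweighted by its likelihood p**heads * (1 - p)**tails (independent tosses),
    and the predictive credence is the posterior expectation of the chance.
    """
    weights = {p: w * p**heads * (1 - p)**tails for p, w in prior.items()}
    total = sum(weights.values())
    return sum(p * w for p, w in weights.items()) / total

half = Fraction(1, 2)
certain = {half: Fraction(1)}  # certainty that the chance of heads is .5
spread = {Fraction(1, 4): Fraction(1, 3), half: Fraction(1, 3), Fraction(3, 4): Fraction(1, 3)}

print(predictive_heads(certain, 0, 0), predictive_heads(spread, 0, 0))  # 1/2 1/2
print(predictive_heads(certain, 3, 0), predictive_heads(spread, 3, 0))  # 1/2 49/72
```

Both agents start at 1/2; only the agent who is uncertain about the chance moves after three heads.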

In short: the complaint against precise credences that Joyce and Konek seem to be offering in the passages quoted above hinges on a false assumption: that having a precise credence in a hypothesis A requires taking a definite view about chance. Whether an agent takes a definite view about the chance of A isn’t determined by the precision of her credence in A.

For rational agents who have credences in propositions about chances and about rationality, imprecise credences are redundant. They represent a form of uncertainty about objective probability that’s already represented in the agent’s attitudes toward other propositions.

The imprecise representation of uncertainty about probabilities is not merely redundant; it’s also a worse representation than higher-order credences. Consider the Mystery Coin case. Using imprecise credences in heads to represent uncertainty about objective chances is effectively to revert to the binary belief model. It is to treat uncertainty about whether the coin lands heads as a binary belief about the objective chance of heads. The belief rules out every epistemically impossible chance, rules in every epistemically possible chance, and incorporates no information whatsoever about the agent’s relative confidence in each chance hypothesis. This loss of information can be repaired only by introducing higher-order credences or credal states, which leaves the first-order imprecision otiose.
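The information loss can be illustrated with two hypothetical agents who rule in exactly the same chance hypotheses but distribute their confidence over them differently. The sketch assumes that the imprecise representation records only which chance hypotheses are ruled in, and that a first-order credence in heads is obtained by averaging chances weighted by credence in each.

```python
from fractions import Fraction

def epistemically_possible_chances(dist):
    """The 'binary' representation: which chance hypotheses are ruled in."""
    return {p for p, w in dist.items() if w > 0}

def expected_chance(dist):
    """Credence in heads obtained by averaging chances, weighted by credence."""
    return sum(p * w for p, w in dist.items())

hypotheses = [Fraction(1, 4), Fraction(1, 2), Fraction(3, 4)]
agent_a = dict(zip(hypotheses, [Fraction(1, 3)] * 3))
agent_b = dict(zip(hypotheses, [Fraction(1, 10), Fraction(1, 10), Fraction(4, 5)]))

# Same ruled-in chances, hence the same imprecise representation...
print(epistemically_possible_chances(agent_a) == epistemically_possible_chances(agent_b))  # True
# ...but different relative confidence, which the higher-order credences retain.
print(expected_chance(agent_a), expected_chance(agent_b))  # 1/2 27/40
```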

4.2 Are precise credences “too informative”?

The second motivation for imprecise credences is a generalization of the first. Even if precise credences don’t encode full beliefs about objective probabilities, they still encode some information that they shouldn’t.

Even if one grants that the uniform density is the least informative sharp credence function consistent with your evidence, it is still very informative. Adopting it amounts to pretending that you have lots and lots of information that you simply don’t have. (Joyce 2010, 284)

If you regard the chance function as indeterminate regarding X, it would be odd, and arguably irrational, for your credence to be any sharper...How would you defend that assignment? You could say “I don’t have to defend it—it just happens to be my credence.” But that seems about as unprincipled as looking at your sole source of information about the time, your digital clock, which tells that the time rounded off to the nearest minute is 4:03—and yet believing that the time is in fact 4:03 and 36 seconds. Granted, you may just happen to believe that; the point is that you have no business doing so. (Hájek and Smithson 2012, 38–39)

Something of this sort seems to underpin a lot of the arguments for imprecise credences.

There’s a clear sense in which specifying a set of probability functions can be less informative than specifying a unique probability function. But informative about what? The argument in Sect. 4.1 generalizes. The basic challenge to the musher is the same: if they claim that precise credence c(A) is too informative about some topic B, then they need to explain why it isn’t adequate to pair c(A) with total suspension of judgment about B.

Imprecise credences do encode less information than precise credences. But we should ask:

(1) What kind of information is encoded?

(2) Is it irrational to encode that information?

The answers: (1) information about agents’ mental states, and (2) no.

Precise credences are not more informative about things like coin tosses. Rather, ascriptions of precise credences are more informative about the psychology and dispositions of the agent. This is third-personal, theoretical information about an agent’s attitudes, not information in the contents of the agent’s first-order attitudes.Footnote 40

Suppose you learn that I have credence .5 in A. Then you know there’s a precise degree to which I’m disposed to rely on A in my reasoning and in my decisions about how to act, just as there is a precise degree to which I feel pain, or to which I am hungry, on some psychologically appropriate scale.

Of course, we don’t always have access to these properties of our psychology. But it’s reasonable to expect that our psychological states are precise, at a time, at least up to a high degree of resolution. I might have the imprecise degree of pain [.2, .21], but I won’t have the imprecise degree [0, 1] on a zero-to-one scale. What imprecision there might be results from the finitude of bodies. I claim the same is true of our credences. And in both cases, it is not out of the question that these quantities are precise. Finite bodies can determine precise quantitative measurements: for example, blood pressure.Footnote 41

Precise credences provide unambiguous and specific information about rational agents’ psychology and dispositions. But why would there be anything wrong with being informative or unambiguous or specific in this way?

Objection 1. In the Mystery Coin case, if your credence in heads is .5, then you are committed to claiming: “The coin is .5 likely to land heads.” That counts as information about the coin that you are presuming to have. Any precise credence will commit an agent to some claim about likelihood; imprecise credences do not.

Reply. What does it mean, in this context, to affirm: “The coin is .5 likely to land heads”? It doesn’t mean that you think the objective probability of the coin landing heads is .5; you don’t know whether that’s true. It doesn’t even mean that you think the epistemic probability of the coin landing heads is .5; you can be uncertain about that as well.

In fact, “It’s .5 likely that heads” doesn’t express any commitment at all. It just expresses the state of having .5 credence in the coin’s landing heads.Footnote 42 But then the .5 part doesn’t tell us anything about the coin. It just characterizes some aspect of your psychological state.

Objection 2. If the evidence for a proposition A is genuinely imprecise, then there is some sense in which adopting a precise credence in A means not withholding judgment where you really ought to.

Reply. If an agent’s credence in A is not close to 0 or 1, then the agent is withholding judgment about whether A. That’s just what withholding judgment is. The musher seems to think that the agent should double down and withhold judgment again.

In short: there’s just no reason to believe that imprecise evidence requires imprecise credences. Why should our attitudes be confusing or messy just because the evidence is? (If the evidence is unimpressive, that doesn’t mean our credences should be unimpressive.) What is true is that imprecise evidence should be reflected in our credences somehow or other. But that can amount to simply believing that the evidence is ambiguous and unspecific, being uncertain what to believe, having non-resilient credences, and so on.

5 Evidential indeterminacy

5.1 Imprecise evidence as indeterminate confirmation

We’ll consider one final refinement of the motivation for going mushy. This refinement involves breaking down the claim that precise credences are overly informative into two premises:

Imprecise Confirmation: The confirmation relation between bodies of evidence and propositions is imprecise.

Strict Evidentialism: Your credences should represent only what your evidence confirms.

These two claims might be thought to entail the musher view.Footnote 43

According to the first premise, for some bodies of evidence and some propositions, there is no unique precise degree to which the evidence supports the proposition. Rather, there are multiple precise degrees of support that could be used to relate bodies of evidence to propositions. This, in itself, is not a claim about rational credence, any more than claims about entailment relations are claims about rational belief. So in spite of appearances, this is not simply a denial of the sharper view, though the two are tightly related.

In conjunction with Strict Evidentialism, though, it might seem straightforwardly impossible for the sharper to accommodate Imprecise Confirmation.

Of course, some sharpers—in particular, objective Bayesians—will consider it no cost at all to reject Imprecise Confirmation. They consider this a fundamental element of the sharper view, not some extra bullet that sharpers have to bite.

But whether rejecting Imprecise Confirmation is a bullet or not, sharpers don’t have to bite it. The conjunction of Imprecise Confirmation and Strict Evidentialism is compatible with the sharper view.

It’s clear that Imprecise Confirmation is compatible with one form of the sharper view: precise permissivism, e.g. subjective Bayesianism. If there are multiple probability functions that each capture equally well what the evidence confirms, then according to precise permissivists, any of them is permissible to adopt as one’s credence function. Permissivism was essentially designed to accommodate Imprecise Confirmation.

Some mushers might think that adopting a precise credence function entails violating Strict Evidentialism. But this requires the assumption that precise credences are somehow inappropriately informative. I’ve argued that this assumption is false. Precise permissivism is compatible with both claims.

Perhaps more surprisingly, precise impermissivism is also compatible with both claims. If Imprecise Confirmation is true, then some bodies of evidence fail to determine a unique credence that’s rational in each proposition. And so epistemic norms sometimes don’t place a determinate constraint on which credence function is rational to adopt. But this doesn’t entail that the epistemic norms require adopting imprecise credences. It might just be that in light of some bodies of evidence, epistemic norms don’t place a determinate constraint on our credences.

Suppose this is right: when our evidence is imprecise, epistemic norms underdetermine what is rationally required. Instead of determinately permitting multiple credence functions, though, we interpret the norms as simply underdetermining the normative status of those credence functions. They are neither determinately permissible nor determinately impermissible. This is compatible with the sharper view: it could be supervaluationally true that our credences must be precise. Moreover, this is compatible with impermissivism: it could be supervaluationally true that only one credence function is permissible.
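The supervaluational reading can be sketched in a few lines. The sketch assumes, purely for illustration, three admissible precisifications of the epistemic norms, each of which deems exactly one precise credence in A permissible. A claim is determinately true if it holds on every precisification, determinately false if it fails on every one, and indeterminate otherwise.

```python
from fractions import Fraction

# Hypothetical admissible precisifications: each one settles that exactly one
# precise credence in A is permissible, but they disagree about which.
PRECISIFICATIONS = [
    {"permissible": {Fraction(2, 5)}},
    {"permissible": {Fraction(1, 2)}},
    {"permissible": {Fraction(3, 5)}},
]

def status(claim, precisifications=PRECISIFICATIONS):
    """Supervaluational status of a claim evaluated on each precisification."""
    verdicts = {claim(p) for p in precisifications}
    if verdicts == {True}:
        return "determinately true"
    if verdicts == {False}:
        return "determinately false"
    return "indeterminate"

def exactly_one_precise_credence_permissible(p):
    return len(p["permissible"]) == 1

def half_is_permissible(p):
    return Fraction(1, 2) in p["permissible"]

def no_credence_permissible(p):
    return not p["permissible"]

print(status(exactly_one_precise_credence_permissible))  # determinately true
print(status(half_is_permissible))                       # indeterminate
print(status(no_credence_permissible))                   # determinately false
```

Impermissivism comes out supertrue, while the permissibility of any particular credence is left unsettled.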

How could it be indeterminate what rationality requires of us? There are cases where morality and other sorts of norms don’t assign determinate normative statuses. Here is an analogy: suppose I promise to pay you £100 if you wear neon yellow trousers to your lecture tomorrow. You bashfully show up wearing trousers that are a borderline case of neon yellow. Am I bound by my promise to pay you £100?Footnote 44

According to the precise permissivist, it’s determinately permissible for me not to pay you, and it’s also determinately permissible for me to pay you. According to the precise impermissivist, it’s indeterminate whether I’m obligated to pay you. According to the musher, it’s determinately obligatory that I somehow indeterminately pay—perhaps by hiding £100 somewhere near you?

The upshot is clear: Indeterminacy in obligations doesn’t entail an obligation to indeterminacy.Footnote 45

Analogously: even if epistemic norms underdetermine what credences are rational, it might still be the case that there’s a unique precise credence that’s rational to adopt in light of our evidence. It’s just indeterminate what it is.

5.2 Indeterminacy and uncertainty

A common concern is that there’s some analogy between the view I defend and epistemicism about vagueness. Epistemicism is the view that vague predicates like “neon yellow” have perfectly sharp extensions. We simply don’t know what those extensions are; and this ignorance explains away the appearance of indeterminacy.

One might think that precise impermissivism with uncertainty about epistemic probabilities amounts to something like an epistemicism about imprecise evidence. Instead of allowing for the possibility of genuine indeterminacy, the thought goes, this view suggests we simply don’t know which sharp credences are determinately warranted. Still, though, the credences that are determinately warranted are perfectly sharp.

But a sharper view that countenances genuine indeterminacy (that is, indeterminacy that isn’t merely epistemic) is fundamentally different from epistemicism about vagueness. The supervaluational hypothesis I mentioned above is obviously analogous to supervaluationism about vagueness. The supervaluationist about vagueness can hold that, determinately, there is a sharp cutoff point between neon yellow and non-neon yellow; it just isn’t determinate where that cutoff point lies.

Similarly, the precise impermissivist who accepts Imprecise Confirmation accepts that for any body of evidence, there is determinately a precise credence function one ought to have in light of that evidence; it’s just indeterminate which precise credence function is the right one.

Is this proposal—that imprecise evidence fails to determine certain rational requirements—a competitor to the view I’ve been defending—that imprecise evidence requires normative uncertainty? It depends on an interesting theoretical question: what attitude we should take toward indeterminate propositions.

It might be that, for some forms of indeterminacy, if it’s indeterminate whether A, then it’s rational to be uncertain whether A. For example, perhaps it’s rational to be uncertain whether a hummingbird is blue or green, even though you know that it’s indeterminate. (Uncertainty simply involves middling credence: it doesn’t commit you to thinking there’s a fact of the matter.) And perhaps it’s rational to be uncertain about whether it’s rational to assign a particular credence to a proposition when the normative status of that credence is indeterminate.

There are plenty of reasonable worries about this view, though. For example, prima facie, this seems to make our response to known indeterminacy indistinguishable from our response to unknown determinate facts. It’s outside the scope of this paper to adjudicate this debate.

If uncertainty is not a rational response to indeterminacy, then the two proposals I’ve defended—normative uncertainty and indeterminate norms—may be competitors. But if uncertainty is a rational response to indeterminacy, then the two fit together nicely. They also fit together if they are appropriate responses to distinct forms of imprecise evidence: evidence that warrants uncertainty about rationality, and evidence that leaves it indeterminate what is rationally required.

6 Conclusion

The musher claims that imprecise evidence requires imprecise credences. I’ve argued that this is false: imprecise evidence can place special constraints on our attitudes, but not by requiring our attitudes to be imprecise. The musher’s view rests on the assumption that having imprecise credences is the only way to manifest a sort of epistemic humility in the face of imprecise evidence. But the forms of uncertainty that best fit the bill have a natural home in the sharper framework. Imprecise evidence doesn’t demand a new kind of attitude of uncertainty. It just requires ordinary uncertainty toward a particular group of propositions—propositions that we often forget rational agents can question.

The kind of sharper view I defend can accommodate all the intuitions that were taken to motivate the musher view. So what else does going imprecise gain us? Only vulnerability to arbitrariness worries and decision theoretic challenges. Better to drop the imprecision. When it’s unclear what the evidence supports, it’s rational to question what to believe.

Notes

This might be interpreted as a question about epistemic norms for cognitively idealized agents. I’d rather interpret it as a question about what evidentialist epistemology requires.

Some have associated certain patterns of preferences or behaviors with imprecise credences: for example, having distinct buying and selling prices for gambles (Walley 1991), or being willing to forego sure gains in particular diachronic betting contexts (Elga 2010). But treating these as symptomatic of imprecise credences, rather than precise credences, depends on specific assumptions about how precise credences must be manifested in behavior: for example, that agents with precise credences are expected utility maximizers.
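For concreteness, here is how distinct buying and selling prices fall out of a set of probability functions. The sketch assumes, as in Walley’s framework of lower and upper previsions, that the highest acceptable buying price for a gamble is its minimum expectation over the set and the lowest acceptable selling price is its maximum. The [.2, .8] set is hypothetical and is represented by its two extreme members.

```python
from fractions import Fraction

def expectation(credence, gamble):
    """Expected payoff of a gamble under one probability function."""
    return sum(credence[state] * gamble[state] for state in gamble)

def buy_sell_prices(credal_set, gamble):
    """(lower, upper) expectation of a gamble across a set of probability functions."""
    values = [expectation(c, gamble) for c in credal_set]
    return min(values), max(values)

gamble = {"heads": 1, "tails": 0}  # pays 1 unit if heads
precise = [{"heads": Fraction(1, 2), "tails": Fraction(1, 2)}]
imprecise = [{"heads": Fraction(p), "tails": 1 - Fraction(p)} for p in ("1/5", "4/5")]

print(buy_sell_prices(precise, gamble))    # (Fraction(1, 2), Fraction(1, 2))
print(buy_sell_prices(imprecise, gamble))  # (Fraction(1, 5), Fraction(4, 5))
```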

E.g. Fine (1973).

E.g. Kyburg (1983).

“Unspecific bodies of evidence” may include empty bodies of evidence.

E.g. in Elga (2010).

Imprecise credences are often called “mushy” credences.

There are other kinds of motivation for rationally permissible imprecise credences. One is the view that credences intuitively needn’t obey Trichotomy, the claim that for all propositions A and B, c(A) is either greater than, less than, or equal to c(B). (See e.g. Schoenfield 2012.) Moss (2014) argues that imprecise credences provide a good way to model rational changes of heart (in a distinctly epistemic sense). Hájek and Smithson (2012) suggest imprecise credences as a way of representing rational attitudes towards events with undefined expected value. Finally, there’s the empirical possibility of indeterminate chances, also discussed in Hájek and Smithson (2012): if there are set-valued chances, the Principal Principle seems to demand set-valued credences. Only the last of these suggests that imprecise credences are rationally required; I’ll return to it in Sect. 4.

See e.g. Joyce (2010): “Since the data we receive is often incomplete, imprecise or equivocal, the epistemically right response is often to have opinions that are similarly incomplete, imprecise or equivocal.”

More cautiously, we might distinguish between first- and higher-order objective chances. Suppose a coin has been chosen at random from an urn containing 50 coins biased 3/4 toward heads and 50 coins biased 3/4 toward tails. The first-order objective chance that the chosen coin will land heads on the next toss is either .75 or .25, but the second-order objective chance of heads is .5. Mushers in the first category might allow for precise credences where only higher-order objective chances are known. This seems to be the position of Joyce (2010).
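The second-order chance in this example is a simple mixture over the random draw, which the following sketch computes exactly from the urn contents stated in the note.

```python
from fractions import Fraction

# Bias toward heads -> number of coins with that bias, as in the example.
urn = {Fraction(3, 4): 50, Fraction(1, 4): 50}

def second_order_chance_of_heads(urn):
    """Chance of heads averaged over the random draw of a coin from the urn."""
    total = sum(urn.values())
    return sum(bias * Fraction(count, total) for bias, count in urn.items())

print(second_order_chance_of_heads(urn))  # 1/2
```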

Ellsberg (1961).

Schoenfield (2012) and Konek (forthcoming) seem to fall into this category, given their choice of motivating examples.

Walley more or less concedes this point (104–105). He distinguishes “incomplete” versus “exhaustive” interpretations of imprecise credences, similar to the “imprecise” versus “indeterminate” interpretations discussed in Levi (1985). On the incomplete interpretation, which he generally uses, the degree of imprecision can be partly determined by the incomplete elicitation of an agent’s belief state. On the exhaustive interpretation, by contrast, imprecision is determined solely by indeterminacy in the agent’s belief state. The latter interpretation, Walley acknowledges, requires “the same kind of precise discriminations as the Bayesian theory” (105). The musher position, as I’ve defined it, is concerned with the exhaustive interpretation: there are no epistemic obligations to be such that someone else has incomplete information about one’s belief state. Walley offers no theory of (complete) imprecise credences that is not susceptible to arbitrariness worries.

Of course, the distribution of weights need not be symmetric or smooth.

More accurately, it should represent the non-vague grounding base for the vague version of what set membership represents.

\(\Gamma\)-Maximin—the rule according to which one should choose the option that has the greatest minimum expected value—is not susceptible to Elga’s objection. But that decision rule is unattractive for other reasons, including the fact that it sometimes requires turning down cost-free information. (See Seidenfeld 2004; thanks to Seidenfeld for discussion.) Still other rules, such as Weatherson’s (2008) rule “Caprice” and Williams’s (2014) rule “Randomize,” are committed to the controversial claim that what’s rational for an agent to do depends not just on her credences and values, but also her past actions.
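A minimal sketch of \(\Gamma\)-Maximin, assuming finitely many options and a finite credal set; the bets and the [.2, .8] set are hypothetical. It shows the rule’s characteristic caution: an agent with precise credence .5 would accept either bet, while \(\Gamma\)-Maximin declines both. The sketch does not reproduce Seidenfeld’s result about cost-free information.

```python
from fractions import Fraction

def expected_value(credence, payoffs):
    """Expected value of an option under one probability function."""
    return sum(credence[state] * payoffs[state] for state in payoffs)

def lower_expected_value(credal_set, payoffs):
    """Worst-case expected value of an option across the credal set."""
    return min(expected_value(c, payoffs) for c in credal_set)

def gamma_maximin(credal_set, options):
    """Choose the option whose minimum expected value is greatest."""
    return max(options, key=lambda name: lower_expected_value(credal_set, options[name]))

def coin(p_heads):
    return {"heads": p_heads, "tails": 1 - p_heads}

options = {
    "bet_heads": {"heads": 10, "tails": -5},
    "bet_tails": {"heads": -5, "tails": 10},
    "decline": {"heads": 0, "tails": 0},
}
credal_set = [coin(Fraction(1, 5)), coin(Fraction(4, 5))]

print(gamma_maximin(credal_set, options))                          # decline
print(expected_value(coin(Fraction(1, 2)), options["bet_heads"]))  # 5/2
```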

For this to work out as an expectation, we’ll need to normalize the weighting such that the total weights sum to 1. Assuming the weighted averages are probabilistic—a plausible constraint on the weighting—the resulting recommended actions will be rational (or anyway not Dutch-bookable).

In economics, it’s common to distinguish “risk” and “uncertainty,” in the sense introduced in Knight (1921). Knightian “risk” involves known (or knowable) objective probabilities, while Knightian “uncertainty” involves unknown (or unknowable) objective probabilities. This is not the ordinary sense of “uncertainty”—i.e. the state of not being certain—that I use throughout this paper.

A brief argument: introspection may be a form of perception (inner sense). Our perceptual faculties sometimes lead us astray. Whether introspection is a form of perception is arguably empirical. Rationality doesn’t require certainty about empirical psychology. So it’s possible that ideal rationality doesn’t require perfect introspection. And it’s possible that ideal rationality does require perfect introspection but doesn’t require ideal agents to know that they can introspect perfectly.

Note: this is not an interpretation of epistemic probability that presupposes objective Bayesianism.

A caveat: it’s compatible with the view I’m defending that there are no such bodies of evidence. It might be that every body of evidence not only supports precise credences, but supports certainty in the rationality of just those precise credences.

It might even be that the Mystery Coin example is not really an example of a case where it’s not clear what credence to have. Credence .5 is the obvious candidate, even without the principle of indifference to bolster it. If you had to bet on heads in the Mystery Coin case, I suspect you’d bet as though you had credence .5.

I’ll mention some possible constraints that uncertainty about rationality places on our other credences. What’s been said so far has been neutral about whether there are level-bridging norms: norms that link one’s beliefs about what’s rational with what is in fact rational. But a level-bridging response, involving something like Christensen’s (2010) principle of Rational Reflection, is a live possibility. (See Elga 2013 for a refinement of the principle.) According to this principle, our rational first-order credences should be a weighted average of the credences we think might be rational (on Elga’s version, conditional on their own rationality), weighted by our credence in each that it is rational. This principle determines what precise probabilities an agent should have when she is rationally uncertain about what’s rational.
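The simple, unrefined form of Rational Reflection can be written as a weighted average. The sketch below implements only that form, not Elga’s conditional refinement; the candidate credences and weights are hypothetical.

```python
from fractions import Fraction

def reflection_credence(candidates):
    """First-order credence as a weighted average of candidate rational credences.

    `candidates` maps each credence in A that might be the rational one to the
    agent's credence that it is the rational one; the weights must sum to 1.
    """
    if sum(candidates.values()) != 1:
        raise ValueError("weights over candidate credences must sum to 1")
    return sum(value * weight for value, weight in candidates.items())

candidates = {
    Fraction(2, 5): Fraction(1, 4),
    Fraction(1, 2): Fraction(1, 2),
    Fraction(7, 10): Fraction(1, 4),
}
print(reflection_credence(candidates))  # 21/40
```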

Note, however, that a principle like this won’t provide a recipe to check whether your credences are rational: whatever second-order credences you have, you may also be uncertain about whether your second-order credences are the rational ones to have, and so on. And so this kind of coherence constraint doesn’t provide any direct guidance about how to respond to imprecise evidence.

And except in the rare case where, e.g., you’re betting on propositions about what’s rational.

Thanks to Julia Staffel for pressing me on this point.

Again, see Elga (2010).

Hájek and Smithson (2012) argue that interpretivism directly favors modeling even ideal agents with imprecise credences. After all, a finite agent’s dispositions won’t determine a unique probability function/utility function pair that can characterize her behavioral dispositions. And this just means that all of the probability/utility pairs that characterize the agent are equally accurate. So, doesn’t interpretivism entail at least the permissibility of imprecise credences? I find this argument compelling. But it doesn’t tell us anything about epistemic norms. It doesn’t suggest that evidence ever makes it rationally required to have imprecise credences. And so this argument doesn’t support the musher view under discussion.

Konek is more circumspect about the kind of probability at issue. My objections to this view apply equally well if we substitute some other form of probability for chance.

This is a relative of what White (2009) calls the Chance Grounding Thesis, which he attributes to a certain kind of musher: “Only on the basis of known chances can one legitimately have sharp credences. Otherwise one’s spread of credence should cover the range of chance hypotheses left open by your evidence” (174).

See Skyrms (1977).

See also White (2009), 162–164.

Of course, the rational agent may ascribe herself precise or imprecise credences and so occupy the theorist’s position. But in doing so, the comparative informativeness in her ascription of precise credences is still informativeness about her own psychological states, not about how coin-tosses might turn out.

Thanks to Chris Meacham for discussion and to Graham Oddie for this example.

Cf. Yalcin (2012).

Thanks to Wolfgang Schwarz, R.A. Briggs, and Alan Hájek for suggesting this formulation of the motivation.

Roger White suggested an analogous example to me in personal communication.

This point extends to another argument that has been given for imprecise credences. According to Hájek and Smithson (2012), there could be indeterminate chances, so that some event E’s chance might be indeterminate—not merely unknown—over some interval like [.2, .5]. This might be the case if the relative frequency of some event-type is at some times .27, at others .49, etc.—changing in unpredictable ways, forever, such that there is no precise limiting relative frequency. Hájek & Smithson argue that the possibility of indeterminate objective chances, combined with a generalization of Lewis’s Principal Principle, yields the result that it is rationally required to have imprecise credences. Hájek & Smithson suggest that the following generalization of the Principal Principle captures how we should respond to indeterminate chances:

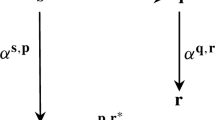

- PP*: Rational credences are such that \(C(A \mid Ch(A) = [n,m]) = [n,m]\) (if there’s no inadmissible evidence).

But there are other possible generalizations of the Principal Principle that are equally natural, e.g. PP\(\dagger\):

- PP\(\dagger\): Rational credences are such that \(C(A \mid Ch(A) = [n,m]) \in [n,m]\) (if there’s no inadmissible evidence).

The original Principal Principle is a special case of both. (Note that PP\(\dagger\) states only a necessary condition on rational credences, not a sufficient one, so it isn’t necessarily a permissive principle.) Hájek & Smithson don’t address this alternative, but it seems to me a perfectly adequate principle for the sharper to use in constraining credences in the face of indeterminate chances. So it’s not obvious that indeterminate chances require us to have indeterminate credences.
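A minimal sketch of how PP* and PP\(\dagger\) differ as tests on a conditional credence, assuming there is no inadmissible evidence and representing an indeterminate chance as a closed interval. The names `Interval`, `satisfies_pp_star`, and `satisfies_pp_dagger` are hypothetical, and PP\(\dagger\) is evaluated here only for precise credences, which is the case the sharper cares about.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Interval:
    """A closed interval [low, high] of probability values."""
    low: float
    high: float

    def __post_init__(self):
        if not 0.0 <= self.low <= self.high <= 1.0:
            raise ValueError("need 0 <= low <= high <= 1")

    def contains(self, x: float) -> bool:
        return self.low <= x <= self.high


# A credence is either precise (a float) or imprecise (an Interval).
Credence = Union[float, Interval]


def as_interval(c: Credence) -> Interval:
    # Treat a precise credence x as the degenerate interval [x, x].
    return c if isinstance(c, Interval) else Interval(c, c)


def satisfies_pp_star(conditional_credence: Credence, chance: Interval) -> bool:
    """PP*: C(A | Ch(A) = [n, m]) must BE the interval [n, m]."""
    return as_interval(conditional_credence) == chance


def satisfies_pp_dagger(conditional_credence: float, chance: Interval) -> bool:
    """PP-dagger: a precise C(A | Ch(A) = [n, m]) must lie IN [n, m].
    This is only a necessary condition; it does not say which member to pick."""
    return chance.contains(conditional_credence)


chance = Interval(0.2, 0.5)              # Ch(A) indeterminate over [.2, .5]
print(satisfies_pp_star(0.3, chance))    # False: a sharp .3 is not the whole interval
print(satisfies_pp_dagger(0.3, chance))  # True: .3 lies within [.2, .5]
```

When the chance is a point value (\(n = m\)), both tests accept exactly that precise credence, which mirrors the observation that the original Principal Principle is a special case of both. PP\(\dagger\) leaves open whether further constraints pin down a unique value within the interval.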

References

Bertrand, J. (1889). Calcul des probabilités. Paris: Gauthier-Villars.

Christensen, D. (2010). Rational reflection. Philosophical Perspectives, 24(1), 121–140.

Elga, A. (2007). Reflection and disagreement. Noûs, 41(3), 478–502.

Elga, A. (2010). Subjective probabilities should be sharp. Philosophers’ Imprint, 10(5).

Elga, A. (2013). The puzzle of the unmarked clock and the new rational reflection principle. Philosophical Studies, 164(1), 127–139.

Ellsberg, D. (1961). Risk, ambiguity, and the Savage axioms. Quarterly Journal of Economics, 75(4), 643–669.

Fine, T. L. (1973). Theories of probability. New York: Academic Press.

Good, I. J. (1962). Subjective probability as the measure of a non-measurable set. In E. Nagel, P. Suppes, & A. Tarski (Eds.), Logic, methodology and philosophy of science (pp. 319–329). Palo Alto: Stanford University Press.

Hájek, A., & Smithson, M. (2012). Rationality and indeterminate probabilities. Synthèse, 187(1), 33–48.

Jeffrey, R. C. (1983). Bayesianism with a human face. In Testing scientific theories (Vol. 10, pp. 133–156). Minneapolis: University of Minnesota Press.

Joyce, J. M. (2005). How probabilities reflect evidence. Philosophical Perspectives, 19(1), 153–178.

Joyce, J. M. (2010). A defense of imprecise credences in inference and decision making. Philosophical Perspectives, 24(1), 281–323.

Knight, F. (1921). Risk, uncertainty, and profit. Boston: Houghton Mifflin.

Konek, J. (forthcoming). Epistemic Conservativity and Imprecise Credence. Philosophy and Phenomenological Research.

Kyburg, H. (1983). Epistemology and inference. Minneapolis: University of Minnesota Press.

Lasonen-Aarnio, M. (2014). Higher-order evidence and the limits of defeat. Philosophy and Phenomenological Research, 88(2), 314–345.

Levi, I. (1974). On indeterminate probabilities. Journal of Philosophy, 71(13), 391–418.

Levi, I. (1980). The enterprise of knowledge: an essay on knowledge, credal probability, and chance. Cambridge: MIT Press.

Levi, I. (1985). Imprecision and indeterminacy in probability judgment. Philosophy of Science, 52(3), 390–409.

Moss, S. (2014). Credal dilemmas. Noûs, 49(4), 665–683.

Pedersen, A. P., & Wheeler, G. (2014). Demystifying dilation. Erkenntnis, 79(6), 1305–1342.

Savage, L. (1972). The foundations of statistics, 2nd revised edition (first published 1954). New York: Dover.

Schoenfield, M. (2012). Chilling out on epistemic rationality: A defense of imprecise credences (and other imprecise doxastic attitudes). Philosophical Studies, 158, 197–219.

Seidenfeld, T. (1978). Direct inference and inverse inference. Journal of Philosophy, 75(12), 709–730.

Seidenfeld, T. (2004). A contrast between two decision rules for use with (convex) sets of probabilities: \(\Gamma\)-maximin versus e-admissibility. Synthèse, 140, 69–88.

Skyrms, B. (1977). Resiliency, propensities, and causal necessity. Journal of Philosophy, 74, 704–713.

Sliwa, P., & Horowitz, S. (2015). Respecting all the evidence. Philosophical Studies, 172(11), 2835–2858.

Sturgeon, S. (2008). Reason and the grain of belief. Noûs, 42(1), 139–165.

van Fraassen, B. (1989). Laws and symmetry. Oxford: Oxford University Press.

van Fraassen, B. (1990). Figures in a probability landscape. In Truth or consequences (pp. 345–356). Dordrecht: Kluwer.

Walley, P. (1991). Statistical reasoning with imprecise probabilities. Boca Raton: Chapman & Hall.

Weatherson, B. (2008). Decision making with imprecise probabilities (Unpublished).

White, R. (2009). Evidential symmetry and mushy credence. In Oxford studies in epistemology. Oxford: Oxford University Press.

Williams, J. R. G. (2014). Decision-making under indeterminacy. Philosophers’ Imprint, 14(4), 1–34.

Williamson, J. (2014). How uncertain do we need to be? Erkenntnis, 79, 1249–1271.

Williamson, T. (2007). Improbable knowing (unpublished notes).

Yalcin, S. (2012). Bayesian expressivism. Proceedings of the Aristotelian Society, 112(2.2), 123–160.

Acknowledgements

Thanks to R.A. Briggs, Ryan Doody, Alan Hájek, Richard Holton, Sophie Horowitz, Wolfgang Schwarz, Teddy Seidenfeld, Julia Staffel, Roger White, and audiences at the Australian National University, UCSD, and SLACRR for invaluable feedback.


Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


About this article

Cite this article

Carr, J.R. Imprecise evidence without imprecise credences. Philos Stud 177, 2735–2758 (2020). https://doi.org/10.1007/s11098-019-01336-7


DOI: https://doi.org/10.1007/s11098-019-01336-7