Abstract

For this study, researchers critically reviewed documents pertaining to the highest-profile of the 15 teacher evaluation lawsuits filed throughout the U.S. over the use of student test scores to evaluate teachers. In New Mexico, teacher plaintiffs contested how they were being evaluated and held accountable for their students’ test scores using a homegrown value-added model (VAM). Researchers examined court documents using six key measurement concepts (i.e., reliability, validity [i.e., convergent-related evidence], potential for bias, fairness, transparency, and consequential validity) defined by the Standards for Educational and Psychological Testing and found evidence of issues within both the court documents and the statistical analyses researchers conducted on the first three measurement concepts (i.e., reliability, validity [i.e., convergent-related evidence], and potential for bias).

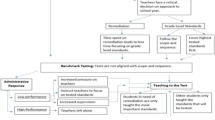

1 Introduction

As summarized in an article published in Education Week (2015), a national popular press outlet in the United States (U.S.), there were 15 lawsuits throughout the U.S. as of 2015 in which teacher plaintiffs were contesting how they were being evaluated and held accountable using their students’ standardized test scores. These 15 cases were located within seven states: Florida (n = 2), Louisiana (n = 1), Nevada (n = 1), New Mexico (n = 4), New York (n = 3), Tennessee (n = 3), and Texas (n = 1). Teacher plaintiffs across cases were contesting the high-stakes consequences attached to their alleged impacts on their students’ test scores over time, including but not limited to merit-pay in Florida, Louisiana, and Tennessee; teacher tenure decisions in Louisiana; teacher termination in Houston, Texas, and Nevada; and other “unfair penalties” in New York. To measure teachers’ impacts on their students’ achievement over time, the education metric of choice, and of issue across cases, was the value-added model (VAM).

In the simplest of terms, VAMs and growth models (hereafter referred to more generally as VAMs; see Footnote 1) help to statistically measure and then classify teachers’ levels of effectiveness according to teachers’ purportedly causal impacts on their students’ achievement over time. VAM modelers typically calculate teacher effects by measuring student growth over time on standardized tests (e.g., the tests mandated throughout the U.S. by the federal No Child Left Behind [NCLB] Act 2001), and then aggregating this growth at the teacher-level while statistically controlling for confounding variables such as students’ prior test scores and other student-level (e.g., free-and-reduced lunch [FRL], English language learner [ELL], special education [SPED]) and school-level variables (e.g., class size, school resources), although control variables vary by model. Teachers whose students collectively outperform students’ projected levels of growth (i.e., typically estimated 1 year prior) are to be identified as teachers of “added value,” and teachers whose students fall short are to be identified as teachers of the inverse (e.g., teachers not of “added value”).
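To make the general logic described above concrete, the following is a minimal, hypothetical sketch and not the New Mexico model or any operational VAM. It assumes a single control variable (the prior-year score), fits one ordinary least squares line across all students to project current scores, and averages each teacher's student residuals. The function name `simple_value_added`, the record layout, and the example scores are illustrative assumptions; operational VAMs typically add many covariates, multilevel structure, and shrinkage.

```python
from collections import defaultdict
from statistics import mean


def simple_value_added(records):
    """Return {teacher_id: mean residual} from (teacher_id, prior, current) tuples.

    Illustrative only: one covariate (prior score), pooled OLS projection,
    and a plain per-teacher average of (actual - projected) scores.
    """
    priors = [prior for _, prior, _ in records]
    currents = [current for _, _, current in records]
    p_bar, c_bar = mean(priors), mean(currents)

    # Closed-form OLS slope and intercept for current ~ prior.
    sxx = sum((p - p_bar) ** 2 for p in priors)
    sxy = sum((p - p_bar) * (c - c_bar) for p, c in zip(priors, currents))
    slope = sxy / sxx
    intercept = c_bar - slope * p_bar

    # Aggregate student-level residuals at the teacher level.
    residuals = defaultdict(list)
    for teacher, prior, current in records:
        projected = intercept + slope * prior
        residuals[teacher].append(current - projected)
    return {teacher: mean(values) for teacher, values in residuals.items()}


# Hypothetical data: teacher A's students land above the projection, B's below.
records = [("A", 40, 52), ("A", 60, 72), ("B", 40, 48), ("B", 60, 68)]
scores = simple_value_added(records)
```

In this toy example, teacher A would be labeled as of “added value” and teacher B as not, solely because their students' residuals are positive and negative, respectively; nothing in the calculation establishes that the teacher caused the difference.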

Given the statistical sophistication VAMs were to bring to the objective evaluation of teachers’ effects, and stronger accountability policies and initiatives in the name of U.S. educational reform (see, for example, Collins 2014; Eckert and Dabrowski 2010; Kappler Hewitt 2015), VAMs were incentivized by former U.S. President Obama’s Race to the Top Competition (2011). Via Race to the Top, the U.S. government offered states $4.35 billion in federal support (upon which all U.S. states historically rely), on the condition that states would attach teachers’ evaluations to students’ test scores using a VAM-based system. States’ VAM implementations were also underscored via the U.S. congressional authorization of states’ NCLB waivers (U.S. Department of Education 2014), which excused states that implemented a VAM-based teacher evaluation system from meeting NCLB’s former goal that 100% of students across states reach proficiency by 2014 (U.S. Department of Education 2010). Consequently, as a result of both of these federal policy initiatives, almost all 50 states plus Washington D.C. constructed or adopted and then implemented a VAM-based teacher evaluation system by 2014 (Paufler and Amrein-Beardsley 2014).

While the federal government has since reduced the strictness of its aforementioned policy-based footholds, namely through the passage of its Every Student Succeeds Act (ESSA 2016) which reduced federal mandates surrounding VAMs, it is worth noting that many states and school districts continue to use VAMs for high-stakes employment decisions. More specifically, while the federal passage of ESSA allowed for greater local control over states’ and school districts’ teacher evaluation systems and no longer required states and school districts to rely on VAMs as a measure meant to “meaningfully differentiate [teacher] performance… including as a significant factor, data on student growth [in achievement over time] for all students” (U.S. Department of Education 2012), current evidence indicates that many states and school districts still continue to use VAMs state- and district-wide (Close et al. 2020; Ross and Walsh 2019). For example, 12 states still allow or encourage districts to make teacher termination decisions based solely or primarily on their VAM data, and 23 states allow or encourage such decisions based on a combination of districts’ VAM and other evaluative (e.g., observational) data (Close et al. 2020). Given the human and financial capital that states had already invested in their use, it stands to reason that policy inertia is sustaining the continued use of VAMs, including in the New Mexico case at hand.

Likewise, the theory of change at the time (and still ongoing; see Koretz 2017) is that by objectively holding teachers accountable for that which they did or did not do effectively, in terms of the “value” they did or did not “add” to their students’ achievement over time, teachers would be incentivized to teach more effectively and students would consequently learn and achieve more. If high stakes were attached to teachers’ students’ test output (e.g., teacher pay, tenure, termination), teachers would take their teaching and their students’ learning and achievement more seriously. This, along with the data that VAMs were to also provide to help teachers improve upon their practice (i.e., the formative functions of VAMs), would ultimately help improve achievement throughout the U.S., especially in the disadvantaged schools most in need of educational reform, all of which would help the U.S. reclaim its global superiority (see, for example, Weisberg et al. 2009).

It should be noted here, though, that this theory of change, along with the use of VAMs to help satisfy it, was not isolated to the U.S. In his 2011 book Finnish Lessons, for example, Sahlberg coined the Global Educational Reform Movement (GERM) acronym which captured other countries’ (e.g., Australia, England, Korea, Japan) similar policy movements to accomplish similar goals and outcomes. In short, GERM “radically altered education sectors throughout the world with an agenda of evidence-based policy based on the [same] school effectiveness paradigm…combin[ing] the centralised formulation of objectives and standards, and [the] monitoring of data, with the decentralisation to schools concerning decisions around how they seek to meet standards and maximise performance” (p. 5).

Likewise, while the U.S. was leading other nations in terms of its policy-backed and funded initiatives as based on VAMs, other countries (e.g., Chile, Ecuador, Denmark, England, Sweden) continue to entertain similar policy ideas. Put differently, “in the U.S. the use of VAM as a policy instrument to evaluate schools and teachers has been taken exceptionally far [emphasis added] in the last 5 years,” while “most other high-income countries remain [relatively more] cautious towards the use of VAM[s]” (Sørensen 2016, p. 1). Nevertheless, with the support of global bodies such as the Organisation for Economic Co-operation and Development (OECD), VAMs continue to be adopted worldwide for purposes and uses similar to those in the U.S. (see also Araujo et al. 2016).

Notwithstanding, because the U.S. took VAMs and VAM-based educational reform policies “exceptionally far” (Sørensen 2016, p. 1), what we have learned from states’ uses of VAMs can and should be understood by others across nations considering whether to adopt or implement VAMs for similar purposes. In the U.S., because VAMs were also literally that which landed the above states (and districts within states) in court, this manuscript should be of interest to others both nationally and internationally. It is prudent that others within and beyond U.S. borders pay attention to that which happened within and across these cases, and what eventually led to the “lots of litigation” filed throughout U.S. courts in these regards by 2015 (Education Week 2015).

2 Purpose of the study

Given that Education Week (2015) presents a broad, case-by-case description of the aforementioned 15 cases, for this study researchers purposefully selected one of these 15 cases to help others: (1) better understand the measurement and pragmatic issues at play, in some way or another, across cases in that the issues generalize across cases, and likely states, especially given their ongoing use of VAMs (Amrein-Beardsley and Geiger 2019; Close et al. 2020); and (2) better understand these issues in context, in the largest and arguably most high-profile, controversial, and consequential case of the set. The case in point occurred in New Mexico, with consequences to be attached to teachers’ VAM scores including but not limited to the flagging of teachers’ professional files if determined to be not of “added value,” which ultimately prevented teachers from moving teaching positions within the state given their official “ineffective” classifications. Also at issue were teacher termination policies attached to New Mexico teachers’ VAM scores.

3 The case of New Mexico

During the 2013–2014 through 2015–2016 school years, New Mexico’s teacher evaluation system, the NMTEACH Educator Effectiveness System (EES), was seen by many as one of the toughest across the U.S. (Burgess 2017; Kraft and Gilmour 2017; see also Amrein-Beardsley and Geiger 2019). Not only was student growth data the preponderant criterion informing a teacher’s overall evaluation score, but the overall distributions of teacher effectiveness ratings across the state were nearly normal for these 3 years. That is, the majority of teachers were rated as effective; fewer and nearly equal proportions of teachers were rated as slightly worse and slightly better than effective, respectively; and even fewer and nearly equal proportions of teachers were rated as much worse and much better than effective, respectively (see Fig. 1).

Unlike other states (e.g., North Carolina, Tennessee) that contracted with companies (e.g., SAS Institute Inc. 2019) that sold VAMs (e.g., the EVAAS), the New Mexico VAM was a homegrown model created by Pete Goldschmidt (see Martinez et al. 2016; see also Reiss 2017). Per teacher, a separate VAM score was calculated per subject, per grade, and per standardized assessment. Each teacher’s overall VAM score was a weighted average of all individual VAM scores. The statistical model used to generate each individual VAM score was supposed to control for whether a course had been identified as an “intervention course,” the student’s grade level (if a course contained students from multiple grades), and the proportion of time a student had spent with each specific teacher (New Mexico Public Education Department [NMPED] 2016; see pp. 14–22 for full model specifics and formulas). A VAM-eligible teacher (i.e., one who taught in a tested subject and grade) had up to half of their overall evaluation based on their VAM score, with the remaining half based on a combination of classroom observations, student surveys, and attendance (NMPED 2016) (see Table 1).
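As a minimal sketch of the weighted-average step described above, the following assumes each individual VAM score (per subject, grade, and assessment) arrives with a numeric weight. The actual weighting scheme is specified in NMPED (2016) and is not reproduced here; the function name `overall_vam_score` and the weights in the example (hypothetical student counts) are illustrative assumptions only.

```python
def overall_vam_score(individual_scores):
    """Weighted average of (vam_score, weight) pairs for one teacher.

    The weights are an assumption for illustration (e.g., students per
    course); they are not the weights used in the New Mexico system.
    """
    total_weight = sum(weight for _, weight in individual_scores)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    weighted_sum = sum(score * weight for score, weight in individual_scores)
    return weighted_sum / total_weight


# Hypothetical teacher with three individual VAM scores and their weights.
example_scores = [(0.30, 50), (-0.10, 25), (0.10, 25)]
overall = overall_vam_score(example_scores)  # roughly 0.15
```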

In comparison, other states’ evaluation systems either did not rely on student growth data nearly as much, and/or teachers had a much higher likelihood of receiving an evaluation of effective or better. For example, during the 2015–2016 school year, only one third of states in the U.S. (including New Mexico) required that student growth data be the “preponderant criterion” in teachers’ overall evaluation scores (Doherty and Jacobs 2015). Further, New Mexico was one of only two states where over 1% of all teachers received an overall evaluation score in the lowest possible category (Kraft and Gilmour 2017).

In the New Mexico case, which was titled American Federation of Teachers – New Mexico and the Albuquerque Federation of Teachers (Plaintiffs) v. New Mexico Public Education Department (Defendants) and was being tried and heard in the state’s First Judicial District Court, the primary issue was the use of the state’s homegrown VAM (see Swedien 2014), specifically to account for up to 50% of every VAM-eligible teacher’s annual evaluation. The specific violations contested were that (1) New Mexico teachers received poor VAM-based ratings because of flawed and incomplete student-level data (e.g., teachers were linked to the wrong students, students they never taught, subject areas they never taught, or using tests that did not map onto that which they taught); and (2) the aforementioned consequential decisions to be attached to all VAM-eligible teachers’ VAM-based evaluation scores (e.g., flagging files and teacher termination decisions) were arbitrary and not legally defensible, as per the education profession’s Standards for Educational and Psychological Testing (American Educational Research Association (AERA), American Psychological Association (APA), and National Council on Measurement in Education (NCME) 2014), hereafter referred to as the Standards.

4 Conceptual framework

In order to investigate the empirical and pragmatic matters addressed in this court case, researchers conducted a case study analysis of all documents, exhibits, and data submitted for this case. Researchers framed their analyses using the key measurement concepts resident within the Standards (AERA et al. 2014), more specifically given issues with: (1) reliability, (2) validity (i.e., convergent-related evidence), (3) bias, (4) fairness, and (5) transparency, with emphases also on (6) consequential validity, as per (6a) whether VAMs are being used to make consequential decisions using concrete (e.g., not arbitrary) evidence and (6b) whether VAMs’ unintended consequences are also of legal pertinence and concern. Researchers define and describe each of these areas of measurement and pragmatic concern next, also as per the current research literature per concept.

4.1 Reliability

As per the Standards (AERA et al. 2014), reliability is defined as the degree to which test- or measurement-based scores “are consistent over repeated applications of a measurement procedure [e.g., a VAM] and hence are inferred to be dependable and consistent” (pp. 222–223) for the individuals (e.g., teachers) to whom the test- or measurement-based scores pertain. In terms of VAMs, reliability (also known as intertemporal stability; see, for example, McCaffrey et al. 2009) should be observed when VAM estimates of teacher effectiveness are more or less consistent over time, from 1 year to the next, regardless of the type of students and perhaps subject areas teachers teach. This is typically captured using “standard errors, reliability coefficients per se, generalizability coefficients, error/tolerance ratios, item response theory (IRT) information functions, or various indices of classification consistency” (AERA et al. 2014, p. 33) that help to both situate and make explicit VAM estimates and their (sometimes sizeable) errors.

Reporting on reliability is also done to make transparent the sometimes sizeable errors that come along with VAM estimates, to better contextualize the VAM-based inferences that result. This is especially critical when VAM outputs are to be attached to high-stakes consequences, which rely on the stability of these measures over time, because without adequate reliability, valid interpretations and uses are difficult to defend.

What is becoming increasingly evident across research studies in this area is that VAMs are often unreliable, unstable, and sometimes “notoriously” unhinged (Ballou and Springer 2015, p. 78). While “proponents of VAM[s] are quick to point out that any statistical calculation has error of one kind or another” (Gabriel and Lester 2013, p. 4; see also Harris 2011), the errors prevalent across VAMs are consistently large enough to warrant caution, especially before high-stakes consequences are attached to VAM output.

More pragmatically, researchers have found that the possibility of teachers being misclassified (i.e., classified as adding value 1 year and then not adding value 1 to 2 years later) can range from 25% to as high as 59% (Martinez et al. 2016; Schochet and Chiang 2013; Yeh 2013). While reliability can be increased with 3 years of data, there still exists at least a 25% chance that teachers may be misclassified. Additionally, after including 3 years of data, the strength that more data add to VAM reliability plateaus (Brophy 1973; Cody et al. 2010; Glazerman and Potamites 2011; Goldschmidt et al. 2012; Harris 2011; Ishii and Rivkin 2009; Sanders as cited in Gabriel and Lester 2013).
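One simple way to express the kind of misclassification described above is as the share of teachers whose “added value” label flips from 1 year to the next. The sketch below is illustrative only: the function name `misclassification_rate`, the cut score of zero, and the teacher scores are hypothetical assumptions, and the studies cited above used their own definitions and thresholds.

```python
def misclassification_rate(year1, year2, threshold=0.0):
    """Share of teachers whose label (score >= threshold) differs across years.

    year1 and year2 map teacher IDs to VAM scores; only teachers present
    in both years are compared. The threshold is an illustrative assumption.
    """
    common = year1.keys() & year2.keys()
    if not common:
        raise ValueError("no teachers appear in both years")
    flips = sum(
        (year1[t] >= threshold) != (year2[t] >= threshold) for t in common
    )
    return flips / len(common)


# Hypothetical scores: only T2 changes from "not added value" to "added value".
year1 = {"T1": 0.4, "T2": -0.2, "T3": 0.1, "T4": -0.5}
year2 = {"T1": 0.3, "T2": 0.1, "T3": 0.2, "T4": -0.1}
rate = misclassification_rate(year1, year2)  # 1 of 4 teachers flips
```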

What this means in practice, for example, with a correlation of r = 0.40 and R² = 0.16, which is an optimistic r and R² given the current research, is illustrated in Table 2 (adapted with permission and corrections from Raudenbush and Jean 2012).

Illustrated is that out of every 1000 teachers, 750 teachers would be identified correctly and 250 teachers would not. That is, one in four teachers would be falsely identified as either being worse or better than they were originally classified.

Of concern here is the prevalence of false positive or false discovery errors (i.e., type I errors), whereby an effective teacher is falsely identified as ineffective. However, the inverse is equally likely, defined as false negative or false nondiscovery errors (i.e., type II errors), whereby an ineffective teacher might go unnoticed instead. Regardless of which type of error is worse, in that “[f]alsely identifying [effective] teachers as being below a threshold poses risk to teachers but failing to identify [ineffective] teachers who are truly ineffective poses risks to students” (Raudenbush and Jean 2012), there still exists one in four teachers who are likely to be falsely identified. This is clearly problematic, especially when consequential decisions are to be tied to VAM output (see also Briggs and Domingue 2011; Chester 2003; Glazerman et al. 2011; Guarino et al. 2012; Harris 2011; Rothstein 2010; Shaw and Bovaird 2011; Yeh 2013).

4.2 Validity

As per the Standards (AERA et al. 2014), validity “refers to the degree to which evidence and theory support the interpretations of test scores for [the] proposed uses of tests” (p. 11). Likewise, “[v]alidity is a unitary concept,” as measured by “the degree to which all the accumulated evidence supports the intended interpretation of [the test-based] scores for [their] proposed use[s]” (p. 14). As per Kane (2017):

If validity is to be used to support a score interpretation, validation would require an analysis of the plausibility of that interpretation. If validity is to be used to support score uses, validation would require an analysis of the appropriateness of the proposed uses, and therefore, would require an analysis of the consequences of the uses. In each case, the evidence need[ed] for validation would depend on the specific claims being made. (p. 198)

When establishing evidence of validity, or explicating validity (Kane 2017), accordingly, one must be able to support with evidence that accurate inferences can be drawn from the data being used for whatever inferential purposes are at play (see also Cronbach and Meehl 1955; Kane 2006, 2013).

While there is a “multiplicity of validity vocabularies” (Markus 2016, p. 252), however, as well as multiple forms of validity evidences (e.g., content-related, criterion-related, construct-related, consequential-related) that are used to both accommodate and differentiate validations of test score inferences and justifications of test use (Cizek 2016), most often examined in this area of research is convergent-related evidence of validity (i.e., “the degree of relationship between the test scores and [other] criterion scores” taken at the same time; Messick 1989, p. 7). While current conceptions of validity have evolved well beyond capturing any specific evidences or instances of validity (e.g., convergent-related evidence of validity), especially in isolation of other evidences of validity (e.g., that should be used to capture a more holistic interpretation of validity), it is important to note, again, that researchers examining VAM-related evidences of validity have consistently and disproportionately focused on convergent-related evidences of validity in this area of research. While arguably overly simplistic and reductionistic (see, for example, Newton and Shaw 2016), because these evidences are what exist in this area of research, so much so that other types of validity are rarely mentioned or discussed (e.g., consequential-related evidences of validity), and given the purpose of this study revolves around the court documents pertaining to the New Mexico case in which only these evidences were presented to the court, only evidences of convergent-related validity are discussed and examined in this study.

In New Mexico, accordingly, convergent-related evidences of validity were used to assess the extent to which measures of similar constructs concurred or converged. The construct at issue here was teacher effectiveness, and convergent-related evidences of validity were positioned to the court as necessary to assess, for example, whether teachers who posted large and small value-added gains or losses over time were the same teachers deemed effective or ineffective, respectively, using other measures of teacher effectiveness (e.g., observational scores, student survey scores) collected at the same time.

In terms of the VAM literature writ large, convergent-related evidences of validity as per the current research suggest that VAM estimates of teacher effectiveness do not strongly correlate with the other measures typically used to measure the teacher effectiveness construct (i.e., observational scores). While some argue that the measures other than VAMs are at fault given their imperfections, others argue that all of the measures, including VAMs, are at fault because they are all in and of themselves imperfect and flawed. Likewise, while some also argue that knowing there is a low correlation between any VAM and any set of observational scores tells us nothing about whether either one, neither, or both measures are useful, others argue that should these indicators be mapped onto a general construct called teaching effectiveness, they should correlate. Should high-stakes decisions be attached to output from either or multiple measures, these correlations must be higher before consequences can be defended.

If the large-scale standardized achievement test scores that contribute to VAM estimates and the other measures typically used to measure teacher effectiveness were all reliable and valid measures of the teaching effectiveness construct, effective teachers would rate well, more or less consistently, from 1 year to the next, across indicators. Conversely, ineffective teachers would rate poorly, more or less consistently, from 1 year to the next, across indicators used. However, this does not occur in reality, except in slight magnitudes, whereby the correlations being observed among both mathematics and English/language arts value-added estimates and teacher observational or student survey indicators are low to moderate (see Footnote 2) in size (Sloat et al. 2018; Grossman et al. 2014; Harris 2011; Hill et al. 2011; see also Koedel et al. 2015). These correlations are also akin to those observed via the renowned Bill & Melinda Gates Foundation’s Measures of Effective Teaching (MET) studies in which researchers searched for and assessed the same evidences of convergent-related validity (Cantrell and Kane 2013; Kane and Staiger 2012; see also Polikoff and Porter 2014; Rothstein and Mathis 2013).

4.3 Bias

As per the Standards (AERA et al. 2014), bias pertains to the validity of the inferences to be drawn from test-based scores. The Standards define bias as the “construct underrepresentation or construct-irrelevant components of test scores that differentially affect the performance of different groups of test takers and consequently the…validity of interpretations and uses of their test scores” (p. 216). Biased estimates, also known as systematic error as pertaining to “[t]he systematic over- or under-prediction of criterion performance” (p. 222), are observed when said criterion performance varies for “people belonging to groups differentiated by characteristics not relevant to the criterion performance” (p. 222) of measurement.

Specific to VAMs, since schools do not randomly assign teachers the students they teach (Paufler and Amrein-Beardsley 2014), whether teachers’ students are invariably more or less motivated, smart, knowledgeable, capable, and the like can bias students’ test-based data and teachers’ test-based data once aggregated. Bias is subsequently observed when VAM estimates of teacher effectiveness correlate with student background variables other than the indicators of interest (i.e., student achievement). If VAM-based estimates are highly correlated to biasing factors, it becomes impossible to make valid inferences about the causes of student achievement growth and teachers’ impacts on said growth, given the factors that bias such indicators and ultimately distort their interpretations (Messick 1989; see also Haladyna and Downing 2004).
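The correlational check described above can be sketched as follows. This is a minimal illustration, not the analysis conducted for the court case: it computes a Pearson correlation between teachers' VAM estimates and one classroom background variable. The helper name `pearson_r`, the choice of FRL share as the background variable, and all values are hypothetical assumptions.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2)")
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / sqrt(sxx * syy)


# Hypothetical teacher-level data: share of FRL students and VAM estimate.
frl_share = [0.1, 0.3, 0.5, 0.7, 0.9]
vam_estimate = [0.4, 0.2, 0.1, -0.1, -0.3]
r_bias = pearson_r(frl_share, vam_estimate)  # strongly negative in this toy data
```

A correlation near zero would be consistent with little bias on that variable, whereas a strong correlation, as in this contrived example, would signal that estimates track classroom composition. A correlation alone cannot distinguish bias from true differences in effectiveness across classrooms.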

Many VAM researchers agree that estimates of teachers who teach smaller classes; disproportionate percentages of gifted students, ELLs, special education (SPED) students, and students who receive free-or-reduced lunches (FRL); and students retained in grade are adversely impacted by bias (Ballou and Springer 2015; McCaffrey et al. 2009; Newton et al. 2010; Rothstein 2009, 2010, 2017). In perhaps the most influential study on this topic, Rothstein (2009, 2010) illustrated VAM-based bias when he found that students’ 5th grade teachers were better predictors of students’ 4th grade growth than were the students’ 4th grade teachers. While others have certainly called into question Rothstein’s work (see, for example, Goldhaber and Chaplin 2015; Guarino et al. 2014; Koedel and Betts 2009), what is known in this regard is that over the past decade VAM-based evidence of bias has been investigated at least 33 times in articles published in top peer-reviewed journals (Lavery et al. 2019). Demonstrated across these articles is that bias is still of great debate, as is whether statistically controlling for bias by using complex statistical approaches to account for nonrandom student assignment makes such biasing effects negligible or “ignorable” (Rosenbaum and Rubin 1983; see also Chetty et al. 2014; Koedel et al. 2015; Rothstein 2017).

4.4 Fairness

The Standards define fairness as the impartiality of “test score interpretations for intended use(s) for individuals from all [emphasis added] relevant subgroups” (AERA et al. 2014, p. 219). Issues of fairness arise when a test or test-based inference or use impacts some more than others in unfair or prejudiced, yet often consequential ways. Given the recent emphases on issues of fairness, accordingly, the Standards have prompted an expanded book focusing on such issues (Dorans and Cook 2016).

The main issue here is that states and districts can only produce VAM-based estimates for approximately 30–40% of all teachers (Baker et al. 2013; Gabriel and Lester 2013; Harris 2011). The other 60–70%, which sometimes includes entire campuses of teachers (e.g., early elementary and high school teachers) or teachers who do not teach the core subject areas assessed using large-scale standardized tests (e.g., mathematics and English/language arts), cannot be evaluated or held accountable using teacher-level value-added data. What VAM-based data provide, then, are measures of teacher effectiveness for only a relatively small handful of teachers (Harris and Herrington 2015; Jiang et al. 2015; Papay 2010). When stakeholders use these data to make consequential decisions, issues with fairness become even more important, in that some teachers are more likely to realize the negative or positive consequences attached to VAM-based data, simply given the grades and subject areas they teach.

4.5 Transparency

While the Standards (AERA et al. 2014) do not explicitly define transparency, this concept pertains to the openness, understandability, and eventual use of the inferences derived via educational measurements and instruments including VAMs. For the purposes of this study, researchers define transparency as the extent to which something is quite simply accessible (e.g., attainable) and then comprehensible and usable.

In terms of VAMs, the main issue here is that most VAM-based estimates do not seem to make sense to those at the receiving end. Teachers and principals do not seem to understand the models being used to evaluate teachers; hence, they are reportedly unlikely to use VAM output for the formative purposes for which VAMs are also intended (Eckert and Dabrowski 2010; Gabriel and Lester 2013; Goldring et al. 2015; Graue et al. 2013; Kelly and Downey 2010). Rather, practitioners describe VAM reports as inaccessible, confusing, not comprehensive in terms of the concepts and objectives teachers teach, ambiguous in terms of teachers’ efforts at individual student and composite levels, and oft-received months after students leave teachers’ classrooms.

For example, teachers in Houston (home to another one of the legal cases highlighted in Education Week 2015) collectively expressed that they were learning little about what they did effectively or how they might use their value-added data to improve their instruction (Collins 2014). Teachers in North Carolina reported that they were “weakly to moderately” familiar with their value-added data (Kappler Hewitt 2015, p. 11). Eckert and Dabrowski (2010) also demonstrated that in Tennessee (home to two other legal cases cited in Education Week 2015), teachers maintained that there was little to no support in helping teachers understand or use their value-added data to improve upon their practice (see also Harris 2011). Altogether, this is problematic in that one of the main purported strengths of nearly all VAMs is the wealth of diagnostic information accumulated for formative purposes (see also Sanders et al. 2009), though at the same time, model developers sometimes make “no apologies for the fact that [their] methods [are] too complex for most of the teachers whose jobs depended on them to understand” (Carey 2017; see also Gabriel and Lester 2013).

4.6 Consequential validity

As per Messick (1989), “[t]he only form of validity evidence [typically] bypassed or neglected in these traditional formulations is that which bears on the social consequences of test interpretation and use” (p. 8). In other words, the social and ethical consequences matter as well (Messick 1980; Kane 2013). The Standards (AERA et al. 2014) recommend ongoing evaluation of both the intended and unintended consequences of any test as an essential part of any test-based system, including those based upon VAMs.

The Standards (AERA et al. 2014) state that the responsibility of ongoing evaluation of social and ethical consequences should rest on the shoulders of the governmental bodies that mandate such test-based policies, as they are those who are to “provide resources for a continuing program of research and for dissemination of research findings concerning both the positive and the negative effects of the testing program” (AERA 2000; see also AERA Council 2015). However, this rarely occurs. The burden of proof, rather, typically rests on the shoulders of VAM researchers to provide evidence about the positive and negative effects that come along with VAM use, to explain these effects to external constituencies including policymakers, and to collectively work to determine whether VAM use, given the consequences, can be rendered as acceptable and worth the financial, time, and human resource investments (see also Kane 2013).

Intended consequences

As noted prior, the primary intended consequence of VAM use is to improve teaching and help teachers (and schools/districts) become better at educating students by measuring and then holding teachers accountable for their effects on students (Burris and Welner 2011). The underlying logic is that the stronger the consequences, the stronger the motivation, which in turn leads to stronger intended effects. Secondary intended consequences include replacing and improving upon the nation’s antiquated teacher evaluation systems (see, for example, Weisberg et al. 2009).

Yet, in practice, research evidence supporting whether VAM use has led to these intended consequences is suspect given the void of evidence supporting such intended effects. For improving teaching and student learning, as also noted prior, VAM estimates may tell teachers, schools, and states little-to-nothing about how teachers might improve upon their instruction, or how all involved might collectively improve student learning and achievement over time (Braun 2015; Corcoran 2010; Goldhaber 2015). For reforming the nation’s antiquated teacher evaluation systems, recent evidence suggests that this has not occurred (Kraft and Gilmour 2017).

Unintended consequences

Simultaneously, stakeholders often fail to recognize VAMs’ unintended consequences (AERA 2000; see also AERA Council 2015). Policymakers must present evidence on whether VAMs cause unintended effects and whether the said unintended effects outweigh their intended effects, all things considered. Policymakers should also contemplate the educative goals at issue (e.g., increased student learning and achievement), alongside the positive and negative implications for both the science and ethics of using VAMs in practice (Messick 1989, 1995).

As summarized by Moore Johnson (2015), unintended consequences include, but are not limited to: (1) teachers being more likely to “literally or figuratively ‘close their classroom door’ and revert to working alone…[which]…affect[s] current collaboration and shared responsibility for school improvement” (p. 120); (2) teachers being “[driven]…away from the schools that need them most and, in the extreme, causing them to leave [or to not (re)enter] the profession” (p. 121); and (3) teachers avoiding teaching high-needs students if teachers perceive themselves to be at greater risk of teaching students who may be more likely to hinder their value-added, “seek[ing] safer [grade level, subject area, classroom, or school] assignments, where they can avoid the risk of low VAMS scores” (p. 120), all the while leaving “some of the most challenging teaching assignments…difficult to fill and likely…subject to repeated [teacher] turnover” (p. 120; see also Baker et al. 2013; Collins 2014; Hill et al. 2011). The findings from these studies and others point to damaging unintended consequences where teachers view and react to students as “potential score increasers or score compressors; [s]uch discourse dehumanizes students and reflects a deficit mentality that pathologizes these student groups” (Kappler Hewitt 2015, p. 32; see also Darling-Hammond 2015; Gabriel and Lester 2013; Harris and Herrington 2015).

In sum, as per the Standards (AERA et al. 2014), ongoing evaluation of all these measurement issues as pertaining to VAMs and VAM use is essential, although such evaluation is rarely committed to or carried out in practice. The American Statistical Association (ASA 2014), the AERA Council (2015), and the National Academy of Education (Baker et al. 2010) have underscored similar calls for research within their associations’ position statements about VAMs (see also Harris and Herrington 2015).

Notwithstanding, researchers used the above to frame this case study analysis, again, as primarily related to the measurement and pragmatic issues presented to the court in this case in New Mexico. It is this set of issues that researchers set out to make more transparent, to help others throughout the U.S. and internationally better understand the issues of primary dispute in this high-profile case, and also across cases (see Education Week 2015), all of which surround states’ (or districts’) high-stakes uses of teachers’ value-added estimates and these measurement areas of concern. It is important to note again, however, that while researchers examined court documents using these six key measurement concepts (i.e., reliability, validity [i.e., convergent-related evidence], bias, fairness, transparency, and consequential validity) and found evidence of issues across all areas of interest, researchers conducted actual analyses using data as pertinent to only the first three (i.e., reliability, validity [i.e., convergent-related evidence], and bias).

5 Methods

Researchers conducted a case study analysis (Campbell 1975; Flyvbjerg 2011; Gerring 2004; Ragin and Becker 2000; Thomas 2011; VanWynsberghe and Khan 2007) to examine the legal documents in this case including official complaints, exhibits, affidavits, and other court documents including court rulings. The case study approach, according to VanWynsberghe and Khan (2007), best suits this type of study given researchers’ (1) nonrepresentative sample of participants (i.e., from the state of New Mexico), (2) emphases on contextual detail (i.e., official court documents as situated within New Mexico), (3) focus on nonexperimentally controlled events (i.e., a teacher-evaluation system implemented and lived in practice), (4) well-defined parameters (i.e., the state of New Mexico’s teacher evaluation system), and (5) multiple data sources (i.e., legal documents including complaints, exhibits, affidavits, etc.).

Researchers’ primary intent was to determine what the issues of the case were, as aligned to the afore-described conceptual framework (i.e., reliability, validity [i.e., convergent-related evidence], bias, fairness, transparency, and consequential validity). Researchers’ intent was to help others understand how this teacher evaluation system was being used and played out in the real world (Flyvbjerg 2011). Again, this teacher evaluation system was predicated upon one state’s large-scale educational policies, with high-stakes consequential decisions also at stake and under legal dispute. However, generalizations may not be permitted given researchers’ sample of convenience (i.e., all documents taken from one state that may or may not generalize to other states’ teacher evaluation systems), although naturalistic generalizations (e.g., across other states with similar teacher evaluation systems) might certainly be warranted on a case-by-case basis (Stake 1978; Stake and Trumbull 1982).

Researchers also calculated and assessed New Mexico’s teacher evaluation systems’ actual levels of reliability, validity (i.e., convergent-related evidence), and potential for bias (as also aligned with researchers’ afore-described conceptual framework). Researchers did this statistically, with their primary intent to determine whether New Mexico’s teacher evaluation data were indeed reliable, valid, and biased (or unbiased), both in isolation and in comparison to other states’ or districts’ teacher evaluation systems as based on the current research literature. Researchers’ intent was not to test the state-level VAM (e.g., by running the state data through another VAM to assess the state’s homegrown VAM). Rather, researchers’ intent was to assess the actual VAM output as used throughout New Mexico and situate findings within the current literature capturing what we know about other VAMs in terms of their levels of reliability, validity (i.e., convergent-related evidence), and potential for bias (or a lack thereof) to determine how this system was functioning, relatively and perhaps well enough to make and then legally defend the consequential decisions being attached to New Mexico teachers’ VAM estimates.

5.1 Data collection

As mentioned, researchers examined the official complaints, exhibits, affidavits, and other court documents of legal pertinence in this case. More specifically, researchers examined 26 exhibits (i.e., exhibits A–Z) that included the following: exhibits A–E included documents describing the key components of New Mexico’s teacher evaluation system, as well as the VAM upon which the system was based (i.e., as developed by the state’s former Assistant Secretary for Assessment and Accountability and value-added modeler, Pete Goldschmidt; see also Swedien 2014), although it should be mentioned that nowhere across exhibits and court documents is the actual model equation illustrated or explained (exhibit D was the closest, but only included information about general VAMs and VAM equation approximations and assumptions). Otherwise, no information was made available to the court to describe the model, or the model’s technical properties or merits. Apparently, “post implementation/use statistics from the [New Mexico] model…[are]…managed by the [New Mexico] Public Education Department.” Those external to that system are not privy to data or results (T. Hand, personal communication, March 17, 2017). This also speaks to the model’s potential issues regarding transparency, as defined prior (see also forthcoming).

Exhibits F–I included memorandums released to all New Mexico school superintendents and human resource officers to supplement, help explain, or respond to questions given the information included in exhibits A–E. Exhibits J–N included technical information about the student-level tests that were used to calculate teachers’ value-added estimates (e.g., the state of New Mexico’s Standards Based Assessments [SBAs], End of Course assessments [EOCs], Dynamic Indicators of Basic Early Literacy Skills [DIBELS]). Exhibits O–S included information about the state’s observational system, modified (see Footnote 3) from Charlotte Danielson’s Framework for Teaching (Danielson Group, n.d.), and the state’s homegrown student survey system, both of which were considered to be the state’s other “multiple measures” (along with teacher attendance; see Footnote 4) used to help evaluate New Mexico’s teachers. Exhibit T included the state’s press release regarding the teacher evaluation scores from academic year 2014–2015. Lastly, exhibits U–Z included the results from analyses of teachers’ evaluation scores conducted by a New Mexico Legislative Education Study Committee that was created “to compile general perceptions, issues, and concerns into a summary report, which [was] to be provided to the [state].” Related, also included within exhibits U–Z was a series of letters submitted by teachers concerned about the scores they received, and a series of tables describing the errors that the state had allegedly made when calculating teachers’ value-added estimates. These errors included but were not limited to teachers being held accountable for test scores of students they did not teach, teachers’ students whose scores were missing, teachers being listed as teaching grade levels or subject areas different than those they actually taught, teachers being held accountable using test data for subject areas they did not teach, and the like.
Researchers also examined two affidavits, both of which were submitted by the expert witness working on behalf of the plaintiffs in this case (totaling 113 pages of text), and all other relevant court documents including plaintiff and defendant witness disclosures, retention agreements, deposition notices, official complaints, case documents, and court rulings.

It should also be noted here, though, that in many cases researchers also sought out supplementary resources to help them explore particular questions they had while reviewing the documents officially submitted for this case. However, they did not find any additional documents of value in terms of helping to explain, for example, the state’s VAM, the technical properties of the tests used by the state beyond that which was included in exhibits J–N, user guides or other technical information about the observational and survey systems used in the state, and the like. This is problematic, including for members of the profession or public looking for this type of information, which many might argue should be made more publicly available. It also indicates that the documents reviewed for this study, as taken directly from the case, were all that really spoke to the issues at hand, again, in court as well as in practice.

For researchers’ statistical analyses of New Mexico’s teacher evaluation systems’ levels of reliability, validity (i.e., convergent-related evidence), and potential for bias, researchers collected: (1) teacher-level VAM-based estimates for all New Mexico teachers, as calculated by the state; (2) classroom observation scores with (2a) scores from Danielson’s modified domains 2 and 3 (i.e., as related to student “learning”) weighted differentially than (2b) scores from Danielson’s modified domains 1 and 4 (i.e., as related to Planning, Preparation, and Professionalism [PPP]); and (3) student survey scores. Researchers used the latter indicators to assess whether teachers’ VAM scores converged (i.e., convergent-related evidence of validity) with teachers’ observational and survey scores.

Population and subsample

The initial data files provided by the state for this lawsuit included 26,966 unique teachers across three academic years: 2013–2014, 2014–2015, and 2015–2016. Of the 26,966 educators, 97.0% (n = 26,160) were certified teachers. For purposes of this analysis, researchers restricted this sample to include certified teachers who had VAM and observation data for all three academic years, as is standard and recommended practice (Brophy 1973; Cody et al. 2010; Glazerman and Potamites 2011; Goldschmidt et al. 2012; Harris 2011; Ishii and Rivkin 2009; Sanders as cited in Gabriel and Lester 2013). Researchers did this while also taking into consideration that these two measures carry the majority of weight in the state’s teacher evaluation system (i.e., these two measures are of most evaluative value as weighted, although weights were not used in these analyses). This resulted in the final sample including 7777 teachers, which was 28.8% of the full dataset or 29.7% of all certified teachers. That the final sample included approximately 30% of the teachers included in the main data files is also important to note as directly related to issues of fairness, which were also of concern to the court in this case (see also forthcoming).
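The sample shares reported above can be re-derived from the three counts given in the text. The following is a minimal sketch in Python; the variable and function names are illustrative only and are not taken from the study.

```python
# Counts as reported in the text above.
total_educators = 26_966      # unique educators in the state data files
certified_teachers = 26_160   # certified teachers among them
final_sample = 7_777          # certified teachers with 3 years of VAM and observation data


def share(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


certified_share = share(certified_teachers, total_educators)
sample_of_all = share(final_sample, total_educators)
sample_of_certified = share(final_sample, certified_teachers)

print(f"Certified teachers: {certified_share}% of all educators")
print(f"Final sample: {sample_of_all}% of all educators")
print(f"Final sample: {sample_of_certified}% of certified teachers")
```

Put differently, roughly seven out of every ten certified teachers in the state files did not have the 3 years of VAM and observation data needed to enter the analysis.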

5.2 Data analyses

For case study purposes, researchers analyzed all of the written text included within the pages of the documents described prior. Researchers read through each document coding for text, quotes, and concepts related to the elements of their a priori framework (Miles and Huberman 1994) aligned with the Standards (AERA et al. 2014). Using this deductive approach to coding (in which the categories were preselected from this framework), researchers grouped text, quotes, and concepts by element in the framework per document. This systematic approach to coding also modeled the framework method (Gale et al. 2013; Ritchie et al. 2013).

In terms of the statistical analyses researchers conducted in order to assess the New Mexico teacher evaluation system’s levels of reliability, validity (i.e., convergent-related evidence), and potential for bias, researchers engaged in the following methods of data analyses per area of interest. In terms of reliability, researchers investigated the distribution of teachers’ VAM estimates per teacher over time, as well as the correlations among scores over time. For comparative purposes, researchers calculated the correlations among teachers’ observational, PPP, and student survey scores over the same period of time. Researchers conducted chi-square (χ²) tests to determine if teachers’ ratings along the four variables’ score distributions and degrees of score variation significantly differed from year to year. To do this, they calculated quintiles of each measure’s scores to determine what percentages of teachers moved among quintiles (chosen given the state of New Mexico classifies teachers as per their evaluation output using five effectiveness ratings) from 1 year to the next.
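The quintile-movement procedure described above might be sketched as follows. This is an illustration under stated assumptions, not the researchers’ actual code: the scores are simulated (hypothetical), the function names are invented for this example, and the chi-square statistic shown is the standard Pearson test of independence on the 5 × 5 transition table, which is one plausible reading of the test described.

```python
import random
from collections import Counter


def quintile_ranks(scores):
    """Assign each score a quintile from 1 (lowest fifth) to 5 (highest fifth) by rank."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0] * len(scores)
    for position, idx in enumerate(order):
        ranks[idx] = position * 5 // len(scores) + 1
    return ranks


def transition_table(q_before, q_after):
    """Count teachers in each (earlier quintile, later quintile) cell of a 5x5 table."""
    table = [[0] * 5 for _ in range(5)]
    for a, b in zip(q_before, q_after):
        table[a - 1][b - 1] += 1
    return table


def shift_shares(q_before, q_after):
    """Percentage of teachers whose quintile differed by 0, 1, 2, ... steps."""
    shifts = Counter(abs(a - b) for a, b in zip(q_before, q_after))
    n = len(q_before)
    return {k: 100 * shifts[k] / n for k in sorted(shifts)}


def chi_square_statistic(table):
    """Pearson chi-square statistic for independence of rows and columns."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    return stat


# Hypothetical data: 1000 simulated teachers with weakly related scores in two years.
rng = random.Random(0)
year1 = [rng.gauss(0, 1) for _ in range(1000)]
year2 = [0.4 * s + rng.gauss(0, 1) for s in year1]

q1, q2 = quintile_ranks(year1), quintile_ranks(year2)
table = transition_table(q1, q2)
shares = shift_shares(q1, q2)
chi2 = chi_square_statistic(table)  # compare against a chi-square with 16 degrees of freedom
print(shares, round(chi2, 1))
```

The `shares` dictionary corresponds to the kind of summary reported in the findings (same quintile, one quintile apart, two or more quintiles apart), while `table` holds the full set of quintile-to-quintile permutations.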

In terms of convergent-related evidence of validity, researchers investigated the (cor)relationships between the same indicators (i.e., teachers’ VAM-based estimates, observational scores, PPP scores, and student survey scores). Researchers analyzed correlations among all four variables via calculations of Pearson’s r coefficients between each pair of variables for each year. To determine if the differences between bivariate correlations from year to year were significant, researchers used Fisher’s Z tests (Dunn and Clark 1969, 1971).
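To make the two steps above concrete, the sketch below computes Pearson’s r and then applies a Fisher r-to-z comparison of two correlations. Several assumptions apply: this is the simpler independent-samples form of the test, whereas the Dunn and Clark references concern correlations drawn from the same sample, so the researchers’ exact procedure likely differed; the two correlations used in the example are the endpoints of the VAM-observation range reported in the findings; and the sample sizes are assumed to equal the final sample of 7777.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def fisher_z_test(r1, n1, r2, n2):
    """Compare two correlations via Fisher's r-to-z transform.

    Treats the two samples as independent (a simplifying assumption here).
    Returns the z statistic and a two-sided p value from the normal distribution.
    """
    z1, z2 = math.atanh(r1), math.atanh(r2)
    standard_error = math.sqrt(1 / (n1 - 3) + 1 / (n2 - 3))
    z = (z1 - z2) / standard_error
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p


# Tiny hypothetical score vectors, only to show the correlation step.
r_demo = pearson_r([1, 2, 3, 4, 5], [2, 1, 4, 3, 5])

# Illustrative comparison of r = 0.210 versus r = 0.153 with assumed n = 7777 each.
z_stat, p_value = fisher_z_test(0.210, 7777, 0.153, 7777)
print(round(r_demo, 3), round(z_stat, 2), p_value)
```

With samples this large, even small differences between weak correlations can reach statistical significance, which is worth keeping in mind when reading year-to-year comparisons.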

In terms of potential for bias (or the lack thereof), researchers compared the scores of all four measures per multiple teacher- and school-level subgroups (see Appendix 1 for a full list of the demographics used). Researchers calculated descriptive statistics for teachers’ scores by these teacher- and school-level subgroups, while also analyzing statistically significant differences using t tests or fixed effects analyses of variance (ANOVA). It should also be noted that researchers did not have access to more nuanced or granular data, such as criterion-specific scores for each observation domain or individual item scores for the student surveys, that would have allowed for a more sophisticated analysis of potential bias.
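The subgroup comparisons described above could be sketched as follows. The text does not state which t test variant was used, so Welch’s unequal-variance form is assumed here; the scores are hypothetical, and the function names are invented for this example.

```python
import math
from statistics import mean, variance


def welch_t(group_a, group_b):
    """Welch's t statistic and approximate degrees of freedom for two independent groups."""
    var_a = variance(group_a) / len(group_a)
    var_b = variance(group_b) / len(group_b)
    t = (mean(group_a) - mean(group_b)) / math.sqrt(var_a + var_b)
    df = (var_a + var_b) ** 2 / (
        var_a ** 2 / (len(group_a) - 1) + var_b ** 2 / (len(group_b) - 1)
    )
    return t, df


def one_way_anova_f(groups):
    """F statistic for a fixed-effects one-way ANOVA across two or more groups."""
    all_scores = [s for g in groups for s in g]
    grand_mean = mean(all_scores)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((s - mean(g)) ** 2 for g in groups for s in g)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)


# Hypothetical observation scores for two teacher subgroups.
subgroup_a = [3.1, 3.4, 2.9, 3.8, 3.3]
subgroup_b = [2.7, 3.0, 2.5, 3.2, 2.9]

t_stat, t_df = welch_t(subgroup_a, subgroup_b)
f_stat = one_way_anova_f([subgroup_a, subgroup_b])
print(round(t_stat, 3), round(t_df, 1), round(f_stat, 3))
```

A t test suits subgroup variables with two categories (e.g., gender as recorded by the state), whereas the ANOVA F statistic extends the same comparison to variables with three or more categories. With two equally sized groups, F equals the square of the t statistic.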

6 Findings

6.1 Reliability

Across the documents analyzed for the case study section of this study, the concept of reliability was noted once and only peripherally. Only in exhibit E did the state include information about how teachers’ students’ test scores would be used to calculate teachers’ value-added given how many years of VAM-based data teachers had. The more value-added data the teacher had, the more weight (i.e., at least 50%) the teacher’s value-added scores were to carry. While written in exhibit E was the goal to have 3 years of data per teacher, also written was that teachers with less than 3 years of data (but no less than 1 year of data) would be held accountable for their value-added, or lack thereof.

Again, according to the literature, reliability can be increased with 3 years of data, although after including 3 years of data the strength more data adds to efforts to increase reliability plateaus. As such, having a minimum of 3 years of data is now widely accepted as standard practice in order to get the most reliable VAM estimates possible. What New Mexico had made official, then, contradicts field standards in terms of guaranteeing all evaluated teachers a 3-year minimum. This is especially important when consequential decisions are at play (Brophy 1973; Cody et al. 2010; Glazerman and Potamites 2011; Goldschmidt et al. 2012; Harris 2011; Ishii and Rivkin 2009; Sanders as cited in Gabriel and Lester 2013).

In terms of researchers’ calculations of the state’s actual levels of reliability, using only those teachers for whom 3 years of value-added data were available, researchers found that a plurality of teachers’ VAM-based quintile rankings were the same from year to year (i.e., 31.6% [n = 2455/7771] from 2013–2014 to 2014–2015 and 31.6% [n = 2454/7766] from 2014–2015 to 2015–2016) or differed by one quintile (i.e., 40.6% [n = 3157/7771] from 2013–2014 to 2014–2015 and 39.4% [n = 3060/7766] from 2014–2015 to 2015–2016). However, many teachers also received dissimilar quintile rankings over the same period of time, with over 25% of teachers with scores that differed by two or more quintiles year to year (i.e., 27.8% [n = 2159/7771] from 2013–2014 to 2014–2015; 29.0% [n = 2252/7766] from 2014–2015 to 2015–2016).

To help illustrate what this looked like in practice, researchers generated a figure illustrating New Mexico teachers’ VAM ratings from year one (2013–2014) to year three (2015–2016) to illustrate how teachers’ value-added scores fluctuated over time. Evidenced in Fig. 2 are the percentages of teachers who got a given rating in 1 year (i.e., illustrated on the left) and the same teachers’ subsequent ratings 2 years later (i.e., illustrated on the right).

Visible in Fig. 2 is that 43.1% of teachers who scored in the top quintile as per their VAM ratings in 2013–2014 remained in the same quintile (e.g., highly effective) 2 years later (2015–2016); 24.0% of these same teachers dropped one quintile (e.g., from highly effective to effective); 14.4% dropped two quintiles (e.g., from highly effective to average); 10.9% dropped three quintiles (e.g., from highly effective to ineffective); and 7.5% dropped four quintiles (e.g., from highly effective to highly ineffective). Inversely, 17.71% of teachers who scored in the bottom quintile as per their VAM ratings in 2013–2014 remained in the same quintile 2 years later (e.g., highly ineffective); 19.5% of these same teachers moved up by one quintile (e.g., from highly ineffective to ineffective); 19.9% moved up by two quintiles (e.g., from highly ineffective to average); 21.0% moved up by three quintiles (e.g., from highly ineffective to effective); and 17.7% moved up by four quintiles (e.g., from highly ineffective to highly effective). See also all other permutations illustrated.

These results make sense when situated in the current literature, as also noted prior, whereas teachers classified as “effective” 1 year typically have a 25% to 59% chance of being classified as “ineffective” 1 or 2 years later (Martinez et al. 2016; Schochet and Chiang 2013; Yeh 2013). In New Mexico, 18.4% of teachers deemed “effective” (e.g., highly effective or effective) in the first year were deemed “ineffective” (e.g., ineffective or highly ineffective) 2 years later, and 38.7% of teachers deemed “ineffective” (e.g., ineffective or highly ineffective) in the first year were deemed “effective” (e.g., highly effective or effective) 2 years later. This yields an average movement, akin to the afore-cited literature, of 29% or approximately one out of every three teachers jumping effectiveness ratings within 3 years.
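The 29% figure above appears to be the simple (unweighted) mean of the two movement rates reported in the same paragraph. The sketch below reproduces that arithmetic under this assumption; the variable names are illustrative.

```python
# Movement rates as reported in the text above (percent of teachers).
effective_to_ineffective = 18.4
ineffective_to_effective = 38.7

# Unweighted mean of the two rates (assumed to be how the 29% was obtained).
average_movement = (effective_to_ineffective + ineffective_to_effective) / 2
print(f"{average_movement:.2f}% (rounds to {round(average_movement)}%)")
```

Because the mean is unweighted, it treats the two starting groups as equally sized; a weighted average would differ if the groups’ sizes differed.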

In comparison to the other measures used to evaluate New Mexico teachers, teachers’ observation scores appeared to be the most stable or reliable over time, again, as based on similar reliability estimates of teachers’ observational, PPP, and student survey scores. See Appendix 2 for these scores’ levels of reliability over time.

6.2 Validity

Across the documents analyzed for the case study part of this study, the concept of validity (i.e., convergent-related evidence of validity) was noted nowhere. Validity, more broadly speaking, was mentioned once in terms of content-related evidence of validity, or whether test scores can be used to make inferences about student achievement, over time, as well as teachers’ impacts on student achievement over time. In exhibit N, the state noted that districts might opt to use tests in addition to those that were the state-approved tests, but districts must evidence to the state that each test pass a content review during which “a panel of content experts and skilled item writers should evaluate the quality of newly-written items” included in the tests to be used for teacher evaluation purposes, after which the district would be required to submit a report to the state describing results. Here, and again elsewhere across documents, the state stops at the most basic level of validity, encouraging or relying seemingly only on logic, face validity, and nothing more empirical. Related, across exhibits J–N that included technical information about the tests used to calculate teachers’ value-added estimates for all of New Mexico’s VAM-eligible teachers, no empirical information was presented to evidence that the states’ tests could or should be used to evaluate student achievement over time or teachers’ impacts on student achievement over time. Rather, standard statistics (e.g., internal consistency statistics, test–retest and split-half reliability indicators, proportion-correct [p] values) were used to evidence that the tests were reliable and valid for purposes of measuring student achievement at one point in time. 
It seems that because many of these tests are already in place across states (e.g., as mandated via the federal government’s former NCLB Act 2001), these tests are being used more out of convenience (and cost savings) for their newly but not yet validated tasks and uses (see Footnote 5).

Otherwise, and in terms of researchers’ calculations of the state’s VAM-based levels of convergent-related evidence of validity as aligned with current practice in this area of research, researchers found that correlations among all measures used in New Mexico to evaluate its teachers were weak to very weak (see Footnote 6). This was true across all years, with the exception of the correlations between teachers’ observation and PPP scores, which were strong (see Footnote 7 and Table 3).

The strong correlations observed between teachers’ observation and PPP scores, however, make sense given teachers’ observation and PPP scores came from within the same observational instrument (i.e., as modified from Danielson’s Framework [Danielson Group, n.d.]). If anything, such strong correlations might suggest that separating out teachers’ observational scores (i.e., from modified domains 2 and 3) from teachers’ PPP scores (i.e., from modified domains 1 and 4) is not defensible or warranted, given such high or strong correlation coefficients often suggest a universal but not divisible or detachable factor structure. Rather, this may suggest that the factor structure pragmatically posited and used throughout New Mexico may not empirically hold (Sloat 2015; see also Sloat et al. 2017; Polat and Cepik 2015).

Notwithstanding, illustrated in Table 3 is that correlations were the weakest between teachers’ VAM-based estimates and student survey scores across years, ranging between the statistically significant yet very weak (see Footnote 8) levels of r = 0.031 and r = 0.135. Conversely, other than the strong relationships observed between teachers’ observation and PPP scores, again as taken from within the same instrument, correlations were stronger (albeit still weak; see Footnote 9) between teachers’ observation and student survey scores across years, ranging from r = 0.211 to r = 0.235.

Perhaps most importantly, as also situated within the current literature, the correlations between teachers’ VAM and observation scores ranged from r = 0.153 to r = 0.210. Lower correlations were observed between teachers’ VAM and PPP scores ranging from r = 0.128 to r = 0.189. To help illustrate what the higher of the two VAM and observational correlations looked like in practice (i.e., taking the observational score from domains 2 and 3 which mapped onto student learning), researchers generated Fig. 3 to illustrate the distributions of New Mexico teachers’ VAM and observation ratings across quintiles for all 3 years of focus (i.e., 2013–2014, 2014–2015, and 2015–2016).

Demonstrated is that regardless of which year is chosen, of the three sets of VAM and observational data displayed, New Mexico’s teachers’ data are more or less randomly distributed. Visible is that, on average (with the average taken across the 3 years of data visualized), 30.4% of teachers who scored in the top quintile as per their VAM scores also landed in the top quintile as per their observation scores (i.e., with both indicators suggesting that these teachers were highly effective); 26.6% of these same teachers dropped one quintile from the top VAM quintile to the second highest observation quintile (e.g., from highly effective to effective); 18.4% dropped two quintiles (e.g., from highly effective to average); 16.1% dropped three quintiles (e.g., from highly effective to ineffective); and 8.6% dropped four quintiles (e.g., from highly effective to highly ineffective). Inversely, 20.9% of teachers who scored in the bottom quintile as per their VAM scores also landed in the bottom quintile as per their observation scores (i.e., with both indicators suggesting that these teachers were highly ineffective); 23.8% of these same teachers moved up by one quintile from the bottom VAM quintile to the second lowest observation quintile (e.g., from highly ineffective to ineffective); 19.1% moved up by two quintiles (e.g., from highly ineffective to average); 21.0% moved up by three quintiles (e.g., from highly ineffective to effective); and 15.2% moved up by four quintiles (e.g., from highly ineffective to highly effective). See also all other permutations illustrated.

These results make sense as per the current literature, as also noted prior, given the correlations typically observed between teachers’ VAM and observational scores in general. Not only are the correlations for New Mexico teachers very weak (see Footnote 10), they are also relatively very weak as situated within the literature. The literature has been saturated with evidence that correlations between multiple VAMs and observational scores typically fall within 0.30 ≤ r ≤ 0.50 (see, for example, Grossman et al. 2014; Hill et al. 2011; Kane and Staiger 2012; Polikoff and Porter 2014; Wallace et al. 2016). It can be concluded, then, that New Mexico’s correlations, with regard to convergent-related evidence of validity, are very weak also in comparison to other VAMs.

6.3 Bias

Across the documents analyzed for the case study section of this study, the concept of bias was noted twice. First, in one of the memorandums (i.e., exhibit I of exhibits F–I) released to all New Mexico school superintendents and human resource officers, the state noted that the state’s VAM is one of the few that does not include covariates to control for or block student demographic variables that may bias teachers’ value-added scores (e.g., socioeconomic status, race, ELL status). The memo did not offer anything further, however, by disclosing or discussing the extent to which not controlling for such variables may yield more or less biased VAM output.

Elsewhere in exhibit N, as also related to the above comments about the tests that districts might use in addition to the state-level tests required, the state also encouraged districts to review the tests they might use for bias within the tests themselves. However, the state again stopped short of requiring empirical evidence (e.g., analyses of bias in test score outcomes by student demographics) and required, instead, “bias reviews” by “panel[s] of subgroup representatives who are competent in [the] content area [who] can conduct an acceptable bias evaluation.” The state suggested one analytical technique to do this, that of “Differential Item Functioning (DIF) [which] is perhaps the most widely-recognized bias examination method;” although, the state also noted that “the [state would] accept,” but not require such evidence, as this “require[d] highly specialized expertise to perform.”

Otherwise, and as previously explained, potentially biased estimates of teacher effectiveness are observed when performance varies for different subgroups of teachers, often even despite the sophistication of statistical controls put into place to control for or block such bias (see also Paufler and Amrein-Beardsley 2014; Baker et al. 2010; Collins 2014; Kappler Hewitt 2015; McCaffrey et al. 2004; Michelmore and Dynarski 2016; Newton et al. 2010; Rothstein and Mathis 2013). In fact, current practice suggests that all such demographics should be included in any VAM just to be increasingly certain that the biasing effects of such variables are negated (Koedel et al. 2015). With that being said, researchers found indicators of bias throughout the data examined at the teacher (e.g., gender, race/ethnicity) and school levels (e.g., proportion of ELL students, proportion of minority students) likely (or at least in part) as a result. Researchers also found that the potential for bias existed across the measures of teacher effectiveness used in New Mexico, or that bias was not just limited to the state’s VAM.

6.3.1 Teacher-level differences

Researchers found evidence of possible teacher-level bias across all 3 years, all four of New Mexico’s measures of teacher effectiveness, and as per the five teacher-level subgroups that they analyzed (see Appendix 3, Tables 6, 7, 8, and 9).

Gender

Compared to female teachers, male teachers had significantly higher VAM scores in 2013–2014 (t = 3.471, p = 0.001), but significantly lower VAM scores in 2015–2016 (t = 7.150, p < 0.001). Because the direction of this difference reversed across years, no consistent VAM-based bias by gender was observed. However, female teachers had significantly greater observation scores, PPP scores, and student survey scores compared to male teachers for each of the 3 years; hence, if anything, these three measures (i.e., not including VAM estimates across years) might have been biased in favor of female teachers (see also Bailey et al. 2016; Steinberg and Garrett 2016; Whitehurst et al. 2014), although some might argue that female teachers were better than their male colleagues.
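As an illustration of the kind of two-group comparison reported above, the following is a minimal sketch of how a t statistic for two independent groups could be computed. It assumes Welch's unequal-variance form, which the source does not specify; the function name `welch_t` and the score lists are hypothetical and invented for illustration only (they are not study data). Obtaining a p value would additionally require a t distribution, which Python's standard library does not provide.

```python
import math
from statistics import mean, variance


def welch_t(group_a, group_b):
    """Return (t, df) for Welch's unequal-variance t-test on two samples.

    Assumption: the source does not state which t-test variant was used;
    Welch's form is chosen here only as a common default.
    """
    n_a, n_b = len(group_a), len(group_b)
    var_a, var_b = variance(group_a), variance(group_b)  # sample variances (n - 1)
    se_sq = var_a / n_a + var_b / n_b  # squared standard error of the mean difference
    t = (mean(group_a) - mean(group_b)) / math.sqrt(se_sq)
    # Welch-Satterthwaite approximation to the degrees of freedom
    df = se_sq ** 2 / (
        (var_a / n_a) ** 2 / (n_a - 1) + (var_b / n_b) ** 2 / (n_b - 1)
    )
    return t, df


# Hypothetical scores, invented purely for illustration (not study data)
male_scores = [2.0, 4.0, 6.0, 8.0]
female_scores = [1.0, 3.0, 5.0, 7.0]
t_stat, dof = welch_t(male_scores, female_scores)
print(f"t = {t_stat:.3f}, df = {dof:.1f}")
```

The sign of t depends on the order of the two groups, so a reversal in direction across years (as with gender and VAM scores above) would appear as a change of sign when the groups are passed in the same order each year.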

Race/ethnicity

While there were no significant differences between Caucasian and non-Caucasian teachers’ VAMs (with non-Caucasian teachers defined as Asian (< 1%), African American, Hispanic, and Native American as per the state’s classifications), there were statistically significant differences between the two groups of teachers on the other three measures. Caucasian teachers had significantly higher observation and PPP scores than non-Caucasian teachers for each of the 3 years. Hence, it could be concluded that Caucasian teachers may be perceived as better teachers than non-Caucasians given these instruments, or that the scorers observing teachers in practice may be biased against some versus other teacher types by race (see also Bailey et al. 2016; Steinberg and Garrett 2016; Whitehurst et al. 2014). Inversely, non-Caucasian teachers had higher student survey scores than Caucasian teachers for all 3 years, with the differences in 2014–2015 (t = 4.258, p < 0.001) and 2015–2016 (t = 5.607, p < 0.001) being statistically significant. Again, this could be due to bias (i.e., that teachers’ students had differing perspectives of their teachers’ qualities by race), given standard issues with surveys (e.g., low response rates that might distort validity; Nunnally 1978), or given that non-Caucasian teachers may have indeed been better teachers than their Caucasian colleagues.

Years of experience

Overall, teachers with fewer years of experience had VAM estimates that were significantly lower than teachers with more years of experience. Similar patterns were observed for teachers’ observation scores and PPP scores, with teachers with the least amount of experience routinely earning scores that were significantly lower than their more experienced counterparts across each of the 3 years. This could mean, as also in line with common sense as well as the current research (Darling-Hammond 2010), that teachers with more experience are typically better teachers. These findings might support the validity of teachers’ VAMs and observational scores in this regard. Survey scores did not follow this pattern, however, which might be due to issues with survey research also noted prior.

Grades taught

In 2013–2014, elementary school teachers had significantly lower VAM scores than middle school teachers and high school teachers (F = 149.465, p < 0.001), and high school teachers had significantly higher VAM estimates than both elementary teachers and middle school teachers in 2014–2015 (F = 6.789, p = 0.001). This might mean, in the simplest of terms, that elementary school teachers might be worse than teachers in high school, or that VAM estimates and the ways they are calculated (e.g., using different tests at different levels) might be biased against teachers of younger students. There were no other significant differences observed across teachers’ observation and PPP scores based on grades taught, although significant differences did exist for survey scores. High school teachers had the lowest scores as based on their student survey data, and elementary school teachers had the highest scores in 2013–2014 (F = 60.929, p < 0.001) and 2014–2015 (F = 178.239, p < 0.001).
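The F statistics above compare three groups of teachers at once. As a minimal sketch, assuming a standard one-way analysis of variance (the source reports F values but does not describe the exact procedure or any post hoc tests), the statistic could be computed as follows. The function name `one_way_anova_f` and the score lists are hypothetical and invented for illustration only.

```python
from statistics import mean


def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    k, n = len(groups), len(all_values)
    # Variation of group means around the grand mean, weighted by group size
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own group mean
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


# Hypothetical scores, invented purely for illustration (not study data)
elementary = [1.0, 2.0, 3.0]
middle = [2.0, 3.0, 4.0]
high = [3.0, 4.0, 5.0]
f_stat, df_b, df_w = one_way_anova_f(elementary, middle, high)
print(f"F({df_b}, {df_w}) = {f_stat:.3f}")
```

A significant F only indicates that at least one group mean differs; identifying which grade levels differ from which would require follow-up pairwise comparisons.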

Subject taught

Overall, teachers who taught ELL or SPED classes had lower VAM estimates across all 3 years than those who did not teach such classes. Those that were significant were for ELL teachers who had significantly lower VAMs than non-ELL teachers in 2014–2015 (t = 2.001, p = 0.046), and SPED teachers who had significantly lower VAMs than non-SPED teachers in 2014–2015 (t = 2.248, p = 0.025) and 2015–2016 (t = 7.354, p < 0.001). Contrariwise, teachers who taught gifted classes had significantly higher VAMs than nongifted teachers in 2013–2014 (t = 4.724, p < 0.001) and 2014–2015 (t = 3.147, p = 0.002). This runs counter to the research evidencing that gifted students often prevent gifted teachers from displaying growth given ceiling effects (Cole et al. 2011; Kelly and Monczunski 2007; Koedel and Betts 2007; Linn and Haug 2002; Wright et al. 1997).

Otherwise, patterns similar to those mentioned above were also observed for ELL, SPED, and gifted teachers on their observation and PPP scores. Consistently across all years, non-ELL, non-SPED, and gifted teachers had significantly better observation and PPP scores than their ELL, SPED, and nongifted counterparts (see also Bailey et al. 2016; Steinberg and Garrett 2016; Whitehurst et al. 2014). The one exception to this pattern was for ELL teachers in 2015–2016, as while their PPP scores were higher than those of non-ELL teachers, the difference was not significant. Regarding student survey scores, ELL teachers had significantly higher scores than non-ELL teachers for all 3 years, and SPED teachers had significantly higher scores than non-SPED teachers in 2015–2016. There were no significant differences between gifted and nongifted teachers’ survey scores.

6.3.2 School-level differences

Researchers found evidence of the potential for school-level bias across all 3 years, all four of New Mexico’s measures of teacher effectiveness, and as per the six school-level subgroups that researchers analyzed (see Appendix 3, Tables 10, 11, 12, and 13).

Total enrollment

Teachers in schools with low enrollments (i.e., enrollment less than the sample median; hereafter referred to as low enrollment schools) had significantly higher VAMs in 2013–2014 (t = 2.428, p = 0.015) and 2014–2015 (t = 5.017, p < 0.001) than teachers in high enrollment schools, although this was reversed in 2015–2016 where teachers in high enrollment schools had significantly greater VAMs (t = 4.300, p < 0.001). Observation scores significantly differed only in 2015–2016, where teachers in low enrollment schools scored significantly higher than teachers in high enrollment schools (t = 2.888, p = 0.004). Teachers in low enrollment schools had significantly lower PPP scores in 2013–2014 (t = 5.386, p < 0.001), but significantly higher PPP scores in year 2015–2016 (t = 2.807, p = 0.005). Only on teachers’ student survey scores did researchers observe a consistent pattern. Teachers in low enrollment schools had significantly higher survey scores for all the 3 years, although concerns about the student survey measures noted prior likely also come into play here.
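The low versus high enrollment grouping described above is a median split. The following is a minimal sketch of such a split, assuming that schools at or above the sample median are labeled high (the source defines only the low group, as enrollment less than the sample median). The function name `median_split`, the school names, and the enrollment counts are hypothetical and invented for illustration only.

```python
from statistics import median


def median_split(enrollments):
    """Label each school 'low' if its enrollment is below the sample median.

    Assumption: schools at or above the median are labeled 'high'; the
    source only defines the low group explicitly.
    """
    cutoff = median(enrollments.values())
    return {
        school: ("low" if count < cutoff else "high")
        for school, count in enrollments.items()
    }


# Hypothetical enrollment counts, invented purely for illustration
enrollments = {"school_a": 120, "school_b": 450, "school_c": 300, "school_d": 800}
labels = median_split(enrollments)
print(labels)
```

Teachers' scores could then be grouped by their school's label and compared with a two-group test. The same split would apply to the other school-level subgroups (e.g., proportion of SPED or ELL students) if they were also dichotomized at the median, which the source does not state explicitly.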

SPED student population

Teachers in schools with low populations of SPED students (i.e., hereafter referred to as low SPED schools) consistently had significantly greater VAMs, observation scores, and PPP scores than teachers in high SPED schools across all 3 years. This suggests that teachers in low SPED schools are as a group better, or that VAM estimates might be biased against teachers teaching in high SPED schools, preventing them from demonstrating comparable growth. This pattern was reversed for teachers’ student survey scores, however, as teachers in low SPED schools had lower survey scores than teachers in high SPED schools. This was true across the board, although the only significant difference was in 2015–2016 (t = 3.003, p = 0.003).

ELL student population

Similar to teachers in low SPED schools, teachers in low ELL schools consistently had significantly greater VAMs, observation scores, and PPP scores than teachers at high ELL schools for all 3 years. Teachers in low ELL schools also had lower survey scores for each of the 3 years, although the only statistically significant difference was, again, in 2015–2016 (t = 9.131, p < 0.001). This would suggest that teachers in low ELL schools are as a group better, or that VAM estimates might be biased against teachers teaching in high ELL schools, preventing them from demonstrating comparable growth.

FRL student population

Similar to teachers in low ELL and low SPED schools, teachers in low FRL schools consistently had significantly greater VAM, observation, and PPP scores than teachers at high FRL schools for all 3 years. Again, this would suggest that teachers in low FRL schools are as a group better, or that VAM estimates might be biased against teachers teaching in high FRL schools, preventing them from demonstrating comparable growth. Teachers in high FRL schools had significantly higher survey scores for each of the 3 years.

Gifted student population

Related to the discussion about gifted teachers prior, teachers in schools with higher proportions of gifted students (hereafter referred to as high gifted schools) had significantly greater VAM scores as a whole than teachers at low gifted schools in 2013–2014 (t = 2.980, p = 0.003) and 2014–2015 (t = 6.075, p < 0.001). This pattern was the same for observation and PPP scores; hence, this would, again, suggest that teachers in high gifted schools are as a group better, or that VAM estimates might be biased against teachers teaching in schools with fewer gifted students, preventing them from demonstrating comparable growth. Teachers in low gifted schools, however and perhaps not surprisingly at this point, had significantly greater survey scores than teachers in high gifted schools for each of the 3 years.

Minority student population

Lastly, and in line with teachers in low SPED, ELL, and FRL schools, teachers in schools with lower populations of non-Caucasian students (hereafter referred to as low minority schools) consistently had significantly higher VAMs than teachers in high minority schools for all 3 years. Again, this was the case for teachers’ observation and PPP scores, again suggesting that teachers in low minority schools are as a group better, or that VAM estimates might be biased against teachers teaching in schools with more minority students, preventing them from demonstrating comparable growth. Survey scores were slightly more varied based on minority student population, with the only significant difference between scores in 2014–2015, where teachers in low minority schools had significantly higher survey scores than teachers in high minority schools (t = 2.902, p = 0.004).

6.4 Fairness

Across the documents analyzed for the case study section of this study, the concept of fairness was noted multiple times. In terms of the state’s general teacher evaluation model, the state made it explicit across multiple documents that in order to include more teachers in the state’s teacher evaluation system, the state developed three different evaluation models to be more inclusive in its efforts to evaluate three different teacher types, as per the grades and subject areas they taught. These teacher types included group A, group B, and group C teachers. Group A teachers taught grades (e.g., grades 3–11) and subject areas that were tested using the state’s SBAs (e.g., in mathematics, English/language arts, science, and social studies), and were, therefore, the teachers who were eligible for teacher-level VAM scores (i.e., VAM-eligible). These teachers’ students’ test scores were to count for at least 50% of these teachers’ overall evaluation scores, alongside their other evaluation data. However, in some cases, VAM data were used in isolation from the other data. For example, some plaintiff teachers’ files were flagged solely given their VAM scores, thus suggesting that these teachers were ineffective. Regardless of these teachers’ other data, when the state used the VAM score to flag these (and other) teachers’ files, this meant that the VAM carried 100% of the evaluative weight.

Group B and group C teachers were VAM-ineligible teachers because they taught students in nontested subject areas or nontested grade levels. More specifically, group B teachers included all physical education, music, art, foreign language, etc. teachers; grade 9 and grade 12 mathematics and English/language arts teachers; and all high school science [e.g., biology, chemistry, physics] and social studies teachers [e.g., world history, geography, economics]. Group C teachers taught students in grades kindergarten through grade 2.

While at face value this might seem fair, this still leaves group A teachers as the only ones who are VAM-eligible. Given that VAM scores are the scores to which the state ties its most important decisions (e.g., flagging teachers’ files and teacher termination decisions), this reinforces New Mexico’s issues with fairness in that, as also situated in the current research, still only approximately 30–40% of all teachers in the state are VAM- as well as consequence-eligible (Baker et al. 2013; Gabriel and Lester 2013; Harris 2011).