Abstract

Several cancelable biometric procedures have been developed to preserve personal data security. This research presents a cancelable biometric authentication mechanism to ensure personal data security, while maintaining the biometric templates secure from hackers. Therefore, our major approach is to provide a new authentication system based on 3D chaotic maps, Piecewise Linear Chaotic Map (PWLCM), logistic map, and Deoxyribonucleic Acid (DNA) sequencing theory. The approach is based on confusion as well as a diffusion. Firstly, a 3D chaotic map is made up to allow 3D chaos creation, chaos histogram equalization, row rotation, column rotation, and XOR operation. Secondly, the PWLCM and the Logistic Map are used for generating the main values required to make use of the DNA theory to generate the key image. Thirdly, DNA theory is applied as a second encryption algorithm on the output of the 3D chaotic algorithm. Fourthly, the intermediate image is decoded, rotated 90 degrees anticlockwise, and used in the following step in the DNA encoding process. Finally, step 3 is repeated on columns to acquire the final encrypted image. The proposed framework hides all discriminative properties of biometric templates, resulting in a fully unspecified biometric pattern. Various face, as well as palmprint biometric datasets, have been examined and evaluated. Various evaluation factors are employed to examine the efficiency of the recommended cryptography algorithm including Area under the Receiver Operating Characteristic (AROC), False Acceptance Rate (FAR), histogram analysis, Histogram Deviation (\(D_{H}\)), correlation coefficient, Structural Similarity Index Metric (SSIM), and Peak Signal-to-Noise Ratio (PSNR). The computational results prove that the proposed cryptosystem is trustworthy. A comparative study of the recommended approach with various strategies is also provided. Simulation studies illustrate that the suggested approach produces an average AROC of approximately 1, an average FAR of \(6.2 \times 10^{ - 3}\), an average \(D_{H}\) of 0.8755, and finally, an average PSNR of 8.2061.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Biometric recognition has advanced rapidly, and it is now virtually and universally utilized. Biometric recognition approaches identify and check unique characteristics precisely, quickly, and properly to govern the access process in specialized applications (Arqub and Abo-Hammour 2014; Soliman et al. 2021a; Abo-Hammour et al. 2013; Algarni et al. 2020a; Ibrahim et al. 2020a). Therefore, restricting access and blocking attackers from damaging or identifying the templates are critical.

The two types of biometrics utilized by humans are physical and logical biometrics (Alarifi et al. 2020; Ibrahim et al. 2020b). The face, iris, retina, palmprint, and fingerprint have specific physical characteristics, but logical or behavioral characteristics, such as voice, signature, keystroke patterns, and walking style, are measured by the behavior of the body and its response to various conditions.

All of these biometric represent aspects or characteristics of our human body, which are used to ensure that no hackers may get admission to manage the services provided (El-Shafai et al. 2023a, 2022a; Elazm et al. 2023). Passwords have historically been used to protect the key for encryption from being stolen or hacked. Most people employ the same passwords across many applications and never change them as the utilization of different lengthy passwords is difficult. If an attacker attempts to get access to the database and a portion of the secret password is stolen, the other services may be at risk (El-Shafai et al. 2021a).

Organizations continuously look forward to preserve their data safely and improve the network service to prevent unauthorized access. Authentication and identification are performed to ensure that the permitted user can only access a secure and reliable location. Traditional encryption mechanisms, such as personal identification numbers (PINs) as well as passwords, have been used for years. For increased security, magnetic cards and PINs have been implemented (Faragallah et al. 2020; El-Shafai et al. 2023b; Hassan et al. 2022).

Some problems of the conventional methods arise from the fact that they recognize some characteristics related to the owner rather than the owner himself. The system can simply admit or control any outsider if these tokens are stolen or destroyed. There is a new methodology in verification strategies that uses biometrics in many applications, such as government services, commercial applications, and forensic evidence applications that rely on human being supervision to identify biometrics (Nassar et al. 2023; Almomani et al. 2023).

Biometric templates should be guarded when an application requires a high degree of secrecy. This in turn increases the accuracy and secrecy of identifying people (Elazm et al. 2023; El-Shafai et al. 2022a). As illustrated in Fig. 1, the biometric system is divided into four levels: image sensor, processing unit, database, and output device (Ahmad et al. 2022). In the enrollment stage, a biometric system is learned to recognize a specific client. The client initially presents an identity, like an identity card. The biometric (e.g., fingers, hand, face, or iris) is then presented to the input (sensor) device. The characteristics or features are obtained and saved as a reference pattern for the next examinations. After enrollment, the next step is to confirm that the client is the person who enrolled. After the user supplies whatever identity he or she registered with, the captured biometric is presented, and a new template is generated. The system next verifies the new biometric pattern to the individual's reference pattern, which was saved upon enrolment, to see if the new and stored patterns are identical.

Biometric-based authentication systems have provided powerful security characteristics, particularly in telemedicine applications, to protect personal data against offline password threats (Ahmad et al. 2022). Even though biometrics solve numerous security problems, hackers have discovered strategies to attack biometric authentication processes and databases containing biometric information. Biometrics are difficult to secure since the hashing strategy used to safeguard passwords does not operate with biometric information. As a result, any business that uses biometrics to authenticate its members must guarantee that their biometric information is appropriately protected (Salama et al. 2022; Almomani et al. 2022a). Biometric encryption approaches provide excellent confidentiality, security, and uniqueness for permitted persons. Encryption keys boost the security of biometric cryptosystems. Original biometric characteristics are not stored directly in the server with these cryptosystems but are first processed and turned into distorted, transformed templates (El-Shafai et al. 2022b, 2022c).

Cancelable biometrics (El-Shafai et al. 2022d) play a vital role in biometric security by modifying the basic biometric into a new irreversible domain. Instead of saving the basic characteristics directly into the server, cancelable biometrics are first generating by deform the biometric characteristics using various modification functions as shown in Fig. 2.

These cancelable templates have four significant properties (Ibrahim et al. 2020a): (i) diversity, (ii) non-invertiblity, (iii) revocablity, (iv) high performance. Diversity typically indicates that no two users should be assigned the same cancelable biometric pattern. Non-invertiblity indicates that original biometric characteristics cannot be retrieved using these cancelable biometric patterns. Revocablity indicates that if an individual's cancelable biometric pattern is stolen, a new cancelable pattern will be assigned to him/her without affecting the actual biometric identity. Finally, the high performance property specifies that the recognition accuracy of a cancelable biometric system should be as high as that of a standard biometric system. If one's allocated cancelable biometric template is hacked, a new template will be assigned to him by deleting the prior one, as is the case with ATM passwords.

The motivation behind the development of this work is to address the security and privacy concerns associated with biometric recognition systems. Biometric recognition systems are widely used for identification and authentication purposes, but they have a vulnerability to attacks such as spoofing and replay attacks, which can compromise the security of the system. The proposed system uses 3D optical chaos and DNA encryption to enhance the security of biometric recognition systems. The system is cancellable, which means that the biometric data can be modified without affecting the recognition performance, thus protecting the privacy of the users. The use of 3D optical chaos and DNA encryption ensures that the biometric data cannot be easily replicated or tampered. Overall, the motivation behind the development of this system is to provide a more secure and privacy-preserving biometric recognition system that is robust against various attacks and can be used in a wide range of applications, including access control, surveillance, and forensics.

In this research work, a new authentication system built on 3D chaotic maps, Piecewise Linear Chaotic Map (PWLCM), Logistic Map, and finally, Deoxyribonucleic acid (DNA) sequence theory is suggested. First, a 3D chaotic map is made up of the following processes: (1) 3D chaos creation, (2) chaos histogram equalization, (3) row rotation, (4) column rotation, and (5) XOR process. Secondly, PWLCM and Logistic Map are used for generating all of the main values required by DNA theory to generate the key image. Thirdly, DNA theory is applied as a second encryption algorithm to the output of the 3D chaotic algorithm. Fourthly, the intermediate image is decoded and used in the following step in the DNA encoding process. Finally, step 3 is repeated on columns to acquire the final encrypted image.

The rest of this paper is organized as follows: Sect. 2 includes some past works. Section 3 presents the suggested cancelable biometric authentication architecture. Section 4 gives an explanation of the assessment metrics that were used. Section 5 summarizes the computer simulation results and performance evaluations. Section 6 summarizes the final remarks.

2 Related work

Cancellable biometric methodologies are utilized in the verification system to provide distorted versions of biometrics (El-Hameed et al. 2022; Helmy et al. 2022a). If required, authorized features can be removed or replaced in hacking cases. The cancelable biometric strategy is devoted to preserving the highest precision level of the saved biometrics to improve user privacy.

Mohamed et al. (2022) developed an iris recognition authorization strategy. It is dependent on a mix of non-invertible transformations with encryption to disguise the iris template. They achieved a 99.9% recognition rate. Various security strategies for face authentication are provided in Ayoup et al. 2022a. They employed a variety of procedures to extract geometric characteristics. Another proposed approach is based on creating concealed templates after using Gabor filters. This approach presented two types of chaotic maps: logistic and modified logistic maps. With the chaotic logistic map, this methodology achieved 99.08% accuracy and 1.175% EER. Also, the genetic (GA) encryption algorithm (Arqub and Abo-Hammour 2014; Abo-Hammour et al. 2013) can be used to attain biometric image cancelability (Alshammri et al. 2022). The GA (Abo-Hammour et al. 2014; Abu Arqub et al. 2012) method chooses the appropriate secret key with the best length to increase encryption. The researchers of Soliman et al. (2018a) described a face biometric authentication method based on Double Random Phase Encoding (DRPE) algorithm. The bio-convolving approach was used to safeguard the safety and privacy of the individuals' faces. In addition, the same researchers (Soliman et al. 2018b) proposed a multi-biometric authentication architecture.

It is focused on combining multiple biometric pattern characteristics. A one-way iris pattern is created using the Fractional Fourier Transform (FRFT)-based approach. The cryptographic keys RPM1 and RPM 2 are employed in the described cancelable biometric strategy. RPM1 is the first cover for the left iris characteristic vector, and RPM2 is for the right iris feature pattern of the individual. The implemented strategy achieved an EER of 0.63% and an efficiency of 99.75%.

El-Hameed et al. (2022) proposed a cancelable geometric methodology for fingerprint recognition. It works by deducing feature pixels and then using polar and Cartesian coordinate processes to generate cancelable fingerprint characteristics. This method maintains cancelability while achieving high privacy and security accuracy. The researchers of Helmy et al. (2022a) presented a fingerprint recognition security technique based on numerous spiral curves and fuzzy principles. The equal error rate (EER) of this method is 1.17%.

The researchers of Hammad et al. (2019) used a 2-dimensional Gabor filter to extract features of a palmprint, followed by a two-dimensional palm Hash algorithm to mask the features and construct the cancelable palmprint vector. Leng et al. (2014), a method for multi-biometric characteristics is used to construct cancelable biometrics in order to obtain high privacy and secrecy depending on different feature fusion degrees. Qiu et al. (2019), a new palmprint pattern protection system is developed based on random comparison and noise data. An anisotropic filter (AF) is used to collect the palmprint's orientation information. The palmprint's orientation characteristic is then assessed using a chaotic matrix, resulting in a protected and cancelable palmprint pattern. Furthermore, as the last cancelable palmprint pattern, noise data having independent and identically dispersed distribution is included to improve the template's privacy security. In addition, a method for producing cancelable palmprint patterns was developed using coupled nonlinear dynamic filters and various orientation palm codes (Qiu et al. 2019). In conclusion, cancelable biometric formats can be created using a variety of techniques, including random projection (Soliman et al. 2021b; Asaker et al. 2021; El-Gazar et al. 2022), hashing function (YYYY xxxx), and salting (Almomani et al. 2022b), but they all have the same basic goal of transforming the original biometric pattern into a distorted version, attempting to make it complicated for an enemy to recover the original template from the distorted one. Cancellable template models have been used to biometric characteristics from numerous attributes, such as the face and palmprint, to create several safe biometric architectures.

3 Proposed cancelable biometric authentication framework

In this session, we proposed a Cancellable biometric methodology based on two hybrid encryption techniques: pixel rotation with XOR-based encryption technique using 3D chaos and DNA theory as a second encryption algorithm to the output of 3D chaotic algorithm to provide distorted versions of biometrics. The 3D chaotic system is utilized for position permutation and the value transformation approach. In the position permutation strategy, the pixel position is permuted without affecting the pixel value of the actual image, but in the value transformation strategy, the pixel value is changed by another pixel value without modifying the position as in Helmy et al. (2022b). Figure 3 depicts the flowchart of the recommended cancelable biometric authentication architecture. The suggested framework's major stages are illustrated and summarised as follows:

-

(a)

3D Chaos generation.

-

(b)

Chaos histogram equalization.

-

(c)

Row rotation.

-

(d)

Column rotation.

-

(e)

XOR operation.

-

(f)

Piecewise liner chaotic map and logistic map.

-

(g)

MD5 hash.

-

(h)

Deoxyribonucleic acid string (DNA).

-

(i)

DNA cryptosystem.

3.1 3D chaos generation system

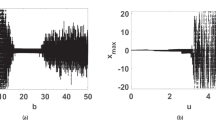

The logistic chaos map is used to generate 3D chaos in the proposed chaotic-based cryptosystem. The logistic map is one of the most basic and well-known chaotic maps, based on changing the placements of pixels in images and adjusting gray-level pixel values. An equation that describes the nonlinear logistic map chaos generation system is as follows:

where \(0 < x_{n} < 1\) and \(\mu = 4\) is the condition to do this formula chaotic. The 3D logistic map chaos generation expressions are formulated as in Helmy et al. (2022b) and Ayoup et al. (2022):

where \(3.53 < \gamma < 3.81, 0 < \beta < 0.022 ,0 < \alpha < 0.015\), and the preliminary values of \(x,y,z\) in between 0 and 1. These parameters make the 3D logistic map more complicated and secure than 1D or 2D logistic maps.

3.2 Chaos histogram equalization

The histogram of X, Y, and Z has non-uniform distribution, as illustrated in Fig. 4a–c. The histogram should be equalized to raise the degree of security. The following equations are used to equalize the histogram for a grey image of \(MXN\) dimensions:

where \(N_{2} ,N_{4} , N_{6}\) are huge random numbers more than 10,000. We may also assume \(N_{2} , N_{4} , \;{\text{and}} N_{6}\) to be equal for the simplicity. Using \(N_{2} = N_{4} = N_{6} = 100,000,M = 256,N = 256\), Fig. 4d–f depict the equalized histogram.

3.2.1 Row rotation

Depending on the value of chaos \(x\) from Eq. (5), Helmy et al. (2022b) proposed a novel strategy for row rotation that would aid us in image pixel permutation. When the chaos value is even, the rows must be rotated left; when the chaos value is odd, the rows must be rotated right, as shown in Fig. 5.

3.2.2 Column rotation

Column rotation and row rotation have a lot in common. The value of chaos Y determines a column rotation in Eq. (6). When the chaos is an even number, we must rotate the columns up, and when the chaos is an odd value, we must rotate the columns down (Helmy et al. 2022b), as shown in Fig. 6.

3.2.3 XOR operation

The XOR operation is the final stage in this encryption procedure. First, the pixel value is changed to a new value via the XOR operation, which cannot be reversed without having the chaos key. Next, we XOR the chaos and row-column shifted the image to produce the encrypted image.

3.2.4 Piecewise liner chaotic map and logistic map

The Piecewise Linear Chaotic Map (PWLCM) and Logistic Map procedures are utilized to generate all of the parameters required by the approach. PWLCM offers various advantages, according to Ayoup et al. (2022), Eldesouky et al. (2022) and Faragallah et al. (2022), such as its representation simplicity and execution efficiency. It is utilized to create the key image, whereas the logistic map is used to govern the processes (operations) or DNA standards (rules) chosen for encoding and to reconstruct every row of the basic image. Equation (8) defines the PWLCM, whereas Eq. (9) represents the logistic map.

where \(X_{n} \in \left( {0,1} \right)\) and \(p \in \left( {0,0.5} \right).\) Assume that \(p = 0.25678900.\)

where \(X_{n} \in \left( {0,1} \right)\) and \(\mu \in \left( {0,4} \right]\). Assign the value of µ = 3.99999999 (Ayoup et al. 2022b).

3.2.5 MD5 hash

MD5 (Message-Digest Mechanism 5) is a widely used encryption algorithm that generates a 128-bit hash value from the data input, which is generally a 32-digit hexadecimal integer (El-Shafai et al. 2022e). The initial values of chaos maps are determined by the MD5 encryption estimated real value, as measured by Eq. (10):

The chaotic map's beginning number is \(X_{0}\), and the estimated number of \(X_{0}\) can either be 0 or 1 with a certain probability; if this happens, discard it and use Eq. (10) to get another. The parameters \(d_{1} ,d_{2} ,d_{3} ,d_{4}\) are calculated from the fundamental image's MD5 hashing result. The hash estimated value of the basic image is divided into four 32-bit bits. \(d_{1} ,d_{2} ,d_{3} ,d_{4}\) are the first 32 bits, each of which represents a single byte. Before using Eq. (10), just convert \(d_{1} ,{ }d_{2} ,{ }d_{3} ,d_{4}\) from 1 and 0 s to decimal.

3.2.6 Deoxyribonucleic acid string (DNA)

DNA is divided into four separate nucleic acid bases: adenine (A), thymine (T), cytosine (C), and guanine (G), as well as other required components. A and T, as well as C and G, create complementary sequences (Eldesouky et al. 2022). The complementing rule is satisfied by eight rules. The fundamental image is encoded using four separate nucleo bases that follow eight different standards. The decimal number 201, for example, has a binary equivalent of '11001001'. '11001001' is encoded following to DNA principles, resulting in eight different forms: 'TACG', 'TAGC', 'ATCG', 'ATGC', 'CGTA', 'CGAT', 'GCTA', and 'GCAT’.

Furthermore, to encrypt the data, use numerous DNA sequence techniques like addition, subtraction, and exclusive OR (XOR). Tables 1, 2, 3 and 4 outline the DNA match rules and processes (Ayoup et al. 2022; Eldesouky et al. 2022; Faragallah et al. 2022).

3.2.7 DNA cryptosystem

In the DNA cryptosystem, we should apply the following steps:

-

1.

Use Eqs. (8) and (11) to produce the key image

$${\text{pixel}} = \left[ {{ }X{ } \times { }256} \right],$$(11)The recurrence value for PWLCM is \({\text{X}} \in \left( {0,1} \right).\) Equation (10) determines the starting value of Eq. (8). Neighboring pixels in the key image are likely to be unrelated to one another. Pixels produced from a chaotic map are a great way to achieve these requirements. The predicted value of the next pixel generated differs from the current one.

-

2.

The FECT and key images are encoded on rows using the DNA standards defined by Eqs. (9) and (12).

$${\text{Rule}}\left( {{\text{standard}}} \right) = \left[ {{\text{X}} \times 8} \right] + 1,$$(12)The initial result of Eq. (9) is obtained from Eq. (10). Table 1 contains a list of the eight DNA standards. Each pixel in a row is encoded with one of the DNA standards, and each row uses a new set of DNA standards until the entire image is encoded. Corresponding to DNA encoding standards, 8 bits of each image pixel are separated and encoded into 4 categories of nucleobases.

-

3.

DNA operations are performed row by row between the Encoded (FECT) and the encoded key image. Equations (9) and (13) indicate the type of DNA procedures to be carried out. Tables 2, 3 and 4 outline the fundamentals of DNA processes.

$${\text{process}}\left( {{\text{operation}}} \right) = \left[ {{\text{X}} \times 3} \right] + 1,$$(13)Different types of DNA operations (XOR, +, −) are conducted in a row-by-row way until the encoded intermediate representation image appears.

-

4.

By using a decoding methodology on the past intermediate representation image, you would be able to obtain the decoded intermediate one. Equation (12) expresses the decoding procedure. Using this process, we may obtain a preliminary cipher representation.

-

5.

The first cipher image is rotated 90 degrees anti-clockwise. In this case, we merely study images by rows and then by columns.

-

6.

Repeat steps 2 through 4 for the columns to create a Final Encrypted cancelable biometric template.

4 Authentication evaluation metrics

The visual examination is important in assessing cancelable biometrics since robust encryption and great cancelability arise from highly coveted features in the recommended cancelable biometric cryptosystem. Quality assessment does not rely just on direct observation. As a result, numerous measurements are used to assess the progress of the cancelable biometric architecture. Correlation criteria assess the relationship between a recorded biometric pattern and a biometric input pattern. The greater the factor value, the more similar the templates will be. Access to the system is granted if the correlation score for a checked user exceeds a certain level. Mathematically, an authorised person's correlation ratio must be greater than a hacker attempting to get access to the database.

The receiver operating characteristic (ROC) curve may be used to assess the success of the presented cancelable biometric authentication system. The true positive factor (TPF) and the false-positive factor (FPF) are represented by the ROC curve as in Ibrahim et al. (2020b) and Hashad et al. (2020). The ROC curve idea is based on a decision variable. In every biometric identification system, the tested information includes genuine and false templates, allowing each pattern value to be dispersed around a given mean value.

As a result, the authentic template's mean value is greater than the false template's mean value. Furthermore, the encryption efficiency is evaluated by calculating correlation coefficients, histogram deviation, SSIM, MSE (Mean Square Error), PSNR (Peak Signal-to-Noise Ratio), Number of Pixel Change Rate (NPCR), UACI (Unified Average Changing Intensity), and histogram uniformity among protected and original biometrics. For cancelable biometric systems, the values of Correlation, PSNR, and SSIM should be at their lowest. But values for NPCR, MSE, and UACI should be at their highest levels. These authentication metrics will be explored in depth in order to assess the quality of the planned cancelable biometric authentication framework as follows:

4.1 Histogram analysis

The histogram depicts the distribution degree for every pixel strength in a biometric image. In the case of cancelable biometric technologies, the histogram must have both features for the protected biometric template, which is based on encryption methodology (Algarni et al. 2020b):

-

1.

The cipher biometric image's histogram differs from the basic biometric image's histogram.

-

2.

It must have an identical distribution, meaning all pixel values are distributed uniformly.

4.2 Correlation score

The correlation is a comparison of the biometric template and its distorted replica. It is calculated between two adjacent pixels of an image as in the following equation (Helmy et al. 2022b):

where \(M\) is the number of pixels, \(C_{i,j}\) is the correlation coefficient, and \(i,j\) are grayscale values of two neighboring pixels in the basic image or ciphered image. The correlation assessment has two scenarios, which are detailed below:

-

1.

When the correlation coefficient is near one, it explores the highest score, which occurs only when 2 biometric images are strongly correlated.

-

2.

The \(C_{i,j}\) score is close to or equal to zero, indicating a considerable difference between the permitted biometric image and its encrypted form, where the ciphered biometric pattern is completely independent of the primary one.

4.3 The probability of true distribution (PTD) and false distribution (PFD)

In permitted access scenarios, the PTD reflects the probability distribution of the statistical correlation. The PFD displays the statistical correlation's probability distribution in spoofed access conditions. Only if a biometric test score exceeds a specified level (Equal Error Rate (EER)), which is calculated at the intersection of the imposter and real distributions, is access permitted to the system. As in El-Shafai et al. (2021b), the inaccurate reject and wrong accept errors are equivalent at this junction point.

4.4 The receiver operating characteristic (ROC) curve analysis

Receiver operating characteristic (ROC) analysis is a graphical method of evaluating the efficiency of the structure. The sensitivity (true positive rate) of a ROC curve is depicted as a mathematical function of the specificity (false positive rate) at different intersection locations as in Faragallah et al. (2021a).

4.5 Structural similarity index metric (SSIM)

It is a strategy for determining the similarity between two images. The SSIM is used as a measure for encryption efficiency in this research. A strong encryption technique should have SSIM values (the difference between the actual and encrypted pictures) near zero, as in El-Shafai et al. (2021b). It is described with the following equation:

where \(\mu_{I} \;{\text{and}}\;{ }\mu_{T}\) are the averages of an input image (I) and the encrypted image \(T\), respectively, \(\sigma_{I}^{2}\) and \(\sigma_{T}^{2}\) correspond to the variances of I and \(T\), respectively, \(\sigma_{IT}\) signifies the covariance between them, and finally \(S_{1}\) and \(S_{2}\) are small values.

4.6 Statistical histogram deviation (\({\varvec{D}}_{{\varvec{H}}}\))

This parameter is used to assess the recommended cryptosystem's ciphering strength by determining the highest amount of deviations among the histograms of pure and ciphered images as in Alqahtani et al. (2022). It can be determined using Eq. (19):

where \(q_{i}\) is the absolute difference between the pure and ciphered images' estimated histograms at a gray level i. Hence, the greater the magnitude of \(D_{H}\), the greater the deviation of the cipher image from the main image.

4.7 PSNR metric

The peak signal-to-noise ratio (PSNR) is employed to assess the suggested cryptosystem’s strength in the case of channel noise. It is assessed among the actual and ciphered images. PSNR is an abbreviation for the ratio of a signal's highest possible value (power) to the strength of distorting noise that influences the quality of its representation. The value of \(PSNR \in \left[ {0,\infty } \right]\). The PSNR ratios between the cypher image and the basic image should be low for an efficient encryption procedure, as in Alqahtani et al. (2022). The PSNR is calculated analytically as indicated in Eq. (20):

where n is the number of bits/pixel, and MSE is the Mean Squared Error. The value of PSNR ϵ [0,∞]. MSE can be calculated by the following equation:

where O is the reference image, \(T\) is the encrypted image.

4.8 Number of pixel change rate (NPCR)

The NPCR measure is defined as a percentage of different pixel amounts between two ciphered images. If the ciphering procedure produces a higher NPCR value, the ciphering technique given is resistant to differential attacks. The NPCR metric is calculated as shown in (22) and (23) as in El-Shafai et al. (2022d):

\(g\left( {i,j} \right)\) denotes the difference between related pixels of the plain image and the encrypted image. The estimated NPCR value of a ciphered image must be near to 100.

4.9 Unified average changing intensity (UACI)

It is employed to measure the intensity value difference between two images. The value of UACI in cancelable biometric technology must be high as in El-Shafai et al. (2022d). It can be computed as in Eq. (24):

5 Simulation results and discussions

The images used in the study are \(256 \times 256\) images with an 8-bit per pixel resolution. The suggested cryptosystem has the benefit of being able to deal with any sort of image (grayscale/color) of varying sizes and characteristics. The proposed cryptosystem is implemented on the Intel(R) processor Core (TM) i5-10210U CPU @ 1.60 GHz, an operating system of Windows 10, 8.00 GB RAM. The experimental results are obtained using MATLAB R2019a. The simulation analyses are reviewed visually and by various criteria for an efficient evaluation of the proposed cryptosystem, such as histogram uniformity, correlation coefficient, histogram deviation, Structural Similarity (SSIM) Index, Peak Signal-to-Noise Ratio (PSNR), FAR (False Acceptance Rate), and AROC. We examine two various datasets of faces (UFI database-LFW deep funneled) and ROI palmprint dataset to evaluate the recommend cancelable biometric authentication architecture. In simulation studies, the first two trial samples are for 12 different biometric faces of various persons, while the remaining samples are for different palmprint images of various users, as shown in Figs. 7, 8 and 9.

The recommended cryptosystem's ciphering performance for each of the evaluated biometric samples is shown in Figs. 10, 11 and 12. From the perspective of a visual encryption assessment, the suggested cancelable biometric architecture achieves high performance. Tables 5, 6, 7 and 8 present Correlation coefficients of neighboring pixels in the tested twelve biometrics images and their ciphered of all examined databases.

Distortion and encryption so that the actual biometrics may be kept securely in a cloud server. Figures 13, 14 and 15 show the ROC, PFD, and PTD curves of the authentication stage for the proposed encryption technique for all analyzed biometric samples. The PFD and PTD intersection point specifies the threshold crossing rate, which is then used to verify whether or not this individual is a real user.

Figures 16, 17 and 18 show the histograms of the original and ciphered biometrics for the offered cryptosystem of all tested biometric datasets. The recommended cryptosystem is shown to provide relatively uniform and flat histogram results, demonstrating its better decision-making. Hence, applying this cryptosystem on the ROI palmprint biometrics database histogram, for example, achieves security features and makes this database secure from intruders. Two biometrics images have been examined for all simulation testing in the verification process. Both belong to different users—one is the real user, and the other is an impostor. To assess the performance of the proposed encryption strategy, the correlation and SSIM values are checked between the two examined encrypted patterns (false and genuine) and the real stored secured biometric patterns. Our research considers that the physical environment contains some noise that might impact the saved or tested biometric patterns. Therefore, noise is a factor in all experimental data.

Tables 8, 9 and 10 present the results of the fake/genuine correlation analysis and the SSIM evaluation for a selection of patterns from the three biometrics datasets. In Table 8, the average correlation/SSIM values of the LFW deep funneled database in the case of the false face are \(0.0162{ }\) and \(8.033 \times 10^{ - 3}\) respectively, while in the case of the genuine face, the average correlation/SSIM values are \(0.9051\) and \(0.9004,\) respectively. In Table 9, the average values of the UFI database correlation/SSIM for the false face are \(3.85 \times 10^{ - 3}\) and \(0.0101,\) respectively, whereas the average values for a genuine face are shown as \(0.9055\) and \(0.9008,\) respectively. In Table 10, the average ROI palmprint database correlation/SSIM values for the false face are \(0.0150\) and \(8.15 \times 10^{ - 3}\) respectively, even though the average values for a real face are \(0.9050\) and \(0.9003,\) respectively. This indicates that palmprint authentication is successful because the results for a real person are close to one, while the results for a false person are close to zero. According to the outcomes in the tables, the proposed technique produces the highest correlation coefficients and SSIM scores in genuine biometrics enrollment, while it achieves the lowest results in false biometrics enrollment. Several criteria have been studied to ensure the efficacy of the proposed cipher scheme, such as FAR, AROC, PSNR, and Histogram Deviation (\(D_{H}\)). Table 11 shows the mathematical evaluation of the FAR, AROC, Histogram Deviation, and PSNR.

According to Table 11, the values of PSNR between the original image and the ciphered image for all the datasets range from (7.5473 to 9.2309), indicating the proposed algorithm's effectiveness. Tables 12, 13 and 14 show the current influence of various Gaussian noise variances on the evaluated biometrics for the suggested cancelable approach, with average FAR, PSNR,\(D_{H}\), and AROC values. The measured FAR, \(D_{H}\), PSNR, and AROC values demonstrate that the proposed architecture has a low noise sensitivity.

In Table 15, further trials are done to test the performance success of the recommend authentication approach with the traditional authentication approaches (El-Shafai et al. 2022d, 2021c; Nagar et al. 2010; El-Shafai and Hemdan 2021; Badr et al. 2021; Faragallah et al. 2021b) in order to further evaluate the overall effectiveness of the discussed cryptosystem for creating an efficient cancelable biometric authentication mechanism.

6 Conclusion and future work

This research studied an enhanced encryption technique for generating and constructing a more secure cancelable biometric authorization system. In order to provide a strong cancelable biometric identification system, this proposal's key contribution combines 3D chaotic maps, Piecewise Linear Chaotic Map (PWLCM), and Logistic Map with DNA sequence theory. This technique is constructed on confusion as well as diffusion approach. First, the 3D chaotic map is based on the following steps: (1) 3D Chaos creation, (2) chaos histogram equalization, (3) row rotation, (4) column rotation, and (5) XOR process. Secondly, PWLCM and Logistic Map are used for generating all of the main values required by DNA theory. Thirdly, DNA theory is applied as a second encryption algorithm to the output of the 3D chaotic algorithm. So, the proposed framework hides the whole discriminative properties of biometric templates, resulting in a fully unspecified biometric pattern. According to research, numerous biometric datasets may be encrypted using the specified cryptosystem technique. It also demonstrates its ability to protect biometric patterns compared to conventional approaches. The proposed cancelable biometric approach exhibits an average FAR of \(6.2 \times 10^{ - 3}\), an average \(D_{H}\) of 0.8755, an average AROC of approximately 1, and an average PSNR of 8.2061. We recommend developing multi-biometric templates based on hybrid encryption techniques for future work to improve efficiency and secure biometric pattern storage against threats.

Availability of data and materials

All data are available upon request from the corresponding author.

Abbreviations

- DNA:

-

Deoxyribonucleic acid

- PWLCM:

-

Piecewise linear chaotic map

- EER:

-

Equal error rate

- AROC:

-

A receiver operating characteristic curve

- FAR:

-

False acceptance rate

- \(D_{H}\) :

-

Histogram deviation

- PINs:

-

Personal identification numbers

- DSP:

-

Digital signal processing

- DRPE:

-

Double random phase encoding algorithm

- RPM 1:

-

Random phase mask

- RPM 2:

-

Random phase mask

- FRFT:

-

Fractional Fourier transform

- AF:

-

“À Trous” filtering

- AT:

-

À Trous transform algorithm

- A:

-

Adenine

- T:

-

Thymine

- C:

-

Cytosine

- G:

-

Guanine

- XOR:

-

Exclusive OR

- MD5:

-

Message-digest mechanism 5

- PSNR:

-

Peak signal to noise ratio

- SSIM:

-

Structural similarity index metric

- TPF:

-

True positive rate

- FPF:

-

False positive rate

- PTD:

-

Probability of true distribution

- PFD:

-

Probability of false distribution

- Var(G):

-

Variance (genuine correlation)

- Var(F):

-

Variance (fake correlation)

- \(x_{n}\) :

-

The initial value of the chaos map \(0 < x_{n} < 1\)

- \(y_{n}\) :

-

The initial value of chaos map \(0 < y_{n} < 1\)

- \(z_{n}\) :

-

The initial value of chaos map \(0 < z_{n} < 1\)

- \(\mu\) :

-

Constant parameter = 4

- \({\upgamma }\) :

-

Control parameter \(3.53 < \gamma < 3.81\)

- \(\beta\) :

-

Control parameter \({ }0 < \beta < 0.022{ }\)

- \(\alpha\) :

-

Control parameter \(0 < \alpha < 0.015\)

- \(N_{2} ,N_{4} , N_{6}\) :

-

Random numbers > 10,000

- \(M\) :

-

Number of columns

- \({\text{N}}\) :

-

Number of rows

- \(p\) :

-

Control parameter \(p \in \left( {0,0.5} \right)\)

- \(d_{1} ,d_{2} ,d_{3} ,d_{4}\) :

-

Each of them represents a single byte

- pixel:

-

The pixel value of the key image

- Rule:

-

One of the 8 rules of DNA

- \(process\) :

-

One of DNA operations \(\left( {XOR, + , - } \right)\)

- \(C_{i,j}\) :

-

The correlation coefficient

- \(i,j\) :

-

The grayscale values of two neighboring pixels in the image

- \(E\) :

-

The expectation

- \(E\left( i \right)\) :

-

Mean of \(i\)

- \(E\left( j \right)\) :

-

Mean of \(j\)

- \(\sqrt {D\left( i \right)}\) :

-

Standard deviation of \(i\)

- \(\sqrt {D\left( j \right)}\) :

-

Standard deviation of \(j\)

- \({\upmu }_{{\text{I}}}\) :

-

The average of an input image (I)

- \(\mu_{T}\) :

-

The average of the encrypted image

- \(\sigma_{I}^{2}\) :

-

The variance of the input image

- \(\sigma_{T}^{2}\) :

-

The variance of the encrypted image

- \(\sigma_{IT}\) :

-

Signifies the covariance between the input and encrypted images

- \(S_{1}\) and \(S_{2}\) :

-

Small values

- \(q_{i}\) :

-

The absolute difference between the pure and ciphered images' estimated histograms at a gray level i

- n :

-

The number of bits/pixel

- \(O\) :

-

The reference image

- \(T\) :

-

The encrypted image

References

Abo-Hammour, Z.E., Alsmadi, O., Momani, S., Abu Arqub, O.: A genetic algorithm approach for prediction of linear dynamical systems. Math. Probl. Eng. 2013 (2013)

Abo-Hammour, Z., Abu Arqub, O., Momani, S., Shawagfeh, N.: Optimization solution of Troesch’s and Bratu’s problems of ordinary type using novel continuous genetic algorithm. Discrete Dyn. Nat. Soc. 2014 (2014)

Abu Arqub, O., Abo-Hammour, Z., Momani, S., Shawagfeh, N.: Solving singular two-point boundary value problems using continuous genetic algorithm. In: Abstract and Applied Analysis, vol. 2012. Hindawi (2012)

Ahmad, M., Alkanhel, R., El-Shafai, W., Algarni, A.D., El-Samie, F.E.A., Soliman, N.F.: Multi-objective evolution of strong s-boxes using non-dominated sorting genetic algorithm-II and chaos for secure telemedicine. IEEE Access 10, 112757–112775 (2022). https://doi.org/10.1109/ACCESS.2022.3209202

Alarifi, A., Sankar, S., Altameem, T., Jithin, K.C., Amoon, M., El-Shafai, W.: A novel hybrid cryptosystem for secure streaming of high efficiency H. 265 compressed videos in IoT multimedia applications. IEEE Access 8, 128548–128573 (2020)

Algarni, A.D., El Banby, G., Ismail, S., El-Shafai, W., El-Samie, F.E.A., Soliman, F., N.: Discrete transforms and matrix rotation based cancelable face and fingerprint recognition for biometric security applications. Entropy 22(12), 1361 (2020a)

Algarni, A.D., El Banby, G.M., Soliman, N.F., El-Samie, F.E.A., Iliyasu, A.M.: Efficient implementation of homomorphic and fuzzy transforms in random-projection encryption frameworks for cancellable face recognition. Electronics 9(6), 1046 (2020b)

Almomani, I., Ahmed, M., El-Shafai, W.: DefOff: defensive/offensive system based on hiding technologies. In: 2022a 2nd International Conference of Smart Systems and Emerging Technologies (SMARTTECH), pp. 214–219. IEEE (2022a)

Almomani, I., Alkhayer, A., El-Shafai, W.: A crypto-steganography approach for hiding ransomware within HEVC streams in android IoT devices. Sensors 22(6), 2281 (2022b)

Almomani, I., El-Shafai, W., AlKhayer, A., Alsumayt, A., Aljameel, S.S., et al.: Proposed biometric security system based on deep learning and chaos algorithms. Comput., Mater. Contin. 74(2), 3515–3537 (2023)

Alqahtani, F., Amoon, M., El-Shafai, W.: A Fractional Fourier based medical image authentication approach. CMC-Comput. Mater. Contin. 70(2), 3133–3150 (2022)

Alshammri, G.H., Samha, A.K., Hemdan, E.E.D., Amoon, M., El-Shafai, W.: An efficient intrusion detection framework in software-defined networking for cybersecurity applications. CMC-Comput. Mater. Contin. 72(2), 3529–3548 (2022)

Arqub, O.A., Abo-Hammour, Z.: Numerical solution of systems of second-order boundary value problems using continuous genetic algorithm. Inf. Sci. 279, 396–415 (2014)

Asaker, A.A., Elsharkawy, Z.F., Nassar, S., Ayad, N., Zahran, O., El-Samie, A., Fathi, E.: A novel cancellable Iris template generation based on salting approach. Multimedia Tools Appl. 80(3), 3703–3727 (2021)

Ayoup, A.M., Khalaf, A.A., Alraddady, F., Abd El-Samie, F.E., El-Safai, W., Eldin, S.M.S.: Cancelable multi-biometric template generation based on dual-tree complex wavelet transform (2022)

Ayoup, A.M., Khalaf, A.A., El-Shafai, W., Abd El-Samie, F.E., Alraddady, F., Eldin, S.M.S.: Cancellable multi-biometric template generation based on Arnold cat map and aliasing. CMC-Comput. Mater. Contin. 72(2), 3687–3703 (2022a)

Ayoup, A.M., Khalaf, A.A., Alraddady, F., Abd El-Samie, F.E., El-Safai, W., Eldin, S.M.S.: Selective cancellable multi-biometric template generation scheme based on multi-exposure feature fusion. Intell. Autom. Soft Comput. 33(1), 549–565 (2022b)

Badr, I.S., Radwan, A.G., El-Rabaie, E.S.M., Said, L.A., El Banby, G.M., El-Shafai, W., Abd El-Samie, F.E.: Cancellable face recognition based on fractional-order Lorenz chaotic system and Haar wavelet fusion. Dig. Signal Process. 116, 103103 (2021)

Elazm, L.A.A., El-Shafai, W., Ibrahim, S., Egila, M.G., Shawkey, H., et al.: Efficient hardware design of a secure cancellable biometric cryptosystem. Intell. Autom. Soft Comput. 36(1), 929–955 (2023)

Eldesouky, S., El-Shafai, W., Ahmed, H.E.D.H., El-Samie, F.E.A.: Cancelable electrocardiogram biometric system based on chaotic encryption using three-dimensional logistic map for biometric-based cloud services. Secur. Priv. 5(2), e198 (2022)

El-Gazar, S., El Shafai, W., El Banby, G.M., Hamed, H.F., Salama, G.M., Abd-Elnaby, M., Abd El-Samie, F.E.: Cancelable speaker identification system based on optical-like encryption algorithms. Comput. Syst. Sci. Eng. 43(1), 87–102 (2022)

El-Hameed, H.A.A., Ramadan, N., El-Shafai, W., Khalaf, A.A., Ahmed, H.E.H., Elkhamy, S.E., El-Samie, F.E.A.: Cancelable biometric security system based on advanced chaotic maps. Vis. Comput. 38(6), 2171–2187 (2022)

El-Shafai, W., & Hemdan, E. E. D. (2021). Robust and efficient multi-level security framework for color medical images in telehealthcare services. J. Ambient Intell. Hum. Comput. 1–16

El-Shafai, W., Almomani, I.M., Alkhayer, A.: Optical bit-plane-based 3D-JST cryptography algorithm with cascaded 2D-FrFT encryption for efficient and secure HEVC communication. IEEE Access 9, 35004–35026 (2021a)

El-Shafai, W., Khallaf, F., El-Rabaie, E.S.M., El-Samie, F.E.A.: Robust medical image encryption based on DNA-chaos cryptosystem for secure telemedicine and healthcare applications. J. Ambient. Intell. Hum. Comput. 12(10), 9007–9035 (2021b)

El-Shafai, W., Mohamed, F.A.H.E., Elkamchouchi, H.M., Abd-Elnaby, M., Elshafee, A.: Efficient and secure cancelable biometric authentication framework based on genetic encryption algorithm. IEEE Access 9, 77675–77692 (2021c)

El-Shafai, W., Almomani, I., Ara, A., et al.: An optical-based encryption and authentication algorithm for color and grayscale medical images. Multimed Tools Appl (2022a). https://doi.org/10.1007/s11042-022-14093-3

El-Shafai, W., Khallaf, F., El-Rabaie, E.S.M., El-Samie, F.E. A.: Proposed 3D chaos-based medical image cryptosystem for secure cloud-IoMT eHealth communication services. J. Ambient Intell. Hum. Comput. 1–28 (2022b)

El-Shafai, W., Khallaf, F., El-Rabaie, E.S.M., El-Samie, A., Fathi, E.: Proposed neural SAE-based medical image cryptography framework using deep extracted features for smart IoT healthcare applications. Neural Comput. Appl. 1–25 (2022c)

El-Shafai, W., Abd El-Hameed, H.A., Khalaf, A.A., Soliman, N.F., Alhussan, A.A., Abd El-Samie, F.E.: A hybrid security framework for medical image communication. CMC-Comput. Mater. Contin. 73(2), 2713–2730 (2022d)

El-Shafai, W., Mesrega, A.K., Ahmed, H.E.H., El-Bahnasawy, N.A., Abd El-Samie, F.E.: An efficient multimedia compression-encryption scheme using latin squares for securing Internet-of-things networks. J. Inf. Secur. Appl. 64, 103039 (2022e)

El-Shafai, W., Abd El-Hameed, H.A., El-Hag, N.A., Khalaf, A.A.M., Soliman, N.F., et al.: Proposed privacy preservation technique for color medical images. Intell. Autom. Soft Comput. 36(1), 719–732 (2023b)

El-Shafai, W., Elsayed, M.A., Rashwan, M.A., Dessouky, M.I., El-Fishawy, A.S., et al.: Optical ciphering scheme for cancellable speaker identification system. Comput. Syst. Sci. Eng. 45(1), 563–578 (2023a)

Faragallah, O.S., Afifi, A., El-Shafai, W., El-Sayed, H.S., Naeem, E.A., Alzain, M.A., et al.: Investigation of chaotic image encryption in spatial and FrFT domains for cybersecurity applications. IEEE Access 8, 42491–42503 (2020)

Faragallah, O.S., Naeem, E.A., El-Shafai, W., Ramadan, N., Ahmed, H.E.D.H., Elnaby, M.M.A., et al.: Efficient chaotic-Baker-map-based cancelable face recognition. J. Ambient Intell. Hum. Comput. 1–39 (2021a)

Faragallah, O.S., El-Sayed, H.S., El-Shafai, W.: Efficient opto MVC/HEVC cybersecurity framework based on arnold map and discrete cosine transform. J. Ambient Intell. Hum. Comput. 1–16 (2021b)

Faragallah, O.S., El-Shafai, W., Sallam, A.I., Elashry, I., EL-Rabaie, E.S.M., Afifi, A., et al.: Cybersecurity framework of hybrid watermarking and selective encryption for secure HEVC communication. J. Ambient Intell. Hum. Comput. 13(2), 1215–1239 (2022)

Hammad, M., Luo, G., Wang, K.: Cancelable biometric authentication system based on ECG. Multimedia Tools Appl. 78(2), 1857–1887 (2019)

Hashad, F.G., Zahran, O., El-Rabaie, S., Elashry, I.F., Elbanby, G., Dessouky, M.I., et al.: Cancelable fingerprint recognition based on encrypted convolution kernel in different domains. Menoufia J. Electron. Eng. Res. 29(2), 133–142 (2020)

Hassan, H.A., Hemdan, E.E., El-Shafai, W., et al.: Intrusion detection systems for the internet of thing: a survey Study. Wirel. Pers. Commun. (2022). https://doi.org/10.1007/s11277-022-10069-6

Helmy, M., El-Shafai, W., El-Rabaie, E.S.M., El-Dokany, I.M., Abd El-Samie, F.E.: A hybrid encryption framework based on Rubik’s cube for cancelable biometric cyber security applications. Optik 258, 168773 (2022a)

Helmy, M., El-Shafai, W., El-Rabaie, S., El-Dokany, I.M., El-Samie, F.E.A.: Efficient security framework for reliable wireless 3D video transmission. Multidimens. Syst. Signal Process. 33(1), 181–221 (2022b)

Ibrahim, S., Egila, M.G., Shawkey, H., Elsaid, M.K., El-Shafai, W., Abd El-Samie, F.E.: Hardware implementation of cancellable biometric systems. In: 2020a 4th International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud) (I-SMAC), pp. 1145–1152. IEEE (2020a)

Ibrahim, S., Egila, M.G., Shawky, H., Elsaid, M.K., El-Shafai, W., El-Samie, A., Fathi, E.: Cancelable face and fingerprint recognition based on the 3D jigsaw transform and optical encryption. Multimedia Tools Appl. 79(19), 14053–14078 (2020b)

Leng, L., Teoh, A.B.J., Li, M., Khan, M.K.: Analysis of correlation of 2DPalmHash Code and orientation range suitable for transposition. Neurocomputing 131, 377–387 (2014)

Mohamed, F.A.H.E., El-Shafai, W., Elkamchouchi, H.M., ELfahar, A., Alarifi, A., Amoon, M., et al.: A cancelable biometric security framework based on RNA encryption and genetic algorithms. IEEE Access 10, 55933–55957 (2022)

Nagar, A., Nandakumar, K., Jain, A.K.: A hybrid biometric cryptosystem for securing fingerprint minutiae templates. Pattern Recogn. Lett. 31(8), 733–741 (2010)

Nassar, M., Ali, A.M., El-Shafai, W., Saleeb, A., AbdEl-Samie, F.E., et al.: Hybrid of distributed cumulative histograms and classification model for attack detection. Comput. Syst. Sci. Eng. 45(2), 2235–2247 (2023)

Qiu, J., Li, H., Zhao, C.: Cancelable palmprint templates based on random measurement and noise data for security and privacy-preserving authentication. Comput. Secur. 82, 1–14 (2019)

Salama, G.M., El-Gazar, S., Omar, B., Nassar, R.M., Khalaf, A.A., El-Banby, G.M., et al.: Cancelable biometric system for IoT applications based on optical double random phase encoding. Opt. Express 30(21), 37816–37832 (2022)

Soliman, R.F., El Banby, G.M., Algarni, A.D., Elsheikh, M., Soliman, N.F., Amin, M., Abd El-Samie, F.E.: Double random phase encoding for cancelable face and iris recognition. Appl. Opt. 57(35), 10305–10316 (2018a)

Soliman, R.F., Amin, M., El-Samie, A., Fathi, E.: A double random phase encoding approach for cancelable iris recognition. Opt. Quant. Electron. 50(8), 1–12 (2018b)

Soliman, N.F., Khalil, M.I., Algarni, A.D., Ismail, S., Marzouk, R., El-Shafai, W.: Efficient HEVC steganography approach based on audio compression and encryption in QFFT domain for secure multimedia communication. Multimedia Tools Appl. 80(3), 4789–4823 (2021a)

Soliman, N.F., Algarni, A.D., El-Shafai, W., Abd El-Samie, F.E., El Banby, G.M.: An efficient GCD-based cancelable biometric algorithm for single and multiple biometrics. CMC-Comput. Mater. Contin. 69(2), 1571–1595 (2021b)

Acknowledgements

The authors are very grateful to all the institutions in the affiliation list for successfully performing this research work. The authors would like to thank Prince Sultan University for their support.

Funding

The authors did not receive support from any organization for the submitted work.

Author information

Authors and Affiliations

Contributions

All authors are equally contributed.

Corresponding author

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Ethical approval and consent to participate

All authors are contributing and accepting to submit the current work.

Consent for publication

All authors are accepting to submit and publish the submitted work.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Eldawy, H.A.A., El-Shafai, W., Hemdan, E.ED. et al. A robust cancellable face and palmprint recognition system based on 3D optical chaos-DNA cryptosystem. Opt Quant Electron 55, 970 (2023). https://doi.org/10.1007/s11082-023-04840-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11082-023-04840-7