Abstract

In this paper, we propose the idea for combining several iteration functions in order to construct new families of iterative methods with both high-order of convergence and high computational efficiency. As an illustration, combining the most famous iteration functions with two or three arbitrary iteration functions, we obtain three new families of high-order iterative methods for the simultaneous computation of simple or multiple polynomial zeros. To demonstrate the influence of the different corrections and to show how one can manage the convergence order and the efficiency when constructing particular methods, we prove a local convergence theorem with a priori and a posteriori error estimates and an estimate of the asymptotic error constant about one of the proposed general families called Schröder-like methods with two corrections.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Let \({(\mathbb {K}, |\cdot |)}\) be an arbitrary valued field with a non-trivial absolute value \(|\cdot |\) and \({f :\mathbb {K}\rightarrow \mathbb {K}}\) be an arbitrary function. It is well known that the iterative methods are among the most commonly used tools for finding the zeros of f, and undoubtedly the most famous iterative methods in the literature are Newton, Halley [1], and Chebyshev’s [2] methods.

Let \(\mathbb {K}[z]\) be an univariate polynomial ring over \(\mathbb {K}\) and \(f \in \mathbb {K}[z]\) be a polynomial of degree \({n \ge 2}\). In 1891, Weierstrass [3] offered a different approach for approximating the zeros of f, namely to compute all of them simultaneously. To this end, he proposed his famous iterative method which can be defined in the vector space \(\mathbb {K}^n\) by the following iteration:

where the Weierstrass iteration function \({W_f :\mathcal {D} \subset \mathbb {K}^n \rightarrow \mathbb {K}^n}\) is defined as follows

where \(a_0\) is the leading coefficient of f. Here and throughout the whole paper \(I_n\) denotes the set \(\{1, 2, \ldots , n\}\) and \(\mathcal {D}\) denotes the set of all vectors in \(\mathbb {K}^n\) with pairwise distinct components, i.e.,

Since 1960, the Weierstrass’ method (1) has drawn a great interest among the mathematical community (see, e.g., the monographs of Sendov, Andreev, and Kyurkchiev [4], Kyurkchiev [5], Petković [6], and references therein), and such methods are often called simultaneous methods. The next famous simultaneous methods in the literature, are due to Dochev and Byrnev [7] (also known as Tanabe’s method [8]), Ehrlich [9] and Börsch-Supan [10]. It is well known that the Weierstrass’ method is quadratically convergent while the other three methods are cubically convergent but when the zeros are simple.

Let \({\xi _1,\ldots ,\xi _s}\) be all distinct zeros of f with multiplicities \({m_1,\ldots ,m_s}\), respectively. To preserve the convergence order, in 1972, Sekuloski [11] presented the first simultaneous method for multiple polynomial zeros. Thereafter, many papers have been devoted to this topic (see, e.g., Petković [6], Farmer and Loizou [12], Gargantini [13], Petković et al. [14], Kjurkchiev and Andreev [15], Iliev and Iliev [16], Proinov and Cholakov [17], Proinov and Ivanov [18], and references therein). In particular, the Weierstrass iteration function for multiple zeros \(w_f\) can be defined in the vector space \({\mathbb {K}^s}\) (\(2 \le s \le n\)) as follows (see, e.g., Petković [6, Eq. (1.40)]):

In 1977, Nourein [19, 20] provided the idea for combining different iteration functions to increase the convergence order of the simultaneous methods, thus two fourth-order methods were obtained by combining Ehrlich’s method with Newton’s one, and Börsch-Supan’s method with Weierstrass’ method (1). There since, many authors (see, e.g., [21,22,23] and references therein) have applied Nourein’s approach to construct simultaneous methods with accelerated convergence combining two particular iteration functions. Such methods are often called simultaneous methods with correction [6, 18]. In 1987, Wang and Wu [24] were the first who constructed and studied a simultaneous method involving an arbitrary correction but this had not led to the increase of the convergence order. In 2021, Proinov and Vasileva [25] constructed and studied a family of Ehrlich’s type methods with arbitrary correction and arbitrary convergence order for simple polynomial zeros while very recently, Proinov and Ivanov [18] constructed and studied the local and the semilocal convergence of a family of Sakurai-Torii-Sugiura type methods, with arbitrary correction and arbitrary convergence order, for simple and multiple polynomial zeros.

In this paper, developing the last mentioned ideas, in Sect. 2, we show how to construct and study new general families of high-order simultaneous methods involving more than one arbitrary correction. To demonstrate that any correction has a different influence on the methods, in Sect. 3, we prove a local convergence theorem (Theorem 3.6) about one of the proposed families, called Schröder-like methods with two corrections. Theorem 3.6 shows that our approach opens up an opportunity to manage the corrections in order to achieve a balance between the convergence order and the computational efficiency [26] when constructing some particular methods. A numerical example (Example 3.8) is also provided to confirm the obtained theoretical results and to compare our methods with some classical ones.

2 Families of simultaneous methods with more corrections

In this section, using some of the most famous iteration functions in the literature, we construct three new families of simultaneous methods with more than one correction for the approximation of simple or multiple polynomial zeros.

For this aim, we suppose that \({\Omega }\) and \({\Gamma }\) are two arbitrary iteration functions in the vector space \({\mathbb {K}^s}\). Using these functions and (3), we define two modified Weierstrass iteration functions in \({\mathbb {K}^s}\) by \({\mathcal {W}(x) = (\mathcal {W}_1(x), \ldots , \mathcal {W}_s(x))}\) and \({\mathscr {W}(x) = (\mathscr {W}_1(x), \ldots , \mathscr {W}_s(x))}\) with

Obviously, \(\mathcal {W}_i\) and \(\mathscr {W}_i\) have the same zeros as the polynomial f. In what follows, using these iteration functions we shall construct three families with more than one correction.

2.1 Schröder-like methods with two corrections

In 2017, Kyncheva et al. [27] studied the well known Schröder’s method [28] as a method for the simultaneous computation of polynomial zeros of unknown multiplicity, i.e., as a method in \(\mathbb {K}^s\). Namely, they have defined it by the following iteration:

where Schröder’s iteration function S is defined in \(\mathbb {K}^s\) by \(S(x) = (S_1(x), \ldots , S_s(x))\) with

Without much ado, we combine Schröder’s iteration function (6) with the above defined modified Weierstrass functions \(\mathcal {W}\) and \(\mathscr {W}\) to define an iteration function T in \(\mathbb {K}^s\) by \({T(x) = (T_1(x), \ldots , T_s(x))}\) with

Now let \(f(x_i) \ne 0\), \({x \,\, \#\,\, \Omega }\) and \({x \,\, \#\,\, \Gamma }\), where \(\#\) denotes a binary relation on \(\mathbb {K}^s\) defined by \({x \,\, \#\,\, y}\) if and only if \({x_i \ne y_j}\) for all \({i, j \in I_s}\) such that \({i \ne j}\), then using some well known identities (see [6, Eq.(1.46)]), from (4) we get the following relations:

From these relations and (7), we get the following family of Schröder-like methods with two corrections (SLMC):

where the iteration function \({T :D \subset \mathbb {K}^s \rightarrow \mathbb {K}^s}\) is defined by

where \(L_{\,i}(\Omega ; x)\) and \(F_{\,i}(\Gamma ; x)\) are defined as follows:

If the set \(\mathscr {D}\) is the interception of the domains of \(\Omega \) and \(\Gamma \), then the domain of (10) is the set

In Theorem 3.6, we prove that the iteration family (9) has Q-convergence order \(r \ge 3\).

2.2 Chebyshev-Halley methods with two corrections

In 2022, Ivanov [29] studied the Chebyshev-Halley family for multiple zeros defined by the iteration

where the Chebyshev-Halley iteration function \({T_\alpha :\mathbb {K}\rightarrow \mathbb {K}}\) is defined by

with \(\alpha \in \mathbb {C}\), \({N(x) = f(x)/f'(x)}\) and \({M(x) = N(x)\, f''(x)/f'(x)}\). It is worth noting that the iteration (13) includes, as special cases, the most famous iteration methods in the literature, namely Halley’s method for \({\alpha = 1/2}\), Chebyshev’s method for \({\alpha = 0}\), Super-Halley method for \({\alpha = 1}\) and Osada’s method for \({\alpha = 1/(1 - m)}\) and \(m > 1\).

In 2017, Kyncheva et al. [30] studied the convergence of Newton, Halley, and Chebyshev’s methods as methods in \(\mathbb {K}^s\). Here, the same way as in [30], we consider Chebyshev-Halley family (13) in \(\mathbb {K}^s\) and then, as in the previous section, we combine the Chebyshev-Halley iteration function with the modified Weierstrass functions \(\mathcal {W}\) and \(\mathscr {W}\) to construct the following new family of Chebyshev-Halley-like methods with two corrections:

where the iteration function \({\mathcal {T} :D \subset \mathbb {K}^s \rightarrow \mathbb {K}^s}\) is defined by

where \(L_{\,i}(\Omega ; x)\) and \(F_{\,i}(\Gamma ; x)\) are defined by (11). We have to note that, in the case \({\alpha = 1/2}\) and \(\Omega = \Gamma \), the iteration (15) reduces to Sakurai-Torii-Sugiura’s method with correction which was recently studied in [18].

2.3 Weierstrass-like methods with three corrections

Recently, Marcheva, and Ivanov [31] have provided a detailed convergence analysis of a modification of the Weierstrass’ method (1) that has been derived by Nedzhibov [32], in 2016.

Let \(\Delta \) be another arbitrary iteration function in \(\mathbb {K}^s\). In the present section, we implement our new idea to the mentioned method and thus we construct the following family of Weierstrass-like methods with three corrections:

where the iteration function \(\Phi \) is defined in \(\mathbb {K}^s\) as follows:

where \(\mathcal {W}_{\,i}(x)\) and \(\mathscr {W}_{\,i}(x)\) are defined by (4). The family (17) has Q-convergence order \(r \ge 2\).

Remark 2.1

Our new approach can be further applied to obtain high-order and high-efficient analogs of many other iteration methods for the approximation of simple or multiple polynomial zeros.

3 Local convergence analysis of Schröder-like methods with two corrections

In this section, using the concepts of Proinov [33], we obtain a general local convergence result (Theorem 3.6) about the iterative methods of the family (9) for computing multiple polynomial zeros with known multiplicity.

Henceforward, we shall use the following notations and conventions: The vector space \(\mathbb {R}^s\) is endowed with the standard coordinate-wise ordering \(\,\preceq \,\) defined by \(x \preceq y\) if and only if \(x_i \le y_i\) for each \({i \in I_s}\) and the vector space \(\mathbb {K}^s\) is equipped with the max-norm \(\Vert \cdot \Vert _\infty \) and with a vector norm \({\Vert \cdot \Vert }\) with values in \(\mathbb {R}^s\) defined by

We define \({d :\mathbb {K}^s \rightarrow \mathbb {R}^s}\) by \(d(x) = (d_1(x), \ldots , d_s(x))\) with \(d_i(x) = \min _{j \,\ne \, i}{|x_i - x_j\,|}\) and, assuming that \({\mathbb {K}^s}\) is an algebra over \(\mathbb {K}\), we define the coordinate-wise division of two vectors \({x, y \in \mathbb {K}^s}\) by \( {x}/{y} = ({x_1}/{y_1},\cdots , {x_s}/{y_s}) \) provided that y has only nonzero components. For a non-negative integer k and \(r \ge 1\), \({S_k(r)}\) stands for the sum

From now on, we assume by definition that \(0^0 = 1\) and we denote by \(\mathbb {R}_+\) the set of non-negative numbers, and with J some interval in \(\mathbb {R}_+\) containing 0.

Let \({f \in \mathbb {K}[z]}\) be a polynomial of degree \({n \ge 2}\) and let \({m_1,\ldots ,m_s}\) be natural numbers such that \({m_1 + \ldots + m_s = n}\), then a vector \({\xi \in \mathbb {K}^s}\) is said to be a root-vector of f with multiplicity m if ([18, Definition 2.3])

where \(a_0\) is the leading coefficient of f. If f has only simple zeros, we simply say a root-vector of f.

For the sake of self-dependence, before proceeding further we recall some important definitions and results of [33] and [18].

Definition 3.1

([33, Definitions 7–8]) A function \({\varphi :J \rightarrow \mathbb {R}_+}\) is said to be quasi-homogeneous of (exact) degree \({p \ge 0}\) if it satisfies the following two conditions:

-

1.

\( \displaystyle \varphi (\lambda t) \le \lambda ^p \varphi (t) \quad \text {for all }\, \lambda \in [0,1] \,\text { and }\, t \in J; \vspace{0.2cm} \)

-

2.

\( \displaystyle \lim _{t \rightarrow 0^+} \frac{\varphi (t)}{t^p} \ne 0. \)

Definition 3.2

([33, Definition 9]) A function \({F :D \subset \mathbb {K}^s \rightarrow \mathbb {K}^s}\) is said to be an iteration function of first kind at a point \({\xi \in \mathcal {D}}\) if there exists a quasi-homogeneous function \({\phi :J \rightarrow \mathbb {R}_+}\) of degree \({p \ge 0}\) such that for each vector \({x \in \mathbb {K}^s}\) with \({E(x) \in J}\) the following conditions are satisfied:

where the function \(E :\mathbb {K}^s \rightarrow \mathbb {R}_+\) is defined by

The function \(\phi \) is said to be control function of F.

Theorem 3.3

([33, Theorem 3]) Suppose \({T :D \subset \mathbb {K}^s \rightarrow \mathbb {K}^s}\) and \({\xi \in \mathbb {K}^s}\) is a fixed point of T with pairwise distinct components. Let T be an iteration function of first kind at \({\xi \in \mathbb {K}^s}\) with control function \({\phi :J \rightarrow \mathbb {R}_+}\) of degree \(p \ge 0\), and let \(x^{(0)} \in \mathbb {K}^s\) be an initial guess of \(\xi \) such that

Then, the Picard iteration \({x^{(k+1)} = T(x^{(k)})}\), \({k = 0, 1, 2, \ldots }\), is well defined and converges to \(\xi \) with Q-order \({r = p + 1}\) and with the following error estimates for all \({k \ge 0}\):

where \({\lambda = \phi (E(x^{(0)}))}\). Besides, the following estimate for the asymptotic error constant holds:

where \(\delta (\xi ) = \min _{i \ne j}|\xi _i - \xi _j|\).

Lemma 3.4

([18, Lemma 2.7]) Let \({x, y, \xi }\) be three vectors in \(\mathbb {K}^s\), and let \(\xi \) has pairwise distinct components. If \({\, \Vert y - \xi \, \Vert \preceq \alpha \, \Vert \, x - \xi \, \Vert \,}\) for some \({\alpha \ge 0}\), then for all \({i, j \in I_s}\), we have

where the function E is defined by (19).

Before to state and prove our main result of this section, for two quasi-homogeneous functions \({\omega :J_{\omega } \rightarrow \mathbb {R}_+}\) of degree \({p \ge 0}\) and \({\gamma :J_{\gamma } \rightarrow \mathbb {R}_+}\) of degree \({q \ge 0}\), we define the functions g and h as follows

Using the functions g and h defined by (20), we define the functions A, B and \(\phi \) by

where the number a is defined by

According to the properties of the quasi-homogeneous functions of exact degree (see [33, Proposition 1]), A is a quasi-homogeneous function of degree \(p + 2\) while B is a quasi-homogeneous function of degree \(q + 3\) and therefore \(\phi \) is quasi-homogeneous functions of degree \(m = \min \{p + 2, q + 3\}\).

The following is our main lemma. The more curious reader can find a similar proof in [18, Lemma 2.8].

Lemma 3.5

Let \({f \in \mathbb {K}[z]}\) be a polynomial of degree \({n \ge 2}\), \({\xi \in \mathbb {K}^s}\) be a root-vector of f with multiplicity \({{\textbf {m }}}\), and let \({\Omega }\) and \(\Gamma \) be two iteration functions of first kind at \({\xi }\) with control functions \({\omega }\) and \(\gamma \) of respective degrees \(p \ge 0\) and \(q \ge 0\). Then \({T :D \subset \mathbb {K}^s \rightarrow \mathbb {K}^s}\) defined by (10) is an iteration function of first kind at \(\xi \) with control function \({\phi :J \rightarrow \mathbb {R}_+}\) of degree \(m = \min \{p + 2, q + 3\}\) defined by (21).

Proof

Let x be a vector in \({\mathbb {K}^s}\) such that \({E(x) \in J}\), where E(x) is defined by (19) and

According to Definition 3.2, we have to prove that

where D is defined by (12).

Since \(\Omega \) and \(\Gamma \) are iteration functions of first kind at \(\xi \) with control functions \(\omega \) and \(\gamma \), then by Definition 3.2 we have

So, applying Lemma 3.4 and taking into account that \({E(x) \in J}\), we get for all \({i \ne j}\),

These inequalities imply that \({x \,\, \#\,\, \Omega (x)}\) and \({x \,\, \#\,\, \Gamma (x)}\).

Now, let \({f(x_i) \ne 0}\) for some i. In accordance with (12), it remains to prove that \(F_i(\Gamma ; x) \ne 0\). Using some known identities (see, e.g., [18, Lemma 2.6]), we get

From this, \({E(x) \in J}\) and the second inequalities in (24) and (25), we get

Thus, we have \(|1 - \beta _i| \ge 1 - |\beta _i| \ge 1 - B(E(x)) > 0\) which means that \(F_i(\Gamma ; x) \ne 0\) ans so \(x \in D\).

To complete the proof of the lemma, it remains to prove the inequality of (23). Note that if \(x_i = \xi _i\) for some i, then the inequality in (23) becomes an equality. Suppose \(x_i \ne \xi _i\). In this case, from (10) and (11), we obtain

where \(\beta _i\) is defined by (26) and \(\alpha _i\) is defined by

From this, the first inequalities of (24) and (25), and the Hölder’s inequality, we get the estimate (see [18, Eq. (2.18)])

Finally, from the triangle inequality and the estimates (30) and (27), we obtain

which, together with (28), proves the desired inequality of (23) and completes the proof of the lemma.

The following theorem provides sufficient conditions and error estimates to guarantee the Q-convergence of the SLMC (9) with order \(r \ge 3\).

Theorem 3.6

(Local convergence of SLMC) Let \(f \in \mathbb {K}[z]\) be a polynomial of degree \({n \ge 2}\), \({\xi \in \mathbb {K}^s}\) be a root-vector of f with multiplicity \({{\textbf {m }}}\) and let \({\Omega }\) and \(\Gamma \) be two iteration functions of first kind at \({\xi }\) with control functions \({\omega :J_\omega \rightarrow \mathbb {R}_+}\) and \(\gamma :J_\gamma \rightarrow \mathbb {R}_+\) of respective degrees \(p \ge 0\) and \(q \ge 0\). Suppose \(x^{(0)} \in \mathbb {K}^s\) is an initial guess satisfying

where \(\Lambda = A + 2B\) with functions A and B defined by (21). Then the iteration (9) is well defined and converges to \(\xi \) with Q-order \(r = \min \{p + 3, q + 4\}\) and with the following error estimates for all \(k \ge 0\):

where \({\lambda = \phi (E(x^{0}))}\) with \(\phi \) defined by (21). Besides, we have the following estimate of the asymptotic error constant:

where \(m = \min \{p + 2, q + 3\}\), a is defined by (22) and \(\delta (\xi ) = \min _{i \ne j}|\xi _i - \xi _j|\).

Proof

The proof follows immediately from Lemma 3.5 and Theorem 3.3. \(\square \)

Remark 3.7

Theorem 3.6 shows that the optimal choice of the corrections \({\Omega }\) and \(\Gamma \) is when \(p = q + 1\). For example, if one chooses to put Newton’s or Weierstrass’ iteration function (IF) (\({p = 1}\)) at the place of \({\Omega }\) they will obtain a fourth-order method no matter if \(\Gamma \) is the identity function (\({q = 0}\)) or the IF of some high-order method as Newton, Weierstrass, Halley, etc (\({q \ge 1}\)) (see Example 3.8). So, when constructing some particular method it is reasonable to make the optimal choice of \({\Omega }\) and \(\Gamma \) in order to preserve the computational efficiency.

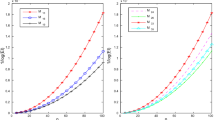

In the following example, we implement three particular members of the family (9) to exemplify the results of Theorem 3.6. On the other hand, to emphasize the performance of our methods, we compare them with two classical such ones.

Example 3.8

Let \(\mathbb {K}= \mathbb {C}\). In this example, we apply three particular methods of the family (9), namely the case

that we shall call Schröder-like method with one Newton’s correction (SLMN), the case

called Schröder-like method with two Newton’s corrections (SLMNN) and the case

called Schröder-like method with Newton and Halley’s corrections (SLMNH) to the polynomial

In order to compare our methods with existing such ones, we also apply the classical Sakurai-Torii-Sugiura’s method (STS) [34, 35] and the Nourein’s method (EN) \(x^{(k + 1)} = \Phi (x^{(k)})\), where the iteration function is defined by \(\Phi (x) = (\Phi _1(x),\ldots , \Phi _n(x))\) with

Here, we use the Aberth’s initial approximation [36]:

with \(a_1 = 0\), \(R = 2\) and \(n = 6\). Note that the value of R is chosen with accordance to the Cauchy’s bound for the zeros of f.

In Table 1, we give the values of the error \(\varepsilon _k\) at k-th iteration and the computational order of convergence (COC) \(\rho _k\) defined by [37]

It is worth noting that the concept of COC has been introduced by Weerakoon and Fernando [38] and further developed by Cordero and Torregrosa [37], Grau-Sánchez, et al. [39] and Proinov and Ivanov [18].

One can see from the table that our three methods have similar convergence behavior and the COC \(\rho _k\) confirms the theoretical one r, obtained in Theorem 3.6. It is also seen that any of our methods behave slightly better than the considered classical ones.

4 Conclusions

In the present paper, we have offered the idea for composing several iteration functions in order to construct new families of iterative methods with both high-order of convergence and high computational efficiency. To illustrate our idea, we have combined some of the most famous iteration functions with two or three arbitrary iteration functions (corrections) to obtain three new families of high-order iterative methods for the simultaneous computation of simple or multiple polynomial zeros. To show that any correction has a different influence that can be managed in order to achieve a balance between the convergence order and the computational efficiency when constructing some particular methods, we have proved a local convergence theorem about one of the proposed general families called Schröder-like methods with two corrections. A numerical example has been provided to confirm the theoretical results and to compare some of our methods with the classical ones.

Comprehensive analysis of the convergence, dynamics, and computational efficiency of the presented new families as well as of other newly constructed ones will be performed in our future works.

Availability of supporting data and materials

Not applicable

Code availability

Not applicable

References

Halley, E.: A new, exact, and easy method of finding the roots of any equations generally, and that without any previous reduction. Philos. Trans. Roy. Soc. 18, 136–148 (1694)

Chebyshev, P.L.: Complete Works of P.L. Chebishev, pp. 7–25. USSR Academy of Sciences, Moscow (1973). http://books.e-heritage.ru/book/10079542

Weierstrass, K.: Neuer Beweis des Satzes, dass jede ganze rationale Function einer Veränderlichen dargestellt werden kann als ein Product aus linearen Functionen derselben Veränderlichen. Sitzungsber. Königl. Preuss. Akad. Wiss. Berlinn II, 1085–1101 (1891). https://biodiversitylibrary.org/page/29263197

Sendov, B., Andreev, A., Kjurkchiev, N.: Numerical solution of polynomial equations. In: Ciarlet, P., Lions, J. (eds.) Handbook of numerical analysis, vol. III, pp. 625–778. Elsevier, Amsterdam (1994)

Kyurkchiev, N.V.: Initial approximations and root finding methods. Mathematical Research, vol. 104. Wiley, Berlin (1998)

Petković, M.: Point estimation of root finding methods. Lecture Notes in Mathematics, vol. 1933. Springer, Berlin (2008)

Dochev, K., Byrnev, P.: Certain modifications of Newton’s method for the approximate solution of algebraic equations. USSR Comput. Math. Math. Phys. 4(5), 174–182 (1964). https://doi.org/10.1016/0041-5553(64)90148-X

Tanabe, K.: Behavior of the sequences around multiple zeros generated by some simultaneous methods for solving algebraic equations. Tech. Rep. Inf. Process Numer. Anal. 4(2), 1–6 (1983). ((in Japanese))

Ehrlich, L.W.: A modified Newton method for polynomials. Commun. ACM 10, 107–108 (1967). https://doi.org/10.1145/363067.363115

Börsch-Supan, W.: Residuenabschätzung für Polynom-Nullstellen mittels Lagrange Interpolation. Numer. Math. 14, 287–296 (1970)

Sekuloski, R.M.: Generalization of Prešic’s iterative method for factorization of a polynomial. Mat Vesnik 9, 257–264 (1972)

Farmer, M.R., Loizou, G.: An algorithm for the total, partial, factorization of a polynomial. Math. Proc. Cambridge Philos. Soc. 82, 427–437 (1977). https://doi.org/10.1017/S0305004100054098

Gargantini, I.: Parallel square-root iterations for multiple roots. Comput. Math. Appl. 6, 279–288 (1980). https://doi.org/10.1016/0898-1221(80)90035-8

Petković, M., Milovanović, G.V., Stefanović, L.V.: Some higher-order methods for the simultaneous approximation of multiple polynomial zeros. Comput. Math. Appl. 12, 951–962 (1986). https://doi.org/10.1016/0898-1221(86)90020-9

Kjurkchiev, N.V., Andreev, A.: On Halley-like algorithms with high order of convergence for simultaneous approximation of multiple roots of polynomials. Compt. rend. Acad. bulg. Sci. 43(9), 29–32 (1990)

Iliev, A., Iliev, I.: On a generalization of Weierstrass-Dochev method for simultaneous extraction of all roots of polynomials over an arbitrary Chebyshev system. Compt. rend. Acad. bulg. Sci. 54, 31–36 (2001)

Proinov, P.D., Cholakov, S.I.: Convergence of Chebyshev-like method for simultaneous approximation of multiple polynomial zeros. Compt. rend. Acad. bulg. Sci. 67(7), 907–918 (2014)

Proinov, P.D., Ivanov, S.I.: A new family of Sakurai-Torii-Sugiura type iterative methods with high order of convergence. J. Comput. Appl. Math. 436, 115428–16 (2024). https://doi.org/10.1016/j.cam.2023.115428

Nourein, A.W.M.: An improvement on Noureins method for the simultaneous determination of the zeros of a polynomial (an algorithm). J. Comput. Appl. Math. 3, 109–110 (1977). https://doi.org/10.1016/0771-050X(77)90006-7

Nourein, A.W.M.: An improvement on two iteration methods for simultaneous determination of the zeros of a polynomial. Int. J. Comput. Math. 6, 241–252 (1977). https://doi.org/10.1080/00207167708803141

Petković, M.S., Stefanović, L.V.: On some improvements of square root iteration for polynomial complex zeros. J. Comput. Appl. Math. 15, 13–25 (1986). https://doi.org/10.1016/0377-0427(86)90235-9

Petković, M.S., Tričković, S., Herceg, D.: On Euler-like methods for the simultaneous approximation of polynomial zeros. Japan J. Indust. Appl. Math. 15, 295–315 (1998). https://doi.org/10.1007/BF03167406

Petković, L.D., Petković, M.S.: On a high-order one-parameter family for the simultaneous determination of polynomial roots. Appl. Math. Let. 74, 94–101 (2017). https://doi.org/10.1016/j.aml.2017.05.013

Wang, D., Wu, Y.: Some modifications of the parallel Halley iteration method and their convergence. Computing 38, 75–87 (1987). https://doi.org/10.1007/BF02253746

Proinov, P.D., Vasileva, M.T.: A new family of high-order Ehrlich-type iterative methods. Mathematics 9(16), 1855–25 (2021). https://doi.org/10.3390/math9161855

Petković, M.S., Petković, L.D., Džunić, J.: Accelerating generators of iterative methods for finding multiple roots of nonlinear equations. Comput. Math. Appl. 59, 2784–2793 (2010). https://doi.org/10.1016/j.camwa.2010.01.048

Kyncheva, V.K., Yotov, V.V., Ivanov, S.I.: On the convergence of Schröder’s method for the simultaneous computation of polynomial zeros of unknown multiplicity. Calcolo 54(4), 1199–1212 (2017). https://doi.org/10.1007/s10092-017-0225-4

Schröder, E.: Über unendlich viele Algorithmen zur Auflösung der Gleichungen. Math. Ann. 2, 317–365 (1870). https://doi.org/10.1007/BF01444024

Ivanov, S.I.: Unified convergence analysis of Chebyshev-Halley methods for multiple polynomial zeros. Mathematics 10, 135–12 (2022). https://doi.org/10.3390/math10010135

Kyncheva, V.K., Yotov, V.V., Ivanov, S.I.: Convergence of Newton, Halley and Chebyshev iterative methods as methods for simultaneous determination of multiple polynomial zeros. Appl. Numer. Math. 112, 146–154 (2017). https://doi.org/10.1016/j.apnum.2016.10.013

Marcheva, P.I., Ivanov, S.I.: Convergence analysis of a modified Weierstrass method for the simultaneous determination of polynomial zeros. Symmetry 12, 1408–19 (2020). https://doi.org/10.3390/sym12091408

Nedzhibov, G.H.: Convergence of the modified inverse Weierstrass method for simultaneous approximation of polynomial zeros. Commun. Numer. Anal., 74–80 (2016)

Proinov, P.D.: Two classes of iteration functions and \(Q\)-convergence of two iterative methods for polynomial zeros. Symmetry 13(3), 371–29 (2021). https://doi.org/10.3390/sym13030371

Sakurai, T., Torii, T., Sugiura, H.: A high-order iterative formula for simultaneous determination of zeros of a polynomial. J. Comput. Appl. Math. 38, 387–397 (1991). https://doi.org/10.1016/0377-0427(91)90184-L

Proinov, P.D., Ivanov, S.I.: Convergence analysis of Sakurai-Torii-Sugiura iterative method for simultaneous approximation of polynomial zeros. J. Comput. Appl. Math. 357, 56–70 (2019). https://doi.org/10.1016/j.cam.2019.02.021

Aberth, O.: Iteration methods for finding all zeros of a polynomial simultaneously. Math. Comp. 27, 339–344 (1973). https://doi.org/10.1090/S0025-5718-1973-0329236-7

Cordero, A., Torregrosa, J.R.: Variants of Newton’s method using fifth-order quadrature formulas. Appl. Math. Comput. 190, 686–698 (2007). https://doi.org/10.1016/j.amc.2007.01.062

Weerakoon, S., Fernando, T.G.I.: A variant of Newton’s method with accelerated third-order convergence. Appl. Math. Lett. 13, 87–93 (2000). https://doi.org/10.1016/S0893-9659(00)00100-2

Grau-Sánchez, M., Noguera, M., Gutiérrez, J.M.: On some computational orders of convergence. Appl. Math. Lett. 23, 472–478 (2010). https://doi.org/10.1016/j.aml.2009.12.006

Author information

Authors and Affiliations

Contributions

All contributions are due to Stoil I. Ivanov

Corresponding author

Ethics declarations

Competing interests

The author declares no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ivanov, S.I. Families of high-order simultaneous methods with several corrections. Numer Algor 97, 945–958 (2024). https://doi.org/10.1007/s11075-023-01734-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-023-01734-3