Abstract

In this paper, a two-sided, parallel Kogbetliantz-type algorithm for the hyperbolic singular value decomposition (HSVD) of real and complex square matrices is developed, with a single assumption that the input matrix, of order n, admits such a decomposition into the product of a unitary, a non-negative diagonal, and a J-unitary matrix, where J is a given diagonal matrix of positive and negative signs. When J = ±I, the proposed algorithm computes the ordinary SVD. The paper’s most important contribution—a derivation of formulas for the HSVD of 2 × 2 matrices—is presented first, followed by the details of their implementation in floating-point arithmetic. Next, the effects of the hyperbolic transformations on the columns of the iteration matrix are discussed. These effects then guide a redesign of the dynamic pivot ordering, being already a well-established pivot strategy for the ordinary Kogbetliantz algorithm, for the general, n × n HSVD. A heuristic but sound convergence criterion is then proposed, which contributes to high accuracy demonstrated in the numerical testing results. Such a J-Kogbetliantz algorithm as presented here is intrinsically slow, but is nevertheless usable for matrices of small orders.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The Kogbetliantz algorithm [14] is the oldest effective method discovered that computes the singular value decomposition (SVD) of a square matrix G as G = UΣV∗, where U and V are unitary matrices, while Σ is a diagonal matrix with the non-negative diagonal elements, called the singular values, that are usually ordered non-increasingly, i.e., Σ = diag(σ1,…,σn) and σk ≥ σℓ ≥ 0, for 1 ≤ k < ℓ ≤ n, with n being the matrix order of G, U, Σ, and V.

In this paper, a Kogbetliantz-type algorithm for the hyperbolic singular value decomposition [26] (the HSVD in short) is developed and called the J-Kogbetliantz algorithm in the following. Since the Kogbetliantz-type algorithms operate only on square matrices, Definition 1.1 of the HSVD is restricted to such a case.

Definition 1.1

Let J and G be two square matrices of order n, such that J is a diagonal matrix of signs, J = diag(± 1), and G is a matrix over \(\mathbb {F}\in \{\mathbb {R},\mathbb {C}\}\) with rank(GJG∗) = rank(G). A decomposition of G,

such that U is a unitary matrix over \(\mathbb {F}\), V is a J-unitary matrix over \(\mathbb {F}\) with respect to J (i.e., V∗JV = J, so V is hypernormal in the terminology of [4]), and Σ is a real diagonal matrix with a non-negative diagonal, is called the hyperbolic singular value decomposition of G. The diagonal elements of Σ are called the hyperbolic singular values of G, which are assumed to be ordered non-increasingly (non-decreasingly) in any, not necessarily contiguous, range of diagonal elements of Σ for which the corresponding range of diagonal elements of J contains only positive (negative) signs.

When J = ±In the HSVD becomes the ordinary SVD, with V unitary, and the J-Kogbetliantz algorithm reduces to the ordinary Kogbetliantz algorithm for the SVD.

In Definition 1.1 the assumption that rank(GJG∗) = rank(G) ensures [36] that the HSVD of G exists, with a diagonal Σ. If the assumption does not hold, or if G is rectangular, for the J-Kogbetliantz algorithm G should be preprocessed by, e.g., the J-URV factorization [28, 29] to a square matrix G0 of order n0 ≤ n, such that \(\text {rank}(G_{0}^{} J_{0}^{} G_{0}^{\ast })=\text {rank}(G_{0}^{})\), i.e., \(G=U_{0}^{\ast } \widetilde {G}_{0}^{} V_{0}^{}\), where U0 is unitary, V0 is J-unitary, J0 = diag(± 1) is of order n0, and \(G_{0}=\widetilde {G}_{0}(1:n_{0},1:n_{0})\). Otherwise, let n0 = n, G0 = G, V0 = U0 = In, and J0 = J.

Besides an obvious application as the main part of a method for solving a Hermitian indefinite eigenproblem [30, 31], the HSVD has also been used in various signal processing applications [26], and recently in a modified approach to the Kalman filtering, especially in the ill-conditioned case [15,16,17]. The HSVD can be efficiently and accurately computed by the one-sided blocked Jacobi-type algorithms [8, 9], even on the massively parallel architectures [21]. A two-sided, Kogbetliantz-type method proposed here is not intended to outperform the existing one-sided HSVD algorithms. Instead, it is meant to showcase the tools (e.g., a careful dynamic parallel ordering and a sound convergence criterion) designed to cope with the perils of non-unitary transformations, that empirically happen to be a greater risk for numerical stability of the two-sided methods—here, for the hyperbolic, but possibly also applicable to the more general, orthosymmetric [18] SVD—than for that of the one-sided algorithms.

The ordinary Kogbetliantz algorithm usually transforms an upper triangular matrix R (see, e.g., [10]), resulting from the preprocessing of G by the QR factorization, in order to simplify the transformations and lower their number. If a particular cyclic pivot ordering is applied, at the end of the first cycle a lower triangular matrix is obtained, while after the subsequent cycle the iteration matrix becomes upper triangular again. Unfortunately, a simple generalization of the Kogbetliantz algorithm to a hyperbolic one (by introducing the hyperbolic transformations but leaving the pivot strategy intact) can fail, since even a single hyperbolic transformation from the right, with a sufficiently high condition number, can cause an excessive growth of the off-diagonal elements of the iteration matrix and ruin the convergence of the algorithm. A different, dynamic (data-dependent) pivot strategy is therefore needed to keep the sometimes unavoidable growth of the off-diagonal norm in check. As a consequence, the iteration matrix cannot be assumed to have or retain any particular structure.

The J-Kogbetliantz algorithm computes the HSVD of G0 in a sequence of transformations, infinite in general but cut off to a finite leading part when computing in machine precision and a convergence criterion is met, as

where, for each k, 1 ≤ k ≤ N, \(U_{k}^{\ast }\) and \(V_{k}^{}\) are the embeddings of the 2 × 2 transformations \(\widehat {U}_{k}^{\ast }\) and \(\widehat {V}_{k}^{}\), respectively, into \(I_{n_{0}}^{}\), that are applied to the iteration matrix \(G_{k-1}^{}\), forming a sequence of the iteration matrices

where \(G_{k}^{}=U_{k}^{\ast }G_{k-1}^{}V_{k}^{}\). If G0 is already in the form required of Σ, then \(V=U=I_{n_{0}}^{}\), \({{\varSigma }}=G_{0}^{}\), and no transformations take place. Else, \(U_{k}^{\ast }\) is unitary (orthogonal in the real case), while \(V_{k}^{}\) is \(J_{0}^{}\)-unitary (\(J_{0}^{}\)-orthogonal in the real case). Each \(\widehat {U}_{k}^{\ast }\) is unitary (orthogonal), and \(\widehat {V}_{k}^{}\) is \(\widehat {J}_{k}^{}\)-unitary (\(\widehat {J}_{k}^{}\)-orthogonal), where \(\widehat {J}_{k}^{}=\text {diag}(J_{0}^{}(p_{k},p_{k}),J_{0}^{}(q_{k},q_{k}))\) for the k th pivot index pair (pk,qk), 1 ≤ pk < qk ≤ n0. The decomposition is finalized by forming \(U=(U^{\ast })^{\ast }=U_{1}^{}U_{2}^{}{\cdots } U_{N}^{}\), while by multiplying \(V^{\ast }J_{0}^{}V=J_{0}^{}\) from the left by \(J_{0}^{}\), and noting that \({J_{0}^{2}}=I_{n_{0}}^{}\), it follows that \(V^{-1}=J_{0}^{}V^{\ast }J_{0}^{}\).

In the k th step the elements (1,1), (2,1), (1,2), and (2,2) of \(\widehat {U}_{k}^{\ast }\) (and \(\widehat {V}_{k}^{}\)) are embedded into \(U_{k}^{\ast }\) (and \(V_{k}^{}\)) at the pivot positions (pk,pk), (qk,pk), (pk,qk), and (qk,qk), respectively, where the pivot indices are chosen such that for the pivot matrix \(\widehat {G}_{k-1}^{}\),

holds at least one of

When \(\widehat {J}_{k}^{}(1,1)=\widehat {J}_{k}^{}(2,2)\) (such a case is called trigonometric in the following, while the other case is called hyperbolic), \(\widehat {G}_{k-1}^{}\) is also a transformation candidate if it is diagonal, with the non-negative diagonal elements such that \(\widehat {G}_{k-1}^{}(1,1)<\widehat {G}_{k-1}^{}(2,2)\) if \(\widehat {J}_{k}^{}=I_{2}^{}\), or \(\widehat {G}_{k-1}^{}(1,1)>\widehat {G}_{k-1}^{}(2,2)\) if \(\widehat {J}_{k}^{}=-I_{2}{}\). This is summarized in Definition 1.2.

Definition 1.2

In a step k ≥ 1, let pk and qk, 1 ≤ pk < qk ≤ n0, be such indices for which \(\widehat {G}_{k-1}\) from (1.1) does not have a form required of a matrix of hyperbolic singular values, as specified in Definition 1.1. Then, \(\widehat {G}_{k-1}\) is called a transformation candidate or a pivot (sub)matrix. If such indices do not exist, there are no transformation candidates in the k th step. If more than one index pair satisfies the defining condition, one pivot index pair is selected according to the chosen pivot strategy.

If the step index is omitted, then for the pivot index pair (p,q) the transformation matrices (\(\widehat {U}^{\ast }\) and \(\widehat {V}\)), the pivot matrix (\(\widehat {G}\)), and the corresponding matrices of signs (\(\widehat {J}\)) and hyperbolic singular values (\(\widehat {{{\varSigma }}}\)) can be written as

With \(\widehat {G}\) given, \(\widehat {U}^{\ast }\) and \(\widehat {V}\) are sought for, such that \(\widehat {U}^{\ast }\widehat {G}\widehat {V}=\widehat {{{\varSigma }}}\), with σpp and σqq being the hyperbolic singular values of \(\widehat {G}\), and \(\widehat {V}^{\ast }\widehat {J}\widehat {V}=\widehat {J}\).

If there are no transformation candidates in the k th step, or if the convergence criterion is satisfied, the algorithm stops successfully, with (an approximation of) the HSVD of G found. Otherwise, \(\widehat {U}_{k}^{\ast }\) and \(\widehat {V}_{k}^{}\) are computed for one, suitably chosen transformation candidate of \(G_{k-1}^{}\), and applied to the pivot rows pk and qk from the left (\(\widehat {U}_{k}^{\ast }\)) and the pivot columns pk and qk from the right (\(\widehat {V}_{k}^{}\)), in that order for determinacy, to form \(G_{k}^{}\). The process is then repeated in the step k + 1.

Note that several, but at most ⌊n0/2⌋ successive steps can be grouped to form a multi-step k, where 0 ≤|k|≤⌊n0/2⌋ is the chosen length of k, if and only if {pk,qk}∩{pl,ql} = ∅ for all k≠l such that \(\{k,l\}\subseteq \mathbf {k}\), i.e., all basic steps within a multi-step can be performed in parallel (with t ≥ 1 parallel tasks at hand). The number of basic steps may vary from one multi-step to another if, e.g., not enough transformation candidates are available, and may reach zero when no further transformations are possible. With n0 = 6, e.g., the multi-step 1 might be {1,2,3}, 2 might be {4,5}, 3 might be {6,7,8}, continuing until some N at which the algorithm halts.

The parallel application of the 2 × 2 transformations has to take into account that all transformations from one side (e.g., the row transformations from the left) have to precede any transformation from the other side (e.g., the column transformations from the right). However, all transformations from the same side can proceed concurrently, and the choice of the first side to be transformed is arbitrary.

To fully describe the J-Kogbetliantz algorithm, it therefore suffices to specify:

-

1.

a method for computing the HSVD of the pivot matrices of order two,

-

2.

the details of performing the row and the column transformations,

-

3.

a pivot strategy that selects the transformation candidate(s) in a (multi-)step, and

-

4.

a convergence criterion that halts the execution.

The above list guides the organization of this paper as follows. The first item is covered in Section 2, the second one in Section 3, the third one in Section 4, and the last one in Section 5, containing an overview of the algorithm. The numerical testing results are summarized in Section 6, and the paper is concluded with some remarks on the future work in Section 7. The Appendix contains several technical proofs.

2 Computing the HSVD of matrices of order two

In the ordinary Kogbetliantz algorithm, it is preferred that the pivot matrices throughout the process remain (upper or lower) triangular [5, 10] under a cyclic pivot strategy: a serial (e.g., the row or the column cyclic, with G0 triangular) or a parallel one (e.g., the modulus strategy, with G0 preprocessed into the butterfly form [11])—a fact relied upon for a simple and accurate computation of the transformation parameters [7, 19, 20]. However, the J-Kogbetliantz algorithm employs a strategy that has no periodic (cyclic) pattern, so no particular form of the pivot matrices can be guaranteed and no special form of G0 is presumed. Depending on \(\widehat {J}\) and its own structure, a transformation candidate \(\widehat {G}\) might have to be preprocessed into a real, triangular form with non-negative elements that is suitable for a novel, numerically stable computation of the transformation matrices \(\widehat {U}^{\ast }\) and \(\widehat {V}\) and the hyperbolic singular values in \(\widehat {{{\varSigma }}}\).

2.1 The \(\widehat {J}\)-UTV factorization of \(\widehat {G}\)

The preprocessing of \(\widehat {G}\) starts with checking if \(\widehat {G}\) is diagonal, because this information will affect the convergence criterion (see Section 5.1) and can simplify an optimized implementation of the 2 × 2 HSVD. Then, \(\widehat {G}\) is transformed into a real, triangular matrix \(\widehat {T}\) with non-negative elements by the \(\widehat {J}\)-UTV factorization that preserves the hyperbolic singular values. The \(\widehat {J}\)-UTV factorization is similar to the URV [34] factorization, with the form

where \(\check {U}\) is unitary, \(\check {V}\) is \(\widehat {J}\)-unitary, and \(\widehat {T}\) is either upper or lower triangular with real, non-negative elements. Moreover, \(\widehat {T}\) is upper triangular in the trigonometric case, and \(\hat {t}_{11}^{2}\ge \hat {t}_{12}^{2}+\hat {t}_{22}^{2}\). In the hyperbolic case \(\widehat {T}\) is either upper triangular, as described, or lower triangular, with \(\hat {t}_{22}^{2}\ge \hat {t}_{21}^{2}+\hat {t}_{11}^{2}\), depending on whether the first or the second column of \(\widehat {G}\) has a greater Frobenius norm, respectively. The 2 × 2 \(\widehat {J}\)-UTV factorization always exists and is uniquely determined, as demonstrated by Algorithm 1, which, given \(\widehat {G}\), operates on a scaled matrix \(\widehat {G}_{0}=2^{s}\widehat {G}\) to avoid the possibility of floating-point overflows. Determination of s is described in Section 2.1.1, with the only assumption that all (components of) the elements of \(\widehat {G}\) are finite. Assume \(\arg (0)=0\) for simplicity, and let P2 be the 2 × 2 permutation matrix \(\left [\begin {array}{cc}0 & 1\\1 & 0 \end {array}\right ]\). Let Boolean flags—those being only true (⊤) or false (⊥)—be denoted by small caps (e.g., d, h, and c, indicating whether \(\widehat {G}_{0}\) is diagonal, whether the hyperbolic or the trigonometric case is considered, and whether the columns of \(\widehat {G}_{0}\) have been swapped, respectively).

When \(\widehat {T}\) is upper triangular, Algorithm 1 is a refinement of the URV factorization, as described in detail in [22] for the trigonometric case, where \(R=\widehat {T}\) is real and non-negative, with a prescribed relation among the magnitudes of its elements. The 2 × 2 \(\widehat {J}\)-UTV factorization guarantees that \(\check {V}\) is both unitary and \(\widehat {J}\)-unitary in all cases. When \(\widehat {G}\) is real, a multiplication of an element \(\hat {g}\) of the working matrix by \(e^{-\arg {\hat {g}}}\) amounts to a multiplication by \(\text {sign}~{\hat {g}}\), without any rounding error.

In brief, Algorithm 1 consists of two possible pipelines, having in common the first four stages. The longer pipeline has eight, and the shorter one six stages in total.

In a stage \(\mathfrak {s}\ge 1\), the working matrix and either \(\check {U}^{\ast }\) or \(\check {V}\) are transformed in place, either by a left multiplication by \(\widehat {U}_{\mathfrak {s}}^{\ast }\), or by a right multiplication by \(\widehat {V}_{\mathfrak {s}}^{}\), respectively. The working matrix thus progresses from the state \(\widehat {G}_{\mathfrak {s}-1}\) to \(\widehat {G}_{\mathfrak {s}}\), until it is eventually reduced to \(\widehat {T}\), at which point \(\check {U}^{\ast }\) and \(\check {V}\) are considered final, i.e., the \(\widehat {J}\)-UTV factorization is computed. Initially, \(\check {U}^{\ast }\) and \(\check {V}\) are set to identities, and d and h are determined.

In stage 1, if the column pivoting of \(\widehat {G}_{0}^{}\) is needed (i.e., its first column is of lower Frobenius norm than its second), c is set to ⊤ and \(\check {V}_{1}^{}\) to \(P_{2}^{}\), else they become ⊥ and \(I_{2}^{}\), respectively. Note that \(\check {V}_{1}^{}\) is not \(\widehat {J}\)-unitary if h and c are ⊤, but in that case \(\check {V}_{1}^{}\) will be canceled by \(P_{2}^{}=P_{2}^{\ast }\), with no intervening right transformations, in stage 5l.

In stage 2, the first column of \(\widehat {G}_{1}^{}\) is made real and non-negative by \(\check {U}_{2}^{\ast }\), effectively setting the elements of the first column of \(\widehat {G}_{2}^{}\) to the magnitudes of those from \(\widehat {G}_{1}^{}\). Then, it is possible to compare them in stage 3. If the first is less than the second, the rows of \(\widehat {G}_{2}^{}\) are swapped by \(\check {U}_{3}^{\ast }\). In stage 4 (the final one shared among the two pipelines) the QR factorization of \(\widehat {G}_{3}^{}\) is performed. If \(\widehat {G}_{0}^{}\) was diagonal, \(\widehat {G}_{3}^{}\) will come out as such from the previous stage, and the factorization is trivial. Else, the real Givens rotation \(\check {U}_{4}^{\ast }\) (i.e., Q∗) is computed from the elements of the first column of \(\widehat {G}_{3}^{}\). Due to the row pivoting, the rotation’s angle ϕ lies between 0 and π/4. Now, R from the factorization, i.e., \(\widehat {G}_{4}^{}\), is upper triangular, with its second column possibly complex, while \((\widehat {G}_{4}^{})_{11}^{}\) is by construction real and of the largest magnitude in \(\widehat {G}_{4}^{}\).

The remaining stages of the longer pipeline, i.e., the one in which h and c are ⊤, are described first. In stage 5l \(\check {V}_{1}^{}=P_{2}^{}\) is canceled by \(\check {V}_{5}^{}=P_{2}^{}\), i.e., the columns of \(\widehat {G}_{4}^{}\) are swapped to obtain \(\widehat {G}_{5}^{}\), which in stage 6l gets its rows swapped by \(\check {U}_{6}^{\ast }\) to become the lower triangular \(\widehat {G}_{6}^{}\). Stage 5l is necessary to make the current \(\check {V}\) \(\widehat {J}\)-unitary (identity) again. Stage 6l brings the working matrix into a more “familiar” (i.e., lower triangular) form. The working matrix in this pipeline remains lower triangular until the end. To emphasize that, “l” is appended to the stage identifiers. To make the working matrix real, \(\check {V}_{7}^{}\) (obviously unitary but also \(\widehat {J}\)-unitary, since \(\check {V}_{7}^{\ast }\widehat {J}\check {V}_{7}^{}=\widehat {J}\), where \(\widehat {J}\) has two opposite signs on its diagonal) is applied to it in stage 7l, to turn \((\widehat {G}_{6}^{})_{21}^{}\) into its magnitude. Finally, in stage 8l, \((\widehat {G}_{7}^{})_{11}^{}\) is turned into its magnitude by \(\check {U}_{8}^{\ast }\).

The shorter pipeline, followed when h or c are ⊥, keeps the working matrix upper triangular, and thus, the stage identifiers have “u” appended to them. Stage 5u turns \((\widehat {G}_{4}^{})_{12}^{}\) into its magnitude by (similarly as above, both unitary and \(\widehat {J}\)-unitary) \(\check {V}_{5}^{}\), while stage 6u completes the pipeline by turning \((\widehat {G}_{5}^{})_{22}^{}\) into its magnitude by \(\widehat {U}_{6}^{\ast }\).

Figure 1 illustrates the possible progressions of a non-diagonal complex matrix \(\widehat {G}_{0}\) through the stages of Algorithm 1 in both its pipelines (but only one pipeline is taken with any given input), and as if all optional transformations take place (the column and/or the row pivoting may not actually happen). A diagonal matrix admits a simplified procedure, evident enough for its details to be omitted here for brevity. The state of the working matrix before the operations of each stage commence is shown, while the transformation matrices are implied by the effects of a particular stage.

A schematic representation of the \(\widehat {J}\)-UTV factorization pipelines of a complex 2 × 2 matrix \(\widehat {G}_{0}\). The upper pipeline is followed in the trigonometric case, or in the hyperbolic case when no column pivoting was performed in stage 1, resulting in an upper triangular \(\widehat {T}\) after six transformations. Otherwise, the lower pipeline is followed from stage 5 onwards, resulting in a lower triangular \(\widehat {T}\) after eight transformations of the working matrix. The symbols with a black background stand for the complex elements, while those with a white background denote the real non-negative ones. The circled numbers represent the transient values, while the framed operators are reserved for the final ones, such that \(\boxdot ^{2}\ge \circledcirc ^{2}+\circledast ^{2}\)

The numerical issues of computing in the machine precision (in Fortran) are dealt with in Section 2.1.1 for the column norms, in Section 2.1.2 for the polar form of a complex number, and in Section 2.1.3 for the rotation-like transformations. It is assumed that the floating-point arithmetic is not trapping on an exception, that the rounding to nearest (with tie to even) is employed, that the gradual underflow and the fused multiply-add (fma) operation are available, that \(\texttt {HYPOT}(a,b)=\sqrt {a^{2}+b^{2}}\) intrinsic causes no undue overflow, and that HYPOT(a,b) = 0 ⇔ a = b = 0.

Algorithm 1 is conditionally reproducible, i.e., its results on any given input are bitwise identical in every floating-point environment behaving as described above and employing the same datatype, provided that the intrinsic is reproducible.

2.1.1 Determination of the scaling parameter s

For a complex number z, |z| can overflow in floating-point arithmetic. The magnitude of z can also pose a problem, e.g., in computing of the norms of the columns of \(\widehat {G}\) in stage 1 of Algorithm 1 for the column pivoting in the QR factorization. There,

so it has to be ensured, by an appropriate prescaling of \(\widehat {G}\), that |a|, |b|, and c do not overflow. A similar concern is addressed in the xLARTG LAPACK [1] routines, but for efficiency it is advisable to avoid the overhead of function calls in the innermost computational parts. At the same time, to avoid computing with the subnormal (components of) numbers, \(\widehat {G}\) could be upscaled when no overflow can occur. Therefore, to efficiently mitigate as many overflow and underflow issues as practicable, either the following prescaling of \(\widehat {G}\), and the corresponding postscaling of the resulting \(\widehat {{{\varSigma }}}\), should be employed, or, without any scaling needed, the entire computation of the 2 × 2 HSVD should be performed in a floating-point datatype at least as precise as the type of (the components of) the elements of \(\widehat {G}\), but with a wider exponent range (e.g., in the Intel’s 80-bit, hardware supported extended precision datatype).

Down- and up-scalings of \(\widehat {G}\) can be made (almost) exact, without any loss of precision in the vast majority of cases, by decreasing, or respectively increasing, the exponents of (the components of) the elements only, i.e., by multiplying the values by 2s with \(s\in \mathbb {Z}\), leaving the significands intact (except for a subnormal value and s < 0, when the |s| lowest bits of the significand—some of which may not be zero—perish), as explained in [22, subsection 2.1] and summarized here in Fortran parlance.

Let η(a) = (a) for a floating-point value a, and let ν = η(Ω), where Ω = () is the largest finite DOUBLE PRECISION value. For a finite, let

be a measure of how much, in terms of its exponent, a has to be downscaled, or how much it can be safely upscaled. Here and in the following, ω is the smallest non-zero positive subnormal floating-point number,Footnote 1 while \(\widehat {\omega }=\mathtt {TINY}(\mathtt {0D0})\) is the smallest positive normal DOUBLE PRECISION value. Two sets of such exponent differences,

are then formed, and the scaling parameter s is taken to be the minimum of ER∪ EI,

Each component c of the elements of \(\widehat {G}\) is then scaled as c0 = (c,s) to get \(\widehat {G}_{0}\).

Backscaling of the computed hyperbolic singular values by 2−s can cause overflows or underflows (to the subnormal range, or even zero), but they are unavoidable if the scaling should be transparent to the users of the 2 × 2 HSVD. A remedy, discussed in [22], would be to keep the scales in addition to the values to which they apply, in a separate array. Alternatively, computing in a wider datatype avoids both scaling and such issues, at a cost of a slower and not easily vectorizable arithmetic.

In brief, the scaling parameter s from (2.1) is chosen in such a way that:

-

1.

no column norm of a scaled 2 × 2 real or complex matrix can overflow, and

-

2.

no ordinary singular value of a scaled matrix can overflow [22, Theorem 1], and

-

3.

as many subnormal (components of the) matrix elements as possible are brought up to the normal range, for a faster and maybe more accurate computation.

The following Example 2.1 illustrates several reasons for and issues with the scaling.

Example 2.1

Assume \(\widehat {J}^{[i]}=I_{2}\), and consider the following real \(\widehat {G}^{[i]}\) for 1 ≤ i ≤ 3,

alongside their scaled versions \(\widehat {G}_{0}^{[i]}=2^{s^{[i]}}\widehat {G}^{[i]}\),

with the scaling parameters, computed from (2.1), being s[1] = s[3] = − 2 and s[2] = 2.

The singular values of \(\widehat {G}^{[1]}\), computed symbolically, are \({{\varOmega }}(\sqrt {145}\pm 5)/16\), and obviously the larger one would overflow without the scaling. In \(\widehat {G}^{[2]}\) there are three subnormal values. Two of them (the off-diagonal ones) get raised into the normal range, while the diagonal one stays subnormal, after the scaling. Finally, \(\widehat {G}^{[3]}\) is a pathological case of a non-singular matrix that is as ill-conditioned as possible, which becomes singular after the scaling, since ω/4 = 0 in the rounding-to-nearest mode.

2.1.2 Accurate floating-point computation of the polar form of a complex number

Expressing z as \(|z|e^{\mathrm {i}\arg {z}}\) requires a reliable way of computing \(e^{\mathrm {i}\arg {z}}\), where

One such method, presented in [22, eq. (1)], is summarized as follows. It is assumed that z has already been scaled as described in Section 2.1.1, so |z| cannot overflow.

Let be a floating-point minimum function,Footnote 2 with a property that if its first argument is a and the second is not, the result is the second argument. For the __ Fortran 2018 [13] intrinsic such a behavior is guaranteed, but is also present with the intrinsic of the recent Intel Fortran compilers, and several others. The same property will be required for a maximum function later on. Define

When |z| = 0, ensures the correct value of \(\cos \limits (\arg {z})\), because (0/0,1) = 1, while in that case taking the maximum in the denominator of \(\sin \limits (\arg {z})\) makes the whole quotient well defined, since 0/ω = 0. When |z| > 0, it holds \(\max \limits \{|z|,\omega \} = |z|\).

2.1.3 Performing the rotation-like transformations in floating-point

Some real-valued quantities throughout the paper are obtained by the expressions of the form a ⋅ b + c, and should be computed by a single fma, i.e., with a single rounding. One such example is \(\cos \limits \phi =1/\sqrt {\tan \phi \cdot \tan \phi +1}\) in stage 4 of Algorithm 1.

Regrettably, there is no widespread hardware support for the correctly rounded reciprocal square root (i.e., \(1/\sqrt {x}\)) operation. Therefore, the computation of a cosine involves at least three roundings: from the fused multiply-add operation to obtain the argument of the square root, from the square root itself, and from taking the reciprocal value. Instead of the cosines, the respective secants can be computed with two roundings, without taking the reciprocals. Then, in all affected formulas the multiplications by the cosines can be replaced by the respective divisions by the secants. Such an approach is slower than the usual one, but more accurate in the worst case.

There is no standardized way to accurately compute d = a ⋅ b + c with some or all values being complex. However, the real fused multiply-add operation can be employed as in the CUDA [24, cuComplex.h header] implementation of the complex arithmetic for an efficient, accurate, and reproducible computation of d as

when a, b, and c are complex. Otherwise, when a is real,

and when a is non-zero and purely imaginary,

Such operations can be implemented explicitly by the _ Fortran 2018 [13] intrinsic, or implicitly, by relying on the compiler to emit the appropriate fma instructions. By an abuse of notation, fma(a,b,c) in the following stand for both the real-valued and the above complex-valued operations, depending on the context.

With a and b complex, a multiplication a ⋅ b can be expressed as fma(a,b,c) with c = 0 and implemented as such, in a reproducible way, but simplified by converting all real fma operations involving a component of c to real multiplications.

A plane rotation, if it is to be applied from the left, can be written as (see, e.g., [6])

and similarly, if a plane (i.e., trigonometric) rotation is to be applied from the right,

A multiplication by T can be realized by a single fma per an element of the result, while the subsequent scaling by C can be converted to divisions by the corresponding secants. A similar factorization holds for a hyperbolic rotation, e.g., from the right,

2.2 The HSVD of \(\widehat {T}\)

Given \(\widehat {T}\) from Algorithm 1, the HSVD of \(\widehat {T}\), \(\widetilde {U}^{\ast }\widehat {T}\widetilde {V}^{}=\widetilde {{{\varSigma }}}\), now remains to be found.

First, \(\widetilde {U}^{\prime \ast }\widehat {T}\widetilde {V}^{\prime }=\widetilde {{{\varSigma }}}^{\prime }\) is computed, where (despite the notation) all matrices are real, \(\widetilde {{{\varSigma }}}^{\prime }\) is diagonal with non-negative elements, \(\widetilde {U}^{\prime \ast }\) is orthogonal, and is sought in the form of a plane rotation, while \(\widetilde {V}^{\prime }\) is \(\widehat {J}\)-orthogonal, and is sought in the form of a plane rotation when \(\widehat {J}=I_{2}^{}\) or \(\widehat {J}=-I_{2}^{}\), and in the form of a hyperbolic rotation otherwise.

If \(\widehat {J}\ne -I_{2}^{}\), \(\widetilde {{{\varSigma }}}^{\prime }\) is equal to the matrix \(\widetilde {{{\varSigma }}}\) of the hyperbolic singular values of \(\widehat {T}\). Else, \(\widetilde {{{\varSigma }}}^{\prime }=P_{2}^{}\widetilde {{{\varSigma }}}P_{2}^{\ast }\), i.e., the diagonal elements of \(\widetilde {{{\varSigma }}}^{\prime }\) are in the order opposite to the one prescribed by Definition 1.1, and thus have to be swapped to get \(\widetilde {{{\varSigma }}}=P_{2}^{\ast }\widetilde {{{\varSigma }}}^{\prime }P_{2}^{}\). In the latter case, when \(\widehat {J}=-I_{2}^{}\), let \(\widetilde {U}^{\ast }\) and \(\widetilde {V}\) be \(P_{2}^{\ast }\widetilde {U}^{\prime \ast }\) and \(\widetilde {V}^{\prime }P_{2}^{}\), respectively; else, let \(\widetilde {U}^{\ast }=\widetilde {U}^{\prime \ast }\) and \(\widetilde {V}=\widetilde {V}^{\prime }\). Note that \(\widetilde {{{\varSigma }}}\) holds the scaled hyperbolic singular values of \(\widehat {G}\).

There are four non-disjoint cases for \(\widehat {T}\) and \(\widehat {J}\), to be considered in the following order, stopping at, and proceeding as in, the first case for which its condition is satisfied:

-

1.

if \(\widehat {T}\) is already diagonal then let \(\widetilde {U}^{\prime \ast }=\widetilde {V}^{\prime }=I_{2}^{}\) and \(\widetilde {{{\varSigma }}}^{\prime }=\widehat {T}\), else

-

2.

if \(\widehat {J}=I_{2}\) or \(\widehat {J}=-I_{2}\), \(\widehat {T}\) is upper triangular and proceed as in Section 2.2.1, else

-

3.

if \(\widehat {T}\) is upper triangular, then proceed as in Section 2.2.2, else

-

4.

\(\widehat {T}\) is lower triangular, and proceed as in Section 2.2.3.

The first case above corresponds to d = ⊤, the second one to h = ⊥, the third one to h ∧¬c = ⊤, and the last one to h ∧c = ⊤, in terms of the states of Algorithm 1.

In fact, instead of \(\widetilde {{{\varSigma }}}^{\prime }\), the backscaled \(\widehat {{{\varSigma }}}^{\prime }=2^{-s}\widetilde {{{\varSigma }}}^{\prime }\) is directly computed for stability, i.e., the hyperbolic singular values of \(\widehat {G}\), instead of \(\widehat {T}\), are finally obtained by the backscaling procedure described in a separate paragraph and Algorithm 2 below.

Backscaling.

A backscaling routine from Algorithm 2 is used in Algorithms 3, 4, and 5. Algorithm 2 distributes the scale 2−s, with s from (2.1), among one of the values to which it should be applied and the given factor f, 1 ≤ f ≤Ω/4. The values \(d_{1}^{}\) and \(d_{2}^{}\) correspond to the diagonal elements of \(\widehat {T}\), in some order. From (2.8), (2.14), and (2.20), it follows that the scaled hyperbolic singular values are of the form \(d_{1}^{}f\) and \(d_{2}^{}/f\), for a certain f. The idea behind Algorithm 2 is to upscale f (since s ≥− 2, it cannot be by more than fourfold) or downscale it as much as possible, while keeping it above the underflow threshold \(\widehat {\omega }\), to \(f^{\prime }\). Any remaining backscaling factor is applied to \(d_{1}^{}\) before multiplying it by \(f^{\prime }\) to get \(d_{1}^{\prime }\), while \(d_{2}^{}\) is divided by f and the result is fully backscaled by 2−s to obtain \(d_{2}^{\prime }\). With such a distribution of 2−s among \(d_{1}^{}\) and f, neither of them should lose accuracy in an intermediate computation leading to \(d_{1}^{\prime }\) by being needlessly pushed down to the subnormal range, what could otherwise happen by multiplying any of them by the full backscaling factor.

For example, let f = 1′, the immediate floating-point successor of unity, and \(2^{-s}=\widehat {\omega }/2\). Then, 2−sf would cause the least significant bit of f to perish, with the result being subnormal. It might also happen that \(2^{-s}d_{1}^{}\) becomes subnormal. Instead, with Algorithm 2, \(f^{\prime }\) would be the immediate successor of \(\widehat {\omega }\), so no accuracy would be lost and normality of the result would be preserved, and ξ = 1, i.e., \(2^{-\xi }d_{1}^{}=d_{1}^{}/2\).

Let in the following α be (Ω) ≈ 1.34078079299425956 ⋅ 10154 (when computing in DOUBLE PRECISION), corresponding to \(\sqrt {\mathtt {DBL\_MAX}}\) parameter from [22].

2.2.1 Trigonometric case with \(\widehat {T}\) upper triangular

This special case of the ordinary SVD and has been thoroughly covered in [22] (with the trigonometric angles of the opposite signs), but is summarized here for completeness. Many techniques to be introduced here are also used in the hyperbolic case.

The diagonalization requirement \(\widetilde {U}^{\prime \ast }\widehat {T}\widetilde {V}^{\prime }=\widetilde {{{\varSigma }}}^{\prime }\) can be expressed as

where the order of the two matrix multiplications is arbitrary. Let such order be fixed to \((\widetilde {U}^{\prime \ast }\widehat {T})\widetilde {V}^{\prime }\). By observing that \(\hat {t}_{11}^{}\ge \max \limits \{\hat {t}_{12}^{},\hat {t}_{22}^{}\}\ge 0\) and \(\hat {t}_{11}^{}>0\), and by restricting the ranges for φ and ψ such that \(\cos \limits \varphi >0\) and \(\cos \limits \psi >0\), it follows that both sides can be scaled by \(1/(\hat {t}_{11}^{}\cos \limits \varphi \cos \limits \psi )\) to obtain a system of equations in \(\tan \varphi \) and \(\tan \psi \) as

where \(0\le \min \limits \{x,y\}\le \max \limits \{x,y\}\le 1\). This crucial conversion of one arbitrary value to a constant (\(\hat {t}_{11}^{}\to 1\)), enabled by the special form of \(\widehat {T}\), is the key difference from the standard derivation of the Kogbetliantz formulas used in, e.g., xLASV2 LAPACK routines, that makes the ones to be derived here simpler but still highly accurate [22].

Equating the off-diagonal elements of the left- and the right-hand side of (2.2) gives the annihilation conditions

from which \(\tan \psi \) can be expressed as

where the right-hand side is not defined if and only if \(y-x\tan \varphi =0\), what would imply, from (2.3), that \(\tan \varphi =0\) and thus y = 0, so \(\tan \psi =x\) and the decomposition of \(\widehat {T}\) is complete. In all other cases, (2.4) leads to a quadratic equation in \(\tan \varphi \),

or, with the terms rearranged,

where \(\tan ^{2}\varphi =1\) would imply x2 − y2 + 1 = 0, what cannot happen since 0 ≤ y ≤ 1 and x > 0 (due to the assumption that \(\widehat {T}\) is not diagonal). Thus, \(0\le \tan (2\varphi )<\infty \),

and \(0\le \tan \varphi <1\). Note that y = 0 implies \(\tan (2\varphi )=0\), so \(\tan \varphi =0\) and no special handling, as above, of this case is actually required. Also, all squares of the elements of the scaled \(\widehat {T}\) have vanished from the denominator in (2.5). Then,

while, from (2.4),

The following result has been proven as a part of [22, Theorem 1].

Theorem 2.1

It holds \(\sqrt {2}\ge \tan \psi \ge \tan \varphi \ge 0\) and \(\tilde {\sigma }_{11}^{\prime }\ge \tilde {\sigma }_{22}^{\prime }\ge 0\), where

From Theorem 2.1 it follows that the computed singular values of \(\widehat {T}\) are ordered descendingly. When \(\widehat {J}=-I_{2}\), they have to be swapped, as described in Section 2.2.

Algorithm 3 stably computes the derived quantities in floating-point and works also for a diagonal \(\widehat {T}\). Bounding \(\tan (2\varphi )\) from above by α is necessary to avoid a possible overflow of the argument of the square root in computing \(\tan \varphi \), without resorting to or losing accuracy of the result (see [22, subsection 2.3]). The expression 2xy is computed as (2a)b, where \(a=\min \limits \{x,y\}\) and \(b=\max \limits \{x,y\}\), to prevent the avoidable underflows of xy (e.g., when x is just above the underflow threshold and y ≈ 1/2). No division a/b should be replaced by a(1/b), nor square root inaccurately approximated. Algorithm 3 is reproducible if a method of computing \(1/\sqrt {x}\) is fixed.

2.2.2 Hyperbolic case with \(\widehat {T}\) upper triangular

The diagonalization requirement \(\widetilde {U}^{\prime \ast }\widehat {T}\widetilde {V}^{\prime }=\widetilde {{{\varSigma }}}^{\prime }\), scaled by \(1/(\hat {t}_{11}\cos \limits \varphi \cosh \psi )>0\) in the hyperbolic case for \(\widehat {T}\) upper triangular, is

from which, similarly to the trigonometric case, the annihilation conditions follow as

If y = 0 then \(\tan \varphi =0\) and \(\tanh \psi =-x\) satisfy (2.10), so the decomposition of \(\widehat {T}\) is complete, unless x = 1 and therefore \(\tanh \psi =-1\), what is impossible. Thus, the HSVD is not defined when the columns of \(\widehat {T}\) (equivalently, of \(\widehat {G}\)) are identical.

In the remaining cases, with y > 0, \(\tanh \psi \) can be expressed as

what gives, in a manner and with caveats similar to the trigonometric case,

and the remaining functions of φ are computed as in (2.6). From (2.11) it follows

Theorem 2.2 is an analogon of Theorem 2.1 in this hyperbolic case.

Theorem 2.2

If, in (2.9), x≠ 1, then \(|\tanh \psi |<1\) and

Proof

From (2.12), \(-1<\tan \varphi \le 0\), and from (2.13), \(|\tanh \psi |=|x+y\tan \varphi |\). With x fixed, \(|\tanh \psi |\) attains the maximum value for \(y\tan \varphi \) being either the smallest, i.e., when approaching − 1 from the right, or the largest possible, i.e., zero. In the first case, \(|\tanh \psi |<|x-1|\le 1\). In the second, \(|\tanh \psi |=|x|=x\), so if x≠ 1 then \(|\tanh \psi |<1\).

Now (2.14) is proven. From (2.9) and (2.13) it follows

what, after multiplying both equations by \(\hat {t}_{11}^{}\cos \limits \varphi \cosh \psi \), gives (2.14). □

Algorithm 4, similarly to Algorithm 3, computes reproducibly and as stably as practicable the HSVD of an upper triangular (including diagonal) \(\widehat {T}\) when \(\widehat {J}\ne \pm I_{2}\).

Here and in Algorithm 5 from Section 2.2.3 a safety parameter \(\upsilon \lesssim 1\) is introduced that will be fully explained in Section 2.3. For now, assume υ = 1.

2.2.3 Hyperbolic case with \(\widehat {T}\) lower triangular

The diagonalization requirement \(\widetilde {U}^{\prime \ast }\widehat {T}\widetilde {V}^{\prime }=\widetilde {{{\varSigma }}}^{\prime }\), scaled by \(1/(\hat {t}_{22}\cos \limits \varphi \cosh \psi )>0\) in the hyperbolic case for \(\widehat {T}\) lower triangular, is

from which the annihilation conditions follow as

If y = 0 then \(\tan \varphi =0\) and \(\tanh \psi =-x\) satisfy (2.16), so the decomposition of \(\widehat {T}\) is complete, unless x = 1 and thus \(\tanh \psi =-1\), what is impossible. The HSVD of \(\widehat {T}\) is not defined, same as in Section 2.2.2, when the columns of \(\widehat {T}\) (i.e., \(\widehat {G}\)) are identical.

In the remaining cases, with y > 0, \(\tanh \psi \) can be expressed as

what gives, similarly to the other hyperbolic case,

and the remaining functions of φ are computed as in (2.6). From (2.17) it follows

Theorem 2.3 is an analogon of Theorem 2.2 in this hyperbolic case.

Theorem 2.3

If, in (2.15), x≠ 1, then \(|\tanh \psi |<1\) and

Proof

From (2.18)–(2.19), \(0\le \tan \varphi <1\) and \(|\tanh \psi |=|y\tan \varphi -x|\). With x fixed, \(|\tanh \psi |\) attains the maximum value for \(y\tan \varphi \) being either the largest, i.e., when approaching unity from the left, or the smallest possible, i.e., zero. In the first case, \(|\tanh \psi |<|1-x|\le 1\). In the second, \(|\tanh \psi |=|-x|=x\), so \(x\ne 1\implies |\tanh \psi |<1\).

Now (2.20) is proven. From (2.15) and (2.19) it follows

what, after multiplying both equations by \(\hat {t}_{22}^{}\cos \limits \varphi \cosh \psi \), gives (2.20). □

Algorithm 5, similarly to Algorithm 4, computes reproducibly and as stably as practicable the HSVD of a lower triangular (including diagonal) \(\widehat {T}\) when \(\widehat {J}\ne \pm I_{2}\).

Let ε, unless noted otherwise, be half the unit in the last place (ulp) of 1, or equivalently, ε = 1 −′1, where ′1 is the immediate floating-point predecessor of 1 in the chosen datatype; e.g., ε = 2− 24 and ε = 2− 53 in single and double precision, respectively. In Fortran, the latter is equal to the result of ()/.

From (2.13) and (2.19) it follows \(\sqrt {\varepsilon }\le \text {sech}\psi \le 1\), since the argument of the secant’s defining square root cannot be less than ε whenever \(|\tanh \psi |<1\) (what is implied by \(\tanh ^{2}\psi \le |\tanh \psi |\le {'1}\)). Also, from (2.5), (2.12), and (2.18) it can be concluded that \(|\tan \varphi |<1\), so \(1\le \sec \varphi \le \sqrt {2}\) and \(1\le \sec \varphi /\text {sech}\psi \le \sqrt {2/\varepsilon }\). In the trigonometric case, \(1\le \sec \psi \le \sqrt {3}\) and \(\sec \varphi \le \sec \psi \), due to (2.7) and Theorem 2.1, so \(1\le \sec \psi /\sec \varphi \le \sqrt {3}<\sqrt {2/\varepsilon }\). Hence, \(f\le \sqrt {2/\varepsilon }\) in the uses of Algorithm 2.

2.3 The HSVD of \(\widehat {G}\)

The HSVD of \(\widehat {G}\) as \(\widehat {U}^{\ast }\widehat {G}\widehat {V}=\widehat {{{\varSigma }}}\) is obtained in four phases:

-

1.

computing the scaling parameter s and \(\widehat {G}_{0}=2^{s}\widehat {G}\) as in Section 2.1.1,

-

2.

factoring \(\widehat {G}_{0}^{}\) as \(\check {U}^{\ast }\widehat {G}_{0}^{}\check {V}=\widehat {T}\) by Algorithm 1,

-

3.

computing the HSVD of \(\widehat {T}\), \(\widetilde {U}^{\ast }\widehat {T}\widetilde {V}=\widetilde {{{\varSigma }}}\) and \(\widehat {{{\varSigma }}}=2^{-s}\widetilde {{{\varSigma }}}\), as in Section 2.2, and

-

4.

assembling \(\widehat {U}^{\ast }=\widetilde {U}^{\ast }\check {U}^{\ast }\) and \(\widehat {V}=\check {V}\widetilde {V}\) (and, if required, \(\widehat {V}^{-1}=\widehat {J}\widehat {V}^{\ast }\widehat {J}\)).

The third phase can fail if the HSVD is not defined, or is unsafe, as indicated by the return values of Algorithm 4 and Algorithm 5. Safety (or a lack thereof), parametrized by υ in those algorithms, is a user’s notion of how close \(|\tanh \psi |\) can get to unity from below to still define a hyperbolic rotation that is well-enough conditioned to be applied to the pivot columns of the iteration matrix. For the 2 × 2 HSVD in isolation, υ = 1, but for the n0 × n0 HSVD it might sometimes be necessary to set it a bit lower if the HSVD process otherwise fails to converge, e.g., to υ = 0.8, as in [35], or even lower. There is no prescription for choosing an adequate υ in advance; if an execution takes far more (multi-)steps than expected (see Section 6 for some estimates in terms of cycles), it should be aborted and restarted with a lower υ.

If υ < 1, \(|\tanh \psi |<1\), and the return value from either algorithm is ⊥, there are two possibilities. First, \(\tanh \psi \) can be set to \(\text {sign}(\tanh \psi )\upsilon \), and the hyperbolic transformation can be computed accordingly [32]. However, the off-diagonal elements of the transformed pivot matrix cannot be considered zeros anymore, and they have to be formed in the iteration matrix and included in the weight computations (see Section 3.2). An inferior but simpler solution declares that the 2 × 2 HSVD is not defined, as if \(|\tanh \psi |\ge 1\), and the pivot pair in question will no longer be a transformation candidate in the current multi-step, in the hope that it will again become one, with a better conditioned hyperbolic rotation, after its pivot row(s) and column(s) have been sufficiently transformed. In the implementation the latter option has been chosen, but the former might lead (not tested) to a faster convergence when it has to be employed.

3 Row and column transformations

If the HSVD of \(\widehat {G}_{k-1}\) is not defined, the algorithm stops. Else, having computed \(\widehat {U}_{k}^{\ast }\), \(\widehat {{{\varSigma }}}_{k}\), and \(\widehat {V}_{k}\) for a transformation candidate with the pivot indices pk and qk, the pkth and the qkth row of Gk− 1 are transformed by multiplying them from the left by \(\widehat {U}_{k}^{\ast }\),

Then, \(G_{k}^{}\) is obtained from \(G_{k-1}^{\prime }\) after transforming the \(p_{k}^{}\)th and the \(q_{k}^{}\)th columns of \(G_{k-1}^{\prime }\) by multiplying them from the right by \(V_{k}^{}\),

and setting Gk(pk,pk) to the first diagonal element of \(\widehat {{{\varSigma }}}_{k}\), Gk(qk,qk) to the second one (in both cases reusing the possibly more accurate hyperbolic singular values from the HSVD of \(\widehat {G}_{k-1}\) then those computed by the row and the column transformations of Gk), while explicitly zeroing out Gk(pk,qk) and Gk(qk,pk).

If the left and the right (hyperbolic) singular vectors are desired, in a similar way as above the current approximations of U∗ and V are updated by \(\widehat {U}_{k}^{\ast }\) and \(\widehat {V}_{k}^{}\), respectively.

3.1 Effects of a hyperbolic transformation

If \(\widehat {V}_{k}\) is unitary, the square of the off-diagonal Frobenius norm of the transformed Gk is reduced by |Gk− 1(qk,pk)|2 + |Gk− 1(pk,qk)|2 ≥ 0. Else, if \(\widehat {V}_{k}\) is \(\widehat {J}_{k}\)-unitary, the following Lemma 3.1 sets the bounds to the relative change of the square of the Frobenius norm of the pkth and the qkth columns multiplied from the right by a hyperbolic rotation. Note that a complex \(\widehat {V}_{k}\) is of the form

which can alternatively be expressed as

where the rightmost matrix (call it B) is unitary and does not change the Frobenius norm of any matrix that multiplies it from the left. Lemma 3.1 is thus stated in full generality, for \(\widehat {V}_{k} B^{\ast }\), since \(\|X\widehat {V}_{k} B^{\ast }\|_{F}=\|X\widehat {V}_{k} B^{\ast }B\|_{F}=\|X\widehat {V}_{k}\|_{F}\) for all conformant X.

Lemma 3.1

If x and y are complex vectors of length n, such that \(\left \|\left [\begin {array}{cc}\mathbf {x}&\mathbf {y} \end {array}\right ]\right \|_{F}>0\), and

then

The following Lemma 3.2 refines the bounds stated in Lemma 3.1. Both Lemmas, proved in the Appendix, are used in the proof of Theorem 3.1 in Section 3.2.

Lemma 3.2

In the lower or the upper bound established in Lemma 3.1 the equality is attainable if and only if y = ±eiβx or ψ = 0. The lower bound is always positive but at most unity, and the upper bound is at least unity.

Another observation is that the norm of the transformed columns depends both on the norm of the original columns, as well as on the hyperbolic transformation applied. Therefore, ψ of a relatively large magnitude does not by itself pose a problem if the original columns have a modest norm. And contrary, even ψ of a relatively small magnitude can—and in practice, will—cause the columns’ elements of a huge magnitude, should such exist, to overflow in the finite machine arithmetic.

3.2 Weight of a transformation candidate

Let \(\text {off}_{F}^{2}(A)\) be the square of the off-diagonal Frobenius norm of \(A\in \mathbb {F}^{n\times n}\), i.e.,

The following Theorem 3.1 deals with the amount of change \(\text {off}_{F}^{2}(G_{k-1}^{})-\text {off}_{F}^{2}(G_{k}^{})\).

Theorem 3.1

For k such that 1 ≤ k ≤ N and \(w_{k}^{}=\text {off}_{F}^{2}(G_{k-1}^{})-\text {off}_{F}^{2}(G_{k}^{})\) it holds

where hk = 0 and wk is non-negative if Vk is unitary. Otherwise, for hk holds

and wk can be negative, positive, or zero.

Proof

When Vk is unitary, the statement of the Theorem 3.1 is a well-known property of the Kogbetliantz algorithm, and a consequence of \(U_{k}^{\ast }\) also being unitary, as well as the Frobenius norm being unitary invariant.

Else, if Vk is not unitary, then observe that the elements of Gk at the pivot positions (i.e., having their indices taken from the set {pk,qk}) do not contribute to \(\text {off}_{F}^{2}(G_{k}^{})\), since the off-diagonal elements at those positions are zero. The change from \(\text {off}_{F}^{2}(G_{k-1}^{})\) to \(\text {off}_{F}^{2}(G_{k}^{})\) is therefore the sum of squares of the magnitudes of those elements, plus any change (hk) happening outside the pivot positions, as in (3.1).

The left transformation is unitary, and therefore the only two rows affected, pkth and qkth, shortened to have the pivot elements removed, keep their joint Frobenius norm unchanged from \(G_{k-1}^{}\) to \(G_{k-1}^{\prime }\). Since the right transformation affects only the pkth and the qkth columns, either of which intersect the pkth and the qkth row in the pivot positions only, there is no further change from \(\text {off}_{F}^{2}(G_{k-1}^{\prime })\) to \(\text {off}_{F}^{2}(G_{k}^{})\) when the off-diagonal norm is restricted to the shortened and transformed rows, so a contribution to hk from the left transformation is zero.

Therefore, only the right transformation is responsible for the value of hk, which can be bounded by Lemma 3.1, applied to the computed hyperbolic transformation \(\widehat {V}_{k}\) and the pkth and the qkth columns with the pivot elements removed from them. The square of the joint Frobenius norm of the shortened columns might either fall or rise after the transformation, due to Lemma 3.2. If it falls, hk and thus wk is positive. If it rises, depending on the hyperbolic angle ψ and on the off-diagonal elements, hk can become negative and so large in magnitude to push wk down to zero or below.

For example, let 0 < ε ≪ 1 and observe that in the real case

(see Section 2.2.2). Let a and b lie in pkth and the qkth columns, respectively, in a row ℓ∉{pk,qk}, and let the other non-pivot elements be zero. Then a and b are transformed to \(\tilde {a}\) and \(\tilde {b}\), respectively, where

If a = b = 1, then \(\tilde {a}=-\tilde {b}=\sqrt {2-\varepsilon }/\sqrt {\varepsilon }\), so

what is by magnitude far greater than 02 + |ε − 1|2 ≈ 1, thus wk < 0. Oppositely,

while \(\tilde {a}\) and \(\tilde {b}\) are

If a = b = 1, then \(\tilde {a}=\tilde {b}=\sqrt {\varepsilon }/\sqrt {2-\varepsilon }\), so

thus wk > 0. Finally, let a > 0, b = 0, and assume \(\check {V}_{k}=I_{2}\) for simplicity. Then

what, together with \(1=\cosh ^{2}\psi -\sinh ^{2}\psi \), gives

what is equal to − 2a2 for \(|\sinh \psi |=1\). Setting

The sum of squares of the off-diagonal elements of \(\widehat {G}_{k-1}\) is 2a2 and thus wk = 0. □

A sequence of matrices (Gk)k≥ 0 converges to a diagonal form if and only if \((\text {off}_{F}^{2}(G_{k}^{}))_{k\ge 0}\) tends to zero. The sequence \((\text {off}_{F}^{2}(G_{k}^{}))_{k\ge 0}\) does not have to be monotonically decreasing, i.e., wk can be negative for some k in the HSVD case, unlike in the ordinary Kogbetliantz algorithm. That significantly complicates any reasoning about convergence in theory, and attainment of (a satisfactory rate of) convergence in practice. To aid the latter, the pivot weights wk from (3.1) should be kept as high as possible, by a careful choice of the pivot submatrix among all admissible transformation candidates in each step. In the SVD computation, choosing a candidate with the maximal weight guarantees convergence [25], and is trivially accomplished since the weights are directly computable, due to hk = 0. In the hyperbolic case, hk from (3.2) cannot be known in advance, without performing the right (column) transformation, and it cannot be estimated (roughly, due to Lemma 3.2) by means of Lemma 3.1 without computing the associated \(\widehat {V}_{k}\) and occasionally recomputing the column norms, what is of the same linear complexity as transforming those columns.

The computation of hk is therefore preferable to an estimation. In each step, it has to be performed for all admissible transformation candidates, and non-trivially for all indices p and q such that p < q, jpp≠jqq, and the associated \(\widehat {V}_{k}\ne I_{2}\) (if \(\widehat {V}_{k}\) is not defined, let \(h_{k}=-\infty \) instead of halting), i.e., at most \({n_{0}^{2}}/4\) times, if J0 has the same number of positive and negative signs. The left transformation by \(\widehat {U}_{k}^{\ast }\) is not needed here, so only the right one by \(\widehat {V}_{k}\) has to have a virtual variant, that does not change the elements of Gk− 1, but for each row i∉{p,q} computes what would Gk(i,p) and Gk(i,q) be from Gk− 1(i,p), Gk− 1(i,q), and \(\widehat {V}_{k}\), and updates hk using (3.2). That can be done accurately by applying twice for each i∉{p,q} the accumulation rule

once for a = |Gk− 1(i,p)| and \(\tilde {a}=|G_{k}(i,p)|\), and again for a = |Gk− 1(i,q)| and \(\tilde {a}=|G_{k}(i,q)|\). If ρ is initialized to the sum of squares of the magnitudes of the off-diagonal elements of \(\widehat {G}_{k-1}\) instead of to zero, then the final ρ is wk instead of hk.

All virtual transformations in a step are mutually independent, so they should be performed in parallel. With enough memory available (\(O({n_{0}^{3}})\) in parallel), the computed elements of \(\widehat {U}_{k}^{\ast }\), \(\widehat {V}_{k}\), and Gk could be stored, separately for each virtual transformation, and reused if the corresponding candidate has been selected as a pivot. Sequentially, only the data (O(n0) values) of a candidate with the maximal weight should be stored. In the tested prototype of the algorithm the virtual transformations are performed in parallel, but all data generated, save the weights, are discarded.

It now emerges that each step requires at least \(O({n_{0}^{2}})\), and at most \(O({n_{0}^{3}})\) operations just for computing all wk. The complexity of the whole HSVD algorithm is therefore quartic in n0 in the general case, far worse than the usual cubic algorithms for the ordinary SVD. It is legitimate to ask what impedes development of a Kogbetliantz-type HSVD algorithm with a cubic complexity. For that, the pivot strategy should either ignore the weights and select the pivots in a prescribed order (as, e.g., the row-cyclic or the column-cyclic serial pivot strategies do), or compute all weights in a step with no more than a quadratic number of operations (e.g., by ignoring hk in (3.1) and calculating the rest of wk). Either approach works well sometimes, but the former failed in the numerical experiments when n0 went up to 2000, and the latter even with n0 around 100, both with a catastrophic increase (overflow) of the off-diagonal norm. It remains an open question whether employing in both cases a much wider floating-point datatype (precision-wise as well as exponent-wise) could save the computation and eventually lead to convergence, but that is of more interest to theory than practice. The pivot strategy motivated here and described in detail in Section 4, however slow, is designed to keep the growth of the off-diagonal norm minimal (at least with a single pivot) when it is unavoidable, and can be used for the matrix orders of up to a few thousands with enough parallelism at hand. A faster but at least equally safe pivot strategy would make the J-Kogbetliantz algorithm competitive in performance with the pointwise one-sided hyperbolic Jacobi method.

It is remarkable that the extensive numerical tests conducted with many variants of the parallel blocked one-sided hyperbolic Jacobi method, all of them with some prescribed cyclic pivot strategy, either on CPU [30, 31] or on GPU [21, 23], have never shown an indication of a dangerous off-diagonal norm growth, even though the hyperbolic angle of a 2 × 2 transformation might be of a large magnitude there as well, at least in theory. It may be interesting to look further into why that did not happen (and probably is hard to make happen) in practice, unlike with the Kogbetliantz-type algorithm, which requires such a complex pivot strategy to reduce the norm growth.

3.3 Floating-point considerations

The sum of squares from (3.1) can overflow, as well as each of the squares, leading to \(w_{k}=\infty \). A dynamic rescaling of the whole Gk− 1 by an appropriate power of two could mitigate that issue, but with a risk that the smallest values by magnitude become subnormal and lose precision. However, if the weights are computed in a wide enough floating-point datatype (e.g., using the Intel’s 80-bit extended), no overflow can occur. Similarly, one or both squares can underflow (a fact to be relied upon in Section 5) to a point of becoming zero(s). Then, if hk = 0, the only way of avoiding wk = 0, without computing in a wider datatype, is scaling Gk− 1 upwards, thus risking overflow of the largest elements by magnitude. As a partial remedy, the augmented weights from Section 4.1 are always distinct and well ordered, so a deterministic pivot selection is possible even with some (or all) weights being \(\infty \) or underflowing.

There is no rule of thumb how to properly prescale G0, so that such issues, as well as the potential overflows due to the hyperbolic transformations, do not needlessly occur. Monitoring the computed weights can indicate should the latter problems be immediately avoided by downscaling Gk− 1. In the tested prototype of the algorithm the dynamic scaling of the whole matrix, unlike the scaling from Section 2.1.1, has not been implemented, but should otherwise be if robustness is paramount.

4 Dynamic pivot selection based on weights

A dynamic pivot strategy (DPS in short) based on block weights was introduced in [3] for the two-sided block-Jacobi SVD algorithm (as the block-Kogbetliantz algorithm is also called), while the global and the asymptotic quadratic convergence of such a coupling was proven in [25] for the serial (a single block pair per step) and the parallel (multiple block pairs per step) annihilation. As the pointwise Jacobi algorithms are but a special case of the block ones, when the blocks (matrices) contain only one, scalar element, all properties of the dynamic pivoting hold in that context as well.

However, a DPS used in the pointwise Kogbetliantz-type HSVD algorithm differs in several aspects from the one for the SVD. A weight, i.e., the amount of the off-diagonal norm reduction, in the latter is finite (up to a possible floating-point overflow) and non-negative, while in the former it can be of arbitrary sign and infinite. Yet, the goal in both cases is the same: to reduce the off-diagonal norm in each step as much as possible. In the latter the off-diagonal norm growth is impossible, while in the former it is sometimes necessary, but is still kept as low as practicable.

Another important difference is in handling a situation when some or all weights are the same. In the former, a concept of augmented weight is introduced, as follows.

4.1 DPS in the sequential case

Definition 4.1

Let the weight of a 2 × 2 submatrix of Gk− 1 at the intersection of the p th and the q th row with the p th and the q th columns be computed according to (3.1) if that submatrix is a transformation candidate. Else, if the submatrix does not need to be transformed, or cannot be transformed due to at least one its elements being non-finite, define its weight as a quiet . Let a triple \(w_{pq}^{[k]}=(w,p,q)\) be called an augmented weight, where w is the weight of the submatrix induced by (p,q). Also, let \(\mathbf {w}_{k}^{} = \{w_{pq}^{[k]}\mid 1\!\le \! p\!<\!q\!\le \! n_{0}\}\) be the set of all augmented weights in the k th step.

For any given k, a total order ≼ can be defined on the augmented weights that makes all of them distinct, even though the weights themselves may be equal.

Definition 4.2

Let, for some k, a and b be two augmented weights in wk, and let them be considered equal, denoted as a = b, if and only if their corresponding components are equal, i.e., a.w = b.w, a.p = b.p, and a.q = b.q. Contrary to the usual definition of , in this context let = and < c for any other c. Let ≼ be the union of the relations ≺ and =, where a ≺ b if and only if

-

1.

a.w > b.w, or

-

2.

a.w = b.w and a.q − a.p > b.q − b.p, or

-

3.

a.w = b.w, a.q − a.p = b.q − b.p, and a.q > b.q.

Proposition 4.1

The relation ≼ from Definition 4.2 makes wk well ordered; specifically, every non-empty subset of wk, including wk, has a unique ≼-smallest element.

Proof

It is easy to verify that ≼ is a total order on wk. Since wk is finite, it is well ordered by ≼. If all weights in S, \(\emptyset \ne S\subseteq \mathbf {w}_{k}\), are different, the smallest element is the one with the largest weight (due to condition 1 from Definition 4.2). The quantities a.q − a.p and b.q − b.p indicate a band, i.e., a sub/super-diagonal of Gk− 1 at which (q,p) and (p,q) lie, respectively, with the main diagonal being band 0. If several elements of S have the same maximal weight, the smallest element is the one among them in the farthest band (condition 2). If more than one such element exists, the smallest is the one lying lowest, i.e., with the largest column index (condition 3). □

The following Corollary 4.1 is a direct consequence of Proposition 4.1 and defines the DPS in the sequential case, i.e., when only one pivot is transformed in each step.

Corollary 4.1

Let \(\widetilde {\mathbf {w}}_{k}^{}=\mathbf {w}_{k}^{}\setminus \{a| a.w=\mathtt {NaN}\}\) be a set of augmented weights such that the weights themselves are not . If \(\widetilde {\mathbf {w}}_{k}=\emptyset \), no transformations are possible and the algorithm stops with N = k. Else, let \(\hat {a}\) be the ≼-smallest element of \(\widetilde {\mathbf {w}}_{k}\). If \(\hat {a}.w=-\infty \), no transformation is valid and the algorithm halts with an error. Else, \((\hat {a}.p,\hat {a}.q)\) are the indices of a single pivot to be chosen in the k th step.

Finding the smallest element of \(\widetilde {\mathbf {w}}_{k}\) is linear in \(c=|\widetilde {\mathbf {w}}_{k}|\), i.e., at most quadratic in n0, if a naïve method is used. However, any t disjoint subsets of \(\widetilde {\mathbf {w}}_{k}\), each of them of size at most ⌈c/t⌉ and at least one less that, can be linearly searched for their smallest elements, all of them in parallel. The smallest elements thus found can in turn be ≼-reduced in parallel with \(\lceil \log _{2} t\rceil \) complexity to get the smallest element overall.

Furthermore, observe that only the weights in the pivot rows and columns change after a step. Then, in the next step, the weights in the changed positions have to be recomputed and compared with the unchanged weights in the remaining part of the matrix, for which the ≼-smallest element can already be found in the previous step. Therefore, in each step two elements of \(\widetilde {\mathbf {w}}_{k}\) have to be found: the ≼-smallest one a, and its closest ≼-successor b such that {a.p,a.q}∩{b.p,b.q} = ∅. Finding such a and b would be easiest if \(\widetilde {\mathbf {w}}_{k}\) would have already been sorted ≼-ascendingly. But if such a and b are found, they define two pivots that can both be transformed in parallel, i.e., in a multi-step of length two. Repeating the observation of this paragraph, both a sketch of a method and an argument for the parallel DPS emerges, where a sequence of pivots, all with their indices disjoint, is incrementally built to be transformed in a multi-step. The case of a single pivot per step is here abandoned in favor of the parallel, multi-step case, albeit it can be noticed that some pivots in such a multi-step can lead to the off-diagonal norm growth when the ≼-smallest one does not. The sequential case is thus locally (i.e., in each step, but not necessarily globally) optimal with respect to the change of the off-diagonal norm, but the parallel one may not be.

4.2 DPS in the multi-step case

For a multi-step k, let the augmented weights \(w_{pq}^{[\mathbf {k}]}\) and the set wk of them be defined as in Definition 4.1, with the smallest k ∈k. Definition 4.2, Proposition 4.1, and Corollary 4.1 are then modified accordingly. Also, let \(\widehat {\mathbf {w}}_{\mathbf {k}}\) be a ≼-ascendingly sorted array of the elements of \(\widetilde {\mathbf {w}}_{\mathbf {k}}\setminus \{a\mid a.w=-\infty \}\). An option to get \(\widehat {\mathbf {w}}_{\mathbf {k}}\) from \(\widetilde {\mathbf {w}}_{\mathbf {k}}\) is the parallel merge sort. In the prototype implementation, the Baudet–Stevenson odd-even sort with merge-splitting of the subarrays [2] is used, since it is simple and keeps ⌈t/2⌉ tasks active at any given time, even though its worst-case complexity is quadratic. Both choices require a work array of c augmented weights, but that scratch space can be reused elsewhere. For t = 1, a sequential merge (or quick) sort is applicable.

Definition 4.3

Let Sk be the set of all ≼-ascending sequences of length at most |k| of the augmented weights from \(\widehat {\mathbf {w}}_{\mathbf {k}}\) with non-intersecting indices, i.e., of all (not necessarily contiguous) subarrays of \(\widehat {\mathbf {w}}_{\mathbf {k}}\) of length at most |k|, such that for any two elements a and b from a subarray holds {a.p,a.q}∩{b.p,b.q} = ∅. Let Sk ∈Sk be arbitrary, m ≥ 1 be the length of Sk, and define the following functions of Sk,

as its weight and as a sequence of indices that its elements have in \(\widehat {\mathbf {w}}_{\mathbf {k}}\), respectively.

It suffices to restrict Definition 4.3 to the sequences of length m > 0 only, since \(|\widehat {\mathbf {w}}_{\mathbf {k}}|=0\) implies that no valid transformations are possible, and the execution halts.

Definition 4.4

Let |k|, \(\widehat {\mathbf {w}}_{\mathbf {k}}\), and ℓ such that \(1\le \ell \le |\widehat {\mathbf {w}}_{\mathbf {k}}|\) be given. Then, a parallel ordering \(O_{\mathbf {k}}^{\ell }\in \mathbf {S}_{\mathbf {k}}^{}\) is the sequence of maximal length, but not longer than |k|, such that \(O_{\mathbf {k}}^{\ell }(1)=\widehat {\mathbf {w}}_{\mathbf {k}}^{}(\tau _{1})\), with τ1 = ℓ, and \(O_{\mathbf {k}}^{\ell }(l)=\widehat {\mathbf {w}}_{\mathbf {k}}^{}(\tau _{l})\) for l > 1, where τl is the smallest index of an element of \(\widehat {\mathbf {w}}_{\mathbf {k}}\) such that

for all τi such that 1 ≤ i < l. If \(O_{\mathbf {k}}^{\ell }\) is of length |k|, it is denoted by \(PO_{\mathbf {k}}^{\ell }\). A parallel DPS is a pivot strategy that for each k finds \(O_{\mathbf {k}}^{\ell }\), given an admissible ℓ.

Given an admissible ℓ, \(O_{\mathbf {k}}^{\ell }\) from Definition 4.4 exists and is unique. Let its length be mℓ. Then, \(\mathbf {o}(O_{\mathbf {k}}^{\ell })=(\tau _{l}^{})_{l=1}^{m_{\ell }^{}}\). Taking the maximal mℓ possible reflects an important choice of having most possible pivots per each multi-step transformed in parallel, even though it might imply that \(w(O_{\mathbf {k}}^{\ell })\) is smaller than it would have been if only the first \(m_{\ell }^{\prime }<m_{\ell }^{}\) augmented weights were left in \(O_{\mathbf {k}}^{\ell }\). Also, |k| should be considered to stand for the desired number of steps in k, until a parallel ordering has been found as described below and the actual, maybe lower, number of steps has been determined.

Algorithm 6 sequentially constructs a parallel ordering from Definition 4.4 for a given index ℓ of \(\widehat {\mathbf {w}}_{\mathbf {k}}\). It can also be constructed in parallel with Algorithm 7. Definition 4.4 indicates validity of Algorithms 6 and 7. All parallel constructs from here on in the paper are the OpenMP [27] ones, acting on the shared memory.

Algorithm 7 is the one chosen for the prototype implementation when t > 1 (as was the case in the tests), with a fallback to Algorithm 6 when t = 1.

Example 4.1

Let A be a matrix representation of the \(\widehat {\mathbf {w}}_{\mathbf {k}}\) computed for some Gk− 1,

Here, apq = aqp for 1 ≤ p < q ≤ n0 = 6 is the index of \(w_{pq}^{[\mathbf {k}]}=(w_{pq}^{},p,q)\) in \(\widehat {\mathbf {w}}_{\mathbf {k}}\). Then, \(PO_{\mathbf {k}}^{1}\) is obtained by either Algorithm 6 or 7, with the pivot index pairs denoted in A as well with the boxes of diminishing thickness, corresponding to the decreasing weights. Moreover, if all weights are the same (zeros, e.g.), then, by Definition 4.2, A always has the same form as above, enlarged or shrunk according to n0, regardless of the actual elements in Gk− 1. Near the end of the Kogbetliantz process, where most weights are the same, the pivot strategy predictably selects many of the pivots in a pattern resembling the antidiagonal, and expanding towards the SE and the NW corners.

The initial ordering of the elements of wk is irrelevant mathematically. However, to simplify parallelization of the iteration over a triangular index space, i.e., over the indices of the strictly upper triangle of Gk− 1, a one-dimensional array \({\mathscr{I}}\) of n = n0(n0 − 1)/2 index pairs is pregenerated as follows. Let i+ := 1 and i− := n. For each index pair (p,q) in the column-cyclic order (column-by-column, and top-to-diagonal within each column), if J0(p,p) = J0(q,q) let \({\mathscr{I}}(i_{+}):=(p,q)\) and i+ := i+ + 1; else, let \({\mathscr{I}}(i_{-}):=(p,q)\) and i− := i−− 1. This way \({\mathscr{I}}\) is partitioned into two contiguous subarrays, \({\mathscr{I}}_{+}\) and \({\mathscr{I}}_{-}\) (which might be empty), of the index pairs leading to the trigonometric or to the hyperbolic transformations, respectively. One parallel loop over \({\mathscr{I}}_{+}\) then computes all the weights in the trigonometric case, and another parallel loop over \({\mathscr{I}}_{-}\) does the same in the hyperbolic case. Within each of the loops all iterations are of equal complexity, what would not have been the case if the two loops were fused into one, since computing the weights is essentially faster in the trigonometric (O(1) per weight) than in the hyperbolic (O(n0) per weight) case.

5 Overview of the algorithm

In this section the convergence criterion, a vital part of the J-Kogbetliantz algorithm, is discussed in Section 5.1, and the algorithm is summarized in Section 5.2.

5.1 Convergence criterion

Traditionally, a convergence criterion for the Jacobi-like (including the Kogbetliantz-like) processes is simple and decoupled from the choice of a pivot strategy. However, in the hyperbolic case, where even a small off-diagonal norm can oscillate from one step to another, a “global” stopping criterion, based on the fall of the off-diagonal norm, relative to the initial one, below a certain threshold, or on stabilization of the diagonal values, e.g., does not suffice alone for stopping the process unattended.

The former approach may stop the process when the off-diagonal norm has relatively diminished, but when there may still be some valid transformations left that can both change the approximate singular values and raise the off-diagonal norm.

On the other hand, if the threshold has been set too low, the process may never (literally or practically) stop and may keep accumulating the superfluous transformations computed almost exclusively from the leftover rounding errors off the diagonal.

If a stopping criterion is solely based on observing that the diagonal elements, i.e., the approximate singular values, have not changed at all in a sequence of successive steps of a certain, predefined length, a few conducted tests indicate that the computed singular values are accurate in a sense of (6.2) and the diagonal has converged to its final value, but the singular vectors are not, with the relative error (6.1) of order \(\sqrt {\varepsilon }\), where ε is the machine precision, i.e., the transformations left unperformed would have contributed to the singular vectors significantly, but not to the singular values.

A “local” convergence criterion is thus needed, based on the 2 × 2 transformations in a multi-step belonging to a narrow class of matrices, as shown in Algorithm 8.

The steps of each multi-step k are categorized as either big or small. A step is big if its 2 × 2 pivot submatrix is not diagonal, and either the left or the right transformation is not identity; else, it is small. A non-trivial small step is just a scaling by the factors of unit modulus and/or a swap of the diagonal elements, so it is a heuristic but reasonable expectation that an absence of big steps is an indication of convergence.

5.2 The J-Kogbetliantz algorithm

The J-Kogbetliantz algorithm is summarized in Algorithm 9. Note that accumulating the left and the right singular vectors is optional, and that Σ is the diagonal of GN.

6 Numerical testing

Testing was performed on the Intel Xeon Phi 7210 CPUs, running at 1.3 GHz with TurboBoost turned off in Quadrant cluster mode, with 96 GiB of RAM and 16 GiB of flat-mode MCDRAM (which was not used), under 64-bit CentOS Linux 7.9.2009 with the Intel compilers (Fortran, C), version 19.1.3.304, and the GNU compilers (Fortran, C), version 9.3.1, for the error checking. No BLAS/LAPACK routines from Intel Math Kernel Library were used in the final prototype implementation.

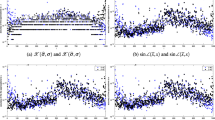

The prototype code has been written in Fortran for the DOUBLE PRECISION and DOUBLE COMPLEX datatypes, with some auxiliary routines written in C. The real and the complex J-Kogbetliantz algorithms are implemented as two programs. There are also two error checkers in quadruple precision (Fortran’s KIND=REAL128), one which finds the absolute and then the relative normwise error of the obtained HSVD as

while the other compares \({{\varSigma }} J_{0} {{\varSigma }}^{T}\) with the eigenvalues Λ of \(H=G_{0}^{} J_{0}^{} G_{0}^{\ast }\), i.e.,

where \({{\varLambda }}^{\prime }=P_{{{\varLambda }}}^{}{{\varLambda }} P_{{{\varLambda }}}^{T}\) (PΛ being a permutation) has the eigenvalues on the diagonal sorted descendingly to match the ordering of \({{\varSigma }} J_{0} {{\varSigma }}^{T}\). All eigenvalues are non-zero.

The close-to-exact eigenvalues are known since each H has been generated by taking its double precision eigenvalues Λ pseudorandomly from one of the ranges:

-

1.

λ ∈〈ε,1], drawn uniformly from 〈0,1],

-

2.

|λ|∈〈ε,1], drawn uniformly from [− 1,1],

-

3.

|λ|∈〈ε,1], drawn from the normal variable \({\mathscr{N}}(\mu =0,\sigma =1)\),

with a given ε ∈{ε1 = 10− 13,ε2 = 10− 15}. Then, H = UΛU∗ (or UΛUT) is formed by applying n0 − 1 pseudorandom Householder reflectors to Λ in extended precision.

The Hermitian/symmetric indefinite factorization with complete pivoting [29, 33] of H gives J0 and \(G_{0}^{\prime }\), which is rounded to a double (complex/real) precision input G0. For each n0 twelve pairs (G0,J0) have been generated, six each for the real and the complex case. In each case two pairs come with \(J_{0}=I_{n_{0}}\), corresponding to the first range above. For a given n0, ε, and a range, the eigenvalues of the real H are the same as those of the complex H, due to a fixed pseudo-RNG seed selected for that ε.

For each field \(T\in \{\mathbb {R},\mathbb {C}\}\), range L ∈{1,2,3} of the eigenvalues of H, and ε as above, a sequence of test matrices was generated, with their orders ranging from n0 = 4 to n0 = 2048 with a variable step: four up to n0 = 128, eight up to n0 = 256, 16 up to n0 = 512, 32 up to n0 = 1024, and 256 onwards. For each n0, the number of tasks for a run of the J-Kogbetliantz algorithm was \(t=\min \limits \{64,n_{0}(n_{0}-1)/2\}\), since the CPU has 64 cores, and to each core at most one task (i.e., an OpenMP thread) was assigned by setting OMP_PLACES=CORES and OMP_PROC_BIND=SPREAD environment variables.

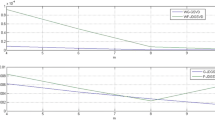

Let N, 0 ≤N ≤ N, be the number of multi-steps performed until convergence. Then, define C, the number of “virtual” sweeps (also called cycles) performed, as

A “virtual” sweep has at most the same number of steps as would a “real” sweep by a cyclic pivot strategy have, i.e., n0(n0 − 1)/2, but in it any transformation candidate can be transformed up to ⌊n0/2⌋ times. Note that C does not have to be an integer.