Abstract

In this paper, we consider the problem of disturbance decoupling for a class of non-minimum-phase nonlinear systems. Based on the notion of partial minimum phaseness, we characterize all disturbance actions that can be decoupled via static state feedback while preserving stability of the residual internal dynamics. The proposed methodology is then extended to the sampled-data framework via multi-rate design, in order to cope with the appearance of the so-called sampling zero dynamics intrinsically induced by classical single-rate sampling.

1 Introduction

As is well known, a variety of control problems involve partial cancelation of the dynamics, which is achieved by inducing unobservability [1,2,3,4,5,6,7,8]. In the linear case, this is done by designing a feedback that places some of the closed-loop eigenvalues at the zeros of the system, thus making the corresponding dynamics unobservable. Such an approach underlies feedback linearization, which is achieved by maximizing unobservability, that is, by canceling the so-called zero dynamics, whose stability is therefore necessary for the feasibility of the control system [9].

In [10], partial cancelation of the zero dynamics has been introduced and exploited to deal with feedback linearization of nonlinear non-minimum-phase systems (i.e., systems whose zero dynamics are unstable). The design approach generalizes to the nonlinear context the idea of assigning part of the eigenvalues to part of the zeros of the transfer function of a linear system (partial zero-pole cancelation). As intuition suggests, when dealing with nonlinear systems, stability of the feedback system can be achieved when only a stable component of the zero dynamics is canceled. Such a stable component can be identified by considering the output associated with the minimum-phase factorization of the transfer function of the linear tangent model at the origin. In more detail, a two-step design is proposed. First, a dummy output is constructed from the linear tangent model (LTM) of the original system, via a suitable factorization of the numerator of its transfer function, so that the corresponding linearized system is minimum phase. Then, classical input–output linearization of the locally minimum-phase nonlinear system is performed with respect to this dummy output. Finally, it is proved that, when the resulting feedback is applied to the original system, input–output linearization still holds with respect to the actual output while stability of the internal dynamics is guaranteed.

In this work, we extend this methodology to the problem of output-disturbance decoupling with internal stability. Decoupling, attenuating or rejecting the effect of the perturbations acting on a nonlinear plant is of paramount importance from both the practical and the methodological points of view [11,12,13,14,15,16,17,18]. As is well known, for a general plant, disturbance decoupling amounts to generating unobservability so as to make the output evolutions independent of the perturbations acting on the dynamics [19,20,21,22,23]. Starting from the linear time-invariant (LTI) case, the idea we develop makes use of the output factorization introduced in [10]. This allows us to: (i) solve the disturbance-decoupling problem for a given action of disturbances while preserving internal stability; (ii) characterize all the actions of disturbances for which disturbance decoupling is solvable while preserving internal stability. As expected, the family of disturbances that can be decoupled in this case is smaller than in the standard case (when all the zero dynamics are canceled). To the best of the authors' knowledge, necessary and sufficient conditions characterizing all the actions of disturbances that can be decoupled from the output while preserving stability are not available. An exception is [24], where the problem is solved for classes of nonlinear systems admitting a strict-feedback structure.

The proposed methodology is then applied to the sampled-data context, that is, when measurements of the output (or of the state) are available only at some time instants and the control is piecewise constant over the sampling period [25, 26]. In this context, the problem under study is even more crucial because of the additional unstable zero dynamics intrinsically induced by sampling [27]. As a consequence, the minimum-phase property of a given nonlinear continuous-time system is not preserved by its sampled-data equivalent [28,29,30,31]. To overcome these issues, several solutions based on different sampling procedures have been proposed [32,33,34,35,36]. Among these, the first one was based on multi-rate sampling, in which the control signal is sampled faster (say r times) than the measured variables. This sampling procedure introduces further degrees of freedom and prevents the appearance of the unstable sampling zero dynamics while preserving the continuous-time relative degree [28, 37].

In the sampled-data framework, we show how multi-rate sampling can be suitably exploited to characterize all disturbances whose effect can be decoupled by feedback at all sampling instants. In this context, sampling induces a more conservative design, which requires the disturbance to be measured and piecewise constant over the sampling period. Related works in the sampled-data and linear contexts have been carried out in [38, 39] under minimum-phase assumptions.

The paper is organized as follows. The classical disturbance-decoupling problem is recalled in Sect. 2, while the problem is formulated in Sect. 3. The underlying idea of the proposed approach is discussed in Sect. 4. The solution to the problem for LTI systems is provided in Sect. 5, and the main result is stated in Sect. 6. The case of sampled-data systems is detailed in Sect. 7, while a simulated example on the TORA system is given in Sect. 8. Section 9 concludes the paper with some highlights on future perspectives and current work.

Notations and definitions: All the functions and vector fields defining the dynamics are assumed smooth and complete over the respective definition spaces. \(M_U\) (resp. \(M_U^I\)) denotes the space of measurable and locally bounded functions \(u: \mathbb {R} \rightarrow U\) (resp. \(u: I \rightarrow U\), \(I \subset \mathbb {R}\)) with \(U \subseteq \mathbb {R}\). \(\mathcal {U}_\delta \subseteq M_U\) denotes the set of piecewise constant functions over time intervals of fixed length \(\delta \in ]0, T^*[\), i.e., \(\mathcal {U}_\delta = \{ u \in M_U\ \text {s.t.} \ u(t) = u_k, \forall t \in [k\delta , (k+1)\delta [; k \ge 0\}\). Given a vector field f, \(\mathrm {L}_f\) denotes the Lie derivative operator, \(\mathrm {L}_f = \sum _{i= 1}^n f_i(\cdot )\nabla _{x_i}\) with \(\nabla _{x_i} := \frac{\partial }{\partial x_i}\), while \(\nabla = (\nabla _{x_1}, \dots , \nabla _{x_n})\). Given two vector fields f and g, \(ad_f g = [f, g]\) and, iteratively, \(ad_{f}^i g = [f, ad_f^{i-1}g]\). The Lie exponent operator is denoted by \(e^{\mathrm {L}_f}\) and defined as \(e^{\mathrm {L}_f}:= \text {Id} + \sum _{i \ge 1}\frac{\mathrm {L}_f^i}{i!}\). A function \(R(x,\delta )\) is said to be of order \(\delta ^p\) (\(p \ge 1\)), written \(R(x,\delta )= O(\delta ^p)\), if, whenever it is defined, it can be written as \(R(x, \delta ) = \delta ^{p-1}\tilde{R}(x, \delta )\) and there exist a function \(\theta \in \mathcal {K}_{\infty }\) and \(\delta ^* >0\) such that \(\forall \delta \le \delta ^*\), \(| \tilde{R} (x, \delta )| \le \theta (\delta )\). We denote by \(B_\epsilon (x_0)\) the ball centered at \(x_0 \in \mathbb {R}^n\) with radius \(\epsilon > 0\).
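The Lie exponent notation can be made concrete with a small example that does not come from the paper. The Python sketch below evaluates the truncated series \(e^{\delta \mathrm{L}_f}\text{Id}\) for the hypothetical scalar vector field \(f(x) = x^2\), whose flow \(x_0/(1 - \delta x_0)\) is known in closed form. Polynomials are stored as ascending coefficient lists, and all helper names are illustrative.

```python
# Truncated Lie series e^{delta L_f} Id for a scalar polynomial vector field.
# Polynomials are ascending coefficient lists: [c0, c1, c2] = c0 + c1 x + c2 x^2.

def poly_mul(p, q):
    out = [0.0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

def poly_der(p):
    return [i * c for i, c in enumerate(p)][1:] or [0.0]

def poly_eval(p, x):
    return sum(c * x ** i for i, c in enumerate(p))

def lie_derivative(f, phi):
    """L_f phi = f(x) * d(phi)/dx for a scalar vector field f."""
    return poly_mul(f, poly_der(phi))

def lie_exponent(f, x0, delta, order):
    """Sum_{i=0}^{order} delta^i / i! * (L_f^i Id)(x0), i.e. the truncated flow."""
    term, factorial, total = [0.0, 1.0], 1.0, 0.0   # L_f^0 Id = x
    for i in range(order + 1):
        if i > 0:
            term = lie_derivative(f, term)
            factorial *= i
        total += delta ** i / factorial * poly_eval(term, x0)
    return total

f = [0.0, 0.0, 1.0]                          # hypothetical f(x) = x^2
approx = lie_exponent(f, x0=0.5, delta=0.1, order=20)
exact = 0.5 / (1 - 0.1 * 0.5)                # exact flow of x' = x^2 after time delta
print(approx, exact)
```

Here \(\mathrm{L}_f^i \text{Id} = i!\, x^{i+1}\), so the series is geometric and converges for \(|\delta x_0| < 1\). Truncating at order 1 gives the Euler step \(x_0 + \delta f(x_0)\).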

2 Classical DDP for linear and nonlinear systems

In the sequel, we investigate the problem of characterizing the perturbations that can be decoupled under feedback for a given plant of the form

\[ \dot{x} = f(x) + g(x)u + p(x)w \tag{1a} \]
\[ y = h(x) = Cx \tag{1b} \]

with \(x \in \mathbb {R}^n, u \in \mathbb {R}, y \in \mathbb {R}\) and \(w\in \mathbb {R}\) being an external disturbance. We refer to this problem as the disturbance decouplability problem (DDP), a revisited form of classical disturbance decoupling.

Consider first the case of an LTI system of the form

\[ \begin{aligned} \dot{x} &= Ax + Bu + Pw \\ y &= Cx \end{aligned} \tag{2} \]

where P defines a family of disturbance actions acting on the system. The following result concerning DDP for LTI systems is recalled here from [19].

Proposition 1

([19]) Let the system (2) be controllable with relative degree \(r\le n\). Disturbance decoupling is solvable for all actions of disturbances such that P verifies the inclusion

\[ \operatorname{Im} P \subseteq V^* \tag{3} \]

with \(V^*\) being the maximal (A,B)-invariant subspace contained in \(\ker C\), given by

\[ V^* = \ker T^*, \qquad T^* = \begin{pmatrix} C \\ CA \\ \vdots \\ CA^{r-1} \end{pmatrix}. \tag{4} \]

The feedback ensuring output-disturbance decoupling is given by

\[ u = \frac{v - CA^{r}x}{CA^{r-1}B}. \tag{5} \]

Whenever P satisfies (3), the feedback (5) solves DDP and, by construction, makes the closed-loop dynamics maximally unobservable. To see this, introduce the coordinate transformation

\[ \begin{pmatrix} \zeta \\ \eta \end{pmatrix} = \begin{pmatrix} C \\ CA \\ \vdots \\ CA^{r-1} \\ T_2 \end{pmatrix} x \]

with \(T_2\) such that \(T_2 B = 0\) and the transformation is nonsingular. This puts the closed-loop system in the so-called normal form

\[ \dot{\zeta} = \hat{A}\zeta + \hat{B}v \tag{6a} \]
\[ \dot{\eta} = Q\eta + R\zeta + T_2 P w \tag{6b} \]
\[ y = \hat{C}\zeta \]

for a suitable matrix R, with \((\hat{A}, \hat{B})\) in Brunovsky form and \(\hat{C} = (1\ \mathbf {0})\). Accordingly, (6b) is the component of the system that is made unobservable under feedback, and it coincides with the zero dynamics when \(w \equiv 0\). Since \(\sigma (Q)\) coincides with the zeros of (2), closed-loop stability is guaranteed if, and only if, (2) is minimum phase (i.e., \(\sigma (Q)\subset \mathbb {C}^-\)). If this is not the case, the control law (5) makes the feedback system unstable. Moreover, condition (3) is necessary and sufficient for the solvability of DDP (regardless of stability), but it is also conservative. It relies on generating maximal unobservability by canceling all the zeros of (2) and making \(V^*\) feedback invariant.
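In the LTI case, condition (3) can be checked without computing \(V^*\) explicitly. With relative degree r, \(\operatorname{Im} P \subseteq V^*\) amounts to \(CA^iP = 0\) for \(i = 0, \dots, r-1\). The Python sketch below computes r and tests this condition. The helper names and the third-order data (canonical form with \(\mathbf{a} = (1, -1, 2)\) and \(C = (2\ 1\ 0)\)) are hypothetical. The single zero of this example is at \(-2\); the example is only meant to exercise condition (3).

```python
# Minimal check of the classical LTI decoupling condition Im P ⊆ V*,
# with V* = ker col(C, CA, ..., CA^{r-1}), i.e. C A^i P = 0 for i < r.

def row_times(c, A):
    """Row vector c times square matrix A."""
    n = len(A)
    return [sum(c[i] * A[i][j] for i in range(n)) for j in range(n)]

def dot(c, v):
    return sum(x * y for x, y in zip(c, v))

def relative_degree(A, B, C):
    """Smallest r >= 1 such that C A^{r-1} B != 0."""
    row = list(C)
    for r in range(1, len(A) + 1):
        if dot(row, B) != 0:
            return r
        row = row_times(row, A)
    raise ValueError("C A^k B = 0 for all k < n: relative degree undefined")

def ddp_solvable(A, B, C, P):
    """True iff C A^i P = 0 for i = 0, ..., r - 1."""
    row = list(C)
    for _ in range(relative_degree(A, B, C)):
        if dot(row, P) != 0:
            return False
        row = row_times(row, A)
    return True

# Hypothetical data: companion form with a = (1, -1, 2) and C = (2 1 0).
A = [[0, 1, 0], [0, 0, 1], [-1, 1, -2]]
B = [0, 0, 1]
C = [2, 1, 0]
print(relative_degree(A, B, C))            # 2
print(ddp_solvable(A, B, C, [1, -2, 4]))   # True: this P spans V*
print(ddp_solvable(A, B, C, [0, 0, 1]))    # False: P = B reaches y at order r
```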

In the nonlinear context, similar arguments hold. From now on, when dealing with nonlinear systems, all properties are meant to hold locally unless explicitly specified. Assume that (1) has relative degree \(r\le n\) at the origin (or, for brevity, relative degree r), that is,

\[ \mathrm{L}_g \mathrm{L}_f^{i} h(x) = 0, \quad i = 0, \dots, r-2, \qquad \mathrm{L}_g \mathrm{L}_f^{r-1} h(0) \neq 0, \]

for all x in a neighborhood of the origin and with \(h(x) = Cx\). The existence of a solution to DDP is then recalled from [9, Proposition 4.6.1].

Proposition 2

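([9]) Suppose the system (1) has relative degree \(r \le n\). DDP is solvable for all \(p: \mathbb {R}^n \rightarrow \mathbb {R}^n\) verifying

\[ \mathrm{L}_p \mathrm{L}_f^{i} h(x) = 0, \qquad i = 0, \dots, r-1. \]

In this case, the DDP feedback is given by

\[ u = \frac{v - \mathrm{L}_f^{r} h(x)}{\mathrm{L}_g \mathrm{L}_f^{r-1} h(x)}. \tag{7} \]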

Remark 1

Along the lines of the linear case, the result above can be interpreted in a differential-geometric fashion by stating that DDP is solvable for all the actions of disturbances verifying the relation

\[ p(x) \in \varDelta^*(x) \]

with

\[ \varDelta^*(x) = \bigcap_{i=0}^{r-1} \ker \mathrm{d}\mathrm{L}_f^{i} h(x) \tag{8} \]

being the maximal involutive distribution that is controlled invariant for (1) and contained in \(\ker \mathrm {d} h(x)\).

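Whenever DDP is solvable, one deduces the normal form associated with (1) by introducing

\[ \zeta = \begin{pmatrix} h(x) \\ \mathrm{L}_f h(x) \\ \vdots \\ \mathrm{L}_f^{r-1} h(x) \end{pmatrix}, \qquad \eta = \phi_2(x) \]

with \(\phi _2(x)\) such that \(\nabla \phi _2(x) g(x) = 0\). In the new coordinates and under the feedback (7), (1) rewrites as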

with \(C = ( 1 \ \mathbf {0})\), and (9b) being the dynamics that is made unobservable under feedback, which coincides, when \(\zeta \equiv 0\), with the zero dynamics. Thus, a necessary condition for DDP with stability to be solved by (7) is that the zero dynamics be asymptotically stable. If this is not the case, the aforementioned feedback induces instability independently of the disturbance, because it inverts the unstable component of the dynamics. This prevents the fulfillment of design specifications such as output regulation or tracking with boundedness (or input-to-state stability) of the residual internal dynamics.

To summarize, necessary and sufficient conditions for solving DDP are available in both the linear and nonlinear settings. However, they do not take stability into account, since they are generally based on generating maximal unobservability via the cancelation of the zero dynamics. In what follows, we present new conditions for the solvability of the disturbance-decoupling problem for linear and nonlinear dynamics that also guarantee stability.

3 Problem settlement

We consider nonlinear input-affine dynamics with linear output map of the form (1) under the following standing assumptions:

1. when \(w = 0\), the dynamics (1a) is feedback linearizable [9, Theorem 4.2.3];
2. the system (1) has relative degree \(r \le n\) and is partially minimum phase in the sense of the following definition.

Definition 1

Consider a non-minimum-phase nonlinear system (1) whose LTM at the origin (2) has zeros given by the roots of a non-Hurwitz polynomial N(s); we say that (1) is partially minimum phase if there exists a factorization \(N(s) = N_1(s) N_2(s)\) such that \(N_2(s)\) is a non-constant Hurwitz polynomial.
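Definition 1 can be checked numerically once the zeros of the LTM are known. The Python sketch below builds the maximal factorization by collecting the open-left-half-plane zeros into \(N_2(s)\) and all remaining zeros into \(N_1(s)\). It is a sketch under stated assumptions: the zeros are given as input (for instance from a root finder), complex zeros come in conjugate pairs, and the helper names and the example \(N(s) = (s-1)(s+2)(s+3)\) are hypothetical.

```python
# Maximal factorization N(s) = N1(s) N2(s) from the zeros of N(s).
# Coefficients are returned in ascending order: [c0, c1, ...] = c0 + c1 s + ...

def poly_from_roots(roots, lead=1.0):
    """Real coefficients of lead * prod(s - z); complex zeros must come in conjugate pairs."""
    coeffs = [complex(lead)]
    for z in roots:
        # multiplication by (s - z): new_k = old_{k-1} - z * old_k
        coeffs = ([-z * coeffs[0]]
                  + [coeffs[k - 1] - z * coeffs[k] for k in range(1, len(coeffs))]
                  + [coeffs[-1]])
    return [round(c.real, 12) for c in coeffs]

def maximal_factorization(zeros, lead=1.0):
    """N2 collects the zeros with negative real part, N1 all the others."""
    stable = [z for z in zeros if complex(z).real < 0]
    others = [z for z in zeros if complex(z).real >= 0]
    return poly_from_roots(others, lead), poly_from_roots(stable)

def partially_minimum_phase(zeros):
    """Definition 1: N(s) is not Hurwitz, but a non-constant Hurwitz factor N2(s) exists."""
    re = [complex(z).real for z in zeros]
    return any(x >= 0 for x in re) and any(x < 0 for x in re)

zeros = [1, -2, -3]                        # N(s) = (s - 1)(s + 2)(s + 3)
N1, N2 = maximal_factorization(zeros)
print(N1, N2)                              # [-1.0, 1.0] [6.0, 5.0, 1.0]
print(partially_minimum_phase(zeros))      # True
```

Conjugate pairs share the same real part, so the split never separates them and both factors keep real coefficients.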

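The LTM at the origin associated with (1) is of the form (2) and is controllable because (1a) is assumed feedback linearizable. Without loss of generality, we assume that (2) is in the controllable canonical form, that is,

\[ A = \begin{pmatrix} 0 & 1 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & 1 \\ -a_0 & -a_1 & \cdots & -a_{n-1} \end{pmatrix}, \quad B = \begin{pmatrix} 0 \\ \vdots \\ 0 \\ 1 \end{pmatrix}, \quad C = \begin{pmatrix} b_0 & \cdots & b_{n-r} & \mathbf{0} \end{pmatrix} \tag{10} \]

with \(\mathbf {a} = (a_0 \ \dots \ a_{n-1})\) being the row vector of the coefficients of the associated characteristic polynomial, and with relative degree coinciding, at least locally, with r.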

Remark 2

If (A, B, C) is not in the controllable canonical form (10), one preliminarily applies to (1) the linear transformation

\[ z = Tx, \qquad T = \begin{pmatrix} \gamma \\ \gamma A \\ \vdots \\ \gamma A^{n-1} \end{pmatrix} \]

with \(\gamma = \begin{pmatrix} \mathbf {0}&1 \end{pmatrix} \begin{pmatrix} B&A B&\dots &A^{n-1}B \end{pmatrix}^{-1}\), which transforms the system into the required form.
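The transformation of Remark 2 can be computed in exact rational arithmetic. The Python sketch below takes \(\gamma\) as the last row of the inverse controllability matrix and stacks \(\gamma, \gamma A, \dots, \gamma A^{n-1}\). The helper names and the 2×2 data are hypothetical. For this example, \(TAT^{-1}\) turns out to be a companion matrix with last row (2, 5), i.e., \(-\mathbf{a}\) for the characteristic polynomial \(s^2 - 5s - 2\) of A.

```python
from fractions import Fraction

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def inverse(M):
    """Gauss-Jordan inverse over the rationals (raises StopIteration if M is singular)."""
    n = len(M)
    aug = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(M)]
    for col in range(n):
        piv = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[piv] = aug[piv], aug[col]
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def canonical_transform(A, B):
    """T = col(gamma, gamma A, ..., gamma A^{n-1}), gamma = (0 ... 0 1)(B AB ... A^{n-1}B)^{-1}."""
    n = len(A)
    cols, v = [], [[Fraction(b)] for b in B]          # v = A^k B as a column
    for _ in range(n):
        cols.append([row[0] for row in v])
        v = matmul(A, v)
    R = [[cols[j][i] for j in range(n)] for i in range(n)]   # controllability matrix
    gamma = [inverse(R)[-1]]                               # last row of R^{-1}
    T = []
    for _ in range(n):
        T.append(gamma[0])
        gamma = matmul(gamma, A)
    return T

A = [[1, 2], [3, 4]]                         # hypothetical data
B = [1, 0]
T = canonical_transform(A, B)
print(matmul(matmul(T, A), inverse(T)))      # companion form with last row (2, 5)
print(matmul(T, [[b] for b in B]))           # (0, 1)^T
```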

In this setting, we look for all disturbances that can be decoupled from the output under feedback while preserving stability of (1). Namely, given the triplet (f, g, h), we characterize the class of disturbances that can be decoupled from the output under feedback while guaranteeing stability of the internal dynamics. In other words, we seek the maximal subspace of (1) that can be made unobservable under feedback with stability, and onto which all suitably characterized disturbances can be constrained to act. From now on, we refer to this problem as the Disturbance Decouplability Problem with Stability (DDP-S).

First, the underlying idea of the approach we propose is recalled from [10] in the LTI case. Then, the result is stated for linear time-invariant and nonlinear systems.

4 Partial zero-dynamics cancelation

Let us start by discussing how partial cancelation of the zero dynamics can be used to assign the dynamics under feedback. To this end, let (2) be the LTM at the origin of (1) when \(p(\cdot ) \equiv 0\). Since (A, B) is controllable, the transfer function of the system is given by

\[ W(s) = C(sI - A)^{-1}B = \frac{N(s)}{D(s)} \]

with \(N(s) = b_0 + b_1 s + \dots + b_{m} s^m\) and \( D(s) = a_0 + a_1 s + \dots + a_{n-1}s^{n-1} + s^n\), and relative degree \(\hat{r} = n-m\).

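Given any factorization of the numerator \(N(s) = N_1(s)N_2(s)\) and fixed D(s), the dummy output \(y_i = C_i x\) with \(C_i = (b_0^i \dots b_{m_i}^i \ \mathbf {0})\) corresponds to the transfer function having

\[ N_i(s) = b_0^i + b_1^i s + \dots + b_{m_i}^i s^{m_i} \]

(\(i = 1,2\)) as numerator and relative degree \(r_i=n-m_i\) (\(i = 1,2\)). Denote by \(x_1\) the first component of x in the canonical form (10) and by \(\mathrm {d} = \frac{\mathrm {d}}{\mathrm {d}t}\) the time-derivative operator. Accordingly, the outputs y, \(y_1\) and \(y_2\) are related by the differential forms

\[ y = N(\mathrm{d})\, x_1, \qquad y_i = N_i(\mathrm{d})\, x_1, \quad i = 1, 2, \]

so that, for \(j \ne i\),

\[ y = N_j(\mathrm{d})\, y_i. \]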

Remark 3

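The feedback

\[ u = F_i x + v, \qquad F_i = -\frac{C_i A^{r_i}}{C_i A^{r_i - 1} B} \]

transforms (2) into a system with closed-loop transfer function

\[ W_i^{cl}(s) = \frac{b^i_{m_i} N_j(s)}{s^{r_i}}, \qquad j \neq i. \]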

The feedback \(u = F_i x + v\) places \(n - r_i\) eigenvalues of the system at the zeros of \(N_i(s)\) and the remaining \(r_i\) ones at 0. Hence, closed-loop stabilization can be achieved via a further feedback v if and only if \(N_i(s)\) is Hurwitz. This argument is the core idea of assigning the dynamics of the system via feedback through the cancelation of the stable zeros only. Accordingly, if N(s) is not Hurwitz (i.e., \(N_j(s)\) has zeros with nonnegative real part), the closed-loop system still has non-stable zeros. These play an important role in the filtering action but do not affect closed-loop stability. In conclusion, closed-loop stabilization of any controllable linear system can be pursued via partial zero cancelation. Starting from a suitable factorization of the polynomial defining the zeros, this is achieved by defining a dummy output with respect to which the system is minimum phase.
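The eigenvalue assignment of Remark 3 can be checked in the canonical form (10), where the closed-loop characteristic polynomial is read directly from the last row of \(A + BF_i\). The Python sketch below assumes that \(F_i\) is the standard input–output linearizing gain for \(y_i\), \(F_i = -C_iA^{r_i}/(C_iA^{r_i-1}B)\). It verifies that \(\det(sI - A - BF_i) = s^{r_i}N_i(s)/b^i_{m_i}\). The data \(\mathbf{a} = (1, 2, 3, 4)\) and \(N_2(s) = 6 + 5s + 2s^2\), as well as the helper names, are hypothetical.

```python
from fractions import Fraction

def companion(a):
    """Canonical form (10): shift structure with last row -a; B = e_n."""
    n = len(a)
    A = [[int(j == i + 1) for j in range(n)] for i in range(n - 1)]
    return A + [[-x for x in a]]

def row_times(c, A):
    n = len(A)
    return [sum(c[i] * A[i][j] for i in range(n)) for j in range(n)]

def poly_mul(p, q):
    out = [0] * (len(p) + len(q) - 1)
    for i, x in enumerate(p):
        for j, y in enumerate(q):
            out[i + j] += x * y
    return out

def cancelation_gain(a, Ci):
    """F_i = -C_i A^{r_i} / (C_i A^{r_i - 1} B); returns (F_i, r_i)."""
    A, row = companion(a), list(Ci)
    for r in range(1, len(a) + 1):
        if row[-1] != 0:                 # C_i A^{r-1} B is the last entry (B = e_n)
            gain = Fraction(row[-1])
            return [-Fraction(x) / gain for x in row_times(row, A)], r
        row = row_times(row, A)
    raise ValueError("C_i A^k B = 0 for all k < n")

def closed_loop_charpoly(a, F):
    """Ascending coefficients of det(sI - A - B F): the last row of A + B F is -(a - F)."""
    return [x - f for x, f in zip(a, F)] + [1]

a = [1, 2, 3, 4]                          # hypothetical open-loop denominator D(s)
N2 = [6, 5, 2]                            # N_2(s) = 6 + 5 s + 2 s^2, Hurwitz
C2 = N2 + [0]                             # dummy output row (b_0^2 b_1^2 b_2^2 0)
F2, r2 = cancelation_gain(a, C2)
target = [Fraction(c, N2[-1]) for c in poly_mul([0] * r2 + [1], N2)]
print(r2, closed_loop_charpoly(a, F2) == target)   # 2 True
```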

5 DDP-S for LTI systems

Consider the LTI system (2) with relative degree \(r < n\), and assume it is partially minimum phase. Based on the arguments developed in the previous section, the result below characterizes the actions of disturbances that can be decoupled from the output under feedback with stability. In doing so, we show that the problem admits a solution if the disturbance can be constrained onto the largest sub-dynamics of (2) that can be rendered unobservable under feedback while preserving stability. In other words, the problem is solvable if and only if the action of disturbances to be decoupled is contained in the unobservable subspace generated by canceling only the stable zeros of (2).

Theorem 1

Consider the system (2), controllable, with relative degree \(r<n\) and partially minimum phase. Denote by \(N(s) = b_0 + b_1 s + \dots + b_{n-r} s^{n-r} \) the non-Hurwitz polynomial identifying the zeros of (2). Consider the maximal factorization \(N(s) = N_1(s) N_2(s)\) with

\[ N_1(s) = b_0^1 + b_1^1 s + \dots + b_{r_2-r}^1 s^{r_2-r}, \qquad N_2(s) = b_0^2 + b_1^2 s + \dots + b_{m_2}^2 s^{m_2} \]

such that \(N_2(s)\) is a Hurwitz polynomial of degree \(m_2 = n-r_2\), and introduce \(C_2 = (b_0^2 \dots b_{m_2}^2 \ \mathbf {0})\). Then, DDP-S admits a solution for the system (2) for all P verifying

\[ \operatorname{Im} P \subseteq V_s \]

with \(V_s \subseteq V^*\), \(V^*\) as in (4) and, for \(r_2 = n - m_2\),

\[ V_s = \ker T_s, \qquad T_s = \begin{pmatrix} C_2 \\ C_2 A \\ \vdots \\ C_2 A^{r_2-1} \end{pmatrix}. \]

Proof

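It suffices to show that \(V_s \subseteq V^* \subseteq \ker C\). To this end, one exploits the differential relation \(y = N_1(\mathrm {d})y_2\). Since \(C_2A^{k-1}B = 0\) for all \(k < r_2\) and \(\deg N_1 = r_2 - r < r_2\), this relation yields \(C = \sum_{k=0}^{r_2-r} b_k^1 C_2 A^k\) and hence

\[ CA^{i} = \sum_{k=0}^{r_2-r} b_k^1\, C_2 A^{k+i}, \qquad i = 0, \dots, r-1, \]

where \(k + i \le r_2 - 1\) because \(r_2> r\) by construction. As a consequence, every row of \(T^* = \text{col}(C, CA, \dots, CA^{r-1})\) is a linear combination of the rows of \(T_s\). That is, \(T^* = M T_s\) for a suitable matrix M, so that \(V_s \equiv \ker T_s \subseteq \ker T^* \equiv V^*\). Hence \(V_s \subseteq \ker C\), and all the disturbances that can be made independent of the output with stability are such that \(\text {Im} P \subseteq V_s\). \(\square \)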
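The key identity of the proof, \(C = \sum_k b_k^1 C_2A^k\) (the row form of \(y = N_1(\mathrm{d})y_2\)), can be checked numerically in the canonical form (10). The Python sketch below applies \(N_1(\mathrm{d})\) to the dummy output row \(C_2\) through right multiplication by A and compares the result with the coefficients of \(N_1(s)N_2(s)\). Since \(\deg N_1 < r_2\), only derivatives of \(y_2\) that do not involve u appear, so the result should not depend on \(\mathbf{a}\). The helper names and numerical data are hypothetical.

```python
def companion(a):
    """Canonical form (10): shift structure with last row -a."""
    n = len(a)
    return [[int(j == i + 1) for j in range(n)] for i in range(n - 1)] + [[-x for x in a]]

def row_times(c, A):
    n = len(A)
    return [sum(c[i] * A[i][j] for i in range(n)) for j in range(n)]

def poly_mul(p, q):
    out = [0] * (len(p) + len(q) - 1)
    for i, x in enumerate(p):
        for j, y in enumerate(q):
            out[i + j] += x * y
    return out

def apply_polynomial_of_d(Nj, Ci, A):
    """Row vector sum_k b_k^j C_i A^k, i.e. the output map of N_j(d) y_i (deg N_j < r_i)."""
    out, row = [0] * len(A), list(Ci)
    for b in Nj:                          # ascending coefficients b_0^j, b_1^j, ...
        out = [o + b * x for o, x in zip(out, row)]
        row = row_times(row, A)
    return out

N1, N2 = [-1, 1], [6, 5, 1]               # N(s) = (s - 1)(s^2 + 5 s + 6), hypothetical
C2 = N2 + [0]                             # n = 4, r_2 = 2, r = 1
for a in ([1, 2, 3, 4], [-7, 0, 5, 1]):   # two arbitrary denominators
    C = apply_polynomial_of_d(N1, C2, companion(a))
    print(C, C == poly_mul(N1, N2))       # [-6, 1, 4, 1] True
```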

Remark 4

From the result above, it is clear that the problem is not solvable if \(\{ s \in \mathbb {C} \text { s.t. } N(s) = 0\} \subset \mathbb {C}^+\). This is the case whenever the system (2) is not partially minimum phase and only the trivial factorization with \(N_2(s) = 1\) holds. This pathological situation also includes the case \(r = n-1\), corresponding to a single zero of (2) lying in the right half of the complex plane.

Remark 5

The previous result shows that, whenever (2) is partially minimum phase and DDP-S is solvable, the range of disturbances that can be decoupled under feedback with stability has smaller dimension than for the standard DDP recalled in Sect. 2, since \(\text {dim}(V_s) = n - r_2 < n - r = \text {dim} (V^*)\). This is because the disturbance is constrained to act only on the stable, lower-dimensional component of the zero dynamics of (2), which evolves according to the zeros of the Hurwitz factor \(N_2(s)\) of N(s).
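A small numerical illustration of Remark 5 follows; the data and helper names are hypothetical. For n = 3 and \(N(s) = (s-1)(s+2)\), the classical subspace \(V^*\) is two-dimensional, while \(V_s\), associated with the Hurwitz factor \(N_2(s) = s + 2\), is one-dimensional. The direction (1, 2, 0) lies in \(V^*\), so classical DDP is solvable for it, but it does not lie in \(V_s\). Its decoupling would therefore require canceling the unstable zero at +1.

```python
from fractions import Fraction

def row_times(c, A):
    n = len(A)
    return [sum(c[i] * A[i][j] for i in range(n)) for j in range(n)]

def stacked(Crow, A, k):
    """T = col(Crow, Crow A, ..., Crow A^{k-1}); its kernel is the unobservable subspace."""
    rows, row = [], list(Crow)
    for _ in range(k):
        rows.append(row)
        row = row_times(row, A)
    return rows

def rank(M):
    """Row rank by Gauss-Jordan elimination over the rationals."""
    M = [[Fraction(x) for x in row] for row in M]
    rk = 0
    for c in range(len(M[0])):
        piv = next((i for i in range(rk, len(M)) if M[i][c] != 0), None)
        if piv is None:
            continue
        M[rk], M[piv] = M[piv], M[rk]
        for i in range(len(M)):
            if i != rk and M[i][c] != 0:
                factor = M[i][c] / M[rk][c]
                M[i] = [x - factor * y for x, y in zip(M[i], M[rk])]
        rk += 1
        if rk == len(M):
            break
    return rk

def in_kernel(T, P):
    return all(sum(x * p for x, p in zip(row, P)) == 0 for row in T)

A = [[0, 1, 0], [0, 0, 1], [-1, 1, -2]]      # hypothetical companion matrix, n = 3
T_star = stacked([-2, 1, 1], A, 1)           # N(s) = (s - 1)(s + 2), r = 1
T_s = stacked([2, 1, 0], A, 2)               # N2(s) = s + 2, r2 = 2
print(3 - rank(T_star), 3 - rank(T_s))       # dim V* = 2, dim V_s = 1
for P in ([1, -2, 4], [1, 2, 0]):
    print(P, in_kernel(T_star, P), in_kernel(T_s, P))
```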

Remark 6

The previous result can be reformulated by stating that DDP-S for (2) is solvable if, and only if, the classical DDP is solvable for the minimum-phase system

\[ \begin{aligned} \dot{x} &= Ax + Bu + Pw \\ y_2 &= C_2 x \end{aligned} \]

deduced from (2) and having input–output transfer function \(W_2(s) = \frac{N_2(s)}{D(s)}\).

Corollary 1

If DDP-S is solvable for (2), then the disturbance-output decoupling feedback is given by

\[ u = \frac{v - C_2A^{r_2}x}{C_2A^{r_2-1}B}. \tag{15} \]

Proof

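First, introduce the coordinate transformation

\[ \begin{pmatrix} \zeta \\ \eta \end{pmatrix} = \begin{pmatrix} C_2 \\ C_2 A \\ \vdots \\ C_2 A^{r_2-1} \\ T_2 \end{pmatrix} x \]

with \(T_2\) such that \(T_2 B = 0\) and the transformation is nonsingular. Now exploit the differential relation

\[ y = N_1(\mathrm{d})\, y_2 = \sum_{k=0}^{r_2-r} b_k^1\, \mathrm{d}^k y_2 \]

with, in the new coordinates, \(y_2 = (1 \ \mathbf {0})\zeta \) and \(\mathrm {d}\zeta _i = \dot{\zeta }_i = \zeta _{i+1}\) for all \(i = 1, \dots , r_2-r\). The system (2) under the feedback (15) then takes the form

\[ \dot{\zeta} = \hat{A}\zeta + \hat{B}v \tag{16a} \]
\[ \dot{\eta} = Q_2\eta + R_2\zeta + T_2 P w \tag{16b} \]
\[ y = \hat{C}\zeta \]

for a suitable matrix \(R_2\), with \((\hat{A}, \hat{B})\) in Brunovsky form and \(\hat{C} = \begin{pmatrix} b_0^1&\dots&b_{r_2 - r}^1&\mathbf {0} \end{pmatrix}\), which shows that the disturbance-decoupling problem is solved. As far as stability is concerned, by construction \(\sigma (Q_2) \equiv \{s \in \mathbb {C} \text { s.t. } N_2(s) = 0 \} \subset \mathbb {C}^-\), so that the unobservable dynamics (16b) are asymptotically stable. \(\square \)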
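As a numerical sanity check of Corollary 1 (a sketch, not the authors' code), we simulate with RK4 the hypothetical third-order example used above: \(N(s) = (s-1)(s+2)\), \(N_2(s) = s + 2\), and disturbance direction \(P = (1, -2, 4)\) spanning \(V_s\). The control law is the input–output linearizing feedback with respect to \(y_2 = C_2x\), which we take as the form of (15), combined with the stabilizing term \(v = -2y_2 - 3\dot{y}_2\). The output obtained with \(w(t) = \sin t\) should coincide with the disturbance-free one up to round-off, while the internal state remains bounded.

```python
import math

# Hypothetical third-order example in canonical form (10), a = (1, -1, 2).
A = [[0, 1, 0], [0, 0, 1], [-1, 1, -2]]
B = [0, 0, 1]
C = [-2, 1, 1]        # N(s) = (s - 1)(s + 2): non-minimum phase, r = 1
C2 = [2, 1, 0]        # N2(s) = s + 2: dummy output, r2 = 2
P = [1, -2, 4]        # disturbance direction spanning V_s

def row_times(c, M):
    return [sum(c[i] * M[i][j] for i in range(len(M))) for j in range(len(M))]

def dot(c, v):
    return sum(x * y for x, y in zip(c, v))

C2A = row_times(C2, A)
C2A2 = row_times(C2A, A)
gain = dot(C2A, B)    # C2 A^{r2-1} B

def control(x):
    """Linearizing feedback w.r.t. y2, with v = -2 y2 - 3 dy2/dt."""
    v = -2 * dot(C2, x) - 3 * dot(C2A, x)
    return (v - dot(C2A2, x)) / gain

def rhs(t, x, w):
    u = control(x)
    return [dot(A[i], x) + B[i] * u + P[i] * w(t) for i in range(3)]

def simulate(w, x0=(1.0, 0.0, 0.0), h=0.01, steps=1000):
    """Classical RK4; returns the sampled output y = C x and the final state."""
    x, t, ys = list(x0), 0.0, []
    for _ in range(steps):
        k1 = rhs(t, x, w)
        k2 = rhs(t + h / 2, [xi + h / 2 * k for xi, k in zip(x, k1)], w)
        k3 = rhs(t + h / 2, [xi + h / 2 * k for xi, k in zip(x, k2)], w)
        k4 = rhs(t + h, [xi + h * k for xi, k in zip(x, k3)], w)
        x = [xi + h / 6 * (a + 2 * b + 2 * c + d)
             for xi, a, b, c, d in zip(x, k1, k2, k3, k4)]
        t += h
        ys.append(dot(C, x))
    return ys, x

y_free, x_free = simulate(lambda t: 0.0)
y_dist, x_dist = simulate(math.sin)
print(max(abs(p - q) for p, q in zip(y_free, y_dist)))   # round-off level
print(max(abs(v) for v in x_dist))                       # internal state stays bounded
```

With these choices, the closed-loop eigenvalues are \(-1, -2\) (from the \(y_2\) dynamics) and \(-2\) (the canceled zero of \(N_2\)). The disturbance only excites the invariant direction P, which lies in \(\ker C\).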

Remark 7

The transfer function of the closed-loop system (16) is provided by

which emphasizes that the feedback (15) cancels only the stable zeros of (2), while leaving the remaining ones unchanged; these perform a filtering action that does not compromise the required input–output behavior.

6 DDP-S for nonlinear systems

Consider now the nonlinear system (1) under the standing assumptions detailed in Sect. 3. We now investigate the problem of characterizing the actions of disturbances that can be locally decoupled from the output evolutions while ensuring closed-loop stability, even though (1) is non-minimum phase. To this end, we first recall the auxiliary lemma below from [10].

Lemma 1

Consider the nonlinear system (1) and suppose that its LTM at the origin is controllable, in the form (10), and non-minimum phase with relative degree r. Denote by \(N(s) = b_0 + b_1 s + \dots + b_{n-r} s^{n-r} \) the non-Hurwitz polynomial identifying the zeros of the LTM of (1) at the origin. Consider the maximal factorization \(N(s) = N_1(s) N_2(s)\)

such that \(N_2(s)\) is a Hurwitz polynomial of degree \(n-r_2\). Then, the system

\[ \begin{aligned} \dot{x} &= f(x) + g(x)u + p(x)w \\ y_2 &= h_2(x) = C_2 x \end{aligned} \tag{18} \]

with \(C_2 = \begin{pmatrix} b_0^2&b_1^2&\dots&b_{n-r_2}^2&\mathbf {0} \end{pmatrix}\) has relative degree \(r_2>r\) and is locally minimum phase.

Proof

By computing the linear approximation at the origin of (18), one gets that the matrices \((A, B, C_2)\) are in the form (10), so that the entries of \(C_2\) are the coefficients of \(N_2(s)\), the numerator of the corresponding transfer function. By construction, \(N_2(s)\) is a Hurwitz polynomial of degree \(n-r_2\). It follows that, in a neighborhood of the origin, the relative degree of (18) is \(r_2\). Furthermore, the linear approximation of the zero dynamics of (18) coincides with the zero dynamics of its LTM at the origin, whose eigenvalues are the roots of \(N_2(s)\). Hence (18) is locally minimum phase. \(\square \)

Remark 8

It is a matter of computation to verify that the zero dynamics of (18) locally coincide with the stable component of the zero dynamics of (1).

In what follows, we show that DDP-S is solvable for the non-minimum-phase system (1) for all disturbances for which classical DDP is solvable for the auxiliary minimum-phase system (18), in the sense of Proposition 2. In other words, solvability of DDP-S for (1) is equivalent to solvability of DDP for (18).

Theorem 2

Consider the nonlinear system (1) and suppose that its LTM at the origin is controllable, in the form (10), and non-minimum phase with relative degree r. Denote by \(N(s) = b_0 + b_1 s + \dots + b_{n-r} s^{n-r} \) the non-Hurwitz polynomial identifying the zeros of the LTM of (1) at the origin. Consider the maximal factorization \(N(s) = N_1(s) N_2(s)\)

such that \(N_2(s)\) is a Hurwitz polynomial of degree \(n-r_2\), and deduce the dummy output \(y_2 = h_2(x) = C_2 x\) verifying \(y = N_1(\mathrm {d}) y_2\). Then, DDP-S is solvable for all disturbances for which DDP is solvable for the minimum-phase system (18); namely, DDP-S is solvable for all \(p: \mathbb {R}^n \rightarrow \mathbb {R}^n\) such that

\[ \mathrm{L}_p \mathrm{L}_f^{i} h_2(x) = 0, \qquad i = 0, \dots, r_2 - 1. \tag{20} \]

In this case, the DDP-S feedback is given by

\[ u = \frac{v - \mathrm{L}_f^{r_2} h_2(x)}{\mathrm{L}_g \mathrm{L}_f^{r_2-1} h_2(x)}. \tag{21} \]

Proof

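First, let us assume that DDP is solvable for the system (18). Consider the closed-loop system (18) under (25) and introduce the coordinate transformation

\[ \zeta = \begin{pmatrix} h_2(x) \\ \mathrm{L}_f h_2(x) \\ \vdots \\ \mathrm{L}_f^{r_2-1} h_2(x) \end{pmatrix}, \qquad \eta = \phi_2(x) \tag{22} \]

with \(\phi _2(x) \) such that \(\nabla \phi _2(x)g(x) =0\), under which it exhibits the normal form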

The zero dynamics of (18) is given by

which is locally asymptotically stable by Lemma 1. Consider now the original system (1) under the feedback (21). Applying the transformation (22) to (1) yields, since \(y = N_1(\mathrm {d}) y_2\),

It turns out that the effect of the disturbance is confined to the dynamics (24b), which is made unobservable under feedback. For \(\zeta = 0\) and \(w = 0\), these dynamics coincide with the zero dynamics of (18), which are locally asymptotically stable by Lemma 1. This concludes the proof. \(\square \)

Remark 9

The previous result shows that, even if a nonlinear system is non-minimum phase, a suitable factorization of the output can be performed on its LTM at the origin so that output-disturbance decoupling can be pursued while preserving stability of the internal dynamics. This is achieved by inverting (i.e., making unobservable) a lower-dimensional component of the zero dynamics of (1) that is known to possess an asymptotically stable equilibrium at the origin.

Remark 10

As in the standard case, Theorem 2 can be interpreted in a differential-geometric fashion by stating that DDP-S is solvable for all the actions of disturbances verifying the relation

\[ p(x) \in \varDelta_s(x) \]

with

\[ \varDelta_s(x) = \bigcap_{i=0}^{r_2-1} \ker \mathrm{d}\mathrm{L}_f^{i} h_2(x) \]

being such that \(\varDelta _s(x) \subseteq \varDelta ^*(x) \subseteq \ker \mathrm {d}h(x) \) for \(\varDelta ^*(x)\) as in (8), and with the feedback (25) being the so-called friend of \(\varDelta _s\).

Once stability of the unobservable dynamics is guaranteed by the decoupling feedback (25), the residual component of the control action can be designed so as to meet further control specifications with boundedness (or local input-to-state stability) of the residual dynamics (24b). In addition, by fixing in (25) the residual control as

with \(c_i\) for \(i = 1, \dots , r_2-1\) being the coefficients of a Hurwitz polynomial, one can conclude [9, Appendix B.2] that for each \(\varepsilon >0\) there exist \(\delta _\varepsilon > 0\) and \(K>0\) such that

and, thus, boundedness of (1) in closed loop.
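The coefficients \(c_i\) only need to define a Hurwitz polynomial. A standard way to check a candidate choice without computing roots is the Routh–Hurwitz test. The Python sketch below is a generic implementation of that test (not specific to this paper) in exact rational arithmetic, with coefficients given in descending powers of s; the function name is our own.

```python
from fractions import Fraction

def is_hurwitz(coeffs):
    """Routh-Hurwitz test; coeffs = [a_n, ..., a_1, a_0] (descending powers of s).

    Returns True iff all roots lie in the open left half-plane, i.e. iff every
    entry of the first column of the Routh array is nonzero with the same sign.
    """
    c = [Fraction(x) for x in coeffs]
    if not c or c[0] == 0:
        raise ValueError("leading coefficient must be nonzero")
    if c[0] < 0:
        c = [-x for x in c]
    n = len(c) - 1
    prev = c[0::2]
    curr = c[1::2] + [Fraction(0)] * (len(prev) - len(c[1::2]))
    for _ in range(n):                       # rows s^{n-1}, ..., s^0
        if curr[0] <= 0:                     # sign change or zero pivot
            return False
        nxt = [(curr[0] * prev[j + 1] - prev[0] * curr[j + 1]) / curr[0]
               for j in range(len(prev) - 1)] + [Fraction(0)]
        prev, curr = curr, nxt
    return True

print(is_hurwitz([1, 3, 2]))         # s^2 + 3 s + 2 = (s + 1)(s + 2): True
print(is_hurwitz([1, 1, 2, 8]))      # s^3 + s^2 + 2 s + 8: False
```

A zero in the first column is treated as a failure. This is consistent with the strict requirement, since such a polynomial always has roots on the imaginary axis or in the right half-plane.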

Remark 11

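Whenever the disturbance w is available for measurement, condition (20) in Theorem 2 can be weakened to

\[ \mathrm{L}_p \mathrm{L}_f^{i} h_2(x) = 0, \qquad i = 0, \dots, r_2 - 2. \]

In this case, the DDP-S feedback is given by

\[ u = \frac{v - \mathrm{L}_f^{r_2} h_2(x) - \mathrm{L}_p \mathrm{L}_f^{r_2-1} h_2(x)\, w}{\mathrm{L}_g \mathrm{L}_f^{r_2-1} h_2(x)}, \]

which is aimed at rejecting the effect of the disturbance on the input–output dynamics.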
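In the LTI case, the role of the measured-disturbance term in Remark 11 can be seen directly. We assume, as in the standard measured-disturbance setting, that the rejecting feedback adds \(-(C_2A^{r_2-1}P / C_2A^{r_2-1}B)\,w\) to the input. This replaces P with an effective direction that lies in \(V_s\). The Python sketch below uses the same hypothetical third-order data as above, with \(P = (1, -2, 0)\): this P satisfies the relaxed condition but not (20).

```python
from fractions import Fraction

def row_times(c, A):
    n = len(A)
    return [sum(c[i] * A[i][j] for i in range(n)) for j in range(n)]

def dot(c, v):
    return sum(x * y for x, y in zip(c, v))

A = [[0, 1, 0], [0, 0, 1], [-1, 1, -2]]     # hypothetical example, n = 3
B = [0, 0, 1]
C2, r2 = [2, 1, 0], 2                        # dummy output, N2(s) = s + 2
P = [1, -2, 0]                               # hypothetical disturbance direction

C2A = row_times(C2, A)
relaxed = dot(C2, P) == 0                    # C2 A^i P = 0 only for i <= r2 - 2
strict = relaxed and dot(C2A, P) == 0        # the analogue of (20): also i = r2 - 1
k_w = -Fraction(dot(C2A, P), dot(C2A, B))    # feedforward gain on the measured w
P_eff = [p + b * k_w for p, b in zip(P, B)]  # disturbance direction seen in closed loop
print(relaxed, strict, P_eff)
print(dot(C2, P_eff) == 0 and dot(C2A, P_eff) == 0)   # P_eff lies in V_s
```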

7 DDP-S under sampling

In this section, we address the problem of characterizing the actions of disturbances that can be decoupled from the output under sampling, at all sampling instants \(t = k\delta \), with \(\delta >0\) denoting the sampling period. To this end, we introduce the following requirements on the system (1):

1. the feedback is piecewise constant over the sampling period of length \(\delta >0\), that is, \(u \in \mathcal {U}_\delta \);
2. measurements are available only at the sampling instants, that is, \(y(t) = h(x(k\delta ))\) for \(t \in [k\delta , (k+1)\delta [\);
3. the disturbance is piecewise constant over the sampling period, that is, \(w(t) = w_k\) for \(t \in [k\delta , (k+1)\delta [\).

7.1 Sampled-data systems: from single to multi-rate sampling

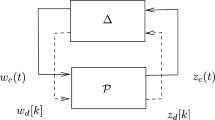

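In this framework, the dynamics of (1) at the sampling instants is described by the single-rate sampled-data equivalent model

\[ \begin{aligned} x_{k+1} &= F^{\delta}(x_k, u_k, w_k) \\ y_k &= h(x_k) \end{aligned} \tag{26} \]

with \(x_{k} := x(k\delta )\), \(y_{k} := y(k\delta )\), \(u_{k} := u(k\delta )\), \(w_k := w(k\delta )\), \(h(x) = Cx\) and

\[ F^{\delta}(x, u, w) = e^{\delta \mathrm{L}_{f + gu + pw}} \mathrm{Id}\big|_{x}. \]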

Remark 12

We stress that requiring the disturbance to be a piecewise constant signal might be rather unrealistic. This choice is nonetheless made for the sake of the sampled-data design. If w were a continuously varying signal, (26) would be affected by all the time derivatives of the perturbation (i.e., \(\dot{w}\), \(\ddot{w}\), ...) computed at \(t = k\delta \), which in general prevents an exact solution of DDP-S. In that scenario, the sampled-data design can be pursued approximately: the feedback strategy presented below is applied using only the samples of the disturbance, neglecting the derivative terms.

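Assuming for the time being \(w = 0\), it is a matter of computation to verify that

\[ h(x_{k+1}) = e^{\delta \mathrm{L}_{f + g u_k}} h\big|_{x_k} = h(x_k) + \sum_{i \ge 1} \frac{\delta^i}{i!} \mathrm{L}_{f + g u_k}^{i} h(x_k) \]

so that

\[ \frac{\partial h(x_{k+1})}{\partial u_k} = \frac{\delta^{r}}{r!}\, \mathrm{L}_g \mathrm{L}_f^{r-1} h(x_k) + O(\delta^{r+1}) \neq 0 \]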

Thus, the relative degree of the sampled-data equivalent model of (1) always drops to \(r_d = 1\), independently of the continuous-time one. As a consequence, whenever \(r > 1\), sampling induces an additional zero dynamics of dimension \(r -1\) (the so-called sampling zero dynamics [27, 28]), which is in general unstable. Hence, disturbance decoupling under single-rate feedback computed on the sampled-data equivalent model (26) cannot be achieved with internal stability, even when the original continuous-time system (1) is minimum phase. In addition, denoting by \(r_w \ge 0\) the first integer such that

one also gets that, for \(x \in B_\epsilon (0)\)

In general, this implies that measurements of the disturbance at all \(t = k\delta \) are needed to guarantee output-disturbance decoupling under sampling, which makes the problem more conservative.
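The loss of relative degree and the appearance of unstable sampling zeros can be observed on the simplest case: the chain of r integrators \(1/s^r\) under zero-order hold. This example is our own, not from the paper. The Python sketch below computes the numerator of the exact sampled transfer function from the step response \(t^r/r!\). Its leading coefficient \(\delta^r/r!\) is the first sample of the pulse response, \(CB_d \neq 0\), so the sampled relative degree is 1. For r = 3, one of the two sampling zeros lies outside the unit disk.

```python
from fractions import Fraction
from math import comb, factorial
import cmath

def zoh_chain_numerator(r, delta=1):
    """Numerator (coefficients of z^{r-1}, ..., z^0) of the ZOH equivalent of 1/s^r.

    The pulse response is g_k = s(k delta) - s((k-1) delta), with s(t) = t^r / r!
    the step response; multiplying G(z) = sum_k g_k z^{-k} by (1 - z^{-1})^r
    leaves a finite sequence, the numerator over (z - 1)^r.
    """
    delta = Fraction(delta)

    def step(k):
        return (delta * k) ** r / factorial(r)

    g = [step(k) - step(k - 1) for k in range(1, r + 1)]
    binom = [(-1) ** j * comb(r, j) for j in range(r + 1)]
    return [sum(binom[j] * g[i - j] for j in range(i + 1)) for i in range(r)]

num = zoh_chain_numerator(3)           # delta = 1
print(num[0])                          # C B_d = delta^3 / 3! = 1/6, nonzero
a, b, c = (float(x) for x in num)
roots = [(-b + sgn * cmath.sqrt(b * b - 4 * a * c)) / (2 * a) for sgn in (1, -1)]
print(max(abs(z) for z in roots))      # 2 + sqrt(3): outside the unit disk
```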

As far as the first pathology is concerned, it was shown in [29] that multi-rate sampling preserves the relative degree and hence avoids the appearance of the unstable sampling zero dynamics. Accordingly, one sets \(u(t) = u^i_k\) for \(t \in [k\delta + (i-1)\bar{\delta }, k\delta + i\bar{\delta }[\), \(i = 1, \dots , r_2\), and \(y(t) = y_k\) for \(t \in [k\delta , (k+1)\delta [\). The multi-rate equivalent model of order \(r_2\) of (1) then takes the form

\[ x_{k+1} = F^{\bar{\delta}}(\cdot, u_k^{r_2}, w_k) \circ \dots \circ F^{\bar{\delta}}(x_k, u_k^{1}, w_k) \tag{27} \]

where \(\bar{\delta } = \frac{\delta }{r_2}\) and

\[ F^{\bar{\delta}}(x, u, w) = e^{\bar{\delta} \mathrm{L}_{f + gu + pw}} \mathrm{Id}\big|_{x}. \]

7.2 The DDP-S sampled-data feedback

In the sequel, we investigate how multi-rate feedback can be combined with the arguments of Theorem 2 to characterize all disturbances whose effect can be decoupled from the output under multi-rate feedback at all sampling instants \(t = k\delta \), while preserving stability of the internal dynamics. We prove that DDP-S under sampling can be solved via multi-rate feedback under the same hypotheses as in continuous time, plus the possibility of measuring the disturbance at all sampling instants.

Accordingly, the multi-rate feedback solving the problem, \(\underline{u}_k = \underline{\gamma }(\bar{\delta }, x_k, \underline{v}_k, w_k)\) with \(\underline{u} = \text {col}(u^1, \dots , u^{r_2})\) and \(\underline{v} = \text {col}(v^1, \dots , v^{r_2})\), is designed so as to ensure decoupling with respect to the dummy output \(y_2 = C_2 x\) and, in turn, with respect to the original one \(y = Cx\). This is achieved by considering the sampled-data dynamics (27) with the augmented dummy output \(Y_{2k} = H_2(x_k)\), composed of \(y_2 = C_2 x\) and its first \(r_2-1\) derivatives; namely, we consider

with \(\bar{\delta } = \frac{\delta }{r_2}\) and output vector

\[ Y_{2k} = H_2(x_k), \qquad H_2(x) = \begin{pmatrix} h_2(x) \\ \mathrm{L}_f h_2(x) \\ \vdots \\ \mathrm{L}_f^{r_2-1} h_2(x) \end{pmatrix}, \]

which has, by construction, vector relative degree \(r^{\delta } = (1, \dots , 1)\). Accordingly, the following results can be stated by referring to [37, 41], where these concepts are introduced and similar manipulations are detailed with analogous motivations.
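The chain of r integrators shows why multi-rate sampling yields the vector relative degree \((1, \dots, 1)\). For this linear sketch (our own assumption), \(H_2(x) = x\), the output and its first \(r-1\) derivatives. With r sub-steps of length \(\bar{\delta} = \delta/r\), the matrix mapping \((u^1, \dots, u^r)\) to \(x_{k+1}\) is invertible. Every component of \(H_2(x_{k+1})\) can therefore be assigned at the sampling instant, whereas a single-rate input enters only through the single column \(B_d(\delta)\). The helper names are hypothetical.

```python
from fractions import Fraction
from math import factorial

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def matvec(M, v):
    return [sum(a * b for a, b in zip(row, v)) for row in M]

def zoh_chain(r, h):
    """Exact ZOH matrices of the chain of r integrators over a step h (A^r = 0)."""
    A = [[Fraction(int(j == i + 1)) for j in range(r)] for i in range(r)]
    B = [Fraction(int(i == r - 1)) for i in range(r)]
    Ad = [[Fraction(0)] * r for _ in range(r)]
    Bd = [Fraction(0)] * r
    Ak = [[Fraction(int(i == j)) for j in range(r)] for i in range(r)]   # A^0
    for k in range(r):                   # finite exponential series
        Ad = [[x + h ** k / factorial(k) * y for x, y in zip(ra, rk)]
              for ra, rk in zip(Ad, Ak)]
        Bd = [x + h ** (k + 1) / factorial(k + 1) * y for x, y in zip(Bd, matvec(Ak, B))]
        Ak = matmul(Ak, A)
    return Ad, Bd

def multirate_input_matrix(r, delta):
    """Columns A_d^{r-i} B_d, i = 1..r, for r sub-steps of length delta / r."""
    Ad, Bd = zoh_chain(r, Fraction(delta) / r)
    cols, v = [], Bd
    for _ in range(r):
        cols.insert(0, v)                # u^r multiplies B_d, u^1 multiplies A_d^{r-1} B_d
        v = matvec(Ad, v)
    return [[cols[j][i] for j in range(r)] for i in range(r)]

def det(M):
    """Determinant by Gaussian elimination over the rationals."""
    M = [row[:] for row in M]
    n, d = len(M), Fraction(1)
    for c in range(n):
        p = next((i for i in range(c, n) if M[i][c] != 0), None)
        if p is None:
            return Fraction(0)
        if p != c:
            M[c], M[p] = M[p], M[c]
            d = -d
        d *= M[c][c]
        for i in range(c + 1, n):
            f = M[i][c] / M[c][c]
            M[i] = [x - f * y for x, y in zip(M[i], M[c])]
    return d

print(det(multirate_input_matrix(2, 2)))    # 1
print(det(multirate_input_matrix(3, 1)))    # nonzero: x_{k+1} is fully assignable
```

For r = 2 the determinant equals \(\bar{\delta}^3\). It vanishes only as \(\bar{\delta} \to 0\), consistently with the full-rank Jacobian argument used in the proof below.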

Theorem 3

Consider the dynamics (1) under the hypotheses of Theorem 2 with \(y_2 = C_2 x\) being the dummy output with respect to which (1a) is minimum phase. Assume the disturbance \(w(t) = w_k\) for \(t \in [k\delta , (k+1)\delta [\) is measured at all sampling instants \(t = k\delta \) and let (27) be the multi-rate sampled-data equivalent model of (1a) of order \(r_2\). Then, DDP-S is solvable under sampling for all piecewise constant disturbances such that \(p: \mathbb {R}^n \rightarrow \mathbb {R}^n\) verifies

If that is the case, the feedback ensuring DDP-S is the unique solution \(\underline{u} = \underline{u}^{\bar{\delta }} = \text {col}(\gamma ^1(\bar{\delta }, x_k, \underline{v}_k, w_k)\), \(\dots , \gamma ^{r_2}(\bar{\delta }, x_k, \underline{v}_k, w_k))\) to the Input–Output Matching (I–OM) equality

for all \(x_k = x(k\delta )\), \(v(t) = v(k\delta ) := v_k\), \(\underline{v}_k = (v_k, \dots , v_k)\) as \(t \in [k\delta , (k+1)\delta [\), \(k\ge 0\) and with

Such a solution exists and is uniquely defined as a series expansion in powers of \(\bar{\delta }\) around the continuous-time feedback \(\gamma (x, v, w)\); i.e., for \(i = 1, \dots , r_2\)

Proof

First, we rewrite (29) as a formal series equality in the unknown \(\underline{u}^{\bar{\delta }}\); i.e.,

with, for \(i = 1, \dots , r_2\),

Thus one looks for \(\underline{u} = \underline{\gamma }(\bar{\delta }, x, v, w)\) satisfying

where each term rewrites as \(S^{\delta }_{i}(x, \underline{u}^{\bar{\delta }}, w) = \sum _{j\ge 0} \delta ^j S_{ij}(x, \underline{u}^{\bar{\delta }}, w) \) with

and \(\frac{\varDelta _j}{j!} = (\frac{j^{r_2-j +1} -(j-1)^{r_2-j+1}}{j!} \ \frac{ (j-1)^{r_2-j +1} - (j-2)^{r_2-j+1}}{j!} \dots \ \frac{1}{j!} ).\) It results that

solves (33) as \(\bar{\delta } \rightarrow 0\). More precisely, as \(\bar{\delta } \rightarrow 0\), one gets the equation

with \(\varDelta = (\varDelta _1^{\top }, \dots , \varDelta _{r_2}^{\top })^{\top }\) and \(D=\text {diag}(r_2^{r_2}, \dots , r_2)\). Furthermore, as \(\bar{\delta }\rightarrow 0\), the Jacobian of \(S^{\bar{\delta }}\) with respect to \(\underline{u}^{\bar{\delta }}\)

is full rank by definition of the continuous-time relative degree \(r_2\) and because \(\varDelta \) is invertible (see [29] for details). Hence, by the Implicit Function Theorem, there exists \(T^* > 0\) such that, for all \(\delta \in ]0, T^*[\), (29) admits a unique solution of the form (31) around the continuous-time solution (30). Disturbance decoupling and stability of the zero dynamics are ensured by multi-rate sampling, as proven in [29], combined with the arguments of Theorem 2. Indeed, under the coordinate transformation (22), the system (27) with output \(y = Cx\) rewrites as

with

with \((\hat{A}, \hat{B})\) in Brunovsky form. Accordingly, the sampled-data unobservable dynamics (35b) satisfy

which is Schur stable as \(Q_2 = \nabla _\zeta q_2(0, 0)\) provided in (23) is Hurwitz by Lemma 1. \(\square \)
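As a quick numerical sanity check of this last step, the sketch below assumes, as for a sampled linear system, that the linear part of (35b) at the origin is governed by \(e^{\delta Q_2}\). The eigenvalues of \(e^{\delta Q_2}\) are \(e^{\delta \lambda }\), with \(\lambda \) an eigenvalue of \(Q_2\), and \(|e^{\delta \lambda }| = e^{\delta \, \mathrm{Re}\, \lambda } < 1\) whenever \(\mathrm{Re}\, \lambda < 0\), so a Hurwitz \(Q_2\) yields a Schur matrix for every \(\delta > 0\). The matrix used below is hypothetical, not the \(Q_2\) of the TORA example.

```python
import cmath
import math


def mat_mul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def expm2(m, terms=30):
    """Truncated Taylor series of exp(m) for a 2x2 matrix (adequate for small norms)."""
    result = [[1.0, 0.0], [0.0, 1.0]]
    term = [[1.0, 0.0], [0.0, 1.0]]
    for k in range(1, terms):
        term = mat_mul(term, m)                      # m^k / (k-1)! ...
        term = [[x / k for x in row] for row in term]  # ... divided by k gives m^k / k!
        result = [[result[i][j] + term[i][j] for j in range(2)] for i in range(2)]
    return result


def eig2(m):
    """Eigenvalues of a 2x2 matrix from its trace and determinant."""
    tr = m[0][0] + m[1][1]
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    disc = cmath.sqrt(tr * tr - 4.0 * det)
    return ((tr + disc) / 2.0, (tr - disc) / 2.0)


def spectral_radius(m):
    return max(abs(lam) for lam in eig2(m))


Q2 = [[0.0, 1.0], [-2.0, -3.0]]   # hypothetical Hurwitz matrix, eigenvalues -1 and -2
delta = 0.1
Phi = expm2([[delta * x for x in row] for row in Q2])
print(spectral_radius(Phi), math.exp(-delta))
```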

The feedback solution of the equality (29) ensures matching, at all sampling instants \(t = k\delta \), of the output evolutions of (18), which are decoupled from the disturbance. Moreover, by matching, the sampled-data feedback makes unobservable the stable component of the \((n-r)\)-dimensional zero dynamics of (27) with output \(y = Cx\), which locally coincides with that of the continuous-time original system (1).

7.3 Some computational aspects

The feedback control takes the form of a series expansion in powers of \(\bar{\delta }\). Thus, iterative procedures can be carried out by substituting (31) into (29) and equating the terms with the same powers of \(\bar{\delta }\) (see [41], where explicit expressions for the first terms are given). Unfortunately, only approximate solutions \(\underline{u} = \underline{\gamma }^{[p]}(\bar{\delta }, x, \underline{v}, w)\) can be implemented in practice, through truncations of the series (31) at a finite order p in \(\bar{\delta }\); namely, setting

one gets for \(i = 1, \dots , r_2\)

When \(p = 0\), one recovers the sample-and-hold (or emulated) solution
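The truncation can be sketched as follows. Since the explicit form of (31) is not reproduced here, we assume that each \(u^i\) is a power series in \(\bar{\delta }\) whose coefficients have already been evaluated at the current \((x_k, v_k, w_k)\), with any factorial normalization absorbed into them. Because the expansion is around the continuous-time feedback, the zero-order coefficient of every \(u^i\) is \(\gamma (x_k, v_k, w_k)\), so \(p = 0\) applies the same held value on every sub-interval, i.e., emulation. The function name and coefficient values are hypothetical.

```python
def truncated_multirate_feedback(coeff_table, delta_bar, p):
    """Evaluate u^i = sum_{j=0}^{p} delta_bar^j * c_{i,j} for i = 1, ..., r2.

    coeff_table[i][j] is the (hypothetical) coefficient of delta_bar^j in the
    expansion of u^{i+1}, already evaluated at the current sample.
    """
    inputs = []
    for coeffs in coeff_table:
        if p < 0 or p >= len(coeffs):
            raise ValueError("truncation order p out of range")
        acc = 0.0
        for c in reversed(coeffs[: p + 1]):   # Horner evaluation of the truncated series
            acc = acc * delta_bar + c
        inputs.append(acc)
    return inputs


# r2 = 2: the zero-order coefficient is the continuous-time feedback value,
# identical for both sub-intervals (hypothetical numbers).
table = [[2.0, -3.0, 4.0],
         [2.0, 1.0, -0.5]]
print(truncated_multirate_feedback(table, 0.1, p=0))  # emulation: same value on both sub-intervals
print(truncated_multirate_feedback(table, 0.1, p=1))  # one correcting term
```

Each additional order only requires one more coefficient per sub-interval input, which is why low orders such as \(p = 1\) remain cheap to implement.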

The preservation of performance under approximate solutions has been discussed in [42], where it is shown that, although global asymptotic stability is lost, input-to-state stability (ISS) and practical global asymptotic stability can still be deduced in closed loop, including during the inter-sampling periods.

8 The TORA example

Let us consider the dynamics of the so-called Translational Oscillator with Rotating Actuator (TORA for short; see [43] and footnote 2), described by

with \(\varepsilon \in ]0, 1[\); \(z_1 = x_1 - \varepsilon \sin x_3\) and \(z_2 = x_2 - \varepsilon x_4 \cos x_3 \) the displacement and velocity of the platform; \(x_3\) and \(x_4\) the angle and angular velocity of the rotor carrying the mass; and u the control torque applied to the rotor. A direct computation shows that (37) has relative degree \(r = 1\) and is not minimum phase, as the LTM at the origin has transfer function

Suppose now that a disturbance \(w \in \mathbb {R}\) affects (37) through the vector

yielding the perturbed dynamics

A direct computation shows that the classical DDP of Sect. 2 is solvable for (38), but without preserving internal stability, as a consequence of the instability of the zero dynamics. According to the arguments of Sect. 6, DDP with stability is still solvable when considering the auxiliary output

with T computed as in Remark 2 and given by

A direct computation shows that, with respect to the new output (39), the system has relative degree \(r_2 = 2\) and is minimum phase, the transfer function of the corresponding LTM at the origin being

Moreover, DDP with stability is solvable, as the relative degree condition (20) is met, so that the feedback (25) with

fulfills the requirements. Furthermore, setting \(v = -k_1 h_2(x) - k_2 L_f h_2(x) \), one gets \(y(t) \rightarrow 0\) as \(t \rightarrow \infty \) whenever \(k_1, k_2 >0\).
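The role of the gains can be checked on the decoupled dummy-output dynamics. Assuming, as the relative degree \(r_2 = 2\) suggests, that the feedback (25) reduces them to \(\ddot{y}_2 = v\) with \(y_2 = h_2(x)\) and \(\dot{y}_2 = L_f h_2(x)\), the above choice of v gives \(\ddot{y}_2 + k_2 \dot{y}_2 + k_1 y_2 = 0\), whose characteristic polynomial \(s^2 + k_2 s + k_1\) is Hurwitz exactly when \(k_1, k_2 > 0\). The sketch computes its roots and integrates the loop numerically; the gain values and initial conditions are hypothetical.

```python
import cmath


def closed_loop_poles(k1, k2):
    """Roots of s^2 + k2*s + k1 (closed loop y2'' = -k1*y2 - k2*y2')."""
    disc = cmath.sqrt(k2 * k2 - 4.0 * k1)
    return ((-k2 + disc) / 2.0, (-k2 - disc) / 2.0)


def is_hurwitz(k1, k2):
    """True if both closed-loop poles lie in the open left half-plane."""
    return all(p.real < 0.0 for p in closed_loop_poles(k1, k2))


def simulate(k1, k2, y0=1.0, dy0=0.0, t_end=10.0, dt=1e-3):
    """Semi-implicit Euler integration of y2'' = v, v = -k1*y2 - k2*y2'."""
    y, dy = y0, dy0
    for _ in range(int(round(t_end / dt))):
        dy += dt * (-k1 * y - k2 * dy)
        y += dt * dy
    return y, dy


print(closed_loop_poles(2.0, 3.0))   # poles -1 and -2
print(is_hurwitz(2.0, 3.0), is_hurwitz(1.0, 0.0), is_hurwitz(-1.0, 3.0))
print(simulate(2.0, 3.0))            # both y2 and y2' decay towards zero
```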

To solve the problem under sampling, the multi-rate feedback \(\underline{\gamma }^{[1]}(\bar{\delta }, x, \underline{v}, w)\) in (36) is readily deduced for \(p = 1\) with

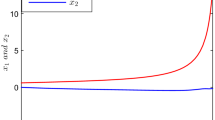

Figures 1, 2 and 3 depict simulations of the aforementioned situations under the continuous-time feedback (25) and the sampled-data feedback (36) with one correcting term (i.e., \(p = 1\)), for several values of the sampling period and for the following simulation scenarios:

1. the full continuous-time case as proposed in Sect. 6, where the disturbance also varies continuously over time (in red);

2. the ideal sampled-data framework proposed in Sect. 7, where \(w(t) = w_d(t)\) with \(w_d(t) = w_k\) for \(t \in [k\delta , (k+1)\delta [\) (in blue);

3. the realistic sampled-data case, in which the disturbance varies continuously over time (and is not piecewise constant), while the feedback is computed from samples of the disturbance at all sampling instants \(t = k\delta \) (in cyan);

4. the emulation-based control scheme, where the continuous-time feedback is implemented through mere sample-and-hold devices with no further sampled-data redesign (in magenta).

The disturbance is implemented as white noise randomly generated in MATLAB/Simulink.
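Scenarios 2 and 3 differ only in the disturbance seen by the plant: in scenario 2 it is the held signal \(w_d(t) = w_k\), in scenario 3 the continuously varying w, while in both cases the feedback uses the samples \(w_k = w(k\delta )\). A minimal sketch of the hold follows, with a hypothetical smooth signal standing in for the white noise used in the simulations; the helper names are illustrative only.

```python
import math


def sample_and_hold(signal, delta):
    """Return w_d with w_d(t) = signal(k*delta) for t in [k*delta, (k+1)*delta[."""
    def held(t):
        # small tolerance so that sampling instants hit by round-off
        # (e.g. 0.3 / 0.1 = 2.999...) are mapped to the right k
        k = math.floor(t / delta + 1e-9)
        return signal(k * delta)
    return held


def w(t):
    """Hypothetical continuously varying disturbance (stand-in for the noise)."""
    return math.sin(5.0 * t)


delta = 0.1
w_d = sample_and_hold(w, delta)
for t in (0.0, 0.05, 0.12, 0.25):
    print(t, w(t), w_d(t))   # scenario 3 plant sees w(t); scenario 2 plant sees w_d(t)
```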

Figures 1, 2 and 3 show that, in the continuous-time scenario, the proposed feedback computed via partial dynamic inversion succeeds in isolating the output of the original system from the effect of the disturbance: the output goes to zero, while the zero dynamics exhibit an acceptable behavior and still converge to the origin despite the perturbation.

For the sampled-data system, the simulations show that, although only an approximate feedback is implemented, performance improves notably with respect to mere emulation. Moreover, even when the disturbance varies continuously, the approximate sampled-data feedback yields promising performance, which appears even better than in the ideal scenario (i.e., when the disturbance affecting the system is piecewise constant). This is not surprising: in the latter case, the relative degree of the sampled-data output with respect to the disturbance falls to 1, which compromises the closed-loop behavior. This empirically observed behavior deserves a further formal and general study. Finally, as \(\delta \) increases, the proposed multi-rate strategy still yields more than acceptable performance even when emulation fails to stabilize the input–output evolutions (Fig. 3).
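The drop of the relative degree invoked above can be seen on a minimal linear example (our own illustration, not the TORA model): a double integrator with output \(y = x_1\) and a disturbance entering through \(E = (0, 1)^\top \), so that \(CE = 0\) and \(CAE = 1\), i.e., relative degree 2 with respect to w in continuous time. If w is held constant over each sampling period, the sampled model is \(x_{k+1} = e^{A\delta }x_k + E_d w_k\) with \(E_d = \int _0^\delta e^{As}E\, ds\), and \(CE_d = \delta ^2/2 \ne 0\), so w affects the sampled output after a single step. The function names are hypothetical.

```python
def zoh_disturbance_vector(a, e, delta, terms=20):
    """E_d = sum_{k>=0} delta^(k+1)/(k+1)! * A^k E: effect over one period of a
    disturbance held constant on [k*delta, (k+1)*delta[ for x' = A x + E w."""
    n = len(e)
    result = [0.0] * n
    vec = list(e)              # A^k E, starting from k = 0
    coeff = delta              # delta^(k+1) / (k+1)!
    for k in range(terms):
        result = [r + coeff * v for r, v in zip(result, vec)]
        vec = [sum(a[i][j] * vec[j] for j in range(n)) for i in range(n)]
        coeff *= delta / (k + 2)
    return result


def dot(c, v):
    return sum(ci * vi for ci, vi in zip(c, v))


A = [[0.0, 1.0], [0.0, 0.0]]   # double integrator (illustrative)
E = [0.0, 1.0]                 # disturbance enters the second equation
C = [1.0, 0.0]                 # output y = x1
AE = [sum(A[i][j] * E[j] for j in range(2)) for i in range(2)]

delta = 0.1
E_d = zoh_disturbance_vector(A, E, delta)
print(dot(C, E), dot(C, AE))   # 0 and 1: continuous-time relative degree 2 w.r.t. w
print(dot(C, E_d))             # delta^2 / 2 != 0: sampled-data relative degree 1
```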

9 Conclusions and perspectives

In this paper, new conditions have been derived that characterize all the disturbances that can be locally decoupled from the output evolutions of nonlinear systems while preserving internal stability. The approach is based on a local factorization of the polynomial defining the zeros of the corresponding linear tangent model at the origin and, thus, on partial cancelation of the dynamics. Future work will address the extension of these arguments to the multi-input/multi-output case and a global characterization of the results, possibly combined with input–output stability and related results. Finally, the effect of an approximate sampled-data feedback on continuously perturbed dynamics (as in the third scenario of the reported simulations) deserves further investigation. The study of zeros of sampled-data systems in a purely hybrid context [44] is of paramount interest as well.

Notes

Given three matrices M, N, S of suitable dimensions such that \(M N = S\), one has \(\ker N \subseteq \ker S\) [40], since \(Nx = 0\) implies \(Sx = MNx = 0\).

The output and the disturbance considered here are not meant to be realistic; they serve only to illustrate the proposed methodology.

References

Isidori, A., Krener, A., Gori-Giorgi, C., Monaco, S.: Nonlinear decoupling via feedback: a differential geometric approach. IEEE Trans. Autom. Control 26(2), 331 (1981)

Isidori, A., Byrnes, C.I.: Output regulation of nonlinear systems. IEEE Trans. Autom. Control 35(2), 131 (1990). https://doi.org/10.1109/9.45168

De Luca, A.: Zero Dynamics in Robotic Systems, pp. 68–87. Birkhäuser, Boston, MA (1991)

De Persis, C., Isidori, A.: A geometric approach to nonlinear fault detection and isolation. IEEE Trans. Autom. Control 46(6), 853 (2001). https://doi.org/10.1109/9.928586

Ortega, R., Van der Schaft, A., Castaños, F., Astolfi, A.: Control by interconnection and standard passivity-based control of port-Hamiltonian systems. IEEE Trans. Autom. Control 53(11), 2527 (2008)

Delli Priscoli, F., Isidori, A., Marconi, L., Pietrabissa, A.: Leader-following coordination of nonlinear agents under time-varying communication topologies. IEEE Trans. Control Netw. Syst. 2(4), 393 (2015)

Astolfi, A., Karagiannis, D., Ortega, R.: Nonlinear and Adaptive Control with Applications. Springer, Berlin (2008)

Di Giorgio, A., Pietrabissa, A., Delli Priscoli, F., Isidori, A.: Robust output regulation for a class of linear differential-algebraic systems. IEEE Control Syst. Lett. 2(3), 477 (2018)

Isidori, A.: Nonlinear Control Systems. Springer, Berlin (1995)

Mattioni, M., Hassan, M., Monaco, S., Normand-Cyrot, D.: On partially minimum phase systems and nonlinear sampled-data control. In: 2017 IEEE 56th Annual Conference on Decision and Control (CDC), pp. 6101–6106. IEEE (2017)

Cabecinhas, D., Cunha, R., Silvestre, C.: A nonlinear quadrotor trajectory tracking controller with disturbance rejection. Control Eng. Pract. 26, 1 (2014)

Menini, L., Possieri, C., Tornambè, A.: Sinusoidal disturbance rejection in chaotic planar oscillators. Int. J. Adapt. Control Signal Process. 29(12), 1578 (2015)

Wu, J., Li, J.: Adaptive fuzzy control for perturbed strict-feedback nonlinear systems with predefined tracking accuracy. Nonlinear Dyn. 83(3), 1185 (2016)

Wang, X., Hong, Y., Ji, H.: Distributed optimization for a class of nonlinear multiagent systems with disturbance rejection. IEEE Trans. Cybern. 46(7), 1655 (2016)

Di Giorgio, A., Giuseppi, A., Liberati, F., Ornatelli, A., Rabezzano, A., Celsi, L.R.: On the optimization of energy storage system placement for protecting power transmission grids against dynamic load altering attacks. In: 2017 25th Mediterranean Conference on Control and Automation (MED), pp. 986–992. IEEE (2017)

Shi, S., Xu, S., Li, Y., Chu, Y., Zhang, Z.: Robust predictive scheme for input delay systems subject to nonlinear disturbances. Nonlinear Dyn. 93(3), 1035–1045 (2018)

Yao, X.Y., Ding, H.F., Ge, M.F.: Task-space tracking control of multi-robot systems with disturbances and uncertainties rejection capability. Nonlinear Dyn. 92(4), 1649 (2018)

Lin, X., Dong, H., Yao, X.: Tuning function-based adaptive backstepping fault-tolerant control for nonlinear systems with actuator faults and multiple disturbances. Nonlinear Dyn. 91(4), 2227 (2018)

Willems, J.: Almost invariant subspaces: an approach to high gain feedback design-Part I: almost controlled invariant subspaces. IEEE Trans. Autom. Control 26(1), 235 (1981)

Marino, R., Respondek, W., Van der Schaft, A.: Almost disturbance decoupling for single-input single-output nonlinear systems. IEEE Trans. Autom. Control 34(9), 1013 (1989)

Willems, J.C., Commault, C.: Disturbance decoupling by measurement feedback with stability or pole placement. SIAM J. Control Optim. 19(4), 490 (1981)

Marino, R., Respondek, W., Van der Schaft, A., Tomei, P.: Nonlinear \(H_\infty \) almost disturbance decoupling. Syst. Control Lett. 23(3), 159 (1994)

Zheng, Q., Chen, Z., Gao, Z.: A practical approach to disturbance decoupling control. Control Eng. Pract. 17(9), 1016 (2009)

Isidori, A.: Global almost disturbance decoupling with stability for non minimum-phase single-input single-output nonlinear systems. Syst. Control Lett. 28(2), 115 (1996)

Tanasa, V., Monaco, S., Normand-Cyrot, D.: Backstepping control under multi-rate sampling. IEEE Trans. Autom. Control 61(5), 1208 (2016)

Jia, J., Chen, W., Dai, H., Li, J.: Global stabilization of high-order nonlinear systems under multi-rate sampled-data control. Nonlinear Dyn. 94(4), 2441–2453 (2018)

Åström, K., Hagander, P., Sternby, J.: Zeros of sampled systems. Automatica 20(1), 31 (1984). https://doi.org/10.1016/0005-1098(84)90062-1

Monaco, S., Normand-Cyrot, D.: Zero dynamics of sampled nonlinear systems. Syst. Control Lett. 11(3), 229 (1988). https://doi.org/10.1016/0167-6911(88)90063-1

Monaco, S., Normand-Cyrot, D.: Multirate sampling and zero dynamics: from linear to nonlinear. In: Nonlinear Synthesis, pp. 200–213. Birkhäuser, Boston, MA (1991)

Yuz, J., Goodwin, G.C.: Sampled-Data Models for Linear and Nonlinear Systems. Springer, London (2014)

Goodwin, G.C., Aguero, J.C., Garridos, M.E.C., Salgado, M.E., Yuz, J.I.: Sampling and sampled-data models: the interface between the continuous world and digital algorithms. IEEE Control Syst. 33(5), 34 (2013). https://doi.org/10.1109/MCS.2013.2270403

Kabamba, P.: Control of linear systems using generalized sampled-data hold functions. IEEE Trans. Autom. Control 32(9), 772 (1987)

Hagiwara, T., Yuasa, T., Araki, M.: Stability of the limiting zeros of sampled-data systems with zero-and first-order holds. Int. J. Control 58(6), 1325 (1993)

Ishitobi, M.: Stability of zeros of sampled system with fractional order hold. IEE Proc. Control Theory Appl. 143(3), 296 (1996)

Carrasco, D.S., Goodwin, G.C., Yuz, J.I.: Modified Euler–Frobenius polynomials with application to sampled data modelling. IEEE Trans. Autom. Control 62(8), 3972 (2017). https://doi.org/10.1109/TAC.2017.2650784

Yamamoto, Y., Yamamoto, K., Nagahara, M.: Tracking of signals beyond the Nyquist frequency. In: 2016 IEEE 55th Conference on Decision and Control (CDC), Dec 2016, pp. 4003–4008. IEEE. https://doi.org/10.1109/CDC.2016.7798875

Monaco, S., Normand-Cyrot, D.: Issues on nonlinear digital systems. Semi-Plenary Conf. Eur. J. Control 7(2–3), 160 (2001)

Grizzle, J.W., Shor, M.H.: Sampling, infinite zeros and decoupling of linear systems. Automatica 24(3), 387–396 (1988)

Oloomi, H.M., Sawan, M.E.: High gain sampled data systems: deadbeat strategy, disturbance decoupling and cheap control. Int. J. Syst. Sci. 27(10), 1017 (1996)

Horn, R.A., Johnson, C.R.: Matrix analysis. Cambridge University Press (2012)

Monaco, S., Normand-Cyrot, D.: On Nonlinear Digital Control, vol. 3, pp. 127–153. Chapman & Hall, London (1997)

Mattioni, M., Monaco, S., Normand-Cyrot, D.: Immersion and invariance stabilization of strict-feedback dynamics under sampling. Automatica 76, 78 (2017)

Sepulchre, R., Janković, M., Kokotović, P.: Constructive Nonlinear Control. Springer, New York (1997)

Galeani, S., Possieri, C., Sassano, M.: Zeros and poles of transfer functions for linear hybrid systems with periodic jumps. In: 2017 IEEE 56th Annual Conference on Decision and Control (CDC), pp. 5469–5474. IEEE (2017)

Ethics declarations

Conflicts of interest

The authors declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Partially funded by Université Franco-Italienne/Università Italo-Francese through the VINCI 2016 Mobility Grant.

About this article

Cite this article

Mattioni, M., Hassan, M., Monaco, S. et al. On partially minimum-phase systems and disturbance decoupling with stability. Nonlinear Dyn 97, 583–598 (2019). https://doi.org/10.1007/s11071-019-04999-3

DOI: https://doi.org/10.1007/s11071-019-04999-3