Abstract

The locality preserving projections (LPP) method is a hot dimensionality reduction method in the machine learning field. But the LPP method has the so-called small-sample-size problem, and its performance is unstable when the neighborhood size parameter k varies. In this paper, by theoretical analysis and derivation, a maximum difference criterion for the LPP method is constructed, and then a simple and robust LPP method has been proposed, called Locality Preserving Projections based on the approximate maximum difference criterion (LPPMDC). Compared with the existing approaches to solve the small-sample-size problem of LPP, the proposed LPPMDC method has three superiorities: (1) it has no the small-sample-size problem and can get the better performance, (2) it is robust to neighborhood size parameter k, (3) it has low computation complexity. The experiments are performed on the three face databases: ORL, Georgia Tech, and FERET, and the results demonstrate that LPPMDC is an efficient and robust method.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Recently, as a manifold-based dimensionality reduction method,Locality Preserving Projections (LPP) [1] been proposed and has received active attention from researchers . LPP is the linearization version of the well-known Laplacian eigenmaps (LE) [2]. Based on the nearest-neighbor graph constructed, LPP is designed to seek a linear mapping from the high-dimension data space to a subspace and optimally preserves local neighborhood information. The LPP method and its variants are widely used in various fields, for example, gait recognition [3], surface roughness prediction [4], image and face recognition [5]. Compared with manifold-based dimensionality reduction methods, the classical principal component analysis (PCA) [6] and linear discriminant analysis (LDA) [7, 8] are dimensionality reduction methods to maintain the global structure of the dataset.

Unfortunately, the LPP method has two disadvantages. One is that the LPP method has the so-called small-sample-size (SSS) problem. The reason is that the LPP method will be to solve a generalized eigenvalue problem. However, since the dimensionality of the samples is usually much higher than the number of samples, the matrices of the generalized eigenvalue problem may be singular, which results in that the solving of the equation becomes difficult. That is the so-called small-sample-size (SSS) problem [9].

The PCA + LPP method [9] is the common method to solve the SSS problem, and it is usually referred to as LPP. It firstly uses the PCA method to reduce the dimensionality of the original samples so that the matrices of the generalized eigenvalue problem are non-singular and then uses the LPP method on the reduced sample space. However, while reducing the dimensionality of the original samples, some critical information may also be discarded [10].

Regularization is also a common way to solve the SSS problem. The oldest regularization method for LPP is regularization LPP (RLPP) [11]. Followed by the parametric regularized locality preserving projections method (PRLPP), which regulates features corresponding to small and zero eigenvalues [12]. The latest regularization method is proposed in [13]. There are two versions of this method. One is the TSVD regularization method (LPP+TSVD), and the other is the Tikhonov regularization method (LPP+TR).

Two dimensional locality preserving projection (2DLPP) [14] and its variants can avoid the SSS problem of LPP. These methods are based on the two-dimension image matrices rather than one-dimension image vectors, so they have no the SSS problem. The latest of these methods is the rearranged modular two-dimensional locality preserving projection method (Rm2DLPP) [15].

There are also some new improvements for LPP, such as the locality adaptive preserving projections (LAPP) method [16] and the locality sparsity preserving projections (LSPP) [17].

The other disadvantage is that the performance of LPP is very sensitive to the neighborhood size parameter k. The LPP method firstly needs to construct the nearest-neighbor graph from the manually set local neighborhood. Still, it isn’t easy to select a suitable local neighborhood size. Consequently, the performance of LPP will be greatly different when the neighborhood size varies [10, 18]. And so, when constructing the nearest-neighbor graph, it is worthy for studying how to choose the right neighborhood size parameter k. At this point, the ELPP method has made some improvements with the matrix exponential transformation [10, 18]. However, the performance of ELPP is still unsteady when the neighborhood size varies, which may be seen in the experimental section.

Recently, a matrix exponential discriminant analysis (EDA) [19] has been proposed to solve the SSS problem of the LDA, which is based on the property that the matrix exponential has no zero eigenvalues and thus the singularity of the matrix is avoided. As a general and effective method, the idea of the EDA method is also applied to address the SSS problem of these methods based on manifold. For example, the exponential version of Locality Preserving Projections (ELPP) [10, 18], and the exponential version of Neighborhood Preserving Embedding (ENPE) [20], the exponential version of Discriminant Locality Preserving Projection (EDLPP) [21, 22], the exponential version of Local Discriminant Embedding (ELDE) [23], the exponential version of Semi-supervised Discriminant Embedding (ESDE) [24] and the exponential version of Neighborhood Preserving Discriminant Embedding [25]. However, the main limitation of the matrix exponential method is that the computation time is much longer because the computation complexity of the matrix exponential function is much larger.

In this paper, combining the results of ELPP with the matrix exponential transformation, a maximum difference criterion for the LPP method is constructed. Then a new method, i.e., Locality Preserving Projections based on the Maximum Difference Criterion (LPPMDC), is proposed. Compared with the existing approaches to address the SSS problem of LPP, LPPMDC avoids the SSS problem, has low computation complexity, and importantly, the new method is stable when the neighborhood size varies. The experiments are performed on the public face databases: ORL, Georgia Tech, and FERET, and the results validate the above advantages of LPPMDC.

2 Review of Related Work

2.1 Locality Preserving Projections

Suppose there are m original samples \(\textit{x}_1,\textit{x}_2,...,\textit{x}_m \) in \( \mathbb {R}^n \) . Firstly, LPP constructs a local adjacency graph G. Usually, k nearest neighborhood method is used to construct such a gragh G. Secondly, set the weight \( S_{ij} \) of any two neighbors \( x_{i} \) and \( x_{j} \) in graph G. The weight \( S_{ij} \) is usually defined with heat kernel function:

where t is a parameter. The similarity matrix S is defined as \( S =\big (S_{ij}\big )\). Thirdly, Define the objective function. LPP method aims to design such an objective function: \( x_{i} \) in high-dimensional space \( \mathbb {R}^n \) is mapped into in low-dimensional space \( \mathbb {R}^d \) with the linear \( y _i= W ^\top x _i\) , and making \( y_{i} \) and \( y_{j} \) are close if the nodes \( x_{i} \) and \( x_{j} \) are close. A desired transformation matrix W can be obtained by minimizing the objective function:

where \(X = [x_1,x_2,...,x_m]\), L is the Laplacian matrix, \( L = D - S \) , and D is a diagonal matrix and \( D_{ii} = \sum _j S_{ij} \) . To impose the constraint \( { W ^\top X D X ^\top W } = I\) on the objective function,i.e., Eq. (2), the objective function of LPP becomes:

where \({\text {tr}}(\cdot )\) represents the trace of a matrix. The desired transformation W is formed with the eigenvectors belong to the first few minimum eigenvalues of the equation: \( X L X ^\top w =\lambda X D X ^\top w .\) Denote \( S_L = X L X ^\top \) and \( S_D = X D X ^\top \), then one has:

For the detailed discussion of LPP, please refer to Ref. [1]. Generally, the dimensionality of the samples is much larger than the number of samples, and then the matrices \( S_L \) and \( S_D \) are singular. And then, the solving of Eq. (4) becomes problematic. This is the so-called small-sample-size (SSS) problem, and the LPP method suffers from such difficulty [9]. The other disadvantage of LPP is that the performance of LPP will be greatly different when the neighborhood size varies [10, 18].

2.2 Exponential locality preserving projections

By Section 2.1, the objective function of LPP, i.e., Eq. (3) may be rewritten as:

For solving the SSS problem of LPP, ELPP replaces the matrix \( S_L \) with the matrix exponential \( \exp \left( S_L\right) \) and replaces \( S_D \) with the matrix exponential \( \exp \left( S_D \right) \). Then, Eq. (5) becomes:

Equation (6) is the criterion of ELPP, and it can be reduced to the generalized eigenvalue problem:

Solving Eq. (7), the matrix formed with the d eigenvectors belong to the d smallest eigenvalues order by ascending is the transformation matrix W.

2.3 Matrix Exponential

Let A be an \(n\times n\) square matrix, its matrix exponential is [26]:

where \( I \) is the identity matrix. The matrix exponential \(\exp \big ( A \big )\) has the following properties [19, 20]:

(1) \(\exp \big ( A \big )\) is invertible, and its inverse matrix is

(2) Let \( v _1, v _2,\ldots , v _n\) be eigenvectors of \( A \) that correspond to the eigenvalues \(\lambda _1,\lambda _2,\ldots ,\lambda _n\), then \( v _1, v _2,\ldots , v _n\) are also eigenvectors of \(\exp \big ( A \big )\) that correspond to the eigenvalues \(e^{\lambda _1},e^{\lambda _2},\ldots ,e^{\lambda _n}\).

(3) Let A and B are two n-order square matrices, \( AB \ne BA \) . Let \( AB = BA + \varDelta C \), where \( \varDelta C \) is the error matrix. One has

where \( \varDelta E \) is the error matrix, and the norm of the error matrix \( \varDelta E \) be estimated as:

where \( {{\left\| \cdot \right\| }} \) is a compatible norm. The property (3) and its detailed proof are given in the appendix of Ref. [20].

3 LPP Based on Maximum Difference Criterion

3.1 The Proposed LPPMDC

In this subsection, combining the results of ELPP with the matrix exponential transformation, the maximum difference criterion for LPP is constructed, and then a new LPP method, LPPMDC, is proposed.

With the property (2) of the matrix exponential, the eigenvalue of a matrix exponential always has the form \( \textit{e} ^{\lambda } \) and \( \textit{e}^{\lambda } \geqslant 0 \) , so a matrix exponential has always been a full-rank matrix. Then the matrix \( \exp \left( S_D \right) \) in Eq. (7) is invertible, and Eq. (7) can be rewritten as:

Then, by Eq. (8), one has:

For the matrices \( S_L \) and \( S_D \), one has \( S_L S_D \ne S_D S_L \) . Let \( S_L S_D = S_D S_L + \varDelta C \), where \( \varDelta C \) is the error matrix. Then Eqs. (9) and (10) hold for the matrices \( S_L \) and \( S_D \) , i.e., one has

where \( \varDelta E \) is the error matrix between \( \exp (-S_D)\exp (S_L) \) and \( \exp (S_L - S_D) \), \( {{\left\| \cdot \right\| }} \) is a compatible norm.

Usually, the matrices \( S_L \) and \( S_D \) are normalized, so the error matrix \( \varDelta C \) is a small value matrix, and then the upper bound of the norm of the error matrix \( \varDelta E \) in Eq. (14) is much little. To illustrate the small error, \(\Vert \varDelta C \Vert \) and the upper bound of \(\Vert \varDelta E \Vert \) in Eq. (14) are estimated on the ORL database for the different neighborhood sizes, and the results are listed in Table 1. The first row is the neighborhood size parameter k, the second row is the value of \(\Vert \varDelta C \Vert \) , and the third row is the upper bound of \(\Vert \varDelta E \Vert \) .

From the results in Table 1, the upper bound value of \(\Vert \varDelta E \Vert \), \( B(\Vert \varDelta E \Vert ) \), is much small, so the matrix \( \exp (-S_D)\exp (S_L) \) can be replaced with the matrix \( \exp (S_L - S_D) \). And then, Eq. (12) may be rewritten as:

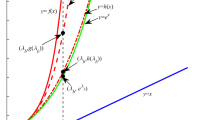

By the property (2) of the matrix exponential, the matrix \( \exp \left( S_L - S_D \right) \) and the matrix \( S_L - S_D \) have the same eigenvectors. So, Eq. (15) and the following Eq. (16) have the same eigenvectors:

Note that the eigenvalue of Eq. (15) has the form \( \lambda = e^{\lambda _0} \), or the eigenvalue of Eq. (16) has the form \( \lambda _0 = ln(\lambda ) \). Without loss of generality, one can still use \( \lambda \) to represent the eigenvalues in Eq. (16), i.e.,

That is to say, with the above matrix exponential transformation, the criterion of LPP can be reduced to the standard eigenvalue problem (17). To solve Eq. (17) and to select the d eigenvector \( w_i(i=1,2,\cdots ,d) \) associated with the smallest d eigenvalues ordered by ascending, and then to construct the matrix \( W = [ w_1,w_2,\cdots , w_d] \) . The matrix W is the optimal linear mapping matrix.

We can define the following criterion of the LPP method:

By linear algebra processing, Eq. (18) can be reduced to Eq. (17). Thus, the solutions of Eq. (17) are the solutions of the criterion Eq. (18). That is, the results of the above matrix exponential transformation can be viewed as a derivation from the criterion Eq. (18), so we can regard Eq. (18) as a new criterion for the LPP method. Obviously Eq. (18) may be viewed as maximizing the difference:

In this view, the proposed method is named Locality Preserving Projections based on the Maximum Difference Criterion (LPPMDC).

3.2 Theoretical Analysis

What are the advantages of the new criterion for LPP and the corresponding LPPMDC method? In this section, with theoretical analysis, it will be seen that the LPPMDC method can avoid the SSS problem, has lower computation cost, and, importantly, is insensitive when the neighborhood size varies.

(1) Has no the SSS problem

LPP has the SSS problem. It is because that the matrix \( S_D \) in Eq. (4) is usually singular, which is proved in ref. [18]. In this paper, the proposed LPPMDC method is based on the criterion Eq. (18) and can be reduced to Eq. (17). Even if the matrix \(S_D\) is singular, Eq. (17) is always solvable, so LPPMDC avoids naturally the SSS problem.

(2) The analysis of sensitivity about the neighborhood size

In this section, the sensitivity of LPP, ELPP and LPPMDC to neighborhood size will be discussed from the perspective of eigenvector perturbation analysis. When the neighborhood size varies, the matrices \( {{S}_{L}} \) and \( {{S}_{D}} \) of the LPP, ELPP, and LPPMDC methods take another two matrices, denoting \( {{\tilde{S}}_{L}} \) and \( {{\tilde{S}}_{D}} \). In mathematics, it may be viewed as that the matrices\( {{S}_{L}} \) and \( {{S}_{D}} \) are perturbed to the matrices \( {{\tilde{S}}_{L}} \) and \( {{\tilde{S}}_{D}} \), respectively. Thus, when the neighborhood size varies, for the LPP method, Eq. (4) becomes:

Similarly, for the ELPP method, Eq. (7) becomes:

For the LPPMDC method, the corresponding form of Eq. (17) may be written as:

For the LPP method, when the neighborhood size parameter varies from \( k_1 \) to \( k_2 \), the extracted features are different. It is from that the projection axes, i.e., the eigenvectors gotten from Eqs. (4) and (19) are different. The eigenvectors from Eq. (4) constitute an eigenvector space, the eigenvectors from Eq.(19) constitute another eigenvector space. The greater the distance between two eigenvector spaces is, the greater the difference of projection axes is, then the greater the difference of the features extracted is. Thus the performance of LPP is more sensitive to the k value. In view of eigenvector perturbation analysis, the distance between two eigenvector spaces may be measured by the sine value of the angle between two eigenvector spaces, which can be expressed as \({{\left\| \sin \Theta \left( {{X}_{1}},{\tilde{X}}{}_{1} \right) \right\| }_{ui}}\) [27], where \({{X}_{1}},{{\tilde{X}}_{1}}\) is the eigenvector subset from Eqs. (4) and (19). For the ELPP and LPPMDC methods, a similar analysis can be done.

Regarding measuring the distance between two vector spaces, two theorems are given for the standard eigenvalue problem and the generalized eigenvalue problem [27].

(1) The standard eigenvalue problem

Let \({Ax}=\lambda {x}\) be a standard eigenvalue problem. If the matrix A is diagonalizable, the eigendecomposition of A is \({AX}={X\Lambda }\), where X is the matrix composed of the eigenvectors of the matrix A, \({\Lambda }\) is a diagonal matrix composed of the eigenvalues of the matrix A, i.e., \({\Lambda }={\text {diag}}({{\lambda }_{1}},{{\lambda }_{2}},\cdots {{\lambda }_{n}})\). If to write the matrices X, \({\Lambda }\) as the partitioned matrices, one has \( {A}\left[ {{{X}}_{1}},{{{X}}_{2}} \right] =\left[ {{{X}}_{1}},{{{X}}_{2}} \right] \left[ \begin{matrix} {{{\Lambda }}_{1}} &{} {} \\ {} &{} {{{\Lambda }}_{2}} \\ \end{matrix} \right] , \) where \({{{X}}_{1}},{{{\Lambda }}_{1}}\) are \(n\times k\) matrices, \({{{X}}_{2}},{{{\Lambda }}_{2}}\) are \(n\times \left( n-k \right) \) matrices. Let the matrix A is perturbed to the matrix \({\tilde{A}}\), \({\tilde{A}}\) also diagonalizable. For the matrix \({\tilde{A}}\), the same eigendecomposition may be got, except putting tildes on all the matrices \({{{X}}_{i}},{{{\Lambda }}_{i}}\left( i=1,2 \right) \).

Theorem 1 ( [27]). If \({\tilde{X}}=\left[ {{{{\tilde{X}}}}_{1}},{{{{\tilde{X}}}}_{2}} \right] \)is inversible, denote \({{\left[ {{{{\tilde{X}}}}_{1}},{{{{\tilde{X}}}}_{2}} \right] }^{-1}}={{\left[ {{{{\tilde{Y}}}}_{1}},{{{{\tilde{Y}}}}_{2}} \right] }^{H}}\), one has

where the superscript “H”represents the transposed conjugate of the matrix, \( {{\left\| \cdot \right\| }_{ui}} \) represents is an unitarily invariant norm.

For the LPPMDC method, Eq. (17) is a standard eigenvalue problem, and the matrices \({{{S}}_{L}}\)and \({{{S}}_{D}}\) in Eq. (17) are semi-positive definite, then the matrix \({{{S}}_{L}}-{{{S}}_{D}}\) is real symmetric, i.e., \({{{S}}_{L}}-{{{S}}_{D}}\) is diagonalizable. So, the distance between two eigenvector spaces from Eqs. (17) and (21) may be measured by Eq. (22).

(2) The generalized eigenvalue problem

Let \({Ax}=\lambda {Bx}\) be a generalized eigenvalue problem. If the matrix \({A}-\lambda {B}\) is diagonalizable, one has \({AX}={Y\Lambda }\), \({BX}={Y\Omega }\), where X and Y are invertible, \({\Lambda }\) and \({\Omega }\) are diagonal. Let X and Y, \({\Lambda }\) and \({\Omega }\) are partitioned as \({X}=\left[ {{{X}}_{1}},{{{X}}_{2}} \right] \), \({Y}=\left[ {{{Y}}_{1}},{{{Y}}_{2}} \right] \), \({\Lambda }=\left[ \begin{matrix} {{{\Lambda }}_{1}} &{} {} \\ {} &{} {{{\Lambda }}_{2}} \\ \end{matrix} \right] \) and \({\Omega }=\left[ \begin{matrix} {{{\Omega }}_{1}} &{} {} \\ {} &{} {{{\Omega }}_{2}} \\ \end{matrix} \right] \), where \({{{X}}_{1}},{{{Y}}_{1}},{{{\Lambda }}_{1}},{{{\Omega }}_{1}}\) are \(n\times k\) matrices, \({{{X}}_{2}},{{{Y}}_{2}},{{{\Lambda }}_{2}},{{{\Omega }}_{2}}\) are \(n\times \left( n-k \right) \) matrices, \({\Lambda }={\text {diag}}({{\alpha }_{1}},{{\alpha }_{2}},\cdots {{\alpha }_{n}})\), \({\Omega }={\text {diag}}({{\beta }_{1}},{{\beta }_{2}},\cdots {{\beta }_{n}})\). Note that the column of X is the eigenvector matrix of \({Ax}=\lambda {Bx}\).

Let the matrices A and B are perturbed to the matrices \({\tilde{A}}\) and \({\tilde{B}}\), the generalized eigenvalue problem is \({\tilde{A}x}=\lambda {\tilde{B}x}\), and the same eigendecomposition may be got, except putting tildes on all the matrices \({{{X}}_{i}},{{{Y}}_{i}},{{{\Lambda }}_{i}},{{{\Omega }}_{i}}\left( i=1,2 \right) \).

Theorem 2 ( [27]). If \({\tilde{X}}=\left[ {{{{\tilde{X}}}}_{1}},{{{{\tilde{X}}}}_{2}} \right] \) is inversible, denote \({{\left[ {{{{\tilde{X}}}}_{1}},{{{{\tilde{X}}}}_{2}} \right] }^{-1}}=\left[ \begin{matrix} {P}_{1}^{H} \\ {P}_{2}^{H} \\ \end{matrix} \right] \), one has

For the LPP method, Eq. (4) is a generalized eigenvalue problem. The matrices \({{{S}}_{L}}\) and \({{{S}}_{D}}\) in Eq. (4) are semi-positive definite, then the matrix \({{{S}}_{L}}-\lambda {{{S}}_{D}}\) is real symmetric, i.e., \({{{S}}_{L}}-\lambda {{{S}}_{D}}\) is diagonalizable. And so, the distance between two eigenvector spaces from Eqs. (4) and (19) may be measured by Eq. (23). Similarly, the distance between two eigenvector spaces of the ELPP method from Eqs. (7) and (20) may also be measured by Eq. (23). To compare the distance of two eigenvector spaces for the LPP, ELPP, and LPPMDC methods, an experiment is conducted on the ORL face database. In this experiment, for the LPP, ELPP, and LPPMDC methods, we firstly set k = 2, then set \(k=j(j=3,4,\cdots ,12)\), and then calculate the distance of two eigenvector spaces between k = 2 and k = j with Eqs. (22) and (23). The experiment results are listed in the following Table 2. For other the parameter pair \( k_i \) and \( k_j \), similar results can be gotten.

Based on the above analysis and experiment, compared with the LPP and ELPP methods, the distance of the two eigenvector spaces corresponding to the parameter pair k = 2 and \(k=j(j=3,4,\cdots ,12)\) from the LPPMDC method is the smallest. That is, the performance of LPPMDC will show more robustness and stability when the neighborhood size k varies, which is also verified by the experiment in Sect. 4.

(3) The computation complexity

The computation complexity of the three methods, LPP, ELPP and LPPMDC are evaluated in this section. Recently, there have been some researches on the topic of time complexity. For example, the fractional-order neural networks are modified, and then the computational complexity is decreased in Ref. [28, 29], etc. In Ref. [30,31,32], the 4D, 5D and 6D four-wing memristive hyperchaotic system have been proposed respectively, and the spectral entropy complexity are also reported respectively. In this paper, We use the term flam [33] to denote the operation counts, which represents a composite operation consisting of one addition and one multiplication. Let n be the dimensionality of the samples, m be the number of samples. For the three methods, they all need to perform the following four steps:

(1) To construct the k-nearest neighbor graph and require \( \dfrac{1}{2}m^2n+m^2\log m+2mn \) flams [34].

(2) To calculate the two matrices \( S_L = X L X ^\top \) and \( S_D = X D X ^\top \) and require at least \( 3mn^2 \) flams.

(3) To process the original samples or the matrices \( S_L \) and \( S_D \). For LPP, it reduces the dimensionality of the original sample with PCA, which requires at least \( 2m^3+m^2n+mn \) flams.

For ELPP, the main cost is the computations of the matrix exponential \( \exp (S_L) \) and \( \exp (S_D) \). The most widely used method for calculating a matrix exponential is the scaling and squaring method [35], which requires \( (\pi _m + \lceil \log _2(\Vert A\Vert _1/\theta _m)\rceil + 9)n^3 \) flams, where \( \pi _m \), \( \theta _m \) are the related parameters to compute the matrix exponential, \( \Vert \cdot \Vert _1 \) is 1-norm of a matrix. So the calculations of \( \exp (S_L) \) and \( \exp (S_D) \) require \( 2(\pi _m + \lceil \log _2(\Vert A\Vert _1/\theta _m)\rceil )n^3 \) flams.

For LPPMDC, it does not need to process the samples.

(4) To solve the eigenvalue problem, which can be referred to [36]. For the LPP method, without loss of generality, suppose that the dimensionality of the samples after the PCA stage is m(in most cases, one has \( m < n \).). The LPP method solves the Eq. (4) and requires \( \dfrac{9}{2} m^3\) flams. The ELPP method solves the Eq. (7) and requires \( \dfrac{9}{2}n^3 \) flams. For the LPPMDC method, it solves the standard eigenvector problem in Eq. (17). The time complexity is roughly half of that of the generalized eigenvector problem, i.e., \( 2n^3 \) flams.

The total computational complexities for the LPP, ELPP, and LPPMDC methods are the sum of the above four steps. The results are reported in the following Table 3.

From Table 3, the computation complexity of ELPP is the largest. For LPP and LPPMDC, the computation complexity may be comparable, depending on the value of n and m in real life. At the end of Sect. 4, there is a comparison of the computational time of the three methods.

4 Experiment Results

4.1 The Comparisons with the Common Methods

In this experiment section, the new method LPPMDC is compared with two common methods for solving the SSS problem of LPP, PCA+LPP [9] and ELPP [10, 18]. In addition, as a classical unsupervised dimensionality reduction method, PCA is also compared with LPPMDC. Where, three public face image databases: ORL, Georgia Tech and FERET, are used to make experiments.

Usually, the performance of these methods is related to the training sample size, the subspace dimension, and the neighborhood size parameter k (not including the PCA method). For all databases, for each subject, we set p images as the training sampless, and set the subspace dimension is from 10 to 100 in steps of 10. For the given p and the given subspace dimension, the neighborhood size k takes the value from the minimum value (=2) to N-1 at a certain step, where N is the number of training samples. In order to obtain stable experimental results, we repeat the experiment 20 times (the p training samples is randomly taken each time).

The performance of these methods is evaluated in four aspects:

(1) To evaluate the best recognition rate of these methods. For the given p, the best recognition rate from the best configuration parameters, including the best k value and the best subspace dimension, is regarded as the recognition result. For the 20 times experiments, the average of the 20 recognition results is made as to the recognition rate of the current p.

(2) To evaluate how these methods change with subspace dimensions. For each subspace dimension, there are different recognition rates for different k values, and the best recognition rate is taken as the recognition result . For the 20 times experiments, the average of the 20 recognition results is made as the recognition rate of the current subspace dimension.

(3) To evaluate the sensitivity of each method with relation to the parameter k. We use the term, Mean Maximum Difference (MMD), to evaluate each method. For the given p and subspace dimension, the parameter k is taken from the minimum value (=2) to N-1. Then, there is a maximum and a minimum recognition rate. Their difference is the maximum difference. For the 20 times experiment, there are 20 maximum differences, and their average value is the Mean Maximum Difference (MMD) in the current p-value and subspace dimension. In addition, we also graphically demonstrate the variation of the recognition rate of each method with relation to the parameter k.

(4) To evaluate the time complexities from the computational time of these methods.

4.1.1 Experiment Results on ORL Face Database

The ORL face database is composed of 40 people, each of which has 10 different images, for a total of 400. There are variation in expressions, illumination, and poses for each image. Some samples of this database are shown in the following Fig. 1.

In this face database, the experimental setup is as follows: The number of the training sample, i.e., the value of p is set to 3, 4, 5, 6, 7. The subspace dimension is from 10 to 100 in steps of 10. The neighborhood parameter k is taken from \(\left\{ 2,3,\cdots ,N-1 \right\} \) in turn in steps of 5, namely: \( k=\left\{ 2,7,12,\cdots ,2+5\times \left[ \dfrac{N-1}{5} \right] \right\} \).

The best average recognition rate of PCA, LPP, ELPP, and LPPMDC methods are reported in Table 4. The recognition rate curves of the four methods with relation to the subspace dimension are shown in Fig. 2. The comparison of MMDs of the LPP, ELPP, and the proposed LPPMDC method is shown in Table 5, where the subspace dimension = 40. From Table 5, the MMDs of the LPPMDC method are much less, and they are smaller than that of the LPP and ELPP methods. Thus, when the neighborhood parameter k varies, the performance of LPPMDC shows a stable trend. For other subspace dimensions, similar results can be obtained.

To intuitively demonstrate the insensitivity of the LPPMDC method with relation to the parameter k, we present the following Fig. 3. In Fig. 3, the variation of the recognition rate of the LPP, ELPP, and LPPMDC methods with relation to the parameter k are visualized, where p = 6, the subspace dimension is 40, and the randomly selected five experiment results are used. The horizontal axis is the parameter k of five random experiments, and the vertical axis is the recognition rate. The vertical line is used to separate the curves of the five experiments. The value of k is traversed from 2 to the maximum value between the two vertical lines. For p = 6, the maximum value is 237, so k is 2–237. As can be seen from Fig. 3, LPP and ELPP show volatility, while LPPMDC is smoother. That is, the LPPMDC method is stable and robust with relation to k. From the above experimental results, the new method LPPMDC is better than PCA, LPP and ELPP.

4.1.2 Experiment Results on Georgia Tech Database

The Georgia Tech database [37] collects face images of 50 people taken between Jun. 6 and Nov. 1999. This database includes facial images with different expressions taken from different angles, as well as lighting conditions and proportions as well as cluttered backgrounds. In the experiment, each image was adjusted to a grayscale image of \( 32\times 32 \) pixels. Some samples of this database are shown in the following Fig. 4.

In this face database, the experimental setup is as follows: the number of the training sample, i.e., the value of p, is set to 8, 9, 10, 11, 12. The subspace dimension is from 10 to 100 in steps of 10. The neighborhood parameter k is taken from \(\left\{ 2,3,\cdots ,N-1 \right\} \) in turn in steps of 5, namely: \( k=\left\{ 2,7,12,\cdots ,2+5\times \left[ \dfrac{N-1}{5} \right] \right\} \).

Table 6 shows the best recognition rates of the four methods for different training sample numbers. Figure 5 shows the different accuracy for the three algorithms in subspace dimensions from 10 to 100, where the training sample p = 12. It can be seen that LPPMDC is better than LPP and ELPP under different training samples and different subspace dimensions.

Similarly, we use the mean maximum difference (MMD) to evaluate the sensitivity of the methods with relation to the parameter k. Table 7 shows the MMDs when training sample p = 8, 9, 10, 11, 12, where the subspace dimension d = 40. Figure 6 shows the curves of the recognition rates related to the parameter k for five experiments, where p=10, the subspace dimension d = 40. From Fig. 6, the LPPMDC method is more stable than the other two methods.

4.1.3 Experiment Results on FERET Database

In this experiment, a subset of the FERET face database [38] is used to make experiment. The subset is composed of 200 people, 7 samples per person. Some samples of this database are shown in Fig. 7.

Similar to the first two face databases, we do the same experiment on this database. In this face database, for each subject, the training sample numbers p = 2, 3, 4, 5, 6, the others are used as the test samples. The subspace dimension is still from 10 to 100 in steps of 10. The neighborhood parameter k is taken from \(\left\{ 2,3,\cdots ,N-1 \right\} \) in turn in steps of 10, so the parameter k is taken from: \( k=\left\{ 2,12,\cdots ,2+10\times \left[ \dfrac{N-1}{10} \right] \right\} \).

Table 8 shows the best recognition rates of the four methods when the training sample numbers p = 2, 3, 4, 5. Figure 8 shows the recognition rates versus the subspace dimension of the four methods, where the training sample p = 5. Table 9 reports the MMDs of LPP, ELPP, and LPPMDC methods, where the training sample p = 2, 3, 4, 5, 6 and the subspace dimension d = 40. Figure 9 shows the curve of the recognition rate with relation to the parameter k from 5 experimental results, where p = 5, the subspace dimension d = 40.

From the above experimental results, the LPPMDC method has better classification performance. Importantly, the LPPMDC shows more stability when the parameter k varies than the existing methods. However, the recognition rate of the LPP and ELPP methods changes greatly, even if the parameter k takes small values.

4.1.4 The Comparison of the Time Complexity

In this section, an experiment is made to compare the calculation time of LPP, ELPP and LPPMDC. In the experiment, the neighborhood size parameter k = 12 and the subspace dimension = 40. The number of training samples p = 7, 12, and 6 for ORL, Georgia Tech and FERET databases, respectively. Each method is run 20 times repeatedly, and training samples are randomly selected each time. The average value of the 20 calculation times is made as the calculation time of the method. The results are presented in Table 10. As can be seen that the time complexity of ELPP is largest, and the time complexity of the proposed LPPMDC method are lower than that of the other two methods, which is one of the superiorities of the proposed method.

4.2 The Further Experiments

In this section, the proposed LPPMDC method are also compared with the latest LPP variant methods, including the LPP+TSVD [13], LPP+TR [13], LAPP [16] , LSPP [17], and Rm2DLPP [15] methods. Where LPP+TSVD and LPP+TR are the latest regularization methods, and Rm2DLPP is the latest method based on two-dimensional image matrices. LAPP and LSPP are the two latest LPP variant methods.

Since the source codes of these methods are unavailable, when comparing, we use the recognition rate reported in the literatures to compare with that of LPPMDC. The comparison results are reported in Table 11. From the results, the recognition rates of LPPMDC are better than that of the latest LPP variant methods.

In addition, as can be seen from the above Figs. 3, 6, and 9, when the parameter k is small, the recognition rate of the LPPMDC method is much stable. In fact, the parameter k can be taken as a smaller value. As in Ref. [1, 23], the value of k is only taken as a default value or in a small range. So, we evaluate the LPPMDC method when the parameter k takes small values. The results are shown in the following Figs. 10, 11, and 12, where the parameter k is from 2 to 30 in steps of 2, and the subspace dimension is 40 (Similar results can be gotten for other subspace dimensions). Figure 10 is for the ORL face database, where the training sample number p = 3, 4, 5, 6, 7. Figure 11 is for the Georgia Tech face database, where the training sample number p = 8, 9, 10, 11, 12. Figure 12 is for the FERET face database, where the training sample number p = 2, 3, 4, 5, 6. As shown in Figs. 10, 11, and 12, the recognition rates of the LPPMDC method show much stablility and robustness when the parameter k takes small values, which is significant in real life.

5 Conclusions

The small-sample-size (SSS) problem is a common problem in the Locality Preserving Projections (LPP) method. Based on the results of the ELPP method and matrix exponential transformation, a new method, named as Locality Preserving Projections based on the Maximum Difference Criterion (LPPMDC), is proposed to address this problem. Compared with the existing approaches, the proposed LPPMDC method has no SSS problem, low sensitivity to neighborhood size parameter k, and low computation complexity. The experiments on the ORL, Georgia Tech, and FERET face database show that LPPMDC is simple, efficient and robust.

The further work of the research is to use the idea of this paper to the other manifold-based dimensionality reduction methods, such as ISOMAP projections (IsoP) [39], neighborhood preserving embedding (NPE) [40], neighborhood preserving projections (NPP) [41], linear LTSA (LLTSA) [42], etc. Similar to LPP methods, these methods all have the SSS problems, and their performance is also related to neighborhood size parameter k. Therefore, the idea of this paper may be able to solve these problems.

References

He X, Niyogi P (2004) Locality preserving projections. Adv Neural Inf Process syst (NIPS) 16:153–160

Belkin M, Niyogi P (2001) Laplacian eigenmaps and spectral techniques for embedding and clustering. In: proceedings of the neural information processing systems, pp. 585-591

Huang S, Elgammal A, Lu JW, Yang D et al (2015) Cross-speed gait recognition using speed-invariant gait templates and globality-locality preserving projections. IEEE Trans Inf Forensics Sec 10(10):2071–2083

Kong D, Zhu J, Duan C, Lu L, Chen D (2021) Surface roughness prediction using kernel locality preserving projection and bayesian linear regression. Mech Syst Signal Process 152(2):107474

Ran R, Ren Y, Zhang S, Fang B (2021) A novel discriminant locality preserving projections method. J Math Imaging Vis 63(5):541–554

Turk M, Pentland A (1991) Eigenfaces for recognition. J Cogn Neurosci 3(1):71–86

Fisher RA (1936) The use of multiple measurements in taxonomic problems. Ann Eugenics 7:179–188

Rao CR (1948) The utilization of multiple measurements in problems of biological classification. J R Stat Soc B 10:159–203

He X, Yan S, Hu Y, Niyogi P, Zhang H (2005) Face recognition using Laplacianfaces. IEEE Trans Pattern Anal Mach Intell 27(3):328–340

Wang S, Yan S, Yang J et al (2014) A general exponential framework for dimensionality reduction. IEEE Trans Image Process 23(2):920–930

Cai D, He X, Han J(2007) Spectral regression for efficient regularized subspace learning. In: IEEE conference computer vision (ICCV), Rio de Janeiro, Brazil, pp. 1–8

Lu J, Tan Y (2010) Regularized Locality Preserving Projections and Its Extensions for Face Recognition. IEEE Trans Syst Man Cybern Part B Cybern 40(3):963–985

Wei W, Dai H, Liang W (2020) Regularized least squares locality preserving projections with applications to image recognition. Neural Netw 128(7):322–330

Zhu L, Zhu S (2007) Face recognition based on two dimensional locality preserving projections. J Image Graph 12(11):2043–2047

Huxidan J, Gulnaz A (2018) Face recognition based on rearranged modular two dimensional locality preserving projection. Int J Pattern Recognit Artif Intell 32(12):1856016

Wang A, Zhao S, Liu J, Yang J, Chen G (2020) Locality adaptive preserving projections for linear dimensionality reduction. Exp Syst Appl 151:113352

Wen Y, Yang S, Hou L, Zhang H, (2016) Face recognition using locality sparsity preserving projections. In: 2016 international joint conference on neural networks (IJCNN). Vancouver, BC, Cannada, pp 3600–3607

Wang SJ, Chen HL, Peng XJ, Zhou CG (2011) Exponential locality preserving projections for small sample size problem. Neurocomputing 74(17):3654–3662

Zhang TP, Fang B, Tang YY, Shang Z, Xu B (2010) Generalized discriminant analysis: a matrix exponential approach. IEEE Trans System Man Cybern B Cybern 40(1):186–197

Ran R, Fang B, Wu X (2019) Exponential neighborhood preserving embedding for face recognition. IEICE Trans Inf Syst 101(5):1410–1420

Huang S, Zhuang L (2016) Exponential discriminant locality preserving projection for face recognition. Neurocomputing 208:373–377

Lu G, Wang Y, Zou J (2018) Matrix exponential based discriminant locality preserving projections for feature extraction. Neural Netw 97:127–136

Dornaika F, Bosaghzadeh A (2013) Exponential local discriminant embedding and its application to face recognition. IEEE Trans Cybern 43(3):921–934

Dornaika F, Traboulsi YE (2017) Matrix exponential based semi-supervised discriminant embedding for image classification. Pattern Recogni 61:92–103

Ran R, Fang B, Zhang S et al (2019) Face recognition based on exponential neighborhood preserving discriminant embedding. J Comput (Taiwan) 30(6):1–14

Golub GH, Loan CF (1983) Matrix computation. The Johns Hopkins University Press, Baltimore

Sun JG (2001) Matrix perturbation analysis. Science Press, Beijing

Xu C, Liao M, Li P et al (2019) \( PD^9 \) control strategy for a fractional-order chaotic financial model. Complexity 2019:1–14

Pratap A et al (2020) Mittag-Leffler stability and adaptive impulsive synchronization of fractional order neural networks in quaternion field. Math Methods Appl Sci 7:1–31

Yu F, Qian S, Chen X, Huang Y, Liu L, Shi C et al (2020) A new 4d four-wing memristive hyperchaotic system: dynamical analysis, electronic circuit design, shape synchronization and secure communication. Int J Bifurcat Chaos 30(10):1–20

Yu F, Liu L, He B, Huang Y, Shi C, Cai S et al (2019) Analysis and fpga realization of a novel 5d hyperchaotic four-wing memristive system, active control synchronization, and secure communication application. Complexity 2019:1–18

Yu F, Liu L, Shen H, Zhang Z, Huang Y, Shi C et al (2020) Dynamic analysis, circuit design, and synchronization of a novel 6d memristive four-wing hyperchaotic system with multiple coexisting attractors. Complexity 2020:1–17

Stewart GW (1998) Matrix algorithms volume I: basic decompositions. SIAM, Philadelphia

Cai D, He XF, Han JW (2007) Spectral regression: a unified subspace learning framework for content-based image retrieval. In: proceedings of the 15th ACM international conference on multimedia, pp. 403-412

Higham N (2005) The scaling and squaring method for the matrix exponential revisited. SIAM J Matrix Anal Appl 26(4):1179–1196

Stewart GW (2001) Matrix algorithms volume II: eigensystems. SIAM, Philadelphia

Sim T, Baker S, Bsat M (2003) The CMU pose, illumination, and expression database. IEEE Trans Pattern Anal Mach Intell 25(12):1615–1618

Phillips PJ, Moon H, Rizvi SA, Rauss PJ (2000) The FERET evaluation methodology for face-recognition algorithms. IEEE Trans Pattern Anal Mach Intell 22(10):1090–1104

Cai D, He XF, Han JW (2007) Isometric projection. In: proceedings of AAAI, pp. 528-533

He XF, Cai D, et al. (2005) Neighborhood preserving embedding. In: proceedings conference on computer vision (ICCV), Beijing, China, pp. 1208–1213

Kokiopoulou E, Saad Y (2007) Orthogonal neighborhood preserving projections: a projection-based dimensionality reduction technique. IEEE Trans Pattern Anal Mach Intell 29(12):2143–2156

Zhang T, Yang J, Zhao D, Ge X (2007) Linear local tangent space alignment and application to face recognition. Neurocomputing 70:1547–1553

Acknowledgements

This work was supported by National Natural Science Foundation of China under grant 61876026, Humanities and Social Sciences Project of the Ministry of Education of China under grant 20YJAZH084, Chongqing Technology Innovation and Application Development Project under Grant cstc2020jscx-msxmX0190 and cstc2019jscx-mbdxX0061, Project of Natural Science Foundation Project of CQ CSTC of China under grant cstc2016jcyjA0419 and cstc2017jcyjAX0316, and Science and Technology Research Program of Chongqing Municipal Education Commission under grant KJZD-K202100505.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Ran, R., Qin, H., Zhang, S. et al. Simple and Robust Locality Preserving Projections Based on Maximum Difference Criterion. Neural Process Lett 54, 1783–1804 (2022). https://doi.org/10.1007/s11063-021-10706-4

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11063-021-10706-4