Abstract

This paper investigates finite-time synchronization of complex-valued neural networks with multiple time-varying delays and infinite distributed delays. By separating the complex-valued neural networks into their real and imaginary parts, equivalent real-valued systems are obtained. Sufficient conditions for finite-time synchronization of the drive-response system are then derived by means of a new Lyapunov–Krasovskii functional and new analysis techniques. Numerical examples demonstrate the effectiveness of the theoretical results.

1 Introduction

In the past decades, complex-valued neural networks (CVNN for short) have received increasing interest owing to their potential in engineering applications [1,2,3,4,5,6,7]. Moreover, CVNN exhibit different and more complicated properties than real-valued networks. It is therefore necessary to study the dynamic behavior of these systems in depth.

One of the most active topics in the study of CVNN is chaos synchronization. Research on synchronization goes back to the pioneering work of Pecora and Carroll [8] and to the study of the chaotic behavior of biological neurons in [9]. Synchronization has since found important applications in associative memory [10], pattern recognition [11], chemical reactions [12], secure communication [13], etc. Many kinds of synchronization of chaotic neural networks have been considered, including exponential synchronization [14], asymptotic synchronization [15, 16] and finite-time synchronization [17]. Among these, finite-time synchronization is regarded as optimal [18] because, compared with exponential and asymptotic synchronization, it offers greater efficiency and confidentiality when applied to secure communication. However, most of these finite-time synchronization results do not consider time delays.

Recently, the authors of [19, 20] studied finite-time synchronization via intermittent control. In [21, 22], finite-time synchronization of real-valued neural networks with delays was investigated. In addition, finite-time \(H^{\infty }\) synchronization for complex networks with time-varying delays and semi-Markov jump topology, and finite-time synchronization control for uncertain Markov jump neural networks with input constraints, were addressed in [23, 24]. Yang proposed a new control and analytical technique to study finite-time synchronization of neural networks with time delays in [25]. Moreover, finite-time synchronization of nonidentical drive-response systems with different multiple time-varying delays and bounded external perturbations was investigated in [26]. In [27], the authors considered finite-time synchronization of CVNNs. To the best of our knowledge, however, there are no comparable results for CVNN with multiple time-varying delays and infinite distributed delays. It is therefore necessary to study the finite-time synchronization of CVNN with such delays.

Based on the above discussion, this paper investigates finite-time synchronization of complex-valued neural networks with multiple time-varying delays and infinite distributed delays. By separating the complex-valued neural networks into their real and imaginary parts, equivalent real-valued systems are obtained. Sufficient conditions for finite-time synchronization of the drive-response system are derived by means of a new Lyapunov–Krasovskii functional and new analysis techniques. In comparison with [26], we overcome the difficulties brought by the integral term by means of iterative and cumulative methods. Numerical examples demonstrate the effectiveness of the theoretical results.

The rest of this paper is organized as follows. In Sect. 2, complex-valued neural networks with multiple time-varying delays and infinite distributed delays are presented, together with the necessary assumptions and definitions. In Sect. 3, finite-time synchronization of CVNN is studied. In Sect. 4, simulation examples are given to show the effectiveness of the theoretical results. Finally, Sect. 5 gives some conclusions.

2 Model Description and Preliminaries

Let C denote the set of complex numbers. For a complex vector \(z\in C^{n}\), we consider the complex-valued neural networks with multiple time-varying delays and unbounded distributed delays, which can be described by

where \(z_{k}\in C\) represents the state of the kth neuron at time t, \(k=1,2,\ldots ,n\), and n is the number of neurons. \(D=diag(d_{1},d_{2},\ldots ,d_{n})\in \mathbb {R}^{n\times n}\) with \(d_{k}>0\); \(A=(a_{kj})_{n\times n}\in C^{n\times n}\), \(B=(b_{kj})_{n\times n}\in C^{n\times n}\) and \(P=(p_{kj})_{n\times n}\in C^{n\times n}\) are the connection weight matrices; \(J=(J_{1},J_{2},\ldots ,J_{n})^{T}\in C^{n}\) is the constant external input vector; \(f(z(t))=(f_{1}(z(t)),f_{2}(z(t)),\ldots ,f_{n}(z(t)))^{T}\) is the activation function; \(\theta _{kj}:[0,+\infty )\rightarrow [0,+\infty )\) are bounded scalar functions (the delay kernels); and \(\tau _{k_{1}j}(t),\ldots ,\tau _{k_{n}j}(t)\) are the internal multiple time-varying delays.

Remark 1

In particular, when the delay kernels satisfy the following condition:

where \(\beta _{kj}>0\) \((k,j=1,2,\ldots ,n)\) are constants, system (1) becomes the following complex-valued neural network with finite distributed delays:

Based on the concept of drive-response synchronization, we take (1) as the drive system and construct the corresponding response system as follows:

where \(Z(t)=(Z_{1}(t),Z_{2}(t),\ldots ,Z_{n}(t))^{T}\in C^{n}\) is the state vector of the response system at time t and \(U_{k}(t)\) is the control input to be designed. We write \(z_{k}(t)=x_{k}(t)+iy_{k}(t)\) and \(Z_{k}(t)=X_{k}(t)+iY_{k}(t)\), where i denotes the imaginary unit, i.e. \(i=\sqrt{-1}\).
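The separation into real and imaginary parts can be illustrated on a single weighted term. The following minimal sketch, written in Python with NumPy (an illustrative choice, not part of the paper), checks that a complex product \(Az\) with \(A=A^{R}+iA^{I}\) and \(z=x+iy\) equals \((A^{R}x-A^{I}y)+i(A^{I}x+A^{R}y)\). The function name `split_product` and the sample values are hypothetical.

```python
import numpy as np

def split_product(A, z):
    """Return the real and imaginary parts of A @ z using real arithmetic only."""
    AR, AI = A.real, A.imag      # real and imaginary parts of the weights
    x, y = z.real, z.imag        # real and imaginary parts of the state
    real_part = AR @ x - AI @ y  # Re(Az)
    imag_part = AI @ x + AR @ y  # Im(Az)
    return real_part, imag_part

# Hypothetical 2x2 weight matrix and state vector, for illustration only.
A = np.array([[1 + 2j, -1j], [0.5, 2 - 1j]])
z = np.array([2 + 1j, 2.5 + 7j])
re, im = split_product(A, z)
```

Applying the same split to every term of the drive and response systems gives a real-valued system of dimension 2n.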

Remark 2

Obviously, if drive-response synchronization for (1) can be realized in finite time, then drive-response synchronization for (3) can also be realized in finite time. However, this paper will point out that the settling time for (1) cannot be estimated, while it can be estimated for (3) when the bounds of the delays and the initial values are known.

Remark 3

The novelty in comparison with previous work is that we consider multiple time-varying delays and infinite distributed delays, which brings the model closer to practical problems, and that we overcome the difficulties brought by the integral term by means of new analysis techniques.

We define the synchronization errors \(e_{k}(t)=e_{k}^{R}(t)+ie_{k}^{I}(t)=Z_{k}(t)-z_{k}(t)\). Subtracting (1) from (4) yields the following error system:

where \(g_{j}(e(t))=f_{j}(Z(t))-f_{j}(z(t))\), \(g_{j}(e_{1}(t-\tau _{k_{1}j}(t)),\ldots ,e_{n}(t-\tau _{k_{n}j}(t)))=f_{j}(Z_{1}(t-\tau _{k_{1}j}(t)),\ldots ,Z_{n}(t-\tau _{k_{n}j}(t)))-f_{j}(z_{1}(t-\tau _{k_{1}j}(t)),\ldots ,z_{n}(t-\tau _{k_{n}j}(t)))\doteq F_{j}(t)\), and the initial condition is \(\psi _{k}(s)=\varphi _{k}(s)-\phi _{k}(s)\), \(k=1,2,\ldots ,n\).

The following assumptions for the delays and the activation functions are needed in this paper.

\(\mathbf{(H_{1})}\) There exist positive constants \(\tilde{\tau }\) and \(\mu <1\) such that \(0<\tau _{k_{h}j}(t)\le \tilde{\tau }\), \(\dot{\tau }_{k_{h}j}(t)\le \mu \), \(k,j=1,2,\ldots ,n\), \(h=1,2,\ldots ,n\).

\(\mathbf{(H_{2})}\) For \(z=x+iy\in C^{n}\) with \(x, y\in R^{n}\), the activation function can be written as \(f_{k}(z)=f_{k}^{R}(x, y)+if_{k}^{I}(x, y)\), and the activation function with multiple time-varying delays can be written as \(F_{k}(t)=F_{k}^{R}(t)+iF_{k}^{I}(t)\). There exist positive constants \(l_{k}^{RR}, l_{k}^{RI}, l_{k}^{IR}\) and \(l_{k}^{II}\) \((k=1,2,\ldots ,n)\) such that, for any \(z=x+iy, Z=X+iY\in C^{n}\),

\(\mathbf{(H_{3})}\) There exist positive constants \(\tilde{\theta }_{kj}\) such that

Definition 1

[25]. System (4) is said to be synchronized with (1) in finite time if, for a suitably designed feedback controller, there exists a constant \(t_{1}>0\) (depending on the initial error \(\psi (s)\) and the time delays) such that \(\Vert e(t_{1})\Vert _{1}=0\) and \(\Vert e(t)\Vert _{1}\equiv 0\) for \(t>t_{1}\), where \(\Vert e(t)\Vert _{1}=\sum \nolimits _{k=1}^{n}|e_{k}(t)|=\sum \nolimits _{k=1}^{n}\sqrt{(e^{R}_{k}(t))^{2}+(e^{I}_{k}(t))^{2}}\). The time \(t_{1}\) is called the settling time.
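As a small numerical illustration of the error measure in Definition 1, the sketch below evaluates the sum of the moduli of the componentwise errors \(Z_{k}-z_{k}\). The helper name `error_norm1` is hypothetical; the sample states are the initial values that appear in Example 1 of Sect. 4.

```python
import numpy as np

def error_norm1(Z, z):
    """Sum over k of |Z_k - z_k|, with |.| the complex modulus."""
    e = np.asarray(Z, dtype=complex) - np.asarray(z, dtype=complex)
    return float(np.sum(np.abs(e)))

# Initial states of the drive (z) and response (Z) systems from Example 1.
z_init = [2 + 1j, 2.5 + 7j]
Z_init = [-1.5 + 3.5j, -1.5 + 6j]
norm_at_zero = error_norm1(Z_init, z_init)
```

Finite-time synchronization in the sense of Definition 1 means that this quantity reaches zero at \(t_{1}\) and remains zero afterwards.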

3 Main Results

From Definition 1, finite-time synchronization between systems (4) and (1) is equivalent to finite-time stabilization of the error system (5) at the origin. Therefore, the designed controllers \(U_{k}(t)\) should satisfy \(U_{k}(t)=0\) when \(e_{k}(t)=0\), \(k=1,2,\ldots ,n\). We design the following discontinuous controllers:

where \(\xi _{k}>0\) \((k=1,2,\ldots ,n)\) are the control gains to be determined, and \(\delta _{k}>0\), \(\eta _{k}>0\) are tunable constants. We then obtain the following main result.
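To make the role of the gains concrete, here is a minimal sketch of a discontinuous sign-based feedback law. The explicit expression of controller (6) is not reproduced in this text, so the form used below, \(U_{k}=-\xi _{k}e_{k}-\delta _{k}\,\mathrm{sgn}(e_{k}^{R})-i\eta _{k}\,\mathrm{sgn}(e_{k}^{I})\), is an assumption suggested by the description of \(\xi _{k}\), \(\delta _{k}\) and \(\eta _{k}\) and may differ from the authors' controller. The function name `controller` is hypothetical.

```python
import numpy as np

def controller(e, xi, delta, eta):
    """Assumed sign-based control law (illustrative, not the paper's exact form).

    e     : complex error vector Z - z
    xi    : linear feedback gains
    delta : gains acting on the sign of the real part of the error
    eta   : gains acting on the sign of the imaginary part of the error
    """
    e = np.asarray(e, dtype=complex)
    return (-np.asarray(xi) * e
            - np.asarray(delta) * np.sign(e.real)
            - 1j * np.asarray(eta) * np.sign(e.imag))

# Hypothetical error and gains, for illustration only.
U = controller([1 - 2j, 0j], xi=[2.0, 3.0], delta=[1.0, 1.0], eta=[0.5, 0.5])
```

Because `np.sign(0)` is zero, this law vanishes on components where \(e_{k}=0\), in line with the requirement \(U_{k}(t)=0\) when \(e_{k}(t)=0\).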

Theorem 1

Suppose that assumptions \((H_{1})\)-\((H_{3})\) are satisfied. Then the complex-valued neural network (4) is synchronized with (1) in finite time under the controller (6) if \(\delta _{k}>0, \eta _{k}>0, k=1,2,\ldots ,n\) and the following conditions are satisfied:

where \(l_{k}=\max \{l_{k}^{RR}, l_{k}^{RI}, l_{k}^{IR},l_{k}^{II} \}\).

Proof

Consider the following Lyapunov–Krasovskii functional:

where

Substitute the controller (6) into the error system (5). Calculating the time derivative of \(V_{1}(t)\) along the trajectory of the error system (5), it can be derived from \((H_{2})\) that

It follows from \((H_{2})\) that

where \(\lambda _{k}=|\mathrm{sgn}(e^{R}_{k}(t))|, \rho _{k}=|\mathrm{sgn}(e^{I}_{k}(t))|\). It is obtained from \(V_{2}(t)\) that

then,

it is derived from \((H_{1})\)

and

substituting the condition (7) into (12) yields the following inequality:

where \(\alpha _{k}=\min \{\delta _{k}, \eta _{k}\}>0\). When \(\Vert e(t)\Vert _{1}\ne 0\), there exists at least one index \(k\in \{1,2, \ldots , n\}\) such that \(\lambda _{k}+\rho _{k}\ge 1\), so in this case one has \(\sum \nolimits _{k=1}^{n}(\lambda _{k}+\rho _{k})\ge 1\) and

where \(\alpha =\min \{\alpha _{k}, k=1,2, \ldots , n\}\).

Integrating both sides of the inequality (14) from 0 to t, one has

If \(|e_{k}(t_{1})|=0\) at an instant \(t_{1}\in (0, +\infty )\) for \(k=1, 2, \ldots , n\), then we can continue the discussion from (17). If \(\Vert e(t)\Vert _{1}>0\) for all \(t\in [0, +\infty )\), then \(\sum \nolimits _{k=1}^{n}(\lambda _{k}+\rho _{k})\ge 1\) with \(\alpha >0\) for all \(t\in [0, +\infty )\). In this case, the inequality (15) means that \(\lim \nolimits _{t\rightarrow +\infty }V(t)=-\infty \). This contradicts the fact that \(V(t)\ge 0\). Hence, there exist a nonnegative constant \(V^{*}\) and \(t_{1}\in (0, +\infty )\) such that

Next we prove that

Firstly, we prove that \(\Vert e(t_{1})\Vert _{1}=0\). Otherwise \(\Vert e(t_{1})\Vert _{1}>0\), and then there exists a small constant \(\epsilon >0\) such that \(\Vert e(t)\Vert _{1}>0\) for all \(t\in [t_{1}, t_{1}+\epsilon ]\). Hence there exists at least one \(k_{0}\in \{1, 2, \ldots , n\}\) such that \(|e^{R}_{k_{0}}(t)|>0\) or \(|e^{I}_{k_{0}}(t)|>0\) for \(t\in [t_{1}, t_{1}+\epsilon ]\), which implies that \(\dot{V}(t)\le -\alpha _{k_{0}}<0\) holds for all \(t\in [t_{1}, t_{1}+\epsilon ]\). This contradicts (16).

Secondly, we prove that \(\Vert e(t)\Vert _{1}\equiv 0\) for all \(t\ge t_{1}\). Arguing by contradiction, and without loss of generality, suppose that there exist at least one \(k_{0}\in \{1,2,\ldots , n\}\) and \(t_{2}>t_{1}\) such that \(|e^{R}_{k_{0}}(t_{2})|>0\). Let \(t_{s}=\sup \{t\in [t_{1}, t_{2}]:\Vert e(t)\Vert _{1}=0\}\). Then \(t_{s}<t_{2}\), \(\Vert e(t_{s})\Vert _{1}=0\) and \(|e^{R}_{k_{0}}(t)|>0\) for all \(t\in (t_{s}, t_{2}]\). Furthermore, there exists \(t_{3}\in (t_{s}, t_{2}]\) such that \(|e^{R}_{k_{0}}(t)|\) is monotonically increasing on the interval \([t_{s}, t_{3}]\). Therefore V(t) is also monotonically increasing on the interval \([t_{s}, t_{3}]\), i.e., \(\dot{V}(t)>0\) for \(t\in (t_{s}, t_{3}]\). On the other hand, from the first part of the discussion, \(\dot{V}(t)\le -\alpha <0\) holds for all \(t\in [t_{s}, t_{3}]\), which is a contradiction. Hence, \(\Vert e(t)\Vert _{1}\equiv 0\) for all \(t\ge t_{1}\).

Therefore, the conditions in (17) hold. According to Definition 1, the neural network (4) is synchronized with (1) in finite time under the controller (6). This completes the proof. \(\square \)
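The integration step used in the proof can be written out as a short worked derivation. It is a sketch under the assumption that the differential inequality has the form \(\dot{V}(t)\le -\alpha \) whenever \(\Vert e(t)\Vert _{1}\ne 0\), as the surrounding text indicates; the displayed inequalities of the paper are not reproduced here, so the exact constants may differ.

```latex
% Assumption: \dot{V}(s) \le -\alpha for every s in [0,t] at which the error is nonzero.
\[
  V(t)-V(0)=\int_{0}^{t}\dot{V}(s)\,ds\le -\alpha t
  \quad\text{if } \Vert e(s)\Vert_{1}\neq 0 \text{ for all } s\in[0,t].
\]
% Since V is nonnegative, such an interval cannot be arbitrarily long.
\[
  0\le V(t)\le V(0)-\alpha t
  \;\Longrightarrow\;
  t\le \frac{V(0)}{\alpha}.
\]
```

Under this assumption the error cannot stay nonzero on an interval longer than \(V(0)/\alpha \), which is the mechanism behind the contradiction argument above.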

Remark 4

In Theorem 1, a finite-time synchronization criterion has been obtained for CVNN with multiple time-varying delays and infinite distributed delays. It is difficult to estimate the synchronization time, since the exact value of \(V^{*}\) cannot be obtained precisely because of the infinite distributed delay [25].

Theorem 2

For given positive constants \(\xi _{k}\), \(\delta _{k}, \eta _{k}, k=1, 2, \ldots , n\), if the assumption conditions \((H_{1})\) and \((H_{2})\) are satisfied, and

then the complex-valued neural networks (1) and (4) with the delay kernels \(\theta _{kj}(t)\) satisfying (2) are synchronized in finite time under the controller (6), where \(l_{k}=\max \{l_{k}^{RR}, l_{k}^{RI}, l_{k}^{IR},l_{k}^{II} \}\). Moreover, the settling time is estimated as

where \(\alpha =\min \{\delta _{k}, \eta _{k}, k=1, 2, \ldots , n\}>0\).

Proof

Consider the following Lyapunov–Krasovskii functional:

where \(V_{1}(t)\) and \(V_{2}(t)\) are defined as in the proof of Theorem 1, and

From (16), arguing as in the proof of Theorem 1, we obtain

where \(\lambda _{k}, \rho _{k}\) are defined in the proof of Theorem 1 and \(\alpha _{k}=\min \{\delta _{k}, \eta _{k}\}>0\), so there exist a nonnegative constant \(V^{*}\) and \(t_{1}\in (0, +\infty )\) such that

Furthermore, as in the proof of Theorem 1, we can get

Now we prove \(V^{*}=0\). If \(V^{*}>0\), then it follows from (19) that there exist \(t_{2}\) and \(t_{3}\) satisfying \(t_{1}-\max \{\tilde{\tau }, \beta _{kj}, k, j=1, 2, \ldots , n\}\le t_{3}<t_{2}<t_{1}\) such that \(\Vert e(t)\Vert _{1}>0\) for all \(t\in [t_{3}, t_{2}]\). Notice that \(\Vert e(t_{1})\Vert _{1}=0\) and \(\Vert e(t)\Vert _{1}\equiv 0\) for \(t\ge t_{1}\). By the definition of \(\overline{V}(t)\), one then obtains \(V^{*}=\overline{V}(t_{1})>\overline{V}(t_{4})=V^{*}\) for any instant \(t_{4}>t_{1}\), which is a contradiction. Therefore, \(V^{*}=0\).

Integrating the derivative of \(\overline{V}(t)\) from 0 to \(t_{1}\), as in the proof of Theorem 1, one has

so

This completes the proof of Theorem 2. \(\square \)

When complex-valued neural networks without distributed delays are concerned, i.e. \(p_{kj}=0\), \(k, j=1, 2, \ldots , n\), we get the following corollary from Theorem 2.

Corollary 1

For given positive constants \(\xi _{k}\), \(\delta _{k}, \eta _{k}, k=1, 2, \ldots , n\), if the assumption conditions \((H_{1})\) and \((H_{2})\) are satisfied, and

then the complex-valued neural networks (1) and (4) with the delay kernels \(\theta _{kj}(t)\) satisfying (2) are synchronized in finite time under the controller (6), where \(l_{k}=\max \{l_{k}^{RR}, l_{k}^{RI}, l_{k}^{IR},l_{k}^{II} \}\). Moreover, the settling time is estimated as

where \(\alpha =\min \{\delta _{k}, \eta _{k}, k=1, 2, \ldots , n\}>0\).

Corollary 2

Suppose that \(b_{kj}=p_{kj}=0\), \(k, j=1, 2, \ldots , n\). If assumption \((H_{2})\) is satisfied and

then the complex-valued neural networks (1) and (4) are synchronized in finite time under the controller (6). Moreover, the settling time is estimated as \(t_{1}\le \frac{1}{\alpha }\sum \nolimits _{k=1}^{n}\bigg (|e^{R}_{k}(0)|+|e^{I}_{k}(0)|\bigg )\), where \(\alpha =\min \{\delta _{k}, \eta _{k}, k=1, 2, \ldots , n\}>0\).
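The settling-time estimate of Corollary 2 can be tried on a toy problem. The sketch below simulates a scalar delay-free drive-response pair with forward Euler and compares the error with the bound \(\frac{1}{\alpha }(|e^{R}(0)|+|e^{I}(0)|)\). Everything in it is an assumption made for illustration: the sign-based control law, the activation \(f(z)=\tanh (x)+i\tanh (y)\), the parameter values, and the gain choice \(d+\xi \ge |a^{R}|+|a^{I}|\), which is a sufficient condition worked out for this toy case rather than the condition stated in the corollary.

```python
import numpy as np

def f(z):
    # Hypothetical activation: tanh applied to real and imaginary parts separately.
    return np.tanh(z.real) + 1j * np.tanh(z.imag)

def simulate(z0, Z0, d=1.0, a=1.0 + 0.5j, J=0.0,
             xi=2.0, delta=1.0, eta=1.0, h=1e-3, T=6.0):
    """Forward-Euler simulation of a scalar drive-response pair without delays.

    Returns the time grid and the error measure |e^R(t)| + |e^I(t)|.
    """
    steps = int(round(T / h))
    z, Z = complex(z0), complex(Z0)
    t = np.arange(steps + 1) * h
    err = np.empty(steps + 1)
    err[0] = abs((Z - z).real) + abs((Z - z).imag)
    for n in range(steps):
        e = Z - z
        # Assumed sign-based controller (illustrative form only).
        U = -xi * e - delta * np.sign(e.real) - 1j * eta * np.sign(e.imag)
        dz = -d * z + a * f(z) + J        # drive system
        dZ = -d * Z + a * f(Z) + J + U    # controlled response system
        z, Z = z + h * dz, Z + h * dZ
        e = Z - z
        err[n + 1] = abs(e.real) + abs(e.imag)
    return t, err

z0, Z0 = 2 + 1j, -1.5 + 3.5j
alpha = min(1.0, 1.0)                      # alpha = min{delta, eta}
e0 = Z0 - z0
bound = (abs(e0.real) + abs(e0.imag)) / alpha
t, err = simulate(z0, Z0, T=bound)
```

With a discrete time step the discontinuous term makes the error chatter in a band of width proportional to the step size `h` instead of vanishing exactly, so the comparison with the bound should be made up to a small tolerance.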

4 Numerical Simulation Examples

In this section, numerical examples with simulations are given to show the effectiveness of the established results.

Example 1

Consider the complex-valued neural networks described by

where \(\tau _{k_{1}j}(t)=\tau _{k_{2}j}(t)=0.5, \theta _{kj}(t)=e^{-t}, k, j=1, 2,\) and

It is assumed that the activation functions are

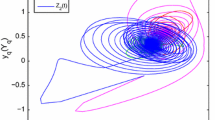

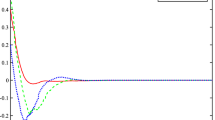

We take all the initial conditions as \(z_{1}(s)=2+i\), \(z_{2}(s)=2.5+7i\), \(s\in (-\infty , 0]\). It is easy to get that \(\tilde{\tau }=0.5\), \(\mu =0<1\) and \(\tilde{\theta }_{kj}=2\), \(k, j=1, 2\). Therefore, conditions \((H_{1})\)-\((H_{3})\) are satisfied. By Theorem 1, systems (1) and (4) can realize finite-time synchronization under the controller (6) for any positive constants \(\delta _{k}\) and \(\eta _{k}\), \(k=1, 2\). We choose the initial values of the response system as \(Z_{1}(s)=-\,1.5+3.5i\), \(Z_{2}(s)=-\,1.5+6i\), \(s\in (-\infty , 0]\), and take \(\xi _{1}=20\), \(\xi _{2}=14.6\). Figs. 1 and 2 show the synchronization errors with these initial conditions.

Example 2

Consider the following 4-dimensional fractional-order neural networks as the drive system

where \(\alpha =0.95\), \(x(t)=(x_1(t),x_2(t),x_3(t),x_4(t))^T\), \(g(x(t))=(\tanh (x_1(t)),\tanh (x_2(t)),\tanh (x_3(t)),\tanh (x_4(t)))^T\), \(J=(0,0,0,0)^T\),

and the corresponding response system is described as

where \(\alpha =0.95\), \(y(t)=(y_1(t),y_2(t),y_3(t),y_4(t))^T\), \(g(y(t))=(\sin (y_1(t)),\)\(\sin (y_2(t)),\)\(\sin (y_3(t)),\sin (y_4(t)))^T\), and parameters A, B and J are the same as in the drive system (50).

In the control scheme, the gain matrix K and the impulsive matrix \(E_k\) are selected as \(K={\mathrm{diag}}(4,2,2,3)\) and \(E_k={\mathrm{diag}}(-\,0.5,-\,0.5,-\,0.5,-\,0.5)\). Let \(Q=I\) and \(\lambda =1\). By simple calculation, we have \(\lambda _{max}(\Pi _1)=-\,1.4915<0\), so all conditions in Theorem 2 hold. Hence, we can obtain from Theorem 2 that the drive system (25) and the response system (26) can achieve global Mittag–Leffler synchronization. In numerical simulations, Fig. 3 exhibits the state trajectories of the drive system and the response system with a group of randomly selected initial values \(x(0)=(-\,0.61,-\,3.89,-\,2.42,-\,0.91)^T\) and \(y(0)=(0.95,-\,2.38,1.03,2.12)^T\), and Fig. 4 depicts the time responses of the state variables of the synchronization error system with several randomly selected initial values.

Consider the following complex-valued neural networks:

where \(\tau _{k_{1}j}(t)=0.5|\sin t|, \tau _{k_{2}j}(t)=0.2|\cos t|,k, j=1, 2,\) and

It is assumed that the activation functions are

By calculation, we have

We take all the initial conditions as \(z_{1}(s)=-\,0.3+1.9i\), \(z_{2}(s)=3-0.5i\), \(s\in (-\infty , 0]\). It is easy to get that \(\tilde{\tau }=0.5\) and \(\mu =0.5<1\). Therefore, conditions \((H_{1})\)-\((H_{3})\) are satisfied. Take

By Corollary 1, systems (1) and (4) can realize finite-time synchronization under the controller (6) for any positive constants \(\delta _{1}\) and \(\delta _{2}\). We choose the initial values of the response system as \(Z_{1}(s)=3+0.1i\), \(Z_{2}(s)=2+1.1i\), \(s\in (-\infty , 0]\), and take \(\xi _{1}=29.1\), \(\xi _{2}=41.5\). Figs. 3 and 4 show the synchronization errors with these initial conditions.
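The delay bounds quoted in Example 2 can be checked numerically. The sketch below samples \(\tau _{k_{1}j}(t)=0.5|\sin t|\) and \(\tau _{k_{2}j}(t)=0.2|\cos t|\) on a fine grid and estimates the largest delay and the largest slope by finite differences. The grid length and step are arbitrary choices, and the slope estimate is only an approximation of \(\dot{\tau }\) away from the kinks of the absolute value.

```python
import numpy as np

# Sample both delays of Example 2 on a fine time grid (grid is an arbitrary choice).
t = np.linspace(0.0, 20.0, 200001)
tau1 = 0.5 * np.abs(np.sin(t))
tau2 = 0.2 * np.abs(np.cos(t))

# Finite-difference slopes approximate the derivatives between grid points.
dtau1 = np.diff(tau1) / np.diff(t)
dtau2 = np.diff(tau2) / np.diff(t)

tau_max = max(tau1.max(), tau2.max())   # estimate of the delay bound
mu_est = max(dtau1.max(), dtau2.max())  # estimate of the derivative bound
```

Both estimates stay at or just below 0.5, in agreement with the values \(\tilde{\tau }=0.5\) and \(\mu =0.5\) stated in the example.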

5 Conclusions

In this paper, we investigated the finite-time synchronization of complex-valued neural networks with multiple time-varying delays and infinite distributed delays. By separating the complex-valued neural networks into their real and imaginary parts, equivalent real-valued systems have been obtained. Sufficient conditions for finite-time synchronization of the drive-response system have been derived by means of a new Lyapunov–Krasovskii functional and new analysis techniques. Numerical examples have been provided to demonstrate the effectiveness of the theoretical results.

References

Lee D (2006) Improvement of complex-valued Hopfield associative memory by using generalized projection rules. IEEE Trans Neural Netw 17(5):1341–1347

Zhou W, Zurada J (2009) Discrete-time recurrent neural networks with complex-valued linear threshold neurons. IEEE Trans Circuits Syst II 56(8):669–673

Hirose A, Yoshida S (2012) Generalization characteristics of complex-valued feedforward neural networks in relation to signal coherence. IEEE Trans Neural Netw Learn Syst 23(4):541–551

Dini D, Mandic D (2012) Class of widely linear complex Kalman filters. IEEE Trans Neural Netw Learn Syst 23(5):775–786

Zhou B, Song Q (2013) Boundedness and complete stability of complex-valued neural networks with time delay. IEEE Trans Neural Netw Learn Syst 24(8):1227–1238

Xu X, Zhang J, Shi J (2014) Exponential stability of complex-valued neural networks with mixed delays. Neurocomputing 128(128):483–490

Xu X, Zhang J, Shi J (2017) Dynamical behaviour analysis of delayed complex-valued neural networks with impulsive effect. Int J Syst Sci 48(4):686–694

Pecora L, Carroll T (1990) Synchronization in chaotic systems. Phys Rev Lett 64(8):821–824

Aihara K, Takabe T, Toyoda M (1990) Chaotic neural networks. Phys Lett A 144(6):333–340

Tan Z, Ali M (2001) Associative memory using synchronization in a chaotic neural network. Int J Mod Phys C 12(1):19–29

Hoppensteadt F, Izhikevich E (2002) Pattern recognition via synchronization in phase-locked loop neural networks. IEEE Trans Neural Netw 11(3):734–738

Park H (2006) Chaos synchronization between two different chaotic dynamical systems. Chaos Solitons Fractals 27(2):549–554

Bowong S, Kakmeni F, Fotsin H (2006) A new adaptive observer-based synchronization scheme for private communication. Phys Lett A 355(3):193–201

Sheng L, Yang H (2008) Exponential synchronization of a class of neural networks with mixed time-varying delays and impulsive effects. Neurocomputing 71(16):3666–3674

Song Q (2009) Design of controller on synchronization of chaotic neural networks with mixed time-varying delays. Neurocomputing 72(13):3288–3295

Wang Z, Zhang H (2013) Synchronization stability in complex interconnected neural networks with nonsymmetric coupling. Neurocomputing 108(5):84–92

Shen J, Cao J (2011) Finite-time synchronization of coupled neural networks via discontinuous controllers. Cogn Neurodyn 5(4):373–385

Yang X, Cao J (2010) Finite-time stochastic synchronization of complex networks. Appl Math Model 34(11):3631–3641

Mei J, Jiang M, Wang X, Han J, Wang S (2014) Finite-time synchronization of drive-response systems via periodically intermittent adaptive control. J Frankl Inst 351(5):2691–2710

Fei Y, Mei J, Wu Z (2016) Finite-time synchronisation of neural networks with discrete and distributed delays via periodically intermittent memory feedback control. IET Control Theory Appl 10(10):1630–1640

Huang J, Li C, Huang T, He X (2014) Finite-time lag synchronization of delayed neural networks. Neurocomputing 139(13):145–149

Hu C, Yu J, Jiang H (2014) Finite-time synchronization of delayed neural networks with Cohen–Grossberg type based on delayed feedback control. Neurocomputing 143(16):90–96

Shen H, Park J, Wu Z, Zhang Z (2015) Finite-time \(H^{\infty }\) synchronization for complex networks with semi-Markov jump topology. Commun Nonlinear Sci Numer Simul 24(1–3):40–51

Shen H, Ju H, Wu Z (2014) Finite-time synchronization control for uncertain Markov jump neural networks with input constraints. Nonlinear Dyn 77(4):1709–1720

Yang X (2014) Can neural networks with arbitrary delays be finite-timely synchronized? Neurocomputing 143(16):275–281

Shi L, Yang X, Li Y, Feng Z (2016) Finite-time synchronization of nonidentical chaotic systems with multiple time-varying delays and bounded perturbations. Nonlinear Dyn 83(1):75–87

Zhou C, Zhang W, Yang X, Xu C, Feng J (2017) Finite-time synchronization of complex-valued neural networks with mixed delays and uncertain perturbations. Neural Process Lett 1(4):1–21

Acknowledgements

This work was partially supported by the National Natural Science Foundation of China (Grant No. 61403050), the Science and Technology Commission Project of Chongqing (cstc2017jcyjA1082, cstc2018jcyjAX0810), the Scientific and Technological Research Program of Chongqing Municipal Education Commission (KJ1501412, KJ1601401, KJ1601410), and the Foundation of CQUE (KY201702A, KY201720B).

Cite this article

Liu, Y., Qin, Y., Huang, J. et al. Finite-Time Synchronization of Complex-Valued Neural Networks with Multiple Time-Varying Delays and Infinite Distributed Delays. Neural Process Lett 50, 1773–1787 (2019). https://doi.org/10.1007/s11063-018-9958-6