Abstract

Unique visual features of 4D light field data have been shown to affect the detection of salient objects. Nevertheless, only a few studies have explored them so far. In this study, several helpful visual features extracted from light field data are fused in a two-stage Bayesian integration framework for salient object detection. First, background-weighted color contrast is computed in a high-dimensional color space, which better distinguishes objects of interest. Second, the focusness map of the foreground slice is estimated and combined with the color contrast result via first-stage Bayesian fusion. Third, background-weighted depth contrast is computed; depth contrast has proved to be an extremely useful cue for salient object detection and is complementary to color contrast. Finally, in the second-stage Bayesian fusion step, the depth-induced contrast saliency is fused with the first-stage fusion result to obtain the final saliency map. Experiments comparing the proposed method with eight existing state-of-the-art methods on a light field benchmark dataset show that it can handle challenging scenarios such as cluttered backgrounds and achieves the most visually acceptable salient object detection results.

1 Introduction

Salient object detection is a challenging problem in computer vision that plays an important role in a variety of applications such as image and video segmentation [1], object recognition [2], object class discovery [3], image retargeting [4], image quality assessment [5], image super-resolution [6], person re-identification [7] and image deblurring [8], to name a few.

Most existing saliency approaches, such as CNTX [9], RC [10] and HDCT [11], focus only on static or dynamic 2D scenes. These methods extract visual features and cues such as color, intensity, texture and motion [12, 13] from 2D images for saliency detection. In HDCT, the saliency map of an image is represented as a linear combination of colors in a high-dimensional color space, where salient regions and backgrounds can be distinctively separated. Boundary connectivity [14], proposed in RBD, is a robust background measure that characterizes the spatial layout of image regions with respect to the image boundaries. In addition, Lin et al. [12] proposed a macroblock classification method for various video processing applications involving motion, and a convex-hull-based process was proposed in [13] to automatically determine the regions of interest of motions. The motion features computed in both works are also useful cues for saliency detection. In general, these 2D saliency algorithms work well on simple images but perform poorly in challenging scenarios. Moreover, 2D saliency methods are inherently different from the way the human visual system detects saliency. Recently, a few deep learning based frameworks such as [15] have been proposed and have achieved remarkable results and significant improvements. However, these algorithms still target 2D images: they not only ignore depth information but also require a large amount of labeled training data. It is well known that the human eye can refocus dynamically, rapidly sweeping over different depth layers, and that humans use two eyes to estimate scene depth for more reliable saliency detection, whereas most existing 2D approaches, including deep learning based methods, assume that depth information is unknown. For more details about 2D saliency models, please refer to the benchmark studies of Borji et al. [16, 17].

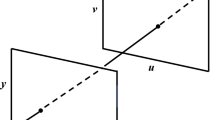

Fortunately, light fields, an active research field in recent years, offer a unique capability of post-capture refocusing. A light field can be represented as a 4D function of light rays in terms of their positions and directions, and this information can be converted into focal slices focused at different depth levels, all-focus images and depth maps using rendering and refocusing techniques. The availability of focal slices is in line with the focusness cue [18,19,20], which can be computed to separate in-focus from out-of-focus regions and thus identify salient objects. A moderately accurate depth map [21,22,23,24] can also greatly help distinguish the foreground from the background. In short, light field data provide a wealth of information, such as depth and focusness cues, which has recently been shown to be very useful for salient object detection [19, 20, 23, 25]; moreover, such data can be easily acquired in a single shot by commercial light field cameras such as Lytro and Raytrix. The pioneering work LFS [19], the first salient object detection method on light fields, explores the cues mentioned above and can deal with many tricky scenarios, including cluttered backgrounds and similar foreground and background. However, it does not explicitly use the depth information of salient objects, and its framework differs significantly from previous 2D and 3D solutions, which makes it hard to build on previous models to extend this line of research. Moreover, the saliency maps produced by LFS are not clear enough. Li et al. [20] put forward a unified saliency detection framework for handling heterogeneous types of input data, including 2D, 3D and 4D data, in which visual features such as color, texture, depth and focusness are incorporated together to highlight regions of interest. Although this algorithm can handle heterogeneous inputs, it does not fully exploit the rich information embedded in 3D and 4D data.
In fact, directly appending depth to the feature vector is not a good choice for salient object detection. Zhang et al. [25] proposed a saliency detection method, denoted DILF, which examines focusness, depth and all-focus cues from light field data. It first computes color contrast saliency and depth-induced contrast saliency, and then combines the two saliency maps by a linear weighted sum to obtain the final saliency map. According to previous studies, however, linear fusion is probably not the best choice for saliency detection. Several visual results of these saliency algorithms are shown in Fig. 1.

Inspired by these outstanding models, this paper proposes an effective two-stage Bayesian integration framework for salient object detection on light field data. The main stages of the proposed method are illustrated in Fig. 2. First, the all-focus image is segmented into a set of superpixels via the simple linear iterative clustering (SLIC) method [26], and boundary connectivity is calculated as an effective background measure. The background probability, obtained by fusing boundary connectivity with a location prior, is then used as a weighting factor. Second, a feature vector composed of multiple features in a high-dimensional color space is used to estimate color contrast saliency. Meanwhile, depth-induced contrast saliency is estimated on the depth map by computing L2-norm distances between pairs of superpixels. Third, the focusness map of the foreground slice is obtained by computing the focusness [19, 20] of the focal stack. All three maps are weighted by the background probability. Finally, these background-weighted saliency maps are fused in a two-stage Bayesian framework to obtain the final saliency map.

In brief, the main contributions of this letter are as follows. First, a new computational framework for salient object detection is proposed, which explores how to effectively use existing visual features to improve saliency detection performance. Second, a two-stage Bayesian integration strategy is adopted in the proposed framework, which further improves the performance of salient object detection.

2 Contrast-Based Saliency Computation

The proposed approach mainly integrates three saliency maps: the color contrast saliency of the all-focus image, the depth contrast saliency of the depth map, and the focusness map of the foreground slice. As shown in Fig. 2, we first estimate high-level feature priors such as background probability and focusness. Next, the three saliency maps are computed. Finally, all three maps are weighted by the background probability to accurately highlight the informative objects in an image.

2.1 Estimation of Background Probability

We observe that, as in the all-focus image, foreground objects and background regions in the depth map differ in their structure and spatial layout. Following RBD [14], the boundary connectivity prior is computed as a background measure for saliency detection. For a superpixel p, it is defined as follows.

where \(Len_{bnd}(p)\) is the length of p's region along the image boundary and \(Area(p)\) is the spanning area of superpixel p. They are computed with the following formulas:

Accordingly, background probability based on boundary connectivity is defined as:
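The boundary-connectivity step can be sketched in NumPy as below. This is a minimal sketch that assumes the RBD [14] formulation rather than transcribing this paper's equations. Geodesic distances are accumulated Lab color distances between adjacent superpixels; Floyd-Warshall is used here for simplicity. \(Area(p)\) and \(Len_{bnd}(p)\) are soft sums of \(\exp\left(-d_{geo}^2(p,p_i)/(2\sigma_{clr}^2)\right)\), and the background probability is \(1-\exp\left(-BndCon^2(p)/(2\sigma_{bndCon}^2)\right)\). The defaults \(\sigma_{clr}=10\) and \(\sigma_{bndCon}=1\) are assumed from RBD's reported settings and are not values given in this paper. The fusion with the location prior is not shown, and all function names are hypothetical.

```python
import numpy as np

# Assumed RBD-style definitions [14]; not a transcription of this paper's equations.


def geodesic_distances(colors, adjacency):
    """All-pairs geodesic distance on the superpixel graph (Floyd-Warshall).

    colors:    (N, 3) mean Lab color of each superpixel.
    adjacency: (N, N) boolean matrix, True where two superpixels touch.
    The edge weight between neighbors is their Euclidean Lab distance.
    """
    n = len(colors)
    d_app = np.linalg.norm(colors[:, None, :] - colors[None, :, :], axis=-1)
    dist = np.where(adjacency, d_app, np.inf)
    np.fill_diagonal(dist, 0.0)
    for k in range(n):  # allow paths that pass through superpixel k
        dist = np.minimum(dist, dist[:, k:k + 1] + dist[k:k + 1, :])
    return dist


def boundary_connectivity(dist, on_boundary, sigma_clr=10.0):
    """BndCon(p) = Len_bnd(p) / sqrt(Area(p)) with soft (Gaussian) spans."""
    span = np.exp(-dist ** 2 / (2.0 * sigma_clr ** 2))  # unreachable pairs -> 0
    area = span.sum(axis=1)                              # Area(p)
    len_bnd = (span * on_boundary[None, :]).sum(axis=1)  # Len_bnd(p)
    return len_bnd / np.sqrt(area)


def background_probability(bnd_con, sigma_bndcon=1.0):
    """Map boundary connectivity to a background probability in [0, 1)."""
    return 1.0 - np.exp(-bnd_con ** 2 / (2.0 * sigma_bndcon ** 2))
```

For N = 300 superpixels, the O(N³) loop stays cheap. Any all-pairs shortest-path routine could replace it.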

2.2 Focusness Estimation of Foreground Slice

For the 4D light field, the focusness of each focal slice \(f_i\) is defined as the mean distance to the 8-neighbors in the LAB color space:

where \(fea^{color}\) is the color vector of superpixel p in the LAB color space. Next, for each slice \(f_i\), the focusness values of all pixels are projected onto the x and y axes to obtain two 1D focusness distributions, denoted \(D_x^i\) and \(D_y^i\), which are defined as:

To select the foreground slice, similar to LFS [19], a background likelihood score (BLS) is computed for each focal slice \(f_i\) using a suppression filter:

where \(\rho = \exp \left( \dfrac{\lambda \cdot i}{N}\right) \) is a weighting factor and \(u\left( \cdot ,\cdot \right) \) is the U-shaped 1D band suppression filter defined in LFS. The slice with the lowest BLS is selected as the foreground slice fg, and its focusness map is denoted \(S_F\left( p\right) \).
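The focusness and foreground-slice selection steps can be sketched as follows. The sketch makes several assumptions that the text leaves open:

- Focusness is computed per pixel as the mean Euclidean Lab distance to the 8 neighbors, with edge padding.
- \(D_x^i\) and \(D_y^i\) are the column and row sums of the focusness map, normalized to sum to one.
- \(u(x,w)=1/\sqrt{1+(x/\eta)^2}+1/\sqrt{1+((w-x)/\eta)^2}\) is our reading of the LFS filter.

No values of \(\lambda\) or \(\eta\) are given here, so the hypothetical functions below require `lam` and `eta` explicitly. `eta` must be small relative to the slice size for the filter to be U-shaped.

```python
import numpy as np

# 8-neighborhood offsets (dy, dx).
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]


def focusness_map(lab):
    """Per-pixel focusness: mean Lab distance to the 8 neighbors (edge-padded)."""
    h, w, _ = lab.shape
    padded = np.pad(lab, ((1, 1), (1, 1), (0, 0)), mode="edge")
    total = np.zeros((h, w))
    for dy, dx in OFFSETS:
        shifted = padded[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
        total += np.linalg.norm(lab - shifted, axis=-1)
    return total / len(OFFSETS)


def projections(focus):
    """1D distributions D_x (column sums) and D_y (row sums), each summing to 1."""
    d_x = focus.sum(axis=0)
    d_y = focus.sum(axis=1)
    return d_x / max(d_x.sum(), 1e-12), d_y / max(d_y.sum(), 1e-12)


def u_filter(length, eta):
    """Assumed U-shaped band suppression filter: large near both borders."""
    x = np.arange(length, dtype=float)
    return 1.0 / np.sqrt(1.0 + (x / eta) ** 2) + 1.0 / np.sqrt(1.0 + ((length - x) / eta) ** 2)


def background_likelihood(focus, i, n_slices, lam, eta):
    """BLS of slice i: rho * (<D_x, u> + <D_y, u>) with rho = exp(lam * i / N)."""
    d_x, d_y = projections(focus)
    rho = np.exp(lam * i / n_slices)
    return rho * (d_x @ u_filter(len(d_x), eta) + d_y @ u_filter(len(d_y), eta))


def select_foreground_slice(lab_slices, lam, eta):
    """Return the index of the slice with the lowest BLS and its focusness map."""
    maps = [focusness_map(s) for s in lab_slices]
    scores = [background_likelihood(m, i, len(maps), lam, eta) for i, m in enumerate(maps)]
    fg = int(np.argmin(scores))
    return fg, maps[fg]
```

A slice whose in-focus texture hugs the image borders gets a high BLS. A slice focused near the center gets a low BLS, so it is selected as the foreground slice.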

2.3 Color Contrast Computation in High-Dimensional Color Space

For color-based saliency, following HDCT [11], we compute various color features, including the average pixel color, color contrast and color histograms, in different color spaces such as RGB, CIELab and HSV. Color contrast is of two types: local and global. Local contrast is defined as the difference in color features between superpixel p and its k nearest neighboring patches, and global contrast as the difference between superpixel p and all other patches. Unlike HDCT, we also include Gabor filter responses at 4 scales and 12 orientations as additional texture features. Texture features are particularly helpful when objects of similar color appear in both the foreground and background regions. All these features are concatenated into a 123-dimensional feature vector for each superpixel, which is used to compute the color-based saliency \(S_C\left( p\right) \).
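The local and global contrast features can be illustrated with the sketch below. It makes two assumptions:

- A superpixel's k nearest neighbors are chosen by the spatial distance between centroids.
- Contrast is the per-dimension absolute feature difference, averaged over those neighbors (local) or over all other superpixels (global).

HDCT's exact weighting may differ. The sketch omits the Gabor texture features and the mapping from the 123-dimensional vector to \(S_C\left( p\right)\). The function name is hypothetical.

```python
import numpy as np


def contrast_features(features, centroids, k=4):
    """Local and global contrast of each superpixel's feature vector.

    features:  (N, D) per-superpixel features (e.g. mean RGB, Lab and HSV values).
    centroids: (N, 2) superpixel centers; the k spatially nearest superpixels
               define the local neighborhood (an assumption).
    Returns an (N, 2 * D) array: [local contrast | global contrast].
    """
    n = len(features)
    diff = np.abs(features[:, None, :] - features[None, :, :])  # (N, N, D)
    # Global contrast: mean difference to every other superpixel (diff[p, p] = 0).
    global_c = diff.sum(axis=1) / (n - 1)
    # Local contrast: mean difference to the k nearest superpixels in space.
    d_pos = np.linalg.norm(centroids[:, None, :] - centroids[None, :, :], axis=-1)
    np.fill_diagonal(d_pos, np.inf)          # never pick p as its own neighbor
    nn = np.argsort(d_pos, axis=1)[:, :k]    # (N, k) neighbor indices
    local_c = diff[np.arange(n)[:, None], nn].mean(axis=1)  # (N, D)
    return np.hstack([local_c, global_c])
```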

2.4 Depth-Induced Contrast Computation

Depth has proved very helpful for salient object detection and is complementary to color contrast. However, directly appending the depth map to the feature vector is probably a poor choice. Similar to contrast-based methods such as RBD [14] and DILF [25], we compute the depth-induced contrast \(S_D\left( p_i\right) \) for superpixel \(p_i\):

where \(U_{f}\) is the average depth value of superpixel p, and \(W_{pos}\left( p_i,p_j\right) \) is the \(L_2\)-norm distance between superpixel \(p_i\) and each other superpixel \(p_j\).
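A possible form of the depth-induced contrast is sketched below. The equation is not reproduced here, so the sketch assumes an RBD-style weighted contrast, \(S_D(p_i)=\sum_{j}W_{pos}(p_i,p_j)\,|U_f(p_i)-U_f(p_j)|\). The spatial weight is assumed to be a Gaussian of the centroid \(L_2\) distance. Both that Gaussian form and \(\sigma_{pos}=0.25\) (on normalized coordinates) are borrowed from RBD's spatial weighting; they are not values reported in this paper, and the function name is hypothetical.

```python
import numpy as np


def depth_contrast(mean_depth, centroids, sigma_pos=0.25):
    """Spatially weighted depth contrast S_D(p_i), rescaled to [0, 1].

    mean_depth: (N,) average depth U_f of each superpixel.
    centroids:  (N, 2) superpixel centers in normalized [0, 1] coordinates.
    The Gaussian spatial weight and sigma_pos are assumptions (RBD-style).
    """
    d_depth = np.abs(mean_depth[:, None] - mean_depth[None, :])
    d_pos = np.linalg.norm(centroids[:, None, :] - centroids[None, :, :], axis=-1)
    w_pos = np.exp(-d_pos ** 2 / (2.0 * sigma_pos ** 2))  # nearby pairs count more
    s = (w_pos * d_depth).sum(axis=1)
    return (s - s.min()) / (s.max() - s.min() + 1e-12)
```

Weighting by spatial proximity makes a superpixel stand out when its depth differs from its surroundings, rather than from distant regions.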

2.5 Two-Stage Bayesian Fusion Framework for Salient Object Detection

According to our observations, the background prior is complementary not only to color contrast but also to depth-induced contrast and the focusness of the foreground slice. Hence, all three maps (color contrast, depth-induced contrast and focusness) are weighted by the background probability bgPb(p) as follows:

The next step is to fuse the saliency maps in a Bayesian framework; in this paper, Bayesian fusion is conducted twice in succession. Bayes' formula has been used in recent studies [27, 28] to compute saliency as a posterior probability:

where H(z) is a feature vector of pixel z and the prior probability S(z) is a coarse saliency map. The likelihood probabilities are computed as:

where \(N_F\) and \(N_B\) are the numbers of pixels in the foreground F and background B, respectively, and \(N_{bF\left( r(z)\right) }\) and \(N_{bB\left( r(z)\right) }\) denote the numbers of pixels whose color features belong to the foreground bin \(bF\left( r(z)\right) \) and background bin \(bB\left( r(z)\right) \), respectively.
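The posterior computation can be sketched as follows, assuming the Bayesian integration scheme of [28]:

- The prior map is thresholded at its mean to split pixels into F and B.
- The other saliency map's values, quantized into bins, play the role of the feature H(z).
- The likelihoods are the normalized bin counts \(N_{bF(r(z))}/N_F\) and \(N_{bB(r(z))}/N_B\).
- The posterior is \(S(z)Pb(H(z)|F)/\left[S(z)Pb(H(z)|F)+(1-S(z))Pb(H(z)|B)\right]\).

The number of bins is an illustrative choice, and `bayes_posterior` is a hypothetical name.

```python
import numpy as np


def bayes_posterior(prior, observation, n_bins=10):
    """Pb(F | H(z)) for every pixel z, with histogram likelihoods.

    prior:       coarse saliency map S(z) in [0, 1]; thresholding it at its
                 mean splits pixels into foreground F and background B (assumed).
    observation: second saliency map in [0, 1]; its binned value is H(z).
    """
    fg = prior >= prior.mean()
    bins = np.minimum((observation * n_bins).astype(int), n_bins - 1)
    n_f = max(int(fg.sum()), 1)                         # N_F
    n_b = max(int((~fg).sum()), 1)                      # N_B
    count_f = np.bincount(bins[fg], minlength=n_bins)   # N_bF for each bin
    count_b = np.bincount(bins[~fg], minlength=n_bins)  # N_bB for each bin
    like_f = count_f[bins] / n_f                        # Pb(H(z) | F)
    like_b = count_b[bins] / n_b                        # Pb(H(z) | B)
    num = prior * like_f
    return num / (num + (1.0 - prior) * like_b + 1e-12)
```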

In the first fusion stage, we fuse the color contrast saliency \(S_C^B\) with the focusness map \(S_F^B\). First, \(S_C^B\) is treated as the prior probability and \(S_F^B\) is used to compute the likelihood \(Pb\left( S_F^B(z)|F_1\right) \). Vice versa, \(S_F^B\) then serves as the prior probability and \(S_C^B\) is used to compute the likelihood \(Pb\left( S_C^B(z)|F_2\right) \). The two resulting posterior probabilities are computed by Bayes' formula and then integrated into a single saliency map:

The result of the first fusion stage, denoted \(S_{CF}^B\), is then fused in the same way with the depth-induced contrast saliency \(S_D^B\) in the second fusion stage to obtain the final saliency map.
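Both fusion stages can be sketched as below. The text only says that the two posteriors of a stage are integrated; this sketch assumes they are summed and rescaled to [0, 1]. It repeats the histogram-based posterior so that the block runs on its own. The bin count and all function names are illustrative assumptions.

```python
import numpy as np


def posterior(prior, obs, n_bins=10):
    """Pb(F | obs(z)); F = pixels where prior >= mean(prior) (assumed)."""
    fg = prior >= prior.mean()
    bins = np.minimum((obs * n_bins).astype(int), n_bins - 1)
    like_f = np.bincount(bins[fg], minlength=n_bins)[bins] / max(int(fg.sum()), 1)
    like_b = np.bincount(bins[~fg], minlength=n_bins)[bins] / max(int((~fg).sum()), 1)
    num = prior * like_f
    return num / (num + (1.0 - prior) * like_b + 1e-12)


def bayes_fuse(s1, s2, n_bins=10):
    """Each map acts once as prior and once as likelihood source; sum, rescale."""
    fused = posterior(s1, s2, n_bins) + posterior(s2, s1, n_bins)
    return (fused - fused.min()) / (fused.max() - fused.min() + 1e-12)


def two_stage_fusion(s_c, s_f, s_d, n_bins=10):
    """Stage 1: S_C^B with S_F^B gives S_CF^B. Stage 2: S_CF^B with S_D^B."""
    s_cf = bayes_fuse(s_c, s_f, n_bins)
    return bayes_fuse(s_cf, s_d, n_bins)
```

The symmetric form means neither map dominates: each one both proposes the foreground and scores the other's evidence.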

3 Experimental Results

3.1 Visual Comparison with Other Methods

To illustrate the effectiveness of the proposed approach, we performed experiments on LFSD [19], the only light field saliency dataset available. LFSD includes 40 outdoor and 60 indoor scenes, each captured with a Lytro camera. For each scene, three users were asked to manually label the salient objects in the all-focus image.

The proposed approach is qualitatively compared with all the light field saliency methods known to us, namely LFS [19], WSC [20] and DILF [25]. A visual comparison is shown in Fig. 3. The proposed method not only highlights the entire salient object but also noticeably suppresses background noise. It robustly detects objects of interest in challenging scenarios such as similar foreground and background and cluttered backgrounds, and achieves the most visually acceptable salient object detection results.

3.2 Performance Evaluation Measures

For quantitative evaluation, we use the standard precision-recall curve (PRC). Given a saliency map with values in the range [0,255], we threshold it at a threshold T within [0,255] to obtain a binary mask of the salient object. We then vary T from 0 to 255 and compute precision, recall and F-measure at each threshold to compare the quality of different saliency maps. The PRCs of different methods on LFSD are shown in Fig. 4a; the curve of the proposed approach lies above those of the other approaches for most recall rates. Following FT [32], the F-measure, an overall performance measure defined as the weighted harmonic mean of precision and recall, is also used for evaluation. It is defined as:
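The threshold sweep and the F-measure can be sketched as follows, using the FT definition \(F_\beta=(1+\beta^2)\,P\,R/(\beta^2 P+R)\) with \(\beta^2=0.3\). Treating a pixel as salient when its value is at least T is an assumed convention; evaluation scripts differ on this detail. The function name is hypothetical.

```python
import numpy as np


def pr_curve(saliency, gt, beta2=0.3):
    """Precision, recall and F-measure at every threshold T = 0..255.

    saliency: saliency map with values in [0, 255].
    gt:       boolean ground-truth mask of the salient object.
    A pixel is taken as salient when saliency >= T (an assumed convention).
    """
    n_gt = max(int(gt.sum()), 1)
    precision, recall, f_measure = [], [], []
    for t in range(256):
        mask = saliency >= t
        tp = int(np.logical_and(mask, gt).sum())
        p = tp / max(int(mask.sum()), 1)
        r = tp / n_gt
        f = (1 + beta2) * p * r / (beta2 * p + r) if p + r > 0 else 0.0
        precision.append(p)
        recall.append(r)
        f_measure.append(f)
    return np.array(precision), np.array(recall), np.array(f_measure)
```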

As shown in Fig. 4b, the F-measure does not clearly separate the proposed method from DILF, but the proposed method is clearly superior to the remaining methods. To further validate our method, the precision, recall and F-measure scores of all compared methods are shown in Fig. 4c. Our method achieves the highest scores on all measures except recall, where it is second only to DILF.

As noted in SF [31], the PRC does not take into account the true negative detection of non-salient pixels in an image. Therefore, the mean absolute error (MAE) between the saliency map and the ground truth is also used for a more balanced comparison. It is defined as:

where S is a saliency map, GT is the ground truth image, and W and H are the width and height of S and GT, respectively. As shown in Fig. 4d, our method achieves the lowest MAE among all methods. In our experiments, we set the number of superpixels N to 300 and set \(\beta ^2 =0.3\) to emphasize precision in the F-measure.
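The MAE can be computed as in the short sketch below. It assumes both maps are rescaled to [0, 1] before the absolute difference is averaged over all \(W \times H\) pixels. The function name is hypothetical.

```python
import numpy as np


def mean_absolute_error(saliency, gt):
    """MAE = 1/(W*H) * sum_{x,y} |S(x, y) - GT(x, y)| with both maps in [0, 1].

    Maps whose maximum exceeds 1 are assumed to be 8-bit (0..255) and rescaled.
    """
    s = np.asarray(saliency, dtype=float)
    g = np.asarray(gt, dtype=float)
    if s.max() > 1.0:
        s = s / 255.0
    if g.max() > 1.0:
        g = g / 255.0
    return float(np.abs(s - g).mean())
```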

Table 1 compares the average computational time per image of the state-of-the-art algorithms mentioned above, including ours. The running time is measured on a computer with an Intel Dual Core i5-2320 3.0 GHz CPU. Although the proposed algorithm is implemented in MATLAB 2015a with unoptimized code, its running time is comparable to that of the other algorithms.

As illustrated in Fig. 5, we also show two failure cases of the proposed approach. Its performance partially depends on the accuracy of the depth map: if the depth map is severely blurred or amorphous, our model may produce incorrect results. Although many outstanding models can be used to estimate depth maps, obtaining accurate depth maps for natural cluttered images remains a challenging problem.

4 Conclusion

In this paper, color-based contrast, depth-induced contrast and the focusness map of the foreground slice, each weighted by background probability, are fused in a two-stage Bayesian integration algorithm for salient object detection. We investigate the importance of depth and focusness cues for salient object detection on light field data. Experimental results show that the color feature is complementary to the focusness and depth cues. The three features are of great help for salient object detection, and the proposed method achieves desirable results on the light field dataset LFSD compared with many state-of-the-art saliency approaches. Because it partially depends on the quality of the depth map, the proposed method might fail in certain cases. In future work, we will estimate more accurate depth maps from light fields to improve the accuracy of salient object detection.

References

Schade U, Meinecke C (2011) Texture segmentation: do the processing units on the saliency map increase with eccentricity? Vis Res 51(1):1–12

Ren Z, Gao S, Chia L-T, Tsang IWH (2014) Region-based saliency detection and its application in object recognition. IEEE Trans Circuits Syst Video Technol 24(5):769–779

Zhu J-Y, Wu J, Xu Y, Chang EIC, Tu Z (2015) Unsupervised object class discovery via saliency-guided multiple class learning. IEEE Trans Pattern Anal Mach Intell 37(4):862–875

Chen Y, Pan Y, Song M, Wang M (2015) Image retargeting with a 3D saliency model. Signal Process 112:53–63

Saha A, Wu QMJ (2013) A study on using spectral saliency detection approaches for image quality assessment. In: IEEE international conference on acoustics, speech and signal processing, ICASSP 2013, Vancouver, 26–31 May 2013, pp 1889–1893

Sadaka NG, Karam LJ (2011) Efficient super-resolution driven by saliency selectivity. In: 18th IEEE international conference on image processing, ICIP 2011, Brussels, 11–14 Sept 2011, pp 1197–1200

Zhao R, Ouyang W, Wang X (2013) Unsupervised salience learning for person re-identification. In: 2013 IEEE conference on computer vision and pattern recognition, Portland, 23–28 June 2013, pp 3586–3593

Zhang C, Lin W, Li W, Zhou B, Xie J, Li J (2013) Improved image deblurring based on salient-region segmentation. Signal Process Image Commun 28(9):1171–1186

Goferman S, Zelnik-Manor L, Tal A (2010) Context-aware saliency detection. In: The 23rd IEEE conference on computer vision and pattern recognition, CVPR 2010, San Francisco, 13–18 June 2010, pp 2376–2383

Cheng M-M, Mitra NJ, Huang X, Torr PHS, Hu S-M (2015) Global contrast based salient region detection. IEEE Trans Pattern Anal Mach Intell 37(3):569–582

Kim J, Han D, Tai Y-W, Kim J (2014) Salient region detection via high-dimensional color transform. In: 2014 IEEE conference on computer vision and pattern recognition, CVPR 2014, Columbus, 23–28 June 2014, pp 883–890

Lin W, Sun MT, Li H, Chen Z, Li W, Zhou B (2012) Macroblock classification method for video applications involving motions. IEEE Trans Broadcast 58(1):34–46

Han X, Li G, Lin W, Su X, Li H, Yang H, Wei H (2012) Periodic motion detection with ROI-based similarity measure and extrema-based reference-frame selection. In: Signal & information processing association annual summit and conference (APSIPA ASC), 2012 Asia-Pacific, pp 1–4

Zhu W, Liang S, Wei Y, Sun J (2014) Saliency optimization from robust background detection. In: 2014 IEEE conference on computer vision and pattern recognition, CVPR 2014, Columbus, 23–28 June 2014, pp 2814–2821

Zhao R, Ouyang W, Li H, Wang X (2015) Saliency detection by multi-context deep learning. In: 2015 IEEE conference on computer vision and pattern recognition (CVPR), pp 1265–1274

Borji A, Sihite DN, Itti L (2012) Salient object detection: a benchmark. In: Computer vision–ECCV 2012: 12th European conference on computer vision, Florence, 7–13 Oct 2012, Proceedings, part II, pp 414–429

Borji A, Cheng M-M, Jiang H, Li J (2015) Salient object detection: a benchmark. IEEE Trans Image Process 24(12):5706–5722

Jiang P, Ling H, Yu J, Peng J (2013) Salient region detection by UFO: uniqueness, focusness and objectness. In: IEEE international conference on computer vision, ICCV 2013, Sydney, 1–8 Dec 2013, pp 1976–1983

Li N, Ye J, Ji Y, Ling H, Yu J (2014) Saliency detection on light field. In: The IEEE conference on computer vision and pattern recognition (CVPR)

Li N, Sun B, Yu J (2015) A weighted sparse coding framework for saliency detection. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 5216–5223

Niu Y, Geng Y, Li X, Liu F (2012) Leveraging stereopsis for saliency analysis. In: 2012 IEEE conference on computer vision and pattern recognition, Providence, 16–21 June 2012, pp 454–461

Desingh K, Madhava Krishna K, Rajan D, Jawahar CV (2013) Depth really matters: improving visual salient region detection with depth. In: British machine vision conference, BMVC 2013, Bristol, 9–13 Sept 2013

Peng H, Li B, Xiong W, Hu W, Ji R (2014) RGBD salient object detection: a benchmark and algorithms. In: Computer vision–ECCV 2014—13th European conference, Zurich, 6–12 Sept 2014, Proceedings, part III, pp 92–109

Ren J, Gong X, Yu L, Zhou W, Yang MY (2015) Exploiting global priors for RGB-D saliency detection. In: 2015 IEEE conference on computer vision and pattern recognition workshops (CVPRW), pp 25–32

Zhang J, Wang M, Gao J, Wang Y, Zhang X, Wu X (2015) Saliency detection with a deeper investigation of light field. In: Proceedings of the 24th international joint conference on artificial intelligence, IJCAI 2015, Buenos Aires, 25–31 July 2015, pp 2212–2218

Achanta R, Shaji A, Smith K, Lucchi A, Fua P, Süsstrunk S (2012) SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans Pattern Anal Mach Intell 34(11):2274–2282

Xie Y, Lu H, Yang MH (2013) Bayesian saliency via low and mid level cues. IEEE Trans Image Process 22(5):1689–1698

Li X, Lu H, Zhang L, Ruan X, Yang M-H (2013) Saliency detection via dense and sparse reconstruction. In: IEEE international conference on computer vision, ICCV 2013, Sydney, 1–8 Dec 2013, pp 2976–2983

Yang C, Zhang L, Lu H, Ruan X, Yang M-H (2013) Saliency detection via graph-based manifold ranking. In: 2013 IEEE conference on computer vision and pattern recognition, Portland, 23–28 June 2013, pp 3166–3173

Wei Y, Wen F, Zhu W, Sun J (2012) Geodesic saliency using background priors. In: Computer vision– ECCV 2012—12th European conference on computer vision, Florence, 7–13 Oct 2012, Proceedings, part III, pp 29–42

Perazzi F, Krähenbühl P, Pritch Y, Hornung A (2012) Saliency filters: contrast based filtering for salient region detection. In: 2012 IEEE conference on computer vision and pattern recognition, Providence, 16–21 June 2012, pp 733–740

Achanta R, Hemami SS, Estrada FJ, Süsstrunk S (2009) Frequency-tuned salient region detection. In: 2009 IEEE computer society conference on computer vision and pattern recognition (CVPR 2009), Miami, 20–25 June 2009, pp 1597–1604

Acknowledgements

We thank reviewers for valuable comments to improve the paper. This study in part is funded by The National Key Research and Development Program of China (Grants Nos. 2016YFB0700802, 2016YFB0800600), The National Natural Science Foundation of China (Grant No. 61305091), The Innovative Youth Projects of Ocean Remote Sensing Engineering Technology Research Center of State Oceanic Administration of China (Grant No. 2015001), and The Foundation of Sichuan Educational Committee (Grant No. 13ZB0103).

Cite this article

Wang, A., Wang, M., Li, X. et al. A Two-Stage Bayesian Integration Framework for Salient Object Detection on Light Field. Neural Process Lett 46, 1083–1094 (2017). https://doi.org/10.1007/s11063-017-9610-x

DOI: https://doi.org/10.1007/s11063-017-9610-x