Abstract

Images captured under low-light conditions often suffer from severe loss of structural details and color; therefore, image-enhancement algorithms are widely used in low-light image restoration. Image-enhancement algorithms based on the traditional Retinex model only consider the change in the image brightness, while ignoring the noise and color deviation generated during the process of image restoration. In view of these problems, this paper proposes an image enhancement network based on multi-stream information supplement, which contains a mainstream structure and two branch structures with different scales. To obtain richer feature information, an information complementary module is designed to realize the information supplement for the three structures. The feature information from the three structures is then concatenated to perform the final image recovery operation. To restore more abundant structures and realistic colors, we define a joint loss function by combining the L1 loss, structural similarity loss, and color-difference loss to guide the network training. The experimental results show that the proposed network achieves satisfactory performance in both subjective and objective aspects.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Images acquired by camera equipment under insufficient or uneven light conditions often have some problems such as detail loss, partial underexposure, and low resolution (Rao & Chen, 2012). This type of image provides a very poor visual experience and also affects the accuracy of the computer vision system in collecting image information. There are usually two solutions to this problem. One is to upgrade the hardware equipment, the other is to improve the performance of image enhancement algorithms. With the development of industrial technology, camera equipment has been rapidly improved. However, when taking pictures at night, cameras are often ineffective in obtaining high brightness images. This makes their use impractical because of the high cost of upgrading the hardware. Therefore, researchers attempted to address the problem of insufficient illumination through software and employed image-enhancement algorithms to improve the brightness and contrast of captured images (Ko et al., 2017; Yu & Zhu, 2019).

Image-enhancement methods are mainly divided into two categories: traditional methods and deep learning methods. Earlier algorithms mainly utilize local or global histogram equalization to dynamically stretch the range of the gray values of low-light images to enhance the contrast of the image and improve the visual effect. Li et al. (2015) proposed an image-enhancement framework for simultaneous image contrast enhancement and image denoising. Sujee and Padmavathi (2017) introduced the idea of a pyramid into histogram equalization to improve image contrast and extract as much image information as possible. Although these methods require a short time for image enhancement, they do not consider the degradation factors in the actual imaging, and their performance is poor in complex scenes.

Since the Retinex theory was first proposed by Land (1977) in the 1960s, several Retinex model-based enhancement methods have been proposed. This theory explains that the scene observed by the human eye is determined by two factors: the reflection property of the object itself and the illumination around the object. The Retinex mathematical model can be expressed as:

where S represents the acquired low-light image, R is a normal exposure image, L is an illuminance image, and ‘o’ is a symbol representing element-wise multiplication.

At present, most of the traditional enhancement algorithms for low-light images are based on the Retinex theory. Previously, Jobson et al., (1997a) proposed a single-scale Retinex enhancement method and then introduced the idea of multiscale Retinex (Jobson et al., 1997b) to enhance the image and achieve color recovery. Yang et al., (2018) combined a dual-tree complex wavelet transform and the Retinex theory to adjust the contrast of an image to obtain an enhanced image. Park et al. (2017) proposed the use of variational optimization to separate the reflection and illumination of the Retinex model to achieve image enhancement at a low cost. Yu et al. (2017) proposed an illumination-reflection model that not only can enhance the image taken in low light but also can remove the amplified noise. Although the aforementioned methods can yield clearer images, the unknown parameter L needs to be estimated when solving the parameters of the Retinex model. If these parameters are not accurately estimated, the enhanced results will be prone to overexposure or underexposure and to color distortion.

In recent years, because of its good learning ability, deep learning has also made many achievements in image enhancement. Guo et al., (2019) proposed an image enhancement network based on a discrete wavelet transform and a convolutional neural network (CNN); it is composed of a denoising module and an image enhancement module. Tao et al. (2017) proposed a joint framework for image enhancement, which included a CNN-based denoising network and an enhancement model based on an atmospheric scattering model. Lee et al., (2020) introduced the idea of unsupervised learning into the field of image enhancement for the first time and employed a saturation loss function to preserve the image details. Jiang et al., (2021) proposed an efficient and unsupervised generative adversarial network, called EnlightenGAN, which is trained without relying on low/normal-light paired images. The above methods can avoid solving the parameters of the Retinex model in the traditional method and obtain good enhancement results. However, they do not consider the loss of color in low-light images.

On the basis of the analysis described above, we propose an end-to-end image enhancement network based on a multi-stream information supplement in this paper. To avoid losing details in the process of network learning, we ensure that the size of the feature maps in the mainstream structure is consistent with that of the network input. To recover more semantic information, two branches are constructed by reducing the scale of the feature maps to provide supplementary information for the mainstream structure. To avoid the color distortion of the enhancement results, we introduce the color difference loss as one term of the joint loss function to measure the color similarity between the enhancement result and the ground truth.

The main contributions of this work are as follows:

-

1.

An image enhancement network based on multi-stream information supplement is proposed for low-light image restoration. The network includes a mainstream structure and two branch structures, which are designed to learn the feature information of different scales.

-

2.

To learn more abundant structural details, an information complementary module is designed to realize the mutual supplement of the feature information extracted by the three branches.

-

3.

To ensure that the enhancement result is closer to the real natural image, a joint loss function is defined to guide the training of the network.

2 The proposed method

2.1 The network framework

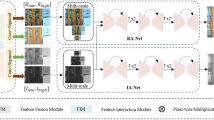

Most of the deep learning network models expand the receptive field of convolution kernels by reducing the size of the extracted feature maps and then recover the size of the feature maps by performing a deconvolution operation using the U-Net (Ronneberger et al., 2015) structure. Although this method can learn the feature information of different scales, it will also cause the loss of the information due to the increase of network depth. In order to reduce the loss of information, we propose an image enhancement network based on multi-stream information supplement, as shown in Fig. 1. After the feature extraction layers, we design a multi-stream structure containing three branches, each of which receives feature information of different scales. In each branch, the size of the feature maps remains unchanged to reduce the loss of information in the learning process. The first branch is also called the mainstream branch to ensure that the network learns more details at the original scale. The second branch receives the down-sampled feature maps from the mainstream branch by using convolutions with stride-2. The third branch receives the down-sampled feature maps from the mainstream branch and the second branch. In this branch, the down-sampling operations are realized by using convolutions with stride-4 and stride-2, respectively. In the multi-stream structure, reducing the scale of the feature maps can expand the receptive field of the convolution kernels and extract more semantic information. In addition, the cross-scale feature supplement method, that is, the third branch receives feature maps of multiple branches, can further enhance the feature information of the image.

To learn more complete information, an information supplement mechanism among the three branches is constructed, as shown in the dashed box in the lower left corner in Fig. 1. The way of information supplement between different branches is as follows. The feature maps of the mainstream branch are down-sampled and then supplemented to the second branch and the third branch, and the feature maps of the second branch are up-sampled and down-sampled and then sent to the mainstream branch and the third branch, respectively. In the same way, the feature maps of the third branch are up-sampled and then sent to the second branch and the mainstream branch, respectively. These feature maps of the three branches are interactively supplemented and then integrated through the convolutional layers.

The integrated feature maps are then sent to the image recovery part to realize the reconstruction of the enhanced image through the following four stages. First, the feature maps of the third branch are up-sampled and concatenated with the feature maps of the second branch. Then, a convolutional layer and an up-sampling operation are used to reduce the number of channels and restore the size of feature maps. Next, the up-sampled feature maps of the second branch are concatenated with the feature maps of the mainstream branch, and finally the final enhanced image is obtained through a convolutional layer.

In the network of Fig. 1, different colors are used to represent different scales, each color block represents 32 channels of the feature maps, and the size of all convolution kernels is 3 × 3.

2.2 Loss functions

The quality of the network model is affected by the loss function used in the training, in addition to the training set and the network framework itself. To recover good structural information and realistic color during the enhancement process, we designed a joint loss function that combines the L1 loss, structural similarity loss, and color difference loss to train the proposed network.

2.2.1 L1 loss

L1 loss provides a quantitative method to measure the pixel level difference between the enhanced image and the real image. Therefore, we use the L1 normal formula as a part of the total loss function, which is calculated as follows:

where N is the number of pixels, y represents the ground truth, i is the index of the pixel, f(S) is the output of the network, and \(|| \cdot ||_{1}\) represents the L1 norm.

2.2.2 Structural similarity loss

The structural similarity index (SSIM) is a measure of the structural similarity between two images (Wang et al., 2004). The proposed method involves a mainstream network structure and two tributaries, in which a convolution operation is performed on three scales of the feature maps to learn the structural details. To avoid blurring or loss of details in the image scale transformation, we utilize a multiscale SSIM (MSSSIM) quality evaluation method (Wang et al., 2003) to construct a structural similarity loss function as one term of the joint loss function. The MSSSIM is defined as follows:

where a1 and a2 are the corresponding input images, l(a1, a2) is the luminance, c is the contrast, s is the structural similarity, i is the index of the pixel, m is the total number of pixels, and α, β and γ are the parameters that adjust the importance of l, c and m. The larger the value of MS, the more complete the structural information. For the convergence of the network training, the structural similarity loss function is defined as follows:

Therefore, based on Eq. (4), the structural similarity loss function LS of the image brightness enhancement network can be defined as:

2.2.3 Color-difference Loss

In low-light images, the color information of objects is easily hidden owing to insufficient light. However, owing to the lack of reference information in the process of image enhancement, the enhanced results are prone to color distortion. The CIEDE2000 index (Sharma et al., 2005) is used to calculate the color difference between two images. Therefore, this paper introduces the CIEDE2000 index to construct the color difference loss, which is used to measure the color similarity between the enhanced image and the ground truth. The calculation process is as follows: First, the two images are converted from the RGB color space to the Lab color space; then, the color difference ΔE between each pixel of the ground truth \(y\) and the enhanced image f(S) is calculated according to the CIEDE2000 method. The color difference loss of the two images is defined as follows:

where ΔE measures the color difference of each pixel between two images in the Lab space.

2.2.4 Total loss

Based on the aforementioned definition, the joint loss function is expressed as follows:

where L1 is the L1-norm loss, LS is the structural similarity loss, Lc is the color difference loss, and ρ, σ and δ are the weights of the losses, which are set to 1, 1, and 3, respectively, and are obtained by trial and error.

3 Experiments and analysis

3.1 Dataset and experimental setup

To avoid the overfitting of the network caused by a small amount of training data, we used two datasets to train the proposed network. The first one is called the LOw-Light (LOL) dataset (Wei et al., 2018), which contains 485 training images, and the second one is a synthetic database, which contains 1000 normal exposure images with a size of 400 × 600 pixels selected from the RAISE dataset (Duc et al., 2015). For testing, the test set of the LOL dataset which includes 15 images and 60 test images from the dataset of CVPR2021 (Afifi et al., 2021) were used.

During the training process, we set the learning rate to 0.0004 and the batch size to 6. All experiments were performed using an NVIDIA GTX 1080Ti GPU and the PyTorch framework.

3.2 Visual comparison

To illustrate the effectiveness of the proposed method, we compared it with some existing mainstream methods, including DONG (Dong et al., 2011), LIME (Guo et al., 2017), MF (Fu et al., 2016), BIMEF (Ying et al., 2017), Retinex-net (Wei et al., 2018), KinD (Zhang et al., 2019) and EnlightenGan (Jiang et al., 2021) on LOL dataset. To demonstrate the performance of the methods, we selected two test images from the dataset to illustrate the enhancement results of various methods, as shown in Figs. 2 and 3, respectively.

As can be seen from Fig. 2, the results of DONG, LIME, MF, Retinex-net and EnlightenGan show serious color deviation, and the color of the background wall is obviously different from that of the ground truth. The overall brightness of BIMEF is lower than that of the ground truth. KinD can obtain a good brightness result, but cannot restore the color well, and there is an excessive smoothing phenomenon. The result of the proposed method can not only restore the brightness well, but also can obtain the color closer to the ground truth.

It can be seen in Fig. 3, the results of BIMEF and MF are relatively dark, owing to the insufficient exposure of the image. The result of Retinex-net shows slight color differences and amplified noise, such as serious noise on the sleeves of the clothes. The result of LIME has a better visual effect; however, compared with the ground truth, the red region is brighter, and the dark region is darker. The result of EnlightenGan has slight color deviation especially on the background wall. The result of DONG is better, but prominent noise can be observed on the background wall. The result of KinD shows evident edge blurring and color deviation in the red rectangular area. The proposed method can better restore the brightness and detail of the image, and the result is closer to the ground truth.

3.3 Quantitative comparison

To prove the effectiveness of the proposed network further, we used the indexes of the PSNR and SSIM to evaluate the enhancement results quantitatively. The larger the two indexes, the better the enhancement results. We used the test dataset of LOL (Wei et al., 2018) for the experiment and calculated the average values of the SSIM and PSNR for different enhancement methods. The results are shown in Table 1, and the best results are highlighted in bold. It can be seen that the proposed method achieves the highest PSNR and SSIM values among all the enhancement methods. The experiments presented here confirm that the proposed network has better performance.

3.4 Generalization verification

To further prove the generalization ability of the proposed network, we also tested our method on 60 images from an additional dataset proposed by “Learning Multi-Scale Photo Exposure Correction” in CVPR 2021 (Afifi et al.,). Taking an image as an example, the subjective results by different methods are shown in Fig. 4. As can be seen from the figure, the results of DONG, Retinex-net, and KinD have serious distortions compared to the ground truth. The result of LIME is over-enhanced and the results of MF and BIMEF is under-enhanced. The result of EnlightenGan has obvious color deviation. The proposed method achieves the closest result to ground truth in terms of brightness and color. In addition, we calculated the average quantitative evaluation results of different method, as shown in Table 2. From the table, it can be seen that our results obtain the highest average PSNR and SSIM index values.

Enhancement results of different comparison methods on the dataset of CVPR2021 (Afifi et al., 2021)

3.5 Ablation Experiment

To verify the impact of the number of branches on network performance, we compared the image enhancement results of the networks with one mainstream structure and one branch (MS + 1), one mainstream structure and two branches (MS + 2), and one mainstream structure and three branches (MS + 3) on LOL dataset. The experimental results are shown in Fig. 5. It can be seen that the SSIM and PSNR values of the results obtained using MS + 1 and MS + 3 are lower than those obtained using MS + 2. The results show that the network with the MS + 3 structure has an overfitting problem, resulting in a significant reduction of the PSNR and SSIM values. The MS + 1 structure is not sufficient for completing the enhancement task, because the image information has not been extracted completely. Therefore, we chose the MS + 2 structure in the network.

4 Conclusion

This paper proposes an end-to-end multi-stream information supplement network for low-light image enhancement. To reduce the loss of image feature information owing to the increase in network depth, we constructed an MS + 2 network, which includes a mainstream structure and two branches. The mainstream structure learns the main information by keeping the size of the feature map consistent with the size of the input image. The other two branches provide supplementary information for the mainstream structure by learning the feature information of two different scales of the image. At the same time, to better learn the feature information of the image and reduce the color distortion of the enhancement results, we defined a joint loss function that combines the L1 loss, structure similarity loss, and color difference loss to train the network. Compared with some state-of-the-art enhancement methods, our method can obtain better results in terms of subjective and objective evaluations.

References

Afifi, M., Derpanis, K. G., Ommer, B., & Brown, M. S. (2021). Learning multi-scale photo exposure correction. In Proc. IEEE int. conf. comput. vision pattern recognit. (pp. 9157–9167).

Dong, X., Wang, G., Pang, Y., Li, W., Wen, J., Meng, W., & Lu, Y. (2011). Fast efficient algorithm for enhancement of low lighting video. In Proc. IEEE int. conf. multimedia expo. (pp. 1–6).

Duc, T., Dang, N., Cecilia, P., Valentina, C., & Giulia, B. (2015). Raise: a raw images dataset for digital image forensics. In Proc. 6th ACM multimedia syst. conf. (pp. 219–224).

Fu, X., Zeng, D., Huang, Y., Liao, Y., Ding, X., & John, P. (2016). A fusion-based enhancing method for weakly illuminated images. Signal Processing, 129, 82–96.

Guo, X., Li, Y., & Ling, H. (2017). LIME: Low-light image enhancement via illumination map estimation. IEEE Transactions on Image Processing, 26(2), 982–993.

Guo, Y., Ke, X., Ma, J., & Zhang, J. (2019). A pipeline neural network for low-light image enhancement. IEEE Access, 7, 13737–13744.

Jiang, Y., Gong, X., Liu, D., Cheng, Y., Fang, C., Shen, X., Yang, J., Zhou, P., & Wang, Z. (2021). EnlightenGAN: Deep light enhancement without paired supervision. IEEE Transactions on Image Processing, 30, 2340–2349.

Jobson, D. J., Rahman, Z., & Woodell, G. A. (1997a). Properties and performance of a center/surround Retinex. IEEE Transactions on Image Processing, 6(3), 451–462.

Jobson, D. J., Rahman, Z., & Woodell, G. A. (1997b). A multiscale Retinex for bridging the gap between color images and the human observation of scenes. IEEE Transactions on Image Processing, 6(7), 965–976.

Ko, S., Yu, S., Kang, W., Park, C., Lee, S., & Paik, J. (2017). Artifact-free low-light video enhancement using temporal similarity and guide map. IEEE Transactions on Industrial Electronics, 64(8), 6392–6401.

Land, E. H. (1977). The Retinex theory of color vision. Scientific American, 237(6), 108–128.

Lee, H., Sohn, K., & Min, D. (2020). Unsupervised low-light image enhancement using bright channel prior. IEEE Signal Processing Letters, 27, 251–255.

Li, L., Wang, R., Wang, W., & Gao, W. (2015). A low-light image enhancement method for both denoising and contrast enlarging In Proc. IEEE int. conf. image process. (pp. 3730–3734).

Park, S., Moon, B., Ko, S., Yu, S., & Paik, J. (2017). Low-light image enhancement using variational optimization-based Retinex model. In Proc. IEEE int. conf. consum. electron. (pp. 70–71).

Rao, Y., & Chen, L. (2012). A survey of video enhancement techniques. Journal of Information Hiding and Multimedia Signal Processing, 3(1), 71–99.

Ronneberger, O., Fischer, P., & Brox, T. (2015). U-net: Convolutional networks for biomedical image segmentation. In Proc. IEEE int. conf. medical image comput. comput.—Assisted intervention (pp. 234–241).

Sharma, G., Wu, W., & Dalal, E. (2005). The CIEDE2000 color-difference formula: Implementation notes, supplementary test data, and mathematical observations. Color Research and Application, 30(1), 21–30.

Sujee, R., & Padmavathi, S. (2017). Image enhancement through pyramid histogram matching. In Proc. int. conf. comput. commun. inform. (pp. 1–5).

Tao, L., Zhu, C., Song, J., Lu, T., Jia, H., & Xie, X. (2017). Low-light image enhancement using CNN and bright channel prior. In Proc. IEEE int. conf. image process. (pp. 3215–3219).

Wang, Z., Bovik, A. C., Sheikh, H. R., & Simoncelli, E. P. (2004). Image quality assessment: From error visibility to structural similarity. IEEE Transactions on Image Processing, 13(11), 600–612.

Wang, Z., Simoncelli, E. P., & Bovik, A. C. (2003). Multiscale structural similarity for image quality assessment. In Proc. IEEE conf. rec. 37th Asilomar conf. signals syst. comput. (Vol. 2, pp. 1398–1402).

Wei, C., Wang, W., Yang, W., & Liu, J. (2018). Deep Retinex decomposition for low-light enhancement. In Proc. British mach. vis. conf. (pp. 1–10).

Yang, M., Tang, G., Liu, X., Wang, L., Cui, Z., & Luo, S. (2018). Low-light image enhancement based on Retinex theory and dual-tree complex wavelet transform. Optoelectronics Letters, 14(6), 470–475.

Ying, Z., Li, G., Gao, W. (2017). A bio-inspired multi-exposure fusion framework for low-light image enhancement. arXiv:1711.00591

Yu, S., & Zhu, H. (2019). Low-illumination image enhancement algorithm based on a physical lighting model. IEEE Transactions on Circuits and Systems for Video Technology, 29(1), 28–37.

Yu, X., Luo, X., Lyu, G., & Luo, S. (2017). A novel Retinex based enhancement algorithm considering noise. In Proc. 16th int. conf. comput. inf. sci. (pp. 649–654).

Zhang, Y., Zhang, J., & Guo, X. (2019). Kindling the darkness: A practical low-light image enhancer. In Proc. ACM multimedia. (pp. 1632–1640).

Acknowledgements

This work is supported by the National Natural Science Foundation of China (Nos. 61862030 and 62072218), by the Natural Science Foundation of Jiangxi Province (Nos. 20182BCB22006, 20181BAB202010, 20192ACB20002, and 20192ACBL21008), and by the Talent project of Jiangxi Thousand Talents Program (No. jxsq2019201056).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Yang, Y., Hu, W., Huang, S. et al. Low-light image enhancement network based on multi-stream information supplement. Multidim Syst Sign Process 33, 711–723 (2022). https://doi.org/10.1007/s11045-021-00812-w

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11045-021-00812-w