Abstract

This paper presents a fusion technique for multi-focus imaging using a cross bilateral filter and the non-subsampled contourlet transform. The source images are decomposed into approximation and detail components. The original image and its approximation component are passed through a cross bilateral filter to obtain the approximation weight map, whereas the detail components are combined using a weighted average to obtain the detail weight map. The weights are combined and used with the original images to obtain the fused image. Visual and quantitative analysis shows the significance of the proposed scheme in comparison to state-of-the-art fusion schemes.

1 Introduction

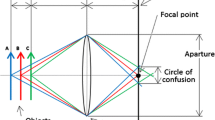

Owing to their narrow depth of focus, optical lenses often produce images containing both in-focus and out-of-focus regions. One way to resolve this issue is to combine several images into a single composite fused image in which all regions are in focus (Zhang and Blum 1999; Song et al. 2006). Conventional image fusion techniques are based on numerical operations such as addition, multiplication, maximum and median operators. These techniques are computationally efficient but not accurate enough for fusing multi-focus images.

Classical multi-scale transform (MST) based techniques, including the Laplacian pyramid (LP) (Burt and Adelson 1983), ratio of low-pass pyramid (RP) (Toet 1989), discrete wavelet transform (DWT) (Li et al. 1995), stationary wavelet transform (SWT) (Beaulieu et al. 2003), dual-tree complex wavelet transform (DTCWT) (Lewis et al. 2007), curvelet transform (CVT) (Nencini et al. 2007), non-subsampled contourlet transform (NSCT) (Zhang and Guo 2009), orthogonal wavelet transform (http://www.metapix.de/), and shift invariant discrete wavelet transform (SIDWT), are widely used to fuse different types of images. These techniques extract the underlying salient information by decomposing the images into different coefficients (Li et al. 2011); however, they sometimes fail to preserve useful image details (Rockinger 1997).

A fusion method based on multiscale decomposition and the bilateral filter (BF) is presented for preserving edges and directional information (Aslantas and Toprak 2014). A multi-level local extrema based technique decomposes the images into coarse and detail layers and fuses them using local energy and a contrast function (Jameel et al. 2015). Fusing the low frequency sub-bands with window neighborhood entropy and the high frequency sub-bands with window standard deviation exploits the correlation between adjacent pixels (Kumar 2013). A similar technique (based on the wavelet transform and the human visual system) uses visibility and variance measures for fusion and window-based consistency verification for minimizing noise and preserving homogeneity (Stathaki 2011). Fusion based on a two-state hidden Markov tree and the shift-invariant shearlet transform minimizes color distortion (Liu et al. 2017). Multiscale fusion utilizing a cross-scale rule and intra/inter scale consistencies exploits neighborhood information for optimal selection of coefficients (Patel et al. 2015).

The cross BF (CBF) based technique considers intensity resemblance and geometric closeness when computing fusion weights (Kumar 2015). Guided filter fusion (GFF) utilizes the guided filter for edge preservation in the fused images (Li et al. 2013). The novel explicit multi-focus image fusion (NEMIF) method uses a decision map along with the energy of Laplacian in guided filtering to compensate for sharp changes (Zhan et al. 2015). Fast filtering image fusion (FFIF) has lower computational complexity, but blurred boundaries are still not handled efficiently (Zhan et al. 2017); the technique is therefore not well suited to multi-focus images, in which the blurred regions do not have clear boundaries.

To overcome these limitations, an improved weighted fusion scheme for multi-focus imaging using CBF and NSCT is proposed. NSCT decomposes the source images into approximation and detail components. The original image and its approximation component are passed through a cross bilateral filter to obtain the approximation weight map, whereas the detail components are combined using a weighted average to obtain the detail weight map. The weights are combined and used with the original images to obtain the fused image. The proposed technique provides better visual and quantitative fusion results than existing fusion techniques.

2 Proposed methodology

The first step of the proposed technique is the decomposition of the source images into detail and approximation components. NSCT is used for this purpose because it provides better shift invariance and directional selectivity than other MST transforms (Liu et al. 2015). Let the multi-focus images, of dimension \(N\times O\) with pixel indices \(n=1,2 \ldots N\) and \(o=1,2 \ldots O\), be denoted by P and Q respectively. The NSCT decomposition is,

where \(j=1,2,\ldots ,J\) represents decomposition level, \((P_A,Q_A)\) are approximation and \((P_{D_j},Q_{D_j})\) are detail components (of dimension \(N\times {O}\)) respectively.
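The decomposition step can be illustrated with a simplified stand-in. The sketch below is not NSCT: it has no directional filter bank, and it swaps in repeated box blurs at growing radii (an undecimated, à trous style scheme) purely to show the property the text relies on, namely that the approximation and every detail component keep the full \(N\times O\) size. The function names `box_blur` and `decompose`, the blur radii, and the additive reconstruction are all assumptions made for illustration.

```python
def box_blur(img, radius):
    """Mean filter with edge replication; the output has the same size as the input."""
    rows, cols = len(img), len(img[0])
    out = [[0.0] * cols for _ in range(rows)]
    for n in range(rows):
        for o in range(cols):
            total, count = 0.0, 0
            for dn in range(-radius, radius + 1):
                for do in range(-radius, radius + 1):
                    nn = min(max(n + dn, 0), rows - 1)  # clamp row index at the border
                    oo = min(max(o + do, 0), cols - 1)  # clamp column index at the border
                    total += img[nn][oo]
                    count += 1
            out[n][o] = total / count
    return out


def decompose(img, levels=2):
    """Return (approximation, [detail_1, ..., detail_J]); no subsampling is applied."""
    current = [[float(v) for v in row] for row in img]
    details = []
    for j in range(1, levels + 1):
        smooth = box_blur(current, radius=2 ** (j - 1))
        # Detail at level j is what the smoothing removed at that level.
        details.append([[c - s for c, s in zip(c_row, s_row)]
                        for c_row, s_row in zip(current, smooth)])
        current = smooth
    return current, details


# Tiny example: a vertical step edge.
P = [[0, 0, 10, 10], [0, 0, 10, 10], [0, 0, 10, 10]]
P_A, P_D = decompose(P, levels=2)
```

In this stand-in the source image equals the approximation plus the sum of the detail components, so nothing is lost by the decomposition. A real NSCT additionally splits each detail level into directional sub-bands.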

2.1 Weights of components

To compute the weights of the approximation components (\(P_A\), \(Q_A\)), CBF (Kumar 2015) is used. The CBF output \(C_{P_A}\) for image \(P_A\) at location (n, o) is,

where, \(\xi _H\) is a normalizing constant, \(n-\lfloor \acute{N}/2 \rfloor \le \acute{n} \le n+\lfloor \acute{N}/2 \rfloor \) and \(o-\lfloor \acute{O}/2 \rfloor \le \acute{o} \le o+\lfloor \acute{O}/2 \rfloor \). The window size of \(\acute{N} \times \acute{O}\) is chosen empirically depending on the image characteristics. The geometric closeness function \(\varGamma _{\sigma _{d}}\) is,

The gray-level similarity function \(\varGamma ^{Q_H Q_H}_{\sigma _{r}}\) is,

where the parameters \(\sigma _d\) and \(\sigma _r\) control the fall-off of the weights in the spatial and intensity domains respectively. Similarly, for image \(Q_A\), the CBF output \(C_{Q_A}\) is,

The difference images \(X_{P_A}\) and \(X_{Q_A}\) are computed to find the strength of information present in \(C_{P_A}\) and \(C_{Q_A}\) images respectively.
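The filtering and difference steps above can be sketched as follows. This is a minimal illustration assuming the standard cross bilateral filter form: a Gaussian closeness kernel on pixel distance, a Gaussian similarity kernel evaluated on a second (guide) image, and normalization by the sum of the weights. Taking the other component as the guide, and forming the difference image as the component minus its filtered version, follow the convention of Kumar (2015) as far as it can be inferred here. The function names and the default `window`, `sigma_d` and `sigma_r` values are illustrative assumptions.

```python
import math


def cross_bilateral_filter(target, guide, window=5, sigma_d=1.8, sigma_r=25.0):
    """Smooth `target`; weights come from pixel distance and `guide` intensity similarity."""
    rows, cols = len(target), len(target[0])
    half = window // 2
    out = [[0.0] * cols for _ in range(rows)]
    for n in range(rows):
        for o in range(cols):
            acc, norm = 0.0, 0.0
            # Window is clipped at the image border.
            for nn in range(max(n - half, 0), min(n + half, rows - 1) + 1):
                for oo in range(max(o - half, 0), min(o + half, cols - 1) + 1):
                    closeness = math.exp(-((n - nn) ** 2 + (o - oo) ** 2)
                                         / (2 * sigma_d ** 2))
                    similarity = math.exp(-((guide[n][o] - guide[nn][oo]) ** 2)
                                          / (2 * sigma_r ** 2))
                    weight = closeness * similarity
                    acc += weight * target[nn][oo]
                    norm += weight
            # norm >= 1 because the centre pixel always contributes weight 1.
            out[n][o] = acc / norm
    return out


def difference_image(component, filtered):
    """Detail strength: what the filter removed from the component."""
    return [[c - f for c, f in zip(c_row, f_row)]
            for c_row, f_row in zip(component, filtered)]


# Tiny example with two 3 x 4 approximation components (made-up values).
P_A = [[10.0, 12.0, 80.0, 82.0]] * 3
Q_A = [[11.0, 11.0, 81.0, 81.0]] * 3
C_PA = cross_bilateral_filter(P_A, Q_A)
X_PA = difference_image(P_A, C_PA)
```

Because the similarity kernel drops sharply across large intensity steps in the guide, pixels on opposite sides of an edge barely influence each other, which is what preserves the edge.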

The weight matrices \(W_{P_A}\) and \(W_{Q_A}\) are computed using pixel statistical properties (Liu et al. 2015), i.e.

Both weight matrices \(W_{P_A}\) and \(W_{Q_A}\) for components \(P_A\) and \(Q_A\) are treated as initial sub CBF weight matrices. The initial CBF based weights \(W_{P}\) and \(W_{Q}\) of the input images P and Q are computed in the same way.

The first CBF weight matrix \(W_{\dot{P}}\) is computed by combining \(W_{P_{A}}\) and \(W_P\), i.e.,

where \(\alpha _P\) and \(\alpha _Q\) are weighting factors. The weighted images \(W_{P_{D}}\) and \(W_{Q_{D}}\) of the detail components \(P_{D_j}\) and \(Q_{D_j}\) are computed using Eq. 12 and combined to obtain \(W_{P_f}\) and \(W_{Q_f}\) respectively.

Finally fused image F is computed as given below,
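One common way to apply two final weight maps to the source images is a per-pixel normalized weighted average. The sketch below assumes that form; it is not confirmed by the text as the exact fusion rule used here. The function name `fuse` and the fallback to a plain average where both weights are zero are illustrative assumptions.

```python
def fuse(P, Q, W_Pf, W_Qf):
    """Per-pixel normalized weighted average of two equally sized images."""
    fused = []
    for p_row, q_row, wp_row, wq_row in zip(P, Q, W_Pf, W_Qf):
        row = []
        for p, q, wp, wq in zip(p_row, q_row, wp_row, wq_row):
            total = wp + wq
            if total > 0:
                row.append((wp * p + wq * q) / total)
            else:
                # Assumed fallback: neither image is preferred at this pixel.
                row.append(0.5 * (p + q))
        fused.append(row)
    return fused


# Tiny example: the weights select P at the first pixel and Q at the second.
P = [[10.0, 20.0]]
Q = [[30.0, 40.0]]
F = fuse(P, Q, W_Pf=[[1.0, 0.0]], W_Qf=[[0.0, 1.0]])
```

With this rule, a pixel where one weight map dominates is taken almost entirely from the corresponding source image, while equal weights give the plain average.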

Figure 1 shows the flow chart of the proposed technique.

Fig. 2 Step-wise output: a, b multi-focus input images; c, d approximation components; e, f first detail components; g, h second detail components; i, j detail weight maps; k, l CBF (Kumar 2015) weight maps; m, n final weight maps; o fused image

3 Results and analysis

The overall performance of the existing and proposed schemes is evaluated on different multi-focus images (Saeedi and Faez 2015; Nejati et al. 2015). The source images belong to different classes of normal and tumor cases. For quantitative analysis of the existing and proposed techniques, different statistical and objective measures are used. These include average pixel intensity (\(\chi ^{\text {API}}\)), entropy (\(\chi ^{\text {E}}\)), correlation coefficient (\(\chi ^{\text {CC}}\)), the quality indices \(\chi ^{\text {Q0}}\) and \(\chi ^{\text {Qw}}\), structural similarity index measure (\(\chi ^{\text {SSIM}}\)) and the objective fusion measures \(\chi ^{\text {QABF}}\) and \(\chi ^{LAB/F}\) (Petrovic and Xydeas 2005). For better fusion, the values of these measures should be high, except for \(\chi ^{LAB/F}\), which should be low (Fig. 1).
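Three of the simpler measures can be sketched directly from their textbook definitions: the mean grey level for average pixel intensity, the Shannon entropy of the grey-level histogram, and the Pearson correlation between two images (commonly the fused image and a source image). The exact variants used in the evaluation are not stated in the text, so the function names and details below are assumptions.

```python
import math
from collections import Counter


def average_pixel_intensity(img):
    """Mean grey level of the image."""
    pixels = [v for row in img for v in row]
    return sum(pixels) / len(pixels)


def entropy(img):
    """Shannon entropy in bits of the grey-level histogram (integer levels assumed)."""
    pixels = [int(v) for row in img for v in row]
    total = len(pixels)
    return -sum((c / total) * math.log2(c / total)
                for c in Counter(pixels).values())


def correlation_coefficient(a, b):
    """Pearson correlation between two equally sized images (undefined for constant images)."""
    xs = [v for row in a for v in row]
    ys = [v for row in b for v in row]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Tiny example: a two-level image and its inverse.
img = [[0, 0], [255, 255]]
inv = [[255, 255], [0, 0]]
api = average_pixel_intensity(img)       # mean of 0, 0, 255, 255
ent = entropy(img)                       # two equally likely grey levels
cc = correlation_coefficient(img, inv)   # perfectly anti-correlated
```

Higher entropy indicates more information content in the fused image, and a correlation coefficient close to 1 indicates that the fused image closely follows the source it is compared with.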

Figure 2 shows the step-by-step outputs of the proposed technique. Figure 2c, d shows the approximation components of the input images, in which edges are not prominent. Figure 2e–h shows the detail components of the input images, in which edges are clearly demarcated. Figure 2i, j shows the weight maps of the detail components, which contain more prominent boundaries. Figure 2k, l shows the weight maps obtained from CBF (Kumar 2015). Figure 2m, n shows the final weight maps, which help to efficiently separate focused and defocused areas; these weights are used to compute the fused image shown in Fig. 2o.

Figure 3a, b shows multi-focus images of a “Model Girl” with buildings in the background (Nejati et al. 2015). Figure 3c–i shows the fused images obtained through FFIF (Zhan et al. 2017), GFSF (Bavirisetti et al. 2017), NEMIF (Zhan et al. 2015), CBF (Kumar 2015), GFF (Li et al. 2013), Rockinger CP (Rockinger 1997) and the proposed fusion scheme respectively. The hair of the “Model Girl” and the peaks of the background buildings are not clearly visible in the existing methods. The CBF (Kumar 2015) result in Fig. 3f is close to that of the proposed scheme but still lacks minute visual details. In the NEMIF (Zhan et al. 2015) result in Fig. 3e, the top of the middle background building has a cloudy look. The Rockinger CP (Rockinger 1997) result in Fig. 3h is slightly blurred near the hand on the head of the “Model Girl”, where the boundary of the hand merges with the hair. The proposed scheme provides superior fusion results compared to the existing schemes, as shown in Fig. 3i: the hair of the “Model Girl” and the peaks of the background buildings are clearly visible with sharp, well-demarcated boundaries (and hence better suited to visual analysis).

Figure 4a, b shows multi-focus images of a “Baby Doll” with a cartoon and a girl in the background area. Figure 4c–i shows the fused images obtained through FFIF (Zhan et al. 2017), GFSF (Bavirisetti et al. 2017), NEMIF (Zhan et al. 2015), CBF (Kumar 2015), GFF (Li et al. 2013), Rockinger CP (Rockinger 1997) and the proposed fusion scheme respectively. It can be clearly observed that the face of the baby is blurred (defocused) in the first “Baby Doll” image and the background is blurred (defocused) in the second “Baby Doll” image (Saeedi and Faez 2015). In the CBF (Kumar 2015) result in Fig. 4f, the top right hair of the “Baby Doll” is not clearly distinguished and a shade is observed under the right eye. The NEMIF (Zhan et al. 2015) result in Fig. 4e shows a cloudy look in the background behind the cartoon. The Rockinger CP (Rockinger 1997) result in Fig. 4h looks slightly blurred near the top right eye. The existing fusion methods produce blurred images at the boundary edges of the background doll, whereas in the proposed scheme the background edges are clearer, making the result more valuable for human analysis. The final fused image thus supports the merit of the proposed scheme.

Figure 5a, b shows multi-focus images of a “Heart” against a background of different building structures (Nejati et al. 2015). Figure 5c–i shows the fused images obtained through FFIF (Zhan et al. 2017), GFSF (Bavirisetti et al. 2017), NEMIF (Zhan et al. 2015), CBF (Kumar 2015), GFF (Li et al. 2013), Rockinger CP (Rockinger 1997) and the proposed fusion scheme respectively. In the existing methods, the inner surface of the “Heart” and the background building structures are blurred and not clearly demarcated. In the CBF (Kumar 2015) result in Fig. 5f, the right side of the “Heart” is not clearly separated from the background buildings. The fused image in Fig. 5i, obtained by the proposed scheme, offers several benefits over the other methods: its sharp and clear edges make the extent of each structure easy to distinguish, and the inner surface of the “Heart” and the background building structures, along with the river, are also very clear.

Figure 6a, b shows multi-focus images of a “Horse” against a background of a small ground and trees (Saeedi and Faez 2015). Figure 6c–i shows the fused images obtained through FFIF (Zhan et al. 2017), GFSF (Bavirisetti et al. 2017), NEMIF (Zhan et al. 2015), CBF (Kumar 2015), GFF (Li et al. 2013), Rockinger CP (Rockinger 1997) and the proposed fusion scheme respectively. In the CBF (Kumar 2015) result in Fig. 6f, the background scene above the trees is not clearly visible. The NEMIF (Zhan et al. 2015) result in Fig. 6e mixes the edge of the man’s white cap with the ground. The Rockinger CP (Rockinger 1997) result in Fig. 6h is slightly blurred near the boundary between the “Horse” and the background. The existing fusion techniques for multi-focus images produce faded, low-contrast results with blurred regions. The fused image in Fig. 6i, obtained by the proposed scheme, avoids these problems, including the blurring effect, and is clear and rich in detail.

Table 1 shows the quantitative assessment of the proposed and existing techniques on different images. It can be observed that the proposed approach generally has higher quantitative values than the other techniques. Table 2 summarizes the quantitative results of the existing and proposed fusion schemes on multiple images. The proposed scheme gives better quantitative results than the state-of-the-art existing schemes. In a nutshell, the proposed fusion technique produces better fusion results both visually and quantitatively.

4 Conclusion

An improved weighted fusion scheme for multi-focus imaging using CBF and NSCT is proposed. NSCT decomposes the source images into approximation and detail components. The original image and its approximation component are passed through a cross bilateral filter to obtain the approximation weight map, whereas the detail components are combined using a weighted average to obtain the detail weight map. The weights are combined and used with the original images to obtain the fused image. The proposed technique provides better visual and quantitative fusion results than existing fusion techniques.

References

Aslantas, V., & Toprak, A. N. (2014). A pixel based multi-focus image fusion method. Optics Communications, 332, 350–358.

Bavirisetti, D. P., Kollu, V., Gang, X., & Dhuli, R. (2017). Fusion of MRI and CT images using guided image filter and image statistics. International Journal of Imaging Systems and Technology, 27(3), 227–237.

Beaulieu, M., Foucher, S., & Gagnon, L. (2003). Multi-spectral image resolution refinement using stationary wavelet transform. In Proceedings of 3rd IEEE international geoscience and remote sensing symposium (pp. 4032–4034).

Burt, P., & Adelson, E. (1983). The Laplacian pyramid as a compact image code. IEEE Transactions on Communications, 31(4), 532–540.

Jameel, A., Ghafoor, A., & Riaz, M. M. (2015). All in focus fusion using guided filter. Multidimensional Systems and Signal Processing, 26(3), 879–889.

Kumar, B. S. (2013). Multifocus and multispectral image fusion based on pixel significance using discrete cosine harmonic wavelet transform. Signal, Image and Video Processing, 7(6), 1125–1143.

Kumar, B. S. (2015). Image fusion based on pixel significance using cross bilateral filter. Signal, Image and Video Processing, 9(5), 1193–1204.

Lewis, J., O’Callaghan, R., Nikolov, S., Bull, D., & Canagarajah, N. (2007). Pixel- and region-based image fusion with complex wavelets. Information Fusion, 8(2), 119–130.

Li, S., Kang, X., & Hu, J. (2013). Image fusion with guided filtering. IEEE Transactions on Image Processing, 22(7), 2864–2875.

Li, H., Manjunath, B., & Mitra, S. (1995). Multisensor image fusion using the wavelet transform. Graphical Models and Image Processing, 57(3), 235–245.

Liu, Y., Liu, S., & Wang, Z. (2015). A general framework for image fusion based on multi-scale transform and sparse representation. Information Fusion, 24, 147–164.

Liu, S., Shi, M., Zhu, Z., & Zhao, J. (2017). Image fusion based on complex-shearlet domain with guided filtering. Multidimensional Systems and Signal Processing, 28(1), 207–224.

Li, S., Yang, B., & Hu, J. (2011). Performance comparison of different multi-resolution transforms for image fusion. Information Fusion, 12(2), 74–84.

Nejati, M., Samavi, S., & Shirani, S. (2015). Multi-focus image fusion using dictionary-based sparse representation. Information Fusion, 25, 72–84.

Nencini, F., Garzelli, A., Baronti, S., & Alparone, L. (2007). Remote sensing image fusion using the curvelet transform. Information Fusion, 8(2), 143–156.

Patel, R., Rajput, M., & Parekh, P. (2015). Comparative study on multi-focus image fusion techniques in dynamic scene. International Journal of Computer Applications, 109(6), 5–9.

Petrovic, V., & Xydeas, C. (2005). Objective image fusion performance characterisation. In Tenth IEEE international conference on computer vision ICCV (Vol. 2, pp. 1866–1871). IEEE.

Rockinger, O. (1997). Image sequence fusion using a shift-invariant wavelet transform. In Proceedings of international conference on image processing (Vol. 3, pp. 288–291). IEEE.

Saeedi, J., & Faez, K. (2009). Fisher classifier and fuzzy logic based multi-focus image fusion. In IEEE international conference on intelligent computing and intelligent systems ICIS (Vol. 4, pp. 420–425). IEEE.

Saeedi, J., & Faez, K. (2015). Multi-focus image dataset. pp. 420–425.

Song, Y., Li, M., Li, Q., & Sun, L. (2006). A new wavelet based multi-focus image fusion scheme and its application on optical microscopy. In IEEE international conference on 2006 robotics and biomimetics ROBIO’06 (pp. 401–405). IEEE.

Stathaki, T. (2011). Image fusion: Algorithms and applications. Amsterdam: Elsevier.

Toet, A. (1989). Image fusion by a ratio of low pass pyramid. Pattern Recognition Letters, 9(4), 245–253.

Zhang, Z., & Blum, R. S. (1999). A categorization of multiscale-decomposition-based image fusion schemes with a performance study for a digital camera application. Proceedings of the IEEE, 87(8), 1315–1326.

Zhang, Q., & Guo, B. (2009). Multifocus image fusion using the nonsubsampled contourlet transform. Signal Processing, 89(7), 1334–1346.

Zhan, K., Teng, J., Li, Q., & Shi, J. (2015). A novel explicit multi-focus image fusion method. Journal of Information Hiding and Multimedia Signal Processing, 6(3), 600–612.

Zhan, K., Xie, Y., Wang, H., & Min, Y. (2017). Fast filtering image fusion. Journal of Electronic Imaging, 26(6), 063004.

Cite this article

Ch, M.M.I., Riaz, M.M., Iltaf, N. et al. Weighted image fusion using cross bilateral filter and non-subsampled contourlet transform. Multidim Syst Sign Process 30, 2199–2210 (2019). https://doi.org/10.1007/s11045-019-00646-7
