Abstract

Diabetes has become one of the leading causes of illness and death worldwide. By 2019, diabetes had affected more than 463 million people worldwide. According to the International Diabetes Federation, this figure is expected to rise to more than 700 million by 2045, so early screening and diagnosis are of great importance for detecting and treating diabetes on time. Diabetes is a multifactorial metabolic disease, and its diagnostic criteria can hardly cover all of its etiology, degree of damage, pathogenesis and other factors, so uncertainty and imprecision arise in various aspects of the medical diagnosis process. With the development of data mining, researchers have found that machine learning and deep learning play an important role in diabetes prediction research. This paper is an in-depth study of the application of machine learning and deep learning techniques to the prediction of diabetes. In addition, this paper discusses the different methodologies used in machine learning and deep learning for diabetes prediction over the last two decades and examines these methods to explore their successes and failures. This review should help researchers and practitioners understand the current state-of-the-art methods and identify gaps in the literature.

1 Introduction

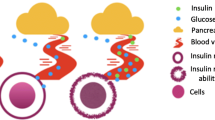

According to International Diabetes Federation (IDF) [1] statistics, 415 million people around the world were living with diabetes in 2015, and this number is expected to rise to over 642 million by 2040. As a consequence, diabetes has become a major cause of disease and death in most countries. Diabetes is a group of metabolic diseases in which a person has high blood glucose, either because the body does not produce enough insulin or because the cells do not respond to the insulin that is produced [2, 3]. Most cases of diabetes fall into three subgroups: type 1 diabetes (T1D), type 2 diabetes (T2D), and gestational diabetes (GDM). Over the long term, T2D patients become resistant to the normal effects of insulin and gradually lose their capacity to produce enough of this hormone. A wide range of therapeutic options is available for patients with T2D. At the early stages of the disease, they commonly receive medications that improve insulin secretion or insulin sensitivity, but eventually many of them need external doses of insulin. In contrast, T1D patients have severely impaired insulin production and must rely on external insulin to manage their blood glucose (BG). Treatment of T1D requires consistent doses of insulin through multiple daily injections (MDIs) or continuous subcutaneous insulin infusion (CSII) using a pump. GDM is treated similarly to T2D but occurs only during pregnancy, owing to the interaction between insulin and hormones released by the placenta. Figure 1 presents statistics on the number of people with diabetes from 2000 onwards.

In 2000, the global estimate of adults living with diabetes was 151 million. By 2009 it had grown by 88% to 285 million. Today, 9.3% of adults aged 20–79 years, a staggering 463 million people, are living with diabetes. A further 1.1 million children and adolescents under the age of 20 live with type 1 diabetes. A decade ago, in 2010, the global projection for diabetes in 2025 was 438 million; with over five years still to go, that prediction has already been surpassed by 25 million. The IDF estimates that there will be 578 million adults with diabetes by 2030 and 700 million by 2045. Diabetes is one of the deadliest diseases, claiming millions of lives each year. According to the World Health Organization (WHO), an estimated 3.4 million deaths are caused by high blood sugar. Undiagnosed or late-diagnosed diabetes may lead to comorbidities such as cognitive impairment, stroke, cancer, and kidney disease; therefore, diabetes should be diagnosed as early as possible. In 2000, India topped the world with 31.7 million people with diabetes, followed by China in second place and the United States in third [4]. It is predicted that by 2030 diabetes mellitus may affect up to 79.4 million people in India [5]. In the last 40 years, a fourfold rise has been witnessed in this chronic disease [6]. According to the IDF, around 425 million people were living with diabetes worldwide in 2017, and the diabetic population is estimated to increase by 32% by 2045 [7]. Currently, China, India, the USA, Brazil, and Russia are the five countries with the largest diabetic populations. Figure 2 [8] shows the percentage of people affected by diabetes.

Data mining and artificial intelligence (AI) play an important role in the prediction of diabetes. With the continuous development of AI and data mining technology, researchers have begun using machine learning and deep learning techniques to search for the characteristics of diabetes. By analyzing diabetic data, machine learning techniques can uncover implicit pathogenic factors and achieve high stability and accuracy in diabetes diagnosis. Machine learning techniques that can identify reasonable thresholds for risk factors and physiological parameters therefore provide new ideas for the screening and diagnosis of diabetes [9]. Diabetes is a very serious disease that, if not treated properly and on time, can lead to very serious complications, including death. This makes diabetes one of the main priorities in medical science research, which in turn generates huge amounts of data. Such constantly growing volumes of data are well suited to data mining, which can handle them readily. Using data-mining methods in diabetes research is one of the best ways to extract knowledge from the large volumes of available diabetes-related data. Both descriptive (association and clustering) and predictive (classification) data-mining methods are used in the process. These data-mining methods differ from traditional statistical approaches in many ways [10].

1.1 Machine learning/deep learning and its application for diabetic prediction

Machine learning and deep learning techniques hold great promise in improving the early detection and management of diabetes, potentially leading to better patient outcomes and reduced healthcare costs.

- Data Collection and Preprocessing: The first step involves collecting relevant data from patients, which may include demographic information (age, gender), medical history (family history of diabetes, past diagnoses), lifestyle factors (diet, exercise), and clinical measurements (blood glucose levels, blood pressure, cholesterol levels). This data needs to be preprocessed to handle missing values, normalize features, and remove noise.

- Feature Selection and Engineering: Feature selection involves identifying the most relevant variables that contribute to the prediction of diabetes. Feature engineering may involve creating new features from existing ones or transforming the data to improve model performance.

- Model Selection: Various machine learning algorithms can be employed for diabetic prediction, including logistic regression, decision trees, random forests, support vector machines (SVM), and neural networks. Deep learning techniques, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), can also be used to capture complex patterns in the data.

- Model Training and Evaluation: The selected model is trained using labeled data, where the outcome (diabetes status) is known. The model's performance is evaluated using metrics such as accuracy, precision, recall, F1-score, and area under the receiver operating characteristic curve (AUC-ROC) on a separate validation dataset.

- Deployment and Integration: Once the model is trained and evaluated, it can be deployed in clinical settings to assist healthcare providers in identifying individuals at risk of diabetes. Integration with electronic health record (EHR) systems can facilitate real-time prediction and decision-making.

- Continuous Monitoring and Updating: As new data becomes available, the model should be periodically retrained and updated to ensure its accuracy and relevance over time. Continuous monitoring of model performance and outcomes can help improve its effectiveness in predicting diabetes and related complications.
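As a rough illustration of the evaluation step, the following plain-Python sketch computes accuracy, precision, recall, F1-score and AUC-ROC for a binary diabetes label (1 = diabetic). The patient labels, model scores and the 0.5 decision threshold are hypothetical. AUC is computed here as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case (ties count as one half), which is equivalent to the area under the ROC curve.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (1 = diabetic)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn


def classification_metrics(y_true, y_pred):
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def roc_auc(y_true, scores):
    """Fraction of (positive, negative) pairs in which the positive is scored higher."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical labels and model scores for eight patients.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
scores = [0.9, 0.2, 0.7, 0.4, 0.6, 0.1, 0.8, 0.3]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # assumed threshold of 0.5
print(classification_metrics(y_true, y_pred))
print(roc_auc(y_true, scores))
```

In practice a library implementation (for example scikit-learn's metrics module) would normally be used; writing the metrics out by hand only makes their definitions explicit. With imbalanced diabetes data, precision, recall and AUC are usually more informative than accuracy alone.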

Challenges and considerations in diabetic prediction using machine learning and deep learning include the need for large and diverse datasets, class imbalance (the number of diabetic patients may be much smaller than the number of non-diabetic patients), model interpretability, and the privacy and security of patient data. Healthcare data, including patient information and medical records, are sensitive and subject to strict privacy regulations such as HIPAA (Health Insurance Portability and Accountability Act) in the United States. Ensuring the privacy and security of patient data is therefore essential when developing and deploying diabetic prediction models. Integrating ML and DL models into clinical practice also requires rigorous validation to demonstrate their effectiveness and safety. The recent proliferation of data mining techniques, together with the vast amount of medical data generated every day, has given rise to disease prediction systems [440].

The remainder of this paper is structured as follows. Section 2 details the data mining techniques used in the prediction of diabetes; Section 3 presents a detailed review of diabetes prediction based on machine learning; Section 4 presents a detailed review of diabetes prediction based on deep learning; Section 5 provides discussion and comparison; and Section 6 concludes the paper.

2 Data mining techniques

Data mining and machine learning have inspired each other; although they have much in common, they serve different ends. Figure 3 represents the relationship between machine learning and data mining. Data mining, machine learning, artificial intelligence, and statistics are closely related fields that share common goals and methodologies for analyzing and extracting insights from data. They complement each other and are often used together in various applications, such as predictive modeling, pattern recognition, data visualization, and decision support.

Artificial intelligence (AI) aims to enable computers to think and to make them more intelligent. Machine learning is a subfield of AI, and many researchers argue that intelligence cannot be developed without learning. The main types of machine learning techniques, shown in Fig. 4, are supervised, unsupervised, semi-supervised, reinforcement, evolutionary, and deep learning. These techniques are used to classify the data set [11]. Supervised or unsupervised learning is chosen depending on the nature of the data and the specific problem being addressed. Semi-supervised learning techniques combine elements of both paradigms by leveraging a small amount of labeled data along with a larger pool of unlabeled data. Reinforcement learning is a powerful paradigm in machine learning that enables agents to learn optimal behavior through trial and error, interaction with the environment, and feedback in the form of rewards.

2.1 Supervised learning

A supervised learning technique is used when labeled historical data are available for a problem. The system is trained on the inputs and their respective responses and is then used to predict the responses for new data [12]. Classification and regression are the two types of supervised learning.

2.1.1 Classification

Classification predicts a categorical label, for example Yes or No in answer to questions such as “Is this tumour cancerous?” or “Does this cookie meet our quality standards?” Common classification approaches include artificial neural networks, back propagation, decision trees, support vector machines, the Naive Bayes classifier, K-Nearest Neighbors (K-NN), and random forests [12]. Classification is used to assign data to predefined categorical class labels. The “class” is the attribute or feature of a data set in which users are most interested; in statistics it is called the dependent variable. To classify data (or records), a classification algorithm builds a classification model consisting of classification rules. For example, banks have built classification models to categorize loan and mortgage applications as risky or safe. In the medical field, classification can help establish diagnosis and prognosis based on symptoms and health conditions.

Support Vector Machine (SVM)

Support vector machine (SVM) is used for both classification and regression. An SVM classifier, a concept introduced by Vladimir Vapnik, finds the optimal separating hyperplane between the positive and negative classes of data. The optimal hyperplane is the one that maximizes the margin, i.e., the distance to the training examples of either class that lie closest to it. In a linear SVM, each data point is treated as a p-dimensional vector, and the classes are separated by a (p - 1)-dimensional hyperplane. Such hyperplanes separate the data space and set the boundaries among the data groups for classification or regression problems. Among the many candidate hyperplanes, the best one is selected on the basis of the distance between the two classes it separates; the hyperplane with the maximum margin between the two classes is called the maximum-margin hyperplane. SVM can also handle nonlinear classification tasks efficiently by mapping the samples into a higher-dimensional feature space with a nonlinear kernel function. Because the SVM approach is data-driven and model-free, it has strong discriminating power for classification. SVM algorithms use a set of mathematical functions called kernels, which take data as input and transform it into the required form. Different SVM algorithms use different types of kernel functions, for example linear, polynomial, radial basis function (RBF), and sigmoid kernels. The most widely used kernel function is the RBF [442], because its response is localized and finite along the entire x-axis. A kernel function returns the inner product between two points in a suitable feature space. Both SVM and random forest (RF) are widely used for classification tasks, including the classification of diabetic patients based on features such as medical history, clinical measurements, and demographic information.
Both algorithms can provide insights into feature importance. In a linear SVM, the magnitudes of the weights of the separating hyperplane indicate how strongly each feature influences the decision, and the support vectors (the data points closest to the decision boundary) are the points that determine that boundary; RF calculates feature importance based on how much each feature contributes to the model's predictive performance. In diabetes research, this can help identify the most relevant features for predicting diabetes or assessing disease progression.
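To make the kernel and maximum-margin ideas concrete, here is a minimal plain-Python sketch of the four kernel types listed above, plus a linear SVM trained with a Pegasos-style stochastic sub-gradient method on the regularised hinge loss. The text does not prescribe a training algorithm; Pegasos is swapped in here only because it is short. The parameter values (`gamma`, `degree`, `lam`, `epochs`) and the toy data are assumptions, and folding the bias into the weight vector (so it is regularised too) is a simplification.

```python
import math
import random


def linear_kernel(x, z):
    """Plain inner product <x, z>."""
    return sum(a * b for a, b in zip(x, z))


def polynomial_kernel(x, z, degree=3, c=1.0):
    return (linear_kernel(x, z) + c) ** degree


def rbf_kernel(x, z, gamma=0.5):
    """exp(-gamma * ||x - z||^2): equals 1.0 when x == z and decays with distance."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))


def sigmoid_kernel(x, z, alpha=0.01, c=0.0):
    return math.tanh(alpha * linear_kernel(x, z) + c)


def train_linear_svm(X, y, lam=0.01, epochs=200, seed=0):
    """Pegasos-style sub-gradient descent on the L2-regularised hinge loss.
    Labels must be +1/-1; a constant 1 is appended to every x so w[-1] acts as the bias."""
    rng = random.Random(seed)
    data = [(list(x) + [1.0], t) for x, t in zip(X, y)]
    w = [0.0] * len(data[0][0])
    step = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, t in data:
            step += 1
            eta = 1.0 / (lam * step)                 # decreasing learning rate
            margin = t * linear_kernel(w, x)
            w = [(1 - eta * lam) * wi for wi in w]   # shrink: gradient of the regulariser
            if margin < 1:                           # point inside the margin or misclassified
                w = [wi + eta * t * xi for wi, xi in zip(w, x)]
    return w


def predict(w, x):
    return 1 if linear_kernel(w, list(x) + [1.0]) >= 0 else -1


# Hypothetical, linearly separable data (e.g. two standardised clinical features).
X = [(-2, -1), (-1, -2), (-3, -2), (2, 1), (1, 2), (3, 2)]
y = [-1, -1, -1, 1, 1, 1]
w = train_linear_svm(X, y)
print([predict(w, x) for x in X])  # expected: [-1, -1, -1, 1, 1, 1]
```

The kernel functions are not used by the linear trainer; a kernelised SVM would replace the inner products with one of them (for example `rbf_kernel`), which is what lets SVM draw nonlinear boundaries. For real work, a mature solver such as the one in scikit-learn is the usual choice.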

Decision Tree (DT)

A decision tree (DT) is a supervised learning method that can be used as a regression tree when the response variable is continuous and as a classification tree when the response variable is categorical. In both cases, the input variables can be of any type, such as graph, text, discrete, or continuous. Finding a solution with decision trees starts by preparing a set of solved cases. The whole set is then divided into 1) a training set, which is used for the induction of a decision tree, and 2) a testing set, which is used to check the accuracy of the obtained solution [443]. A decision tree is a tree-structured model that describes the classification process based on input features.

The steps for building a DT are as follows:

- Construct a tree whose internal nodes test input features.

- At each node, select the input feature that gives the highest information gain with respect to the output.

- Repeat the above steps to form subtrees based on the features that were not used in the nodes above.

The decision tree is one of the most powerful and popular tools for classification and prediction. A decision tree is a flowchart-like tree structure in which each internal node denotes a test on an attribute, each branch represents an outcome of the test, and each leaf (terminal) node holds a class label. Decision trees classify an instance by sorting it down the tree from the root to a leaf node, which provides the classification of the instance.
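The feature-selection rule in the steps above can be sketched in plain Python as follows. This is an ID3-style sketch for categorical features only (an assumption; CART and C4.5 handle continuous features and use other criteria), and the tiny patient table with its `family_history` and `glucose` columns is hypothetical.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total) for n in Counter(labels).values())


def information_gain(rows, labels, feature):
    """Parent entropy minus the weighted entropy of the children produced by
    splitting on the categorical feature at index `feature`."""
    children = {}
    for row, label in zip(rows, labels):
        children.setdefault(row[feature], []).append(label)
    weighted = sum(len(ls) / len(labels) * entropy(ls) for ls in children.values())
    return entropy(labels) - weighted


def best_feature(rows, labels, candidates):
    return max(candidates, key=lambda f: information_gain(rows, labels, f))


def build_tree(rows, labels, features):
    """Recurse until a node is pure or no unused features remain."""
    if len(set(labels)) == 1 or not features:
        return Counter(labels).most_common(1)[0][0]      # leaf: majority class
    f = best_feature(rows, labels, features)
    remaining = [g for g in features if g != f]          # each feature used once per path
    branches = {}
    for value in set(row[f] for row in rows):
        idx = [i for i, row in enumerate(rows) if row[f] == value]
        branches[value] = build_tree([rows[i] for i in idx], [labels[i] for i in idx], remaining)
    return (f, branches)


def classify(tree, row):
    while isinstance(tree, tuple):   # internal node: (feature index, {value: subtree})
        f, branches = tree
        tree = branches[row[f]]      # raises KeyError for a value unseen in training
    return tree


# Hypothetical records: (family_history, glucose) -> diabetic (1) or not (0).
rows = [("yes", "high"), ("yes", "normal"), ("no", "high"),
        ("no", "normal"), ("yes", "high"), ("no", "normal")]
labels = [1, 0, 1, 0, 1, 0]
tree = build_tree(rows, labels, [0, 1])
print(tree)
```

Here the glucose column separates the classes perfectly, so it has the highest information gain (1 bit) and becomes the root, and both branches are immediately pure leaves.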

Naive Bayes Classifier (NB)

A Naive Bayes classifier is a well-known type of classifier, i.e., a program that assigns a class from a predefined set to an object or case under consideration based on the values of descriptive attributes. A well-known Bayesian network classifier, the Naive Bayes classifier is a probabilistic classifier based on Bayes’ theorem with a naive (strong) independence assumption [444]. The calculation stages of Naive Bayes are as follows:

- Compute the prior probability of each class, i.e., the fraction of training records that belong to that class.

- Compute the likelihood, i.e., the probability of each attribute value given the class.

- Compute the posterior probability of each class given the input attributes by combining the prior and the likelihoods with Bayes’ theorem; the class with the highest posterior probability is the final prediction.

It is a classification technique based on Bayes’ theorem with an assumption of independence among predictors. In simple terms, a Naive Bayes classifier assumes that the presence of a particular feature in a class is unrelated to the presence of any other feature. For example, a fruit may be considered to be an apple if it is red, round, and about 3 inches in diameter. Even if these features depend on each other or on the existence of other features, all of these properties are treated as contributing independently to the probability that this fruit is an apple, which is why the method is called ‘Naive’. The Naive Bayes model is easy to build and particularly useful for very large data sets. Despite its simplicity, Naive Bayes is known to sometimes outperform even highly sophisticated classification methods.
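The three calculation stages (prior, likelihood, posterior) can be sketched for categorical attributes as follows, reusing the apple example. The Laplace smoothing (`alpha=1`), which keeps an unseen attribute value from zeroing out a class, and the small fruit table are assumptions added for illustration.

```python
from collections import Counter, defaultdict


def train_naive_bayes(rows, labels):
    class_counts = Counter(labels)
    # value_counts[c][j][v]: how often attribute j takes value v within class c
    value_counts = defaultdict(lambda: defaultdict(Counter))
    for row, c in zip(rows, labels):
        for j, v in enumerate(row):
            value_counts[c][j][v] += 1
    n_values = [len(set(row[j] for row in rows)) for j in range(len(rows[0]))]
    return class_counts, value_counts, n_values, len(labels)


def posterior(model, row, alpha=1.0):
    class_counts, value_counts, n_values, n = model
    scores = {}
    for c, count in class_counts.items():
        p = count / n                                  # stage 1: prior P(c)
        for j, v in enumerate(row):
            # stage 2: Laplace-smoothed likelihood P(x_j = v | c), attributes treated as independent
            p *= (value_counts[c][j][v] + alpha) / (count + alpha * n_values[j])
        scores[c] = p
    total = sum(scores.values())
    return {c: s / total for c, s in scores.items()}  # stage 3: normalised posterior P(c | x)


# Hypothetical fruit records: (colour, shape, diameter).
rows = [("red", "round", "3in"), ("red", "round", "3in"), ("green", "round", "3in"),
        ("yellow", "long", "7in"), ("yellow", "long", "7in"), ("green", "long", "7in")]
labels = ["apple", "apple", "apple", "banana", "banana", "banana"]
model = train_naive_bayes(rows, labels)
post = posterior(model, ("red", "round", "3in"))
print(max(post, key=post.get), post)
```

For a red, round, 3-inch fruit the unnormalised scores are 0.5 × 0.5 × 0.8 × 0.8 = 0.16 for apple against about 0.0033 for banana, so the posterior for apple is roughly 0.98.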

K-Nearest Neighbors (KNN)

KNN algorithms are supervised, non-parametric learning algorithms that learn the relationship between input and output observations. They are based on the idea that “objects that are ‘near’ each other will also have similar characteristics. Thus if you know the characteristic features of one of the objects, you can also predict it for its nearest neighbour.” k-NN is an improvement on the nearest-neighbour technique: any new instance can be classified by the majority vote of its k nearest neighbours, where k is a positive integer, usually a small number [445].

The nearness criterion is defined by the Euclidean distance: if two objects are O1 = (x11, x12, …, x1n) and O2 = (x21, x22, …, x2n), the Euclidean distance between them is defined according to Eq. (1).

\[ d(O_1, O_2) = \sqrt{\sum_{i=1}^{n} (x_{1i} - x_{2i})^2} \tag{1} \]

The algorithm relies on the distance between two instances, which represents their similarity. KNN finds the k training instances nearest to a new instance and classifies the new instance based on these neighbors, generally by majority vote. Thus, when the algorithm is used for classification, the output is the class membership of the new instance.

The hyperparameter k is a user-defined positive integer, typically small and often odd to avoid tied votes in binary problems. If k = 1, the algorithm assigns the class of the single neighbor nearest to the unclassified instance. If k = 3, KNN takes a majority vote among the three neighbors nearest to the unclassified instance.
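A minimal KNN classifier following the description above might look like this in plain Python. The training points, imagined as (fasting glucose, BMI) pairs with 1 = diabetic, are hypothetical, and `k=3` is an assumed default.

```python
import math
from collections import Counter


def euclidean(o1, o2):
    """Euclidean distance: square root of the summed squared coordinate differences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(o1, o2)))


def knn_predict(train_X, train_y, query, k=3):
    """Majority vote among the k training instances nearest to `query`."""
    neighbours = sorted(zip(train_X, train_y), key=lambda p: euclidean(p[0], query))[:k]
    return Counter(label for _, label in neighbours).most_common(1)[0][0]


# Hypothetical (fasting glucose, BMI) records; 1 = diabetic, 0 = non-diabetic.
train_X = [(85, 22), (90, 24), (100, 26), (150, 33), (160, 35), (170, 31)]
train_y = [0, 0, 0, 1, 1, 1]
print(knn_predict(train_X, train_y, (155, 32)))
print(knn_predict(train_X, train_y, (95, 25)))
```

Because the distance mixes raw units, a feature with a large numeric range (here glucose) dominates; in practice the features are usually normalised first, which is one reason the preprocessing step in Section 1.1 matters for KNN.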

Random Forest (RF)

A random forest classifier is an ensemble of tree-structured classifiers. Each input object is passed to every tree in the forest, every tree votes for a class, and the forest selects the classification with the most votes. The simplest random forest with random features is formed by selecting, at random at each node, a small group of input variables to split on; each tree is grown to maximum size using the CART methodology and is not pruned. Random forest is a machine learning methodology for classification that is commonly used in computational biology. It combines independently trained decision trees, each built from a subset of the training data randomly sampled with replacement. During training, each split in a tree chooses the best feature from a randomly selected subset of the features. Because trees are trained on subsets of both the feature space and the training data, they are not post-pruned [446]. The prediction of an RF is the average (for regression) or the majority vote (for classification) of the predictions of all trained trees. A random forest has three parameters that must be set before training: the number of trees, the minimum node size for a split, and the number of features randomly selected for each split. RF reduces over-fitting by combining multiple over-fitted estimators (i.e., decision trees) into an ensemble. Each decision tree produces its own classification decision, and the category of the test sample is determined by majority vote: the category that receives the most votes across all decision trees is the final result.

One of the biggest advantages of random forest is its versatility. It can be used for both regression and classification tasks, and the relative importance it assigns to the input features is easy to view. Random forest is also a very handy algorithm because its default hyperparameters often produce good predictions; the hyperparameters are straightforward to understand, and there are not many of them. Overfitting is one of the biggest problems in machine learning, but random forests are relatively resistant to it: averaging many decorrelated trees reduces variance, and adding more trees does not in itself make the model overfit. The main limitation of random forest is that a large number of trees can make the algorithm too slow for real-time predictions [447]. In general, these algorithms are fast to train but relatively slow to make predictions once trained, and more accurate predictions require more trees, which results in a slower model. In most real-world applications the random forest algorithm is fast enough, but there are situations where run-time performance is important and other approaches would be preferred. Finally, random forest is a predictive modeling tool rather than a descriptive one, so if you are looking for a description of the relationships in your data, other approaches would be better.
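The three ingredients described above (bootstrap sampling of rows, a random subset of features, and majority voting) can be sketched in plain Python. As a deliberate simplification, each tree here is a depth-one "stump" chosen by Gini impurity rather than a fully grown, unpruned CART tree, so this is a toy illustration of the ensemble mechanics, not a faithful random forest implementation. `n_trees`, `n_features` and the data are assumptions.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def majority(labels):
    return Counter(labels).most_common(1)[0][0]


def fit_stump(X, y, features):
    """Best single split over the given feature indices by weighted Gini impurity.
    Returns (feature, threshold, left_class, right_class)."""
    best = None
    for f in features:
        for thr in sorted(set(x[f] for x in X)):
            left = [t for x, t in zip(X, y) if x[f] <= thr]
            right = [t for x, t in zip(X, y) if x[f] > thr]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, thr, majority(left), majority(right))
    if best is None:  # no valid split in this sample: always predict its majority class
        return (features[0], float("inf"), majority(y), majority(y))
    return best[1:]


def fit_forest(X, y, n_trees=25, n_features=1, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]  # bootstrap: rows drawn with replacement
        feats = rng.sample(range(len(X[0])), n_features)       # random subset of features
        forest.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], feats))
    return forest


def predict_forest(forest, x):
    """Each tree votes; the most common class wins."""
    votes = [left if x[f] <= thr else right for f, thr, left, right in forest]
    return majority(votes)


# Hypothetical two-feature data with two well-separated classes.
X = [(1, 2), (2, 1), (2, 3), (3, 2), (7, 8), (8, 7), (8, 9), (9, 8)]
y = [0, 0, 0, 0, 1, 1, 1, 1]
forest = fit_forest(X, y)
print(predict_forest(forest, (1, 1)), predict_forest(forest, (9, 9)))
```

Growing each tree deeper (recursively applying `fit_stump` with a fresh random feature subset at every node) would turn this into the usual random forest; the voting logic stays the same.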

2.1.2 Regression

Regression analysis consists of a set of machine learning methods that predict a continuous outcome variable (y) from the values of one or more predictor variables (x). Briefly, the goal of regression models is to build a mathematical equation that defines y as a function of the x variables. This equation can then be used to predict the outcome (y) for new values of the predictor variables (x). Linear regression is the simplest and most popular technique for predicting a continuous variable. It assumes a linear relationship between the outcome and the predictor variables.

The linear regression equation can be written as y = b0 + b*x + e, where b0 is the intercept, b is the regression weight or coefficient associated with the predictor variable x, and e is the residual error. With multiple predictor variables, say x1 and x2, the regression equation can be written as y = b0 + b1*x1 + b2*x2 + e. In some situations there might be an interaction effect between predictors; for example, increasing the value of predictor variable x1 may increase the effectiveness of predictor x2 in explaining the variation in the outcome variable [448].
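For the single-predictor case y = b0 + b*x + e, the ordinary least-squares estimates have a closed form: b = Σ(x − x̄)(y − ȳ) / Σ(x − x̄)² and b0 = ȳ − b·x̄. A plain-Python sketch, using hypothetical noise-free data generated from y = 2 + 3x so the recovered coefficients are easy to check:

```python
def fit_simple_linear_regression(x, y):
    """Ordinary least squares for y = b0 + b*x + e; returns (b0, b)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    b = sxy / sxx                 # slope: covariance of x and y over variance of x
    b0 = mean_y - b * mean_x      # the fitted line passes through (mean_x, mean_y)
    return b0, b


# Hypothetical data generated from y = 2 + 3x without noise.
x = [1, 2, 3, 4]
y = [5, 8, 11, 14]
b0, b = fit_simple_linear_regression(x, y)
print(b0, b)
print(b0 + b * 5)  # prediction for a new x = 5
```

The multiple-predictor and interaction cases follow the same least-squares principle but are normally solved with matrix methods (for example NumPy's least-squares routine) rather than this closed form.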

In some cases, the relationship between the outcome and the predictor variables is not linear, and a non-linear regression model, such as polynomial or spline regression, is needed. Regression is a supervised learning technique that helps find the correlation between variables and predicts a continuous output variable from one or more predictor variables. It is mainly used for prediction, forecasting, time series modeling, and exploring cause-and-effect relationships between variables.

Regression fits a line or curve to the data points on the target-predictor graph, and the machine learning model uses this fit to make predictions. In simple words, regression finds the line or curve through the data points such that the vertical distances between the data points and the regression line are minimized. These distances indicate whether the model has captured a strong relationship. The most common regression techniques are linear regression (LR), logistic regression (which, despite its name, is mainly used for classification), polynomial regression, support vector regression, decision tree regression, and random forest regression.

2.2 Unsupervised learning

Unsupervised learning is a machine learning technique in which users do not need to supervise the model. Instead, the model works on its own to discover patterns and information that were previously undetected, mainly in unlabeled data. Compared with supervised learning, it allows users to perform more complex processing tasks, although its results can be less predictable. Unsupervised learning algorithms include clustering, association rules, neural networks, etc.

2.2.1 Clustering

Clustering is the task of dividing a population or set of data points into groups such that data points in the same group are more similar to each other than to data points in other groups. In other words, it groups objects on the basis of their similarity and dissimilarity. Clustering is important because it determines the intrinsic grouping among unlabeled data. There is no universal criterion for a good clustering; it depends on the user and on the criteria that satisfy their needs [449]. The different forms of clustering are explained below.

- Density-Based Methods: These methods treat clusters as dense regions of the space that are separated by regions of lower density. They have good accuracy and the ability to merge two clusters. Examples include DBSCAN (Density-Based Spatial Clustering of Applications with Noise) and OPTICS (Ordering Points To Identify the Clustering Structure).

- Hierarchical Methods: The clusters formed by these methods form a tree-type structure based on a hierarchy, in which new clusters are formed from previously formed ones. There are two categories: agglomerative (bottom-up approach) and divisive (top-down approach).
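As an example of the density-based family, a compact DBSCAN can be written in plain Python. `eps` (neighbourhood radius) and `min_pts` (minimum neighbourhood size, counting the point itself) are the usual DBSCAN parameters; the values and points below are hypothetical, and the O(n²) neighbour search is only suitable for small data.

```python
import math


def dbscan(points, eps, min_pts):
    """Return a cluster id per point; -1 marks noise."""
    UNVISITED, NOISE = None, -1
    labels = [UNVISITED] * len(points)

    def neighbours(i):
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = 0
    for i in range(len(points)):
        if labels[i] is not UNVISITED:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE              # may later become a border point of a cluster
            continue
        labels[i] = cluster                # i is a core point: start a new cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster        # border point reached from a core point
            if labels[j] is not UNVISITED:
                continue
            labels[j] = cluster
            j_neigh = neighbours(j)
            if len(j_neigh) >= min_pts:    # j is also a core point: keep expanding
                queue.extend(j_neigh)
        cluster += 1
    return labels


# Two dense groups and one isolated point (hypothetical 2-D data).
points = [(1, 1), (1.2, 1.1), (0.9, 1.0), (1.1, 0.8),
          (5, 5), (5.1, 5.2), (4.9, 5.0), (5.2, 4.9),
          (10, 0)]
print(dbscan(points, eps=0.5, min_pts=3))  # expected: [0, 0, 0, 0, 1, 1, 1, 1, -1]
```

Unlike k-means, the number of clusters is not fixed in advance, and isolated points are reported as noise instead of being forced into a cluster.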

2.2.2 Association rule

Association rule mining finds interesting associations and relationships among large sets of data items, showing how frequently an itemset occurs in a transaction. A typical example is market basket analysis. Association rule learning is an unsupervised learning technique that checks whether one data item depends on another and maps the dependencies accordingly so that they can be exploited, for example for profit. It applies different rules to discover interesting relations between the variables of a database [450]. Several metrics exist for measuring the associations between thousands of data items:

- Support: Support is the frequency of an itemset X, i.e., how often it appears in the dataset. It is defined as the fraction of the transactions that contain X: \(Supp\ (X)=\frac{freq(X)}{T}\), where freq(X) is the number of transactions containing X and T is the total number of transactions.

- Confidence: Confidence indicates how often a rule X ⇒ Y has been found to be true, i.e., how often items X and Y occur together in the dataset given that X occurs. It is the ratio of the number of transactions that contain both X and Y to the number of transactions that contain X: \(Conf\ (X \Rightarrow Y)=\frac{Supp\ (X \cup Y)}{Supp\ (X)}\).

- Lift: Lift measures the strength of a rule. It is the ratio of the observed support to the support expected if X and Y were independent: \(Lift\ (X \Rightarrow Y)=\frac{Supp\ (X \cup Y)}{Supp\ (X) \times Supp\ (Y)}\). It has three possible interpretations. If lift = 1, the occurrences of the antecedent and the consequent are independent of each other. If lift > 1, the two itemsets are positively dependent, and the value indicates the degree of dependence. If lift < 1, one item is a substitute for the other, which means one item has a negative effect on the other.

The Apriori algorithm is the most common association technique used in machine learning applications.
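The three metrics can be computed directly from a list of transactions. In this plain-Python sketch each transaction is a hypothetical set of patient attributes, and the rule examined is {obese} ⇒ {diabetic}.

```python
def support(transactions, itemset):
    """Fraction of transactions that contain every item in `itemset`."""
    itemset = set(itemset)
    return sum(1 for t in transactions if itemset <= set(t)) / len(transactions)


def confidence(transactions, x, y):
    """Supp(X u Y) / Supp(X): how often the rule X => Y holds when X occurs."""
    return support(transactions, set(x) | set(y)) / support(transactions, x)


def lift(transactions, x, y):
    """Confidence divided by Supp(Y); 1 means X and Y are independent."""
    return confidence(transactions, x, y) / support(transactions, y)


# Hypothetical patient "transactions" (sets of recorded attributes).
transactions = [
    {"obese", "high_glucose", "diabetic"},
    {"obese", "high_glucose"},
    {"obese", "diabetic", "high_glucose"},
    {"high_glucose"},
    {"smoker"},
]
print(support(transactions, {"obese"}))                     # 3 of 5 transactions
print(confidence(transactions, {"obese"}, {"diabetic"}))    # 2 of the 3 obese records
print(lift(transactions, {"obese"}, {"diabetic"}))          # (2/3) / (2/5), above 1
```

A lift of about 1.67 here would suggest that, in this toy data, being obese and being diabetic co-occur more often than independence would predict. Apriori's contribution is pruning the search so that these metrics are only computed for itemsets whose subsets are already frequent.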

2.3 Semi-supervised learning

Semi-supervised learning is a type of machine learning that trains models on a combination of a small amount of labeled data and a large amount of unlabelled data. It thus combines supervised learning, which uses labeled training data, and unsupervised learning, which uses unlabelled training data. In one basic procedure, the developer first clusters similar data using an unsupervised learning algorithm and then uses the existing labeled data to label the rest of the unlabelled data [451].
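The cluster-then-label procedure just described can be sketched in plain Python: cluster all points with k-means, then give each cluster the majority label of its labelled members. The farthest-first initialisation (chosen here so the sketch is deterministic; k-means++ is a common randomised alternative), `k` and the data are assumptions.

```python
import math
from collections import Counter


def kmeans(points, k, iters=20):
    """Plain k-means with farthest-first initialisation; returns a cluster index per point."""
    centroids = [points[0]]
    while len(centroids) < k:  # next centroid: the point farthest from those already chosen
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    assign = []
    for _ in range(iters):
        assign = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        for c in range(k):
            members = [p for p, a in zip(points, assign) if a == c]
            if members:  # keep the previous centroid if a cluster is empty
                centroids[c] = tuple(sum(coord) / len(members) for coord in zip(*members))
    return assign


def cluster_then_label(points, labels, k):
    """labels[i] is None for unlabelled points. Each cluster takes the majority label of
    its labelled members; clusters without any labelled member stay None."""
    assign = kmeans(points, k)
    cluster_label = {}
    for c in range(k):
        known = [l for l, a in zip(labels, assign) if a == c and l is not None]
        cluster_label[c] = Counter(known).most_common(1)[0][0] if known else None
    return [l if l is not None else cluster_label[a] for l, a in zip(labels, assign)]


# Six points, only two of which carry a label (hypothetical data).
points = [(1, 1), (1.5, 2), (2, 1), (8, 8), (9, 8.5), (8.5, 9)]
labels = [0, None, None, 1, None, None]
print(cluster_then_label(points, labels, k=2))  # expected: [0, 0, 0, 1, 1, 1]
```

The approach only works as well as the clustering assumption: if the natural clusters do not line up with the classes, the propagated labels will be wrong.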

2.4 Reinforcement learning

Reinforcement learning differs from supervised learning in that, in supervised learning, the training data come with the answer key, so the model is trained with the correct answers, whereas in reinforcement learning there is no answer key and the reinforcement agent decides what to do to perform the given task. In the absence of a training dataset, the agent learns from its own experience [452].
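To show what learning from experience without an answer key means in practice, here is a tabular Q-learning sketch in plain Python. Q-learning is one common reinforcement learning algorithm, not one the text names, and the five-state corridor environment (move left or right, reward 1 for reaching the last state) and all parameter values are hypothetical.

```python
import random


def q_learning(n_states=5, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning on a toy corridor: action 0 = left, 1 = right;
    reaching the last state gives reward 1 and ends the episode."""
    rng = random.Random(seed)
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]
    for _ in range(episodes):
        s = 0
        for _ in range(100):  # cap the episode length
            if rng.random() < epsilon or q[s][0] == q[s][1]:
                a = rng.randrange(2)                  # explore (or break a tie at random)
            else:
                a = 0 if q[s][0] > q[s][1] else 1     # exploit the current estimate
            s_next = max(0, s - 1) if a == 0 else s + 1
            reward = 1.0 if s_next == goal else 0.0
            # TD target: reward plus discounted value of the best next action (none at the goal)
            target = reward if s_next == goal else reward + gamma * max(q[s_next])
            q[s][a] += alpha * (target - q[s][a])
            s = s_next
            if s == goal:
                break
    return q


q = q_learning()
policy = [0 if q[s][0] > q[s][1] else 1 for s in range(len(q) - 1)]
print(policy)  # greedy action in each non-goal state
```

The agent is never told that "right" is correct; it discovers this because the reward propagates backwards through the Q-values, which is the trial-and-error behaviour described above.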

2.5 Evolutionary learning

Evolutionary algorithms are a heuristic-based approach to solving problems that cannot easily be solved in polynomial time, such as classical NP-hard problems, and anything else that would take far too long to process exhaustively. When used on their own, they are typically applied to combinatorial problems; however, genetic algorithms are often used in tandem with other methods, acting as a quick way to find a reasonably good starting point for another algorithm to work from [453].
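A minimal genetic algorithm illustrates the heuristic search loop. The design choices here (binary tournament selection, one-point crossover, bit-flip mutation, and elitism) are one typical configuration rather than the only one, and the OneMax toy problem (maximise the number of 1 bits) stands in for a real combinatorial objective.

```python
import random


def genetic_algorithm(fitness, n_bits=20, pop_size=30, generations=60,
                      mutation_rate=0.02, seed=0):
    rng = random.Random(seed)

    def tournament(pop):
        """Binary tournament selection: the fitter of two random individuals."""
        a, b = rng.sample(pop, 2)
        return a if fitness(a) >= fitness(b) else b

    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    history = [max(map(fitness, pop))]
    for _ in range(generations):
        children = [max(pop, key=fitness)]  # elitism: the best individual survives unchanged
        while len(children) < pop_size:
            p1, p2 = tournament(pop), tournament(pop)
            cut = rng.randrange(1, n_bits)   # one-point crossover
            child = p1[:cut] + p2[cut:]
            child = [1 - g if rng.random() < mutation_rate else g for g in child]  # bit-flip mutation
            children.append(child)
        pop = children
        history.append(max(map(fitness, pop)))  # best fitness in each generation
    return max(pop, key=fitness), history


# OneMax: fitness is the number of 1 bits, so the optimum is the all-ones string.
best, history = genetic_algorithm(fitness=sum)
print(sum(best), history[0], history[-1])
```

Because of elitism the best fitness never decreases from one generation to the next; the result is a good solution, not a guaranteed optimum, which is why the text describes genetic algorithms as a way to find a starting point for another method.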

2.6 Deep learning

Deep learning is an artificial intelligence (AI) function that imitates the workings of the human brain in processing data and creating patterns for use in decision making. Also known as deep neural learning or deep neural networks, it is a subset of machine learning whose networks are capable of learning without supervision from data that is unstructured or unlabelled [454]. It has the following characteristics:

- Deep learning is an AI function that loosely mimics the workings of the human brain in processing data for use in detecting objects, recognizing speech, translating languages, and making decisions.
- Deep learning models can also learn without human supervision, drawing on data that is both unstructured and unlabelled.
- Deep learning, a form of machine learning, can be used to help detect fraud or money laundering, among other functions.

Common types of neural networks used in deep learning include artificial neural networks (ANN), convolutional neural networks (CNN), and recurrent neural networks (RNN). Neural networks have been widely used to train predictive models for applications such as image processing, disease prediction, and face recognition [439].

2.6.1 ANN

An Artificial Neural Network (ANN) is a group of multiple perceptrons (neurons) at each layer. The basic ANN is also known as a feed-forward neural network because inputs are processed only in the forward direction. It consists of three kinds of layers: input, hidden, and output. The input layer accepts the inputs, the hidden layers process them, and the output layer produces the result. Each connection from one neuron to another has a strength known as a weight (or synaptic weight), and training essentially consists of learning these weights. Information flows from the input layer to the output layer via one or more hidden layers; a network that also has feedback connections, through which information can flow in the reverse direction, is known as a feedback (recurrent) neural network [455].
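The forward flow of information through the input, hidden, and output layers can be sketched as follows. The layer sizes, the ReLU hidden activation, the sigmoid output, and the weight values are hypothetical choices for illustration; a real model would learn its weights from data (for example by backpropagation), which is not shown.

```python
import math

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each neuron applies its activation to a weighted sum of all inputs."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, params):
    hidden = dense(x, params["W1"], params["b1"], relu)         # input -> hidden
    return dense(hidden, params["W2"], params["b2"], sigmoid)   # hidden -> output

params = {  # hypothetical, untrained weights chosen for illustration
    "W1": [[0.5, -0.5], [0.25, 0.25]], "b1": [0.0, 0.0],
    "W2": [[1.0, 2.0]], "b2": [0.5],
}
print(forward([1.0, 2.0], params))  # a single output in (0, 1), about 0.88
```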

2.6.2 CNN

A Convolutional Neural Network (CNN) is a deep learning algorithm that takes an input image, assigns importance (learnable weights and biases) to various aspects or objects in the image, and learns to differentiate one from another. A CNN requires much less pre-processing than other classification algorithms: whereas in primitive methods the filters are hand-engineered, a CNN with enough training can learn these filters (characteristics) itself. The CNN is one of the most popular and widely used deep learning networks, and it has contributed substantially to the current popularity of deep learning. Its main advantage over its predecessors is that it automatically detects significant features without human supervision, which is why it is so widely used [456].
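The filters a CNN learns are applied by sliding them over the image. The sketch below computes a "valid" 2-D convolution (strictly, cross-correlation, which is what most deep learning libraries implement) with stride 1 and no padding. The kernel is hand-set here to respond to a vertical dark-to-bright edge; in a trained CNN its values would be learned. The image and kernel are hypothetical.

```python
def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation, stride 1, no padding (the core op of a CNN layer)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    # Each output value is the elementwise product of the kernel and one image patch, summed.
    return [[sum(kernel[a][b] * image[i + a][j + b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]
edge_kernel = [[-1, 1],
               [-1, 1]]          # responds where intensity rises from left to right
print(conv2d(image, edge_kernel))
```

The feature map is large only in the column where the edge sits, which is the kind of local pattern detector a CNN builds up from data.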

2.6.3 RNN

In a feed-forward neural network, information moves in only one direction: from the input layer, through the hidden layers, to the output layer. It moves straight through the network and never touches a node twice.

Feed-forward neural networks have no memory of the inputs they receive and are therefore poor at predicting what comes next in a sequence. Because a feed-forward network considers only the current input, it has no notion of order in time; apart from what it learned during training, it cannot remember anything about the past. In an RNN, by contrast, information cycles through a loop: when the network makes a decision, it considers the current input as well as what it has learned from the inputs it received previously [457].

A standard RNN has only short-term memory; combined with long short-term memory (LSTM) units, it also gains long-term memory. This internal memory lets a recurrent neural network remember earlier elements of a sequence: at each step it produces an output and a hidden state (in some variants, the output itself), and loops that state back into the network as an input for the next step. An RNN therefore has two inputs: the present and the recent past. This matters because the order of a sequence carries crucial information about what comes next, which is why RNNs can handle tasks that other algorithms cannot. A feed-forward neural network, like other deep learning algorithms, assigns a weight matrix to its inputs and then produces the output; an RNN applies weights both to the current input and to the previous state, and adjusts both sets of weights through gradient descent and backpropagation through time (BPTT).
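The recurrence described above can be written as h_t = tanh(w_x x_t + w_h h_{t-1} + b). The sketch below runs that update for a single hidden unit (an Elman-style RNN, assumed here for simplicity; the weights are hypothetical and untrained). Feeding one non-zero input followed by zeros shows the memory effect: the state stays non-zero after the input has gone, whereas a feed-forward unit computing tanh(w_x x + b) with b = 0 would output 0 for each zero input.

```python
import math

def rnn_forward(inputs, w_x, w_h, b, h0=0.0):
    """Single-unit Elman RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    h, states = h0, []
    for x in inputs:
        # The new state mixes the current input with the previous state (the "memory").
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

states = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
print([round(s, 4) for s in states])  # decaying but non-zero after the input stops
```

With w_h below 1 the remembered signal fades geometrically; this vanishing of long-range information is the limitation that LSTM units are designed to address.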

3 Review based on machine learning technique in diabetes prediction

3.1 Supervised learning (Classification)

3.1.1 Support vector machine (SVM)

Diabetic retinopathy has become a common eye disease in most developed countries. It occurs in 80% of all diabetic cases and is the leading cause of blindness [13]. Regular screening is the most efficient way of reducing preventable eye damage. Diabetic retinopathy has two kinds of symptoms: dark lesions, which include hemorrhages and microaneurysms, and bright lesions, such as exudates and cotton wool spots. Microaneurysms are commonly detected in retinal fluorescein angiography [14, 15].

In 2005, Zhang and Chutatape [16] introduced an SVM approach for detecting hemorrhages in background diabetic retinopathy. The work focuses on hemorrhages with "dot" and "blot" configurations, whose color is similar to that of the blood vessels, and applies a top-down detection strategy. Instead of explicit image features, the SVM classifier takes as its input vector features extracted by combined 2DPCA (Two-Dimensional Principal Component Analysis). After the hemorrhages are located in the ROI (Region of Interest), a post-processing stage accurately segments their boundaries. The paper demonstrates a new combination of techniques for this problem and shows the improvement it offers over other methods: combined 2DPCA is proposed, and a virtual SVM is applied to achieve higher classification accuracy. The SVM uses a Gaussian kernel. At a false-positive (FP) rate of two FPs per image, the true-positive (TP) rate of the SVM is 89.1%, compared with 84.6% for an ANN; the SVM, based on SRM (Structural Risk Minimization), thus appears superior to the ANN, which employs ERM (Empirical Risk Minimization). The authors also compared SVM with VSVM (Virtual SVM) and found that VSVM, which exploits rotation and illuminance invariance, classifies more accurately: at two FPs per image, the TP rate of VSVM is 94%, while that of SVM is 93.2%.
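The core SVM idea, finding a maximum-margin separating boundary by minimizing a regularized hinge loss, can be sketched without a library. This is a simplification, not the method of [16]: it trains a linear soft-margin SVM with the Pegasos stochastic subgradient method rather than a Gaussian-kernel SVM, appends a constant feature as a (regularized) bias, and uses hypothetical two-feature toy data with labels +1 and -1.

```python
def train_linear_svm(X, y, lam=0.01, epochs=200):
    """Pegasos-style subgradient descent on the soft-margin SVM objective
    lam/2 * ||w||^2 + mean(max(0, 1 - y * w.x)). Labels must be +1 / -1.
    A constant 1 is appended to each x so the last weight acts as a bias."""
    Xb = [list(x) + [1.0] for x in X]
    w = [0.0] * len(Xb[0])
    t = 0
    for _ in range(epochs):
        for x, label in zip(Xb, y):  # fixed pass order, for reproducibility
            t += 1
            eta = 1.0 / (lam * t)    # Pegasos step size
            margin = label * sum(wi * xi for wi, xi in zip(w, x))
            w = [(1 - eta * lam) * wi for wi in w]       # shrink: regularization step
            if margin < 1:                               # hinge loss active: move toward x
                w = [wi + eta * label * xi for wi, xi in zip(w, x)]
    return w

def predict(w, x):
    score = sum(wi * xi for wi, xi in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1

X = [(2, 2), (3, 3), (2, 3), (-2, -2), (-3, -3), (-2, -3)]   # hypothetical toy data
y = [1, 1, 1, -1, -1, -1]
w = train_linear_svm(X, y)
print([predict(w, x) for x in X])
```

A kernel SVM such as the Gaussian-kernel model above replaces the dot product with a kernel function and is usually trained with a dedicated solver; the linear version keeps the margin and hinge-loss logic visible.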

In 2006, Stoean et al. [17] proposed an ESVM (Evolutionary Support Vector Machine) technique for the diagnosis of diabetes mellitus. The main aim of the paper is to validate the new paradigm of evolutionary support vector machines (ESVMs) for binary classification, including through an application to a real-world problem, the diagnosis of diabetes mellitus. For experimental evaluation, ESVM was compared with several other approaches: CPLEX (a commercial mathematical-programming solver), SVMlight, Active SVM, and Critical SVM. The results show that the proposed technique offers accuracy competitive with both state-of-the-art classical approaches and the standard SVM formulation. Applying parameter-tuning methods such as SPO (Sequential Parameter Optimization) to ESVMs with a polynomial kernel might yield better values for the evolutionary parameters and reduce the proportion of training errors. The proposed method achieves a training accuracy of 77.95% and a test accuracy of 80.22%.

In 2008, Balakrishnan et al. [18] introduced a feature selection approach for finding an optimal feature subset that enhances the classification accuracy of the Naive Bayes classifier. Experiments on the Pima Indian Diabetes Dataset assessed the effectiveness of the approach, and the results confirm that SVM Ranking with Backward Search yields a promising improvement in feature selection and enhances classification accuracy. Polat et al. [19] proposed a cascade learning system based on Generalized Discriminant Analysis (GDA) and the Least Squares Support Vector Machine (LS-SVM). The system has two stages: the first uses GDA as a pre-processing step to discriminate feature variables between healthy and diabetic patients, and the second uses LS-SVM to classify the diabetes dataset. Using 10-fold cross-validation, LS-SVM alone obtained 78.21% classification accuracy, whereas the proposed GDA–LS-SVM system obtained 82.05%.

In 2009, Wu et al. [20] developed a semi-supervised learning method (LapSVM) for diagnosing diabetes. LapSVM was first trained as a fully supervised classifier on the diabetes dataset, obtaining 79.17% accuracy; trained as a semi-supervised classifier, it reached 82.29%, higher than previously reported results. The experiments suggest a promising application: LapSVM can be used to solve a fully supervised learning problem by recasting it as a semi-supervised one. The results suggest that LapSVM could help physicians diagnose diabetes and could be a very promising method when much of the data is not class-labelled.

In 2010, Yu et al. [21] developed and validated SVM models for two classification schemes: Scheme I (diagnosed or undiagnosed diabetes vs. pre-diabetes or no diabetes) and Scheme II (undiagnosed diabetes or pre-diabetes vs. no diabetes). The SVM models were used to select the sets of variables that best classified individuals into these categories. The overall discriminative ability of Schemes I and II, measured by the area under the ROC curve (AUC), was 83.47% and 73.18%, respectively. Barakat et al. [22] proposed an SVM for the diagnosis of diabetes with an additional explanation module that turns the “black box” SVM model into an intelligible representation of its diagnostic (classification) decisions. On a real-life diabetes dataset, the intelligible SVM generated a comprehensible rule set and achieved a prediction accuracy of 94%, a sensitivity of 93%, and a specificity of 94%, making it a promising tool for predicting diabetes.
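Since several studies in this section report AUC, a short sketch of how it can be computed may help. It uses the Mann-Whitney formulation: AUC equals the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half. The scores and labels are hypothetical, and the pairwise loop is O(P·N), meant for small examples only.

```python
def roc_auc(scores, labels):
    """AUC as P(score of random positive > score of random negative); ties count 0.5.
    labels are 1 (positive, e.g. diabetic) or 0 (negative)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical model scores: 5 of the 6 positive/negative pairs are ranked correctly.
print(roc_auc([0.9, 0.8, 0.4, 0.35, 0.1], [1, 1, 0, 1, 0]))
```

An AUC of 0.5 corresponds to random ranking and 1.0 to perfect separation, which is how values such as 83.47% and 73.18% above can be read.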

In 2011, Calisir et al. [23] proposed LDA–MWSVM, an automatic diagnosis system for diabetes based on Linear Discriminant Analysis (LDA) and a Morlet Wavelet Support Vector Machine (MWSVM) classifier. In the first stage, LDA separates feature variables between healthy and diabetic patients; in the second, these features are fed to the MWSVM classifier; and in the third, the system's diagnostic performance is evaluated with sensitivity and specificity analysis, classification accuracy, and a confusion matrix. The system achieved a classification accuracy of about 89.74%. Gupta et al. [24] analyzed the performance of several data mining classification techniques, using three different machine learning tools, on four healthcare datasets. Each technique was evaluated by its percentage accuracy and error rate using 10-fold cross-validation, and the most suitable technique for each dataset was chosen by the highest classification accuracy and lowest error rate. On the PIMA Indian Diabetes dataset, SVM achieved an accuracy of 96.74% with an error rate of 3.18%, outperforming the other techniques.
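The evaluation measures used in these studies follow directly from the confusion matrix. The sketch below assumes the standard definitions (sensitivity = TP/(TP+FN), specificity = TN/(TN+FP), precision = TP/(TP+FP), accuracy = (TP+TN)/total, error rate = 1 − accuracy); the counts are hypothetical.

```python
def confusion_metrics(tp, fp, tn, fn):
    """Standard binary-classification measures from confusion-matrix counts."""
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total
    return {
        "sensitivity": tp / (tp + fn),   # recall / true-positive rate
        "specificity": tn / (tn + fp),   # true-negative rate
        "precision": tp / (tp + fp),     # positive predictive value
        "accuracy": accuracy,
        "error_rate": 1 - accuracy,
    }

m = confusion_metrics(tp=40, fp=5, tn=45, fn=10)   # hypothetical counts
print({name: round(value, 4) for name, value in m.items()})
```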

In 2020, Xue et al. [88] proposed an automatic diagnosis system for diabetes, training supervised machine learning algorithms (Support Vector Machine (SVM), Naive Bayes, and LightGBM) on real data from 520 diabetic and potentially diabetic patients aged 16 to 90. Although Naive Bayes is a popular classification algorithm, it reached an accuracy of only 93.27% on this dataset, and LightGBM reached only 88.46%. SVM achieved the highest accuracy, 96.54%, as confirmed by the confusion-matrix evaluation.

In 2021, Chaves et al. [91] presented a comparative study of data mining techniques for the early diagnosis of diabetes, using a publicly accessible dataset of 520 instances with 17 attributes each. Naive Bayes, Neural Network, AdaBoost, k-Nearest Neighbors, Random Forest, and Support Vector Machine methods were tested, and the results suggest that Neural Networks should be used for diabetes prediction: the proposed model achieves an AUC of 98.3%, an accuracy of 98.1%, an F1-score, precision, and sensitivity of 98.4%, and a specificity of 97.5%. In six successive experiments, the classifiers correctly predicted the following numbers of the 520 instances: Naive Bayes, 452 (86.92%); Neural Network, 510 (98.08%); AdaBoost, 506 (97.31%); kNN, 506 (97.31%); Random Forest, 504 (96.92%); and SVM, 505 (97.12%).

In 2022, Li et al. [408] identified effective biomarkers for the efficient diagnosis of type 2 diabetes. Their SVM model identified patients with type 2 diabetes with a sensitivity and specificity of 100% and an area under the curve of 1 in both the training and validation datasets. In 2023, Lei et al. [441] proposed PVSSVM, a publicly verifiable and secure SVM classification scheme for cloud-based health monitoring services. It uses homomorphic encryption and secret sharing to protect, respectively, the confidentiality of the model and of the data on the cloud server. Built on a multi-server verifiable computation framework, PVSSVM supports public verification of predicted results, and batch verification reduces computation overhead by approximately 83.71% compared with one-by-one verification. Table 1 presents related work on the diagnosis of diabetes based on the SVM algorithm.

3.1.2 Decision Tree (DT)

In 2002, Breault et al. [102] applied a classification tree approach, Classification and Regression Trees (CART), with a binary target variable of HgbA1c > 9.5 and 10 predictors: age, sex, emergency department visits, office visits, comorbidity index, dyslipidemia, hypertension, cardiovascular disease, retinopathy, and end-stage renal disease. The first level of the tree shows that, simply dividing people at an age cut-point of 65.581 years, 19.4% of the younger people (n = 3987) have a bad HgbA1c, 2.8 times the rate among the older people (7.0%, n = 3966). The data come from a 442-bed tertiary care hospital and a 500-physician multi-specialty clinic with 25 locations.
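The age cut-point in the first split is the kind of threshold CART chooses automatically: for classification, CART typically scores candidate splits by Gini impurity and keeps the one that makes the child nodes purest. The sketch below searches a single numeric feature; the ages and outcome flags are hypothetical, and the real study searched all 10 predictors.

```python
def gini(labels):
    """Gini impurity of a list of 0/1 labels: 2p(1 - p) for positive share p."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)

def best_threshold(values, labels):
    """CART-style search on one numeric feature: try the midpoint between each pair of
    adjacent distinct values; keep the cut with the lowest size-weighted child impurity."""
    distinct = sorted(set(values))
    best = None
    for lo, hi in zip(distinct, distinct[1:]):
        cut = (lo + hi) / 2
        left = [y for v, y in zip(values, labels) if v <= cut]
        right = [y for v, y in zip(values, labels) if v > cut]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(values)
        if best is None or score < best[1]:
            best = (cut, score)
    return best

# Hypothetical ages and a 0/1 flag for a "bad" HgbA1c.
ages = [40, 45, 50, 55, 70, 75, 80, 85]
bad_a1c = [1, 1, 1, 0, 0, 0, 0, 1]
print(best_threshold(ages, bad_a1c))   # (cut-point, weighted Gini impurity)
```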

In 2004, Huang [105] investigated the potential of data mining to spot trends in diabetic data and predict outcomes, using feature selection to improve the efficiency of the data mining algorithm. A C4.5 decision tree was used: before feature selection, discretized C4.5 gave the best classification performance, and after feature selection C4.5 again obtained the best result. The diabetic data were collected from the Ulster Community and Hospitals Trust for the years 2000 to 2004; the dataset contained clinical information on 2017 type 2 diabetic patients, 1124 male and 893 female.
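C4.5 (and its WEKA implementation J48, used in several studies below) chooses splits by the gain ratio: the information gain of a split divided by its split information, which penalizes attributes with many values. A minimal sketch for a categorical attribute follows; numeric thresholds, missing values, and pruning, which C4.5 also handles, are omitted, and the example values are hypothetical.

```python
import math
from collections import Counter

def entropy(items):
    """Shannon entropy (bits) of the value distribution in items."""
    n = len(items)
    return -sum(c / n * math.log2(c / n) for c in Counter(items).values())

def gain_ratio(attribute, labels):
    """C4.5 split criterion for a categorical attribute:
    information gain divided by split information (entropy of the partition sizes)."""
    n = len(labels)
    groups = {}
    for a, y in zip(attribute, labels):
        groups.setdefault(a, []).append(y)
    remainder = sum(len(g) / n * entropy(g) for g in groups.values())
    gain = entropy(labels) - remainder
    split_info = entropy(attribute)
    return gain / split_info if split_info > 0 else 0.0

# Hypothetical BMI category vs. diabetic (1) / non-diabetic (0).
print(gain_ratio(["high", "high", "normal", "normal"], [1, 1, 0, 0]))
```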

In 2008, Liou et al. [109] proposed detecting fraudulent or abusive reporting by health care providers from their claims for diabetic outpatient services. The work was validated on Taiwan's National Health Insurance system using three data mining algorithms: decision tree, logistic regression, and neural network. The decision-tree-based algorithm achieved the highest correct identification rate (99%), outperforming logistic regression (92%) and the neural network (96%).

In 2010, Patil et al. [112] developed a Hybrid Prediction Model (HPM) that uses the Simple K-means clustering algorithm to validate the chosen class labels of the data (incorrectly classified instances are removed, i.e. patterns are extracted from the original data) and then applies a classification algorithm to the resulting set. The C4.5 algorithm, with k-fold cross-validation, is used to build the final classifier. The Pima Indians Diabetes data were obtained from the University of California, Irvine (UCI) Machine Learning Repository. The proposed HPM obtained a classification accuracy of 92.38%, and its performance was also evaluated with sensitivity and specificity, the measures commonly used in medical classification studies.

In 2012, Kelarev et al. [116] addressed the detection and monitoring of cardiovascular autonomic neuropathy (CAN) in diabetes patients. Using a small set of previously identified features, the work empirically investigates and compares several ensemble methods based on decision trees in a novel application: processing sensor data from diabetes patients for pervasive health monitoring of CAN. The experiments relied on an extensive database collected by the Diabetes Complications Screening Research Initiative at Charles Sturt University. The best outcomes were obtained by a novel combined ensemble of AdaBoost (accuracy = 94%) and Bagging (accuracy = 92.99%) based on J48.
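Bagging, one of the two ensemble methods in this combination, trains each base model on a bootstrap sample and combines the models by majority vote. The sketch below is a simplified illustration: it uses depth-1 decision stumps instead of J48 trees, does not show AdaBoost, and uses hypothetical one-feature data.

```python
import random
from collections import Counter

def fit_stump(X, y):
    """Depth-1 tree: the (feature, threshold, sign) with the fewest training errors."""
    best = None
    for f in range(len(X[0])):
        for thr in sorted({x[f] for x in X}):
            for sign in (1, -1):
                preds = [1 if sign * (x[f] - thr) > 0 else 0 for x in X]
                errors = sum(p != t for p, t in zip(preds, y))
                if best is None or errors < best[0]:
                    best = (errors, f, thr, sign)
    _, f, thr, sign = best
    return lambda x: 1 if sign * (x[f] - thr) > 0 else 0

def bagging(X, y, n_models=15, seed=0):
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        # Bootstrap sample: draw len(X) rows with replacement.
        idx = [rng.randrange(len(X)) for _ in range(len(X))]
        models.append(fit_stump([X[i] for i in idx], [y[i] for i in idx]))
    # Ensemble prediction: majority vote over the base models.
    return lambda x: Counter(m(x) for m in models).most_common(1)[0][0]

X = [[1], [2], [3], [4], [6], [7], [8], [9]]   # hypothetical single feature
y = [0, 0, 0, 0, 1, 1, 1, 1]
model = bagging(X, y)
print(model([0.5]), model([9.5]))
```

Averaging over bootstrap samples mainly reduces variance, which is why bagging pairs well with unstable learners such as unpruned decision trees.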

In 2014, Kaur et al. [129] proposed an improved J48 algorithm for predicting diabetes, modifying the J48 classifier to increase the accuracy of the data mining procedure. The WEKA data mining tool was used as an API from MATLAB to generate the J48 classifiers. On the Pima Indians Diabetes dataset, the proposed algorithm achieved an accuracy of 99.87%, a large improvement over the existing J48 algorithm and over other algorithms, which reached at most 77.21%.

In 2018, Zou et al. [155] used a decision tree, random forest, and neural network to predict diabetes mellitus. The dataset consists of hospital physical examination data from Luzhou, China, with 14 attributes. Five-fold cross-validation was used to examine the models, and, to verify the general applicability of the methods, the better-performing methods were also evaluated in independent test experiments. Data from 68,994 healthy people and 68,994 diabetic patients were randomly selected as the training set; because the data were unbalanced, data were randomly extracted five times, and the result is the average of these five experiments. Principal component analysis (PCA) and minimum redundancy maximum relevance (mRMR) were used to reduce dimensionality. Random forest reached the highest accuracy (ACC = 0.8084) when all attributes were used.

In 2020, Pei et al. [167] proposed a J48 decision-tree-based diabetes prediction system for Chinese people. A total of 10,436 participants who had a health check-up between January and July 2017 were recruited; after appropriate data mining procedures, 3454 participants remained in the final dataset. These were randomly allocated to a training dataset (70%, 2420 cases) for constructing the decision tree and a testing dataset (30%, 1034 cases) for evaluating its performance. The proposed approach achieved a classification accuracy of 90.3%, with a precision of 89.7% and a recall of 90.3%. Table 2 presents related work on the diagnosis of diabetes based on the Decision Tree (DT) algorithm.

3.1.3 K nearest neighbour (KNN)

In 2010, Lee et al. [175] proposed a monitoring and advisory system for diabetes patient management using a rule-based method and KNN. The system aims to provide appropriate management for diabetes patients according to their blood sugar level: it transmits information about each patient's blood sugar, blood pressure, food consumption, exercise, etc., and manages treatment by recommending and monitoring food consumption, physical activity, insulin dosage, etc., so that patients can better manage their condition. Rules and the K Nearest Neighbor (KNN) classifier are combined to obtain the optimal treatment recommendation, and the monitoring system is implemented using Web Services and Personal Digital Assistant (PDA) programming.

In 2015, Farahmandian et al. [181] presented a case study of data mining algorithms for the diagnosis and prediction of diabetes, evaluating Support Vector Machine (SVM), K Nearest Neighbors (KNN), Naïve Bayes, ID3, C4.5, C5.0, and CART on the Pima dataset of 768 patient records. SVM achieved an accuracy of 81.77%.

In 2018, Dey et al. [186] implemented a web application to predict diabetes using machine learning algorithms. The work develops an architecture capable of predicting whether a patient has diabetes, with the main aim of building a web application around the most accurate of several powerful machine learning algorithms. It uses the benchmark Pima Indian dataset, which supports predicting the onset of diabetes from diagnostic measurements. The Artificial Neural Network (ANN) achieved a prediction accuracy of 82.35%, a significant improvement, whereas KNN achieved 66.5%.

In 2020, Gupta et al. [195] enhanced diabetes prediction performance by finding the optimal K for the KNN classifier together with a feature selection method. KNN and other machine learning methods are used in the prediction model to classify patients as diabetic or non-diabetic, using the PIMA diabetes dataset and a Python implementation. The study improves the KNN classifier through feature selection, normalization, and different numbers of neighbors, measuring performance by accuracy, precision, sensitivity, specificity, F1 score, and error rate. KNN performs best when the number of neighbors K is 33, 40, or 45: accuracy and error rate are the same for these values (87.01% and 12.99%, respectively), while the other metrics vary slightly.
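The tuning loop described above can be sketched as min-max normalization fitted on the training data, a plain Euclidean KNN, and a search over candidate K values scored on held-out data. This is an illustrative sketch, not Gupta et al.'s code: the two features, the records, and the tiny validation set are hypothetical, and feature selection is omitted. With real data such as PIMA, each K would be scored by cross-validation rather than on two validation points.

```python
import math
from collections import Counter

def min_max_fit(X):
    """Per-feature (min, max) learned from the training data only."""
    return [(min(col), max(col)) for col in zip(*X)]

def min_max_apply(X, ranges):
    # Rescale every feature to [0, 1] so features in large units (e.g. mg/dL) do not
    # dominate the Euclidean distance over features in small units (e.g. years).
    return [[(v - lo) / (hi - lo) if hi > lo else 0.0 for v, (lo, hi) in zip(row, ranges)]
            for row in X]

def knn_predict(train_X, train_y, x, k):
    """Majority label among the k training points closest to x."""
    nearest = sorted(zip(train_X, train_y), key=lambda pair: math.dist(x, pair[0]))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

# Hypothetical [glucose, age] records; 1 = diabetic, 0 = non-diabetic.
train_X = [[85, 25], [90, 30], [95, 45], [100, 35], [150, 50], [160, 55], [170, 40], [180, 60]]
train_y = [0, 0, 0, 0, 1, 1, 1, 1]
val_X, val_y = [[92, 28], [165, 52]], [0, 1]

ranges = min_max_fit(train_X)
tx, vx = min_max_apply(train_X, ranges), min_max_apply(val_X, ranges)
accuracy = {}
for k in (1, 3, 5):  # candidate K values; keep the one with the best validation accuracy
    hits = sum(knn_predict(tx, train_y, x, k) == y for x, y in zip(vx, val_y))
    accuracy[k] = hits / len(val_y)
print(accuracy)
```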

In 2018, Sarkar et al. [197] proposed K-Nearest Neighbor learning-based diabetes mellitus prediction and analysis for eHealth services. The work builds an optimal K Nearest Neighbor (Opt-KNN) prediction model from patients' habitual attributes in various dimensions, determining the number of neighbors that gives a low error rate and hence better prediction. Because K should be optimized according to the patterns in the dataset, the effectiveness of this eHealth model was examined through experiments on real-world diabetes mellitus data collected from hospitals. The lowest error rate was achieved at K = 3, so the Opt-KNN model dynamically selects K = 3 as the optimal value for building an effective disease risk prediction model.

In 2021, Mohanty et al. [199] developed a KNN-based prediction model for diabetic patients. A machine learning algorithm is used to identify patterns in the dataset and calculate prediction accuracy, in the hope that this serves as a stepping stone towards tools that can support medical diagnosis and treatment; an efficient diagnostic tool could greatly improve healthcare. The fundamental factors considered in the dataset are age, gender, region of residence, and blood group. Predictions should reach 98% accuracy for the model to be acceptable in the development of real-time diagnostic tools.

In 2021, Patra et al. [200] analyzed and predicted the Pima Indian Diabetes Dataset (PIDD) using the SDKNN classifier technique, which uses a new distance formula to find the nearest neighbors in KNN: the standard deviation of the attributes serves as the tool for measuring the distance between training and test data. The dataset was split into 90% training and 10% testing data, and the approach achieved an accuracy of 83.2%, an improvement over the other techniques compared. Table 3 presents related work on diabetes based on KNN.

3.1.4 Naive Bayes (NB)

In 2010, Sopharak et al. [202] proposed a machine learning approach for automatic exudate detection in retinal images from diabetic patients, running a series of experiments on feature selection and exudate classification with naive Bayes and support vector machine (SVM) classifiers. The naive Bayes model was first fitted to a training set of 15 features extracted from each of 115,867 positive examples of exudate pixels and an equal number of negative examples. Feature selection was then performed on the naive Bayes model by removing features one at a time until classification performance stopped improving. To find the best SVM, the authors started from the best naive Bayes feature set and repeatedly added back previously removed features. The naive Bayes and SVM classifiers outperformed the NN classifier, with best overall sensitivity, specificity, precision, and accuracy of 92.28%, 98.52%, 53.05%, and 98.41%, respectively.
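A naive Bayes classifier scores each class by its prior times the product of per-feature likelihoods, treating features as conditionally independent given the class. The sketch below assumes Gaussian likelihoods for continuous features (the exact likelihood model used by Sopharak et al. is not described here) and uses hypothetical two-feature records; it works in log space to avoid numeric underflow when many features, such as their 15, are combined.

```python
import math
from collections import defaultdict

def fit_gaussian_nb(X, y):
    """Per class: prior plus the mean and variance of every feature."""
    by_class = defaultdict(list)
    for row, label in zip(X, y):
        by_class[label].append(row)
    model = {}
    for label, rows in by_class.items():
        cols = list(zip(*rows))
        means = [sum(c) / len(c) for c in cols]
        # Small variance floor avoids division by zero for constant features.
        variances = [sum((v - m) ** 2 for v in c) / len(c) + 1e-9 for c, m in zip(cols, means)]
        model[label] = (len(rows) / len(X), means, variances)
    return model

def predict_nb(model, x):
    def log_posterior(label):
        prior, means, variances = model[label]
        # log prior + sum of per-feature Gaussian log-likelihoods (independence assumption)
        return math.log(prior) + sum(
            -0.5 * math.log(2 * math.pi * var) - (v - m) ** 2 / (2 * var)
            for v, m, var in zip(x, means, variances))
    return max(model, key=log_posterior)

# Hypothetical [glucose, age] records; 1 = diabetic, 0 = non-diabetic.
X = [[85, 25], [90, 30], [95, 45], [100, 35], [150, 50], [160, 55], [170, 40], [180, 60]]
y = [0, 0, 0, 0, 1, 1, 1, 1]
model = fit_gaussian_nb(X, y)
print(predict_nb(model, [92, 28]), predict_nb(model, [165, 52]))
```

Backward feature selection, as in the study, would repeatedly refit this model with one feature dropped and keep the removal only if validation performance does not worsen.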

In 2013, Lee [206] predicted fasting plasma glucose (FPG) status, which is used in diagnosing type 2 diabetes, from combinations of anthropometric measures among Korean adults. A total of 4870 subjects (2955 females and 1915 males) participated. Based on 37 anthropometric measures, predictions of FPG status using individual versus combined measures were compared with two machine learning algorithms. With combined measures, the areas under the receiver operating characteristic curve for logistic regression and the naive Bayes classifier were 0.741 and 0.739 in females and 0.687 and 0.686 in males, respectively.

In 2016, Songthung et al. [148] proposed an approach to enhance type 2 diabetes mellitus risk prediction using machine learning. The work uses an extensive dataset gathered from 12 hospitals in Thailand during 2011–2012, with 22,094 records of a screened population of females aged 15 years or older. RapidMiner Studio 7.0 was used with Naive Bayes and CHAID (Chi-squared Automatic Interaction Detector) decision tree classifiers to predict high-risk individuals, and the results were compared with existing hand-computed diabetes risk scores. Naive Bayes gave good coverage and good high-risk percentages compared with both the risk scoring and the decision tree.

In 2018, Das et al. [209] classified diabetes mellitus using data mining techniques. The research aims to predict diabetes using data mining (DM) techniques such as classification and clustering, of which classification is one of the most suitable for predicting diabetes. J48 and Naïve Bayes were used for early detection; on 200 patient records, Naive Bayes outperformed J48 in that it took less time to build the model.

In 2019, Khan et al. [213] introduced a machine-learning-based intelligent system to improve the accuracy of diabetes prediction. First, an algorithm based on Naïve Bayes with prior clustering was proposed; second, its performance was evaluated on 532 diabetic patient records; finally, it was compared with the existing Naïve Bayes algorithm. The comparison showed that the proposed algorithm improved accuracy.

In 2021, Jackins et al. [216] proposed AI-based smart prediction of clinical disease using random forest and Naive Bayes classifiers. On the diabetes data, Naive Bayes achieved accuracies of 76.72% and 74.46% on the training and test data, respectively, and random forest achieved 98.88% and 74.03%. Performance on the disease data was calculated and compared for both algorithms, and the simulations show the effectiveness of the classification techniques as well as the nature and complexity of the dataset used.

In 2020, Rghioui et al. [218] introduced a smart glucose monitoring system for diabetic patients: an intelligent architecture for diabetes surveillance that lets physicians remotely monitor their patients' health through sensors integrated into smartphones and smart portable devices. The architecture includes an algorithm that detects whether a parameter has exceeded a threshold, which may or may not indicate an emergency. To verify the system, the authors developed a small portable device that measures blood glucose level and body temperature. In the evaluation, the system using the J48 algorithm showed excellent classification, with the highest accuracy of 99.17%, a sensitivity of 99.47%, and a precision of 99.32%. Table 4 presents related work on diabetes based on NB.

3.1.5 Random Forest (RF)

In 2020, Wang et al. [230] predicted the medical expenditures of diagnosed diabetics and assessed related factors using a random forest model. Data were collected from the household component of the US Medical Expenditure Panel Survey, 2000–2015. The random forest (RF) model was built with the randomForest package in R, and Spearman correlation coefficients (rs), mean absolute error (MAE), and mean relative error (MRE) were computed to assess the predictions of all models. The RF model was slightly superior to the traditional regression model and could be used to predict the medical expenditures of diabetics and assess their related factors.

In 2021, Wang et al. [231] presented an exploratory study on classifying diabetes mellitus with a combined random forest classifier. The study tested several supervised classifiers, combined with SVM-SMOTE and two feature dimensionality reduction methods (logistic stepwise regression and LASSO), on diabetes survey sample data with unbalanced categories and complex related factors. The authors analyzed the classification results of four supervised classifiers under four data processing methods. Five indicators (accuracy, precision, recall, F1-score, and AUC) served as the key measures of model performance. The random forest classifier combined with SVM-SMOTE resampling and LASSO feature screening (accuracy = 0.890, precision = 0.869, recall = 0.919, F1-score = 0.893, AUC = 0.948) proved the best way to identify people at high risk of DM. The combined algorithm also enhanced classification performance for predicting high-risk individuals.
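The resampling step can be illustrated with a minimal sketch of basic SMOTE, which creates synthetic minority samples by interpolating between a minority sample and one of its nearest minority neighbours. This is plain SMOTE, not the SVM-SMOTE variant used in [231]; SVM-SMOTE concentrates new samples near the class boundary found by an SVM. The minority points are hypothetical (glucose, BMI) pairs.

```python
import math
import random

def smote(minority, n_synthetic, k=3, seed=0):
    """Basic SMOTE: interpolate between a random minority sample and one of
    its k nearest minority neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_synthetic):
        i = rng.randrange(len(minority))
        base = minority[i]
        others = [p for j, p in enumerate(minority) if j != i]
        neighbours = sorted(others, key=lambda p: math.dist(base, p))[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append(tuple(b + gap * (q - b) for b, q in zip(base, neighbour)))
    return synthetic

# Hypothetical minority-class samples: (glucose mg/dL, BMI).
minority = [(150.0, 33.0), (160.0, 35.0), (145.0, 31.0), (170.0, 36.0), (155.0, 34.0)]
new_samples = smote(minority, n_synthetic=10)
print(new_samples[:3])
```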

In 2021, Ooka et al. [232] presented a random forest approach for risk prediction and identification of predictive factors of type 2 diabetes in Japan. The study included a cumulative total of 42,908 subjects with HbA1c < 6.5% who were not receiving treatment for diabetes. Two analytical methods were compared for predictive power: RF as a new model and multivariate logistic regression (MLR) as a conventional model. RF showed higher predictive power than MLR for the change in HbA1c in all models, and the RF model that included change values showed the highest predictive power. Table 5 represents the related work on diabetes based on RF.
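The random forest idea (bootstrap samples plus a random feature subset per tree, with predictions averaged) can be sketched for regression. Each tree here is only a depth-1 "stump", which is a strong simplification of the full trees used in practice. The (baseline HbA1c, BMI) inputs and the HbA1c-change target are hypothetical.

```python
import random
import statistics

def fit_stump(X, y, feature_ids):
    """Best single split (feature, threshold) by squared error; leaves hold means."""
    best = None
    for f in feature_ids:
        for t in sorted(set(row[f] for row in X))[:-1]:
            left = [yi for row, yi in zip(X, y) if row[f] <= t]
            right = [yi for row, yi in zip(X, y) if row[f] > t]
            ml, mr = statistics.fmean(left), statistics.fmean(right)
            sse = sum((v - ml) ** 2 for v in left) + sum((v - mr) ** 2 for v in right)
            if best is None or sse < best[0]:
                best = (sse, f, t, ml, mr)
    if best is None:  # chosen feature is constant in this sample: predict the mean
        m = statistics.fmean(y)
        return (feature_ids[0], float("inf"), m, m)
    return best[1:]

def fit_forest(X, y, n_trees=25, max_features=1, seed=0):
    rng = random.Random(seed)
    n, p = len(X), len(X[0])
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]      # bootstrap sample
        features = rng.sample(range(p), max_features)   # random feature subset
        forest.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], features))
    return forest

def predict(forest, x):
    """Average the stump predictions."""
    return statistics.fmean(ml if x[f] <= t else mr for f, t, ml, mr in forest)

# Hypothetical data: (baseline HbA1c %, BMI) -> change in HbA1c.
X = [(5.0 + 0.1 * i, 20.0 + (i * 7) % 15) for i in range(15)]
y = [0.3 if hba1c > 5.75 else 0.0 for hba1c, _ in X]
forest = fit_forest(X, y)
low, high = predict(forest, (5.1, 25.0)), predict(forest, (6.3, 25.0))
print(f"{low:.3f} {high:.3f}")
```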

3.2 Supervised learning (Regression)

In 2009, Gani et al. [261] introduced a data-driven model built from the glucose data of a single diabetic subject and then used it to predict the subcutaneous glucose concentrations of other subjects, including those with a different type of diabetes. To verify the model's universality, the work used three separate studies, each with a different continuous glucose monitoring (CGM) device. The predictive capability of the models was not affected by diabetes type, subject age, CGM device, or inter-individual differences.
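The "train on one subject, apply to another" workflow can be sketched with an autoregressive model. The AR(2) form is an assumption for illustration; the paragraph above only says "data-driven model". Both series are hypothetical, noise-free glucose deviations from each subject's mean (mg/dL). The coefficients are fitted by least squares on subject A and then used unchanged for one-step-ahead prediction on subject B.

```python
def simulate(x0, x1, a1, a2, n):
    """Hypothetical noise-free AR(2) series x_t = a1*x_{t-1} + a2*x_{t-2}."""
    xs = [x0, x1]
    for _ in range(n - 2):
        xs.append(a1 * xs[-1] + a2 * xs[-2])
    return xs

def fit_ar2(series):
    """Least-squares AR(2) coefficients via the 2x2 normal equations."""
    s11 = s12 = s22 = r1 = r2 = 0.0
    for t in range(2, len(series)):
        u, v, target = series[t - 1], series[t - 2], series[t]
        s11 += u * u
        s12 += u * v
        s22 += v * v
        r1 += u * target
        r2 += v * target
    det = s11 * s22 - s12 * s12
    return (r1 * s22 - r2 * s12) / det, (s11 * r2 - s12 * r1) / det

subject_a = simulate(30.0, 25.0, 1.5, -0.6, 60)    # training subject
subject_b = simulate(-20.0, -10.0, 1.5, -0.6, 60)  # unseen subject
a1, a2 = fit_ar2(subject_a)
preds = [a1 * subject_b[t - 1] + a2 * subject_b[t - 2] for t in range(2, len(subject_b))]
max_err = max(abs(p - subject_b[t]) for p, t in zip(preds, range(2, len(subject_b))))
print(a1, a2, max_err)
```

Real CGM data contain noise and subject-specific dynamics, so the near-zero error of this toy is only a check on the mechanics.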

In 2012, Georga et al. [264] presented predictive modeling of subcutaneous (s.c.) glucose concentration in type 1 diabetes using support vector regression (SVR). The method was evaluated on a dataset of 27 patients in free-living conditions. Tenfold cross-validation was applied to each patient's dataset individually to both optimize and test the SVR model. When all input variables are considered, the average prediction errors are 5.21, 6.03, 7.14, and 7.62 mg/dl for 15-, 30-, 60-, and 120-min prediction horizons, respectively. The results indicate that having multivariable data available, and combining those data effectively, can significantly increase the accuracy of both short-term and long-term predictions.
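The SVR itself is not reproduced here. Instead, the following sketch shows how multivariable inputs and a prediction horizon are typically turned into supervised samples, and how tenfold splits can be formed per patient. Several details are assumptions: 5-minute CGM sampling (so a 30-min horizon is 6 steps), insulin and carbohydrate series as the extra inputs, and contiguous, unshuffled folds.

```python
def make_samples(glucose, insulin, carbs, n_lags, horizon_steps):
    """Pair the last n_lags values of each signal with glucose horizon_steps ahead."""
    samples = []
    for t in range(n_lags - 1, len(glucose) - horizon_steps):
        window = slice(t - n_lags + 1, t + 1)
        features = glucose[window] + insulin[window] + carbs[window]
        samples.append((features, glucose[t + horizon_steps]))
    return samples

def kfold_indices(n, k=10):
    """Yield (train, test) index lists for k contiguous folds."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, test
        start += size

# Hypothetical signals; horizon of 6 steps = 30 min at 5-min sampling.
glucose = list(range(100))
insulin = [0] * 100
carbs = [0] * 100
samples = make_samples(glucose, insulin, carbs, n_lags=3, horizon_steps=6)
folds = list(kfold_indices(len(samples), k=10))
print(len(samples), [len(test) for _, test in folds])
```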

In 2015, Paul et al. [268] applied a generalized autoregressive conditional heteroskedasticity (GARCH) approach to predicting glucose levels. Linear auto-regressive (AR) and state-space time series models had been used to analyze glucose profiles and predict upcoming glucose levels, but these approaches do not adequately address the inherent dependencies and volatility in glucose profiles. The prediction performance of the GARCH approach was compared with other contemporary approaches, such as lower- and higher-order AR models and state-space models. The GARCH approach appears successful both in capturing the volatility of glucose profiles and in offering potentially more reliable forecasts of upcoming glucose levels.
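The volatility idea behind GARCH(1,1) is that the conditional variance follows σ²_t = ω + α·e²_{t-1} + β·σ²_{t-1}, so a large recent prediction error raises the expected spread of the next one. The sketch below assumes the residuals e_t come from a mean model such as an AR fit. The parameter values ω = 2.0, α = 0.2, β = 0.7 are hypothetical, not estimates from [268].

```python
def garch11_variances(residuals, omega, alpha, beta):
    """Conditional variances of a GARCH(1,1) process.

    Returns len(residuals) + 1 values: the starting (unconditional) variance,
    one update per residual, the last being the one-step-ahead forecast.
    """
    if alpha + beta >= 1:
        raise ValueError("alpha + beta must be < 1 for a finite unconditional variance")
    sigma2 = [omega / (1 - alpha - beta)]
    for e in residuals:
        sigma2.append(omega + alpha * e * e + beta * sigma2[-1])
    return sigma2

# Hypothetical glucose residuals (mg/dL) from a mean model.
variances = garch11_variances([1.0, -6.0, 0.5], omega=2.0, alpha=0.2, beta=0.7)
print(variances)
```

The jump in variance after the residual of −6.0 is the effect that a constant-variance AR model cannot represent.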

In 2018, Wu et al. [279] introduced a novel model based on data mining techniques for predicting type 2 diabetes mellitus (T2DM). The model has two parts: an improved K-means algorithm and a logistic regression algorithm. The authors used the Pima Indians Diabetes Dataset and the Waikato Environment for Knowledge Analysis (WEKA) toolkit so that their results could be compared with those of other researchers. The model achieved a prediction accuracy 3.04% higher than those reported by other researchers. The model also ensures that the dataset quality is sufficient.
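The two-stage structure can be sketched as follows. Stage one clusters the samples with plain k-means and drops samples whose label disagrees with their cluster's majority. Stage two fits logistic regression on what remains. Several parts are assumptions: the exact "improved K-means" of [279] is not reproduced, the majority-label filtering rule is an assumed reading of how clustering cleans the data, and the data are synthetic 2-D points standing in for standardized features.

```python
import math
import random

def kmeans(points, k, iters=50, seed=0):
    """Plain Lloyd's k-means; returns a cluster index for each point."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)
    for _ in range(iters):
        labels = [min(range(k), key=lambda c: math.dist(p, centers[c])) for p in points]
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centers[c] = tuple(sum(col) / len(members) for col in zip(*members))
    return [min(range(k), key=lambda c: math.dist(p, centers[c])) for p in points]

def drop_inconsistent(X, y, k=2, seed=0):
    """Keep samples whose label matches the majority label of their cluster."""
    labels = kmeans(X, k, seed=seed)
    keep = []
    for c in set(labels):
        members = [i for i, lab in enumerate(labels) if lab == c]
        majority = 1 if 2 * sum(y[i] for i in members) > len(members) else 0
        keep += [i for i in members if y[i] == majority]
    keep.sort()
    return [X[i] for i in keep], [y[i] for i in keep]

def fit_logistic(X, y, lr=0.5, epochs=500):
    """Batch gradient descent on the mean log-loss."""
    w, b, n = [0.0] * len(X[0]), 0.0, len(X)
    for _ in range(epochs):
        gw, gb = [0.0] * len(w), 0.0
        for x, t in zip(X, y):
            z = sum(wi * xi for wi, xi in zip(w, x)) + b
            err = 1.0 / (1.0 + math.exp(-z)) - t
            gw = [g + err * xi for g, xi in zip(gw, x)]
            gb += err
        w = [wi - lr * g / n for wi, g in zip(w, gw)]
        b -= lr * gb / n
    return w, b

def predict_proba(model, x):
    w, b = model
    return 1.0 / (1.0 + math.exp(-(sum(wi * xi for wi, xi in zip(w, x)) + b)))

# Hypothetical standardized features; 5 deliberately inconsistent labels.
rng = random.Random(1)
X = [(rng.gauss(-1, 0.5), rng.gauss(-1, 0.5)) for _ in range(60)] + \
    [(rng.gauss(1, 0.5), rng.gauss(1, 0.5)) for _ in range(60)]
y = [0] * 60 + [1] * 60
y[60:65] = [0] * 5
X_clean, y_clean = drop_inconsistent(X, y)
model = fit_logistic(X_clean, y_clean)
print(len(X_clean), predict_proba(model, (1.5, 1.5)))
```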

In 2019, Qiu et al. [280] presented an improved diabetes prediction method based on a feature-based least angle regression algorithm. The method uses feature weights and combines the advantages of the traditional least angle regression (LARS) algorithm and the principal component analysis (PCA) algorithm. First, PCA is used to obtain the characteristic independent variables found in typical diabetes prediction regression models. The experimental results show that the algorithm improved the approximation speed for the dependent variables and the accuracy of the regression coefficients.
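Only the PCA step is sketched below; LARS itself is not reproduced. The first principal component is found by power iteration on the sample covariance matrix, and the ratio of its eigenvalue to the trace gives the share of variance it explains. The two-variable data are hypothetical.

```python
import math

def covariance_matrix(X):
    """Sample covariance matrix (divisor n - 1) of row-wise data X."""
    n, p = len(X), len(X[0])
    means = [sum(row[j] for row in X) / n for j in range(p)]
    C = [[0.0] * p for _ in range(p)]
    for row in X:
        d = [row[j] - means[j] for j in range(p)]
        for a in range(p):
            for b in range(p):
                C[a][b] += d[a] * d[b] / (n - 1)
    return C

def first_principal_component(C, iters=200):
    """Power iteration: returns (unit eigenvector, eigenvalue) of the largest eigenvalue."""
    p = len(C)
    v = [1.0] * p
    for _ in range(iters):
        w = [sum(C[i][j] * v[j] for j in range(p)) for i in range(p)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    eigenvalue = sum(v[i] * sum(C[i][j] * v[j] for j in range(p)) for i in range(p))
    return v, eigenvalue

# Hypothetical, strongly correlated pair of variables.
X = [(1.0, 1.1), (2.0, 1.9), (3.0, 3.2), (4.0, 3.9), (5.0, 5.1)]
C = covariance_matrix(X)
pc1, lam1 = first_principal_component(C)
explained = lam1 / sum(C[i][i] for i in range(len(C)))
print(pc1, explained)
```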

In 2019, Yao et al. [281] proposed multivariable logistic regression (MLR) and back-propagation artificial neural network (BP-ANN) models to predict diabetic retinopathy. A total of 530 Chinese residents, including 423 with type 2 diabetes (T2D) aged 18 years or older, participated in this study. The BP-ANN model used tan-sigmoid as the transfer function of the hidden-layer nodes and a pure linear function for the output-layer nodes, with a training goal of 0.5 × 10⁻⁵. With these parameters, the area under the receiver operating characteristic (ROC) curve was significantly higher for the BP-ANN model than for MLR (0.84 vs. 0.77, P < 0.001).
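The AUC values compared above can be computed directly from model scores. The AUC equals the probability that a randomly chosen positive case scores higher than a randomly chosen negative case, with ties counting one half. A minimal O(n²) sketch on hypothetical scores:

```python
def roc_auc(labels, scores):
    """AUC as P(score of random positive > score of random negative); ties count 1/2."""
    pos = [s for s, l in zip(scores, labels) if l == 1]
    neg = [s for s, l in zip(scores, labels) if l == 0]
    wins = 0.0
    for p in pos:
        for q in neg:
            wins += 1.0 if p > q else 0.5 if p == q else 0.0
    return wins / (len(pos) * len(neg))

# Hypothetical retinopathy labels and model scores.
print(roc_auc([1, 1, 0, 0], [0.9, 0.4, 0.5, 0.1]))
```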

In 2020, Alshamlan et al. [282] introduced gene-based prediction of type 2 diabetes mellitus using logistic regression. Feature selection was performed with the Fisher score and chi-square approaches, and the number of selected genes ranged from 1800 to 2700. Logistic regression with Fisher score feature selection produced the highest accuracy: 90.23% on the GSE38642 dataset and 61.90% on the GSE13760 dataset. On both datasets, these results were higher than those obtained with chi-square feature selection.
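Fisher score feature selection ranks each feature (here, each gene) by the ratio of between-class scatter to within-class scatter, F_j = Σ_k n_k(μ_kj − μ_j)² / Σ_k n_k σ²_kj, and keeps the top-ranked features. A minimal sketch with hypothetical expression values:

```python
def fisher_scores(X, y):
    """Fisher score per feature: between-class scatter / within-class scatter."""
    scores = []
    for j in range(len(X[0])):
        col = [row[j] for row in X]
        mu = sum(col) / len(col)
        between = within = 0.0
        for c in sorted(set(y)):
            vals = [v for v, t in zip(col, y) if t == c]
            mc = sum(vals) / len(vals)
            between += len(vals) * (mc - mu) ** 2
            within += sum((v - mc) ** 2 for v in vals)  # equals n_k * variance_k
        scores.append(between / within if within > 0 else float("inf"))
    return scores

def top_k_features(scores, k):
    """Indices of the k highest-scoring features."""
    return sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)[:k]

# Hypothetical expression levels of two genes; label 1 = T2DM.
X = [(1.0, 5.0), (1.2, 3.0), (3.0, 4.0), (3.2, 6.0)]
y = [0, 0, 1, 1]
scores = fisher_scores(X, y)
print(scores, top_k_features(scores, 1))
```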

In 2020, Kopitar et al. [283] studied the early detection of type 2 diabetes mellitus (T2DM) using machine learning-based prediction models. The study compared machine learning models (Glmnet, RF, XGBoost, and LightGBM) with commonly used regression models for predicting undiagnosed T2DM. Its aim was to investigate whether these machine learning approaches offer any advantage over standard regression techniques in the early prediction of impaired fasting glucose (IFG) and fasting plasma glucose level (FPGL) values. With 6 months of data available, the simple regression model had the lowest average RMSE (0.838), followed by RF (0.842), LightGBM (0.846), Glmnet (0.859), and XGBoost (0.881). When more data were added, Glmnet improved at the highest rate (+3.4%). Table 6 represents the related work on diabetes based on regression technique.
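The models above are ranked by root mean squared error, RMSE = sqrt(mean((actual − predicted)²)), where lower is better. A minimal sketch of computing RMSE and ranking candidate models, using hypothetical glucose values and model names:

```python
import math

def rmse(actual, predicted):
    """Root mean squared error."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))

# Hypothetical observed values and two candidate models' predictions.
actual = [5.4, 5.9, 6.3, 5.6]
candidates = {"model_a": [5.5, 5.7, 6.1, 5.6], "model_b": [5.2, 6.2, 6.0, 5.9]}
ranking = sorted(candidates, key=lambda name: rmse(actual, candidates[name]))
print(ranking, [round(rmse(actual, candidates[m]), 3) for m in ranking])
```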

3.3 Unsupervised learning (clustering technique)

In 2010, Paul et al. [235] showed how background knowledge from the medical domain can be used in the clustering process to predict the likelihood of diseases. For this purpose they proposed a constrained k-Means-Mode clustering algorithm. The algorithm handles both continuous and discrete data and clusters each data point using the anticipated likelihood attributes together with the core attributes of the disease. The proposed method gives better accuracy than k-means and k-modes, at about 77–78% over k-means and about 82–83% over k-modes. Its effectiveness was demonstrated on a real-world patient dataset.
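Clustering mixed continuous and discrete data needs a distance that treats the two kinds of attribute differently, and cluster centres built from means and modes. The sketch below uses the k-prototypes-style combination: squared Euclidean distance on numeric attributes plus a weight γ times the number of categorical mismatches. This is a swapped-in, well-known technique, not the constrained k-Means-Mode algorithm of [235]. Its background-knowledge constraints are not shown, and the patient records are hypothetical.

```python
from collections import Counter

def mixed_distance(a, b, numeric_idx, categorical_idx, gamma=1.0):
    """Squared Euclidean distance on numeric attributes plus gamma times the
    number of mismatched categorical attributes."""
    numeric = sum((a[i] - b[i]) ** 2 for i in numeric_idx)
    mismatches = sum(a[i] != b[i] for i in categorical_idx)
    return numeric + gamma * mismatches

def prototype(members, numeric_idx, categorical_idx):
    """Cluster centre: mean for numeric attributes, mode for categorical ones."""
    proto = [None] * len(members[0])
    for i in numeric_idx:
        proto[i] = sum(m[i] for m in members) / len(members)
    for i in categorical_idx:
        proto[i] = Counter(m[i] for m in members).most_common(1)[0][0]
    return proto

# Hypothetical records: (scaled glucose, sex, family history).
print(mixed_distance((1.0, "male", "yes"), (3.0, "female", "yes"), [0], [1, 2], gamma=0.5))
print(prototype([(1.0, "m", "y"), (3.0, "m", "n"), (2.0, "f", "y")], [0], [1, 2]))
```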

In 2011, Hazemi et al. [236] proposed a grid-based interactive diabetes system (GIDS). It uses an agglomerative clustering algorithm as its primary algorithm to direct medical researchers toward findings that help predict the implications for the diabetes patient under study. The grouped (red) line showed the effect of this focusing. It represented an optimized view of blood sugar changes over the newly selected period and gave a clearer view of the blood sugar measurements than the raw measurements (blue) line. GIDS was tested on the basic history of a diabetes patient who had been under supervision for less than a month. The test checked two functions provided by GIDS: changing the basic algorithm that GIDS uses (the Chronological Clustering Algorithm) and changing the full view of the supervision period in the time domain.
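One way to picture a "grouped" view of a blood sugar series is chronological agglomerative clustering. Only neighbouring segments in time may merge, and the pair of adjacent segments with the closest means is merged repeatedly until the requested number of segments remains. This specific merge rule is an assumption for illustration, not the documented Chronological Clustering Algorithm of GIDS, and the readings are hypothetical.

```python
def chronological_clusters(values, n_segments):
    """Merge adjacent segments with the closest means until n_segments remain."""
    segments = [[v] for v in values]
    while len(segments) > n_segments:
        means = [sum(s) / len(s) for s in segments]
        i = min(range(len(segments) - 1), key=lambda j: abs(means[j] - means[j + 1]))
        segments[i:i + 2] = [segments[i] + segments[i + 1]]
    return segments

# Hypothetical blood sugar readings (mg/dL) in time order.
readings = [100, 102, 101, 180, 185, 182, 95, 97]
segments = chronological_clusters(readings, 3)
grouped_line = [sum(s) / len(s) for s in segments]  # one level per segment
print(segments, grouped_line)
```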

In 2013, Khanna et al. [234] introduced an integrated approach to predicting the likelihood of diabetes. The paper performs classification on a diabetes dataset from SGPGI, Lucknow (a super-specialty hospital in Lucknow, Uttar Pradesh, India). For each record it predicts an unknown class label, indicating whether the case is low, medium, or high risk. The classifier is further trained using weights assigned to the attributes, which are generated from expert guidelines. Its performance is evaluated with accuracy and the kappa statistic.
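The kappa statistic used above measures agreement between predicted and true risk classes beyond what chance alone would produce: κ = (p_o − p_e) / (1 − p_e), where p_o is the observed agreement and p_e the agreement expected from the class frequencies. A minimal sketch on hypothetical labels:

```python
from collections import Counter

def cohens_kappa(y_true, y_pred):
    """Cohen's kappa for two label sequences of equal length."""
    n = len(y_true)
    observed = sum(t == p for t, p in zip(y_true, y_pred)) / n
    true_counts, pred_counts = Counter(y_true), Counter(y_pred)
    expected = sum(true_counts[c] * pred_counts[c] for c in true_counts) / (n * n)
    if expected == 1:
        return 1.0  # degenerate case: a single class everywhere
    return (observed - expected) / (1 - expected)

# Hypothetical risk labels.
y_true = ["low", "low", "medium", "high", "high", "medium"]
y_pred = ["low", "medium", "medium", "high", "high", "low"]
print(cohens_kappa(y_true, y_pred))
```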

In 2015, Flynt et al. [243] introduced a model-based clustering approach to studying the likelihood of diabetes. The work uses model-based clustering, an unsupervised learning approach, to find latent clusters of similar US counties. The clustering draws on a set of socioeconomic, demographic, and environmental variables chosen through variable selection. Analysis of variance (ANOVA) and post hoc Tukey comparisons are then used to examine differences in rates of obesity and diabetes across the resulting clusters. The cluster analysis identifies two sets of counties with significantly lower rates of diet-related chronic disease than the other clusters.
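The ANOVA step compares mean disease rates across clusters through the F statistic, F = (SS_between / (k − 1)) / (SS_within / (n − k)). A minimal sketch on hypothetical county rates for three clusters; the post hoc Tukey comparisons are not shown.

```python
def one_way_anova_f(groups):
    """One-way ANOVA F statistic for a list of groups of observations."""
    all_vals = [v for g in groups for v in g]
    n, k = len(all_vals), len(groups)
    grand = sum(all_vals) / n
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_within = sum(sum((v - m) ** 2 for v in g) for g, m in zip(groups, means))
    return (ss_between / (k - 1)) / (ss_within / (n - k))

# Hypothetical obesity rates (%) of counties in three clusters.
clusters = [[10, 12, 14], [20, 22, 24], [30, 32, 34]]
print(one_way_anova_f(clusters))
```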

In 2017, Bhatia et al. [245] proposed a hybrid clustering-based technique for diabetes prediction. K-means was used to remove inconsistencies in the data, a genetic algorithm was used for optimal feature selection, and SVM was used for classification. K-means is a partitional (not hierarchical) clustering method, chosen here for its relatively low computational cost. On the Pima Indians Diabetes dataset, the proposed hybrid model increased accuracy by 1.351% and both sensitivity and positive predictive value by 2.0411%. It gave better results than the existing models in the literature.
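Genetic-algorithm feature selection encodes each candidate feature subset as a bit mask and evolves a population of masks. The operators used here are tournament selection, one-point crossover, bit-flip mutation, and elitism (the best mask is always kept). In [245] the fitness would come from SVM classification performance. Here a hypothetical toy fitness rewards three assumed "informative" features and penalizes subset size, so the sketch runs without a classifier.

```python
import random

def tournament(pop, fitness, rng):
    """Pick the fitter of two random masks."""
    a, b = rng.sample(pop, 2)
    return a if fitness(a) >= fitness(b) else b

def ga_feature_selection(n_features, fitness, pop_size=20, generations=30,
                         mutation_rate=0.05, seed=0):
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_features)] for _ in range(pop_size)]
    best = max(pop, key=fitness)
    history = [fitness(best)]
    for _ in range(generations):
        children = [best[:]]  # elitism: best mask survives unchanged
        while len(children) < pop_size:
            p1, p2 = tournament(pop, fitness, rng), tournament(pop, fitness, rng)
            cut = rng.randrange(1, n_features)  # one-point crossover
            child = [1 - g if rng.random() < mutation_rate else g
                     for g in p1[:cut] + p2[cut:]]  # bit-flip mutation
            children.append(child)
        pop = children
        best = max(pop, key=fitness)
        history.append(fitness(best))
    return best, history

INFORMATIVE = {0, 2, 5}  # hypothetical informative feature indices

def toy_fitness(mask):
    """Stand-in for classifier accuracy: reward informative features, penalize size."""
    return sum(mask[i] for i in INFORMATIVE) - 0.1 * sum(mask)

best, history = ga_feature_selection(8, toy_fitness)
print(best, history[-1])
```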

In 2018, Ahlqvist et al. [247] applied k-means and hierarchical clustering to patients with diabetes. The clusters were based on six variables: glutamate decarboxylase antibodies, age at diagnosis, BMI, HbA1c, and homoeostatic model assessment 2 estimates of β-cell function and of insulin resistance. The clusters were then related to prospective data from patient records on the development of complications and the prescription of medication. The study identified five replicable clusters of patients with diabetes, which had significantly different patient characteristics and risks of diabetic complications. In particular, individuals in cluster 3 (more resistant to insulin) had a significantly higher risk of diabetic kidney disease than individuals in clusters 4 and 5, although they had been prescribed similar diabetes treatment. Cluster 2 (insulin deficient) had the highest risk of retinopathy. In support of the clustering, genetic associations in the clusters differed from those seen in traditional type 2 diabetes.
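Clustering on variables with very different units usually starts by converting each variable to z-scores. Once cluster centroids exist, a new patient can be placed in the cluster with the nearest centroid. The sketch below uses only three of the six variables. The cohort means, standard deviations, and centroids are all hypothetical, and the labels A to C do not correspond to the clusters reported in [247].

```python
import math

# Hypothetical cohort statistics and centroids (standardized units).
MEANS = {"age": 60.0, "bmi": 30.0, "hba1c": 7.0}
SDS = {"age": 10.0, "bmi": 5.0, "hba1c": 1.0}
CENTROIDS = {
    "A": {"age": -1.0, "bmi": -0.5, "hba1c": 1.5},
    "B": {"age": 0.5, "bmi": 1.5, "hba1c": 0.0},
    "C": {"age": 1.0, "bmi": -0.5, "hba1c": -0.5},
}

def standardize(patient):
    """Convert raw values to z-scores using the cohort mean and SD."""
    return {k: (patient[k] - MEANS[k]) / SDS[k] for k in MEANS}

def nearest_cluster(patient):
    """Assign the patient to the centroid with the smallest Euclidean distance."""
    z = standardize(patient)
    point = [z[k] for k in MEANS]
    return min(CENTROIDS, key=lambda c: math.dist(point, [CENTROIDS[c][k] for k in MEANS]))

print(nearest_cluster({"age": 50.0, "bmi": 27.5, "hba1c": 8.5}))
```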

In 2020, Nguyen et al. [252] presented a binning approach based on classical clustering for type 2 diabetes diagnosis. The method combines the K-means clustering algorithm with unsupervised binning approaches to improve the performance of metagenome-based disease prediction. Experiments on metagenomic datasets related to type 2 diabetes showed that embedding the clusters generated by K-means raised prediction accuracy to approximately 70% or more. Table 7 represents the related work on diabetes based on clustering technique.
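Unsupervised binning turns a continuous value (such as a species abundance in a metagenome) into a discrete bin index without using class labels. Equal-width binning, sketched below, is one of the simplest such schemes. How [252] combines bins with K-means clusters is not reproduced, and the abundance values are hypothetical.

```python
def equal_width_bins(values, n_bins):
    """Map each value to a bin index 0..n_bins-1 over [min, max]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0] * len(values)
    width = (hi - lo) / n_bins
    # The maximum value would land at index n_bins, so clamp it into the last bin.
    return [min(int((v - lo) / width), n_bins - 1) for v in values]

# Hypothetical relative abundances of one species across samples.
print(equal_width_bins([0.0, 0.1, 0.25, 0.5, 1.0], 4))
```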

3.4 Unsupervised learning (association rule)

In 2000, Hsu et al. [284] described a knowledge discovery system for a diabetic patient database, along with the issues that surfaced and the lessons learned from this application. The system uses classification based on associations (CBA) to find patterns in the data. Following the doctors' suggestions, it mines association rules with a minimum support of 1% and a minimum confidence of 50%, which generated approximately 700 rules in total. The methodology was applied to 200,000 diabetic screening records. Its exploration and mining approach aims to give doctors a better understanding of their data and of the discovered patterns by helping them step through the massive amount of information in stages. Table 8 represents the related work on diabetes based on association rule technique.
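The support and confidence thresholds above can be illustrated with a brute-force miner for single-item rules A → B. Support is the fraction of records containing both items. Confidence is support(A, B) / support(A). The records and item names are hypothetical, and only one-item rules are enumerated. Real miners such as Apriori, and the class-association rules of CBA, handle larger itemsets.

```python
from itertools import combinations

def mine_rules(transactions, min_support=0.01, min_confidence=0.5):
    """Return (lhs, rhs, support, confidence) for single-item rules lhs -> rhs."""
    n = len(transactions)

    def support(itemset):
        return sum(itemset <= t for t in transactions) / n

    items = sorted(set().union(*transactions))
    rules = []
    for a, b in combinations(items, 2):
        s = support(frozenset((a, b)))
        if s < min_support:
            continue
        for lhs, rhs in ((a, b), (b, a)):
            conf = s / support(frozenset([lhs]))
            if conf >= min_confidence:
                rules.append((lhs, rhs, s, conf))
    return rules

# Hypothetical screening records, each a set of findings.
records = [
    {"obese", "high_glucose"},
    {"obese", "high_glucose", "hypertension"},
    {"normal_bmi"},
    {"obese", "hypertension"},
]
rules = mine_rules(records)
for rule in rules:
    print(rule)
```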

4 Review of deep learning technique in diabetes prediction

4.1 Convolutional Neural Network (CNN)

A convolutional neural network (ConvNet/CNN) is a deep learning algorithm that takes an input, typically an image, and learns weights and biases that assign importance to various aspects or objects in it, which allows it to distinguish one from another. Table 9 represents the related work on diabetes based on CNN technique.
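The core operation of a CNN layer slides a small learnable kernel over the input, takes a weighted sum plus a bias at each position, and then applies a nonlinearity such as ReLU. Most frameworks implement this as cross-correlation, without flipping the kernel. A minimal sketch of one valid (unpadded), stride-1 convolution followed by ReLU; the 3×3 input and 2×2 kernels are hypothetical.

```python
def conv2d(image, kernel, bias=0.0):
    """Valid, stride-1 2-D cross-correlation as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[bias + sum(image[i + a][j + b] * kernel[a][b]
                        for a in range(kh) for b in range(kw))
             for j in range(ow)]
            for i in range(oh)]

def relu(feature_map):
    """Element-wise max(0, x)."""
    return [[max(0.0, v) for v in row] for row in feature_map]

image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(conv2d(image, [[0, 1], [1, 0]]))         # anti-diagonal sums
print(relu(conv2d(image, [[1, 0], [0, -1]])))  # negative responses zeroed
```

In a trained network the kernel values and bias are learned by back-propagation rather than fixed by hand as here.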

4.2 Recurrent neural networks (RNN)