Abstract

Class overlap in imbalanced datasets is the most common challenging situation for researchers in the fields of deep learning (DL) machine learning (ML), and big data (BD) based applications. Class overlap and imbalance data intrinsic characteristics negatively affect the performance of classification models. The data level, algorithm level, ensemble, and hybrid methods are the most commonly used solutions to reduce the biasing of the standard classification model towards the majority class. The data level methods change the distribution of class instances thus, increasing the information loss and overfitting. The algorithm-level methods attempt to modify its structure which gives more weight to the misclassified minority class instances in the learning phases. However, the changes in the algorithm are less compatible for the users. To overcome the issues in these methods, an in-depth discussion on the state-of-the-art methods is required and thus, presented here. In this survey, we presented a detailed discussion of the existing methods to handle class overlap in imbalanced datasets with their advantages, disadvantages, limitations, and key performance metrics in which the method shown outperformed. The detailed comparative analysis mainly of recent years’ papers discussed and summarized the research gaps and future directions for the researchers in ML, DL, and BD-based applications.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Now a days class overlap in imbalanced datasets is a common and challenging situation for machine learners. The datasets in real-world applications are suffering from class imbalance and overlap due to limitations in data collection. In recent years researchers found that class overlap is a painful and more problematic situation even if a dataset is balanced. It degrades the performance of the standard classification models context to machine learning (ML) [1,2,3] and deep learning (DL) [4, 5] in small, medium, and big data (BD) [6,7,8,9,10,11,12] domains. Class overlapping is a region where multiple class instances are shared in a common region in data space. The reason behind class overlapping is that data instances have similar feature values but belong to different classes. The performance of the standard learning algorithm decreases in both situations i.e. imbalanced and overlapped data. The joint impact of both imbalance and overlap on the performance is more degrading in comparison to individuals [2]. The existing learning algorithms are not able to train effectively in overlapped regions because of poor visibility of minority instances. In this situation, majority class instances are more dominant in the overlapped regions and frequently visible to the learner in comparison to minority instances. This problem tends to transfer choice boundary toward the majority class and thus, high misclassification of the minority class instances. It increases the false positive rate which is a harsh situation and undesirable in real problems especially in the field of medical science [13,14,15,16,17,18], anomaly detection [19,20,21,22], machinery fault detection [23,24,25,26,27,28,29,30,31,32,33], business management [34,35,36,37,38], financial sector [39,40,41,42], fake spam analysis [43,44,45], Data mining and text classification [46,47,48,49,50,51,52,53,54], computer vision and image processing [55,56,57,58,59,60,61,62], bio-informatics [63,64,65,66,67,68,69], Detection of faulty software modules [70,71,72], and information & cybernetic security [73,74,75,76,77,78] etc.

The existing solutions discussed in the literature for handling overlapped and imbalanced datasets were categorized based on class distribution or overlap of class instances [2, 38, 79,80,81,82,83]. The instance distribution-based method resolved the issue by resampling class instances i.e. by performing under, over, or hybrid sampling of instances. These methods suffer from loss of informative instances in case of under-sampling and increase overfitting in oversampling. Class overlapping-based methods work in two phases. Identification of overlapping region is performed in the first phase and instances in the overlapped region are handled in the second phase. There are three approaches, discarding overlapped regions, merging overlapped regions, and separating overlapped regions [84]. In the discarding-based approach overlapped region is ignored and the algorithm learns in a non-overlapped region. In the merging approach overlapped regions are considered as a new class level and handled using two-tier architecture classification models. In the upper tier of the structure, the model focuses on the entire dataset with one additional new class label ("overlap_class") of the overlapped region. If the test sample belongs to the overlapped region which is identified by the upper-level model, then the model used in the lower tier predicts the actual class of the sample. In separating overlapped approaches two different models are needed for learning and testing purposes. One model is used for separating entire data into the overlapped and non-overlapped regions and the second model is used for learning both regions separately. The K-Nearest Neighbor (KNN) is used for separating data into overlap and non-overlap regions and two support vector machines (SVMs) are used for learning each region separately (Fig. 1).

Imbalance and overlap are key problems in machine learning [3, 79, 80, 82], deep learning [85,86,87], and big data [7, 88, 89]. Today DL and BD become the fastest growing research areas where new architectures are added very frankly and CNN become the most popular classification model for time series prediction and analysis [90]. The year-wise number of publications included in this survey is shown in Fig. 2. This survey presents a detailed overview of the majority of recent year methods based on DL, and ML context in small, medium, and BD domains. The summarized structure of the survey is shown in Fig. 1.

1.1 Motivation

Class imbalanced and overlapped datasets are bound to bias the performance of the standard machine learning algorithms and pull off good results towards the majority. But if any domain in which minorities are a more interesting pattern as compared to the majority then it will be a crucial problem because in such applications misclassification of minority class instance is dangerous for example in medical diagnostics if the prediction of COVID-19 positive (minority) patients as negative (majority). In many such applications like medical diagnostics, fraud detection, defect prediction, etc., approximately a hundred percent minority accuracy is expected. Many recent studies showed that the presence of overlapping is more dangerous even if data is balanced. Many methods and frameworks have been proposed by researchers in recent years. Occurrences of class overlapping and imbalance are natural at the time of data generation and preparation and cannot be avoided. Therefore an efficient method is required to handle class overlaps. A huge number of solutions for handling classes overlapping in the imbalanced domain are continuously being proposed by researchers. It is quite difficult to select a feasible solution and method for such real-world applications. The proposed methods may not be suitable to tackle problems in all types of datasets globally, therefore it is still required and in demand to develop an optimal solution that may have the ability to stop the biasing nature of algorithm performances towards the majority class.

1.2 Why this survey?

The existing surveys [2, 38, 79,80,81,82,83,84] only explored class overlapping issues in specific domains i.e. machine learning, deep learning, and big data problems in limited applications. Here in the present work we briefly summarized issues, research gaps, and future directions in extreme imbalance domain-based applications where class overlapping problems have been taken into consideration. Major challenges in class overlapping domains are under consideration at the data analysis level, preprocessing, algorithm level, and meta-learning environment. For the data analysis purpose important and core focusing terms are multi-classification, binary classification, and singular problems. Open challenges at the data preprocessing level are undersampling, oversampling, hybrid sampling, feature extraction, and selection. At the algorithm level, crucial challenges are specialized strategies, hyperparameter, and cost threshold. Meta-learning barriers are recommended ensemble methods, classifiers, and data resampling strategies.

Our major contributions in this paper are outlined as follows:

-

1.

Presented most used and recent year methods for handling class overlapping and imbalanced problems context to machine learning and deep learning in small, medium, and big data domains.

-

2.

Briefly discussed category-wise methods handling class overlap and imbalance with advantages, disadvantages, limitations, and issues.

-

3.

Presented comparative analysis of past year surveys based on important parameters such as imbalance, overlap, advantages, limitations, research gap, and performance metrics.

-

4.

Outlined summary of detailed research gaps, issues, and challenges in overlap and imbalance handling methods for researchers in context to machine learning, and deep learning in small, medium, and big data problems.

-

5.

Apart from these above the survey also presents recent imbalanced and overlapped application domains along with their major related research papers for the current researchers in these domains.

The remaining parts of the survey are organized in the following order: Section 2, presented data intrinsic difficulties such as overlap and imbalance, overlap- -characterization task, application domains, and important performance evaluation metrics of the classifiers used to analyze class overlapping effects. In Section 3, we addressed the last five years’ literature methods and categorized them based on techniques used in ML, DL, and BD environments. In Section 4, we presented a comparative analysis of the existing surveys. In Section 5, we presented a performance metric summary of the existing methods and summarized research gaps. At last in Section 6, we concluded and discussed the future direction of the researcher.

2 Dataset intrinsic difficulties, applications, and performance evaluation

This section presents the class overlap and imbalanced domains where these datasets’ intrinsic properties need to be tackled and draw attention to the data science and machine learning domains researchers. In these application domains, the class of interest patterns belongs to the minority class instances and has a high penalty for misclassification errors. In the remaining part of this section, the imbalanced application domain along with recent related articles and important metrics for measuring the performance of classification models in the unbalanced learning domain have been discussed.

2.1 Dataset intrinsic difficulties

Generally compiled data with unequal class distribution degrades the performances of standard learning models [91]. On the other hand, it is well known that class imbalancement is not only responsible for this unexpected behavioral nature of real-world dataset [92]. Many other factors are solely responsible for degrading the performance of the learning models. These factors are the data intrinsic characteristics (class overlap and imbalance) [93, 94], data irregularities (missing-values, small-disjuncts) [93], and data difficulties (data-lacking, data-noise, data- shifting) [95]. The most harmful problem that has been characterized by data science researchers is class overlapping [2, 79, 80]. The combined effects of both imbalance and overlap have been an extremely hot topic for researchers for decades in several domains such as medical, commuter vision & image processing, security, etc. [2, 79, 80, 96, 97].

2.1.1 Class imbalance

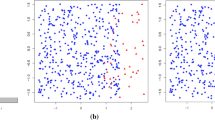

The datasets in which class instances are not equally distributed is called imbalanced dataset The class imbalance in the dataset creates more problems when minority instances are very less and have comparatively high misclassification costs. The majority and minority classes are known as negative and positive classes respectively. The imbalance is measured by imbalance ratio (IR), which is defined as the ratio of the counts of the majority class instances and the counts of minority class instances as given in (1). The minority percent is computed by (2) [2, 3]. Class imbalance is illustrated in Fig. 3, where the blue star represents the majority class instance and the red triangle represents minority class instances.

2.1.2 Class overlapping

The dataset in which instances of multiple class shares a common region in the data space is called an overlapped dataset as illustrated in Fig. 3. These instances are similar in some or all feature values but belong to a different class, and such a problem is a considerable barrier in classification tasks during training and learning. In the overlap region, the majority class becomes dominant because of the highly visible to the learners in comparison to the minority. This causes the choice margin for prediction to transfer toward the majority thus, leading to the miss-classification of minority instances closer to the margin of overlap, which is not expected in real applications. The amount of class overlap area was not well-formulated [98], and a global dimension to measure overlap percentage is undefined. Many methods have been proposed to estimate the overlapped percentages but with restrictions. In [99], the overlap percentage is estimated from the ratio of overlap area to entire data space. Another popular approach is using the classification error use to estimate the overlap percentage miss-classified by the KNN [100,101,102]. However other techniques were proposed in the past year by researchers but they cannot be used globally [98, 103, 104]. Another method proposed in [105] is based on support vector data description (SVDD). The SVDD shrinks the spherical class boundary towards its centroid subject to minimize the radius and then counts the number of instances that are common in both classes. This is applicable only for spherical data shapes. The class overlapping percentage is estimated by the formula given in (3) [2].

2.1.3 Class overlap characterizing components

Identifying and characterizing class overlap in an imbalanced learning domain is still a trouble and tight spot for researchers, since there is no standard and clear well-defined quantification and measurement of this hot topic for real-world application domains [1, 2, 79]. The class overlapping problem can be characterized using three primary consecutive tasks i.e. data domain decomposition, overlap identification, and quantifying class overlap. The decomposition task crumbles entire data into regions of attention for learning and prediction. The region identification task spots the overlap regions. The quantification task measures the amount of overlapped regions. Class overlap may be characterized in different perspectives based on approaches applied to these primary tasks thus leading to different representations of the overlap problem. Thus individual representations measure overlap differently according to the application domains. All the existing approaches for measuring class overlap have three major essential components as shown in Fig. 4.

-

Decomposition of data domain into interesting regions: The Three most used approaches based on the distance for dividing entire data feature space into interesting regions are statistical, geometrical, and graph-based distances. Statistical distance approach based on the distance between class distributions for example Fisher Linear Discriminant. The geometrical distance approach is based on the distance between pairs of class instances for example Euclidean distance. Graph-based distance approach based on the geodesic distance for example Minimum Spanning Tree.

-

Identification of interesting regions: The major approaches for identifying overlap regions are: Discriminative analysis is used for determining the distinctive capability of the features, and in-depth class distribution characteristics need to be analyzed. Interesting regions are observed in the area where classes are overlapped and maximize class separability. Feature space division is used for dividing feature space into discrete intervals for analyzing the characteristics of data. The problematical regions are encircled in the specific range of feature space. Neighborhood search is based on k-nearest neighbor (KNN) search and interested regions are those that have maximum error generated by the KNN classifier. Hypersphere coverage the informative and necessary class instances are covered by subsets and problematic regions are on all sides of the hypersphere. The minimum spanning tree is used to represent the entire data spanning tree and problematical regions are those connected vertices that do not satisfy membership degrees.

-

Quantifying overlap area: Finally this component is used to measure the quantity of the interesting regions. The four major interested region representations are based on feature, instance, structural, and multi-resolution overlaps.

2.2 Applications

Class overlapping and imbalance domains have drawn attention from Researchers over the years because modern datasets are inherently prone to these problems. Figure 5 shows application areas based on our literature search. The detailed domain area along with recent related research references are given below:

-

Medical science: Machine learning tools have proved to be very effective in the diagnosis of some life-threatening diseases. However, the number of patients suffering from the disease in the data set used to train the machine-learning model is much less than normal people. And there is more similarity in the symptoms of patients and normal people such as cancer, heart failure, etc [13,14,15,16,17,18].

-

Machine fault detection: Rolling bearing is one of the most important components in a rotating machine. Machine learning-based fault detection is the most commonly used method which plays a vital role in reliable and safe operation. The most common issue is severe data imbalance because the frequency of a faulty component is much lower than that of a non-faulty one [23,24,25,26,27,28,29,30,31,32,33].

-

Stock market: Current research and studies show that imbalance and overlap negatively affect stock returns. The experimental result has proven that a one percent trading imbalance, a difference of around seven percent can result in an overall trading return [39,40,41,42].

-

Intrusion detection system: These systems are used to detect suspicious behavioral activities and generate alert messages as an alarm when such incidents happen. The recent research carried out in network intrusion detection systems are: [19,20,21,22].

-

Fraud detection: Detecting fraud transactions is a big challenge for credit and debit card companies as there is a high level of behavioral similarities between fraud and normal transactions. Apart from this, occurrences of fraud transactions are much less than normal transactions. Therefore the performance of the machine learning algorithm is biased toward normal transactions due to such intrinsic nature of transactional dataset [8, 106].

-

Software fault detection: In software development only a small part of modules are faulty and most of them are non-faulty so such data is naturally imbalanced. Most classification models face the problem of working with data with imbalanced class distributions since most such models usually assume that each class has the same misclassification cost [70,71,72].

-

Computer vision: Systems are used to retrieve important information about the image including image features, structure, and labels of image data. The imbalance occurs when there is a lack of interesting images in a huge amount of image data [61, 62].

-

Image processing: The method operates on image data to improve quality and extract useful information for various purposes like medical diagnosis, remote and ultraviolet sensing, robot vision etc.[55,56,57,58,59,60]

-

Information security: In the last few years, the use of machine learning algorithms has played an important role in security and authentication purposes. The data used for this purpose is highly imbalanced and overlapped [73,74,75,76,77,78].

-

Churn prediction: Customer churning is a rare event in many companies such as telecommunications, credit cards, and many other service providers. In other words, we can say that churners counting is very low as compared to non-churners. A small percentage of customer retention may generate a larger percentage of revenue in such business companies [34,35,36,37].

-

Spam detection: In this domain, the instances of spam reviewers are in the minority as compared to non-spammers. The ML-based filters face great challenges due to this imbalance of distribution while filtering spammers, because of the biasing nature of learning algorithms [43,44,45].

-

Data mining: Many applications such as text summarization, novel pattern mining from historical data, time series analysis, web mining, etc. are using machine learning algorithms for prediction. The dataset used for training purposes is imbalanced and overlapped. Since interesting mining patterns are minority class instances, therefore improved and adaptive methods need to tackle these issues [50,51,52,53,54].

-

Text classification: Class imbalance and larger features are two major problems of textual data classification. there are many areas belonging to this domain such as sentiment analysis, healthcare fraud detection, etc. The feature selection-based techniques have been used by the researcher for handling imbalanced big data in this domain [46,47,48,49].

-

Bio-informatics: It is an essential application tool used for computational analysis to interpret biological information such as Gene therapy, protein structure prediction, new drug discovery, evolutionary and microbial, etc. The machine learning-based application is used to handle data intrinsic characteristics that are imbalance and overlap [63,64,65,66,67,68,69].

-

Business marketing: It allows individual organizations including commercial and Government institutions to sell their products and other services. Machine learning and business intelligence based tools are used to search for customers, increase productivity, and prediction of future demands [107,108,109,110,111,112,113].

2.3 Performance metrics

Some performance metrics are not affected by imbalanced instance distributions but many of them are misleading. The most common metrics for the classification of overlapped and imbalanced datasets are sensitivity, specificity, and balanced accuracy(BA), G-mean, Area Under Curve (AUC), and F-score [1,2,3]. In imbalanced applications, accurate detection of minority class instances is very difficult. This is usually assessed in terms of recall or true positive rate (TPR) and evaluated by using (6), where TP, TN, FP, and FN are taken from the confusion matrix as shown in Table 1. As sensitivity only reflects the performance over one class also relates to another metric, such as specificity i.e. true negative rate as given in (8), where TN and FP represent true negative and false positive respectively.

Balanced accuracy is defined as the average accuracy of both classes and is also known as balanced mean accuracy, average accuracy, or macro accuracy, etc., and computed by using (9). Accuracy computed as given in (4) can mislead in case of highly imbalanced data and the majority class accuracy (TN and TN + FP) are highly dominant. For example, a correctly predicted majority class of 10000 instances with a total misclassified positive class with 100 instances gives an accuracy of 99 percent, which is bad for a good classifier. And similarly, 50% BA reflects the good performance of the model. Therefore it frequently replaces the accuracy metric and becomes the most common performance measure used in an imbalanced case.

G-mean metric is used to assess the overall performance which geometric mean of sensitivity and specificity and evaluated as given in (10). G-mean is most frequently used as an assessing performance metric. Since both are evaluating the average of common parameters sensitivity and specificity both can be used interchangeably. The inequality relation between geometric mean and square root mean is given by formula as in (11), hence similar inequality relationship must be satisfied between balanced accuracy G-mean performance metrics as formulated in (12).

Precision describes the ability of a classification model to identify a relevant instance in testing data instances i.e. a higher precision denotes a higher probability of correct prediction of a positive instance. It is the ratio of positive predicted value and relevant retrieved instances as given in (5). It is also known as a positive predicted value. It answers that among all positive predictions how many are real positives and how many are real negatives but the model incorrectly predicts them as positives. This metric is very useful when datasets are imbalanced. The formula for computing precision is the ratio of true positives to the sum of true positives and false positives.

Receiver Operating Characteristics Curve (ROC) is a plot between TPR on the y-axis and FPR on the x-axis. The curve area between (0, 0) and (1, 1) is called the area under the curve (AUC). A higher AUC value of a model has a better capability to predict an actual positive class as a positive class and an actual negative class as a negative class in comparison to a model having a lower AUC. The minimum threshold value is 0.5 which means the model predicts a positive class to be negative and a negative class to be positive. Two or more models’ performances can be compared based on AUC value.

Sensitivity describes the ability of a classification model to identify all data instances in a relevant class i.e. higher sensitivity means the model can predict maximum positive instances as positive. It is defined as the ratio of true positive and the sum of true positive and false negative as given in (6). It describes how efficient the classifier is when predicting a positive class on desired positive outcomes.

Specificity is the true negative rate of the model. It is the ratio of TN and the sum of TN and FP as given in (8). Balance accuracy is the average of sensitivity and specificity and is computed by using (9). The accuracy of the model is the ratio of truly predicted values of target instances and their actual label value in the testing part of the dataset. In many cases generally for imbalance data accuracy may be descriptive i.e. model performance is good for the majority class and poor for the minority class and it is not sufficient to measure the performance of the model. The accuracy is the ratio of correct prediction to the sum of correct and incorrect predictions which are computed by (4):

F- score is used to relate precision and recall together where recall is prioritized over precision, in this case, the F-score of a model can be calculated by using (7). For \(\beta \) =1,\( F_{1}\) score is the harmonic mean of precision and sensitivity.

Matthews correlation coefficient (MCC) is a more consistent and powerful statistical measure of the classification models used in the imbalance learning domain. The MCC value range between -1 t0 +1, where -1 indicates a total disagreement and +1 represents the total agreement between predicted and actual instance labels. The MCC is computed by using (13).

3 Literature review

A detailed discussion about the past year’s research in the context of deep, machine learning, and big data is presented in this part of the survey. The reviews are based on the category of methods handling overlap plus imbalances in both DL, ML, and BD. The current survey includes the majority of the past five years of research and major surveys in DL, ML, and BD domains. The first part comprises a detailed discussion of ML and category-wise related work. The first part describes the DL background and related research. The third part presents reviews of big data environments

3.1 Machine learning based review

In previous literature surveys and various reviews, methods of handling imbalanced and overlapped data were grouped based on their convenience and used techniques. Some studies categorized methods as per compatibility whether it was able to handle skewness of data or similarities in feature value. Some studies categorized it based on approaches without any changes in the original data. Some studies cauterized it based on desirable requirements of performance metrics. Many studies concluded that the negative effect of the existing standard classification model is very high in overlapping scenarios in comparison to imbalance. The overlap impacts the performance even if data is balanced [2, 3]. The existing methods deal either with features of datasets or with the instance. Here in our study, we categorized more optimistic ways of the existing methods of handling class overlapping in an imbalanced dataset. The schematic representation of our categorization is shown in Fig. 6.

3.1.1 Data level methods

This includes methods that handle the class overlapping issues by changing the original data distribution and further subdividing it into instance distribution and overlap-based subcategories.

Instance Distribution Based Method These methods are based on data re-sampling (undersampling, oversampling, and hybrid-sampling) of instances of the entire dataset.

The major issues with these methods are excessive elimination of majority instances causes information loss and regenerating synthetic instances creates overfitting. An in-depth summary of the instance distribution based methods is shown in Table 2 along with their pros and cons.

Under-sampling In the undersampling based method majority of instances were eliminated from the entire dataset. Vuttipittayamongkol et al. [114] proposed an under-sampling method based on recursive neighbor searching (URNS). The URNS identifies class overlap regions by searching the KNN of the majority class instances. URNS removes negative instances after pre-processing data by z-score normalization. The KNN searches nearer the surrounding minority instances and then removes majority instances which highly weakens the visibility of minority instances. To prevent excessive elimination URNS is used to target only those majority instances for elimination which weakens the visibility of more than one minority instance. URNS also ensures sufficient elimination by a twice repeated recursive search. URNS ensures high sensitivity. The major benefits of URNS are that under-sampling is independent of imbalance and methods dealing with a class overlap which is the cause of misclassification. Rui et al. [115] proposed an under-sampling method based on the random forest cleaning rule (RFCL). The RFCL eliminated those majority instances that cross newly updated classification boundaries within the margin threshold. The threshold value is estimated by maximizing the f-1 score of the classifier. The method can minimize both overlap and imbalance. The method used a random forest tree for computing the margin value of class instances by (14) where V\(_{\text {true}}\) and V\(_{\text {false}}\) are the numbers of true and false voting by random forest tree respectively. Soumya Goyal proposed a neighborhood-based under-sampling approach for software defect prediction using an artificial neural network. The method reduces both overlapping and imbalancement.

The method calculates the margin value of each instance and then draws a box plot for the majority and minority classes respectively. The overlapping degree is OD= \(Q_3\) (minority) - \(Q_1\) (majority) where \(Q_3\) third quartile of the minority margin box plot and \(Q_1\) is the first quartile of the majority margin box plot. The smaller value of OD represents a higher separation between majority and minority. The methods search margin threshold if the majority instance crosses the margin threshold boundary then it will be eliminated.

Devi et al. [116] proposed a model based on one class SVM and undersampling to handle overlapping effects. The model is designed in two stages i.e. pre-processing and training. Stage one involves the identification of overlapped regions, removing overlapped instances, and under-sampling. The SVM is used to detect outliers and nest stage removing overlapped instances using the Tomek link pair. Finally, three classification models feed-forward neural networks, Naive Bayes and SVL trained with pre-processed data in the first stage. Pattaramon et al. [100] proposed an under-sampling method to capitalize the visibility of minority instances in the overlap region. The method uses soft clustering and elimination threshold criteria for overlapped instances. For optimality purposes only overlapped borderline instances are considered for elimination. Fuzzy C-means soft clustering is used for overlapped based undersampling of majority instances. Vuttipittayamongkol et al. [117] proposed an undersampling method that removes majority instances from overlap regions to improve the visibility of minority instances. The methods evaluate class membership degree (cmd) of minority and majority class instances using the Fuzzy C-means clustering technique and then cmd is used to eliminate majority class instances from the overlapping region. The majority of instances whose membership degree is less considered in the overlap region.

Over Sampling In oversampling-based method synthetic minority class instances regenerated subject to minimize overlap percent. Tao et al. [102] proposed SVDD and density peaks clustering oversampling (DPCO) technique for identifying optimal overlapped regions and regenerating synthetic instances to overcome the deficiency in the overlapped and imbalanced dataset. The SVDDDPCO finds dirty minority instances, eliminates them, and oversampled minority instances on the boundary. Ibrahim [118] proposed an outlier detection-based oversampling technique (ODBOT) for imbalanced dataset learning which reduces the risk of class overlapping. The ODBOT generates the synthetic instances of minority classes and later on, it detects outliers to reduce overlapping degrees in classes. The first step method determines the number of synthetic samples (NSS) minority class by the formula NSS= (P*M)/100 where M is the number of majority instances and P= (imbalance ratio-1)*100. Then in the second step, the method computes the dissimilarity relation between classes using a k-mean clustering algorithm. The algorithm finds k clusters of both majority and minority classes with a centroid of each. The third step method detects outliers in the minority class by the different relation between the cluster center of the majority and minority class. The summation of the minority cluster distance (SMCD) is computed as the difference between centers of minority and majority classes. The minimum SMCD means the minority class cluster contains outliers. Finally, the synthetic sample was generated to the boundary of minority instances of best clusters. Tao et al. [119] proposed SVDD based oversampling method for imbalance and overlapped domains. The SVDD is used to identify boundaries to avoid overlap then synthetic minority samples are generated using the weighted SMOTE technique. Maldonado et al. [121] proposed an oversampling method based on feature weighted to reduce overlap and imbalance. The method first selects relevant features based on the threshold value and then generates synthetic minority instances.

Hybrid Sampling In the hybrid, sampling-based method majority instances are removed from the overlapped region, and regenerates some minority class instances. Yuanwei et al. [120] proposed a hybrid method that removes the majority of instances from overlap regions that is less informative for the learning algorithm. The method performs under-sampling in the overlapped region and oversampling in non overlapped region. The method first detects overlapped regions and then finds out the optimal majority samples for elimination subjects to minimize the imbalance ratio, minimize the overlap ratio, and minimize the loss of original information.

Instance Overlap Based Methods: These methods handle the overlapping issue in two phases: In the first phase novel algorithm use split, entire data into the overlapped and non-overlapped region, and in the second step one of the following three approaches are used to reduce the negative effect of classification models. A support vector data description (SVDD) method was proposed in [122] which identified whether instances create overlapping or not. The SVDD finds the class domain boundary with minimum area by shrinking the radius of data space in this class. Once both class domains are identified by SVDD then common data instances belonging to both classes are put into overlapped regions. Xiong et al. [84] conducted a systematic study on existing methods of handling region-based class overlapping problems. Three schemes were proposed in past years’ research which are discarding, merging, and separating overlapped regions. The separating-based methods give a higher performance in comparison to the remaining two, especially when using SVM, KNN, NB, and C4.5 classifiers. The summary of the instance overlap-based methods is shown in Table 3.

Re-sampling In these methods, under-sampling, oversampling, and hybrid sampling are performed on instances nearer to the borderline or in an entire overlapped region. Kumar et al. [1] proposed entropy and improved k-nearest neighbor based undersampling that eliminates only those majority class instances which having less potential informative. The overlap region identified by using improved kNN search and then eliminated majority instances if their entropy value is less than threshold entropy. Pattaramom et al. [3] proposed four methods to handle imbalance based on under-sampling by eliminating the majority instance from the overlapped region. All four methods are based on searching the target majority instance for elimination if there exists at least one minority instance within k nearest neighbor of the target instance. In the first method, the majority instance is eliminated without any consultation of the minority instance i.e. a majority instance is eliminated when its k nearest neighbor has a minority instance. In the second method, a majority instance eliminated when its k nearest neighbor has a minority instance, and further, it is also must be within the range of the k nearest neighbor of this minority instance. In the third method all majority instances are eliminated which is common in the KNN of two minority instances. In the fourth method, the elimination is further recursively applied to the result set which is to be eliminated in the third method. The proposed methods maximize the visibility of monitory instances in overlap regions and also minimize excessive elimination. It optimizes the trade-off between minimum information loss and maximum sensitivity. In these methods, there is the chance of excessive elimination of majority instances which decreases the accuracy. Only uniform distribution of instances has been considered in these methods. Estimating K-value in KNN is play an important role while identifying target instances. The higher k value can be used when minority density is lower than majority class density otherwise small k value is good for neighbor search. Sima and Hamid [123] proposed under-sampling (DBUS) and hybrid sampling (DBHS) two methods based on the density of instances. The DBUS deletes neighbors of higher density instances which reduces overlapping and balances data distribution while DBHS used a combination of under-sampling and oversampling for the same purpose.

Overlap Discarding Based Method In this method, the model ignores the overlapped data and learns from the remaining non-overlapped data, and later on, testing is performed on a model trained on this subset of data. The most common overlap discarding technique is SMOTE + Tomek Links used to handle problem [98]. Ronal et al. [101] proposed a model to evaluate the performance of clustering techniques to handle class overlapping. The result showed that soft clustering versions like FCM, rough c-mean, and their hybrid combination are powerful in comparison to other clustering techniques. FCM computes the membership of instances from each cluster center based on the distance similarity metric. Instances closer to the cluster center assigned high membership degrees from this cluster.

Overlap Merging Based Method In this method, the overlapped data instances are assigned a new class label and the original dataset includes one additional label named “overlap-label”. Then after that, the existing model continues its learning phase, and two models are required for this scheme, one is for assigning a new label to instances of the overlapped region and the second is for the overlapping region. During the testing phase, each target data is first tested by model one if it belongs to the overlap label then tested after it will be on the second model to determine actual its label.

Overlap Separating Based Method This method deals with overlapped and non-overlapped data separately for this purpose it requires two different learning models corresponding to each region. Then target data tests on individual models and determine whether it falls in the overlapped or non-overlapped region. Zian et al. [124] proposed a method that divides the dataset into overlapped and non-overlapped regions using k-mean clustering later on each region is trained and tested separating using two different classification models and finally all results are combined. Li et al. [106] proposed a hybrid-based method to handle imbalanced data with class overlap for fraud detection. The method is based on the divide and conquers approach. The dataset is divided into overlapping and non-overlapping subsets. Unlike other methods, the proposed method is not used any distance metric for obtaining overlapping regions. The method used an unsupervised anomaly detection model to learn the basic profile of the minority sample which belongs to fraud transactions. By using this learn profile an overlapped set can be formed which includes almost all minorities (fraud transaction) and some majority which is highly matched with the learning profile. The rest of the minority and majority samples form a non-overlapping set. During the conquer phase two subsets were tackled independently. Since the non-overlapping set has only a few minority samples in the non-overlapping region hence they can be classified as the majority one for simplicity. For overlapping sets, a powerful supervised classifier is applied for learning in the overlapped region. For assessment purposes method proposed dynamic weight entropy (DWE) to assess the quality of the overlapping and non-overlapping sets. The DWE is the multiplication of signal to noise ratio of minority class and entropy of overlapped subset.

Sumana et al. [125] proposed a model which initially divides the original dataset into overlapped and non-overlapped regions using the k mean clustering algorithm and then applied SVM optimization on each region separately to classify target data. Various data pre-processing applied before clustering such as handling missing values, min-max normalization, data balancing, removal of Tomek link, feature selection using random forest, and box plots to remove noise. Gupta and Gupta’s [126] proposed a method to handle overlapping based on outlier instance identification and in the dataset. The proposed method measures the overlap degree by sequential using of the Nearest-Enemy-Ratio, Sub-Concept-Ratio, Likelihood-Ratio, and Soft-Margin-Ratio. Finally, the overlapped instances separated and said to noisy data and NB, C4.5, KNN, and SVM classifiers trained by non-overlapped data regions. Chujai et al. [127] proposed a cluster-based model which separates the dataset into the overlapped and non-overlapped regions and then both regions use to train two different classifiers for the prediction of unknown target data. Xiong et al. [103] proposed a method based on the Naive Bayes (NB) classification model in which NB is used to identify the overlapped region and further NB classification model trained on overlapped and non-overlapped region data separately. Liu et al. [128] proposed an anomaly detection model for the overlapped region in system log-based data. The model first detects the membership of an instance against both class label i.e. normal or abnormal and then use fuzzy KNN use to separate overlapped and non-overlapped region. The Adaboost-based ensemble learning is used to train the classification model and finally soft and hard voting methods are used to predict the class of unknown target data. Miguel et al. [129] proposed an n-dimensional generalized overlap function to determine the overlap degree in the imbalanced dataset. In the proposed function they generalized it from the multiclass data set to the binary class dataset. The difference is only in the number of sufficient boundary conditions, in n-dimensional it is more than the general overlap function.

3.1.2 Algorithm level methods

These methods do not change the class distribution of training data, they performed the learning process so that the importance of the minority class which is the class of interest in the learning domain, increases [38]. Most of the methods are modified based on the consideration of either class penalty or class weight or shifting decision threshold manage such a way the biasing towards majority is reduced. In a cost-sensitive method higher cost is assigned to the misclassification cost of the minority class [130, 131]. These methods are applicable on both instance level and also feature level selection and further sub categorized into cost sensitive and threshold.

Cost Sensitive Based Algorithms Cost-sensitive feature selection methods are proposed in [132] to reduce the effect of overlapping. Bo-Wen et al. [133] proposed density-based adaptive k nearest neighbor (DBANN) which handle both overlapping and imbalance problem together.

The DBANN uses an adaptive distance adjustment strategy to identify the most appropriate query neighbors. The method first divides the dataset into six partitions using the density-based clustering method which are minority noise samples, majority noise samples, minority samples in the overlapping cluster, majority samples in the overlapping cluster, minority samples in the non-overlapping cluster, and majority samples in the non-overlapping cluster. Now every six sets assigned a reliable coefficient to each training instance unlike KNN in other methods. The distance metric of each part is modified using local (within a partition) and global (entire dataset) distribution. Finally, the query neighbor is selected using a new distance metric. Saez et al. [146] proposed the use of one-vs-one (OVO) strategies to reduce overlapping degrees without any modification to the original data and the standard algorithm. The OVO decompose the multiclass problem into sets of binary class problems for example it divides C class problems into C(C-1)/2 binary sub-problems and each sub-problem deal with different classifiers separately. OVO can increase the separability between binary class problems, thus reducing the overlap degree and increasing the performance of classification models. Lee and Kim [139] proposed an overlap sensitive margin (OSM) model based on a fuzzy support machine and KNN to handle overlapped and imbalanced datasets. The OSM divides the entire dataset into hard and soft regions then regions classified using decision boundary 1NN and SVM. Using hard and soft regions it is easy to determine the closeness of the unknown dataset. Tung et al. [104] proposed an extended supervised dimensional reduction method more likely to principal component analysis to reduce overlap degree and increase the model performance on the overlapped and imbalanced dataset. The method advised learners to preserve the predictive power of smaller dimension subspace. It maximizes the inter-class covariance and minimizes inter-class covariance.

Threshold Feature Selection Based Algorithms These algorithms select a subset of key features instead of all for training and learning purposes. Although these methods are more challenging underclass overlapped in an imbalanced scenario in terms of data complexity but give good results in f-score and g-mean metrics. The penalty-based feature selection method is proposed in [142]. The feature subset selection method namely modified Relief that can handle both feature interactions and data overlapping problems. The method by assigning appropriate rank and penalty to each feature by their relevancy in data space and then the distance-based penalty discriminates against features whether they provide a clear boundary or not between two classes. Fatima et al. [143] proposed a feature selection method to minimize overlapped degrees to improve imbalanced learning in fraud detection applications. The method uses adaptive feature selection criteria to reduce overlap degree and then combined it with sampling strategies like no-sampling, SMOTE, and ADASYN. For feature selection, R-value uses to measure the overlap degree. The R-value is based on the average number of differences between the nearest neighbor and threshold nearest neighbor of each class. Using the R-value of both classes, R-augmented of predictor set of datasets computed which use to measure overlap degree in the dataset. As imbalance increases the augmented R-value also increases, therefore, the minority class has a larger weight in comparison to the majority class.

Feature Weight Based Algorithms These categories of algorithms assign weight to each feature according to their negative effects on the overlapped dataset. An adaptive method proposed in [144], which assigns a weight value to each feature according to the overlap degree measured using maximum Fisher’s discriminant ratio (FDR). Datasets with a low value of FDR have a high degree of overlap. The F value is the maximum FDR valued feature computed by using (15). Xiaohui et al. [141] proposed a recursive features elimination method based on a support vector machine (SVM-RFE) to handle the overlapping problem in high dimensional data. The iteration of SVM-RFE ranks features based on weight and the bottom-ranked feature is eliminated. The weight of the feature determines by SVM-RFE-OA which combines the overlapping degree and accuracy of the samples. The overlapping degree of the sample xi is given by equation r(\(x_i\))= different label(\(x_i\))/k -OR(\(x_i\)). Where different label(\(x_i\)) samples have a different class label in k-nearest neighbors of a sample \(x_i\) and OR(\(x_i\)) is a non-homogeneous sample ratio in a dataset. The ratio r(\(x_i\))>0 denotes sample ratio of different classes in neighbors of xi is greater than the heterogeneous data sample ratio in the original data set. For correct measure of overlapping degree is normalized Nr(\(x_i\)) form of r(\(x_i\)) need to be evaluate which is obtain by Nr(\(x_i\))= r(\(x_i\)) / OR(\(x_i\)). The small average Nr(x) value of all samples represents high separability among the different class labels. The Nr(x) can represent descriptive information for discrimination. Shaukat et al. [147] proposed overlap sensitive artificial neural network (ANN) which handle both imbalance and class overlapping situation together with noisy class instances. The basic idea behind the method is weighting instances based on feature location before training to neurons. The advantage of these methods is to identify instances in the overlapping region instead of the entire region like other methods. For handling the overlap method apply weight to the instances concerning overlap level. The higher weight means less overlapping and the lower weight means more overlapping. For simplicity KNN(K=5) is used to evaluate propensity P=NN/5 where NN is the number of instances of the same class. The P=0 value represents an instance that lies in other class regions and is treated as outliers. The value P=1 represents instances surrounded by the same class instances. The value 0<P<1 represents the overlapping of instances. The method uses a propensity range of outliers [0.0, 0.20]. The summary of the algorithm-based methods is shown in Table 4.

3.1.3 Other emerging methods

Unlike the re-sampling based method these need not change the class distribution of the original dataset but use random sampling with replacement to create n training sets of an equal number of instances of the dataset. The specific classification algorithm was applied to each training set and took majority voting as the final prediction of unknown target data.

Ensemble Based Methods These methods can be categorized as either homogeneous or heterogeneous depending on whether the classification models are the same or different for each training set during ensemble. Yuan et al. [148] proposed an ensemble method to handle overlap and imbalance in real datasets based on random forest trees called overlap and imbalanced sensitive random forest (OIS-RF). The OIS-RF derives a coefficient called hard to learn (HTL). The HTL coefficient ensures the priority and importance of the instances at training time to the classifiers. The HTL depends upon overlap degree (OD) and imbalance ratio (IR). The OD(x) = (k1+1)/(k+1) where k1 is several instances of other classes in k-nearest neighbours of x. For instance x belongs to majority class HTL(x)= OD and HTL(x)=OD.IR for instance belongs to the minority class. This method provides higher precedence to the minority class instances that belong to the overlap region for training to the random tree. The algorithm gives better results in comparison to another algorithm in the context of the f-1 score, AUC, and precision for large and medium-sized datasets. Nve and Lynn [149] proposed KNN based under-sampling (K-US) method to filter instances from the overlapped region. The proposed model works in two stages i.e. data pre-processing and learning stages. The K-US resolve overlapped and imbalanced problems. In the learning stage, pre-processed data is used to train J48, KNN, Naive Bayes, and further ensemble with the AdaBoost strategy. Afridi et al. [137] proposed a three-way clustering approach to estimate the threshold which plays a major role to identify the overlapped class region. The threshold estimation is based on determining whether an instance belongs to a particular cluster, outside the cluster, or partially belongs to a cluster. The threshold value of an instance x is the ratio of the number of the neighbor of x that belongs to a particular class and the total number of neighbor objects. Yan et al. [150] proposed a method based on the local density of minority class instances in which the overlapping degree of a majority instance depends on the local density of surrounding minority instances and is then eliminated. The oversampling of minority instances is based on its local density to balance distribution.

3.1.4 Hybrid based methods

These methods combine both re-sampling and ensemble-based methods. Ensemble applied after reducing overlap degree by using any of the re-sampling methods i.e. under-sampling, oversampling, or hybrid sampling. These methods are time-consuming but useful where other performance metrics are desirable along with accuracy. Zian et al. [124] proposed a neighborhood under-sampling stacked ensemble (NUS-SE) to handle imbalanced and overlapping in the dataset. The NUS categorized data instances into safe, borderline, rare, and outlier. The borderline is overlapped instances and the outlier is noise instances. Unlike other under-sampling methods, NUS assigned instances weight according to neighborhood instances. The main idea behind NUS is to select higher-weight majority instances except for outliers for the training subset. The weight function of the instance is computed by the formula NUS(r) = exp(ar) where a is the exponential rate and r is the local neighborhood ratio of several minorities and the number of k nearest neighbors. After pre-processing using NUS then ensemble stacking is done which is the majority voting result of the heterogeneous classification model on different samples with replacement. The advantage of this method is to avoid the elimination of the informative majority class. Chen et al. [70] software defect prediction model which combines class overlapping reduction and ensemble learning to improve the prediction of defected software in imbalanced data. In the proposed method first neighbor cleaning rule (NCL) is employed to eliminate the majority of instances from the overlapping region then random under sampling-based ensemble learning is employed on the balanced dataset generated in the previous step. During the NCL step sampling eliminates non-defective instances to reduce overlap degree but in the ensemble, phase under-sampling eliminates non-defective instances to reduce the imbalance. Z. Li et al proposed a dynamic entropy-based hybrid approach for credit card fraud detection [106]. Wang et al. [41] proposed a hybrid method based on extreme-SMOTE and synchronous_Sample_LearningMethod for detection of financial distress. The training dataset used for this purpose was highly imbalanced and overlapped.

Hybrid of Feature and Instance-Based Method This method combines both feature selection and a re-sampling scheme. For key feature selection, the R-value measure computes the rank of features based on overlapped degrees proposed in [143]. The method proposed three feature selection algorithms RONS (Reduce Overlapping with No-sampling), ROS (Reduce Overlapping with SMOTE), and ROA (Reduce Overlapping with ADASYN), which are derived throughout sparse feature selection to minimize the overlapping. Rubbo and Silva [134] proposed filter-based instance selection (FIS) method which uses self-organizing maps neural network (SOM) and entropy theory of information. The SOM learns from the training dataset after that training instances are mapped with the closest blueprint of the SOM neurons. Further training datasets are filtered by satisfying the minimum threshold in the majority class filter (high filtered instance selection (HFIS)), minority class filter (low filter instance selection (LFIS)), and both class filters (both filter instance selection (BFIS)). These filtered instances are depending on the entropy of neurons. If the entropy is greater than or equal to the high threshold value, then overlapped instances which is less informative are removed (HFIS).

If entropy is less than or equal to a low threshold then lower probability class instances removed by ensuring removed instances do not with majority class can smooth the class boundary(LFIS). The BFIS combines both HIF and LFIS and border overlapped instances are removed. After the filtering process is done the selected dataset is now used for training the model used for target prediction. The disadvantage of the method is to set threshold parameters. The summary of the other emerging based methods is shown in Table 5.

where \(\mu _1\), \( \mu _2,\), \( {\sigma }_1^2\) , \( {\sigma }_2^2 \) are the means and variances of class1 and class2.

3.2 Deep learning based review

Deep learning is an associate field of machine learning (ML) which includes artificial neural networks (ANN). The ANN is derived from interconnected biological neurons or nodes [38]. The main difference between ML and DL is the types of data used for learning. The ANN is made of a user-defined input layer, a hidden layer (calculate the result of the input layer), and an output layer as the result of the final calculation. The weighted connecting line joins nodes between adjacent layers. The DL is made of deep neural networks i.e. three or more hidden layers. Each neuron transfers its input into single output by using a nonlinear activation function in a feed-forward manner. A fully connected neural network has one hidden layer called a multi-layer perceptron (MLP). An MLP is simplest form of DL [61, 152,153,154]. Jeong et al. [57] presented a technical review on GAN-based methods and focused on major GAN architectures used in medical image augmentation and balancing for improving classification and segmentation tasks. A fully connected three-layer feed-forward neural network is shown in Fig. 7.

Aksher et al. [40] proposed CNN based model for sock price prediction. The model handle imbalance and feature selection problem. They used 2D deep neural network with a new rule-based labeling algorithm and feature selection model. The approach gives out-performance but it is limited to only stock trade prediction. Zhao et al.[30] proposed normalized CNN-based models for rolling bearing machinery fault detection and diagnosis. The model used the CNN layer for mapping the feature through a series of convolutional operations. The model gives good accuracy in imbalanced conditions. Wang et al. [155] proposed CNN to identify particular emitters with a received electromagnetic signal. The model tackles the imbalance problem in both gain and phase of signal context and improved the performance over the traditional feature-based approach. Chen et al. [156] proposed an ensemble-based deep learning (DL) convolutional neural network (CNN) method to handle imbalanced data. They designed an improved loss function to rectify biasing of learning models towards the majority class. The loss function enforces CNN hidden layers and other associated functions which reduce misclassified samples in lagging layers. The method initiates adjacent layers to create miscellaneous actions and re-fix errors in former layers according to a batch-wise manner. The major problem with the method is not combining advanced loss functions and novel data generation for better learning capability in high-quality data.

Yan et al. [157] proposed an extended DL method for the unbalanced multimedia dataset. The method integrates bootstrapping, DL and CNN. The method fed low-level features to CNN and justified feasibility in achieving out performance with reduced time complexity. Akil et al. [59] presented a new deep CNN for automated low and high-grade segmentation of brain tumors to maximize feature extraction from MRI images. The proposed method resolves two major issues class imbalance and overlapped image patches. For handling imbalance issues they use equal sample images and examine loss function results. Antom Bohm et al. [158] presented an approach using full CNN for image object detection and segmentation. The approach translates overlapped image objects into non-overlapped equivalents. Banarjee et al. [159] proposed two approaches based on CNN and RNN for information synthesizing computed tomography of clinical data. The model presented a report in word, sentence, and document levels. It outperforms in f1 score value which is desirable in the highly imbalanced dataset. Gao et al. [160] proposed a method based on three layers of CNN with two dense layers. CNN uses thirty-two one-dimensional kernel filters in each of the three layers having sizes three, twenty-two, and twenty-one respectively. The layers process one-dimensional raw data and extract useful features which supply dense layers for classification. The problem with this method is to use simple imbalance solutions.

Rai and Chatarjee [161] proposed a hybrid CNN_LSTM and ensemble-based model which automatically detects the cordial attack in ECG data. SMOTE oversampling technique was used to balance the dataset and better performance in minority class accuracy. It is used in clinical diagnosis and need not to required feature extraction and other preprocessing technique. Gupta et al. [22] proposed a model to tackle class imbalance based on LSTM and improved one-vs-one in frequent and infrequent intrusion detection in the network. The model achieved a higher detection rate with reduced computational time. Gao et al. proposed LSTM_RNN for arrhythmia detection in ECG imbalanced data [162]. The LNN is used to retain valuable information. To handle skewness in data SMOTE, random under-sampling and distribution-based methods were used. Tran et al. [163] proposed LSTM based framework to handle an imbalance in the multiclass dataset. The approach uses to detect botnets in domain generative algorithms. It achieves a 7% higher micro average in precision, and recall as compared to main LSTM and other cost-sensitive based methods.

Dong et al. [164] developed a novel DL model for handling imbalanced data. The model is based on incremental minority batch rectification using hard data sample mining at learning time. The model degrades the dominant effect of the majority by identifying the boundary of sparse minority samples in a batch-wise manner. The method includes the rectification loss function of a class that deployed deep neural structural design. The problem with the model is not considering the majority class and focusing only on the minority for making a cost-effective class rectification loss (CRL) function. Lin et al. [165] proposed a model based on reinforcement DL to formulate the problem by sequential decision-making process using deep quality of state-action function. In this method, the agent acts awarding or penalizing the learning model on the input sample. The model becomes more sensitive towards minorities by rewarding them more in comparison to the majority sample. The major problem with this model is to design a novel reward function and learning algorithm for a multiclass dataset. Yuan et al. [17] introduced a regularized framework for ensemble DL to tackle the multiclass imbalance problem. The method employs regularization for penalizing classifiers in the misclassification stage which were correctly classified in the previous phase. During the training phase repeatedly misclassified treated as a hard sample and extraordinary focus is required of the learner. The drawback of the method is used only in stratified sampling cases and has higher computational time complexity.

Wang et al. [166] proposed a deep neural network (DNN) for handling imbalanced problems. The DNN mainly focus on CRL to achieve optimal parameter for iteratively minimizing training errors. DNN uses the mean squared false error (MSFE) loss function to confine equally majority and minority class errors. It is inspired by the concept of true positive and negative rates. Limitation of DNN to explore effective MSFE loss functions for different NN architecture. Stoyanav et al. [58] proposed dice loss function (DLF) to examine the sensitivity of loss function for learning and different imbalance ratio of labels in 2D and 3D segmentation tasks. They also proposed maintaining the class imbalance property of the DLF. Zhang et al. [167] proposed a deep belief network based on evolutionary cost sensitivity (ECS) for imbalanced data. It optimized the misclassification cost of training data based on the G-mean objective function. The proposed model first optimizes misclassification error cost and then applies DBN. Dablain et al. [168] proposed DeepSMOTE which contain three components: encoder/ decoder, SMOTE, loss function. It generates high-quality and informative artificial images which are right for visual examination. The problem with the method does not apply to graph and text-based data. Andrei et al. [169] demonstrated how DL techniques can be utilized to detect overlapped speech. For this purpose, they use 10 different speakers to produce overlapped speech and analyze how DL techniques are helpful in this aspect.

Alia et al. proposed [170] hybrid DL and visual framework for detecting the behavior of pedestrians. The two main components of the work are extraction of motion information and annotation of pushing patches. The combination of CNN + RNN was used for calculating the density of the crowd. The limitation of the work is, that it cannot be used in real applications but it depends on recorded video. It will not work in case of recording with a live-moving camera. Wang et al proposed [171] DL generative model which is vigorous to imbalanced classifications. The new synthetic instance is generated with the cooperation of the Gaussian distribution property. The work is limited to the integration loss while producing a new synthetic instance. Liu et al. [32] proposed a model based on GAN with feature enhancement (FE) used for fault detection purposes in mechanical rolling bearing. The GAN extends imbalance data and FE collects faulty features. The model is not applicable in semi-supervised learning examples. The training process requires a lot of resources hence computational complexity is high. Yue et al. proposed [172] modified GAN to balance and augment Egyptian characters written on bone in the form of hieroglyphs. The dynamically selected augmented data then use to train the DL classifier. Using this concept they proposed a novel model for character recognition. It is useful only for larger datasets. Liu et al. [173] presented an overview of DL-based industrial applications by enhancing samples. They also discussed explainable DL methods. Arun et al. proposed [174] a novel model based on LSTN + RNN to predict country-wise cumulative COVID-19 infected cases. The LSTN, RNN with the combination of gated recurrent unit performs nonuniform is a major problem with this model even though it depends on regionalism data. Liu et al. [24] proposed a novel data synthesis method based on GAN for enhancing deep features. Later on, it tested imbalanced data for fault diagnosis in the rolling bearing machine. The time complexity is high due to the adversarial training strategy. Ding et al. [19] designed a model based on GAN for intrusion detection in a network. Hybrid sampling is used to reduce the imbalance ratio. The KNN and GAN are used for under and oversampling respectively. The model has not considered the effect on the majority while generating a minority sample. It is unable to solve the overlapping problem. The summarized issues and limitations in deep learning based models are given in Table 6.

Table 7 shows top deep learning neural networks along with brief descriptions and application areas.

3.3 Big data based review

Yin et al. [7] proposed an approach for efficient and safe Tunnel-Boring-Machine operation on imbalanced big data. In this approach, they first preprocessed training data using a mining technique and then used the adaptive-synthesis (ADASYN) algorithm to reduce the imbalance. finally, hybrid ensemble learning is used to identify the geological rock class. khattak et al. proposed deep learning based model for detecting theft activity by illegal pattern in electricity consumption. The challenging task in such bi data is class imbalance which was resolved by using adaptive synthetic and Tomeklinks method [89]. Sripriya et al. [88] proposed model for handling imbalance issue in drug discovery big data environment. to handle class imbalance issue, synthetic instances generated using SMOTE then combination of Gradient boost, logistic regression and k-nearest neighbor techniques used to protein selection for discovery of relevant drugs. Javaid et al. [8] proposed a method for electricity theft detection using LSTM+CNN on big data. The imbalance is handled by the ADASYN approach where theft behavioral instances are in minority and class of interest. Jonson et al. [204] conducted experimental study based on random undersampling, random oversampling and its combination to handling imbalance problem in big data domain and concluded that oversampling performs better than undersampling. Wang et al. [11] proposed an imbalanced and big data framework for extreme ML in distributed and parallel computing environments. Sleeman et al. [9] proposed a framework for a multiclass imbalanced big data environment based on novel SMOTE to overcome data distribution limitations. The framework is augmented through informative resampling and novel-portioning-SMOTE on spark nodes for a big volume of data. Maurya et al. [10] presented a framework for imbalance and big data domain. The method outperforms in G-mean,f1-score, and error rate on several big volume datasets as compared to state-of-the-art methods. For maximizing the classification performance metrics convex loss function was introduced. Justin et al. conducted a study for evaluating the use of deep learning and resampling on imbalanced big data in various domains such as fraud detection and medicare [12]. The study concluded that random sampling is the most preferred method to handle imbalanced big data issues. Zhai et al. proposed an under-sampling and ensemble-based method for the classification of larger volume datasets. It used the MapReduce approach to translate big data clusters into subsets to maintain data distribution adaptive manner. Yan et al. [205] proposed a framework based on borderline margin loss by separating minority and majority samples in the overlapped region for a bigger volume dataset. Major big data issues is shown in Table 8.

4 Comparative analysis with existing survey

This section describes a detailed comparative analysis of our survey with existing surveys in overlapped and imbalanced domains. The presented analysis is based on different characteristics whether these have been included in the survey or not. Class imbalance, class overlap, categorization of handling methods, advantages and disadvantages of the methods, limitations of the methods, important findings and research gaps, and performance metrics with highlighted outperformed one by handling method. The analysis includes surveys between the years 2016 to 2022 in the context of deep learning, machine learning, and big data environment. Table 9 provides a detailed comparative analysis of this survey with existing surveys.

Branco et al. [81] grouped existing methods to handle imbalanced and overlapped datasets into data pre-processing, special-purpose learning method, prediction post-processing, and hybrid methods. The data pre-processing approaches include methods that change class distribution in the imbalanced dataset to make it balanced. These methods are further grouped into resampling, active learning, and weighting data space. The advantages of these methods are: compatible with any existing learning tools and model classification models are more interpretable to reduce bias. The special-purpose learning method modifies the existing algorithm to make it compatible with imbalanced and overlapped datasets. The main drawback of this approach is the restriction of choice, changing of target loss functions, and prerequisite deep knowledge of algorithms. The prediction post-pre-processing approaches use the original dataset and the learning algorithm only manipulates the predictions of models as per preference to user and imbalance.

These methods were further regrouped into the threshold and cost-sensitive post-pre-processing method. The hybrid methods are obtained by combining resampling and special-purpose learning methods.

Neelam et al. [82] grouped existing solutions to handle imbalanced and overlapped datasets into data-level methods, algorithm-level methods, ensemble and hybrid methods, and other different methods. The data level methods resample the dataset to reduce the negative impact on the classification model. The algorithm-level methods modify the existing models or design a new algorithm which sensitive to imbalanced and overlapped datasets. The ensemble and hybrid method uses multiple samples with replacement of dataset and each sample trained either single model independently and behaves like multiple models and final decision of testing data taken by the averaging result of the model trained by different samples. The other methods are based on feature selection, instance selection, and principal component analysis by using different complexity measure functions.

Kaur et al. [83] conducted a review on imbalanced dataset challenges in machine learning applications. The authors presented reviews on methods based on data pre-processing, algorithm, and hybrid approach. The review search criteria are based on parameters: definition, nature, challenges, algorithms, evaluation metrics, and domain area of the problem and issue.

Johnson and Khoshgoftaar [38] categorized existing methods to deal with class imbalanced datasets for learning algorithms: data-level methods, algorithm-level methods, and hybrid methods. Data level methods effort to shrink the intensity of imbalance all through various data resampling methods. Algorithm-level methods for handling class imbalance, usually implemented with a weight or cost representation, comprising modifying the fundamental learner or its amount produced to decrease biasing to the majority instance. At last, hybrid systems purposefully merge both sampling and algorithm-based methods.

Pattaramom et al. [2] presented a technical review on existing approaches to handle class imbalance and class overlapping and its negative effects on the performance of classification models. The experimental results showed that increases in the overlap percentage allow decreases in the performance of models but in the case of class imbalance meant not always have a performance effect. Both overlap and imbalance decrease sensitivity but when the overlap percentage is small, changes in imbalance do not show any impact on model performance however at a higher overlap percentage, degradation of sensitivity is due to class imbalance meant. This review shows that the class overlapping effect degrades the sensitivity more in comparison to the imbalance. Xiong et al. [84] conducted a systematic study on the class overlapping effects and showed that overlapping-based methods are more powerful in comparison to other methods. In this method entire data is divided into the overlapped and non-overlapped regions by using a well-known classification algorithm and then overlapped region issue is resolved using any one of the following strategies: Discarding, Merging and Separating overlapped regions in which separating strategy is good and shows better improvement in performance metrics.