Abstract

Computer vision applications require high-quality images with a lot of information. Images captured in poor weather conditions such as fog or haze can affect the visibility of the images, which can lead to negative interferences. Fog/haze removal is a technique that can improve image quality and restore the true details of the objects. Currently, the image dehazing methods, which focus primarily on enhancing the overall contrast of the image, fail to deliver quality results because the light source distribution is not specified, or the cost functions are not suited to mathematical constraints. This paper proposes an efficient restoration approach based on a Cross Entropy-Based Deep Learning Neural Network (CE-DLNN). Initially, the input images are pre-processed by using the Intensity-based Dynamic Fuzzy Histogram Equalization (IDFHE) method. Then, the Dark Channel Prior (DCP) of the enhanced image is estimated, and the corresponding transmission map. Then the important features are extracted and applied as input to the CE-DLNN, which gives clear haze/fog images. From the classified output, the haze/fog images are applied for the Modified Structure Transference Filtering (MST) to reconstruct the dehaze/defog images. Then, the effectiveness of the proposed system is illustrated. The proposed method achieved a classification accuracy of 97.08%. In comparison with the existing methods, the proposed method has attained better results.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In recent years, the field of computer vision has been heavily focused on the restoration of image quality due to haze [7]. The quality of natural images captured using devices such as smartphones and cameras is degraded due to weather conditions such as haze, fog, and rain [1]. These weather conditions act by blocking the visibility of the sky and reducing the overall image quality [2, 3]. Although the factors that limit visibility can cause a certain amount of damage to the image captured by a camera, low visibility due to haze or fog can also affect the quality of images. In transport, this can lead to serious accidents [4]. Due to the importance of image processing in various applications such as remote sensing and driver assistance systems, proper visibility is often required for these systems [6]. The images degraded by fog or haze cannot be used for automation without pre-processing [5].

Image restoration is the process of removing haze from a subfield of an image [8]. It mainly focuses on the modeling of image degradation caused by hazy weather [9]. Aside from physical modeling, image processing also involves the use of various image enhancement techniques such as contrast enhancement, brightness enhancement, and image recognition [10]. In earlier approaches, the challenge of haze in images was addressed by enhancing contrast as a means of reducing its adverse effects [11]. However, due to the presence of haze, it is now considered a highly challenging process to perform. This process involves dealing with various factors such as noise, data dependence, and complexity [12].

In recent years, various works have been performed to improve the process of dehazing by developing a deep-learning framework that can be used to analyze and predict the effects of haze on an image. The framework was able to obtain the correlation between the haze and the clear image by performing the haze reduction method in the grayscale or the color image [13, 14].

Various image dehazing techniques use visual cues to capture the statistical and deterministic properties of hazy images [15]. There are currently two types of image dehazing techniques: single-spectral (visible band) and multispectral. Significant advancements have been made in the field of single-spectral-based image dehazing methods. Several prior- and constraint-based techniques have been introduced, including unrelated surface shading and transmission, dark-channel prior (DCP), boundary constraint, low contrast property, and color attenuation prior. When dealing with thick or dense haze, the effectiveness of a single spectral (visible band) image dehazing method significantly diminishes, as the transmission becomes extremely low. In such scenarios, the only result is the amplification of noise. The multispectral technique deals with the near-infrared spectrum of light, it refines the transmission map and produces a clear dehazed image. These methods have contributed to notable progress in addressing the challenges of image dehazing [16]. Ordinary outdoor foggy images can be effectively cleared of fog using single-image defogging-based methods [17]. Several image dehazing techniques are also available in different types. These include patch-wise [32,33,34], pixel-wise [35], scene-wise [36, 37], and non-local-wise [38, 39]. The execution efficiency of haze removal is delayed by redundant computation and increased processing times in existing dehazing techniques. These techniques, including pixel-wise, patch-wise, scene-wise, and nonlocal-wise approaches, suffer from this issue due to the similarity of spatial structure. Furthermore, although each of these prior methods has its own advantages, they are incapable of effectively handling all practical situations. This limitation can result in vulnerable images and visual inconsistencies [18].

In recent times, deep learning theory has gained considerable attention and has proven to be successful in image-dehazing applications. By training on a large number of samples, powerful image dehazing systems can be developed. By leveraging a large number of samples for training, one can achieve powerful image dehazing systems. For instance, a trainable end-to-end system has been developed, which utilizes convolutional neural networks (CNN) to estimate scene transmission by incorporating various existing image priors [29]. In subsequent work, a method was proposed in [40] that utilizes a multi-scale CNN (MSCNN) to effectively learn features for determining the transmission. The authors of [41] introduced a dehazing model named All-in-One Dehazing Network (AOD-Net), which is constructed using CNN. AOD-Net offers the key advantage of directly generating haze-free results from a single hazy image using a lightweight CNN. Notably, this approach relies on processing local patches within individual images. Despite the ability of deep dehazing methods based on learning strategies to integrate or learn haze-related features and overcome the limitations of the patch-wise strategy, they do come with certain drawbacks. For instance, these methods may struggle to handle dense haze and require a large number of training samples [18]. In this paper, a novel cross-entropy-based deep learning neural network for the restoration of dehaze/defog images is proposed to address these issues. In this proposed CE-DLNN the remainder layer is considered to improve the classification accuracy.

The main contributions of the proposed work are,

-

Utilizing the IDFHE method the quality of the image has been improved without degrading the edges and blur of the images.

-

Using CE-DLNN the foggy and clear images are identified. In DLNN (Deep Learning Neural Network), the input layer receives extracted features as input data, which are then processed and analyzed by a number of hidden layers (HLs). The output layer (OL) produces the predicted result. However, the classifier often faces accuracy issues due to the selection of weight values. To address this problem, the proposed methodology introduces the remainder layer, which incorporates the behavior of storing previous training images. This allows for improved accuracy and performance in the classification process.

-

For restoring the Fog-free images MST technique is used. Generally, the existing variants of the Spatial Transfer Function (STF) used for extracting "detail halos" from an image struggle to effectively handle the escalating filtering strengths of guide and input images. To address these limitations, the Mean and Variance in MSTF (Multi-Scale Transfer Function) are calculated using the Bayesian model averaging function. This approach helps overcome the drawbacks associated with the previous STF variants.

The rest of the paper is structured as follows: In Section 2, approaches related to the restoration of defog images are explained. Section 3 explains the proposed restoration scheme. The performance of the proposed methodology is analysed in Section 4 and Section 5 concludes the paper with future enhancement.

2 Literature survey

Over the past few decades, numerous techniques have been suggested to effectively eliminate fog and haze. Some of the single image dehazing recent techniques are listed here.

Aneela Sabir et al. [19] developed a modified DCP using fog density and guided image-processing techniques to improve the transmission map (TM). The guided image filter was able to speed up the map's refinement. It also introduced segmentation techniques to improve the image's overall quality. Initially, the atmospheric light was calculated for each segment. For each segment, the TM was calculated using the average value of atmospheric light. The results of their evaluation revealed that the modified DCP was more accurate than the existing techniques. However, this approach was only suitable for images having large sky regions. Haseeb Hassan et al. [20] proposed an effective and automatic dehazing method that can be used to estimate the intensity of atmospheric light in an image. Superpixel segmentation was then used to segment the input image. The intensity of each super pixel was then compared with the intensity of each individual superpixel to extract the maximum possible super pixel. After that, an initial TM was estimated based on measured atmospheric light. Then the rolling guidance filter was used to refine the TM. The resulting haze-free image was then produced by combining the refined transmission and atmospheric light into a haze-imaging model. Yin Gao et al. [21] presented a dual-fusion method for single-image dehazing. The sky and non-sky regions of the haze image were divided by segmentation. A multi-region fusion (MRF) method was introduced for single-image smoothness and to optimize the transmission properly. The fusion method was created using the brightness transform function to remove the haze from a single image effectively. The results of the experiments revealed that the multi-region fusion method performed better than the state-of-the-art dehazing techniques. However, it was not able to achieve a precise segmentation between the sky and non-sky regions. Sabiha Anan et al. [22] introduced a framework that combines the segmentation and restoration techniques for the sky and non-sky regions. The framework isolated the regions and restored their respective parts using a binary mask formulated based flood-fill algorithm. The foggy sky part was restored by using Contrast Limited Adaptive Histogram Equalization (CLAHE) and the non-sky part was by modified DCP. The resulting image was blended using the restored parts. The results of the tests revealed that the MRF method performed better than the state-of-the-art dehazing techniques. However, it was not able to achieve a precise segmentation between the sky and non-sky regions. Dilbag Singh et al. [23] explored a dehazing model utilizing three novel concepts. Initially, an input image was decomposed using a mask into low and high-frequency regions based on image gradient magnitude. The depth information from an input foggy image is then extracted using a Gradient sensitive loss (GSL). Thereafter an efficient filter named a Window-based integrated means filter (WIMF) was designed to refine the TM. The results of the experiments revealed that the proposed technique was able to achieve significant results. The hyperparameter tuning issue of the method was not solved, which degraded the performance of the system. Apurva Kumari et al. [24] introduced a method for achieving visibility restoration based on gamma transformation and thresholding. The first step was to determine the atmospheric light value. This value was used to validate the image's color and contrast. The gamma transformation method was then used to determine the depth of the haze. The scene radiance was then restored using the same method. The results of the study revealed that the algorithm performed remarkably well compared to other methods. The limitation of the method was not suitable for dense fog images.

3 Proposed dehaze/defog image restoration system

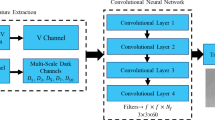

Images obtained under adverse weather conditions such as fog or haze can severely limit the visibility of the scene. These conditions can also lead to low contrast and faded colors in the images. They also decrease the visibility significantly depending on the intensity of the fog and haze. Such images cannot be used for other applications without pre-processing. In order to restore visibility, a process known as dehazing is performed. The process of dehazing an image involves removing the haze layer and bringing back the vibrant color and structure of the image. This paper introduces a novel visibility restoration approach based on a Cross Entropy-based Deep Learning Neural Network (CE-DLNN) classifier. The proposed method classifies the clear and haze/fog images and reconstructs the dehaze/defog images using the MST method. The block diagram of the proposed methodology is shown in below Fig. 1,

3.1 Pre-processing

The input images \(B_{n}\) are applied for several pre-processing steps to prepare the data for further processing. In the proposed methodology, the input images are pre-processed by using the Intensity-based Dynamic Fuzzy Histogram Equalization (IDFHE) technique to obtain the resultant high-quality image for which the intensity is almost equivalent to the average intensity of the input image. IDFHE is a histogram equalization technique, which uses the fuzzy statistics of digital images. The existing contrast-limited adaptive histogram equalization [42] and Gaussian linear contrast stretching model [43] need more iterations to enhance the images. In IDFHE, first, to handle the inaccuracy of Gray-level values, the histogram is created and it's divided into multiple sub-histograms. Then the sub-histograms are assigned to a replacement dynamic range and equalized separately by the HE approaches. Existing Histogram Equalization techniques tend to introduce some visual noise and undesired enhancement, including over-enhanced, under-enhanced, and intensity saturation effects. The proposed method takes into account the varying intensity levels in the image to avoid the issue of intensity saturation. It also computes the histogram using the fuzzy membership function. The subsequent stages of the IDFHE are detailed as follows,

Histogram computation

Computation of histogram decreases the imperfectness of grey value in a better way and offers a smooth histogram. Thus, the histogram, which is the series of real numbers is computed and divided into the number of sub-histograms using the intensity preservation measure. It can be expressed as,

where, \({B}_{Int(n)}\) gives the measure of intensity preservation, the histogram \({H}_{F(k)}\) represents the number of occurrences of grey levels around \(k\), \({\chi }_{{B}_{n}{\prime}k}\) is the fuzzy membership function used to handle the inaccuracy of grey value properly, \(u,v\) are the sustenance of the membership function. Then, the histogram is divided into several sub-histograms based on the intensity preservation measure.

Dynamic range allocation and equalization

The histogram partitions obtained from the above step are mapped to a dynamic range using the spanning function. The dynamic range of the output sub-histogram is denoted as,

where, the spanning function \((spn={k}_{high}-{k}_{low})\), factor \((fac)\), output dynamic range \((D{R}_{k})\) are the parameters useful for the dynamic equalization process, \({L}_{k}\) is the total number of pixels in the sub-histogram, \(N\) is the sequence of real numbers from the histogram. The output dynamic range of the \(k^{th}\) sub histogram \((D{R}_{k})\) is obtained from the range of \([{k}_{start},{k}_{stop}]\) as,

Then, the equalization of each sub histogram is done by remapping the histograms. After the equalization, the new image with new intensity levels can be estimated as,

where, \({B}_{enh(n)}\) is the enhanced image with a new intensity level corresponding to the \({k}^{th}\) intensity level on the original image.

3.2 Dark channel prior

After pre-processing, the enhanced image \({B}_{enh(n)}\) is then used to estimate the DCP to find the haze thickness in the image. The DCP is a type of statistic of haze-free outdoor images. It can be calculated by estimating the low intensity of the haze in the image. For instance, in most haze-free images, the low intensity (close to zero in at least one-colour channel) of the haze is observed in the local patches that have multiple colour channels. The dark channel for an image can be defined as,

where, \({B}_{enh(cc(n))}(x,y)\) denotes the Colour Channel (CC) of the input image \({B}_{enh(n)}\), the colour channel includes Red, Green, and Blue (RGB) colour space, \(\Psi\) is the local patch based on \(x,y\), \(D{C}_{{B}_{enh}(n)}\) is the output image after estimating the dark channel in which the intensity is very low except the sky region. Therefore, the dark channel \(D{C}_{{B}_{enh}(n)}\) is selected as the lowest value among three colour channels and all pixels in \(\Psi\).

3.3 Transmission map estimation

The dark channel (DC) computed for the image \(D{C}_{{B}_{enh(n)}}\) is then used to estimate the transmission map (TM). To find the TM, atmospheric light needs to be computed. Let, \(N\) be the brightest pixel value in the DC and \(m\) is a temporary variable. As a first step, \(D{C}_{{B}_{enh(n)}}\) is compared with the max value of \(N\). If both values are equal, the atmospheric light \({L}_{Atmp}\) is regarded as the input image pixels corresponding to pixel \(N\) pixel locations. Then, the input image is divided with its respective colour channel atmospheric light \({L}_{Atmp}\) to generate the TM. It can be expressed as,

where, \(T{M}_{D{C}_{{B}_{enh(n)}}}\) denotes the estimated TM, \(\alpha\) is the parameter whose value depends on the fog density of the input image.

3.4 Feature extraction

After the estimation of the TM, the important features such as Standard deviation, Mean gradient, Information entropy, colour, Correlation, Kurtosis, Skewness, SURF, HOG features, edge, GLCM Features, and wavelet features are extracted from the image \(T{M}_{D{C}_{{B}_{enh(n)}}}\).

Colour features

Colour features are the most significant characteristic of an image, which helps to understand photometrical information of different colour channels. Color is defined based on the presence of color information like distribution of color, dominant color, or colour moments. The proposed work utilizes the color moments like mean, variance, and standard deviation as the colour features. The colour features \({\Phi }_{clrfea}\) are expressed as,

where,

where,\({\phi }_{mean}\), \({\phi }_{var}\), \({\phi }_{sd}\) are the mean, variance, and standard deviation of an image of size \(i*j\), \(B(x,y)\) denotes the pixel intensity values.

Kurtosis and skewness

The skewness gives the degree of symmetry or asymmetry in the distribution where the kurtosis measures how much the tails of the distribution deviate from the normal distribution. The features are computed as,

where, \({\Phi }_{skn}\), \({\Phi }_{kur}\) are the extracted skewness and kurtosis features.

Information entropy

It describes the amount of information provided by the image. Entropy describes how much uncertainty or randomness there is in an image. It can be expressed as,

where, \({\Phi }_{E}(B)\) is the entropy corresponds to the probability distribution \(p({B}_{n})\).

SURF

Speed-Up Robust Features (SURF) is based on adding of 2D Haar wavelet responses and makes efficient use of integral images. SURF features are extracted by using the following steps: (1) scale-space extrema detection (2) key-point localization (3) orientation assignment and (5) feature point descriptors. The image is filtered by the Gaussian kernel and the Hessian matrix used to detect the interest points is constructed as,

where, \({d}_{xy}(x,t)\) denotes the first-order derivative of the filter's result in \(x\) and \(y\) direction, \({M}_{H}(x,t)\) denotes the Hessian matrix in point \(x\) of the image at scale \(t\).

After the key points are detected, Haar wavelet responses are calculated to assign an orientation to each point. Then, the square region along the obtained orientation is constructed and split into \(4\times 4\) sub-regions. For each sub-region, the features are then weighted in horizontal and vertical directions to extract the feature's descriptor.

HOG

The histogram of gradient (HOG) operator is applied to focus on the structure or shape feature vector. In the HOG vectors, the image is divided into smaller regions, and the gradient and orientation are calculated for each region. The magnitude \({G}_{\vartheta }\) of the gradients \({\vartheta }_{p}\) and \({\vartheta }_{q}\) the \(p,q\) direction and the orientation is calculated as,

where, \({\vartheta }_{p},{\vartheta }_{q}\) are the horizontal and vertical gradients, \(\partial\) is the gradient orientation.

Then the histogram (or features) for each region is generated by using the gradients and orientation values. Once the histogram is formed, the histogram vector of all regions is normalized and combined to get the features for the entire image. The final representation of the HOG feature is denoted as \({\Phi }_{HOG}\).

Edge

Edge feature from the image is obtained by using the steps called noise removal, differentiation, non-maximum suppression, double thresholding, and edge tracking. In the canny edge detection, first, the image has smoothed and the gradients, which ensure the presence of edges are taken. Then, the non-maxima suppression in which the points are not at the maximum is suppressed by computing the magnitude and direction of the gradients is performed to detect the edges. The strong and weak edges are identified by taking two thresholds namely, upper threshold \(({\tau }_{up})\) and lower threshold \(({\tau }_{lw})\) in double thresholding. Final edges are categorized with the help of edge tracking as,

where, \(Str{g}_{E},W{k}_{E},No{n}_{E}\) are the categorized as strong, weak, and non-edges, \(\vartheta (x,y)\) is the gradient to determine the type of the edges.

GLCM

The Gray-level co-occurrence matrix (GLCM) is a way of extracting image properties related to second-order statistical texture features. The GLCM matrix is a representation of the number of rows and columns that equal the grey levels of an input image. It is constructed by taking into account the spatial relationships between the values in the image and the rows and columns.

The GLCM features are expressed as,

where, \({\Phi }_{GLCM}\) denotes the extracted GLCM features, \({\phi }_{n}\) denotes the \({n}^{th}\) GLCM feature.

Wavelet features

The wavelets are used in extracting features from the input images. The Discrete Wavelet Transform (DWT) is used for extracting the wavelet coefficients from the images, which are the function of scale and position and localizes the frequency information. The different frequency components studied are expressed as,

where, \({\lambda }_{x,y}\) refers to the coefficients represent the frequency component in the signal \(g(B)\), the functions \({\varpi }_{hpf}\), \({\varpi }_{lpf}\) represents the high frequency (HF) and low-frequency (LF) filter coefficients.

The DWT decomposes the image into two components: the LF and the HF components. The LF components provide global description and detail information, while the HF components provide information about finer details. The LF content represents that the approximation of the original image is further decomposed for 2nd-level approximation and image details. The process is continued until the required level of resolution is obtained.

Thus, the final set of features extracted are expressed as,

where, \({\Phi }_{fea(n)}\) denotes the number of features extracted, \({\Phi }_{N}\) represents the \({N}^{th}\) number of features.

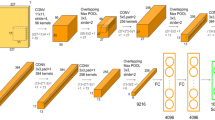

3.5 Classification

The extracted features \({\Phi }_{fea(n)}\) are rendered as input to the Cross Entropy-based Deep Learning Neural Network (CE-DLNN). DLNN is a neural network with an input layer (IL), output layer (OL), and multiple hidden layers (HL). In DLNN, the input layer of neurons receives the input data that are the extracted features, the input data is processed and analysed by the number of HLs, and then the OL produces the predicted result. Normally, the classifier suffers from the accuracy problem for the reason of weight value selection. To solve this problem, the remainder layer is considered in the proposed methodology, which consists of the behaviour of storing the previous training images. The remainder layer also stores the weight values of the previous training. Thus, the remainder layer is highly helpful for weight selection. It increases the accuracy. Then, the normal loss calculation is not well suitable for all types of input. So, in this research, the loss is calculated based on the cross-entropy. The architecture of the proposed CE-DLNN is shown in Fig. 2,

The procedure of each neuron processing the data is as follows,

-

Input data is fed into the neuron.

-

Multiplying weights to each input.

-

Summing the product of weights and input data and adding bias.

-

Applying activation function.

-

Producing output and labelling.

The mathematical calculation to attain the output of the neurons is expressed as,

where, \({D}_{l}\) is the output layer to produce the output of the network, \({\Omega }_{af}\) denotes the activation function, \({\Theta }_{n}\) is the weights multiplied with the input, and \(\wp\) is the bias value-added to the summation. During the classification process, once the output is computed, the current weight values between the input and HL, and the hidden and output layer get copied into the corresponding reminder units and are used in the next time step. The reminder layer stores the weight values as,

where, \({D}_{rl}\) denotes the output of the reminder layer, \({D}_{il,hl,ol}\) denotes the output of the IL, HL, and OL. The OL contains the output of two classes called, clear image \({B}_{clr}(x,y)\) and haze/fog image \({B}_{hz/fg}(x,y)\).

Then, the loss is estimated by calculating the difference between the actual and predicted outputs for all classes. The proposed work utilized the cross-entropy function for prediction, and it can be expressed as,

where, \(C{E}_{LF}\) represents the loss of the model, \(L\) represents the number of output data,\({D}_{l}\),\(\stackrel{\iff }{{D}_{l}}\) are the actual and predicted class outputs. Algorithm 1 shows the procedure followed in the proposed CE-DLNN method.

3.6 Restoring haze/fog-free image

After classification, the Haze/Fog image \({B}_{hz/fg(n)}\) is restored as a Haze/Fog-free image by applying the restoration technique called modified Structure Transference Filtering (MSTF) technique. Structure transference filtering (STF) is known as the structure transferring property of the guidance filter where the filter can transfer the structure from the guidance to the filter output. In STF, a guidance image that could be identical to the analysed input image is used to guide the process and the dehaze image is reconstructed by refining the transmission map. In general, the STF variants that are used for extracting “detail halos” from an image are not able to handle the increasing filtering strengths of guide and input images. To overcome the drawbacks, the Bayesian model averaging function is used to calculate the Mean and Variance in MSTF.

The Guidance filter, which consists of the STF, takes the inputs of the analysed image \({B}_{hz/fg(n)}\) to be filtered and the guidance image \({B}_{Guide(n)}\) to transfer the predefined structure to the image to be filtered. The structure to be transferred is defined by the guidance vector field. The output image is obtained as,

where, \({c}_{j}\),\({d}_{j}\) are the linear coefficients, \({B}_{refined\left(n\right)}\) denotes the output after refining the transmission map (TM). The linear coefficients are computed by minimizing the objective function as,

Where, \(\partial\) denotes the objective function, \(\beta\) is the regularization parameter,

, \({\mathfrak{I}}_{\mathit{var}(j)}\) are the mean and variance of \({B}_{Guide(n)}\) in window cantered at \(j\), \({B}_{hz/fg(n)}{\prime}\) is the mean of \({B}_{hz/fg(n)}\) in \({A}_{j}\). The

, \({\mathfrak{I}}_{\mathit{var}(j)}\) are the mean and variance of \({B}_{Guide(n)}\) in window cantered at \(j\), \({B}_{hz/fg(n)}{\prime}\) is the mean of \({B}_{hz/fg(n)}\) in \({A}_{j}\). The

, \({\mathfrak{I}}_{\mathit{var}(j)}\) are computed using the Bayesian model averaging function

, \({\mathfrak{I}}_{\mathit{var}(j)}\) are computed using the Bayesian model averaging function

as,

as,

Where, \(\xi\) denotes the weights that indicate the plausibility of each scenario, \(\Delta\) represents the delay of assessments. After refining the transmission map the dehaze/defog image is reconstructed as,

Then, the dehaze/defog image \(B_{deh/def(n)}\) obtained is used for restoration.

4 Result & discussion

To assess the effectiveness of the proposed restoration method, various experiments are conducted in this section. The proposed defog/dehaze image restoration system is implemented in the working platform of MATLAB.

4.1 Database description

The proposed method utilized the real-world images (Tiananmen, y01, y16, Pumpkin, Stadium, Swan, Cone, House, and Mountain) and synthetic images taken from the Realistic Single Image Dehazing (RESIDE) (https://sites.google.com/view/reside-dehaze-datasets/reside-standard) dataset for the experimental evaluation. The RESIDE dataset is a large-scale benchmark that contains five different sub-groups.

The above Figs. 3, 4, and 5 show the input images and the resultant dehazed images with their estimated transmission map of synthetic and real-world images of RESIDE datasets. Figures 3(a), 4(a), and 5(a) show the input images, Figs. 3(b), 4(b), and 5(b) shows the estimated transmission map. Finally, the obtained dehaze/defog images are shown in Figs. 3(c), 4(c), and 5(c). The Figs. 6, 7, 8, 9, 10, 11, 12, 13, 14, and 15 show the results comparisons of the proposed with original and existing methods results. The proposed technique outperformed the existing methods described in [24] in terms of enhancing dehazed/defogged images.

4.2 Quantitative analysis of real-world images

In this section, the quantitative analysis for the proposed and existing methods is conducted. The analysis is done based on the real-world images using the performance metrics such as contrast gain (CG), sigma, E-value, R-value, and visual contrast measure (VCM). Then, the structural similarity index measure (SSIM), image visibility measurement (IVM), and Peak-to-signal Noise Ratio (PSNR).

The performance metrics used for the analysis are defined as follows,

where, \(VCM\) is the Visual contrast measure, \(NL{A}_{sd}\) denotes the number of local areas in with standard deviation, \(NL{A}_{t}\) is the total number of local areas, Contrast gain \((CG)\) is the difference between the mean contrast of the defoggy and foggy images, \({E}_{val}\) is the E-value, \(NV{E}_{{B}_{n}}\) and \(NV{E}_{{B}_{dhz/dfg(n)}}\) are the number of visible edges in the original and restored image, R-value \(({R}_{val})\) is the ratio of average gradients in the original and gradient images.

Table 1 shows the performance measurement of 1 (a) contrast gain and 1 (b) sigma values for the proposed and existing haze/fog removal methods. Contrast gain gives the mean contrast difference amid dehaze/defog and haze/fog images whereas sigma gives the percentage of saturated pixels. To obtain improved performance, the contrast gain should be higher, and the sigma value should be lower. On analysing the above Table 1(a), the contrast gain attained by the proposed method for all images is higher than the existing methods and in terms of sigma value also the proposed method obtained better performance. More saturated pixels indicate poor quality of restored images. Therefore, the higher sigma value attained by Huang et al. [27] is not acceptable which highlighted in Table 1(b). A visual inspection of the proposed technique revealed that it is less susceptible to the artifacts produced by the structure transference filtering variants. This suggests that it is more efficient to restore the image's quality.

Table 2 demonstrates the performance of the proposed and existing restoration methods based on the performance metrics, 2(a) VCM 2(b) E-value, and 2(c) R-value. VCM parameter is used to measure the visual contrast of the images and the higher values of VCM produce the clearer images. The E and R values are the measures of visibility of the images. In Table 2(a), the proposed method achieved a VCM value higher than the existing methods. Then, the analysis of the results of Table 2(b) and (c) deliver that the E and R-values of the existing methods are smaller and higher for several images respectively. But the proposed method achieves the same level of results for all images, which suggests a better performance of the proposed method. Hence, the analysis justifies the advantage of the proposed approach over the existing [18, 25,26,27], and [24] methods.

Figure 16 shows the SSIM, PSNR, IVM, and VCM comparisons of the proposed and existing methods for the three real-world images such as forest, cone, and pumpkin. A large value of PSNR and SSIM gives the smaller image distortion and better structural similarity between the observed output and the actual image. From the above charts, it can be said that the proposed technique has the highest values of SSIM and PSNR. It is evident that the recovered images that are clearer compared to the existing methods. Concerning IVM and VCM, the existing [29] and [30] obtained much lower performance than the other methods and the IVM and VCM of the proposed method are very high than the prevailing methods. Thus, the analysis states that the proposed method is superior to existing methods.

4.3 Result analysis of synthetic images

In this section the results of city and Gugong images of synthetic images are shown in Fig. 17. The foggy input images and the resultant defog images with their estimated transmission maps are shown.

The quantitative result analysis of the synthetic images for the proposed and existing [29,30,31], and [24] method’s comparison is shown in Table 3. The parameters used for the analysis are the same as those used in the analysis of real-time images except that mean square error (MSE) is used instead of PSNR.

In analysing the above Table 3, the results obtained by the existing methods are varied as higher and lower for contrast gain, E-value, and R-value. The values of the proposed method are better than the existing methods, which shows that the results are improved. In the above Table 3 SSIM, MSE, and VCM of the proposed and existing methods are shown. The synthetic images such as Gugong and city are used for the analysis. The SSIM achieved by the proposed method is slightly close to the existing [29] and [24] for the Gugong image and very high than the existing methods for the city image. However, the lower MSE of the proposed method indicates more acceptance of the observed images. In terms of VCM, for the image Gugong, the performance of existing [29] and [30] are very lower, and the existing [31] and [24] achieved better results than the existing [29] and [30] methods and lower performance than the proposed method. For the city image also, the proposed method has better performance. Thus, the analysis delivers that the recovered results of the proposed method are similar to the actual ground truth among all the methods.

The above Fig. 18 evaluates the efficacy of proposed and existing methods in terms of computation time. The implementation of the existing [25] is computationally high. Compared to the [25] method the computation time of the [26] and [27] is crucially low for all images. For [28], the estimated time is lower than the [25] and higher than other methods. But improving the quality of images and classifying the haze-free images progressively makes the proposed method take lower processing time for all images, which indicates the efficiency of the proposed method.

4.4 Performance analysis of classification

In this section, the performance of the proposed CE-DLNN is analysed with existing methods such as Deep Neural Network (DNN), Neural Network (NN), Adaptive Neuro-Fuzzy Interference System (ANFIS), and Optimized ANFIS (OANFIS). The analysis is done concerning the quality metrics such as sensitivity, specification, accuracy, precision, recall, F-measure, Negative Predictive Value (NPV), False Positive Rate (FPR), False Negative Rate (FNR), Mathews Correlation Coefficient (MCC), False Detection rate (FDR), False Rejection Rate (FRR).

Figure 19 (a-j) analyses the performance of the proposed CE-DLNN and existing DNN, NN, ANFIS, and OANFIS classifiers concerning the performance metrics namely Sensitivity, specificity, and accuracy are widely used statistics to quantify the ability of the model the prediction of clear and haze/fog images. F-Measure produces a single score that takes into account both precision and recall concerns. NPV is the probability that subjects with several true negatives, which means the greater the better. MCC is used to measure the quality of classification, which implies greater the value better the performance in terms of prediction as clear and haze/fog images. Some other statistics such as FPR, FRR, FDR and FNR should be lower for the improved performance. Figure 19 (a-j) visually demonstrates the superior performance of the proposed classifier across all quality metrics. The predictive capabilities of the proposed classifier significantly surpass those of existing methods, indicating its advanced performance level.

5 Conclusion

An efficient restoration methodology is presented in this paper. The proposed technique is utilized a novel CE-DLNN method for classifying the clear and haze/fog images and MST for refining the transmission map to produce the defog/dehaze images. The proposed method consists of six phases. The proposed CE-DLNN and MST are compared to existing methods to verify the system's effectiveness. Experimental results are proved that the proposed methods outperformed existing methods. The accuracy of the proposed method is improved by 43.68% over the existing methods. The proposed system is able to reduce the computation time of the image processing process by filtering out the haze-free images. An analysis of the proposed method revealed that it produces better quality images with less saturated pixels and enhanced contrast. The results of the study revealed that the proposed method is more effective than the existing methods when it comes to reconstructing images. In the future, the work can be extended to design the defogging system for live videos.

Data availability

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

References

Sharma N, Kumar V, Singla SK (2021) Single image defogging using deep learning techniques past, present and future. Arch Comput Methods Eng 28:4449–4469

Kuanar S, Mahapatra D, Bilas M, Rao KR (2021) Multi-path dilated convolution network for haze and glow removal in nighttime images. Vis Comput. https://doi.org/10.1007/s00371-021-02071-z

Riaz S, Anwar MW, Riaz I, Kim H-W, Nam Y, Khan MA (2022) Multiscale image dehazing and restoration an application for visual surveillance. Comput Mater Continua 70(1):1–17

Thanh LT, Thanh DNH, Hue NM, Surya Prasath VB (2019) Single image dehazing based on adaptive histogram equalization and linearization of gamma correction. 25th Asia-Pacific Conference on Communications (APCC), 6–8 November, Ho Chi Minh City, Vietnam

Tufail Z, Khurshid K, Salman A, Khurshi K (2019) Optimisation of transmission map for improved image defogging. Inst Eng Technol 13(7):11161–11169

Banerjee S, Chaki S, Jana S, Chaudhuri SS (2020) Fuzzy logic-refined colour channel transfer synergism based image dehazing. IEEE Region 10 Symposium, 5–7 June, Dhaka, Bangladesh

Liu W, Yao R, Qiu G (2019) A physics based generative adversarial network for single image defogging. Image Vis Comput 92:1–15

Yousaf RM, Habib HA, Mehmood Z, Banjar A, Alharbey R, Aboulola O (2019) Single image dehazing and edge preservation based on the dark channel probability-weighted moments. Math Probl Eng 2019:1–11

Feiniu Yuan Yu, Zhou XX, Shi J, Fang Y, Qian X (2020) Image dehazing based on a transmission fusion strategy by automatic image matting. Comput Vis Image Underst 194:1–11

Ma RQ, Shen XR, Zhang SJ (2020) Single image defogging algorithm based on conditional generative adversarial network. Math Probl Eng 2020:1–8. https://doi.org/10.1155/2020/7938060

Du J, Zhang J, Zhang Z, Tan W, Song S, Zhou H (2020) RCA-NET: image recovery network with channel attention group for image Dehazing. In: McDaniel T, Berretti S, Curcio I, Basu A (eds) Smart multimedia. ICSM 2019. Lecture notes in computer science, vol 12015. Springer, Cham. https://doi.org/10.1007/978-3-030-54407-2_28

Li B, Ren W, Dengpan Fu, Tao D, Feng D, Zeng W, Wang Z (2015) Benchmarking single image dehazing and beyond. J Latex Class Files 14(8):1–13

Aiswarya Menon N, Anusree KS, Jerome A, Sreekumar K (2020) An enhanced digital image processing based dehazing techniques for haze removal. Proceedings of the Fourth International Conference on Computing Methodologies and Communication, 11–13 March, Erode, India

Yue BX, Liu KL, Wang ZY, Liang J (2019) Accelerated haze removal for a single image by dark channel prior”. Front Inf Technol Electron Eng 20:1109–1118

Ren W, Pan J, Zhang H, Cao X, Yang M-H (2019) Single image dehazing via multi-scale convolutional neural networks with holistic edges. Int J Comput Vision 128:240–259

Kumar R, Kaushik BK, Balasubramanian R (2019) Multispectral transmission map fusion method and architecture for image dehazing. IEEE Trans Very Large Scale Integr (Vlsi) Syst 27(11):2693–2697

Zhu Z, Luo Y, Qi G, Meng J, Li Y, Mazur N (2021) Remote sensing image defogging networks based on dual self-attention boost residual octave convolution. Remote Sens 13(16):1–19

Ju M, Ding C, Jay Guo Y, Zhang D (2019) IDGCP Image dehazing based on gamma correction prior. IEEE Trans Image Process 29:3104–3118

Sabir A, Khurshid K, Salman A (2020) Segmentation-based image defogging using modified dark channel prior. J Image Video Proc 2020:6. https://doi.org/10.1186/s13640-020-0493-9

Hassan H, Bashir AK, Ahmad M, Menon VG, Afridi IU, Nawaz R, Luo B (2020) Real-time image dehazing by superpixels segmentation and guidance filter. J Real-Time Image Proc 18:1555–1575

Gao Y, Li Q, Li J (2020) Single image dehazing via a dual-fusion method. Image Vis Comput 94:1–22

Anan S, Khan MI, Saki MM, Kowsar KD, Dhar PK, Koshiba T (2021) Image defogging framework using segmentation and the dark channel prior. Entropy 23(3):1–21

Singh D, Kumar V, Kaur M (2021) Image dehazing using window-based integrated means filter. Multimed Tools Appl 7(5):34771–34793

Kumari A, Sahoo SK, Chinnaiah MC (2021) Fast and efficient visibility restoration technique for single image dehazing and defogging. IEEE Access 9:48131–48146

He K (2011) Single image haze removal using dark channel prior. Dissertation, The Chinese University of Hong Kong

He K, Sun J, Tang X (2013) Guided image filtering. IEEE Trans Pattern Anal Mach Intell 35(6):1397–1409

Xu H, Guo J, Liu Q, Ye L (2012) Fast image dehazing using improved dark channel prior. IEEE International Conference on Information Science and Technology, March 23–25, 2012, Wuhan, Hubei, China

Huang S-C, Chen B-H, Wang W-J (2014) Visibility restoration of single hazy images captured in real-world weather conditions. IEEE Trans Circuits Syst Video Technol 24(10):1814–1824

Cai B, Xu X, Jia K, Qing C, Tao D (2016) DehazeNet: an end-toend system for single image haze removal. IEEE Trans Image Process 25(11):5187–5198

Tang G, Zhao L, Jiang R, Zhang X (2019) Single image dehazing via lightweight multi-scale networks. In: Proc. IEEE International Conference on Big Data (Big Data), pp. 154–169

Berman D, Treibitz T, Avidan S (2016) Non-local image dehazing. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 27–30, Las Vegas, NV, USA

Wang WC, Yuan XH, Wu XJ, Liu YL (2017) Fast image dehazing method based on linear transformation. IEEE Trans Multimed 19(6):1142–1155

Xiao CX, Gan JJ (2012) Fast image dehazing using guided joint bilateral filter. Visual Comput 28(6–8):713–721

Ju MY, Ding C, Zhang DY, Guo YJ (2018) BDPK: Bayesian Dehazing using prior knowledge. IEEE Trans Circuits Syst Video Technol 29(8):2349–2362

He KM, Sun J, Tang XO (2011) Single image haze removal using dark channel prior. IEEE Trans Pattern Anal Mach Intell 33(12):2341–2353

Ju MY, Zhang DY, Wang XM (2017) Single image dehazing via an improved atmospheric scattering model. Visual Comput 33(12):1613–1625

Yuan H, Liu CC, Guo ZX, Sun ZZ (2017) A region-wised medium transmission based image dehazing method. IEEE Access 5:1735–1742

Berman D, Treibitz T, Avidan S (2016) Non-local image Dehazing. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 1674-1682

Berman D, Treibitz T, Avidan S (2020) Single image Dehazing using Haze-Lines. IEEE Trans Pattern Anal Mach Intell 42(3):720–734

Ren WQ, Liu S, Zhang H, Pan JS, Cao XC, Yang M-H (2016) Single image Dehazing via multi-scale convolutional neural networks. European Conference on Computer Vision (ECCV). pp. 154– 169

Li B, Peng X, Wang Z, Xu J, Feng D (2017) Aod-Net: all in one dehazing network. IEEE International Conference on Computer Vision (ICCV). pp. 4770–4778

Rao RV, Prasad TJC (2021) Content-based medical image retrieval using a novel hybrid scattering coefficients - bag of visual words - DWT relevance fusion. Multimed Tools Appl 80:11815–11841

Rao RV, Prasad TJC (2023) An efficient content-based medical image retrieval based on a new Canny steerable texture filter and Brownian motion weighted deep learning neural network. Vis Comput 39:1797–1813

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no conflict of interest in the submission of this article for publication.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Sabitha, C., Eluri, S. Restoration of dehaze and defog image using novel cross entropy-based deep learning neural network. Multimed Tools Appl 83, 58573–58606 (2024). https://doi.org/10.1007/s11042-023-17835-z

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-023-17835-z