Abstract

Mobile and handheld electronic devices such as smartphones and dashboard cameras are now frequently used to record traffic accidents. Most existing studies require at least one complete lane marking as a reference for calculating the driving distance, and they are not suited to a target vehicle whose speed changes rapidly within a short time. As a result, existing approaches cannot provide the desired accuracy of speed estimation in real-life scenarios. In this study, we obtain dynamic time and space data from footage recorded by dashboard cameras and then apply photogrammetry and the cross-ratio method to estimate vehicle speed. The proposed method is also applied to estimating the speed of other cars and the ego-vehicle speed when both cars are moving. The experimental results show that, given the frame rate of the recorded footage, the proposed method needs only one object in each frame to estimate the vehicle speed, even when the speed changes rapidly. The difference between the speed estimated by the proposed method and the reference speed from the Global Positioning System (GPS) is smaller than 1 km/h when only one car is moving and smaller than 3 km/h when both cars are moving. By comparison, the difference between the estimated speed and the reference speed obtained with the AUPD method is between 1.46 km/h and 5.28 km/h when one car is moving. To our knowledge, no existing method estimates the speed of the front vehicle from dashboard camera footage when both cars are moving.

1 Introduction

During accident investigations and judicial proceedings, driving speed is usually one of the bases for judging whether a driver is at fault. Because vehicle speed can be used to analyze and assign responsibility for an accident, many studies have sought better and more accurate methods of estimating the speed of the vehicles involved.

Many methods of speed measurement are currently in use. Klein et al. introduced inductive-loop speed measurement [1]. Two induction loops are embedded in the road section, one behind the other, when it is paved, and each generates a signal when a vehicle passes over it. Since the distance between the two loops is known, the vehicle speed can be calculated from the time difference between the two signals. Krishnan et al. proposed interval speed measurement [2]. A vehicle identification system is set up at two fixed points on the road. By collecting information such as the vehicle's license plate and passing time at each point, its speed can easily be calculated. Another method of measuring vehicle speed is radar [3, 4]. A speed measurement system installed on the ground transmits radio waves toward moving vehicles. According to the Doppler effect, the faster the vehicle, the larger the frequency shift between the transmitted waves and the reflected waves received by the system. The system automatically calculates the vehicle speed from the transmitted and received frequencies. Zou et al. used laser light to measure speed [5]. Two distances to the target are measured by transmitting two infrared signals at a fixed time interval. Since the speed of light is constant, the speed of the target is obtained by dividing the difference between the two distances by the transmission interval. Further studies used digital images to estimate vehicle speed [6,7,8,9]. They used on-site recording equipment to identify distances visible in the footage and then measured the time the vehicle took to travel that distance. Adnan et al. [10] grouped these methods into three categories: invasive, non-invasive, and off-road. Invasive methods include inductive loops and pneumatic tubes. Non-invasive methods include radar, laser, and digital image speed measurement. Probe vehicles, radar guns, and laser guns are off-road speed measurement methods.
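The three physical measurement principles above reduce to short formulas, sketched below in Python for illustration only. The function names are hypothetical. The radar formula is the textbook monostatic Doppler relation v = c·Δf/(2·f0), which applies to motion along the beam; real devices also correct for the beam angle. The numbers in the usage note are made up.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def loop_speed(loop_spacing_m: float, dt_s: float) -> float:
    """Inductive loops or section control: known spacing divided by the time between detections."""
    return loop_spacing_m / dt_s


def doppler_speed(f_transmit_hz: float, f_received_hz: float) -> float:
    """Radial speed from a monostatic radar's Doppler shift, v = c * df / (2 * f0).

    Valid for v << c and motion along the beam; a positive result means the
    target is approaching (the received frequency is higher).
    """
    return SPEED_OF_LIGHT * (f_received_hz - f_transmit_hz) / (2.0 * f_transmit_hz)


def lidar_speed(dist1_m: float, dist2_m: float, dt_s: float) -> float:
    """Two laser range readings taken dt apart: approach speed = (d1 - d2) / dt."""
    return (dist1_m - dist2_m) / dt_s


def to_kmh(v_ms: float) -> float:
    """Convert m/s to km/h."""
    return v_ms * 3.6
```

For example, loops 5 m apart that trigger 0.25 s apart give `to_kmh(loop_speed(5.0, 0.25))`, or 72 km/h. All three methods need hardware at the measurement site, which is the limitation discussed next.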

The vehicle speed estimation methods above require their equipment to be installed at the scene in advance, which is impractical for unexpected accidents. Consequently, they often cannot provide useful information about an accident for judicial proceedings. An on-site video recording device, in contrast, can meet this need. The video recording devices commonly used to record car accidents fall into two types: fixed-point static monitors, such as a traffic camera installed at an intersection, and mobile recorders, such as a dashboard camera, which can record while moving at any time. Accidents often occur where traffic cameras cannot capture them, either because of blind spots or because no camera is installed. Therefore, to protect their rights in traffic accidents, drivers increasingly regard dashboard cameras as necessary equipment.

Nowadays, more and more drivers install a dashboard camera in their vehicles to record the entire driving process. In the event of an accident, the recordings can serve as reference evidence for determining responsibility. These images show the instantaneous location, trajectory, and speed of the vehicles involved before and after the accident, and they also provide a verifiable record of the incident. From a recording, drivers can determine not only their own driving speed but also the speed of other moving vehicles. Video analysis of driving speed has become a widely used method for estimating the speed of vehicles involved in traffic accidents, and it provides important evidence for judicial investigations. Traffic accident footage is now very common, and video data are easy to preserve, immediately available, and clear. For these reasons, such footage has become important information for road traffic accident management.

In traffic accident reconstruction, using digital images to estimate the speed of the involved vehicles is an important step in accident analysis. This step focuses on identifying a distance visible in the video and then measuring the time the vehicle takes to cover that distance. However, when there are no clear lane markings or landmarks with which to locate the car, accurate speed estimation becomes difficult or even impossible. In previous work [6,7,8,9], digital image measurement for traffic appraisal was based on the image frame method. Before a vehicle's speed could be estimated, measurements had to be taken at the accident scene or on the monitor screen to obtain the distance traveled by the vehicle and the corresponding time interval. The distance traveled was then divided by the time interval to obtain the average speed. The key step in this method is obtaining the exact distance traveled and the corresponding time interval. However, the time interval used in these methods [6,7,8,9] often spans several seconds, or at least several frame intervals. Even if the exact travel distance and time interval are obtained, the average speed hardly represents the instantaneous speed, especially when the vehicle is accelerating or decelerating. In some practical cases, the average speed estimated by this image-frame-based method is close to, but slightly lower than, the maximum speed during the measured interval. When the speed is underestimated in this way, a maximum speed that exceeded the road's speed limit may go undetected. Liu et al. [11] proposed a pixel-based method for estimating the relative instantaneous velocity of a vehicle from closed-circuit television (CCTV) images. However, CCTV systems are not installed everywhere.
To compensate for these drawbacks, traffic accident appraisal needs to determine the instantaneous speed of the vehicle. To assign liability correctly, an effective method of estimating the instantaneous speed of the vehicles involved in an accident is needed. The contributions of this paper are as follows:

1) We propose a framework that obtains dynamic time and space data from dashboard camera footage and then applies photogrammetry and the cross-ratio method to estimate the speed of other cars and the ego-vehicle speed, even when both cars are moving; and

2) according to our experiments, the proposed framework needs only a single lane marking in each frame to estimate the vehicle speed accurately, even when the speed changes rapidly.

The paper is organized as follows: Sect. 2 reviews related work on estimating vehicle speed from image frames; Sect. 3 describes the proposed method; Sect. 4 presents the experimental results; Sect. 5 discusses the limitations of the application; and Sect. 6 concludes the paper.

2 Related work

As described above, using digital images to estimate the speed of the involved vehicles is an important step in accident analysis. This section reviews in detail the research on estimating vehicle speed from digital images. In 2010, Hoogeboom et al. [6] designed two experiments to determine vehicle speed from CCTV images. In the first experiment, the test vehicle was recorded by a single camera of a CCTV system; in the second, it was recorded by different cameras. Both experiments used a simple distance-time calculation to obtain the vehicle speed. The vehicle's location was determined by independent observers or by comparison with reference points, and the monitor images were then used to estimate the speed of the moving vehicle. From the experimental results, they quantified the uncertainty of speed estimation based on traditional observation of vehicle positions in CCTV images.

Kim et al. [7] designed a vehicle speed estimation method (VSEM) that applies a virtual plane and virtual reference lines to estimate vehicle speed from video. The estimated speed was very close to the GPS-based speed. However, they found that the reference position of the vehicle (the virtual lines) did not coincide exactly with the boundary of the speed analysis section, which introduced errors into the speed estimate. In other words, the error arose from manually locating the vehicle positions in the video. Shim et al. [8] proposed an effective method for estimating vehicle speed from video recorded by a car dashboard camera. Their method considered speed measurement under different road conditions and measured vehicle speed more accurately than the method of Kim et al. [7].

Epstein and Westlake [9] presented a case report that used reverse projection photogrammetry and file metadata from digital video recorder (DVR) videos to estimate vehicle speed in a real accident. They used reverse projection photogrammetry to locate the vehicle in the real world and extracted the time interval from the video metadata with the FFprobe software. Compared with the Bosch event data recorder (EDR) data, the estimated speed was within an average of ± 1.43538 miles per hour (MPH).

However, the above methods [6,7,8,9] usually estimate vehicle speed over several seconds, or at least several frame intervals. Even if the exact travel distance and time interval are obtained, the average speed hardly represents the instantaneous speed, especially when the vehicle is accelerating or decelerating. In some practical cases, the average speed estimated by such image-frame-based methods is close to, but slightly lower than, the maximum speed during the measured interval.
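The following sketch shows why an interval average can hide the peak speed. It models a hypothetical vehicle braking uniformly from 72 km/h (20 m/s) at 5 m/s² for 2 s, sampled at 30 frames per second. It compares the speed averaged over the whole interval with the speeds obtained from each pair of consecutive frames. The motion parameters and the function name are made up for illustration.

```python
from typing import List, Tuple


def frame_speeds_vs_average(v0_ms: float, accel_ms2: float,
                            duration_s: float, fps: float) -> Tuple[float, List[float]]:
    """Interval-average speed vs. per-frame-pair speeds (km/h) for uniform acceleration."""
    n = round(duration_s * fps)
    times = [i / fps for i in range(n + 1)]
    # Position under constant acceleration: x = v0 * t + a * t^2 / 2
    xs = [v0_ms * t + 0.5 * accel_ms2 * t * t for t in times]
    average_kmh = (xs[-1] - xs[0]) / (times[-1] - times[0]) * 3.6
    # One estimate per pair of consecutive frames, as in a frame-by-frame method
    per_frame_kmh = [(xs[i + 1] - xs[i]) * fps * 3.6 for i in range(n)]
    return average_kmh, per_frame_kmh
```

With `frame_speeds_vs_average(20.0, -5.0, 2.0, 30)`, the interval average is 54 km/h. The first frame pair gives about 71.7 km/h. The average therefore says little about the speed at the start of braking.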

Liu et al. [11] first proposed a pixel-based method for estimating the relative instantaneous velocity of a vehicle. An image (or frame) captured by a recording device is the projection of three-dimensional (3D) space onto a two-dimensional (2D) representation. In this projection, the outline of each part of an object is projected point by point onto an imaginary image plane, and connecting the projected points forms the image of the object on that plane. That is, each 3D position in the real world is mapped to a 2D point on the screen, and the mapping from 3D to 2D space follows the principles of projective geometry [12]. The method uses the actual distance per pixel to estimate how far the vehicle moves between successive images. It then divides that distance by the frame interval to obtain the vehicle speed. However, if the recording direction of the video recorder or monitor is not perpendicular to the direction of travel, adjacent pixels represent uneven real-world distances and the method cannot be used. This makes it impractical for data acquired by a smartphone or dashboard camera during an accident. Entropy-based approaches [13,14,15] improve the accuracy of vehicle speed estimation, but they need to acquire multiple characteristics of the target vehicle.
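A minimal sketch of the pixel-based idea follows. It assumes a single, constant metres-per-pixel scale calibrated from an object of known length. That assumption holds only when the camera views the road perpendicularly. The function names and numbers are illustrative, not taken from [11].

```python
def meters_per_pixel(reference_length_m: float, reference_length_px: float) -> float:
    """Image scale from an object of known real-world length seen in the frame."""
    return reference_length_m / reference_length_px


def pixel_based_speed_kmh(displacement_px: float, scale_m_per_px: float,
                          fps: float, frames_apart: int = 1) -> float:
    """Pixel displacement times a constant scale, divided by the elapsed frame time."""
    dt_s = frames_apart / fps
    return displacement_px * scale_m_per_px / dt_s * 3.6
```

For example, suppose a 4 m lane dash spans 200 px. The scale is then 0.02 m/px, and a 20 px shift between consecutive frames at 30 fps corresponds to 43.2 km/h. The single scale factor is what breaks down when the camera axis is oblique to the direction of travel.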

Wong et al. [16] first proposed a method of directly estimating vehicle speed by combining images from a fixed monitor with the cross-ratio [17]. Figure 1 depicts the projection of line AD in the 3D real world onto the 2D line A'D' in the image frame. If the four points A, B, C, and D in Fig. 1 are collinear in the 3D real world and are projected into 2D space, then the points A', B', C', and D' are also collinear. Collinearity is unchanged by projective transformation, so it is a projective invariant [18]. In other words, a straight line in the real world is still a straight line in the image. The cross-ratio speed analysis method first combines the starting and ending frames into one image. It then calculates the cross-ratio of the four points from the combined image. Finally, it uses the cross-ratio to estimate the distance the vehicle moved and hence its speed.

The cross-ratio (AB;CD) of four collinear points A, B, C, and D is calculated by Eq. (1) [17]:

where |XY| represents the distance between X and Y points.

The cross-ratio of any four points on a line is preserved by projective transformation; that is, it is a projective invariant. The four collinear real-world points A, B, C, and D are projected to A', B', C', and D', respectively, in image space. If (A'B';C'D') denotes the cross-ratio of the four projected points A' to D', then (AB;CD) = (A'B';C'D').
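The invariance can be checked numerically. The sketch below maps four collinear road-plane points through an arbitrary 3×3 homography and compares the cross-ratio before and after. The homography stands in for the camera's ground-plane-to-image projection and ignores lens distortion. The matrix values and point positions are made up. The unsigned-distance form (AC·BD)/(BC·AD) is an assumed convention; any fixed ordering of the four points is invariant in the same way.

```python
import math


def apply_homography(H, x, y):
    """Project a road-plane point (x, y) through a 3x3 homography H (row-major nested lists)."""
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return u / w, v / w


def dist(P, Q):
    """Euclidean distance between two 2-D points."""
    return math.hypot(P[0] - Q[0], P[1] - Q[1])


def cross_ratio(A, B, C, D):
    """(AB;CD) = (|AC| * |BD|) / (|BC| * |AD|) for four collinear points."""
    return (dist(A, C) * dist(B, D)) / (dist(B, C) * dist(A, D))


def collinearity_residual(P, Q, R):
    """2-D cross product of (Q - P) and (R - P); zero when the three points are collinear."""
    return (Q[0] - P[0]) * (R[1] - P[1]) - (Q[1] - P[1]) * (R[0] - P[0])


# Hypothetical setup: front/rear wheels at T2 (A, C) and T1 (B, D) on the line y = 1,
# with a 2.65 m wheelbase and a 0.40 m displacement between the frames.
H = [[800.0, -120.0, 960.0], [30.0, -50.0, 540.0], [0.05, 0.6, 1.0]]
world = [(3.05, 1.0), (2.65, 1.0), (0.40, 1.0), (0.0, 1.0)]
image = [apply_homography(H, x, y) for x, y in world]
```

Comparing `cross_ratio(*world)` with `cross_ratio(*image)` gives the same value up to rounding. The projected points also remain collinear, even though the pixel distances themselves are distorted.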

In Fig. 1, A and C are the positions of the front and rear wheels on the path at time T2; B and D are the positions of the front and rear wheels at time T1; \(d\) is the distance the car travels; and \(l\) is the wheelbase (axle distance) of the vehicle. The four points A to D lie on a straight line, and their cross-ratio (AB;CD) can be expressed in terms of \(d\) and \(l\) as follows:

The equation can be rewritten as:

Finally, by the invariance of the cross-ratio, the cross-ratio (A'B';C'D') of the four points A' to D' in the 2D image space equals the cross-ratio (AB;CD) of the four points A to D in the 3D real world, so \(d\) can be obtained as follows:

If the wheelbase of the car is known, the distance it travels can be determined. Its speed then follows from the time elapsed between the two image frames (T2 − T1) by a simple distance-time equation. In this approach, vehicle speed is monitored with a tachograph by using a car of known size traveling along a straight road at different speeds. The distance traveled is obtained by calculating the cross-ratio from pixel distances in the captured images. The corresponding speed is then calculated from the time difference between the images. This allows speed to be estimated directly from the recorded images without reference to the exact physical location of the vehicle on the road. However, the method still requires a fixed monitor.
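Eq. (1) and its rearrangements are not reproduced in this text, so the sketch below uses one common convention: (AB;CD) = (AC·BD)/(BC·AD), evaluated with signed positions along the image line. The paper's own ordering may differ, but any valid cross-ratio of the same four points yields the same \(d\). On the road, D = 0, B = \(l\), C = \(d\), and A = \(d\) + \(l\). This convention therefore gives (AB;CD) = \(l\)²/(\(l\)² − \(d\)²), hence \(d\) = \(l\)·sqrt(1 − 1/(A'B';C'D')). Signed positions keep the formula valid when \(d\) exceeds the wheelbase. The function names are hypothetical. The inputs are the pixel coordinates of A', B', C', and D' read from the fused image.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def line_positions(points: Sequence[Point]) -> List[float]:
    """Signed 1-D coordinates of collinear pixel points, measured along the first-to-last direction."""
    (x0, y0), (x1, y1) = points[0], points[-1]
    length = math.hypot(x1 - x0, y1 - y0)
    ux, uy = (x1 - x0) / length, (y1 - y0) / length
    return [(x - x0) * ux + (y - y0) * uy for x, y in points]


def signed_cross_ratio(a: float, b: float, c: float, d: float) -> float:
    """(A,B;C,D) = (AC * BD) / (BC * AD) using signed coordinates on one line."""
    return ((c - a) * (d - b)) / ((c - b) * (d - a))


def travel_distance(front_t2: Point, front_t1: Point, rear_t2: Point, rear_t1: Point,
                    wheelbase_m: float) -> float:
    """Distance moved between T1 and T2 from the fused-image points A', B', C', D'.

    On the road D = 0, B = l, C = d, A = d + l, so (A,B;C,D) = l^2 / (l^2 - d^2)
    and d = l * sqrt(1 - 1/cr). Undefined when d equals l (B and C coincide).
    """
    pa, pb, pc, pd = line_positions([front_t2, front_t1, rear_t2, rear_t1])
    cr = signed_cross_ratio(pa, pb, pc, pd)
    ratio = 1.0 - 1.0 / cr
    if ratio < 0:
        raise ValueError("cross-ratio is inconsistent with this point ordering")
    return wheelbase_m * math.sqrt(ratio)


def speed_kmh(distance_m: float, t1_s: float, t2_s: float) -> float:
    """Average speed between T1 and T2, in km/h."""
    return distance_m / (t2_s - t1_s) * 3.6
```

For consecutive frames at 30 fps, a recovered distance of 0.40 m gives `speed_kmh(0.40, 0.0, 1 / 30)`, or 43.2 km/h.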

Han et al. [19] used dashboard camera images to estimate vehicle speed. They also applied the cross-ratio technique to estimate the likely speed of a vehicle in a real case. They compared it with the traditional method, which divides the distance between visible landmarks or objects by the time the vehicle takes to pass them. They concluded that the cross-ratio method estimated the actual vehicle speed better than the conventional method. In the cross-ratio method, a single marker that is fully visible in both frames can serve as a reference regardless of the duration, whereas the conventional method requires a complete marker as a reference. However, the main shortcomings of their work are the lack of evaluation of influencing factors, such as shooting angle and traveling speed, and the lack of error analysis.

Recently, owing to the popularity of artificial intelligence, some studies [20,21,22,23,24] have used deep learning and object detection techniques for vehicle speed estimation. However, these methods need a preset camera at a fixed angle to train the network weights and are therefore unsuitable for real forensic cases.

In this study, we propose a new vehicle speed estimation method based on the cross-ratio. Three scenarios under various conditions are designed:

- estimating the instantaneous speed of a moving vehicle from a stationary dashboard camera;
- estimating the instantaneous speed of other moving vehicles; and
- estimating the ego-speed from a moving dashboard camera.

The speed from the Global Positioning System (GPS) installed in the dashboard camera of the test vehicle is used to evaluate the accuracy of the proposed method. The mean, standard deviation, and coefficient of variation are calculated to determine the error range of the cross-ratio method in estimating the actual speed in each scenario.

3 Proposed method

The proposed method is evaluated in three scenarios, as shown in Fig. 2. Estimation from a stationary platform simulates a moving vehicle captured under various conditions by an on-site monitor, such as a traffic camera. Estimation from a moving platform covers two scenarios. The first is estimating the ego-speed of a vehicle with an installed dashboard camera while it moves. The second is estimating the speed of other vehicles involved in an accident while the recording vehicle is moving.

3.1 Static estimation of moving vehicle

For this step, a straight road was selected for the speed measurement test. A stationary vehicle equipped with a dashboard camera was parked at the roadside. It recorded another vehicle traveling at a constant speed, at shooting angles of 0, 45, and 90 degrees. The experimental vehicle passed through the camera's observation area at a constant speed along a straight path. Its speedometer was set to three constant speeds of 50 km/h, 60 km/h, and 70 km/h, and the corresponding GPS speeds were 44 km/h, 54 km/h, and 64 km/h, respectively. The video of the vehicle running through the measured road section is used as the data source for estimating its speed. The range of speeds observed in this experiment, 40–70 km/h, is common on most general roads and highways.

Figure 3c is the fused image of two dashboard camera frames taken at timestamps T3 (Fig. 3a) and T4 (Fig. 3b). Given the wheelbase of the test vehicle, the cross-ratio can be used to estimate the distance the vehicle traveled.

3.2 Estimation of the ego-travel speed while moving

The same straight road is used for the speed measurement test in this step. The experimental vehicle is driven at constant speeds of 50 km/h, 75 km/h, and 100 km/h, and the corresponding GPS speeds are 45 km/h, 69 km/h, and 93 km/h, respectively. Owing to road conditions, the speed range in this experiment is 40–100 km/h, which covers typical driving speeds on general roads and highways.

Here, a car equipped with a dashboard camera is driven along a straight path at a constant speed. The dashboard camera frames at timestamps T5 (Fig. 4a) and T6 (Fig. 4b) are fused to form the image in Fig. 4c. The fused 2D image is then used to calculate the distance by which the lane marking recedes in the image, which equals the distance the car travels forward.

Because some dashboard cameras have a low frame rate or low resolution, lines and points become blurred when zoomed in, making it impossible to mark them accurately. To detect the edge pixels of the lane markings more easily and stably, we first use the rgb2gray function in MATLAB to convert each captured image to grayscale (Fig. 5a). Instead of a fixed threshold, we then use the adaptthresh function. This function computes a locally adaptive threshold for each pixel from the statistics of its neighborhood, by default the local mean intensity (Fig. 5b). The imbinarize function then converts the image into a binary image using this adaptive threshold (Fig. 5c). Finally, the consecutive binarized images are fused (Fig. 5d).
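A rough NumPy analogue of this preprocessing chain is sketched below. It does not reproduce MATLAB's rgb2gray, adaptthresh, and imbinarize exactly. The gray weights are the ones documented for rgb2gray (essentially the Rec. 601 luma coefficients). The threshold here, however, is simply the local mean plus a fixed offset, and the window size `k` and `offset` are hypothetical parameters. Frames are fused by OR-ing their binary masks, so a marking detected in either frame appears in the composite.

```python
import numpy as np


def to_gray(rgb: np.ndarray) -> np.ndarray:
    """Weighted sum of R, G, B with the coefficients documented for MATLAB's rgb2gray."""
    rgb = rgb.astype(np.float64)
    return 0.2989 * rgb[..., 0] + 0.5870 * rgb[..., 1] + 0.1140 * rgb[..., 2]


def local_mean(img: np.ndarray, k: int) -> np.ndarray:
    """Mean over a k x k window (k odd) via an integral image; borders are edge-padded."""
    r = k // 2
    padded = np.pad(img, r, mode="edge")
    ii = np.pad(padded.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    h, w = img.shape
    window_sum = ii[k:k + h, k:k + w] - ii[:h, k:k + w] - ii[k:k + h, :w] + ii[:h, :w]
    return window_sum / (k * k)


def binarize_adaptive(gray: np.ndarray, k: int = 15, offset: float = 10.0) -> np.ndarray:
    """True where a pixel is brighter than its local mean by more than `offset` (hypothetical rule)."""
    return gray > local_mean(gray, k) + offset


def fuse(masks) -> np.ndarray:
    """Overlay binarized frames: a pixel is set if it is set in any frame."""
    fused = np.zeros_like(masks[0], dtype=bool)
    for m in masks:
        fused |= m
    return fused
```

Because the threshold follows the local mean, a bright marking is separated from the road surface even when overall brightness varies across the frame. A single global threshold would struggle in that situation.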

3.3 Estimation of the speed of other vehicles on moving

This step also uses the straight road for speed measurement. The rear vehicle in Fig. 6a, which is equipped with a dashboard camera, is driven along the straight path at a constant speed. Meanwhile, another test vehicle passes it in the adjacent lane at a higher constant speed. The rear vehicle travels at a constant speed of 40 km/h, and its GPS speed is measured as 39 km/h. The entire video of the experimental vehicle running on the measured road section is used as the data source for estimating the speeds of both the ego-vehicle and the experimental vehicle. The speedometer of the experimental vehicle was set to constant speeds of 55 km/h and 70 km/h, and the corresponding GPS speeds were 50 km/h and 64 km/h, respectively. The range of speeds observed in this experiment, 40–70 km/h, is common on most general roads and highways in a city. In addition, because of traffic law and insurance policy, the speed limit at the test site is 70 km/h.

The dashboard camera frames at timestamps T7 (Fig. 6a) and T8 (Fig. 6b) are captured and fused into the image in Fig. 6c. The white dashed lane line on the screen gives the distance traveled by the ego-vehicle. The wheelbase of the test vehicle gives the relative distance traveled by the test vehicle. Their speeds can then be estimated from these distances.

4 Experiment and analysis

In this study, the experimental vehicle moves at a constant speed while being recorded, and dynamic time and space data are captured from the DVR video, i.e., the dashboard camera footage. The cross-ratio method is then used to estimate the vehicle speed. This estimate is compared with the speed obtained from the vehicle's Global Positioning System (GPS) receiver, which serves as a benchmark.

4.1 Experimental equipment

The experimental equipment includes the vehicles, their dashboard cameras, and their global positioning system (GPS) receivers. The experimental vehicles are driven at constant speeds during the tests. One vehicle is a 2014 Ford Focus four-door gasoline sports model with a commercial dashboard camera installed on the front windshield. This camera, a PAPAGO GoSafe 738 rearview-mirror dashboard camera, has a 130° ultra-wide-angle lens. The other vehicle is a 2018 Honda CR-V 1.5 VTi-S with its original dashboard camera (Generplus). Both vehicles are equipped with GPS receivers (PAPAGO GTM202) to measure their speeds. The complete recordings of the vehicles moving through the measured road section are used as the data source for estimating their speeds.

We do not use the vehicles' speedometers for analysis and evaluation, mainly because a speedometer measures the forward rolling of the tires. This measurement is affected by tire wear, tire size (replacement tires or rims), and tire inflation, as well as by potential manufacturing error from the speedometer suppliers. In addition, Article 22 of the Vehicle Safety Testing Directions in Taiwan requires vehicle speedometers to be tested under no-load conditions, i.e., with an empty vehicle carrying only the driver and the necessary instruments. It further requires that the indicated speed never be less than the actual speed. The following relationship must be satisfied between the speed indicated by the speedometer dial (V1) and the real speed (V2):

That is, if the true speed is 40 km/h, the speedometer should display 40–48 km/h. GPS, in contrast, measures the overall displacement of the vehicle, so in constant-speed driving the error of the GPS speed is small.

4.2 Selection of reference marks

When the speed of a recorded vehicle is estimated by the cross-ratio, a non-moving object of measurable length (distance) should be used as a reference. Streetlights, trees, and the constant spacing of lane markings, for example, are good references. To record the position of the vehicle in the video and measure the driving distance, this study uses two references for distance measurement. These are the wheelbase of the vehicle (the distance between the front and rear wheels) and the white dashed lines of the lane in the observed road section. According to Article 182 of the Regulations for Road Traffic Signs, Markings, and Signals in Taiwan, lane lines divide lanes and instruct drivers to keep within them. A typical lane line is a white dashed line with 4 m segments, 6 m gaps, and a width of 10 cm.

4.3 Analyzing tools

In this study, VirtualDub (ver. 1.10.4) is used to load the video files and extract image frames from the continuous video. The number of frames extracted depends on the storage format of the recording device. All experiments were shot in 1920 × 1080 Full HD mode. As extracted by VirtualDub, the PAPAGO GoSafe 738 and Generplus dashboard cameras record 30 and 25 frames per second, respectively. The time difference between consecutive frames is therefore 1/30 s and 1/25 s, respectively.

To capture the image frames, we use the FFmpeg component within this framework. In forensic analysis, FFmpeg can be used to examine the original data and to play, transmit, and convert video without transcoding [25]. The National Institute of Standards and Technology (NIST) establishes forensic-science standards. The Scientific Working Group on Imaging Technology (SWGIT) and the Scientific Working Group on Digital Evidence (SWGDE) propose standards and recommendations for imaging technology and digital evidence [26]. The SWGDE has developed FFmpeg-related specifications and recommendations for processing video data for further analysis or presentation [25].

Afterward, we use MATLAB (R2019a) to fuse consecutive image frames. The four points A', B', C', and D' in the composite image are collinear because the experimental vehicle travels along a straight path. The path A'B'C'D' in the virtual 2D image space is thus the projection of the straight path ABCD in the real world. The pixel distances between A', B', C', and D' required by Eq. (1) are measured directly from the composite image, and the cross-ratio is then determined.

Finally, we use the ruler tool in Adobe Photoshop (CS6 13.0) to measure the pixel distances between the four points A', B', C', and D' on the screen. From these distances, the actual movement of the vehicle is calculated by the cross-ratio method. Dividing this distance by the time difference between the image frames gives the vehicle speed between consecutive frames.

4.4 Experimental results

The data and analysis results for the ego-vehicle speed, the speed of the other vehicle, and the relative vehicle speed from the three experiments above are described below. Some of the videos are also used in [27], and the cross-ratio calculation function can be downloaded from https://dtcloud.cpu.edu.tw/index.php/s/8uxSU2BRQ2KaTj7.

4.4.1 Static estimation of moving vehicle

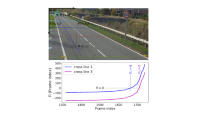

With the roadside vehicle stationary, three shooting angles (0°, 45°, and 90°) are used to record the experimental vehicle at GPS speeds of 44 km/h, 54 km/h, and 64 km/h. At these speeds, the test vehicle appears in the shooting area for 20, 17, and 14 frames, respectively. The wheelbase of the experimental vehicle in consecutive images is used as the reference length for the cross-ratio method. This yields 19, 16, and 13 speed estimates, respectively, one for each pair of consecutive frames. The average of these estimates is compared with the reference speed. The results are shown in Table 1 and Fig. 7.

The estimated speeds of the experimental vehicles at different GPS speeds and shooting angles. Panels a–c are at GPS speeds of 44 km/h, 54 km/h, and 64 km/h, respectively; panels d–f are at angles of 0°, 45°, and 90°, respectively. The upper and lower limits each indicate one standard deviation.

At each vehicle speed, the average speed estimated by the cross-ratio method shows no significant difference across shooting angles. In addition, at a given angle, the estimates for a high-speed vehicle have a smaller coefficient of variation than those for a low-speed vehicle. That is, the video-based speed measurement has a lower measurement error when the vehicle travels at high speed. Suppose the errors introduced by image capture, manual interpretation on the computer, and measurement are the same. The relative measurement error is then inversely proportional to the distance traveled per unit time.
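The inverse relationship can be made concrete with a little arithmetic. Assume, hypothetically, that image capture, manual interpretation, and measurement together produce a fixed absolute error in the distance recovered between consecutive frames. The 2 cm figure used below is an assumed value, not one reported in this study. Because the distance covered per frame grows with speed, the relative error shrinks in proportion.

```python
def per_frame_distance_m(speed_kmh: float, fps: float) -> float:
    """Distance a vehicle covers between two consecutive frames."""
    return speed_kmh / 3.6 / fps


def relative_error(speed_kmh: float, fps: float, abs_error_m: float) -> float:
    """Relative speed error when each per-frame distance carries a fixed absolute error."""
    return abs_error_m / per_frame_distance_m(speed_kmh, fps)
```

At 30 fps, a vehicle at 54 km/h covers 0.5 m per frame, so a 2 cm error is 4% of the estimate. Doubling the speed halves the relative error, which matches the trend in the coefficients of variation.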

4.4.2 Estimation of the ego-speed on the move

The experimental vehicle is driven at low (45 km/h), medium (69 km/h), and high (93 km/h) GPS speeds. The four-meter white dashed line in 31 consecutive frames recorded by the vehicle's own dashboard camera is used as the reference for distance measurement. The speeds calculated by the cross-ratio method are then compared with the GPS speed.

At the low, constant speed of 45 km/h, the average speed estimated by the cross-ratio method is 45.62 km/h, with a standard deviation of 2.978 km/h and a coefficient of variation of 0.065. At the medium, constant speed of 69 km/h, the average estimated speed is 69.80 km/h, with a standard deviation of 2.742 km/h and a coefficient of variation of 0.039. At the high, constant speed of 93 km/h, the average estimated speed is 92.42 km/h, with a standard deviation of 2.716 km/h and a coefficient of variation of 0.029. The detailed results are shown in Table 2 and Fig. 8.
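The summary statistics reported here can be computed as follows. The text does not state whether Table 2 uses the sample (n − 1) or the population standard deviation. This sketch assumes the sample version from Python's `statistics` module. The function name is hypothetical.

```python
import statistics
from typing import Sequence, Tuple


def summarize(estimates_kmh: Sequence[float]) -> Tuple[float, float, float]:
    """Mean, sample standard deviation, and coefficient of variation (SD / mean)."""
    mean = statistics.mean(estimates_kmh)
    sd = statistics.stdev(estimates_kmh)  # sample SD (n - 1); an assumption
    return mean, sd, sd / mean
```

As a consistency check, the reported pairs reproduce the stated coefficients of variation: 2.978 / 45.62, 2.742 / 69.80, and 2.716 / 92.42 round to 0.065, 0.039, and 0.029, respectively.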

The estimates for the low-speed vehicle have a larger coefficient of variation than those for the high-speed vehicle. That is, video-based speed measurement of a vehicle traveling at low speed is subject to larger relative measurement errors. If the errors introduced by image capture, manual interpretation on the computer, and measurement are held constant, the relative measurement error is inversely proportional to the distance driven per unit time.

4.4.3 Estimation of the speeds of other vehicles on the move

In this scenario, the ego vehicle travels at a constant GPS speed of 39 km/h, while the experimental vehicle travels in the adjacent lane at GPS speeds of 50 km/h and 64 km/h. At these two speeds, the experimental vehicle appears in the camera's field of view in 10 and 7 frames, respectively.

In the analysis, the cross-ratio method is applied to the consecutive image frames recorded by the dashboard camera of the rear (ego) vehicle, with the wheelbase of the experimental vehicle as the distance reference, to estimate the relative speed of the two vehicles. The speed of the rear vehicle, estimated with the lane markings as the distance reference, is then added, and the sum is the estimated speed of the vehicle in front. The averages of the 9 and 6 resulting speed estimates, respectively, are shown in Table 3 and Fig. 9.
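The combination step amounts to adding two estimates. The sketch below assumes that the front vehicle's relative displacement between frames has already been measured in metres (with the 2,648 mm wheelbase as the cross-ratio reference). It also assumes the ego speed was estimated separately from the lane markings. The helper names, the frame rate, the numbers, and the choice of one estimate per pair of consecutive frames are hypothetical.

```python
def relative_speed_kmh(rel_displacement_m: float, frame_gap: int, fps: float) -> float:
    """Speed of the front vehicle relative to the camera car.

    rel_displacement_m is positive when the front vehicle pulls ahead.
    """
    dt_s = frame_gap / fps
    return rel_displacement_m / dt_s * 3.6


def front_vehicle_speed_kmh(ego_kmh: float, rel_displacement_m: float,
                            frame_gap: int, fps: float) -> float:
    """Absolute speed = ego speed (from lane markings) + relative speed."""
    return ego_kmh + relative_speed_kmh(rel_displacement_m, frame_gap, fps)


def mean_estimate(ego_kmh: float, rel_displacements_m: list[float],
                  fps: float) -> float:
    """Average the per-interval estimates, one per pair of consecutive frames."""
    estimates = [front_vehicle_speed_kmh(ego_kmh, d, 1, fps)
                 for d in rel_displacements_m]
    return sum(estimates) / len(estimates)


if __name__ == "__main__":
    # Hypothetical: ego at 39 km/h, 30 fps, front car gaining 0.10 m per frame.
    print(f"{front_vehicle_speed_kmh(39.0, 0.10, 1, 30):.1f} km/h")
```

Keeping the relative and ego terms separate makes it easy to see which of the two estimates drives any deviation from the GPS speed.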

To estimate the speed of another vehicle from the dashboard video of a moving vehicle, the cross-ratio method is used to estimate the speed of the ego vehicle, and the relative speed is then added. Although the result is slightly higher than the GPS speed, it is an acceptable estimate. Therefore, it is feasible to estimate the speeds of other vehicles from the dashboard camera of a moving vehicle by combining the two estimates.

4.4.4 Comparison of vehicle speeds on the screen

In this section, we compare the speeds estimated when the experimental vehicle appears at the center of the screen and at either side. We use the footage of the vehicle traveling at different speeds recorded by the stationary roadside vehicle at a 90° shooting angle in 4.4.1 (Static estimation of a moving vehicle). We then compare these results with the speeds obtained by the image-pixel estimation method described by Liu et al. [11].

A) Comparison of vehicle speeds between the center and both sides of the screen

To record as much of an accident scene as possible, dashboard cameras use wide-angle lenses. However, images from a wide-angle lens exhibit barrel distortion, that is, outward expansion and spatial stretching of the image [28], so that straight lines near the edges are bent into curves.
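The edge stretching can be illustrated with the common polynomial radial distortion model, r_d = r(1 + k1·r² + k2·r⁴). Here r is the distance from the image centre in normalised coordinates, and a negative k1 produces barrel distortion. The coefficients below are hypothetical, not a calibration of the cameras used in this study. The sketch only shows that the displacement grows quickly away from the centre, which is why straight lines near the edge bend.

```python
import math


def distort(x: float, y: float, k1: float = -0.25, k2: float = 0.05) -> tuple[float, float]:
    """Apply radial distortion (barrel when k1 < 0) to normalised image
    coordinates (x, y) measured from the optical centre.
    k1 and k2 are hypothetical example coefficients."""
    r2 = x * x + y * y
    scale = 1 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


def radial_shift(x: float, y: float, **coeffs: float) -> float:
    """Distance (normalised units) a point moves because of the distortion."""
    xd, yd = distort(x, y, **coeffs)
    return math.hypot(xd - x, yd - y)


if __name__ == "__main__":
    for x in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(f"x = {x:.2f}: shift = {radial_shift(x, 0.0):.4f}")
```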

We divide the frames into those in which the experimental vehicle appears in the center of the screen and those in which it appears on either side, and use the cross-ratio method to estimate the vehicle speed 13 or 19 times, respectively. The results are shown in Table 4 and Fig. 10.

The speed estimated for the vehicle in the center of the screen is lower than the GPS speed, and its estimated speed, standard deviation, and coefficient of variation are all lower than those for the vehicle on either side; however, the differences are not significant. Therefore, although the distortion of images captured by a wide-angle lens does affect the accuracy of the estimated speed, the difference in estimation error between the center and the sides is not significant.

B) Comparison with image pixel estimation of vehicle speeds

In 4.4.1, 20, 17, and 14 consecutive image frames are obtained at 44 km/h, 54 km/h, and 64 km/h, respectively. The 10th, 9th, and 7th frames are then selected from these three sequences, respectively. Because the dashboard camera cannot be kept exactly perpendicular to the vehicle across the consecutive images, the pictorial unit pixel distance (PUPD) between horizontally adjacent pixels in the image frame is used to calculate the pixel distance between the centers of the two wheels. Using the actual wheelbase of the experimental vehicle (2,648 mm), the actual unit pixel distance (AUPD) described by Liu et al. [11] is then used for comparison. The detailed results are shown in Table 5 and Fig. 11.
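One possible reading of the pixel-scale idea behind the AUPD comparison can be sketched as follows. The known wheelbase divided by the pixel distance between the two wheel centres gives a metres-per-pixel scale. That scale then converts the vehicle's pixel displacement between frames into a road distance. The function names, pixel measurements, and frame rate are hypothetical, and the sketch is not a reproduction of the procedure of Liu et al. [11]. Because a single scale is applied to the whole frame, perspective or lens distortion away from where the scale was measured enters the estimate directly.

```python
import math

WHEELBASE_M = 2.648  # wheelbase of the experimental vehicle (2,648 mm)


def metres_per_pixel(front_wheel_px: tuple[float, float],
                     rear_wheel_px: tuple[float, float],
                     wheelbase_m: float = WHEELBASE_M) -> float:
    """Scale from the known wheelbase and the wheel-centre pixel positions."""
    px = math.dist(front_wheel_px, rear_wheel_px)
    if px == 0:
        raise ValueError("wheel centres coincide")
    return wheelbase_m / px


def pixel_scale_speed_kmh(shift_px: float, scale_m_per_px: float,
                          frame_gap: int, fps: float) -> float:
    """Convert a pixel displacement over frame_gap frames to km/h."""
    distance_m = shift_px * scale_m_per_px
    return distance_m / (frame_gap / fps) * 3.6


if __name__ == "__main__":
    # Hypothetical: wheel centres 331 px apart, vehicle moves 62 px
    # between consecutive frames of a 30 fps video.
    scale = metres_per_pixel((812.0, 640.0), (481.0, 640.0))
    print(f"{pixel_scale_speed_kmh(62.0, scale, 1, 30):.1f} km/h")
```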

The vehicle speed estimated by AUPD [11] is lower than the actual GPS speed. Compared with the proposed method, the AUPD estimates have a larger error, a larger standard deviation, and a larger coefficient of variation. The cross-ratio method is better suited to estimating vehicle speed, primarily because it does not rely on the pixel distance in a single image. Instead, it applies a reference object throughout the consecutive images, which yields smaller differences in regions of the screen far from the center.

4.4.5 Effect of cross-ratio at low resolution

Using the footage from the stationary roadside vehicle in 4.4.1, the experimental vehicle traveling at a constant speed is recorded at three GPS speeds (44 km/h, 54 km/h, and 64 km/h) and three shooting angles (0°, 45°, and 90°). FFmpeg is used to reduce the resolution of the original image frames from 1920 × 1080 to 960 × 540. The average speeds estimated for each combination of shooting angle and vehicle speed are then compared with those at the original resolution. The results are shown in Table 6 and Figs. 12–13.
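A downscaling step of this kind can be scripted around the FFmpeg command line. The sketch below only builds the argument list for FFmpeg's scale filter (`-vf scale=960:540`), which halves each dimension of a 1920 × 1080 video. The file names are placeholders, and the other options the authors used (codec, quality settings, frame extraction) are not stated, so none are assumed beyond the scale filter.

```python
import shlex
import subprocess


def downscale_cmd(src: str, dst: str, width: int = 960, height: int = 540) -> list[str]:
    """Build an FFmpeg command that resizes a video with the scale filter."""
    return ["ffmpeg", "-i", src, "-vf", f"scale={width}:{height}", dst]


def downscale(src: str, dst: str, width: int = 960, height: int = 540) -> None:
    """Run the command; requires an ffmpeg executable on PATH."""
    subprocess.run(downscale_cmd(src, dst, width, height), check=True)


if __name__ == "__main__":
    # Placeholder file names; prints the command instead of running it.
    print(shlex.join(downscale_cmd("dashcam_1080p.mp4", "dashcam_540p.mp4")))
```

Passing the arguments as a list avoids shell quoting issues; `shlex.join` is used only to display the equivalent command line.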

For the other vehicle's speeds estimated from the reduced-resolution images taken from the stationary vehicle, there is little difference from the speeds estimated at the original resolution. Therefore, the cross-ratio method remains feasible for estimating another vehicle's speed from lower-resolution footage. However, after the resolution is reduced, the standard deviation and coefficient of variation are larger than those of the original-resolution results.
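One way to read this result: a uniform rescaling multiplies every image coordinate by the same factor, which cancels in the cross-ratio, so the expected estimate does not change. What changes is how finely the points can be located. The sketch below uses made-up pixel coordinates to check the invariance. It also shows that snapping a point to the pixel grid of a half-resolution image can shift it by up to one original-resolution pixel instead of half a pixel, which is consistent with the larger spread observed.

```python
def cross_ratio(a: float, b: float, c: float, d: float) -> float:
    """Cross-ratio (AC * BD) / (BC * AD) of four collinear 1-D coordinates."""
    return ((c - a) * (d - b)) / ((c - b) * (d - a))


def quantisation_error(x_full: float, factor: float) -> float:
    """Error, in full-resolution pixels, after snapping a point to the pixel
    grid of an image downscaled by `factor` and mapping it back."""
    snapped = round(x_full * factor) / factor
    return abs(snapped - x_full)


if __name__ == "__main__":
    pts = (412.3, 655.8, 901.1, 1288.6)   # hypothetical pixel positions
    half = tuple(0.5 * p for p in pts)    # same points at 960 x 540
    print(cross_ratio(*pts), cross_ratio(*half))  # equal: scale cancels
    worst_full = max(quantisation_error(p, 1.0) for p in pts)
    worst_half = max(quantisation_error(p, 0.5) for p in pts)
    print(f"worst snap error: {worst_full:.2f} px (full) vs {worst_half:.2f} px (half)")
```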

5 Limitations of the approach used

According to the experiments in this study, speeds estimated by the proposed method from reduced-resolution videos have a larger standard deviation and coefficient of variation than those from the original-resolution videos. Therefore, the proposed method should be applied with caution to low-resolution, heavily smeared, or blurred videos. We will address these problems in future work.

6 Conclusion

Existing image-frame-based methods for calculating vehicle speed usually require the vehicle to cover a certain driving distance, which makes them unsuitable for estimating the instantaneous speed of a vehicle whose speed varies greatly. This paper verifies the reliability of our proposed speed estimation method by comparing its estimates with the actual (GPS) vehicle speed. The proposed method exploits the fact that the cross-ratio is preserved under projection. Even when the image resolution is low, the speed of the relevant vehicle can be determined directly from dashboard camera video. In addition, the experimental results show that the coefficient of variation of the estimated speed is relatively large at low speeds and smaller at high speeds; therefore, the method is also suitable for calculating speeds in speeding violations.

Dashboard cameras are now widely used. Image analysis of dashboard videos can be used to reconstruct an accident scene and to evaluate whether the vehicles involved took action. It also provides a more in-depth understanding of, and a basis for resolving, disputes in vehicle accidents.

Dashboard cameras usually have a higher resolution and can capture clear images of an accident. However, because intersection surveillance cameras need a wider viewing angle and record 24 h a day, the quality and frame rate of their video are lower than those of dashboard cameras. Our results show that vehicle speeds estimated by the cross-ratio method at lower resolution differ very little from those at the original resolution, although they have larger standard deviation and coefficient of variation values. Therefore, future research can focus on surveillance video from intersection cameras. This will help improve the identification quality of vehicle accidents and resolve more disputes about driving speed.

Data availability

The data that support the findings of this study are available from Wen-Chao Yang, but restrictions apply to the availability of these data, which were used under license for the current study, and so they are not publicly available. Data are, however, available from the authors upon reasonable request and with the permission of Wen-Chao Yang (una135@mail.cpu.edu.tw). For replicability, the cross-ratio calculation function is publicly available as an Excel file, which can be downloaded from https://dtcloud.cpu.edu.tw/index.php/s/8uxSU2BRQ2KaTj7.

Abbreviations

- GPS: Global Positioning System
- CCTV: Closed-circuit television
- VSEM: Vehicle speed estimate method
- DVR: Digital video recorder
- EDR: Event data recorder
- MPH: Miles per hour
- 3D: Three-dimensional
- 2D: Two-dimensional
- NIST: The National Institute of Standards and Technology
- SWGIT: The Scientific Working Group on Imaging Technology
- SWGDE: The Scientific Working Group on Digital Evidence
- PUPD: The pictorial unit pixel distance
- AUPD: The actual unit pixel distance

References

Klein LA, Mills MK, Gibson DR (2006) Traffic detector handbook: Vol I (No. FHWA-HRT-06-108). Turner-Fairbank Highway Research Center, Accessed 5 October 2022. https://www.fhwa.dot.gov/publications/research/operations/its/06108/06108.pdf

Krishnan R (2008) Travel time estimation and forecasting on urban roads. Dissertation, Centre for Transport Studies, Imperial College London. https://doi.org/10.1080/23249935.2016.1151964

Weber N, Moedl S, Hackner M (2002) A novel signal processing approach for microwave Doppler speed sensing. In: 2002 IEEE MTT-S Int Microw Symp Dig 3:2233–2235. https://doi.org/10.1109/MWSYM.2002.1012317

Park SJ, Kim TY, Kang SM, Koo KH (2003) A novel signal processing technique for vehicle detection radar. IEEE MTT-S Int Microw Symp Dig 1:607–610. https://doi.org/10.1109/MWSYM.2003.1211012

Zou J, Yan P, Tian Y, Wei L (2018) A novel vehicle velocity measurement system based on laser ranging sensors. In: 2018 IEEE Int Conf Applied Syst Invent (ICASI) 613–616. https://doi.org/10.1109/ICASI.2018.8394329

Hoogeboom B, Alberink I (2010) Measurement uncertainty when estimating the velocity of an allegedly speeding vehicle from images. J Forensic Sci 55(5):1347–1351. https://doi.org/10.1111/j.1556-4029.2010.01412.x

Kim JH, Oh WT, Choi JH, Park JC (2018) Reliability verification of vehicle speed estimate method in forensic videos. Forensic Sci Int 287:195–206. https://doi.org/10.1016/j.forsciint.2018.04.002

Shim KS, Park NI, Kim JH, Jeon OY, Lee H (2021) Vehicle speed measurement methodology robust to playback speed-manipulated video file. IEEE Access 9:132862–132874. https://doi.org/10.1109/ACCESS.2021.3115500

Epstein B, Westlake BG (2019) Determination of vehicle speed from recorded video using reverse projection photogrammetry and file metadata. J Forensic Sci 64(5):1523–1529. https://doi.org/10.1111/1556-4029.14053

Adnan MA, Zainuddin NI (2013) Vehicle speed measurement technique using various speed detection instrumentation. In: IEEE Bus Eng Industrial Applications Colloq (BEIAC). https://doi.org/10.1109/BEIAC.2013.6560214

Liu S, Yang X, Cui J, Yin Z (2017) A novel pixel-based method to estimate the instantaneous velocity of a vehicle from CCTV images. J Forensic Sci 62(4):1071–1074. https://doi.org/10.1111/1556-4029.13381

Modenov PS, Parkhomenko AS (2014) Projective Transformations: Geometric Transformations, vol 2. Academic Press, New York and London

Yang L, Luo JC, Song XW, Li ML, Wen PW, Xiong ZX (2021) Robust vehicle speed measurement based on feature information fusion for vehicle multi-characteristic detection. Entropy 23(7):910. https://doi.org/10.3390/e23070910

Yang L, Li QY, Song XW, Cai QJ, Hou CP, Xiong ZX (2021) An improved stereo matching algorithm for vehicle speed measurement system based on spatial and temporal image fusion. Entropy 23(7):866. https://doi.org/10.3390/e23070866

Bouziady AE, Thami ROH, Ghogho M, Bourja O, Fkihi SE (2018) Vehicle speed estimation using extracted SURF features from stereo images. In: 2018 Int Conf Intell Syst Comput Vision (ISCV), 17737798. https://doi.org/10.1109/ISACV.2018.8354040

Wong TW, Tao CH, Cheng YK, Wong KH, Tam CN (2014) Application of cross-ratio in traffic accident reconstruction. Forensic Sci Int 235:19–23. https://doi.org/10.1016/j.forsciint.2013.11.012

Milne JJ (2015) An elementary treatise on cross-ratio geometry: with historical notes. Scholar's Choice, pp 10–11

Brannan DA, Esplen MF, Gray JJ (2011) Geometry. Cambridge University Press

Han I (2016) Car speed estimation based on cross-ratio using video data of car-mounted camera (black box). Forensic Sci Int 269:89–96. https://doi.org/10.1016/j.forsciint.2016.11.014

Kumar A, Khorramshahi P, Dhar P, Chellappa R, Lin WA, Chen JC (2018) A semi-automatic 2D solution for vehicle speed estimation from monocular videos. In: IEEE/CVF Conf Comp Vision Pattern Recognit Workshops (CVPRW). https://doi.org/10.1109/CVPRW.2018.00026

Rais AH, Munir R (2021) Vehicle speed estimation using YOLO, Kalman filter, and frame sampling. In: 2021 8th Int Conf Advanced Inform: Concepts, Theory Applications (ICAICTA). https://doi.org/10.1109/ICAICTA53211.2021.9640272

Lin CJ, Jeng SY, Lioa HW (2021) A real-time vehicle counting, speed estimation, and classification system based on virtual detection zone and yolo. Math Probl Appl Syst Innovations IoT Appl 2021:1577614. https://doi.org/10.1155/2021/1577614

Prajwal, Navaneeth, Tharun, Kumar A (2022) Multi-vehicle tracking and speed estimation model using deep learning. In: 2022 14th Int Conf Contemporary Comput. 258–262. https://doi.org/10.1145/3549206.3549254

Farid A, Hussain F, Khan K, Shahzad M, Khan U, Mahmood Z (2023) A fast and accurate real-time vehicle detection method using deep learning for unconstrained environments. Appl Sci 13(5):3059. https://doi.org/10.3390/app13053059

SWGDE (2022) SWGDE Technical Notes on FFmpeg, Accessed 5 October 2022. https://drive.google.com/file/d/1OH9tNLeZ3_aqXlVJsUNB6nr3SR1ULR74/view

SWGIT (2022) Guidelines for Image Processing, Accessed 5 October 2022. https://www.crime-scene-investigator.net/swgit-section5.pdf

Su KA (2021) The study on evaluating vehicle speed with digital images. Thesis, Central Police University, Taiwan, ROC

Jenkins FA, White HE (2001) Fundamentals of optics, 4th ed. McGraw-Hill Education, pp 171–173

Acknowledgements

This work was supported by the Ministry of the Interior, Republic of China (Taiwan) (Project No. 112-0805-02-28-01).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Competing interests

All authors certify that they have no affiliations with or involvement in any organization or entity with any financial or nonfinancial interest in the subject matter or materials discussed in this manuscript.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Yang, WC., Jiang, J., Mao, A. et al. The study on the estimation of vehicles speed using a dashboard camera. Multimed Tools Appl 83, 45777–45798 (2024). https://doi.org/10.1007/s11042-023-17171-2

DOI: https://doi.org/10.1007/s11042-023-17171-2