Abstract

Machine Learning (ML) and Deep Learning (DL) have achieved high success in many textual, auditory, medical imaging, and visual recognition patterns. Concerning the importance of ML/DL in recognizing patterns due to its high accuracy, many researchers argued for many solutions for improving pattern recognition performance using ML/DL methods. Due to the importance of the required intelligent pattern recognition of machines needed in image processing and the outstanding role of big data in generating state-of-the-art modern and classical approaches to pattern recognition, we conducted a thorough Systematic Literature Review (SLR) about DL approaches for big data pattern recognition. Therefore, we have discussed different research issues and possible paths in which the abovementioned techniques might help materialize the pattern recognition notion. Similarly, we have classified 60 of the most cutting-edge articles put forward pattern recognition issues into ten categories based on the DL/ML method used: Convolutional Neural Network (CNN), Recurrent Neural Network (RNN), Generative Adversarial Network (GAN), Autoencoder (AE), Ensemble Learning (EL), Reinforcement Learning (RL), Random Forest (RF), Multilayer Perception (MLP), Long-Short Term Memory (LSTM), and hybrid methods. SLR method has been used to investigate each one in terms of influential properties such as the main idea, advantages, disadvantages, strategies, simulation environment, datasets, and security issues. The results indicate most of the articles were published in 2021. Moreover, some important parameters such as accuracy, adaptability, fault tolerance, security, scalability, and flexibility were involved in these investigations.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Presently, researchers are captivated by big data, which poses a formidable challenge due to the amalgamation of four primary parameters (velocity, diversity, volume, and quality) that delineate the data flow for pattern detection [58, 113, 128]. Numerous sources of data, both homogeneous and heterogeneous, strive to embody these criteria [22, 23, 56]. Additionally, big data encompasses a repertoire of techniques and tools employed to scrutinize vast amounts of unstructured data, including videos and images [2, 54, 61]. Processing unstructured data presents a formidable task as it lacks the comprehensive structure characteristic of regular data formats, owing to its frequent alterations [24, 102, 105]. One prominent tool that addresses potential challenges and effectively handles large data sets is Hadoop [17, 45, 126]. The progressive advancement in pattern recognition approaches for both structured and unstructured data processing continually expands [44, 114, 117]. This capacity necessitates greater attention to data analysis methodologies that effectively manage these immense and diverse volumes of information [5, 57]. Several analytical techniques have been developed to fulfill the need for high-quality data analysis functions. These encompass visualization, pattern recognition, statistical analysis, Machine Learning (ML), and Deep Learning (DL), all of which contribute to extracting meaningful patterns from extensive data sets [59, 60].

Pattern recognition and other diverse computational methods have proven to be valuable assets in leveraging the potential of big data [25, 132]. Big data fusion with DL and ML has further enhanced computational pattern recognition, leading to insightful predictive findings from acquired data [82, 137]. However, it is important to acknowledge the inherent challenge of dealing with all attributes within vast and similar datasets found in big data [139, 140]. Therefore, new approaches for data certification and conformity must be explored. Advancements in computing technology have opened up possibilities for uncovering hidden values in massive datasets by utilizing various pattern recognition algorithms, which were previously cost-prohibitive [43, 65]. The emergence of pattern recognition has prompted the development of technologies that facilitate real-time accessibility, storage, and analysis of enormous data volumes [40, 87]. Notably, big data methods for visual pattern recognition differ in two key aspects [123, 125]. Firstly, big data refers to data sets that are too large to be stored on a single device [143, 144]. Secondly, the absence of structure in traditional data necessitates the replication of the big data concept, requiring specific tools and approaches [16, 121]. Innovations like Hadoop, Bigtable, and MapReduce have revolutionized visual pattern recognition, addressing significant challenges associated with efficiently handling vast data volumes [103, 129]. Various applications, such as simple Database (DB), NoSQL, Data Stream Management System (DSMS), and Memcached, can be employed for big data, with Hadoop standing out as the most popular and suitable choice [86, 116].

In our study, this paper contributes by conducting a comprehensive Systematic Literature Review (SLR) to evaluate the utilization of DL/ML methods in pattern recognition, addressing previous gaps in the literature. It focuses on practical approaches and categorizes them into ten distinct groups, providing a detailed analysis of each group's advantages, disadvantages, and applications. The paper consolidates findings, considers various factors, and offers a wide range of techniques, contributing to advancements in the field of pattern recognition. We undertook an integrated SLR to comprehensively examine the utilization of DL/ML methods in pattern recognition. Previous SLRs have failed to comprehensively evaluate all aspects of DL/ML approaches in this domain, prompting our research to fill this gap. Consequently, our paper primarily focuses on practical DL/ML approaches within the context of pattern recognition. The significance of our research lies in its exploration of diverse and efficient DL/ML methodologies employed to tackle pattern recognition challenges. We thoroughly analyzed, consolidated, and reported findings from similar publications through the SLR. Additionally, we categorized DL/ML approaches for pattern recognition into ten distinct groups, encompassing Convolutional Neural Network (CNN), Recurrent Neural Network (RNN), Generative Adversarial Network (GAN), Autoencoder (AE), Ensemble Learning (EL), Reinforcement Learning (RL), Random Forest (RF), Multilayer Perception (MLP), Long-Short Term Memory (LSTM), and hybrid models. Each group was meticulously examined, considering various factors such as advantages, disadvantages, security implications, simulation environment, dataset, and the DL/ML approach employed in pattern recognition. The paper emphasizes the techniques and applications of DL/ML methods in pattern recognition, presenting a wide range of techniques that contribute to advancements in this field. Furthermore, we delved deeply into future work that must be implemented in future studies. Overall, this paper's contributions are:

-

Reviewing the present issues pertinent to DL/ML methods for pattern recognition;

-

Presenting a systematic overview of previous works on pattern recognition;

-

Evaluating each approach that emphasized DL/ML methods with diverse aspects;

-

Planning the key aspects that will allow the researchers to develop future works;

-

Explaining the definitions of pattern recognition methods used in various studies.

The subsequent compilation constitutes the framework of this article. The subsequent section elucidates the principal viewpoints and suitable terminology of DL/ML approaches employed in pattern recognition. Section 3 scrutinizes the relevant review papers. Section 4 encompasses the research methodology and tools employed for paper selection. Section 5 encompasses the chosen papers subjected to study and evaluation. The following section presents a comprehensive comparison and discussion of the outcomes, as expounded in Section 6. Section 7 deliberates on future endeavors, while Section 8 elucidates the ramifications. Furthermore, Table 1 provides a catalog of the abbreviations employed in the research.

2 Basic concepts and corresponding terminologies

In this part, we have provided a quick definition of important terms such as DL, ML, big data, and pattern recognition.

2.1 ML and DL

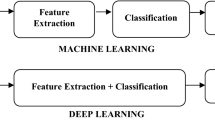

ML is a subset of Artificial Intelligence (AI) that enables computer programs to learn and adapt without human intervention [80, 142]. ML algorithms analyze vast amounts of data to detect patterns and make predictions in various fields such as advertising, finance, fraud detection, and more [62, 133]. It can process diverse data types like words, images, and clicks, making it applicable to digital storage. DL, a branch of ML, uses Artificial Neural Networks (ANN) to simulate the human brain's functioning [31, 141]. DL extracts feature from data by employing multiple hidden layers and progressively abstracts information. With increasing data analysis, DL can identify hidden patterns [67, 90]. It learns from processed data, autonomously extracting features without human involvement [68, 119]. DL techniques have revolutionized language modeling, exemplified by Google Tran slate’s contextual translations facilitated by DL-based Natural Language Processing (NLP). DL's ability to handle complex data and perform advanced tasks positions it at the forefront of AI technologies [3, 75].

2.2 What are big data and its usage?

Big data refers to a vast amount of ever-increasing data sets in a variety of formats, including structured, semi-structured, and unstructured information [74, 92]. Because of the complicated nature of big data, which necessitates powerful algorithms and robust technology, it is defined by the three primary criteria listed below.

-

I.

Volume: A huge amount of digital data is produced continuously from millions of applications and devices. More than several exabytes of data are increasingly produced each year.

-

II.

Diversity: Big data is generated in a variety of formats by several distant sources. Great data series include unstructured and structured data and local, private, completed, or uncompleted data.

-

III.

Distribution: Big data is being used as a successful solution in many fields, including smart grid, E-earth, the Internet of Things (IoT), public utilities, transportation and logistics, political services and government surveillance, and so on. DL/ML, on the other hand, objectively contributes to acquiring knowledge and making judgments for a variety of vital purposes, such as pattern recognition, recommendation engines, informatics, data mining, and autonomous control systems.

2.3 What is pattern recognition?

The detection of the features or data deployment that offer information about a specific system or data set is referred to as pattern recognition [50, 63]. In the professional context, a pattern may be a continuously repeating sequence of data over time that can be used to predict trends, specific configurations of image characteristics that recognize objects, frequent combinations of words and phrases for NLP, or particular groups of behavior on a network that can demonstrate an attack through virtually infinite other likelihoods [81, 89]. Pattern recognition, in essence, crosses several areas of IT, including biometric identity, security and AI, and big data analytics [76, 93]. Pattern recognition is distinct from ML, in which the pattern recognizer, unsupervised, and supervised learning methodologies are widely used during training [34, 94]. In supervised ML, the human contributor gives a representative set of configurable data to characterize the patterns [85, 98]. Unsupervised ML minimizes the use of a human element and pre-existing knowledge [35, 97]. In this approach, the algorithms are trained to discover new patterns without using existing labels simply by being familiar with a large data set. On the other hand, DL can be used to train pattern recognizers alongside machines regarding networks [10].

3 Relevant reviews

In this paper, we have presented a detailed assessment of independent ML/DL algorithms for large data pattern identification in cyber-physical systems and a discussion of the research contributions of these various approaches. Several related survey studies and journal articles based on ML/DL approaches in big data were studied in this regard. Even though we attempt to categorize articles, some of them may not correspond to one category. By the same token, Bai, et al. [11], to make pattern recognition robust and efficient, reviewed several articles accepted on explainable ML/DL. Their broad review of representative studies and current improvements in explainable ML/DL for effective and robust pattern recognition is of high quality, presenting the latest development of interpretability of DL strategies, well-organized and compacted network architectures in particular pattern recognition and new adversarial attacks, and stability preparation is investigated. Moreover, Paolanti and Frontoni [83] put forward new trends and methods of pattern recognition used in various fields, and different pattern recognition techniques have been reviewed. By putting special regard to ML, DL, and statistics, the authors investigated possible solutions for systems development. They mentioned elements like intelligent systems, devices, and end-to-end analytics. Then they examined multiple various fields of pattern recognition applications with particular attention to biology and biomedical, surveillance, social media intelligence, Direct Connect Hub (DCH), and retail.

Also, Zerdoumi, et al. [130] talked about large data, visual pattern recognition, and categorization. They discussed the potential advantages of ML algorithms for pattern recognition in huge data. They emphasized unresolved research difficulties related to the use of pattern recognition in big data. They performed a thorough literature review to demonstrate the applicability of multi-criteria decision approaches and DL algorithms to big data concerns. Moreover, Bhamare and Suryawanshi [13] offered an overview and analysis of several well-known tactics used at various levels of the pattern recognition system and research subjects' recognition and applications that are at the forefront of this intriguing difficult field. They presented pattern recognition frameworks based on several ML algorithms. On this basis, they examined 33 similar experiments from 2014 to 2017.

Smart city development is only one of several domains where common technology has significantly impacted it. As Atitallah, et al. [7] demonstrated this by reviewing several current studies. The primary goal of their research is to look into the use of IoT big data and DL analytics in the enhancement and development of smart cities. Following that, they identified IoT technology and demonstrated the computing foundation and ML/DL applications used by IoT data analytics, which includes fog, cloud, and edge computing. As a result, they investigated well-known DL architectures and their applications, disadvantages, and benefits. Furthermore, as ML and big data analytics have demanded progressive leaps and bounds in information systems and boundaries, Zhang, et al. [135] promised bibliometric research to examine the primary writers' contributions, countries, and organizations/universities in terms of citations, yield, and bibliographic coupling. As a result, they provided valuable information for potential participants and audiences regarding new research topics.

Similarly, the epidemic of chronic illnesses such as CoronaVirus Disease (COVID-19) gave healthcare facilities to populations all over the world [96, 108, 110]. With the advancement of the IoT, these wearables were able to collect context-specific data pertinent to behavioral, physical, and psychological health. By taking this issue into mind, Li, et al. [53] gave an in-depth evaluation of big data analytics in IoT healthcare by evaluating chosen relevant surveys to identify a research gap. Also, they provided cutting-edge smart health. Also, a detailed analysis of related reviews' weaknesses and strengths is shown in Table 2.

4 Methodology of research

The SLR approach was used in this section to understand better Autonomous ML/DL strategies for big data pattern recognition. The SLR is a critical examination of all research on a specific scope. This section will provide an in-depth discussion of ML approaches to pattern identification. Following that, we seek verification of the research selection technique. Subsequent subsections outline the search technique and include Research Questions (RQ) and selection criteria.

4.1 Question formulation

The primary purpose of this study is to categorize, recognize, survey, and assess certain specific existing articles in ML/DL techniques for pattern recognition applications. To achieve the discussed purpose, the aspects and characteristics of the techniques can be thoroughly researched using an SLR. Understanding the main concerns and challenges encountered thus far is the next goal of SLR in this phase. We proposed several RQs that had been pre-specified:

-

RQ 1: How can we identify the paper and select the ML/DL techniques in pattern recognition?

This is covered in Section 4.

-

RQ 2 What are the most important potential solutions and unanswered questions in this field?

Section 7 will present the outstanding issues.

-

RQ 3: How can the ML/DL methods in pattern recognition be categorized in big data? What are some of their instances?

The answer to this question can be found in Section 5.

-

RQ 4: What methods do the researchers use to conduct their investigation?

4.2 The paper selection procedure

The following four stages design the paper selection and search procedure for this research. This procedure is depicted in Fig. 1. Table 3 displays the terms and keywords for searching the articles at the first level. The articles in this set are the outcome of a typical electronic database query. Electronic databases used include Springer Link, ACM, Scopus, Elsevier, IEEE Explore, Emerald Insight, Taylor and Francis, Peerj, Dblp, ProQuest, and DOAJ. Books, chapters, journals, technical studies, conference papers, and special issues are also established. Stage 1 has 612 items allocated to it. Figure 2 displays the distribution of articles by publication.

Stage 2 consists of two processes for determining the total number of articles to be researched. Figure 3 depicts the publisher's distribution of articles at this point. The papers are initially judged based on the criteria shown in Fig. 4. 305 articles are still present. In stage 2, the survey papers are extracted.; out of the 305 papers that remained in the previous stage, 35 (11.47%) were survey papers. There are presently 188 papers available. In step 3, the titles and abstracts of the articles were examined. Finally, 95 publications that met the stringent conditions were chosen to analyze and investigate the other papers. The distribution of the selected papers by their publishers is shown in Fig. 5. There were 60 manuscripts left for the final round, and Fig. 6 displays the journals that published the studies at that point.

5 Techniques for autonomous ML for big data pattern recognition

This section investigates autonomous ML/DL algorithms for large data pattern detection in a variety of applications. We are going to touch on distinct articles in the following paragraphs. 10 categories of ML/DL techniques, including CNNs, RNNs, GANs, AEs, ELs, RLs, RFs, MLPs, LSTMs, and hybrid emphasis studied articles, are appropriately organized into them. Figure 7 depicts the proposed assortment of ML/DL Techniques used in pattern recognition.

5.1 CNN mechanisms for pattern recognition

CNN is one of the most important ML/DL techniques because it can take an input image, assign importance (learnable biases and weights) to distinct objects/facets in the image, and compare them. In comparison, CNN requires less pre-processing than other techniques. CNN can learn these features/filters the same way trained filters in primary mechanisms are hand-engineered. CNN's architecture is inspired by the connection between the pattern of the human brain's neurons and the structure of the visual cortex. Individual neurons respond to spurs in a restricted visual field area known as the receptive field. Such fields congregate to overlap adequately to cover the entire visual region. In this regard, Awan, et al. [8] used a Deep Transfer Learning (DTL) method known as the Apache Spark system, which is a large data framework that uses a 100%-accuracy CNN. ResNet50, VGG19—on COVID-19 chest X-ray images—and Inception V3 are three architectures used to quickly identify and isolate positive COVID-19 patients [111, 112]. However, COVID-19/pneumonia/normal detection accuracy was 98.55% for the ResNet50 and VGG19 models and 97% for the inceptionV3 design. The authors investigated weighted recall, weighted precision, and accuracy as DTL operation metrics. The results of ResNet50, VGG19, and InceptionV3 were excellent, and these three models for binary-class assortment provided 100% detection accuracy. While categorizing the three classes, VGG19, ResNet50, and Inception V3 achieved 98.55%, 98.55%, and 97% accuracy, respectively.

Furthermore, one of the most important financial markets is the stock market, which generates a lot of money, but the most difficult challenge that has not been solved is deciding which stocks to buy and when to buy or sell shares. With this issue in mind, Sohangir, et al. [101] provided the idea of using DL systems to construct the sentiment analysis feature for StockTwits. CNN, doc2vec, and LSTM were among the models used to analyze stock market ideas submitted on StockTwits. The authors used n-grams, bi-grams, unigrams, and the CNN method to extract document sentiment efficiently. Then, they used logistic regression based on a set of terms. They concluded that the CNN method effectively extracts stock emotion from their utterances.

Also, Hossain and Muhammad [39] proposed an emotion recognition system based on big emotional data and a DL method big data comprises video and voice, in which a speech signal is processed in the beginning to obtain a Mel-spectrogram in the frequency domain, and can be considered an image. As a result, a CNN employed the Mel-spectrogram. The authors employed 2D and 3D CNN for the voice and video signals, respectively, and their results showed that the ELM-based fusion performed better than the categorizer's composition because ELMs add a significant degree of non-linearity to the feature fusion.

Also, Ni [79] evaluated CNN to generate many visual attributes, then lowered the number of calculations, followed by a dimension reduction by the pooling layer. The increased ReLU performance, ReLU employed, and the effect of less performance of the model were investigated for the network structure based on LeNet-5 to be more helpful for face image processing. The authors used CelebA as a training set for the model and LEW as a testing set for performance testing. As a result, the produced LeNet-5 model with A-softmax loss had a shorter training time when using A-software and softmax loss between LeNet-5, implying a faster convergence speed in this model. Following that, A-softmax loss was employed throughout an LFW testing set, and as a result, the recognition accuracy of the produced LeNet-5 was significantly greater than that of LeNet-5With increased size, the recognition rate of the two models increased, and the difference between the two models widened.

By the same token, Xu, et al. [122] created an emotion-sensitive learning framework that analyzes the cognitive state and approximates the learners' focus and mood based on head posture and facial expression in a non-invasive manner. As a result, the learners' emotions are assessed based on their facial expressions. They concluded that their suggested method can approximate learner attention and sentiment with 88.6% and 79.5% accuracy rates, demonstrating the system's strength for evaluating sentiment-sensitive learning cognitive circumstances.

Additionally, Li, et al. [55] presented a deep CNN model to reach the hierarchical properties of huge data by extending the CNN from vector space to tensor space using the tensor representation paradigm. To avoid overfitting and improve training efficiency, a tensor convolutional procedure is provided to fully use the local properties and topologies present in the huge data. Furthermore, they applied a high-order back promotion algorithm for teaching the deep convolutional computational model's parameters in the high-order space. Finally, tests on three datasets, SNAE2, CUAVE, and STL-10, demonstrated their model's capacity to learn big data and traditional data features.

Finally, Sevik, et al. [95] created a deep network capable of recognizing both letters and fonts in Turkish. A pre-trained network has been taught using around 13 thousand images to accomplish this goal. The letter and font identification training accuracies are 100% and 73.44%, respectively. Because the type of faces is similar, they used a possibility calculation after determining the network output to improve the font recognition percentage. Although the first test image font's accuracy is 14/26% because the probability is greater than 0.5%, they recognized it as Arial, and the function was slightly improved as a result. Following then, 12 images containing letters were addressed to the network test. As a result, letter identification accuracy with this network was roughly 100%, but font accuracy recognition was low. Table 4 discusses the CNN methods used in pattern recognition and their properties.

5.2 RNN mechanisms for pattern recognition

An RNN is a type of ANN in which node connections form either an undirected or directed graph based on a transitory sequence. As a result, it exhibits a transient dynamic style. RNNs, which are derived from feedforward neural networks, can process varying length sequences of inputs by utilizing their interior state (memory). As a result, they can be employed for tasks such as unsegmented, connected speech recognition, or handwriting recognition. "RNN" refers to a network's class with an infinite impulse response, whereas "CNN" refers to a class with a finite impulse response. Both classes of networks show a transient dynamic manner. In this regard, Jun, et al. [47] presented a mechanism for character extraction based on RNN AEs. The RNN AEs range the initial skeleton information more discriminatively and decrease unrelated data, which is especially significant with the LSTM AE, which performed better than the Generic Encapsulation (GRE AE). As a result, the characteristics shape the recognition operation of RNN DMs (Direct Messages) and other DMs. Through the DMs, the GRE DM outperforms the GRE AE, and the GRE DM outperforms the LSTM DM in terms of accuracy. The RNN AE-DM hybrid structures that are nourished with the characteristics perform better than the separate RNN SMs nourished with the initial skeleton information. They do so with less training time and fewer learning elements. Furthermore, the RNN AE-two-pace DM's training is more efficient than the End-to-End model's single training with a similar input stream.

Chancán and Milford [15] suggested an RNN + CNN model that can learn meaningful transient connections from a single image sequence in a large drawing dataset.; when standard sequence-based techniques surpass in terms of runtime, computing requirements, and accuracy. The authors used a minor two-layer CNN to examine DeepSeqSLAM's end-to-end training method, but their basic results showed that the CNN element does not generalize well to dramatic visual differences, which was estimated given that these models require a large amount of data for efficient generalizing and training. They tested their method on two large benchmark datasets: Oxford RobotCar and Nordland, which logged over 10 km and 728 km tracks, respectively, over a year with varying seasons, lighting conditions, and weather. On Nordland, they compared their model with two sequence-based mechanisms along the entire road under seasonal fluctuations, using a sequence length of 2, and showed that their model could attain above 2% AUC for SEQSLAM and 72% AUC in compared with 27% AUC for Delta Descriptors.; when the arrangement time is reduced from roughly 1 h to 1 min.

As well, Gao, et al. [30] proposed an effective RNN transducer-based Chinese Sign Language Recognition (CSLR) method. They used RNN-Transducer in CSLR for the first time. To begin, they created a multi-level visual hierarchy transcription network using phrase-level BiLSTM, gloss-level BiLSTM, and frame-level BiLSTM to examine multi-scale visual semantic properties. Following that, a lexical anticipating network was used to model the contextual data from sentence labels. Finally, a collaborative network seeks to learn language representations as well as video properties. It was then fed into an RNN-Transducer to optimize adjustment learning between sentence-level labels and sign language video. Extensive examinations of the CSL dataset confirmed that the provided H2SNet can achieve higher authenticity and faster velocity.

Besides, Hasan and Mustafa [36] suggested an effective mechanism for robust gait recognition using an RNN that is related to Gated Recurrent Units (GRU) architecture and is exceptionally powerful in capturing the transient dynamics of the human body gesture sequence and executing recognition. They created a low-dimensional gait characteristic descriptor derived from 2D that mixes human gesture data, is unaffected by diverse covariate factors, and is efficient in describing the dynamics of various gait paradigms. According to their findings, the experiment using the CASIA A and CASIA B gait datasets demonstrated that the given methodology surpasses the current approaches.

As offline Persian handwriting recognition is an issue task due to the Persian scripts' cursive essence and sameness through the Persian alphabet letters, Safarzadeh and Jafarzadeh [91] proposed a Persian handwritten word identifier based on a continuous labeling mechanism with RNN. A Connectionist Temporal Classification (CTC) loss operation is also exploited to remove the segmentation pace required in convolutional systems. Following that, the layers are used to exploit the sequence of features from a word picture. Overall, the RNN layer with CTC performance was used for labeling the input succession. As a result, they demonstrated that this composition is an appropriate robust recognizer for the Persian language. Consequently, they tested the approach on IFN/ENIT, Arabic, and Persian datasets.

Furthermore, Zhao and Jin [138] enhanced a "doubly deep" approach in temporal and spatial layers of recurrent and convolutional networks for performance recognition. To begin, they presented a developed p-non-local performance as a common efficient element for capturing long-distance relationships. Second, they proposed Fusion KeyLess Attention in the class forecast level merging with the backward and forward bidirectional LSTM to learn the sequential essence of the information more effectively and elegantly, which employs a multi-epoch model fusion based on the confusion matrix. The authors tested the proposed model on two heterogeneous datasets, Hollywoods and HMDB51, which resulted in the model outperforming standard models and thus just using Rotating Graphics Base (RGB) features for performance action recognition based on RNN. Table 5 discusses the RNN methods used in pattern recognition and their properties.

5.3 GAN mechanisms for pattern recognition

A GAN is a type of ML/DL framework that learns to produce new information with the same statistics as the training set in a given set. A GAN educated on images, for example, can produce new images that appear to human observers to be at least allegedly genuine, with multiple realistic qualities. Despite being primarily proposed as a type of generative model for unsupervised learning. GANs have also been shown to aid reinforcement learning, semi-supervised learning, and entirely supervised learning. The main principle behind a GAN is "indirect" training among the separator, a further neural network that can determine how much input is common-sense and constantly updated. This indicates that the producer is not educated to reduce the distance to a certain image but rather to deceive the separator. This allows the model to learn an unsupervised behavior. In this regard, Luo, et al. [66] presented a Face Augmentation GAN (FA-GAN) to reduce the impact of uneven property distributions. The authors used a hierarchical disentanglement module to decouple these attributes from the identity representation. Graph Convolutional Networks (GCNs) are also employed for geometric data recovery by exploring the interrelationships between local zones to provide identity protection in face information augmentation. Broad examinations of face reconstruction, identification, and manipulation revealed the efficacy of their proposed approach.

Additionally, Gammulle, et al. [28] addressed the problem of fine-grained action fragmentation in sequences in which various performances are proposed in an unsegmented video stream. The authors introduced a semi-supervised frequent GAN model for fine-grained human activity segmentation. A Gated Context Extractor (GCE) module, a combination of gated attention units, seizes transient context data and leads it among the generator model for increased functionality segmentation. GAN is created to enable the model to satisfy the action taxonomy accompanying the unsupervised, normal GAN learning process due to learning features in a semi-supervised behavior. Finally, the result showed that it could outperform the current state-of-the-art on three major datasets: MERL shopping and Tech egocentric performances dataset and 50 salads.

Also, Fang, et al. [27] presented a face-aging approach called Triple-GAN for organizing age-processed faces. Triple-GAN has adjusted increased adversarial loss to emphasize the synthesized faces’ realism and learn efficient mapping along age margins. Rather than resolving ages as independent clusters, triple translation loss has been coupled to an additional model to the intricate solidarity of multiple age ranges and simulates more realistic age enhancement, another enhancing the generator's predominance. Multiple qualitative and quantitative examinations performed on CACD, MORPH, AND CALFW showed the efficiency of their proposed mechanism.

Chen, et al. [21] presented the NM-GAN anomaly distinction model, which incorporates the discrimination network D in the GAN-like architecture as well as the reconstructing network R. Their work provided a significant contribution by regulating the generalization capability of detection capabilities of networks D and R by embedding the noise map into an end-to-end adversarial learning technique at the same time. The authors provided the model to improve the discriminator's detection capability and the generative model's generalization capability in an integrated architecture. According to the results of the studies, their model outperforms most competing models in terms of stability and accuracy, demonstrating that the offered noise-modulated adversarial learning is efficient and trustworthy.

Finally, Men, et al. [72] developed an attribute-Decomposed GAN, a new generative model for controllable person image combination capable of producing realistic person images with desired human attributes derived from various source inputs. The authors fundamentally integrated human traits as distinct codes in the hidden space and subsequently obtained flexible and sequential management of attributes through combination and interpolation performances in vivid style representations. They specifically presented a design that incorporates two encoding routes connected by style block connections for the aim of principal hard mapping deconstruction into multiple accessible subtasks. They then used an off-the-shelf human decomposer to exploit component layouts and feed them into a shared global texture encoder for decomposed hidden codes. As a result, they concluded that their proposed approach is more effective than the existing ones. Table 6 summarizes the GAN approaches and their properties in pattern recognition.

5.4 AE mechanisms for pattern recognition

An AE is a type of ANN that is used to learn effective coding of unlabeled data. An attempt to recreate the input from the encoding authenticates and purifies the encoding. The AE learns to serve a data set, typically for dimensionality reduction, by training the network to ignore irrelevant information. The variant present addresses the need to force known representations to assume useful properties. AEs are used for a variety of tasks, including feature detection, anomaly detection, facial recognition, and determining the meaning of words. Furthermore, AEs are generative models: they are capable of producing new information that appears to be input information by accident. By this token, Simpson, et al. [100] presented a reduced sequenced modeling mechanism based on the availability of output and input data for developing a representation that can mimic the reaction of nonlinear infrastructure systems under "unseen" compelling time histories. They demonstrated the modeling approach and its efficacy on various nonlinear systems of variable size and complexity.

Also, Kim, et al. [49] introduced a parallel end-to-end Text-To-Speech (TTS) system that generates more natural-sounding audio than the previous two-step approaches. Their technique modified variable assumptions raised using normalizing streams and an adversarial training procedure, which developed generative modeling's stunning strength. The authors also proposed a stochastic duration predictor to unify speech with different rhythms from input text. Their model asserted the natural one-to-many link in which text input can be spoken in numerous directions with diverse rhythms and pitches using the unpredictable modeling over hidden variables, the stochastic duration predictor. A subjective human evaluation of the LJ speech, a unique speaker dataset, showed that their method surpassed the best publicly available TTS systems and achieved a Metal Oxide Semiconductor (MOS) comparable to trustworthiness.

Furthermore, Utkin, et al. [107] developed a mechanism for modeling an anticipating DL model that combines the variational AE and the conventional AE. The variational AE provided a series of vectors based on the previously described picture embedding at the testing or describing level. Following that, the conventional AE's directed decoder section rebuilt a succession of images that configured a heatmap explaining the original explained image. Finally, they tested their model on two well-known datasets, CIFAR10 and MNIST.

Additionally, et al. [84] developed a generative AE model with dual contradistinctive losses to produce a generative AE that simultaneously acts on both reconstruction and sampling. The suggested model, known as the dual contradistinctive generative AE (DC-VAE), combined an instance-stage discriminative loss with a set-level adversarial loss, both of which are contradistinctive. They analyzed extensive experimental conclusions by DC-VAE over various resolutions consisting of 32 × 32, 64 × 64, 128 × 128, and 512 × 512 are recorded. The two contradistinctive losses in VAE function concord in DC-VAE resulted in specific quantitative and qualitative operations gained across the baseline VAEs missing architectural variances.

Moreover, Bhamare and Suryawanshi [13] proposed an end-to-end algorithm, VGELDA, that complemented diverse deduction and graph AEs for IncRNA-disease contributions forecasting. VGAELDA included two types of graph AEs. The association of both the VGAE for substitute training and graph representation learning by various assumptions intensified the ability of VGAEDA to grasp effective low-dimensional representations from high-dimensional characteristics and therefore allowed the accuracy and robustness for forecasting IncRNA-disease contributions. Their analyses highlighted the solvation of the designed co-training framework of IncRNA for VGAELDA, a geometric matrix issue for grasping effective low-dimensional representations by a DL method.

Besides, Atitallah, et al. [7] developed a 5-layer AE-based model for detecting unusual network traffic. The primary architecture and parts of the suggested model were developed as a result of a thorough investigation into the influence of an AE model's major function indicators and recognition accuracy. According to their results, their model achieved the maximum accuracy using the proposed two-sigma outlier availability method and Metropolitan Area Exchange (MAE) as the rebuild loss criterion. The authors used MAE based on rebuild loss performance to achieve the maximum accuracy for the AE model used in network anomaly recognition. In comparison to alternative model architectures, the suggested model with the optimal number of neurons exploited at each latent space layer delivers the best function. Finally, they tested the model using the widely used NSL-KDD dataset. Compared to similar models, the performance attained 90.61% accuracy, 98.43% recall, 86.83% precision, and 92.26% F1 score.

On the other hand, Zhang, et al. [135] introduced an attack architecture, Anti-Intrusion Detection AE (AIDAE), to create features to disable the IDE. An encoder in the framework sends parts into a hidden space, while many decoders reconstruct the sequence and distinct properties accordingly. The authors tested the framework using datasets from UNSW-NB15, NSL-KDD, and CICIDS2017, which resulted in the system degrading the detection function of existing Intrusion Detection Systems (IDSs) by producing features. Table 7 discusses the AE methods used in pattern recognition and their properties.

5.5 EL mechanisms for pattern recognition

EL mechanisms in ML/ DL and statistics use many learning algorithms to provide higher predictive functions than each component learning algorithm solidarity. In contrast to a statistical ensemble in statistical mechanics, an ML ensemble, which is often unlimited, includes just a limited specific sequence of alternative models but normally seeks a more flexible structure to exist amongst those substitutes. With this in mind, Abbasi, et al. [1] presented an EL called ElStream uses seven various artificial and real datasets for assortment. Various ensemble and ML algorithms based on majority voting are used. The ELStream technique employed elegant ML algorithms that are evaluated using f-scores and accuracy criteria. The baseline approach achieved the highest accuracy of 92.35%, but the ElStream mechanism achieved the highest accuracy of 99.99%, displaying a skilled utility of 7.64%. According to their findings, the proposed Elstream method can identify idea drifts and categorize data more accurately than earlier research.

By this token, Zhang, et al. [134] suggested an EL model that directly forecasted Vickers hardness, which consists of anomalous load-dependent hardness, with quantitative accuracy. Their approach was confirmed by developing a unique hold-out test set of hard materials and analyzing eight metal disilicides. Both provided excellent assurance in achieving hardness at all loads for both materials. The model used to anticipate the hardness of 66,440 is part of Pearson's crystal dataset, which contains probable hard characteristics in just 68 previously unexplored materials. The proposed approach of direction finding is set to update the search for innovative hard material by leveraging ML's effectiveness, transferability, and scalability.

Additionally, Lee, et al. [52] proposed a unified ensemble technique called SUNRISE, which is compatible with different off-policy RL algorithms. Two important components that have been integrated are (a) an interference technique that selected functions for effective inspection by using the highest upper-confidence limits and (b) Weighted Bellman backups relied on ambiguity approximates from a Q-ensemble to re-weight marked q-values. The authors implemented the method among agents using Bootstrap with random initialization to show that these various ideas are highly orthogonal and can be beneficially integrated, as well as the subsequent development of the performance of existing off-policy RL algorithms, such as Rainbow DQN and a Soft Actor-Critic, for both separate and continuous control tasks on both high-scale and low-scale ecosystems.

Onward, Mohammed, et al. [73] contributed to the critical improvement of an entirely digital COVID-19 test [109] using ML mechanisms to analyze cough recordings. They developed a way for creating crowdsourced cough sound examples by breaking/insulating the cough sound into non-overlapping coughs and utilizing six different representations from each cough sound. It was assumed that there was unnoticeable data loss or frequency deformation. They did not attain more than 90% accuracy due to a large degree of overlap among the class of characteristics. However, this unbiased selection criterion ensures that the predictive model is as independent kinds of the pattern and categorizer as possible.

On the other hand, Khairy, et al. [48] presented a voting prepositioning and boosting ensemble model for banknote recognition. A mixture of ten algorithms and nine different pairings were sampled, yielding an exact accuracy rate. Experiments on Swiss franc banknote and banknote authentication datasets showed that ensemble algorithmic models could create accurate identification of exclusive methods. With the banknote authentication dataset, voting and AdaBoost served for a maximum of 100% and 99.90%, respectively, while the Swiss franc dataset served for a maximum of 99.50 percent. As a result, testing and analyzing the offered models confirmed their adequacy and applicability for detecting counterfeit banknotes.

Zhang, et al. [136] advocated employing ML technologies to solve the PPH predictive detection problem. Two principal contributions were (1) the well-organized EL approaches and (2) the amassing of a big clinical dataset. Their DIC and PPH datasets each have 212 and 3842 records. The trained prediction detection model produced accurate findings. As a result, the accuracy of real PPH detection would increase to 96.7%; The overall accuracy of anticipating Disseminated Intravascular Coagulation (DIC) can surpass 90%. Table 8 displays the EL methods used in pattern recognition and their properties.

5.6 RL mechanisms for pattern recognition

RL is a branch of ML concerned with how intelligent agents should be enforced in an ecosystem to increase the concept of crowded awards. RL is one of the three main ML patterns found in supervised and unsupervised learning. RL differs from supervised learning because it does not require labeled output/input pairs to be presented, and it does not require sub-optimal performance to be adjusted. Because dynamic programming approaches are utilized in the context of RL algorithms, the environment typically begins in the shape of a Markov decision process. Automatic surgical gesture recognition, such as competence appraisal and conducting sophisticated surgical inspection tasks, is a fundamental advancement in robot-assisted surgery. In this regard, Gao, et al. [29] suggested a framework for simultaneous surgical gesture assortment and segmentation based on RL and tree search. An agent was instructed whose direct actions were appropriately reviewed via tree search to collect and segment the surgical video in a human-like behavior. The proposed tree search algorithm unified the outputs from two designed value networks and neural networks policy. Overall, the proposed method consistently outperformed existing strategies on the suturing task of the JIGSAWS dataset in terms of edit, accuracy, and F1 score. Finally, they discussed the usage of tree search in the RL framework for robotic surgical applications.

Benhamou and Saltiel [85] also handled the difficult task of modifying and determining portfolio commitment to the crisis ecology. The authors exploited contextual data with the help of a second deep RL sub-network. The model considered portfolio approaches standard disparity, such as contextual data, and portfolio methods over multiple rolling periods, such as the Citigroup economic surprise index, risk aversion, and bond-equity correlation. The additional contextual data made the dynamic property manager agent's learning more resilient to crises. Furthermore, using the standard deviation of portfolio strategies generated a significant indication for future crises. Their model outperformed typical financial models in terms of functionality.

Besides, Wang and Deng [114] provided an adoptive border to learn balanced operation for many races that rely on large border losses. The proposed RL is based on a Race Balanced Network (RL-RBN), which formulated the procedure of discovering the optimal borders for non-caucasian as a Markov decision procedure and used deep Q-learning to learn rules for an agent to choose the proper border by estimating the Q-value operation. Agents reduced the skewness of attributes distributed between races. They also created two ethnicity-aware education databases. The datasets BUPT-Balancedfaced and BUPT-Global face were used to analyze racial prejudice from both algorithm facets and data. Several large-scale analyses of the RFW database showed that RL-RBN successfully lowers racial prejudice and learns a fairer operation.

In addition, Wang, et al. [115] modeled an online key decision process in dynamic video segmentation as a deep RL issue and learned an impressive scheduling rule from special data about the history and the procedure of maximizing global return. They also looked into dynamic video segmentation on face videos, which has never been done previously. They demonstrated that the operation of their reinforcement key scheduler surpasses that of alternative baselines in terms of running velocity and efficient key selections by analyzing the 300VW dataset. According to their findings, their provided method was generalizable to various modes, and they introduced an online key-frame decision in dynamic video segmentation for the first time.

Further, Ma, et al. [69] proposed a DL solution for robust action identification with WiFi that exploits an RL agent to recognize the original neural architecture for the identification algorithm. They evaluated the provided design using real-world tracks of 5 activities carried out by seven people. The introduced concept achieved 97% average identification accuracy for unidentified receiver directions/places and unseen people. When the neural architecture was manually examined, the RL agent exhibited a 15% improvement in accuracy. In collaboration with the RL agent, the state machine improved the additional 20% accuracy by learning transient dependencies from previous assortment outcomes. Two public datasets assess the presented design and reach 80% and 83respectively.

Moreover, Gowda, et al. [32] proposed a centroid-based model that clustered semantic and visual models, considered full training instances at once, and generalized precision to samples from previously undiscovered classes. They optimized the clustering using RL, which is serious for their model to work. They discovered that it consistently outperformed the proposed model in the most standard datasets, including HMDB51, Olympic Sports, and UCF101, by calling the presented method CLUSTER, which was both in generalized zero-shot learning and zero-shot assessment. They also outperformed their model in the image-board competition. Table 9 lists the RL methods and their attributes utilized in this topic.

5.7 RF mechanisms for pattern recognition

RF decision is an aggregate learning technique for assortment, regression, and other tasks that involves constructing many decision trees during training. For assorting tasks, the RF yield is the class picked by the majority of trees. The average forecast or mean of exclusive trees is returned for regression tasks. RFs are ideal for decision trees since they have a habit of overfitting to the training series. RFs outperform decision trees on average, but their accuracy is lower than that of gradient-increased trees. In this regard, Awan, et al. [9] proposed a solution to a security problem that resulted in a secure platform for social media users. The solution used facial recognition and Spark ML lib to train 70% of the profile data on ML and then investigated the remaining 30% of data to investigate prediction and accuracy. Their prediction model was based on words such as reading datasets from CSV characteristic engineering training data using RF, displaying learning curves, plotting confusion matrix, and plotting ROC cure. They achieved 94% accuracy. The limitation of this plan consisted of multiple false positive outcomes that can alter the result operation by up to 6%.

Also, Moussa, et al. [77] applied the fractional coefficients method for facial recognition scope. In addition, they applied RF and SVM in face recognition over the Euclidean distance. They then compared and examined the functions of RF and SVM to categorize the characteristic vectors, and the results of the assortment issued from various characteristics created the model's outstanding benefit, followed by selecting the Discrete Cosine Transform (DCT) coefficients. The authors demonstrated efficient results of applying the RF in terms of accuracy when compared to SVM and Euclidean distance while the face recognition algorithm is investigated. As a result, despite SVM, unique decision trees in the RF instructive performances were automatically used more frequently in the training phase, resulting in separate predictions blended to generate an accurate RF.

Besides, Marins, et al. [71] established an approach for identifying and categorizing problematic events across the operational performance of O&G generation lines and wells. They considered seven types of faults with normal performance status. The enhanced system used a categorizer based on the RF algorithm and a Bayesian non-convex optimization technique to optimize the system hyperparameters. Three tests were included to evaluate the system's capability and robustness in diverse fault recognition/taxonomy settings: tests 1and 2 regarded the binary normal × faulty situations, that the flaws were standing altogether and exclusively, respectively; test 3 draws the multiclass scenario, that the system operated simultaneous fault recognition and assortment and is the best for functional utilization. Besides the high accuracy, the system also reached a short recognition latency, detecting the fault before finishing 88% of its temporal period, so it generated more time for the conductor to decrease associated destructions.

Moreover, Jiao, et al. [46] focused on a computational TTCA recognizer called iTTCA-RF, utilizing the hybrid characteristics of Global Positioning System Data (GPSD), PAAC, and GAAPC. Using the MRMD-successful characteristic selection approach and IFS theory, the top 263 relevant characteristics were chosen to construct the best operation predictor. In this manner, the imbalance problem was addressed by utilizing the SMOTE-Tomek resampling process. ITTCA-RF reaches the best CV appraising BACC value of 83.71% which is 4.9% higher than the related valuing of the prior stated best predictor. The independent experiment BACC point was 73.14% development of 2.4%, and joint Sp and Matthews Correlation Coefficient (MCC) values enhanced by 4.0% and 4.6% accuracy respectively as well.

Additionally, Hafeez, et al. [33] developed a model to identify the action; each action derived based on the character derived from a method of directional angle, time-domain, and depth motion map, for the Huthe man Action Recognition (HAR) system. They used multiple RF algorithms as a categorizer with a benchmark UTD-MHAD dataset and achieved an accuracy of 90%. As a result, they demonstrated that the identification handled by their method is much improved in terms of imprecision and efficiency.

Besides, Langroodi, et al. [51] provided a fractional RF algorithm to develop an accurate activity detection model. They tested the generalizability of the suggested technique by applying it to three case studies in which several scenarios were constructed. Consequently, they reached these results: (1) The current Frame Relay Forum (FRF) can give equivalent operation to contemporary DL-based activity detection systems with only a fraction of the training dataset used in earlier techniques, with an accuracy of up to 94% for articulated equipment and 99% for rigid body equipment. (2) Compared to other baseline superficial learners, FRF performs better in accuracy, recall, and precision.; (3) With an accuracy of 86.2%, the FRF approach can forecast activities of an actual piece of equipment in varied shapes/sizes. In a repeated scenario of testing the technique on scaled RC equipment, FRF achieved an accuracy of 72.9%, which is equivalent to the results reported in existing machine-learning-based techniques.

Moreover, Akinyelu and Adewumi [4] developed a content-based phishing recognition system that bridged a recent gap discovered in their research. The authors employed and documented the use of RF ML in categorizing phishing strikes. The primary goal is to upgrade created phishing email categorizers with greater forecasting accuracy and fewer features. Afterward, they examined the proposed method on a dataset including 2000 ham and phishing emails, a series of eminent phishing email features extracted and exploited by the ML algorithm with a consequence categorizing accuracy of 99.7% with a trivial false positive rate of about 0.06%. Table 10 deliberates the RNN approaches used in pattern recognition and their properties.

5.8 MLP learning mechanisms for pattern recognition

A class of feedforward ANN called multilayer perceptron, which is utilized vaguely, sometimes means any feedforward ANN, usually severely points to networks combined of several layers of perceptron, terminology, and see. The multilayer perceptron is commonly referred to as a "vanilla" neural network, especially when they comprise a single hidden layer. An MLP includes as many as three-node layers: a concealed layer, an input layer, and an output layer. Each node is a neuron with nonlinear activation performance except for the input nodes. For training, MLP employs a supervised learning method known as backpropagation. MLP is distinguished from a linear perceptron by its several layers and non-linear activation. It is capable of distinguishing information that is not linearly divisible. By the same token, de Arruda, et al. [6] focused on improving a systematic method of recognition-among concepts from feature selection, pattern recognition, and network science-the features that are especially particular to prose and poetry. The authors drew on the Gutenberg database for poetry and prose. They summarized the texts in terms of total recognition of the phones and rhymes. Their contour was characterized in terms of some supplied criteria, which included the coefficient of the diversity of time intervals and the mean, which is then used to choose amongst data property selectors. They expressed the connection of patterns as a complex network of instances.

Also, Chen, et al. [18] introduced an LPR-MLP hybrid pattern that utilizes ReliefF, PCA, and Local Binary Pattern (LBP) due to process image information and meteorological mechanics information, and thus exploited MLP to forecast its health stage, then solved the issue of forecasting the health state of transmitting lines below multimode, high-dimension, heterogeneous, nonlinear, information. According to their findings, the LPR-MLP pattern outperformed the other classic patterns in terms of forecasting accuracy and function. Their provided model generated a fresh notion and effective transmission line health forecasting methodologies, but the rough character of the feature identified from data photos is a disadvantage.

Also, Zhang, et al. [131] presented a new MorphMLP architecture that focused on collecting local information at low-stage layers while gradually shifting to focus on long-term modeling at high-stage layers. They specifically designed a fully-connected-Like layer, understood as MorphFC, of two morphable filters that enhanced their receptive field progressively over the width and height dimensions. They also offered to modify the MorphFC layer in the video spectrum freely. They created an MLP-like backbone for learning video outlines for the first time. Finally, they looked at large-scale tests on picture assortments, semantic fragmentation, and video assortment.

Similarly, Chen, et al. [20] presented a typical MLP-like architecture, CycleMLP, that was an adaptable backbone for dense forecasting and visual recognition. Compared to recent MLP architectures such as Gmlp, ResMLP, and MLP-Mixer, whose architectures complement picture size and are hence unachievable in object detection and fragmentation, CycleMLP offers two advantages. (1) it achieved linear computing complexity to image size by employing local windows. (2) It could handle a variety of image sizes. Mutually, prior MLPs had O(N2) computations owing to fully spatial relations. The authors constructed a family of patterns that exceed present MLPs and even state-of-the-art Transformer-based patterns. CycleMLP-Tiny outperformed Swin-Tiny by 1.3% mIoU on ADE20K dataset with lower FLOPs. Additionally, CycleMLP displayed great zero-shot robustness on the ImageNet-C dataset as well.

Moreover, Hou, et al. [41] proposed an MLP-like network architecture for visual detection called Vision Permutator. They showed that individually encoding the width and height data can greatly develop the pattern action compared to current MLP-like patterns that consider the two spatial sizes as one. Despite the significant advancement over concurrently famous MLP-like patterns, a significant downside of the given Permutator is the scaling issue in spatial sizes, which is prevalent in other MLP-like patterns. Because the characteristics' forms in the fully-connected layer are designed, processing input photos with arbitrary forms is impossible, making MLP-like patterns difficult to exploit in downstream tasks with different-sized input images. Table 11 discusses the MLP methods used in pattern recognition and their properties.

5.9 LSTM mechanisms for pattern recognition

LSTM is a type of artificial RNN architecture used in DL. Unlike traditional feedforward neural networks, LSTM contains a feedback loop that can process individual data points and entire data sequences. A standard LSTM module consists of an input gate, a cell, a forget gate, and an output gate. The cell refers to values at arbitrary time intervals, and the three gates control the current of data in and out of the cell. LSTM networks are designed to categorize, analyze, and forecast data based on time-series information, which is a challenge when training typical RNNs. Associated invulnerability to gap length is a benefit of LSTM over RNNs, hidden Markov models, and other continuous learning strategies in several applications. In this regard, Xia, et al. [120] proposed a DNN that composed convolutional layers with LSTM for human activity detection. The CNN weight attributes concentrated mostly on the fully-connected layer. In response to this characteristic, a GAP layer was used to change the fully-connected layer beneath the convolutional layer, significantly reducing model features while maintaining a high recognition rate. In addition, after the GAP layer, a BN layer was added to enhance the pattern's convergence and apparent effect. Ultimately, the F1 score achieved 92.63%, 95.78%, and 95.85% accuracy on the OPPORTUNITY, UCI-HAR, and WISDM datasets. Also, they investigated the effect of some hyper-parameters on model actions like the filter amount, the kind of optimizers, and batch size.

Also, Ullah, et al. [106] suggested an effective framework for real-world anomaly recognition in supervision ecosystems with high accuracy on present anomaly recognition datasets. Their framework's generic pipeline used the LSTM model from continuous frames, which were traced by a unique multi-layer BD-LSTM for ordinary and anomalous class classification. The examined results showed an enhanced accuracy of 3.14% for the UCF-Crime dataset and 8.09% for the UCFCrime2Local dataset. Recently, the accuracy of their framework is insufficient for low difference and requires development, especially as the UCF-Crime dataset consists of very challenging classifications.

Besides, Rao, et al. [88] presented a generic unsupervised technique called AS-CAL to learn efficient performance agents from unlabeled skeleton information for performance recognition. They presented a method for learning essential performance patterns by comparing the similarity of increased skeleton sequences altered by various novel increase methodologies, allowing their technology to realize the fixed pattern and discriminative performance features from unlabeled skeleton sequences. They also proposed using a queue to build a more stable, memory-effective dictionary with variable management of preceding encoded keys to simplify contrastive learning. Computer-Aided Engineering (CAE) was established as the ultimate function representation for performing action detection. Their technique beats existing hand-crafted and unsupervised learning mechanisms, and its function is comparable to or even better than some supervised learning mechanisms.

Moreover, Huang, et al. [42] presented an LSTM technique for the recognition of 3D objects in a sequence of LiDAR point cloud observations. Their method conceals status variables linked to 3D points from previous object recognitions and relies on memory, which varies depending on vehicle ego-motion at each time step. A sparse 3D convolution network that co-voxelized the input point cloud and concealed state at each frame and memory is the foundation of their LSTM. Tests on Waymo Open Dataset displayed that their algorithm reached the outperformed results and acted with a single initial baseline of 7.5%, a multi-frame object baseline of 6.8%, and a multi-frame object recognition baseline of 1.2% of accuracy.

In addition, Liu, et al. [64] proposed a new spatiotemporal saliency-based multi-stream ResNet and a new spatiotemporal saliency-based multi-stream ResNet with attention-conscious LSTM for function recognition; these two techniques included three supplementary currents: a spatial current fed by RGB frameworks, a transient current fed by optical flood frameworks, and a spatiotemporal saliency current fed by spatiotemporal saliency graphs. Compared to convolutional two-stream-based and LSTM-based patterns, the presented techniques Synchronous Transport Signal (STS) can produce spatiotemporal object background data while reducing foreground intrusion, confirming efficiency for human performance recognition and the STS-ALSTM multi-stream pattern achieved the highest accuracy when compared to input with individual modalities. Table 12 shows the LSTM methods used in pattern recognition and their properties.

5.10 Hybrid methods for pattern recognition

Contrary to the other systems that are simple enough to solve the detection issues, dynamic environments have to synthesize some approaches to tackle the sophistication of pattern recognition. Such a situation necessitates the use of hybrid techniques that combine two or more DL techniques. So, Mao, et al. [70] proposed a System Activity Report (SAR) image provision mechanism relying on Cognitive Network-Generative Adversarial Network (CN-GAN), which mixes LSGAN and Pix2Pix. A limit of regression performance was added to the producer's loss performance to reduce the mean square path between the produced and the actual instances. By considering Pix2Pix, random noise is exchanged by the noise images inputted to LSGAN. Based on the convolutional CNN technique, a light network architecture designed to avoid the issue of high model sophistication and overfitting resulted in the addition of a deep network structure, allowing the detection operation to be developed. MSTAR data regulation was used in the productive pattern training and goal detection tests. These results demonstrated that CN-GAN can resolve SAR image difficulties with a small instance suitably and powerful speckle noise.

Also, Wang, et al. [118] presented a new mechanism relying on the utilization of a GAN and CNN for Public Domain (PD) pattern recognition categorization in Geographic Information System (GIS) on unbalanced instances. The unbalanced instances equalized using this mechanism. A WD2CGAN is designed to offer fault instances for an unbalanced instance caused by a faulty signal. Furthermore, the deconstructed hierarchical investigation space automatically constructs an ideal CNN for PD in the GIS. Finally, the PD pattern identification in GIS under imbalanced cases is recognized using the trained ASCNN and WD2CGAN. When compared to traditional GAN, the WD2CGAN instance equalization processing developed by about 1% shows clear advantages. Simultaneously, in comparison with traditional CNN, the recognition accuracy of ASCNN is enhanced by a minimum of 0.4%, and its parameter amount and space of storage are particularly decreased. Consequently, the results validated the superiority of the presented WD2CGAN and ASCNN models.

Besides, Nandhini Abirami, et al. [78] presented an effective assortment framework for the account of retinal fundus image recognition to prevail over these obstacles. They began by preprocessing the input image from the publicly accessible STARE database in three stages: (a) specular reflection elimination and smoothing, (b) contrast increase, and (c) retinal region extension. The features recovered from the preprocessing image using Multi-Scale Discriminative Robust Local Binary Pattern (MS-DRLBP), based on RGB element selection, LBP descriptor, and Gradient operation. Finally, the images were classified using a hybrid CNN and RBF model that divided the retinal fundus images into four categories: Copy Number Variation (CNV), Designated Router (DR), New Radio (NR), and Advanced Micro Devices (AMD). Examined results of the presented mechanism gave an accuracy of 97.22% in comparison with the other present methodologies. Table 13 shows the hybrid methods used in pattern recognition and their properties.

In addition, Butt, et al. [14] introduced DL considering method over an RNN that gained successful consequences over Arabic text datasets like Alif and Activ. RNN’s operation in sequence learning methods has been significant in previous works, particularly in text transcription and speech recognition. The attention layer allowed people to obtain a concentrated scope of the input sequence, resulting in faster and easier learning. The authors developed the lowering inline error rate in preprocessing by creating a new dataset of one word on an image from Alif and Activ. They interpreted it with an accuracy of 85% to 87%. This model reached better results than those based on a typical CNN, RNN, and hybrid CNN-RNN.

Furthermore, Subhashini, et al. [104] used the DNN-Radial Basis Function (DNN-RBF) for performing. To remove noise from the input signal, accessible speech samples are preprocessed using a Wiener filter, and the Mel Frequency Cepstral Coefficients (MFCC) features of this preprocessed signal are retrieved. The Gaussian Mixture Model (GMM) super vector estimated an i-vector with reduced dimensionality. The Texas Instruments/ Massachusetts Institute of Technology (TIMIT) dataset is used to evaluate the function of this speaker detection algorithm. The efficiency of the provided algorithm is then evaluated using multiple functions such as recall, precision, and accuracy. Through AHHO-based DNN-RFB, accuracy, precision, and recall values are achieved at 94.92%, 89.87%, and 94.67%, respectively. The performance of DNN-RBF developed in the presence of an adaptive optimization method in speaker recognition. Table 13 discusses the Hybrid techniques used in pattern recognition and their properties.

6 Results and comparisons

The previous section investigated several papers that used DL/ML approaches in pattern recognition issues. DL techniques are being used to train computers for various tasks, such as face recognition, image classification, object identification, and computer vision. In this approach, computer vision seeks to mimic human perception, its many performances, and DL behavior by providing computers with the necessary data. This section involves five subsections that evaluate various aspects of DL/ML methods: DL methods applications, DL method for pattern recognition, datasets of DL methods, criteria of DL/ML methods, and result and analysis. Pattern recognition practices utilize various methods to extract meaningful information from data. One commonly employed method is the use of machine learning algorithms, such as SVMs, Random Forests, and MLP neural networks. SVMs are effective in binary classification tasks, finding an optimal hyperplane to separate data points. Random Forests combine multiple decision trees to improve accuracy and handle complex datasets. MLP neural networks consist of interconnected layers of artificial neurons and are effective in learning complex patterns. Another popular method is deep learning, which involves the use of deep neural networks, such as CNNs and LSTM networks. CNNs excel in image and video analysis, capturing spatial hierarchies, while LSTMs are suitable for sequential data analysis, preserving temporal dependencies. Ensemble learning methods, including AdaBoost and Bagging, combine multiple models to enhance prediction accuracy. Reinforcement learning techniques, such as Q-learning and Policy Gradient, enable machines to learn optimal decisions through interactions with an environment. These methods provide a diverse toolbox for practitioners in pattern recognition, allowing them to tackle various tasks and achieve accurate results.

6.1 DL applications for big data pattern recognition

In this section, we will discuss a variety of applications of DL techniques in pattern recognition.: (a) Virtual assistants such as Google Assistant, Amazon Echo, Siri, and Alexa all use DL to provide you with a personalized user experience. They are trained to recognize the user's voice and accent and provide you with a secondary human experience amid machines by utilizing deep neural networks that replicate speech and human tone. (b) DL is used in the iPhone's Facial Recognition to detect data points from the user's face to unlock the phone in photos. DL used a large number of data points to create a precise map of a user's face, which the built-in algorithm then uses for detection. (c) NLP: Some well-known applications gaining traction include document summarization, language modeling, sentiment analysis, question answering, and text classification. (d) Healthcare: Primitive illness and condition recognition, quantitative imaging, and the availability of decision support tools for experts are all having a significant impact on life science, medicine, and healthcare. (e) Data from geo-mapping, GPS, and sensors are merged in DL to develop models that specify recognized directions, street signs, and dynamic components like congestion, traffic, and pedestrians. (f) DL models for text generation perfect spelling, style, punctuation, grammar, and tone are required to replicate human behavior. (g) CNN enables digital image processing, which can later be separated into handwriting, object recognition, facial recognition, etc. Figure 8 shows the frequency of parameters used in evaluations of papers, and based on the evaluation, accuracy (29.6%), delay (15.8%), and availability (11.3%), respectively, are the most frequent parameters studied in the investigated papers. Also, Fig. 9 demonstrates the frequency of different DL methods for pattern recognition. As is shown in this figure, visual recognition (26.7%), image recognition (20.0%), and speech recognition (5.0%), respectively, are the most frequent pattern recognition applications which use DL methods.

6.2 DL methods for big data pattern recognition

DL mechanisms are representation-learning methods with numerous degrees of representation, achieved by combining plain but non-linear modules which each exchange the representation at one stage (beginning with the raw input) in a representation at a higher, somewhat abstract stage.

6.2.1 CNN methods