Abstract

The principal objective of video/image captioning is to describe the dynamics of a video clip in plain natural language. Captioning makes video more accessible to deaf and hard-of-hearing individuals, helps people focus on and recall information more readily, and allows viewing in sound-sensitive locations. The most frequently used design paradigm is the structurally improved encoder-decoder configuration. Recent developments emphasize creative structural modifications that maximize efficiency while demonstrating viability in real-world applications. Well-researched techniques such as deep Convolutional Neural Networks (CNNs) and Sentence Transformers are increasingly used in encoder-decoders. This paper proposes an approach for efficiently captioning videos using a CNN and a short-connected LSTM-based encoder-decoder model blended with a sentence context vector. This sentence context vector emphasizes the relationship between the video and text spaces. Inspired by the human visual system, an attention mechanism is used to concentrate selectively on the context of the important frames. In addition, a contextual hybrid embedding block is presented for connecting the two vector spaces generated during the encoding and decoding stages. The proposed architecture is investigated with well-known CNN architectures and various word embeddings. It is assessed on two benchmark video captioning datasets, MSVD and MSR-VTT, using the standard evaluation metrics BLEU, METEOR, ROUGE, and CIDEr. In the experiments on the MSVD dataset, when the proposed model with NASNet-Large alone is compared across all three embeddings, BERT performed better than the other two embeddings. Inception-v4 outperformed VGG-16, ResNet-152, and NASNet-Large for feature extraction. Among the word embeddings, BERT is far superior to ELMo and GloVe on the MSR-VTT dataset.

1 Introduction

Humans are quite capable of providing a decent caption for a video clip, one that is largely free of semantic errors or misconceptions. They can explain a clip easily and portray it accurately. For computers, however, it remains difficult to identify objects and predict the relationships among them. Image captioning started with traditional retrieval-based and template-based methods. Although these methods were reliable and accurate at the time, they are less relevant today because of advances in Deep Learning and Natural Language Processing (NLP) [1]. With the advent of CNNs and their improved variants, it became significantly easier to analyze visual frames and predict the most appropriate sentences. Fig. 1 illustrates an example of video clips described in English by the proposed model.

Advances in NLP have enabled machines to represent English-like descriptions in the form of word embeddings. Text synthesis, neural machine translation, and information retrieval are just a few of the applications that use word embeddings.

During the initial round of preprocessing, we extract frames from the videos. The collected frames are resized to match the input dimensions of the pre-trained CNN models VGG-16, NASNet-Large, Inception-v4, and ResNet-152. The goal of the proposed framework is to produce English-like sentences that summarize the videos. To achieve this, we created a novel encoder-decoder framework based on a Contextual Hybrid Embedding Network (CHEN) and a Residual Connection Network (RCN) in the encoder. The proposed model encodes features derived using a pre-trained CNN model to acquire the spatial and temporal characteristics of a video.

Essentially, video captioning is the act of converting visual information to text. Decoding videos is more complex than decoding images, since videos contain both spatial and temporal features. While CNNs capture the spatial attributes of images, the temporal features of videos are captured using one or more LSTM [2, 3] layers in tandem with one or more convolution layers.

Attention is important when performing language translation, visual content description, or question answering. It has also become a fundamental component of the neural encoder-decoder paradigm, which is employed for tasks that transfer information from one sequence to another. Soft attention [4] is employed in our proposed method to increase performance: the context vector for the decoder is constructed as a weighted sum of the encoder output states.

The evolution of image and video captioning methods creates new avenues in various real-world problem domains, including assisting people with different degrees of visual impairment, self-driving vehicles, human-robot interaction, automatic video subtitling, and video surveillance [1, 5].

The proposed model includes a multi-head attention component, which extends the concept of attention to provide a more comprehensive interpretation of the input textual sequence. The most straightforward representation of this textual input sequence is an embedding vector, which captures the meaning of words. The input sequence is logically divided among distinct heads, so that different segments of the vector can learn different facets of each word’s meaning and how it connects to other terms in the sequence. As a result, multi-head attention contributes to a more meaningful interpretation of words.

The most significant contributions of this work are as follows.

- The proposed model extracts features using a range of pre-trained deep neural networks, including NASNet-Large [6], Inception-v4 [7], ResNet-152 [8], and VGG-16 [9].
- The framework was evaluated with several recent word embeddings, namely BERT [10], ELMo [11], and GloVe [12], and the results were compared across the candidate networks.
- We adapted the notion of a Short Connection Encoder, which overcomes the concerns of vanishing gradients and accuracy saturation.
- We introduced the novel Contextual Hybrid Embedding Block to augment the model with additional contextual data.
- We added further attention to the decoding stage by using multi-head attention.
- Trials on two real-world video captioning datasets were conducted to demonstrate the proposed model’s superiority to existing state-of-the-art approaches.
- The model’s outcomes were measured using a variety of conventional performance indicators, including BLEU [13], METEOR [14], ROUGE [15], and CIDEr [16].

The rest of this article is arranged into the following sections: Section 2 is devoted to a discussion of related work. Section 3 discusses the numerous components that comprise the proposed framework. Section 4 contains thorough explanations of the studies undertaken and the outcomes for video captioning. Section 5 summarises the work and suggests further directions.

2 Related Works

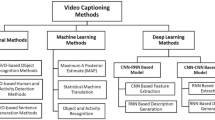

Image and video captioning are among the most widely used vision-based innovations, addressing a variety of real-world problems such as retrieving relevant videos, enabling visually impaired persons to comprehend real-world events, and visualizing and analyzing recordings. Older approaches, such as template-based and retrieval-based methods, now appear outdated, although they are still used to some extent. Recent interest and advances in this field center on neural caption generation using deep learning.

Most of the initial work focused on image captioning and was later broadened to video captioning. Vinyals et al. [17] built a deep generative model that automatically generates captions for images by combining a CNN with a Recurrent Neural Network (RNN). You et al. [18] combined top-down and bottom-up methods for extracting additional characteristics of the image and coupled them with an RNN capable of selectively attending to semantic features identified in the image.

The paper [19] presented a sequence-to-sequence framework of CNN and RNN with an added attention module and an additional memory unit for the encoder and decoder to supplement the memory of the RNN. However, in this approach, only the RNN component is trained. The authors of [20] suggested a graph-based partitioning and summarization technique, as well as two distinct types of GCN-LSTM interaction modules. However, that work relies on graph representations to explore the word relationships.

The research [21] developed a novel layered technique with adaptive attention that explicitly addresses the spatial or temporal attention necessary for picking certain regions or frames for word prediction. Additionally, the strategy emphasized low-level visual cues and high-level verbal contexts. However, the strategy may perform even better when numerous hLSTMat-based models are used in an ensemble.

The study [22] devised a new sibling convolutional encoder that encodes videos concurrently using a dual-branch architecture. This combined semantic extraction model is coupled with "soft attention" and fed to an RNN-based decoder unit. However, the RNN decoder has a limited memory capacity; other deep networks such as LSTM and GRU have been shown to be more successful. Xu et al. [23] constructed an attention network to investigate attention fusion in both a hierarchical and an end-to-end fashion. It also contains a sizable number of encoder and fusion attention modules.

For future time step decoding, Bin et al. [24] presented a bi-directional LSTM, which processes videos in both forward and reverse directions. It also develops a conceptual framework for soft attention that focuses on items with specified probabilities. Song et al. [25] introduced MS-RNNs, an end-to-end architecture that embraces stochastic variables to cater to data uncertainty.

Yang et al. [26] annotated videos using generative adversarial networks (GANs). The caption generator provides captions for recordings, while the discriminator determines whether the words are accurate or made up. Zheng et al. [27] introduced an interface that identifies actions by associating them with both the subject and the video dynamics. The actions are then translated using both the subject’s embedding and the video’s temporal properties.

The article [28] proposed a computerised deep learning method for classifying skin diseases using MobileNet V2 and Long Short-Term Memory (LSTM).

The study [29] compared various CNNs for classifying and differentiating COVID-19 and non-COVID-19 lung images. The use of different CNNs for image classification and detection is explained in studies [30,31,32,33,34].

3 Proposed Framework

The typical structure of a CNN consists of several convolutional layers followed by a pooling layer. As shown in Fig. 2, this pattern can be repeated to build stacks of convolutional and pooling layers, which are followed by fully connected layers and a final classification layer. The activations before the classification layer can serve as a compact representation of the image. The network’s parameters are learned with back-propagation.

RNNs can be trained to map sequences when the alignment of the inputs and outputs is known in advance; however, it was initially unclear whether they could be used for problems in which the lengths of the inputs and outputs vary. This challenge was addressed by training one RNN to map the input sequence to a fixed-length vector and a second RNN to map that vector to an output sequence. This arrangement is commonly referred to as the encoder-decoder framework. Another well-known issue with RNNs is that they are difficult to train to capture long-range dependencies. It is well established, however, that LSTMs, which feature explicitly controllable memory units, can learn long-range temporal relationships.

The LSTM component is shown in Fig. 3. The LSTM model is built around a memory cell c that encodes, at each time step, a summary of the inputs observed up to that point. The cell is modified by multiplicative sigmoidal gates whose values range from zero to one. A gate determines whether the LSTM keeps a value (if the gate evaluates to 1) or discards it (if it evaluates to 0). The LSTM is able to learn complex long-term dependencies thanks to the interplay of its three gates: the input gate (i), which controls whether the LSTM considers its current input \((x_{t})\); the forget gate (f), which allows the LSTM to forget its previous memory \((c_{t-1})\); and the output gate (o), which controls how much memory to transfer to the hidden state \((h_{t})\). This leads to the following definition of LSTM recurrences:
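
The recurrences can be written in the standard LSTM form shown here, using the symbols defined in the list that follows. This is a sketch under assumptions: the bias terms \(b_{*}\) and the candidate-cell weights \(W_{cx}\) and \(W_{ch}\) follow the usual formulation and are not named in the text.

```latex
\begin{align}
i_t &= \sigma\left(W_{ix}\, x_t + W_{ih}\, h_{t-1} + b_i\right) \\
f_t &= \sigma\left(W_{fx}\, x_t + W_{fh}\, h_{t-1} + b_f\right) \\
o_t &= \sigma\left(W_{ox}\, x_t + W_{oh}\, h_{t-1} + b_o\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \phi\left(W_{cx}\, x_t + W_{ch}\, h_{t-1} + b_c\right) \\
h_t &= o_t \odot \phi\left(c_t\right)
\end{align}
```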

- \(W_{*h}\): Weights that connect the previous hidden state to each gate in the LSTM.
- \(W_{*x}\): Weights that connect the current input to each gate.
- \(\sigma\): Sigmoid nonlinear activation function.
- \(\phi\): Hyperbolic tangent nonlinear activation function.
- \(\odot\): Element-wise multiplication.

Thus, the LSTM’s gates enable it to represent a sequence by capturing long-term dependencies. LSTMs are therefore capable of "encoding" a sequence of inputs into a vector and "decoding" the vector to generate a sequence of outputs.
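
The gating behaviour described above can be illustrated with a minimal NumPy sketch of one LSTM step and a simple sequence encoder. It is illustrative only: the function names, the stacking order of the gate weights, and the zero initial state are assumptions, not details taken from the proposed model.

```python
import numpy as np


def sigmoid(z):
    """Logistic function, squashing values into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W_x, W_h, b):
    """One LSTM time step.

    W_x: (4*H, D) input weights, W_h: (4*H, H) recurrent weights, b: (4*H,).
    Rows are stacked in the (assumed) order: input, forget, output, candidate.
    """
    H = h_prev.shape[0]
    z = W_x @ x_t + W_h @ h_prev + b
    i = sigmoid(z[0:H])          # input gate
    f = sigmoid(z[H:2 * H])      # forget gate
    o = sigmoid(z[2 * H:3 * H])  # output gate
    g = np.tanh(z[3 * H:4 * H])  # candidate memory content
    c_t = f * c_prev + i * g     # element-wise update of the memory cell
    h_t = o * np.tanh(c_t)       # hidden state passed to the next step
    return h_t, c_t


def encode(frames, W_x, W_h, b):
    """Fold a (T, D) sequence of frame features into the final hidden state."""
    H = W_h.shape[1]
    h, c = np.zeros(H), np.zeros(H)
    for x_t in frames:
        h, c = lstm_step(x_t, h, c, W_x, W_h, b)
    return h
```

Here `encode` plays the role of "encoding" a variable-length input sequence into a fixed-length vector; a decoder would run the same step function while emitting one word per step.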

In the preprocessing stage, we first retrieve frames from the videos. The retrieved frames are resized to fit the input dimensions of the pre-trained CNN models, specifically VGG-16, NASNet-Large, Inception-v4, and ResNet-152. The purpose of the proposed framework is to generate English-like sentences that summarize the videos. To accomplish this, we designed a novel encoder-decoder framework that is based on a Contextual Hybrid Embedding Network (CHEN) and a Residual Connection Network (RCN) in the encoder. The proposed model takes features extracted by the pre-trained CNN model as input and encodes them to acquire the spatial and temporal features of the video. Fig. 4 illustrates the proposed approach.

3.1 Preprocessing

Successive frames in a video have a lot in common and therefore carry largely the same characteristics. To decrease the number of features, we chose a set of essential frames (key frames) from the video. The extraction of key frames is based on a template matching scheme. Equation (6) [35] is used to compute the template match score.

Where I, Temp, and \(T_{Score}\) denote the image, the template, and the template match score, respectively, and (x, y) specifies the pixel coordinates. The parameter \(x'\) ranges from 0 to the width of the template, while \(y'\) ranges from 0 to the height of the template. In this work, we compute the similarity between two frames, I and Temp, where I denotes the current key frame and Temp denotes the next frame in the sequence. If the \(T_{Score}\) value is greater than the threshold, the frame Temp is skipped because of its similarity to the key frame I, and the next frame in the sequence is set as Temp. If the \(T_{Score}\) is less than the threshold, Temp is chosen as a key frame, and the process continues until the end of the frame sequence. The algorithm used to select key frames is shown below:

Algorithm 1 for selecting key frames using template matching
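
The selection procedure described above can be sketched as follows. This is a minimal illustration, not the authors' algorithm: it assumes normalized cross-correlation between two equally sized grayscale frames as a stand-in for the score of Equation (6), takes the first frame as the initial key frame, and uses a hypothetical default threshold of 0.95.

```python
import numpy as np


def match_score(image, template):
    """Normalized cross-correlation between two equally sized grayscale frames.

    Assumption: this stands in for T_Score; the score in Equation (6) may be
    a different template matching variant.
    """
    a = image.astype(np.float64).ravel()
    b = template.astype(np.float64).ravel()
    denom = np.sqrt(np.sum(a * a) * np.sum(b * b))
    return float(np.dot(a, b) / denom) if denom > 0 else 0.0


def select_key_frames(frames, threshold=0.95):
    """Return the indices of the selected key frames.

    The first frame is the initial key frame I (an assumption). Each later
    frame Temp is skipped when its score against I exceeds the threshold;
    otherwise Temp becomes the new key frame.
    """
    if len(frames) == 0:
        return []
    key_indices = [0]
    key = frames[0]
    for idx in range(1, len(frames)):
        temp = frames[idx]
        if match_score(key, temp) > threshold:
            continue  # too similar to the current key frame, skip it
        key_indices.append(idx)
        key = temp
    return key_indices
```

In practice the threshold would be tuned so that the desired number of key frames per clip is retained.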

3.1.1 Feature Extraction

The proposed system begins by feeding videos to a pre-trained neural network, which captures features and generates the corresponding feature vectors. The video clips in the dataset are divided into frames, which are then resized and scaled to fit the input requirements of the pre-trained deep learning models used to represent the videos. The pre-processed frames are fed sequentially into a CNN model. NASNet-Large, Inception-v4, ResNet-152, and VGG-16 were each attached to the proposed architecture to study the resulting outcomes.

3.1.2 NASNet-Large

Neural Architecture Search Network Large (NASNet-Large) receives a video frame with a resolution of \((331 \times 331)\) as input and generates a 4032-dimensional feature vector per frame. While the overall architecture of NASNet-Large is predefined, as in [36], the structural blocks or cells are not. Instead, they are found using a reinforcement learning search strategy, in which the number of cell repetitions and the number of convolutional filters are treated as free parameters and scaled. The network is built from two types of cells, normal cells and reduction cells, to create a feature vector.

3.1.3 VGG-16

VGG-16 was devised by the Visual Geometry Group at Oxford. It takes an image with a resolution of \(224 \times 224\) pixels as input. The resulting feature vector is 4096-dimensional. VGG-16’s convolutional layers employ a small \(3 \times 3\) receptive field, the smallest size that still captures spatial neighbourhood information. Additionally, \(1 \times 1\) convolution filters are used to apply a linear transformation to the input before it is passed to a ReLU unit. VGG-16 has three fully connected layers, and all hidden layers use ReLU activation.

3.1.4 Inception-v4

Inception-v4 is a deep neural network architecture that simplifies the design and incorporates more inception modules than Inception-v3, the previous version of the Inception family. It is not tied to the design choices of the original Inception. With memory optimization for back-propagation, it can be trained without partitioning the replicas. "Reduction Blocks" were introduced in Inception-v4 to change the width and height of the grid. Earlier versions did not explicitly include reduction blocks, although the functionality was in place.

3.1.5 ResNet-152

By adopting residual representation functions, ResNet-152 forms a deep network of 152 layers. It uses shortcut connections that pass the input of a previous layer to a subsequent layer without modifying it. Bypassing layers in this way makes it possible to build much deeper networks. ResNet-152 follows the principle of residual learning, wherein the residual, that is, the difference between the desired mapping and the layer input, is learned with the help of shortcut connections. It has been demonstrated that residual learning can enhance training performance, particularly when the network has many more than 20 layers. Moreover, it addresses the degradation of accuracy in deep networks.

3.2 Terminology and Notation

A video clip to be captioned can be expressed mathematically as V = \(\{v_1, v_2, v_3, \ldots, v_n\}\), where n is the frame count. The visual features retrieved from video V with the help of the pre-trained models are denoted F = \(\{f_1, f_2, f_3, \ldots, f_n\}\) \(\in \mathbb{R}^{d_i \times n}\), where \({d_i}\) is the dimension of the feature vector for a single frame. The feature representation of the caption is denoted \(W \in \mathbb{R}^{d_w \times c}\), where \(d_w\) is the size of a word embedding and c is the caption’s word count. The caption representation produced by the model is denoted \(W' \in \mathbb{R}^{d_w \times c}\).

3.3 Encoder-Decoder

The presented model’s encoder is a two-layered LSTM with short connections (residual layers) that impose non-linearity on the data processing. This unit takes in the frame-level features extracted from the pre-trained two-dimensional CNN during the preparatory stage and captures their temporal characteristics. By converting a series of frames to a fixed-dimensional vector representation, the LSTMs attempt to limit accuracy saturation.

The primary focus of the decoding stage is to learn to predict the caption from the video data. Each word in the caption is generated separately and predicted with contextual understanding of its surrounding words. Thus, given an input sequence and the Encoder’s output, the Decoder predicts the caption’s next word. We employ multi-head attention, which combines the encoder result and the decoder state so that the decoder attends to the parts of the encoded output that influence its final output. The loss function used to represent the translation of videos to words is shown in Equation (7).

Here \(N_w\) denotes the caption word count. Equation (8) gives the likelihood of the predicted word \(w_t\) given the previous output words \(w_1\), \(w_2\), ..., \(w_{t-1}\) and the output E of the encoding unit.
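
A loss of this kind is commonly computed as the negative log-likelihood of the ground-truth words under the decoder's per-step distributions. The sketch below assumes that form; the function name, the absence of length normalization, and the small constant added for numerical safety are assumptions rather than details of Equation (7).

```python
import numpy as np


def caption_nll(step_probs, target_ids):
    """Negative log-likelihood of one caption.

    step_probs: (N_w, vocab) array; row t is the decoder's distribution over
        the vocabulary for word t, conditioned on the earlier words and the
        encoder output E.
    target_ids: length-N_w sequence of ground-truth word indices.
    """
    step_probs = np.asarray(step_probs, dtype=np.float64)
    target_ids = np.asarray(target_ids)
    # Pick the probability assigned to each ground-truth word.
    picked = step_probs[np.arange(len(target_ids)), target_ids]
    return float(-np.sum(np.log(picked + 1e-12)))
```

For example, a two-word caption whose correct words receive probabilities 0.5 and 0.75 has a loss of about 0.98.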

3.4 Contextual Hybrid Embedding Network (CHEN)

This proposed novel unit converts the feature map F from the video, created during the preliminary stage, to a 768-dimensional feature map using an LSTM layer. The resulting feature map is then compared to the hidden state of an LSTM auto-encoder trained on the caption set during the training phase. The auto-encoder supplies the regenerated contextual caption vector, which has a fixed dimension, to the decoding stage to help anticipate the next word. Because the contextual caption vector summarises the captions, we term it a sentence vector.

If the language model’s sentence vector is SV and the sentence vector from CHEN is \(SV'\), then the loss is determined as given in Equation (9).

Where \(e_k\) is defined in Equation (10).

3.5 The Proposed Multi-Head Attention Mechanism

The suggested model is motivated by the concept of multi-head attention, which runs an attention mechanism several times in parallel. Intuitively, multiple attention heads enable distinct elements of the sequence to be attended to differently. The attention unit stabilizes training and delivers consistent performance improvements over conventional attention.

The Encoding stage of the proposed framework provides detailed information about the video. This contextual information depends on the model’s comprehension of the frames. However, for the Decoding stage to predict the next caption term given the current term, only a subset of the contextual attributes must be selected. Scaled dot-product attention [37] enables the Decoding stage to focus exclusively on a portion of the Encoding unit data. In Equation (11), Q, K, and V denote the query, key, and value fields: the value field stores data about the Encoder’s output, whereas the K field stores data about the Decoder’s previous state. The encoder output is weighted for various regions, depending on the current state of the decoder, in order to build a context vector for the following word. This constitutes one head of the attention system. Multiple heads enable the Decoder to attend simultaneously to data from the Encoder at various positions in different representational subspaces. Equation (11) provides the mathematical definition of single-head attention.

Equation (12) visualises the concept of envisaged multi-head attention.

Here \(R_d\) denotes the decoder output states, \(R_e\) denotes the encoder output states, and \(\{W^Q, W^K, W^V\} \in \mathbb{R}^{d_i \times d_n}\) are the weights of an attention head. The symbol \({d_i}\) is the dimension of the attention input, and \({d_n}\) is the number of units in an attention head.
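
The mechanism can be sketched in NumPy as follows. The sketch follows the conventional assignment in scaled dot-product attention, with queries projected from the decoder states \(R_d\) and keys and values projected from the encoder states \(R_e\); this assignment, the output projection `W_O`, and all function names are assumptions and may differ from Equations (11) and (12).

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)


def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = Q.shape[-1]
    weights = softmax(Q @ K.T / np.sqrt(d))
    return weights @ V, weights


def multi_head_attention(R_d, R_e, heads, W_O):
    """Combine several attention heads.

    R_d: (T_dec, d_i) decoder states; R_e: (T_enc, d_i) encoder states.
    heads: list of (W_Q, W_K, W_V) triples, each of shape (d_i, d_n).
    W_O: (len(heads) * d_n, d_out) output projection (assumed component).
    """
    outputs = []
    for W_Q, W_K, W_V in heads:
        context, _ = attention(R_d @ W_Q, R_e @ W_K, R_e @ W_V)
        outputs.append(context)
    # Concatenate the per-head context vectors and project them.
    return np.concatenate(outputs, axis=-1) @ W_O
```

Each row of the attention weights sums to one, so every decoder position receives a convex combination of the encoder states, computed independently per head.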

3.6 Training-Phase

To begin, the language model is trained to generate the relevant sentence vector for all captions in the datasets. Additionally, as illustrated in Fig. 4, the proposed architecture is trained using a hybrid loss built by merging \(loss_1\) and \(loss_2\) to bridge the semantic gap between video and words. The hybrid loss is defined in Equation (13).

The variable \(\lambda \) symbolizes a tuneable hyper-parameter with values ranging from 0 to 1.

4 Experiment, Results, and Analysis

4.1 Datasets

Numerous experiments with various parameter combinations are conducted, and performance is evaluated using various benchmark evaluation metrics. Additionally, the proposed system’s performance is compared to existing works using MSVD [38] and MSR-VTT [39] datasets.

4.1.1 MSVD

The dataset contains approximately 1,970 short YouTube video clips of varying length, each with accompanying human-generated descriptions. On average, each video has roughly 41 descriptions. The collection includes around 80,000 clip-description pairs, with descriptions written in several languages; this work uses only the English descriptions. The suggested model used the same dataset split as [40]: 1,200 clips for training, 100 clips for validation, and 670 clips for testing.

4.1.2 MSR-VTT

The dataset is a large-scale, open-source benchmark comprising twenty categories of diverse video content, built for the purpose of bridging vision and language. It contains 10,000 clips from 7,180 videos. Each clip lasts between ten and thirty seconds, for a total of 41.2 hours. There are 200K sentence-clip pairs, totalling 1.8 million words and 29,316 distinct words.

4.2 Evaluation Metrics

Video and image captioning methods result from the combination of deep neural networks and advances in natural language processing. NLP benchmark measures, explained in the following subsections, are commonly used to compare automatically generated captions against reference captions. All of these metrics were applied to the aforementioned standard datasets.

4.2.1 Bilingual Evaluation Understudy (BLEU)

BLEU is a score calculated by comparing a predicted machine translation against a human-generated reference. Although BLEU was intended for machine translation, it can be used to evaluate text output for a variety of natural language processing tasks. A perfect match is scored 1.0, whereas a complete mismatch is scored 0.0. The score is based on comparing n-gram counts in the candidate translation (predicted words) with those in the reference text, where n=1 corresponds to a single token and n=2 corresponds to a word pair. The position of the words is not considered during the comparison; only the total count of terms that match the reference or actual caption matters. Mathematically, the metric is defined in Equation (14).

Where Precision is defined in Equation (15):

The value \({C_{W}}\) denotes the number of correct words in the generated sentence, and \({T_{W}}\) denotes the total number of words in the generated sentence.
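
As an illustration, the sketch below computes clipped n-gram precision (for n=1 this is the ratio of correct words to generated words) and a single-reference BLEU score with uniform weights and the usual brevity penalty. It is a simplified version under assumptions: one reference per candidate, no smoothing, and function names chosen for this example.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """All contiguous n-grams of a token list, as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def modified_precision(candidate, reference, n):
    """Clipped n-gram precision: matched candidate n-grams / candidate n-grams."""
    cand_counts = Counter(ngrams(candidate, n))
    ref_counts = Counter(ngrams(reference, n))
    total = sum(cand_counts.values())
    if total == 0:
        return 0.0
    # Each candidate n-gram is credited at most as often as it occurs in the reference.
    matched = sum(min(count, ref_counts[g]) for g, count in cand_counts.items())
    return matched / total


def bleu(candidate, reference, max_n=4):
    """Single-reference BLEU with uniform weights and a brevity penalty."""
    precisions = [modified_precision(candidate, reference, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0.0:
        return 0.0  # without smoothing, any zero precision gives a zero score
    log_mean = sum(math.log(p) for p in precisions) / max_n
    c, r = len(candidate), len(reference)
    brevity = 1.0 if c > r else math.exp(1.0 - r / c)
    return brevity * math.exp(log_mean)
```

The clipping step prevents a candidate from being rewarded for repeating a correct word more often than it appears in the reference.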

4.2.2 METEOR

METEOR was presented as a solution to BLEU’s [31] difficulties. METEOR substitutes semantic matching for the exact lexical matching required by BLEU. It makes use of the English lexical database WordNet to account for a range of match types, including exact word, stemmed word, synonym, and paraphrase matching. METEOR scores for predicted and reference sentences are computed from unigram Precision P and unigram Recall R, as described in Equations (16) and (17), respectively.

Where \({U G_{P R}}\) is the count of unigrams shared by the predicted and reference sentences, and \({U G_{P}}\) is the count of unigrams in the predicted sentence. Recall is defined in Equation (17).

Where \({U G_{R}}\) denotes the count of unigrams in the reference. The harmonic mean (F) of Precision and Recall is defined in Equation (18).

A penalty is applied to longer matches containing non-adjacent mappings between the predicted and reference sentences. The penalty \((P_{n})\) is defined in Equation (19):

Where C denotes the count of chunks and UM stands for the number of matched unigrams. Consequently, the METEOR index for a given alignment can be calculated using Equation (20):

The higher the METEOR score, the more closely it is associated with human judgement.
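
The computation can be sketched from alignment statistics as follows. The constants (a recall weight of 9 in the harmonic mean, and a penalty of 0.5 times the cubed chunk ratio) are those of the original METEOR formulation and are assumed here; the equations in this paper may use different parameters. The unigram alignment itself (exact, stem, synonym matching) is not implemented.

```python
def meteor_from_counts(matched, pred_len, ref_len, chunks):
    """METEOR score from alignment statistics.

    matched: unigrams mapped between prediction and reference.
    pred_len, ref_len: unigram counts of the prediction and the reference.
    chunks: number of contiguous matched chunks in the alignment.
    """
    if matched == 0:
        return 0.0
    precision = matched / pred_len
    recall = matched / ref_len
    # Recall-weighted harmonic mean (assumed weights from the original METEOR).
    f_mean = 10 * precision * recall / (recall + 9 * precision)
    # Fragmentation penalty: fewer, longer chunks are penalized less.
    penalty = 0.5 * (chunks / matched) ** 3
    return f_mean * (1 - penalty)
```

A prediction that matches the reference in a single contiguous chunk receives only a small penalty, while the same words in scrambled order form many chunks and are penalized more heavily.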

4.2.3 ROUGE

The ROUGE metric was developed to evaluate text summaries. It uses n-grams to determine the recall score of the generated phrases that correspond to the reference sentences.

Recall and Precision are calculated for the longest common subsequence in Equations (21) and (22), respectively.

The F-measure score in Equation (18) can be calculated using \({L C S}\) for predicted summary \(P_{s}\) of length \(L_{p}\) and reference summary \(R_{s}\) of length \(L_{r}\) as depicted in Equation (23).

Here, the value \({L C S\left( R_{s}, P_{s}\right) }\) denotes the longest common subsequence of \(R_{s}\) and \(P_{s}\). \(\beta \) is the ratio of LCS-Precision to LCS-Recall.
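
A minimal sketch of the LCS-based computation is given below. It assumes the usual ROUGE-L definitions (recall as LCS length over reference length, precision as LCS length over prediction length, and an F-measure weighted by \(\beta\)); the default of 1.0 for `beta` and the function names are assumptions for this example.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence via dynamic programming."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(predicted, reference, beta=1.0):
    """Return (recall, precision, F) based on the LCS of two token lists."""
    lcs = lcs_length(reference, predicted)
    if lcs == 0:
        return 0.0, 0.0, 0.0
    recall = lcs / len(reference)
    precision = lcs / len(predicted)
    f = (1 + beta ** 2) * recall * precision / (recall + beta ** 2 * precision)
    return recall, precision, f
```

Because a subsequence need not be contiguous, the measure rewards predictions that preserve the word order of the reference even when extra words intervene.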

4.2.4 CIDEr

CIDEr is an assessment technique for human consensus-based image description. This evaluation metric compares a predicted sentence to one or more human-annotated reference captions for the image. It performs stemming, transforming all words in the candidate and reference sentences to their root forms. CIDEr treats each sentence as a collection of n-grams containing between one and four words. To encode the consensus between the predicted and reference sentences, it measures how often their n-grams co-occur. N-grams that are extremely prevalent across the reference sentences are given reduced weight using Term Frequency Inverse Document Frequency (TF-IDF). \({CIDEr}_{n}\) is calculated as in Equation (24):

Here, \(g^{n}\left( c_{i}\right) \) is a vector formed from all n-grams of length n in the candidate sentence \(c_{i}\), and \(\left\| g^{n}\left( c_{i}\right) \right\| \) is its magnitude. The same holds for \(g^{n}\left( s_{i j}\right) \).
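
For reference, the standard definition of the metric is the average cosine similarity between the TF-IDF n-gram vectors of the candidate and of each reference. The symbols \(m\) (number of reference captions for image \(i\)), \(S_{i}\) (the set of those references), \(N\) (largest n-gram length, usually 4), and the uniform weights \(w_{n}\) are assumptions taken from that standard formulation and may be named differently in Equation (24).

```latex
\begin{align}
\mathrm{CIDEr}_{n}\left(c_{i}, S_{i}\right)
  &= \frac{1}{m} \sum_{j=1}^{m}
     \frac{g^{n}\left(c_{i}\right) \cdot g^{n}\left(s_{ij}\right)}
          {\left\| g^{n}\left(c_{i}\right) \right\| \, \left\| g^{n}\left(s_{ij}\right) \right\|} \\
\mathrm{CIDEr}\left(c_{i}, S_{i}\right)
  &= \sum_{n=1}^{N} w_{n}\, \mathrm{CIDEr}_{n}\left(c_{i}, S_{i}\right),
  \qquad w_{n} = \frac{1}{N}
\end{align}
```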

Summary: The metrics used in this paper can be summarized as follows. The higher the BLEU-4 score, the better the predicted sentence. A higher CIDEr value indicates that the candidate sentence is more similar to the reference sentences. A high METEOR value indicates consistent word order between candidate and reference sentences. The accuracy of the generated text improves as the ROUGE value rises.

4.3 Experiments on MSVD Dataset

4.3.1 Data preprocessing

We selected 28 key frames from each video clip. Each frame is passed to the NASNet-Large CNN unit, which extracts a 4032-dimensional feature vector from it. Thus, for each video, we obtain a \(28 \times 4032\) feature matrix. Each video contains at least 28 frames, so padding is unnecessary at this step. Captions for the clips are compiled from a variety of sources and vary in length. We therefore remove punctuation from the captions, convert them to lower case, and tokenize them. The vocabulary is refined to eliminate misspelled and uncommon terms. Captions are limited to a maximum of twenty tokens: any caption that exceeds the maximum length is clipped, whereas captions with fewer than 20 tokens are padded. A 768-D, 300-D, or 1024-D vector is created for each token using the BERT, GloVe, or ELMo word embedding, respectively. We assess the efficiency of these word embeddings with the proposed model in Results and Analysis. The tokens bos and eos denote the start and end of the caption, respectively, while pad and unk denote padding and unknown words, respectively.
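
The caption preprocessing steps can be sketched as follows. This is a minimal illustration under assumptions: the vocabulary is passed in as a plain set, whitespace tokenization is used, and the bos and eos tokens are counted toward the 20-token limit; none of these details is specified in the text.

```python
import string

MAX_LEN = 20  # maximum caption length in tokens, as stated above


def preprocess_caption(caption, vocab, max_len=MAX_LEN):
    """Lowercase, strip punctuation, tokenize, map unknown words, clip, and pad."""
    cleaned = caption.lower().translate(str.maketrans("", "", string.punctuation))
    # Words outside the refined vocabulary are replaced by the unk token.
    tokens = [tok if tok in vocab else "unk" for tok in cleaned.split()]
    # Assumption: bos and eos count toward the token limit.
    tokens = ["bos"] + tokens[: max_len - 2] + ["eos"]
    tokens += ["pad"] * (max_len - len(tokens))
    return tokens
```

Every caption then has the same length, so a batch of captions can be stacked into a fixed-size array before the word embeddings are looked up.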

The approach was first validated on the MSVD dataset. Each video is represented by the \(28 \times 4032\) feature matrix, which is passed to the Encoder stage, a two-layer LSTM with a residual layer (short connections). Each LSTM layer has 512 units. Thus, we obtain a \(28 \times 512\) output from layer one and a \(28 \times 512\) output from layer two, which together give a \(28 \times 1024\) output from the encoder. The Contextual Hybrid Embedding block comprises a single 768-unit LSTM layer and a pre-trained LSTM autoencoder. The unit count of the attention layers is set to 512, and the Decoder stage LSTM has 1024 units. The Adam optimizer is used for training with a learning rate of 6e-4 and a batch size of 64. Beam search with a beam width of three is used for testing.

4.3.2 Results and Analysis on MSVD

Numerous investigations were conducted using the proposed technique on the MSVD dataset based on the train-validation-test split described in [40].

Table 1 shows the performance scores of the proposed approach for the three embeddings combined with each pre-trained Feature Descriptor. When BERT alone is considered, NASNet-Large exceeds all other models on every metric except METEOR. An advantage of this model is that it is built from two types of convolutional cells, which aids in obtaining the optimal output. It employs a controller to optimize performance while using fewer parameters and fewer floating-point operations.

Table 1 also reports the ELMo embedding performance for all Feature Descriptors. According to the scores, the proposed model outperformed all other models. The proposed model takes advantage of ELMo’s strengths in modeling syntax, semantics, and polysemy to achieve superior outcomes.

The results also give an overview of the proposed model with all pre-trained Feature Extractors and a GloVe vector embedding. The suggested Feature Extractor outperformed all other models. The reason is that context-sensitive data augment both the encoding and decoding levels, which reliably extracts the region and its associated captions from the embeddings. Additionally, the multi-head considerably improves performance.

When the proposed model with NASNet-Large alone is compared across all three embeddings, BERT outperforms the other two embeddings. BERT makes use of the target word’s context by examining the surrounding terms. This novel combination of NASNet and BERT word embedding outperforms the others.

Table 1 also summarises the performance of the three embeddings with Inception-v4 as the Feature Descriptor. The study demonstrates that BERT with Inception-v4 outperforms the other embeddings. Possible explanations include the larger number of inception modules in the encoding unit and the bidirectionally trained embedding, which provides a more accurate sense of language context and flow than single-direction language models.

Additionally, the experimental findings obtained using the VGG-16 Feature Extractor with the three word embeddings followed a very similar pattern. While the results differ only marginally across the three, BERT retains the performance advantage. This demonstrates that BERT performs consistently across nearly all Feature Descriptors.

The effectiveness of the ResNet-152 Feature Descriptor with the three embeddings is compared in Table 1. The scores show that BERT surpasses the other two embeddings, although the variation in outcomes is small. It is also clear that ResNet-152 outperforms VGG-16 on practically every metric. BERT’s main strength is that it is deeply bidirectional and contextual. BERT is built on Transformers, whereas ELMo relies on LSTMs and GloVe on word co-occurrence statistics. The descriptor is a multi-layered network that yields better results when used with BERT because of its more detailed features.

Figure 5 depicts a sample plot of the accuracy and loss. The red and green lines represent the training phase’s loss and accuracy, respectively. On the MSVD dataset, the proposed model is trained for 300 epochs using a learning rate of 6e-4, the Adam optimizer, and a batch size of 64.

4.3.3 Qualitative Analysis

Figure 6 depicts sample input test videos taken from the MSVD dataset. Table 2 reports the ground-truth captions and the predicted captions for the sampled videos in Fig. 6 using several feature extractors in the proposed framework. Overall, the proposed framework did a good job of identifying objects and activities. In a few cases, such as Fig. 6 (d), however, the model incorrectly identifies the composite action and objects. The model also takes advantage of the contextual hybrid embedding, which aids in generating relevant phrases.

4.3.4 Performance Comparison With Existing Works

On the MSVD dataset, Table 3 compares the performance of the proposed model with other recent and state-of-the-art approaches in terms of B@4, METEOR, ROUGE, and CIDEr scores. Our proposed model with the NASNet-Large Feature Descriptor clearly outperformed all other models. We also experimented with the other Feature Descriptors considered in this paper and compared them to the suggested model, which clearly outperformed them on all performance criteria. The results show that the suggested model was more accurate than the other models at recognizing the exact collection of words in the dataset, as measured by the n-gram hit ratio. On the CIDEr metric, the suggested model ranked fourth. The model achieved strong ROUGE and METEOR scores when compared to other models. Overall, the results demonstrate that a short encoder-to-contextual vector relationship improves performance. In addition, the defined model combination learns a new set of information for the encoder’s succeeding phases, as well as a collection of data from the embeddings.
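The n-gram hit ratio mentioned above is presumably the clipped n-gram precision that underlies BLEU. The sketch below computes only that component for a single candidate caption; it is not the full BLEU score (no brevity penalty, no geometric mean over n), and the function names and example captions are illustrative assumptions.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return the list of n-grams (as tuples) in a token sequence."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def modified_precision(candidate, references, n):
    """Clipped n-gram precision of a candidate against several references."""
    cand_counts = Counter(ngrams(candidate, n))
    if not cand_counts:
        return 0.0
    # For each n-gram, the largest count observed in any single reference.
    max_ref = Counter()
    for ref in references:
        for gram, count in Counter(ngrams(ref, n)).items():
            max_ref[gram] = max(max_ref[gram], count)
    # A candidate n-gram is credited at most as often as it occurs in a reference.
    clipped = sum(min(count, max_ref[gram]) for gram, count in cand_counts.items())
    return clipped / sum(cand_counts.values())


# Hypothetical captions, for illustration only.
candidate = "a man is playing a guitar".split()
references = [
    "a man is playing the guitar".split(),
    "someone plays a guitar".split(),
]
p1 = modified_precision(candidate, references, 1)  # 5 of 6 unigrams credited
p2 = modified_precision(candidate, references, 2)  # 4 of 5 bigrams credited
print(p1, p2)
```

The second "a" in the candidate is not credited at the unigram level because no single reference contains "a" twice, which is the clipping behaviour that keeps repeated words from inflating the score.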

4.4 Experiments on MSR-VTT

For convenience, suppose that the CNN feature extraction method and word embedding are NASNet-Large and BERT, respectively. Each video is represented by the 80*4032 feature vector, which is supplied to the encoding stage, a two-layer LSTM with either a short link or a residual layer. Each LSTM layer is set to 512 units. Thus, we obtain an 80*512-D vector from layer one and another 80*512-D feature vector from layer two, yielding an 80*1024-D vector from the encoding stage. The contextual hybrid embedding block is composed of a single 768-unit LSTM layer and a semantic sentence vector. The attention layers have 512 units, and the Decoder LSTM has 1024 units. The Adam optimizer is used for training, with a learning rate of 8e-4 and a batch size of 64. During testing, a beam search with a beam width of three is used.
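Beam search with a beam width of three can be sketched as follows. This is a generic, simplified variant without length normalization, not the authors' decoder: `step_fn` is a hypothetical stand-in for the trained decoder that maps a token prefix to next-token probabilities, and the toy probability table is invented purely for illustration.

```python
import math


def beam_search(step_fn, bos, eos, beam_width=3, max_len=20):
    """Return the highest-scoring token sequence found with beam search.

    step_fn(prefix) is a hypothetical stand-in for the decoder: it maps a
    token prefix to a dict {next_token: probability}.
    """
    beams = [(0.0, [bos])]  # (cumulative log-probability, tokens)
    finished = []
    for _ in range(max_len - 1):
        candidates = []
        for score, tokens in beams:
            for token, prob in step_fn(tokens).items():
                if prob > 0.0:
                    candidates.append((score + math.log(prob), tokens + [token]))
        # Keep only the beam_width best extensions.
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = []
        for score, tokens in candidates[:beam_width]:
            (finished if tokens[-1] == eos else beams).append((score, tokens))
        if not beams:
            break
    best = max(finished or beams, key=lambda c: c[0])
    return best[1]


# Toy next-token table (invented); a real decoder would condition on the video.
TOY = {
    "bos": {"a": 0.6, "the": 0.4},
    "a": {"man": 0.5, "dog": 0.5},
    "the": {"man": 0.9, "dog": 0.1},
    "man": {"eos": 1.0},
    "dog": {"eos": 1.0},
}


def toy_step(prefix):
    return TOY[prefix[-1]]


print(beam_search(toy_step, "bos", "eos"))  # ['bos', 'the', 'man', 'eos']
```

In this toy example a greedy decoder (beam width one) would commit to "a" at the first step and end with probability 0.30, whereas a beam of three keeps "the" alive and finds the sequence with probability 0.36.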

4.4.1 Data preprocessing on MSR-VTT

Each key frame is fed into a pre-trained CNN model, which generates an N-dimensional feature vector from it. N is 4032, 4096, 2048, and 2048 for NASNet-Large, VGG-16, Inception-v4, and ResNet-152, respectively. The videos’ captions are derived from a number of sources and vary in length. We therefore remove all punctuation, lowercase, and tokenize the captions. The vocabulary is pruned to eliminate misspellings and rare terms. Caption length is fixed at twenty tokens: captions that exceed this length are clipped, and captions with fewer than twenty tokens are padded. For each token, a 768-D, 300-D, or 1024-D vector is generated using BERT, GloVe, or ELMo, respectively. In the Results section, we compare the performance of our model across these word embeddings. The bos and eos tokens denote the start and the end of the caption, respectively, while pad serves as a padding token and unk stands for unknown words.

4.4.2 Results and Analysis on MSR-VTT

We compare our method’s performance with that of state-of-the-art techniques on the MSR-VTT dataset using the BLEU, METEOR, ROUGE, and CIDEr metrics. The proposed framework is assessed both qualitatively and quantitatively. The qualitative results include the ground-truth captions as well as the captions generated by the proposed model. In the quantitative results, we compare the BLEU, METEOR, ROUGE, and CIDEr scores with previously published studies. For feature extraction in this work, we used four pre-trained CNN models: NASNet-Large, VGG-16, ResNet-152, and Inception-v4. Inception-v4 surpasses NASNet-Large, VGG-16, and ResNet-152 in the experiments.

Three word embedding approaches are used: BERT, ELMo, and GloVe. We evaluated each feature extraction technique with the BERT, ELMo, and GloVe embeddings. BERT outperforms ELMo and GloVe in these experiments, and the same pattern holds for features extracted with NASNet-Large, Inception-v4, ResNet-152, and VGG-16. Table 4 compares the performance of the word embeddings for NASNet-Large features. We can see that the BERT embedding produced superior outcomes for all metrics.

Table 4 compares the performance of all pre-trained feature extractors in the proposed framework. With the BERT embedding, the Inception-v4 feature extractor clearly topped all other models. The strength of Inception-v4 is that it extracts features using more inception modules than most other models. Inception-v4 outperformed earlier versions due to its capacity to learn additional parameters from big datasets such as MSR-VTT and its use of bidirectional embedding features.

When Table 4 is considered for comparison, the results for NASNet-Large with the various embedding strategies show that the BERT embedding gives the best result on practically every performance metric. As noted previously for the MSVD dataset, NASNet-Large also performs well on larger datasets such as MSR-VTT.

Table 4 also compares the performance of Inception-v4 across the three embeddings. With BERT, the model significantly outperformed the ELMo and GloVe embeddings. The learning capability of the network, along with parallel max pooling and more inception modules in Inception-v4, improved the overall results. Additionally, ResNet-152 is compared across the three embedding strategies. When the BERT embedding was incorporated, the model outperformed the scores of the other embeddings. The boost in virtually all scores is due to the additional layers that learn complicated features, residual connections that provide more information to each level of the hidden layers, and a context-sensitive vector coupled to the encoding stage.

Table 4 also compares VGG-16 with the three word embedding models. The model’s success with BERT demonstrates the importance of transformer-based embeddings that capture the context of words within the reference phrase. As a result, the next stage predicts better sentences based on the first stage’s input word. Across all the experimental analysis, BERT outperformed ELMo and GloVe for all the feature extraction techniques and evaluation metrics.

Figure 7 depicts a sample plot of the accuracy and loss. The red and green lines represent the training phase’s loss and accuracy, respectively. On the MSR-VTT dataset, the proposed model is trained for 300 epochs using a learning rate of 6e-4, the Adam optimizer, and a batch size of 64.

4.4.3 Qualitative Analysis

Figure 8 depicts sample input test videos taken from the MSR-VTT dataset. Table 5 reports the ground-truth and predicted captions for the sampled videos in Fig. 8 using several feature extractors in the proposed framework. The proposed framework did a good job of identifying objects and activities when Inception-v4 is used as the Feature Extractor with BERT as the embedding. The overall observation is that the inception modules contribute to the good performance when BERT is used. The model also takes advantage of the contextual hybrid embedding, which aids in generating relevant phrases or words.

4.4.4 Performance Comparison With Existing Works

We compared the proposed model to numerous existing works on the MSR-VTT dataset, and the findings are provided in Table 6. The proposed model achieved higher BLEU-4 and METEOR scores than the previous methods. For ROUGE and CIDEr, the proposed model achieved results comparable to the best scores. Overall, the proposed technique showed strong results on all the performance metrics. Thus, the findings reveal the model’s efficiency in capturing the best visual features and predicting suitable captions for videos.

Figure 9 compares the results of the four models across the performance metrics. The study shows that Inception-v4 outperforms all the other models because it has more inception modules and thus achieves greater accuracy.

5 Conclusion

Video captioning is motivated by its ability to make video more accessible to deaf and hard-of-hearing individuals, to help viewers concentrate on and recall information more readily, and to allow viewing in sound-sensitive environments. The proposed framework thoroughly investigates the spatial and temporal information in the video frame sequence. In this work, the video captioning encoder was improved by incorporating a novel contextual hybrid embedding block and short connections. Notably, the contextual block, also known as the semantic phrase vector, represents the video in the caption space within the encoder. It helps the decoder improve the model’s overall performance by providing additional information. We validated our method and analysis through experiments on the MSVD and MSR-VTT datasets. The proposed framework was compared to existing standard methods, and our methodology produced significantly better results. The proposed model was also evaluated with different feature extraction techniques, namely NASNet-Large, VGG-16, Inception-v4, and ResNet-152, and with several word embedding approaches, namely BERT, ELMo, and GloVe. When the proposed model with NASNet-Large alone is compared across all three embeddings, the BERT results on the MSVD dataset outperformed those obtained with the other two embeddings. BERT uses the context of the target word by analyzing the surrounding terms. This combination of NASNet and BERT word embedding outperforms the competition. As measured by the n-gram hit ratio, the results also indicate that the proposed model was more accurate than other state-of-the-art models at recognizing the exact collection of words in the dataset.
The results of the proposed model with NASNet-Large on the MSVD dataset and Inception-v4 Feature Descriptors on the MSR-VTT dataset demonstrate that a novel short encoder-to-contextual vector relationship improves performance. In addition, the defined model combination learns a new set of data for the encoder’s subsequent phases and a collection of data from the embeddings. Regarding word embedding strategies, BERT outperforms ELMo and GloVe by a wide margin. Future research could compare the results of additional feature extraction techniques, such as I3D. Another option is to analyze the model using different video datasets. The efficiency of the captioning process could be further improved by using a three-dimensional neural network in conjunction with two-dimensional CNNs.

Data Availability

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

References

Amirian S, Rasheed K, Taha TR, Arabnia HR (2020) Automatic image and video caption generation with deep learning: A concise review and algorithmic overlap. IEEE Access. 8:218386–400

Hochreiter S, Schmidhuber J (1997) Long short-term memory. Neural computation. 9(8):1735–80

Su Y, Xia H, Liang Q, Nie W (2021) Exposing DeepFake Videos Using Attention Based Convolutional LSTM Network. Neural Processing Letters. 53(6):4159–75

Gao L, Guo Z, Zhang H, Xu X, Shen HT (2017) Video Captioning With Attention-Based LSTM and Semantic Consistency. IEEE Transactions on Multimedia. 19(9):2045–55

Cao P, Yang Z, Sun L, Liang Y, Yang MQ, Guan R (2019) Image captioning with bidirectional semantic attention-based guiding of long short-term memory. Neural Processing Letters. 50(1):103–19

Zoph B, Vasudevan V, Shlens J, Le QV. Learning Transferable Architectures for Scalable Image Recognition. CoRR. 2017;abs/1707.07012

Szegedy C, Ioffe S, Vanhoucke V, Alemi AA. Inception-v4, inception-resnet and the impact of residual connections on learning. In: Thirty-first AAAI conference on artificial intelligence; 2017.

He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition; 2016. p. 770-8

Simonyan K, Zisserman A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In: The 3rd International Conference on Learning Representations (ICLR2015); 2015. Available from: https://arxiv.org/abs/1409.1556

Devlin J, Chang M, Lee K, Toutanova K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. CoRR. 2018;abs/1810.04805

Peters ME, Neumann M, Iyyer M, Gardner M, Clark C, Lee K, et al. Deep contextualized word representations. CoRR. 2018;abs/1802.05365. Available from: http://arxiv.org/abs/1802.05365

Pennington J, Socher R, Manning CD. GloVe: Global Vectors for Word Representation. In: Empirical Methods in Natural Language Processing (EMNLP); 2014. p. 1532-43. Available from: http://www.aclweb.org/anthology/D14-1162

Papineni K, Roukos S, Ward T, Zhu WJ. Bleu: a Method for Automatic Evaluation of Machine Translation. In: Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics; 2002. p. 311-8

Banerjee S, Lavie A. METEOR: An Automatic Metric for MT Evaluation with Improved Correlation with Human Judgments. In: Proceedings of the ACL Workshop on Intrinsic and Extrinsic Evaluation Measures for Machine Translation and/or Summarization. Ann Arbor, Michigan: Association for Computational Linguistics; 2005. p. 65-72

Lin CY. ROUGE: A Package for Automatic Evaluation of Summaries. In: Text Summarization Branches Out. Barcelona, Spain: Association for Computational Linguistics; 2004. p. 74-81. Available from: https://www.aclweb.org/anthology/W04-1013

Vedantam R, Zitnick CL, Parikh D. CIDEr: Consensus-based Image Description Evaluation. CoRR. 2014;abs/1411.5726

Vinyals O, Toshev A, Bengio S, Erhan D. Show and Tell: A Neural Image Caption Generator. CoRR. 2014;abs/1411.4555

You Q, Jin H, Wang Z, Fang C, Luo J. Image Captioning with Semantic Attention. CoRR. 2016;abs/1603.03925

Lin JC, Zhang CY. A New Memory Based on Sequence to Sequence Model for Video Captioning. In: 2021 International Conference on Security, Pattern Analysis, and Cybernetics (SPAC); 2021. p. 470-6

Zhang Z, Xu D, Ouyang W, Zhou L (2021) Dense Video Captioning Using Graph-Based Sentence Summarization. IEEE Transactions on Multimedia. 23:1799–810

Gao L, Li X, Song J, Shen HT (2020) Hierarchical LSTMs with Adaptive Attention for Visual Captioning. IEEE Transactions on Pattern Analysis and Machine Intelligence. 42(5):1112–31

Liu S, Ren Z, Yuan J (2021) SibNet: Sibling Convolutional Encoder for Video Captioning. IEEE Transactions on Pattern Analysis and Machine Intelligence. 43(9):3259–72

Xu N, Liu A, Nie W, Su Y (2018) Attention-in-Attention Networks for Surveillance Video Understanding in Internet of Things. IEEE Internet of Things Journal. 5(5):3419–29

Bin Y, Yang Y, Shen F, Xie N, Shen HT, Li X (2019) Describing Video With Attention-Based Bidirectional LSTM. IEEE Transactions on Cybernetics. 49(7):2631–41

Song J, Guo Y, Gao L, Li X, Hanjalic A, Shen HT (2019) From Deterministic to Generative: Multimodal Stochastic RNNs for Video Captioning. IEEE Transactions on Neural Networks and Learning Systems. 30(10):3047–58

Yang Y, Zhou J, Ai J, Bin Y, Hanjalic A, Shen HT, et al. Video Captioning by Adversarial LSTM. IEEE Transactions on Image Processing. 2018;27(11):5600-11

Zheng Q, Wang C, Tao D. Syntax-Aware Action Targeting for Video Captioning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2020.

Srinivasu PN, SivaSai JG, Ijaz MF, Bhoi AK, Kim W, Kang JJ. Classification of Skin Disease Using Deep Learning Neural Networks with MobileNet V2 and LSTM. Sensors. 2021;21(8). Available from: https://www.mdpi.com/1424-8220/21/8/2852

Yang Y, Zhang L, Du M, Bo J, Liu H, Ren L, et al. A comparative analysis of eleven neural networks architectures for small datasets of lung images of COVID-19 patients toward improved clinical decisions. Computers in Biology and Medicine. 2021;139:104887. Available from: https://www.sciencedirect.com/science/article/pii/S0010482521006818

Alok N, Krishan K, Chauhan P. Deep learning-Based image classifier for malaria cell detection. Machine Learning for Healthcare Applications. 2021:187-97

Negi A, Kumar K, Chauhan P. Deep neural network-based multi-class image classification for plant diseases. Agricultural informatics: automation using the IoT and machine learning. 2021:117-29

Kumar K, Nishanth P, Singh M, Dahiya S (2022) Image Encoder and Sentence Decoder Based Video Event Description Generating Model: A Storytelling. IETE Journal of Education. 63(2):78–84

Kumar K, Shrimankar DD (2018) F-DES: Fast and Deep Event Summarization. IEEE Transactions on Multimedia. 20(2):323–34

Negi A, Kumar K. Classification and detection of citrus diseases using deep learning. In: Data science and its applications. Chapman and Hall/CRC; 2021. p. 63-85

Vision OOSC. OpenCV - Object Detection. Accessed: 12-12-2021. Available from: https://docs.opencv.org/3.4.3/df/dfb/group__imgproc__object.html

Zoph B, Vasudevan V, Shlens J, Le QV. Learning transferable architectures for scalable image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition; 2018. p. 8697-710

Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez AN, et al. Attention Is All You Need. CoRR. 2017;abs/1706.03762

Chen D, Dolan W. Collecting Highly Parallel Data for Paraphrase Evaluation. In: Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies. Portland, Oregon, USA: Association for Computational Linguistics; 2011. p. 190-200

Xu J, Mei T, Yao T, Rui Y. Msr-vtt: A large video description dataset for bridging video and language. In: Proceedings of the IEEE conference on computer vision and pattern recognition; 2016. p. 5288-96

Venugopalan S, Rohrbach M, Donahue J, Mooney R, Darrell T, Saenko K. Sequence to Sequence – Video to Text. In: 2015 IEEE International Conference on Computer Vision (ICCV); 2015. p. 4534-42

Yan C, Tu Y, Wang X, Zhang Y, Hao X, Zhang Y et al (2020) STAT: Spatial-Temporal Attention Mechanism for Video Captioning. IEEE Transactions on Multimedia. 22(1):229–41

Sah S, Nguyen T, Ptucha R (2020) Understanding temporal structure for video captioning. Pattern Analysis and Applications. 23(1):147–59

Hao X, Zhou F, Li X. Scene-Edge GRU for Video Caption. In: 2020 IEEE 4th Information Technology, Networking, Electronic and Automation Control Conference (ITNEC). vol. 1; 2020. p. 1290-5

Xu J, Wei H, Li L, Fu Q, Guo J (2020) Video Description Model Based on Temporal-Spatial and Channel Multi-Attention Mechanisms. Applied Sciences. 10(12):4312

Wei R, Mi L, Hu Y, Chen Z (2020) Exploiting the local temporal information for video captioning. Journal of Visual Communication and Image Representation. 67:102751

Nabati M, Behrad A (2020) Video captioning using boosted and parallel Long Short-Term Memory networks. Computer Vision and Image Understanding. 190:102840

Aafaq N, Akhtar N, Liu W, Gilani SZ, Mian A. Spatio-temporal dynamics and semantic attribute enriched visual encoding for video captioning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition; 2019. p. 12487-96

Chen T, Zhao Q, Song J. Boundary Detector Encoder and Decoder with Soft Attention for Video Captioning. In: Asia-Pacific Web (APWeb) and Web-Age Information Management (WAIM) Joint International Conference on Web and Big Data. Springer; 2019. p. 105-15

Lin JC, Zhang CY. A New Memory Based on Sequence to Sequence Model for Video Captioning. In: 2021 International Conference on Security, Pattern Analysis, and Cybernetics (SPAC). IEEE; 2021. p. 470-6

Pei W, Zhang J, Wang X, Ke L, Shen X, Tai Y. Memory-Attended Recurrent Network for Video Captioning. In: 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2019. p. 8339-48

Yadav N, Naik D. Generating Short Video Description using Deep-LSTM and Attention Mechanism. In: 2021 6th International Conference for Convergence in Technology (I2CT). IEEE; 2021. p. 1-6

Nabati M, Behrad A (2020) Multi-sentence video captioning using content-oriented beam searching and multi-stage refining algorithm. Information Processing & Management. 57(6):102302

Wang J, Wang W, Huang Y, Wang L, Tan T. M3: Multimodal memory modelling for video captioning. In: Proceedings of the IEEE conference on computer vision and pattern recognition; 2018. p. 7512-20

Yang Y, Zhou J, Ai J, Bin Y, Hanjalic A, Shen HT et al (2018) Video captioning by adversarial LSTM. IEEE Transactions on Image Processing. 27(11):5600–11

Shekhar CC, et al. Domain-specific semantics guided approach to video captioning. In: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision; 2020. p. 1587-96

Funding

No funds, grants, or other support was received.

Ethics declarations

Conflicts of interest

The authors have no competing interests to declare.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Naik, D., C D, J. Video Captioning using Sentence Vector-enabled Convolutional Framework with Short-Connected LSTM. Multimed Tools Appl 83, 11187–11213 (2024). https://doi.org/10.1007/s11042-023-15978-7

DOI: https://doi.org/10.1007/s11042-023-15978-7