Abstract

Skin cancer is one of the world’s scariest diseases, having taken the lives of thousands of people. It can be treated if diagnosed at the right time. According to WHO, every year, approximately 3 million non-melanoma and 130,000 malignant melanomas occur worldwide. Many existing technologies have demonstrated that computer-aided systems can be useful in the early identification of cancer. One of the major challenges in computer-aided diagnostic systems is accurate segmentation of the lesion and extraction of features for successful classification and detection. The study’s main goal is to recognize and segment cancerous parts of skin from the collected samples and then categorize them into separate affected and non-affected regions. The proposed model first performs the identification and separation of infected regions from the sample. This is performed by converting the RGB cell image into a greyscale colour scale. The background subtraction approach is used to track only cell structures from the image by eliminating the background, and region props are applied for segmentation from skin images. In the second phase, features from the segmented images are extracted. These features include homogeneity, contrast, energy, correlation, and some hybrid features. In the third phase, the differential analyzer approach (DAA) algorithm is used to select the significant features. In the final phase, the efficiency of the suggested optimization method is validated using different classifiers. The suggested methodology is applied to an ISIC 2018 dataset available. This result outperforms all other published papers that used the same dataset. Classification accuracy is notably higher in comparison to other approaches not following the DAA optimization algorithm. Validation of results is further extended through feature reduction ratio and still remarkable results concerning classification accuracy of 96% are achieved. The validity of the approach is examined using different classifiers including KNN, SVM, Naïve Bayes, decision tree, and random forest-based mechanism.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Cancer is one of the world’s deadliest diseases, claiming the lives of countless people. A genetic variant causes cancer, which allows the cells to develop irregularly. Cancer can occur in numerous ways, including leukaemia, lung cancer, meningioma, melanoma, and several others [5]. The importance of skin elements in the human being is shown by the fact that even little changes in their functioning can have an impact on other body parts and organs [64]. Skin is exposed to the environment. As a result, skin infections and contamination are more common. Skin cancer caused by melanoma affects millions of people [24].

Skin cancer is caused by harmful ultraviolet radiations and the abnormal growth of skin cells called melanocytes [71]. Melanocytes produce colour pigments known as melanin in their specific cells known as melanosomes, which are then transferred to the adjoining epidermis through the use of the dendritic process [39]. During the growth period, melanocytes grow from the neural crest and move to different spots throughout the skin during the growth processes [70]. Skin cancer can be categorized into melanoma and non-melanoma skin cancer. As per the report of the American Cancer Society, the approximated increase in the prevalence of melanoma in 2018 is 91,270, with a death rate of 9320 [63]. According to WHO, every year, approximately 3 million non-melanoma and 130,000 malignant melanomas occur worldwide [7]. As per a new study on Asian skin cancer trends, fair-skinned people had a three-fold higher risk of skin cancer than those with darker skin [68]. Melanoma is categorised into 5 stages from stage 0 to Stage 4 as shown in Fig. 1 [27, 33].

-

Stage 0: This is the first stage of melanoma and specifies that the cancerous cells are present but only in the epidermis layer of the skin and have not grown to any other part of the body.

-

Stage 1: This stage indicates that cancer has reached outside the epidermis but not to any other part of the body, and it lies between 0.8 mm and 2.0 mm in thickness.

-

Stage 2: In this stage, the thickness of the melanoma is bigger than 2.0 mm but less than 4.0 mm, just like in stage 1. This also does not spread to any other part.

-

Stage 3: The cancer cells have started to grow in the dermic layer and have taken place into contiguity with the nerves at this point. The thickness of melanoma at this stage is greater than 4.0 mm.

-

Stage 4: This stage indicates that the melanoma has grown in other organs of the body. At this stage, it is arduous to treat melanoma [6].

Stages of skin cancer [33]

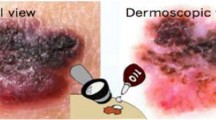

Early diagnosis of this disease can provide appropriate treatment for melanoma. In recent years, several diagnostic techniques have been designed to help with identifying skin cancer outside of clinical examination [26]. Tests and procedures to identify skin cancer include biopsy, imaging tests, adhesive patch biopsy, electrical impedance spectroscopy, multispectral imaging, ultrasonography, optical coherence tomography, reflectance confocal microscopy, photoacoustic imaging, Raman spectroscopy, other radiological techniques like computed tomography (CT), magnetic resonance imaging (MRI), and many others are among them [12, 30, 52]. Since skin lesions and healthy cells are so similar, visual examination during a physical screening of skin lesions might lead to an incorrect diagnosis [45]. During the past few years, dermoscopy has been used to assist doctors in improving the screening for melanoma skin lesions [48]. Dermoscopy is a non-invasive imaging technique that uses polarized light to create a high-resolution image of a skin lesion [36]. It removes the skin’s surface reflection, allowing you to examine the morphology’s underlying features. Even though the technique enhances diagnosis accuracy over the visual examination, dermatologists still find it difficult to screen through dermoscopy images attributed to the prevalence of artefacts and reflections in the images [11]. Since many cancer boundaries are undetectable or hazy, visual identification is challenging, time-consuming, subjective, and error-prone [69].

Computer-Aided Diagnosis (CAD) has become critical in removing all of these obstacles, assisting specialists in incorrectly interpreting images and reaching the correct diagnosis conclusion [59]. Over the last two decades, there has been a surge of interest in the automated evaluation of digitised images to assist doctors in distinguishing early melanoma from benign skin lesions [46]. Additionally, such systems abbreviate the duration it takes to identify a problem and improve the accuracy of the final results [54]. As a result, automated computerised diagnostic tools for skin lesions are critical in assisting and supporting dermatologists in making decisions [47]. It has been seen that melanoma early identification increases a patient’s chance of survival. In cases where it is not caught early, its death rate has also dramatically increased. If discovered and treated promptly, malignant melanoma is always curable. On the other hand, an illness with a late diagnosis is harder to treat and may even be fatal because it has the potential to advance and spread to other body areas.

1.1 Novelty of proposed system

-

Proposed a novel system for skin cancer classification and detection using image processing.

-

During pre-processing bottom hat filter is applied to tackle noise in terms of hairs, and DCT and Color space conversion are used for performing image enhancement

-

Background subtraction method is implemented along with mid point analysis to enhance the system performance during segmentation.

-

A novel differential analyser approach has been proposed in this paper to enhance the performance of the proposed system during feature extraction using slope-based mechanism for retaining as well as rejecting the features from skin cancer images.

This work focus on the development of a novel system for skin cancer classification and detection. The several disciplines included in this study—image pre-processing, segmentation, feature extraction and classification—all contribute significantly to the extraction of the skin lesion, its classification and detection. The rest of the paper is organized as under section 2 presents Dataset and Tools used, section 3 gives a brief review of the related work, section 4 gives the proposed model and phases of the proposed approach, and section 5 gives the evaluation of the proposed system and section 6 is the last section conclude the overall work.

2 Dataset and tools

The dataset used is SIM ISIC [20], a published dataset corresponding to skin cancer detection as shown in Fig. 2. The test results are also available with the dataset. This means a predefined training model can be applied to test the accuracy of the system. The images contained within the dataset have both affected and non-affected skin tissues. The coordinates of the affected skin were also specified within different files. Simulation is conducted within Math works Mat lab 2018b. To design our proposed system, we used the visualization tools provided within the Mat lab. Visualization tools help in building an interactive interface that is quite easy to use.

Sample dataset [47]

3 Related work

In recent years, different skin cancer (melanoma) detection techniques have been developed by segmenting and extracting multiple dermoscopic features. Melanoma detection in dermoscopic images typically involves four stages. The first stage is preprocessing, which removes all artefacts, hair, and other noisy effects from the image. For this purpose, various filters can be used. The second stage is segmentation, which divides the input image into lesion regions and normal skin. In other words, this stage determines the correct boundary in the given image. The extraction of features is the third stage. It gathers the various characteristics or features of the lesion, such as shape, colour, texture, and so on. Classification is the last stage. The classifier had to select whether the given lesion is benign or malignant at this point. In the upcoming section, a brief review of the related study is presented year-wise.

Lee et al., 1997 [41] Dull Razor hair removal is proposed as a new technique that employs a bilinear interpolation method to eliminate hair pixels. This technique comprises three basic steps: (1) morphological closing operation to locate the location of dark hairs; (2) bilinear interpolation to replace hair pixels; and (3) an adaptive median filter to smooth the final output. Celebi et al., 2009 [17] have demonstrated a method for dermoscopy image enhancement. The approach finds the ideal weights to translate existing Color images to the analogous grey-level image by maximising Otsu’s histogram bimodality measure. Zhou et al., 2011 [1] have developed a new mean shift-based gradient vector flow (GVF) technique for directing inner and outer dynamism in the right direction. The calculation of force vectors in the imaging domain is the starting point for the energy force. The mean shift of the pixels in the region constrains the deformation of the region enclosed by the evolving border. To accomplish the segmentation process in images, they integrated a mean-field term within the standard GVF objective function. Barata et al., 2012 [21] have developed a new method for extracting melanin regions from dermoscopy images that requires a series of steps. The dermoscopy image is first subjected to a preprocessing technique. A set of directed filters and linked component analysis (CCA) are then used to identify the pigmented network “lines”. Finally, attributes from the discovered region are retrieved and applied to train an AdaBoost algorithm to identify each lesion in terms of the melanin region’s presence. Abbas et al., 2013 [2] have demonstrated a hair removal technique that identifies and removes hair in the CIE L*u*v* colour space using morphological operations and thresholding. On these fine systems that are based on their illuminance, a hair mask was constructed using a defined thresholding method. Finally, a morphological operation replaced each masked pixel with an average of its non-masked neighbours.

Aswin et al., 2014) [56] have presented a novel approach for detecting melanoma based on a genetic algorithm (GA) and ANN methods. The dull-Rozar technique is used for removing hair from the images, and regions of interest (ROI) have been obtained using the Otsu thresholding technique. In addition, the GLCM method was used to retrieve distinctive features from segmentation results. Following that, a hybrid ANN and GA classification algorithm was used to classify lesion images into malignant and benign classes. Lee and Chen, 2014 [44] have described a technique for finding an ideal threshold value and correctly delineating malignant boundaries from skin scans based on the fuzzy c-mean approach (FCM) and a type-2 fuzzy set algorithm. Throughout the preprocessing stage, they also used the 3D colour constancy method to reduce shadows and the impacts of skin tone changes in images.

Giotis et al., 2015 [16] proposed MED-NODE, a unique expert system for computer-assisted cancer detection. Their objective is to use simple digital photographs of tumours and three types of features to distinguish melanoma from nevocellular naive. Collected descriptors about the texture and colour of tumours are combined with a set of subjective observations about additional visual aspects of the lesions to determine if a tumour is cancerous or non-cancerous. Abuzalegah et al., 2015 [49] proposed a system to recognize and eliminate the hairs from the lesion. To remove the hairs, the authors created directional filters by deducting the Gaussian filter from the isotropic filter. The hair gap is then filled with the mean of edge pixels, which is consequently saved as a pixel value at the gap’s location. Dhane et al., 2015 [75] performed a comparative analysis of five different filters to remove the impulse/random noise using mathematical morphological procedures. The goal of this paper is to choose the ideal filter for the pre-processing of digital wound images taken with a camera 75 randomly chosen wound photos from the generated image collection and the online chronic wound image database were subjected to these five filters.

Premaladha et al., 2016 [10] has developed a new segmentation method called Normalized Otsu’s Segmentation (NOS), which regulates the issue of constant luminance to separate the damaged skin tissues from the normal tissues. In the same year, Premaladha et al. [10] implemented the Contrast Limited Adaptive Histogram Equalization (CLAHE) approach and a median filter to enhance the image contrast. Dhane et al., 2016 [72] proposed a novel technique for scar detection by using digital photographs acquired with a portable optical camera. The method for clustering that is suggested uses a spectral approach and is based on an affinity matrix. The spectral clustering (SC) process entails creating a Laplacian similarity matrix based on the Ng-Jorden-Weiss method. Premaladha et al., 2016) [10] have developed classification techniques, such as Deep Learning-based Neural Networks and Hybrid Ad Boost-Support Vector Machine (SVM) algorithms, are fed with fifteen features developed and extricated from the ROI of images.

In the 2017 year, a simple weighted Otsu thresholding method based on the Otsu threshold was proposed by Zortea et al., [25]. The first threshold is intended by considering all of the testers in the image as well as cross-diagonal testers weighted by an independent estimate derived from skin pixels in the peripheral region. The method is straightforward and effective for lesions obscured by coarse hairs. Xie et al., 2017 [22] have proposed a self-generating neural net to separate skin lesions. Coloured edges and texture-based features from the lesion are extracted. The proposed work divides skin cancer into two categories: cancerous and non-cancerous.

Xie et al., 2017 [22] introduced a skin lesion classification system that divided lesions into noncancerous and cancerous groups. The proposed paradigm is divided into three stages. Initially, a self-generating NN was employed to recover diseases from images. The data on boundary, appearance and colour details were retrieved in the second phase. The dimensionality of the features was reduced using principal component analysis (PCA), permitting the optimal amount of characteristics to be determined. In the last step, lesions were classified using a NN ensemble approach. In the same year, Satheesa et al. [76] presented a non-invasive computerized dermoscopy system for diagnosing skin lesions that take into account the predicted depth of lesions. It is proposed to use 2D dermoscopic pictures to rebuild a 3D skin lesion. The adaptive snake technique is applied to obtain ROI from the data. The calculated depth map is fitted to the existing 2D surface to produce the 3D reconstruction. The depth and 3D form features are extracted based on the generated 3D tensor structure. Feature selection is used to inspect the impact of quality and combinations of features on decision-making. Choudhary and Biday, 2017 [40] has suggested an ANN-based skin cancer diagnosis model which employs a maximum entropy thresholding approach to segment images. To retrieve distinctive properties of melanomas, a grey-level co-occurrence matrix (GLCM) was used. The input images were categorised into either malignant or benign stages of melanoma by employing a feed-forward ANN.

To determine the optimum technique for chromatic aberration, RGB pictures of skin ulcers were analysed in a comparative analysis of colour constancy approaches and found that the weighted grey edge method is best (Maity et al. 2018) [8]. A unique segmentation approach based on full-resolution convolutional networks (FrCN) was presented by Al-Masni et al., 2018 [5]. The recommended FrCN technique captures full-resolution features from each pixel in the input data without any intermediate steps, eliminating the need for pre-or post-processing processes such as artefact removal, illumination, or additional strengthening of the segmented skin lesion boundaries.

A system for melanoma and non-melanoma classification was proposed by Savy Gulati et al., 2019 [61] in which preprocessing measures are first carried out to clarify and improve dermoscopic images by eliminating unwanted artefacts. Then, using active contour-based segmentation, the primary area of interest is constructed. Moreover, colour, structure, and texture are retrieved and fed into the classification model (SVM) for efficient and precise malignant and non-malignant tumour categorization. Carrera and Dominguez, 2019 [43] have implemented a histogram equalization approach to enhance image contrast, and a dilation approach is applied to eliminate hairs or skin defects.

In 2020 year, Monika et al. [18] implemented a dull razor approach proposed by [41] to eliminate all unnecessary hair elements from the affected skin, and then the image is smoothed with a Gaussian filter. The median filter is applied for DE noise and to preserve the borders of the lesion. For skin lesion classification, a framework for extracting deep features from various well-developed and pre-trained deep CNNs was proposed by Mahbod et al., 2020 [28]. Deep features were generated using pre-trained Alex Net, ResNet-18, and VGG16, which were then input into a multi-class SVM Classification model. The results of the classifiers were then merged to classify the data. On the ISIC 2017 database, the proposed model was validated. Monika et al., 2020 [18] used Colour-based k-means clustering in the segmentation phase since colour is a significant characteristic in determining the kind of malignancy. Asymmetry, border, colour, diameter (ABCD), and grey level co-occurrence matrix (GLCM) are used to extract statistical and textural features.

Murugan et al., 2021 [51] have proposed work that uses an image processing approach for the classification and detection of skin melanoma. In the preprocessing phase, the median filter is applied to the region of interest (ROI), and the mean shift method is used to separate the ROI. To extract the features from ROI Moment Invariant features like Gray Level Run Length Matrix and Gray Level Co-occurrence Matrix were used. Classifying the malignant and non-malignant cells is achieved by using support vector machines, probabilistic neural networks, and random forests.

A comparative study of various techniques for skin cancer classification and detection on the different dataset are presented in Table 1. Satheesa et al. [76] used three different datasets ISIC, PH2 and ATLAS and applied an adaptive snake technique for segmentation, 3D reconstruction of dermoscopic images for feature extraction and SVM, AdaBoost and BOF for classification. Codella et al. [19] implemented FCN for segmentation and CNN, U-NET and DRN for classification on the ISIC dataset and achieved an accuracy of 76%. Zortea et al. [25] has used Microscopic images and applied SWOT for segmentation. Smaoui and Derbel [66] have applied Multi-threshold Otsu for segmentation, ABCD rule for feature extraction and TVD rule for classification on the PH2 dataset and achieved an accuracy of 90%. Carrera and Dominguez [43] have achieved an accuracy of 75% by applying the Optimal threshold for segmentation and SVM, and DT for classification on the ISIC dataset. Al-Masni et al. [5] have applied FCRN on the ISIC and PH2 data set for the segmentation of cancer images with 95% accuracy. Murugan et al. [50] has achieved an accuracy of 85% by applying the Watershed approach for segmentation, the ABCD rule for feature extraction and SVM, KNN, and RF for classification on the ISIC dataset. Thaajwer and Ishanka [3] have used the ISIC dataset and achieved an accuracy of 85% by implementing the Otsu segmentation and Watershed method for segmentation and NN, SVM, and CNN for classification. Khan et al. [38] have proposed an Improved K-means clustering for segmentation and SVM for classification on the DERMIS dataset and achieved an accuracy of 96%. Kassem et al. [35] have applied Google net for feature extraction and Multiclass SVM for classification on the ISIC dataset with an accuracy of 81%. Albert [4] has applied CNN and SVM on the Med Node dataset and achieved an accuracy of 91%. Hohn et al. [32] achieve an accuracy of 92% by applying deep learning CNN. Jiang et al. [34] applied DRA-Net on the Histopathological Image set and achieved an accuracy of 86%. Murgan et al. [51] have used the mean shift method for segmentation and SVM, probabilistic NN, and random forests for classification and achieved an accuracy of 89%.

In preprocessing, various challenges are observed in the literature. (i) The original datasets contain images with high resolution, which impose a high cost of calculation. As a result, images must be scaled because direct scaling can cause structural deformation in lesions. (ii) In cases where the lesion and skin tone are very similar, the images may have low contrast. It’s perplexing to identify the dissimilarity between an ordinary and a malicious mole in these instances. (iii) Another issue is the existence of noise in the images, such as hair and noise, which might make it difficult to analyse the imaged skin lesions properly. In segmentation, images exhibiting higher inconsistencies at the lesion centre compared to the boundary inconsistencies yields poor segmentation results. Furthermore, in some situations, a higher threshold value set for segmentation causes a regression area at the image centre, resulting in the false detection of lesions. Many features that aren’t required for classification complicate the classifier and increase computational time, lowering classification accuracy. The best aspects of the skin cancer photographs should depict the region’s characteristics. As a result, the finest method to identify lesion features is required. Therefore, a novel system is required to develop which can handle these all challenges and achieve better performance than the state-of-the-art methods. In the next section, the proposed system methodology will be discussed.

4 Proposed model

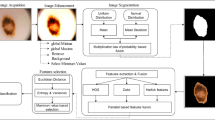

The proposed model for skin cancer classification and detection consists of five different phases. Each phase of the proposed model is explained in this section and the overall architecture is shown in Fig. 3.

4.1 Image preprocessing

The image preprocessing in the proposed work is accomplished by applying an ensemble-based approach. This pre-processing phase consists of consists removing hairy parts, enhancing, and restoring the image. This is perhaps the most crucial to achieving high classification accuracy. A two-fold approach is used in the pre-processing. In the first part, long thin objects in the form of hairs will be identified and neighbourhood pixel colour and intensities will be replaced with the current pixel. This overall operation will be under the bottom hat filtering mechanism.

The overall operation of hair removal consists of reading the image and converting the RGB image into a grayscale. RGB images contain a lot of information about colour which we do not need during this operation. After conversion, the result is stored within [x,y]. The image will be evaluated corresponding to the region boundaries obtained with the cross-shaped structured element. P_size variable determines the maximum size of the allowed object. In case the maximum size (P_Size) is exceeded the threshold values, these values will be replaced with the neighbourhood intensity levels denoted with Object−1(x,y) by using algorithm 1.

In the next part, image enhancement takes place. Image enhancement highlights the useful information present within the image and reduces the redundant information within the image as well. The proposed model used individual colour-checking and stretching mechanisms to introduce uniformity at each point within the image. To overcome the non-uniformity illuminations contrast enhancement strategy with discrete cosine transformation (DCT) [13] is applied to achieve the same. The overall operation of enhancement with DCT and contrast enhancement is given as under Fig. 4

-

Convert RGB image to HSV.

-

Apply DCT

-

Apply correction factor

-

H=CF*H

-

S=CF*S

-

V=CF*V

-

Apply inverse DCT

-

Convert HSV to RGB

An RGB image will be converted to HSV colour space. The letters “R”, “G”, and “B” stands for red, green, and blue, which act as the primary colour. “H”, “S”, and “V” indicate a hue, saturation, and value. To apply DCT, the image is first portioned into orthogonal parts. This means the image is divided into 8 × 8 blocks. The standard work within DCT is from top to bottom and from inside to outside, scanning each block [14]. Overhead scanning of this sort requires overhead calculations, and boundary evaluation is an issue. In the proposed work, this scanning procedure is revered. Better boundary value identification is generated through this approach. The result generated from inside-outside scanning and the suggested approach are shown in Fig. 5.

After finding the boundary value the next step is image compression. For this, a quantization procedure will be applied to compress the blocks. During compression, frequencies that are less important are discarded. The correction coefficient for this purpose is required to be evaluated. This is given through the following equation

Here “CF” is the correction factor. \( LL\underset{i}{\to } \)is the similarity-valued matrix of the adaptive histogram equivalent image and LLi is the singular valued matric of the input image [57]. The correction factor (CF) will be multiplied by the colour channel. It is observed that the scale factor of “CF” gives better clarity and hence CF is used as the scale factor in contrast enhancement. The result corresponding to image enhancement is shown in Fig. 6.

4.2 Segmentation

Region separation is critical as it will be used for the analysis of individual regions for abnormalities. The novelty in the region separation phase is in terms of the direction of processing. Normally, processing in the region separation phase is performed through an inside-outside test classifying the edge within the image. The issues are discussed in [23] but in the proposed work this process is reversed. Contemporary region separation-based mechanism boundary identification is an issue. Thus, cancerous region extraction may not be appropriate. The extracted regions with the proposed approach are given in Fig. 7. The advantage of reversing the direction includes the minimum time in the identification of the region of interest in the proceeding pass in the segmentation. Starting the process from inside and moving towards the outside cannot be terminated until the boundary is encountered, but using the proposed approach, control will move from the boundary of the region and move inwards, towards the nucleus, establishing threshold points where variation in intensity levels occurs. If no deviation is detected while scanning 50% of the region, the entire region will be labelled as non-critical.

Region separation phases initially identify the boundary (B) through the colour intensity (clr) levels. To identify the region (R), colour plays a vital role. The colour is identified using Eq. 2.

The colour comparison with the neighbouring pixel identifies the region boundary (RB) and is stored within the Bx,y variable where B is the boundary. Since there are multiple regions, multiple boundaries corresponding to different regions will be detected. Therefore, we need the subscripted variable ‘I’ with the boundary variable.

The diameter of the region is estimated to obtain the dimension of the region. The dimension of the region will be divided by “2” to obtain the radius, and this will give the nucleus (Ni) of the region is determined using Eq. 3

The region’s boundaries will give the Centre of the region. The normal flow of the system will now be from the \( \max \left({B}_{x_i,{y}_i}\right)\ \mathrm{nucleus}. \)

The region extraction phase is critical in the process of proposed segmentation. The process first identifies the critically labelled segments. The region corresponding to the critical part has boundary values. A set of intensity (Int) values will be stored within a buffer (Bfi) corresponding to these boundary values. These intensity values corresponding to the region will be used in the background elimination and to extract the region of interest. The nucleus intensity values greater than 0 will be stored within the buffer for further processing and values less than or equal to 0 will be discarded. The primary equations used for the same region extraction are based on the valid nucleus determination given within Eq. 4.

The result in terms of region separation and extraction is more accurate through improved inside-outside test and region extraction mechanism shown in Fig. 8.

Using the proposed approach region overlapping issue is resolved. The properties extracted, and their information extracted are as under in Table 2.

For the skin lesion segmentation, the object is represented individually by the centroid, major and minor axes. Figure 9 shows the outcome of this phase.

A background subtraction mechanism is used to remove the background (Bgi) colour and the foreground image is retained. The background colour with the skin image has a maximum of 255 intensity levels. The region extracted from the region separation and the intensities extracted from the region separation are used in the background subtraction process. This is expressed in Eq. 5.

This intensity will be subtracted from the rest of the image to obtain the image without a background. After subtracting the background, the region of interest is obtained. The highest correlated segments are obtained using a correlation-based mechanism. The most positively correlated segments will be retained, and the rest of the segments will be rejected. The relationship between the components is extracted using bwconncomp. The coordinates of the region having the highest correlation are obtained using Regionprops. The overall working of the proposed method is given below in algorithm 2.

Algorithm 2

4.3 Feature extraction and selection

The feature extraction from the image is the next phase in skin lesion detection. Feature extraction is accomplished with the help of the bag of feature approach. The novelty in this approach is introduced by first of all modelling the assignments of local descriptors. These are termed contributor functions. After this multiple assignment strategy is used for reconstructing local features using neighbouring visual words from the vocabulary. Reconstruction weights are calculated from quadratic programming. These weights will be used to form contributor functions. Features possessing the highest weight will be extracted and retained. Feature selection is accomplished by a novel differential analyser approach (DAA). Each feature is supposed to possess certain linearity. A differential approach used will try to fit the feature values over linear dimensions. Features not fitting the dimension will be rejected.

Color, shape, and texture features were discovered to be the most relevant for the detection of chronic melanoma images [60]. These features are mentioned below: Table 3 shows the different types of features and their counts that were retrieved from the image.

-

Shape Features: These represent an area, the length of the parameter’s major and minor axes, and some other features that differentiate the extracted lesion from other lesions.

-

Color Features: This is an important factor in distinguishing normal skin from abnormal skin lesions. As a result, the lesion can be distinguished using this feature.

-

Texture features: these are extracted from the image’s lesion only. Homogeneity, energy, colour, contrast, energy, entropy, variance, standard deviation, and so on are examples of these characteristics. Texture features are obtained at 0 and 90-degree angles.

-

Hybrid features: all of the features listed above are merged in this group.

To choose the relevant features, the differential analyzer approach (DAA) algorithm is used [37]. DAA is a meta-heuristic technique that works in the same way as genetic algorithms [58]. Each population unit can be either male or female. Every child is regarded as a potential solution to the problem. The male population is referred to as “X,” while the female population is referred to as “Y.” The number of repetitions required for integration is determined by calculating the Max and Min values from the extracted parameters, as shown in Eq. (6).

Where “I” is the size of the population, dx denotes the highest statistical variance between the maximum and minimum population of males, and dy represents the highest statistical variance between the maximum and minimum population of females. The total number of iterations allowed is calculated using Eq. (7).

The ‘S’ variable indicates the steps, the total number of repetitions after which the algorithm automatically terminates. To obtain offspring representing a possible solution, the crossover factor will be calculated from both the male and female populations. Equation (8) is used to compute the crossover factors represented by XC and YC.

The population’s selection for offspring is determined by XC and YC. Equation (9) is used to produce the next generation of offspring.

Xn and Yn are the next male and females selected to produce offspring. The fitness of these parameters is evaluated using a linear fitter equation, represented by Eq. (10) where “m” denotes the slope and “c” denotes the interceptor.

The objective function for retaining current offspring is in terms of error. This error rate is necessary to be less than the prescribed tolerance otherwise, the offspring is rejected. The error is evaluated using Eq. (11).

If the produced offspring fulfils the optimization function, it is retained. Equation (12) represents the error rate required to be less than the prescribed tolerance.

‘ε’ is the specified tolerance, which must be less than 0.001. Otherwise, optimality is not attained, and the process is repeated.

The feature set extracted from the image equivalent to the specific region determines the optimal values that can be plotted linearly. For example, the range of values extracted from the region image has local maxima represented as dx = 10 and dy = 20. The minimum set of values obtained is given as (10,20) and the maximum value is given as (20,40). We calculate steps = dy = 20. The value of xincr will be 0.5, and the value of yincr will be 1. The range of values obtained through the DAA approach is listed in Table 4.

These values of X and Y are compared against the extracted values. Out of the range of values, values closely matched with the line coordinates are retained, and the rest of the values not satisfying the line coordinates are rejected. Optimal feature extraction will be achieved after the end of this algorithm.

This algorithm extracts shape, size, and texture features from region images. Following the extraction, each feature is subjected to some normalisation mechanisms. This process reduces the difference among feature values. For the evaluation process, the optimization function is used. The prescribed tolerance is predetermined, and the retrieved results will be compared to the prescribed tolerance to determine which offspring are the best. Each fittest offspring is labelled with a specific feature. The crossover factor is changed to the weight factor obtained in Eq. (8) to optimise the DAA. When compared to a standard genetic algorithm, the convergence rate of this approach is remarkable. The number of iterations varied between 10, 20, and 30, and reaches 100, which improved the DAA’s performance toward the best possible solution. The parameter setting for DAA is given in Table 5.

Since the amount of features extracted correlating to texture and shape is confined, the DAA algorithm is only implemented for colour and hybrid features. The texture and colour features before and after implementing DAA are specified in Table 6.

Each optimised feature can be classified as either male or female. The crossover factor determines which male and female will be used to produce offspring. This offspring is the best feature that is kept in the final feature matrix. Table 6 specifies that half of the features are rejected and half of the features are chosen using the DAA algorithm. Figure 10 represents the operation of DAA.

4.4 Normalization

This phase is necessary to improve classification accuracy [65]. This phase closes the gap between the highest and lowest extracted feature values. To generate a feature matrix, three different normalisation mechanisms are used: depth-based, min-max, and grey-level scaling. Each of these strategies is used to ensure that the features extracted are satisfied in a linear fashion.

-

Depth-based Normalization: To compare two different feature sets, this step estimates the depth of sequencing.

-

Min-Max Normalization (MMN): This technique linearly adjusts the raw data to predetermined lower and upper bounds. Normally, the data is rescaled between 0 and 1 or − 1 and 1 [65].

-

Greyscale normalization: It can be performed by scaling the entire image to the range values from 0 to 1. It works by normalizing each column or row to a range of values from 0 to 1.

4.5 Classification

The proposed model represents the binary classification problem. In detection, there were only different possibilities: affected or not affected. Because the size and number of extracted features vary in the proposed system, different classification techniques are required for validation. KNN (K-nearest Neighbor) evaluates various neighbours using Euclidean distance [42], a support vector machine that uses several polynomial and linear functions [47], using a random forest method [50] for classifying malignant cells. a decision tree for developing classification rules over segmented skin image data [67]. The k-fold technique was used to assess the model’s performance. The entire dataset is divided into eightfold if the fold is taken to be 8. The primary detection process corresponds to affected and non-affected regions. The classification is accomplished with the help of KNN, SVM, Decision tree, naïve Bayes and random forest approach.

4.5.1 K- nearest neighbour

This is one of the popular classification techniques in which unknown instances are classified based on their similarity/distance with the records in the training set [42]. Either similarity or distance of a test record is computed with every record of the training set. Then, all the records are sorted according to the proximity function (similarity/distance) [9]. Afterwards, top-k records are chosen to be the k-nearest neighbours of the test record. If k-nearest neighbours (KNN) of a record contain instances of more than one class then the decision is made according to the majority vote [67].

4.5.2 Support vector machine

A support vector machine (SVM) is a non-probabilistic binary linear classifier used to analyse data for classification and regression analysis [73]. It belongs to the family of supervised learning models with associated learning algorithms. A model generated by an SVM training algorithm assigns new examples to one category of the two marked categories given a set of training examples [47].

4.5.3 Random Forest

As the name of this algorithm illustrates, a forest is a collection of trees. It comes under the umbrella of an ensemble classifier [15]. The training set is divided into several random subsamples spaces (sub-training sets). A decision tree is created for each training subset leading to several decision trees known as random forests. A test sample is assigned a class label by each decision tree. The final decision is made based on a majority vote of the decision trees [50].

4.5.4 Naïve Bayes classifier

A group of classification algorithms built on Bayes’ Theorem are known as naive Bayes classifiers [31]. It is not a single algorithm, but a group of algorithms that follow the same fundamental idea that each pair of features being classified is independent of the others [54].

4.5.5 Decision tree classifier

A decision tree (DT) is generated from a training dataset [51]. The leaf nodes represent the class labels while the intermediate nodes split the dataset into subsets. The division of the training set continues till all the records in the training set are covered by the decision tree [53].

The proposed approach used an ensemble-based approach in achieving the classification of test images. The best possible approach in terms of classification accuracy will be selected automatically through an ensemble-based approach. E.g. if used five classifiers give the result as (1,1,1,0,0), the overall result will be 1. As multiple classifiers predicted similar results, classification accuracy using an ensemble-based approach was high.

5 Experimental results and discussion

5.1 Evaluation of the proposed system

The proposed model first performs preprocessing in which hairy part removal is accomplished with the help of a lower hat filter. DCT and Color space conversion are used for performing image enhancement this enables the detection and separation of infected regions from the sample. The RGB cell image is transformed into greyscale for this purpose. Background subtraction is used to track only lesions from an image, and region props are used to segment skin images. The features are extracted from the segmentation results. Homogeneity, contrast, energy, correlation, and some hybrid features are among these. The differential analyzer approach (DAA) algorithm is used to select the relevant features in the third phase. In the final phase, various classifiers were used to certify the outcome of the proposed approach.

Different classification systems have advantages and disadvantages. It’s tough to say which classification approach is the best for a certain study. As shown in Tables 8, 9 and 10, different classification outcomes can be achieved contingent on the classifier(s) used in the dataset, the number of melanomas in the dataset, and the number of distinguishing characteristics. When choosing a classification technique to apply, various aspects must be considered, including diverse sources for collecting image datasets, accessibility of classification tools, cost factors, computational resources, and training data.

5.1.1 Metrics for performance evaluation

The suggested method is evaluated using three parameters: accuracy, sensitivity and specificity. They are discussed as follows.

Accuracy (Ac) refers to the overall performance of a system. Accuracy ensures that the results produced by the system are true and accurate to avoid misdiagnosis. It is calculated using Eq. (13).

Sensitivity (Sn) denotes the capability of a system to recognize people who have the disease. It shows how good the system is in identifying the person with an actual disease, if the numerical data is high then the possibility of identifying the person with the disease also increases. It is calculated using Eq. (14).

Specificity (Sp) denotes the capability of a system to recognize people who do not have the disease. It shows how good the system is in identifying an individual who does not have a disease, if the numerical data is high then the possibility of identifying healthy persons also increases. It is calculated using Eq. (15).

TP means true positive rate (detection of abnormality in a person with disease), TN means true negative rate (in a healthy individual, no abnormalities are detected.), FP means false positive (identification of an anomaly in a healthy individual) and FN means false negativity (no abnormality detected in a person with disease).

5.1.2 Results of segmentation

Figure 11 depicts the experimental outcome of the segmentation phase. This is accomplished by implementing background subtraction with a midpoint and region prop mechanism.

Figure 12 depicts the outcomes of the malignant and benign skin separation experiments. The Skin images and normal and abnormal separation mechanisms enclose the abnormal shape with boundaries. The grid structure is formed using boundary value analysis, and the outcome accurately separates lesions from normal skin.

The Jacobi index [55] is used to evaluate segmentation performance. The Jacobi index compares the true and estimated results. Jacobi index is given as under

The JI index ranges from 0 to 1. True positive values were set to 1 if both the estimation and the fact were 1. In the incident of an inappropriate prediction, the false-positive and negative values are determined to 1. The proposed method has TP = 25, FN = 1, TN = 1, and a segmentation accuracy of 92.0. In some cases, the accuracy is even greater than 100%.

5.1.3 Result of normalization

This phase seeks to increase the accuracy of classification by merging all of the feature sets (colour, texture, and location) into a feature vector. We chose characteristics that assist the classification framework the most, rather than utilising traditional feature selection approaches that select features based on their performance in the original input feature space. Three normalisation techniques are employed in the feature matrix, each of which ranges the feature values to fit in a linear fashion. As a result, three distinct normalised feature matrices were generated: grey scaling, min-max, and depth based. Following the specified operation, the three normalisation matrices are shown in Table 7.

5.1.4 Result of different classifier

Evaluation of classification accuracy with the selected feature by the DAA approach with different normalization. The proposed approach performs three different normalizations and the best, average, and worst possible classification accuracy is recorded. The simulation results with different normalization mechanisms are recorded in Table 8, Table 9 and Table 10. The proposed approach used an ensemble-based approach in achieving the classification of test images. The best possible approach in terms of classification accuracy will be selected automatically through an ensemble-based approach. E.g. if used five classifiers give the result as (1,1,1,0,0), the overall result will be 1. As multiple classifiers predicted similar results, classification accuracy using an ensemble-based approach was high.

The performance of the SVM classifier is better than all other classifiers as shown in Table 8, 9 and 10. The performance of the SVM classifier in the case of greyscale normalization is better than the min-man and depth-based normalization. Therefore, this classification result of SVM (accuracy 96.21%) in the case of DAA will be used in the next section for comparison with the state of the art techniques. The limitation of our work is that we have used only one dataset ISIC with two cases (melanoma and benign) for classification and detection. Results may very based on dataset to be used.

5.2 Result comparison with the state of art methods

The performance of the proposed system is compared with the state of art methods on publically available dataset ISIC data as shown in Table 11. Authors in [3, 35, 43, 50, 51] uses the ISIC dataset and applied different techniques for preprocessing, segmentation, feature extraction and classification and achieved the highest accuracy of 89% which is less than the proposed system.

5.3 Comparison of results with SVM

To evaluate the performance of the SVM classifier on the ISIC dataset in the proposed system comparison with the different existing classifiers on the same dataset is shown in Table 12. Authors in [28, 55, 62, 74] use the same dataset and different classifiers for melanoma classification and achieved the highest accuracy of 96.00%. Our proposed system is reliable, resilient, and efficient which achieves an accuracy of 96.21% on the same dataset.

Almost every existing paper lacked optimization in feature selection. The selection ratio in the proposed work was 50%. The reduction can be furthered in the feature selection mechanism to determine variation. As seen from the result section, exponential variation was observed. There are the following reasons for the improvement in the results:

-

In this method, some important features are extracted and employed. Each element influenced a different aspect of skin lesion because each type of skin lesion has a distinct colour. Differentiation can be recognised using colour features, Shape features and textural features.

-

A digital differential analyser algorithm is used since it has the least calculation complexity. The extracted features were scaled to fit onto a line. Due to optimised space exploration, DDA ensures a better selection of features and avoids local minima. This model selects optimised features by verifying these features against optimised functions.

The experimental findings of dermoscopy images of the proposed approach are compared to the factual data in terms of qualitative evaluation. In addition to the qualitative evaluation, a comparison of numerous other methodologies is conducted. According to the evaluation metric, the method produced the best outcomes. As a result, we believe that our effective and reliable method can assist dermatologists in making a more accurate and timely diagnosis. To validate the efficiency of the proposed model, a classification accuracy comparison is made in Tables 11 and 12 with existing models working on the same dataset.

6 Conclusions and future scope

This article focused on the early diagnosis of acute skin cancer. The main goal is to identify and classify each type of skin cancer into cancerous and non-cancerous categories. The publically available ISIC dataset has been used to train and test the proposed system. The implementation of the proposed system has been done in different phases. In the first phase, preprocessing is performed in which image resizing, hair removal, and image enhancement is done. Segmentation is the next phase that divides the image into critical and non-critical sections background subtraction, region props, and separation has been used. The skin lesion separation mechanism identifies abnormal images with black-coloured boundaries. In the next phase, features are extracted from the segmented image. After that, three distinct normalization mechanisms have been used for the normalization of the features, and the best result obtained corresponds to grey-scaling normalization. The DAA approach is applied for the optimized feature selection and is completed based on offspring and functional suitability. The offspring represents the best possible solution selected and will be restrained if specified tolerances are not met. The final stage is the classification that determines whether the image is affected or not. The proposed model achieved a classification accuracy of 96% overall, which is enough to prove the validity of the results.

The results of this study suggest that researchers focus on increasing the accuracy of a suitable classifier and reducing false negative classifications in the future. Then a system like this can be useful as a medical assistant to assist with the manual examination and save a lot of time. More data sets with different skin cancer imaging sources are needed for further study so that we can improve the recognition reliability using results from certain classes.

References

Abbas Q, Garcia IF, Emre Celebi M, Ahmad W, Mushtaq Q (2013) A perceptually oriented method for contrast enhancement and segmentation of dermoscopy images. Skin Res Technol 19(1):1–8. https://doi.org/10.1111/j.1600-0846.2012.00670.x

Abuzaghleh O, Barkana BD, Faezipour M (2015) Noninvasive real-time automated skin lesion analysis system for melanoma early detection and prevention. IEEE J Transl Eng Health Med 3:1–12

Ahmed Thaajwer MA, Piumi Ishanka UA (2020) Melanoma skin cancer detection using image processing and machine learning techniques. ICAC 2020 - 2nd Int Conf Adv Comput Proc, pp. 363–368. https://doi.org/10.1109/ICAC51239.2020.9357309.

Albert BA (2020) Deep learning from limited training data: novel segmentation and ensemble algorithms applied to automatic melanoma diagnosis. IEEE Access 8:31254–31269. https://doi.org/10.1109/ACCESS.2020.2973188

Al-masni MA, Al-antari MA, Choi M-T, Han S-M, Kim T-S (2018) Skin lesion segmentation in dermoscopy images via deep full resolution convolutional networks. Comput Methods Prog Biomed 162:221–231. https://doi.org/10.1016/j.cmpb.2018.05.027

American Cancer Society (2021) About melanoma skin cancer what is melanoma skin cancer? https://www.cancer.org/content/dam/CRC/PDF/Public/8823.00.pdf, pp. 1–14

Apalla Z, Lallas A, Sotiriou E, Lazaridou E, Ioannides D (2017) Epidemiological trends in skin cancer. Dermatol Pract Concept 7(2):1–6. https://doi.org/10.5826/dpc.0702a01

Aswin RB, Jaleel JA, Salim S (2014) Hybrid genetic algorithm - Artificial neural network classifier for skin cancer detection. 2014 Int. Conf. Control. Instrumentation, Commun. Comput. Technol. ICCICCT 2014, pp. 1304–1309. https://doi.org/10.1109/ICCICCT.2014.6993162

Ballerini L, Fisher RB, Aldridge B, Rees J (2013) A color and texture based hierarchical K-NN approach to the classification of non-melanoma skin lesions. Lect Notes Comput Vis Biomech 6:63–86. https://doi.org/10.1007/978-94-007-5389-1_4

Barata C, Marques JS, Rozeira J (2012) A system for the detection of pigment network in dermoscopy images using directional filters. IEEE Trans Biomed Eng 59(10):2744–2754. https://doi.org/10.1109/TBME.2012.2209423

Barata C, Ruela M, Francisco M, Mendonca T, Marques JS (2014) Two systems for the detection of melanomas in dermoscopy images using texture and color features. IEEE Syst J 8(3):965–979. https://doi.org/10.1109/JSYST.2013.2271540.M

Blundo A, Cignoni A, Banfi T, Ciuti G (2021) Comparative analysis of diagnostic techniques for melanoma detection: A systematic review of diagnostic test accuracy studies and meta-analysis. Front Med 8:637069. https://doi.org/10.3389/fmed.2021.637069

Britanak V, Yip P, Rao KR (2007) Discrete cosine and sine transforms: general properties, fast algorithms and integer approximations. Academic Press Inc., Elsevier Science, Amsterdam,

Buemi A, Bruna A, Mancuso M, Capra A, Spampinato G (2010) Chroma noise reduction in DCT domain using soft-thresholding. Eurasip J Image Video Process 2010:1–13. https://doi.org/10.1155/2010/323180

Caie PD, Dimitriou N, Arandjelović O (2021) Precision medicine in digital pathology via image analysis and machine learning. Artif Intell Deep Learn Pathol:149–173. https://doi.org/10.1016/b978-0-323-67538-3.00008-7

Carrera EV, Ron-Dominguez D (2018) A computer-aided diagnosis system for skin cancer detection. 4th International Conference on Technology Trends. In: Botto-Tobar M, Pizarro G, Zúñiga-Prieto M, D’Armas M, Zúñiga Sánchez M (eds.) Technology Trends. CITT 2018. Communications in Computer and Information Science, vol 895, Springer, Cham, pp 553–563.https://doi.org/10.1007/978-3-030-05532-5_42

Celebi ME, Iyatomi H, Schaefer G, Stoecker WV (2009) Lesion border detection in dermoscopy images. Comput Med Imaging Graph 33(2):148–153. https://doi.org/10.1016/j.compmedimag.2008.11.002

Choudhari S, Biday S (2014) Artificial neural network for skin cancer detection. Int J Emerg Trends Technol Comput Sci (IJETTCS) 3(5):147–153

Codella NCF et al (2017) Deep learning ensembles for melanoma recognition in dermoscopy images. IBM J Res Dev 61(4–5):1–15

Codella NCF et al. (2018) Skin lesion analysis toward melanoma detection: A challenge at the 2017 International symposium on biomedical imaging (ISBI), hosted by the international skin imaging collaboration (ISIC), Proc - Int Symp Biomed Imaging, vol. 2018-April, no. Isbi, pp. 168–172. https://doi.org/10.1109/ISBI.2018.8363547.

Dhane DM, Maity M, Achar A, Bar C, Chakraborty C (2015) Selection of optimal Denoising filter using quality assessment for potentially lethal optical wound images. Procedia Comput Sci 58:438–446. https://doi.org/10.1016/j.procs.2015.08.059

Dhane DM, Krishna V, Achar A, Bar C (2016) Spectral clustering for unsupervised segmentation of lower extremity wound beds using optical images. J Med Syst 40(9):1–10. https://doi.org/10.1007/s10916-016-0554-x

Flores-Vidal PA, Olaso P, Gómez D, Guada C (2019) A new edge detection method based on global evaluation using fuzzy clustering. Soft Comput 23(6):1809–1821. https://doi.org/10.1007/s00500-018-3540-z

Geller AC, Clapp RW, Sober AJ, Gonsalves L, Mueller L, Christiansen CL, Shaikh W, Miller DR (2013) Melanoma epidemic: an analysis of six decades of data from the Connecticut tumor registry. J Clin Oncol 31(33):4172–4178. https://doi.org/10.1200/JCO.2012.47.3728

Giotis I, Molders N, Land S, Biehl M, Jonkman MF, Petkov N (2015) MED-NODE: a computer-assisted melanoma diagnosis system using non-dermoscopic images. Expert Syst Appl 42(19):6578–6585. https://doi.org/10.1016/j.eswa.2015.04.034

Glazer AM, Rigel DS, Winkelmann RR, Farberg AS (2017) Clinical diagnosis of skin Cancer: enhancing inspection and early recognition. Dermatol Clin 35(4):409–416. https://doi.org/10.1016/j.det.2017.06.001

Gonzalez-Correa CA, Tapasco-Tapasco LO, Salazar-Gomez S (2020) Three electrode arrangements for the use of contralateral body segments as controls for electrical bio-impedance measurements in three medical conditions. IFMBE Proc 72:113–119. https://doi.org/10.1007/978-981-13-3498-6_17

Gulati S, Bhogal RK (2019) Detection of malignant melanoma using deep learning, vol 1045. Springer, Singapore

Hasan MK, Elahi MTE, Alam MA, Jawad MT, Martí R (2022) DermoExpert: skin lesion classification using a hybrid convolutional neural network through segmentation, transfer learning, and augmentation. Inf Med Unlocked 28:100819. https://doi.org/10.1016/j.imu.2021.100819

Heibel HD, Hooey L, Cockerell CJ (2020) A review of noninvasive techniques for skin Cancer detection in dermatology. Am J Clin Dermatol 21(4):513–524. https://doi.org/10.1007/s40257-020-00517-z

Hekler A, Utikal JS, Enk AH, Hauschild A, Weichenthal M, Maron RC, Berking C, Haferkamp S, Klode J, Schadendorf D, Schilling B, Holland-Letz T, Izar B, von Kalle C, Fröhling S, Brinker TJ, Schmitt L, Peitsch WK, Hoffmann F, … Thiem A (2019) Superior skin cancer classification by the combination of human and artificial intelligence. Eur J Cancer 120:114–121. https://doi.org/10.1016/j.ejca.2019.07.019

Höhn J, Krieghoff-Henning E, Jutzi TB, von Kalle C, Utikal JS, Meier F, Gellrich FF, Hobelsberger S, Hauschild A, Schlager JG, French L, Heinzerling L, Schlaak M, Ghoreschi K, Hilke FJ, Poch G, Kutzner H, Heppt MV, Haferkamp S, … Brinker TJ (2021) Combining CNN-based histologic whole slide image analysis and patient data to improve skin cancer classification. Eur J Cancer 149:94–101. https://doi.org/10.1016/j.ejca.2021.02.032

Jaworek-Korjakowska J (2016) Computer-aided diagnosis of micro-malignant melanoma lesions applying support vector machines. Biomed Res Int 2016:4381972. https://doi.org/10.1155/2016/4381972

Jiang A, Jefferson IS, Robinson SK, Griffin D, Adams W, Speiser J, Winterfield L, Peterson A, Tung-Hahn E, Lee K, Surprenant D, Coakley A, Tung R, Alam M (2021) International journal of women ’ s dermatology skin cancer discovery during total body skin examinations. Int J Women’s Dermatol 7(4):411–414. https://doi.org/10.1016/j.ijwd.2021.05.005

Kassem MA, Hosny KM, Fouad MM (2020) Skin lesions classification into eight classes for ISIC 2019 using deep convolutional neural network and transfer learning. IEEE Access 8:114822–114832. https://doi.org/10.1109/ACCESS.2020.3003890

Kato J, Horimoto K, Sato S, Minowa T, Uhara H (2019) Dermoscopy of melanoma and non-melanoma skin cancers. Front Med 6:180. https://doi.org/10.3389/fmed.2019.00180.American Cancer Society (2018) Cancer Facts & Figures 2018. American Cancer Society Atlanta

Khan MU, Beg MR, Khan MZ (2012) Improved line drawing algorithm: An approach and proposal, no. November, pp. 322–327. https://doi.org/10.3850/978-981-07-1403-1_713

Khan MQ, Hussain A, Rehman S, Khan U, Maqsood M (2019) Classification of melanoma and nevus in digital images for diagnosis of skin cancer. IEEE Access 7:90132–90144. https://doi.org/10.1109/ACCESS.2019.2926837

Khan NH et al (2022) Skin cancer biology and barriers to treatment: Recent applications of polymeric micro/nanostructures. J Adv Res 36:223–247. https://doi.org/10.1016/j.jare.2021.06.014

Lee H, Chen YPP (2014) Skin cancer extraction with optimum fuzzy thresholding technique. Appl Intell 40(3):415–426. https://doi.org/10.1007/s10489-013-0474-0

Lee T, Ng V, Gallagher R, Coldman A, McLean D (1997) Dullrazor®: a software approach to hair removal from images. Comput Biol Med 27(6):533–543. https://doi.org/10.1016/S0010-4825(97)00020-6

Linsangan NB, Adtoon JJ (2018) Skin cancer detection and classification for moles using K-nearest neighbor algorithm. ACM Int Conf Proc Ser:47–51. https://doi.org/10.1145/3309129.3309141

Mahbod A, Schaefer G, Wang C, Dorffner G, Ecker R, Ellinger I (2020) Transfer learning using a multi-scale and multi-network ensemble for skin lesion classification. Comput Methods Prog Biomed 193:105475. https://doi.org/10.1016/j.cmpb.2020.105475

Maity M, et al. (2018) Selection of colour correction algorithms for calibrating optical chronic ulcer images. Advanced Computational and Communication Paradigms. Springer, Singapore, 561–570

Marin-Gomez FX, Vidal-Alaball J, Poch PR, Sariola CJ, Ferrer RT, Peña JM (2020) Diagnosis of skin lesions using photographs taken with a mobile phone: An online survey of primary care physicians. J Prim Care Community Health 11:2150132720937831. https://doi.org/10.1177/2150132720937831

Masood A, Al-Jumaily AA (2013) Computer aided diagnostic support system for skin cancer: A review of techniques and algorithms. Int J Biomed Imaging 2013:323268. https://doi.org/10.1155/2013/323268

Masood A, Al-jumaily A, Anam K (2014) Texture analysis based automated decision support system for classification of skin cancer using SA-SVM. Lect Notes Comput Sci 8835:101–109

Menzies SW, Emery J, Staples M, Davies S, McAvoy B, Fletcher J, Shahid KR, Reid G, Avramidis M, Ward AM, Burton RC, Elwood JM (2009) Impact of dermoscopy and short-term sequential digital dermoscopy imaging for the management of pigmented lesions in primary care: a sequential intervention trial. Br J Dermatol 161(6):1270–1277. https://doi.org/10.1111/j.1365-2133.2009.09374.x

Monika MK, Vignesh NA, Kumari CU, MNVSS K, Lydia EL (2020) Skin cancer detection and classification using machine learning. Mater Today Proc 33:4266–4270. https://doi.org/10.1016/j.matpr.2020.07.366

Murugan A, Nair SAH, Kumar KPS (2019) Detection of skin cancer using SVM, random forest and kNN classifiers. J Med Syst 43(8):1–9. https://doi.org/10.1007/s10916-019-1400-8

Murugan A, Nair SAH, Preethi AAP, Kumar KPS (2021) Diagnosis of skin cancer using machine learning techniques. Microprocess Microsyst 81:103727. https://doi.org/10.1016/j.micpro.2020.103727

Narayanamurthy V, Padmapriya P, Noorasafrin A, Pooja B, Hema K, Firus Khan A'Y, Nithyakalyani K, Samsuri F (2018) Skin cancer detection using non-invasive techniques. RSC Adv 8(49):28095–28130. https://doi.org/10.1039/c8ra04164d

Panigrahi R, Borah S (2019) Classification and analysis of Facebook metrics dataset using supervised classifiers. Elsevier Inc

Pathan S, Prabhu KG, Siddalingaswamy PC (2018) Techniques and algorithms for computer aided diagnosis of pigmented skin lesions—a review. Biomed Signal Process Control 39:237–262. https://doi.org/10.1016/j.bspc.2017.07.010

Perez F, Vasconcelos C, Avila S, Valle E (2018) Data augmentation for skin lesion analysis,” Lect Notes Comput Sci (including Subser Lect Notes Artif Intell Lect Notes Bioinformatics), vol. 11041 LNCS, pp. 303–311. https://doi.org/10.1007/978-3-030-01201-4_33

Premaladha J, Ravichandran KS (2016) Novel approaches for diagnosing melanoma skin lesions through supervised and deep learning algorithms. J Med Syst 40(4):1–12. https://doi.org/10.1007/s10916-016-0460-2

Rajan BK, Harshan HM, Venugopal G (2020) Venugopal, veterinary image enhancement using DWTDCT and singular valuedecomposition. In: Proceedings of the 2020 IEEE International Conference on Communication and Signal Processing,ICCSP 2020, pp 920–924. https://doi.org/10.1109/ICCSP48568.2020.9182414

Rostami M, Berahmand K, Forouzandeh S (2021) A novel community detection based genetic algorithm for feature selection. J Big Data 8(1):1–27. https://doi.org/10.1186/s40537-020-00398-3

Ruela M, Barata C, Marques JS, Rozeira J (2017) A system for the detection of melanomas in dermoscopy images using shape and symmetry features. Comput Methods Biomech Biomed Eng Imaging Vis 5(2):127–137. https://doi.org/10.1080/21681163.2015.1029080

Saghir U, Devendran V (2021) “A brief review of feature extraction methods for melanoma detection. 2021 7th Int. Conf Adv Comput Commun Syst ICACCS 2021, pp. 1304–1307. https://doi.org/10.1109/ICACCS51430.2021.9441787.

Satheesha TY, Satyanarayana D, Prasad MNG, Dhruve KD (2017) Melanoma is skin deep: A 3D reconstruction technique for computerized dermoscopic skin lesion classification. IEEE J Transl Eng Heal Med 5:1–17. https://doi.org/10.1109/JTEHM.2017.2648797

Serte S, Demirel H (2019) Gabor wavelet-based deep learning for skin lesion classification. Comput Biol Med 113:103423. https://doi.org/10.1016/j.compbiomed.2019.103423

Siegel RL, Miller KD, Jemal A (2018) Cancer statistics, 2018. CA Cancer J Clin 68(1):7–30. https://doi.org/10.3322/caac.21442

Singh N, Gupta SK (2019) Recent advancement in the early detection of melanoma using computerized tools: an image analysis perspective. Skin Res Technol 25(2):129–141. https://doi.org/10.1111/srt.12622

Singh D, Singh B (2020) Investigating the impact of data normalization on classification performance. Appl Soft Comput 97:105524. https://doi.org/10.1016/j.asoc.2019.105524

Smaoui N, Derbel N (2018) Simple but efficient approach for image based skin cancer diagnosis. 2018 15th Int. Multi-Conference Syst. Signals Devices, SSD 2018, pp. 274–280. https://doi.org/10.1109/SSD.2018.8570526.

Taunk K, De S, Verma S, Swetapadma A (2019) A brief review of nearest neighbor algorithm for learning and classification. 2019 Int Conf Intell Comput Control Syst ICCS 2019, no. May 2019, pp. 1255–1260. https://doi.org/10.1109/ICCS45141.2019.9065747.

Urban K, Mehrmal S, Uppal P, Giesey RL, Delost GR (2021) The global burden of skin cancer: a longitudinal analysis from the global burden of disease study, 1990–2017. JAAD Int 2:98–108. https://doi.org/10.1016/j.jdin.2020.10.013

Vestergaard E, Macaskill P, Holt PE, Menzies SW (2008) Dermoscopy compared with naked eye examination for the diagnosis of primary melanoma: a meta-analysis of studies performed in a clinical setting. Br J Dermatol 159(3):669–676. https://doi.org/10.1111/j.1365-2133.2008.08713.x

Wu X, Hammer JA (2014) Melanosome transfer: it is best to give and receive. Curr Opin Cell Biol 29(1):1–7. https://doi.org/10.1016/j.ceb.2014.02.003

Wu S, Cho E, Li WQ, Weinstock MA, Han J, Qureshi AA (2016) History of severe sunburn and risk of skin Cancer among women and men in 2 prospective cohort studies. Am J Epidemiol 183(9):824–833. https://doi.org/10.1093/aje/kwv282

Xie F, Fan H, Li Y, Jiang Z, Meng R, Bovik A (2017) Melanoma classification on dermoscopy images using a neural network ensemble model. IEEE Trans Med Imaging 36(3):849–858. https://doi.org/10.1109/TMI.2016.2633551

Zhang Y (2012) Support vector machine classification algorithm and its application. Commun Comput Inf Sci, vol. 308 CCIS, no. PART 2, pp. 179–186. https://doi.org/10.1007/978-3-642-34041-3_27

Zhang J, Xie Y, Xia Y, Shen C (2019) Attention residual learning for skin lesion classification. IEEE Trans Med Imaging 38(9):2092–2103. https://doi.org/10.1109/TMI.2019.2893944

Zhou H, Schaefer G, Celebi ME, Lin F, Liu T (2011) Gradient vector flow with mean shift for skin lesion segmentation. Comput Med Imaging Graph 35(2):121–127. https://doi.org/10.1016/j.compmedimag.2010.08.002

Zortea M, Flores E, Scharcanski J (2017) A simple weighted thresholding method for the segmentation of pigmented skin lesions in macroscopic images. Pattern Recogn 64:92–104. https://doi.org/10.1016/j.patcog.2016.10.031

Acknowledgements

I am very thankful to my new PhD supervisor Dr. Shailendra Kumar Singh, Assistant Professor, LPU, Punjab, India for his continuous support in the revision, restructuring, and proof reading of the manuscript.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

There are no conflicts of interest and the research is not funded by any organisation/institute. The infrastructure and support are provided by Lovely Professional University where my PhD is going on.

Data sharing does not apply to this article as no datasets were generated or analyzed during the current study.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Saghir, U., Hasan, M. Skin cancer detection and classification based on differential analyzer algorithm. Multimed Tools Appl 82, 41129–41157 (2023). https://doi.org/10.1007/s11042-023-14409-x

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-023-14409-x