Abstract

Texture analysis plays an important role in many image processing applications for describing objects. Visual Fabric Defect Detection (FDD), in turn, is a highly active research field in computer vision. A surface defect is an abnormality in the texture of a surface. This paper therefore proposes a dual-purpose benchmark for texture image analysis and surface defect detection. The first framework, Segmentation with Contrast Limited Adaptive Histogram Equalization (CLAHE) and Feature Extraction (FE) for Classification (SCFC), enhances the image with CLAHE in addition to pre-processing, segments it using Optimum Global Thresholding (OT), and finally applies FE for the classification task. The second scheme merges the features of the à trous algorithm (AT) with the Homomorphic Method (HM), denoted AH, followed by Segmentation and FE for Classification (AHSFC). The AHSFC enhances the image using AH in addition to pre-processing, segments it using OT, and finally applies FE for the detection or classification task. The performance metrics for the proposed techniques are entropy, average gradient, contrast, Sobel edge magnitude, sensitivity, specificity, precision, accuracy and identification time. Simulation results demonstrate that both techniques succeed in FDD. Comparing the two algorithms, the second technique gives superior FDD performance on clothing fabrics.


1 Introduction

A confident understanding of fabric behaviour and characteristics is vital in the design and development of a functional garment. For instance, a warp-knit mesh fabric made of 100% polyester is designed to wick moisture away from the skin and to dry quickly, making it ideal for everyday wear and preferred for extreme performance requirements. On the other hand, georgette is a balanced plain-woven fabric, generally made of 100% polyester with high-twist yarns that give it a less smooth appearance; it is used in fashion apparel. Textile materials have evolved in recent times, and fabrics play a significant role in the development of the sportswear industry. Indeed, fabric quality reflects the quality of a brand and its identity [6, 12, 16].

The goal of image enhancement is to increase the contrast among adjacent regions or features in order to support tasks such as FDD and monitoring [20]. Among image enhancement techniques, wavelet and homomorphic filtering have been shown to enable dynamic range compression and contrast enhancement simultaneously [1, 17, 19]. These approaches concentrate on reinforcing the details of the image in the frequency domain [2, 18].

The à trous algorithm (AT) is a powerful tool for image decomposition. When an image is decomposed using the AT, the details are separated into the higher-frequency subbands [1, 15]. The homomorphic enhancement algorithm is then used to transform these details into illumination and reflectance components, and the reflectance components are amplified so that the details appear clearly. Finally, a wavelet reconstruction process is performed to obtain an enhanced image with much more detail [3–5, 14, 21].

Recent related research includes gear fault diagnosis in the manufacturing line of short filament fibres using image recognition, in which a wavelet packet bispectrum is used to process the gear vibration signals [8]. The fluff quality of fabric surfaces has also been assessed with a practical method based on optimal sensing [9].

To avoid measuring fabric thickness manually, a three-dimensional reconstruction of the fleece fabric surface has been used for thickness evaluation [10]. Fabric defects affect clothing companies because defective fabrics have to be sold at a lower price; deep learning has therefore been used to detect such defects in clothing fabrication [7, 11, 13, 22].

The motivation and problem definition of this paper:

- Fabric defects directly affect the profit margins of the company, as defective fabrics have to be sold at a lower price.

- To minimize value loss due to the variety of defects occurring in fabric, a manufacturer should try to minimize those defects by taking suitable remedial measures.

Innovations and contributions of this paper:

- The main objective is to obtain a high-resolution fabric image with as much detail as possible for defect detection.

- Design and development of approaches to detect fabric defects.

- Design and development of approaches that make the classification of fabric defects more efficient by reducing processing time.

- Building an efficient fabric defect detection system.

This research proposes two approaches for FDD: the first is based on SCFC and the second on AHSFC. The paper is organized as follows. Section 2 gives the motivation and related works. Section 3 discusses fabric defects. Section 4 surveys CLAHE. Section 5 discusses defect classification. Section 6 presents feature extraction. Section 7 describes the classification of fabric defects. Section 8 explains segmentation. Sections 9, 10 and 11 present the AT, the HM and the AH, respectively. Section 12 presents the first proposed approach and Section 13 the second. Section 14 describes the performance metrics. Section 15 presents the simulation results, and Section 16 discusses them. Finally, Section 17 gives the conclusion and future work.

2 Motivation and related works

This framework derives its motivation and importance from the paper topic and the images used for FDD. The research addresses an important topic arising from the problems of FDD. The basic objective of this proposal is to analyze fabric images [1, 17, 19, 20] automatically, enabling the inspector to detect and classify fabric defects. This research presents two new approaches for FDD.

The first proposed approach, SCFC, relies on enhancement by CLAHE followed by OT and finally FE for FDD. The second, AHSFC, relies on enhancement with AH followed by OT and FE for classification and FDD. Compared with the most relevant works [8–10], this framework is evaluated using entropy, average gradient, contrast, Sobel edge magnitude, sensitivity, specificity, precision, accuracy and identification time. The results obtained in this framework are better than those of the previous works introduced in [7, 11, 13, 22].

3 Fabric defects

A fabric defect is any abnormality in the fabric that hinders its acceptance by the consumer; it is generally a fault that spoils the quality of the material. Faults in fabric reduce its price as well as its market value from the consumer's point of view. Examples of defects in woven fabric include coloured flecks, knots, slubs, broken ends woven in a bunch, broken patterns, double ends, floats, gouts, holes, cuts or tears, and lashing.

Importance of FDD

- With increasing demand for quality fabric, customers are now more concerned about the quality of the material.

- To fulfil the demand for quality material, it is important to avoid defects.

- A significant reduction in the price of fabric is seen due to the presence of faults.

- Defects also affect the brand name.

4 CLAHE

This method applies histogram equalization (HE) to local regions of the image and modifies each histogram with a clip limit after it is computed, to avoid the noise amplification and other drawbacks of global HE.

The steps of this model can be summarized as follows:

1. Split the image into non-overlapping tiles.

2. Compute the histogram of each tile.

3. Clip each histogram at the clip limit and equalize each tile to obtain the improved image.

4. Optimize the clip limit CL to obtain high-quality images that enable FDD.

The clip limit CL is obtained by the following equation [2, 19]:

where CL is the clip limit, α is the clip factor, and M and N are the region size and the number of gray-scale levels, respectively. The maximum clip limit is obtained for α = 100.
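The CLAHE steps above can be sketched in plain Python as follows. Because the clip-limit equation is not reproduced here, the sketch assumes the form commonly cited for CLAHE, CL = (M/N)(1 + (α/100)(s_max − 1)), where s_max is an assumed maximum allowable slope; with α = 100 this gives the maximum clip limit, consistent with the text. The function names `clip_limit`, `equalize_tile` and `tiled_clahe` are hypothetical, the clipped excess is assumed to be redistributed uniformly over all bins, and the bilinear blending between neighbouring tiles used in full CLAHE is omitted for brevity.

```python
# Sketch of tile-wise contrast-limited histogram equalization.
# Images are lists of rows of integer gray levels in [0, n_levels - 1].


def clip_limit(region_size, n_levels, alpha, s_max):
    """Assumed clip limit: (M/N) * (1 + alpha/100 * (s_max - 1))."""
    return (region_size / n_levels) * (1 + alpha / 100 * (s_max - 1))


def equalize_tile(tile, n_levels, limit):
    """Clip the tile histogram at `limit`, spread the excess evenly, then equalize."""
    hist = [0.0] * n_levels
    for row in tile:
        for v in row:
            hist[v] += 1
    excess = sum(max(h - limit, 0.0) for h in hist)
    hist = [min(h, limit) + excess / n_levels for h in hist]
    total, acc, lut = sum(hist), 0.0, []
    for h in hist:
        acc += h  # cumulative (clipped) histogram
        lut.append(round((n_levels - 1) * acc / total))
    return [[lut[v] for v in row] for row in tile]


def tiled_clahe(image, tile_size, n_levels=256, alpha=40, s_max=4):
    """Equalize each non-overlapping tile independently under its own clip limit."""
    out = [row[:] for row in image]
    for r0 in range(0, len(image), tile_size):
        for c0 in range(0, len(image[0]), tile_size):
            tile = [row[c0:c0 + tile_size] for row in image[r0:r0 + tile_size]]
            limit = clip_limit(len(tile) * len(tile[0]), n_levels, alpha, s_max)
            for i, row in enumerate(equalize_tile(tile, n_levels, limit)):
                out[r0 + i][c0:c0 + len(row)] = row
    return out
```

In practice an established implementation, such as the `adapthisteq` function of MATLAB's Image Processing Toolbox, would normally replace this sketch.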

5 Defects classification

Defects take many shapes, in particular knots, slubs, broken ends woven in a bunch, broken patterns, double ends, floats, gouts, holes, cuts or tears, and lashing. Defects with simple shapes are processed easily. Hence, a fabric image that contains a simple defect is considered benign, whereas a broken pattern, hole, cut or tear in the fabric image indicates a dangerous (smash) defect that is difficult to process.

6 Feature extraction

Most features are derived from the abnormalities in the fabric image. Because of the nature of these abnormalities, extracting such features plays a very significant role in detecting fabric defects. Texture features have been shown to be helpful in separating good and defective fabric, so that good and defective fabric images can be distinguished during classification.

7 Classification of fabric defects

A MATLAB program was developed to improve the detection and classification of defects in fabric images. The classification steps are:

1. Examine whether the image contains a defect.

2. If no defect is found, the image is normal.

3. If a defect is found, the image is defective.

4. Segmentation:
   - Apply OT to the fabric image.
   - Detect the boundaries in the image using an Edge Map (EM) with Canny Detection (CD).

5. Classify the defect based on its shape and the CD:
   - Separate the defect area from the image.
   - If the defect area is a thick-and-thin place, a hole, a tear or a cut weft, classify it as a smash defect.
   - Detect the edges of the Region of Interest (ROI) using the EM, which can detect edges even at low contrast.

8 Segmentation

This step divides a fabric image into regions with similar properties. Its objective is to locate suspicious regions and classify them as benign or smash. The process relies on the OT method, a non-parametric and unsupervised method of automatic threshold selection for image segmentation. It is optimal in the sense that it maximizes the between-class variance, a well-known measure used in statistical discriminant analysis [14, 21]. The normalized histogram of the image is

\[ p_i = \frac{n_i}{MN}, \qquad \sum_{i=0}^{L-1} p_i = 1 \tag{2} \]

where M × N are the dimensions of the image and n_i is the number of pixels with intensity level i. The pixels of the input image can take L gray levels. Suppose a threshold k, with 0 < k < L − 1, is selected and used to split the image into two classes, C1 and C2. The probability P1(k) that a pixel is assigned to class C1 is given by the cumulative sum

\[ P_1(k) = \sum_{i=0}^{k} p_i \tag{3} \]

The probability of class C2 occurrence is

\[ P_2(k) = \sum_{i=k+1}^{L-1} p_i = 1 - P_1(k) \tag{4} \]

where P1(k) is the probability of class C1. The mean intensity values of the pixels assigned to classes C1 and C2 are

\[ m_1(k) = \frac{1}{P_1(k)} \sum_{i=0}^{k} i\, p_i \tag{5} \]

\[ m_2(k) = \frac{1}{P_2(k)} \sum_{i=k+1}^{L-1} i\, p_i \tag{6} \]

where P2(k) is the probability of class C2. The global mean is

\[ m_G = \sum_{i=0}^{L-1} i\, p_i \tag{7} \]

The problem is to find the optimum value of k that maximizes the criterion

\[ \eta(k) = \frac{\sigma_B^2(k)}{\sigma_G^2} \tag{8} \]

where σB²(k) is the between-class variance

\[ \sigma_B^2(k) = P_1(k)\,[m_1(k) - m_G]^2 + P_2(k)\,[m_2(k) - m_G]^2 \tag{9} \]

and σG² is the global variance

\[ \sigma_G^2 = \sum_{i=0}^{L-1} (i - m_G)^2\, p_i \tag{10} \]

Since σG² does not depend on k, the optimum threshold is the k* that maximizes σB²(k).
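The OT procedure reduces to a single pass over the histogram. The sketch below uses the standard equivalent closed form σB²(k) = [m_G P1(k) − m(k)]² / (P1(k)[1 − P1(k)]), where m(k) is the cumulative mean up to level k. The function names are hypothetical, pixels are assumed to be integer gray levels in [0, L − 1], and ties are resolved by keeping the first maximizing k.

```python
def otsu_threshold(pixels, levels=256):
    """Return the threshold k* that maximizes the between-class variance."""
    n = len(pixels)
    hist = [0] * levels
    for v in pixels:
        hist[v] += 1
    p = [h / n for h in hist]                    # normalized histogram p_i
    m_g = sum(i * pi for i, pi in enumerate(p))  # global mean m_G
    best_k, best_var = 0, -1.0
    p1 = m = 0.0                                 # running P1(k) and cumulative mean m(k)
    for k in range(levels - 1):
        p1 += p[k]
        m += k * p[k]
        if p1 < 1e-12 or p1 > 1.0 - 1e-12:
            continue                             # one class is empty: skip this k
        var_b = (m_g * p1 - m) ** 2 / (p1 * (1.0 - p1))
        if var_b > best_var:                     # keep the first maximizer
            best_k, best_var = k, var_b
    return best_k


def segment(image, k):
    """Binary mask: 1 for class C2 (intensity > k), 0 for class C1."""
    return [[1 if v > k else 0 for v in row] for row in image]
```

For a flattened image `pixels = [v for row in img for v in row]`, `segment(img, otsu_threshold(pixels))` gives the two-class map used in the later stages.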

9 AT

The AT decomposes an image into subbands in several consecutive stages using the "à trous" (with holes) filtering approach [12, 15]. The low-pass filter used in this process has the following mask for all stages [1, 2]:

Each difference between the filter outputs of two consecutive stages is a subband of the original image. Filtering the input image with the mask gives an approximation image, and the difference between the input and the approximation image gives the first detail plane w1. Repeating this process yields more detail planes w2 up to wn and finally an approximation plane pn, so that the original image equals pn plus the sum of w1 to wn.
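The decomposition and its inverse can be sketched as follows. The low-pass mask of this section is not reproduced here, so the sketch assumes the 5 × 5 B3-spline mask commonly used with the à trous algorithm, applied separably as the 1-D taps [1, 4, 6, 4, 1]/16. Following the classical à trous scheme, the same taps are spread 2^(j−1) pixels apart at stage j (set `holes=False` to apply the undilated mask at every stage); borders are handled by edge replication. All function names are hypothetical.

```python
# 1-D B3-spline taps; their outer product is the assumed 5x5 mask (taps sum to 1).
B3 = [1 / 16, 4 / 16, 6 / 16, 4 / 16, 1 / 16]


def smooth(img, step):
    """Separable low-pass filtering with taps `step` pixels apart (edge replication)."""
    h, w = len(img), len(img[0])

    def clamp(i, n):
        return min(max(i, 0), n - 1)

    rows = [[sum(B3[k] * img[r][clamp(c + (k - 2) * step, w)] for k in range(5))
             for c in range(w)] for r in range(h)]
    return [[sum(B3[k] * rows[clamp(r + (k - 2) * step, h)][c] for k in range(5))
             for c in range(w)] for r in range(h)]


def atrous_decompose(img, levels=3, holes=True):
    """Return (p_n, [w1, ..., wn]) with w_j = p_(j-1) - p_j and p_0 = img."""
    current = [[float(v) for v in row] for row in img]
    details = []
    for j in range(levels):
        approx = smooth(current, 2 ** j if holes else 1)
        details.append([[a - b for a, b in zip(ra, rb)] for ra, rb in zip(current, approx)])
        current = approx
    return current, details


def atrous_reconstruct(approx, details):
    """Inverse AT: p_n + w1 + ... + wn."""
    out = [row[:] for row in approx]
    for plane in details:
        out = [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(out, plane)]
    return out
```

With `levels=3` this yields the four subbands p3, w1, w2 and w3; because the detail planes telescope, the reconstruction is exact up to floating-point rounding.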

10 HM

An image can be represented as the product of two factors, as in the following equation [3, 6]:

\[ f(n_1, n_2) = i(n_1, n_2)\, r(n_1, n_2) \tag{12} \]

where f(n1, n2) is the observed image pixel value, i(n1, n2) is the illumination incident on the object being imaged, and r(n1, n2) is the reflectance of that object.

It is known that illumination is approximately constant since the light falling on all objects is approximately the same. The only change between object images is in the reflectance component. This method is shown in Fig. 1.

Applying a logarithm to Eq. (12) turns the multiplication into an addition:

\[ \ln f(n_1, n_2) = \ln i(n_1, n_2) + \ln r(n_1, n_2) \tag{13} \]

The first term in this equation varies only slightly, whereas the second term varies strongly because it corresponds to the reflectivity of the object being imaged. By attenuating the first term and reinforcing the second term of Eq. (13), the image details can be reinforced.
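A minimal spatial-domain sketch of this idea follows. It assumes that the slowly varying illumination term ln i can be estimated by a local mean (box filter) of the log image, so that the reflectance term ln r is the residual; `gamma_l` < 1 attenuates the illumination and `gamma_h` > 1 reinforces the reflectance. The box-filter estimate, the use of ln(1 + f) to avoid ln 0, and all names and default values are assumptions for illustration, not the authors' filter.

```python
import math


def box_blur(img, radius):
    """Local mean over a (2*radius+1)^2 window, truncated at the borders."""
    h, w = len(img), len(img[0])
    out = []
    for r in range(h):
        rows = range(max(0, r - radius), min(h, r + radius + 1))
        line = []
        for c in range(w):
            cols = range(max(0, c - radius), min(w, c + radius + 1))
            vals = [img[rr][cc] for rr in rows for cc in cols]
            line.append(sum(vals) / len(vals))
        out.append(line)
    return out


def homomorphic_enhance(img, gamma_l=0.5, gamma_h=2.0, radius=2):
    """Attenuate ln(illumination), reinforce ln(reflectance), return to intensities."""
    log_f = [[math.log1p(v) for v in row] for row in img]   # ln(1 + f)
    log_i = box_blur(log_f, radius)                          # illumination estimate
    log_r = [[a - b for a, b in zip(ra, rb)]                 # reflectance residual
             for ra, rb in zip(log_f, log_i)]
    return [[math.expm1(gamma_l * li + gamma_h * lr) for li, lr in zip(ri, rr)]
            for ri, rr in zip(log_i, log_r)]
```

With `gamma_l = gamma_h = 1` the image is returned unchanged, which is a convenient sanity check before tuning the two gains.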

11 AH

In this approach, the benefits of the HM and AT techniques are merged. First, the image is decomposed into subbands by the AT. Then, each subband is processed separately using the HM to reinforce its details. This approach is depicted in Fig. 2.

The steps of this scheme can be summarized as follows:

1. Decompose the fabric image into four subbands p3, w1, w2 and w3 by the AT and the low-pass mask of Eq. (11).

2. Apply a logarithmic operation to each of the subbands w1, w2 and w3, which contain the details, to obtain their illumination and reflectance components.

3. Reinforce the reflectance component in each subband and attenuate the illumination component.

4. Reconstruct each subband from its illumination and reflectance components.

5. Perform an inverse AT by adding p3, w1, w2 and w3 after the HM processing to obtain the improved image (for the six-subband variant, p6 and w1 to w6 are added).
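Combining the AT and the HM, the AH steps can be sketched as below. The sketch is self-contained and makes several assumptions not stated in the text: the B3-spline mask with holes for the AT, a box-filter illumination estimate, and, because detail planes take negative values, the logarithm is taken of 1 + |w| with the sign of each coefficient restored afterwards. The approximation plane is left unchanged. All names and default gains are hypothetical.

```python
import math

B3 = [1 / 16, 4 / 16, 6 / 16, 4 / 16, 1 / 16]  # assumed separable B3-spline taps


def _clamp(i, n):
    return min(max(i, 0), n - 1)


def _smooth(img, step):
    h, w = len(img), len(img[0])
    rows = [[sum(B3[k] * img[r][_clamp(c + (k - 2) * step, w)] for k in range(5))
             for c in range(w)] for r in range(h)]
    return [[sum(B3[k] * rows[_clamp(r + (k - 2) * step, h)][c] for k in range(5))
             for c in range(w)] for r in range(h)]


def _box_blur(img, radius):
    h, w = len(img), len(img[0])
    out = []
    for r in range(h):
        rows = range(max(0, r - radius), min(h, r + radius + 1))
        line = []
        for c in range(w):
            vals = [img[rr][cc] for rr in rows
                    for cc in range(max(0, c - radius), min(w, c + radius + 1))]
            line.append(sum(vals) / len(vals))
        out.append(line)
    return out


def _hm_band(band, gamma_l, gamma_h, radius):
    """Steps 2-4: log, split into illumination/reflectance, reweight, rebuild."""
    log_b = [[math.log1p(abs(v)) for v in row] for row in band]
    log_i = _box_blur(log_b, radius)
    out = []
    for brow, lrow, irow in zip(band, log_b, log_i):
        # Magnitude is clamped at 0 and the original sign is restored.
        out.append([math.copysign(max(math.expm1(gamma_l * li + gamma_h * (lb - li)), 0.0), v)
                    for v, lb, li in zip(brow, lrow, irow)])
    return out


def ah_enhance(img, levels=3, gamma_l=0.8, gamma_h=1.5, radius=1):
    """Step 1: AT decomposition; steps 2-4 on w1..wn; step 5: inverse AT by summation."""
    current = [[float(v) for v in row] for row in img]
    details = []
    for j in range(levels):
        approx = _smooth(current, 2 ** j)
        details.append([[a - b for a, b in zip(ra, rb)] for ra, rb in zip(current, approx)])
        current = approx
    out = current  # approximation plane p_n, kept unchanged
    for band in details:
        hm = _hm_band(band, gamma_l, gamma_h, radius)
        out = [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(out, hm)]
    return out
```

Passing `levels=6` gives a six-subband variant; the gain values are illustrative only and would need tuning on real fabric images.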

12 The first proposed approach

The SCFC presents an efficient model for FDD in fabric images. It enhances the digital fabric images with CLAHE in addition to pre-processing, segments them, and separates the defective regions by classifying the images based on FE and the shape of the defect, using the CD sharpness. The system then decides whether the fabric image is defective and, if so, whether the defect is benign or smash. This technique is illustrated in Fig. 3.

The steps of this scheme are as follows:

1. Obtain the original image.

2. Apply CLAHE to the fabric image as a preprocessing step.

3. Perform segmentation on the produced image.

4. Perform FE on the obtained image.

5. Separate the defective areas by classifying the images based on FE and the shape of the defect, using the CD sharpness.

6. Apply the classification process to the acquired image.

7. Decide whether the fabric image is defective and, if so, whether the defect is smash.

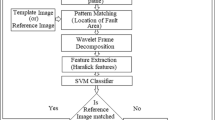

13 The second proposed scheme

The AHSFC presents an efficient model for FDD in fabric images. It enhances the digital fabric images with AH in addition to pre-processing, segments them, and separates the defective regions by classifying the images based on FE and the shape of the defect, using the CD sharpness. The system then decides whether the fabric image is defective and, if so, whether the defect is benign or smash. This technique is illustrated in Fig. 4.

The steps of this scheme are as follows:

1. Acquire the original image from the camera.

2. Apply the AH algorithm to the fabric image as a preprocessing step.

3. Perform segmentation on the produced image.

4. Perform FE on the obtained image.

5. Separate the defective areas by classifying the images based on FE and the shape of the defect, using the CD sharpness.

6. Apply the classification process to the acquired image.

7. Decide whether the fabric image is defective and, if so, whether the defect is smash.

14 Performance metrics

This section presents the quality metrics used to evaluate the enhancement results. These metrics include entropy (E), contrast improvement factor (CIF), average gradient (AG), Sobel edge magnitude (SE), sensitivity (SEN), precision (PR), specificity (SP), accuracy (ACU) and identification time (IT) [6, 16].

Entropy (E) measures the amount of information in a random variable. The histogram of an image can be interpreted as a probability density function (PDF) if it is normalized by the image dimensions. An evenly spread histogram is equivalent to a uniform random variable, which has equal event probabilities and therefore maximum entropy. On the other hand, discrete peaked histograms, as in black-and-white images, carry little information. Hence, the objective of image processing here is to obtain histograms close to a uniform distribution. The E of an image is expressed as follows [1, 3]:

\[ E = -\sum_{i=0}^{255} p_i \log_2 p_i \]

where E is the entropy of the image and 255 is the maximum level of an 8-bit gray-scale image. The larger the number of levels in an image, the higher the entropy. Here p_i is the probability of occurrence of pixels with intensity i: if n_i pixels have intensity i and the image contains n pixels, then \( p_i = n_i / n \).
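The entropy definition can be computed directly from the gray-level counts, as in the sketch below (function name hypothetical). Levels that do not occur contribute nothing, following the usual convention 0 · log 0 = 0, and a base-2 logarithm is assumed so that E is measured in bits; a uniform 8-bit histogram then reaches the maximum of 8 bits.

```python
import math
from collections import Counter


def image_entropy(pixels):
    """E = -sum_i p_i * log2(p_i), with p_i = n_i / n over the levels present."""
    n = len(pixels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(pixels).values())
```

A 2-D image can be passed as `[v for row in img for v in row]`.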

where CIF is the percentage contrast improvement factor, Co is the contrast of the original image, and Ce is the contrast of the enhanced image.
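The CIF equation is not reproduced here; a common form consistent with these definitions is CIF = (Ce − Co)/Co × 100. The sketch below assumes that form and, as a further assumption, measures contrast as the population standard deviation of the gray levels, since the text does not specify the contrast measure. The names are hypothetical.

```python
import statistics


def contrast(pixels):
    """Assumed contrast measure: population standard deviation of gray levels."""
    return statistics.pstdev(pixels)


def contrast_improvement_factor(original, enhanced):
    """Percentage change in contrast: (Ce - Co) / Co * 100."""
    c_o, c_e = contrast(original), contrast(enhanced)
    return (c_e - c_o) / c_o * 100.0
```

A different contrast measure (for example, a local or Michelson contrast) can be substituted in `contrast` without changing the CIF formula.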

In image processing applications, more edges mean more information. Unfortunately, the images are often dark, which prevents edges and details from appearing. The objective is therefore to reinforce edges, revealing more details. Mathematically, edges can be obtained by differentiating the image in both directions. Hence, AG is expressed as follows [3]:

where AG is the average gradient of the image, f(x, y) is the original image, and M × N are the dimensions of the image.
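Since the AG equation itself is not reproduced, the sketch below assumes a widely used definition based on forward differences, AG = (1/((M−1)(N−1))) Σ sqrt((fx² + fy²)/2), summed over the (M−1)(N−1) pixels that have both a lower and a right neighbour. Other normalizations exist, so absolute values may differ from those reported in the tables; the function name is hypothetical.

```python
import math


def average_gradient(img):
    """Mean of sqrt((fx^2 + fy^2) / 2) using forward differences."""
    m, n = len(img), len(img[0])
    total = 0.0
    for x in range(m - 1):
        for y in range(n - 1):
            fx = img[x + 1][y] - img[x][y]  # difference along x
            fy = img[x][y + 1] - img[x][y]  # difference along y
            total += math.sqrt((fx * fx + fy * fy) / 2.0)
    return total / ((m - 1) * (n - 1))
```

A flat image gives AG = 0, while a unit ramp in both directions gives AG = 1.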

Another metric of edge intensity is the Sobel edge magnitude (SE), which combines the horizontal and vertical derivatives as follows [3]:

\[ |\nabla f| = \sqrt{f_x^2 + f_y^2} \]

where |∇f| is the Sobel edge magnitude, and fx and fy are two images that contain, at each point, the horizontal and vertical derivative approximations, respectively, as shown in Fig. 5.

To estimate fx, the image is differentiated with respect to x using a differentiation mask; likewise, fy is estimated by differentiating with respect to y using the corresponding mask, as shown in Fig. 6.
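Assuming the masks are the standard 3 × 3 Sobel masks, the edge magnitude can be computed as in the sketch below. Border pixels are left at zero for simplicity, the masks are applied as a correlation (flipping them only changes the sign of fx and fy, not the magnitude), and averaging the magnitude map into a single SE score per image is an assumption. The names are hypothetical.

```python
import math

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # horizontal derivative f_x
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # vertical derivative f_y


def sobel_magnitude(img):
    """|grad f| = sqrt(f_x^2 + f_y^2) at interior pixels; borders stay 0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            win = [[img[r + i - 1][c + j - 1] for j in range(3)] for i in range(3)]
            fx = sum(SOBEL_X[i][j] * win[i][j] for i in range(3) for j in range(3))
            fy = sum(SOBEL_Y[i][j] * win[i][j] for i in range(3) for j in range(3))
            out[r][c] = math.hypot(fx, fy)
    return out


def mean_sobel(img):
    """Assumed scalar SE score: mean magnitude over interior pixels."""
    mag = sobel_magnitude(img)
    vals = [mag[r][c] for r in range(1, len(img) - 1) for c in range(1, len(img[0]) - 1)]
    return sum(vals) / len(vals)
```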

Another perspective on image quality is the classification performance achieved on the enhanced fabric images. The following subsections evaluate the classification models using four further metrics: SEN, PR, SP and ACU.

Sensitivity

Sensitivity quantifies the ability of a classifier to recognize positive outcomes and is given as:

\[ SEN = \frac{TP}{TP + FN} \]

where TP is True Positives and FN is False Negatives.

Specificity

Specificity measures the ability of a classifier to identify negative outcomes and is given as:

\[ SP = \frac{TN}{TN + FP} \]

where TN is True Negatives and FP is False Positives.

Precision

Precision is the proportion of true positives among all positive predictions and is given as:

\[ PR = \frac{TP}{TP + FP} \]

where TP is True Positives, and FP is False Positives.

Accuracy

Accuracy measures the overall effectiveness of a classifier in terms of true positives and true negatives, that is, the proportion of correct outcomes among all outcomes:

\[ ACU = \frac{TP + TN}{TP + TN + FP + FN} \]

where TP is True Positives, TN is True Negatives, FP is False Positives, and FN is False Negatives.

Here, TP is the number of samples whose labels are positive and whose predictions are positive, TN is the number of samples whose labels are negative and whose predictions are negative, FP is the number of samples whose labels are negative but whose predictions are positive, and FN is the number of samples whose labels are positive but whose predictions are negative.
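The four classification metrics follow directly from the confusion-matrix counts defined above. A short sketch (function names hypothetical), assuming binary labels with 1 marking a defective image and non-zero denominators:

```python
def confusion_counts(labels, predictions, positive=1):
    """Count TP, TN, FP, FN for a binary defect / no-defect task."""
    tp = tn = fp = fn = 0
    for y, p in zip(labels, predictions):
        if p == positive:
            if y == positive:
                tp += 1
            else:
                fp += 1
        elif y == positive:
            fn += 1
        else:
            tn += 1
    return tp, tn, fp, fn


def classification_metrics(tp, tn, fp, fn):
    return {
        "SEN": tp / (tp + fn),                    # sensitivity (recall)
        "SP": tn / (tn + fp),                     # specificity
        "PR": tp / (tp + fp),                     # precision
        "ACU": (tp + tn) / (tp + tn + fp + fn),   # accuracy
    }
```

For example, labels `[1, 1, 1, 0, 0, 0, 0, 1]` and predictions `[1, 1, 0, 0, 1, 1, 0, 1]` give TP = 3, TN = 2, FP = 2, FN = 1, hence SEN = 0.75, SP = 0.5, PR = 0.6 and ACU = 0.625.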

15 Simulation results

This section presents several simulation cases executed on seven different images to evaluate the two proposed modes. The evaluation metrics for FDD quality are E, CIF, AG, ∆f, SEN, PR, SP, ACU and IT.

These results adopt a strategy of presenting the original fabric pictures with their enhanced versions using the first proposed approach and the second proposed approach. The first approach is based on the SCFC. The second scheme depends on the AHSFC.

The FDD results of the two proposed algorithms are shown in Fig. 6, and the performance metrics of the two schemes for the first case are summarized in Table 2.

A similar experiment has been carried out on other fabric images; the FDD results of the two proposed algorithms are shown in Figs. 6 to 12. The results show that the second proposed scheme succeeds in FDD and reveals the most detail. Furthermore, the second proposed scheme gives the best improvement results for FDD in terms of both visual quality and performance metrics. The numerical results are summarized in Tables 3 to 11.

Table 2 presents the quality metrics of the first experiment. The first approach has lower E, AG and SE values than the second mode (its AG value is very low, while that of the second is low), so the second mode achieves the maximum values of E, AG and SE for the first case.

16 Discussion

To further confirm the effectiveness of the first and second presented modes, cases on many other fabric image datasets are presented. The defect detection algorithm proposed in this work can distinguish between normal and defective images and identify specific fabric defects.

Therefore, accuracy (ACU) and identification time (IT) are used as evaluation metrics. The former reflects the algorithm's ability to distinguish between normal and defective fabric images, whereas the latter reflects the time the method needs to recognize specific fabric defects. The results of the proposed techniques are shown in Figs. 6, 7, 8, 9, 10, 11 and 12.

In this section, seven cases have been carried out on different fabric images to evaluate the performance of the two presented techniques. The evaluation metrics for image quality are E, CIF, AG, ∆f, SE, SEN, PR, SP and ACU. The numerical properties of the seven cases are shown in Table 1.

The results of the first case are shown in Fig. 6. Figure 6a presents the original fabric image, and Figs. 6b to 6h present the results of the first proposed algorithm and of the second proposed algorithm with three subbands (TS) and with six subbands (SS). Similar cases have been carried out on other fabric images, and the results are shown in Figs. 7, 8, 9, 10, 11 and 12.

The results for all cases are shown in Figs. 6, 7, 8, 9, 10, 11 and 12. They show that the second proposed mode succeeds in improving FDD. Furthermore, the second proposed technique gives the best FDD results in terms of both visual quality and performance metrics.

Table 2 presents the quality metrics of the first experiment. The first mode has a lower E value than the second framework. The AG value is very low for the first method and low for the second. The first approach also has a lower SE value than the second. Comparing the two modes, the second framework achieves the maximum values of E, AG and SE for the first experiment.

Table 3 presents the quality metrics of the second experiment. The first approach has a lower E value than the second, its AG value is very low while that of the second is low, and it has a lower SE value than the second mode. Comparing the two proposed methods, the second scheme achieves the maximum values of E, average gradient and SE for the second case.

Similar experiments have been carried out on other fabric images, and the results are shown in Tables 4 and 5 and in Tables 6 and 7.

Table 8 shows the FDD performance metrics for the seventh case. It shows that a higher E value corresponds to more features in the fabric image, and a higher AG value corresponds to improved FDD for the fabric image.

Table 9 presents the contrast enhancement results of the first and second experiments. The first approach has a lower contrast enhancement value than the second approach, and the second proposed approach achieves the maximum contrast enhancement values for all experiments.

Table 10 presents the numerical IT results (in ms) of all experiments. The first approach has a longer IT than the second mode for all cases; comparing the two proposed schemes, the second is the fastest for all cases.

Table 11 presents the numerical SEN, PR, SP and ACU results for all experiments. The first algorithm has lower SEN, PR, SP and ACU values than the second technique for all experiments, so the second proposed mode achieves the maximum values of SEN, PR, SP and ACU for all experiments.

Finally, Table 12 presents a summary of the performance of the suggested scheme alongside results reported for similar schemes.

As shown in the table, the ACU values of the presented schemes range from 91 to 92. The ACU value of the second proposed scheme is the largest of all the compared methods. The ACU value of [7] is also high, whereas that of [22] is the lowest. The IT values of the presented schemes range from 60 to 92 ms. The second proposed scheme has the fastest IT among the algorithms compared, while the first approach has a long IT. The IT value of [7] is 35 ms and that of [13] is 3 ms. The IT value of the second proposed scheme is the lowest with respect to all the traditional methods. The second proposed scheme is therefore the best when compared with the traditional techniques. Relative to the similar studies reported in the table, the ACU and IT values of the presented techniques outperform those in [7, 11, 13, 22].

17 Conclusion and future work

This framework presents two proposed modes for FDD. The first scheme is based on SCFC and the second on AHSFC. The quality metrics used for evaluation in this paper are E, CIF, AG, ∆f, SEN, PR, SP, ACU and IT. The results obtained with the second proposed scheme show high ACU values and the fastest IT compared with the other traditional schemes, and they reveal its superior enhancement and FDD ability on clothing fabrics compared with the first technique. In future work, deep learning models are recommended for classifying the images obtained with the presented techniques.

Data availability

All data generated or analyzed during this study are included in this published article (and its supplementary information files).

References

Ashiba HI, Awadallah KH, El-Halfawy SM, El-Samie FEA (2008) Homomorphic enhancement of infrared images using the additive wavelet transform. Prog Electromag Res C 1:123–130

Ashiba HI, Mansour HM, Ahmed HM, El-Kordy MF, Dessouky MI, El-Samie FEA (2018) Enhancement of Infrared images based on efficient histogram processing. Wirel Pers Commun 99:619–636

Ashiba HI, Mansour HM, Ahmed HM, El-Kordy MF, Dessouky MI, Zahran O, El-Samie FEA (2019) Enhancement of IR images using histogram processing and the Undecimated additive wavelet transform. Multimed Tools Appl 78(9):11277–11290

Brzozowski K, Frankowska E, Piasecki P, Zięcina P, Zukowski P, Walecka RB (2011) The use of routine imaging data in diagnosis of cerebral pseudoaneurysm prior to angiography. Eur J Radiol 80:e401–e409

Fan J, Yang J, Wang Y, Yang S, Ai D, Huang Y, Song H, Wang Y, Shen D (2019) Deep feature descriptor based hierarchical dense matching for X-ray angiographic images. Comput Methods Prog Biomed 175:233–242

Gonzalez RC, Woods RE (2008) Digital image processing. Prentice Hall

Jin R, Niu Q (2021) Automatic fabric defect detection based on an improved YOLOv5. Math Probl Eng 2021:7321394, 1–13. https://doi.org/10.1155/2021/7321394

Jin S, Fan D, Malekian R, Duan Z, Li Z (2018) An image recognition method for gear fault diagnosis in the manufacturing line of short filament fibres. Insight-Non-Destruct Test Cond Moni 60(5):270–275

Jin S, Lin Q, Bie Y, Ma Q, Li Z (2020) A practical method for detecting fluff quality of fabric surface using optimal sensing. Elektronika ir Elektrotechnika 26(1):58–62

Jin S, Chen Y, Yin J, Li Y, Gupta MK, Fracz P, Li Z (2020) Three-Dimensional Reconstruction of Fleece Fabric Surface for Thickness Evaluation. Electronics 9(9):1–12

Jing J, Zhuo D, Zhang H (2020) Fabric defect detection using the improved YOLOv3 model. J Eng Fibers Fabr 15

Kumbhar U, Patil V, Rudrakshi S (2013) Enhancement Of Medical Images Using Image Processing In Matlab. Int J Engin Res Technol 2(4):2278–0181

Kuznetsova A, Maleva T, Soloviev V (2020) Detecting apples in orchards using YOLOv3 and YOLOv5 in general and close-up images. In: Proceedings of the International Symposium on Neural Networks, Cairo, Egypt

Latha R, Senthil Kumar S, Manohar V (2010) Segmentation of linear structures from medical images. Proc Comp Sci 2:303–306

Lim JS (1990) Two-dimensional signal and image processing. Prentice Hall Inc

MATLAB (2010) Version 7.10.0. Natick, Massachusetts: The MathWorks Inc.

Rajalingam B, Priya R (2017) Multimodality Medical Image Fusion Based on Hybrid Fusion Techniques. Int J Engin Manufact Sci 7(1):2249–3115

Rajalingam B, Priya R (2017) A novel approach for multimodal medical image fusion using hybrid fusion algorithms for disease analysis. Int J Pure App Mathem 117(15):599–619

Singh BB, Patel S (2017) Efficient Medical Image Enhancement using CLAHE Enhancement and Wavelet Fusion. Int J Comput Appl 167(5):0975–8887

Wang X, Liu P, Wang F (2010) Fabric-skin friction property measurement system. Int J Clothing Sci Technol 22(4):285–296

Wildner P, Stasiołek M, Matysiak M (2020) Differential diagnosis of multiple sclerosis and other inflammatory CNS diseases. Mult Scler Relat Disord 37:101452

Wu Z, Zhuo Y, Li J, Feng Y, Han B, Liao S (2018) A fast monochromatic fabric defect detection method based on convolutional neural network. J Comp-Aided Des Comp Grap 30(12):2262


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ashiba, H.I., Ashiba, M.I. Novel proposed technique for automatic fabric defect detection. Multimed Tools Appl 82, 30783–30806 (2023). https://doi.org/10.1007/s11042-023-14368-3


DOI: https://doi.org/10.1007/s11042-023-14368-3